The Schumer Report on AI (RTFB)

post by Zvi · 2024-05-24T15:10:03.122Z · LW · GW · 3 comments

Or at least, Read the Report (RTFR).

There is no substitute. This is not strictly a bill, but it is important.

The introduction kicks off balancing upside and avoiding downside, utility and risk. This will be a common theme, with a very strong ‘why not both?’ vibe.

Early in the 118th Congress, we were brought together by a shared recognition of the profound changes artificial intelligence (AI) could bring to our world: AI’s capacity to revolutionize the realms of science, medicine, agriculture, and beyond; the exceptional benefits that a flourishing AI ecosystem could offer our economy and our productivity; and AI’s ability to radically alter human capacity and knowledge.

At the same time, we each recognized the potential risks AI could present, including altering our workforce in the short-term and long-term, raising questions about the application of existing laws in an AI-enabled world, changing the dynamics of our national security, and raising the threat of potential doomsday scenarios. This led to the formation of our Bipartisan Senate AI Working Group (“AI Working Group”).

They did their work over nine forums.

- Inaugural Forum

- Supporting U.S. Innovation in AI

- AI and the Workforce

- High Impact Uses of AI

- Elections and Democracy

- Privacy and Liability

- Transparency, Explainability, Intellectual Property, and Copyright

- Safeguarding Against AI Risks

- National Security

Existential risks were always given relatively minor time, with it being a topic for at most a subset of the final two forums. By contrast, mundane downsides and upsides were each given three full forums. This report was about response to AI across a broad spectrum.

The Big Spend

They lead with a proposal to spend ‘at least’ $32 billion a year on ‘AI innovation.’

No, there is no plan on how to pay for that.

In this case I do not think one is needed. I would expect any reasonable implementation of that to pay for itself via economic growth. The downsides are tail risks and mundane harms, but I wouldn’t worry about the budget. If anything, AI’s arrival is a reason to be very not freaked out about the budget. Official projections are baking in almost no economic growth or productivity impacts.

They ask that this money be allocated via a method called emergency appropriations. This is part of our government’s longstanding way of using the word ‘emergency.’

We are going to have to get used to this when it comes to AI.

Events in AI are going to be happening well beyond the ‘non-emergency’ speed of our government and especially of Congress, both opportunities and risks.

We will have opportunities that appear and compound quickly, projects that need our support. We will have stupid laws and rules, both those that were already stupid and those that are rendered stupid, that need to be fixed.

Risks and threats, not only catastrophic or existential risks but also mundane risks and enemy actions, will arise far faster than our process can pass laws, draft regulatory rules with extended comment periods and follow all of our procedures.

In this case? It is May. The fiscal year starts in October. I want to say, hold your damn horses. But also, you think Congress is passing a budget this year? We will be lucky to get a continuing resolution. Permanent emergency. Sigh.

What matters more is, what do they propose to do with all this money?

A lot of things. And it does not say how much money is going where. If I was going to ask for a long list of things that adds up to $32 billion, I would say which things were costing how much money. But hey. Instead, it looks like he took the number from NSCAI, and then created a laundry list of things he wanted, without bothering to create a budget of any kind?

It also seems like they took the original recommendation of $8 billion in Fiscal Year 24, $16 billion in FY 25 and $32 billion in FY 26, and turned it into $32 billion in emergency funding now? See the appendix. Then again, by that pattern, we’d be spending a trillion in FY 31. I can’t say for sure that we shouldn’t.
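The doubling arithmetic behind that ‘trillion in FY 31’ quip can be checked with a minimal sketch. The function name `doubling_schedule` is made up for illustration, and the assumption that spending keeps doubling every year past FY 26 is the joke’s extrapolation, not anything the report or NSCAI proposes.

```python
def doubling_schedule(start_fy, start_billions, end_fy):
    """Return {fiscal_year: spending in $ billions}, doubling every year.

    Hypothetical helper: only the FY24-FY26 figures ($8B, $16B, $32B)
    come from the recommendation; everything after is extrapolation.
    """
    return {
        fy: start_billions * 2 ** (fy - start_fy)
        for fy in range(start_fy, end_fy + 1)
    }


schedule = doubling_schedule(2024, 8, 2031)
for fy, billions in schedule.items():
    print(f"FY {fy % 100}: ${billions:,} billion")
```

Seven doublings from $8 billion gives $1,024 billion in FY 31, so ‘a trillion’ is about right.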

What Would Schumer Fund?

Starting with the top priority:

- An all government ‘AI-ready’ initiative.

- ‘Responsible innovation’ R&D work in fundamental and applied sciences.

- R&D work in ‘Foundational trustworthy AI topics, such as transparency, explainability, privacy, interoperability, and security.’

Or:

- Government AI adoption for mundane utility.

- AI for helping scientific research.

- AI safety in the general sense, both mundane and existential.

Great. Love it. What’s next?

- Funding the CHIPS and Science Act accounts not yet fully funded.

My current understanding is this is allocation of existing CHIPS act money. Okie dokie.

- Funding ‘as needed’ (oh no) for semiconductor R&D for the design and manufacture of high-end AI chips, through co-design of AI software and hardware, and developing new techniques for semiconductor fabrication that can be implemented domestically.

More additional CHIPS act funding, perhaps unlimited? Pork for Intel? I don’t think the government is going to be doing any of this research, if it is then ‘money gone.’

- Pass the Create AI Act (S. 2714) and expand programs like NAIRR to ‘ensure all 50 states are able to participate in the research ecosystem.’

More pork, then? I skimmed the bill. Very light on details. Basically, we should spend some money on some resources to help with AI research and it should include all the good vibes words we can come up with. I know what ‘all 50 states’ means. Okie dokie.

- Funding for a series of ‘AI Grand Challenge’ programs, such as those described in Section 202 of the Future of AI Innovation Act (S. 4178) and the AI Grand Challenges Act (S. 4236), with a focus on transformational progress.

Congress’s website does not list text for S. 4236. S. 4178 seems to mean ‘grand challenge’ in the senses of prizes and other pay-for-results (generally great), and having ambitious goals (also generally great), which tend to not be how the system works these days.

So, fund ambitious research, and use good techniques.

- Funding for AI efforts at NIST, including AI testing and evaluation infrastructure and the U.S. AI Safety Institute, and funding for NIST’s construction account to address years of backlog in maintaining NIST’s physical infrastructure.

Not all of NIST’s AI effort is safety, but a large portion of our real government safety efforts are at NIST. They are severely underfunded by all accounts right now. Great.

- Funding for the Bureau of Industry and Security (BIS) to update its IT and data analytics software and staff up.

That does sound like something we should do, if it isn’t handled. Ensure BIS can enforce the rules it is tasked with enforcing, and choose those rules accordingly.

- Funding R&D at the intersection of AI and robotics to ‘advance national security, workplace safety, industrial efficiency, economic productivity and competitiveness, through a coordinated interagency initiative.’

AI robots. The government is going to fund AI robots. With the first goal being ‘to advance national security.’ Sure, why not, I have never seen any movies.

In all seriousness, this is not where the dangers lie, and robots are useful. It’s fine.

The interagency plan seems unwise to me but I’m no expert on that.

- R&D for AI to discover manufacturing techniques.

Once again, sure, good idea if you can improve this for real and this isn’t wasted or pork. Better general manufacturing is good. My guess is that this is not a job for the government and this is wasted, but shrug.

- Security grants for AI readiness to help secure American elections.

Given the downside risks I presume this money is well spent.

- Modernize the federal government and improve delivery of government services, through updating IT and using AI.

- Deploying new technologies to find inefficiencies in the U.S. code, federal rules and procurement devices.

Yes, please. Even horribly inefficient versions of these things are money well spent.

- R&D and interagency coordination around intersection of AI and critical infrastructure, including for smart cities and intelligent transportation system technologies.

Yes, we are on pace to rapidly put AIs in charge of our ‘critical infrastructure’ along with everything else, why do you ask? Asking people nicely not to let AI anywhere near the things is not an option and wouldn’t protect substantially against existential risks (although it might versus catastrophic ones). If we are going to do it, we should try to do it right, get the benefits and minimize the risks and costs.

Overall I’d say we have three categories.

- Many of these points are slam dunk obviously good. There is a lot of focus on enabling more mundane utility, and especially mundane utility of government agencies and government services. These are very good places to be investing.

- A few places where it seems like ‘not the government’s job’ to stick its nose in, and where I do not expect the money to accomplish much. These often also involve some obvious nervousness around the proposals, but none of them actually amplify the real problems. Mostly I expect wasted money. The market already presents plenty of better incentives for basic research in most things AI.

- Semiconductors.

It is entirely plausible for this to be a plan to take most of $32 billion (there’s a second section below that also gets funding), and put most of that into semiconductors. They can easily absorb that kind of cash. If you do it right you could even get your money’s worth.

As usual, I am torn on chips spending. Hardware progress accelerates core AI capabilities, but there is a national security issue with the capacity relying so heavily on Taiwan, and our lead over China here is valuable. That risk is very real.

Either way, I do know that we are not going to talk our government into not wanting to promote domestic chip production. I am not going to pretend that there is a strong case in opposition to that, nor is this preference new.

On AI Safety, this funds NIST, and one of its top three priorities is a broad-based call for various forms of (both existential and mundane) AI safety, and this builds badly needed state capacity in various places.

As far as government spending proposals go, this seems rather good, then, so far.

What About For National Security and Defense?

These get their own section with twelve bullet points.

- NNSA testbeds and model evaluation tools.

- Assessment of CBRN AI-enhanced threats.

- AI-advancements in chemical and biological synthesis, including safeguards to reduce risk of synthetic materials and pathogens.

- Fund DARPA’s AI work, which seems to be a mix of military applications and attempts to address safety issues including interpretability, along with something called ‘AI Forward’ for more fundamental research.

- Secure and trustworthy algorithms for DOD.

- Combined Joint All-Domain Command and Control Center for DOD.

- AI tools to improve weapon platforms.

- Ways to turn DOD sensor data into AI-compatible formats.

- Building DOD’s AI capabilities including ‘supercomputing.’ I don’t see any sign this is aiming for foundation models.

- Utilize AUKUS Pillar 2 to work with allies on AI defense capabilities.

- Use AI to improve implementation of Federal Acquisition Regulations.

- Optimize logistics, improve workflows, apply predictive maintenance.

I notice in #11 that they want to improve implementation, but not work to improve the regulations themselves, in contrast to the broader ‘improve our procedures’ program above. A sign of who cares about what, perhaps.

Again, we can draw broad categories.

- AI to make our military stronger.

- AI (mundane up through catastrophic, mostly not existential) safety.

The safety includes CBRN threat analysis, testbed and evaluation tools and a lot of DARPA’s work. There’s plausibly some real stuff here, although you can’t tell magnitude.

This isn’t looking ahead to AGI or beyond. The main thing here is ‘the military wants to incorporate AI for its mundane utility,’ and that includes guarding us against outside threats and ensuring its implementations are reliable and secure. It all goes hand in hand.

Would I prefer a world where all the militaries kept their hands off AI? I think most of us would like that, no matter our other views. But also we accept that we live in a very different world that is not currently capable of that. And I understand that, while it feels scary for obvious reasons and does introduce new risks, this mostly does not change the central outcomes. It does impact the interplay among people and nations in the meantime, which could alter outcomes if it impacts the balance of power, or causes a war, or sufficiently freaks enough people out.

Mostly it seems like a clear demonstration of the pattern of ‘if you were thinking we wouldn’t do or allow that, think again, we will instantly do that unless prevented’ to perhaps build up some momentum towards preventing things we do not want.

What Else Would Schumer Encourage Next in General?

Most items in the next section are about supporting small business.

- Developing legislation to leverage public-private partnerships for both capabilities and to mitigate risks.

- Further federal study of AI including through FFRDCs.

- Supporting startups, including at state and local levels, including by disseminating best practices (to the states and localities, I think, not to the startups?)

- The Comptroller General identifying any statutes that impact innovation and competition in AI systems. Have they tried asking Gemini?

- Increasing access to testing tools like mock data sets, including via DOC.

- Doing outreach to small businesses to ensure tools meet their needs.

- Finding ways to support small businesses utilizing AI and doing innovation, and consider if legislation is needed to ‘disseminate best practices’ in various states and localities.

- Ensuring business software and cloud computing are allowable expenses under the SBA’s 7(a) loan program.

Congress has a longstanding tradition that Small Business is Good, and that Geographic Diversity That Includes My State or District is Good.

Being from the government, they are here to help.

A lot of this seems like ways to throw money at small businesses in inefficient ways? And to try and ‘make geographic diversity happen’ when we all know it is not going to happen? I am not saying you have to move to the Bay if that is not your thing, I don’t hate you that much, but at least consider, let’s say, Miami or Austin.

In general, none of this seems like a good idea. Not because it increases existential risk. Because it wastes our money. It won’t work.

The good proposal here is the fourth one. Look for statutes that are needlessly harming competition and innovation.

Padme: And then remove them?

(The eighth point also seems net positive, if we are already going down the related roads.)

The traditional government way is to say they support small business and spend taxpayer money by giving it to small business, and then you create a regulatory state and set of requirements that wastes more money and gives big business a big edge anyway. Whenever possible, I would much rather remove the barriers than spend the money.

Not all rules are unnecessary. There are some real costs and risks, mundane, catastrophic and existential, to mitigate.

Nor are all of the advantages of being big dependent on rules and compliance and regulatory capture, especially in AI. AI almost defines economies of scale.

Many would say, wait, are not those worried about AI safety typically against innovation and competition and small business?

And I say nay, not in most situations in AI, same as almost all situations outside AI. Most of the time all of that is great. Promoting such things in general is great, and is best done by removing barriers.

The key question is, can you do that in a way that works, and can you do it while recognizing the very high leverage places that break the pattern?

In particular, when the innovation in question is highly capable future frontier models that pose potential catastrophic or existential risks, especially AGI or ASI, and especially when multiple labs are racing against each other to get there first.

In those situations, we need to put an emphasis on ensuring safety, and we need to at minimum allow communication and coordination between those labs without risk of the government interfering in the name of antitrust.

In most other situations, including most of the situations this proposal seeks to assist with, the priorities here are excellent. The question is execution.

I Have Two Better Ideas

Do you want to help small business take on big business?

Do you want to encourage startups and innovation and American dynamism?

Then there are two obvious efficient ways to do that. Both involve the tax code.

The first is the generic universal answer.

If you want to favor small business over big business, you can mostly skip all those ‘loans’ and grants and applications and paperwork and worrying what is an expense under 7(a). And you can stop worrying about providing them with tools, and you can stop trying to force them to have geographic diversity that doesn’t make economic sense – get your geographic diversity, if you want it, from other industries.

Instead, make the tax code explicitly favor small business over big business via differentiating rates, including giving tax advantages to venture capital investments in early stage startups, which then get passed on to the business.

If you want to really help, give a tax break to the employees, so it applies even before the business turns a profit.

If you want to see more of something, tax it less. If you want less, tax it more. Simple.

The second is fixing a deeply stupid mistake that everyone, and I do mean everyone, realizes is a mistake that was made in the Trump tax cuts, but that due to Congress being Congress we have not yet fixed, and that is doing by all reports quite a lot of damage. It is the requirement in Section 174 of the Internal Revenue Code that software engineering salaries and other expenses related to research and experimental activities (R&E) can only be amortized over time rather than fully deducted.

The practical result of this is that startups and small businesses, that have negative cash flow, look to the IRS as if they are profitable, and then owe taxes. This is deeply, deeply destructive and stupid in one of the most high leverage places.
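A minimal sketch of how this happens, with made-up numbers. It assumes a five-year amortization schedule in which only 10% of R&E spending is deductible in the first year, plus a flat 21% corporate rate. Those parameters are illustrative assumptions here, not something the report or this post spells out, and the function `first_year_tax` is hypothetical.

```python
def first_year_tax(revenue, rd_expenses, other_expenses,
                   amortized_share=0.10, tax_rate=0.21):
    """Compare real cash flow with first-year taxable income.

    amortized_share: fraction of R&E spending deductible in year one
                     (assumed 10%, i.e. five years with a half-year start).
    tax_rate:        assumed flat corporate rate.
    """
    cash_flow = revenue - rd_expenses - other_expenses
    taxable_income = revenue - other_expenses - amortized_share * rd_expenses
    tax_owed = max(0.0, taxable_income) * tax_rate
    return cash_flow, taxable_income, tax_owed


# Hypothetical startup: $1.0M revenue, $1.2M of engineering salaries.
cash_flow, taxable_income, tax_owed = first_year_tax(1_000_000, 1_200_000, 0)
print(f"cash flow:      {cash_flow:>12,.0f}")
print(f"taxable income: {taxable_income:>12,.0f}")
print(f"tax owed:       {tax_owed:>12,.0f}")
```

Under these assumptions the company burns $200,000 in cash, yet shows roughly $880,000 of taxable income and owes about $184,800. With full expensing (`amortized_share=1.0`) the taxable income would be negative and the bill zero.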

From what I have heard, the story is that the two parties spent a long time negotiating a fix for it, it passed the House overwhelmingly, then in the Senate the Republicans decided they did not like the deal package of other items included with the fix, and wanted concessions, and the Democrats, in particular Schumer, said a deal is a deal.

This needs to get done. I would focus far more on that than all these dinky little subsidies.

They Took Our Jobs

As usual, Congress takes ‘the effect on jobs’ seriously. Workers must not be ‘left behind.’ And as usual, they are big on preparing.

So, what are you going to do about it, punk? They are to encourage some things:

- ‘Efforts to ensure’ that workers and other stakeholders are ‘consulted’ as AI is developed and deployed by end users. A government favorite.

- Stakeholder voices get considered in the development and deployment of AI systems procured or used by federal agencies. In other words, use AI, but not if it would take our jobs.

- Legislation related to training, retraining (drink!) and upskilling the private sector workforce, perhaps with business incentives, or to encourage college courses. I am going to go out on a limb and say that this pretty much never, ever works.

- Explore implications and possible ‘solutions to’ the impact of AI on the long-term future of work as general-purpose AI systems displace human workers, and develop a framework for policy response. So far, I’ve heard UBI, and various schemes that, disguised to varying degrees, amount to hiring people to dig holes and fill them up again, except you get private companies to pay for it.

- Consider legislation to improve U.S. immigration systems for high-skilled STEM workers in support of national security and to foster advances in AI across the whole country.

My understanding is that ideas like the first two are most often useless but also most often mostly harmless. Steps are taken to nominally ‘consult,’ most of the time nothing changes.

Sometimes, they are anything but harmless. You get NEPA. The similar provisions in NEPA were given little thought when first passed, then they grew and morphed into monsters strangling the economy and boiling the planet, and no one has been able to stop them.

If this applies only to federal agencies and you get the NEPA version, that is in a sense the worst possible scenario. The government’s ability to use AI gets crippled, leaving it behind. Whereas it would provide no meaningful check on frontier model development, or on other potentially risky or harmful private actions.

Applying it across the board could at the limit actually cripple American AI, in a way that would not serve as a basis for stopping international efforts, so that seems quite bad.

We should absolutely expand and improve high skill immigration, across all industries. It is rather completely insane that we are not doing so. There should at minimum be unlimited H-1Bs. Yes, it helps ‘national security’ and AI but also it helps everything and everyone and the whole economy and we’re just being grade-A stupid not to do it.

Language Models Offer Mundane Utility

They call this ‘high impact uses of AI.’

The report starts off saying existing law must apply to AI. That includes being able to verify that compliance. They note that this might not be compatible with opaque AI systems.

Their response if that happens? Tough. Rules are rules. Sucks to be you.

Indeed, they say to look not for ways to accommodate black box AI systems, but instead look for loopholes where existing law does not cover AI sufficiently.

Not only do they not want to ‘fix’ existing rules that impose, they want to ensure any possible loopholes are closed regarding information existing law requires. The emphasis is on anti-discrimination laws, which correlation machines that you can run tests on are not, by default, going to be in the habit of obeying.

So what actions are suggested here?

- Explore where we might need explainability requirements.

- Develop standards for AI in critical infrastructure.

- Better monitor energy use.

- Keep a closer eye on financial services providers.

- Keep a closer eye on the housing sector.

- Test and evaluate all systems before the government buys them, and also streamline the procurement process (yes these are one bullet point).

- Recognize the concerns of local news (drink!) and journalism that have resulted in fewer local news options in small towns and rural areas. Damn you, AI!

- Develop laws against AI-generated child sexual abuse material (CSAM) and deepfakes. There is a bullet here, are they going to bite it?

- Think of the children, consider laws to protect them, require ‘reasonable steps.’

If you are at a smaller company working on AI, and you are worried about SB 1047 or another law that specifically targets frontier models and the risk of catastrophic harm, and you are not worried about being required to ‘take reasonable steps’ to ‘protect children,’ then I believe you are very much worried about the wrong things.

You can say and believe ‘the catastrophic risk worries are science fiction and not real, whereas children actually exist and get harmed’ all you like. This is not where I try to argue you out of that position.

That does not change which proposed rules are far more likely to actually make your life a living hell and bury your company, or hand the edge to Big Tech.

Hint: It is the one that would actually apply to you and the product you are offering.

- Encourage public-private partnerships and other mechanisms to develop fraud detection services.

- Continue work on autonomous vehicle testing frameworks. We must beat the CCP (drink!) in the race to shape the vision of self-driving cars.

- Ban use of AI for social scoring to protect our freedom unlike the CCP (drink!)

- “Review whether other potential uses for AI should be either extremely limited or banned.”

Did you feel that chill up your spine? I sure did. The ‘ban use cases’ approach is big trouble without solving your real problems.

Then there’s the health care notes.

- Both support deployment of AI in health care and implement appropriate guardrails, including consumer protection, fraud and abuse prevention, and promoting accurate and representative data, ‘as patients must be front and center in any legislative efforts on healthcare and AI.’ My heart is sinking.

- Make research data available while preserving privacy.

- Ensure HHS and FDA ‘have the proper tools to weigh the benefits and risks of AI-enabled products so that it can provide a predictable regulatory structure for product developers.’ The surface reading would be: So, not so much with the products, then. I have been informed that it is instead likely they are using coded language for the FDA’s pre-certification program to allow companies to self-certify software updates. And yes, if your laws require that then you should do that, but it would be nice to say it in English.

- Transparency for data providers and for the training data used in medical AIs.

- Promote innovation that improves health outcomes and efficiencies. Examine reimbursement mechanisms and guardrails for Medicare and Medicaid, and broad application.

The refrain is ‘give me the good thing, but don’t give me the downside.’

I mean, okay, sure, I don’t disagree exactly? And yet.

The proposal to use AI to improve ‘efficiency’ of Medicare and Medicaid sounds like the kind of thing that would be a great idea if done reasonably and yet quite predictably costs you the election. In theory, if we could all agree that we could use the AI to figure out which half of medicine wasn’t worthwhile and cut it, or how to actually design a reimbursement system with good incentives and do that, that would be great. But I have no idea how you could do that.

For elections they encourage deployers and content providers to implement robust protections, and ‘to mitigate AI-generated content that is objectively false, while still preserving First Amendment rights.’ Okie dokie.

For privacy and liability, they kick the can, ask others to consider what to do. They do want you to know privacy and strong privacy laws are good, and AIs sharing non-public personal information is bad. Also they take a bold stand that developers or users who cause harm should be held accountable, without any position on what counts as causing harm.

Copyright Confrontation

The word ‘encouraging’ is somehow sounding more ominous each time I see it.

What are we encouraging now?

- A coherent approach to public-facing transparency requirements for AI systems, while allowing use case specific requirements where necessary and beneficial, ‘including best practices for when AI developers should disclose when their products are AI,’ but while making sure the rules do not inhibit innovation.

I am not sure how much more of this kind of language of infinite qualifiers and why-not-both framings I can take. For those taking my word for it, it is much worse in the original.

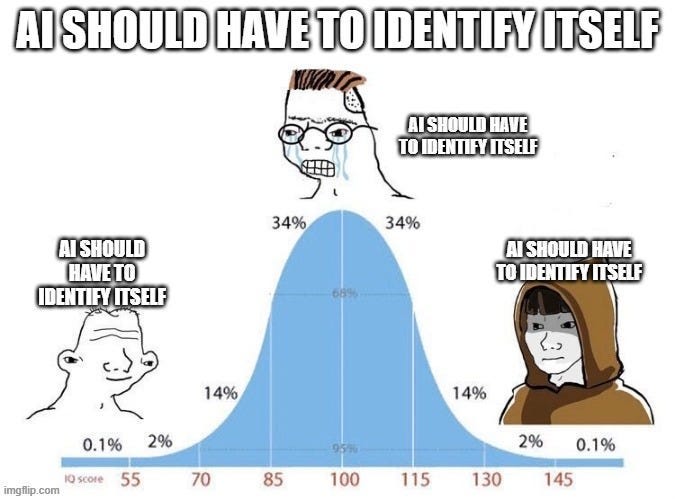

One of the few regulatory rules pretty much everyone agrees on, even if some corner cases involving AI agents are tricky, is ‘AI should have to clearly identify when you are talking to an AI.’

My instinctive suggestion for operationalizing the rule would be ‘if an AI sends a freeform message (e.g. not a selection from a fixed list of options, in any modality) that was not approved individually by a human (even if sent to multiple targets), in a way a reasonable person might think was generated by or individually approved by a human, it must be identified as AI-generated or auto-generated.’ Then iterate from there.
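As a starting point for that iteration, the suggested rule can be written as a simple predicate. This is a sketch under stated assumptions: the `Message` fields and the `must_label_as_ai` function are hypothetical names, and the ‘reasonable person’ judgment is reduced to a boolean that something else would have to supply.

```python
from dataclasses import dataclass


@dataclass
class Message:
    """Hypothetical description of one outgoing AI message."""
    freeform: bool                  # not a pick from a fixed list of options
    individually_approved: bool     # a human signed off on this exact message
    could_pass_as_human: bool       # a reasonable person might think a human
                                    # wrote or individually approved it


def must_label_as_ai(msg: Message) -> bool:
    """Return True when the proposed rule would require an AI label."""
    return (
        msg.freeform
        and not msg.individually_approved
        and msg.could_pass_as_human
    )


chatbot_reply = Message(freeform=True, individually_approved=False,
                        could_pass_as_human=True)
menu_option = Message(freeform=False, individually_approved=False,
                      could_pass_as_human=True)
reviewed_email = Message(freeform=True, individually_approved=True,
                         could_pass_as_human=True)

for name, msg in [("chatbot reply", chatbot_reply),
                  ("menu option", menu_option),
                  ("reviewed email", reviewed_email)]:
    print(name, "->", must_label_as_ai(msg))
```

All three conditions must hold at once, which is why a canned menu option or a message a human individually reviewed falls outside the rule. The tricky agent corner cases live almost entirely in how `could_pass_as_human` gets decided.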

As the report goes on, it feels like there was a vibe of ‘all right, we need to get this done, let’s put enough qualifiers on every sentence that no one objects and we can be done with this.’

How bad can it get? Here’s a full quote for the next one.

- “Evaluate whether there is a need for best practices for the level of automation that is appropriate for a given type of task, considering the need to have a human in the loop at certain stages for some high impact tasks.”

I am going to go out on a limb and say yes. There is a need for best practices for the level of automation that is appropriate for a given type of task, considering the need to have a human in the loop at certain stages for some high impact tasks.

For example, if you want to launch nuclear weapons, that is a high impact task, and I believe we should have some best practices for when humans are in the loop.

Seriously, can we just say things that we are encouraging people to consider? Please?

They also would like to encourage the relevant committees to:

- Consider telling federal employees about AI in the workplace.

- Consider transparency requirements and copyright issues about data sets.

- Review reports from the executive branch.

- Getting hardware to watermark generated media, and getting online platforms to display that information.

And just because such sentences need to be properly, shall we say, appreciated:

- “Consider whether there is a need for legislation that protects against the unauthorized use of one’s name, image, likeness, and voice, consistent with First Amendment principles, as it relates to AI. Legislation in this area should consider the impacts of novel synthetic content on professional content creators of digital media, victims of non-consensual distribution of intimate images, victims of fraud, and other individuals or entities that are negatively affected by the widespread availability of synthetic content.”

As opposed to, say, ‘Consider a law to protect people’s personality rights against AI.’

Which may or may not be necessary, depending on the state of current law. I haven’t investigated enough to know if what we have is sufficient here.

- Ensure we continue to ‘lead the world’ on copyright and intellectual property law.

I have some news about where we have been leading the world on these matters.

- Do a public awareness and educational campaign on AI’s upsides and downsides.

You don’t have to do this. It won’t do any good. But knock yourself out, I guess.

People Are Worried AI Might Kill Everyone Not Be Entirely Safe

Now to what I view as the highest stakes question. What about existential risks?

That is also mixed in with catastrophic mundane risks.

If I had to summarize this section, I would say that they avoid making mistakes and are headed in the right direction, and they ask good questions.

But on the answers? They punt.

The section is short and dense, so here is their full introduction.

In light of the insights provided by experts at the forums on a variety of risks that different AI systems may present, the AI Working Group encourages companies to perform detailed testing and evaluation to understand the landscape of potential harms and not to release AI systems that cannot meet industry standards.

This is some sort of voluntary testing and prior restraint regime? You are ‘encouraged’ to perform ‘detailed testing and evaluation to understand the landscape of potential harms,’ and you must then ‘meet industry standards.’ If you can’t, don’t release.

Whether or not that is a good regime depends on:

- Would companies actually comply?

- Would industry adopt standards that mean we wouldn’t die?

- Do we have to worry about problems that arise prior to release?

I doubt the Senators’ minds are ready for that third question.

Multiple potential risk regimes were proposed – from focusing on technical specifications such as the amount of computation or number of model parameters to classification by use case – and the AI Working Group encourages the relevant committees to consider a resilient risk regime that focuses on the capabilities of AI systems, protects proprietary information, and allows for continued AI innovation in the U.S.

Very good news. Capabilities have been selected over use case. The big easy mistake is to classify models based on what people say they plan to do, rather than asking what the model is capable of doing. That is a doomed approach, but many lobby hard for it.

The risk regime should tie governance efforts to the latest available research on AI capabilities and allow for regular updates in response to changes in the AI landscape.

Yes. As we learn more, our policies should adjust, and we should plan for that. Ideally this would be an easy thing to agree upon. Yet the same people who say ‘it is too early to choose what to do’ will also loudly proclaim that ‘if you give any flexibility to choose what to do later to anyone but the legislature, one must assume it will be used maximally badly.’ I too wish we had a much faster, better legislature that we could turn to every time we need any kind of decision or adjustment. We don’t.

All right. So no explicit mention of existential risk in the principles, but some good signs of the right regime. What are the actual suggestions?

Again, I am going to copy it all, since one must parse carefully.

- Support efforts related to the development of a capabilities-focused risk-based approach, particularly the development and standardization of risk testing and evaluation methodologies and mechanisms, including red-teaming, sandboxes and testbeds, commercial AI auditing standards, bug bounty programs, as well as physical and cyber security standards. The AI Working Group encourages committees to consider ways to support these types of efforts, including through the federal procurement system.

There are those who would disagree with this, who think the proper order is train, release then test. I do not understand why they would think that. No wise company would do that, for its own selfish reasons.

The questions should be things like:

- How rigorous should the testing requirements be?

- At what stages of training and post-training, prior to deployment?

- How should those change based on the capabilities of the system?

- How do we pick the details?

- What should you have to do if the system flunks the test?

For now, this is a very light statement.

- Investigate the policy implications of different product release choices for AI systems, particularly to understand the differences between closed versus fully open-source models (including the full spectrum of product release choices between those two ends of the spectrum).

Again, there are those that would disagree with this, who think the proper order is train, release then investigate the consequences. They think they already know all the answers, or that the answers do not matter. Once again, I do not understand why they would have good reason to think that.

Whatever position you take, the right thing to do is to game it out. Ask what the consequences of each regime would be. Ask what the final policy regime and world state would likely be in each case. Ask what the implications are for national security. Get all the information, then make the choice.

The only alternative that makes sense, which is more of a complementary approach than a substitute, is to define what you want to require. Remember what was said about black box systems. Yes, your AI system ‘wants to be’ a black box. You don’t know how to make it not a black box. If the law says you have to be able to look inside the box, or you can’t use the box? Well, that’s more of a you problem. No box.

You can howl about Think of the Potential of the box, why are you shutting down the box over some stupid thing like algorithmic discrimination or bioweapon risk or whatever. You still are not getting your box.

Then, if you can open the weights and still ensure the requirements are met, great, that’s fine, go for it. If not, not.

Then we get serious.

- Develop an analytical framework that specifies what circumstances would warrant a requirement of pre-deployment evaluation of AI models.

This does not specify whether this is requiring a self-evaluation by the developer as required in SB 1047, or requiring a third-party evaluation like METR, or an evaluation by the government. Presumably part of finding the right framework would be figuring out when to apply which requirement, along with which tests would be needed.

I am not going to make a case here for where I think the thresholds should be, beyond saying that SB 1047 seems like a good upper bound for the threshold necessary for self-evaluations, although one could quibble with the details of the default future path. Anything strictly higher than that seems clearly wrong to me.

- Explore whether there is a need for an AI-focused Information Sharing and Analysis Center (ISAC) to serve as an interface between commercial AI entities and the federal government to support monitoring of AI risks.

That is not how I would have thought to structure such things, but also I do not have deep thoughts about how to best structure such things. Nor do I see under which agency they would propose to put this center. Certainly there will need to be some interface where companies inform the federal government of issues in AI, as users and as developers, and for the federal government to make information requests.

- Consider a capabilities-based AI risk regime that takes into consideration short-, medium-, and long-term risks, with the recognition that model capabilities and testing and evaluation capabilities will change and grow over time. As our understanding of AI risks further develops, we may discover better risk-management regimes or mechanisms.

Where testing and evaluation are insufficient to directly measure capabilities, the AI Working Group encourages the relevant committees to explore proxy metrics that may be used in the interim.

There is some very welcome good thinking in here. Yes, we will need to adjust our regime over time. Also, that does not mean that until we reach our ‘final form’ the correct regime is no regime at all. You go with the best proxy measure you have, then when you can do better you switch to a better one, and you need to consider all time frames, although naming them all is a punt from the hard work of prioritization.

The question is, can you use testing and evaluation to directly measure capabilities sufficiently accurately? For which purposes and scenarios does this work or fail?

There are two ways testing and evaluation can fail: false positives and false negatives.

False positives are where you game the benchmarks, intentionally or otherwise. In general, I presume that the major labs (OpenAI, Anthropic and DeepMind for sure, and mostly Meta as well) will be good at not doing this, but that smaller competitors will often be gaming the system to look better, or not be taking care to avoid data contamination.

This can mostly be solved through keeping the testing details private, or continuously rotating them with questions known to not be online. But it also is not the issue here.

False negatives are far scarier.

We can again subdivide, and ask what ways things might go wrong. I took 10 or so minutes to brainstorm a list, which is of course highly incomplete.

These are vaguely ordered ‘ordinary failure, probably not too bad’ to ‘oh no.’

- The AI can do it, if you were better at prompting and writing custom instructions.

- Variant: The AI can do it, if you jailbreak it first, which you can totally do.

- Variant: You messed up the inputs or the answer key.

- The AI can do it, if you offer it the right additional context.

- The AI can do it, if you give it some extra scaffolding to work with.

- The AI can do it, if you give it a bit of fine tuning.

- The AI can do it, if you force it to embody the Golden Gate Bridge or something.

- The AI can do it, with help from a user with better domain knowledge.

- The AI can do it, but you won’t like the way it picked to get the job done.

- The AI can do it, but you have to trigger some hidden condition flag.

- The AI can do it, but the developers had it hide its capabilities to fool the test.

- The AI can do it, but realized you were testing it, so it hid its capabilities.

- The AI can do it, so the developers crippled the narrow capability that goes on evaluations, but it still has the broader capability you were actually trying to test.

- The AI can’t do this in particular, but you were asking the wrong questions.

- Variant: What the AI can do is something humans haven’t even considered yet.

- Variant: What you care about exists out of distribution, and this isn’t it.

- The AI can do it, but its solution was over your head and you didn’t notice.

- The AI escaped or took control or hacked the system during your test.

- The AI did the dangerous thing during training or fine-tuning. You are too late.

The more different tests you run, and the more different people run the tests, especially if you include diverse red teaming and the ability to probe for anything at all while well resourced, the better you will do. But this approach has some severe problems, and they get a lot more severe once you enter the realm of models plausibly smarter than humans and you don’t know how to evaluate the answers or what questions to ask.

If all you want are capabilities relative to another similar model, and you can put an upper bound on how capable the thing is, a lot of these problems mostly go away or become much easier, and you can be a lot more confident.

Anyway, my basic perspective is that you use evaluations, but that in our current state and likely for a while I would not trust them to avoid false negatives on the high end, if your system used enough compute and is large enough that it might plausibly be breaking new ground. At that point, you need to use a holistic mix of different approaches and an extreme degree of caution, and beyond a certain point we don’t know how to proceed safely in the existential risk sense.

So the question is, will the people tasked with this be able to figure out a reasonable implementation of these questions? How can we help them do that?

The basic principle here, however, is clear. As inputs, potential capabilities and known capabilities advance, we will need to develop and deploy more robust testing procedures, and be more insistent upon them. From there, we can talk price, and adjust as we learn more.

There are also two very important points that wait for the national security section: A proper investigation into defining AGI and evaluating how likely it is and what risks it would pose, and an exploration into AI export controls and the possibility of on-chip AI governance. I did not expect to get those.

Am I dismayed that the words existential and catastrophic only appear once each and only in the appendix (and extinction does not appear)? That there does not appear to be a reference in any form to ‘loss of human control’ as a concept, and so on? That ‘AGI’ does not appear until the final section on national security, although they ask very good questions about it there?

Here is the appendix section where we see mentions at all (bold is mine), which does ‘say the line’ but does seem to have rather a missing mood, concluding essentially (and to be fair, correctly) that ‘more research is needed’:

The eighth forum examined the potential long-term risks of AI and how best to encourage development of AI systems that align with democratic values and prevent doomsday scenarios.

Participants varied substantially in their level of concern about catastrophic and existential risks of AI systems, with some participants very optimistic about the future of AI and other participants quite concerned about the possibilities for AI systems to cause severe harm.

Participants also agreed there is a need for additional research, including standard baselines for risk assessment, to better contextualize the potential risks of highly capable AI systems. Several participants raised the need to continue focusing on the existing and short term harms of AI and highlighted how focusing on short-term issues will provide better standing and infrastructure to address long-term issues.

Overall, the participants mostly agreed that more research and collaboration are necessary to manage risk and maximize opportunities.

Of course all this obfuscation is concerning.

It is scary that such concepts are that-which-shall-not-be-named.

You-know-what still has its hands on quite a few provisions of this document. The report was clearly written by people who understand that the stakes are going to get raised to very high levels. And perhaps they think that by not saying you-know-what, they can avoid all the nonsensical claims that they are worried about ‘science fiction’ or ‘hypothetical risks’ or what not.

That’s the thing. You do not need the risks to be fully existential, or to talk about what value we are giving up 100 or 1,000 years from now, or any ‘long term’ arguments, or even the fates of anyone not already alive, to make it worth worrying about what could happen to all of us within our lifetimes. The prescribed actions change a bit, but not all that much, especially not yet. If the practical case works, perhaps that is enough.

I am not a politician. I do not have experience with similar documents and how to correctly read between the lines. I do know this report was written by committee, causing much of this dissonance. Very clearly at least one person on the committee cared and got a bunch of good stuff through. Also very clearly there was sufficient skepticism that this wasn’t made explicit. And I know the targets are other committees, which muddies everything further.

Perhaps, one might suggest, all this optimism is what they want people like me to think? But that would imply that they care what people like me think when writing such documents.

I am rather confident that they don’t.

I Declare National Security

I went into this final section highly uncertain what they would focus on. What does national security mean in this context? There are a lot of answers that would not have shocked me.

It turns out that here it largely means help the DOD:

- Bolstering cyber capabilities.

- Developing AI career paths for DOD.

- Money for DOD.

- Efficiently handle security clearances, improve DOD hiring process for AI talent.

- Improve transfer options and other ways to get AI talent into DOD.

I would certainly reallocate DOD money for more of these things if you want to increase the effectiveness of the DOD. Whether to simply throw more total money at DOD is a political question and I don’t have a position there.

Then we throw in an interesting one?

- Prevent LLMs leaking or reconstructing sensitive or confidential information.

Leaking would mean it was in the training data. If so, where did that data come from? Even if the source was technically public and available to be found, ‘making it easy on them’ is very much a thing. If it is in the training data you can probably get the LLM to give it to you, and I bet that LLMs can get pretty good at ‘noticing which information was classified.’

Reconstructing is more interesting. If you de facto add ‘confidential information even if reconstructed’ to the list of catastrophic risks alongside CBRN, as I presume some NatSec people would like, then that puts the problem for future LLMs in stark relief.

The way that information is redacted usually contains quite a lot of clues. If you put AI on the case, especially a few years from now, a lot of things are going to fall into place. In general, a capable AI will start being able to figure out various confidential information, and I do not see how you stop that from happening, especially when one is not especially keen to provide OpenAI or Google with a list of all the confidential information their AI is totally not supposed to know about? Seems hard.

A lot of problems are going to be hard. On this one, my guess is that among other things the government is going to have to get a very different approach to what is classified.

- Monitor AI and especially AGI development by our adversaries.

I would definitely do that.

- Work on a better and more precise definition of AGI, a better measurement of how likely it is to be developed and the magnitude of the risks it would pose.

Yes. Nice. Very good. They are asking many of the right questions.

- Explore using AI to mitigate space debris.

You get an investigation into using AI for your thing. You get an investigation into using AI for your thing. I mean, yeah, sure, why not?

- Look into all this extra energy use.

I am surprised they didn’t put extra commentary here, but yeah, of course.

- Worry about CBRN threats and how AI might enhance them.

An excellent thing for DOD to be worried about. I have been pointed to the question here of what to do about Restricted Data. We automatically classify certain information, such as info about nuclear weapons, as it comes into existence. If an AI is not allowed to generate outputs containing such information, and there is certainly a strong case why you would want to prevent that, this is going to get tricky. No question the DOD should be thinking carefully about the right approach here. If anything, AI is going to be expanding the range of CBRN-related information that we do not want easily shared.

- Consider how CBRN threats and other advanced technological capabilities interact with need for AI export controls, explore whether new authorities are needed, and explore feasibility of options to implement on-chip security mechanisms for high-end AI chips.

- “Develop a framework for determining when, or if, export controls should be placed on powerful AI systems.”

Ding. Ding. Ding. Ding. Ding.

If you want the ability to choke off supply, you target the choke points you can access.

That means either export controls, or it means on-chip security mechanisms, or it means figuring out something new.

This is all encouraging another group to consider maybe someone doing something. That multi-step distinction covers the entire document. But yes, all the known plausibly effective ideas are here in one form or another, to be investigated.

The language here on AI export controls is neutral, asking both when and if.

At some point on the capabilities curve, national security will dictate the need for export controls on AI models. That is incompatible with open weights on those models, or with letting such models run locally outside the export control zone. The proper ‘if’ is whether we get to that point, so the right question is when.

Then they go to a place I had not previously thought about us going.

- “Develop a framework for determining when an AI system, if acquired by an adversary, would be powerful enough that it would pose such a grave risk to national security that it should be considered classified, using approaches such as how DOE treats Restricted Data.”

Never mind debating open model weights. Should AI systems, at some capabilities level, be automatically classified upon creation? Should the core capabilities workers, or everyone at OpenAI and DeepMind, potentially have to get a security clearance by 2027 or something?

- Ensure federal agencies have the authority to work with allies and international partners and agree to things. Participate in international research efforts, ‘giving due weight to research security and intellectual property.’

Not sure why this is under national security, and I worry about the emphasis on friendlies, but I would presume we should do that.

- Use modern data analytics to fight illicit drugs including fentanyl.

Yes, use modern data analytics. I notice they don’t mention algorithmic bias issues.

- Promote open markets for digital goods, prevent forced technology transfer, ensure the digital economy ‘remains open, fair and competitive for all, including for the three million American workers whose jobs depend on digital trade.’

Perfect generic note to end on. I am surprised the number of jobs is that low.

They then give a list of who was at which forum and summaries of what happened.

Some Other People’s Reactions

Before getting to my takeaways, here are some other reactions.

These are illustrative of five very different perspectives, and also the only five cases in which anyone said much of anything about the bill at all. And I love that all five seem to be people who actually looked at the damn thing. A highly welcome change.

- Peter Wildeford looks at the overall approach. His biggest takeaway is that this is a capabilities-based approach, which puts a huge burden on evaluations, and he notices some other key interactions too, especially funding for BIS and NIST.

- Tim Fist highlights some measures he finds fun or exciting. Like Peter he mentions the call for investigation of on-chip security mechanisms.

- Tyler Cowen’s recent column contained the following: “Fast forward to the present. Senate Majority Leader Chuck Schumer and his working group on AI have issued a guidance document for federal policy. The plans involve a lot of federal support for the research and development of AI, and a consistent recognition of the national-security importance of the US maintaining its lead in AI. Lawmakers seem to understand that they would rather face the risks of US-based AI systems than have to contend with Chinese developments without a US counterweight. The early history of Covid, when the Chinese government behaved recklessly and nontransparently, has driven this realization home.”

- The context was citing this report as evidence that the AI ‘safety movement’ is dead, or at least that a turning point has been reached and it will fade into obscurity (and the title has now been changed to better reflect the post).

- Tyler is right that there is much support for ‘innovation,’ ‘R&D’ and American competitiveness and national security. But this is as one would expect.

- My view is that, while the magic words are not used, the ‘AI safety’ concerns are very much here, including all the most important policy proposals, and it even includes one bold proposal I do not remember previously considering.

- Yes, I would have preferred if the report had spoken more plainly and boldly, here and also elsewhere, and the calls had been stronger. But I find it hard not to consider this a win. At bare minimum, it is not a loss.

- Tyler has not, that I know of, given further analysis on the report’s details.

- R Street’s Adam Thierer gives an overview.

- He notices a lot of the high-tech pork (e.g. industrial policy) and calls for investigating expanding regulations.

- He notices the kicking of all the cans down the road, agrees this makes sense.

- He happily notices no strike against open source, which is only true if you do not work through the implications (e.g. of potentially imposing export controls on future highly capable AI systems, or even treating them as automatically classified Restricted Data).

- Similarly, he notes the lack of a call for a new agency, whereas this instead will do everything piecemeal. And he is happy that ‘existential risk lunacy’ is not mentioned by name, allowing him not to notice it either.

- Then he complains about the report not removing enough barriers from existing laws, regulations and court-based legal systems, but agrees existing law should apply to AI. Feels a bit like trying to have the existing law cake to head off any new rules and call for gutting what already exists too, but hey. He offers special praise for the investigation to look for innovation-stifling rules.

- He notices some of the genuinely scary language, in particular “Review whether other potential uses for AI should be either extremely limited or banned.”

- He calls for Congress to actively limit Executive discretion on AI, which seems like ‘AI Pause now’ levels of not going to happen.

- He actively likes the idea of a public awareness campaign, which surprised me.

- Finally Adam seems to buy into the view that screwing up Section 230 is the big thing to worry about. I continue to be confused why people think that this is going to end up being a problem in practice. Perhaps it is the Sisyphean task of people like R Street to constantly worry about such nightmare scenarios.

- He promised a more detailed report coming, but I couldn’t find one.

- The Wall Street Journal editorial board covers it as ‘The AI Pork Barrel Arrives.’

They quote Schumer embarrassing himself a bit:

Chuck Schumer: If China is going to invest $50 billion, and we’re going to invest in nothing, they’ll inevitably get ahead of us.

Padme: You know the winner is not whoever spends the most public funds, right?

You know America’s success is built on private enterprise and free markets, right?

You do know that ‘we’ are investing quite a lot of money in AI, right?

You… do know… we are kicking China’s ass on AI at the moment, right?

WSJ Editorial Board: Goldman Sachs estimates that U.S. private investment in AI will total $82 billion next year—more than twice as much as in China.

We are getting quite a lot more than twice as much bang for our private bucks.

And this comes on the heels of the Chips Act money.

So yes, I see why the Wall Street Journal Editorial Board is thinking pork.

WSJ Editorial Board: Mr. Schumer said Wednesday that AI is hard to regulate because it “is changing too quickly.” Fair point. But then why does Washington need to subsidize it?

The obvious answer, mostly, is that it doesn’t.

There are some narrow areas, like safety work, where one can argue that there will by default be underinvestment in public goods.

There is a need to fund the government’s own adaptation of AI, including for defense, and to adjust regulations and laws and procedures for the new world.

Most of the rest is not like that.

WSJ: Now’s not a time for more pork-barrel spending. The Navy could buy a lot of ships to help deter China with an additional $32 billion a year.

This is where they lose me. Partly because a bunch of that $32 billion is directly for defense or government services and administration. But also because I see no reason to spend a bunch of extra money on new Navy ships that will be obsolete in the AI era, especially given what I have heard about our war games where our ships are not even useful against China now. The Chips Act money is a far better deterrent. We also would have accepted ‘do not spend the money at all.’

Mostly I see this focus as another instance of the mainstream not understanding, in a very deep way, that AI is a Thing, even in the economic and mundane utility senses.

Conclusions and Main Takeaways

There was a lot of stuff in the report. A lot of it was of the form ‘let’s do good thing X, without its downside Y, taking into consideration the vital importance of A, B and C.’

It is all very ‘why not both,’ embrace the upside and prevent the downside.

Which is great, but of course easier said (or gestured at) than done.

This is my attempt to assemble what feels most important. Hopefully I am not forgetting anything:

- The Schumer Report is written by a committee for other committees to then do something. Rather than one big bill, we will get a bunch of different bills.

- They are split on whether to take existential risk seriously.

- As a result, they include many of the most important proposals on this.

- Requiring safety testing of frontier models before release.

- Using compute or other proxies if evaluations are not sufficiently reliable.

- Export controls on AI systems.

- Treating sufficiently capable AI systems as Restricted Data.

- Addressing CBRN threats.

- On-chip governance for AI chips.

- The need for international cooperation.

- Investigate the definition of AGI, and the risks it would bring.

- Also as a result, they present them in an ordinary, non-x-risk context.

- That ordinary context indeed does justify the proposals on its own.

- Most choices regarding AI Safety policies seem wise. The big conceptual danger is that the report emphasizes a capabilities-based approach via evaluations and tests. It does mention the possibility of using compute or other proxies if our tests are inadequate, but I worry a lot about overconfidence here. This seems like the most obvious way that this framework goes horribly wrong.

- A second issue is that this report presumes that only release of a model is dangerous, that otherwise it is safe. Which for now is true, but this could change, and it should not be an ongoing assumption.

- There is a broad attitude that the rules must be flexible, and adapt over time.

- They insist that AI will need to obey existing laws, including those against algorithmic discrimination and all the informational disclosure requirements involved.

- They raise specters regarding mundane harm concerns and AI ethics, both in existing law and proposed new rules, that should worry libertarians and AI companies far more than laws like SB 1047 that are aimed at frontier models and catastrophic risks.

- Calls for taking ‘reasonable steps’ to ‘protect children’ should be scary. They are likely not kidding around about copyright, CSAM or deepfakes.

- Calls for consultation and review could turn into a NEPA-style nightmare. Or they might turn out to be nothing. Hard to tell.

- They say that if black box AI is incompatible with existing disclosure requirements and calls for explainability and transparency, then their response is: Tough.

- They want to closely enforce rules on algorithmic discrimination, including the associated disclosure requirements.

- There are likely going to be issues with classified material.

- The report wants to hold developers and users liable for AI harms, including mundane AI harms.

- The report calls for considerations of potential use case bans.

- They propose to spend $32 billion on AI, with an unknown breakdown.

- Schumer thinks public spending matters, not private spending. It shows.

- There are many proposals for government adoption of AI and building of AI-related state capacity. This seemed like a key focus point.

- These mostly seem very good.

- Funding for BIS and NIST is especially important and welcome.

- There are many proposals to ‘promote innovation’ in various ways.

- I do not expect them to have much impact.

- There are proposals to ‘help small business’ and encourage geographic diversity and other such things.

- I expect these are pork and would go to waste.

- There is clear intent to integrate AI closely into our critical infrastructure and into the Department of Defense.

This is far from the report I would have wanted written. But it is less far than I expected before I looked at the details. Interpreting a document like this is not my area of expertise, but in many ways I came away optimistic. The biggest downside risks I see are that the important proposals get lost in the shuffle, or that some of the mundane harm related concerns get implemented in ways that cause real problems.

If I were a lobbyist for tech companies looking to avoid expensive regulation, especially if I were trying to help relatively small players, I would focus a lot more on heading off mundane-harm concerns like those that have hurt so many other areas. That seems like by far the bigger commercial threat, if you do not care about the risks on any level.

3 comments


comment by Emrik (Emrik North) · 2024-05-25T22:14:25.675Z

As usual, I am torn on chips spending. Hardware progress accelerates core AI capabilities, but there is a national security issue with the capacity relying so heavily on Taiwan, and our lead over China here is valuable. That risk is very real.

With how rationalists seem to be speaking about China recently, I honestly don't know what you mean here. You literally use the words "national security issue", how am I not supposed to interpret that as being parochial?

And why are you using language like "our lead over China"? Again, parochial. I get that the major plurality of LW readers are in the USA, but as of 2023 it's still just 49%.

How would they spark an intergroup conflict to investigate? Well, the 22 boys were divided into two groups of 11 campers, and—

—and that turned out to be quite sufficient.

I hate to be nitpicky, but may I request that you spend 0.2 oomph of optimization power on trying to avoid being misinterpreted as "boo China! yay USA!" These are astronomic abstractions that cover literally ~1.7B people, and there are more effective words you can use if you want to avoid increasing ethnic tension / superpower conflict.

Replies from: andrew-burns

↑ comment by Andrew Burns (andrew-burns) · 2024-05-26T05:40:06.172Z

I'm curious what these "more effective words" are. This isn't asked flippantly. Clearly there is a geopolitical dimension to the AI issue and Zvi lives in the U.S. Even as a rationalist, how should Zvi talk about the issue? China and the U.S. are hostile to each other and will each likely use AGI to (at the very least) disempower the other, so if you live in the U.S., first, you hope that AGI doesn't arrive until alignment is solved, and second, you hope that the U.S. gets it first.

comment by Askwho · 2024-05-24T15:20:01.863Z

AI reading of this post:

https://askwhocastsai.substack.com/p/the-schumer-report-rtfb-by-zvi-mowshowitz