Comments

I have a nightmare disorder which can absolutely ruin my week, but I wouldn't really call myself "literally brain damaged".

Huh, yeah, this is basically the opposite of how things work for me?

I get into spirals a lot. I can have a positive spiral: I sleep well, get out of bed feeling rested, start the day with a small easy task, get a feeling of accomplishment, feel more confident about starting a bigger task, eventually get into flow, have a very productive day, by 7pm I'm satisfied and decide to start cooking dinner, so I'm ready to go to sleep at a reasonable hour and have another great day tomorrow.

I also get into negative spirals: I wake up feeling tired because I had a nightmare, pick up my phone and scroll social media in bed, encounter some upsetting news, start playing video games to distract myself, suddenly it's 11pm and I forgot to eat so I stay up late to get groceries and cook, so I don't get much sleep, so the next day I'm tired again and irritated at myself, so I'm more likely to get back on social media...

I can't brush my teeth only 99% of the time. If I forget once, then the habit is broken, and I'll forget again tomorrow. Then I'll have forgotten for three days and it won't even be on my mind anymore. Soon it'll be psychologically difficult to think about brushing my teeth because I feel bad about the fact I haven't done it in a week. Negative spirals.

There's just a lot of things in my life that I need to be 100% absolutely consistent with, no exceptions, and it's worth it for me to dip into resources to make that happen. 99% isn't a stable number; it's too easy for it to become 98% and too easy for that to become 1%. If I notice a 100% thing becoming a 99% thing, I need to treat that as very urgent and fix it before a spiral starts.

(Yes, this is very delicate and a terrible system which creates gigantic setbacks in my life whenever there's a change, like needing to move house. But I have to acknowledge that that's how I work so that I can fix it.)

I'm surprised you decided not to prioritise exercise!

I realised reading this comment that when I ask myself, "How have I become more hardworking?" I don't think about exercise at all. But if I asked myself the mirror question - "How have I become less hardworking?" - I think about the time when I accidentally stopped exercising (because I moved further away from a dojo and couldn't handle the public transit - and then, some years later, because of confining myself to my apartment during the pandemic) and it was basically like taking a sledgehammer to my mental health. I can't recommend exercise strongly enough; it helps with sleep, mood, motivation, energy, everything. (Not everyone experiences this, but enough people do that it seems very much worth trying!)

Okay, I'm not opposed to the project of inventing fun games with no one winner - I mean, I enjoy Dungeons & Dragons - but I think games with one winner are awesome. I like the discipline imposed by them.

I'm not sure I can put into words what I mean by discipline; it's related to the nameless virtue. But, for example, sometimes in a computer game I find myself thinking, "wow this game is badly designed; it'd be more fun and realistic if it rewarded a good balance of archers and spearmen and cavalry, but I'm pretty sure the archer unit is so cheap and high ground is so accessible that I can just spam the archer unit and win". I then have a choice; I can do the thing that seems fun and elegant to me and build a realistic army, or I can spam the archer unit and win. I can either complain, or I can win.

To the extent that I'm stretching a rationalist muscle in games at all - and often I'm not, I'm just having fun, not everything I do needs to be justified as rational and virtuous - I think it's that muscle: "ignore the temptation to adopt a really cool and fun map, and instead use a map that actually describes the territory". This requires a certain harshness; I can do stuff that makes me feel good and lose and keep losing until I change my strategy. I can complain, "The designers clearly intended this thing to be a powerful strategy, so it should work!" - and if it isn't actually the best strategy, I will lose. This teaches me to abandon what "should" be true, and pay attention to what actually is true.

The original designers' intent very rarely comes across in games without very extensive testing and rebalancing IME. Maybe the game is designed so that you need to do X to win, but the very best competitive players will often create a metagame where everyone ignores X and does Y to win. Preventing this from happening requires so much playtesting, and then if your game gets a big enough audience that there's a semipro or pro scene, it generally happens anyway. It's like trying to beat the market; you're just one game designer, and arrayed against you are the forces of thousands of smart people all trying to win your game. Unless you write "you can't win without doing X" into the rulebook, or unless your game is very very simple, someone will find a way to win without doing X. (Maybe this isn't true for board games, but I think it's pretty true about video games, which are more of my experience. Esports games are constantly patched to tune down the dominance of whatever the latest powerful strat is. Card games also often have the problem of a card needing to be changed because, in combination with some other card, it's being used in an unexpectedly powerful way. So I think it's probably applicable to board games that are played competitively.)

I think your project is cool, but I also like games with a winner and a loser; I don't think you need to explain why they're bad to explain why your thing is good!

I think answering "how should you behave when you're sharing resources with people with different values?" is one of the projects of contractarian ethics, which is why I'm a fan.

A known problem in contractarian ethics is how people with more altruistic preferences can get screwed over by egalitarian procedures that give everyone's preferences equal weight (like simple majority votes). For example, imagine the options in the poll were "A: give one ice cream to everyone" and "B: give two ice creams, only to the people whose names begin with consonants". If Selfish Sally is in the minority, she'll probably defect because she wants ice cream. When Altruistic Ally is in the minority, she reasons that more total utility is created by option B - since consonant names are in the majority, and they get twice as much ice cream - so she won't defect and she'll miss out on ice cream. Maybe she's even totally fine with this outcome, because she has tuistic preferences (she prefers other people to be happy, not as a way of negotiating with them, simply as an end-in-itself) satisfied by giving Sally ice cream. But maybe this implies that, iterated over many such games, nice altruistic kind people will systematically be given less ice cream than selfish mean people! That might not be a characteristic that we want our moral system to have; we might even want to reward people for being nice.

So we could tell Ally to disregard her tuistic preference (her preference for Sally to receive ice cream as an end-in-itself) and vote like a Homo economicus, since that's what Sally will do and we want a fair outcome for Ally. But maybe then, iterated over many games, Ally won't be happy with the actual outcomes involved - because we're asking her to disregard genuine altruistic preferences that she actually has, and she might be unhappy if someone else gets screwed over by that.
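To make the ice cream poll concrete, here's a minimal sketch of the arithmetic. It assumes that utility is just "number of ice creams", that everyone's ice cream counts equally, and that the group has five people; the names other than Ally and Sally, and the function names, are made up for illustration.

```python
VOWELS = set("AEIOU")

def ice_creams(name: str, option: str) -> int:
    """Ice creams one person receives under poll option 'A' or 'B'."""
    if option == "A":
        return 1  # A: one ice cream for everyone
    # B: two ice creams, only for names beginning with a consonant
    return 0 if name[0].upper() in VOWELS else 2

def total_ice_creams(names: list[str], option: str) -> int:
    """Total ice creams handed out to the whole group under an option."""
    return sum(ice_creams(n, option) for n in names)

def selfish_vote(name: str) -> str:
    """Vote for whichever option gives *me* the most ice cream."""
    return max("AB", key=lambda opt: ice_creams(name, opt))

def altruistic_vote(names: list[str]) -> str:
    """Vote for whichever option gives the group the most ice cream."""
    return max("AB", key=lambda opt: total_ice_creams(names, opt))

# Hypothetical group: one vowel name (Ally) and four consonant names.
group = ["Ally", "Sally", "Bob", "Carol", "Dave"]

totals = {opt: total_ice_creams(group, opt) for opt in "AB"}
print(totals)                  # total ice cream under each option
print(selfish_vote("Ally"))    # what Ally would pick if she only counted herself
print(altruistic_vote(group))  # what Ally picks when she counts everyone
```

In this toy group, option B produces more ice cream overall, but Ally gets none of it, which is the sense in which she "misses out" by voting for total utility.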

In this game you have an additional layer of complexity, since some people might have made their initial vote by asking, "What value do I think has the most universal benefit for everyone?" and others might have made the vote by asking, "What's my personal favourite value?" - Those people are then facing very different moral decisions when asked, "Do you want to force your value on everyone else?"

If people who made their initial decision by considering the best value for everyone are also less likely to choose to force their value on everyone, while people who made their initial decision selfishly are also more likely to choose to force it on others, then we'd have an interesting problem. Luckily it looks similar to this existing known problem; unluckily, I don't think the contractarians have a great solution for us yet.

OK, "top level post on the biology of sexual dimorphism in primates" added to my todo list (though it might be a while since I'm working on another sequence). Now that I know you're a bacteria person, this makes more sense! I'm a human evolution person, so you wrote it very differently to how I would've. (If you'd like an introductory textbook, I always recommend Laland and Brown's Sense and Nonsense.) I don't know as much about the very earliest origins in bacteria, so that was super interesting to read about!

The stuff about adding a third sex reminded me somewhat of the principle that it's unstable to have an imbalance between the sexes; even if it would be optimal for the tribe to have one male and many females, an individual mother in such a gender-imbalanced tribe would maximise her number of grandchildren by having a male child. I've seen this used as the default example to prod undergrads out of group-selection wrongthink, so I'm curious if this is as universally known as I think it is.
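Here's a rough sketch of why the imbalance is unstable. The only fact it uses is that every child has exactly one father and one mother; the tribe sizes and birth counts are invented, and it assumes sons and daughters cost the same to raise and that mating is otherwise random.

```python
from fractions import Fraction

def expected_children(males: int, females: int, births: int) -> dict:
    """Average number of children per adult of each sex.

    Every child has exactly one father and one mother, so the same `births`
    are shared out among the males and, separately, among the females.
    """
    return {
        "son": Fraction(births, males),
        "daughter": Fraction(births, females),
    }

# Hypothetical tribe with one male and ten females, producing 20 children.
skewed = expected_children(males=1, females=10, births=20)

# Hypothetical tribe with a balanced sex ratio and the same number of births.
balanced = expected_children(males=10, females=10, births=20)

print(skewed)
print(balanced)
```

In the skewed tribe a son is expected to give his mother ten times as many grandchildren as a daughter would, so any mother who has sons wins out until the ratio drifts back towards even, where the advantage disappears.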

I can't tell you whether to edit the post, but I think it's very common for people to act/joke/suggest as though the study of human evolution inevitably leads towards racist/sexist conclusions. In part, this is because fields like anthropology have a terrible history with sweeping conclusions like "women just evolved to be weaker" and other sins like "let's make a categorization system that ranks every race", which has certainly been used by unscientific movements like Red Pill. But anthropology has done a lot to clean up its act, as a field, and I don't think this is ground we want to cede. The study of human evolutionary biology has only ever confirmed, for me, that discrimination on grounds like race and sex is fundamentally misguided. So I try to push back gently, when I see it, against the implication that studying sexual selection or sexual dimorphism will lead towards bigoted beliefs. Studying science will generally lead away from bigoted beliefs, because bigoted beliefs are generally not true. (I don't intend this to come across as harsh criticism, either! I just want to explain why I think this is worth caring about.)

And yes, many people will upvote this kind of post because I think folks appreciate the virtue of scholarship. We have a lot of people who can write down their ideas on how to improve your thinking, but fewer who can come in from a specific field and explain the object-level in detail. You deserve many upvotes for citing your sources!

it appears there is no heart react on LessWrong, which is sad because I want to give this comment a lil <3

Well, yes. The correct response to noticing "it's really convenient to believe X, so I might be biased towards X" isn't to immediately believe not-X. It's to be extra careful to use evidence and good reasoning to figure out whether you believe X or not-X.

I'm the kind of person who seems to do really badly in typical office environments. I also found that while working on my attempt at a startup, I was very easily able to regularly put in 16-hour days and wasn't really bothered by it at all. But then my startup attempt totally failed, so maybe I wasn't actually doing very good work?

Regardless, what works for me is basically all based on lots of tested self-knowledge.

For example, I like making my environment hyper-comfy. I do better work in my pyjamas, on my laptop in bed, with a mug of coffee in hand. I also do great work curled up under a tree in the park with some cake. This is totally the opposite of what I've heard from other people - that putting on a suit and going into the office helps them. But for me, it's like anything my brain categorises as "work" is aversive and I don't want to do it, but anything my brain categorises as "not work" is fun and easy. It turns out that doing graphic design in my bed is "not work", but doing it in anything resembling an office building is "work". For me. You might have the opposite experience! So I think my advice is less "put your pyjamas on" and more "acquire a level of self-knowledge where you know whether you work better in pyjamas or in a suit, because you've checked".

I think it's also really important to ignore all the bad techniques that people suggest. For example, for years I followed advice like "tell yourself that if you just do the task for only five minutes, you can reward yourself with chocolate afterwards". I think this is terrible and bad and caused long-term damage to my productivity, because it essentially involves accepting this framework of "work is suffering and aversive and terrible, but if you suffer through it, then your life can be nice again afterwards". This is a bad framing! It's really important to me to affirm that work is rewarding or fulfilling or fun, it's something I'm interested in or passionate about, I want to do it, etc - and if I don't believe those things, I need to notice that and treat it as an alarm and dig into what the problem is, not take it for granted like a background truth! I basically spent years reinforcing the belief "if getting something done is important for your goals, then it's Work, which means it's inherently unpleasant and aversive" and I now think that's just about the worst thing you can believe. I notice myself feeling aversive about doing something for no other reason than that doing that thing would accomplish my long-term goals. But the same task, if categorised as "not work", becomes fun and enjoyable. Now I really try to avoid the bad strategy, and instead think, "I'll sit under the trees in this beautiful park and get this task done on my laptop while munching these delicious chocolates and it'll be great," NOT "I'll just finish this hateful aversive task and then I can have chocolate and go to the park afterwards".

I also now actively try to avoid advice like "take breaks to avoid burnout" because I've noticed that it hurts me; if I mentally categorise a task as "the sort of unpleasant work that I'd need to take breaks from" then I'll be less likely to do it. Just telling myself "this task isn't actually unpleasant, so I don't want to take any breaks from it, because I'm enjoying doing the task more than I'd enjoy taking a break" seems like it sometimes just... makes that thing true. And also, taking "breaks" seems to mostly be harmful to me, because I end up doing things (like mindlessly scrolling Twitter) which are net harmful to my mental state. It's much more important for me to actively pursue enjoyable things, and to very rarely try to "rest", because my brain seems to think that being really miserable counts as "rest" so long as I just avoid doing anything. Joy requires work - even if it's just "if I want to experience the joy of going to the Botanic Gardens, I gotta shower and put shoes on".

And I also strongly experience something I call "momentum" - doing one task makes it easier to do another, and succeeding at several tasks makes it seem more fun and enjoyable to do the next task. So, rather than taking a break, I need tasks that have low activation costs and high completion chances, to bootstrap a success spiral. Things like having Duolingo on my phone, so when my brain tells me it's tired, I have a small easy task I can do - "complete a Duolingo lesson". Then I don't fall into the negative spiral - lying in bed and scrolling Twitter, which makes me feel worse, which makes me feel a stronger need to take a break, which makes me scroll Twitter more, which makes me feel worse, etc. Instead I get into a positive spiral - completing my Duolingo lesson successfully, which makes me feel accomplished and a bit energised, which makes it easier to start a second task, which gets me into flow, so it's easier to start a third task...

But some people would be incredibly miserable if they tried to make that work for them, and would literally hospitalise themselves if they tried to minimise the number of breaks they take and also rest less, so, like, YMMV and you should just test the things that actually work for you. I suspect I'm weird. I also suspect I'm literally never going to be able to make offices work for me, and normal employment kinda sucks. But I only know that about myself because I tried being employed in an open office and I was like wow, this sucks so much!

If things aren't working yet, you should probably test more things! Get all the ideas, figure out your specific quirks, test out what works for you (which might be the literal opposite of what works for someone else), try to be actively curious in the "hmm I wonder what would happen if I poked this with a stick?" sense.

If I could work extremely hard doing things I don't like, without any burnouts, eat only healthy food without binge eating spirals, honestly enjoy doing exercises, have only meaningful rest without exhausting my willpower and generally be fully intellectually and emotionally consistent, completely subjugating my urges to my values... but ONLY by being really mean and cruel and careless to myself...

Man, that would suck! That would be a really inconvenient world! That would be a world where I'm forced to choose either "I don't want to be mean to myself, even if I could save lots of people's lives by doing that, so I'm just going to deliberately leave all those people to die" or "I'm going to be mean to myself because I think it's ethically obligatory", and I really don't want to make that choice!

I much prefer a world where a choice like "I'm going to be nice and careful to myself because actually that's the best way to be more productive, and being mean isn't sustainable" is an option on the table. Way more convenient. I really hope it's the one we live in.

yep, fair! Do you think the point would come across better if Alice was nice? (I wasn't sure I could make Alice nice without an extra few thousand words, but maybe someone more skilful could.)

I think a lot of us have voices in our heads that are meaner than Alice, so if you think Alice is going to cause burnout, I think we need a response that is better than Bob's (and better than "I'm just going to reject all assholes out of hand", because I can't use that on myself!)

I think antivaxxers could plausibly pose a higher infection risk because they're unusually likely to hang out with other unvaccinated people, or to do other bad decisionmaking. Someone who's unvaccinated because they're scared of needles might still make good decisions otherwise - like they might stay home if they're feeling a sniffle, or test themselves for COVID if their housemate is sick.

Also, you want to exclude unvaccinated people because they pose an infection risk, so you already wanted to exclude anyone who posts "I hate vaccines" on Facebook. You're just worried about the incentives or selection effects if you use an honour system, because some people will lie and say they're vaccinated when they aren't. I'm suggesting that the incentives or selection effects aren't as negative if you only require silence, so nobody has to actually lie.

What would you think about a solution like "if you're not vaccinated and you loudly say so then we'll ban you, but otherwise you'll get away with it"?

I can see how it'd be negative to filter out "unvaccinated and honest about it", creating selection for "unvaccinated and willing to lie about it"; you don't like liars. But I also think I'm more willing to accept someone who's quietly unvaccinated because they're very scared of needles (but who also basically agrees that vaccines are good, and is sort of ashamed about being unvaccinated), and less willing to accept someone who regularly posts on Facebook about how vaccines were invented by Satanists in government to inject compliance drugs so the Illuminati can take over. When I frame it as selecting for people who are "unvaccinated and willing to shut up about it", rather than selecting for people who are "unvaccinated and dishonest about it", I think I like the sound of that selection effect a lot more. So I think I'd be interested in a policy like "if we find out that you're unvaccinated then we'll ban you, but we're also not trying very hard to find out". Maybe there's a useful distinction between requiring dishonesty and requiring silence?

Corner brackets are pretty! I usually just connect every word with a hyphen if they're intended to be read together, eg. "In this definitely-not-silly example sentence, the potentially-ambiguous bits are all hyphen-connected".

This is a very tiny thing, but I really don't like using "Alice, Bob, Carol, Dave/Dan, Eve/Erin, Frank" as the generic characters in parables/dialogues/problems. Why are we alternating binary genders?? Even leaving aside nonbinary inclusivity, it's literally just clearer and easier to write if I've got a he, a she, and a zie (rather than it being ambiguous whether "she" refers to Alice or Carol). I'm not always consistent with it, but generally my imaginary characters are more like Alice, Bob, Charlie, Delilah, Ethan, Fern, etc, and Charlie and Fern use gender-neutral pronouns like they/them or xe/xir. (Though I've also been contemplating the idea that it's better to cycle the names and use different ones per post, so you can refer back to the ideas using the names as a handle: Alice, Bob, Charlie, Delilah, Ethan, Fern, Georgia, Hassan, Indie, Julie, Kasimir, Lei...)

(edited for brevity)

Yep, I think my university called these "special topics" or "selected topics" papers sometimes. As in, a paper called "Special Topics In X" would just be "we got three really good researchers who happen to study different areas of X, we asked them each to spend one-third of the year teaching you about their favourite research, and then we test you on those three areas at the end of the year". Downside is that you don't necessarily get the optimal three topics that you wanted to learn about, upside is you get to learn from great researchers.

oh, great, I'm glad someone is doing this! Will you collect some data about how your students respond, and write up what you feel worked well or badly? Are you aware of any existing syllabi that you took inspiration from? It'd be great if people doing this sort of thing could learn from one another!

Hmm, does your response change if they're housemates or something like that?

I agree there'd be no controversy about Alice deciding not to hire Bob because he doesn't meet her standards, and I think there'd be little controversy over some org deciding to hire Bob over Alice because he's more likeable. But, if it makes the post work better for you, you can totally pretend that instead of talking about membership in "the rationalist community", they're talking about "membership in the Greater Springfield Rationalist Book Club that meets on Tuesdays in Alice and Bob's group house". I think Alice kicking Bob out of that would be much more contentious and controversial!

yes, definitely!

(I wrote way too much in this comment while waiting for my lentils to finish simmering; I apologise!)

I don't think it's necessarily intended to be bad or excessively stylized, but it's intended to be rude for sure. I didn't want to write a preachy thing!

Three kinda main reasons that I made Alice suck, deliberately:

Firstly, later in my sequence I want to talk about ways that Alice could achieve her goals better.

Secondly, I kind of want to be able to sit with the awkward dissonant feeling of, "huh, Alice is rude and mean and making me feel bad and maybe she shouldn't say those things, and ALSO, Alice being an infinitely flawed person would still not actually be a good justification for me to save fewer lives than I think I can save if I try (or otherwise fail according to my own values and my own ethics), and hm, holding those two ideas in juxtaposition feels uncomfy for me, let's poke that!"

I feel like a lot of truthseeking mindsets involve getting comfy with that sorta "huh, this juxtaposition is super uncomfy and I'm going to sit with it anyway" kinda mental state.

Thirdly, I have a voice in my head that gets WAY meaner than Alice! I totally sometimes have thoughts like, "Wow, I'm such a worthless hypocrite for preaching EA things online even though I don't have as much impact as I could if I tried harder, I'm totally just lying to myself about thinking I'm burned out because I'm lazy, I should go flog myself in penance!*"

*mild hyperbole for humour

I can respond by thinking something like, "Go away, stupid voice in my head, you're rude and mean and I don't want to listen to you." I could also respond by deliberately seeking out lots of reassuring blog posts that say "burnout is super bad and you're morally obligated to be happy!" and try to pretend that I'm definitely not engaging in any confirmation bias, no, definitely not, I definitely feel reassured by all of these definitely-true posts about the thing I really wanted to believe anyway. But maybe there's a way better thing where I can think, "Nope, I made really good models and rigorously tested this, so I'm actually for real confident that I can't be more ethical than I currently am, even after I looked into the dark and really asked myself the question and prepared myself to discover information that I might not like, and so I don't have to listen to this mean voice because I know that it's wrong."

But as long as I haven't ACTUALLY looked into the dark, or so long as I've been biased and incomplete in my investigations, I'll always have the little doubt in the back of my mind that says, "well, maybe Alice is right" - so I'll never be able to get rid of the mean Alice voice. There's about a thousand different rationality posts I could link here; generally LessWrong is a good place to acquire a gut feeling for "huh, just professing a belief sure does feel different to actually believing something because you checked".

I think that circles us back to your hot take: if Bob makes really good models about his capabilities and has really sought truth about all this, then maybe he'll BOTH be better able to refute Alice's criticisms AND even be able to persuade Alice to act more sustainably. But maybe he actually has to really do that work, and maybe that work isn't possible to do properly unless he's really truthseeking, and maybe really truthseeking would require him to also be okay with learning "I can and should do more" if that were to turn out to be true. Knowing that he was capable of concluding "I can and should do more" (if that were true) might be a prerequisite to being able to convince Alice that he legitimately reached the conclusion "I can't or shouldn't do more".

And if he actually does the truthseeking, then maybe he should bully Alice to do less! The interesting question for me then is: can I get myself to be curious about whether that's true, like really actually curious, like the kind of curious where I want to believe the truth no matter what the truth turns out to be, because the truth matters?

If you mean this literally, it's a pretty extraordinary claim! Like, if Alice is really doing important AI Safety work and/or donating large amounts of money, she's plausibly saving multiple lives every year. Is the impact of being rude worse than killing multiple people per year?

(Note, I'm not saying in this comment that Alice should talk the way she does, or that Alice's conversation techniques are effective or socially acceptable. I'm saying it's extraordinary to claim that the toxic experience of Alice's friends is equivalently bad to "any good she can do herself". I think basically no amount of rudeness is equivalently bad to how good it is to save a life and/or help avert the apocalypse, but if you think it's morally equivalent then I'd be really curious for your reasoning.)

Absolutely not.

I definitely have a mini Alice voice inside my head. I also have a mini Bob voice inside my head. They fight, like, all the time. I'd love help in resolving their fights!

If Bob isn't reflectively consistent, their utility functions could currently be the same in some sense, right? (They might agree on what Bob's utility function should be - Bob would happily press a button that makes him want to donate 30%, he just doesn't currently want to do that and doesn't think he has access to such a button.)

Huh, interesting! I definitely count myself as agreeing with Alice in some regards - like, I think I should work harder than I currently do, and I think it's bad that I don't, and I've definitely done some amount to increase my capacity, and I'm really interested in finding more ways to increase my capacity. But I don't feel super indignant about being told that I should donate more or work harder - though I might feel pretty indignant if Alice is being mean about it! I'd describe my emotions as being closer to anxiety, and a very urgent sense of curiosity, and a desire for help and support.

(Planned posts later in the sequence cover things like what I want Alice to do differently, so I won't write up the whole thing in a comment.)

I think if someone wasn't indignant about Alice's ideas, but did just disagree with Alice and think she was wrong, we might see lots of comments that look something like: "Hmm, I think there's actually an 80% probability that I can't be any more ethical than I currently am, even if I did try to self-improve or self-modify. I ran a test where I tried contributing 5% more of my time while simultaneously starting therapy and increasing the amount of social support that I felt okay asking for, and in my journal I noted an increase in my sleep needs, which I thought was probably a symptom of burnout. When I tried contributing 10% more, the problem got a lot worse. So it's possible that there's some unknown intervention that would let me do this (that's about 15% of my 20% uncertainty), but since the ones I've tried haven't worked, I've decided to limit my excess contributions to no more than 5% above my comfortable level."

I think these are good habits for rationalists: using evidence, building models, remembering that 0 and 1 aren't probabilities, testing our beliefs against the territory, etc.

Obviously I can't force you to do any of that. But I'd like to have a better model about this, so if I saw comments that offered me useful evidence that I could update on, then I'd be excited about the possibility of changing my mind and improving my world-model.

What specifically would you expect to not go well? What bad things will happen if Bob greatly ups his efforts? Why will they happen?

Are there things we could do to mitigate those bad things? How could we lower the probability of the bad things happening? If you don't think any risk reduction or mitigation is possible at all, how certain are you about that?

Can we test this?

Do you think it's worthwhile to have really precise, careful, detailed models of this aspect of the world?

Hm, my background here is just an undergrad degree and a lot of independent reasoning, but I think you're massively undervaluing the whole "different reproductive success victory-conditions cause different adaptations" thing. I don't think it's fair at all to dismiss the entire thing as a Red Pill thing; many of the implications can be pretty feminist!

I don't think it matters that much that Bateman's original research is pretty weak. There's a whole body of research you're waving away there, and a lot of the more recent stuff is much much stronger research!

You don't necessarily have to talk about sexual competition at all. You can just say, for instance, that female reproductive success is bounded - human women in extant hunter-gatherer tribes typically have one child and then wait several years before having the next. If a woman spends twenty years having children and can only have one child per four years, then she's only going to have five children. Her incentives are to maximise the success of those five children and the resources she can give each child. Meanwhile, a man could have anywhere between zero children and... however many Genghis Khan had, so his incentives tend much more strongly towards risk-taking and having as much sex as possible.

Of course there's massive variation between species; there's massive variation in how every and any trait/dynamic plays out depending on the context and environment. But we can generally come up with reasons why particular species might work the way they do; for example, I've heard the hypothesis that the fish species with very tiny males are adapted for the fact that finding a conspecific female to mate with in the gigantic open ocean is basically random chance, so there's no point investing in males being able to do anything except drift around and survive for a long period until they stumble across a female. Humans aren't a rare species in a giant open ocean, so male humans don't have to really rely on just stumbling across female humans through sheer luck after weeks of drifting on the currents.

You don't have to bring "males face more competition than females" into it at all. You can just say "whichever parent has higher parental investment is likely to have stricter bounds on reproductive success, so they'll adapt to compete more over resources like food, while the low-investment parent competes more over access to mates". Then when you look at specific species, you can analyse how sexual dimorphism in that particular species is affected by the roles each sex plays in that species and also the species' context and environment.

Sometime when it's not 2am, if it'd be helpful, I'd be happy to pull out some examples of papers that I think are well-written or insightful. Questions like "ok, so, if males maximise their fitness by having as many mates as possible, what the heck is going on with monogamy? Is there even any evidence that human men in extant hunter-gatherer tribes have much variation in their reproductive success caused by being good at hunting or being high-status or whatever? For that matter, what the heck is going on with meerkats?" are genuinely interesting open research questions, I don't really think they're associated with the red pill people, they're things the field is approaching with a sense of curiosity and confusion, and also I would really like to know what the heck is going on with meerkats.

You doubt that it would work very well if Alice nags everyone to be more altruistic. I'm curious how confident you are that this doesn't work and whether you'd propose any better techniques that might work better?

For myself, I notice that being nagged to be more altruistic is unpleasant and uncomfortable. So I might be biased to conclude that it doesn't work, because I'm motivated to believe it doesn't work so that I can conveniently conclude that nobody should nag me; so I want to be very careful and explicit in how I reason and consider evidence here. (If it does work, that doesn't mean it's good; you could think it works but the harms outweigh the benefits. But you'd have to be willing to say "this works but I'm still not okay with it" rather than "conveniently, the unpleasant thing is ineffective anyway, so we don't have to do it!")

(PS. yes, I too am very glad that people like Bob exist, and I think it's good they exist!)

Word of God, as the creator of both Alice and Bob: Bob really does claim to be an EA, want to belong to EA communities, say he's a utilitarian, claim to be a rationalist, call himself a member of the rationalist community, etc. Alice isn't lying or wrong about any of that. (You can get all "death of the author" and analyse the text as though Bob isn't a rationalist/EA if you really want, but I think that would make for a less productive discussion with other commenters.)

Speaking for myself personally, I'd definitely prefer that people came and said "hey we need you to improve or we'll kick you out" to my face, rather than going behind my back and starting a whisper campaign to kick me out of a group. So if I were Bob, I definitely wouldn't want Alice to just go talk to Carol and Dave without talking to me first!

But more importantly, I think there's a part of the dialogue you're not engaging with. Alice claims to need or want certain things; she wants to surround herself with similarly-ethical people who normalise and affirm her lifestyle so that it's easier for her to keep up, she wants people to call her out if she's engaging in biased or motivated reasoning about how many resources she can devote to altruism or how hard she can work, she wants Bob to be honest with her, etc. In your view, is it ever acceptable for her to criticise Bob? Is there any way for her to get what she wants which is, in your eyes, morally acceptable? If it's never morally acceptable to tell people they're wrong about beliefs like "I can't work harder than this", how do you make sure those beliefs track truth?

Those questions aren't rhetorical; the dialogue isn't supposed to have a clear hero/villain dynamic. If you have a really awesome technique for calibrating beliefs about how much you can contribute which doesn't require any input from anyone else, then that sounds super useful and I'd like to hear about it!

I don't think anyone would dispute that Alice is being extremely rude! Indeed she is deliberately written that way (though I think people aren't reading it quite the way I wrote it because I intended them to be housemates or close friends, so Alice would legitimately know some amount about Bob's goals and values.)

I think a real conversation involving a real Bob would definitely involve lots more thoughtful pauses that gave him time to think. Luckily it's not a real conversation, just a blog post trying to stay within a reasonable word limit. :(

Alice is not my voice; this is supposed to inspire questions, not convince people of a point. For instance: is there a way to achieve what Alice wants to achieve, while being polite and not an asshole? Do you think the needs she expresses can be met without hurting Bob?

Alice is, indeed, a fictional character - but clearly some people exist who are extremely ethical. There are people who go around donating 50%, giving kidneys to strangers, volunteering to get diseases in human challenge trials, working on important things rather than their dream career, thinking about altruism in the shower, etc.

Where do you think is the optimal realistic point on the spectrum between Alice and Bob?

Do you think it's definitely true that Bob would be doing it already if he could? Or do you think there exist some people who could but don't want to, or who have mistaken beliefs where they think they couldn't but could if they tried, or who currently can't but could if they got stronger social support from the community?

This seems like you understood my intent; I'm glad we communicated! Though I think Bob seeing a therapist is totally an action that Alice would support, if he thinks that's the best test of her ideas - and importantly if he thinks the test genuinely could go either way.

I'm sorry I don't have time to respond to all of this, but I think you might enjoy Money: The Unit Of Caring: https://www.lesswrong.com/posts/ZpDnRCeef2CLEFeKM/money-the-unit-of-caring

(Sorry, not sure how to make neat-looking links on mobile.)

Hmm, this isn't really what I'm trying to get across when I use the phrase "least convenient possible world". I'm not talking about being isekai'd into an actually different world; I'm just talking about operating under uncertainty, and isolating cruxes. Alice is suggesting that Bob - this universe's Bob - really might be harmed more by rest-more advice than by work-harder advice, really might find it easier to change himself than he predicts, etc. He doesn't know for certain what's true (i.e. "which universe he's in") until he tries.

Let's use an easier example:

Jane doesn't really want to go to Joe's karaoke party on Sunday. Joe asks why. Jane says she doesn't want to go because she's got a lot of household chores to get done, and she doesn't like karaoke anyway. Joe really wants her to go, so he could ask: "If I get all your chores done for you, and I change the plan from karaoke to bowling, then will you come?"

You could phrase that as, "In the most convenient possible world, would you come then?" but Joe isn't positing that there's an alternate-universe bowling party that alternate-universe Jane might attend (but this universe's Jane doesn't want to attend because in this universe it's a karaoke party). He's just checking to see whether Jane's given her REAL objections. She might say, "Okay, yeah, so long as it's not karaoke then I'll happily attend." Or she might say, "No, I still don't really want to go." In the latter case, Joe has discovered that the REAL reason Jane doesn't want to go is something else - maybe she just doesn't like him and she said the thing about chores just to be polite, or maybe she doesn't want to admit that she's staying home to watch the latest episode of her favourite guilty-pleasure sitcom, or something.

If "but what about the PR?" is Bob's real genuine crux, he'll say, "Yeah, if the PR issues were reversed then I'd commit harder for sure!" If, on the other hand, it's just an excuse, then nothing Alice says will convince Bob to work harder - even if she did take the time to knock down all his arguments (which in this dialogue she does not).

Hmm, I don't know how this got started, but once it got started, there's a really obvious mechanism for continuing and reinforcing it; if you're gay and you want to meet other gay people, and you've heard there's gay people at the opera, then now you want to join the opera. Also if you're really homophobic and want to avoid gay people, then you'll avoid the opera, which might then make it safer for gay people.

I could randomly start a rumour that gay people really love aikido, and if I was sufficiently successful at getting everyone to believe it, then maybe it'd soon become a self-fulfilling prophecy - since homophobes would pull out of aikido, and gay people would join.

I wonder how you could test this? Could you just survey some opera fans about why they enjoy opera, and see if anything shows up as correlated on the demographic-monitoring bit of the survey? Could you do some kind of experimental design where people are told about an imaginary new hobby you've invented, and told that it's common or uncommon among their demographics, and then they rate how interested they are in the hobby?

Hmm, I think I could be persuaded into putting it on the EA Forum, but I'm mildly against it:

- It is literally about rationality, in the sense that it's about the cognitive biases and false justifications and motivated reasoning that cause people to conclude that they don't want to be any more ethical than they currently are; you can apply the point to other ethical systems if you want, like, Bob could just as easily be a religious person justifying why he can't be bothered to do any pilgrimages this year while Alice is a hotshot missionary or something. I would hope that lots of people on LW want to work harder on saving the world, even if they don't agree with the Drowning Child thing; there are many reasons to work harder on x-risk reduction.

- It's the sort of spicy that makes me worried that EAs will consider it bad PR, whereas rationalists are fine with spicy takes because we already have those in spades. I think people can effectively link to it no matter where it is, so posting it in more places isn't necessarily beneficial?

- I don't agree with everything Alice says but I do think it's very plausible that EA should be a big tent that welcomes everyone - including people who just want to give 10% and not do anything else - whereas my personal view is that the rationality community should probably be more elitist; we're supposed to be a self-improve-so-hard-that-you-end-up-saving-the-world group, dammit, not a book club for insight porn.

Also it's going to be part of a sequence (conditional on me successfully finishing the other posts), and I feel like the sequence overall belongs more on LW.

I genuinely don't really know how the response to the Drowning Child differs between LW and EA! I guess I would probably say more people on the EA Forum probably donate money to charity for Drowning-Child-related reasons, but more people on LW are probably interested in philosophy qua philosophy and probably more people on LW switched careers to directly work on things like AI safety. I don't suppose there's survey/census data that we could look up?

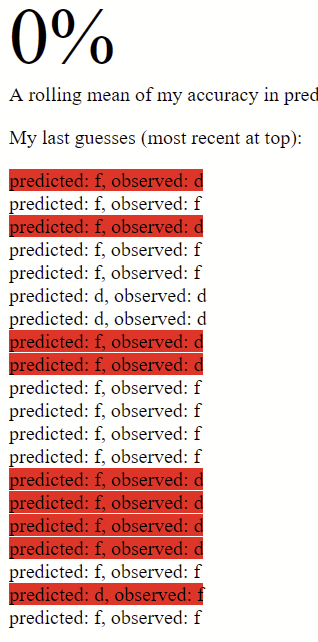

I have enough integrity to not pretend to believe in CDT just so I can take your money, but I will note that I'm pretty sure the linked Aaronson oracle is deterministic, so if you're using the linked one then someone could just ask me to give them a script for a series of keys to press that gets a less-than-50% correct rate from the linked oracle over 100 key presses, and they could test the script. Then they could take your money and split it with me.

Of course, if you're not actually using the linked oracle and secretly you have a more sophisticated oracle which you would use in the actual bet, then you shouldn't be concerned. This isn't free will, this could probably be bruteforced.
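To make the brute-force point concrete, here's a minimal sketch. It assumes a stand-in predictor that just counts which key followed the last few keys and breaks ties the same way every time; that is my assumption for illustration, not the linked oracle's actual code, and the names `predict`, `anti_oracle_script` and `replay_hits` are made up. Against any predictor that is deterministic and that you can simulate, you can build the script by asking it what it expects and pressing the other key.

```python
from collections import defaultdict


def new_counts():
    # For each context (the last few keys), how often "f" or "d" came next.
    return defaultdict(lambda: {"f": 0, "d": 0})


def predict(counts, history, n=5):
    # Hypothetical stand-in oracle: guess the more common follow-up to the
    # last n keys. Ties always go to "f", so there is no randomness anywhere.
    seen = counts[history[-n:]]
    return "f" if seen["f"] >= seen["d"] else "d"


def anti_oracle_script(length=100, n=5):
    # Simulate the oracle and always press the key it did not predict.
    counts, history = new_counts(), ""
    for _ in range(length):
        guess = predict(counts, history, n)
        key = "d" if guess == "f" else "f"
        counts[history[-n:]][key] += 1
        history += key
    return history


def replay_hits(script, n=5):
    # Feed a finished script to a fresh copy of the oracle and count
    # how many presses it guesses correctly.
    counts, history, hits = new_counts(), "", 0
    for key in script:
        hits += predict(counts, history, n) == key
        counts[history[-n:]][key] += 1
        history += key
    return hits


script = anti_oracle_script(100)
print(script)
print(replay_hits(script), "correct guesses out of", len(script))
```

If the real oracle has any hidden randomness or a different tie-break, a script built this way wouldn't transfer, which is the caveat in the paragraph above.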

I disagree that we're confusing multiple issues; my central point is that these things are deeply related. They form a pattern - a culture - which makes bike theft and rape not comparable in the way the OP wants them to be comparable.

You might not think that 4 through 6 count as 'victim-blaming', but they all contribute to the overall effect on the victim. Whether your advice is helpful or harmful can depend on a lot of factors - including whether a victim is being met with suspicion or doubt, whether a victim feels humiliated, and whether a victim feels safe reporting.

If someone is currently thinking, "Hmm, my bike got stolen. That sucks. I wonder how I can get that to not happen again?" then your advice to buy a different lock is probably going to be helpful! The victim is likely to want to listen to it, and be in a good mental state to implement that advice in the near future, and they're not really going to be worried that you have some ulterior motive for giving the advice. When someone says, "Have you considered buying a different brand of bike lock?" I'm not scared that they're going to follow-up that question by saying something like, "Well, it's just that since you admit yourself that you didn't buy the exact brand of bike lock I'm recommending, I don't believe your bike was really stolen and I'm going to tell all our friends that you're a reckless idiot who doesn't lock their bike properly so they shouldn't give you any sympathy about this so-called theft."

If we lived in a world that had this sort of culture around bike theft - victim-disbelieving, victim-shaming, victim-blaming or whatever else you want to call it - then people might be thinking things like, "Oh my god my bike got stolen, I'm so scared to even tell anyone because I don't know if they'll believe me, what if they think I made it up? What if they tell me I'm too irresponsible and just shouldn't ever ride bikes in the future ever again? What if they tell me I'm damaged goods because this happened to me?"

In that world, if someone tells you that their bike was stolen, responding, "did you lock it?" is an asshole thing to do. Because there will be some fraction of people who ask, "did you lock it?" and then, after that, say things like, "well, if you didn't use a D-lock on both the wheels and the frame, then you probably just consented for someone to borrow it and you're misremembering. You can't go around saying your bike got stolen when it was probably just borrowed - I mean, imagine if your bike is found and the person who borrowed it gets arrested! You'd ruin someone's life just because you misremembered giving them permission to borrow your bike. Next time, if you don't consent for someone to take your bike, just use at least five locks."

People who are feeling scared and vulnerable are not likely to be receptive to advice about bike locks, or feeling ready to go to the supermarket and get a new bike lock. If you offer advice about bike locks in that world, instead of thinking, "hmm that's a great idea, I'll go to the shops right now and buy that recommended bike lock," they are more likely to be thinking, "oh fuck are they implying that they don't believe my bike was really stolen? Are they going to tell my friends that I'm a stupid reckless person because I didn't lock my bike properly?" In the world where we have a victim-blaming victim-shaming victim-disbelieving culture around bike theft, you need to reassure the bike theft victim that you are not going to be that asshole.

Rationality isn't always about making the maximally theoretically correct statements all the time. Rationality is systematized winning. It doesn't matter if the statement "you should buy a better bike lock" is literally true. It matters whether saying that statement causes good outcomes to happen. For bike theft, it probably causes good outcomes; the person hears the statement, goes out and buys a better bike lock, and their bike is less likely to be stolen in future. For rape, it causes bad outcomes; the person worries that you're not a safe/supportive person to talk to, shuts down, and hides in their room to cry. You can argue that the hide-in-the-room-and-cry trauma response is irrational, and that doesn't matter even one iota, because being an aspiring rationalist is about taking the actions with the best expected outcomes in an imperfect world where sometimes humans are imperfect and sometimes people are traumatised. You don't control other people's actions; you control your own. (And in our imperfect world, it's not irrational for rape victims to be scared of talking to people who send signals that they might engage in victim-blaming/victim-shaming/victim-disbelieving.)

If people were forced to bet on their beliefs, I think most people would be forced to admit that they do understand this on some level; when you say "try buying this different bike lock" the expected outcome is that the victim is somewhat more likely to go shopping and buy that bike lock, whereas when you say "try wearing less revealing clothing" the expected outcome is that the victim feels crushed and traumatised and stops listening to you. When people give that advice, I don't think they are actually making the victim any less likely to be raped again - they're mostly just feeling righteous about saying things that they think the victim should listen to in some abstract sense. (To back this up, a lot of the advice that is most commonly shared - like "don't wear revealing clothing" or "don't walk down dark alleys at night" or "shout fire, don't shout rape" - is basically useless or wrong. Rape is not mostly committed by complete strangers in dark alleys, and covering more skin doesn't make someone less likely to be raped.)

If rationality was all about making the purest theoretically true statements, then sure, whatever, let's go ahead and taboo some words. But rationality is about winning, so let's take context into account and talk about the expected outcomes of our actions.

If you like, just aggregate all the "victim-blaming"/"victim-disbelieving"/"victim-humiliating" things into the question, "From the perspective of the victim who just disclosed something, what is p(this person is about to say or do something unpleasant | this person has said words that sound like unsolicited advice)?"

...Admittedly, I'm not sure the percentage it reports is always accurate; here's a screenshot of it saying 0% while it clearly has correct guesses in the history (and indeed, a >50% record in recent history). I'm not sure if that percentage is possibly reporting something different to what I'm expecting it to track?

I'm surprised you're willing to bet money on Aaronson oracles; I've played around with them a bit and I can generally get them down to only predicting me around 30-40% correctly. (The one in my open browser tab is currently sitting at 37% but I've got it down to 25% before on shorter runs.)

I use relatively simple techniques:

- deliberately creating patterns that don't feel random to humans (like long strings of one answer) - I initially did this because I hypothesized that it might have hard-coded in some facts about how humans fail at generating randomness, now I'm not sure why it works; possibly it's easier for me to control what it predicts this way?

- once a model catches onto my pattern, waiting 2-3 steps before varying it (again, I initially did this because I thought it was more complex than it was, and it might be hypothesizing that I'd change my pattern once it let me know that it caught me; now I know the code's much simpler and I think this probably just prevents me from making mistakes somehow)

- glancing at objects around me (or tabs in my browser) for semi-random input (I looked around my bedroom and saw a fitness leaflet for F, a screwdriver for D, and a felt jumper for another F)

- changing up the fingers I'm using (using my first two fingers to press F and D produces more predictable input than using my ring and little finger)

- pretending I'm playing a game like osu, tapping out song beats, focusing on the beat rather than the letter choice, and switching which song I'm mimicking every ~line or phrase

- just pressing both keys simultaneously so I'm not consciously choosing which I press first

Knowing how it works seems anti-helpful; I know the code is based on 5-grams, but trying to count 6-long patterns in my head causes its prediction rate to jump to nearly 80%. Trying to do much consciously at all, except something like "open my mind to the universe and accept random noise from my environment", lets it predict me. But often I have a sort of 'vibe' sense for what it's going to predict, so I pick the opposite of that, and the vibe is correct enough that I listen to it.
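For anyone who hasn't looked at how this kind of predictor works, here's a rough sketch of the idea as I understand it: remember what followed each run of the last 5 keys, and guess whichever key followed that run more often. This is a toy written for illustration, not the actual oracle's code; the class name `NGramOracle`, the tie-break rule and the `hit_rate` helper are all my assumptions.

```python
from collections import defaultdict


class NGramOracle:
    """Toy predictor: guesses the next key from what followed the last n keys."""

    def __init__(self, n=5):
        self.n = n
        self.counts = defaultdict(lambda: {"f": 0, "d": 0})
        self.history = ""

    def predict(self):
        seen = self.counts[self.history[-self.n:]]
        # Unseen contexts and ties default to "f" (an arbitrary choice).
        return "f" if seen["f"] >= seen["d"] else "d"

    def update(self, key):
        # Record which key actually followed the current context.
        self.counts[self.history[-self.n:]][key] += 1
        self.history += key


def hit_rate(keys, n=5):
    # Fraction of key presses the oracle guessed before seeing them.
    oracle = NGramOracle(n)
    hits = 0
    for key in keys:
        hits += oracle.predict() == key
        oracle.update(key)
    return hits / len(keys)


# A strictly alternating pattern is learned after a handful of presses.
print(hit_rate("fd" * 50))
```

A predictor like this only ever sees the previous 5 keys, so a rigid repeating pattern gets caught almost immediately, while anything that keeps changing which key follows a given 5-key run keeps it guessing.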

There might be a better oracle out there which beats me, but this one seems to have pretty simple code and I would expect most smart people to be able to beat it if they focus on trying to beat the oracle rather than trying to generate random data.

If you're still happy to take the bet, I expect to make money on it. It would be understandable, however, if you don't want to take the bet because I don't believe in CDT.

Wow, without context, that's a really weird claim that makes me not want to engage with you. It's really common for people with misogynist beliefs to declare a rape allegation "manifestly false" even when it's true, because they have a lot of false beliefs around sexual assault or around the psychology of trauma. Without knowing anything about your situation, it makes me update much more strongly towards believing you're a misogynist and/or believing you have bad epistemics than towards any object-level belief.

I've been involved in investigating lots of misconduct cases in another community, and have basically never encountered an allegation that was manifestly false; even in cases where I thought a claim was false, it was ambiguous and always incredibly difficult to make a call. Importantly it was (and should be) painful to make those calls, full of anxiety that we got it wrong and the awareness of how badly it would hurt somebody to disbelieve a true allegation; I can't imagine being so confident in that conclusion that I'd then go online and use "I've heard multiple stories about this" as though it were solid evidence for the frequency of false allegations. I expect I'm exposed to something like 100x more stories about sexual misconduct than the average person, but I only know of one allegation that I'd say I know for sure was false; I'd be really surprised if someone knew of more without having held a similar investigatory position in a community. It strikes me as much more likely that people with very poor epistemic hygiene are repeating secondhand "false allegation" stories with much more confidence than is warranted.

I figured I'd write something rather than just not replying, on the off-chance that you're not aware of this; I don't intend to be rude, just to be clear about why I won't engage further. Have a nice day.

If you can come up with some way to test this, then I will bet large-to-me amounts of money that people make up "my thing got stolen" at vastly higher rates than they make up rape. Like, telling your teacher/employer that you were late because your bike was stolen seems like an excuse you can get away with making once per few months. Getting a friend to borrow your bike so you can bum $50 off your parents for a new bike seems like something teenagers regularly attempt - like, I literally saw this sort of scam run by the other kids in high school and discussed on school buses. Putting a GoFundMe on the internet, asking for money to replace your bike because it was stolen and you can't work without it, seems like it would get a sympathetic response in some circles. If I was going to make up a crime for attention, theft just seems way easier....?

There is a vast difference in how compassionately this advice comes across precisely because bike theft is not something that people engage in very much actual victim-blaming about.

If I have had my bike stolen, I basically expect that people will be sympathetic about it. People will suggest making a police report, while also commiserating with me about the very low likelihood of the thief being caught and punished. Housemates might lend me a bike if I need to go places. If I'm late to class because I had to walk, I expect my professor will say something like, "Oh, that sucks, I'm sorry your bike was stolen." Fundamentally this means that when I tell someone that my bike was stolen, I may be feeling upset but not particularly vulnerable, and that means it's a perfectly good time to give me practical advice about which kind of lock to buy. Though if the bike was expensive or had sentimental value, I might still hope that people asked "are you okay?" before launching into advice about locks.

I have never heard anyone claim "oh, so-and-so's bike wasn't really stolen, they just made that up for attention" or "you shouldn't prosecute bike thieves because false bike-theft allegations can really hurt people" or "if you've ever had your bike stolen then some people just won't want to date you anymore" or anything else that would make me scared to tell someone that my bike had been stolen. We do not have a culture of victim-blaming or victim-disbelieving around bike theft.

If someone has been raped, they are frequently not optimistic about getting a sympathetic response. They may be too embarrassed to tell anyone. If the rapist is in their social circle, they may be worried that they'll be painted as a liar or ostracised from their social circle, because the rapist will loudly tell everyone that they're lying. They may be urged not to report anything to the police, or not to say anything in public at all, because they might "ruin the life" of their rapist. If the trauma impacts their performance at school or work, they might be worried that they'll be told it's "all in their head" and get no accommodations. This is all part of a wider abusive pattern of victim-blaming, in which victims of rape are scared to talk about what happened because of the numerous different negative responses they might get. When a victim of rape talks about their experiences, they are often in an extremely vulnerable state of mind and often anxious about whether they'll be believed. If your response is to immediately ask "what were you wearing?" or "were you drunk?" then you are, in fact, being a gigantic asshole. That is not an appropriate time to offer advice. Offering advice after the assault has already happened can come across as scary because of the fear that you'll follow up with "well it's your fault for wearing that" or "well if you were drunk then I don't believe you because you might have misremembered something".

Generally, people don't say that you're a misogynist victim-blaming monster if you give well-intentioned advice when that advice is appropriate (ie. usually before an assault could happen), like offering to call someone a taxi so they'll get home safely, or passing on what you've heard about a certain man's misconduct so people can decide whether to stay away from him, or suggesting that someone write down where they'll be and when they expect to return before they set out on a blind date. It's victim-blaming when you make yourself part of a wider pattern that terrifies victims into not wanting to speak at all about what's happened to them.

I'm not a physics student, but I absolutely feel I should have been able to generate more than one hypothesis here! I have definitely enjoyed watching science videos that talk about really cool ceramics that get used in building spacecraft, which can be glowing red-hot and nevertheless safe to touch because of how non-conductive they are. So it's not like I wasn't aware of the possibility that some materials have weird properties here. It's just that I generated a single hypothesis - the instructor flipped the plate around - and was super-satisfied with being correct.

And maybe I get Bayes points for being correct, since "the instructor flipped the plate around" is the right answer (assuming it's a real story) and "the instructor went to all the trouble of constructing a two-sided plate out of really weird materials purely in order to fuck with his physics students" is a wrong answer. But where I think I went wrong is feeling derisive towards the silly incorrect physics students who say things like "maybe something weird is happening with heat conduction?" and feeling superior to them because they were just guessing the teacher's password. When, actually, "something weird is going on relating to conduction" is a thought which could have led to me generating and considering more than one hypothesis.

...I'm a little mindblown by reading this, honestly, because I read 'Fake Explanations' when I was like eleven years old, and I really felt like it changed the way I thought and was extremely influential on me at that early point in my life, and I kept telling people this story, and also I never thought of this, and now I am strongly negatively updating against my own success at internalising the lessons here.

I guess the lesson from this is that the correct answer isn't "it's really obvious that the instructor flipped the plate around and the students should have realised this as soon as they Noticed They Were Confused", but "when you encounter confusing information, you should feel comfortable remaining confused until you have actually spent some time generating more hypotheses and learning more information". The answer of "the plate was flipped around" is semi-obvious (it's often the first hypothesis generated by smart people when I recount this story to them) and we all... stopped thinking at that point, and patted ourselves on the back for being so rational?

This feels a bit like it deserves to inspire a top-level post along the lines of "there is a second higher-level version of the Fake Explanations post, which points out that weird metals is a possible explanation, and if you laughed at the guy who was considering the possibility of the plate being made out of some sort of weird material and considered yourself superior for not being so stupid, then you should feel bad and go reread the stuff about motivated stopping". Or something.

I strongly disagree, and I'm curious why you think this? I have known a number of rationalists who got into SSC/ACX more than LessWrong or rather than LessWrong, and I've found them to generally be extremely sidetracked into constantly debating social justice issues / nerdsniped by interesting politics - often to the point where I would strongly prefer not to attend meetups that are marketed as "LessWrong + ACX" rather than just "LessWrong", because I don't want to be exposed to that.

I got into LW in high school, and looking back, the most useful thing I got out of LW was "just do stuff".

Humans are pretty bad at predicting, in advance, what is going to work well and what they're going to enjoy or excel at. We need empirical evidence. So just go take that internship, do that volunteering, found that student society, email that academic to ask to discuss their paper, enter that competition, sign up for those extra classes, etc. Have a sense that more is possible, and that you will genuinely be an awesomer person if you are Doing Stuff rather than being on Twitter or TikTok all evening, and that you might learn new things about yourself if you Just Try Stuff. Don't dismiss yourself as underqualified or undeserving.

The point of rationality is to win at life. Reading blogs is useful if it's helping you win at life, and if you realise it's not helping you win at life, then you can and should read fewer blogs. If the blogs are helping, read more of them. But don't lose sight of the idea that this is supposed to be making you Win At Life.

You don't need permission from anyone to Just Do Useful Stuff. You can come up with an idea for a useful project - like, I don't know, starting a student society or lunchtime club about EA at your high school, or making a film documentary about some area of psychology or charity, or doing a review of some scientific literature and writing up your results to publish on LW, or whatever. And if you don't have the skills yet, then you can go learn them - by signing up for classes, or finding video tutorials on YouTube, or just getting started and learning-by-doing. And maybe someone will find your research/work/project genuinely helpful. The point is that you don't wait for a wise old wizard to appear and hand you a Quest before you save the world; just spot an area of the world that needs improving, and go improve it. You have a lot more time in high school for this sort of proactivity than you'll have as an adult, and it is a really really really insanely good habit to build while you're young.

Overwatch is a hero shooter where every player has a different role and different abilities. As an experiment maybe a year ago, I once asked the best monkey player I knew at the time (4200 elo on a 0-5000 scale) to 1v1 the worst Bastion player I knew (under 1000 elo). In the neutral, the Bastion player consistently won despite the yawning chasm between their ratings. This is because monkey is a tank designed to take space and counter snipers and isolate squishy targets from their healers, and is not a character designed to 1v1 a Bastion. If you are missing three people from your team, you are missing three of the six key roles. The best player of all time playing Reinhardt could still probably lose a 1v1 to a bronze Pharah.

Running 2-3 higher-skill players versus 4-6 lower-skill players in variety PUGs, I've generally found that the lower-skill players very consistently win unless we give the 3 higher-skill players an additional advantage like extra HP or damage. But that's with a ton of obvious confounding factors - my higher-rated players might be more inclined to just play for fun, plus the lower-skill players in my community are still reasonably strategic from exposure to team environments.

The first result of my YouTube search is https://www.youtube.com/watch?v=ZfhdHUQbcNA which, as you predict, goes in favour of the GMs. But I think there are very easy tweaks (such as to team composition) that would allow the bronze players to do better. You can see that the first round actually goes to overtime for quite a while, so on paper it's pretty close. Not really analysing this in depth as it's 8am.

Changing the number of players is a pretty popular option in Overwatch custom games and content; people love "can six bronze players beat three grandmasters?" videos.

We easily have the option to change many aspects of the game - for instance, we can let the weaker team deal 150% damage or give the stronger team longer cooldowns - but in my experience it isn't popular. People learn split-second gut-level reactions and habits for certain things, and part of being a "good player" is knowing instinctively whether you can tank a certain shot when you peek it or knowing when your ability will come off cooldown. Handicapping people by changing those learned values messes with their instincts, and it doesn't feel good to be handicapped that way; people enjoy making the challenge more difficult much more than they enjoy changes that negate their pre-existing skill and nullify their hard work.

You may find this source interesting: https://onlinelibrary.wiley.com/doi/full/10.1002/ajpa.23148

I remember reading that some hunter-gatherers have diet breadth set entirely by the calorie-per-hour return rate: weigh the calories and time expended to acquire the food (e.g. the effort to chase prey) against the calories the food provides to get its caloric return rate, and compare that to the average expected calories per hour of continuing to look for some other food. Humans will include every food in their diet for which making an effort to go after that food has a higher expected return than continuing to search for something else, i.e. they'll maximise variety in order to get calories faster. I can't find the citation for it right now though. (Also I apologise if that explanation was garbled, it's 2am)
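
For what it's worth, the rule described above looks a lot like the standard prey-choice ("diet breadth") model from optimal foraging theory, so here is a minimal Python sketch of it. This assumes that textbook model, which may not be exactly what the half-remembered source used. The `Food` class, the function names, and every number below are made up purely for illustration. Foods are ranked by calories per hour of handling, and a food joins the diet only if going after it beats the overall calories per hour you'd get from the foods already in the diet.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Food:
    name: str
    calories: float             # calories gained per item (hypothetical value)
    handling_hours: float       # hours to chase/process one item once found
    encounters_per_hour: float  # items found per hour spent searching


def profitability(food: Food) -> float:
    """Calories per hour of handling, ignoring search time."""
    return food.calories / food.handling_hours


def overall_rate(diet: list[Food]) -> float:
    """Expected calories per hour of foraging (search plus handling)."""
    gained = sum(f.encounters_per_hour * f.calories for f in diet)
    handling = sum(f.encounters_per_hour * f.handling_hours for f in diet)
    return gained / (1.0 + handling)


def optimal_diet(foods: list[Food]) -> list[Food]:
    """Add foods in order of profitability while each one beats the
    overall return rate of the diet built so far."""
    diet: list[Food] = []
    for food in sorted(foods, key=profitability, reverse=True):
        if diet and profitability(food) <= overall_rate(diet):
            break  # better to keep searching than to stop for this food
        diet.append(food)
    return diet


# Hypothetical numbers, chosen only to show the effect.
plentiful = [
    Food("deer", 15000, 3.0, 0.02),
    Food("tubers", 1500, 1.0, 0.5),
    Food("grass seeds", 200, 1.0, 2.0),
]
# Same foods, but the two best ones are much harder to find.
scarce = [
    Food("deer", 15000, 3.0, 0.002),
    Food("tubers", 1500, 1.0, 0.05),
    Food("grass seeds", 200, 1.0, 2.0),
]

print([f.name for f in optimal_diet(plentiful)])  # deer and tubers only
print([f.name for f in optimal_diet(scarce)])     # all three foods
```

The thing to notice is that whether grass seeds make it into the diet depends on how easy the better foods are to find, not on how common the seeds themselves are. When the good stuff gets scarce, the diet gets broader.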