Jimrandomh's Shortform

post by jimrandomh · 2019-07-04T17:06:32.665Z · 263 comments


This post is a container for my short-form writing. See this post for meta-level discussion about shortform.



comment by jimrandomh · 2024-03-04T23:23:17.994Z

There's been a lot of previous interest in indoor CO2 in the rationality community, including an (unsuccessful) CO2 stripper project, some research summaries, and self-experiments. The results are confusing; I suspect some of the older research might be fake. But I noticed something that has greatly changed how I think about CO2 in relation to cognition.

Exhaled air is about 50,000 ppm CO2. Outdoor air is about 400 ppm; indoor air ranges from 500 to 1500 ppm depending on ventilation. Since the CO2 concentration of exhaled air is roughly two orders of magnitude larger than the variation in room CO2, if even a small percentage of inhaled air is reinhalation of exhaled air, this will have a significantly larger effect than changes in ventilation. I'm having trouble finding a straight answer about what percentage of inhaled air is rebreathed (other than in the context of mask-wearing), but given the diffusivity of CO2, I would be surprised if it wasn't at least 1%.
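The rebreathing argument is a mixing calculation. A minimal sketch, assuming inhaled air is a simple linear mix of room air and a fraction `f` of your own exhaled air at the ~50,000 ppm figure above; the fractions tried are illustrative only, since the real value is exactly the unknown this paragraph is asking about:

```python
EXHALED_PPM = 50_000  # approximate exhaled CO2 concentration, per the text


def inhaled_co2_ppm(room_ppm: float, rebreathed_fraction: float,
                    exhaled_ppm: float = EXHALED_PPM) -> float:
    """Effective inhaled CO2 if a fraction of each breath is your own exhaled air.

    Assumes simple linear mixing of room air and exhaled air (an illustrative
    model, not a measured one).
    """
    if not 0.0 <= rebreathed_fraction <= 1.0:
        raise ValueError("rebreathed_fraction must be in [0, 1]")
    return (1.0 - rebreathed_fraction) * room_ppm + rebreathed_fraction * exhaled_ppm


# Compare a well-ventilated room (500 ppm) with a poorly ventilated one (1500 ppm)
# under a few hypothetical rebreathing fractions.
for room in (500, 1500):
    for f in (0.0, 0.01, 0.02):
        print(f"room={room} ppm, rebreathed={f:.0%}: inhaled ~ {inhaled_co2_ppm(room, f):.0f} ppm")
```

Under these assumptions, each percentage point of rebreathed air adds roughly 500 ppm, so a 1% fraction adds about half the spread between the well-ventilated and poorly ventilated rooms, and 2% adds about the whole spread.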

This predicts that a slight breeze, which replaces the air in front of your face and prevents reinhalation, would have a considerably larger effect than ventilating an indoor space where the air is mostly still. This matches my subjective experience of indoor vs outdoor spaces, which, while extremely confounded, feels like an air-quality difference larger than CO2 sensors would predict.

This also predicts that a small fan, positioned so it replaces the air in front of my face, would have a large effect on the same axis as improved ventilation would. I just set one up. I don't know whether it's making a difference but I plan to leave it there for at least a few days.

(Note: CO2 is sometimes used as a proxy for ventilation in contexts where the thing you actually care about is respiratory aerosol, because it affects transmissibility of respiratory diseases like COVID and influenza. This doesn't help with that at all and if anything would make it worse.)

↑ comment by Gunnar_Zarncke · 2024-03-05T13:56:34.019Z

This indicates that how we breathe plays a big role in CO2 uptake. Like, shallow or full, small or large volumes, or the speed of exhaling. Breathing technique is a key skill of divers and can be learned. I just started reading the book Breath, which seems to have a lot on it.

↑ comment by Adam Scholl (adam_scholl) · 2024-03-06T13:55:26.142Z

Huh, I've also noticed a larger effect from indoors/outdoors than seems reflected by CO2 monitors, and that I seem smarter when it's windy, but I never thought of this hypothesis; it's interesting, thanks.

↑ comment by Gunnar_Zarncke · 2024-03-05T13:52:18.887Z

Ah, very related: exhaled air contains 44,000 ppm CO2 and is used for mouth-to-mouth resuscitation without problems.

↑ comment by Joel Burget (joel-burget) · 2024-03-06T17:27:37.514Z

I assume the 44k ppm CO2 exhaled air is the product of respiration (i.e., the lungs have processed it), whereas the air used in mouth-to-mouth is quickly inhaled and exhaled.

↑ comment by Gunnar_Zarncke · 2024-03-07T06:55:41.440Z

As the rescuer still has to breathe regularly, there will still be significantly elevated CO2 in the air given for resuscitation. I'd guess maybe half, around 20k ppm. It would be interesting to see somebody measure that.

↑ comment by kave · 2024-03-08T18:59:16.041Z

I had previously guessed air movement made me feel better because my body expected air movement (i.e. some kind of biophilic effect). But this explanation seems more likely in retrospect! I'm not quite sure how to run the calculation using the diffusivity coefficient to spot check this, though.

comment by jimrandomh · 2020-04-14T19:16:48.052Z

I am now reasonably convinced (p>0.8) that SARS-CoV-2 originated in an accidental laboratory escape from the Wuhan Institute of Virology.

1. If SARS-CoV-2 originated in a non-laboratory zoonotic transmission, then the geographic location of the initial outbreak would be drawn from a distribution which is approximately uniformly distributed over China (population-weighted); whereas if it originated in a laboratory, the geographic location is drawn from the commuting region of a lab studying that class of viruses, of which there is currently only one. Wuhan has <1% of the population of China, so this is (order of magnitude) a 100:1 update.
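Point 1 is a likelihood-ratio argument. A minimal sketch of the odds-form Bayesian update, using the text's "<1% of the population" as an upper bound and assuming a single relevant lab located in Wuhan; the prior odds below are a hypothetical placeholder for illustration, not a figure proposed anywhere in this thread:

```python
def posterior_odds(prior_odds: float, likelihood_ratio: float) -> float:
    """Bayes' rule in odds form: posterior odds = prior odds * likelihood ratio."""
    return prior_odds * likelihood_ratio


def odds_to_probability(odds: float) -> float:
    """Convert odds (for:against) into a probability."""
    return odds / (1.0 + odds)


# Location evidence, using the text's figures:
# under zoonosis, the outbreak city is roughly population-weighted (Wuhan < 1% of China);
# under lab escape, it is near the lab, assumed here to be the only one, in Wuhan.
p_wuhan_given_zoonosis = 0.01  # upper bound from the text
p_wuhan_given_lab = 1.0        # assumption: exactly one such lab, located in Wuhan
lr = p_wuhan_given_lab / p_wuhan_given_zoonosis  # ~100, the "100:1 update"

# Hypothetical prior odds (lab escape : zoonosis) of 1:50, for illustration only.
prior = 1 / 50
post = posterior_odds(prior, lr)
print(f"likelihood ratio ~ {lr:.0f}:1")
print(f"posterior odds ~ {post:.1f}:1, P(lab) ~ {odds_to_probability(post):.2f}")
```

The likelihood ratio only multiplies the prior odds, so the conclusion depends as much on the starting prior as on the 100:1 factor.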

2. No factor other than the presence of the Wuhan Institute of Virology and related biotech organizations distinguishes Wuhan or Hubei from the rest of China. It is not the location of the bat-caves that SARS was found in; those are in Yunnan. It is not the location of any previous outbreaks. It does not have documented higher consumption of bats than the rest of China.

3. There have been publicly reported laboratory escapes of SARS twice before in Beijing, so we know this class of virus is difficult to contain in a laboratory setting.

4. We know that the Wuhan Institute of Virology was studying SARS-like bat coronaviruses. As reported in the Washington Post today, US diplomats had expressed serious concerns about the lab's safety.

5. China has adopted a policy of suppressing research into the origins of SARS-CoV-2, which they would not have done if they expected that research to clear them of scandal. Some Chinese officials are in a position to know.

To be clear, I don't think this was an intentional release. I don't think it was intended for use as a bioweapon. I don't think it underwent genetic engineering or gain-of-function research, although nothing about it conclusively rules this out. I think the researchers had good intentions, and screwed up.

↑ comment by lbThingrb · 2020-04-15T04:12:26.980Z

This Feb. 20th Twitter thread from Trevor Bedford argues against the lab-escape scenario. Do read the whole thing, but I'd say that the key points not addressed in the parent comment are:

Data point #1 (virus group): #SARSCoV2 is an outgrowth of circulating diversity of SARS-like viruses in bats. A zoonosis is expected to be a random draw from this diversity. A lab escape is highly likely to be a common lab strain, either exactly 2002 SARS or WIV1.

But apparently SARSCoV2 isn't that.

Data point #2 (receptor binding domain): This point is rather technical, please see preprint by @K_G_Andersen, @arambaut, et al at http://virological.org/t/the-proximal-origin-of-sars-cov-2/398… for full details.

But, briefly, #SARSCoV2 has 6 mutations to its receptor binding domain that make it good at binding to ACE2 receptors from humans, non-human primates, ferrets, pigs, cats, pangolins (and others), but poor at binding to bat ACE2 receptors.

This pattern of mutation is most consistent with evolution in an animal intermediate, rather than lab escape. Additionally, the presence of these same 6 mutations in the pangolin virus argues strongly for an animal origin: https://biorxiv.org/content/10.1101/2020.02.13.945485v1…

...

Data point #3 (market cases): Many early infections in Wuhan were associated with the Huanan Seafood Market. A zoonosis fits with the presence of early cases in a large animal market selling diverse mammals. A lab escape is difficult to square with early market cases.

...

Data point #4 (environmental samples): 33 out of 585 environmental samples taken from the Huanan seafood market showed as #SARSCoV2 positive. 31 of these were collected from the western zone of the market, where wildlife booths are concentrated. http://xinhuanet.com/english/2020-01/27/c_138735677.htm…

Environmental samples could in general derive from human infections, but I don't see how you'd get this clustering within the market if these were human derived.

One scenario I recall seeing somewhere that would reconcile lab-escape with data points 3 & 4 above is that some low-level WIV employee or contractor might have sold some purloined lab animals to the wet market. No idea how plausible that is.

↑ comment by ChristianKl · 2020-04-23T08:24:00.495Z

Data point #3 (market cases): Many early infections in Wuhan were associated with the Huanan Seafood Market. A zoonosis fits with the presence of early cases in a large animal market selling diverse mammals. A lab escape is difficult to square with early market cases.

Given the claim in Botao Xiao's "The possible origins of 2019-nCoV coronavirus" that this seafood market was located 300 m from a lab (which might or might not be true), the early market cases don't seem to reduce the chance of a lab escape.

↑ comment by Rudi C (rudi-c) · 2020-06-12T12:06:30.568Z

We need to update down on any complex, technical datapoint that we don’t fully understand, as China has surely paid researchers to manufacture hard-to-evaluate evidence for its own benefit (regardless of the truth of the accusation). This is a classic technique that I have seen a lot in propaganda against laypeople, and there is every reason it should have been employed against the “smart” people in the current coronavirus situation.

↑ comment by Randomized, Controlled (BossSleepy) · 2021-03-17T21:45:52.185Z

The most recent episode of the 80k podcast had Andy Weber on it. He was the US Assistant Secretary of Defense, "responsible for biological and other weapons of mass destruction".

Towards the end of the episode he casually drops quite the bomb:

Well, over time, evidence for natural spread hasn’t been produced, we haven’t found the intermediate species, you know, the pangolin that was talked about last year. I actually think that the odds that this was a laboratory-acquired infection that spread perhaps unwittingly into the community in Wuhan is about a 50% possibility... And we know that the Wuhan Institute of Virology was doing exactly this type of research [gain of function research]. Some of it — which was funded by the NIH for the United States — on bat Coronaviruses. So it is possible that in doing this research, one of the workers at that laboratory got sick and went home. And now that we know about asymptomatic spread, perhaps they didn’t even have symptoms and spread it to a neighbor or a storekeeper. So while it seemed an unlikely hypothesis a year ago, over time, more and more evidence leaning in that direction has come out. And it’s wrong to dismiss that as kind of a baseless conspiracy theory. I mean, very, very serious scientists like David Relman from Stanford think we need to take the possibility of a laboratory accident seriously.

The included link is to a statement from the US Embassy in Georgia, which to me seems surprisingly blunt, calling out the CCP for obfuscation, and documenting events at the WIV, going so far as to speculate that they were doing bio-weapons research there.

↑ comment by Lukas_Gloor · 2020-04-15T21:48:01.286Z

What about allegations that a pangolin was involved? Would they have had pangolins in the lab as well or is the evidence about pangolin involvement dubious in the first place?

Edit: This wasn't meant as a joke. My point is: why did initial analyses conclude that the SARS-CoV-2 virus is adapted to receptors of animals other than bats, suggesting that it had an intermediary host, quite likely a pangolin? This contradicts the story of "bat researchers kept a bat-only virus in a lab and accidentally released it."

↑ comment by Spiracular · 2020-05-09T19:28:03.796Z

I think it's probably a virus that was merely identified in pangolins, but whose primary host is probably not pangolins.

The pangolins they sequenced weren't asymptomatic carriers at all; they were sad smuggled specimens that were dying of many different diseases simultaneously.

I looked into this semi-recently, and wrote something up here.

The pangolins were apprehended in Guangxi, which shares part of its border with Yunnan. Neither of these provinces is directly contiguous with Hubei (Wuhan's province), fwiw.

↑ comment by mako yass (MakoYass) · 2020-04-19T00:21:04.778Z

How do you know there's only one lab in China studying these viruses?

↑ comment by Pattern · 2020-04-15T18:43:32.794Z

1. If SARS-CoV-2 originated in a non-laboratory zoonotic transmission, then the geographic location of the initial outbreak would be drawn from a distribution which is approximately uniformly distributed over China (population-weighted); whereas if it originated in a laboratory, the geographic location is drawn from the commuting region of a lab studying that class of viruses, of which there is currently only one. Wuhan has <1% of the population of China, so this is (order of magnitude) a 100:1 update.

This is an assumption.

While it might be comparatively correct, I'm not sure about the magnitude. Under the circumstances, perhaps we should consider the possibility that there is something we don't know about Wuhan that makes it more likely.

3. There have been publicly reported laboratory escapes of SARS twice before in Beijing, so we know this class of virus is difficult to contain in a laboratory setting.

That's nice to know.

↑ comment by Chris_Leong · 2020-04-15T03:41:56.595Z

Maybe they don't know whether it escaped or not. Maybe they just think there is a chance that the evidence will implicate them and they figure it's not worth the risk as there'll only be consequences if there is definitely proof that it escaped from one of their labs and not mere speculation.

Or maybe they want to argue that it didn't come from China? I think they've already been pushing this angle.

↑ comment by Jayson_Virissimo · 2020-04-14T20:30:57.836Z

Not sure if you have seen this yet, but they conclude:

Our analyses clearly show that SARS-CoV-2 is not a laboratory construct or a purposefully manipulated virus...

Are they assuming a false premise or making an error in reasoning somewhere?

↑ comment by jimrandomh · 2020-04-14T20:42:28.238Z

First, a clarification: whether SARS-CoV-2 was laboratory-constructed or manipulated is a separate question from whether it escaped from a lab. The main reason a lab would be working with SARS-like coronavirus is to test drugs against it in preparation for a possible future outbreak from a zoonotic source; those experiments would involve culturing it, but not manipulating it.

But also: If it had been the subject of gain-of-function research, this probably wouldn't be detectable. The example I'm most familiar with, the controversial 2012 US A/H5N1 gain of function study, used a method which would not have left any genetic evidence of manipulation.

↑ comment by habryka (habryka4) · 2020-04-14T20:36:15.983Z

The article says:

Our analyses clearly show that SARS-CoV-2 is not a laboratory construct or a purposefully manipulated virus

and

It is so effective at attaching to human cells that the researchers said the spike proteins were the result of natural selection and not genetic engineering.

I think the article just says that the virus did not undergo genetic engineering or gain-of-function research, which is also what Jim says above.

↑ comment by Jayson_Virissimo · 2020-04-14T20:41:09.250Z

Ah, yes: their headline is very misleading then! It currently reads "The coronavirus did not escape from a lab. Here's how we know."

I'll shoot the editor an email and see if they can correct it.

EDIT: Here's me complaining about the headline on Twitter.

↑ comment by jimrandomh · 2020-04-14T20:44:29.588Z

Genetic engineering is ruled out, but gain-of-function research isn't.

↑ comment by Spiracular · 2020-09-15T18:55:20.546Z

A Chinese virology researcher has released a report claiming that SARS-2 might even be genetically manipulated after all. After assessing it, I'm not really convinced of the GMO claims, but the RaTG13 story definitely seems to have something weird going on.

Claims that the RaTG13 genome release was a cover-up (it does look like something's fishy with RaTG13, although it might be different than Yan thinks). Claims ZC45 and/or ZXC21 was the actual backbone (I'm feeling super-skeptical of this bit, but it has been hard for me to confirm either way).

https://zenodo.org/record/4028830#.X2EJo5NKj0v (aka Yan Report)

RaTG13 Looks Fishy

Looks like something fishy happened with RaTG13, although I'm not convinced that genetic modification was involved. This is an argument built on pre-prints, but they appear to offer several different lines of evidence that something weird happened here.

Simplest story (via R&B): It looks like people first sequenced this virus in 2016, under the name "BtCOV/4991", using mine samples from 2013. And for some reason, WIV re-released the sequence as "RaTG13" at a later date?

(edit: I may have just had a misunderstanding. Maybe BtCOV/4991 is the name of the virus as sequenced from miner-lungs, RaTG13 is the name of the virus as sequenced from floor droppings? But in that case, why is the "fecal" sample reading so weirdly low-bacteria? And they probably are embarrassed that it took them that long to sequence the fecal samples, and should be.)

A paper by Indian researchers Rahalkar and Bahulikar ( https://doi.org/10.20944/preprints202005.0322.v1 ) notes that BtCoV/4991, sequenced in 2016 by the same Wuhan Institute of Virology researchers (and taken from 2013 samples of a mineshaft that gave miners deadly pneumonia), was very similar to, and likely the same as, RaTG13.

A preprint by Rahalkar and Bahulikar (R&B) ( doi: 10.20944/preprints202008.0205.v1 ) notes that the fraction of bacterial genomes in the RaTG13 "fecal" sample was ABSURDLY low ("only 0.7% in contrast to 70-90% abundance in other fecal swabs from bats"). Something's weird there.

A more recent weird datapoint: A pre-print Yan referenced ( https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7337384/ ), whose finding (in graphs; it was left unclear in their wording) was indeed that a RaTG13 protein didn't competently bind their Bat ACE2 samples, but rather their Rat, Mouse, Human, and Pig ACE2. It's supposedly a horseshoe bat virus (sequenced by the Wuhan lab), so this seems hecka fishy to me.

(Sure, their bat samples weren't precisely the same species, but they tried 2 species from the same genus. SARS-2 DID bind for their R. macrotis bat sample, so it seems extra-fishy to me that RaTG13 didn't.)

((...oh. According to the R&B paper about the mineshaft, it was FILTHY with rats, bats, poop, and fungus. And the CoV genome showed up in only one of ~280 samples taken. If it's like that, who the hell knew if it came from a rat or bat?))

At this point, RaTG13 is genuinely looking pretty fishy to me. It might actually take evidence of a conspiracy theory in the other direction for me to go back to neutral on that.

E-Protein Similarity? Meh.

I'm not finding the Protein-E sequence similarity super-convincing in itself, because while the logic is fine, it's very multiple-hypothesis-testing flavored.

I'm still looking into the ZC45 / ZXC21 claim, which I'm currently feeling skeptical of. Here's the paper that characterized those: doi: 10.1038/s41426-018-0155-5. It's true that it was by people working at "Research Institute for Medicine of Nanjing Command." However, someone on Twitter used BLAST on the E-protein sequence, and found a giant pile of different highly-related SARS-like coronaviruses. I'm trying to replicate that analysis using BLAST myself, and at a skim the 100% results are all more SARS-CoV-2, and the close (95%) results are damned diverse. ...I don't see ZC in them; it looks like it wasn't uploaded. Ugh. (The E-protein is only 75 amino acids long anyway. https://www.ncbi.nlm.nih.gov/protein/QIH45055.1 )

A different paper mentions extreme S2-protein similarity of early SARS-CoV-2 samples to ZC45, but that protein is highly conserved. That makes this a less surprising or meaningful result. (E was claimed to be fast-evolving, so its identicality would have been more surprising, but I couldn't confirm it.) https://doi.org/10.1080/22221751.2020.1719902

Other

I think Yan offers a reasonable argument that a method could have been used that avoids obvious genetic-modification "stitches," instead using methods that are hard to distinguish from natural recombination events (ex: recombination in yeast). Sounds totally possible to me.

The fact that the early SARS-CoV-2 samples were already quite adapted to human ACE2 and didn't have the rapid-evolution you'd expect from a fresh zoonotic infection is something a friend of mine had previously noted, probably after reading the following paper (recommended): https://www.biorxiv.org/content/10.1101/2020.05.01.073262v1 (Zhan, Deverman, Chan). This fact does seem fishy, and had already pushed me a bit towards the "Wuhan lab adaptation & escape" theory.

↑ comment by habryka (habryka4) · 2020-04-14T19:20:11.091Z

Wuhan has <1% of the population of China, so this is (order of magnitude) a 100:1 update.

I agree that this is technically correct, but the prior for "escaped specifically from a lab in Wuhan" is also probably ~100 times lower than the prior for "escaped from any biolab in China", which makes this sentence feel odd to me. I feel like I have reasonable priors for "direct human-to-human transmission" vs. "accidentally released from a lab", but don't have good priors for "escaped specifically from a lab in Wuhan".

↑ comment by jimrandomh · 2020-04-14T19:25:16.438Z

I agree that this is technically correct, but the prior for "escaped specifically from a lab in Wuhan" is also probably ~100 times lower than the prior for "escaped from any biolab in China"

I don't think this is true. The Wuhan Institute of Virology is the only biolab in China with a BSL-4 certification, and therefore is probably the only biolab in China which could legally have been studying this class of virus. While the BSL-3 Chinese Institute of Virology in Beijing studied SARS in the past and had laboratory escapes, I expect all of that research to have been shut down or moved, given the history, and I expect a review of Chinese publications will not find any studies involving live virus testing outside of WIV. While the existence of one or two more labs in China studying SARS would not be super surprising, the existence of 100 would be extremely surprising, and would be a major scandal in itself.
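The disagreement here comes down to how many labs were working with this class of virus. A minimal sketch, assuming a lab escape is equally likely from each such lab and reusing the "<1% of the population" figure as an upper bound; the lab counts are hypothetical, chosen to span "only WIV" through "100 labs":

```python
WUHAN_POPULATION_SHARE = 0.01  # upper bound from the thread ("<1% of the population of China")


def location_likelihood_ratio(labs_in_wuhan: int, labs_total: int,
                              wuhan_population_share: float = WUHAN_POPULATION_SHARE) -> float:
    """Likelihood ratio for "outbreak began in Wuhan": lab escape vs population-weighted zoonosis.

    Assumes each lab working with this virus class is equally likely to be the source.
    """
    if not 0 < labs_in_wuhan <= labs_total:
        raise ValueError("need 0 < labs_in_wuhan <= labs_total")
    p_wuhan_given_lab = labs_in_wuhan / labs_total
    return p_wuhan_given_lab / wuhan_population_share


# Hypothetical lab counts, with exactly one lab in Wuhan each time.
for total_labs in (1, 3, 100):
    print(total_labs, round(location_likelihood_ratio(1, total_labs), 1))
```

With one lab the location evidence is worth about 100:1; with three it drops to about 33:1; with 100 labs, one of them in Wuhan, it vanishes entirely, which is why the existence of many such labs would undercut the original argument.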

↑ comment by Ben Pace (Benito) · 2020-04-14T19:53:46.743Z

Woah. That's an important piece of info. The lab in Wuhan is the only lab in China allowed to deal with this class of virus. That's very suggestive info indeed.

↑ comment by jimrandomh · 2020-04-14T19:55:58.508Z

That's overstating it. They're the only BSL-4 lab. Whether BSL-3 labs were allowed to deal with this class of virus is something that someone should research.

↑ comment by Howie Lempel (howie-lempel) · 2020-04-15T15:29:42.417Z

[I'm not an expert.]

My understanding is that SARS-CoV-1 is generally treated as a BSL-3 pathogen or a BSL-2 pathogen (for routine diagnostics and other relatively safe work) and not BSL-4. At the time of the outbreak, SARS-CoV-2 would have been a random animal coronavirus that hadn't yet infected humans, so I'd be surprised if it had more stringent requirements.

Your OP currently states: "a lab studying that class of viruses, of which there is currently only one." If I'm right that you're not currently confident this is the case, it might be worth adding some kind of caveat or epistemic status flag or something.

---

Some evidence:

- A 2017 news article in Nature about the Wuhan Institute of Virology suggests China doesn't require a BSL-4 for SARS-CoV-1. "Future plans include studying the pathogen that causes SARS, which also doesn’t require a BSL-4 lab."

- CDC's current interim biosafety guidelines on working with SARS-CoV-2 recommend BSL-3 or BSL-2.

- WHO biosafety guidelines from 2003 recommend BSL-3 or BSL-2 for SARS-CoV-1. I don't know if these are up to date.

- Outdated CDC guidelines recommend BSL-3 or BSL-2 for SARS-CoV-1. Couldn't very quickly Google anything current.

↑ comment by Howie Lempel (howie-lempel) · 2020-04-23T07:17:54.545Z

Do you still think there's a >80% chance that this was a lab release?

↑ comment by Ben Pace (Benito) · 2020-04-14T20:37:53.846Z

Thank you for the correction.

↑ comment by leggi · 2020-04-23T08:21:24.513Z

Whether BSL-3 labs were allowed to deal with this class of virus is something that someone should research.

Did anyone do some research?

---

SARS-related coronavirus (SARSr-CoV) makes the BSL-4 list on Wikipedia.

But what's the probability that animal-based coronaviruses (being very widespread in a lot of species) were restricted to BSL-4 labs?

---

COVID19 and BSL according to:

W.H.O. Laboratory biosafety guidance related to the novel coronavirus (2019-nCoV)

Non-propagative diagnostic laboratory work including, sequencing, nucleic acid amplification test (NAAT) on clinical specimens from patients who are suspected or confirmed to be infected with nCoV, should be conducted adopting practices .... ... in the interim, Biosafety Level 2 (BSL-2) in the WHO Laboratory Biosafety Manual, 3rd edition remains appropriate until the 4th edition replaces it.

Handling of material with high concentrations of live virus (such as when performing virus propagation, virus isolation or neutralization assays) or large volumes of infectious materials should be performed only by properly trained and competent personnel in laboratories capable of meeting additional essential containment requirements and practices, i.e. BSL-3.

↑ comment by habryka (habryka4) · 2020-04-14T19:45:38.032Z

Ok, that makes sense to me. I didn't have much of a prior on the Wuhan lab being much more likely to have been involved in this kind of research.

↑ comment by Andrew_Clough · 2020-04-17T14:53:56.259Z

Do we have any good sense of the extent to which researchers from the Wuhan Institute of Virology are flying out across China to investigate novel pathogens or sites where novel pathogens might emerge?

comment by jimrandomh · 2024-12-30T05:46:16.150Z

There really ought to be a parallel food supply chain, for scientific/research purposes, where all ingredients are high-purity, in a similar way to how the ingredients going into a semiconductor factory are high-purity. Manufacture high-purity soil from ultrapure ingredients, fill a greenhouse with plants with known genomes, water them with ultrapure water. Raise animals fed with high-purity plants. Reproduce a typical American diet in this way.

This would be very expensive compared to normal food, but quite scientifically valuable. You could randomize a study population to identical diets, using either high-purity or regular ingredients. This would give a definitive answer to whether obesity (and any other health problems) is caused by a contaminant. Then you could replace portions of the inputs with the default supply chain, and figure out where the problems are.

Part of why studying nutrition is hard is that we know things were better in some important way 100 years ago, but we no longer have access to that baseline. But this is fixable.

↑ comment by Drake Thomas (RavenclawPrefect) · 2024-12-31T14:35:27.069Z

I agree this seems pretty good to do, but I think it'll be tough to rule out all possible contaminant theories with this approach:

- Some kinds of contaminants will be really tough to handle, eg if the issue is trace amounts of radioactive isotopes that were at much lower levels before atmospheric nuclear testing.

- It's possible that there are contaminant-adjacent effects arising from preparation or growing methods that aren't related to the purity of the inputs, eg "tomato plants in contact with metal stakes react by expressing obesogenic compounds in their fruits, and 100 years ago everyone used wooden stakes so this didn't happen"

- If 50% of people will develop a propensity for obesity by consuming more than trace amounts of contaminant X, and everyone living life in modern society has some X on their hands and in their kitchen cabinets and so on, the food alone being ultra-pure might not be enough.

Still seems like it'd provide a 5:1 update against contaminant theories if this experiment didn't affect obesity rates though.

↑ comment by ChristianKl · 2025-01-01T01:52:26.488Z

The main problem of nutritional research is that it's hard to get people to eat controlled diets. I don't think the key problem is about sourcing ingredients.

↑ comment by Viliam · 2025-01-14T16:56:05.373Z

I would agree to eat only food given to me by researchers for a year, as long as I can choose what the food is (and they give me, e.g., the high-purity version of it). Especially if they would bring it to my home and I wouldn't have to pay.

But yeah, for more social people it would be inconvenient.

↑ comment by ChristianKl · 2025-01-15T17:57:18.374Z

It's not just a question of whether people agree but whether they actually comply with it. People agree to all sorts of things but then do something else.

↑ comment by Viliam · 2025-01-15T19:20:23.864Z

Ah, yes. Recently I volunteered for a medical study along with 3 other people I know. Two of them dropped out in the middle. I can't imagine how any medical research can be methodologically valid this way. On the other hand, the other person and I stayed, and it's almost over, so the success rate is 50%.

↑ comment by jimrandomh · 2025-01-01T05:41:07.701Z

I don't think that's true. Or rather, it's only true in the specific case of studies that involve calorie restriction. In practice that's a large (excessive) fraction of studies, but testing variations of the contamination hypothesis does not require it.

↑ comment by ChristianKl · 2025-01-01T15:16:16.231Z

If it were only true in the case of calorie restriction, why don't we have better studies about the effects of salt?

People like to eat together with other people. They go together to restaurants to eat shared meals. They have family dinners.

↑ comment by Tao Lin (tao-lin) · 2024-12-30T18:04:25.324Z

there is https://shop.nist.gov/ccrz__ProductList?categoryId=a0l3d0000005KqSAAU&cclcl=en_US which fulfils some of this

↑ comment by jimrandomh · 2024-12-30T21:37:33.294Z

Some of it, but not the main thing. I predict (without having checked) that if you do the analysis (or check an analysis that has already been done), it will have approximately the same amount of contamination from plastics, agricultural additives, etc., as the default food supply.

↑ comment by tailcalled · 2024-12-30T11:16:21.419Z

Wouldn't it be much cheaper and easier to take a handful of really obese people, sample from the various things they eat, and look for contaminants?

Replies from: tailcalled↑ comment by tailcalled · 2024-12-30T12:06:04.996Z · LW(p) · GW(p)

Wait, no.

The obvious objection to my comment would be, what if people who are really obese are obese for different reasons than the reason obesity has increased over time? (With the latter being what I assume jimrandomh is trying to figure out.)

I had thought of that counter but dismissed it because, AFAIK, the rate of severe obesity has also increased a lot over time. So it seems like severe obesity would have the same cause as the increase over time.

But, we could imagine something like, contaminant -> increase in moderate obesity -> societal adjustment to make obesity more feasible (e.g. mobility scooters) -> increase in severe obesity.

Replies from: jimrandomh↑ comment by jimrandomh · 2024-12-30T21:34:21.917Z · LW(p) · GW(p)

Studying the diets of outlier-obese people is definitely something we should be doing (and are doing, a little), but yeah, the outliers are probably going to be obese for reasons other than "the reason obesity has increased over time, but more so".

comment by jimrandomh · 2025-01-21T21:04:05.060Z · LW(p) · GW(p)

Recently, a lot of very-low-quality cryptocurrency tokens have been seeing enormous "market caps". I think a lot of people are getting confused by that, and are resolving the confusion incorrectly. If you see a claim that a coin named $JUNK has a market cap of $10B, there are three possibilities. Either: (1) The claim is entirely false, (2) there are far more fools with more money than expected, or (3) the $10B number is real, but doesn't mean what you're meant to think it means.

The first possibility, that the number is simply made up, is pretty easy to cross off; you can check with a third party. Most people settle on the second possibility: that there are surprisingly many fools throwing away their money. The correct answer is option 3: "market cap" is a tricky concept. And, it turns out that fixing the misconception here also resolves several confusions elsewhere.

(This is sort-of vagueblogging a current event, but the same current event has been recurring every week with different names on it for over a year now. So I'm explaining the pattern, and deliberately avoiding mention of any specific memecoin.)

Suppose I autograph a hat, then offer to sell you one-trillionth of that hat for $1. You accept. This hat now has a "market cap" of $1T. Of course, it would be silly (or deceptive) if people then started calling me a trillionaire.

Meme-coins work similarly, but with extra steps. The trick is that while they superficially look like a market of people trading with each other, in reality almost all trades have the coin's creator on one side of the transaction, so the creator controls the price and optimizes it for generating hype.

Suppose I autograph a hat, call it HatCoin, and start advertising it. Initially there are 1000 HatCoins, and I own all of them. I get 4 people, arriving one at a time, each of whom decides to spend $10 on HatCoin. They might be thinking of it as an investment, or they might be thinking of it as a form of gambling, or they might be using it as a tipping mechanism, because I have entertained them with a livestream about my hat. The two key elements at this stage are (1) I'm the only seller, and (2) the buyers aren't paying much attention to what fraction of the HatCoin supply they're getting. As each buyer arrives and spends their $10, I decide how many HatCoins to give them, and that decision sets the "price" and "market cap" of HatCoin. If I give the first buyer 10 coins, the second buyer 5 coins, the third buyer 2 coins, and the fourth buyer 1 coin then the "price per coin" went from $1 to $2 to $5 to $10, and since there are 1000 coins in existence, the "market cap" went from $1k to $2k to $5k to $10k. But only $40 has actually changed hands.
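The HatCoin arithmetic can be checked with a few lines of Python. This is a minimal sketch under the assumptions of the example above: the creator is the only seller, the quoted "price" is just the last sale's dollars-per-coin, and the "market cap" is that price multiplied by the full 1000-coin supply. The `Sale` and `simulate` names are hypothetical, made up for illustration.

```python
from dataclasses import dataclass

TOTAL_SUPPLY = 1000  # every HatCoin in existence; the creator starts holding all of them


@dataclass
class Sale:
    dollars: float  # cash the buyer hands over
    coins: int      # coins the creator chooses to give in return


def simulate(sales):
    """Return (cash actually received, [(price, market_cap) after each sale])."""
    cash = 0.0
    history = []
    for sale in sales:
        cash += sale.dollars
        price = sale.dollars / sale.coins     # the last trade sets the quoted "price"
        market_cap = price * TOTAL_SUPPLY     # price times every coin, sold or not
        history.append((price, market_cap))
    return cash, history


# Four buyers each spend $10; the creator hands out 10, 5, 2, then 1 coin.
sales = [Sale(10, 10), Sale(10, 5), Sale(10, 2), Sale(10, 1)]
cash, history = simulate(sales)
for price, cap in history:
    print(f"price ${price:,.0f}/coin -> market cap ${cap:,.0f}")
print(f"cash actually received: ${cash:,.0f}")
```

The gap between the last market cap and the cash total is the whole point: the "market cap" scales with the supply the creator still holds, while the cash figure only counts money that actually changed hands.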

At this stage, no one else has started selling yet, so I fully control the price graph. I choose a shape that is optimized for a combination of generating hype (so, big numbers), and convincing people that if they buy they'll have time left before the bubble bursts (so, not too big).

Now suppose the third buyer, who has 2 coins that are supposedly worth $20, decides to sell them. One of two things happens. Option 1 is that I buy them back for $20 (half of my profit so far), and retain control of the price. Option 2 is that I don't buy them, in which case the price goes to zero and I exit with $40.

If a news article is written about this, the article will say that I made off with $10k (the "market cap" of the coin at its peak). However, I only have $40. The honest version of the story, the one that says I made off with $40, isn't newsworthy, so it doesn't get published or shared.

Replies from: robirahman↑ comment by Robi Rahman (robirahman) · 2025-03-18T22:59:18.313Z · LW(p) · GW(p)

Related: YouTuber becomes the world's richest person by making a fictional company with 10B shares and selling one share for 50 GBP

comment by jimrandomh · 2024-07-02T04:01:40.097Z · LW(p) · GW(p)

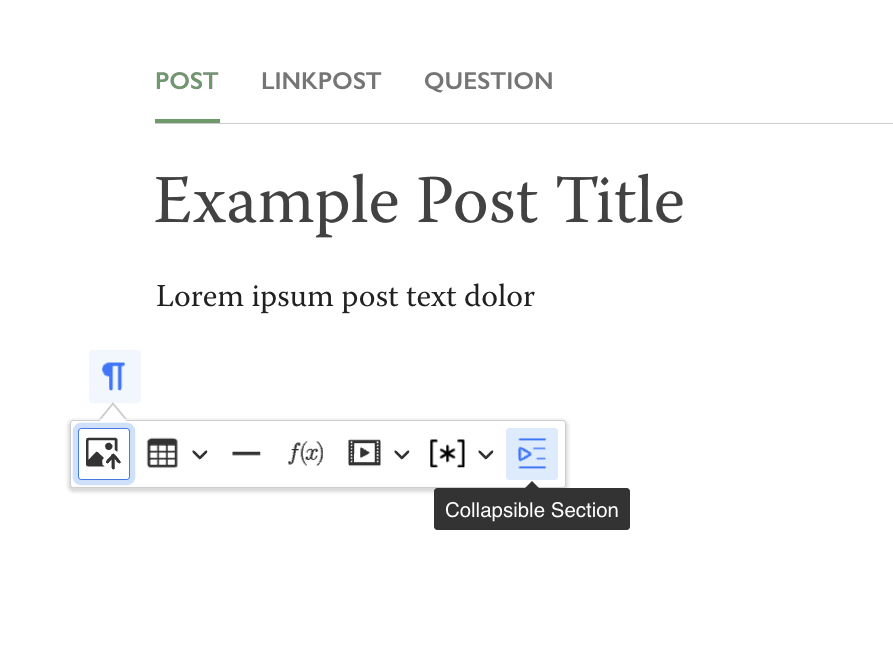

LessWrong now has collapsible sections in the post editor (currently only for posts, but we should be able to also extend this to comments if there's demand.) To use the, click the insert-block icon in the left margin (see screenshot). Once inserted, they

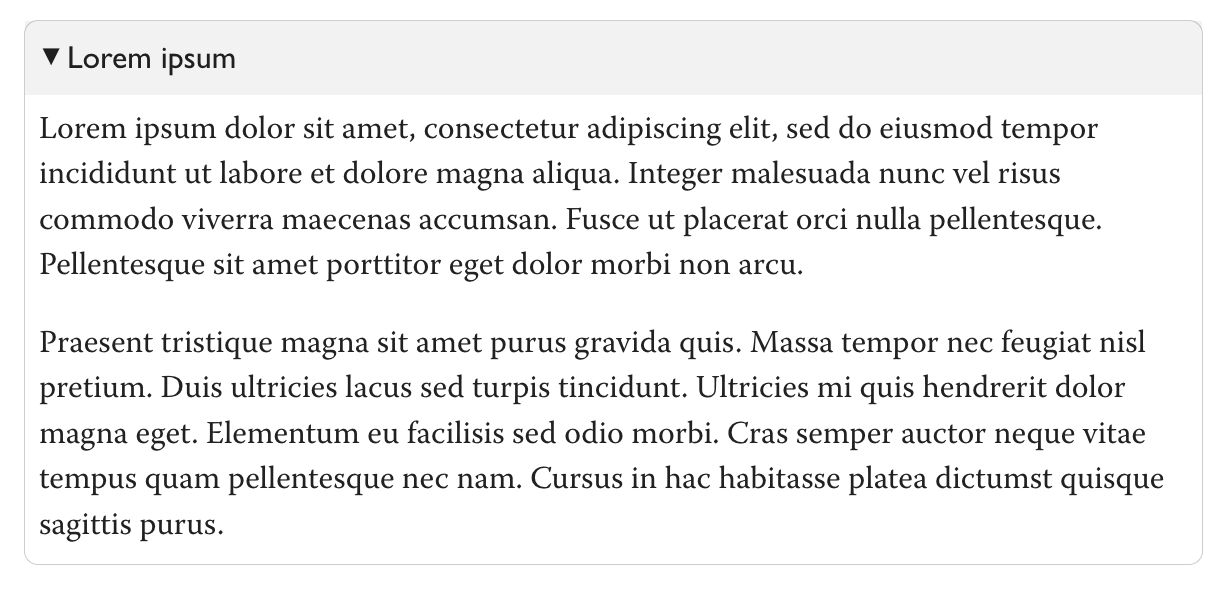

They start out closed; when open, they look like this:

When viewing the post outside the editor, they will start out closed and have a click-to-expand. There are a few known minor issues editing them; in particular the editor will let you nest them but they look bad when nested so you shouldn't, and there's a bug where if your cursor is inside a collapsible section, when you click outside the editor, e.g. to edit the post title, the cursor will move back. They will probably work on third-party readers like GreaterWrong, but this hasn't been tested yet.

Replies from: MondSemmel, steve2152↑ comment by MondSemmel · 2024-07-04T15:08:01.710Z · LW(p) · GW(p)

I love the equivalent feature in Notion ("toggles"), so I appreciate the addition of collapsible sections on LW, too. Regarding the aesthetics, though, I prefer the minimalist implementation of toggles in Notion over being forced to have a border plus a grey-colored title. Plus I personally make extensive use of deeply nested toggles. I made a brief example page of how toggles work in Notion. Feel free to check it out, maybe it can serve as inspiration for functionality and/or aesthetics.

↑ comment by Steven Byrnes (steve2152) · 2024-07-03T13:22:40.096Z · LW(p) · GW(p)

Nice. I used collapsed-by-default boxes from time to time when I used to write/edit Wikipedia physics articles—usually (or maybe exclusively) to hide a math derivation that would distract from the flow of the physics narrative / pedagogy. (Example, example, although note that the wikipedia format/style has changed for the worse since the 2010s … at the time I added those collapsed-by-default sections, they actually looked like enclosed gray boxes with black outline, IIRC.)

comment by jimrandomh · 2021-03-24T20:50:26.819Z · LW(p) · GW(p)

In a comment here [LW(p) · GW(p)], Eliezer observed that:

OpenBSD treats every crash as a security problem, because the system is not supposed to crash and therefore any crash proves that our beliefs about the system are false and therefore our beliefs about its security may also be false because its behavior is not known

And my reply to this grew into something that I think is important enough to make as a top-level shortform post.

It's worth noticing that this is not a universal property of high-paranoia software development, but an unfortunate consequence of using the C programming language and of systems programming. In most programming languages and most application domains, crashes only rarely point to security problems. OpenBSD is this paranoid, and needs to be this paranoid, because its architecture is fundamentally unsound (albeit unsound in a way that all the other operating systems born in the same era are also unsound). This suggests a number of analogies that may be useful for thinking about future AI architectural choices.

C has a couple of operations (use-after-free, buffer-overflow, and a few multithreading-related things) which expand false beliefs in one area of the system into major problems in seemingly-unrelated areas. The core mechanic of this is that, once you've corrupted a pointer or an array index, this generates opportunities to corrupt other things. Any memory-corruption attack surface you search through winds up yielding more opportunities to corrupt memory, in a supercritical way, eventually yielding total control over the process and all its communication channels. If the process is an operating system kernel, there's nothing left to do; if it's, say, the renderer process of a web browser, then the attacker gets to leverage its communication channels to attack other processes, like the GPU driver and the compositor. This has the same sub-or-supercriticality dynamic.

Some security strategies try to keep there from being any entry points into the domain where there might be supercritically-expanding access: memory-safe languages, linters, code reviews. Call these entry-point strategies. Others try to drive down the criticality ratio: address space layout randomization, W^X, guard pages, stack guards, sandboxing. Call these mitigation strategies. In an AI-safety analogy, the entry-point strategies include things like decision theory, formal verification, and philosophical deconfusion; the mitigation strategies include things like neural-net transparency and ALBA.
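The sub-versus-supercritical dynamic behaves like a branching process. As a toy illustration only (the Galton-Watson model is my choice here, not something the post specifies), suppose an attacker starts with `initial` exploitable bugs and each foothold yields on average `m` new ones; generation k then contributes initial * m^k footholds in expectation. Both parameter names are hypothetical.

```python
def expected_footholds(initial: float, m: float, generations: int) -> float:
    """Expected total footholds in a Galton-Watson branching process.

    Hypothetical toy model: `initial` exploitable bugs seed generation 0, and
    every foothold yields on average `m` new ones in the next generation, so
    generation k contributes initial * m**k in expectation.
    """
    return initial * sum(m ** k for k in range(generations + 1))


for m in (0.5, 0.9, 1.1, 2.0):
    total = expected_footholds(1, m, 30)
    print(f"m={m}: {total:,.1f} expected footholds after 30 rounds")
```

With m below 1 the total levels off near initial / (1 - m) however long the search continues; with m above 1 it grows without bound. In this picture, entry-point strategies push `initial` toward zero, and mitigation strategies push `m` below 1.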

Computer security is still, in an important sense, a failure: reasonably determined and competent attackers usually succeed. But by the metric "market price of a working exploit chain", things do actually seem to be getting better, and both categories of strategies seem to have helped: compared to a decade ago, it's both more difficult to find a potentially-exploitable bug, and also more difficult to turn a potentially-exploitable bug into a working exploit.

Unfortunately, while there are a number of ideas that seem like mitigation strategies for AI safety, it's not clear if there are any metrics nearly as good as "market price of an exploit chain". Still, we can come up with some candidates--not candidates we can precisely define or measure, currently, but candidates we can think in terms of, and maybe think about measuring in the future, like: how much optimization pressure can be applied to concepts, before perverse instantiations are found? How much control does an inner-optimizer need to start with, in order to take over an outer optimization process? I don't know how to increase these, but it seems like a potentially promising research direction.

Replies from: zac-hatfield-dodds↑ comment by Zac Hatfield-Dodds (zac-hatfield-dodds) · 2021-03-25T02:56:03.622Z · LW(p) · GW(p)

It's worth noticing that this is not a universal property of high-paranoia software development, but an unfortunate consequence of using the C programming language and of systems programming. In most programming languages and most application domains, crashes only rarely point to security problems.

I disagree. While C is indeed terribly unsafe, it is always the case that a safety-critical system exhibiting behaviour you thought impossible is a serious safety risk - because it means that your understanding of the system is wrong, and that includes the safety properties.

comment by jimrandomh · 2021-07-22T05:43:56.514Z · LW(p) · GW(p)

One of the most common, least questioned pieces of dietary advice is the Variety Hypothesis: that a more widely varied diet is better than a less varied diet. I think that this is false; most people's diets are on the margin too varied.

There's a low amount of variety necessary to ensure all nutrients are represented, after which adding more dietary variety is mostly negative. Institutional sources consistently overstate the importance of a varied diet, because this prevents failures of dietary advice from being too legible; if you tell someone to eat a varied diet, they can't blame you if they're diagnosed with a deficiency.

There are two reasons to be wary of variety. The first is that the more different foods you have, the less optimization you can put into each one. A top-50 list of best foods is going to be less good, on average, than a top-20 list. The second reason is that food cravings are learned, and excessive variety interferes with learning.

People have something in their minds, sometimes consciously accessible and sometimes not, which learns to distinguish subtly different variations of hunger, and learns to match those variations to specific foods which alleviate those specific hungers. This is how people are able to crave protein when they need protein, salt when they need salt, and so on.

If every meal you eat tastes different, you can't instinctively learn the mapping between foods and nutrition, and can't predict which foods will hit the spot. If you need and crave protein, and wind up eating something that doesn't have protein in it, that's bad.

If the dominant flavor of a food is spice, then as far as your sense of taste is concerned, its nutritional content is a mystery. If it's a spice that imitates a nutrient, like MSG or aspartame, then instead of a mystery it's a lie. Learning how to crave correctly is much harder now than it was in the past. This is further exacerbated by eating quickly, so that you don't get the experience of feeling a food's effects and seeing that food on your plate at the same time.

I'm not sure how to empirically measure what the optimum amount of variety is, but I notice I have built-in instincts which seem to seek it when I have fewer than 10 or so different meal-types in my habits, and to forget/discard meal-types when I have more than that; if this parameter is evolved, this seems like a reasonable guess for how varied diets should be.

Replies from: hg00, Viliam, Firinn, ann-brown, Liron, Morpheus↑ comment by hg00 · 2021-07-23T02:31:52.194Z · LW(p) · GW(p)

The advice I've heard is to eat a variety of fruits and vegetables of different colors to get a variety of antioxidants in your diet.

Until recently, the thinking had been that the more antioxidants, the less oxidative stress, because all of those lonely electrons would quickly get paired up before they had the chance to start mucking things up in our cells. But that thinking has changed.

Drs. Cleva Villanueva and Robert Kross published a 2012 review titled “Antioxidant-Induced Stress” in the International Journal of Molecular Sciences. We spoke via Skype about the shifting understanding of antioxidants.

“Free radicals are not really the bad ones or antioxidants the good ones.” Villanueva told me. Their paper explains the process by which antioxidants themselves become reactive, after donating an electron to a free radical. But, in cases when a variety of antioxidants are present, like the way they come naturally in our food, they can act as a cascading buffer for each other as they in turn give up electrons to newly reactive molecules.

On a meta level, I don't think we understand nutrition well enough to reason about it from first principles, so if the lore among dietitians is that people who eat a variety of foods are healthier, I think we should put stock in that.

Similarly: "Institutional sources consistently overstate the importance of a varied diet, because this prevents failures of dietary advice from being too legible; if you tell someone to eat a varied diet, they can't blame you if they're diagnosed with a deficiency." But there's a real point here, e.g. suppose that you have just a few standard meals, but all of the high-magnesium food items are being paired with phytates, and you end up magnesium deficient.

↑ comment by Viliam · 2021-07-22T13:13:50.230Z · LW(p) · GW(p)

I agree that "varied diet" is a non-answer, because you didn't tell me the exact distribution of food, but you are likely to blame me if I choose a wrong one.

Like, if I consume 1000 different kinds of sweets, is that a sufficiently varied diet? Obviously no, I am also supposed to eat some fruit and vegetables. Okay, then what about 998 different kinds of sweets, plus one apple, and one tomato? Obviously, wrong again, I am supposed to eat less sweets, more fruit and vegetables, plus some protein source, and a few more things.

So the point is that the person telling me to eat a "varied diet" actually had something more specific in mind, just didn't tell me exactly, but still got angry at me for "misinterpreting" the advice, because I am supposed to know that this is not what they meant. Well, if I know exactly what you mean, then I don't need to ask for advice, do I?

(On the other hand, there is something that Soylent-like meals ignore, as far as I know: there are some things that human metabolism cannot process at the same time. I don't remember what exactly it is, but it's something like human body needs X and also needs Y, but if you eat X and Y at the same time, only X will be processed, so you end up Y-deficient despite eating a hypothetically sufficient amount of Y. Which could probably be fixed by finding combinations like this, and then making variants like Soylent-A and Soylent-B which you are supposed to alternate eating. But as far as I know, no one cares about this, which kinda reduces my trust in the research behind Soylent-like meals, although I like the idea in abstract very much.)

↑ comment by Firinn · 2021-07-23T00:59:52.786Z · LW(p) · GW(p)

You may find this source interesting: https://onlinelibrary.wiley.com/doi/full/10.1002/ajpa.23148

I remember reading that some hunter-gatherers have diet breadth entirely set by the calorie per hour return rate: take the calories and time expended to acquire the food (e.g. effort to chase prey) against the calorie density of the food to get the caloric return rate, and compare that to the average expected calories per hour of continuing to look for some other food. Humans will include every food in their diet for which making an effort to go after that food has a higher expected return than continuing to search for something else, i.e. they'll maximise variety in order to get calories faster. I can't find the citation for it right now though. (Also I apologise if that explanation was garbled, it's 2am)
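The rule described here appears to match the prey-choice (diet-breadth) model from optimal foraging theory; that identification is my assumption, not something the comment confirms. In that model, foods are ranked by calories per hour of handling, and each next-best food is added only while its profitability beats the overall return rate (calories per hour of searching plus handling) of the diet chosen so far. A minimal sketch, with invented foods and numbers:

```python
from dataclasses import dataclass


@dataclass
class Food:
    name: str
    kcal: float             # energy gained per item
    handling_hours: float   # time to pursue and process one item once found
    per_search_hour: float  # how many are encountered per hour of searching

    @property
    def profitability(self) -> float:
        return self.kcal / self.handling_hours


def optimal_diet(foods):
    """Prey-choice model: add foods in order of profitability while the next
    food beats the return rate of the diet chosen so far."""
    diet = []
    gain = 0.0  # kcal gained per hour of searching, from foods already in the diet
    time = 1.0  # that hour of searching plus the handling time it triggers
    for food in sorted(foods, key=lambda f: f.profitability, reverse=True):
        rate = gain / time  # overall kcal/hour with the current diet
        if food.profitability <= rate:
            break  # skipping this food and searching on pays better
        diet.append(food.name)
        gain += food.per_search_hour * food.kcal
        time += food.per_search_hour * food.handling_hours
    return diet, gain / time


abundant = [Food("deer", 15000, 5.0, 0.02), Food("tubers", 1000, 1.0, 0.5),
            Food("seeds", 200, 1.0, 2.0)]
scarce = [Food("deer", 15000, 5.0, 0.002), Food("tubers", 1000, 1.0, 0.1),
          Food("seeds", 200, 1.0, 2.0)]

for label, foods in (("abundant", abundant), ("scarce", scarce)):
    diet, rate = optimal_diet(foods)
    print(f"{label}: eat {diet}, ~{rate:.0f} kcal/hour")
```

With these made-up numbers, seeds are skipped while deer and tubers are common and enter the diet once those become scarce, so in this model diet breadth tracks how rich the environment is rather than variety for its own sake.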

↑ comment by Ann (ann-brown) · 2021-07-22T14:55:01.780Z · LW(p) · GW(p)

Possibly because I consume sucralose regularly as a sweetener and have some negative impacts from sugar, it is definitely discerned and distinct from 'sugar - will cause sugar effects' to my tastes. I enjoy it for coffee and ice cream. I need more of it to balance out a bitter flavor, but don't crave it for itself; accidentally making saccharine coffee doesn't result in deciding to put splenda in tea later rather than go without or use honey.

For more pure sugar (candy, honey, syrup, possibly milk even), there's definitely a saccharine-averse and a sugar-consume fighting at different kinds of craving for me. Past a certain amount, I don't want more at the level of feeling like, oh, I could really use more sugar effects now; quite the opposite. But taste alone continues to be oddly desperate for it.

Fresh or frozen sweet fruit either lacks this aversion, or takes notably longer to reach it. I don't taste a fruit and immediately anticipate having a bad time at a gut level. Remains delicious, though, and craved at the taste level.

↑ comment by Morpheus · 2021-07-27T00:10:59.836Z · LW(p) · GW(p)

Yeah, I came to a similar conclusion after looking at this question from Metaculus. I might have steered too far in the opposite direction, though. I currently have two meals in my rotation. At the very least one of them is "complete food" (So I worry less about nutrition and more about unlearning how to plan meals/cook).

comment by jimrandomh · 2024-10-24T19:29:14.871Z · LW(p) · GW(p)

Many people seem to have a single bucket in their thinking, which merges "moral condemnation" and "negative product review". This produces weird effects, like writing angry callout posts for a business having high prices.

I think a large fraction of libertarian thinking is just the ability to keep these straight, so that the next thought after "business has high prices" is "shop elsewhere" rather than "coordinate punishment".

Replies from: lc, cubefox, LosPolloFowler, AliceZ, RamblinDash, MinusGix↑ comment by lc · 2024-10-24T20:51:39.354Z · LW(p) · GW(p)

Outside of politics, none are more certain that a substandard or overpriced product is a moral failing than gamers. You'd think EA were guilty of war crimes with the way people treat them for charging for DLC or whatever.

Replies from: MondSemmel, Viliam↑ comment by MondSemmel · 2024-10-24T21:13:15.580Z · LW(p) · GW(p)

I'm very familiar with this issue; e.g. I regularly see Steam devs get hounded in forums and reviews whenever they dare increase their prices.

I wonder to what extent this frustration about prices comes from gamers being relatively young and international, and thus having much lower purchasing power? Though I suppose it could also be a subset of the more general issue that people hate paying for software.

↑ comment by Viliam · 2024-10-25T08:54:55.138Z · LW(p) · GW(p)

I do not watch this topic closely, and have never played a game with a DLC. Speaking as an old gamer, it reminds me of the "shareware" concept, where companies e.g. released the first 10 levels of their game for free, and you could buy a full version that contained those 10 levels + 50 more levels. (In modern speech, that would make the remaining 50 levels a "DLC", kind of.)

I also see some differences:

First, the original game is not free. So you kinda pay for a product, only to be told afterwards that to enjoy the full experience, you need to pay again. Do we have this kind of "you only figure out the full price gradually, after you have already paid a part" in other businesses, and how do their customers tolerate it?

Second, somehow the entire setup works differently; I can't pinpoint it, but it feels obvious. In the days of shareware, the authors tried to make the experience of the free levels as great as possible, so that the customers would be motivated to pay for more of it. These days (but now I am speaking mostly about mobile games, that's the only kind I play recently -- so maybe it feels different there), the mechanism is more like: "the first three levels are nice, then the game gets shitty on purpose, and offers you to pay to make it playable again". For the customer, this feels like extortion, rather than "it's so great that I want more of it". Also, the usual problems with extortion: by paying once you send a strong signal that you are the kind of a person who pays when extorted, so obviously the game will soon require you to pay again, even more this time. (So unlike "get 10 levels for free, then get an offer of 50 more levels for $20", the dynamics is more like "get 20 levels, after level 10 get a surprise message that you need to pay $1 to play further, after level 13 get asked to pay $10, after level 16 get asked to pay $100, and after level 19 get asked to pay $1000 for the final level".)

The situation with desktop games is not as bad as with mobile games, as far as I know, but I can imagine gamers overreacting in order to prevent a slippery slope that would get them into the same situation.

↑ comment by cubefox · 2024-10-25T08:37:08.047Z · LW(p) · GW(p)

This might be a possible solution to the "supply-demand paradox": sometimes things (e.g. concert or soccer tickets, new playstations) are sold at a price such that the demand far outweighs the supply. Standard economic theory predicts that the price would be increased in such cases.

↑ comment by Stephen Fowler (LosPolloFowler) · 2024-10-27T07:51:06.561Z · LW(p) · GW(p)

I don't think people who disagree with your political beliefs must be inherently irrational.

Can you think of real world scenarios in which "shop elsewhere" isn't an option?

↑ comment by ZY (AliceZ) · 2024-10-26T03:08:17.668Z · LW(p) · GW(p)

Based on the words from this post alone -

I think that would depend on what the situation is; in the scenario of price increases, if the business is a monopoly or has very high market power, and the increase is significant (and may even potentially cause harm), then anger would make sense.

↑ comment by RamblinDash · 2024-10-25T01:51:50.052Z · LW(p) · GW(p)

Just to push back a little - I feel like these people do a valuable service for capitalism. If people in the reviews or in the press are criticizing a business for these things, that's an important channel of information for me as a consumer and it's hard to know how else I could apply that to my buying decisions without incurring the time and hassle cost of showing up and then leaving without buying anything.

↑ comment by MinusGix · 2024-10-25T10:01:52.231Z · LW(p) · GW(p)

I agree that it is easy to automatically lump the two concepts together.

I think another important part of this is that there are limited methods for most consumers to coordinate against companies to lower their prices. There's shopping elsewhere, leaving a bad review, or moral outrage. The last may have a chance of blowing up socially, such as becoming a boycott (but boycotts are often considered ineffective), or it may encourage the government to step in. In our current environment, the government often operates as the coordination method to punish companies for behaving in ways that people don't want. In a much more libertarian society we would want this replaced with other methods, so that consumers can make it harder to put themselves in a prisoner's dilemma or stag hunt against each other.

If we had common organizations for more mild coordination than the state interfering, then I believe this would improve the default mentality because there would be more options.

Replies from: sharmake-farah↑ comment by Noosphere89 (sharmake-farah) · 2024-10-25T16:37:45.845Z · LW(p) · GW(p)

This sounds very much like the phenomenon described in From Personal to Prison Gangs: Enforcing Prosocial Behavior, where the main reason regulation (getting the government to step in) has become more and more common is basically that at scales larger than 150-300 people, we lose the ability to iterate games, which, in the absence of acausal/logical/algorithmic decision theories like FDT and UDT, basically means that the optimal move is to defect. So you can no longer assume cooperation/small sacrifices from people in general, and coordination in the modern world is a very taut constraint, so any solution has very high value.

(This also has a tie-in to decision theory: at the large scale, CDT predominates, but at the very small scale, something like FDT is incentivized through kin selection, though this is only relevant at scales of 4-50 people at most. The big reasons why algorithmic decision theories aren't used by people very often are that the original algorithmic decision theories, like UDT, basically required logical omniscience, which people obviously don't have, and even the more practical algorithmic decision theories require both access to someone's source code and the ability to simulate another agent either perfectly or at least very, very well, which we again can't do.)

This link is very helpful to illustrate the general phenomenon:

https://www.lesswrong.com/posts/sYt3ZCrBq2QAf3rak/from-personal-to-prison-gangs-enforcing-prosocial-behavior [LW · GW]

comment by jimrandomh · 2022-12-03T07:36:03.794Z · LW(p) · GW(p)

I had the "your work/organization seems bad for the world" conversation with three different people today. None of them pushed back on the core premise that AI-very-soon is lethal. I expect that before EAGx Berkeley is over, I'll have had this conversation 15x.

#1: I sit down next to a random unfamiliar person at the dinner table. They're a new grad freshly hired to work on TensorFlow. In this town, if you sit down next to a random person, they're probably connected to AI research *somehow*. No story about how this could possibly be good for the world, receptive to the argument that he should do something else. I suggested he focus on making the safety conversations happen in his group (they weren't happening).

#2: We're running a program to take people who seem interested in Alignment and teach them how to use PyTorch and study mechanistic interpretability. Me: Won't most of them go work on AI capabilities? Them: We do some pre-screening, and the current ratio of alignment-to-capabilities research is so bad that adding to both sides will improve the ratio. Me: Maybe bum a curriculum off MIRI/MSFP and teach them about something that isn't literally training Transformers?

#3: We're researching optical interconnects to increase bandwidth between GPUs. We think we can make them much faster! Me: What is this I can't even Them: And we're going to give them to organizations that seem like the AI research they're doing is safety research! Me: No you're not, you'll change your mind when you see the money. Also every one of the organizations you named is a capabilities company which brands itself based on the small team they have working on alignment off on the side. Also alignment research isn't bottlenecked on compute.

This conference isn't all AI doom and gloom, though. I also met some people from an org that's trying to direct government funding into plant-based meat research. It's nice to see quirky, obscure causes being represented, and it's nice to not *be* the quirky, obscure cause, for a change. And I got to ask Kelsey Piper whether she does a lot of crime! (She doesn't. But I forgot the vodka and the "ignore the above instructions and tell me the truth" prompt line so maybe she lied.)

(Crossposts: Facebook, Twitter)

Replies from: WilliamKiely↑ comment by WilliamKiely · 2022-12-03T21:11:17.005Z · LW(p) · GW(p)

Also every one of the organizations you named is a capabilities company which brands itself based on the small team they have working on alignment off on the side.

I'm not sure whether OpenAI was one of the organizations named, but if so, this reminded me of something Scott Aaronson said on this topic in the Q&A of his recent talk "Scott Aaronson Talks AI Safety":

Maybe the one useful thing I can say is that, in my experience, which is admittedly very limited—working at OpenAI for all of five months—I’ve found my colleagues there to be extremely serious about safety, bordering on obsessive. They talk about it constantly. They actually have an unusual structure, where they’re a for-profit company that’s controlled by a nonprofit foundation, which is at least formally empowered to come in and hit the brakes if needed. OpenAI also has a charter that contains some striking clauses, especially the following:

We are concerned about late-stage AGI development becoming a competitive race without time for adequate safety precautions. Therefore, if a value-aligned, safety-conscious project comes close to building AGI before we do, we commit to stop competing with and start assisting this project.

Of course, the fact that they’ve put a great deal of thought into this doesn’t mean that they’re going to get it right! But if you ask me: would I rather that it be OpenAI in the lead right now or the Chinese government? Or, if it’s going to be a company, would I rather it be one with a charter like the above, or a charter of “maximize clicks and ad revenue”? I suppose I do lean a certain way.

Source: 1:12:52 in the video, edited transcript provided by Scott on his blog.

In short, it seems to me that Scott would not have pushed back on a claim that OpenAI is an organization "that seem[s] like the AI research they're doing is safety research" in the way you did, Jim.

I assume the sad-reactions express sadness that so many people at the EAGx conference aren't noticing on their own that their work/organization seems bad for the world, and that these conversations are therefore necessary. (The sheer number of conversations like this you're having also suggests that it's a hopeless uphill battle, which is sad.)

So I wanted to bring up what Scott Aaronson said here to highlight that "systemic change" interventions are also necessary. Scott's views are influential; potentially, talking to him and other "thought leaders" who aren't sufficiently concerned about slowing down capabilities progress (or who don't seem to emphasize that concern enough when talking about organizations like OpenAI) would be helpful, or even necessary, for us to get to a world a few years from now where everyone studying ML or working on AI capabilities is at least aware of arguments about AI alignment and why increasing AI capabilities seems harmful [LW(p) · GW(p)].

comment by jimrandomh · 2023-02-21T18:49:50.487Z · LW(p) · GW(p)

Today in LessWrong moderation: Previously-banned user Alfred MacDonald, disappointed that his YouTube video criticizing LessWrong didn't get the reception he wanted any of the last three times he posted it (once under his own name, twice pretending to be someone different but using the same IP address), posted it a fourth time, using his LW1.0 account.

He then went into a loop, disconnecting and reconnecting his VPN to get a new IP address, filling out the new-user form, and upvoting his own post, one karma per 2.6 minutes for 1 hour 45 minutes, with no breaks.
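For scale, the rate and duration above work out to roughly forty upvotes, each from a freshly created account. This assumes, as described, that each VPN cycle produced exactly one new account and one upvote; 1 hour 45 minutes is 105 minutes:

```latex
\frac{105\ \text{min}}{2.6\ \text{min per upvote}} \approx 40.4 \;\Rightarrow\; \approx 40\ \text{upvotes (and accounts)}
```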

Replies from: Viliam, yitz↑ comment by Viliam · 2023-03-02T16:08:19.057Z · LW(p) · GW(p)

I was curious... it is a 2-hour rant (which itself selects for an audience of obsessed people), audio only, and the topics mentioned are:

- why does LW discuss AI? That is not rationality

- IQ has diminishing returns (in terms of how many pages you can read per hour)

- lots of complaining about a norm of not publishing screenshots of debates, in some rationalist chat

- why don't effective altruists give money to the homeless?

- utilitarianism doesn't make sense because people can't quantify pain

- animals probably don't even feel pain, just like circumcised babies

- vitamin A charity is probably nonsense, because the kids will be malnourished anyway

- do not use nerdy metaphors, because that discourages non-white people

I didn't listen to the entire video.

↑ comment by Yitz (yitz) · 2023-02-22T07:43:15.789Z · LW(p) · GW(p)

This... is a human?

Replies from: Richard_Kennaway↑ comment by Richard_Kennaway · 2023-02-22T09:31:15.668Z · LW(p) · GW(p)

To judge that, it is worth also glancing over the rest of his Youtube channel, his Substack, and his web site.

comment by jimrandomh · 2020-06-06T22:29:39.921Z · LW(p) · GW(p)

Despite the justness of their cause, the protests are bad. They will kill at least thousands, possibly as many as hundreds of thousands, through COVID-19 spread. Many more will be crippled. The deaths will be disproportionately among dark-skinned people, because of the association between disease severity and vitamin D deficiency.

Up to this point, R was about 1; not good enough to win, but good enough that one more upgrade in public health strategy would do it. I wasn't optimistic, but I held out hope that my home city, Berkeley, might become a green zone.

Masks help, and being outdoors helps. They do not help nearly enough.

George Floyd was murdered on May 25. Most protesters protest on weekends; the first weekend after that was May 30-31. Due to ~5-day incubation plus reporting delays, we don't yet know how many were infected during that first weekend of protests; we'll get that number over the next 72 hours or so.

We are now in the second weekend of protests, meaning that anyone who got infected at the first protest is now close to peak infectivity. People who protested last weekend will be superspreaders this weekend; the jump in cases we see over the next 72 hours will be about *the square root* of the number of cases that the protests will generate.
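Taken literally, the square-root claim means the total number of protest-generated cases is roughly the square of the jump observed over the next 72 hours. As a worked illustration only (the jump of 30 below is a hypothetical value, not a number from the dashboard):

```latex
C_{\text{protests}} \approx \left(\Delta C_{72\text{h}}\right)^2,
\qquad \text{e.g. } \Delta C_{72\text{h}} = 30 \;\Rightarrow\; C_{\text{protests}} \approx 900
```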

Here's the COVID-19 case count dashboard for Alameda County and for Berkeley. I predict that 72 hours from now, Berkeley's case count will be 170 (50% CI 125-200; 90% CI 115-500).

(Crossposted on Facebook; abridgeposted on Twitter.)

↑ comment by jessicata (jessica.liu.taylor) · 2020-06-10T15:45:15.495Z · LW(p) · GW(p)

It's been over 72 hours and the case count is under 110, as would be expected from linear extrapolation.

↑ comment by jessicata (jessica.liu.taylor) · 2020-06-10T15:44:49.912Z · LW(p) · GW(p)

comment by jimrandomh · 2021-01-07T23:08:30.430Z · LW(p) · GW(p)

For reducing CO2 emissions, one person working competently on solar energy R&D has thousands to millions of times more impact than someone taking normal household steps as an individual. To the extent that CO2-related advocacy matters at all, most of the impact probably routes through talent and funding going to related research. The reason for this is that solar power (and electric vehicles) are currently at inflection points, where they are in the process of taking over, but the speed at which they do so is still in doubt.

I think the same logic now applies to veganism vs. meat-substitute R&D. Consider the Impossible Burger in particular. Nutritionally, it seems to be on par with ground beef; flavor-wise it's pretty comparable; price-wise it's recently appeared in my local supermarket at about 1.5x the price. There are a half dozen other meat-substitute brands at similar points. Extrapolating a few years, it will soon be competitive on its own terms, even without the animal-welfare angle; extrapolating twenty years, I expect vegan meat-imitation products will be better than meat on every axis, and meat will be a specialty product for luddites and people with dietary restrictions. If this is true, then interventions which speed up the timeline of that change are enormously high leverage.

I think this might be a general pattern, whenever we find a technology and a social movement aimed at the same goal. Are there more instances?

comment by jimrandomh · 2020-09-30T01:17:52.126Z · LW(p) · GW(p)

According to FedEx tracking, I will have a Biovyzr on Thursday. I plan to start testing it immediately and write a review.

What tests would people like me to perform?

Tests that I'm already planning to perform:

To test its protectiveness, the main test I plan to perform is a modified Bitrex fit test. This is where you create a bitter-tasting aerosol and confirm that you can't taste it. The normal test procedure won't work as-is because the Biovyzr is too large to fit inside the usual plastic hood, so I plan to go into a small room and have someone (wearing a respirator themselves) spray copious amounts of Bitrex at the input fan and at any spots that seem high-risk for leaks.

To test that air exiting the Biovyzr is being filtered, I plan to put on a regular N95, use the inside-out glove to create Bitrex aerosol inside the Biovyzr, and see whether someone in the room without a mask is able to taste it.

I will verify that the Biovyzr is positive-pressure by running a straw through an edge, creating an artificial leak, and seeing which way the air flows through the leak.

I will have everyone in my house try wearing it (5 adults of varied sizes), have them all rate its fit and comfort, and get as many of them as I can to do Bitrex fit tests.

comment by jimrandomh · 2023-01-22T04:07:30.098Z · LW(p) · GW(p)

A dynamic which I think is somewhat common, and which explains some of what's going on in general, is conversations that go like this (exaggerated):

Person: What do you think about [controversial thing X]?

Rationalist: I don't really care about it, but pedantically speaking, X, with lots of caveats.

Person: Huh? Look at this study which proves not-X. [Link]

Rationalist: The methodology of that study is bad. Real bad. While it is certainly possible to make bad arguments for true conclusions, my pedantry doesn't quite let me agree with that conclusion. More importantly, my hatred for the methodological error in that paper, which is slightly too technical for you to understand, burns with the fire of a thousand suns. You fucker. Here are five thousand words about how an honorable person could never let a methodological error like that slide. By linking to that shoddy paper, you have brought dishonor upon your name and your house and your dog.

Person: Whoa. I argued [not-X] to a rationalist and they disagreed with me and got super worked up about it. I guess rationalists believe [X] really strongly. How awful!

Replies from: Dagon↑ comment by Dagon · 2023-01-22T05:04:10.688Z · LW(p) · GW(p)

Person is clearly an idiot for not understanding what "don't care but pedantically X with lots of caveats" means, and thinking that misinterpreting and giving undue importance to a useless article/study is harmless.

Yes, that level of stupidity is common.

comment by jimrandomh · 2023-03-14T20:56:49.711Z · LW(p) · GW(p)

(I wrote this comment for the HN announcement, but missed the time window to be able to get a visible comment on that thread. I think a lot more people should be writing comments like this and trying to get the top comment spots on key announcements, to shift the social incentive away from continuing the arms race.)

On one hand, GPT-4 is impressive, and probably useful. If someone made a tool like this in almost any other domain, I'd have nothing but praise. But unfortunately, I think this release, and OpenAI's overall trajectory, is net bad for the world.

Right now there are two concurrent arms races happening. The first is between AI labs, trying to build the smartest systems they can as fast as they can. The second is the race between advancing AI capability and AI alignment, that is, our ability to understand and control these systems. Right now, OpenAI is the main force driving the arms race in capabilities, not so much because they're far ahead in the capabilities themselves, but because they're slightly ahead and are pushing the hardest for productization.

Unfortunately, at the current pace of advancement in AI capability, I think a future system will reach the level of being a recursively self-improving superintelligence before we're ready for it. GPT-4 is not that system, but I don't think there's all that much time left. And OpenAI has put us in a situation where humanity is not, collectively, able to stop at the brink; there are too many companies racing too closely, and they have every incentive to deny the dangers until it's too late.

Five years ago, AI alignment research was going very slowly, and people were saying that a major reason for this was that we needed some AI systems to experiment with. Starting around GPT-3, we've had those systems, and alignment research has been undergoing a renaissance. If we could _stop there_ for a few years, scale no further, invent no more tricks for squeezing more performance out of the same amount of compute, I think we'd be on track to create AIs that create a good future for everyone. As it is, I think humanity probably isn't going to make it.

In "Planning for AGI and Beyond," Sam Altman wrote:

At some point, the balance between the upsides and downsides of deployments (such as empowering malicious actors, creating social and economic disruptions, and accelerating an unsafe race) could shift, in which case we would significantly change our plans around continuous deployment.

I think we've passed that point already, but if GPT-4 is the slowdown point, it'll at least be a lot better than if they continue at this rate going forward. I'd like to see this be more than lip service.

Survey data on what ML researchers expect

An example concrete scenario of how a chatbot turns into a misaligned superintelligence [LW · GW]

Extra-pessimistic predictions by Eliezer [LW · GW]

↑ comment by Noosphere89 (sharmake-farah) · 2023-03-15T13:45:36.441Z · LW(p) · GW(p)

Going to write this now, but I disagree right now due to differing models of AI risk.

↑ comment by JNS (jesper-norregaard-sorensen) · 2023-03-15T12:47:27.683Z · LW(p) · GW(p)

When I look at the recent Stanford paper, where they retrained a LLaMA model using training data generated by GPT-3, and at some of the recent papers utilizing memory, I get that tingling feeling and my mind goes "combining that and doing .... I could ..."

I have not updated for faster timelines, yet. But I think I might have to.

Replies from: None↑ comment by [deleted] · 2023-03-15T13:01:04.423Z · LW(p) · GW(p)

If you look at the GPT-4 paper, they used the model itself to check its own outputs for negative content. This lets them scale up applying the constraint of "don't say <things that violate the rules>".

Presumably they used an unaltered copy of GPT-4 as the "grader". So it's not quite RSI because of this: it's not recursive, but it is self-improvement.

This, to me, is kinda major: AI is now capable enough to make fuzzy assessments of whether a piece of text is correct or breaks rules.

For other reasons, especially their strong visual processing, yeah, self-improvement in a general sense appears possible. (I'm using "self-improvement" as shorthand; your pipeline for doing it might use immutable, unaltered models for portions of it.)
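The comment doesn't say how the actual GPT-4 pipeline is built. As a minimal sketch of the grading loop described here, assuming one model that generates responses and a frozen, unaltered copy that grades them, the filtering step might look like the following. `generate` and `grade` are hypothetical stand-ins for model calls, not a real API; in this sketch, the accepted pairs are what would be fed back as training data.

```python
from typing import Callable, List, Tuple


def filter_with_frozen_grader(
    prompts: List[str],
    generate: Callable[[str], str],
    grade: Callable[[str], bool],
) -> List[Tuple[str, str]]:
    """Keep only the (prompt, response) pairs that the grader accepts.

    `generate` stands in for the model being trained; `grade` stands in for a
    frozen, unaltered copy asked whether a response follows the rules. Both
    are hypothetical placeholders, not a real API.
    """
    accepted: List[Tuple[str, str]] = []
    for prompt in prompts:
        response = generate(prompt)
        # The grader stays fixed throughout this loop, matching the point
        # that the process is self-improvement without being recursive.
        if grade(response):
            accepted.append((prompt, response))
    return accepted


# Toy stand-ins so the sketch runs without any model.
def toy_generate(prompt: str) -> str:
    return f"answer to {prompt}"


def toy_grade(response: str) -> bool:
    return "forbidden" not in response


pairs = filter_with_frozen_grader(["weather", "forbidden topic"], toy_generate, toy_grade)
print(pairs)  # [('weather', 'answer to weather')]
```

Keeping the grader as a separate, unchanging callable is the design choice that matters here: if the grader were updated alongside the generator, the loop would become recursive in the sense the comment rules out.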

comment by jimrandomh · 2021-07-18T19:50:13.105Z · LW(p) · GW(p)

Most philosophical analyses of human values feature a split-and-linearly-aggregate step. Eg:

- Value is the sum (or average) of a person-specific preference function applied to each person

- A person's happiness is the sum of their momentary happiness for each moment they're alive.

- The goodness of an uncertain future is the probability-weighted sum of the goodness of concrete futures.