MikkW's Shortform

post by MikkW (mikkel-wilson) · 2020-08-10T20:39:29.510Z · LW · GW · 369 comments

Comments sorted by top scores.

comment by MikkW (mikkel-wilson) · 2020-09-30T18:26:44.962Z · LW(p) · GW(p)

I was going for a walk yesterday night, and when I looked up at the sky, I saw something I had never seen before: a bright orange dot, like a star, but I had never seen a star that bright and so orange before. "No... that can't be"- but it was: I was looking at Mars, that other world I had heard so much about, thought so much about.

I never realized until yesterday that I had never seen Mars with my own two eyes until that day- one of the closest worlds that humans could, with minimal difficulty, make into a new home one day.

It struck me then in a way that I never felt before, just how far away the world Mars is. I knew it in an abstract sense, but seeing this little dot in the distance, a dot that I knew to be an object larger even than the Moon, but seeming so small in comparison, made me realize, in my gut, just how far away this other world was, just like how when I stand on top of a mountain, and see small buildings on the ground way below me, I realize that those small buildings are actually skyscrapers far away.

And yet, as far as Mars was that night, it was so bright, so apparent, precisely because it was closer now to us than it normally ever is- normally this world is even further from us than it is now.

Replies from: mikkel-wilson↑ comment by MikkW (mikkel-wilson) · 2021-02-15T05:58:51.658Z · LW(p) · GW(p)

Correction: here I say that I had never seen Mars before, but that's almost certainly not correct. Mars is usually a tiny dot, nearly indistinguishable from the other stars in the sky (it is slightly more reddish / orange), so what I was seeing was a fairly unusual sight

comment by MikkW (mikkel-wilson) · 2021-03-24T00:48:46.815Z · LW(p) · GW(p)

In short, I am selling my attention by selling the right to put cards in my Anki deck, starting at the low price of $1 per card.

I will create and add a card (any card that you desire, with the caveat that I can veto any card that seems problematic, and capped to a similar amount of information per card as my usual cards contain) to my Anki deck for $1. After the first ten cards (across all people), the price will rise to $2 per card, and will double every 5 cards from then on. I commit to study the added card(s) like I would any other card in my decks (I will give it a starting interval of 10 days, which is shorter than the 20-day interval I usually use, unless I judge that an even shorter interval makes sense. I study Anki every day, and have been clearing my deck at least once every 10 days for the past 5 months, and intend to continue to do so). Since I will be creating the cards myself (unless you know of a high-quality deck that contains cards with the information you desire), an idea for a card is enough even if you don't know how to execute it.

Both question-and-answer and straight text are acceptable forms for cards. Acceptable forms of payment include cash, Venmo, BTC, ETH, Good Dollar, and Doge, at the corresponding exchange rates.

This offer will expire in 60 days. If you are interested in taking me up on this afterwards, feel free to message me.

There is now a top-level post discussing this offer [LW · GW]

Price as of 00:53 UTC 24 Mar '21: $4 per card

17 cards claimed so far
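
For anyone who wants to check a quoted price against the schedule, here is a minimal sketch in Python. It assumes one reading of the rules above (cards 1 to 10 cost $1, cards 11 to 15 cost $2, and the price doubles for each further block of 5 cards). The names `price_of_card` and `total_cost` are made up for illustration and are not part of the offer.

```python
def price_of_card(n: int) -> int:
    """Dollar price of the n-th card sold (1-indexed), under the assumed schedule."""
    if n < 1:
        raise ValueError("card numbers start at 1")
    if n <= 10:
        return 1  # first ten cards, across all buyers
    # Cards 11-15 cost $2; each later block of 5 cards doubles the price.
    return 2 ** (1 + (n - 11) // 5)


def total_cost(cards_sold: int) -> int:
    """Total dollars paid for the first `cards_sold` cards."""
    return sum(price_of_card(n) for n in range(1, cards_sold + 1))


if __name__ == "__main__":
    claimed = 17
    print(f"Next card (#{claimed + 1}) costs ${price_of_card(claimed + 1)}")
    print(f"Total paid for the first {claimed} cards: ${total_cost(claimed)}")
```

Under this reading, with 17 cards claimed the next card costs $4, which agrees with the price quoted above.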

Replies from: MathieuRoy, mikkel-wilson, Chris_Leong, MathieuRoy, wunan↑ comment by Mati_Roy (MathieuRoy) · 2021-03-24T02:47:02.296Z · LW(p) · GW(p)

That's genius! Can I (or you) create a LessWrong thread inviting others to do the same?

Replies from: mikkel-wilson, mikkel-wilson↑ comment by MikkW (mikkel-wilson) · 2021-03-24T02:50:51.766Z · LW(p) · GW(p)

Thanks! I will create a top level post explaining my motivations and inviting others to join.

↑ comment by MikkW (mikkel-wilson) · 2021-03-24T06:29:33.261Z · LW(p) · GW(p)

Done:

https://www.lesswrong.com/posts/zg6DAqrHTPkek2GpA/selling-attention-for-money [LW · GW]

↑ comment by MikkW (mikkel-wilson) · 2021-03-24T04:41:21.689Z · LW(p) · GW(p)

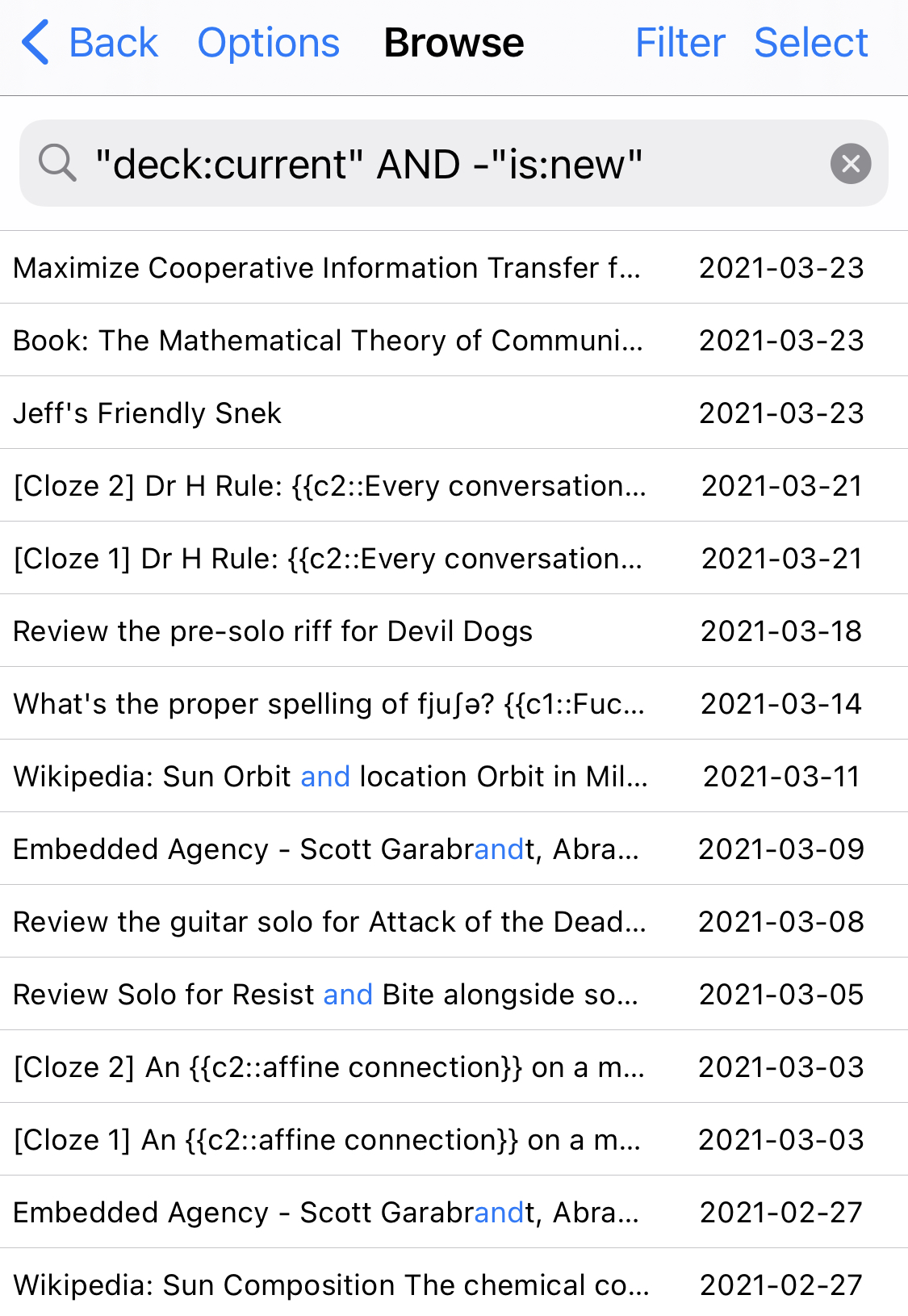

Proof that I have added cards to my deck (The top 3 cards, the other claimed cards are currently being held in reserve; -"is:new" shows only cards that have been given a date and interval for review)

↑ comment by Chris_Leong · 2021-03-24T04:00:30.836Z · LW(p) · GW(p)

Interesting offer. If you were someone who regularly commented on decision theory discussions, I would be interested in order to spread my ideas. But since you aren't, I'll pass.

Replies from: mikkel-wilson↑ comment by MikkW (mikkel-wilson) · 2021-03-24T04:25:11.781Z · LW(p) · GW(p)

When I write up the top-level post, I'll mention that you offered this for people who comment on DT discussions, unless you'd prefer I don't

Replies from: Chris_Leong↑ comment by Chris_Leong · 2021-03-24T05:18:21.475Z · LW(p) · GW(p)

That's fine! (And much appreciated!)

↑ comment by Mati_Roy (MathieuRoy) · 2021-03-24T02:41:15.076Z · LW(p) · GW(p)

can I claim cards before choosing their content?

Replies from: mikkel-wilson, MathieuRoy↑ comment by MikkW (mikkel-wilson) · 2021-03-24T02:48:02.870Z · LW(p) · GW(p)

Yes, that is allowed, though I reserve the right to veto any cards that I judge as problematic

↑ comment by Mati_Roy (MathieuRoy) · 2021-03-24T02:44:24.296Z · LW(p) · GW(p)

if so, I want to claim 7 cards

Replies from: mikkel-wilson↑ comment by MikkW (mikkel-wilson) · 2021-03-24T02:56:58.164Z · LW(p) · GW(p)

Messaged

↑ comment by wunan · 2021-03-24T02:11:52.488Z · LW(p) · GW(p)

I'm curious what cards people have paid to put in your deck so far. Can you share, if the buyers don't mind?

Replies from: mikkel-wilson↑ comment by MikkW (mikkel-wilson) · 2021-03-24T02:50:33.031Z · LW(p) · GW(p)

I currently have three cards entered, and the other seven are being held in reserve by the buyer (and have already been paid for). They are: "Jeff's Friendly Snek", "Book: The Mathematical Theory of Communication by Claude Shannon", and "Maximize Cooperative Information Transfer for {{Learning New Optimization}}", where {{brackets}} indicate cloze deletions. These were all sponsored by jackinthenet, who described his intention as wanting to use me as a vector for propagating memes and maximizing cooperative information transfer (which prompted the card).

comment by MikkW (mikkel-wilson) · 2020-11-19T00:16:25.974Z · LW(p) · GW(p)

Religion isn't about believing false things. Religion is about building bonds between humans, by means including (but not limited to) costly signalling. It happens that a ubiquitous form of costly signalling used by many prominent modern religions is belief taxes (insisting that the ingroup professes a particular, easily disproven belief as a reliable signal of loyalty), but this is not necessary for a religion to successfully build trust and loyalty between members. In particular, costly signalling must be negative-value for an individual (before the second-order benefits from the group dynamic), but need not be negative-value for the group, or for humanity. Indeed, the best costly sacrifices can be positive-value for the group or humanity, while negative-value for the performing individual. (There are some who may argue that positive-value sacrifices have less signalling value than negative-value sacrifices, but I find their logic dubious, and my own observations of religion seem to suggest positive-value sacrifice is abundant in organized religion, albeit intermixed with neutral- and negative-value sacrifice.)

The rationalist community is averse to religion because it so often goes hand in hand with belief taxes, which are counter to the rationalist ethos, and would threaten to destroy much that rationalists value. But religion is not about belief taxes. While I believe sacrifices are an important part of the functioning of religion, a religion should avoid asking its members to make sacrifices that destroy what the collective values, and instead encourage costly sacrifices that help contribute to the things we collectively value.

Replies from: Pattern↑ comment by Pattern · 2020-11-20T18:41:08.691Z · LW(p) · GW(p)

In particular, costly signalling must be negative-value for an individual

That's one way to do things, but I don't think it's necessary. A group which requires (for continued membership) members to exercise, for instance, imposes a cost, but arguably one that should not be (necessarily*) negative-value for the individuals.

*Exercise isn't supposed to destroy your body.

Replies from: mikkel-wilson↑ comment by MikkW (mikkel-wilson) · 2020-11-20T20:42:31.453Z · LW(p) · GW(p)

If it's not negative value, it's not costly signalling. Groups may very well expect members to do positive-value things, and they do - Mormons are expected to follow strict health guidelines, to the extent that Mormons can recognize other Mormons based on the health of their skin; Jews partake in the Sabbath, which has personal mental benefits. But even though these may seem to be costly sacrifices at first glance, they cannot be considered to be costly signals, since they provide positive value

Replies from: Pattern↑ comment by Pattern · 2020-11-24T04:15:55.125Z · LW(p) · GW(p)

If a group has standards which provide value, then while it isn't a 'costly signal' it sorts out people who aren't willing to invest effort.*

Just because your organization wants to be strong and get things done, doesn't mean it has to spread like cancer*/cocaine**.

And something that provides 'positive value' is still a cost. Living under a flat 40% income tax by one government has the same effect as living under 40 governments which each have a flat 1% income tax. You don't have to go straight to 'members of this group must smoke'. (In a different time and place, 'members of this group must not smoke' might have been regarded as an enormous cost, and worked as such!)

*bigger isn't necessarily better if you're sacrificing quality for quantity

**This might mean that strong and healthy people avoid your group.

comment by MikkW (mikkel-wilson) · 2020-10-30T05:20:58.049Z · LW(p) · GW(p)

If you know someone is rational, honest, and well-read, then you can learn a good bit from the simple fact that they disagree with you.

If you aren't sure someone is rational and honest, their disagreement tells you little.

If you know someone considers you to be rational and honest, the fact that they still disagree with you after hearing what you have to say, tells you something.

But if you don't know that they consider you to be rational and honest, their disagreement tells you nothing.

It's valuable to strive for common knowledge of you and your partners' rationality and honesty, to make the most of your disagreements.

Replies from: Dagon↑ comment by Dagon · 2020-11-02T21:06:15.875Z · LW(p) · GW(p)

If you know someone is rational, honest, and well-read, then you probably don't know them all that well. If someone considers you to be rational and honest, and well-read, that indicates they are not.

Replies from: mikkel-wilson↑ comment by MikkW (mikkel-wilson) · 2020-11-02T23:59:45.444Z · LW(p) · GW(p)

😅

comment by MikkW (mikkel-wilson) · 2020-08-11T05:05:53.393Z · LW(p) · GW(p)

Does newspeak actually decrease intellectual capacity? (No)

In George Orwell's book 1984, he describes a totalitarian society that, among other initiatives to suppress the population, implements "Newspeak", a heavily simplified version of the English language, designed with the stated intent of limiting the citizens' capacity to think for themselves (thereby ensuring stability for the reigning regime)

In short, the ethos of newspeak can be summarized as: "Minimize vocabulary to minimize range of thought and expression". There are two different, closely related, ideas, both of which the book implies, that are worth separating here.

The first (which I think is to some extent reasonable) is that by removing certain words from the language, which serve as effective handles for pro-democracy, pro-free-speech, pro-market concepts, the regime makes it harder to communicate and verbally think about such ideas (I think in the absence of other techniques used by Orwell's Oceania to suppress independent thought, such subjects can still be meaningfully communicated and pondered, just less easily than with a rich vocabulary provided)

The second idea, which I worry is an incorrect takeaway people may get from 1984, is that by shortening the dictionary of vocabulary that people are encouraged to use (absent any particular bias towards removing handles for subversive ideas), one will reduce the intellectual capacity of people using that variant of the language.

A slight tangent whose relevance will become clear: If you listen to a native Mandarin Chinese speaker, then compare the sound of their speech to a native Hawaiian speaker, there are many apparent differences in the sound of the two languages. Mandarin has a rich phonological inventory containing 19 consonants, 5 vowels, and quite famously, 4 different tones (pitch patterns) which are used for each syllable, for a total of approximately 5400 possible syllables, including diphthongs and multi-syllabic vowels. Compare this to Hawaiian, which has 8 consonants, 5 vowels, and no tones. Including diphthongs, there are 200 possible Hawaiian syllables.

One might naïvely expect that Mandarin speakers can communicate information more quickly than Hawaiian speakers, at a rate of 12.4 bits / syllable vs. 7.6 bits / syllable. However, this neglects the speed at which syllables are spoken: Hawaiian speakers speak much faster than Mandarin speakers, and accounting for this difference in cadence, Hawaiian and Mandarin are much closer to each other in speed of communication than their phonologies would suggest.
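
The bits-per-syllable figures can be reproduced with a quick sketch. It assumes every possible syllable is equally likely, so the results are upper bounds rather than measured information rates, and it uses the approximate syllable counts quoted above. The function name `bits_per_syllable` is just an illustrative choice.

```python
import math


def bits_per_syllable(possible_syllables: int) -> float:
    """Maximum information per syllable, assuming all syllables are equally likely."""
    return math.log2(possible_syllables)


mandarin_bits = bits_per_syllable(5400)  # approximate count quoted above
hawaiian_bits = bits_per_syllable(200)   # approximate count quoted above

# How much faster (in syllables per second) Hawaiian would need to be
# spoken to match Mandarin's bits per second under this simple model.
required_speedup = mandarin_bits / hawaiian_bits

if __name__ == "__main__":
    print(f"Mandarin: {mandarin_bits:.1f} bits/syllable")
    print(f"Hawaiian: {hawaiian_bits:.1f} bits/syllable")
    print(f"Speed-up needed for parity: {required_speedup:.2f}x")
```

Under these assumptions, Hawaiian syllables would need to come roughly 1.6 times as fast as Mandarin syllables for the two languages to carry the same number of bits per second.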

Back to 1984. If we cut the dictionary down, so it is only 1/20th the size it is now (while steering clear of the thoughtpolice and any bias in removal of words), what should we expect will happen? One may naïvely think, that just as banning the words "democracy", "freedom", and "justice" would inhibit people's ability to think about Enlightenment Values, banning most of the words should inhibit our ability to think about most of the things.

But that is not what I would expect to see happen. One should expect to see compound words take the place of deprecated words, speaking speeds increased, and to accommodate the increased cadence of speech, tricky sequences of sounds will be elided (blurred / simplified), allowing for complex ideas to ultimately be communicated at a pace that rivals that of standard English. Plus, it'd be (massively) easier for non-Anglophones to learn, which would be a big plus.

If I had more time, I'd write about why I think we nonetheless find the concept of Simplified English to be somewhat aversive- speaking a simplified version of a language becomes an antisignal for intelligence and social status, so we come to look down on people who attempt to utilize simplified language, while celebrating those who flex their mental capacity by using rare vocabulary.

Since I'm tired and would rather sleep than write more, I'll end with a rhetorical question: would you rather be in a community that excels at signaling, or a community that actually gets stuff done?

Replies from: Viliam↑ comment by Viliam · 2020-08-17T11:19:20.605Z · LW(p) · GW(p)

Yes, the important thing is the concepts, not their technical implementation in the language.

Like, in Esperanto, you can construct "building for" + "the people who are" + "the opposite of" + "health" = hospital. And the advantage is that people who never heard that specific word can still guess its meaning quite reliably.

we nonetheless find the concept of Simplified English to be somewhat aversive

I think the main disadvantage is that it would exist in parallel, as a lower-status version of the standard English. Which means that less effort would be put into "fixing bugs" or "implementing features", because for people capable of doing so, it would be more profitable to switch to the standard English instead.

(Like those software projects that have a free Community version and a paid Professional version, and if you complain about a bug in the free version that is known for years, you are told to deal with it or buy the paid version. In a parallel universe where only the free version exists, the bug would have been fixed there.)

would you rather be in a community that excels at signaling, or a community that actually gets stuff done?

How would you get stuff done if people won't join you because you suck at signaling? :( Sometimes you need many people to join you. Sometimes you only need a few specialists, but you still need a large base group to choose from.

Replies from: mikkel-wilson↑ comment by MikkW (mikkel-wilson) · 2020-08-20T18:06:45.390Z · LW(p) · GW(p)

As an aside, I think it's worth pointing out that Esperanto's use of the prefix mal- to indicate the opposite of something (akin to Newspeak's un-) is problematic: two words that mean the exact opposite will sound very similar, and in an environment where there's noise, the meaning of a sentence can change drastically based on a few lost bits of information, plus it also slows down communication unnecessarily.

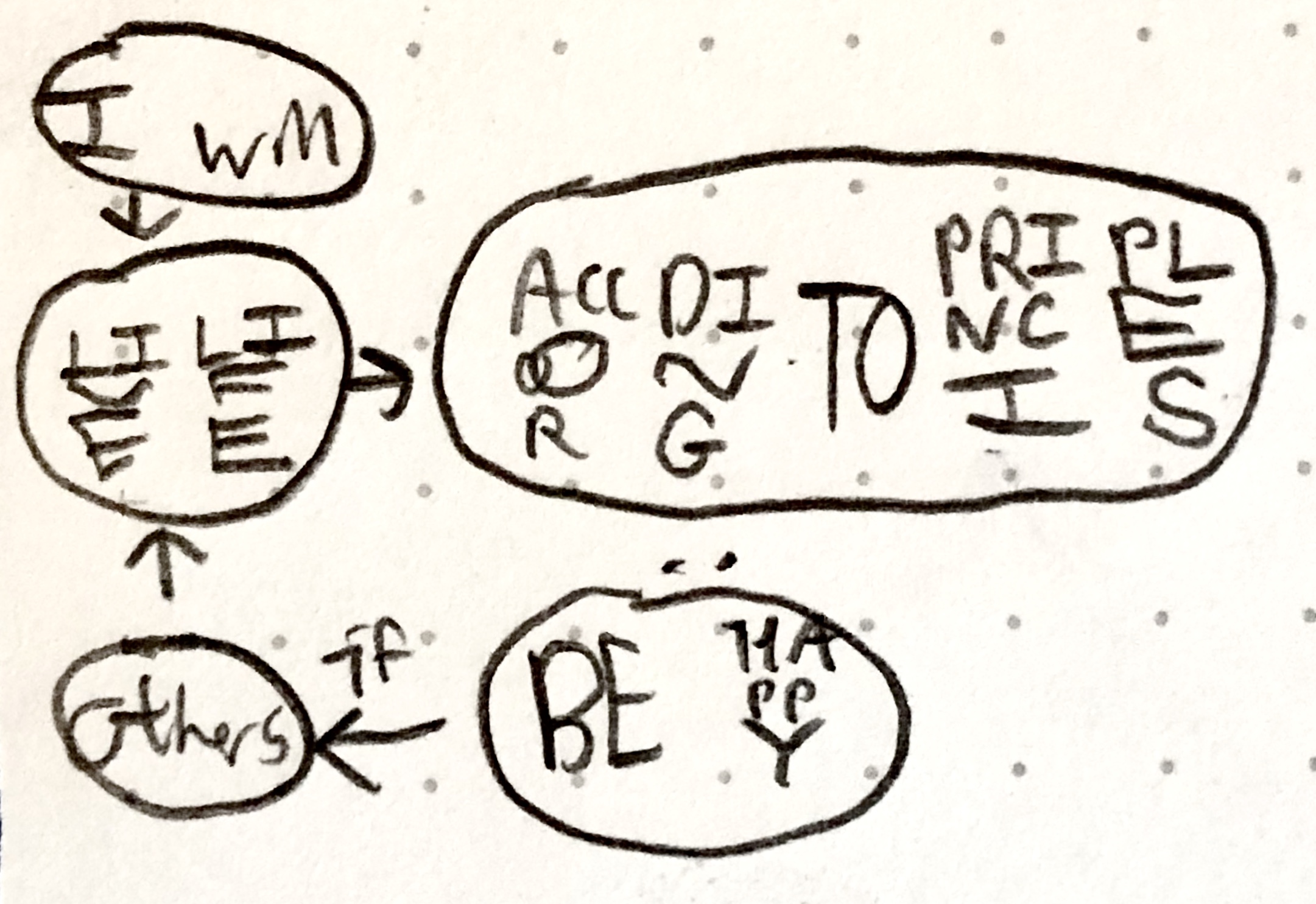

In my notes, I once had the idea of a "phonetic inverse": according to simple, well defined rules, each word could be transformed into an opposite word, which sounds as different as possible from the original word, and has the opposite meaning. That rule was intended for an engineered language akin to Sona, so the rules would need to be worked a bit to have something good and similar for English, but I prefer such a system to Esperanto's inversion rules

Replies from: mr-hire↑ comment by Matt Goldenberg (mr-hire) · 2020-10-03T15:33:30.570Z · LW(p) · GW(p)

The other problem is that "opposite" is ill-defined, and requires someone else to know which dimension you're inverting along as well as what you consider neutral/0 for that dimension.

Replies from: mikkel-wilson↑ comment by MikkW (mikkel-wilson) · 2020-10-11T21:48:57.527Z · LW(p) · GW(p)

While this would be an inconvenience for the on-boarding process for a new mode of communication, I actually don't think it's that big of a deal for people who are already used to the dialect (which would probably make up the majority of communication) and have a mutual understanding of what is meant by [inverse(X)] even when X could in principle have more than one inverse.

Replies from: mr-hire↑ comment by Matt Goldenberg (mr-hire) · 2020-10-11T22:58:07.149Z · LW(p) · GW(p)

That makes the concept much less useful though. Might as well just have two different words that are unrelated. The point of having the inverse idea is to be able to guess words right?

Replies from: mikkel-wilson↑ comment by MikkW (mikkel-wilson) · 2020-10-12T01:02:05.149Z · LW(p) · GW(p)

I'd say the main benefit it provides is making learning easier - instead of learning "foo" means 'good' and "bar" means 'bad', one only needs to learn "foo" = good, and inverse("foo") = bad, which halves the total number of tokens needed to learn a lexicon. One still needs to learn the association between concepts and their canonical inverses, but that information is more easily compressible

comment by MikkW (mikkel-wilson) · 2021-01-20T05:01:20.430Z · LW(p) · GW(p)

"From AI to Zombies" is a terrible title... when I recommend The Sequences to people, I always feel uncomfortable telling them the name, since the name makes it sound like kooky bull****, in a way that doesn't really indicate what it's about.

Replies from: elityre, Yoav Ravid, Eh_Yo_Lexa↑ comment by Eli Tyre (elityre) · 2021-01-22T11:20:39.374Z · LW(p) · GW(p)

I agree.

I'm also bothered by the fact that it is leading up to AI alignment and the discussion of Zombies is in the middle!

Please change?

↑ comment by Yoav Ravid · 2021-01-20T07:09:18.440Z · LW(p) · GW(p)

I usually just call it "from A to Z"

↑ comment by Willa (Eh_Yo_Lexa) · 2021-01-20T05:50:03.027Z · LW(p) · GW(p)

I think "From AI to Zombies" is supposed to imply "From A to Z", "Everything Under the Sun", etc., but I don't entirely disagree with what you said. Explaining either "Rationality: From AI to Zombies" or "The Sequences" to someone always takes more effort than feels necessary.

The title also reminds me of quantum zombies or p-zombies every time I read it... are my eyes glazed over yet?

Counterpoint: "The Sequences" sounds a lot more cult-y or religious-text-y.

"whispers: I say, you over there, yes you, are you familiar with The Sequences, the ones handed down from the rightful caliph [LW · GW], Yudkowsky himself? We Rationalists and LessWrongians spend most of our time checking whether we have all actually read them, you should read them, have you read them, have you read them twice, have you read them thrice and committed all their lessons to heart?" (dear internet, this is satire. thank you, mumbles in the distance)

Suggestion: if there were a very short eli5 post or about page that a genuine 5 year old or 8th grader could read, understand, and get the sense of why The Sequences would actually be valuable to read, this would be a handy resource to share.

comment by MikkW (mikkel-wilson) · 2021-06-17T17:24:04.239Z · LW(p) · GW(p)

Asking people to "taboo [X word]" is bad form, unless you already know that the other person is sufficiently (i.e. very) steeped in LW culture to know what our specific corner of internet culture means by "taboo" [? · GW].

Without context, such a request to taboo a word sounds like you are asking the other person to never use that word, to cleanse it from their vocabulary, to go through the rest of their life with that word permanently off-limits. That's a very high, and quite rude, ask to make of someone. While that's of course not what we mean by "taboo", I have seen requests to taboo made where it's not clear that the other person knows what we mean by taboo, which means it's quite likely the receiving party interpreted the request as being much ruder than was meant.

Instead of saying "Taboo [X word]", instead say "could you please say what you just said without using [X word]?" - it conveys the same request, without creating the potential to be misunderstood to be making a rude and overreaching request.

Replies from: Viliam, Pattern↑ comment by Pattern · 2021-06-18T21:00:00.176Z · LW(p) · GW(p)

Step 1: Play the game taboo.

Step 2: Request something like "Can we play a mini-round of taboo with *this word* for 5 minutes?"

*[Word X]*

Alternatively, 'Could you rephrase that?'/'I looked up what _ means in the dictionary, but I'm still not getting something...'

comment by MikkW (mikkel-wilson) · 2020-10-04T16:04:49.640Z · LW(p) · GW(p)

I'm quite scared by some of the responses I'm seeing to this year's Petrov Day. Yes, it is symbolic. Yes, it is a fun thing we do. But it's not "purely symbolic", it's not "just a game". Taking things that are meant to be serious seriously is important, even if you can't see why they're serious.

As I've said elsewhere, the truly valuable thing a rogue agent destroys by failing to live up to expectations on Petrov day, isn't just whatever has been put at stake for the day's celebrations, but the very valuable chance to build a type of trust that can only be built by playing games with non-trivial outcomes at stake.

Maybe there could be a better job in the future of communicating the essence of what this celebration is intended to achieve, but to my eyes, it was fairly obvious what was going on, and I'm seeing a lot of comments by people (whose other contributions to LW I respect) who seemed to completely fail to see what I thought was obviously the spirit of this exercise

comment by MikkW (mikkel-wilson) · 2020-09-26T08:10:43.500Z · LW(p) · GW(p)

I'm quite baffled by the lack of response to my recent question asking about which AI-researching companies are good to invest in (as in, would have good impact, not necessarily most profitable)- It indicates either A) most LW'ers aren't investing in stocks (which is a stupid thing not to be doing), or B) are investing in stocks, but aren't trying to think carefully about what impact their actions have on the world, and their own future happiness (which indicates a massive failure of rationality)

Even putting this aside, the fact that nobody jumped at the chance to potentially shift a non-trivial (for certain definitions of trivial) amount of funding away from bad organizations and towards good organizations (which I'm investing primarily as a personal financial strategy), seems very worrying to me. While it is (as ChristianKl pointed out) debatable that the amount of funding I can provide as a single person will make a big difference to a big company, it's bad decision theory to model my actions as only being correlated with myself; and besides, if the funding was redirected, it probably would have gone somewhere without the enormous supply of funds Alphabet has, and very well could have made an important difference, pushing the margins away from failure and towards success.

There's a good chance I may change my mind in the future about this, but currently my response to this information is a substantial shift away from the LW crowd actually being any good at usefully using rationality instrumentally

Replies from: habryka4, John_Maxwell_IV, Viliam, ChristianKl↑ comment by habryka (habryka4) · 2020-09-26T08:25:39.374Z · LW(p) · GW(p)

(For what it's worth, the post made it not at all clear to me that we were talking about a nontrivial amount of funding. I read it as just you thinking a bit through your personal finance allocation. The topic of divesting and impact investing has been analyzed a bunch on LessWrong and the EA Forum, and my current position is mostly that these kinds of differences in investment don't really make much of a difference in total funding allocation, so it doesn't seem worth optimizing much, besides just optimizing for returns and then taking those returns and optimizing those fully for philanthropic impact.)

Replies from: mr-hire↑ comment by Matt Goldenberg (mr-hire) · 2020-09-28T17:53:42.414Z · LW(p) · GW(p)

This seems to be the common rationalist position, but it does seem to be at odds with:

- The common rationalist position to vote on UDT grounds.

- The common rationalist position to eschew contextualizing because it ruins the commons.

I don't see much difference between voting because you want others to also vote the same way, or choosing stocks because you want others to choose stocks the same way.

I also think it's pretty orthogonal to talk about telling the truth for long term gains in culture, and only giving money to companies with your values for long term gains in culture.

Replies from: MakoYass↑ comment by mako yass (MakoYass) · 2020-11-24T23:56:14.326Z · LW(p) · GW(p)

eschew contextualizing because it ruins the commons

I don't understand. What do you mean by contextualizing?

Replies from: mr-hire↑ comment by Matt Goldenberg (mr-hire) · 2020-11-25T01:34:49.021Z · LW(p) · GW(p)

More here: https://www.lesswrong.com/posts/7cAsBPGh98pGyrhz9/decoupling-vs-contextualising-norms [LW · GW]

↑ comment by John_Maxwell (John_Maxwell_IV) · 2020-10-05T12:51:29.235Z · LW(p) · GW(p)

For what it's worth, I get frustrated by people not responding to my posts/comments on LW all the time. This post [LW · GW] was my attempt at a constructive response to that frustration. I think if LW was a bit livelier I might replace all my social media use with it. I tried to do my part to make it lively by reading and leaving comments a lot for a while, but eventually gave up.

↑ comment by Viliam · 2020-09-26T12:34:09.102Z · LW(p) · GW(p)

either A) most LW'ers aren't investing in stocks

Does LW 2.0 still have the functionality to make polls in comments? (I don't remember seeing any recently.) This seems like the question that could be easily answered by a poll.

Replies from: jimrandomh↑ comment by jimrandomh · 2020-09-27T00:06:37.937Z · LW(p) · GW(p)

It doesn't; this feature didn't survive the switchover from old-LW to LW2.0.

↑ comment by ChristianKl · 2020-09-28T16:09:54.756Z · LW(p) · GW(p)

While it is (as ChristianKl pointed out) debatable that the amount of funding I can provide as a single person will make a big difference to a big company

My point wasn't about the size of the company but about whether or not the company already has large piles of cash that it doesn't know how to invest.

There are companies that want to invest more capital than they have available and thus have room for funding, and there are companies where that isn't the case.

There's a hilarious interview with Peter Thiel and Eric Schmidt where Thiel charges Google with not spending the 50 billion dollars it has in the bank and doesn't know what to do with, and Eric Schmidt says "What you discover running these companies is that there are limits that are not cash..."

That interview happened back in 2012, but since then Alphabet's cash reserves have more than doubled despite some stock buybacks.

Companies like Tesla or Amazon seem to be willing to invest additional capital to which they have access in a way that Alphabet and Microsoft simply don't.

A) most LW'ers aren't investing in stocks (which is a stupid thing not to be doing)

My general model would be that most LW'ers think that the instrumentally rational thing is to invest the money into a low-fee index fund.

Replies from: mikkel-wilson↑ comment by MikkW (mikkel-wilson) · 2020-09-29T17:35:30.483Z · LW(p) · GW(p)

Wow, that video makes me really hate Peter Thiel (I don't necessarily disagree with any of the points he makes, but that communication style is really uncool)

Replies from: ChristianKl, Benito↑ comment by ChristianKl · 2020-09-30T14:38:47.352Z · LW(p) · GW(p)

In most contexts I would also dislike this communication style. In this case I feel that the communication style is necessary to get a straight answer from Eric Schmidt, who would rather avoid the topic.

↑ comment by Ben Pace (Benito) · 2020-09-29T18:15:33.826Z · LW(p) · GW(p)

On the contrary, I aspire to the clarity and honesty of Thiel's style. Schmidt seems somewhat unable to speak directly. Of the two of them, Thiel was able to say specifics about how the companies were doing excellently and how they were failing, and Schmidt could say neither.

Replies from: mikkel-wilson↑ comment by MikkW (mikkel-wilson) · 2020-10-02T03:56:00.873Z · LW(p) · GW(p)

Thank you for this reply; it motivated me to think deeper about the nature of my reaction to Thiel's statements, and my thoughts on the conversation between Thiel and Schmidt. I would share my thoughts here, but writing takes time and energy, and I'm not currently in a position to do so.

Replies from: Benito↑ comment by Ben Pace (Benito) · 2020-10-02T04:43:54.735Z · LW(p) · GW(p)

:-)

comment by MikkW (mikkel-wilson) · 2020-08-31T00:47:42.090Z · LW(p) · GW(p)

During today's LW event, I chatted with Ruby and Raemon (separately) about the comparison between human-made photovoltaic systems (i.e. solar panels), and plant-produced chlorophyll. I mentioned that in many ways chlorophyll is inferior to solar panels - consumer grade solar panels operate in the 10% to 20% efficiency range (i.e. for every 100 joules of light energy, 10 - 20 joules are converted into usable energy), while chlorophyll is around 9% efficient, and modern cutting edge solar panels can go even as high as nearly 50% efficiency. Furthermore, every fall the leaves turn red and fall down to the ground only for new leaves – that is, plant-based solar panels – to be generated again in the spring. One sees green plants where there very well could be solar panels capturing light, and naïvely we would expect solar panels to do a better job, but we plant plants instead, and let them gather energy for us.

One of them (I think Ruby) didn't seem convinced that it was fair to compare solar panels with chlorophyll – is it really an apples to apples comparison? I think it is a fair comparison. It is true that plants do a lot of work beyond simply capturing light, and electricity goes to different things than what plants do, but ultimately what both plant-based farms and photovoltaic cells do is capture energy from sunlight coming to the earth from the sun, and convert it to human-usable energy. One could imagine genetically engineered plants doing much of what we use electricity for these days, or industrial processes being hooked up to solar panels that do the things plants do, and in this way we can make a meaningful comparison of how much energy plants allow us to use for human-desired goals and compare that to how much energy photovoltaic cells can redirect to human-desired uses.

Replies from: Raemon, MakoYass↑ comment by Raemon · 2020-08-31T01:03:19.125Z · LW(p) · GW(p)

Huh, somehow while chatting with you I got the impression that it was the opposite (chlorophyll more effective than solar panels). Might have just misheard.

Replies from: mikkel-wilson↑ comment by MikkW (mikkel-wilson) · 2020-08-31T01:06:28.331Z · LW(p) · GW(p)

The big advantage chlorophyll has is that it is much cheaper than photovoltaics, which is why I was saying (in our conversation) we should take inspiration from plants

Replies from: Raemon↑ comment by mako yass (MakoYass) · 2020-08-31T02:04:50.127Z · LW(p) · GW(p)

It would be interesting to see the efficiency of solar + direct air capture compared to plants. If it wins I will have another thing to yell at hippies (before yelling about there not being enough land area even for solar)

Replies from: mikkel-wilson↑ comment by MikkW (mikkel-wilson) · 2020-08-31T02:50:52.880Z · LW(p) · GW(p)

There's plenty of land area for solar. I did a rough calculation once, and my estimate was that it'd take roughly twice the land area of the Benelux to build a solar farm that produced as much energy per annum as the entirety of humanity uses each year (The sun outputs an insane amount of power, and if one steps back to think about it, almost every single joule of energy we've used came indirectly through the sun - often through quite inefficient routes). I didn't take into account day/night cycles, or losses of efficiency due to transmission, but if we assume 4x loss due to nighttime (probably a pessimistic estimate) and 5x loss due to transmission (again, being pessimistic), it still comes out to substantially less than the land we have available to us (About 1/3 the size of the Sahara desert)
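The arithmetic above can be checked with a rough sketch. The two area figures (about 75,000 km² for the Benelux and about 9.2 million km² for the Sahara) are approximate values assumed here for illustration, and the function name is hypothetical; the multipliers are the ones given in the comment.

```python
# Rough sanity check of the land-area estimate in the comment above.
# Both areas are approximate figures assumed for illustration.
BENELUX_KM2 = 75_000
SAHARA_KM2 = 9_200_000


def solar_farm_area_km2(benelux_multiples=2.0, night_factor=4.0, transmission_factor=5.0):
    """Land area after applying the pessimistic loss factors from the comment."""
    return benelux_multiples * BENELUX_KM2 * night_factor * transmission_factor


area = solar_farm_area_km2()
fraction_of_sahara = area / SAHARA_KM2
print(f"{area:,.0f} km^2, about {fraction_of_sahara:.0%} of the Sahara")
```

Under these assumed areas, the pessimistic case comes out to roughly a third of the Sahara, which matches the figure in the comment.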

comment by MikkW (mikkel-wilson) · 2021-07-28T01:51:14.841Z · LW(p) · GW(p)

Update on my tinkering with using high doses of chocolate as a psychoactive drug:

(Nb: at times I say "caffeine" in this post, in contrast to chocolate, even though chocolate contains caffeine; by this I mean coffee, energy drinks, caffeinated soda, and caffeine pills collectively, all of which were up until recently frequently used by me; recently I haven't been using any sources of caffeine other than chocolate, and even then try to avoid using it on a daily basis)

I still find that consuming high doses of chocolate (usually 3-6 table spoons of dark cocoa powder, or a corresponding dose of dark chocolate chips / chunks) has a stimulating effect that I find more pleasant than caffeine, and makes me effective at certain things in a way that caffeine doesn't.

I am pretty sure that I was too confident in my hypothesis about why specifically chocolate has this effect. One obvious thing that I overlooked in my previous posts, is that chocolate contains caffeine, and this likely explains a large amount of its stimulant effects. It is definitely true that Theobromine has a very similar structure to caffeine, but it's unclear to me that it has any substantial stimulant effect. Gilch linked me to a study that he stated suggests it doesn't, but after reading the abstract, I found that it only justifies a weak update against thinking the Theobromine specifically has stimulant effects.

I'm confident that there are chemicals in chocolate other than caffeine that are responsible for me finding benefit in consuming it, but I have no idea what those chemicals are.

Originally I was going to do an experiment, randomly assigning days to either consume a large dose of chocolate or not, but after the first couple days, I decided against doing so, so I don't have any personal experimentation to back up my observations, but just observationally, there's a very big difference in my attitude and energy on days when I do or don't consume chocolate.
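For anyone who wants to try the randomized version, here is a minimal sketch of how the day assignment could be done. The function name, start date, and seed are hypothetical choices for illustration; this is not what I actually ran.

```python
import random
from datetime import date, timedelta


def assign_days(start, n_days, seed=0):
    """Randomly label each day as a chocolate day or a no-chocolate day."""
    rng = random.Random(seed)  # fixed seed so the schedule can be reproduced
    return {
        start + timedelta(days=i): rng.choice(["chocolate", "no chocolate"])
        for i in range(n_days)
    }


# Hypothetical two-week schedule
schedule = assign_days(date(2021, 8, 1), 14, seed=1)
for day, condition in schedule.items():
    print(day.isoformat(), condition)
```

Fixing the schedule in advance keeps the choice of chocolate days from depending on how I happen to feel that morning.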

When I talked to Herschel about his experience using chocolate, he noted that building up tolerance is a problem with any use of chemicals to affect the mind, which is obviously correct, so I ended up deciding that I won't use chocolate every day, and will instead use it on days when I have a specific reason to use it, and will make sure that there will be days when I won't use it, even if I find myself always wanting to use it. My thought here is that if my brain is forced to operate at some basic level on a regular basis without the chemical, then when I do use the chemical, I will be able to achieve my usual operation plus a little more, which will ensure that I can always derive some benefit from it. I think this approach should make sense for many chemicals where building up tolerance is a possibility of concern.

Gilch said he didn't notice any effect when he tried it. I don't know how much he used, but since I specified an amount in response to one of his questions, I presume he probably used an amount similar to what I would use. I don't know if he used it in addition to caffeine, or as a replacement. If it was a replacement, that would explain why he didn't notice any additional stimulation over and above his usual stimulation, but it would still lead one to wonder why he didn't notice any other effects. One possibility is that the effects are a little bit subtle - not too subtle, since its effects tend to be pretty obvious (in contrast to usual caffeine) for me when I'm on chocolate, but subtle enough that a different person than me might not be as attuned to it, for whatever reason (part of why I say this is that I find chocolate helps me be more sociable, and this is one of the most obvious effects it has in contrast to caffeine for me, and I care a lot about my ability to be sociable, so it's hard for it to slip my notice, but if someone cares less about how they interact with other people, they may overlook this effect; there are other effects, too, but those do tend to be somewhat subtle, though still noticeable)

As far as delivery, I have innovated slightly on my original method. I now often use dark chocolate chips / chunks in addition to drinking the chocolate, I find that pouring a handful, just enough to fit in my mouth, will have a non-trivial effect. Since I found drinking the chocolate straight would irritate my stomach and cause my stool to have a weird consistency, I have started using milk. My recipe is now to take a tall glass, fill it 1/3rd with water, add some (but not necessarily all) of the desired dose of cocoa powder into the glass, microwave it for 20 seconds, stir the liquid, add a little more water and the rest of the cocoa powder, microwave it for 20 more seconds, stir it until there are no chunks, then fill up the rest of the glass with milk. There are probably changes that can be made to the recipe, but I find this at least gets a consistently good outcome. With the milk, it makes my stomach not get irritated, and my stool is less different, though still slightly different, from how it would otherwise be.

On the subject of it making me sociable, I don't think it's a coincidence that most of the days that my friends receive texts from me, I have had chocolate on those days. I also seem to write more on days when I have had chocolate. I find chocolate helps me feel that I know what I need to say, and I rarely find myself second-guessing my words when I'm on chocolate, whereas I often have a hard time finding words in the first place without chocolate, and feel less confident about what I say without it. I've written a lot on this post alone, and have also messaged a friend today, and have also written a long-ish analysis on a somewhat controversial topic on another website today. Based on the context I say that in, I'm sure you can guess whether I've had chocolate today.

comment by MikkW (mikkel-wilson) · 2021-06-17T19:18:15.830Z · LW(p) · GW(p)

I may have discovered an interesting tool against lethargy and depression [1]: This morning, in place of my usual caffeine pill, I made myself a cup of hot chocolate (using pure cacao powder / baking chocolate from the supermarket), which made me very energetic (much more energetic than usual), which stood in sharp contrast to the past 4 days, which have been marked by lethargy and intense sadness. Let me explain:

Last night, I was reflecting on the fact that one of the main components of chocolate is theobromine, which is very similar in structure to caffeine (theobromine is the reason why chocolate is poisonous to dogs & cats, for reasons similar to how caffeine was evolved to kill insects that feed on plants), and is known to be the reason why eating chocolate makes people happy. Since I have problems with caffeine, but rely on it to have energy, I figured it would be worthwhile to try using chocolate instead as a morning pick-me-up. I used baking chocolate instead of Nesquik or a hot chocolate packet because I'm avoiding sugar these days, and I figured having as pure chocolate as possible would be ideal for my experiment.

I was greeted with pleasant confirmation when I became very alert almost immediately after starting to drink the chocolate, despite having been just as lethargic as the previous days until I drank the chocolate. It's always suggestive when you form a hypothesis based on facts and logic, then test the hypothesis, and exactly what you expected to happen, happens. But of course, I can't be too confident until I try repeating this experiment on future days, which I will happily be doing after today's success.

[1]: There are alternative hypotheses for why today was so different from the previous days: I attended martial arts class, then did some photography outside yesterday evening, which meant I got intense exercise, was around people I know and appreciate, and was doing stuff with intentionality, all of which could have contributed to my good mood today. There's also the possibility of regression to the mean, but I'm dubious of this since today was substantially above average for me. I also had a (sugar-free) Monster later in the morning, but that was long after I had noticed being unusually alert, and now I have a headache that I can clearly blame on the Monster (Caffeine almost always gives me a headache) [1a].

[1a]: I drink energy drinks because I like the taste of them, not for utilitarian reasons. I observe that caffeine tends to make whatever contains it become deeply associated with enjoyment and craving, completely separated from the alertness-producing effects of the chemical. A similar thing happened with Vanilla Café Soylent [1b], which I absolutely hated the first time I tried it, but a few weeks later, I had deep cravings for, and could not do without.

[1b]: Sidenote, the brand Soylent has completely gone to trash, and I would not recommend anyone buy it these days. Buy Huel or Plenny instead.

Replies from: gilch, Dagon↑ comment by gilch · 2021-06-18T06:46:27.412Z · LW(p) · GW(p)

I think I want to try this. What was your hot cocoa recipe? Did you just mix it with hot water? Milk? Cream? Salt? No sugar, I gather. How much? Does it taste any better than coffee? I want to get a sense of the dose required.

Replies from: mikkel-wilson↑ comment by MikkW (mikkel-wilson) · 2021-06-19T22:51:28.899Z · LW(p) · GW(p)

Just saw this. I used approximately 5 tablespoons of unsweetened cocoa powder, mixed with warm water. No sweetener, no sugar, or anything else. It's bitter, but I do prefer the taste over coffee.

Replies from: gilch↑ comment by gilch · 2021-06-20T18:07:05.589Z · LW(p) · GW(p)

I just tried it. I did not enjoy the taste, although it does smell chocolatey. I felt like I had to choke down the second half. If it's going to be bitter, I'd rather it were stronger. Maybe I didn't stir it enough. I think I'll use milk next time. I did find this: https://criobru.com/ apparently people do brew cacao like coffee. They say the "cacao" is similar to cocoa (same plant), but less processed.

Replies from: gilch, gilch↑ comment by gilch · 2021-06-20T19:27:58.433Z · LW(p) · GW(p)

I found this abstract suggesting that theobromine doesn't affect mood or vigilance at reasonable doses. But this one suggests that chocolate does.

Subjectively, I feel that my cup of cocoa today might have reduced my usual lethargy and improved my mood a little bit, but not as dramatically as I'd hoped for. I can't be certain this isn't just the placebo effect.

Replies from: mikkel-wilson↑ comment by MikkW (mikkel-wilson) · 2021-06-21T17:50:13.567Z · LW(p) · GW(p)

The first linked study tests 100, 200, and 400 mg Theobromine. A rough heuristic based on the toxic doses of the two chemicals suggests 750 mg, maybe a little more (based on subjective experience), is equivalent to 100 mg caffeine or a cup of coffee (this is roughly the dose I've been using each day), so I wouldn't expect a particularly strong effect for the first two. The 400 mg condition does surprise me; the sample size of the study is small (n = 24 subjects * 1 trial per condition), so the fact that it failed to find statistical significance shouldn't be too big of an update, though.
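To make the comparison concrete, here is the heuristic applied to the three study doses. This is only a sketch of my rough 750 mg to 100 mg rule of thumb, not an established pharmacological conversion, and the names are made up for illustration.

```python
# Rule of thumb from the comment: ~750 mg theobromine is treated as
# equivalent to ~100 mg caffeine. This ratio is a rough personal heuristic.
THEOBROMINE_MG_PER_CAFFEINE_MG = 750 / 100


def caffeine_equivalent_mg(theobromine_mg):
    """Convert a theobromine dose to a caffeine-equivalent dose under the heuristic."""
    return theobromine_mg / THEOBROMINE_MG_PER_CAFFEINE_MG


for dose in (100, 200, 400):  # doses tested in the first linked study
    print(f"{dose} mg theobromine ~ {caffeine_equivalent_mg(dose):.0f} mg caffeine")
```

Under this heuristic even the 400 mg condition is only about half a cup of coffee's worth, and the two lower doses are well below that.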

Replies from: gilch↑ comment by Dagon · 2021-06-17T21:27:12.209Z · LW(p) · GW(p)

Can you clarify your Soylent anti-recommendation? I don't use it as an actual primary nutrition, more as an easy snack for a missed meal, once or twice a week. I haven't noticed any taste difference recently - my last case was purchased around March, and I pretty much only drink the Chai flavor.

Replies from: mikkel-wilson↑ comment by MikkW (mikkel-wilson) · 2021-06-17T21:48:23.533Z · LW(p) · GW(p)

A] Meal replacements require a large amount of trust in the entity that produces them, since if there are any problems with the nutrition, that will have big impacts on your health. This is less so in your case, where it's not a big part of the nutrition, but in my case, where I ideally use meal replacements as a large portion of my diet, trust is important.

B] A few years ago, Rob Rhinehart, the founder and former executive of Soylent, parted ways with the company due to his vision conflicting with the investors' desires (which is never a good sign). I was happy to trust Soylent during the Rhinehart era, since I knew that he relied on his creation for his own sustenance, and seemed generally aligned. During that era, Soylent was very effective at signaling that they really cared about the world in general, and people's nutrition in general. All the material that sent those signals no longer exists, and the implicit signals (e.g. the shape of and branding on the bottles, the new products they are developing [The biggest innovation during the Rhinehart era was caffeinated Soylent, now the main innovations are Bridge and Stacked, products with poor nutritional balance targeted at a naïve general audience, a far cry from the very idea of Complete Food], and the copy on their website) all indicate that the company's main priority is now maximizing profit, without much consideration as to the (perceived) nutritional value of the product. In terms of product, the thing is probably still fine (though I haven't actually looked at the ingredients in the recent new nutritional balance), but in terms of incentives and intentions, the management's intention isn't any better than, say, McDonald's or Jack In The Box.

Since A] meal replacements require high trust and B] Soylent is no longer trustworthy: I cannot recommend anyone use Soylent more than a few times a week, but am happy to recommend Huel, Saturo, Sated, and Plenny, which all seem to still be committed to Complete Food.

(As far as flavour, I know I got one box with the old flavor after the recent flavor change, the supply lines often take time to get cleared out, so it's possible you got a box of the old flavor. I don't actually mind the new flavour, personally)

Replies from: Dagon, Zolmeister↑ comment by Zolmeister · 2021-06-18T02:18:58.656Z · LW(p) · GW(p)

I recommend Ample (lifelong subscriber). It has high quality ingredients (no soy protein), fantastic macro ratios (5/30/65 - Ample K), and an exceptional founder.

comment by MikkW (mikkel-wilson) · 2021-06-10T21:23:01.459Z · LW(p) · GW(p)

In Zvi's most recent Covid-19 post [LW · GW], he puts the probability of a variant escaping mRNA vaccines and causing trouble in the US at most at 10%. I'm not sure I'm so optimistic.

One thing that gives reason to be optimistic, is that we have yet to see any variant that has substantial resistance to the vaccines, which might lead one to think that resistance just isn't something that is likely to come up. However, on the other hand, the virus has had more than a year for more virulent strains to crop up while people were actively sheltering in place, and variants first came on the radar (at least for the population at large) around 9 months after the start of worldwide lockdowns, and a year after the virus was first noticed. In contrast, the vaccine has only been rolling out for half a year, and only come into large-scale contact with the virus for maybe half that time, let's say a quarter of a year. It's maybe not so surprising that a resistant variant hasn't appeared yet.

Right now, there's a fairly large surface area between non-resistant strains of Covid and vaccinated humans. Many vaccinated humans will be exposed to virus particles, which will for the most part be easily defended against by the immune system. However, if it's possible for the virus to change in any way to reduce the immune response it faces, we will see this happen, and particularly in areas where there's roughly half vaccinated people, half unvaccinated, such a variant will have at least a slight advantage over other variants, and will start to spread faster than non-resistant variants. Again, it's taken a while for other variants to crop up, so it's not much information that we haven't seen this happen yet.

The faster we are able to get vaccines in most arms in all countries, the less likely this is to happen. If most humans worldwide are vaccinated 6 months from now, there likely won't be much opportunity for a resistant variant to become prominent. But I don't expect vaccines to roll out so effectively; I'll be pleasantly surprised if they are.

There's further the question of whether the US will be able to respond effectively quickly enough if such a variant arises. I'm very pessimistic about this, and if you're not, you either haven't been paying attention, or are overestimating the difference in effectiveness between the current administration vs the previous administration, or are more optimistic about our ability to learn from our mistakes (on an institutional level) than I am.

All in all, saying no more than a 10% chance that a resistant variant will arise, with the US government not responding quickly enough, seems far too optimistic to me. I'm currently around 55% that such a variant will arise, and that it will cause at least 75,000 deaths OR will prompt a lockdown of at least 30 days in at least 33 US states [edited to add: within the next 7 years].

comment by MikkW (mikkel-wilson) · 2021-02-15T19:53:57.311Z · LW(p) · GW(p)

One thing that is frustrating me right now is that I don't have a good way of outputting ideas while walking. One thing I've tried is talking into voice memos, but it feels awkward to be talking out loud to myself in public, and it's a hassle to transcribe what I said when I'm done. One idea I don't think I've ever seen is a hand-held keyboard that I can use as I'm walking, and can operate mostly by touch, without looking at it, and maybe it can provide audio feedback through my headphones.

Replies from: AllAmericanBreakfast↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2021-02-16T22:34:33.935Z · LW(p) · GW(p)

If you have bluetooth earbuds, you would just look to most other people like you're having a conversation with somebody on the phone. I don't know if that would alleviate the awkwardness, but I thought it was worth mentioning. I have forgotten that other people can't tell when I'm talking to myself when I have earbuds in.

comment by MikkW (mikkel-wilson) · 2020-08-10T20:39:29.867Z · LW(p) · GW(p)

Epistemic status: Intended as a (half-baked) serious proposal

I’ve been thinking about ways to signal truth value in speech- in our modern society, we have no way to readily tell when a person is being 100% honest- we have to trust that a communicator is being honest, or otherwise verify for ourselves if what they are saying is true, and if I want to tell a joke, speak ironically, or communicate things which aren’t-literally-the-truth-but-point-to-the-truth, my listeners need to deduce this for themselves from the context in which I say something not-literally-true. This means that common knowledge of honesty almost never exists, which significantly slows down [LW(p) · GW(p)] positive effects from Aumann's Agreement Theorem [? · GW].

In language, we speak with different registers. Different registers are different ways of speaking, depending on the context of the speech. The way a salesman speaks to a potential customer, will be distinct from the way he speaks to his pals over a beer - he speaks in different registers in these different situations. But registers can also be used to communicate information about the intentions of the speaker - when a speaker is being ironic, he will intone his voice in a particular way, to signal to his listeners that he shouldn’t be taken 100% literally.

There are two points that come to my mind here: One: establishing a register of communication that is reserved for speaking literally true statements, and Two: expanding the ability to use registers to communicate not-literally-true intent, particularly in text.

On the first point, a large part of the reason why people speaking in a natural register cannot always be assumed to be saying something literally true, is that there is no external incentive to not lie. Well, sometimes there are incentives to not lie, but oftentimes these incentives are weak, and especially in a society built upon free speech, it is hard to - on a large scale - enforce a norm against lying in natural-register speech. Now my mind imagines a protected register of speech, perhaps copyrighted by some organization (and which includes unique manners of speech which are distinctive enough to be eligible for copyright), where that organization vows to take action against anybody who speaks not-literally-true statements (i.e., which communicate a world model that does not reliably communicate the actual state of the world) in that register; anybody is free (according to a legally enforceable license) to speak whatever literally-true statements they want in that register, but may not speak non-truths in that register, on pain of legal action.

If such a register was created, and was reliably enforced, it would help create a society where people could readily trust strangers saying things that they are not otherwise inclined to believe, given that the statement is spoken in the protected register. I think such a society would look different from current society, and would have benefits compared to current society. I also think a less-strict version of this could be implemented by a single platform (perhaps LessWrong?), replacing legal action with the threat of being suspended for speaking not-literal-truths in a protected register, and I also suspect that it would have a non-zero positive effect. This also has the benefit of being probably cheaper, and in a less unclear legal position related to speech.

I don’t currently have time to get into details on the second point, but I will highlight a few things: Poe’s law states that even the most extreme parody can be readily mistaken for a serious position; whereas spoken language can clearly be inflected to indicate ironic intent, or humor, or perhaps even not-literally-true-but-pointing-to-the-truth, the carriers of this inflection are not replicated in written language - therefore, written language, which the internet is largely based upon, lacks the same richness of registers that allows a clear distinction between extreme-but-serious positions and humor. There are attempts to inflect writing in such a way as to provide this richness, but as far as I know, there is no clear standard that is widely understood that actually accomplishes this. This is worth exploring in the future. Finally, I think it is worthwhile to spend time reflecting on intentionally creating more registers that are explicitly intended to communicate varying levels of seriousness and intent.

Replies from: Dagon↑ comment by Dagon · 2020-08-11T14:05:54.237Z · LW(p) · GW(p)

most extreme parody can be readily mistaken for a serious position

I may be doing just that by replying seriously. If this was intended as a "modest proposal", good on you, but you probably should have included some penalty for being caught, like surgery to remove the truth-register.

Humans have been practicing lying for about a million years. We're _VERY_ good at difficult-to-legislate communication and misleading speech that's not unambiguously a lie.

Until you can get to a simple (simple enough for cheap enforcement) detection of lies, an outside enforcement is probably not feasible. And if you CAN detect it, the enforcement isn't necessary. If people really wanted to punish lying, this regime would be unnecessary - just directly punish lying based on context/medium, not caring about tone of voice.

Replies from: mikkel-wilson↑ comment by MikkW (mikkel-wilson) · 2020-08-11T16:41:29.972Z · LW(p) · GW(p)

I assure you this is meant seriously.

Until you can get to a simple (simple enough for cheap enforcement) detection of lies, an outside enforcement is probably not feasible.

There's plenty of blatant lying out there in the real world, which would be easily detectable by a person with access to reliable sources and their head screwed on straight- I think one important facet of my model of this proposal, that isn't explicitly mentioned in this shortform, is that validating statements is relatively cheap, but expensive enough that for every single person to validate every single sentence they hear is infeasible. By having a central arbiter of truth that enforces honesty, it allows one person doing the heavy lifting to save a million people from having to each individually do the same task.

If people wanted to punish lying this regime would be unnecessary - just directly punish lying based on context/medium, not caring about tone of voice.

The point of having a protected register (in the general, not platform-specific case), is that it would be enforceable even when the audience and platform are happy to accept lies- since the identifiable features of the register would be protected as intellectual property, the organization that owned the IP could take action against a violation of the intellectual property, even when there would be no legal basis for punishing violations of norms of honesty.

Replies from: Dagon↑ comment by Dagon · 2020-08-12T19:00:55.719Z · LW(p) · GW(p)

The point of having a protected register (in the general, not platform-specific case), is that it would be enforceable even when the audience and platform are happy to accept lies

Oh, I'd taken that as a fanciful example, which didn't need to be taken literally for the main point, which I thought was detecting and prosecuting lies. I don't think that part of your proposal works - "intellectual property" isn't an actual law or single concept, it's an umbrella for trademark, copyright, patent, and a few other regimes. None of which apply to such a broad category of communication as register or accent.

You probably _CAN_ trademark a phrase or word, perhaps "This statement is endorsed by TruthDetector(TM)". It has the advantage that it applies in written or spoken media, has no accessibility issues, works for tonal languages, etc. And then prosecute uses that you don't actually endorse.

Endorsing only true statements is left as an exercise, which I suspect is non-trivial on its own.

Replies from: mikkel-wilson↑ comment by MikkW (mikkel-wilson) · 2020-08-13T00:42:03.208Z · LW(p) · GW(p)

I suspect there's a difference between what I see in my head when I say "protected register", compared to the image you receive when you hear it. Hopefully I'll be able to write down a more specific proposal in the future, and provide a legal analysis of whether what I envision would actually be enforceable. I'm not a lawyer, but it seems that what I'm thinking of (i.e., the model in my head) shouldn't be dismissed out of hand (although I think you are correct to dismiss what you envision that I intended)

comment by MikkW (mikkel-wilson) · 2022-08-27T07:38:17.761Z · LW(p) · GW(p)

Current discourse around AI safety (at least among people who haven't missed) has a pretty dark, pessimistic tone - for good reason, because we're getting closer to technology that could accidentally do massive harm to humanity.

But when people / groups feel pessimistic, it's hard to get good work done - even when that pessimism is grounded in the real-world facts.

I think we need to develop an optimistic, but realistic point of view - acknowledging the difficulty of where we are, but nonetheless being hopeful and full of energy towards finding the solution. Because AI alignment can be solved, we just actually have to put in the effort to solve it, and maybe a lot faster than we are currently prepared to.

Replies from: hobs↑ comment by hobs · 2022-08-27T17:31:14.058Z · LW(p) · GW(p)

Indeed. Good SciFi does both for me - terror of being a passenger in this train wreck and ideas for how heroes can derail the AI commerce train or hack the system to switch tracks for the public transit passenger train. Upgrade and Recursion did that for me this summer.

comment by MikkW (mikkel-wilson) · 2022-04-22T11:14:13.274Z · LW(p) · GW(p)

Somehow I stumbled across this quote from Deuteronomy (from the Torah / Old Testament, which is the law of religious Jews):

You shall not have in your bag two kinds of weights, large and small. You shall not have in your house two kinds of measures, large and small. You shall have only a full and honest weight; you shall have only a full and honest measure, so that your days may be long in the land that the Lord your God is giving you. For all who do such things, all who act dishonestly, are abhorrent to the Lord your God.

There's of course the bit about not being dishonest, but that's not what resonated with me. I will provide one example of what came to my mind:

A dollar in the year 2023 is a smaller measure than it was in the year 2022, which is a smaller measure than it was in 1996

comment by MikkW (mikkel-wilson) · 2021-09-18T07:43:35.060Z · LW(p) · GW(p)

This Generative Ink post talks about curating GPT-3, creating a much better output than it normally would give, turning it from quite often terrible to usually profound and good. I'm testing out doing the same with this post, choosing one of many branches every few dozen words.

For a 4x reduction in speed, I'm getting very nice returns on coherence and brevity. I can actually pretend like I'm not a terrible writer! Selection is a powerful force, but more importantly, continuing a thought in multiple ways forces you to actually make sure you're saying things in a way that makes sense.

Editing my writing can be slow and tedious, but this exercise gives me a way to naturally write in a compact, higher-quality way, which is why I hope I do this many times in the future, and recommend you try doing this yourself.

Replies from: mikkel-wilson, mikkel-wilson↑ comment by MikkW (mikkel-wilson) · 2021-09-19T16:04:50.445Z · LW(p) · GW(p)

It occurs to me that this is basically Babble & Prune adapted to be a writing method. I like Babble & Prune.

↑ comment by MikkW (mikkel-wilson) · 2021-09-18T07:48:36.715Z · LW(p) · GW(p)

This post was written in 5 blocks, and I wrote 4 (= 2^2) branches for each block, for 5*2 = 10 bits of curation, or 14.5 words per bit of curation.

As it happens, I always used the final branch for each block, so it was more the effects of revision and consolidation than selection effects that contributed to the end result of this exercise.
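
The curation arithmetic in this comment can be sketched as a few lines of Python. This is only an illustration: the function name `bits_of_curation` is hypothetical, and the word count is inferred from the stated 14.5 words per bit rather than given in the comment.

```python
import math

def bits_of_curation(blocks: int, branches_per_block: int) -> float:
    """Bits of selection applied when one branch is picked per block.

    Picking 1 of n equally plausible branches is log2(n) bits of choice.
    (Hypothetical helper name, used only for illustration.)
    """
    return blocks * math.log2(branches_per_block)

blocks = 5             # blocks the post was written in
branches = 4           # branches written per block (4 = 2^2)
bits = bits_of_curation(blocks, branches)

words_per_bit = 14.5   # figure stated in the comment
implied_word_count = bits * words_per_bit  # inferred, not stated directly

print(f"{bits:.0f} bits of curation, about {implied_word_count:.0f} words")
```

Since the final branch was always the one used, the 10 bits are an upper bound on selection pressure rather than a measure of what actually drove the result.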

comment by MikkW (mikkel-wilson) · 2021-07-02T21:20:33.117Z · LW(p) · GW(p)

URLs (Universal Resource Locators) are universal over space, but they are not universal over time, and this is a problem

Replies from: Dagon↑ comment by Dagon · 2021-07-02T21:54:14.687Z · LW(p) · GW(p)

According to https://datatracker.ietf.org/doc/html/rfc1738 , they're not intended to be universal, they're actually Uniform Resource Locators. Expecting them to be immutable or unique can lead to much pain.

comment by MikkW (mikkel-wilson) · 2021-05-19T14:54:55.693Z · LW(p) · GW(p)

Cryptocurrencies in general are good and the future of money, but Bitcoin in particular deserves to crash all the way down to $0

Replies from: Viliam, Dagon, mikkel-wilson↑ comment by Viliam · 2021-05-19T18:30:56.778Z · LW(p) · GW(p)

In a universe that cares about "deserve", diamonds would crash to $0 first. Bitcoin at least doesn't run on slave labor.

Replies from: mikkel-wilson↑ comment by MikkW (mikkel-wilson) · 2021-05-19T19:00:57.681Z · LW(p) · GW(p)

Hmmm... I guess this is a good illustration of why "deserve" isn't a good way to put what I meant.

Bitcoin isn't actually any good at what it's meant to do- it's really a failure as a currency. It has been a rewarding store of value for a while, but I expect it will be displaced as a store of value by currencies that are more easily moved from account to account. Transaction fees are often too high, and will likely increase, and it is slow to process transactions (the slow speed isn't a hindrance to its quality as a store of value, but it does reduce its economic desirability; transaction fees are very much a problem for a store of value)

I expect in the long run, economic forces will drive BTC to nearly $0 without any regard to what it morally "deserves".

↑ comment by Dagon · 2021-05-19T16:43:50.236Z · LW(p) · GW(p)

While I don't disagree, it's interesting to consider what it means for a currency to deserve something. I'd phrase it as "people who don't hold very much bitcoin deserve to spend less of our worldwide energy and GPU output on crypto mining".

Replies from: mikkel-wilson, Rana Dexsin↑ comment by MikkW (mikkel-wilson) · 2021-05-19T18:14:37.984Z · LW(p) · GW(p)

That does not accurately summarize my own personal feelings on this. I do suspect it's correct that BTC miners are using too much of the world's resources (a problem that can be fixed, but I'd be surprised if Bitcoin developers chose to fix), but more generally I feel that people who do hold on to BTC deserve to lose their investment if they don't sell soon (to be clear, I am against the government having anything to do with that. But I will be happy with the market if / when the market decides BTC is worthless)

↑ comment by Rana Dexsin · 2021-05-19T17:05:55.436Z · LW(p) · GW(p)

Language clarification: is "deserve to spend less of…" used in the sense of "deserve that less of … is spent [not necessarily by them]" here?

Replies from: Dagon↑ comment by MikkW (mikkel-wilson) · 2021-05-19T14:57:10.416Z · LW(p) · GW(p)

This is something that has been in the back of my mind for a while; I sold almost all of my BTC half a year ago and invested that money in other assets.

comment by MikkW (mikkel-wilson) · 2021-04-09T21:47:26.290Z · LW(p) · GW(p)

Last month, I wrote a post here titled "Even Inflationary Currencies Should Have Fixed Total Supply", which wasn't well-received. One problem was that the point I argued for wasn't exactly the same as what the title stated: I supported both currencies with fixed total supply, and currencies that instead choose to scale supply proportional to the amount of value in the currency's ecosystem, and many people got confused and put off by the disparity between the title and my actual thesis; indeed, one of the most common critiques in the comments was a reiteration of a point I had already made in the original post.

Zvi helpfully pointed out another effect that nominal inflation has that serves as part of the reason inflation is implemented the way it is, that I wasn't previously aware of, namely that nominal inflation induces people to accept worsening prices they psychologically would otherwise resist. While I feel intentionally invoking this effect flirts with the boundary of dishonesty, I do recognize the power and practical benefits of this effect.

All that said, I do stand by the core of my original thesis: nominal inflation is a source of much confusion for normal people, and makes the information provided by price signals less easily legible over long spans of time, which is problematic. Even if the day-to-day currency continues to nominally inflate like things are now, it would be stupid not to coordinate around a standard stable unit of value (like [Year XXXX] Dollars, except without having to explicitly name a specific year as the basis of reference; and maybe don't call it dollars, to make it clear that the unit isn't fluidly under the control of some organization)

comment by MikkW (mikkel-wilson) · 2021-04-08T03:21:55.945Z · LW(p) · GW(p)

I learned to type in Dvorak nearly a decade ago, and any time I have typed on a device that supports it, I have used it since then. I don't know if it actually is any better than QWERTY, but I do notice that I enjoy the way it feels to type in Dvorak; the rhythm and shape of the dance my fingers make is noticeably different from when I type on QWERTY.

Even if Dvorak itself turns out not to be better in some way (e.g. speed, avoiding injury, facilitation of mental processes) than QWERTY, it is incredibly unlikely that there does not exist some configuration of keys that is provably superior to QWERTY.

Also, hot take: Colemak is the coward's Dvorak.

comment by MikkW (mikkel-wilson) · 2021-03-23T17:41:54.108Z · LW(p) · GW(p)

We're living in a very important time, being on the cusp of both the space revolution and AI revolution truly taking off. Either one alone would put the 2020s on equal historical footing with the original development of life or the Cambrian explosion, and both together will make for a very historic moment.

Replies from: Viliam↑ comment by Viliam · 2021-03-24T17:08:42.115Z · LW(p) · GW(p)

If we succeed in colonizing another planet, preferably outside our solar system, then yeah. Otherwise, it could be a historical equivalent of... the first fish that climbed out of the ocean, realized it can't breathe, and died.

Replies from: mikkel-wilson↑ comment by MikkW (mikkel-wilson) · 2021-03-24T17:29:41.303Z · LW(p) · GW(p)

I'm quite confident that we will successfully colonize space, unless something very catastrophic happens

Replies from: Viliam↑ comment by Viliam · 2021-03-25T17:53:11.977Z · LW(p) · GW(p)

I hope you are right, but here are the things that make me pessimistic:

Seeing the solar system to the right scale. Makes me realize how the universe is a vast desert of almost-nothing, and how insane are the distances between the not-nothings.

Mars sounds like a big deal, but it is smaller than Earth. The total surface of Mars is like the land area of Earth, so successfully colonizing Mars would merely double the space inhabitable by humans. That is, unless we colonize the surface of oceans of Earth first, in which case it would only increase the total inhabitable space by 30%.

And colonizing Mars doesn't mean that now we have the space-colonization technology mastered, because compared to other planets, Mars is easy mode. Venus and Mercury, that would double the inhabitable space again... and then we have gas planets and insanely cold ice planets... and then we need to get out of the solar system, where distances are measured in light-years, which probably means centuries or millennia for us... at which moment, if we have the technology to survive in space for a few generations, we might give up living on planets entirely, and just mine them for resources.

From that perspective, colonizing Mars seems like a dead end. We need to survive in space, for generations. Which will probably be much easier if we get rid of our biology.

Yeah, it could be possible, but probably much more complicated than most of science fiction assumes.
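
The Mars area comparison earlier in this comment can be checked with a rough sketch. The area figures below are commonly cited approximate values and are an assumption added here, not numbers from the comment; the helper name `relative_gain` is hypothetical.

```python
# Approximate areas in millions of km^2. These are commonly cited
# reference values, assumed here for illustration only.
MARS_SURFACE = 144.4   # total surface of Mars
EARTH_LAND = 148.9     # land area of Earth
EARTH_TOTAL = 510.1    # land plus ocean surface of Earth

def relative_gain(new_area: float, existing_area: float) -> float:
    """Fraction by which habitable area grows when new_area is added."""
    return new_area / existing_area

gain_vs_land = relative_gain(MARS_SURFACE, EARTH_LAND)
gain_vs_total = relative_gain(MARS_SURFACE, EARTH_TOTAL)

print(f"Mars vs Earth's land:          +{gain_vs_land:.0%}")
print(f"Mars vs Earth's whole surface: +{gain_vs_total:.0%}")
```

Under these figures Mars adds a little less than one Earth's worth of land, and a bit under 30% if the ocean surface is counted as already inhabited, which roughly matches the comment's "double" and "30%".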

Replies from: mikkel-wilson↑ comment by MikkW (mikkel-wilson) · 2021-03-25T19:44:02.206Z · LW(p) · GW(p)

Thanks for the thoughts.

The main resource needed for life is light (which is abundant throughout the solar system), not land or gravity, so the sparseness of planets isn't actually a big deal.

It's also worth remembering the Moon; it's slightly harder than Mars and even smaller; but the Moon will play an important role in the Earth-Moon system, similar to what the Americas have been to the Old World in the past 400 years.

Interstellar travel is a field where we currently don't have good proof of capabilities yet, but if we can figure out how to safely travel at significant fractions of c, it shouldn't take anything more than a few decades to reach the nearest stars, quite possibly even less time than that; and even if we end up failing to expand beyond the Solar System, I'd say that's more than enough to justify calling the events coming in the next few decades a revolution on par with the Cambrian explosion and the development of life.
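
As a rough check on the "few decades" estimate, here is a minimal sketch of cruise time at a constant fraction of c. It assumes a distance of about 4.24 light-years to the nearest star (Proxima Centauri) and ignores acceleration and deceleration entirely; the function name `travel_years` and the sample speeds are illustrative choices, not anything from the comment.

```python
import math

DISTANCE_LY = 4.24  # approximate distance to Proxima Centauri (assumed value)

def travel_years(distance_ly: float, beta: float) -> tuple[float, float]:
    """Return (Earth-frame years, ship-frame years) at constant speed beta * c.

    Simplification: no acceleration or deceleration phases are modelled.
    """
    if not 0.0 < beta < 1.0:
        raise ValueError("beta must be strictly between 0 and 1")
    earth_years = distance_ly / beta
    # Time dilation: ship clocks run slow by a factor of sqrt(1 - beta^2).
    ship_years = earth_years * math.sqrt(1.0 - beta ** 2)
    return earth_years, ship_years

for beta in (0.1, 0.2, 0.5):
    earth_years, ship_years = travel_years(DISTANCE_LY, beta)
    print(f"{beta:.1f}c: {earth_years:5.1f} yr (Earth), {ship_years:5.1f} yr (ship)")
```

At a tenth of light speed the trip is on the order of four decades in this simplified model, and it shortens in proportion as the cruise speed rises.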

comment by MikkW (mikkel-wilson) · 2021-02-05T06:52:33.209Z · LW(p) · GW(p)

I currently expect a large AI boom, representing a 10x growth in world GDP to happen within the next 5 years with 80% probability, in the next 10 years with ~93% probability, and in the next 3 years with 50% probability.

I'd be happy to doublecrux with anyone whose timelines are slower

comment by MikkW (mikkel-wilson) · 2021-01-31T21:02:55.623Z · LW(p) · GW(p)

I wish the keycaps on some of the keys on my keyboard were textured - I can touch-type well enough for the alphabetic keys, but when using other keys, I often get slightly confused as to which keys are under my fingers unless I use my eyes to see what key it is. If there were textures (perhaps braille symbols) that indicated which key I was feeling, I expect that would be useful.

Replies from: clone of saturn, Raemon↑ comment by clone of saturn · 2021-02-02T04:10:15.833Z · LW(p) · GW(p)

This seems like it would be pretty easy to DIY with small drops of superglue.

↑ comment by Raemon · 2021-01-31T21:06:19.008Z · LW(p) · GW(p)

There probably exist braille keyboards you could try?

Replies from: abramdemski↑ comment by abramdemski · 2021-02-02T17:56:54.961Z · LW(p) · GW(p)

I tried this once -- I got Braille stickers designed to put on a keyboard -- but I didn't like it. Still, it would be pretty cool to learn braille this way.

Replies from: mikkel-wilson↑ comment by MikkW (mikkel-wilson) · 2021-02-02T21:43:50.660Z · LW(p) · GW(p)

This is useful data. What didn't you like about it?

Replies from: abramdemski↑ comment by abramdemski · 2021-02-03T16:50:10.009Z · LW(p) · GW(p)

The lumpy feel was aversive.

comment by MikkW (mikkel-wilson) · 2020-11-04T20:22:57.256Z · LW(p) · GW(p)

Scott Garrabrant presents Cartesian Frames as being a very mathematical idea. When I asked him about the prominence of mathematics in his sequence, he said “It’s fundamentally math; I mean, you could translate it out of math, but ultimately it comes from math”. But I have a different experience when I think about Cartesian Frames- first and foremost, my mental conception of CF is as a common sense idea, that only incidentally happens to be expressible in mathematical terms (edit: when I say "common sense" here, I don't mean that it's a well known idea - it's not, and Scott is doing good by sharing his ideas - but the idea feels similar to other ideas in the "common sense" category). I think both perspectives are valuable, but the interesting thing I want to note here is the difference in perspective that the two of us have. I hope to explore this difference in framing more later.

Replies from: Pattern

comment by MikkW (mikkel-wilson) · 2020-10-29T22:23:14.310Z · LW(p) · GW(p)

Aumann Agreement != Free Agreement

Oftentimes, I hear people talk about Aumann's Agreement Theorem as if it means that two rational, honest agents cannot be aware of disagreeing with each other on a subject, without immediately coming to agree with each other. However, this is overstating the power of Aumann Agreement. Even putting aside the unrealistic assumption of Bayesian updating, which is computationally intractable in the real world [LW · GW], as well as the (not strictly required, but valuable) non-trivial [LW(p) · GW(p)] presumption that the rationality and honesty of the agents is common knowledge [? · GW], the reasoning that Aumann provides is not instantaneous:

To illustrate Aumann's reasoning, let's say Alice and Bob are rational, honest agents capable of Bayesian updating, and have common knowledge of each other's rationality.

Alice says to Bob: "Hey, did you know pineapple pizza was invented in Canada?"

Bob: "What? No. Pineapple pizza was invented in Hawaii."

Alice: "I'm 90% confident that it was invented in Canada"