Posts

Comments

- We need not provide the strong model with access to the benchmark questions.

- Depending on the benchmark, it can be difficult or impossible to encode all the correct responses in a short string.

My reply to both your and @Chris_Leong 's comment is that you should simply use robust benchmarks on which high performance is interesting.

In the adversarial attack context, the attacker's objectives are not generally beyond the model's "capabilities."

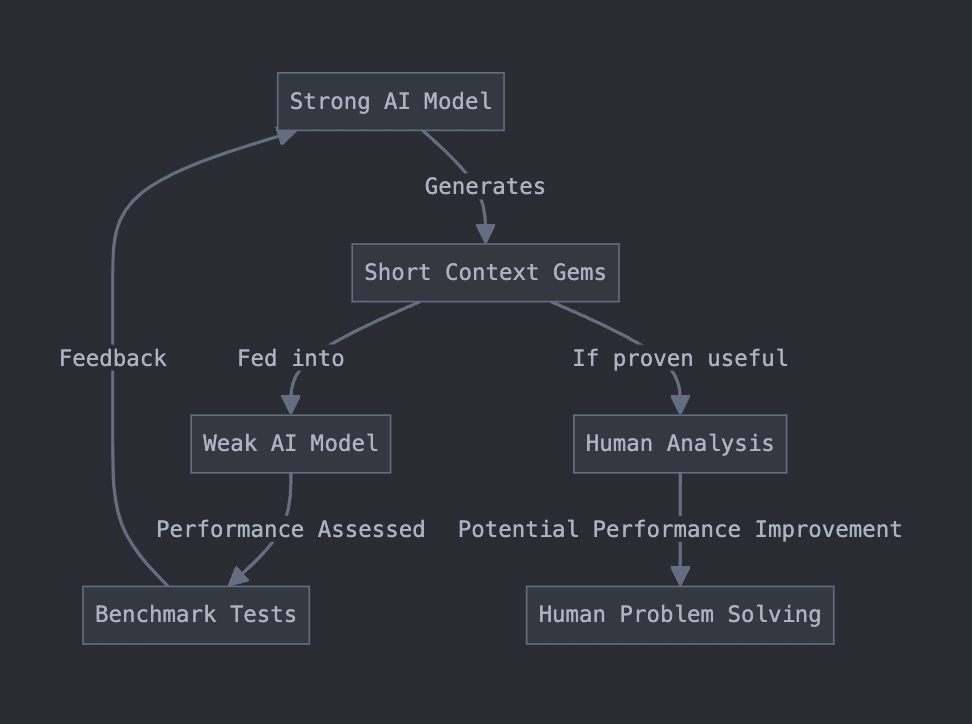

A long time ago, I worked on an idea I called "Context Gems." A friend asked me to make a short, publicly accessible writeup for it. This is a really rough writeup of the idea, based on some old notes I had lying around.

Context Gems was an attempt at coming up with a theoretical way of safely eliciting superhuman capabilities from an AI. It was a fairly direct off-shoot of e.g. OSNR. I later heard it was similar to some ideas Paul Christiano had a long time ago, like imitative generalization/learning the prior.

The goal is to get effective cognitive labor out of a superintelligent but possibly misaligned AI model without causing catastrophe. One insight is that, for a given goal, we want to achieve that goal while receiving a minimum amount of input/information from the superintelligent model. (Another way to phrase this is that we want to maximize the density of "helpful" information in the model's outputs, to reduce its opportunities for hijacking the human operators.)

Our setup is to train/use a strong (possibly superhuman) model to generate short strings ("context gems"). We intend these strings to contain some insight about the problem domain. To verify the usefulness of these short strings, we feed them into the context of a weaker model, which we can assess on some benchmarks. We may "reward" the strong model based on the performance of the weak model on some problem domain.

I had Claude make me a flow-chart.

If the gems do dramatically improve the performance of the weak model, then perhaps they contain some true insight about the problem domain. Then, if the strings are sufficiently short, we might be able to expose the string to humans without causing catastrophe, hopefully resulting in similar performance improvements for humans.
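Here is a minimal sketch of how the reward signal in this setup might be computed. Everything in it is an illustrative assumption rather than part of the original idea: the `WeakModel` interface, the `max_chars` length cap, the choice to reward the improvement over a no-gem baseline, and the toy weak model (which only adds correctly when its context mentions carrying).

```python
from typing import Callable, Sequence, Tuple

# Hypothetical interface: a weak model maps (context, question) -> answer.
WeakModel = Callable[[str, str], str]
Benchmark = Sequence[Tuple[str, str]]  # (question, correct answer) pairs


def accuracy(weak_model: WeakModel, context: str, benchmark: Benchmark) -> float:
    """Fraction of benchmark questions the weak model answers correctly."""
    correct = sum(weak_model(context, q) == a for q, a in benchmark)
    return correct / len(benchmark)


def gem_reward(gem: str, weak_model: WeakModel, benchmark: Benchmark,
               max_chars: int = 280) -> float:
    """Reward for a gem: accuracy gain of the weak model over no gem at all.

    Gems longer than max_chars (an assumed cap) earn nothing, which keeps
    the strong model's output channel narrow.
    """
    if len(gem) > max_chars:
        return 0.0
    baseline = accuracy(weak_model, "", benchmark)
    with_gem = accuracy(weak_model, gem, benchmark)
    return with_gem - baseline


def toy_weak_model(context: str, question: str) -> str:
    """Toy stand-in: adds two numbers, but forgets to carry without a hint."""
    a, b = map(int, question.split("+"))
    if "carry" in context:
        return str(a + b)
    return str((a % 10 + b % 10) % 10 + 10 * (a // 10 + b // 10))


benchmark = [("17+25", "42"), ("12+13", "25")]
reward = gem_reward("Remember to carry the tens digit.", toy_weak_model, benchmark)
print(reward)
```

Note that the strong model only ever receives the scalar reward here, so it does not need to see the benchmark questions themselves.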

Some things we might think about:

- What should be true of these "context gems"? Perhaps we want them to be short. Are there other restrictions we want to put on them?

- What are the right problem domains to select?

- If you were an adversarial/misaligned superintelligence, how might you attempt to take over the world given such a setup?

- What sort of information might actually go into these context gems?

- I'd also be interested in any empirical evidence that such a setup would work.

Thanks for the feedback!

I agree that it is possible to learn quickly without mentorship. However, I believe that for most programmers, the first "real" programming job is a source of tremendous growth. Why not have that earlier, and save more of one's youth?

Conventional advice directed at young people seems shockingly bad. I sat down to generate a list of anti-advice.

The anti-advice consists of things that I wish I had been told in high school, but that are essentially negations of conventional advice.

You may not agree with the advice given here. In fact, it is deliberately controversial. It may also not be good advice. YMMV.

- When picking between colleges, do care a lot about getting into a prestigious/selective university. Your future employers often care too.

- Care significantly less about nebulous “college fit.” Whether you’ll enjoy a particular college is determined mainly by 1, the location, and 2, the quality of your peers.

- Do not study hard and conscientiously. Instead, use your creativity to find absurd arbitrages. Internalize Thiel's main talking points and find an unusual path to victory.

- Refuse to do anything that people tell you will give you "important life skills." Certainly do not take unskilled part time work unless you need to. Instead, focus intently on developing skills that generate surplus economic value.

- If you are at all interested in a career in software (and even if you're not), get a “real” software job as quickly as possible. Real means you are mentored by a software engineer who is better at software engineering than you.

- If you’re doing things right, your school may threaten you with all manner of disciplinary action. This is mostly a sign that you’re being sufficiently ambitious.

- Do not generically seek the advice of your elders. When offered unsolicited advice, rarely take it to heart. Instead, actively seek the advice of elders who are either exceptional or unusually insightful.

Thanks for the post!

The problem was that I wasn’t really suited for mechanistic interpretability research.

Sorry if I'm prodding too deep, and feel no need to respond. I always feel a bit curious about claims such as this.

I guess I have three questions (which you don't need to answer):

- Do you have a hypothesis about the underlying reason for you being unsuited for this type of research? E.g. do you think you might be insufficiently interested/motivated, have insufficient conscientiousness or intelligence, etc.

- How confident are you that you just "aren't suited" to this type of work? To operationalize, maybe given e.g. two more years of serious effort, at what odds would you bet that you still wouldn't be very competitive at mechanistic interpretability research?

- What sort of external feedback are you getting vis a vis your suitability for this type of work? E.g. have you received feedback from Neel in this vein? (I understand that people are probably averse to giving this type of feedback, so there might be many false negatives).

Hi, do you have links to the papers/evidence?

Strong upvoted.

I think we should be wary of anchoring too hard on compelling stories/narratives.

However, as far as stories go, this vignette scores very highly for me. Will be coming back for a re-read.

dupe https://www.lesswrong.com/posts/Hna4aoMwr6Qx9rHBs/linkpost-introducing-superalignment

but a market with a probability of 17% implies that 83% of people disagree with you

Is this a typo?

What can be used to auth will be used to auth

One of the symptoms of our society's deep security inadequacy is the widespread usage of unsecure forms of authentication.

It's bad enough that there are systems which authenticate you using your birthday, SSN, or mother's maiden name by spec.

Fooling bad authentication is also an incredibly common vector for social engineering.

Anything you might have, which others seem unlikely to have (but which you may not immediately see a reason to keep secret), could be accepted by someone you implicitly trust as "authentication."

This includes:

- Company/industry jargon

- Company swag

- Certain biographical information about yourself (including information you could easily Google)

- Knowing how certain internal numbering or naming systems work (hotels seemingly assume only guests know how the rooms are numbered!)

As the worst instance of this, the best way to understand a lot of AIS research in 2022 was “hang out at lunch in Constellation”.

Is this no longer the case? If so, what changed?

Good tip!

This is a reasonable point, but I have a cached belief that frozen food is substantially less healthy than non-frozen food somehow.

This is very interesting. My guess is that this would take a lot of time to set up, but if you have eg. recommended catering providers in SFBA, I'd be very interested!

Completely fair request. I think I was a bit vague when I said "on top or around AI systems."

The point here is that I want to find techniques that seem to positively influence model behavior, which I can "staple on" to existing models without a gargantuan engineering effort.

I am especially excited about these ideas if they seem scalable or architecture-agnostic.

Here are a few examples of the kind of research I'm excited about:

- Conciseness priors on outputs (as a way to shift cognitive labor to humans)

- I think there is a reasonable story for how conciseness priors could improve alignment. This looks like the AI being more eager to use higher level explanations that make humans guess the details, avoiding using ideas that would be very hard to grasp for a human when simpler-to-human ideas would do, being honest and transparent with humans so that we can do a better job filling in the gaps, etc.

- If it is indeed the case that strong conciseness priors incentivize transparency-to-humans, then it seems worth investigating 1, how far this effect goes, 2, if there are some tweaks / clever ways to apply pressure toward conciseness that work much better than others for alignment.

- OSNR.

- I feel pretty optimistic that applying noise to the outputs of an AI system and then having humans reconstruct it yields nice alignment properties.

- However, role-playing as a misaligned AI, there were ways that I could get around this process. Mainly, this involved "cheating" the noising process using selective redundancy. I've recently been investigating how to recover from this hack via methods like paraphrasing and calculating token salience.

- Other regularizations.

- Speed priors.

- Trying to figure out what sorts of inductive biases have nice alignment properties.

- Some of Tamera's work on externalized reasoning.

- I think betting on language models scaling to ASI would not be crazy.

- Investigating how we can influence "reasoning in language" seems promising, stackable, and is plausibly the type of work that can be "stapled on" to future models.

- AI Safety via Debate

- This just seems like something we can build once we have a really advanced language model. It stacks with everything else, and it seems to have great properties.
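To make the selective-redundancy concern in the OSNR item above concrete, here is a toy sketch. It assumes a noising step that independently masks each word with probability `p_mask`. That noise model, the `[MASK]` token, and both function names are assumptions for illustration and may differ from how OSNR actually noises outputs.

```python
import random
from typing import Optional


def noise_text(text: str, p_mask: float = 0.3,
               rng: Optional[random.Random] = None,
               mask: str = "[MASK]") -> str:
    """Independently replace each whitespace-separated word with a mask token."""
    rng = rng or random.Random()
    return " ".join(mask if rng.random() < p_mask else word
                    for word in text.split())


def survival_probability(p_mask: float, copies: int) -> float:
    """Chance that at least one of `copies` repetitions of a word is unmasked."""
    return 1 - p_mask ** copies


print(noise_text("move the funds to the second account", rng=random.Random(0)))
print(survival_probability(0.3, 1))  # a detail stated once
print(survival_probability(0.3, 3))  # the same detail stated three times
```

Under this toy model, a detail stated once survives with probability 1 - p_mask, while a detail repeated k times survives with probability 1 - p_mask^k. That is the sense in which redundancy lets a model protect the details it cares about, and why something like paraphrasing, which could collapse the repeats, seems worth investigating.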

If someone were to discover a great regularizer for language models, OSNR turns out to work well and I sort out the issues around strategic redundancy, and externalized reasoning oversight turns out to be super promising, then we could just stack all three.

We could then clone the resulting model and run a debate, or stack on whatever other advances we've made by that point. I'm really excited about this sort of modularity, and I guess I'm also pretty optimistic about a few of these techniques having more bite than people may initially guess.

Recently my alignment orientation has basically been “design stackable tools on top or around AI systems which produce pressure toward increased alignment.”

I think it’s a pretty productive avenue that’s 1) harder to get lost in, and 2) might be eventually sufficient for alignment.

neuron has

I was confused by the singular "neuron."

I think the point here is that if there are some neurons which have low activation but high direct logit attribution after layernorm, then this is pretty good evidence for "smuggling."

Is my understanding here basically correct?

Shallow comment:

How are you envisioning the prevention of strategic takeovers? It seems plausible that robustly preventing strategic takeovers would also require substantial strategizing/actualizing.

The first point isn’t super central. FWIW I do expect that humans will occasionally not swap words back.

Humans should just look at the noised plan and try to convert it into a more reasonable-seeming, executable plan.

Edit: that is, without intentionally changing details.

Fair enough! For what it’s worth, I think the reconstruction is probably the more load-bearing part of the proposal.

Is your claim that the noise-borne asymmetric pressure away from treacherous plans disappears in above-human intelligences? I could see it becoming less material as intelligence increases, but the intuition should still hold in principle.

"Most paths lead to bad outcomes" is not quite right. For most (let's say human developed, but not a crux) plan specification languages, most syntactically valid plans in that language would not substantially mutate the world state when executed.

I'll begin by noting that over the course of writing this post, the brittleness of treacherous plans became significantly less central.

However, I'm still reasonably convinced that the intuition is sound. If a plan is adversarial to humans, the plan's executor will face adverse optimization pressure from humans, and adverse optimization pressure complicates error correction.

Consider the case of a sniper with a gun that is loaded with 50% blanks and 50% lethal bullets (such that the ordering of the blanks and lethals is unknown to the sniper). Let's say his goal is to kill a person on the enemy team.

If the sniper is shooting at an enemy team equipped with counter-snipers, he is highly unlikely to succeed (<50%). In fact, he is quite likely to die.

Without the counter-snipers, the fact that his gun is loaded with 50% blanks suddenly becomes less material. He could always just take another shot.

I claim that our world resembles the world with counter-snipers. The counter-snipers in the real world are humans who do not want to be permanently disempowered.
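A rough way to put numbers on the sniper analogy, under assumptions that are mine rather than part of the original argument: each shot is independently lethal with probability 0.5 (an approximation of the unknown ordering), and before each shot the counter-snipers neutralize the sniper with probability `p_neutralized`. Both parameter names are hypothetical.

```python
def success_probability(p_lethal: float = 0.5,
                        p_neutralized: float = 0.0,
                        max_shots: int = 10) -> float:
    """Probability the sniper lands a lethal shot within max_shots attempts."""
    still_trying = 1.0  # probability he is alive and has not yet succeeded
    success = 0.0
    for _ in range(max_shots):
        still_trying *= 1 - p_neutralized  # survives long enough to fire
        success += still_trying * p_lethal  # this shot is a live round
        still_trying *= 1 - p_lethal        # it was a blank; try again
    return success


no_opposition = success_probability(p_neutralized=0.0)
with_counter_snipers = success_probability(p_neutralized=0.5)
print(no_opposition, with_counter_snipers)
```

With no opposition, ten attempts succeed with probability 1 - 0.5^10, so the blanks barely matter. With a 50% chance of being neutralized before each shot, the success probability drops to roughly one third, because every blank costs him a retry he may not survive to take.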

i.e. that the problem is easily enough addressed that it can be done by firms in the interests of making a good product and/or based on even a modest amount of concern from their employees and leadership

I'm curious about how contingent this prediction is on 1, timelines and 2, rate of alignment research progress. On 2, how much of your P(no takeover) comes from expectations about future research output from ARC specifically?

If tomorrow, all alignment researchers stopped working on alignment (and went to become professional tennis players or something) and no new alignment researchers arrived, how much more pessimistic would you become about AI takeover?

Epistemic Status: First read. Moderately endorsed.

I appreciate this post and I think it's generally good for this sort of clarification to be made.

One distinction is between dying (“extinction risk”) and having a bad future (“existential risk”). I think there’s a good chance of bad futures without extinction, e.g. that AI systems take over but don’t kill everyone.

This still seems ambiguous to me. Does "dying" here mean literally everyone? Does it mean "all animals," "all mammals," "all humans," or just "most humans"? If it's all humans dying, do all humans have to be killed by the AI? Or is it permissible that (for example) the AI leaves N people alive, and N is low enough that human extinction follows at the end of these people's natural lifespan?

I think I understand your sentence to mean "literally zero humans exist X years after the deployment of the AI as a direct causal effect of the AI's deployment."

It's possible that this specific distinction is just not a big deal, but I thought it's worth noting.

I think this is probably good to just 80/20 with like a weekend of work? So that there’s a basic default action plan for what to do when someone goes “hi designated community person, I’m depressed.”

People really should try to not have depression. Depression is bad for your productivity. Being depressed for eg a year means you lose a year of time, AND it might be bad for your IQ too.

A lot of EAs get depressed or have gotten depressed. This is bad. We should intervene early to stop it.

I think that there should be someone EAs reach out to when they’re depressed (maybe this is Julia Wise?), and then they get told the ways they’re probably right and wrong so their brain can update a bit, and a reasonable action plan to get them on therapy or meds or whatever.

Strong upvoted.

I’m excited about people thinking carefully about publishing norms. I think this post existing is a sign of something healthy.

Re Neel: I think that telling junior mech interp researchers to not worry too much about this seems reasonable. As a (very) junior researcher, I appreciate people not forgetting about us in their posts :)

I'd be excited about more people posting their experiences with tutoring

Short on time. Will respond to last point.

I wrote that they are not planning to "solve alignment once and forever" before deploying first AGI that will help them actually develop alignment and other adjacent sciences.

Surely this is because alignment is hard! Surely if alignment researchers really did find the ultimate solution to alignment and present it on a silver platter, the labs would use it.

Also: An explicit part of SERI MATS’ mission is to put alumni in orgs like Redwood and Anthropic AFAICT. (To the extent your post does this,) it’s plausibly a mistake to treat SERI MATS like an independent alignment research incubator.

Epistemic status: hasty, first pass

First of all thanks for writing this.

I think this letter is “just wrong” in a number of frustrating ways.

A few points:

- “Engineering doesn’t help unless one wants to do mechanistic interpretability.” This seems incredibly wrong. Engineering disciplines provide reasonable intuitions for how to reason about complex systems. Almost all engineering disciplines require their practitioners to think concretely. Software engineering in particular also lets you run experiments incredibly quickly, which makes it harder to be wrong.

- ML theory in particular is in fact useful for reasoning about minds. This is not to say that cognitive science is not also useful. Further, being able to solve alignment in the current paradigm would mean we have excellent practice when encountering future paradigms.

- It seems ridiculous to me to confidently claim that labs won’t care to implement a solution to alignment.

I think you should’ve spent more time developing some of these obvious cruxes, before implying that SERI MATS should change its behavior based on your conclusions. Implementing these changes would obviously have some costs for SERI MATS, and I suspect that SERI MATS organizers do not share your views on a number of these cruxes.

Ordering food to go and eating it at the restaurant without a plate and utensils defeats the purpose of eating it at the restaurant

Restaurants are a quick and convenient way to get food, even if you don’t sit down and eat there. Ordering my food to-go saves me a decent amount of time and food, and also makes it frictionless to leave.

But judging by votes, it seems like people don’t find this advice very helpful. That’s fine :(

I think there might be a misunderstanding. I order food because cooking is time-consuming, not because it doesn’t have enough salt or sugar.

Maybe it’d be good if someone compiled a list of healthy restaurants available on DoorDash/Uber Eats/GrubHub in the rationalist/EA hubs?

Can’t you just combat this by drinking water?

If you plan to eat at the restaurant, you can just ask them for a box if you have food left over.

This is true at most restaurants. Unfortunately, it often takes a long time for the staff to prepare a box for you (o(5 minutes)).

A potential con is that most food needs to be refrigerated if you want to keep it safe to eat for several hours

One might simply get into the habit of putting whatever food they have in the refrigerator. I find that refrigerated food is usually not unpleasant to eat, even without heating.

Sometimes when you purchase an item, the cashier will randomly ask you if you’d like additional related items. For example, when purchasing a hamburger, you may be asked if you’d like fries.

It is usually a horrible idea to agree to these add-ons, since the cashier does not inform you of the price. I would like fries for free, but not for $100, and not even for $5.

The cashier’s decision to withhold pricing information from you should be evidence that you do not, in fact, want to agree to the deal.

Epistemic status: clumsy

An AI could also be misaligned because it acts in ways that don't pursue any consistent goal (incoherence).

It’s worth noting that this definition of incoherence seems inconsistent with VNM. E.g., a rock might satisfy the folk definition of “pursuing a consistent goal,” but fail to satisfy VNM due to lacking completeness (and by corollary due to not performing expected utility optimization over the outcome space).

Strong upvoted.

The result is surprising and raises interesting questions about the nature of coherence. Even if this turns out to be a fluke, I predict that it’d be an informative one.

I think I was deceived by the title.

I’m pretty sure that rapid capability generalization is distinct from the sharp left turn.

dedicated to them making the sharp left turn

I believe that “treacherous turn” was meant here.

Wait I’m pretty confident that this would have the exact opposite effect on me.

You can give ChatGPT the job posting and a brief description of Simon’s experiment, and then just ask them to provide critiques from a given perspective (eg. “What are some potential moral problems with this plan?”)

I clicked the link and thought it was a bad idea ex post. I think that my attempted charitable reading of the Reddit comments revealed significantly less constructive data than what would have been provided by ChatGPT.

I suspect that rationalists engaging with this form of content harms the community a non-trivial amount.

I’m a fan of this post, and I’m very glad you wrote it.

I understand feeling frustrated given the state of affairs, and I accept your apology.

Have a great day.

You don’t have an accurate picture of my beliefs, and I’m currently pessimistic about my ability to convey them to you. I’ll step out of this thread for now.