Fund me please - I Work so Hard that my Feet start Bleeding and I Need to Infiltrate University

post by Johannes C. Mayer (johannes-c-mayer) · 2024-05-18T19:53:10.838Z · LW · GW · 37 comments

Contents

- Bleeding Feet and Dedication
- Collaboration as Intelligence Enhancer
- Hardcore Gamedev University Infiltration
- Over 9000% Mean Increase
- Don't pay me, but my collaborators
- Join me
- The Costly Signal
- Research Artifacts
- On Portfolios

Thanks to Taylor Smith for copy-editing this.

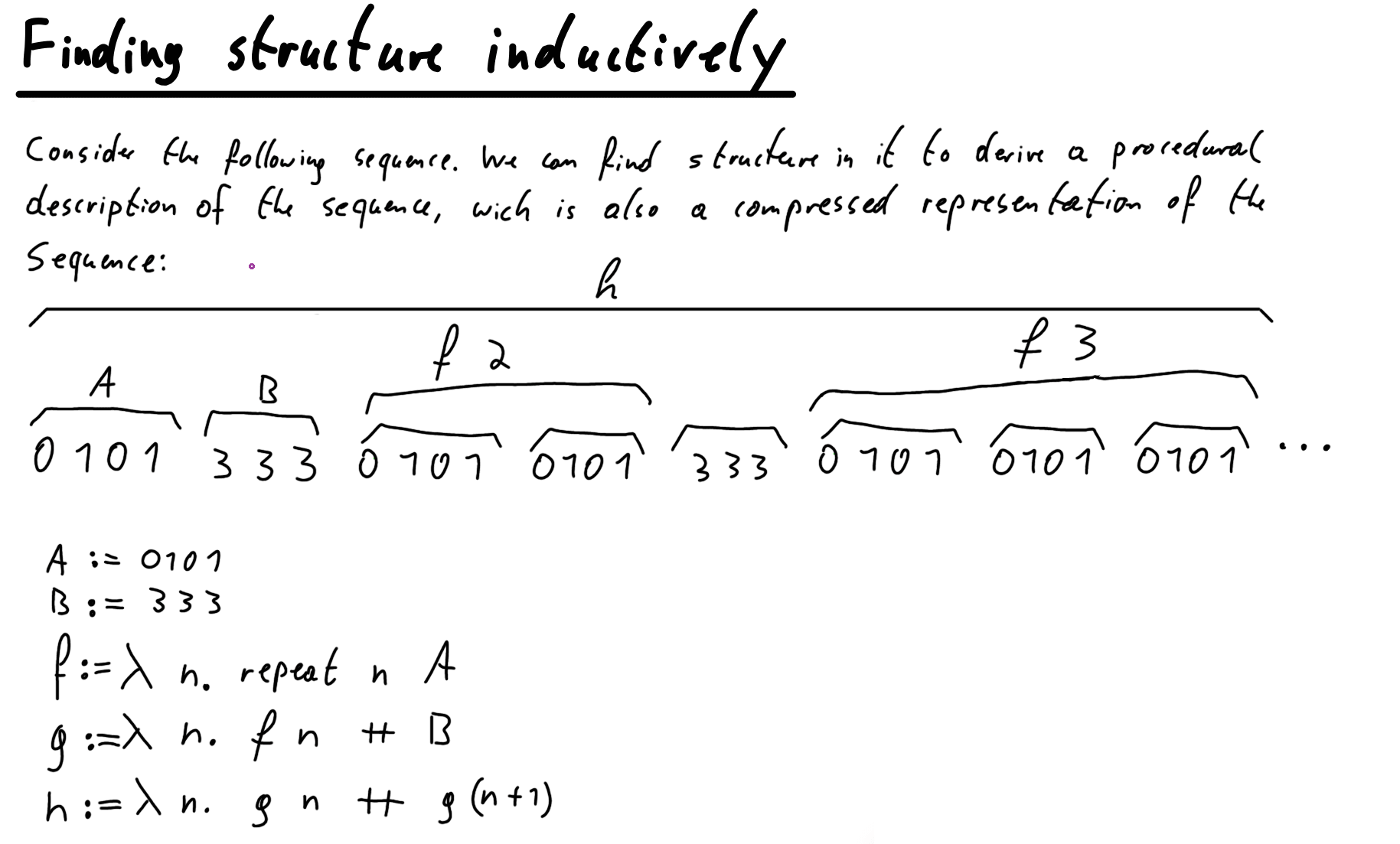

In this article, I tell some anecdotes and present some evidence in the form of research artifacts about how easy it is for me to work hard when I have collaborators. If you are in a hurry I recommend skipping to the research artifact section [LW · GW].

Bleeding Feet and Dedication

During AI Safety Camp (AISC) 2024, I was working with somebody on how to use binary search to approximate a hull that would contain a set of points when I knocked a glass off my table. It splintered into a thousand pieces all over my floor.

A normal person might stop and remove all the glass splinters. I just spent 10 seconds picking up some of the largest pieces and then decided that it would be better to push on the train of thought without interruption.

Sometime later, I forgot about the glass splinters and ended up stepping on one long enough to penetrate the callus. I prioritized working too much. A pretty nice problem to have, in my book.

[Edit 2024-05-19] The point is that this is irrational, and I have the problem of working too much. But this is a problem that's much easier to solve than "I have trouble making myself do anything". More details here [LW(p) · GW(p)].

Collaboration as Intelligence Enhancer

It was really easy for me to put in over 50 hours per week during AISC[1] (where I was a research lead). For me, AISC mainly consisted of meeting somebody 1-on-1 and solving some technical problem together. Methylphenidate helps me avoid distraction when I am on my own, though it is only my number 2 productivity enhancer. For me, the actual ADHD cure seems to be taking methylphenidate while working 1-on-1 with somebody.

But this productivity enhancement is not just about the number of hours I can put in. There is a qualitative difference. I get better at everything. Seriously. Usually, I am bad at prioritization, but when I work with somebody, it usually feels, in retrospect, like over 75% of the time was spent working on the optimal thing (given our state of knowledge at the time). I've noticed similar benefits for my abilities in writing, formalizing things, and general reasoning.

Hardcore Gamedev University Infiltration

I don't quite understand why this effect is so strong. But empirically, there is no doubt it's real. In the past, I spent 3 years making video games. This was always done in teams of 2-4 people. We would spend 8-10 hours per day, 5-6 days a week in the same room. During that time, I worked on this VR "game" where you fly through a 4D fractal (check out the video by scrolling down or on YouTube).

For that project, the university provided a powerful tower computer. In the last week of the project, my brain had the brilliant idea to just sleep in the university to save the commute. This also allowed me to access my workstation on Sunday, when the entire university was closed. On Monday, the university's cleaning personnel almost called the cops on me. But in the end, we simply agreed that I would put a sign on the door so that I wouldn't scare them to death. I also later learned that the university's security personnel did patrols with K-9s, but somehow I got lucky and they never found me.

I did have a bag with food and a toothbrush, which earned me laughs from friends. As there were no showers, on the last day of the project you could literally smell all the hard work I had put in. Worth it.

Over 9000% Mean Increase

I was always impressed by how good John Wentworth is at working. During SERI MATS, he would eat with us at Lightcone. As soon as all the high-utility conversation topics were finished, he got up – back to work.

And yet, John said that working with David Lorell 1-on-1 makes him 3-5x more productive (iirc). I think for me working with somebody is more like a 15-50x increase.

Without collaborators, I am struggling hard with my addiction to learning random technical stuff. In contrast to playing video games and the like, there are usually a bunch of decent reasons to learn about some particular technical topic. Only when I later look at the big picture do I realize — was that actually important?

Don't pay me, but my collaborators

There are multiple people from AISC who would be interested in working with me full-time if paid. Enough money to pay just one person would give me over 75% of the utility. If I need to choose only one collaborator, I'd choose Bob (fake name, but I have a real person in mind). I have almost no money, but I can live cheaply at my parents' place. So the bottleneck is getting funding for Bob.

Bob would ideally like $90k per year, though any smaller amount would still be very helpful. I would use it to work with Bob full-time until the funds run out.

I might also consider choosing somebody other than Bob who would be willing to work for less.

Join me

Of course, another way to resolve this issue is to find other collaborators that I don't need to pay right now. Check out this Google Doc if you might be interested in collaborating with me.

The Costly Signal

Research Artifacts

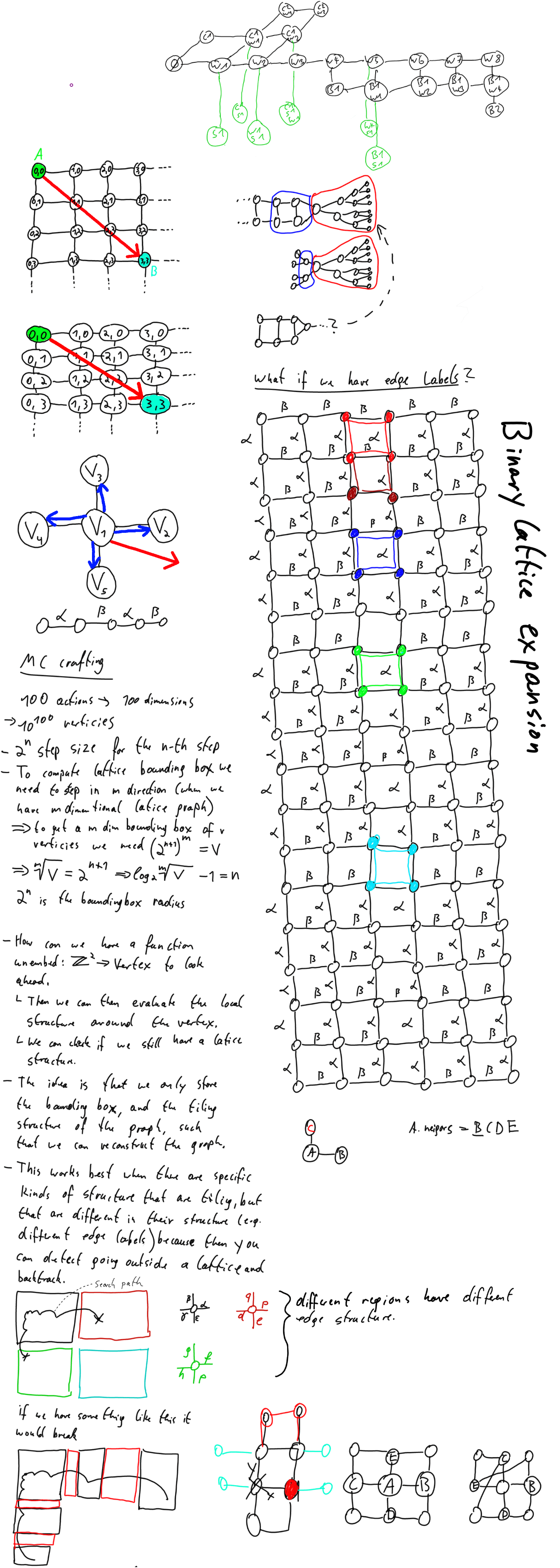

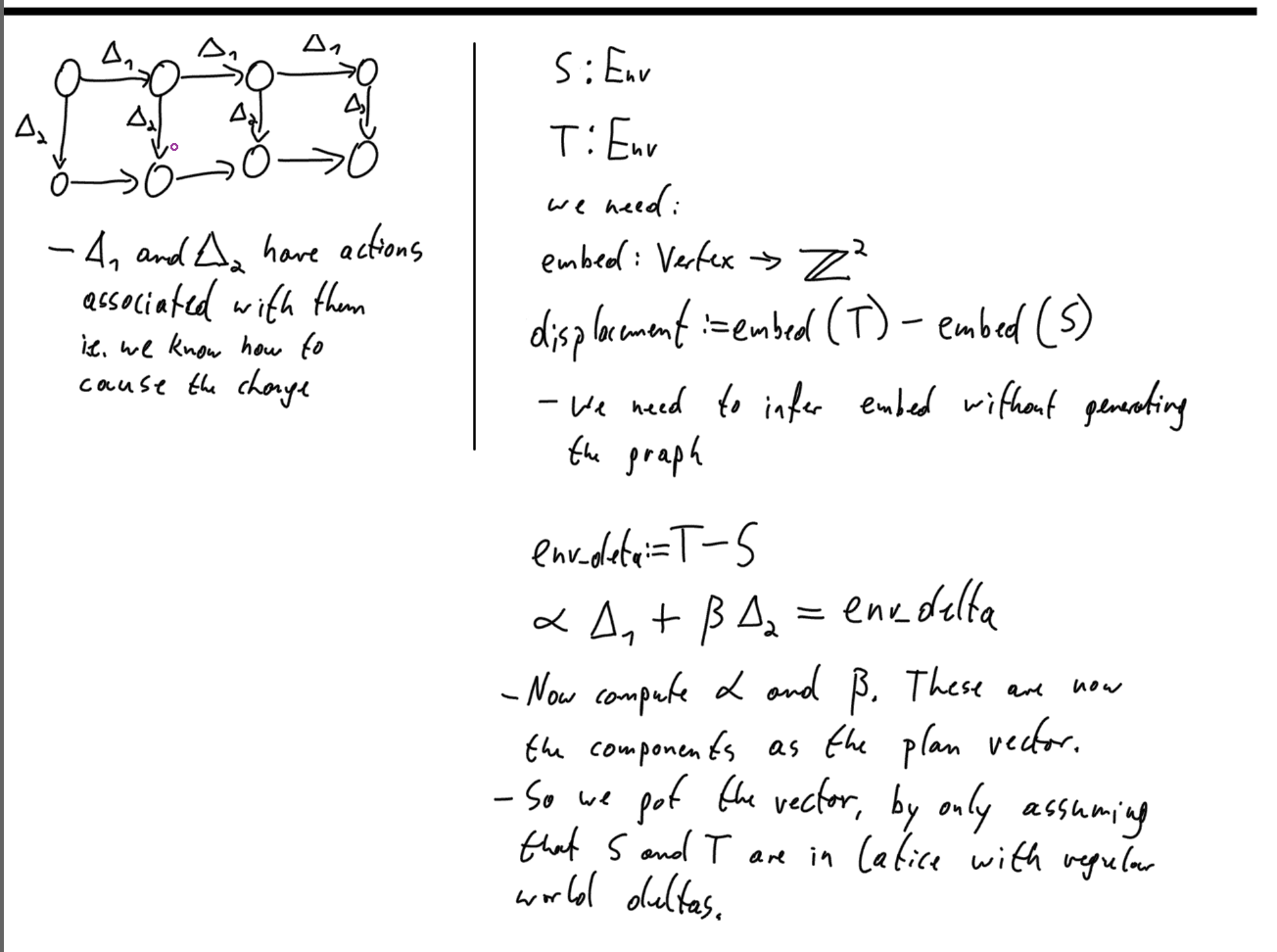

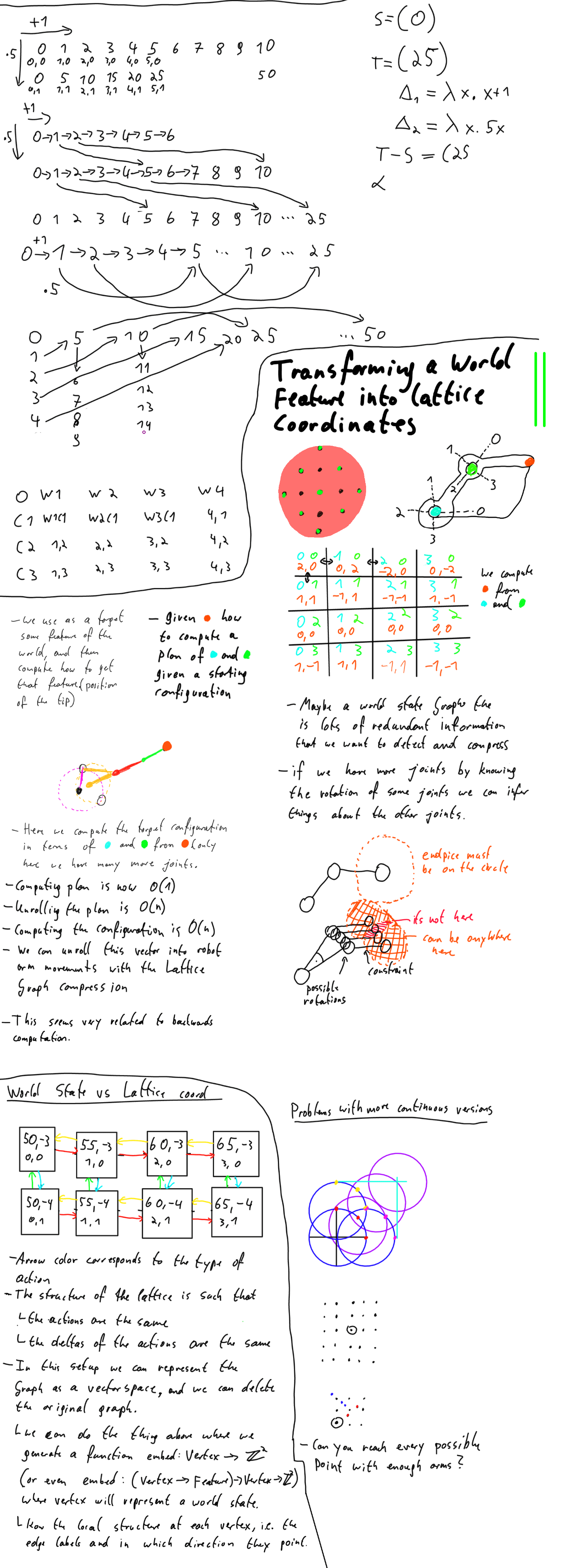

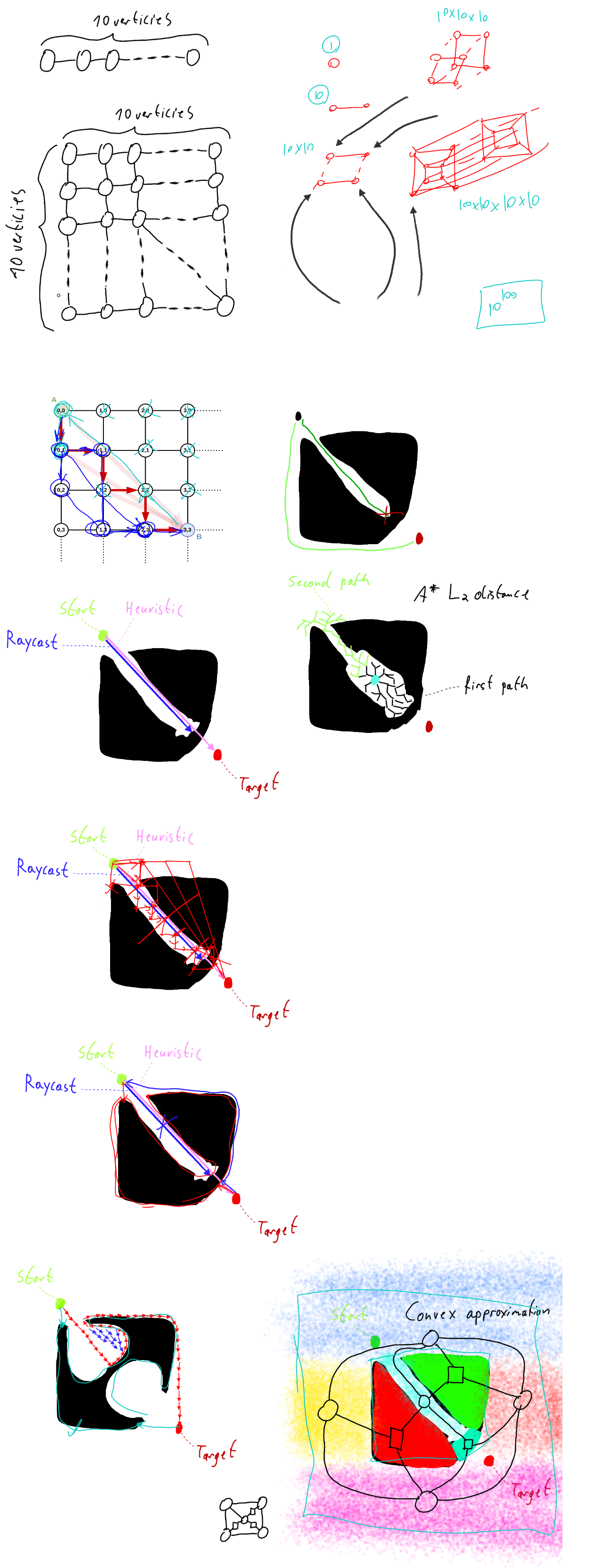

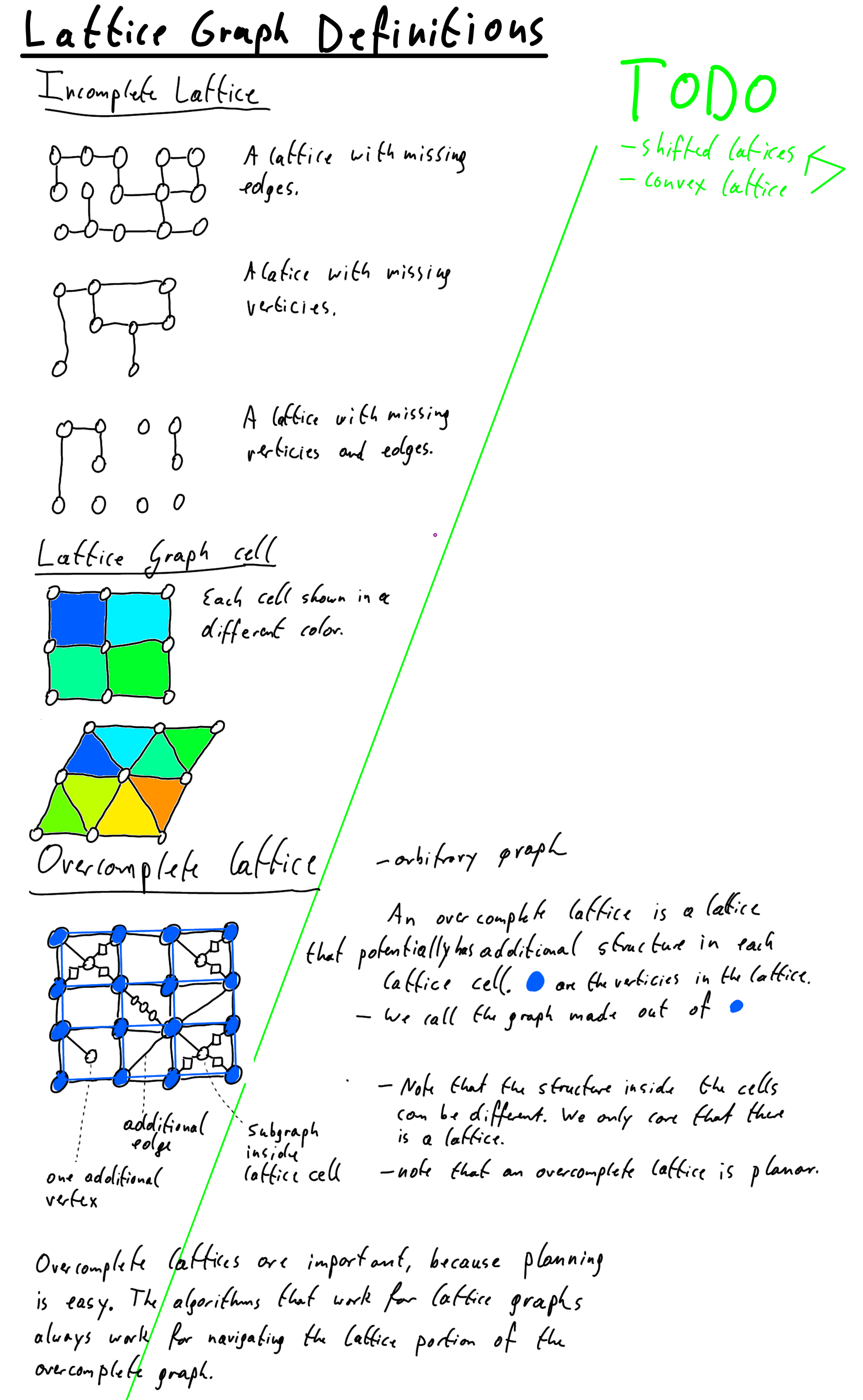

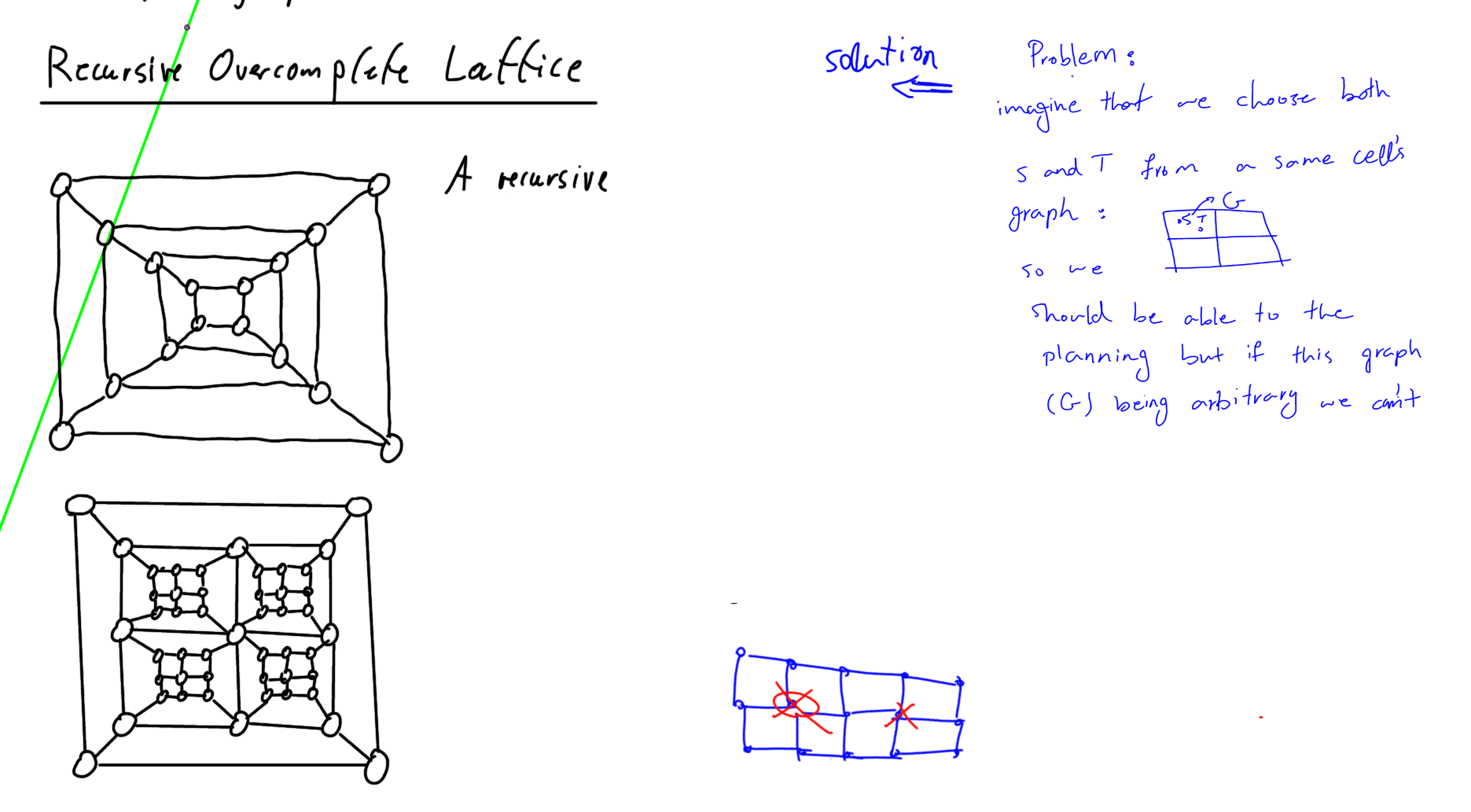

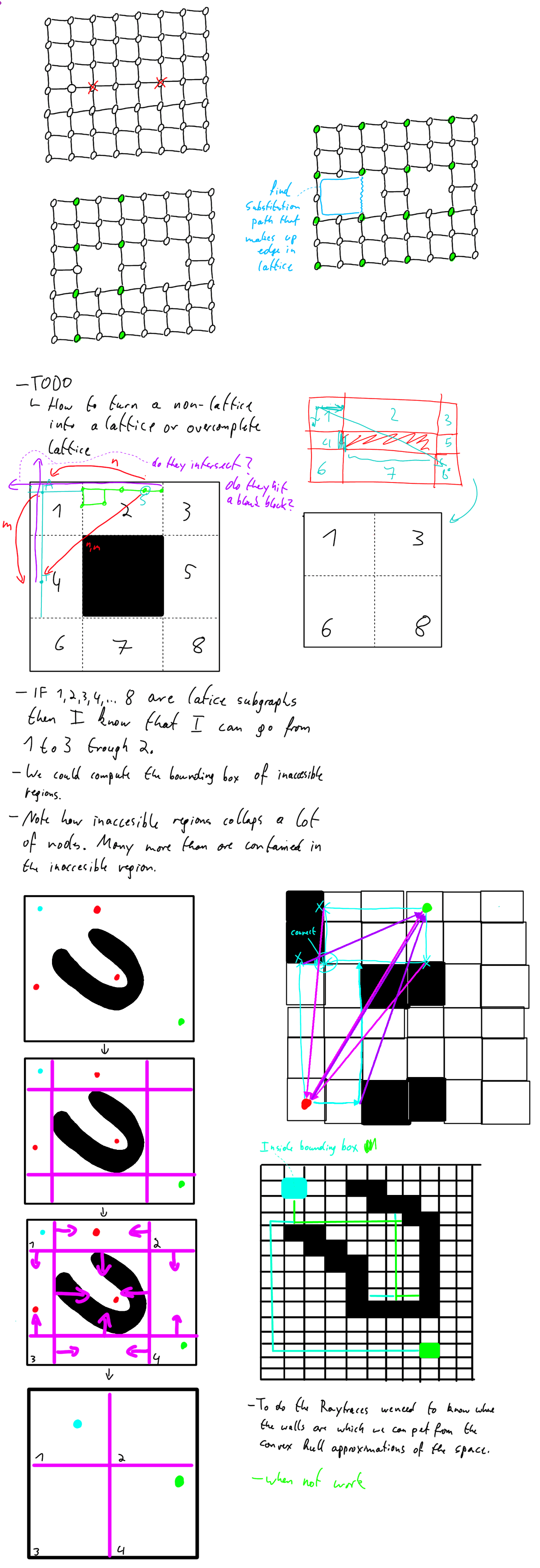

I don't have a portfolio of polished research results. But what I do have is a bunch of research artifacts, produced during AISC - i.e. various <documents/whiteboards> that were created during the process of doing research. I expect faking something like this is very hard.

Over 90% of the content in these artifacts is written down by me, though heavily influenced by whoever I was working with at the time. For a list of collaborators and a short description of what I am working on, see here.

I hope, at minimum, this demonstrates that I am able to put in the time (when I have collaborators). Optimistically, these artifacts not only show that I can put in the time (with collaborators) but also demonstrate basic technical competence.

None of these documents try to communicate the "why is this good". Rather, I hope that looking at them will make somebody think "This seems like the kind of research artifact that somebody who moves in the right direction might produce." I expect that if I were to look through similar lists made by other people, it would allow me to better evaluate them. But I am not sure to what extent other people would be able to do this, and I am highly uncertain about how well I would be able to do it myself.

In any case here is the list of artifacts:

- Here are all the pictures I took of my physical whiteboards during AISC.

- In the Science Algorithm Research Log, I write down rough notes about things I understood after figuring them out on a whiteboard. I created this doc just as AISC ended.

During AISC, we used Eraser extensively; here are a few of the boards, ordered by my intuition of what would be best for you to look at (note that some are very large and might take some time to load):

- What is a good concept

- Bottleneck Abstraction and Planning

- Various topics: Abstraction / Program Synthesis / Hull Tiling / Defining World Model / Didactic Goals

- Running A World Model Backwards to Plan

- Minecraft Crafting and World Model Inference

- Forward VS Backward Planning / Network routing and Amorphous computing

- World Model Structure / Planning Proving Isomorphism / Running the world model Backwards

- A bunch of considerations about inferring world models (Note that there are multiple spaced apart columns of content)

- A tiny board about Program Search

I also have 61 hours of video recordings of me working during AISC (for logistical reasons, not linked here).

If anybody does vaguely consider funding me, I expect that you would significantly update towards funding me after talking to me in a video chat. Empirically, people seem to think a lot more highly of me when I meet them face to face, 1-on-1. Also, I can make you understand what I am doing and why.

Alas, I think it's quite unlikely that this article will make somebody fund me. It's just that I noticed how extremely slow I am (without collaborators) to create a proper grant application.

On Portfolios

Let me briefly spend some time outlining my current models of what a good research portfolio would look like, in the hope that somebody can tell me how to improve them.

I have this game design portfolio website that showcases all the games I worked on. Making all these games took 3 years. This is a signal that's very hard to fake, and also highly legible. When you watch the video for a game, you can easily understand what's going on within a few moments. Even a person who never tried to make a game can see that, yes, that is a game, and it seems to be working.

I don't have such a convincing portfolio for doing research yet. And doing this seems to be much harder. Usually, the evaluation of such a portfolio requires technical expertise - e.g. how would you know if a particular math formalism makes sense if you don't understand the mathematical concepts out of which the formalism is constructed?

Of course, if you have a flashy demo, it's a very different situation. Imagine I had a video of an algorithm that learns Minecraft from scratch within a couple of real-time days, and then gets a diamond in less than 1 hour, without using neural networks (or any other black box optimization). It does not require much technical knowledge to see the significance of that.

But I don't have that algorithm, and if I had it, I would not want to make that publicly known. And I am unsure where the cutoff is. When would something be bad to publish? All of this complicates things.

Right now, I have a highly uncertain model which tells me that without such a concrete demo (though it probably could be significantly less impressive) people would not fund me. I expect that at least in part this is because my models about what constitutes good research are significantly different from those of most grantmakers. Multiple people have told me that projects about "Let's figure out <intelligence / how to build an AGI we understand>" are not well liked by grantmakers. I expect this is because they expect the problem to be too hard. People would first need to prove that they are actually able to make any progress at all on this extremely hard problem. And probably, they think that even if somebody could solve the problem, it would take too much time for it to make a difference.

If anybody has better insights than me into why this is the case, that would be helpful. What would make somebody happy to fund a project like "Let's figure out <intelligence / how to build an AGI we understand>"?

Lastly, I leave you with another artifact: a digital whiteboard created over maybe 10-20 hours during AISC (again, you are not supposed to try to understand all the details):

- ^

Note that for unknown reasons I need to sleep 10-12 hours. Otherwise I get a significant intelligence debuff.

37 comments


comment by Alex_Altair · 2024-05-19T01:05:38.915Z · LW(p) · GW(p)

Hey Johannes, I don't quite know how to say this, but I think this post is a red flag about your mental health. "I work so hard that I ignore broken glass and then walk on it" is not healthy.

I've been around the community a long time and have seen several people have psychotic episodes. This is exactly the kind of thing I start seeing before they do.

I'm not saying it's 90% likely, or anything. Just that it's definitely high enough for me to need to say something. Please try to seek out some resources to get you more grounded.

↑ comment by Emrik (Emrik North) · 2024-05-19T02:08:18.452Z · LW(p) · GW(p)

It's a reasonable concern to have, but I've spoken enough with him to know that he's not out of touch with reality. I do think he's out of sync with social reality, however, and as a result I also think this post is badly written and the anecdotes unwisely overemphasized. His willingness to step out of social reality in order to stay grounded with what's real, however, is exactly one of the main traits that make me hopefwl about him.

I have another friend who's bipolar and has manic episodes. My ex-step-father also had rapid-cycling BP, so I know a bit about what it looks like when somebody's manic.[1] They have larger-than-usual gaps in their ability to notice their effects on other people, and it's obvious in conversation with them. When I was in a 3-person conversation with Johannes, he was highly attuned to the emotions and wellbeing of others, so I have no reason to think he has obvious mania-like blindspots here.

But when you start tuning yourself hard to reality, you usually end up weird in a way that's distinct from the weirdness associated with mania. Onlookers who don't know the difference may fail to distinguish the underlying causes, however. ("Weirdness" is a larger cluster than "normality", but people mostly practice distinguishing between samples of normality, so weirdness all looks the same to them.)

- ^

I was also evaluated for it after an outlier depressive episode in 2021, so I got to see the diagnostic process up close. Turns out I just have recurring depressions, and I'm not bipolar.

↑ comment by [deleted] · 2024-05-19T12:04:59.947Z · LW(p) · GW(p)

I think this post is a red flag about your mental health. "I work so hard that I ignore broken glass and then walk on it" is not healthy.

Seems like a rational prioritization to me if they were in an important moment of thought and didn't want to disrupt it. (Noting of course that 'walking on it' was not intentional and was caused by forgetting it was there.)

Also, I would feel pretty bad if someone wrote a comment like this after I posted something. (Maybe it would have been better as a PM)

↑ comment by Kaj_Sotala · 2024-05-19T16:59:26.924Z · LW(p) · GW(p)

Seems like a rational prioritization to me if they were in an important moment of thought and didn't want to disrupt it. (Noting of course that 'walking on it' was not intentional and was caused by forgetting it was there.)

This sounds like you're saying that they made a rational prioritization and then, separately from that, forgot that it was there. But those two events are not separate: the forgetting-and-then-walking-on-it was a predictable consequence of the earlier decision to ignore it and instead focus on work. I think if you model the first decision as a decision to continue working and to also take on a significant risk of hurting your feet, it doesn't seem so obviously rational anymore. (Of course it could be that the thought in question was just so important that it was worth the risk. But that seems unlikely to me.)

As the OP says, a "normal person might stop and remove all the glass splinters". Most people, in thinking whether to continue working or whether to clean up the splinters, wouldn't need to explicitly consider the possibility that they might forget about the splinters and step on them later. This would be incorporated into the decision-making process implicitly and automatically, by the presence of splinters making them feel uneasy until they were cleaned up. The fact that this didn't happen suggests that the OP might also ignore other signals relevant to their well-being.

The fact that the OP seems to consider this event a virtue to highlight in the title of their post, is also a sign that they are systematically undervaluing their own well-being in a way that to me seems very worrying.

Also, I would feel pretty bad if someone wrote a comment like this after I posted something. (Maybe it would have been better as a PM)

Probably most people would. But I think it's also really important for there to be clear, public signals that the community wants people to take their well-being seriously and doesn't endorse people hurting themselves "for the sake of the cause".

The EA and rationalist communities are infamous for having lots of people burning themselves out through extreme self-sacrifice. If someone makes a post where they present the act of working until their feet start bleeding as a personal virtue, and there's no public pushback to that, then that sends the implicit signal that the community endorses that reasoning. That will then contribute to unhealthy social norms that cause people to burn themselves out. The only way to counteract that is by public comments that make it clear that the community wants people to take care of themselves, even if that makes them (temporarily) less effective.

To the OP: please prioritize your well-being first. Self-preservation is one of the instrumental convergent drives [? · GW]; you can only continue to work if you are in good shape.

↑ comment by Johannes C. Mayer (johannes-c-mayer) · 2024-05-19T17:22:13.393Z · LW(p) · GW(p)

I am probably bad at valuing my well-being correctly. That said, I don't think the initial comment made me feel bad (but maybe I am bad at noticing if it did). Rather, with this entire comment thread, I now realize that I have again failed to communicate.

Yes, I think it was irrational not to clean up the glass. That is the point I want to make. I don't think it is virtuous to have failed in this way at all. What I want to say is: "Look, I am running into failure modes because I want to work so much."

Not running into these failure modes is important, but these failure modes where you are working too much are much easier to handle than the failure mode of "I can't get myself to put in at least 50 hours of work per week consistently."

While I do think it is true that I am probably very bad in general at optimizing for my own happiness, the thing is that while I was working so hard during AISC, I was very happy most of the time. The same was true when I made these games. Most of the time I did these things because I deeply wanted to.

There were moments during AISC when I felt like I was close to burning out, but these were the minority. Mostly I was much happier than baseline. I think I usually don't manage to work as hard and as long as I'd like, and that is a major source of unhappiness for me.

So it seems that the problem Alex sees in me working very hard (that I am failing to take my happiness into account) is actually solved by me working very hard, which is quite funny.

↑ comment by Kaj_Sotala · 2024-05-19T19:32:36.303Z · LW(p) · GW(p)

Yes, I think it was irrational not to clean up the glass. That is the point I want to make. I don't think it is virtuous to have failed in this way at all. What I want to say is: "Look, I am running into failure modes because I want to work so much."

Ah! I completely missed that, that changes my interpretation significantly. Thank you for the clarification, now I'm less worried for you since it no longer sounds like you have a blindspot around it.

Not running into these failure modes is important, but these failure modes where you are working too much are much easier to handle than the failure mode of "I can't get myself to put in at least 50 hours of work per week consistently."

While I do think that it is true, I am probably very bad in general at optimizing for myself to be happy. But the thing is while I was working so hard during AISC I was most of the time very happy. The same when I made these games. Most of the time I did these things because I deeply wanted to.

It sounds right that these failure modes are easier to handle than the failure mode of not being able to do much work.

Though working too much can lead to the failure mode of "I can't get myself to put in work consistently". I'd be cautious, since it's possible to feel like you really enjoy your work... and then burn out anyway! I've heard several people report this happening to them. The way I model that is something like... there are some parts of the person [? · GW] that are obsessed with the work, and become really happy about being able to completely focus on the obsession. But meanwhile, that single-minded focus can lead to the person's other needs not being met, and eventually those unmet needs add up and cause a collapse.

I don't know how much you need to be worried about that, but it's at least good to be aware of.

↑ comment by [deleted] · 2024-05-19T17:28:19.107Z · LW(p) · GW(p)

This sounds like you're saying that they made a rational prioritization and then, separately from that, forgot that it was there

That implication wasn't intended. I agree that (for basic reasons) the probability of a small cut was higher given their choice.

Rather, the action itself seems rational to me when considering:

- That outcome seems improbable (at least if they were sitting down), though it is what actually happened in this particular timeline.

- The effects of a cut on the foot are really low (with I'd guess >99.5% probability, for an otherwise healthy person - on reflection, maybe not cumulatively low enough for the also-small payoff?), and if so ~certain to not significantly curtail progress.

That doesn't necessarily imply the policy which produced the action is rational, though. But when considering the two hypotheses: (1) OP is mentally unwell, and (2) They have some them-specific reason[1] for following a policy which outputs actions like this, I considered (2) to be a lot more probable.

Meta: This comment is (genuinely) very hard/overwhelming-feeling for me to try to reply to, for a few reasons specific to my mind, mainly about {unmarked assumptions} and {parts seeming to be for rhetorical effect}. (For that reason I'll let others discuss this instead of saying much further)

I think it's also really important for there to be clear, public signals that the community wants people to take their well-being seriously

I agree with this, but I think any 'community norm reinforcing messages' should be clearly about norms rather than framed as being about an individual, in cases like this where there's just a weak datapoint about the individual.

- ^

A simple example would be "Having introspected and tested different policies before determining that they're not at risk of burnout from the policy which gives this action."

A more complex example would be "a particular action can be irrational in isolation but downstream of a (suboptimal but human-attainable) policy which produces irrational behavior less than is typical", which (now) seems to me to be what OP was trying to show with this example given their comment

↑ comment by Mo Putera (Mo Nastri) · 2024-05-20T01:27:11.932Z · LW(p) · GW(p)

Holden advised against this:

Jog, don’t sprint. Skeptics of the “most important century” hypothesis will sometimes say things like “If you really believe this, why are you working normal amounts of hours instead of extreme amounts? Why do you have hobbies (or children, etc.) at all?” And I’ve seen a number of people with an attitude like: “THIS IS THE MOST IMPORTANT TIME IN HISTORY. I NEED TO WORK 24/7 AND FORGET ABOUT EVERYTHING ELSE. NO VACATIONS."

I think that’s a very bad idea.

Trying to reduce risks from advanced AI is, as of today, a frustrating and disorienting thing to be doing. It’s very hard to tell whether you’re being helpful (and as I’ve mentioned, many will inevitably think you’re being harmful).

I think the difference between “not mattering,” “doing some good” and “doing enormous good” comes down to how you choose the job, how good at it you are, and how good your judgment is (including what risks you’re most focused on and how you model them). Going “all in” on a particular objective seems bad on these fronts: it poses risks to open-mindedness, to mental health and to good decision-making (I am speaking from observations here, not just theory).

That is, I think it’s a bad idea to try to be 100% emotionally bought into the full stakes of the most important century - I think the stakes are just too high for that to make sense for any human being.

Instead, I think the best way to handle “the fate of humanity is at stake” is probably to find a nice job and work about as hard as you’d work at another job, rather than trying to make heroic efforts to work extra hard. (I criticized heroic efforts in general here.)

I think this basic formula (working in some job that is a good fit, while having some amount of balance in your life) is what’s behind a lot of the most important positive events in history to date, and presents possibly historically large opportunities today.

Also relevant are the takeaways from Thomas Kwa's effectiveness as a conjunction of multipliers [EA(p) · GW(p)], in particular:


- It's more important to have good judgment than to dedicate 100% of your life to an EA project. If output scales linearly with work hours, then you can hit 60% of your maximum possible impact with 60% of your work hours. But if bad judgment causes you to miss one or two multipliers, you could make less than 10% of your maximum impact. (But note that working really hard can sometimes enable multipliers-- see this comment by Mathieu Putz [EA(p) · GW(p)].)

- Aiming for the minimum of self-care is dangerous [EA · GW].
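The arithmetic behind the first takeaway can be sketched as follows. This is only a minimal illustration, assuming impact is a product of independent multipliers scaled linearly by hours worked; the function name and the multiplier values (4x, 4x, 2x) are hypothetical, chosen for illustration, and are not taken from Thomas Kwa's post.

```python
from math import prod


def relative_impact(hours_fraction, multipliers_hit, multipliers_all):
    """Impact as a fraction of the maximum, under a purely multiplicative model."""
    max_impact = prod(multipliers_all)  # full hours, every multiplier hit
    achieved = hours_fraction * prod(multipliers_hit)
    return achieved / max_impact


# Hypothetical multipliers (assumed values, not from the source).
ALL = [4.0, 4.0, 2.0]

# Good judgment (all multipliers hit) but only 60% of the hours.
full_judgment_fewer_hours = relative_impact(0.6, ALL, ALL)

# Full hours, but bad judgment misses the two 4x multipliers.
full_hours_missed_two = relative_impact(1.0, [2.0], ALL)

print(f"{full_judgment_fewer_hours:.2%}")
print(f"{full_hours_missed_two:.2%}")
```

Under these assumed numbers, cutting hours to 60% keeps 60% of the maximum impact, while missing two 4x multipliers at full hours leaves 1/16 of it, which is below the 10% mentioned in the quote.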

↑ comment by Emrik (Emrik North) · 2024-05-21T03:31:39.168Z · LW(p) · GW(p)

I think both "jog, don't sprint" and "sprint, don't jog" are too low-dimensional as advice. It's good to try to spend 100% of one's resources on doing good—sorta tautologically. What allows Johannes to work as hard as he does, I think, is not (just) that he's obsessed with the work, it's rather that he understands his own mind well enough to navigate around its limits. And that self-insight is also what enables him to aim his cognition at what matters—which is a trait I care more about than ability to work hard.

People who are good at aiming their cognition at what matters sometimes choose to purposefwly flout[1] various social expectations in order to communicate "I see through this distracting social convention and I'm willing to break it in order to aim myself more purely at what matters". Readers who haven't noticed that some of their expectations are actually superfluous or misaligned with altruistic impact, will mistakenly think the flouter has low impact-potential or is just socially incompetent.

By writing the way he does, Johannes signals that he's distancing himself from status-related putative proxies-for-effectiveness, and I think that's a hard requirement for aiming more purely at the conjunction of multipliers [EA · GW][2] that matter. But his signals will be invisible to people who aren't also highly attuned to that conjunction.

- ^

"flouting a social expectation": choosing to disregard it while being fully aware of its existence, in a not-mean-spirited way.

- ^

I think the post uses an odd definition of "conjunction", but it points to something important regardless. My term for this bag of nearby considerations is "costs of compromise [EA(p) · GW(p)]":

there are exponential costs to compromising what you are optimizing for in order to appeal to a wider variety of interests

↑ comment by mesaoptimizer · 2024-05-20T11:26:52.895Z · LW(p) · GW(p)

I think what quila is pointing at is their belief in the supposed fragility of thoughts at the edge of research questions. From that perspective I think their rebuttal is understandable, and your response completely misses the point: you can be someone who spends only four hours a day working and the rest of the time relaxing, but also care a lot about not losing the subtle and supposedly fragile threads of your thought when working.

Note: I have a different model of research thought, one that involves a systematic process towards insight, and because of that I also disagree with Johannes' decisions.

↑ comment by Johannes C. Mayer (johannes-c-mayer) · 2024-05-21T09:13:54.086Z · LW(p) · GW(p)

How much does this [LW(p) · GW(p)] line up with your model?

↑ comment by mesaoptimizer · 2024-05-21T10:33:16.790Z · LW(p) · GW(p)

Quoted from the linked comment:

Rather, I’m confident that executing my research process will over time lead to something good.

Yeah, this is a sentiment I agree with and believe. I think that it makes sense to have a cognitive process that self-corrects and systematically moves towards solving whatever problem it is faced with. In terms of computability theory, one could imagine it as an effectively computable function that you expect will return you the answer -- and the only 'obstacle' is time / compute invested.

I think being confident, i.e. not feeling hopeless in doing anything, is important. The important takeaway here is that you don’t need to be confident in any particular idea that you come up with. Instead, you can be confident in the broader picture of what you are doing, i.e. your processes.

I share your sentiment, although the causal model for it is different in my head. A generalized feeling of hopelessness is an indicator of mistaken assumptions and causal models in my head, and I use that as a cue to investigate why I feel that way. This usually results in me having hopelessness about specific paths, and a general purposefulness (for I have an idea of what I want to do next), and this is downstream of updates to my causal model that attempts to track reality as best as possible.

↑ comment by mesaoptimizer · 2024-05-21T10:35:17.982Z · LW(p) · GW(p)

Note that when I said I disagree with your decisions, I specifically meant the sort of myopia in the glass shard story -- and specifically because I believe that if your research process / cognition algorithm is fragile enough that you'd be willing to take physical damage to hold onto an inchoate thought, maybe consider making your cognition algorithm more robust.

↑ comment by Emrik (Emrik North) · 2024-05-21T17:10:33.243Z · LW(p) · GW(p)

Edit: made it a post [LW · GW].

On my current models of theoretical[1] insight-making, the beginning of an insight will necessarily—afaict—be "non-robust"/chaotic. I think it looks something like this:

1. A gradual build-up and propagation of salience wrt some tiny discrepancy between highly confident specific beliefs

    - This maybe corresponds to simultaneously-salient neural ensembles whose oscillations are inharmonic[2]

    - Or in the frame of predictive processing: unresolved prediction-error between successive layers

2. Immediately followed by a resolution of that discrepancy if the insight is successfwl

    - This maybe corresponds to the brain having found a combination of salient ensembles—including the originally inharmonic ensembles—whose oscillations are adequately harmonic.

3. Super-speculative but: If the "question phase" in step 1 was salient enough, and the compression in step 2 great enough, this causes an insight-frisson[3] and a wave of pleasant sensations across your scalp, spine, and associated sensory areas.

This maps to a fragile/chaotic high-energy "question phase" during which the violation of expectation is maximized (in order to adequately propagate the implications of the original discrepancy), followed by a compressive low-energy "solution phase" where correctness of expectation is maximized again.

In order to make this work, I think the brain is specifically designed to avoid being "robust"—though here I'm using a more narrow definition of the word than I suspect you intended. Specifically, there are several homeostatic mechanisms which make the brain-state hug the border between phase-transitions as tightly as possible. In other words, the brain maximizes dynamic correlation length between neurons[4], which is when they have the greatest ability to influence each other across long distances (aka "communicate"). This is called the critical brain hypothesis, and it suggests that good thinking is necessarily chaotic in some sense.

Another point is that insight-making is anti-inductive [LW · GW].[5] Theoretical reasoning is a frontier that's continuously being exploited based on the brain's native Value-of-Information-estimator, which means that the forests with the highest naively-calculated-VoI are also less likely to have any low-hanging fruit remaining. What this implies is that novel insights are likely to be very narrow targets—which means they could be really hard to hold on to for the brief moment between initial hunch and build-up of salience. (Concise handle: epistemic frontiers are anti-inductive.)

- ^

I scope my arguments only to "theoretical processing" (i.e. purely introspective stuff like math), and I don't think they apply to "empirical processing".

- ^

Harmonic (red) vs inharmonic (blue) waveforms. When a waveform is harmonic, efferent neural ensembles can quickly entrain to it and stay in sync with minimal metabolic cost. Alternatively, in the context of predictive processing, we can say that "top-down predictions" quickly "learn to predict" bottom-up stimuli.

- ^

I basically think musical pleasure (and aesthetic pleasure more generally) maps to 1) the build-up of expectations, 2) the violation of those expectations, and 3) the resolution of those violated expectations. Good art has to constantly balance between breaking and affirming automatic expectations. I think the aesthetic chills associated with insights are caused by the same structure as appoggiaturas—the one-period delay of an expected tone at the end of a highly predictable sequence.

- ^

I highly recommend this entire YT series!

- ^

I think the term originates from Eliezer, but Q Home has more relevant discussion on it—also I'm just a big fan of their chaoticoptimal reasoning style in general. Can recommend! 🍵

↑ comment by [deleted] · 2024-05-21T17:14:22.551Z · LW(p) · GW(p)

I think what quila is pointing at is their belief in the supposed fragility of thoughts at the edge of research questions.

Yes, thanks for noticing and making it explicit. It seems I was modelling Johannes as having a similar cognition type, since it would explain their behavior, which actually had a different cause.

I believe that if your research process / cognition algorithm is fragile enough that you'd be willing to take physical damage[1] to hold onto an inchoate thought, maybe consider making your cognition algorithm more robust.

My main response to 'try to change your cognition algorithm if it is fragile' is to remind you that human minds tend to work differently on unexpected dimensions. (Of course, you know this abstractly, and have probably read the same post about the 'typical mind fallacy'. But the suggestion seems like harmful advice to follow for some of the minds it's directed at.) (Alternatively, since you wrote 'maybe', this comment can be seen as describing a kind of case where it would be harmful.)

My highest value mental states are fragile: they are hard to re-enter at will once left, and they take some subconscious effort to preserve/cultivate. They can also feel totally immersing and overwhelming, when I manage to enter them. (I don't feel confident in my ability to qualitatively write more, as much as I would like to (maybe not here or now)).

This is analogous to Johannes' situation in a way. They believe the problem they have of working too hard is less bad to have than the standard problem of not feeling motivated to work. The specific irrational behavior their problem caused also 'stands out' more to onlookers, since it's not typical. (One wouldn't expect the top comment here if one described succumbing to akrasia; but if akrasia was rare in humans, such that the distribution over most probable causes included some worrying possibilities, we might.)

In the same way, I feel like my cognition-algorithm is in a local optimum which is better than the standard one, where one lesser problem I face is that my highest-output mental states are 'fragile', and because this is not typical it may (when read in isolation) seem like a sign of 'a negative deviation from the normal local optimum, which this person would be better off if they corrected'.

From my inside perspective, I don't want to try to avoid fragile mental states, because I think it would only be a possible change as a more general directional change away from 'how my cognition works (at its best)' towards 'how human cognition typically works'.

(And because the fragility-of-thought feels like a small problem, once I learned to work around it, e.g. learning to preserve states and augmenting with external notes. At least when compared to the problem most have of not having a chance at generating insights of a high enough quality as our situation necessitates.)

... although, if you knew of a method to reduce fragility while not reducing other things, then I'd love to try it :)

- ^

On 'willing to take physical damage ...', footnoted because it seems like a minor point - This seems like another case of avoiding the typical-mind-fallacy being important, since different minds have different pain tolerances / levels of experienced pain from a cut.

↑ comment by Mo Putera (Mo Nastri) · 2024-05-21T01:34:19.498Z · LW(p) · GW(p)

I think you're right that I missed their point, thanks for pointing it out.

I have had experiences similar to Johannes' anecdote re: ignoring broken glass to not lose fragile threads of thought; they usually entailed extended deep work periods past healthy thresholds for unclear marginal gain, so the quotes above felt personally relevant as guardrails. But also my experiences don't necessarily generalize (as your hypothetical shows).

I'd be curious to know your model, and how it compares to some of John Wentworth's posts on the same IIRC.

Replies from: mesaoptimizer↑ comment by mesaoptimizer · 2024-05-21T11:39:07.358Z · LW(p) · GW(p)

I wrote a bit about it in this comment [LW(p) · GW(p)].

I think that conceptual alignment research of the sort that Johannes is doing (and that I also am doing, which I call "deconfusion") is just really difficult. It involves skills that are not taught to people, that it seems very unlikely you'd learn by being mentored in traditional academia (including when doing theoretical CS or non-applied math PhDs), that I only started wrapping my head around after some mentorship from two MIRI researchers (that I believe I was pretty lucky to get), and even then I've spent a ridiculous amount of time by myself trying to tease out patterns to figure out a more systematic process of doing this.

Oh, and the more theoretical CS (and related math such as mathematical logic) you know, the better you probably are at this -- see how Johannes tries to create concrete models of the inchoate concepts in his head? Well, if you know relevant theoretical CS and useful math, you don't have to rebuild the mathematical scaffolding all by yourself.

I don't have a good enough model of John Wentworth's model for alignment research to understand the differences, but I don't think I learned all that much from John's writings and his training sessions that were a part of his MATS 4.0 training regimen, as compared to the stuff I described above.

Replies from: johannes-c-mayer↑ comment by Johannes C. Mayer (johannes-c-mayer) · 2024-05-22T21:39:58.579Z · LW(p) · GW(p)

What <mathematical scaffolding/theoretical CS> do you think I am recreating? What observations did you use to make this inference? (These questions are not intended to imply any subtext meaning.)

Replies from: mesaoptimizer↑ comment by mesaoptimizer · 2024-05-23T07:49:02.835Z · LW(p) · GW(p)

Well, if you know relevant theoretical CS and useful math, you don’t have to rebuild the mathematical scaffolding all by yourself.

I didn't intend to imply in my message that you have mathematical scaffolding that you are recreating, although I expect it may be likely (Pearlian causality perhaps? I've been looking into it recently and clearly knowing Bayes nets is very helpful). I specifically used "you" to imply that in general this is the case. I haven't looked very deep into the stuff you are doing, unfortunately -- it is on my to-do list.

comment by TsviBT · 2024-05-18T21:43:40.266Z · LW(p) · GW(p)

(IMO this research isn't promising as alignment research because no existing research is promising as alignment research, but is more promising as alignment research than most stuff that I'm aware of that does get funded as alignment research.)

comment by Emrik (Emrik North) · 2024-05-18T23:37:48.747Z · LW(p) · GW(p)

I strongly endorse Johannes' research approach. I've had 6 meetings with him, and have read/watched a decent chunk of his posts and YT vids. I think the project is very unlikely to work, but that's true of all projects I know of, and this one seems at least better than almost all of them. (Reality doesn't grade on a curve.)

Still, I really hope funders would consider funding the person instead of the project, since I think Johannes' potential will be severely stifled unless he has the opportunity to go "oops! I guess I ought to be doing something else instead" as soon as he discovers some intractable bottleneck wrt his current project. He's literally the person I have the most confidence in when it comes to swiftly changing path to whatever he thinks is optimal, and it would be a real shame if funding gave him an incentive to not notice reasons to pivot. (For more on this, see e.g. Steve's post [LW · GW].)

I realize my endorsement doesn't carry much weight for people who don't know me, and I don't have much general clout here, but if you're curious here's my EA forum profile [EA · GW] and twitter. On LW, I'm mostly these users {this [LW · GW], 1 [LW · GW], 2 [LW · GW], 3 [LW · GW], 4 [LW · GW]}. Some other things which I hope will nudge you to take my endorsement a bit more seriously:

- I've been working full-time on AI alignment since early 2022.

- I rarely post about my work, however, since I'm not trying to "contribute"—I'm trying to do.

- EA has been my top life-priority since 2014 (I was 21).

- I've read the Sequences in their entirety at least once. (Low bar, but worth mentioning.)

- I have no academic or professional background because I'm financially secure with disability money. This means I can spend 100% of my time following my own sense of what's optimal for me without having to take orders or produce impressive/legible artifacts.

- I think Johannes will be much more effective if he has the same freedom, and is not tied to any particular project. I really doubt anyone other than him will be better able to evaluate what the optimal use of his time is.

Edit: I should mention that Johannes hasn't prompted me to say any of this. I took notice of him due to the candor of his posts and reached out by myself a few months ago.

Replies from: nathan-helm-burger↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-05-19T00:13:41.118Z · LW(p) · GW(p)

I absolutely agree that it makes more sense to fund the person (or team) rather than the project. I think that it makes sense to evaluate a person's current best idea, or top few ideas when trying to decide whether they are worth funding.

Ideally, yes, I think it'd be great if the funders explicitly gave the person permission to pivot so long as their goal of making aligned AI remained the same.

Maybe a funder would feel better about this if they had the option to reevaluate funding the researcher after a significant pivot?

comment by Algon · 2024-05-18T22:17:16.441Z · LW(p) · GW(p)

Alas, I think it's quite unlikely that this article will make somebody fund me. It's just that I noticed how extremely slow I am (without collaborators) to create a proper grant application.

IDGI. Why don't you work w/ someone to get funding? If you're 15x more productive, then you've got a much better shot at finding/filling out grants and then getting funding for you and your partner.

EDIT:

Also, you're a game dev and hence good at programming. Surely you could work for free as an engineer at an AI alignment org or something and then shift into discussions w/ them about alignment?

↑ comment by Emrik (Emrik North) · 2024-05-18T23:41:10.456Z · LW(p) · GW(p)

Surely you could work for free as an engineer at an AI alignment org or something and then shift into discussions w/ them about alignment?

To be clear: his motivation isn't "I want to contribute to alignment research!" He's aiming to actually solve the problem. If he works as an engineer at an org, he's not pursuing his project, and he'd be approximately 0% as usefwl.

Replies from: nathan-helm-burger, Algon↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-05-18T23:55:58.228Z · LW(p) · GW(p)

So am I. So are a lot of would-be researchers. There are many people who think they have a shot at doing this. Most are probably wrong. I'm not saying an org is a good solution for him or me. It would have to be an org willing to encompass and support the things he had in mind. Same with me. I'm not sure such orgs exist for either of us.

With a convincing track-record, one can apply for funding to found or co-found a new org based on one's ideas. That's a very high bar to clear though.

The FAR AI org might be an adequate solution? They are an organization for coordinating independent researchers.

↑ comment by Algon · 2024-05-18T23:52:01.288Z · LW(p) · GW(p)

Oh, I see! That makes a lot more sense. But he should really write up/link to his project then, or his collaborator's project.

Replies from: johannes-c-mayer, Emrik North↑ comment by Johannes C. Mayer (johannes-c-mayer) · 2024-05-19T07:46:48.373Z · LW(p) · GW(p)

I have this description but it's not that good, because it's very unfocused. That's why I did not link it in the OP. The LessWrong dialog linked at the top of the post is probably the best thing in terms of describing the motivation and what the project is about at a high level.

Replies from: Algon↑ comment by Algon · 2024-05-19T20:45:50.384Z · LW(p) · GW(p)

I can't see a link to any LW dialog at the top.

Replies from: johannes-c-mayer↑ comment by Johannes C. Mayer (johannes-c-mayer) · 2024-05-19T20:46:33.401Z · LW(p) · GW(p)

At the top of this document.

Replies from: Algon↑ comment by Emrik (Emrik North) · 2024-05-19T00:07:12.302Z · LW(p) · GW(p)

He linked his extensive research log on the project above, and has made LW posts of some of their progress. That said, I don't know of any good legible summary of it. It would be good to have. I don't know if that's one of Johannes' top priorities, however. It's never obvious from the outside what somebody's top priorities ought to be.

↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-05-18T23:49:39.379Z · LW(p) · GW(p)

Yeah, so... I find myself feeling like I have some things in common with the post author's situation. I don't think "work for free at an alignment org" is really an option? I don't know about any alignment orgs offering unpaid internships. An unpaid worker isn't free for an org, you still need to coordinate them, assess their output, etc. The issues with team bloat and how much to try to integrate a volunteer are substantial.

I wish I had someone I could work with on my personal alignment agenda, but it's not necessarily easy to find someone interested enough in the same topic and trustworthy enough to want to commit to working with them.

Which brings up another issue. Research which has potential capabilities side-effects is always going to be a temptation to some degree. How can potential collaborators or grant makers trust that researchers will resist the temptation to cash in on powerful advances and also prevent the ideas from leaking? If the ideas are unsafe to publish then the ideas can't contribute piecemeal to the field of alignment research, they have to be valuable alone. That places a much higher bar for success. Which makes it seem like a riskier bet from the perspective of funders. One way to partially ameliorate this issue of trust is having orgs/companies. They can thoroughly investigate a person's competence and trustworthiness. Then the person can potentially contribute to a variety of different projects once onboarded. Management can supervise and ensure that individual contributors are acting in alignment with the company's values and rules. That's a hard thing for a grant-making institution to do. They can't afford that level of initial evaluation, much less the ongoing supervision and technical guidance. So... Yeah. Tougher problem than it seems at first glance.

comment by TeaTieAndHat (Augustin Portier) · 2024-05-19T11:34:13.352Z · LW(p) · GW(p)

"As there were no showers, on the last day of the project you could literally smell all the hard work I had put in.": that’s the point where I’d consider dragging out the history nerds. This, for instance, could have been useful :-)

comment by philip_b (crabman) · 2024-05-19T05:27:48.131Z · LW(p) · GW(p)

I would like to make a recommendation to Johannes that he should try to write and post content in a way that evokes fewer feelings of cringe in people. I know it does evoke that because I personally feel cringe.

Still, I think that there isn’t much objectively bad about this post. I’m not saying the post is very good or convincing. I think its style is super weird but that should be considered to be okay in this community. These thoughts remind me of something Scott Alexander once wrote - that sometimes he hears someone say true but low status things - and his automatic thoughts are about how the person must be stupid to say something like that, and he has to consciously remind himself that what was said is actually true.

Also, all these thoughts about this social reality sadden me a little - why oh why is AI safety such a status-concerned and “serious business” area nowadays?

Replies from: Mo Nastri, johannes-c-mayer↑ comment by Mo Putera (Mo Nastri) · 2024-05-20T09:39:42.405Z · LW(p) · GW(p)

These thoughts remind me of something Scott Alexander once wrote - that sometimes he hears someone say true but low status things - and his automatic thoughts are about how the person must be stupid to say something like that, and he has to consciously remind himself that what was said is actually true.

For anyone who's curious, this is what Scott said, in reference to him getting older – I remember it because I noticed the same in myself as I aged too:

I look back on myself now vs. ten years ago and notice I’ve become more cynical, more mellow, and more prone to believing things are complicated. For example: [list of insights] ...

All these seem like convincing insights. But most of them are in the direction of elite opinion. There’s an innocent explanation for this: intellectual elites are pretty wise, so as I grow wiser I converge to their position. But the non-innocent explanation is that I’m not getting wiser, I’m just getting better socialized. ...

I’m pretty embarrassed by Parable On Obsolete Ideologies, which I wrote eight years ago. It’s not just that it’s badly written, or that it uses an ill-advised Nazi analogy. It’s that it’s an impassioned plea to jettison everything about religion immediately, because institutions don’t matter and only raw truth-seeking is important. If I imagine myself entering that debate today, I’d be more likely to take the opposite side. But when I read Parable, there’s…nothing really wrong with it. It’s a good argument for what it argues for. I don’t have much to say against it. Ask me what changed my mind, and I’ll shrug, tell you that I guess my priorities shifted. But I can’t help noticing that eight years ago, New Atheism was really popular, and now it’s really unpopular. Or that eight years ago I was in a place where having Richard Dawkins style hyperrationalism was a useful brand, and now I’m (for some reason) in a place where having James C. Scott style intellectual conservativism is a useful brand. A lot of the “wisdom” I’ve “gained” with age is the kind of wisdom that helps me channel James C. Scott instead of Richard Dawkins; how sure am I that this is the right path?

Sometimes I can almost feel this happening. First I believe something is true, and say so. Then I realize it’s considered low-status and cringeworthy. Then I make a principled decision to avoid saying it – or say it only in a very careful way – in order to protect my reputation and ability to participate in society. Then when other people say it, I start looking down on them for being bad at public relations. Then I start looking down on them just for being low-status or cringeworthy. Finally the idea of “low-status” and “bad and wrong” have merged so fully in my mind that the idea seems terrible and ridiculous to me, and I only remember it’s true if I force myself to explicitly consider the question. And even then, it’s in a condescending way, where I feel like the people who say it’s true deserve low status for not being smart enough to remember not to say it. This is endemic, and I try to quash it when I notice it, but I don’t know how many times it’s slipped my notice all the way to the point where I can no longer remember the truth of the original statement.

This was back in 2017.

↑ comment by Johannes C. Mayer (johannes-c-mayer) · 2024-05-19T09:13:09.625Z · LW(p) · GW(p)

For which parts do you feel cringe?

Replies from: crabman↑ comment by philip_b (crabman) · 2024-05-19T10:23:37.680Z · LW(p) · GW(p)

Replied in PM.