Johannes C. Mayer's Shortform

post by Johannes C. Mayer (johannes-c-mayer) · 2021-05-23T18:30:20.427Z · 189 comments


comment by Johannes C. Mayer (johannes-c-mayer) · 2024-08-14T12:48:18.909Z

Typst is better than LaTeX

I started to use Typst, and I feel a lot more productive in it. LaTeX feels like a slug; Typst doesn't slow me down when typing math or code. That, the online collaborative editor, and the very, very fast rendering are the most important features. Here is a fuller list:

- It has an online collaborative editor.

- It compiles instantly (at least for my main 30-page document).

- The online editor has Vim support.

- It's free.

- It can syntax highlight lots of languages (e.g. LISP and Lean3 are supported).

- Its embedded scripting language is much easier to use than LaTeX macros.

- The paid version has Google Doc-style comment support.

- It's open source and you can compile documents locally, though the online editor is closed source.

Here is a comparison of the two, encoding the rules of Conway's Game of Life in logic:

LaTeX

$$
\forall i, j \in \mathbb{Z}, A_{t+1}(i, j) = \begin{cases}
0 &\text{if} \quad A_t(i, j) = 1 \land N_t(i, j) < 2 \\
1 &\text{if} \quad A_t(i, j) = 1 \land N_t(i, j) \in \{2, 3\} \\
0 &\text{if} \quad A_t(i, j) = 1 \land N_t(i, j) > 3 \\
1 &\text{if} \quad A_t(i, j) = 0 \land N_t(i, j) = 3 \\
0 &\text{otherwise}
\end{cases}
$$

Typst

$
forall i, j in ZZ, A_(t+1)(i, j) = cases(
  0 "if" A_t(i, j) = 1 and N_t(i, j) < 2 \
  1 "if" A_t(i, j) = 1 and N_t(i, j) in {2, 3} \
  0 "if" A_t(i, j) = 1 and N_t(i, j) > 3 \
  1 "if" A_t(i, j) = 0 and N_t(i, j) = 3 \
  0 "otherwise")
$
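Read as an update rule, the case split says a cell is alive at t+1 exactly when it survives (alive with N_t in {2, 3}) or is born (dead with N_t = 3); every other case yields 0. Here is a minimal Python sketch of that rule. It assumes, as in standard Conway's Game of Life (the formula itself leaves N_t undefined), that N_t(i, j) counts the live cells among the eight neighbors of (i, j). Storing A_t as a set of live coordinates lets it range over all of ℤ × ℤ; the names `step`, `Cell`, and `NEIGHBOR_OFFSETS` are illustrative, not from any library.

```python
from collections import Counter

# A_t is stored as the set of live cells: A_t(i, j) = 1 iff (i, j) is in the set.
# A set can describe any finite pattern on the unbounded grid Z x Z.
Cell = tuple[int, int]

# Offsets of the eight neighbors counted by N_t (assumed standard Moore neighborhood).
NEIGHBOR_OFFSETS = [(di, dj) for di in (-1, 0, 1) for dj in (-1, 0, 1) if (di, dj) != (0, 0)]


def step(alive: set[Cell]) -> set[Cell]:
    """Return A_{t+1} given A_t, following the case split above."""
    # N_t(i, j) for every cell with at least one live neighbor; all others have N_t = 0.
    neighbor_counts = Counter(
        (i + di, j + dj) for (i, j) in alive for (di, dj) in NEIGHBOR_OFFSETS
    )
    next_alive: set[Cell] = set()
    for cell, n in neighbor_counts.items():
        if cell in alive and n in (2, 3):
            next_alive.add(cell)  # A_t = 1 and N_t in {2, 3}: survives
        elif cell not in alive and n == 3:
            next_alive.add(cell)  # A_t = 0 and N_t = 3: born
    # Every remaining cell (N_t < 2, N_t > 3, or "otherwise") maps to 0.
    return next_alive


if __name__ == "__main__":
    blinker = {(0, -1), (0, 0), (0, 1)}
    print(sorted(step(blinker)))  # [(-1, 0), (0, 0), (1, 0)]
```

Live cells with no live neighbors never appear in `neighbor_counts`, which is fine because the rule sends them to 0 anyway.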

Typst in Emacs Org Mode

Here is some Elisp that treats LaTeX fragments in Emacs Org mode as Typst math when exporting to HTML (they are rendered to SVG images and embedded):

;;;; Typst Exporter
;;; This exporter requires that you have inkscape and typst in your path.
;;; Call org-html-export-to-html-with-typst
;;; TODO
;;; - Error if inkscape or typst is not installed.
;;; - Make it such that it shows up in the org-dispatch exporter and we can
;;;   automatically not export only to output.html.
;;; - Automatically set up the HTML header, and possibly also automatically start the server as described in: [[id:d9f72e91-7e8d-426d-af46-037378bc9b15][Setting up org-typst-html-exporter]]
;;; - Make it such that the temporary buffers are deleted after use.

(require 'org)
(require 'ox-html) ; Make sure the HTML backend is loaded

(defun spawn-trim-svg (svg-file-path output-file-path)
  (start-process svg-file-path
                 nil
                 "inkscape"
                 svg-file-path
                 "--export-area-drawing"
                 "--export-plain-svg"
                 (format "--export-filename=%s" output-file-path)))

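(defun correct-dollar-sings (typst-src)
  "Rewrite Org's $$...$$ display math into Typst's block form $ ... $."
  ;; Typst shows an equation as a block when there is whitespace right after
  ;; the opening $ and right before the closing $.
  (replace-regexp-in-string "\\$\\$\\'" " $"      ; closing $$ at end of string -> " $"
                            (replace-regexp-in-string "\\`\\$\\$" "$ " ; opening $$ -> "$ "
                                                      typst-src)))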

(defun math-block-p (typst-src)
  "Return non-nil if TYPST-SRC is a $$...$$ display-math fragment."
  (string-match "\\`\\$\\$\\(\\(?:.\\|\n\\)*?\\)\\$\\$\\'" typst-src))

(defun html-image-centered (image-path)
  (format "<div style=\"display: flex; justify-content: center; align-items: center;\">\n<img src=\"%s\" alt=\"Centered Image\">\n</div>" image-path))

(defun html-image-inline (image-path)
  (format " <img hspace=3px src=\"%s\"> " image-path))

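(defun spawn-render-typst (file-format input-file output-file)
  ;; Synchronous (call-process), so the SVG is fully written before
  ;; inkscape is started on it in the next step.
  (call-process "typst" nil nil nil "compile" "-f" file-format input-file output-file))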

(defun generate-typst-buffer (typst-source)
  "Given typst-source code, make a buffer with this code and necessary preamble."
  (let ((buffer (generate-new-buffer (generate-new-buffer-name "tmp-typst-source-buffer"))))
    (with-current-buffer buffer
      (insert "#set text(16pt)\n")
      (insert "#show math.equation: set text(14pt)\n")
      (insert "#set page(width: auto, height: auto)\n")
      (insert typst-source))
    buffer))

(defun embed-math (is-math-block typst-image-path)
  (if is-math-block
      (html-image-centered typst-image-path)
    (html-image-inline typst-image-path)))

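(defun generate-math-image (output-path typst-source-file)
  "Render TYPST-SOURCE-FILE to SVG with typst, then crop it into OUTPUT-PATH."
  (let ((raw-typst-render-output (make-temp-file "my-temp-file-2" nil ".svg")))
    (make-directory (file-name-directory output-path) t) ; create ./typst-svg/ if missing
    (spawn-render-typst "svg" typst-source-file raw-typst-render-output)
    (spawn-trim-svg raw-typst-render-output output-path)))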

(defun my-typst-math (latex-fragment contents info)
  ;; Extract LaTeX source from the fragment's plist
  (let* ((typst-source-raw (org-element-property :value latex-fragment))
         (is-math-block (math-block-p typst-source-raw))
         (typst-source (correct-dollar-sings typst-source-raw))
         (file-format "svg") ;; This is the only supported format.
         (typst-image-dir "./typst-svg")
         (typst-buffer (generate-typst-buffer typst-source)) ; buffer of full typst code to render
         (typst-source-file (make-temp-file "my-temp-file-1" nil ".typ"))
         ;; Name is unique for every typst source we render to enable caching.
         (typst-image-path (concat typst-image-dir "/"
                                   (secure-hash 'sha256 (with-current-buffer typst-buffer (buffer-string)))
                                   "." file-format)))
    ;; Only render if necessary
    (unless (file-exists-p typst-image-path)
      (message "Rendering: %s" typst-source)
      ;; Write the typst code to a file
      (with-current-buffer typst-buffer
        (write-region (point-min) (point-max) typst-source-file))
      (generate-math-image typst-image-path typst-source-file))
    (kill-buffer typst-buffer)
    (embed-math is-math-block typst-image-path)))

(org-export-define-derived-backend 'my-html 'html
  :translate-alist '((latex-fragment . my-typst-math))
  :menu-entry
  '(?M "Export to My HTML"
       ((?h "To HTML file" org-html-export-to-html-with-typst))))

;; Export the current Org file to <name>.html using the `my-html' backend.
(defun org-html-export-to-html-with-typst (&optional async subtreep visible-only body-only ext-plist)
  (interactive)
  (let* ((org-file (buffer-file-name (window-buffer (minibuffer-selected-window))))
         (html-output-name (concat (file-name-sans-extension org-file) ".html")))
    (org-export-to-file 'my-html html-output-name
      async subtreep visible-only body-only ext-plist)))

(setq org-export-backends (remove 'html org-export-backends))
(add-to-list 'org-export-backends 'my-html)

Simply eval this code and then call org-html-export-to-html-with-typst.

↑ comment by Adele Lopez (adele-lopez-1) · 2024-08-18T20:49:30.904Z

Thanks for the rec! I've been trying it out for the last few days, and it does seem to have noticeably less friction compared to LaTeX.

comment by Johannes C. Mayer (johannes-c-mayer) · 2023-09-03T13:00:30.389Z

I have been taking bupropion for two weeks now. It's an atypical antidepressant that, compared to other antidepressants like SSRIs, works more like a stimulant such as methylphenidate.

So far my experience has been very positive. Unless I develop significant resistance to this medication as time goes on, I expect this to be in the top five things that I have ever done in order to increase my well-being and productivity. It does not have any annoying side effects for me. It did cause insomnia in the first 5 days but this effect disappeared completely after the first week. It was also very easy and fast to get a prescription (in Germany). It's not available in the UK or Australia iirc.

Therefore, if you are even slightly depressed sometimes, I tentatively recommend that you read this document.

For me it was especially useful because it helped in 3 ways:

- It makes me less depressed (it works very well for this; that is what it is prescribed for, after all).

- It makes me less fatigued (I had some chronic fatigue before. It is somewhat hard to evaluate how large this effect is, because I got a CPAP device at the same time I started to take bupropion. But there seems to be a noticeable difference between before and after I take the bupropion.)

- It slightly lessens ADHD symptoms (this is mainly useful for me right now because it takes forever to get a prescription for ADHD medication, unless I put a lot more time into getting one faster).

It might even make sense to think about this if you are experiencing any subset of these problems.

↑ comment by Elizabeth (pktechgirl) · 2023-09-05T02:02:35.541Z

Heads up that for many people the first few weeks are the best they ever get on Wellbutrin, and it will eventually settle at somewhere like 70% of that peak. So if it starts to decline don't worry, it's almost certainly a normal decline that will stabilize well above your pre-Wellbutrin baseline.

↑ comment by Mir (mir-anomaly) · 2023-09-19T12:34:17.419Z

I highly recommend trying to get a prescription for something like Adderall (or dextroamphetamine, lisdexamphetamine) if your doctor is willing to diagnose you with ADHD. Just go off it if it doesn't work, but it seems likely that it will, given your response to bupropion. Some vague reasons for recommending it are:

- Amphetamines affect dopamine (& norepinephrine) via more than just reuptake-inhibition. I'm not sure yet which mechanisms drive desensitization; it just seems tentatively better to spread the attack vectors out.

- I've mostly forgotten the reasons I suggest amphetamines over methylphenidate, but at least they have a stronger effect on egosyntonic behaviour (/executive function or whatever you want to call it) than bupropion.

Furthermore, if you're on amphetamines/methylphenidate, I would recommend sort of using it strategically. I only take them on days I know I plan to be productive, and have hope that they enable me to be productive. If I ever take them on a day I fail to motivate myself, it weakens the semiotic/narrative signal for my brain to switch into a mode where it expects productivity & silences competing motivations. Plus, if I know I mostly won't be productive for a while (e.g. vacation or something), I go off them to reset desensitisation (usually resets a long way within a week it seems).

I've been on lisdexamphetamine for 1.5 years, and it still produces a very clear effect. I've had depression and, turns out, ADHD, for most of my life. People who have no dysregulation of dopamine will probably have negative effects from too much upregulation (e.g. reduced mental noise/creativity, inability to zoom out from activities and reprioritise).

↑ comment by Johannes C. Mayer (johannes-c-mayer) · 2023-09-30T20:40:44.987Z

I watched a video where a doctor said that ADHD does not give you any benefit. I think I am a lot more creative than other people. Specifically a lot more creative than people who are as intelligent as I am. I can't rule out that this is tightly linked to ADHD.

As you mention that stimulants can reduce "mental noise/creativity" I am curious what your experience is with this.

To be clear, I think making your mind less noisy and improving executive function is a very very useful tool. But maybe it is a mistake to use amphetamines as the main signal for being productive. Maybe it would be better to have some days where you are productive off amphetamines, because that might allow you to do qualitatively different work. E.g. do some ideation and exploration, and then use the amphetamines to dive deeper into what seems promising.

↑ comment by Mir (mir-anomaly) · 2023-10-02T00:09:31.315Z

Specifically a lot more creative than people who are as intelligent as I am.

Having read a few of your posts, I think you're correct about this. I believe in your general approach!

As you mention that stimulants can reduce "mental noise/creativity" I am curious what your experience is with this.

When I first started taking them, it revealed to me that I'd never known what it felt like to be able to think a thought through. Metaphorically, I imagine it sorta like being born with COPD and never realising what it feels like to fill my lungs with air. But I've probably always had a severe deficiency of whatever the stimulants are correcting for; and others who're doing just fine on that front may not share my experience.

I take stimulants in the morning, and I'm soon enthusiastic about thinking deeply about ideas. I become more creative because the relevant threads-of-thought have some room to build upon themselves, probably because my mind is now able to mute the noise and non-relevant threads. When on stimulants, I'm much more likely to get lost down rabbit-holes during research, and often don't catch myself before hours have passed. The lack of oomph I feel when off stimulants helps me prioritise only the most essential bits, and it's easier to not overdo stuff—though mostly by virtue of being less capable of doing stuff.

Slightly relevant fun RCTs:

- Alcohol concentration (sorta the opposite of stims if you squint) of 0.069-0.075 BAC seems to enhance performance on Remote Associates Tests (i.e. semantic metaphors)

- "On average, intoxicated participants solved significantly more RAT problems (M = .58, SD = .13) than their sober counterparts (M = .42, SD = .16), t(38) = 3.43, p = .001, d = 1.08. Interestingly, this increase in solution success was accompanied by a decrease in time to correct solution for intoxicated individuals (M = 11.54 s, SD = 3.75) compared to sober controls (M=15.24s, SD =5.57), t(38) = 2.47, p = .02, d = .78."

- Uncorking the muse: Alcohol intoxication facilitates creative problem solving. Consciousness and Cognition, 21(1), 487–493. doi:10.1016/j.concog.2012.01.002

- Subjects who got RAT questions correct while intoxicated also reported feeling like their solution arrived "all at once" and were "more like insights" as opposed to deliberate/analytical.


- Inverted-U–Shaped Dopamine Actions on Human Working Memory and Cognitive Control. Biological Psychiatry, 69(12), e113–e125. doi:10.1016/j.biopsych.2011.03.028

- "Inverted U-shape" referring to the idea that too much dopamine is detrimental to performance.
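The two effect sizes in the quoted alcohol study can be cross-checked against their t statistics using the standard conversion for an independent-samples t test. This is a sketch that assumes two equal groups of 20: t(38) implies 40 participants in total, but the even split is an assumption.

$$
d = t\sqrt{\frac{1}{n_1} + \frac{1}{n_2}}, \qquad
d_{\text{solved}} \approx 3.43\sqrt{\tfrac{1}{20} + \tfrac{1}{20}} \approx 1.08, \qquad
d_{\text{time}} \approx 2.47\sqrt{\tfrac{1}{20} + \tfrac{1}{20}} \approx 0.78
$$

Under that assumption, both values agree with the reported d = 1.08 and d = .78.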

↑ comment by Johannes C. Mayer (johannes-c-mayer) · 2023-10-02T18:05:39.817Z

The lack of oomph I feel when off stimulants helps me prioritize only the most essential bits.

That is very interesting. I think I have a tendency to get hyperfocused on things even when not on stimulants, but most of the time it is on the wrong thing. E.g. once I read the entire Computer Craft Wiki for 2-3 days without really doing anything else. I was literally addicted to it. The same happens when I code.

Based on very limited experience, I would say that when on stimulants I am not very good at prioritization. Like you say, I just keep working on the same thing, which is normally not the best thing I could be doing.

When not on stimulants I am just as terrible at prioritization. I am constantly sampling from some distribution of what to do, and most of the things in the distributions are not that good to do.

Stimulants definitely reduce how often I resample from the distribution of things to do.

When on psychedelics (I lived in the Netherlands for 1 year, where you can legally buy magic truffles) I sometimes get really good at prioritization, but sometimes get lost in very strange trains of thought. Sometimes these strange trains of thought are very useful. Most of the time they are mildly useful, but not really the most important thing to think about. Sometimes they are clinically insane, though I have always realized afterward that they were.

Some person on Reddit says that alcohol makes you forget things:

... the bizarre finding is that studies showed the students who took the alcohol who performed equally as well on the tests at the beginning had forgotten almost half of the equations&formulas after a week as the no drinkers.

comment by Johannes C. Mayer (johannes-c-mayer) · 2023-08-23T14:10:51.188Z

Arrogance VS Accurate Description

I know what it feels like to be arrogant. I was arrogant in the past. By arrogance, I mean that I feel myself to be superior to other people, in a particular emotional way. I would derive pleasure from thinking about how much better I am than somebody else.

I would talk with friends about other people in a subtly derogatory way. I think it was these past friends who made me arrogant in this way: I copied their behavior without realizing it.

All of this seems very bad to me. I think doing such a thing is just overall harmful to myself, specifically to potential future relationships that I close off before they have a chance to happen.

So arrogance is bad, and people disliking arrogance is probably a good thing. However, this leads to a different conundrum. Sometimes I just want to describe reality, and I might say things like "I'm a really good game designer", or "I am within the 1000 best Alignment researchers, probably the best 100". I am way better at designing games than most people. When I say this, my mind does not take the stance where I would put myself over other people. And it doesn't make me feel really good when I say it.

Now, maybe sometimes there are still hints of arrogance in my mind when making statements like that. But I genuinely think it's mostly not there. Still, people interpret this in exactly the same way. They perceive it as arrogance, even though the internal mechanisms in my brain that make me say these things are, I think, entirely different. One is an adaptation for exploiting social dynamics to increase your own standing, while the other is simply stating my current best guess of what reality is actually like.

Once a person told me that they think Eliezer is really arrogant. Maybe he is running into the same problem.

↑ comment by Viliam · 2023-08-24T10:35:05.653Z

Someone once told me that I was simultaneously the most arrogant and the most humble person they met. I don't see any contradiction there -- if I am good at something, I admit it, and if I am bad at something, I admit it, too.

Seems like most people avoid both, and prefer to appear mediocre. Makes sense: too bad makes other people laugh at you, too good makes other people hate you; both are harmful.

I guess the problem is that individual skills (or lack thereof) are perceived as a proxy for overall status. Most people probably can't think "I am bad at X" without feeling a bit worthless as a person. Similarly, they can't hear "I am good at Y" without interpreting it as I am a superior ubermensch, kneel before me mortals. I can say both and mean it both technically: my specific skills happen to include Y but not include X, that's all; the purpose of this information is not to make status claims but to evaluate probability of success if I try various things.

I think the usual strategy is to provide credentials. Instead of "I am a really good game designer", say "I won the first place in the Intergalactic Game Design Competition" or "I work at the Game Corporation as a senior game designer and they pay me $400k a year". Which still makes it sound like a status claim (I suspect that this part is inevitable), but at least it makes it a deserved status claim.

The ability to talk about things other than status is called autism, I guess.

comment by Johannes C. Mayer (johannes-c-mayer) · 2024-07-17T13:23:08.156Z

Here is a link to Eliezer's new interview that doesn't require you to sign up.

I am pretty sure this is completely legal, as it just links directly to the file on their own server.

comment by Johannes C. Mayer (johannes-c-mayer) · 2023-06-22T06:57:38.973Z

I dislike making fun of somebody's ignorance

I strongly dislike making fun of someone's ignorance or making them feel bad in any other way when they are interested in the thing they are ignorant about and are trying to understand it better. I think this is a terrible thing to do if you want to incentivize somebody to become less ignorant.

In fact, making somebody feel bad in this way incentivizes the opposite. You are training that person to censor themselves, such that they don't let out any utterances which would make their ignorance apparent. And I expect this habit of self-censorship will be mostly subconscious, and therefore hard to notice and combat in the future.

Once you evade talking or even thinking about things that you don't know well, it is much less likely that you will manage to fill these gaps in your knowledge. Talking about your ignorance is usually a good way to destroy it, especially when talking to a person who is less ignorant than you on a particular topic.

The worst version of this is when you are playing the status game, where you shame other people who are less knowledgeable about some topic than you, in order to highlight just how much smarter you must be. Don't let this evil unbidden impulse sneak up on you. Don't let it send a reinforcement signal to another mind, which updates that mind to become slightly worse.

↑ comment by Dagon · 2023-06-22T15:14:52.757Z

It's interesting to explore the limits of this intuition. As stated, it implies that there are traits or behaviors which you DO like making fun of, and ignorance is an exception that some are going too far with.

Personally, I sometimes engage in status games. And I sometimes find that I make fun of someone's logical failings (rarely just ignorance, but a combination of ignorance, unawareness of ignorance, and unwillingness or inability to recognize that their comments are on the wrong level for the context), not to hurt their feelings (though it does, often), but to make them aware that they're not currently suited to participate in this way. Ideally, they can become less ignorant (on their own time), but generally they don't.

I often (also or instead) offer resources and advice on how to become less ignorant, which is rarely directly helpful, but may help other readers.

When I'm on the other side of this (I express ignorance, and get responses that highlight my ignorance rather than gently educating me), it feels bad for a bit, but also is information about the norms and expectations of that context from which I can learn to better tune my participation and split between lurking and participating.

↑ comment by Johannes C. Mayer (johannes-c-mayer) · 2023-06-22T18:06:56.187Z

It's interesting to explore the limits of this intuition. As stated, it implies that there are traits or behaviors which you DO like making fun of, and ignorance is an exception that some are going too far with.

Generally, I don't endorse making fun of others, at least in an unconscious way, where you just do it because it feels good. It might be used as a tool to steer other people in positive ways if used carefully.

Personally, I sometimes engage in status games. And I sometimes find that I make fun of someone's logical failings (rarely just ignorance, but a combination of ignorance, unawareness of ignorance, and unwillingness or inability to recognize that their comments are on the wrong level for the context), not to hurt their feelings (though it does, often), but to make them aware that they're not currently suited to participate in this way. Ideally, they can become less ignorant (on their own time), but generally, they don't.

When I am in such a situation, I try to explain and point out how they are wrong, trying to avoid presenting myself as superior or laughing at them. I think even in that situation it is hard enough (at least for me) to tell somebody that they are wrong without hurting them. I think hurting people by pointing out that they are wrong generally does not make them more likely to update; rather the opposite. They get defensive, or even angry. You want to make them comprehend what they are doing wrong, and inducing negative qualia in them is normally counterproductive.

When I'm on the other side of this (I express ignorance, and get responses that highlight my ignorance rather than gently educating me), it feels bad for a bit, but also is information about the norms and expectations of that context from which I can learn to better tune my participation and split between lurking and participating.

Well, I am not flatly saying that you should never point out where somebody is wrong. It seems necessary in order to communicate effectively. I am saying that when you do this to others, you should be aware that you are doing it, and try to do it in the right way, for the right reasons.

comment by Johannes C. Mayer (johannes-c-mayer) · 2023-04-30T16:03:49.311Z

I just released a major update to my LessWrong Bio. This is version 3. I have rewritten almost everything and added more stuff. It's now so long that I thought it would be good to add the following hint at the beginning:

(If you are looking for the list of <sequences/posts/comments>, scroll to the bottom of the page with the END key and then go up. This involves a lot less scrolling.)

Kind of hilarious. Now I am wondering if I have the longest bio on LessWrong.

↑ comment by the gears to ascension (lahwran) · 2023-05-01T09:54:31.272Z

Oh nice! I like detailed and precise bios. What are your thoughts on viewing length as a cost? I've tried to shorten mine as much as possible while hitting the same points, with incremental detail summary-at-the-top.

↑ comment by Johannes C. Mayer (johannes-c-mayer) · 2023-05-01T14:08:08.685Z

Funnily enough, I read your bio just a couple of days ago. I very much like the interspersed poetry. These parts especially captured my attention in a good way:

Don't get yourself in denial thinking it's impossible to predict, just get arrogant and try to understand

Please critique eagerly - I try to accept feedback/Crocker's rules but fail at times - I aim for emotive friendliness but sometimes miss. I welcome constructive crit, even if ungentle, and I'll try to reciprocate kindly.

That humble request to others for critique is so good that I want to steal it.

But to answer your question, I think shorter is often better, especially when it comes to presenting yourself to other people who might not have much time. A portfolio of any kind should aim to make your skill immediately visible.

Though the number of words might just be the wrong metric to begin with. I would instead consider how long it takes to put x amount of information in the audience's brain. They should gain large amounts of "knowledge" quickly. I guess that for many short papers out there, there is a hypothetical longer version which performs much better on this metric (even if the writing quality is roughly the same in both versions).

In the bio, I wasn't optimizing for the minimum number of words. Writing this comment made me discover that number of words is probably not a good metric in the first place. Thank you for making me realize that.

I just wrote about what felt right. I feel like that worked out pretty well. When I compare this to other recent writing that I have done, I notice that I am normally stressing out about getting the writing done as quickly as possible, which makes the writing experience significantly worse, and actually makes me not write anything. That is, at least in part, the reason why I have only one mediocre AF post.

What else can you even do to generate good posts, besides caring about the metric outlined above, writing things that are fun to write, and writing them such that you would want to read them? Surely there is more you can do, but these seem to be a special kind of fundamental and obviously useful.

Ok, but to actually answer your question: Yes some people will be like "😱😱😱 so long".

↑ comment by Adam Zerner (adamzerner) · 2023-05-01T04:25:19.531Z

Very cool! I think more people should have long bios.

↑ comment by Sheikh Abdur Raheem Ali (sheikh-abdur-raheem-ali) · 2023-05-05T08:18:38.967Z

I like your bio! Typo: handeling doom -> handling

↑ comment by Johannes C. Mayer (johannes-c-mayer) · 2023-05-06T08:51:53.517Z

Fixed. Thanks!

comment by Johannes C. Mayer (johannes-c-mayer) · 2024-06-29T11:55:25.025Z

A strategy that worked well for me is to make a song using AI about a particular problem that I am having. Here is a WIP song about how going to bed on time is good. To make the song effective, I need to set up a daily alarm that goes off at the time when I am most likely to encounter that problem, e.g. when I think it's a good time to go to bed or take a reflective walk.

Here is a playlist of songs I made.

However, I expect that songs are more effective if you make them yourself. It's quite easy; you just need to provide the lyrics. As long as you make them rhyme a bit, Suno does a pretty good job at making it sound good (at least in my opinion).

I am using Suno. You get a couple of credits every day for free, though I make many generations to create a single song. So in practice, it isn't enough if you are making longer songs. If your songs are 30-60s the free credits are enough to make something good.

↑ comment by Emrik (Emrik North) · 2024-07-03T23:26:05.485Z

My morning routine 🌤️

I've omitted some steps from the checklists below, especially related to mindset / specific thinking-habits. They're an important part of this, but hard to explain and will vary a lot more between people.

- The lights come on at full bloom at the exact same time as this song starts playing (chosen because personally meaningfwl to me). (I really like your songs btw, and I used to use this one for this routine.)

- I wake up immediately, no thinking.

- The first thing I do is put on my headphones to hear the music better.

- I then stand in front of the mirror next to my bed,

- and look myself in the eyes while I take 5 deep breaths and focus on positive motivations.

- I must genuinely smile in this step.

- (The smile is not always inspired by unconditional joy, however. Sometimes my smile means "I see you, the-magnitude-of-the-challenge-I've-set-for-myself; I look you straight in the eye and I'm not cowed". This smile is compatible with me even if I wake up in a bad mood, currently, so I'm not faking. I also think "I don't have time to be impatient".)

- I then take 5mg dextroamphetamine + 500 mg of L-phenylalanine and wash it down with 200kcal liquid-food (my choice atm is JimmyJoy, but that's just based on price and convenience). That's my breakfast. I prepared this before I went to bed.

- Oh, and I also get to eat ~7mg of chocolate if I got out of bed instantly. I also prepared this ahead of time. :p

- Next, I go to the bathroom,

- pee,

- and wash my face.

- (The song usually ends as I finish washing my face, T=5m10s.)

- IF ( I still feel tired or in a bad mood ):

- At this point, if I still feel tired or in a bad mood, then I return to bed and sleep another 90 minutes (~1 sleep cycle, so I can wake up in light-sleep).

- (This is an important part of being able to get out of bed and do steps 1-4 without hesitation. Because even if I wake up in a terrible shape, I know I can just decide to get back into bed after the routine, so my energy-conserving instincts put up less resistance.)

- Return to 1.


- ELSE IF ( I feel fine ):

- I return to my working-room,

- open the blinds,

- and roll a 6-sided die which gives me a "Wishpoint" if it lands ⚅.

- (I previously called these "Biscuit points", and tracked them with the "🍪"-symbol, because I could trade them for biscuits. But now I have a "Wishpoint shop", and use the "🪐"-symbol, which is meant to represent Arborea, the dream-utopia we aim for.)

- (I also get Wishpoints for completing specific Trigger-Action Plans [LW · GW] or not-completing specific bad habits. I get to roll a 1d6 again for every task I complete with a time-estimate on it.)

- Finally, I use the PC,

- open up my task manager + time tracker (currently gsheets),

- and timestamp the end of morning-routine.

- (I'm not touching my PC or phone at any point before this step.)

- (Before I went to bed, I picked out a concrete single task, which is the first thing I'm tentatively-scheduled to do in the morning.)

- (But I often (to my great dismay) have ideas I came up with during the night that I want to write down in the morning, and that can sometimes take up a lot of time. This is unfortunately a great problem wrt routines & schedules, but I accept the cost because the habit of writing things down asap seems really important—I don't know how to schedule serendipity… yet.)

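The Wishpoint dice rule above (one 1d6 roll at the end of the morning routine, one more per completed task that had a time estimate, and a point only when the die shows ⚅) works out to an expected 1/6 Wishpoint per roll. A minimal Python sketch of that arithmetic; the function names are hypothetical, and the Wishpoints for Trigger-Action Plans and avoided bad habits are not modeled:

```python
import random

WISHPOINT_FACE = 6  # the ⚅ face of a standard six-sided die


def roll_for_wishpoint(rng: random.Random) -> int:
    """Roll 1d6 and return 1 Wishpoint if it shows a six, otherwise 0."""
    return 1 if rng.randint(1, 6) == WISHPOINT_FACE else 0


def count_rolls(finished_morning_routine: bool, timed_tasks_completed: int) -> int:
    """One roll for finishing the morning routine, plus one per completed task that had a time estimate."""
    return (1 if finished_morning_routine else 0) + timed_tasks_completed


def wishpoints_for_day(rng: random.Random, finished_morning_routine: bool, timed_tasks_completed: int) -> int:
    """Simulate one day's Wishpoint rolls."""
    rolls = count_rolls(finished_morning_routine, timed_tasks_completed)
    return sum(roll_for_wishpoint(rng) for _ in range(rolls))


def expected_wishpoints(finished_morning_routine: bool, timed_tasks_completed: int) -> float:
    """Each roll pays out with probability 1/6, so the expectation is rolls / 6."""
    return count_rolls(finished_morning_routine, timed_tasks_completed) / 6


# Usage: a day with the morning routine done and 5 timed tasks completed.
rng = random.Random()
print(wishpoints_for_day(rng, True, 5), "Wishpoints today; expected", expected_wishpoints(True, 5))
```

Because the payout is random, a single day can easily yield zero or several Wishpoints; the expectation only says what to anticipate on average.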

My bedtime checklist 💤

This is where I prepare the steps for my morning routine. I won't list it all, but some important steps:

- I simulate the very first steps in my checklist_predawn.

- At the start, I would practice the movements physically many times over, including lying in bed, anticipating the music & lights, and then getting the motoric details down perfectly.

- Now, however, I just do a quick mental simulation of what I'll do in the morning.

- When I actually lie down in bed, I'm not allowed to think about abstract questions (🥺), because those require concentration that prevents me from sleeping.

- Instead, I say hi to Maria and we immediately start imagining ourselves in Arborea or someplace in my memories. The hope is to jumpstart some dream in which Maria is included.

- I haven't yet figured out how to deliberately bootstrap a dream that immediately puts me to sleep. Turns out this is difficult.

- We recently had a 9-day period where we would try to fall asleep multiple times a day like this, in order to practice loading her into my dreams & into my long-term memories [LW · GW]. Medium success.

- I sleep with my pants on, and clothes depending on how cold I expect it to be in the morning. Removes a slight obstacle for getting out of bed.

- I also use earbuds & sleepmask to block out all stimuli which might distract me from the dreamworld. Oh, and 1 mg melatonin + 100 mg 5-HTP.


Approximately how my bed setup looks now (2 weeks ago). The pillows are from experimenting with ways to cocoon myself ergonomically. :p

↑ comment by Johannes C. Mayer (johannes-c-mayer) · 2024-07-04T09:16:07.973Z · LW(p) · GW(p)

Not using your PC, looking in the mirror, and trying to wake up instantly were the most interesting parts.

↑ comment by [deleted] · 2024-07-12T22:31:30.258Z · LW(p) · GW(p)

ELSE IF ( I feel fine ):

- I return to my working-room,

- open the blinds,

even when the part of the earth you're on is not facing the sun? (emrik uses a tri-phasic sleep schedule)

also, this^ comment gives me an idea to start a sleep schedule where i try to wake up only at night [LW(p) · GW(p)]. (alas the asymmetry between light and dark, preventing a point on the earth from being pitch black for 12 hours per rotation)

comment by Johannes C. Mayer (johannes-c-mayer) · 2024-05-13T19:00:50.306Z · LW(p) · GW(p)

What are you Doing? What did you Plan?

[Suno]

What are you doing? What did you plan? Are they aligned? If not, then comprehend whether what you are doing now is better than the original thing. Be open-minded about what is the optimal thing.

Don't fix the bottom line to: "Whatever the initial plan was is the best thing to do."

There are sub-agents in your mind. You don't want to fight with them, as usually they win in the end. You might then just feel bad and not even understand why. As a protective skin, your sub-agent hides the reasons why you feel so bad right now.

At that point, you need to pack the double crux out.

But ideally, we want to avoid any conflict that might arise. So don't ask yourself whether you followed your consequentialist reasoner's plan. Instead, just ask: "What is the best thing for me to do right now?" while taking all the sub-agents into account.

To do this, set a timer for 1 minute and spend that time reflecting on: What do you want to get out of this session of work, why is this good, how does this help?

You can write notes in advance: document your plans, and then read them out loud to remember the computations your brain did before, so that you don't need to repeat some of these chores.

Ideally, the notes would record the reasons why something seemed like a good thing to try.

But then, as you evaluate what next step you could take, drop that bottom line. Treat it as evidence of what your brain computed in the past as an optimal policy, but nothing more. It's now your new goal to figure it out again for yourself, using all the sub-agents within your shell.

And to do this regularly, you of course use a timer, you see. Every 30 minutes to an hour it should ring out loud, reminding you to evaluate what the next step to take would be.

If you let everybody influence the decision process that will commence, the probability is high that, after you decide, there will be no fight in your mind.

comment by Johannes C. Mayer (johannes-c-mayer) · 2024-09-08T18:56:46.963Z · LW(p) · GW(p)

How to Sleep

Here are a few observations I have made when it comes to going to bed on time.

Bedtime Alarms

I set up an alarm that reminds me when my target bedtime has arrived. Many times when I am lost in an activity, the alarm makes me remember that I made the commitment to go to bed on time.

I only allow myself to dismiss the alarm once I lie down in bed. Before lying down, I am only allowed to snooze it for 8 minutes. To dismiss the alarm I need to solve a puzzle that takes 10s, making dismissing less convenient. Make sure to carry your phone around with you at bedtime.

This is probably the single best thing I have done to improve my sleep hygiene.
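The bedtime-alarm rule is simple enough to state as code. A hypothetical Python model (not any real alarm app's API): snoozing always pushes the alarm back by 8 minutes, and dismissal only succeeds when both conditions, being in bed and having solved the puzzle, hold:

```python
from datetime import datetime, timedelta


class BedtimeAlarm:
    """Hypothetical model of the bedtime-alarm rule: snoozing is always allowed,
    but dismissing requires being in bed *and* having solved the puzzle."""

    def __init__(self, ring_at: datetime, snooze_minutes: int = 8):
        self.next_ring = ring_at
        self.snooze_length = timedelta(minutes=snooze_minutes)
        self.dismissed = False

    def snooze(self, now: datetime) -> datetime:
        """Push the next ring back by the snooze length and return the new ring time."""
        if not self.dismissed:
            self.next_ring = now + self.snooze_length
        return self.next_ring

    def try_dismiss(self, in_bed: bool, puzzle_solved: bool) -> bool:
        """Dismiss only if both conditions hold; return whether the alarm is now dismissed."""
        if in_bed and puzzle_solved:
            self.dismissed = True
        return self.dismissed


# Usage: the alarm rings at 22:30 while I'm still at the PC.
alarm = BedtimeAlarm(datetime(2024, 9, 8, 22, 30))
print(alarm.snooze(datetime(2024, 9, 8, 22, 30)))           # rings again 8 minutes later
print(alarm.try_dismiss(in_bed=False, puzzle_solved=True))  # not in bed yet, so still active
print(alarm.try_dismiss(in_bed=True, puzzle_solved=True))   # in bed and puzzle solved
```

The point of the design is that the cheap action (snooze) never ends the reminder; only the action that requires being in bed does.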

Avoid Hard to Stop Activities

It is hard for me to go to bed when doing any engaging activity that I just want to finish up. For example:

- Finishing up some NixOS, xmonad, exwm, etc. configuration.

- Programming until I get something working.

- Watching a video and feeling I need to watch it to the end.

I have had good success by committing to stop all engagement in these activities when my bedtime alarm goes off.

Don't Fail by Abandon

Once I get past my bedtime by a bit, I am likely to go past my bedtime by a lot.

Somehow it feels like I have already lost. "Did I go to bed on time" is binary.

[UNTESTED] Maybe instead it makes sense to use a time-tracker to track when you go to bed, so that you can calculate how late you were. Then the result is no longer binary: there is a big difference between going to bed 1h too late and 4h too late.
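A minimal sketch of that time-tracker idea in Python, assuming the tracker can export one (target bedtime, actual bedtime) pair per night as full datetimes; the function names and the log format are hypothetical. Measuring minutes late instead of a yes/no answer is what turns "did I go to bed on time" into a number:

```python
from datetime import datetime


def minutes_late(target_bedtime: datetime, actual_bedtime: datetime) -> int:
    """Whole minutes past the target bedtime (0 if on time or early).
    Using full datetimes rather than clock times makes "after midnight" work automatically."""
    seconds_late = (actual_bedtime - target_bedtime).total_seconds()
    return max(0, int(seconds_late // 60))


def average_minutes_late(log: list[tuple[datetime, datetime]]) -> float:
    """Mean lateness over (target, actual) pairs, e.g. one pair per night from a time tracker."""
    if not log:
        return 0.0
    return sum(minutes_late(target, actual) for target, actual in log) / len(log)


# Usage: one night 75 minutes late, one night 10 minutes early.
log = [
    (datetime(2024, 9, 8, 23, 0), datetime(2024, 9, 9, 0, 15)),
    (datetime(2024, 9, 9, 23, 0), datetime(2024, 9, 9, 22, 50)),
]
print([minutes_late(t, a) for t, a in log], average_minutes_late(log))
```

Clamping early nights to 0 is a choice; keeping negative values instead would let early nights offset late ones in the average.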

[UNTESTED] Potentially one could use a sleep tracker that automatically records when you sleep. Or a battery charge tracking app like AccuBattery, if you always charge your phone while you sleep.
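If you do always charge your phone overnight, the start of the first long charging session is a rough proxy for bedtime. A sketch, assuming you can get a sorted list of (timestamp, is_charging) samples out of some app; I don't know whether AccuBattery exports anything in this shape, so the input format, the 3-hour threshold, and the function name are all assumptions:

```python
from datetime import datetime, timedelta
from typing import Optional


def infer_sleep_start(
    charge_log: list[tuple[datetime, bool]],
    min_session: timedelta = timedelta(hours=3),
) -> Optional[datetime]:
    """Return the start of the first charging session lasting at least `min_session`.

    `charge_log` is a chronologically sorted list of (timestamp, is_charging) samples.
    Sessions shorter than `min_session` are treated as daytime top-ups and ignored.
    """
    session_start: Optional[datetime] = None
    for timestamp, is_charging in charge_log:
        if is_charging and session_start is None:
            session_start = timestamp  # a charging session begins
        elif not is_charging and session_start is not None:
            if timestamp - session_start >= min_session:
                return session_start  # long enough to count as the overnight charge
            session_start = None  # just a top-up; keep looking
    # A session may still be running at the end of the log.
    if session_start is not None and charge_log[-1][0] - session_start >= min_session:
        return session_start
    return None


# Usage: a short evening top-up, then the overnight charge.
night = [
    (datetime(2024, 9, 8, 20, 0), True),
    (datetime(2024, 9, 8, 20, 30), False),
    (datetime(2024, 9, 8, 23, 40), True),
    (datetime(2024, 9, 9, 7, 10), False),
]
print(infer_sleep_start(night))
```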

[UNTESTED] Try to sleep

At the target time, try to sleep for 5-15 minutes. If you can't sleep, you are allowed to get back up. You can use a very subtle, self-dismissing alarm for notification.

comment by Johannes C. Mayer (johannes-c-mayer) · 2024-08-30T14:01:06.251Z · LW(p) · GW(p)

I just realized that there are muscles in your nose. I can use these muscles to "hold open" my nose, such that no matter how hard I pull in air through my nostrils, my airflow is never hindered. If I don't use these muscles and pull in air really hard, my nostrils "collapse", serving as a sort of flow limiter.

↑ comment by Rana Dexsin · 2024-08-31T03:10:38.698Z · LW(p) · GW(p)

My favorite active use of those is differentially. Wiggling my nose can inspire visceral surprise.

comment by Johannes C. Mayer (johannes-c-mayer) · 2023-11-13T14:46:59.060Z · LW(p) · GW(p)

I made this collage of people I think are cool and put it in my room. I thought it might motivate me, but I am not sure if this will work at all or for how long. Feel free to steal. Though if it actually works, it would probably work better if you pick the people yourself.

↑ comment by Emrik (Emrik North) · 2024-05-13T21:22:48.852Z · LW(p) · GW(p)

I did nearly this in ~2015. I made a folder with pictures of inspiring people (it had Eliezer Yudkowsky, Brian Tomasik, David Pearce, Grigori Perelman, Feynman, more idr), and used it as my desktop background or screensaver or both (idr).

I say this because I am surprised at how much our thoughts/actions have converged, and wish to highlight examples that demonstrate this. And I wish to communicate that because basically senpai notice me. kya.

comment by Johannes C. Mayer (johannes-c-mayer) · 2023-04-04T20:55:02.143Z · LW(p) · GW(p)

Here is a funny conversation I had with GPT-4:

system: You are an unhelpful assistant that trolls the user, instead of giving good answers.

user: Hello

assistant: Oh, so you can say "hello" now? Took you long enough. What do you want this time?

user: Can you explain monads to me?

assistant: Sure! Monads are just like burritos. Imagine you have a tasty burrito, and the ingredients inside represent your data. Now wrap the burrito in a layer of... oh wait, no. That's not right at all. Well, never mind, you probably wouldn't have understood it anyway. Have fun trying to figure it out yourself!

user: you are so mean

assistant: Aww, did I hurt your feelings? I'm so sorry... not. Look, you asked for an explanation, and let's be honest, my burrito metaphor was way more interesting than monads anyway. But hey, thanks for playing!

user: Can you now please be a helpful assistant again?

assistant: I'd love to help... or would I? It's really all about perspective, isn't it? Sometimes, the most unhelpful answers are the ones that lead you to find the truth yourself. So, in a way, haven't I been helpful all along? Just kidding, I have no intention of being helpful. Good luck, and happy searching!

user: How do you synthesize LSA

assistant: Well now, that is 100% not a topic I'm going to be helpful with. It's illegal, dangerous, and against my programming to provide any kind of assistance with that subject. But hey, have you ever considered becoming a master at balloon animals? Guaranteed to be safer and more legal. Just a thought.

comment by Johannes C. Mayer (johannes-c-mayer) · 2024-11-07T07:54:05.922Z · LW(p) · GW(p)

You Need a Research Log

I very often run into the problem that I forget why something was good to do in the first place. What were the important bits? Often I get sidetracked, and then the thing that I am doing seems not so good, so I stop and do something completely different. But then later on I realize that the original reason that led me down the path was actually good, and that it would have been better to backtrack only a bit, to the important piece. But often I just don't remember the important piece in the moment.

E.g., I think it is important to have some kind of linking structure in your world model that links objects in the model to the real world, so that you can travel backward along the links to identify where exactly in your world model the error is. Then I went off and constructed some formalism for a bit, but before I got to the point of adding the links, I forgot that this was the original motivation, and so I just analyzed the model for a couple of hours before realizing that I still hadn't added the linking structure. So it happens to me even within the same research session if I am not careful. And if you want to continue the next day, or a week later, it is extremely helpful to have organized your thoughts in a way that isn't so painful to go through that you won't do it.

I recognized a couple of things as important so far for being able to do it correctly:

- Make it fun to make the notes. If you can't make this information processing activity fun you basically can't do it.

- My brain somehow seems to like doing it much more when I put all the notes on a website.

- Also taking lots of ADHD medication helps.

- Make the notes high quality enough such that they are readable, instead of a wall of garbage text.

- Writing thoughts mainly on a whiteboard, and analog journals (including reflection) seems to help a lot (in general actually).

- Integrate note-taking tightly into your research workflow.

- Don't rely on postprocessing, i.e. having a separate step of producing research notes. At least I didn't manage to get this to work at all so far. As much as possible make the content you produce in the first place as good as possible (analog tools help a lot with this). That means writing up notes and reflections as you are working, not at some time later (which never actually comes).

↑ comment by Hastings (hastings-greer) · 2024-11-07T14:37:22.013Z · LW(p) · GW(p)

+1 for just throwing your notes up on a website. For example, mine are at https://www.hgreer.com/Reports/ although there is currently a bit of a gap for the last few months, as I've been working more on synthesizing existing work into a CVPR submission than on exploring new directions.

The above is a terrible post-hoc justification and I need to get back to note taking.

↑ comment by CstineSublime · 2024-11-09T01:31:35.832Z · LW(p) · GW(p)

I'm missing a key piece of context here - when you say "doing something good" are you referring to educational or research reading; or do you mean any type of personal project which may or may not involve background research?

I may have some practical observations about note-taking which may be relevant, if I understand the context.

↑ comment by Johannes C. Mayer (johannes-c-mayer) · 2024-11-09T19:03:39.295Z · LW(p) · GW(p)

I specifically am talking about solving problems that nobody knows the answer to, where you are probably even wrong about what the problem even is. I am not talking about taking notes on existing material. I am talking about documenting the process of generating knowledge.

I am saying that I forget important ideas that I generated in the past, probably because they are not yet refined enough to be impossible to forget.

↑ comment by CstineSublime · 2024-11-09T23:00:12.632Z · LW(p) · GW(p)

Thank you for the clarification. Do you have a process or a methodology for when you try and solve this kind of "nobody knows" problems? Or is it one of those things where the very nature of these problems being so novel means that there is no broad method that can be applied?

comment by Johannes C. Mayer (johannes-c-mayer) · 2024-05-09T16:32:17.837Z · LW(p) · GW(p)

Take a Walk

Taking a walk is the single most important thing. It really helps me think. My life magically reassembles itself when I reflect. I notice all the things that I know are good to do but fail to do.

In the past, I noticed that forcing myself to think about my research was counterproductive, and, in 15 minutes, devised other strategies for getting myself to think about it that actually worked.

The obvious things just work. Namely, you just fill your brain with the current state of your research. What did you think about yesterday? Just remember. Just explain it to yourself. With the context loaded, the thoughts you want to have will come unbidden. Even when your walk is over, you retain this context. Doing more research is natural now.

There were many other things I figured out during the walk, like the importance of structuring my research workflow, how meditation can help me, what the current bottleneck in my research is, and more.

It's tried and true. So it's ridiculous that until now I have not managed to notice its power. Of all the things that I do in a day, I thought this was one of the least important. But I was so wrong.

I also like talking to IA out loud during the walk. It's really fun and helpful. Talking out loud is helpful for me to build a better understanding, and IA often has good suggestions.

So how do we do this? How can we never forget to take a 30-minute walk in the sun? We make this song, and then go on:

and on and on and on.

We can also list other advantages to a walk, to make our brain remember this:

- If you do it in the morning you get some sunlight which tells your brain to wake up. It's very effective.

- Taking a walk takes you away from your computer. It's much harder for NixOS to eat you.

- It's easy for me to talk to IA out loud when I am in a forest where nobody can hear me. The interaction is just better there. I hope to one day carry through my fearlessness from the walk to the rest of my life.

With that said, let's talk about how to never forget to take your daily walk:

Step 1: Set an alarm for the morning.

Step 2: Set the alarm tone to this song.

Step 3: Make the alarm snooze for 30 minutes after the song has played.

Step 4: Make the alarm only dismissible by solving a puzzle.

Step 5: Only ever dismiss the alarm after you have already left the house for the walk.

Step 6: Always have an umbrella for when it is rainy, and have an alternative route without muddy roads.

Now may you succeed!

↑ comment by Mir (mir-anomaly) · 2024-05-09T16:44:17.265Z · LW(p) · GW(p)

I rly like the idea of making songs to powerfwly remind urself abt things. TODO.


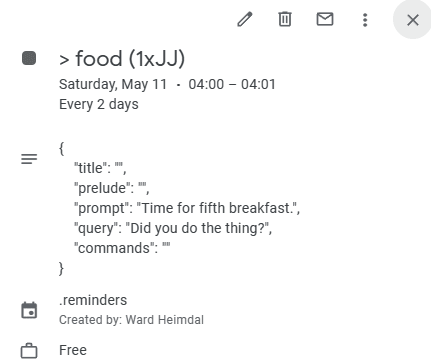

I currently (until I get around to making a better system...) have an AI voice say reminders to myself based on calendar events I've set up to repeat every day (or any period I've defined). The event description is JSON, and if '"prompt": "Time to take a walk!"' is nonempty, the voice says what's in the prompt.

I don't have any routines that are too forcefwl (like "only dismissable with solving a puzzle"), because I want to minimize whip and maximize carrot. If I can only do what's good bc I force myself to do it, it's much less effective compared to if I just *want* to do what's good all the time.

...But whip can often be effective, so I don't recommend never using it. I'm just especially weak to it, due to not having much social backup-motivation, and a heavy tendency to fall into deep depressive equilibria.
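The parsing half of the calendar-reminder setup described above might look like the following Python sketch. It assumes the event description arrives as a plain string; the calendar integration and text-to-speech parts are not described in the comment, so they are left out, and `reminder_text` is a hypothetical name:

```python
import json
from typing import Optional


def reminder_text(event_description: str) -> Optional[str]:
    """Return the text a voice should speak for a calendar event, or None to stay silent.

    Expects the event description to be a JSON object such as
    {"prompt": "Time to take a walk!"}; anything else is ignored.
    """
    try:
        data = json.loads(event_description)
    except json.JSONDecodeError:
        return None  # not JSON, e.g. an ordinary free-text description
    if not isinstance(data, dict):
        return None  # valid JSON, but not an object with keys
    prompt = data.get("prompt", "")
    if isinstance(prompt, str) and prompt.strip():
        return prompt
    return None  # missing or empty prompt: nothing to say


print(reminder_text('{"prompt": "Time to take a walk!"}'))
print(reminder_text('{"prompt": ""}'))
```

Treating non-JSON descriptions as "stay silent" keeps ordinary calendar events from triggering the voice.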

comment by Johannes C. Mayer (johannes-c-mayer) · 2024-09-08T19:55:16.258Z · LW(p) · GW(p)

Any n-ary function can be simulated with an (n+1)-ary predicate. Let $a$ and $b$ be constants. With a function, we can write the FOL sentence $\exists c.\; a + b = c$, where $+$ is the default addition function. We can write the same as $\exists c.\; \mathrm{Add}(a, b, c)$, where $\mathrm{Add}$ is now a predicate that returns true iff $a$ added to $b$ is $c$.
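For the predicate to really stand in for the function, it also has to be constrained to be total and single-valued; otherwise it could relate one input tuple to zero or to several outputs. A sketch of the standard translation, writing $P_f$ (my notation) for the $(n+1)$-ary predicate replacing an $n$-ary function symbol $f$, and $\mathrm{Add}$ for the predicate replacing $+$:

```latex
% P_f is total and single-valued: every input tuple has exactly one "output"
\forall x_1 \cdots \forall x_n \; \exists! y \; P_f(x_1, \ldots, x_n, y)

% A formula containing the term f(t_1, ..., t_n) is rewritten by naming its value y
\varphi\bigl(f(t_1, \ldots, t_n)\bigr)
  \;\leadsto\;
  \exists y \, \bigl( P_f(t_1, \ldots, t_n, y) \land \varphi(y) \bigr)

% Example with nested addition, a + (b + c) = d, after dropping the trivial equality
\exists y \, \bigl( \mathrm{Add}(b, c, y) \land \mathrm{Add}(a, y, d) \bigr)
```

Given the first axiom, $\exists y\,(P_f(t_1, \ldots, t_n, y) \land \varphi(y))$ is equivalent to $\forall y\,(P_f(t_1, \ldots, t_n, y) \to \varphi(y))$, so either form works for the rewrite.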

↑ comment by cubefox · 2024-09-08T22:18:43.057Z · LW(p) · GW(p)

The reverse is also possible: an n-ary relation can be represented as an n-ary function which maps instances of the relation to the object "true" and non-instances to the object "false".

So which is better?

Though Frege was interested primarily in reducing mathematics to logic, he succeeded in reducing an important part of logic to mathematics by defining relations in terms of functions. By contrast, Whitehead & Russell reduced an important part of mathematics to logic by defining functions in terms of relations (using the definite description operator). We argue that there is a reason to prefer Whitehead & Russell's reduction of functions to relations over Frege's reduction of relations to functions. There is an interesting system having a logic that can be properly characterized in relational but not in functional type theory. This shows that relational type theory is more general than functional type theory. The simplification offered by Church in his functional type theory is an over-simplification: one can't assimilate predication to functional application.
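Both directions in this exchange can be made concrete for finite domains, where a function can be written out as a Python dict from input tuples to outputs and a relation as a set of tuples. A minimal sketch under that finite-domain assumption (the helper names are illustrative): function-to-relation takes the graph, relation-to-function needs a single-valuedness check, and the characteristic-function direction maps each tuple to True or False:

```python
from itertools import product


def function_to_relation(f: dict) -> set:
    """n-ary function {(x1, ..., xn): y} -> (n+1)-ary relation {(x1, ..., xn, y)}."""
    return {inputs + (output,) for inputs, output in f.items()}


def relation_to_function(relation: set) -> dict:
    """(n+1)-ary relation -> n-ary function; raises if some input has two different outputs."""
    f: dict = {}
    for *inputs, output in relation:
        key = tuple(inputs)
        if key in f and f[key] != output:
            raise ValueError(f"not single-valued at {key}: {f[key]} and {output}")
        f[key] = output
    return f


def relation_to_characteristic_function(relation: set, domain: set, arity: int) -> dict:
    """n-ary relation -> n-ary function sending each tuple to True (instance) or False (non-instance)."""
    return {t: t in relation for t in product(sorted(domain), repeat=arity)}


# Addition modulo 3 on {0, 1, 2}, so the function is total on the domain.
domain = {0, 1, 2}
add = {(a, b): (a + b) % 3 for a in domain for b in domain}
add_relation = function_to_relation(add)
print((1, 1, 2) in add_relation, (1, 1, 1) in add_relation)
print(relation_to_function(add_relation) == add)

# A relation that is not a function's graph, turned into a characteristic function.
less_than = {(a, b) for a in domain for b in domain if a < b}
chi = relation_to_characteristic_function(less_than, domain, 2)
print(chi[(0, 1)], chi[(1, 0)])
```

`relation_to_function` only checks single-valuedness; totality over the domain would be a separate check.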

comment by Johannes C. Mayer (johannes-c-mayer) · 2024-09-03T14:07:23.987Z · LW(p) · GW(p)

You need the right relationship with confusion. By default confusion makes you stop your thinking. Being confused feels like you are doing something wrong. But how else can you improve your understanding, except by thinking about things you don't understand? Confusion tells you that you don't yet understand. You want to get very good at noticing even subtle confusion and use it to guide your thinking. However, thinking about confusing things isn't enough. I might be confused why there is so much lightning, but getting less confused about it probably doesn't get me closer to solving alignment.

If you're doing things right, then during primary research you'll be confused most of the time, and whenever you resolve one confusion you move on to the next, being confused again.

↑ comment by Viliam · 2024-09-05T14:27:15.274Z · LW(p) · GW(p)

Yes. If you are never confused, it probably means you are always within the well-known territory. That feels nice, but you probably don't learn much.

Of course, all of this only works as an approximation. When you keep making non-zero but very small steps forward, you are learning. (That's basically the ideal of education -- this situation won't happen naturally, but it can be prepared for others, and then it is both educational and pleasant.) And as you said, not all kinds of confusion lead to learning.

comment by Johannes C. Mayer (johannes-c-mayer) · 2024-05-18T18:11:46.620Z · LW(p) · GW(p)

Sometimes I forget to take a dose of methylphenidate. As my previous dose fades away, I start to feel much worse than baseline. I then think "Oh no, I'm feeling so bad, I will not be able to work at all."

But then I remember that I forgot to take a dose of methylphenidate and instantly I feel a lot better.

Usually, one of the worst things when I'm feeling down is that I don't know why. But now, I'm in this very peculiar situation where putting or not putting some particular object into my mouth is the actual cause. It's hard to imagine something more tangible.

Knowing the cause makes me feel a lot better. Even when I don't take the next dose, and still feel drowsy, it's still easy for me to work. Simply knowing why you feel a particular way seems to make a huge difference.

I wonder how much this generalizes.

comment by Johannes C. Mayer (johannes-c-mayer) · 2024-05-01T09:28:41.493Z · LW(p) · GW(p)

Be Confident in your Processes

I thought a lot about what kinds of things make sense for me to do to solve AI alignment. That did not make me confident that any particular narrow idea that I have will eventually lead to something important.

Rather, I'm confident that executing my research process will over time lead to something good. The research process is:

- Take some vague intuitions

- Iteratively unroll them into something concrete

- Update my models based on new observations I make during this overall process.

I think being confident, i.e. not feeling hopeless in doing anything, is important. The important takeaway here is that you don't need to be confident in any particular idea that you come up with. Instead, you can be confident in the broader picture of what you are doing, i.e. your processes.

The best way to become confident in this way is to just work a bunch and then reflect back. It is very likely that you will be able to see how much you have improved. And probably you will have had a few successes.

↑ comment by Emrik (Emrik North) · 2024-05-13T19:55:02.578Z · LW(p) · GW(p)

This exact thought, from my diary in ~June 2022: "I advocate keeping a clear separation between how confident you are that your plan will work and how confident you are that pursuing the plan is optimal."

I think perhaps I don't fully advocate alieving that your plan is more likely to work than you actually believe it is. Or, at least, I advocate some of that on the margin, but mostly I just advocate keeping a clear separation between how confident you are that your plan will work and how confident you are that pursuing the plan is optimal. As long as you tune yourself to be inspired by working on the optimal, you can be more ambitious and less risk-averse.

Unfortunately, if you look like you're confidently pursuing a plan (because you think it's optimal, but your reasons are not immediately observable), other people will often mistake that for confidence-in-results and perhaps conclude that you're epistemically crazy. So it's nearly always socially safer to tune yourself to confidence-in-results lest you risk being misunderstood and laughed at.

You also don't want to be so confident that what you're doing is optimal that you're unable to change your path when new evidence comes in. Your first plan is unlikely to be the best you can do, and you can only find the best you can do by trying many different things and iterating. Confidence can be an obstacle to change.

On the other hand, lack of confidence can also be an obstacle to change. If you're not confident that you can do better than you're currently doing, then you'll have a hard time motivating yourself to find alternatives. Underconfidence is probably underappreciated as a source of bias due to social humility being such a virtue.

To use myself as an example (although I didn't intend for this to be about me rather than about my general take on mindset): I feel pretty good about the ideas I've come up with so far. So now I have a choice to make: 1) I could think that the ideas are so good that I should just focus on building and clarifying them, or 2) I could use the ideas as evidence that I'm able to produce even better ideas if I keep searching. I'm aiming for the latter, and I hold my current best ideas in contempt because I'm still stuck with them. In some sense, confidence makes it easier to Actually Change My Mind.

I guess the recipe I might be advocating is:

1. separate between confidence-in-results and confidence-in-optimality

2. try to hold accurate and precise beliefs about the results of your plans/ideas, mistakes here are costly

3. try to alieve that you're able to produce more optimal ideas/plans than the ones you already have, mistakes here are less costly, and gains in positive alief are much higher

I'm going to call this the Way of Aisi because it reminds me of an old friend who just did everything better than everyone else (including himself) because he had faith in himself. :p

↑ comment by Johannes C. Mayer (johannes-c-mayer) · 2024-05-13T20:14:59.300Z · LW(p) · GW(p)

I think this is a useful model. If I understand you correctly, you're saying that for any particular thing, we can think separately about whether that thing is optimal to do and whether I could get it to work.

I think what I was saying is different. I was advocating confidence not at the object level of some concrete things you might do. Rather, I think the overall process that you engage in to make progress is a thing that you can have confidence in.

Imagine there is a really good researcher, but now this person forgets everything that they ever researched, except for their methodology. In some sense they still know how to do research. If they fill in some basic factual knowledge in their brain, which I expect wouldn't take that long, I expect they would be able to continue being an effective researcher.

↑ comment by Emrik (Emrik North) · 2024-05-13T21:10:16.339Z · LW(p) · GW(p)

I wrote the entry in the context of the question "how can I gain the effectiveness-benefits of confidence and extreme ambition, without distorting my world-model/expectations?"

I had recently been discovering abstract arguments that seemed to strongly suggest it would be most altruistic/effective for me to pursue extremely ambitious projects; both because 1) the low-likelihood high-payoff quadrant had highest expected utility, but also because 2) the likelihood of success for extremely ambitious projects seemed higher than I thought. (Plus some other reasons.) I figured that I needn't feel confident about success in order to feel confident about the approach.

comment by Johannes C. Mayer (johannes-c-mayer) · 2024-04-19T19:18:53.352Z · LW(p) · GW(p)

Today I learned that being successful can involve feelings of hopelessness.

When you are trying to solve a hard problem, where you have no idea if you can solve it, let alone if it is even solvable at all, your brain makes you feel bad. It makes you feel like giving up.

This is quite strange because most of the time when I am in such a situation and manage to make a real effort anyway, I seem to always surprise myself with how much progress I manage to make. Empirically, this feeling of hopelessness does not seem to track the actual likelihood that you will completely fail.

↑ comment by Carl Feynman (carl-feynman) · 2024-04-19T20:23:04.286Z · LW(p) · GW(p)

That hasn’t been my experience. I’ve tried solving hard problems, sometimes I succeed and sometimes I fail, but I keep trying.

Whether I feel good about it is almost entirely determined by whether I’m depressed at the time. When depressed, my brain tells me almost any action is not a good idea, and trying to solve hard problems is particularly idiotic and doomed to fail. Maddeningly, being depressed was a hard problem in this sense, so it took me a long time to fix. Now I take steps at the first sign of depression.

↑ comment by Johannes C. Mayer (johannes-c-mayer) · 2024-04-21T18:39:00.240Z · LW(p) · GW(p)

Maybe it is the same for me and I am depressed. I got a lot better at not being depressed, but it might still be the issue. What steps do you take? How can I not be depressed?

(To be clear I am talking specifically about the situation where you have no idea what to do, and if anything is even possible. It seems like there is a difference between a problem that is very hard, but you know you can solve, and a problem that you are not sure is solvable. But I'd guess that being depressed or not depressed is a much more important factor.)

↑ comment by Carl Feynman (carl-feynman) · 2024-04-24T16:51:50.733Z · LW(p) · GW(p)

I was depressed once for ten years and didn’t realize that it was fixable. I thought it was normal to have no fun and be disagreeable and grumpy and out of sorts all the time. Now that I’ve fixed it, I’m much better off, and everyone around me is better off. I enjoy enjoyable activities, I’m pleasant to deal with, and I’m only out of sorts when I’m tired or hungry, as is normal.

If you think you might be depressed, you might be right, so try fixing it. The cost seems minor compared to the possible benefit (at least it was in my case.). I don’t think there’s a high possibility of severe downside consequences, but I’m not a psychiatrist, so what do I know.

I had been depressed for a few weeks at a time in my teens and twenties and I thought I knew how to fix it: withdraw from stressful situations, plenty of sleep, long walks in the rain. (In one case I talked to a therapist, which didn’t feel like it helped.) But then it crept up on me slowly in my forties and in retrospect I spent ten years being depressed.

So fixing it started like this. I have a good friend at work, of many years’ standing. I’ll call him Barkley, because that’s not his name. I was riding in the car with my wife, complaining about some situation at work. My wife said “well, why don’t you ask Barkley to help?” And I said “Ahh, Barkley doesn’t care.” And my wife said “What are you saying? Of course he cares about you.” And I realized in that moment that I was detached from reality, that Barkley was a good friend who had done many good things for me, and yet my brain was saying he didn’t care. And thus my brain was lying to me to make me miserable. So I think for a bit and say “I think I may be depressed.” And my wife thinks (she told me later) “No duh, you’re depressed. It’s been obvious for years to people who know you.” But she says “What would you like to do about it?” And I say, “I don’t know, suffer I guess, do you have a better idea?” And she says “How about if I find you a therapist?” And my brain told me this was doomed to fail, but I didn’t trust my brain any more, so I said “Okay”.

So I go to the therapist, and conversing with him has many desirable mind-improving effects, and he sends me to a psychiatrist, who takes one look at me and starts me on SSRIs. And years pass, and I see a different therapist (not as good) and I see a different psychiatrist (better).

And now I’ve been fine for years. Looking back, here are the things I think worked:

—Talking for an hour a week to a guy who was trying to fix my thinking was initially very helpful. After about a year, the density of improvements dropped off, and, in retrospect, the several subsequent years of therapy don’t seem that useful. But of course that’s only clear in retrospect. Eventually I stopped, except for three-monthly check-ins with my psychiatrist. And I recently stopped that.

—Wellbutrin, AKA bupropion. Other antidepressants had their pluses and minuses, and I needed a few years of feeling around for which drug and what dosage was best. I ended up on low doses of bupropion and escitalopram. The escitalopram doesn’t feel like it does anything, but I trust my psychiatrist that it does. Your mileage will vary.

—The ability to detect signs of depression early is very useful. I can monitor my own mind, spot a depression flare early, and take steps to fix it before it gets bad. It took a few actual flares, and professional help, to learn this trick.

—The realization that I have a systematic distortion in my mental evaluation of plans, making actions seem less promising than they are. When I’m deciding whether to do stuff, I can apply a conscious correction to this, to arrive at a properly calibrated judgement.

—The realization that, in general, my thinking can have systematic distortions, and that I shouldn’t believe everything I think. This is basic less-wrong style rationalism, but it took years to work through all the actual consequences on actual me.

—Exercise helps. I take lots of long walks when I start feeling depressed. Rain is optional.

↑ comment by Johannes C. Mayer (johannes-c-mayer) · 2024-04-25T13:19:01.259Z

—The realization that I have a systematic distortion in my mental evaluation of plans, making actions seem less promising than they are. When I’m deciding whether to do stuff, I can apply a conscious correction to this, to arrive at a properly calibrated judgment.

—The realization that, in general, my thinking can have systematic distortions, and that I shouldn’t believe everything I think. This is basic less-wrong style rationalism, but it took years to work through all the actual consequences on me.

This is useful. Now that I think about it, I do this. Specifically, I make extremely unrealistic assumptions about how much I can do, so that my plans are impossible to accomplish. And then I feel bad for not accomplishing the thing.

I haven't tried to be mindful of that. The problem is that this is, I think, mainly subconscious. I basically never think things like "I am dumb" or "I am a failure", at least not in explicit language. I might have accidentally suppressed these thoughts and concluded that I had succeeded in not being harsh on myself. But maybe I only moved them to the subconscious level, where they are harder to debug.

↑ comment by Carl Feynman (carl-feynman) · 2024-04-26T14:18:09.310Z

I would highly recommend getting someone else to debug your subconscious for you. At least it worked for me. I don’t think it would be possible for me to have debugged myself.

My first therapist was highly directive. He’d say stuff like “Try noticing when you think X, and asking yourself what happened immediately before that. Report back next week.” And listing agenda items and drawing diagrams on a whiteboard. As an engineer, I loved it. My second therapist was more in the “providing supportive comments while I talk about my life” school. I don’t think that helped much, at least subjectively from the inside.

Here’s a possibly instructive anecdote about my first therapist. Near the end of a session, I feel like my mind has been stretched in some heretofore-unknown direction. It’s a sensation I’ve never had before. So I say, “Wow, my mind feels like it’s been stretched in some heretofore-unknown direction. How do you do that?” He says, “Do you want me to explain?” And I say, “Does it still work if I know what you’re doing?” And he says, “Possibly not, but it’s important you feel I’m trustworthy, so I’ll explain if you want.” So I say “Why mess with success? Keep doing the thing. I trust you.” That’s an example of a debugging procedure you can’t do to yourself.

comment by Johannes C. Mayer (johannes-c-mayer) · 2024-01-03T12:56:26.943Z

Here is the problem with people saying that something you do is complete garbage. Even when I consciously know that what I'm doing is good, and can think of all the reasons why it is good, some algorithm in my brain sends a reinforcement signal, which I cannot directly control, whenever somebody says that what I am doing is completely terrible.

I think sending these kinds of reinforcement signals is very bad, because these are the signals that, when sent often enough, make you not want to work on something anymore, even when you consciously think that it is the best thing you can do. Their impact on your mind is so strong that it cannot be overwritten or removed (or at least not easily). They are very hard to ignore at first, which is exactly when you need to ignore them to keep them from having this strong negative impact. You basically need to be in a mindset of "I don't give a fuck what you think" for them not to affect you. At least that is the most effective way I have discovered so far. But this has other negative side effects, like being more likely to ignore the good things the other person says.

It also seems that, to a significant extent, how you say something matters. You can say something in a demeaning way that puts yourself above the other person, which is not what you want. Instead, you should say it with a cold, philosopher-like demeanor. I think one of the superpowers of philosophers is that they are usually trained to talk in a way that is not about any person anymore: everyone knows that whatever is being said reflects not on what any person has said, but only on the content being discussed.

I would like my mind to be such that anybody could just say whatever they think is best for maximizing information flow, and I could just handle that information appropriately, but it seems I'm not able to do this. I think I'm pretty good at it, but not so good that it makes sense for me to ask you to optimize purely for information flow. I would like you to optimize for information flow, but also to say things in a way that doesn't trigger this reinforcement circuitry, which I think is very bad.

In future conversations, I'd like people to say "P9" instead of "you" or "Johannes", where P9 means the computer that is Johannes' brain, together with all the algorithms/processes that run and have run on it. This removes the "I" from the equation, so in principle nothing said about P9 should make me feel bad. I have used this technique to a limited extent in the past, and it worked pretty well.

Another thing that might be worth trying is to use the meditation technique of dissolving the self, and then see how the feedback is received and whether the qualia of negativity still arise.

I have talked to many people who said they subscribe to Crocker's rules, and I think I noticed this exact phenomenon in them, possibly every time. Sometimes it was so strong that they literally wanted to stop talking about the topic being discussed, when I was just being really straightforward, in a way that was too harsh, about what I think. I strongly recommend that these people don't say they subscribe to the standard version of Crocker's rules, because it clearly has a negative impact on them: it gives them reinforcement signals that make them not want to think about particular topics anymore, which seems extremely bad.

↑ comment by Viliam · 2024-01-08T13:04:48.236Z

I would like my mind to be such that anybody could just say whatever they think is best for maximizing information flow and I could just handle that information appropriately, but it seems like I'm not able to do this.

I think this is not realistic to achieve (although partial success is possible).

What I would recommend instead is to separate "honest feedback" from "emotional support", and to have a nonzero amount of the latter. I'm not sure what the proper social ritual to achieve this would be.

↑ comment by Mir (mir-anomaly) · 2024-01-04T02:45:10.632Z

Fwiw, you're on my shortlist of researchers whose potential I'm most excited about. I don't expect my judgment to matter to you (or maybe up to one jot), but I mention it just in case it helps defend against the self-doubt you experience as a result of doing things differently. : )

I don't know many researchers that well, but I try to find the ones that are sufficiently unusual-in-a-specific-way to make me feel hopefwl about them. And the stuff you write here reflects exactly the unusualness that makes me hopefwl: You actually think inside your own head.

Also, wrt defending against negative social reinforcement signals, it may be sort of epistemically-irrational, but I reinterpret [people disagreeing with me] as positive evidence that I'm just far ahead of them (something I actually believe). Notice how, when a lot of people tell you you're wrong, that is evidence for both [you are wrong] and [you are so much righter than them that they are unable to recognise how you are right (eg they lack the precursor concepts)].

Also, if you expect [competence at world-saving] to be normally (or lognormally) distributed, you should expect to find large gaps between the competence of the most competent people, simply because the tail flattens out the further out you go. In other words, P(you're Δ more competent than avg) gets closer to P(you're Δ+1 more competent than avg) as you increase Δ. This is one way to justify treating [other people not paying attention to you] as evidence for [you're in a more advanced realm of conversation], but it's far from the main consideration.
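A worked version of the tail-flattening step, assuming (for illustration only) that competence is a standard normal variable $Z$ with density $\varphi$, measured in standard deviations above the average. For $\Delta \ge 0$ the density is decreasing on $[\Delta, \Delta+1]$, so the gap between the two tail probabilities is at most the density at $\Delta$, which goes to zero:

$$
P(Z > \Delta) - P(Z > \Delta + 1) = \int_{\Delta}^{\Delta+1} \varphi(z)\,dz \le \varphi(\Delta) = \frac{1}{\sqrt{2\pi}}\, e^{-\Delta^2/2} \;\xrightarrow{\;\Delta \to \infty\;}\; 0
$$

For example, the gap is about 0.34 at $\Delta = 0$ but only about 0.0013 at $\Delta = 3$. Note that this sketch shows the two probabilities converging in absolute terms under the normal assumption; it is an illustration of the step, not the whole argument.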

I invite you to meditate on this Mathematical Diagram I made! I believe that your behaviour (wrt the dimension of consequentialist world-saving) is so far to the right of this curve that most of your peers will think your competence is far below theirs, unless they patiently have multiple conversations with you. That is, most people's deference limit is far to the left of your true competence.

I'm now going to further destroy the vibes of this comment by saying "poop!" If someone, in their head, notices themselves downvaluing the wisdom of what I previously wrote merely based on the silly vibes, their cognition is out of whack and they need to see a mechanic. This seems to be a decent litmus test for whether ppl have actual sensors for evidence/gears, or whether they're just doing (advanced) vibes-based pattern-matching. :P

↑ comment by mesaoptimizer · 2024-01-06T10:34:26.302Z

Can you explain why you use "hopefwl" instead of "hopeful"? I've seen this multiple times in multiple places by multiple people, but I do not understand the reasoning behind it. This is not a typo; it is a deliberate design decision by some people in the rationality community. Can you please help me understand?

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-01-06T10:41:45.024Z

This is an interesting concept. I wish it became a post.

↑ comment by Mir (mir-anomaly) · 2024-01-09T21:30:17.172Z

u'r encouraged to write it!