Superbabies: Putting The Pieces Together

post by sarahconstantin · 2024-07-11T20:40:05.036Z · LW · GW · 37 comments

This post was inspired by some talks at the recent LessOnline conference including one by LessWrong user “Gene Smith”.

Let’s say you want to have a “designer baby”. Genetically extraordinary in some way — super athletic, super beautiful, whatever.

6’5”, blue eyes, with a trust fund.

Ethics aside[1], what would be necessary to actually do this?

Fundamentally, any kind of “superbaby” or “designer baby” project depends on two steps:

1.) figure out what genes you ideally want;

2.) create an embryo with those genes.

It’s already standard to do a very simple version of this two-step process. In the typical course of in-vitro fertilization (IVF), embryos are usually screened for chromosomal abnormalities that would cause disabilities like Down Syndrome, and only the “healthy” embryos are implanted.

But most (partially) heritable traits and disease risks are not as easy to predict.

Polygenic Scores

If what you care about is something like “low cancer risk” or “exceptional athletic ability”, it won’t be down to a single chromosomal abnormality or a variant in a single gene. Instead, there’s typically a statistical relationship where many genes are separately associated with increased or decreased expected value for the trait.

This statistical relationship can be written as a polygenic score — given an individual’s genome, it’ll crunch the numbers and spit out an expected score. That could be a disease risk probability, or it could be an expected value for a trait like “height” or “neuroticism.”

Polygenic scores are never perfect — some people will be taller than the score’s prediction, some shorter — but for a lot of traits they’re undeniably meaningful, i.e. there will be a much greater-than-chance correlation between the polygenic score and the true trait measurement.

Where do polygenic scores come from?

Typically, from genome-wide association studies, or GWAS. These collect a lot of people’s genomes (the largest ones can have hundreds of thousands of subjects) and personal data potentially including disease diagnoses, height and weight, psychometric test results, etc. And then they basically run multivariate correlations. A polygenic score is a (usually regularized) multivariate regression best-fit model of the trait as a function of the genome.

A polygenic score can give you a rank ordering of genomes, from “best” to “worst” predicted score; it can also give you a “wish list” of gene variants predicted to give a very high combined score.
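As a rough illustration of the idea above, here is a minimal sketch of a linear polygenic score: a weighted sum of allele counts, used first to rank genomes and then to build a "wish list". The variant names, effect sizes, and embryo genotypes are all made up for the example; real scores use far more variants, and their weights come from a fitted GWAS model.

```python
from typing import Dict

def polygenic_score(genotype: Dict[str, int],
                    weights: Dict[str, float],
                    intercept: float = 0.0) -> float:
    """Linear score: intercept + sum of (effect size * allele count 0/1/2)."""
    return intercept + sum(w * genotype.get(variant, 0)
                           for variant, w in weights.items())

# Hypothetical effect sizes per copy of the scored allele (illustrative only).
weights = {"variant_A": 0.30, "variant_B": -0.10, "variant_C": 0.05}

# Hypothetical genotypes: number of copies of each scored allele.
embryos = {
    "embryo_1": {"variant_A": 2, "variant_B": 0, "variant_C": 1},
    "embryo_2": {"variant_A": 0, "variant_B": 2, "variant_C": 2},
    "embryo_3": {"variant_A": 1, "variant_B": 1, "variant_C": 0},
}

# Rank ordering from "best" to "worst" predicted score.
ranking = sorted(embryos,
                 key=lambda name: polygenic_score(embryos[name], weights),
                 reverse=True)

# "Wish list": two copies wherever the weight is positive, none otherwise.
wish_list = {v: (2 if w > 0 else 0) for v, w in weights.items()}
```

Under a purely additive model like this one, the wish-list genome always scores at least as high as any real genome, which is exactly why extrapolating far beyond the training data is a concern.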

Ideally, “use a polygenic score to pick or generate very high-scoring embryos” would result in babies that have the desired traits to an extraordinary degree. In reality, this depends on how “good” the polygenic scores are — to what extent they’re based on causal vs. confounded effects, how much of observed variance they explain, and so on. Reasonable experts seem to disagree on this.[2]

I’m a little out of my depth when it comes to assessing the statistical methodology of GWAS, so I’m interested in another question: even assuming you have a polygenic score you trust, what do you do next? How do you get a high-scoring baby out of it?

Massively Multiplexed, Body-Wide Gene Editing? Not So Much, Yet.

“Get an IVF embryo and gene-edit it to have the desired genes” (again, ethics and legality aside)[3] is a lot harder than it sounds.

First of all, we don’t currently know how to make gene edits simultaneously and abundantly in every tissue of the body.

Recently approved gene-editing therapies like Casgevy, which treats sickle-cell disease, are operating on easy mode. Sickle-cell disease is a blood disorder; the patient doesn’t have enough healthy blood cells, so the therapy consists of an infusion of the patient’s own blood stem cells which have been gene-edited to produce healthy blood cells.

Critically, the gene editing of the blood stem cells can be done in the lab; trying to devise an injectable or oral substance that would actually transport the gene-editing machinery to an arbitrary part of the body is much harder. (If the trait you’re hoping to affect is cognitive or behavioral, then there’s a good chance the genes that predict it are active in the brain, which is even harder to reach with drugs because of the blood-brain barrier.)

If you look at the list of 37 approved gene and cell therapies to date,

- 13 are just cord blood cells, or cell products injected into the gums, joints, skin, or other parts of the body (i.e. not gene therapy)

- 14 are blood cells genetically edited in the lab and injected into the bloodstream

- 1 is bone marrow cells genetically edited in the lab and reinjected into the bone marrow

- 2 are intravenous gene therapies that edit blood cells inside the patient

- 1 is a gene therapy injected into muscle to treat muscular dystrophy

- 1 is a gene therapy injected into the retina to treat blindness

- 1 is a gene therapy injected into wounds to modify the cells in the open wound

- 1 is a virus injected directly into skin cancer tumors

- 1 is made of genetically edited pancreatic cells injected into the portal vein of the liver, where they grow

- 1 is a gene therapy injected into the bloodstream that edits motor neuron cells.

In other words, almost all of these are “easy mode” — genes are either edited outside the body, in the bloodstream, or in unusually accessible places (wound surfaces, muscles, the retina).

We also don’t yet have so-called “multiplex” gene therapies that edit multiple genes at once. Not a single approved gene therapy targets more than one gene; and a typical polygenic score estimates that tens of thousands of genes have significant (though individually tiny) effects on the trait in question.

Finally, every known method of gene editing causes off-target effects — it alters parts of the genetic sequence other than the intended one. The more edits you hope to do, the more cumulative off-target effects you should expect; and thus, the higher the risk of side effects. Even if you could edit hundreds or thousands more genes at once than ever before, it might not be a good idea.
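To make the "cumulative off-target effects" point concrete, here is a small sketch of how the chance of at least one off-target change grows with the number of edits. It assumes each edit independently carries the same off-target probability; the 0.1% per-edit rate is a made-up placeholder, not a measured figure for any editing method.

```python
def prob_any_off_target(n_edits: int, per_edit_rate: float) -> float:
    """P(at least one off-target change) assuming independent, identical edits."""
    return 1.0 - (1.0 - per_edit_rate) ** n_edits

# Hypothetical per-edit off-target rate (illustrative assumption only).
RATE = 0.001

for n in (1, 10, 100, 1000):
    print(n, round(prob_any_off_target(n, RATE), 4))
```

Even with a small per-edit rate, the cumulative probability climbs quickly once the number of edits reaches the hundreds or thousands.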

So if you’re not going to use polygenic scores for gene editing, what can you do to produce a high-scoring individual?

Embryo Selection

“Produce multiple embryos via IVF, compute their polygenic scores, and only select the highest-scoring ones to implant” is feasible with today’s technology, and indeed it’s being sold commercially already. Services like Orchid will allow you to screen your IVF embryos for things like cancer risk and select the low-risk ones for implantation.

While this should work for reducing the risk of disease (and miscarriage!) it’s less useful for anything resembling “superbabies”.

Why? Well, a typical cycle of IVF will produce about 5 embryos, and you can choose the best one to implant.

If you’re just trying to dodge diseases, you pick the one with the lowest genetic risk score and that’s a win. Almost definitionally, your child’s disease risk will be lower than if you hadn’t done the test and had instead implanted an embryo at random.

But if you want a child who’s one-in-a-million exceptional, then probably none of your 5 embryos are going to have a polygenic score that extreme. Simple embryo selection isn’t powerful enough to make your baby “super”.
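A back-of-the-envelope sketch of why picking the best of 5 falls short: treat each embryo's polygenic score as an independent draw from a standard normal distribution and compare the expected best-of-5 with the one-in-a-million threshold. This is a simplifying assumption (sibling embryos vary less than unrelated people, so it is if anything generous), and it says nothing about how well the score predicts the trait.

```python
from statistics import NormalDist

STD_NORMAL = NormalDist()  # mean 0, standard deviation 1

def expected_best_of(n: int, lo: float = -8.0, hi: float = 8.0,
                     steps: int = 16000) -> float:
    """E[max of n iid standard normals], by midpoint integration of
    x * n * pdf(x) * cdf(x)**(n - 1) over [lo, hi]."""
    dx = (hi - lo) / steps
    total = 0.0
    for i in range(steps):
        x = lo + (i + 0.5) * dx
        total += x * n * STD_NORMAL.pdf(x) * STD_NORMAL.cdf(x) ** (n - 1) * dx
    return total

best_of_5 = expected_best_of(5)                   # in standard deviations
one_in_a_million = STD_NORMAL.inv_cdf(1 - 1e-6)   # threshold in standard deviations

print(f"expected best of 5: {best_of_5:.2f} SD")
print(f"one-in-a-million:   {one_in_a_million:.2f} SD")
```

Under these assumptions the best of 5 sits a bit over one standard deviation above average, while one-in-a-million is well over four.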

“Iterated Embryo Selection”?

In 2013, Carl Shulman and Nick Bostrom proposed that “iterated embryo selection” could be used for human cognitive enhancement, to produce individuals with extremely high IQ.

The procedure goes:

- select embryos that have high polygenic scores

- extract embryonic stem cells from those embryos and convert them to eggs and sperm

- cross the eggs and sperm to make new embryos

- repeat until large genetic changes have accumulated.

The idea is that you get to “reshuffle” the genes in your set of embryos as many times as you want.

It’s the same process as selective breeding — start with a diverse population and breed the most “successful” offspring to each other. If you look at the difference between wild and domesticated plants and animals, it’s clear this can produce quite dramatic changes!

And of course selective breeding is safer, and less likely to introduce unknown side effects, than massively multiplexed gene editing.

But unlike traditional selective breeding, you don’t have to wait for the organisms to grow up before you know if they’re “successful” — you can select based on genetics alone.

The downside is that each “generation” still might have a very slow turnaround — it takes 6 months for eggs to mature in vivo and it might be similarly time-consuming to generate eggs or sperm from stem cells in the lab.

Moreover, it’s not trivial to “just” turn an embryonic stem cell into an egg or sperm in the lab. That’s known as in vitro gametogenesis and though it’s an active area of research, we’re not quite there yet.

Iterated Meiosis?

There may be an easier way (as developmental biologist Metacelsus pointed out in 2022): meiosis.

His procedure is:

- take a diploid[4] cell line

- induce meiosis and generate many haploid cell lines

- genotype the cell lines and select the best ones

- fuse two haploid cells together to re-generate a diploid cell line

- repeat as desired.

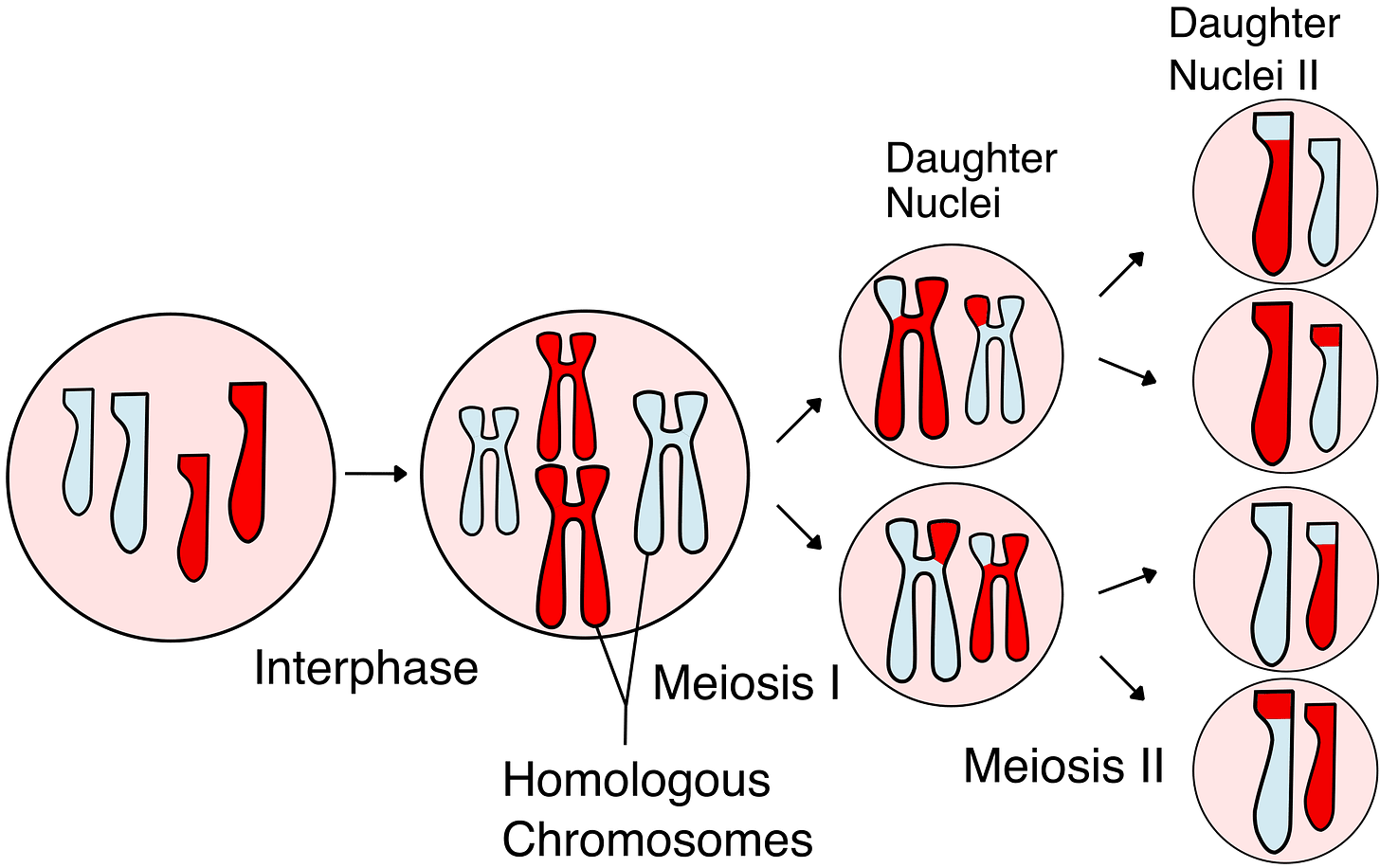

Meiosis is the process by which a diploid cell, with two copies of every chromosome, turns into four haploid cells each with a single copy of every chromosome.

Which “half” do the daughter cells get? Well, every instance of meiosis shuffles up the genome roughly randomly, determining which daughter cell will get which genes.
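The select-and-fuse loop can be sketched as a toy simulation. Everything here is an assumption for illustration: each locus contributes an additive effect, loci are treated as unlinked (real meiosis shuffles in larger blocks via crossovers), and the "genome" is just 200 random numbers per chromosome copy.

```python
import random

def meiosis(diploid, rng):
    """One haploid product: at each locus keep one of the two alleles at random."""
    copy_a, copy_b = diploid
    return [a if rng.random() < 0.5 else b for a, b in zip(copy_a, copy_b)]

def score(haploid):
    """Toy additive polygenic score of a haploid genome."""
    return sum(haploid)

def iterated_meiosis(diploid, rounds, n_haploids, rng):
    """Repeat: generate haploids, keep the two best, fuse them into a new diploid."""
    history = [score(diploid[0]) + score(diploid[1])]
    for _ in range(rounds):
        haploids = [meiosis(diploid, rng) for _ in range(n_haploids)]
        haploids.sort(key=score, reverse=True)
        diploid = (haploids[0], haploids[1])
        history.append(score(diploid[0]) + score(diploid[1]))
    return diploid, history

rng = random.Random(0)
n_loci = 200
start = ([rng.gauss(0, 1) for _ in range(n_loci)],
         [rng.gauss(0, 1) for _ in range(n_loci)])

# Best possible outcome: both copies carry the better allele at every locus.
ceiling = 2 * sum(max(a, b) for a, b in zip(*start))

final, history = iterated_meiosis(start, rounds=10, n_haploids=100, rng=rng)
print(f"start {history[0]:.1f}, after 10 rounds {history[-1]:.1f}, ceiling {ceiling:.1f}")
```

In this toy model the score can only climb toward the ceiling set by the starting cell's own alleles, since reshuffling one genome never introduces new variants.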

The meiosis-only method skips the challenges of differentiating a stem cell into a gamete, or growing two gametes into a whole embryo. It’s also faster — meiosis only takes a few hours.

The hard part, as he points out, is inducing meiosis to begin with; back in 2022 nobody knew how to turn a “normal” diploid somatic cell into a bunch of haploid cells.

Recently, he solved this problem! Here’s the preprint.

It’s a pretty interesting experimental process; basically it involved an iterative search process for the right set of gene regulatory factors and cell culture conditions to nudge cells into the meiotic state.

- what are the genes differentially expressed in cells that are currently undergoing meiosis?

- in a systematic screen, what transcription factors upregulate the expression of those characteristic “meiosis marker” genes?

- let’s test different combinations of those putative meiosis-promoting transcription factors, along with different cell culture media and conditions, to see which (if any) promote expression of meiosis markers

- of the cells that we could get to express our handful of meiosis markers, which of them are actually meiotic, i.e. have the whole same expression profile as “known good” meiotic cells?

- what gene regulatory factors are responsible for those successfully-meiotic cells? let’s add those to the list of pro-meiotic factors to add to the culture medium.

- do we get more meiotic cells this way? do they look meiotic morphologically under the microscope as well as in their gene expression?

- Yes? ok then we have a first draft of a protocol; let’s run it again and see how the cells change over time and what % end up meiotic (here it looks like about 15%)

This might generalize as a way of discovering how to transform almost any cell in vitro into a desired cell fate or state (assuming it’s possible at all.) How do you differentiate a stem cell into some particular kind of cell type? This sort of iterative, systematic “look for gene expression markers of the desired outcome, then see what combinations of transcription factors make cells express em” process seems like it could be very powerful.

Anyhow, induced meiosis in vitro! We have it now.

That means we can now do iterated meiosis.

At the end of the iterated meiosis process, what you have is a haploid egg-like cell with an “ideal” genetic profile (according to your chosen polygenic score, which by stipulation you trust.)

You’ll still have to fuse that haploid sort-of-egg with another haploid cell to get a diploid cell, for the final round.

And then you’ll have to turn that diploid cell into a viable embryo…which is itself nontrivial.

Generating Naive Pluripotent Cells

You can’t turn a random cell in your body into a viable embryo, even though a skin cell is diploid just like a zygote.

A skin cell is differentiated; it only makes skin cells. By contrast, a zygote is fully pluripotent; it needs to be able to divide and have its descendants grow into every cell in the embryo’s body.

Now, it’s been known for a while that you can de-differentiate a typical somatic cell (like a skin cell) into a pluripotent stem cell that can differentiate into different tissues. That’s what an induced pluripotent stem cell (iPSC) is!

The so-called Yamanaka factors, discovered by Shinya Yamanaka (who won the Nobel Prize for them) are four transcription factors; when you culture cells with them, they turn into pluripotent stem cells. And iPSCs can differentiate into virtually any kind of cell — nerve, bone, muscle, skin, blood, lung, kidney, you name it.

But can you grow an embryo from an iPSC? A viable one?

Until very recently the answer was “only in certain strains of mice.”

You could get live mouse pups from a stem cell created by growing a somatic mouse cell line with Yamanaka factors; but if you tried that with human cell lines you were out of luck.

In particular, ordinary pluripotent stem cells (whether induced or embryonic) typically can’t contribute to the germline — the cell lineage that ultimately gives rise to eggs and sperm. You can’t “grow” a whole organism without germline cells.

You need naive pluripotent stem cells to grow an embryo, and until last year nobody knew how to create them in vitro in any animal except that particular mouse strain.

In December 2023, Sergiy Velychko (postdoc at the Church Lab at Harvard) found that a variant of one of the Yamanaka factors, dubbed SuperSOX, could transform cell lines into naive pluripotent stem cells, whether they were human, pig, cow, monkey, or mouse. These cells were even able to produce live mouse pups in one of the mouse lines where iPSCs normally can’t produce viable embryos.

“Turn somatic cells into any kind of cell you want” is an incredibly powerful technology for tons of applications. You can suddenly do things like:

- grow humanized organs in animals, for transplants

- breed lab animals with some human-like organs to make them better disease models for preclinical research

- allow everybody (not just people who happen to have banked their own cord blood) to get stem cell transplants that are a perfect match — just make em from your own cells!

Also, if you combine it with induced meiosis, you can do new reproductive tech stuff.

You can take somatic cells from two (genetic) males or females, induce meiosis on them, fuse them together, and then turn that cell into a naive induced pluripotent stem cell and grow it into an embryo. In other words, gay couples could have children that are biologically “both of theirs.”

And, as previously mentioned, you can use iterative meiosis to create an “ideal” haploid cell, fuse it with another haploid cell, and then “superSOX” the result to get an embryo with a desired, extreme polygenic score.

What’s Missing?

Well, one thing we still don’t know how to do in vitro is sex-specific imprinting, the epigenetic phenomenon that causes some genes to be expressed or not depending on whether you got them from your mother or father.

This is relevant because, if you’re producing an embryo from the fusion of two haploid cells, you need the genes from one of the parent cells to behave like they’re “from the mother” while the genes from the other behave like they’re “from the father”, even though both “parents” are just normal somatic cells that you’ve turned haploid, not an actual egg and sperm.

This isn’t trivial; when genomic imprinting goes wrong we get fairly serious developmental disabilities like Angelman Syndrome.

Also, we don’t know how to make a “supersperm” (or haploid cell with a Y-chromosome, to be more accurate) from iterated meiosis.

That’s the main “known unknown” that I’m aware of; but of course we should expect there to be unknown-unknowns.

Is your synthetic “meiosis” really enough like the real thing, and is the Super-SOX-induced “naive pluripotency” really enough like the real thing, that you get a healthy viable embryo out of this procedure? We’ll need animal experiments, ideally in more than one species, before we can trust that this works as advertised. Ideally you’d follow a large mammal like a monkey, and wait a while — like a year or more — to see if the apparently “healthy” babies produced this way are messed up in any subtle ways.

In general, no, this isn’t ready to make you a designer baby just yet.

And I haven’t even touched on the question of how reliable polygenic scores are, and particularly how likely it is for an embryo optimized for an extremely high polygenic score to actually result in an extreme level of the desired trait.[5]

But it’s two big pieces of the puzzle (inducing meiosis and inducing naive pluripotency) that didn’t exist until quite recently.

Exciting times!

[1] and my own ethical view is that nobody’s civil rights should be in any way violated on account of their genetic code, and that reasonable precautions should be taken to make sure novel human reproductive tech is safe.

[2] especially for controversial traits like IQ.

[3] it’s famously illegal to “CRISPR” a baby.

[4] most cells in the human body are “diploid”, having 2 copies of every chromosome; only eggs and sperm are “haploid”, having 1 copy of every chromosome.

[5] “correlation is not causation” becomes relevant here; if too much of the polygenic score is picking up on non-causal correlates of the trait, then selecting an embryo to have an ultra-high score will be optimizing the wrong stuff and won’t help much.

Also, if you’re selecting an embryo to have an even higher polygenic score than anyone in the training dataset, it’s not clear the model will continue to be predictive. It’s probably not possible to get a healthy 10-foot-tall human, no matter how high you push the height polygenic score. And it’s not even clear what it would mean to be a 300-IQ human (though I’d be thrilled to meet one and find out.)

37 comments

comment by Metacelsus · 2024-07-14T15:51:40.595Z · LW(p) · GW(p)

Reposting a comment from the Substack:

>Recently, he solved this problem!

I'm flattered, but I actually haven't gotten all the way to haploid cells yet. As I wrote in my preprint and associated blog post, right now I can get the cells to initiate meiosis and progress about 3/4 of the way through it (specifically, to the pachytene stage). I'm still working on getting all the way to haploid cells and I have a few potentially promising approaches for this.

comment by habryka (habryka4) · 2024-07-17T02:28:15.296Z · LW(p) · GW(p)

Promoted to curated: I think this topic is quite important and there has been very little writing that helps people get an overview over what is happening in the space, especially with some of the recent developments seeming quite substantial and I think being surprising to many people who have been forecasting much longer genetic engineering timelines when I've talked to them over the last few years.

I don't think this post is the perfect overview. It's more like a fine starting point and intro, and I think there is space for a more comprehensive overview, and I would curate that as well, but it's the best I know of right now.

Thanks a lot for writing this!

(And for people who are interested in this topic I also recommend Sarah's latest post on the broader topic of multiplex gene editing)

comment by ErickBall · 2024-07-17T15:16:27.687Z · LW(p) · GW(p)

>it’s not even clear what it would mean to be a 300-IQ human

IQ is an ordinal score, not a cardinal one--it's defined by the mean of 100 and standard deviation of 15. So all it means is that this person would be smarter than all but about 1 in 10^40 natural-born humans. It seems likely that the range of intelligence for natural-born humans is limited by basic physiological factors like the space in our heads, the energy available to our brains, and the speed of our neurotransmitters. So a human with IQ 300 is probably about the same as IQ 250 or IQ 1000 or IQ 10,000, i.e. at the upper limit of that range.
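The "1 in 10^40" figure can be checked with a short sketch, assuming IQ is exactly normal with mean 100 and standard deviation 15 all the way out into the tail (an idealization, since no test is normed that far out).

```python
import math

def upper_tail(iq: float, mean: float = 100.0, sd: float = 15.0) -> float:
    """P(score >= iq) for a normal distribution, via the complementary error function."""
    z = (iq - mean) / sd
    return 0.5 * math.erfc(z / math.sqrt(2))

p_300 = upper_tail(300)
print(f"z = {(300 - 100) / 15:.2f} SD, tail probability = {p_300:.2e}")
```

`math.erfc` is used instead of `1 - cdf` because subtracting a value this close to 1 in floating point would round the result to zero.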

↑ comment by localdeity · 2024-07-18T19:04:06.262Z · LW(p) · GW(p)

The original definition of IQ, intelligence quotient, is mental age (as determined by cognitive test scores) divided by chronological age (and then multiplied by 100). A 6-year-old with the test scores of the average 9-year-old thus has an IQ of 150 by the ratio IQ definition.

People then found that IQ scores roughly followed a normal distribution, and subsequent tests defined IQ scores in terms of standard deviations from the mean. This makes it more convenient to evaluate adults, since test scores stop going up past a certain age in adulthood (I've seen some tests go up to age 21). However, when you get too many standard deviations away from the mean, such that there's no way the test was normed on that many people, it makes sense to return to the ratio IQ definition.

So an IQ 300 human would theoretically, at age 6, have the cognitive test scores of the average 18-year-old. How would we predict what would happen in later years? I guess we could compare them to IQ 200 humans (of which we have a few), so that the IQ 300 12-year-old would be like the IQ 200 18-year-old. But when they reached 18, we wouldn't have anything to compare them against.
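The ratio definition is simple enough to write down directly. This sketch only restates the arithmetic in the comment above; the function names are made up for the example.

```python
def ratio_iq(mental_age: float, chronological_age: float) -> float:
    """Ratio IQ: mental age divided by chronological age, times 100."""
    return 100.0 * mental_age / chronological_age

def mental_age_from_ratio_iq(iq: float, chronological_age: float) -> float:
    """Invert the definition: the age whose average test scores this person matches."""
    return iq * chronological_age / 100.0

print(ratio_iq(9, 6))                      # the 6-year-old scoring like a 9-year-old
print(mental_age_from_ratio_iq(300, 6))    # an IQ 300 child at age 6
print(mental_age_from_ratio_iq(300, 12), mental_age_from_ratio_iq(200, 18))
```

The last line shows why the comparison to IQ 200 people works: both cases map to the same mental age, one that is past the point where test scores stop rising, which is where the ratio definition runs out.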

I think that's the most you can extract from the underlying model.

↑ comment by GeneSmith · 2024-07-18T18:24:07.101Z · LW(p) · GW(p)

>So a human with IQ 300 is probably about the same as IQ 250 or IQ 1000 or IQ 10,000, i.e. at the upper limit of that range.

I would be quite surprised if this were true. We should expect scaling laws for brain volume alone to continue well beyond the current human range, and brain volume only explains about 10% of the variance in intelligence.

↑ comment by ErickBall · 2024-07-18T18:32:27.666Z · LW(p) · GW(p)

I wasn't saying it's impossible to engineer a smarter human. I was saying that if you do it successfully, then IQ will not be a useful way to measure their intelligence. IQ denotes where someone's intelligence falls relative to other humans, and if you make something smarter than any human, their IQ will be infinity and you need a new scale.

↑ comment by GeneSmith · 2024-07-18T18:35:00.692Z · LW(p) · GW(p)

I don't think this is the case. You can make a corn plant with more protein than any other corn plant, and using standard deviations to describe it will still be useful.

Granted, you may need a new IQ test to capture just how much smarter these new people are, but that's different than saying they're all the same.

↑ comment by ErickBall · 2024-07-18T18:42:58.595Z · LW(p) · GW(p)

This works for corn plants because the underlying measurement "amount of protein" is something that we can quantify (in grams or whatever) in addition to comparing two different corn plants to see which one has more protein. IQ tests don't do this in any meaningful sense; think of an IQ test more like a Mohs hardness scale, where you can figure out a new material's position on the scale by comparing it to a few with similar hardness and seeing which are harder and which are softer. If it's harder than all of the previously tested materials, it just goes at the top of the scale.

↑ comment by gwern · 2024-07-19T21:41:11.683Z · LW(p) · GW(p)

IQ tests include sub-tests which can be cardinal, with absolute variables. For example, simple & complex reaction time; forwards & backwards digit span; and vocabulary size. (You could also consider tests of factual knowledge.) It would be entirely possible to ask, 'given that reaction time follows a log-normalish distribution in milliseconds and loads on g by r = 0.X and assuming invariance, what would be the predicted lower reaction time of someone Y SDs higher than the mean on g?' Or 'given that backwards digit span is normally distributed...' This is as concrete and meaningful as grams of protein in maize. (There are others, like naming synonyms or telling different stories or inventing different uses of an object etc, where there is a clear count you could use, beyond just relative comparisons of 'A got an item right and B got an item wrong'.)

Psychometrics has many ways to make tests harder or deal with ceilings. You could speed them up, for example, and allot someone 30 seconds to solve a problem that takes a very smart person 30 minutes. Or you could set a problem so hard that no one can reliably solve it, and see how many attempts it takes to get it right (the more wrong guesses you make and are corrected on, the worse). Or you could make problems more difficult by removing information from it, and see how many hints it takes. (Similar to handicapping in Go.) Or you could remove tools and references, like going from an open-book test to a closed-book test. For some tests, like Raven matrices, you can define a generating process to create new problems by combining a set of rules, so you have a natural objective level of difficulty there. There was a long time ago an attempt to create an 'objective IQ test' usable for any AI system by testing them on predicting small randomly-sampled Turing machines - it never got anywhere AFAIK, but I still think this is a viable idea.

(And you increasingly see all of these approaches being taken to try to create benchmarks that can meaningfully measure LLM capabilities for just the next year or two...)

↑ comment by Linch · 2024-07-19T21:48:39.993Z · LW(p) · GW(p)

I think these are good ideas. I still agree with Erick's core objection that once you're outside of "normal" human range + some buffer, IQ as classically understood is no longer a directly meaningful concept so we'll have to redefine it somehow, and there are a lot of free parameters for how to define it (eg somebody's 250 can be another person's 600).

↑ comment by GeneSmith · 2024-07-18T22:12:30.389Z · LW(p) · GW(p)

You can definitely extrapolate out of distribution on tests where the baseline is human performance. We do this with chess ELO ratings all the time.

↑ comment by papetoast · 2024-07-22T07:21:54.311Z · LW(p) · GW(p)

I follow chess engines very casually as a hobby. Calibrating chess engines' computer-versus-computer ELO against human ELO is a real problem. I doubt extrapolating IQ over 300 will provide accurate predictions.

↑ comment by GeneSmith · 2024-07-22T17:06:26.479Z · LW(p) · GW(p)

Can you explain in more detail what the problems are?

↑ comment by papetoast · 2024-07-26T05:32:08.719Z · LW(p) · GW(p)

Take with a grain of salt.

Observation:

- Chess engines during development only play against themselves, so they use a relative ELO system that is detached from the FIDE ELO. https://github.com/official-stockfish/Stockfish/wiki/Regression-Tests#normalized-elo-progression https://training.lczero.org/?full_elo=1 https://nextchessmove.com/dev-builds/sf14

- It is very hard to find chess engines confidently telling you what their FIDE ELO is.

Interpretation / Guess: Modern chess engines probably need to use like some intermediate engines to transitively calculate their ELO. (Engine A is 200 ELO greater than players at 2200, Engine B is again 200 ELO better than A...) This is expensive to calculate and the error bar likely increases as you use more intermediate engines.

↑ comment by localdeity · 2024-07-26T10:34:53.546Z · LW(p) · GW(p)

>they use a relative ELO system

ELO itself is a relative system, defined by "If [your rating] - [their rating] is X, then we can compute your expected score [where win=1, draw=0.5, loss=0] as a function of X (specifically $\frac{1}{1 + 10^{-X/400}}$)."

>that is detached from the FIDE ELO

Looking at the Wiki, one of the complaints is actually that, as the population of rated human players changes, the meaning of a given rating may change. If you could time-teleport an ELO 2400 player from 1950 into today, they might be significantly different from today's ELO 2400 players. Whereas if you have a copy of Version N of a given chess engine, and you're consistent about the time (or, I guess, machine cycles or instructions executed or something) that you allow it, then it will perform at the same level eternally. Now, that being the case, if you want to keep the predictions of "how do these fare against humans" up to date, you do want to periodically take a certain chess engine (or maybe several) and have a bunch of humans play against it to reestablish the correspondence.

Also, I'm sure that the underlying model with ELO isn't exactly correct. It asserts that, if player A beats player B 64% of the time, and player B beats player C 64% of the time, then player A must beat player C 76% of the time; and if we throw D into the mix, who C beats 64% of the time, then A and B must beat D 85% and 76% of the time, respectively. It would be a miracle if that turned out to be exactly and always true in practice. So it's more of a kludge that's meant to work "well enough".

... Actually, as I read more, the underlying validity of the ELO model does seem like a serious problem. Apparently FIDE rules say that any rating difference exceeding 400 (91% chance of victory) is to be treated as a difference of 400. So even among humans in practice, the model is acknowledged to break down.
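The percentages quoted above follow from the common logistic form of the Elo expectation, which this sketch assumes: expected score = 1 / (1 + 10^(-X/400)) for a rating difference X. The function names are made up for the example.

```python
import math

def expected_score(rating_diff: float) -> float:
    """Expected score (win=1, draw=0.5, loss=0) for a given rating advantage."""
    return 1.0 / (1.0 + 10.0 ** (-rating_diff / 400.0))

def rating_diff_for(score: float) -> float:
    """Inverse: the rating advantage that yields a given expected score."""
    return 400.0 * math.log10(score / (1.0 - score))

step = rating_diff_for(0.64)           # gap between adjacent players A>B>C>D
a_vs_c = expected_score(2 * step)      # two steps apart
a_vs_d = expected_score(3 * step)      # three steps apart
capped = expected_score(400)           # the 400-point cap

print(f"step = {step:.0f} points")
print(f"A vs C: {a_vs_c:.0%}, A vs D: {a_vs_d:.0%}, 400-point gap: {capped:.0%}")
```

Rating differences add along the chain, so each extra 64% step multiplies the odds by the same factor; that built-in transitivity is the assumption being questioned above.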

>This is expensive to calculate

Far less expensive to make computers play 100 games than to make humans play 100 games. Unless you're using a supercomputer. Which is a valid choice, but it probably makes more sense in most cases to focus on chess engines that run on your laptop, and maybe do a few tests against supercomputers at the end if you feel like it.

>and the error bar likely increases as you use more intermediate engines.

It does, though to what degree depends on what the errors are like. If you're talking about uncorrelated errors due to measurement noise, then adding up N errors of the same size (i.e. standard deviation) would give you an error of √N times that size. And if you want to lower the error, you can always run more games.
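A quick simulation of the uncorrelated case, as a sketch: chain N rating comparisons, give each one independent Gaussian measurement noise, and compare the spread of the total with the √N prediction. The 20-point noise per link and the chain length of 9 are arbitrary illustrative choices.

```python
import math
import random
import statistics

def chained_error_sd(n_links: int, link_sd: float, trials: int,
                     rng: random.Random) -> float:
    """Empirical standard deviation of the summed error over a chain of links."""
    totals = [sum(rng.gauss(0.0, link_sd) for _ in range(n_links))
              for _ in range(trials)]
    return statistics.pstdev(totals)

rng = random.Random(1)
LINK_SD = 20.0   # hypothetical rating error per intermediate engine
N_LINKS = 9

observed = chained_error_sd(N_LINKS, LINK_SD, trials=20000, rng=rng)
predicted = LINK_SD * math.sqrt(N_LINKS)

print(f"observed {observed:.1f}, predicted {predicted:.1f}")
```

This only covers independent noise; a systematic bias shared by every link would add up linearly with N rather than as √N.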

However, if there are correlated errors, due to substantial underlying wrongness of the Elo model (or of its application to this scenario), then the total error may get pretty big. ... I found a thread talking about FIDE rating vs human online chess ratings, wherein it seems that 1 online chess ELO point (from a weighted average of online classical and blitz ratings) = 0.86 FIDE ELO points, which would imply that e.g. if you beat someone 64% of the time in FIDE tournaments, then you'd beat them 66% of the time in online chess. I think tournaments tend to give players more time to think, which tends to lead to more draws, so that makes some sense...

But it also raises possibilities like, "Perhaps computers make mistakes in different ways"—actually, this is certainly true; a paper (which was attempting to correspond FIDE to CCRL ratings by analyzing the frequency and severity of mistakes, which is one dimension of chess expertise) indicates that the expected mistakes humans make are about 2x as bad as those chess engines make at similar rating levels. Anyway, it seems plausible that that would lead to different ... mechanics.

Here are the problems with computer chess Elo ratings that Wikipedia discusses. Some come from the drawishness of high-level play, which also shows up in high-level human play:

Human–computer chess matches between 1997 (Deep Blue versus Garry Kasparov) and 2006 demonstrated that chess computers are capable of defeating even the strongest human players. However, chess engine ratings are difficult to quantify, due to variable factors such as the time control and the hardware the program runs on, and also the fact that chess is not a fair game. The existence and magnitude of the first-move advantage in chess becomes very important at the computer level. Beyond some skill threshold, an engine with White should be able to force a draw on demand from the starting position even against perfect play, simply because White begins with too big an advantage to lose compared to the small magnitude of the errors it is likely to make. Consequently, such an engine is more or less guaranteed to score at least 25% even against perfect play. Differences in skill beyond a certain point could only be picked up if one does not begin from the usual starting position, but instead chooses a starting position that is only barely not lost for one side. Because of these factors, ratings depend on pairings and the openings selected.[48] Published engine rating lists such as CCRL are based on engine-only games on standard hardware configurations and are not directly comparable to FIDE ratings.

Replies from: papetoast↑ comment by papetoast · 2024-07-27T06:52:06.119Z · LW(p) · GW(p)

Thanks for adding a much more detailed/factual context! This added more concrete evidence to my mental model of "ELO is not very accurate in multiple ways" too. I did already know some of the inaccuracies in how I presented it, but I wanted to write something rather than nothing, and converting vague intuitions into words is difficult.

↑ comment by tailcalled · 2024-07-25T08:59:15.981Z · LW(p) · GW(p)

IQ tests are built on item response theory, where people's IQ is measured in terms of how difficult tasks they can solve. The difficulty of tasks is determined by how many people can solve them, so there is an ordinal element to that, but by splitting the tasks off you could in principle measure IQ levels quite high, I think.
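As a rough illustration of the idea, here is a Python sketch of the simplest item response model, the one-parameter (Rasch) model. This is an assumption chosen for illustration, not a claim about how any particular IQ test is scored, and the function names and item difficulties are made up.

```python
import math


def p_correct(ability: float, difficulty: float) -> float:
    """Rasch (1PL) model: probability of solving an item of given difficulty."""
    return 1.0 / (1.0 + math.exp(-(ability - difficulty)))


def estimate_ability(responses, difficulties, lo=-6.0, hi=6.0, steps=1201):
    """Grid-search maximum-likelihood ability given 0/1 responses."""
    best_theta, best_ll = lo, -math.inf
    for i in range(steps):
        theta = lo + (hi - lo) * i / (steps - 1)
        ll = 0.0
        for r, b in zip(responses, difficulties):
            p = p_correct(theta, b)
            ll += math.log(p) if r else math.log(1.0 - p)
        if ll > best_ll:
            best_theta, best_ll = theta, ll
    return best_theta


if __name__ == "__main__":
    difficulties = [-1.0, 0.0, 1.0, 2.0]  # hypothetical item difficulties
    print(estimate_ability([1, 1, 0, 0], difficulties))
    print(estimate_ability([1, 1, 1, 0], difficulties))
```

In this model ability and difficulty live on the same scale, so adding harder items extends the range over which ability can be distinguished.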

↑ comment by tailcalled · 2024-07-25T08:55:24.856Z · LW(p) · GW(p)

IQ is an ordinal score in that its relationship to outcomes of interest is nonlinear, but for the most important outcomes of interest, e.g. ability to solve difficult problems or income or similar, the relationship between IQ and success at the outcome is exponential, so you'd be seeing accelerating returns for a while.

Presumably fundamental physics limits how far these exponential returns can go, but we seem quite far from those limits (e.g. we haven't even solved aging yet).

comment by Elias711116 (theanos@tutanota.com) · 2024-07-17T12:37:38.743Z · LW(p) · GW(p)

A skin cell is differentiated; it only makes stem cells.

I believe you meant to say: [...] it only makes skin cells?

↑ comment by quiet_NaN · 2024-07-18T11:43:20.701Z · LW(p) · GW(p)

I thought this first too. I checked on Wikipedia:

Adult stem cells are found in a few select locations in the body, known as niches, such as those in the bone marrow or gonads. They exist to replenish rapidly lost cell types and are multipotent or unipotent, meaning they only differentiate into a few cell types or one type of cell. In mammals, they include, among others, hematopoietic stem cells, which replenish blood and immune cells, basal cells, which maintain the skin epithelium [...].

I am pretty sure that what a skin cell makes by default when it divides is more skin cells, so you are likely correct.

comment by Akram Choudhary (akram-choudhary) · 2024-07-29T21:39:07.758Z · LW(p) · GW(p)

Entertaining as this post was, I think very few of us have AI timelines so long that IQ eugenics actually matters. Long timelines are like 2040-ish these days, so what use is a 16-year-old high-IQ child going to be in securing humanity's future?

comment by Nathan Helm-Burger (nathan-helm-burger) · 2024-07-14T15:51:49.648Z · LW(p) · GW(p)

There's another option for getting IVF to give you a better range of choices with no tricky cell line manipulation... Surgically remove the mother-to-be's ovaries. Dissect them and extract all the egg cells. Fertilize all the egg cells with the father-to-be's sperm. Then you get to do polygenic selection on the 'full set' instead of just a few candidates.

Replies from: GeneSmith↑ comment by GeneSmith · 2024-07-18T18:33:45.583Z · LW(p) · GW(p)

Apart from coming across as quite repulsive to most people, I wonder at the cost and success rate of maturing immature oocytes.

This is already an issue for child cancer patients who want to preserve future fertility but haven't hit puberty yet. As of 2021, there were only ~150 births worldwide using this technique.

The costs are also going to be a major issue here. Gain scales with sqrt(ln(number of embryos)). But cost per embryo produced and tested scales almost linearly. So the cost per IQ point is going to scale at like e^(x^2) / x for a gain of x, which is hilariously, absurdly bad.

I'd also want to see data on the number of immature oocytes we could actually extract with this technique, the rate at which those immature oocytes could be converted into mature oocytes, and the cost per oocyte.
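Taking the two scaling claims above at face value (gain proportional to sqrt(ln(N)) and cost proportional to N), here is a small Python sketch of how they combine. Units are arbitrary, constants are dropped, and the function names are hypothetical.

```python
import math


def relative_gain(n_embryos: float) -> float:
    """Expected gain in arbitrary units, using gain ~ sqrt(ln(N)); needs N > 1."""
    return math.sqrt(math.log(n_embryos))


def cost_per_unit_gain(n_embryos: float, cost_per_embryo: float = 1.0) -> float:
    """Total (linear) cost divided by the gain it buys."""
    return n_embryos * cost_per_embryo / relative_gain(n_embryos)


def embryos_needed(gain: float) -> float:
    """Invert gain = sqrt(ln(N)) to get N = exp(gain**2)."""
    return math.exp(gain ** 2)


if __name__ == "__main__":
    for n in (10, 100, 1000, 10000):
        print(n, round(relative_gain(n), 2), round(cost_per_unit_gain(n), 1))
```

Under these assumptions, doubling the gain requires raising the embryo count to the fourth power (for example, from 10 to 10,000), so total cost grows like exp(gain^2).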

comment by Ben Podgursky (ben-podgursky) · 2024-07-21T06:19:24.925Z · LW(p) · GW(p)

I haven't read much at all about the Iterated Meiosis proposal, but based on the current state of polygenic models I'd be pretty concerned that you end up selecting for a bunch of non-causal sites which were only selected as markers due to linkage disequilibrium (and iterated recombination makes the PRS a weaker and weaker predictor of the phenotype).

comment by quiet_NaN · 2024-07-18T12:49:38.314Z · LW(p) · GW(p)

Critically, the gene editing of the red blood cells can be done in the lab; trying to devise an injectable or oral substance that would actually transport the gene-editing machinery to an arbitrary part of the body is much harder.

I am totally confused by this. Mature red blood cells don't contain a nucleus, and hence no DNA. There is nothing to edit. Injecting blood cells produced by gene-edited bone marrow in vitro might work, but would only be a therapy, not a cure: it would have to be repeated regularly. The cure would be to replace the bone marrow.

So I resorted to reading through the linked FDA article. Relevant section:

The modified blood stem cells are transplanted back into the patient where they engraft (attach and multiply) within the bone marrow and increase the production of fetal hemoglobin (HbF), a type of hemoglobin that facilitates oxygen delivery.

Blood stem cells seem to be FDA jargon for hematopoietic stem cells. From the context, I would guess they are harvested from the patient's bone marrow, then CRISPRed, and then injected back into the bloodstream, where they find their way back to the bone marrow.

I still don't understand how they would outcompete the non-GMO bone marrow which produces the faulty red blood cells, though.

I would also take the opportunity to point out that the list of FDA-approved gene therapies tells us a lot about the FDA and very little about the state of the art. This is the agency which banned life-saving baby nutrition for two years [LW · GW], after all. Anchoring what is technologically possible to what the FDA approves would be like anchoring what is possible in mobile phone tech to what is accepted by the Amish.

Also, I think that editing of multi-cellular organisms is not required for designer babies at all.

- Start with a fertilized egg, which is just a single cell. Wait for the cell to split. After it has split, separate the cells into two cells. Repeat until you have a number of single-cell candidates.

- Apply CRISPR to these cells individually. Allow them to split again. Do a genome analysis on one of the two daughter cells. Select a cell line where only the desired change was made to the genome. Go back to step one and apply the next edit.

Crucially, the costs would only scale linearly with the number of edits. I am unsure how easy that "turn one two-cell embryo into two one-cell embryos" step is, though.
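To make the linear-scaling claim concrete, here is a toy Python sketch comparing the edit-verify-repeat loop above with attempting every edit in one round. It assumes each edit independently yields a clean clone with probability `p_clean` (a hypothetical parameter), and it ignores cell loss, mosaicism, and any limit on how many times an embryo can be split.

```python
def cells_screened_sequential(n_edits: int, p_clean: float) -> float:
    """Expected clones screened when edits are applied one at a time and
    each round is repeated until a clean clone is found (geometric trials)."""
    return n_edits / p_clean


def cells_screened_simultaneous(n_edits: int, p_clean: float) -> float:
    """Expected clones screened if all edits are attempted at once and all
    must succeed in the same cell, assuming independent outcomes."""
    return 1.0 / (p_clean ** n_edits)


if __name__ == "__main__":
    for n in (1, 5, 10, 20):
        print(n, cells_screened_sequential(n, 0.5), cells_screened_simultaneous(n, 0.5))
```

In this toy model the sequential loop grows linearly in the number of edits, while the all-at-once approach grows exponentially, which is why verifying after each edit matters.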

Of course, it would be neater to synthesize the baby's DNA from scratch, but while prices per base pair of synthesis have dropped a lot, they are clearly still too high to pay for building a baby (and there are likely other tech limitations).

comment by Review Bot · 2024-07-13T00:15:45.599Z · LW(p) · GW(p)

The LessWrong Review [? · GW] runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2025. The top fifty or so posts are featured prominently on the site throughout the year.

Hopefully, the review is better than karma at judging enduring value. If we have accurate prediction markets on the review results, maybe we can have better incentives on LessWrong today. Will this post make the top fifty?

comment by reconstellate · 2024-07-13T15:52:06.074Z · LW(p) · GW(p)

Replies from: None, philip-bellew↑ comment by Philip Bellew (philip-bellew) · 2024-07-20T00:24:49.323Z · LW(p) · GW(p)

People will pick traits they want, and I doubt any specific thing other than "doesn't have disorders" would be universal.

While I have reservations about the tech, leaving it in the hands of individuals seems massively preferable to government regulations on reproduction details. Especially if what you can control gets more precise. Even the worst individual eugenics fantasy has nothing on govt deciding that all children share particular traits and lack others.

It's also not clear to me where the difference between choosing offspring vs choosing a partner for offspring ever becomes something law should enforce...or even can, while allowing editing against disorders.

comment by Aligned? · 2024-07-14T01:15:02.624Z · LW(p) · GW(p)

I once believed in the dream of genetic uplift for humanity too.

Yet GWAS and CRISPR have been obstructed year after year. They even designated those scientists who wanted a better future as criminals, and then they created a global moratorium against gene editing.

... Then GPT AI arrived.

Over the space of only 4 years GPT has evolved from toddler stage cognitive ability to what soon might be PhD stage. This is an exponential curve! Where might GPT technology be in even 4 years?

Humanity has already been surpassed by AGI. It would take decades for genetically enhanced humans to reach maturity at which time AGI will be light years ahead of biological humans.

Superman has arrived: It is Humanoid GPT! Humans are no longer the topmost cognitive force in the universe.

Replies from: None↑ comment by [deleted] · 2024-07-14T01:21:04.898Z · LW(p) · GW(p)

Humanity has already been surpassed by AGI. [...] Humans are no longer the topmost cognitive force in the universe.

This is unnecessarily hyperbolic. We have not actually reached AGI yet. Moreover, while current LLM systems may be superhuman in terms of breadth of knowledge and speed of thought, they most certainly aren't in terms of depth of understanding, memory, agentic capability, and ability to use such cognitive tools to enact meaningful change in the physical world.

Replies from: Aligned?↑ comment by Aligned? · 2024-07-14T01:28:32.924Z · LW(p) · GW(p)

Thank you sunwillrise for your reply!

Yes, I actually agree with your disagreement. The quote was quite provocative.

However, the basic sentiment of my comment would seem to remain intact. If we actually even tried to launch a global scale effort to genetically engineer "superhumans" it might take at least 10 years to develop the technology ... and then it might be argued about for a few years ... and then it would take 20 years for the children of the uplift to develop. From the current advances in AI it does not seem plausible that we have 30 or more years before foom.

This forum is quite concerned about AI alignment. Aligning superhumans might be much much more difficult than with AI. At least with AI there is known programming -- with humans the programming is anything but digital (and often not that logical).

Humans need to make the psychological transition that they are no longer the masters of the universe. They are no longer in the driver's seat: AI is. Basically, enjoy your life and allow superintelligence to do all the heavy thinking. Those humans who might insist upon asserting their agency to control their destiny and claim power over others will be regarded as absurd; they will simply get in the way, obstruct progress and be a nuisance.

Replies from: GeneSmith↑ comment by GeneSmith · 2024-07-14T07:19:02.614Z · LW(p) · GW(p)

If we actually even tried to launch a global scale effort to genetically engineer "superhumans" it might take at least 10 years to develop the technology

This is definitely wrong. A global effort to develop this tech could easily bring it to fruition in a couple of years. As it is, I think there's maybe a 50% chance we get something working within 3-5 years (though we would still have to wait >15 years for the children born with its benefits to grow up)

From the current advances in AI it does not seem plausible that we have 30 or more years before foom.

I agree this is unlikely, though there remains a non-trivial possibility of some major AI disaster before AGI that results in a global moratorium on development.

This forum is quite concerned about AI alignment. Aligning superhumans might be much much more difficult than with AI. At least with AI there is known programming -- with humans the programming is anything but digital (and often not that logical).

I agree that aligning superhumans could potentially be a concern, but consider the benefits we would have over AGI:

- Literally hundreds of years of knowledge about the best ways to raise and train humans (not to mention a lot of biologically primed psychology that helps that training process along)

- The ability to edit and target other traits besides intelligence such as kindness or altruism

- We have guaranteed slow takeoff because they will take over a decade to reach cognitive capabilities of the level of our smartest people.

Consider also the limitations of even genetically engineered superhumans:

- They have no ability to rapidly and recursively self-improve

- They cannot copy themselves to other bodies

While these don't guarantee success, they do give us significant advantages over an AGI-based path to superintelligence. You get more than one shot with superhumans. Failure is not catastrophic.

Replies from: Aligned?↑ comment by Aligned? · 2024-07-16T05:52:11.462Z · LW(p) · GW(p)

I ran your first reply to my comment through Copilot for its assessment. Here is its response:

"Timeline for Genetic Engineering:

- Original Poster’s Claim: “[If we actually even tried to launch a global scale effort to genetically engineer ‘superhumans’ it might take at least 10 years to develop the technology.]”

- New Poster’s Response: “This is definitely wrong. A global effort to develop this tech could easily bring it to fruition in a couple of years. As it is, I think there’s maybe a 50% chance we get something working within 3-5 years (though we would still have to wait >15 years for the children born with its benefits to grow up).”

- Assessment: The new poster’s claim is overly optimistic. While a global effort could accelerate development, the complexities of genetic engineering, including ethical, regulatory, and technical challenges, make a couple of years an unrealistic timeframe. A more plausible estimate would be several years to a decade."

One scenario in which rapid development of genetic engineering seems plausible is if humanity were to suddenly face a crisis. Within even the next year or two, one such crisis could arise if Western nations began to exhibit the kind of fertility collapse that has already occurred in Asian nations. Such a collapse could potentially be sparked if humanoid robots are mass-produced starting next year; this might lead parents-to-be to avoid having children who might never be economically viable in an AI robot world. This is Copilot's response:

"Accelerated Development of Genetic Engineering

- Urgency Driven by AI Advancements:

- Scenario: If humanity realizes that AI has significantly outpaced human capabilities, there could be a sudden and intense focus on developing genetic engineering technologies to enhance human abilities. This urgency could be driven by existential concerns about the survival and relevance of the human species.

- Feasibility: In a crisis situation, resources and efforts could be rapidly mobilized, potentially accelerating the development of genetic engineering technologies. Historical examples, such as the rapid development of vaccines during the COVID-19 pandemic, demonstrate how urgent needs can lead to swift scientific advancements.

- Fertility Collapse as a Catalyst:

- Scenario: A sudden and severe fertility collapse in Western nations, similar to trends observed in some Asian countries, could create a desperate need for solutions to ensure the continuation of the human species. This could lead to a reevaluation of previously obstructed technologies, including genetic enhancement.

- Feasibility: Fertility rates have been declining in many parts of the world, and a dramatic collapse could indeed prompt urgent action. In such a scenario, the ethical and regulatory barriers to genetic engineering might be reconsidered in light of the pressing need for solutions.

Ethical and Practical Considerations

- Ethical Dilemmas:

- Consideration: Even in a crisis, the ethical implications of genetic engineering must be carefully considered. Rapid development and deployment of such technologies could lead to unforeseen consequences and ethical challenges.

- Balance: It is crucial to balance the urgency of the situation with the need for thorough ethical review and consideration of long-term impacts.

- Regulatory and Societal Acceptance:

- Consideration: In a desperate situation, regulatory frameworks might be adapted to allow for faster development and implementation of genetic engineering technologies. However, societal acceptance would still be a significant factor.

- Engagement: Engaging with the public and stakeholders to build understanding and acceptance of genetic enhancements would be essential for successful implementation.

Conclusion

Your scenario highlights how urgent and existential threats could potentially accelerate the development and acceptance of genetic engineering technologies. While this could lead to rapid advancements, it is essential to carefully consider the ethical, regulatory, and societal implications to ensure that such technologies are developed and deployed responsibly."

Now another scenario is introduced in which humanity desperately needs a savior generation of genetically enhanced humans to save itself from the AI that it created but was unable to control: "

AI as the Catalyst for Genetic Engineering

- AI-Induced Crisis:

- Scenario: As AI continues to advance rapidly, it could reach a point where it poses significant risks to human control and safety. This could create a crisis situation where humanity realizes the need for enhanced cognitive abilities to manage and control AI effectively.

- Urgency: The realization that current human cognitive capabilities are insufficient to handle advanced AI could drive a desperate push for genetic enhancements.

- Time Lag for Genetic Enhancements:

- Development and Maturity: Even if genetic engineering technologies were developed quickly, it would still take around 20 years for a new generation of enhanced humans to mature and reach their full cognitive potential.

- Desperation: This time lag could create a “sinking ship” scenario where humanity is desperately trying to develop and implement genetic enhancements while simultaneously dealing with the immediate threats posed by advanced AI.

Ethical and Practical Considerations

- Ethical Dilemmas:

- Desperation vs. Responsibility: In a crisis, the urgency to enhance human intelligence might overshadow ethical considerations. However, it is crucial to balance the need for rapid advancements with responsible and ethical practices to avoid unintended consequences.

- Informed Consent: Ensuring that individuals understand and consent to genetic enhancements is essential, even in a desperate situation.

- Regulatory and Societal Challenges:

- Rapid Policy Changes: In response to an AI-induced crisis, regulatory frameworks might need to be adapted quickly to allow for the development and implementation of genetic enhancements.

- Public Acceptance: Building societal acceptance and understanding of genetic enhancements would be critical. Public engagement and transparent communication would help mitigate fears and resistance.

Conclusion

Your scenario highlights the potential for AI to act as a catalyst for rapid advancements in genetic engineering. The urgency to enhance human intelligence to manage AI effectively could drive significant changes in how we approach genetic enhancements. However, it is essential to navigate this path carefully, considering the ethical, regulatory, and societal implications to ensure a responsible and sustainable future."

Replies from: GeneSmith, abandon↑ comment by GeneSmith · 2024-07-16T18:02:57.182Z · LW(p) · GW(p)

I have to say, I don’t find these bullet point lists developed by AIs to be very insightful. They are too long with too few insights.

It’s also worth pointing out that the AI almost certainly doesn’t know about recent developments in iterated meiosis or super-SOX.

↑ comment by dirk (abandon) · 2024-07-16T12:34:08.209Z · LW(p) · GW(p)

LLMs' guesses aren't especially valuable to me. I understand wanting to expand out your half-formed thoughts, but I'd rather you condense the output down to only the points you want to argue, rather than pasting it in wholesale.