AI timeline predictions: are we getting better?

post by Stuart_Armstrong · 2012-08-17T07:07:11.196Z · 81 comments

EDIT: Thanks to Kaj's work, we now have more rigorous evidence on the "Maes-Garreau law" (the idea that people will predict AI arriving just before they die). This post has been updated with extra information. The original data used for this analysis can now be found here.

Thanks to some sterling work by Kaj Sotala and others (such as Jonathan Wang and Brian Potter, all paid for by the gracious Singularity Institute, a fine organisation that I recommend everyone look into), we've managed to put together a database listing all the AI predictions that we could find. The list is necessarily incomplete, but we found as much as we could and collated the data so that we could get an overview of what people have been predicting in the field since Turing.

We retained 257 predictions in total, of varying quality (in our expanded definition, philosophical arguments such as "computers can't think because they don't have bodies" count as predictions). Of these, 95 could be construed as giving timelines for the creation of human-level AIs. And "construed" is the operative word - very few were in a convenient "By golly, I give a 50% chance that we will have human-level AIs by XXXX" format. Some gave ranges; some were surveys of various experts; some predicted other things (such as child-like AIs, or superintelligent AIs).

Where possible, I collapsed these down to a single median estimate, making some somewhat arbitrary choices and judgement calls. When a range was given, I took the mid-point of that range. If a year was given with a 50% likelihood estimate, I took that year. If it was the collection of a variety of expert opinions, I took the prediction of the median expert. If the author predicted some sort of AI by a given date (partial AI or superintelligent AI), I took that date as their estimate rather than trying to correct it in one direction or the other (there were roughly as many predictions of subhuman AI as of superhuman AI in the list, and not that many of either). I read extracts of the papers to make judgement calls when interpreting problematic statements like "within thirty years" or "during this century" (is that a range or an end-date?).
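The mechanical part of these collapsing rules can be written down directly. The following is a minimal Python sketch, assuming a hypothetical record layout (the `kind` field and its values are invented for illustration and are not the database's actual schema). The manual judgement calls on phrases like "within thirty years" are not modelled.

```python
from statistics import median


def collapse_to_point_estimate(prediction):
    """Reduce one prediction record to a single year.

    Hypothetical record shapes (illustrative only):
      {"kind": "range", "start": 2020, "end": 2040}
      {"kind": "fifty_percent", "year": 2045}
      {"kind": "survey", "years": [2030, 2040, 2050, 2075]}
      {"kind": "point", "year": 2029}  # e.g. partial or superintelligent AI by a date
    """
    kind = prediction["kind"]
    if kind == "range":
        # A stated range is replaced by its mid-point.
        return (prediction["start"] + prediction["end"]) / 2
    if kind == "fifty_percent":
        # A year given with 50% likelihood is taken as-is.
        return prediction["year"]
    if kind == "survey":
        # A collection of expert opinions becomes the median expert's year.
        return median(prediction["years"])
    if kind == "point":
        # Dates for sub- or superhuman AI are used without correction.
        return prediction["year"]
    raise ValueError(f"unknown prediction kind: {kind}")
```

For example, a range of 2020 to 2040 collapses to 2030, and a four-expert survey of 2030, 2040, 2050 and 2075 collapses to 2045 (the mean of the two middle values).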

So some biases will certainly have crept in during the process. That said, it's still probably the best data we have. So keeping all that in mind, let's have a look at what these guys said (and it was mainly guys).

There are two stereotypes about predictions in AI and similar technologies. The first is the Maes-Garreau law: technologies are supposed to arrive... just within the lifetime of the predictor!

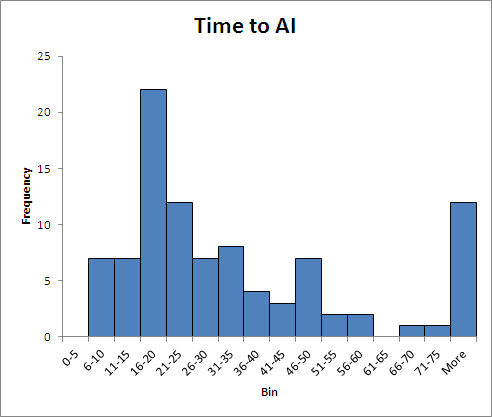

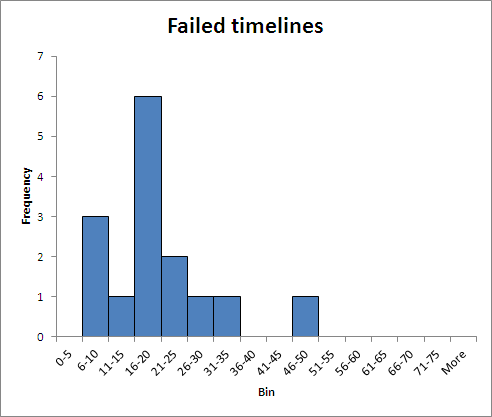

The other stereotype is the informal 20-30 year range for any new technology: the predictor knows the technology isn't immediately available, but puts it in a range where people would still be likely to worry about it. And so the predictor gets kudos for addressing the problem or the potential, and is safely retired by the time it (doesn't) come to pass. Is either of these stereotypes borne out by the data? Well, here is a histogram of the various "time to AI" predictions:

As can be seen, the 20-30 year stereotype is not exactly borne out, but a 15-25 one would be. Over a third of predictions are in this range. If we ignore predictions more than 75 years into the future, 40% are in the 15-25 range, and 50% are in the 15-30 range.
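The window shares quoted above are simple proportions over the time-to-AI values. As a sketch, assuming inclusive endpoints and using a made-up toy list rather than the real database, they could be computed like this (`share_in_window` is a hypothetical helper):

```python
def share_in_window(times_to_ai, low, high, cutoff=None):
    """Fraction of time-to-AI predictions (in years) with low <= t <= high.

    If `cutoff` is given, predictions more than `cutoff` years out are dropped
    from the denominator first, mirroring "if we ignore predictions more than
    75 years into the future".
    """
    kept = [t for t in times_to_ai if cutoff is None or t <= cutoff]
    if not kept:
        return 0.0
    hits = sum(1 for t in kept if low <= t <= high)
    return hits / len(kept)


# Invented toy data: years from each prediction to the predicted arrival of AI.
toy = [10, 15, 18, 20, 25, 28, 40, 50, 100, 200]
```

On this toy list, `share_in_window(toy, 15, 25)` gives 0.4, rising to 0.5 once the two predictions beyond 75 years are excluded with `cutoff=75`. Excluding long-range predictions shrinks the denominator, which is why the quoted percentages go up.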

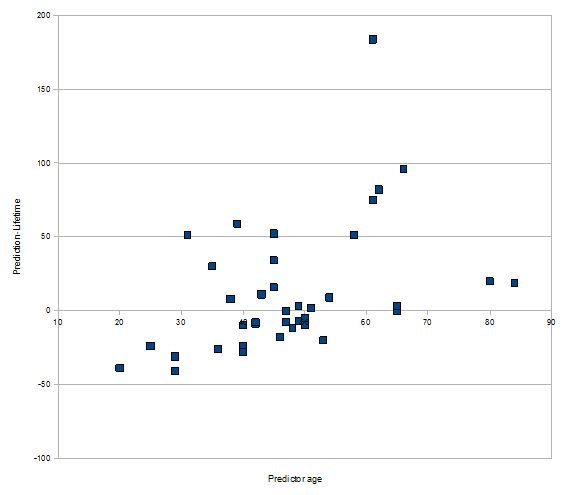

Apart from that, there is a gradual tapering off, a slight increase at 50 years, and twelve predictions beyond three quarters of a century. Eyeballing this, there doesn't seem to be much evidence for the Maes-Garreau law. Kaj looked into this specifically, plotting (life expectancy) minus (time to AI) versus the age of the predictor; the Maes-Garreau law would expect the data to be clustered around the zero line:

Most of the data seems to be decades out from the zero point (note the scale on the y axis). You could argue, possibly, that fifty year olds are more likely to predict AI just within their lifetime, but this is a very weak effect. I see no evidence for the Maes-Garreau law: of the 37 predictions Kaj retained, only 6 (16%) were within five years (in either direction) of the expected death date.
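The quantity on that plot, and the "within five years" count, can be sketched as below. This assumes "life expectancy" means the predictor's remaining expected years (a uniform 80-year lifespan minus their age at the time of prediction, borrowing the 80-year figure from Kaj's comment further down). The function names and the toy records are hypothetical.

```python
LIFE_EXPECTANCY = 80  # assumed uniform lifespan in years


def maes_garreau_gap(age_at_prediction, time_to_ai, life_expectancy=LIFE_EXPECTANCY):
    """Remaining expected years of life minus predicted years until AI.

    Zero means AI is predicted to arrive exactly at the predictor's expected
    death; positive means before it, negative means after it.
    """
    years_left = life_expectancy - age_at_prediction
    return years_left - time_to_ai


def count_near_death(records, window=5):
    """Count (age, time_to_ai) pairs whose gap lies within +/- window years."""
    return sum(1 for age, t in records if abs(maes_garreau_gap(age, t)) <= window)


# Invented toy records: (age when predicting, years until predicted AI).
toy_records = [(50, 28), (40, 20), (60, 60), (35, 48)]
```

Here the gaps are 2, 20, -40 and -3, so two of the four toy predictions would count as Maes-Garreau-style. Using the absolute value treats "just before" and "just after" death symmetrically, matching "in either direction" above.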

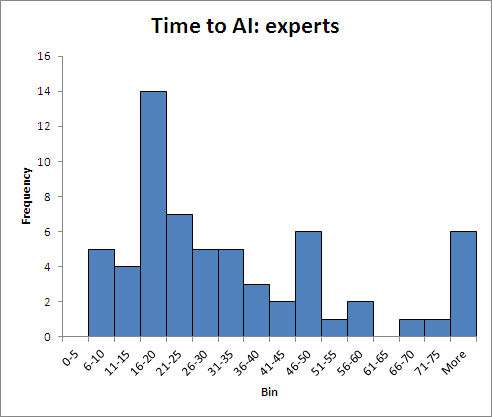

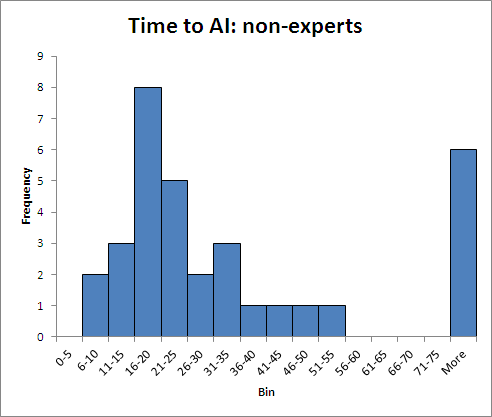

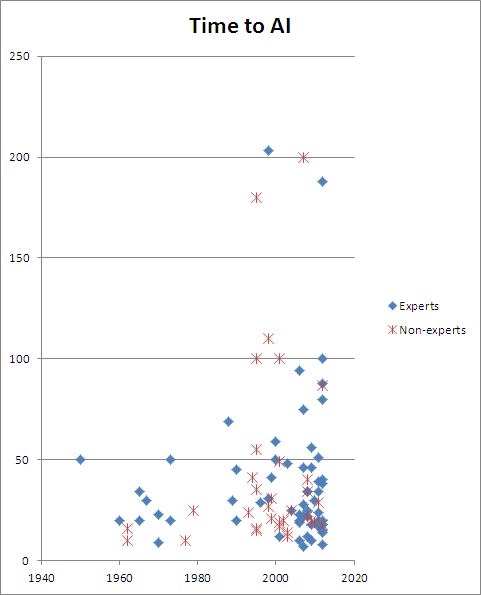

But not all predictions are created equal. 62 of the predictors were labelled "experts" in the analysis - these had some degree of expertise in fields that were relevant to AI. The other 33 were amateurs - journalists, writers and such. Decomposing into these two groups showed very little difference, though:

The only noticeable difference is that amateurs lacked the upswing at 50 years, and were relatively more likely to push their predictions beyond 75 years. This does not look like good news for the experts - if their performance can't be distinguished from amateurs, what contributions is their expertise making?

But I've been remiss so far - combining predictions that we know are false (because their deadline has come and gone) with those that could still be true. If we look at predictions that have failed, we get this interesting graph:

This looks very similar to the original graph. The main difference is the lack of very long-range predictions. This is not, in fact, because there has not yet been enough time for these predictions to be proved false, but because prior to the 1990s, there were actually no predictions with a timeline greater than fifty years. This can best be seen on this scatter plot, which plots the time predicted to AI against the date the prediction was made:

As can be seen, as time elapses, people become more willing to predict very long ranges. But this is something of an artefact: in the early days of computing, people were very willing to predict that AI was impossible. Since this didn't give a timeline, their "predictions" didn't show up on the graph. In recent times, people seem a little less likely to claim AI is impossible, and that claim has been replaced by these "in a century or two" timelines.
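Both steps in this part of the analysis are easy to express: a prediction has failed once its implied arrival year has passed, and the trend in the scatter plot can be summarised by a least-squares slope. The sketch below is illustrative only. It assumes (year made, years to AI) pairs, takes 2012 as the cut-off year, and uses invented toy data; a straight-line fit is a swapped-in summary, not the method used for the plot.

```python
from statistics import mean


def split_failed(predictions, as_of=2012):
    """Split (year_made, years_to_ai) pairs into failed and still-open predictions.

    A prediction counts as failed once its implied arrival year
    (year_made + years_to_ai) is earlier than `as_of`.
    """
    failed = [(y, t) for y, t in predictions if y + t < as_of]
    still_open = [(y, t) for y, t in predictions if y + t >= as_of]
    return failed, still_open


def horizon_trend(predictions):
    """Ordinary least-squares slope of years_to_ai against year_made.

    A positive slope means later predictors tend to name longer horizons.
    Requires at least two distinct values of year_made.
    """
    xs = [y for y, _ in predictions]
    ys = [t for _, t in predictions]
    mx, my = mean(xs), mean(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


# Invented toy data: (year the prediction was made, years until predicted AI).
toy = [(1960, 20), (1970, 25), (1995, 30), (2005, 100), (2010, 150)]
failed, still_open = split_failed(toy)
```

Only the 1960 and 1970 toy predictions (implied arrival in 1980 and 1995) count as failed. A single slope is easily dominated by a few long-range outliers, which is one more reason the post reads the scatter plot by eye rather than through a fitted number.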

Apart from that one difference, predictions look remarkably consistent over the span: modern predictors are claiming that about the same amount of time will elapse before AI arrives as their (incorrect) predecessors did. This doesn't mean that the modern experts are wrong - maybe AI really is imminent this time round, maybe modern experts have more information and are making more finely calibrated guesses. But in a field like AI prediction, where experts lack feedback for their pronouncements, we should expect them to perform poorly, and for biases to dominate their thinking. This seems the likely hypothesis - it would be extraordinarily unlikely that modern experts, free of biases and full of good information, would reach exactly the same prediction distribution as their biased and incorrect predecessors.

In summary:

- Over a third of predictors claim AI will happen 15-25 years in the future.

- There is no evidence that predictors are predicting AI happening towards the end of their own life expectancy.

- There is little difference between experts and non-experts (some possible reasons for this can be found here).

- There is little difference between current predictions, and those known to have been wrong previously.

- It is not unlikely that recent predictions are suffering from the same biases and errors as their predecessors.

81 comments

comment by MichaelHoward · 2012-08-15T00:10:21.819Z

> we've managed to put together a database listing all AI predictions that we could find...

Have you looked separately at the predictions made about milestones that have now happened (e.g. beat Grand Master/respectable amateur at Jeopardy!/chess/driving/backgammon/checkers/tic-tac-toe/WWII) for comparison with the future/AGI predictions?

I'm especially curious about the data for people who have made both kinds of prediction, what correlations are there, and how the predictions of things-still-to-come look when weighted by accuracy of predictions of things-that-happened-by-now.

↑ comment by gwern · 2012-08-17T19:09:19.016Z

Are there any long-term sets of predictions for anything but chess? I don't recall reading anyone ever speculating about AI and, say, Jeopardy! before info about IBM's Watson began to leak.

EDIT: XiXiDu points out on Google+ that there have been predictions and bets made on computer Go. That's true, but I'm not sure how far back they go: with computer chess, the predictions start in the 1940s or 1950s, giving a ~50-year window. With computer Go, I expect it to be over by 2030 or so, giving a 40-year window if people started seriously prognosticating back in the '90s, well before Monte-Carlo tree search revolutionized computer Go.

comment by lukeprog · 2012-08-14T18:51:07.900Z

> all paid for by the gracious Singularity Institute - a fine organisation that I recommend everyone look into

Lol, this sounds to me like I was personally twisting one of your arms while you typed this post with the other hand. :)

↑ comment by Alicorn · 2012-08-14T19:03:24.381Z

Personally? I think you'd have your assistant do that.

↑ comment by Kaj_Sotala · 2012-08-15T09:16:51.486Z

Now I have a mental image of somebody twisting Stuart's arm with one hand, while using his other hand to fill in a Google Docs spreadsheet to record the amount of time he's spent twisting people's arms today.

↑ comment by Stuart_Armstrong · 2012-08-14T21:36:57.857Z

Can I have my wrist back now, please master?

comment by JenniferRM · 2012-08-17T10:32:56.879Z

I copy and pasted the "Time To AI" chart and did some simple graphic manipulations to make the vertical and horizontal axis equal, extend the X-axis, and draw diagonal lines "down and to the right" to show which points predicted which dates. It was an even more interesting graphic that way!

It sort of looked like four or five gaussians representing four or five distinct theories were on display. All the early predictions (I assume that first one is Turing himself) go with a sort of "robots by 2000" prediction scheme that seems consistent with the Jetsons and what might have happened without "the great stagnation". All of the espousers of this theory published before the AI winter and you can see a gap in predictions being made on the subject from about 1978 to about 1994. Predicting AGI arrival in 2006 was never trendy; it seems to have always been predicted earlier or later.

The region from 2015 thru 2063 has either one or two groups betting on it because instead of "gaussian-ish" it is strongly weighted towards the front end, suggesting perhaps a bimodal group that isn't easy to break into two definite groups. One hump sometimes predicts dates out as late as the 2050's, but the main group really likes the 2020's and 2030's. The first person to express anything like this theory was an expert in about 1979 (before the AI winter really set in, which is interesting) and I'm not sure who it was off the top of my head. There's a massive horde expressing this general theory, but they seem to have come in a wave of non-experts during the dotcom bubble (predicting early-ish) and then there's a gap in the aftermath of the bubble, then a wave of experts predicting a bit later.

Like 2006, the year 2072 is not very trendy for AGI predictions. However, around 2080 to 2110 there seems to be a cluster that was led by three non-expert opinions expressed in 1999 to 2003 (i.e. the dotcom bubble aftermath). A few years later five experts chime in to affirm the theory. I don't recognize the theory by name or rhetoric but my rough label for their theory might be "the singularity is late" just based on the sparse data.

The final coherent theory seems to be four people predicting "2200"; my guess here is just that it's really far in the future and a nice round number. Four people do this, two experts and two non-experts. It looks like two pre-bubble and two post-bubble?

For what it's worth, eyeballing my re-worked "Time to AI" figure indicates a median of about 2035, and my last moderately thoughtful calculation gave a median arrival of AGI at about 2037, with later arrivals being more likely to be "better" and, in the meantime, prevention of major wars or arms races being potentially more important to work on than AGI issues. The proximity of these dates to the year 2038 is pure ironic gravy, though I have always sort of suspected that one chunk of probability mass should take the singularity seriously because if it happens then it will be enormously important, while another chunk of probability mass should be methodologically mindful of the memetic similarities between the Y2K Bug and the Singularity (i.e. both of them being non-supernatural computer-based eschatologies which, whatever their ultimate truth status, would naturally propagate in roughly similar ways before the fact was settled).

Replies from: datadataeverywhere↑ comment by datadataeverywhere · 2012-09-12T10:41:14.050Z · LW(p) · GW(p)

How many degrees of freedom does your "composition of N theories" theory have? I'm not inclined to guess, since I don't know how you went about this. I just want to point out that 260 is not many data points; clustering is very likely going to give highly non-reproducible results unless you're very careful.

Replies from: JenniferRM↑ comment by JenniferRM · 2012-09-12T16:22:24.319Z · LW(p) · GW(p)

I went about it by manipulating the starting image in Microsoft Paint, stretching, annotating, and generally manipulating it until the "biases" (like different scales for the vertical and horizontal axis) were gone and inferences that seemed "sorta justified" had been crudely visualized. Then I wrote text that put the imagery into words, attempting to functionally serialize the image (like being verbally precise where my visualization seemed coherent, and verbally ambiguous where my visualization seemed fuzzy, and giving each "cluster" a paragraph).

Based on memory I'd guess it was 90-250 minutes of pleasantly spent cognitive focus, depending on how you count? (Singularity stuff is just a hobby, not my day job, and I'm more of a fox than a hedgehog.) The image is hideous relative to "publication standards for a journal", and an honest methods section would mostly just read "look at the data, find a reasonable and interesting story, and do your best to tell the story well" and so it would probably not be reproducible by people who didn't have similar "epistemic tastes".

Despite the limits, if anyone wants to PM me an email address (plus a link back to this comment to remind me what I said here), I can forward the re-processed image to you so you can see it in all its craptastic glory.

comment by CarlShulman · 2012-08-15T00:53:29.150Z

You might break out the predictions which were self-selected, i.e. people chose to make a public deal of their views, as opposed to those which were elicited from less selected groups, e.g. surveys at conferences. One is more likely to think it worth talking about AI timelines in public if one thinks them short.

comment by Shmi (shminux) · 2012-08-15T20:17:22.346Z

I take one thing from this post. Provided your analysis can be trusted, I should completely ignore anyone's predictions on the matter as pure noise.

↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2012-08-22T07:52:01.565Z

I don't disagree - unless that means you use your own prediction instead, which is the usual unconscious sequelae to 'distrust the experts'. What epistemic state do you end up in after doing this ignoring?

↑ comment by Shmi (shminux) · 2012-08-22T15:31:51.324Z

Zeroth approximation: even the experts don't know, I am not an expert, so I know even less, thus I should not make any of my decisions based on singularity-related arguments.

First approximation: find a reference class of predictions that were supposed to come true within 50 years or so, unchanged for decades, and see when (some of them) are resolved. This does not require an AI expert, but rather a historian of sorts. I am not one, and the only obvious predictions in this class are the Rapture/2nd coming and other religious end-of-the-world scares. Another standard example is the proverbial flying car. I'm sure there ought to be more examples, some of them are technological predictions that actually came true. Maybe someone here can suggest a few. Until then, I'm stuck with the zeroth approximation.

↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2012-08-22T17:35:48.817Z

Putting smarter-than-human AI into the same class as the Rapture instead of the same class as, say, predictions for progress of space travel or energy or neuroscience, sounds to me suspiciously like reference class tennis. Your mind knows what it expects the answer to be, and picks a reference class accordingly. No doubt many of these experts did the same.

And so, once again, "distrust experts" ends up as "trust the invisible algorithm my brain just used or whatever argument I just made up, which of course isn't going to go wrong the way those experts did".

(The correct answer was to broaden confidence intervals in both/all directions.)

↑ comment by Shmi (shminux) · 2012-08-22T18:32:00.905Z

I do not believe that I was engaging in the reference class tennis. I tried hard to put AI into the same class as "predictions for progress of space travel or energy or neuroscience", but it just didn't fit. Space travel predictions (of the low-earth-orbit variety) slowly converged in the 40s and 50s with the development of rocket propulsion, ICBMs and later satellites. I am not familiar with the history of abundant energy predictions before and after the discovery of nuclear energy, maybe someone else is. Not sure what neuroscience predictions you are talking about, feel free to clarify.

↑ comment by wedrifid · 2012-08-25T16:47:54.672Z

> I do not believe that I was engaging in the reference class tennis.

You weren't, given the way Eliezer defines the term and the assumptions specified in your comment. I happen to disagree with you but your comment does not qualify as reference class tennis. Especially since you ended up assuming that the reference class is insufficiently populated to even be used unless people suggest things to include.

↑ comment by wedrifid · 2012-08-25T16:45:08.417Z

> Zeroth approximation: even the experts don't know, I am not an expert, so I know even less, thus I should not make any of my decisions based on singularity-related arguments.

That is making a decision based on singularity-related arguments. Denial isn't a privileged case that you can invoke when convenient. An argument that arguments for or against X should be ignored is an argument relating to X.

> First approximation: find a reference class of predictions that were supposed to come true within 50 years or so, unchanged for decades, and see when (some of them) are resolved.

That seems to be a suitable prior to adopt if you have reason to believe that the AI related predictions are representatively sampled from the class of 50 year predictions. It's almost certainly better than going with "0.5". In the absence of any evidence whatsoever about either AI itself or those making the predictions it would even be an appropriate prediction to use for decision making. Of course if I do happen to have any additional information and ignore it then I am just going to be assigning probabilities that are subjectively objectively false. It doesn't matter how much I plead that I am just being careful.

↑ comment by V_V · 2012-08-22T19:57:44.089Z

- Energy-efficient controlled nuclear fusion

- Space colonization

- Widespread use of videophones (to make actual videocalls)

More failed (just googled them, I didn't check): top-30-failed-technology-predictions

And successful: Ten 100-year predictions that came true

↑ comment by Jesper_Ostman · 2012-09-03T02:25:21.257Z

No videocalls - what about the widespread skyping?

↑ comment by Shmi (shminux) · 2012-08-22T20:12:53.872Z

The interesting part is looking back and figuring out if they are in the same reference class as AGI.

↑ comment by V_V · 2012-08-22T21:12:44.352Z

Nuclear fusion is always 30-50 years in the future, so it seems very much AI-like in this respect.

I can't find many dates for space colonization, but I'm under the impression that it is typically predicted either in the relatively near future (20-25 years) or in the very far future (centuries, millennia).

"Magical" nanotechnology (that is, something capable of making grey goo) is now predicted in 30-50 years, but I don't know how stable this prediction has been in the past.

AGI, fusion, space and nanotech also share the reference class of cornucopian predictions.

↑ comment by CarlShulman · 2012-09-17T04:16:13.620Z

> AGI, fusion, space and nanotech also share the reference class of cornucopian predictions.

Although there have been some pretty successful cornucopian predictions too: mass production, electricity, scientific agriculture (pesticides, modern crop breeding, artificial fertilizer), and audiovisual recording. By historical standards developed countries do have superabundant manufactured goods, food, music/movies/news/art, and household labor-saving devices.

↑ comment by V_V · 2012-09-17T10:39:33.839Z

Were those developments predicted decades in advance? I'm talking about relatively mainstream predictions, not predictions by some individual researcher who could have got it right by chance.

↑ comment by CarlShulman · 2012-09-17T11:11:46.528Z

Reader's Digest and the like. All you needed to do was straightforward trend extrapolation during a period of exceptionally fast change in everyday standards of living.

↑ comment by Dolores1984 · 2012-08-22T17:16:00.634Z

Flying cars may be a bad example. They've been possible for some time, but as it turns out, nobody wants a street-legal airplane bad enough to pay for it.

↑ comment by Shmi (shminux) · 2012-08-22T18:07:32.275Z

What people usually mean when they talk about flying cars is something as small, safe, convenient and cheap as a ground car.

↑ comment by Richard_Kennaway · 2012-08-23T10:33:43.796Z

> What people usually mean when they talk about flying cars is something as small, safe, convenient and cheap as a ground car.

There will never be any such thing. The basic problem with the skycar idea -- the "Model T" airplane for the masses -- is that skycars have inherent and substantial safety hazards compared to ground transport. If a groundcar goes wrong, you only have its kinetic energy to worry about, and it has brakes to deal with that. It can still kill people, and it does, tens of thousands every year, but there are far more minor accidents that do lesser damage, and an unmeasurable number of incidents where someone has avoided trouble by safely bringing the car to a stop.

For a skycar in flight, there is no such thing as a fender-bender. It not only travels faster (it has to, for lift, or the fuel consumption for hovering goes through the roof, and there goes the cheapness), but has in addition the gravitational energy to get rid of when it goes wrong. From just 400 feet up, it will crash at at least 100mph.
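The 400-foot figure can be checked with the free-fall relation $v = \sqrt{2gh}$. The sketch below ignores air resistance (which would lower the speed somewhat) and any forward airspeed (which would raise the total speed at impact), so it only gives the vertical component from a standing start; the function name is illustrative.

```python
import math

G = 9.81            # gravitational acceleration, m/s^2
FEET_TO_M = 0.3048  # metres per foot (exact)
MPH_TO_MS = 0.44704 # metres per second per mph (exact)


def impact_speed_mph(height_ft):
    """Vertical speed after falling height_ft from rest, with no drag: sqrt(2 g h)."""
    h = height_ft * FEET_TO_M
    return math.sqrt(2 * G * h) / MPH_TO_MS
```

`impact_speed_mph(400)` comes out at roughly 109 mph (about 49 m/s), consistent with "at least 100mph".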

When it crashes, it could crash on anything. Nobody is safe from skycars. When a groundcar crashes, the danger zone is confined to the immediate vicinity of the road.

Controlling an aircraft is also far more difficult than controlling a car, taking far more training, partly because the task is inherently more complicated, and partly because the risks of a mistake are so much greater.

Optimistically, I can't see the Moller skycars or anything like them ever being more than a niche within general aviation.

↑ comment by Michael_Sullivan · 2012-08-23T13:26:25.680Z

You say that "There will never be any such thing", but your reasons tell only why the problem is hard and much harder than one might think at first, not why it is impossible. Surely the kind of tech needed for self-driving cars, perhaps an order of magnitude more complicated, would make it possible to have safe, convenient, cheap flying cars or their functional equivalent.

At worst, the reasons you state would make it AI-complete, and even that seems unreasonably pessimistic.

↑ comment by Richard_Kennaway · 2012-08-23T17:45:08.923Z

I'll cop to "never" being an exaggeration.

The safety issue is a showstopper right now, and will be until computer control reaches the point where cars and aircraft are routinely driven by computer, and air traffic control is also done by computer. Not before mid-century for this.

Then you have the problem of millions -- hundreds of millions? -- of vehicles in the air travelling on independent journeys. That's a problem that completely dwarfs present-day air traffic control. More computers needed.

They are also going to be using phenomenal amounts of fuel. Leaving aside sci-fi dreams of convenient new physics, those Moller craft have to be putting at least 100kW into just hovering. (Back of envelope calculation based on 1 ton weight and 25 m/s downdraft velocity, and ignoring gasoline-to-whirling-fan losses.) Where's that coming from? Cold fusion?
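The hovering estimate follows from a simple momentum balance. As a sketch, assuming the 25 m/s is the final speed of the downdraft: thrust must equal weight, $T = \dot m\, w$, and the power given to the air is its kinetic energy flux, $P = \tfrac12 \dot m w^2 = \tfrac12 T w$. The function name is illustrative, and all engine, transmission and fan losses are ignored, so real power would be higher.

```python
G = 9.81  # gravitational acceleration, m/s^2


def ideal_hover_power_kw(mass_kg, jet_speed_ms):
    """Minimum power (kW) to hover by pushing air straight down at jet_speed_ms.

    Thrust equals weight: T = m_dot * w, so the mass flow is m_dot = T / w.
    Kinetic energy given to the air per second: P = 0.5 * m_dot * w**2 = 0.5 * T * w.
    """
    thrust_n = mass_kg * G
    return 0.5 * thrust_n * jet_speed_ms / 1000
```

`ideal_hover_power_kw(1000, 25)` gives about 123 kW, in line with "at least 100kW". Halving the downdraft speed halves the ideal power but doubles the mass of air that must be moved each second, which means bigger fans. If the 25 m/s were instead the air speed at the fan itself, ideal momentum theory would double the figure.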

"Never" turns into "not this century", by my estimate.

Of course, if civilisation falls instead, "never" really does mean never, at least, never by humans.

↑ comment by Shmi (shminux) · 2012-08-23T18:54:51.678Z

> Leaving aside sci-fi dreams of convenient new physics

Or new technology relying on existing physics? Then yes, conventional jets and turbines are not going to cut it.

↑ comment by Shmi (shminux) · 2012-08-23T15:34:29.250Z

> It not only travels faster (it has to, for lift, or the fuel consumption for hovering goes through the roof, and there goes the cheapness), but has in addition the gravitational energy to get rid of when it goes wrong. From just 400 feet up, it will crash at at least 100mph.

Failure of imagination, based on postulating the currently existing means of propulsion (rocket or jet engines). Here are some zero energy (but progressively harder) alternatives: buoyant force, magnetic hovering, gravitational repulsion. Or consult your favorite hard sci-fi. Though I agree, finding an alternative to jet/prop/rocket propulsion is the main issue.

> When it crashes, it could crash on anything. Nobody is safe from skycars.

If it doesn't have to fall when there is an engine malfunction, it does not have to crash.

> Controlling an aircraft is also far more difficult than controlling a car, taking far more training, partly because the task is inherently more complicated, and partly because the risks of a mistake are so much greater.

This is actually an easy problem. Most current planes use fly-by-wire, and newer fighter planes are computer-assisted already, since they are otherwise unstable. Even now it is possible to limit the user input to "car, get me there". Learning to fly planes or drive cars will soon enough be limited to niche occupations, like racing horses.

Incidentally, computer control will also take care of the pilot/driver errors, making fender-benders and mid-air collisions a thing of the past.

> Optimistically, I can't see the Moller skycars or anything like them ever being more than a niche within general aviation.

Absolutely, this is a dead end.

↑ comment by Richard_Kennaway · 2012-08-23T16:42:10.281Z

> Failure of imagination, based on postulating the currently existing means of propulsion (rocket or jet engines). Here are some zero energy (but progressively harder) alternatives: buoyant force, magnetic hovering, gravitational repulsion. Or consult your favorite hard sci-fi.

I also can dream.

↑ comment by wedrifid · 2012-08-25T17:04:14.823Z

> There will never be any such thing. The basic problem with the skycar idea -- the "Model T" airplane for the masses -- is that skycars have inherent and substantial safety hazards compared to ground transport.

Your certainty seems bizarre. There seems to be an assumption that the basic problem ("being up in the air is kinda dangerous") is unsolvable as a technical problem. The engineering capability and experience behind the "Model T" was far inferior to the engineering capability and investment we are capable of now and in the near future. Moreover, one of the greatest risks involved with the Model T was that it was driven by humans. Flying cars need not be limited to human control.

There is no particularly good reason to assume that flying cars couldn't be made as safe as the cars we drive on the ground today. Whether it happens is a question of economics, engineering and legislative pressure.

↑ comment by Furcas · 2012-08-22T17:41:46.751Z

Are you sure they're possible? I'm not an engineer, but I'd have guessed there are still some problems to solve, like finding a means of propulsion that doesn't require huge landing pads, and controls that the average car driver could learn and master in a reasonably short period of time.

↑ comment by DaFranker · 2012-08-22T17:51:24.027Z

VTOLs are possible. Many UAVs are VTOL aircraft. Make a bigger one that can carry a person and a few grocery bags instead of a sensor battery, add some wheels for "Ground Mode", and you've essentially got a flying car. An extremely impractical, high-maintenance, high-cost, airspace-constricted, inefficient, power-hungry flying car that almost no one will want to buy, but a flying car nonetheless.

I'm not an expert either, but it seems to me like the difference between "flying car" and "helicopter with wheels" is mostly a question of distance in empirical thingspace-of-stuff-we-could-build, which is a design and fitness-for-purpose issue.

↑ comment by Desrtopa · 2012-08-22T18:00:35.823Z

How broad is your classification of a "car?" If it's fairly broad, then helicopters can reasonably be said to be flying cars. They require landing pads, but not necessarily "huge" ones depending on the type of helicopter, and one can earn a helicopter license with 40 hours of practical training.

Most people's models of "flying cars," for whatever reason, seem to entail no visible method of attaining lift though. By that criterion we can still be said to have flying cars, but maybe only ones that are pretty lousy at flying.

↑ comment by Dolores1984 · 2012-08-23T00:27:37.925Z

The Moller skycar certainly exists, although it still appears to be very much a prototype.

↑ comment by A1987dM (army1987) · 2012-08-22T22:06:25.772Z

> find a reference class of predictions that were supposed to come true within 50 years or so, unchanged for decades

Electric power from nuclear fusion springs to mind.

comment by Luke_A_Somers · 2012-08-15T09:58:15.950Z

Can we look at the accuracy of predictions concerning AI problems that are not the hard AI problem - speech recognition, say, or image analysis? Some of those could have been fulfilled.

comment by Kaj_Sotala · 2012-08-14T18:47:02.131Z · LW(p) · GW(p)

The database does not include the ages of the predictors, unfortunately, but the results seem to contradict the Maes-Garreau law. Estimating that most predictors were likely not in their fifties and sixties, it seems that the majority predicted AI would likely happen some time before their expected demise.

Actually, this was a miscommunication - the database does include them, but they were in a file Stuart wasn't looking at. Here's the analysis.

Of the predictions that could be construed to be giving timelines for the creation of human-level AI, 65 predictions either had ages on record, or were late enough that the predictor would obviously be dead by then. I assumed (via gwern's suggestion) everyone's life expectancy to be 80 and then simply checked whether the predicted date would be before their expected date of death. This was true for 31 of the predictions and false for 34 of them.

Those 65 predictions included several cases where somebody had made multiple predictions over their lifetime, so I also made a comparison where I only picked the earliest prediction of everyone who had made one. This brought the number of predictions down to 46, out of which 19 had AI showing up during the predictor's lifetime and 27 did not.
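A minimal Python sketch of the two tallies described above. The record layout (a hypothetical `Prediction` with birth year, year the prediction was made, and predicted year for AI) and the sample records are invented for illustration, not taken from the SI database; only the flat 80-year life expectancy and the keep-the-earliest-prediction rule come from the comment.

```python
from dataclasses import dataclass

LIFE_EXPECTANCY = 80  # flat assumption used in the comment (gwern's suggestion)


@dataclass
class Prediction:
    # Hypothetical record layout; the real database's fields may differ.
    predictor: str
    birth_year: int
    made_in: int
    predicted_year: int


def before_expected_death(p: Prediction) -> bool:
    """True if AI is predicted strictly before the predictor turns 80."""
    return p.predicted_year < p.birth_year + LIFE_EXPECTANCY


def earliest_per_predictor(preds: list[Prediction]) -> list[Prediction]:
    """Keep only each person's earliest prediction, so prolific predictors don't dominate."""
    earliest: dict[str, Prediction] = {}
    for p in preds:
        if p.predictor not in earliest or p.made_in < earliest[p.predictor].made_in:
            earliest[p.predictor] = p
    return list(earliest.values())


def tally(preds: list[Prediction]) -> tuple[int, int]:
    """(number predicting AI within lifetime, number predicting it after)."""
    within = sum(before_expected_death(p) for p in preds)
    return within, len(preds) - within


# Hypothetical records: A predicted twice, B once.
sample = [
    Prediction("A", 1920, 1960, 1980),
    Prediction("A", 1920, 1975, 2010),
    Prediction("B", 1950, 1990, 2040),
]
print(tally(sample))                          # (1, 2)
print(tally(earliest_per_predictor(sample)))  # (1, 1)
```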

↑ comment by Stuart_Armstrong · 2012-08-14T19:33:27.411Z · LW(p) · GW(p)

Can you tell how many were close to the limit? That's what the "law" is mainly about.

↑ comment by Kaj_Sotala · 2012-08-15T08:22:35.581Z · LW(p) · GW(p)

Okay, so here I took the predicted date for AI, and from that I subtracted the person's expected year of death. So if they predict that AI will be created 20 years before their death, this comes out as -20, and if they say it will be created 20 years after their death, 20.

This had the minor issue that I was assuming everyone's life expectancy to be 80, but some people lived to make predictions after that age. That wasn't an issue when just calculating true/false values for "will this event happen during one's lifetime", but here it was. So I redefined life expectancy to be 80 years if the person is at most 80 years old, or X years if the person is X years old. That's somewhat ugly, but aside from actually looking up actuarial statistics for each age and year separately, I don't know of a better solution.
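The signed figure, including the adjusted life expectancy, might be computed as below. This is a sketch that assumes the predictor's birth year and the year the prediction was made are both known; the function name is made up for illustration.

```python
def years_relative_to_death(birth_year: int, made_in: int, predicted_year: int,
                            life_expectancy: int = 80) -> int:
    """Predicted AI year minus expected year of death.

    Negative: AI expected before death; positive: after.
    Anyone already older than `life_expectancy` when predicting is assumed
    to die at their current age (the ad hoc fix described in the comment).
    """
    age_at_prediction = made_in - birth_year
    death_age = max(life_expectancy, age_at_prediction)
    expected_death_year = birth_year + death_age
    return predicted_year - expected_death_year


# Born 1930, predicting at 40: expected death 2010.
print(years_relative_to_death(1930, 1970, 1990))  # -20
# Born 1900, predicting at 85: expected death year becomes 1985.
print(years_relative_to_death(1900, 1985, 2000))  # 15
```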

These are the values of that calculation. I used only the data with multiple predictions by the same people eliminated, as doing otherwise would give an undue emphasis on a very small number of individuals and the dataset is small enough as it is:

-41, -41, -39, -28, -26, -24, -20, -18, -12, -10, -10, -9, -8, -8, -7, -5, 0, 0, 2, 3, 3, 8, 9, 11, 16, 19, 20, 30, 34, 51, 51, 52, 59, 75, 82, 96, 184.

Eyeballing that, looks pretty evenly distributed to me. Also, here's a scatterplot of age of predictor vs. time to AI: http://kajsotala.fi/Random/ScatterAgeToAI.jpg
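To make Stuart's "close to the limit" question concrete, the values above can be summarised with a few counts. The 10-year window is an arbitrary choice for illustration, and these are descriptive counts, not a statistical test; if the Maes-Garreau law held, one would expect a pile-up just below zero.

```python
from statistics import median

# The 37 (predicted AI year - expected death year) values listed above.
diffs = [-41, -41, -39, -28, -26, -24, -20, -18, -12, -10, -10, -9, -8, -8,
         -7, -5, 0, 0, 2, 3, 3, 8, 9, 11, 16, 19, 20, 30, 34, 51, 51, 52,
         59, 75, 82, 96, 184]


def count_within(values, window):
    """Number of predictions landing within `window` years of expected death."""
    return sum(abs(v) <= window for v in values)


print(len(diffs))                         # 37
print(median(diffs))                      # 2
print(sum(v < 0 for v in diffs))          # 16 before expected death
print(count_within(diffs, 10))            # 14 within +/-10 years
print(sum(-10 <= v < 0 for v in diffs))   # 7 in the decade just before death
print(sum(0 < v <= 10 for v in diffs))    # 5 in the decade just after
```

With these numbers, 7 values fall in the decade just before expected death, 5 in the decade just after and 2 exactly at zero, which fits the "pretty evenly distributed" reading.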

And here's age of predictor vs. the (prediction-lifetime) figure, showing that younger people are more likely to predict AI within their lifetimes, which makes sense: http://kajsotala.fi/Random/ScatterAgeToPredictionLifetime.jpg

↑ comment by Stuart_Armstrong · 2012-08-15T10:07:05.033Z · LW(p) · GW(p)

Updated the main post with your new information, thanks!

↑ comment by Kaj_Sotala · 2012-08-15T11:14:17.000Z · LW(p) · GW(p)

You're quite welcome. :-)

↑ comment by Kaj_Sotala · 2012-08-14T20:06:33.289Z · LW(p) · GW(p)

I'll give it a look.

↑ comment by Unnamed · 2012-08-14T19:20:24.434Z · LW(p) · GW(p)

Is that more or less than what we'd expect if there was no relationship between age and predictions? If you randomly paired predictions with ages (sampling separately from the two distributions), what proportion would be within the "lifetime"?
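Unnamed's baseline can be computed directly, without simulation. This sketch assumes the data are available as two parallel lists (age at the time of prediction, and years until the predicted AI); the numbers below are invented. It uses the same "80, or current age if older" rule as Kaj's later analysis.

```python
from itertools import product


def within_lifetime(age, years_to_ai, life_expectancy=80):
    """True if AI is predicted before the predictor's assumed age at death."""
    return age + years_to_ai < max(life_expectancy, age)


def observed_share(ages, years_to_ai):
    """Share of predictions within the predictor's own lifetime, as actually paired."""
    hits = [within_lifetime(a, y) for a, y in zip(ages, years_to_ai)]
    return sum(hits) / len(hits)


def independent_share(ages, years_to_ai):
    """Share expected if ages and predictions were unrelated.

    Averaging over every (age, prediction) combination equals the expected
    result of randomly re-pairing them, so no simulation is needed.
    """
    hits = [within_lifetime(a, y) for a, y in product(ages, years_to_ai)]
    return sum(hits) / len(hits)


# Hypothetical data: age when predicting, and years until predicted AI.
ages = [30, 45, 60, 75]
years_to_ai = [15, 30, 50, 100]
print(observed_share(ages, years_to_ai))     # 0.5
print(independent_share(ages, years_to_ai))  # 0.3125
```

Sampling ages and predictions independently with replacement, as the question phrases it, has the same expected value as this all-pairs average.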

↑ comment by Kaj_Sotala · 2012-08-15T09:19:30.419Z · LW(p) · GW(p)

Looks roughly like it - see this comment.

comment by novalis · 2012-08-14T20:14:18.305Z · LW(p) · GW(p)

This is a fantastic post. It would be great if you would put the raw data up somewhere. I have no specific reason for asking this other than that it seems like good practice generally. Also, what was your search process for finding predictions?

↑ comment by Stuart_Armstrong · 2012-08-14T21:39:03.340Z · LW(p) · GW(p)

Will probably do that at some point when I write up the final paper - though the data also belongs to SI, and they might want to do stuff before putting it out.

comment by NancyLebovitz · 2012-08-16T15:23:22.629Z · LW(p) · GW(p)

The other stereotype is the informal 20-30 year range for any new technology: the predictor knows the technology isn't immediately available, but puts it in a range where people would still be likely to worry about it. And so the predictor gets kudos for addressing the problem or the potential, and is safely retired by the time it (doesn't) come to pass.

When I saw that, 30 years seemed long to me. Considering how little checking there is of predictions, a shorter period (no need for retirement, just give people enough time to forget) seemed more likely.

And behold:

As can be seen, the 20-30 year stereotype is not exactly borne out - but a 15-25 one would be.

comment by David_Gerard · 2012-08-15T11:01:01.232Z · LW(p) · GW(p)

The Wikipedia article on the Maes-Garreau law is marked for proposed deletion because it's a single-reference neologism. Needs evidence of wider use.

comment by Kaj_Sotala · 2012-08-15T09:30:42.475Z · LW(p) · GW(p)

If it was the collection of a variety of expert opinions, I took the prediction of the median expert.

Hmm, I wonder if we're not losing valuable data this way. E.g. you mentioned that before 1990, there were no predictions with a timeline of more than 50 years, but I seem to recall that one of the surveys from the seventies or eighties had experts giving such predictions. Do we have a reason to treat the surveys as a single prediction, if there's the possibility to break them down into X independent predictions? That's obviously not possible if the survey only gives summary statistics, but at least some of them did say things like "8 experts in this survey put AI 20 years away".
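Kaj's point, sketched with an invented survey summary: collapsing it to its median keeps one number and discards the experts who answered far beyond it. The counts are made up for illustration; real surveys would need their reported breakdowns.

```python
from statistics import median

# Hypothetical survey summary: {years until AI: number of experts giving that answer}.
survey = {10: 3, 20: 8, 30: 5, 50: 2, 100: 2}


def expand(summary: dict[int, int]) -> list[int]:
    """Turn 'k experts said N years' counts into k separate predictions of N."""
    return [years for years, count in sorted(summary.items()) for _ in range(count)]


individual = expand(survey)
print(len(individual))                    # 20 separate predictions
print(median(individual))                 # 20.0, the single collapsed data point
print(sum(y > 50 for y in individual))    # 2 long-range answers kept only by expanding
```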

That would also allow us to implement Carl's suggestion of comparing self-selected and non-self-selected experts - otherwise there won't be enough non-self-selected predictions to do anything useful with.

↑ comment by Stuart_Armstrong · 2012-08-15T13:28:50.876Z · LW(p) · GW(p)

Possible. The whole data analysis process is very open to improvements.

comment by RolfAndreassen · 2012-08-14T17:23:26.274Z · LW(p) · GW(p)

The only noticeable difference is that amateurs lacked the upswing at 50 years, and were relatively more likely to push their predictions beyond 75 years. This does not look like good news for the experts - if their performance can't be distinguished from amateurs, what contribution is their expertise making?

I believe you can put your case even a bit more strongly than this. With this amount of data, the differences you point out are clearly within the range of random fluctuations; the human eye picks them out, but does not see the huge reference class of similarly "different" distributions. I predict with confidence over 95% that a formal statistical analysis would find no difference between the "expert" and "amateur" distributions.
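A claim like this is cheap to check once the two lists of time-to-AI values are in hand (they aren't reproduced in this thread). One reasonable choice, sketched below, is a permutation version of the two-sample Kolmogorov-Smirnov test; it is an illustrative swap-in, not an analysis the post performed, and it does nothing about selection biases in who makes predictions. The sample lists are hypothetical.

```python
import random
from bisect import bisect_right


def _ks_gap(a, b):
    """KS statistic times len(a)*len(b), kept as an integer so ties compare exactly."""
    a, b = sorted(a), sorted(b)
    return max(abs(bisect_right(a, v) * len(b) - bisect_right(b, v) * len(a))
               for v in set(a) | set(b))


def ks_statistic(a, b):
    """Two-sample Kolmogorov-Smirnov statistic: max gap between empirical CDFs."""
    return _ks_gap(a, b) / (len(a) * len(b))


def permutation_p_value(a, b, n_iter=5_000, seed=0):
    """Share of random relabelings whose KS gap is at least the observed one."""
    rng = random.Random(seed)
    observed = _ks_gap(a, b)
    pooled = list(a) + list(b)
    extreme = 0
    for _ in range(n_iter):
        rng.shuffle(pooled)
        if _ks_gap(pooled[:len(a)], pooled[len(a):]) >= observed:
            extreme += 1
    return (extreme + 1) / (n_iter + 1)


# Hypothetical time-to-AI values (years) for the two groups.
experts = [15, 20, 20, 25, 30, 50, 50, 70]
amateurs = [10, 20, 25, 30, 40, 80, 100]
print(ks_statistic(experts, amateurs))
print(permutation_p_value(experts, amateurs))
```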

↑ comment by Stuart_Armstrong · 2012-08-14T17:31:47.808Z · LW(p) · GW(p)

I agree. I didn't do a formal statistical analysis, simply because with so little data and the potential biases, it would only give us a spurious feeling of certainty.

↑ comment by olalonde · 2012-08-14T18:11:39.950Z · LW(p) · GW(p)

Perhaps their contribution is in influencing the non-experts? It is very likely that the non-experts base their estimates on whatever predictions respected experts have made.

↑ comment by Stuart_Armstrong · 2012-08-14T18:16:18.245Z · LW(p) · GW(p)

Seems pretty unlikely - because you'd then expect the non-experts to have the same predicted dates as the experts, but not the same distribution of time to AI.

Also, the examples I saw were mainly of non-experts saying: AI will happen around here because, well, I say so (sometimes spiced with Moore's law).

↑ comment by Will_Sawin · 2012-08-16T18:26:29.266Z · LW(p) · GW(p)

It seems quite likely to me a priori that "experts" would be driven to make fewer extreme predictions because they're more interested in defending their status by adopting a moderate position and also more able to do so.

↑ comment by Stuart_Armstrong · 2012-08-16T21:34:01.952Z · LW(p) · GW(p)

Is that really a priori? I.e., did you come up with that idea before seeing this post?

↑ comment by Will_Sawin · 2012-08-17T01:46:56.952Z · LW(p) · GW(p)

Did I? No.

Would I have? I'm pretty sure.

↑ comment by Stuart_Armstrong · 2012-08-17T09:11:30.609Z · LW(p) · GW(p)

Then we'll never know - hindsight bias is the bitchiest of bitches.

↑ comment by Will_Sawin · 2012-08-19T02:36:37.263Z · LW(p) · GW(p)

I'm trying to use the outside view to combat it. It is hard for me to think up examples of experts making more extreme-sounding claims than interested amateurs. The only argument the other way that I can think of is that AI itself is so crazy that seeing it occur in less than 100 years is the extreme position, and the other way around is moderate, but I don't find that very convincing.

In addition, I don't see reason to believe I'm different from lukeprog or handoflixue.

↑ comment by Stuart_Armstrong · 2012-08-19T08:56:24.103Z · LW(p) · GW(p)

Philosophy experts are very fond of saying AI is impossible, neuroscientist experts seem to often proclaim it'll take centuries... By the time you break it down into categories and consider the different audiences and expert cultures, I think we have too little data to say much.

↑ comment by handoflixue · 2012-08-16T23:16:00.020Z · LW(p) · GW(p)

I would a priori assume that "experts" with quote marks are mainly interested in attention, and extreme predictions here are unlikely to get positive attention (saying AI will happen in 75+ years is boring; saying it will happen tomorrow kills your credibility).

So, for me at least, yes.

comment by taw · 2012-08-14T20:03:32.042Z · LW(p) · GW(p)

But in a field like AI prediction, where experts lack feedback for their pronouncements, we should expect them to perform poorly, and for biases to dominate their thinking.

And that's pretty much the key sentence.

There is little difference between experts and non-experts.

Except there's no such thing as AGI expert.

↑ comment by Stuart_Armstrong · 2012-08-14T21:38:08.897Z · LW(p) · GW(p)

There are classes of individuals that might be plausibly effective at predicting AGI - but this now appears to not be the case.

↑ comment by [deleted] · 2012-08-15T00:59:31.469Z · LW(p) · GW(p)

So, what taw said.

↑ comment by [deleted] · 2012-08-15T12:05:34.359Z · LW(p) · GW(p)

No, they're completely different. Taw said that there are no people in a certain class; Stuart_Armstrong said that there is strong evidence that there are no people in a certain class.

↑ comment by evand · 2012-08-16T17:57:59.234Z · LW(p) · GW(p)

Actually, what Stuart_Armstrong said was that we have shown certain classes of people (that we thought might be experts) are not, as a class, experts. The strong evidence is that we have not yet found a way to distinguish the class of experts. Which is, in my opinion, weak to moderate evidence that the class does not exist, not strong evidence. When it comes to trying to evaluate predictions on their own terms (because you're curious about planning for your future life, for instance) the two statements are similar. In other cases (for example, trying to improve the state of the art of AI predictions, or predictions of the strongly unknown more generally), the two statements are meaningfully different.

comment by MrCheeze · 2012-08-30T18:46:54.927Z · LW(p) · GW(p)

Man, people's estimations seem REALLY early. The idea of AI in fifty years seems almost absurd to me.

↑ comment by Mitchell_Porter · 2012-08-31T01:33:15.048Z · LW(p) · GW(p)

Why? Are you thinking of an AI-in-a-laptop? You should think in terms of, say, a whole data center as devoted to a single AI. This is how search engines already work - a single "information retrieval" algorithm, using techniques from linear algebra to process the enormous data structures in which the records are kept, in order to answer a query. The move towards AI just means that the algorithm becomes much more complicated. When I remember that we already have warehouses of thousands of networked computers, tended by human staff, containing distributed proto-AIs that interact with the public ... then imminent AI isn't hard to imagine at all.
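As a toy illustration of the linear-algebra flavour of information retrieval mentioned above, here is the textbook vector-space model: documents and queries become TF-IDF weighted term vectors, ranked by cosine similarity. This is a classic technique chosen for illustration only; real search engines use far more elaborate methods, and the sample documents are invented.

```python
import math
from collections import Counter


def tfidf(tokens, idf):
    """Term-frequency vector of a token list, weighted by inverse document frequency."""
    return {t: c * idf.get(t, 0.0) for t, c in Counter(tokens).items()}


def cosine(u, v):
    """Cosine of the angle between two sparse vectors stored as dicts."""
    dot = sum(w * v.get(t, 0.0) for t, w in u.items())
    norm = (math.sqrt(sum(w * w for w in u.values()))
            * math.sqrt(sum(w * w for w in v.values())))
    return dot / norm if norm else 0.0


def best_match(query, docs):
    """Index of the document whose TF-IDF vector is closest to the query's."""
    tokenized = [d.lower().split() for d in docs]
    df = Counter(t for toks in tokenized for t in set(toks))
    # Smoothed IDF: rarer terms get larger weights; +1 keeps every weight positive.
    idf = {t: math.log(len(docs) / n) + 1.0 for t, n in df.items()}
    q = tfidf(query.lower().split(), idf)
    scores = [cosine(q, tfidf(toks, idf)) for toks in tokenized]
    return max(range(len(docs)), key=scores.__getitem__)


docs = ["flying cars need lift",
        "nuclear fusion power plants",
        "human level ai predictions"]
print(best_match("ai predictions", docs))  # 2
```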

comment by A1987dM (army1987) · 2012-08-17T10:09:49.315Z · LW(p) · GW(p)

a slight increase at 50 years

Consistent with a fluctuation in Poisson statistics, as far as I can tell. (I usually draw sqrt(N) error bars on this kind of graph. And if we remove the data point at around X = 1950, my eyeball doesn't see anything unusual in the scatterplot around the Y = 50 line.)
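The sqrt(N) rule of thumb in code form. The bin counts are hypothetical, and estimating the "expected" count for the 50-year bin from its neighbours is an assumption for illustration. For small counts the Poisson distribution is skewed, so |z| of roughly 2 or less is only a loose sign of "nothing unusual".

```python
import math


def sqrt_n_bars(counts):
    """(low, high) error bar of +/- sqrt(N) for each histogram bin count N."""
    return [(n - math.sqrt(n), n + math.sqrt(n)) for n in counts]


def poisson_z(observed, expected):
    """Distance of a bin count from its expectation, in units of sqrt(expected)."""
    return (observed - expected) / math.sqrt(expected)


# Hypothetical counts for bins at 40, 50 and 60 years to AI.
counts = [4, 7, 4]
expected_50 = (counts[0] + counts[2]) / 2    # guess from the neighbouring bins
print(sqrt_n_bars(counts))
print(poisson_z(counts[1], expected_50))     # 1.5
```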

comment by MichaelHoward · 2012-08-15T00:03:05.428Z · LW(p) · GW(p)

Have you looked separately at the predictions made about milestones that have now happened (e.g. win Jeopardy!, drive in traffic, beat Grand Master/respectable amateur at chess/backgammon/checkers/tic-tac-toe/WW2, compute astronomical tables) for comparison with the future/AGI predictions?

I'm especially curious about the data for people who have made both kinds of prediction, what correlations are there, and how the predictions of things-still-to-come look when weighted by accuracy of predictions of things-that-happened-by-now.
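One way the accuracy weighting could be made concrete, under assumptions that are entirely illustrative: each predictor has signed errors (in years) on milestones that have already happened, plus a forecast year for AGI. The 1/(1 + mean absolute error) weight and all the data are invented; nothing in the thread specifies a scheme.

```python
def weighted_forecast(predictors):
    """Accuracy-weighted mean of AGI forecast years.

    `predictors` maps a name to (past_errors, agi_year), where past_errors are
    signed errors in years on milestones that have already happened.
    Weight = 1 / (1 + mean absolute error): an arbitrary illustrative choice.
    """
    total_weight = 0.0
    weighted_sum = 0.0
    for past_errors, agi_year in predictors.values():
        mae = sum(abs(e) for e in past_errors) / len(past_errors)
        weight = 1.0 / (1.0 + mae)
        total_weight += weight
        weighted_sum += weight * agi_year
    return weighted_sum / total_weight


# Hypothetical predictors: A was close on past milestones, B was off by about a decade.
predictors = {
    "A": ([0, 2], 2040),
    "B": ([9, -9], 2100),
}
print(weighted_forecast(predictors))  # about 2050; the unweighted mean would be 2070
```

A correlation between past and future predictions per person, as asked above, would need the real paired data; this only shows how a weighting would feed into an aggregate.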

comment by MichaelHoward · 2012-08-15T00:00:51.619Z · LW(p) · GW(p)

We've managed to put together a database listing all AI predictions that we could find.

Have you looked separately at the predictions made about milestones that have now happened (e.g. win Jeopardy!, drive in traffic, beat Grand Master/respectable amateur at chess/backgammon/checkers/tic-tac-toe, compute astronomical tables) for comparison with the future/AGI predictions?

I'm especially curious about the data for people who have made both kinds of prediction, what correlations are there, and how the predictions of things-still-to-come look when weighted by accuracy of predictions of things-that-happened-by-now.

comment by Subjective1 · 2013-04-13T17:20:50.968Z · LW(p) · GW(p)

I believe that developing of an "Intelligent" machines will did not happen in the observable future. I am stating so because, although it is possible to design of an artificial subjective system capable to determine its own behavior, today science did not provide the factual basis for such development. AI is driven by illusory believes and that will prevent the brake true.

↑ comment by MugaSofer · 2013-04-13T18:03:53.958Z · LW(p) · GW(p)

Sorry, not sure if I'm parsing you right - you're saying AI is possible but we won't figure it out within, say, the next century, correct? If I got that right, could you explain why? I personally would expect brain uploads "in the observable future" even if we couldn't write an AI from scratch, and I think most people here would agree.

Also, you might want to work on your grammar/editing - are you by any chance not a native English speaker?