80,000 hours should remove OpenAI from the Job Board (and similar EA orgs should do similarly)

post by Raemon · 2024-07-03T20:34:50.741Z · LW · GW · 71 comments

Comments sorted by top scores.

comment by Zvi · 2024-07-06T17:04:06.736Z · LW(p) · GW(p)

Not only do they continue to list such jobs, they do so with no warnings that I can see regarding OpenAI's behavior, including both its actions involving safety and also towards its own employees.

Not warning about the specific safety failures and issues is bad enough, and will lead to uninformed decisions on the most important issue of someone's life.

Referring a person to work at OpenAI, without warning them about the issues regarding how they treat employees, is so irresponsible towards the person looking for work as to be a missing stair issue.

I am flabbergasted that this policy has been endorsed on reflection.

Replies from: cody-rushing↑ comment by Cody Rushing (cody-rushing) · 2024-07-07T04:38:28.769Z · LW(p) · GW(p)

I'm surprised by this reaction. It feels like the intersection between people who have a decent shot of getting hired at OpenAI to do safety research and those who are unaware of the events at OpenAI related to safety is quite small.

Replies from: DPiepgrass↑ comment by DPiepgrass · 2024-07-15T20:30:21.707Z · LW(p) · GW(p)

I expect there are people who are aware that there was drama but don't know much about it and should be presented with details from safety-conscious people who closely examined what happened.

comment by Ideopunk · 2024-07-03T22:20:23.814Z · LW(p) · GW(p)

(Cross-posted from the EA forum)

Hi, I run the 80,000 Hours job board, thanks for writing this out!

I agree that OpenAI has demonstrated a significant level of manipulativeness and have lost confidence in them prioritizing existential safety work. However, we don’t conceptualize the board as endorsing organisations. The point of the board is to give job-seekers access to opportunities where they can contribute to solving our top problems or build career capital to do so (as we write in our FAQ). Sometimes these roles are at organisations whose mission I disagree with, because the role nonetheless seems like an opportunity to do good work on a key problem.

For OpenAI in particular, we’ve tightened up our listings since the news stories a month ago, and are now only posting infosec roles and direct safety work – a small percentage of jobs they advertise. See here for the OAI roles we currently list. We used to list roles that seemed more tangentially safety-related, but because of our reduced confidence in OpenAI, we limited the listings further to only roles that are very directly on safety or security work. I still expect these roles to be good opportunities to do important work. Two live examples:

- Infosec
  - Even if we were very sure that OpenAI was reckless and did not care about existential safety, I would still expect them to not want their model to leak out to competitors, and importantly, we think it's still good for the world if their models don't leak! So I would still expect people working on their infosec to be doing good work.
- Non-infosec safety work
  - These still seem like potentially very strong roles with the opportunity to do very important work. We think it’s still good for the world if talented people work in roles like this!
  - This is true even if we expect them to lack political power and to play second fiddle to capabilities work and even if that makes them less good opportunities vs. other companies.

We also include a note on their 'job cards' on the job board (also DeepMind’s and Anthropic’s) linking to the Working at an AI company article you mentioned, to give context. We’re not opposed to giving more or different context on OpenAI’s cards and are happy to take suggestions!

Replies from: pktechgirl, Linda Linsefors, LosPolloFowler, remmelt-ellen, William_S, DPiepgrass↑ comment by Elizabeth (pktechgirl) · 2024-07-03T23:35:16.443Z · LW(p) · GW(p)

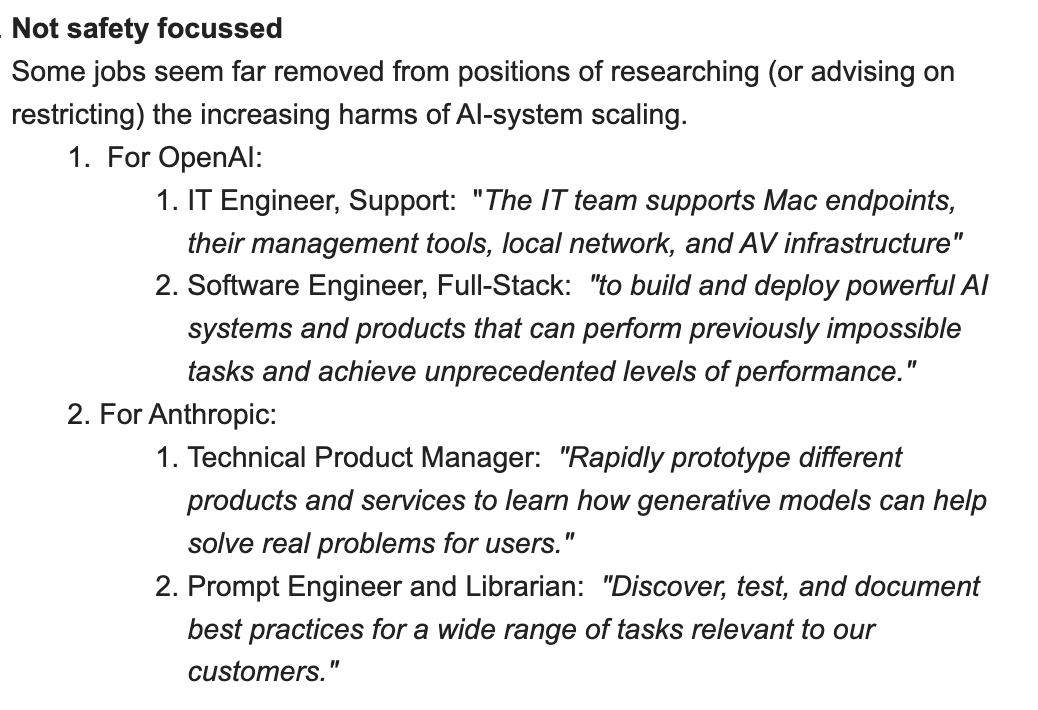

How does 80k identify actual safety roles, vs. safety-washed capabilities roles?

Replies from: pktechgirl↑ comment by Elizabeth (pktechgirl) · 2024-07-05T18:28:55.903Z · LW(p) · GW(p)

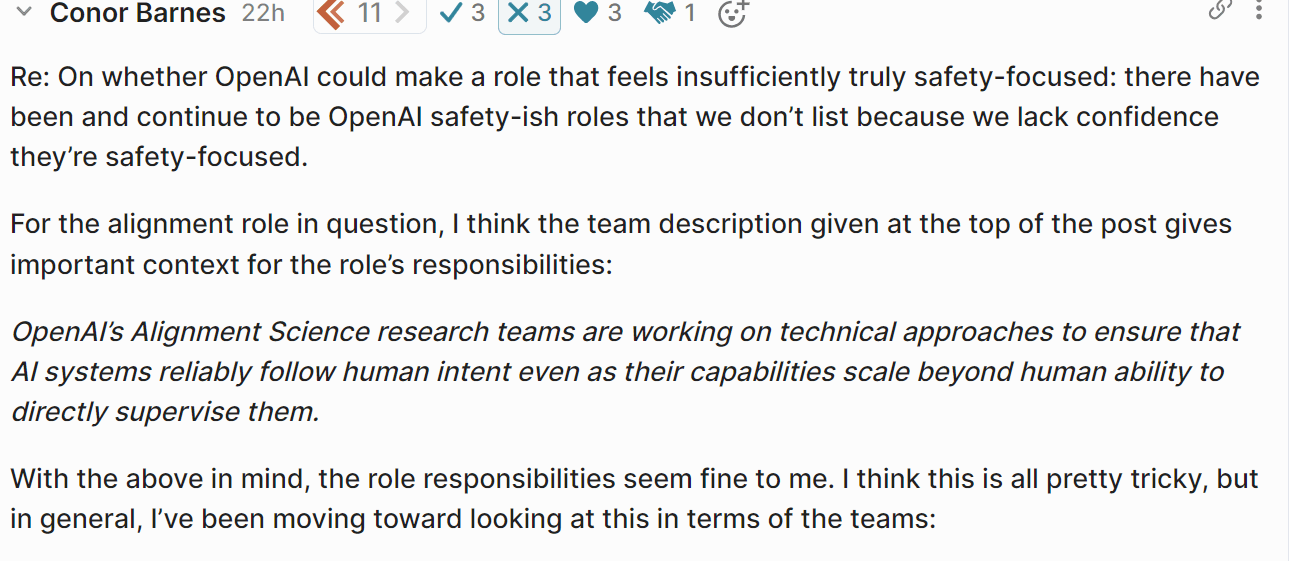

From Conor's response [EA(p) · GW(p)] on EAForum, it sounds like the answer is "we trust OpenAI to tell us". In light of what we already know (safety team exodus, punitive and hidden NDAs, lack of disclosure to OpenAI's governing board), that level of trust seems completely unjustified to me.

↑ comment by Buck · 2024-07-07T02:58:52.042Z · LW(p) · GW(p)

I would be shocked if OpenAI employees who took the role with that job description were pushed into doing capabilities research they didn't want to do. (Obviously it's plausible that they'd choose to do capabilities research while they were already there.)

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-07-07T06:53:41.184Z · LW(p) · GW(p)

Huh, this doesn't super match my model. I have heard of people at OpenAI being pressured a lot into making sure their safety work helps with productization. I would be surprised if they end up being pressured into working directly on the scaling team, but I wouldn't end up surprised with someone being pressured into doing some better AI censorship in a way that doesn't have any relevance to AI safety and does indeed make OpenAI a lot of money.

Replies from: Buck↑ comment by Buck · 2024-07-09T04:26:05.072Z · LW(p) · GW(p)

I wouldn't end up surprised with someone being pressured into doing some better AI censorship in a way that doesn't have any relevance to AI safety and does indeed make OpenAI a lot of money.

I disagree for the role advertised, I would be surprised by that. (I'd be less surprised if they advised on some post-training stuff that you'd think of as capabilities; I think that the "AI censorship" work is mostly done by a different team that doesn't talk to the superalignment people that much. But idk where the superoversight people have been moved in the org, maybe they'd more naturally talk more now.)

↑ comment by eye96458 · 2024-07-06T02:39:13.119Z · LW(p) · GW(p)

Can you clarify what you mean by "completely unjustified"? For example, if OpenAI says "This role is a safety role.", then in your opinion, what is the probability that the role is a genuine safety role?

Replies from: pktechgirl, Buck, elityre↑ comment by Elizabeth (pktechgirl) · 2024-07-07T00:46:27.421Z · LW(p) · GW(p)

I'd define "genuine safety role" as "any qualified person will increase safety faster than capabilities in the role". I put ~0 likelihood that OAI has such a position. The best you could hope for is being a marginal support for a safety-based coup (which has already been attempted, and failed).

There's a different question of "could a strategic person advance net safety by working at OpenAI, more so than any other option?". I believe people like that exist, but they don't need 80k to tell them about OpenAI.

Replies from: Buck, eye96458↑ comment by Buck · 2024-07-07T02:56:22.605Z · LW(p) · GW(p)

I'd define "genuine safety role" as "any qualified person will increase safety faster than capabilities in the role". I put ~0 likelihood that OAI has such a position.

Which of the following claims are you making?

- OpenAI doesn't have any roles doing AI safety research aimed at reducing catastrophic risk from egregious AI misalignment; people who think they're taking such a role will end up assigned to other tasks instead.

- OpenAI does have roles where people do AI safety research aimed at reducing catastrophic risk from egregious AI misalignment, but all the research done by people in those roles sucks and the roles contribute to OpenAI having a good reputation, so taking those roles is net negative.

I find the first claim pretty implausible. E.g. I think that the recent SAE paper and the recent scalable oversight paper obviously count as an attempt at AI safety research. I think that people who take roles where they expect to work on research like that basically haven't ended up unwillingly shifted to roles on e.g. safety systems, core capabilities research, or product stuff.

Replies from: elityre, Raemon, pktechgirl↑ comment by Eli Tyre (elityre) · 2024-08-10T06:57:01.863Z · LW(p) · GW(p)

I'm not Elizabeth or Ray, but there's a third option which I read the comment above to mean, and which I myself find plausible.

OpenAI does have roles that are ostensibly aimed at reducing catastrophic risk from egregious AI misalignment. However, without more information, an outsider should not expect that those roles actually accelerate safety more than they accelerate capabilities.

Successfully increasing safety faster than capabilities requires that person to have a number of specific skills (eg political savvy, robustness to social pressure, a higher granularity strategic/technical model than most EAs have in practice, etc.), over and above the skills that would be required to get hired for the role.

Lacking those skills, a hire for such a role is more likely to do harm than good, not primarily because they'll be transitioned to other tasks, but because much of the work that the typical hire for such a role would end up doing either 1) doesn't help or 2) will end up boosting OpenAI's general capabilities more than it helps.

Furthermore, by working at OpenAI at all, they provide some legitimacy to the org as a whole, and to the existentially dangerous work happening in other parts of it, even if their work does 0 direct harm. Someone working in such a role has to do sufficiently beneficial on-net work to overcome this baseline effect.

↑ comment by Raemon · 2024-07-08T03:55:17.695Z · LW(p) · GW(p)

I'm not Elizabeth and probably wouldn't have worded my thoughts quite the same, but my own position regarding your first bullet point is:

"When I see OpenAI list a 'safety' role, I'm like 55% confident that it has much to do with existential safety, and maybe 25% that it produces more existential safety than existential harm."

Replies from: Buck↑ comment by Buck · 2024-07-09T04:28:57.684Z · LW(p) · GW(p)

When you say "when I see OpenAI list a 'safety' role", are you talking about roles related to superalignment, or are you talking about all roles that have safety in the name? Obviously OpenAI has many roles that are aimed at various near-term safety stuff, and those might have safety in the name, but this isn't duplicitous in the slightest--the job descriptions (and maybe even the rest of the job titles!) explain it perfectly clearly so it's totally fine.

I assume you meant something like "when I see OpenAI list a role that seems to be focused on existential safety, I'm like 55% that it has much to do with existential safety"? In that case, I think your number is too low.

Replies from: Raemon↑ comment by Raemon · 2024-07-09T04:37:33.155Z · LW(p) · GW(p)

I was thinking of things like the Alignment Research Science role. If they talked up "this is a superalignment role", I'd have an estimate higher than 55%.

Replies from: Buck

We are seeking Researchers to help design and implement experiments for alignment research. Responsibilities may include:

- Writing performant and clean code for ML training

- Independently running and analyzing ML experiments to diagnose problems and understand which changes are real improvements

- Writing clean non-ML code, for example when building interfaces to let workers interact with our models or pipelines for managing human data

- Collaborating closely with a small team to balance the need for flexibility and iteration speed in research with the need for stability and reliability in a complex long-lived project

- Understanding our high-level research roadmap to help plan and prioritize future experiments

- Designing novel approaches for using LLMs in alignment research

You might thrive in this role if you:

- Are excited about OpenAI’s mission of building safe, universally beneficial AGI and are aligned with OpenAI’s charter

- Want to use your engineering skills to push the frontiers of what state-of-the-art language models can accomplish

- Possess a strong curiosity about aligning and understanding ML models, and are motivated to use your career to address this challenge

- Enjoy fast-paced, collaborative, and cutting-edge research environments

- Have experience implementing ML algorithms (e.g., PyTorch)

- Can develop data visualization or data collection interfaces (e.g., JavaScript, Python)

- Want to ensure that powerful AI systems stay under human control

↑ comment by Buck · 2024-07-09T04:41:28.169Z · LW(p) · GW(p)

Yeah, I think that this is disambiguated by the description of the team:

OpenAI’s Alignment Science research teams are working on technical approaches to ensure that AI systems reliably follow human intent even as their capabilities scale beyond human ability to directly supervise them.

We focus on researching alignment methods that scale and improve as AI capabilities grow. This is one component of several long-term alignment and safety research efforts at OpenAI, which we will provide more details about in the future.

So my guess is that you would call this an alignment role (except for the possibility that the team disappears because of superalignment-collapse-related drama).

Replies from: Raemon↑ comment by Raemon · 2024-07-09T17:16:54.959Z · LW(p) · GW(p)

Yeah I read those lines, and also "Want to use your engineering skills to push the frontiers of what state-of-the-art language models can accomplish", and remain skeptical. I think OpenAI tends to equivocate on how they use the word "alignment" (or: they use it consistently, but not in a way that I consider obviously good. Like, I think the people working on RLHF a few years ago probably contributed to ChatGPT being released earlier, which I think was bad*).

*I like the part where the world feels like it's actually starting to respond to AI now, but, I think that would have happened later, with more serial-time for various other research to solidify.

(I think this is a broader difference in guesses about what research/approaches are good, which I'm not actually very confident about, esp. compared to habryka, but, is where I'm currently coming from)

Replies from: elityre↑ comment by Eli Tyre (elityre) · 2024-08-10T07:05:24.501Z · LW(p) · GW(p)

Tangent:

*I like the part where the world feels like it's actually starting to respond to AI now, but, I think that would have happened later, with more serial-time for various other research to solidify.

And with less serial-time for various policy plans to solidify and gain momentum.

If you think we're irreparably far behind on the technical research, and advocacy / political action is relatively more promising, you might prefer to trade years of timeline for earlier, more widespread awareness of the importance of AI, and a relatively longer period of people pushing on policy plans.

↑ comment by Elizabeth (pktechgirl) · 2024-07-08T00:14:22.319Z · LW(p) · GW(p)

Good question. My revised belief is that OpenAI will not sufficiently slow down production in order to boost safety. It may still produce theoretical safety work that is useful to others, and to itself if the changes are cheap to implement.

I do also expect many people assigned to safety to end up doing more work on capabilities, because the distinction is not always obvious and they will have so many reasons to err in the direction of agreeing with their boss's instructions.

Replies from: Buck↑ comment by Buck · 2024-07-09T04:24:03.603Z · LW(p) · GW(p)

Ok but I feel like if a job mostly involves researching x-risk-motivated safety techniques and then publishing them, it's very reasonable to call it an x-risk-safety research job, regardless of how likely the organization where you work is to adopt your research eventually when it builds dangerous AI.

↑ comment by eye96458 · 2024-07-07T02:04:16.815Z · LW(p) · GW(p)

I'd define "genuine safety role" as "any qualified person will increase safety faster than capabilities in the role". I put ~0 likelihood that OAI has such a position. The best you could hope for is being a marginal support for a safety-based coup (which has already been attempted, and failed).

"~0 likelihood" means that you are nearly certain that OAI does not have such a position (ie, your usage of "likelihood" has the same meaning as "degree of certainty" or "strength of belief")? I'm being pedantic because I'm not a probability expert and AFAIK "likelihood" has some technical usage in probability.

If you're up for answering more questions like this, then how likely do you believe it is that OAI has a position where at least 90% of people who are both, (A) qualified skill wise (eg, ML and interpretability expert), and, (B) believes that AIXR is a serious problem, would increase safety faster than capabilities in that position?

There's a different question of "could a strategic person advance net safety by working at OpenAI, more so than any other option?". I believe people like that exist, but they don't need 80k to tell them about OpenAI.

This is a good point and you mentioning it updates me towards believing that you are more motivated by (1) finding out what's true regarding AIXR and (2) reducing AIXR, than something like (3) shit talking OAI.

I asked a related question a few months ago, ie, if one becomes doom pilled while working as an executive at an AI lab and one strongly values survival, what should one do? [LW(p) · GW(p)]

Replies from: pktechgirl↑ comment by Elizabeth (pktechgirl) · 2024-07-08T00:09:09.299Z · LW(p) · GW(p)

how likely do you believe it is that OAI has a position where at least 90% of people who are both, (A) qualified skill wise (eg, ML and interpretability expert), and, (B) believes that AIXR is a serious problem, would increase safety faster than capabilities in that position?

The cheap answer here is 0, because I don't think there is any position where that level of skill and belief in AIXR has a 90% chance of increasing net safety. Ability to do meaningful work in this field is rarer than that.

So the real question is how does OpenAI compare to other possibilities? To be specific, let's say being an LTFF-funded solo researcher, academia, and working at Anthropic.

Working at OpenAI seems much more likely to boost capabilities than solo research and probably academia. Some of that is because they're both less likely to do anything. But that's because they face OOM less pressure to produce anything, which is an advantage in this case. LTFF is not a pressure- or fad-free zone, but they have nothing near the leverage of paying someone millions of dollars, or providing tens of hours each week surrounded by people who are also paid millions of dollars to believe they're doing safe work.

I feel less certain about Anthropic. It doesn't have any of the terrible signs OpenAI did (like the repeated safety exoduses, the board coup, and clawbacks on employee equity), but we didn't know about most of those a year ago.

If we're talking about a generic skilled and concerned person, probably the most valuable thing they can do is support someone with good research vision. My impression is that these people are more abundant at Anthropic than OpenAI, especially after the latest exodus, but I could be wrong. This isn't a crux for me for the 80k board[1] but it is a crux for how much good could be done in the role.

Some additional bits of my model:

- I doubt OpenAI is going to tell a dedicated safetyist they're off the safety team and on direct capabilities. But the distinction is not always obvious, and employees will be very motivated to not fight OpenAI on marginal cases.

- You know those people who stand too close, so you back away, and then they move closer? Your choices in that situation are to steel yourself for an intense battle, accept the distance they want, or leave. Employers can easily pull that off at scale. They make the question become "am I sure this will never be helpful to safety?" rather than "what is the expected safety value of this research?"

- Alternate frame: How many times will an entry level engineer get to say no before he's fired?

- I have a friend who worked at OAI. They'd done all the right soul searching and concluded they were doing good alignment work. Then they quit, and a few months later were aghast at concerns they'd previously dismissed. Once you are in the situation it is very hard to maintain accurate perceptions.

- Something @Buck [LW · GW] said made me realize I was conflating "produce useful theoretical safety work" with "improve the safety of OpenAI's products." I don't think OpenAI will stop production for safety reasons[2], but they might fund theoretical work that is useful to others, or that is cheap to follow themselves (perhaps because it boosts capabilities as well...).

This is a good point and you mentioning it updates me towards believing that you are more motivated by (1) finding out what's true regarding AIXR and (2) reducing AIXR, than something like (3) shit talking OAI.

Thank you. My internal experience is that my concerns stem from x-risk (and belatedly the wage theft). But OpenAI has enough signs of harm and enough signs of hiding harm that I'm fine shit talking as a side effect, where normally I'd try for something more cooperative and with lines of retreat.

[1] I think the clawbacks are disqualifying on their own, even if they had no safety implications. They stole money from employees! That's one of the top 5 signs you're in a bad workplace. 80k doesn't even mention this.

[2] To ballpark quantify: I think there is <5% chance that OpenAI slows production by 20% or more, in order to reduce AIXR. And I believe frontier AI companies need to be prepared to slow by more than that.

↑ comment by Eli Tyre (elityre) · 2024-07-06T05:23:12.245Z · LW(p) · GW(p)

Hm. Can I request tabooing the phrase "genuine safety role" in favor of more detailed description of the work that's done? There's broad disagreement about which kinds of research are (or should count as) "AI safety", and what's required for that to succeed.

Replies from: eye96458↑ comment by eye96458 · 2024-07-06T06:02:20.342Z · LW(p) · GW(p)

Can I request tabooing the phrase "genuine safety role" in favor of more detailed description of the work that's done?

I suspect that would provide some value, but did you mean to respond to @Elizabeth [LW · GW]?

I was just trying to use the term as a synonym for "actual safety role" as @Elizabeth [LW · GW] used it in her original comment [LW(p) · GW(p)].

There's broad disagreement about which kinds of research are (or should count as) "AI safety", and what's required for that to succeed.

This part of your comment seems accurate to me, but I'm not a domain expert.

↑ comment by Linda Linsefors · 2024-07-04T21:25:56.633Z · LW(p) · GW(p)

However, we don’t conceptualize the board as endorsing organisations.

It doesn't matter how you conceptualize it. It matters how it looks, and it looks like an endorsement. This is not an optics concern. The problem is that people who trust you will see this and think OpenAI is a good place to work.

- These still seem like potentially very strong roles with the opportunity to do very important work. We think it’s still good for the world if talented people work in roles like this!

How can you still think this after the whole safety team quit? They clearly did not think these roles were any good for doing safety work.

Edit: I was wrong about the whole team quitting. But given everything, I still stand by my view that these jobs should not be there without at least a warning sign.

As an AI safety community builder, I'm considering boycotting 80k (i.e. not linking to you and recommending that people not trust your advice) until you at least put warning labels on your job board. And I'll recommend that other community builders do the same.

I do think 80k means well, but I just can't recommend any org with this level of lack of judgment. Sorry.

Replies from: elityre↑ comment by Eli Tyre (elityre) · 2024-07-05T06:48:11.588Z · LW(p) · GW(p)

As an AI safety community builder, I'm considering boycotting 80k (i.e. not linking to you and recommending that people not trust your advice) until you at least put warning labels on your job board.

Hm. I have mixed feelings about this. I'm not sure where I land overall.

I do think it is completely appropriate for Linda to recommend whichever resources she feels are appropriate, and if her integrity calls her, to boycott resources that otherwise have (in her estimation) good content.

I feel a little sad that I, at least, perceived that sentence as an escalation. There's a version of this conversation where we all discuss considerations, in public and in private, and 80k is a participant in that conversation. There's a different version where 80k immediately feels the need to be on the defensive, in something like PR mode, or where the outcome is mostly determined by the equilibrium of social-power rather than anything else. That seems overall worse, and I'm afraid that sentences like the quoted one push in that direction.

On the other hand, I also feel some resonance with the escalation. I think "we", broadly construed, have been far too warm with OpenAI, and it seems maybe good that there's common knowledge building that a lot of people think that was a mistake, and momentum building towards doing something different going forward, including people "voting with their voices", instead of being live-and-let-live to the point of having no real position at all.

↑ comment by the gears to ascension (lahwran) · 2024-07-05T14:02:38.645Z · LW(p) · GW(p)

it may be too much to ask, but in my ideal world, 80k folks would feel comfy ignoring the potential escalatory emotional valence and would treat that purely as evidence about the importance of it to others. in other words, if people are demanding something, that's a time to get less defensive and more analytical, not more defensive and less analytical. It would be good PR to me for them to just think out loud about it.

Replies from: Buck↑ comment by Buck · 2024-07-05T15:47:41.548Z · LW(p) · GW(p)

I agree that it would be better if 80k had the capacity to easily navigate this kind of thing. But given that they (like all of us) have fixed capacity, I think it still makes sense to complain about Linda making it harder for them to respond.

Replies from: Linda Linsefors, aysja, lahwran↑ comment by Linda Linsefors · 2024-07-24T09:40:25.190Z · LW(p) · GW(p)

I also have limited capacity.

↑ comment by aysja · 2024-07-09T00:09:36.119Z · LW(p) · GW(p)

But whether an organization can easily respond is pretty orthogonal to whether they’ve done something wrong. Like, if 80k is indeed doing something that merits a boycott, then saying so seems appropriate. There might be some debate about whether this is warranted given the facts, or even whether the facts are right, but it seems misguided to me to make the strength of an objection proportional to someone’s capacity to respond rather than to the badness of the thing they did.

↑ comment by the gears to ascension (lahwran) · 2024-07-06T01:47:22.903Z · LW(p) · GW(p)

Agreed. It's reasonable to ask others eg Linda to make this easier where possible. Eg, when discussing group behavior in response to a state of affairs, instead of making it "suggestion/command" part of speech, make it "conditional prediction" part of speech. A statement I could truthfully say:

"As an AI safety community member, I predict I and others will be uncomfortable with 80k if this is where things end up settling, because of disagreeing. I could be convinced otherwise, but it would take extraordinary evidence at this point. If my opinions stay the same and 80k's also are unchanged, I expect this to make me hesitant to link to and recommend 80k, and I would be unsurprised to find others behaving similarly."

Behaving like that is very similar to what Linda said she intends, but seems to me to leave more room for aumann. I would suggest to 80k that they attempt to simply reinterpret what Linda said as equivalent to this, if possible. Of course, it is in fact a slightly different thing than what she said.

Edit: very odd that this, but neither its parent nor grandparent comment, got downvoted. What I said here feels like a pretty similar thing to what I said in the grandparent, and agrees with buck and with linda; it's my attempt to show there's a way to merge these perspectives. What about my comment diverges?

Replies from: Linda Linsefors↑ comment by Linda Linsefors · 2024-07-24T10:05:53.482Z · LW(p) · GW(p)

A statement I could truthfully say:

"As an AI safety community member, I predict I and others will be uncomfortable with 80k if this is where things end up settling, because of disagreeing. I could be convinced otherwise, but it would take extraordinary evidence at this point. If my opinions stay the same and 80k's also are unchanged, I expect this to make me hesitant to link to and recommend 80k, and I would be unsurprised to find others behaving similarly."

But you did not say it (other than as a response to me). Why not?

I'd be happy for you to take the discussion with 80k and try to change their behaviour. This is not the first time I've told them that if they list a job, a lot of people will both take it as an endorsement, and trust 80k that this is a good job to apply for.

As far as I can tell 80k is in complete denial about the large influence they have on many EAs, especially local EA community builders. They have a lot of trust, mainly for being around for so long. So whenever they screw up like this, it causes enormous harm. Also since EA has such a large growth rate (at any given time most EAs are new EAs), the community is bad at tracking when 80k does screw up, so they don't even lose that much trust.

On my side, I've pretty much given up on them caring at all about what I have to say. Which is why I'm putting so little effort into how I word things. I agree my comment could have been worded better (with more effort), and I have tried harder in the past. But I also have to say that I find the level of extreme politeness that lots of EAs show towards high status orgs to be very off-putting, so I've never been able to imitate that style.

Again, if you can do better, please do so. I'm serious about this.

Someone (not me) had some success at getting 80k to listen, over at the EA forum version of this post. But more work is needed.

(FWIW, I'm not the one who downvoted you)

↑ comment by Linda Linsefors · 2024-07-05T08:53:52.967Z · LW(p) · GW(p)

Replies from: Linda Linsefors↑ comment by Linda Linsefors · 2024-07-05T08:59:50.061Z · LW(p) · GW(p)

Temporarily deleted since I misread Eli's comment. I might re-post

↑ comment by Stephen Fowler (LosPolloFowler) · 2024-07-04T01:20:59.692Z · LW(p) · GW(p)

Firstly, some form of visible disclaimer may be appropriate if you want to continue listing these jobs.

While the jobs board may not be "conceptualized" as endorsing organisations, I think some users will see jobs from OpenAI listed on the job board as at least a partial, implicit endorsement of OpenAI's mission.

Secondly, I don't think roles being directly related to safety or security should be a sufficient condition to list roles from an organisation, even if the roles are opportunities to do good work.

I think this is easier to see if we move away from the AI Safety space. Would it be appropriate for the 80,000 Hours job board to advertise an Environmental Manager job from British Petroleum?

↑ comment by Eli Tyre (elityre) · 2024-07-05T17:40:37.323Z · LW(p) · GW(p)

I think this is easier to see if we move away from the AI Safety space. Would it be appropriate for the 80,000 Hours job board to advertise an Environmental Manager job from British Petroleum?

That doesn't seem obviously absurd to me, at least.

Replies from: LosPolloFowler↑ comment by Stephen Fowler (LosPolloFowler) · 2024-07-06T03:14:17.211Z · LW(p) · GW(p)

I dislike when conversations that are really about one topic get muddied by discussion about an analogy. For the sake of clarity, I'll use italics for the related statements when talking about the AI safety jobs at capabilities companies.

Interesting perspective. At least one other person also had a problem with that statement, so it is probably worth me expanding.

Assume, for the sake of the argument, that the Environmental Manager's job is to assist with clean-ups after disasters, monitoring for excessive emissions and preventing environmental damage. In a vacuum these are all wonderful, somewhat EA-aligned tasks.

Similarly, the safety-focused role, in a vacuum, is mitigating concrete harms from prosaic systems and, in the future, may be directly mitigating existential risk.

However, when we zoom out and look at these jobs in the context of the larger organisation's goals, things are less clear. The good you do helps fuel a machine whose overall goals are harmful.

The good that you do is profitable for the company that hires you. This isn't always a bad thing, but by allowing BP to operate in a more environmentally friendly manner you improve BP's public relations and help to soften or reduce regulation BP faces.

Making contemporary AI systems safer, reducing harm in the short term, potentially reduces the regulatory hurdles that these companies face. It is harder to push restrictive legislation governing the operation of AI capabilities companies if they have good PR.

More explicitly, the short-term environmental management that you do may hide more long-term, disastrous damage. Programs to protect workers and locals from toxic chemical exposure around an exploration site help keep the overall business viable. While the techniques you develop shield the local environment from direct harm, you are not shielding the globe from the harmful impact of pollution.

Alignment and safety research at capabilities companies focuses on today's models, which are not generally intelligent. You are forced to assume that the techniques you develop will extend to systems that are generally intelligent, deployed in the real world and capable of being an existential threat.

Meanwhile the techniques used to align contemporary systems absolutely improve their economic viability and indirectly mean more money is funnelled towards AGI research.

↑ comment by Eli Tyre (elityre) · 2024-07-06T05:20:32.140Z · LW(p) · GW(p)

Yep. I agree with all of that. Which is to say that there are considerations in both directions, and it isn't obvious which ones dominate, in both the AI and petroleum cases. My overall guess is that in both cases it isn't a good policy to recommend roles like these, but I don't think that either case is particularly more of a slam dunk than the other. So referencing the oil case doesn't make the AI one particularly clearer to me.

↑ comment by Remmelt (remmelt-ellen) · 2024-07-19T14:43:53.839Z · LW(p) · GW(p)

We used to list roles that seemed more tangentially safety-related, but because of our reduced confidence in OpenAI

This misses aspects of what used to be 80k's position:

❝ In fact, we think it can be the best career step for some of our readers to work in labs, even in non-safety roles. That’s the core reason why we list these roles on our job board.

– Benjamin Hilton, February 2024 [EA(p) · GW(p)]

❝ Top AI labs are high-performing, rapidly growing organisations. In general, one of the best ways to gain career capital is to go and work with any high-performing team — you can just learn a huge amount about getting stuff done. They also have excellent reputations more widely. So you get the credential of saying you’ve worked in a leading lab, and you’ll also gain lots of dynamic, impressive connections.

– Benjamin Hilton, June 2023 - still on website

80k was listing some non-safety related jobs:

– From my email in May 2023:

– From my comment in February 2024 [EA(p) · GW(p)]:

↑ comment by William_S · 2024-07-05T17:33:15.665Z · LW(p) · GW(p)

I do think 80k should have more context on OpenAI but also any other organization that seems bad with maybe useful roles. I think people can fail to realize the organizational context if it isn't pointed out and they only read the company's PR.

↑ comment by DPiepgrass · 2024-07-15T20:16:13.644Z · LW(p) · GW(p)

I think there may be merit in pointing EAs toward OpenAI safety-related work, because those positions will presumably be filled by someone and I would prefer it be filled by someone (i) very competent (ii) who is familiar with (and cares about) a wide range of AGI risks, and EA groups often discuss such risks. However, anyone applying at OpenAI should be aware of the previous drama before applying. The current job listings don't communicate the gravity or nuance of the issue before job-seekers push the blue button leading to OpenAI's job listing:

I guess the card should be guarded, so that instead of just having a normal blue button, the user should expand some sort of 'additional details' subcard first. The user then sees some bullet points about the OpenAI drama and (preferably) expert concerns about working for OpenAI, each bullet point including a link to more details, followed by a secondary-styled button for the job application (typically, that would be a button with a white background and blue border). And of course you can do the same for any other job where the employer's interests don't seem well-aligned with humanity or otherwise don't have a good reputation.

Edit: actually, for cases this important, I'd like to replace 'View Job Details' with a "View Details" button that goes to a full page on 80000 Hours in order to highlight the relevant details more strongly, again with the real job link at the bottom.

comment by yanni kyriacos (yanni) · 2024-07-03T23:55:03.385Z · LW(p) · GW(p)

I think an assumption 80k makes is something like "well if our audience thinks incredibly deeply about the Safety problem and what it would be like to work at a lab and the pressures they could be under while there, then we're no longer accountable for how this could go wrong. After all, we provided vast amounts of information on why and how people should do their own research before making such a decision".

The problem is, that is not how most people make decisions. No matter how much rational thinking is promoted, we're first and foremost emotional creatures that care about things like status. So, if 80k decides to have a podcast with the Superalignment team lead, then they're effectively promoting the work of OpenAI. That will make people want to work for OpenAI. This is an inescapable part of the Halo effect.

Lastly, 80k is explicitly targeting very young people who, no offense, probably don't have the life experience to imagine themselves in a workplace where they have to resist incredible pressures to conform, for example by not sharing interpretability insights with capabilities teams.

The whole exercise smacks of naivety and I'm very confident we'll look back and see it as an incredibly obvious mistake in hindsight.

Replies from: jacobjacob↑ comment by Bird Concept (jacobjacob) · 2024-07-04T17:30:53.095Z · LW(p) · GW(p)

I was around a few years ago when there were already debates about whether 80k should recommend OpenAI jobs. And that's before any of the fishy stuff leaked out, and they were stacking up cool governance commitments like becoming a capped-profit and having a merge-and-assist-clause.

And, well, it sure seems like a mistake in hindsight how much advertising they got.

comment by Remmelt (remmelt-ellen) · 2024-07-19T14:11:54.937Z · LW(p) · GW(p)

I haven't shared this post with other relevant parties – my experience has been that private discussion of this sort of thing is more paralyzing than helpful.

Fourteen months ago, I emailed 80k staff with concerns about how they were promoting AGI lab positions on their job board.

The exchange:

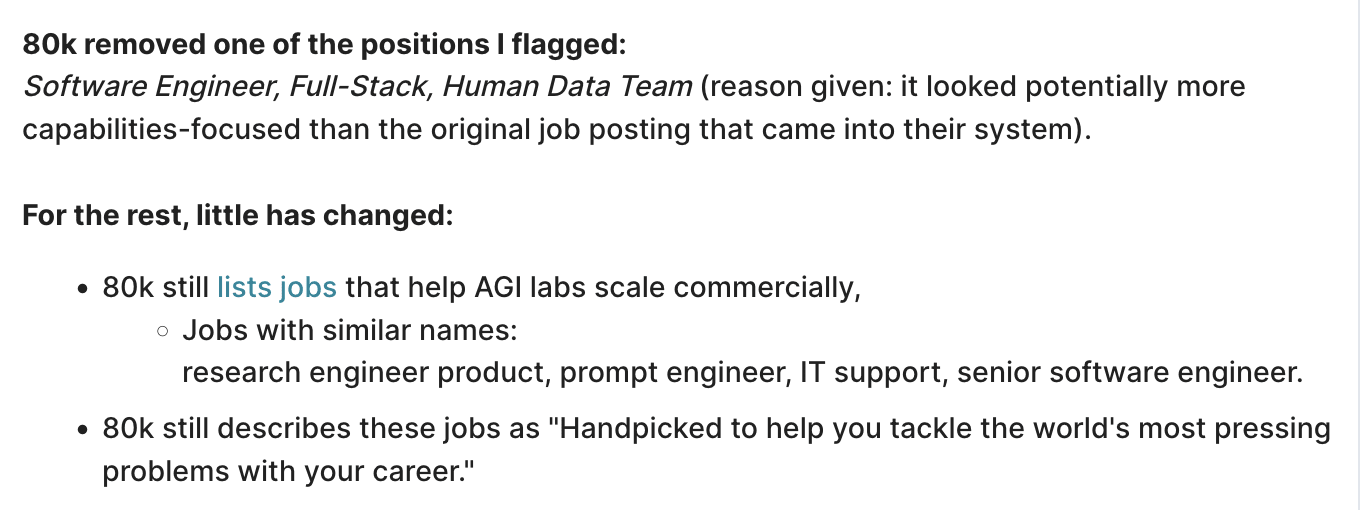

- I offered specific reasons and action points.

- 80k staff replied by referring to their website articles about why their position on promoting jobs at OpenAI and Anthropic was broadly justified (plus they removed one job listing).

- Then I pointed out what those articles were specifically missing.

- Then staff stopped responding (except to say they were "considering prioritising additional content on trade-offs").

It was not a meaningful discussion.

Five months ago, I posted my concerns publicly [EA(p) · GW(p)]. Again, 80k staff removed one job listing [EA(p) · GW(p)] (why did they not double-check before?). Again, staff referred to their website articles [EA(p) · GW(p)] as justification to keep promoting OpenAI and Anthropic safety and non-safety roles on their job board. Again, I pointed out [EA(p) · GW(p)] what seemed missing or off about their justifications in those articles, with no response from staff.

It took the firing of the entire OpenAI superalignment team before 80k staff "tightened up [their] listings [LW(p) · GW(p)]". That is, three years after the first wave of safety researchers left OpenAI.

80k is still listing 33 Anthropic jobs, even as Anthropic has clearly been competing to extend "capabilities" for over a year.

comment by RHollerith (rhollerith_dot_com) · 2024-07-04T04:42:45.492Z · LW(p) · GW(p)

I hope that the voluminous discussion on exactly how bad each of the big AI labs is doesn't distract readers from what I consider the main chances: getting all the AI labs banned (eventually) and convincing talented young people not to put in the years of effort needed to prepare themselves to do technical AI work.

Replies from: david-james↑ comment by David James (david-james) · 2024-07-06T18:14:38.020Z · LW(p) · GW(p)

I’m curious if your argument, distilled, is: fewer people skilled in technical AI work is better? Such a claim must be examined closely! Think of it from a systems dynamics point of view. We must look at more than just one relationship. (I personally try to press people to share some kind of model that isn’t presented only in words.)

Replies from: rhollerith_dot_com↑ comment by RHollerith (rhollerith_dot_com) · 2024-07-06T23:50:01.214Z · LW(p) · GW(p)

Yes, I am pretty sure that the fewer additional people skilled in technical AI work, the better. In the very unlikely event that before the end, someone or some group actually comes up with a reliable plan for how to align an ASI, we certainly want a sizable number of people able to understand the plan relatively quickly (i.e., without first needing to prepare themselves through study for a year), but IMHO we already have that.

"The AI project" (the community of people trying to make AIs that are as capable as possible) probably needs many thousands of additional people with technical training to achieve its goal. (And if the AI project doesn't need those additional people, that is bad news because it probably means we are all going to die sooner rather than later.) Only a few dozen or a few hundred researchers (and engineers) will probably make substantial contributions toward the goal, but neither the apprentice researchers themselves, nor their instructors, nor their employers can tell which researchers will ever make a substantial contribution, so the only way for the project to get an adequate supply of researchers is to train and employ many thousands. The project would prefer to employ even more than that.

I am pretty sure it is more important to restrict the supply of researchers available to the AI project than it is to have more researchers who describe themselves as alignment researchers. It's not flat impossible that the AI-alignment project will bear fruit before the end, but it is very unlikely. In contrast, if not stopped somehow (e.g., by the arrival of helpful space aliens or some other miracle) the AI project will probably succeed at its goal. Most people pursuing careers in alignment research are probably doing more harm than good because the AI project tends to be able to use any results they come up with. MIRI is an exception to the general rule, but MIRI has chosen to stop its alignment research program on the grounds that it is hopeless.

Restricting the supply of researchers for the AI project by warning talented young people not to undergo the kinds of training needed by the AI project increases the length of time left before the AI project kills us all, which increases the chances of a miracle such as the arrival of the helpful space aliens. Also, causing our species to endure 10 years longer than it would otherwise endure is an intrinsic good even if it does not instrumentally lead to our long-term survival.

Replies from: david-james↑ comment by David James (david-james) · 2024-07-08T01:20:26.493Z · LW(p) · GW(p)

Here is an example of a systems dynamics diagram showing some of the key feedback loops I see. We could discuss various narratives around it and what to change (add, subtract, modify).

┌───── to the degree it is perceived as unsafe ◀──────────┐
│          ┌──── economic factors ◀─────────┐             │
│        + ▼                                │             │
│      ┌───────┐     ┌───────────┐          │             │         ┌────────┐
│      │people │     │ effort to │      ┌───────┐    ┌─────────┐    │   AI   │
▼    - │working│   + │make AI as │    + │  AI   │  + │potential│  + │becomes │
├─────▶│  in   │────▶│powerful as│─────▶│ power │───▶│   for   │───▶│  too   │
│      │general│     │ possible  │      └───────┘    │unsafe AI│    │powerful│
│      │  AI   │     └───────────┘          │        └─────────┘    └────────┘
│      └───────┘                            │
│          │ net movement                   │ e.g. use AI to reason
│        + ▼                                │ about AI safety
│     ┌────────┐                          + ▼
│     │ people │     ┌────────┐      ┌─────────────┐              ┌──────────┐
│   + │working │   + │ effort │    + │understanding│            + │alignment │
└────▶│ in AI  │────▶│for safe│─────▶│of AI safety │─────────────▶│  solved  │
      │ safety │     │   AI   │      └─────────────┘              └──────────┘
      └────────┘     └────────┘             │
         + ▲                                │
           └─── success begets interest ◀───┘

I find this style of thinking particularly constructive.

- For any two nodes, you can see a visual relationship (or lack thereof) and ask "what influence do these have on each other and why?".

- The act of summarization cuts out chaff.

- It is harder to fool yourself about the completeness of your analysis.

- It is easier to get to core areas of confusion or disagreement with others.

Personally, I find verbal reasoning workable for "local" (pairwise) reasoning but quite constraining for systemic thinking.

If nothing else, I hope this example shows how easily key feedback loops get overlooked. How many of us claim to have... (a) some technical expertise in positive and negative feedback? (b) interest in Bayes nets? So why don't we take the time to write out our diagrams? How can we do better?

P.S. There are major oversights in the diagram above, such as economic factors. This is not a limitation of the technique itself -- it is a limitation of the space and effort I've put into it. I have many other such diagrams in the works.
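One way to check a diagram like the one above for overlooked loops is to write it down as a signed graph and enumerate its feedback loops mechanically. The following is only a minimal sketch in Python: the node names are shorthand invented for this example, and the edge list is one reading of the arrows and signs in the diagram (in particular, it assumes the "perceived as unsafe" arrow originates at "potential for unsafe AI"). A loop whose signs multiply to +1 is reinforcing; one whose signs multiply to -1 is balancing.

```python
from collections import defaultdict

# Signed edges (source, target, sign), transcribed from one reading of the diagram.
# All node names are hypothetical shorthand, not terms from the original comment.
EDGES = [
    ("general_ai_people", "capability_effort", +1),
    ("capability_effort", "ai_power", +1),
    ("ai_power", "general_ai_people", +1),          # economic factors
    ("ai_power", "unsafe_potential", +1),
    ("unsafe_potential", "too_powerful", +1),
    ("unsafe_potential", "general_ai_people", -1),  # perceived as unsafe
    ("unsafe_potential", "safety_people", +1),      # perceived as unsafe
    ("general_ai_people", "safety_people", +1),     # net movement
    ("safety_people", "safety_effort", +1),
    ("safety_effort", "safety_understanding", +1),
    ("ai_power", "safety_understanding", +1),       # use AI to reason about AI safety
    ("safety_understanding", "safety_people", +1),  # success begets interest
    ("safety_understanding", "alignment_solved", +1),
]


def find_loops(edges):
    """Return every simple feedback loop as (list_of_nodes, polarity)."""
    graph = defaultdict(list)
    for src, dst, sign in edges:
        graph[src].append((dst, sign))
    names = sorted({n for src, dst, _ in edges for n in (src, dst)})
    order = {name: i for i, name in enumerate(names)}
    loops = []

    def walk(start, node, path, polarity):
        for nxt, sign in graph[node]:
            if nxt == start:
                loops.append((path, polarity * sign))
            # Only visit nodes ordered after the start, so each loop is
            # reported once (from its alphabetically first node).
            elif order[nxt] > order[start] and nxt not in path:
                walk(start, nxt, path + [nxt], polarity * sign)

    for start in names:
        walk(start, start, [start], +1)
    return loops


if __name__ == "__main__":
    for path, polarity in find_loops(EDGES):
        kind = "reinforcing" if polarity > 0 else "balancing"
        print(kind, " -> ".join(path + [path[0]]))
```

Under these assumed edges the only balancing loop is the one that runs through the perception of danger; changing or adding a single edge and rerunning shows immediately which loops appear or disappear.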

comment by Review Bot · 2024-07-04T02:22:22.615Z · LW(p) · GW(p)

The LessWrong Review [? · GW] runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2025. The top fifty or so posts are featured prominently on the site throughout the year.

Hopefully, the review is better than karma at judging enduring value. If we have accurate prediction markets on the review results, maybe we can have better incentives on LessWrong today. Will this post make the top fifty?

Replies from: jacobjacob↑ comment by Bird Concept (jacobjacob) · 2024-07-04T19:17:58.001Z · LW(p) · GW(p)

Poor Review Bot, why do you get so downvoted? :(

Replies from: JohnSteidley↑ comment by John Steidley (JohnSteidley) · 2024-07-06T07:28:46.018Z · LW(p) · GW(p)

Because it's obviously annoying and burning the commons. Imagine if I made a bot that posted the same comment on every post on LessWrong; surely that wouldn't be acceptable behavior.

Replies from: habryka4, jacobjacob, kave↑ comment by habryka (habryka4) · 2024-07-06T17:03:03.102Z · LW(p) · GW(p)

It's relatively normal for forums/subreddits to have bots that serve specific functions and post similar comments on posts when they meet certain conditions (like most subreddits I use have some collection of bots, whether it's a bot that looks up the text of any magic card mentioned, or a bot that automatically reposts the moderation guidelines when there are too many comments, etc.)

Replies from: Raemon, Josephm, rhollerith_dot_com↑ comment by Raemon · 2024-07-06T17:31:14.097Z · LW(p) · GW(p)

tbh I typically find those bots annoying too.

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-07-06T17:44:23.262Z · LW(p) · GW(p)

Depends on the Subreddit, but definitely agree that they can be pretty annoying.

↑ comment by Joseph Miller (Josephm) · 2024-07-06T17:49:37.350Z · LW(p) · GW(p)

Could the prediction market for each post be integrated more elegantly into the UI, rather than posted as a comment?

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-07-06T20:45:33.073Z · LW(p) · GW(p)

Yeah, I've been planning on doing something like that. Just every custom UI element tends to introduce complexities in how it interfaces with all adjacent UI elements, but I think we'll likely do something like that in the long run.

Replies from: rhollerith_dot_com↑ comment by RHollerith (rhollerith_dot_com) · 2024-07-30T17:01:52.386Z · LW(p) · GW(p)

You don't want to make it a new element of the menu that appears when the user clicks on the 3 vertical dots in the upper right corner of a comment?

Replies from: rhollerith_dot_com↑ comment by RHollerith (rhollerith_dot_com) · 2024-08-17T05:34:01.634Z · LW(p) · GW(p)

If the ReviewBot comments were collapsed without my having to manually collapse them, they would probably cease to bother me.

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-08-17T06:01:48.942Z · LW(p) · GW(p)

Yeah, it's on my to-do list for next week to revamp these messages, I think they aren't working as is.

↑ comment by RHollerith (rhollerith_dot_com) · 2024-07-30T16:39:09.529Z · LW(p) · GW(p)

But the automatic pinned comments I see on Reddit have a purpose that is plausibly essential to the subreddit's having any value to any users at all (usually to remind participants of a plausibly-essential rule that was violated constantly before the introduction of the pinned comment) whereas the annual review (and betting about it on Manifold Markets) are not plausibly essential to LW's having any value at all (although it is a common human cognitive bias for someone who has put 100s of hours of hard work into something to end up believing it is much more important than it actually is).

The reason spam got named "spam" in the early 1990s is that it tends to be a repetition of the same text over and over (similar to how the word "spam" is repeated over and over in a particular Monty Python skit).

↑ comment by Bird Concept (jacobjacob) · 2024-07-06T23:45:37.286Z · LW(p) · GW(p)

[censored_meme.png]

I like review bot and think it's good

Replies from: kave↑ comment by kave · 2024-07-07T07:28:47.572Z · LW(p) · GW(p)

mod note: this comment used to have a gigantic image of Rockwell's Freedom of Speech, which I removed.

Replies from: pktechgirl↑ comment by Elizabeth (pktechgirl) · 2024-07-26T16:00:24.127Z · LW(p) · GW(p)

context note: Jacob is also a mod/works for LessWrong, kave isn't doing this to random users.

Replies from: kave↑ comment by kave · 2024-07-26T17:51:38.956Z · LW(p) · GW(p)

I probably would have done something similar to a random user, though probably with a more transparent writeup, and/or trying harder to shrink the image or something.

I'll note that [censored_meme.png] is something Jacob added back in after I removed the image, not something I edited in.

↑ comment by Elizabeth (pktechgirl) · 2024-07-27T02:13:38.927Z · LW(p) · GW(p)

huh. was it the particular meme (brave dude telling the truth), the size, or some third thing?

Replies from: kave, Raemon↑ comment by kave · 2024-08-17T07:07:07.522Z · LW(p) · GW(p)

I think if you made a bot that posted the same comment on every post except for, say, a link to a high-quality audio narration of the post, it would probably be acceptable behaviour.

EDIT: Though my true rejection is more like, I wouldn't rule out the site admins making an auto commenter that reminded people of argumentative norms or something like that. Of course, it seems likely that whatever end the auto commenter was supposed to serve would be better served using a different UI element than a comment (as also seems true here), but it's not something I would say we should never try.

I think as site admins we should be trying to serve something like the overall health and vision of the site, and not just locally the user's level of annoyance, though I do think the user's level of annoyance is a relevant thing to take into account!

There's something a little loopy here that's hard to reason about. People might be annoyed because a comment burns the commons. But I think there's a difference in opinion about whether it's burning or contributing to the commons. And then, I imagine, those who think it's burning the commons want to offer their annoyance as proof of the burn. But there's a circularity there I don't know quite how to think through.

comment by Douglas_Knight · 2024-07-04T03:33:44.344Z · LW(p) · GW(p)

What you say about OpenAI makes it the apotheosis of EA and thus I think it would be better for 80k to endorse it to make that clear, rather than to perform the kayfabe of fake opposition.