Extreme risk neutrality isn't always wrong

post by Grant Demaree (grant-demaree) · 2022-12-31T04:05:18.556Z · LW · GW · 19 comments


Risk neutrality is the idea that a 50% chance of $2 is exactly as valuable as a 100% chance of $1. This is a highly unusual preference, but it sometimes makes sense:

Exampleville is infested with an always-fatal parasite. All 1,024 residents have the worm.

For each gold coin you pay, a pharmacist will deworm one randomly selected resident.

You only have one gold coin, but the pharmacist offers you a bet. Flip a weighted coin, which comes up heads 51% of the time. If heads, you double your money. If tails, you lose it all.

You can play as many times as you like.

It makes sense to be risk neutral here. If you keep playing until you've saved everyone or go broke, each resident gets a 0.51^10 = 1 in 840 chance of survival. If you don't play, it's 1 in 1,024.
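The arithmetic here can be checked with a short sketch. It assumes the all-in strategy (bet the whole bankroll every round, so ten straight wins turn 1 coin into 1,024); the function name and dictionary keys are hypothetical, chosen only for illustration.

```python
def all_in_outcomes(p_win: float = 0.51, residents: int = 1024) -> dict:
    """Compare betting everything each round with not playing at all."""
    # Number of doublings needed to go from 1 coin to one coin per resident.
    rounds = (residents - 1).bit_length()  # 10 doublings for 1,024 residents
    # All-in play either saves everyone (win every round) or saves no one.
    p_save_all = p_win ** rounds
    return {
        "rounds": rounds,
        "survival_if_play": p_save_all,        # per-resident chance when playing
        "survival_if_not": 1 / residents,      # one random resident is dewormed
        "expected_saved_if_play": residents * p_save_all,
        "expected_saved_if_not": 1.0,
    }


result = all_in_outcomes()
print(f"play: 1 in {1 / result['survival_if_play']:.0f}")
print(f"don't play: 1 in {1 / result['survival_if_not']:.0f}")
```

The expected number of residents saved rises only modestly when you play, which is why this only looks attractive if you value each additional life saved equally.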

For a few of the most scalable interventions, I think EA is like this. OpenPhil seems to expect a very low rate of diminishing returns for GiveWell's top charities. I wouldn't recommend a double-or-nothing on GiveWell's annual budget at a 51% win rate, but I would at 60%.[1]

Most interventions aren't like this. The second $100M of AI safety research buys much less than the first $100M. Diminishing returns seem really steep.

SBF and Caroline Ellison had much more aggressive ideas. More below on that.

Intro to the Kelly Criterion

Suppose you're offered a 60% double-or-nothing bet. How much should you wager? In 1956, John Kelly, a researcher at Bell Labs, proposed a general solution. Instead of maximizing Expected Value, he maximized the geometric rate of return. Over a very large number of rounds, this is given by

$$r = (1+f)^{p}(1-f)^{1-p}$$

Where $f$ is the proportion of your bankroll wagered each round, and $p$ is the probability of winning each double-or-nothing bet.

Maximizing $r$ with respect to $f$ gives

$$f^* = 2p - 1$$

Therefore, if you have a 60% chance of winning, bet 20% of your bankroll on each round. At a 51% chance of winning, bet 2%.
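For readers who want the intermediate step, here is a sketch of the maximization. The notation is an assumption on my part: $r$ is the growth factor per round, $f$ the fraction of the bankroll wagered, and $p$ the win probability. Taking logs turns the product into a sum, and setting the derivative to zero gives the optimal fraction:

$$\log r = p \log(1+f) + (1-p)\log(1-f)$$

$$\frac{d \log r}{df} = \frac{p}{1+f} - \frac{1-p}{1-f} = 0 \;\Longrightarrow\; p(1-f) = (1-p)(1+f) \;\Longrightarrow\; f^* = 2p - 1$$

Plugging in $p = 0.6$ gives $f^* = 0.2$, and $p = 0.51$ gives $f^* = 0.02$, matching the 20% and 2% figures.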

This betting method, the Kelly Criterion, has been highly successful in real life. The MIT Blackjack Team bet this way, among many others.

Implicit assumption in the Kelly Criterion

The Kelly Criterion sneaks in the assumption that you want to maximize the geometric rate of return.

This is normally a good assumption. Maximizing the geometric rate of return makes your bankroll approach infinity as quickly as possible!

But there are plenty of cases where the geometric rate of return is a terrible proxy for your underlying preferences. In the Parasite example above, your bets have a negative geometric rate of return, but they're still a good decision.

Most people also have a preference for reducing the risk of losing much of their starting capital. Betting a fraction of the Kelly Bet gives a slightly lower rate of return but a massively lower risk of ruin.

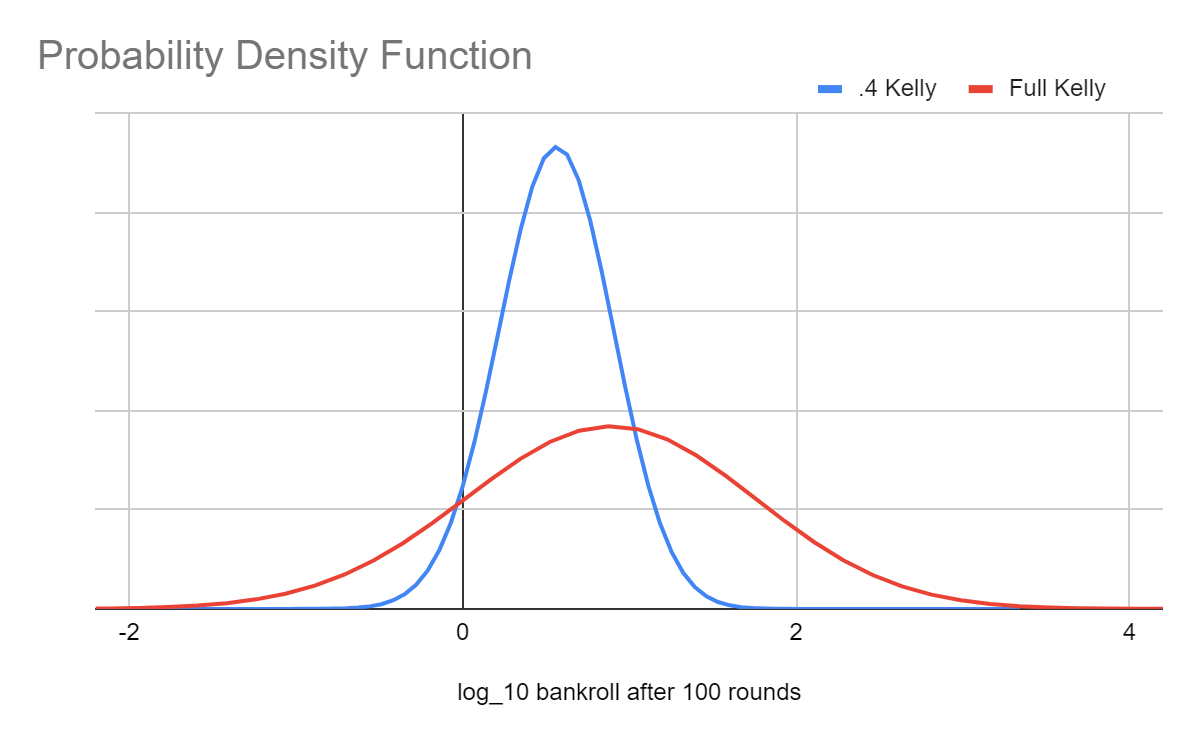

Above: distribution of outcomes after 100 rounds with a 60% chance of winning each bet. The red line (Full Kelly) wagers 20% of the bankroll on each round, while the blue (.4 Kelly) wagers 8%. The x-axis uses a log scale: "2" means multiplying your money a hundredfold, while "-1" means losing 90% of your money.

Crucially, neither of these distributions is better. The red gets higher returns, but the blue has a much more favorable risk profile. Practically, 0.2 to 0.5 Kelly seems to satisfy most people's real-world preferences.
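The difference in risk profile can be made concrete with an exact binomial calculation rather than a simulation. This is a minimal sketch under the same assumptions as the chart (100 rounds, 60% win chance, 20% vs. 8% wagered each round); the function names and the "lose at least half" threshold are illustrative choices, not part of the chart itself.

```python
import math


def log_binom_pmf(k: int, n: int, p: float) -> float:
    """Log of the Binomial(n, p) probability of exactly k wins."""
    log_comb = math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
    return log_comb + k * math.log(p) + (n - k) * math.log(1 - p)


def prob_wealth_at_most(threshold: float, fraction: float,
                        p_win: float = 0.6, rounds: int = 100) -> float:
    """P(final bankroll <= threshold * starting bankroll)."""
    total = 0.0
    for wins in range(rounds + 1):
        # Final bankroll depends only on the number of wins, not their order.
        log_wealth = (wins * math.log(1 + fraction)
                      + (rounds - wins) * math.log(1 - fraction))
        if log_wealth <= math.log(threshold):
            total += math.exp(log_binom_pmf(wins, rounds, p_win))
    return total


full_kelly = prob_wealth_at_most(0.5, 0.20)  # wager 20% each round
frac_kelly = prob_wealth_at_most(0.5, 0.08)  # wager 8% each round (0.4 Kelly)
print(f"P(lose at least half), full Kelly: {full_kelly:.4f}")
print(f"P(lose at least half), 0.4 Kelly:  {frac_kelly:.4f}")
```

Changing the `threshold` argument lets you probe other definitions of a bad outcome, such as losing 90% of the bankroll.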

Treat risk preferences like any other preference

SBF and Caroline Ellison both endorsed full Martingale strategies. Caroline says:

Most people don’t do this, because their utility is more like a function of their log wealth or something and they really don’t want to lose all of their money. Of course those people are lame and not EAs; this blog endorses double-or-nothing coin flips and high leverage.

She doesn't specify that it's a weighted coin, but I assume she meant a positive EV bet.

Meanwhile, SBF literally endorses this strategy for the entire Earth in an interview with Tyler Cowen:

[TYLER] ... 51 percent, you double the Earth out somewhere else; 49 percent, it all disappears. Would you play that game? And would you keep on playing that, double or nothing?

SBF agrees to play the game repeatedly, with the caveats that the new Earths are created in noninteracting universes, that we have perfect confidence that the new Earths are in fact created, and that the game is played a finite number of times.

I strongly disagree with SBF. But it's a sincerely held preference, not a mistake about the underlying physical reality. If the game is played for 10 rounds, the choices are

A. One Earth, as it exists today

B. 839/840 chance that Earth is destroyed and a 1/840 chance of 1,024 Earths

I strongly prefer A, and SBF seems to genuinely prefer B.

One of my objections to B is that I don't believe a life created entirely makes up for a life destroyed, and I don't believe that two Earths, even in noninteracting universes, is fully twice as valuable as one. I also think population ethics is very unsolved, so I'd err toward caution.

That's a weak objection though. Tyler could keep making the odds more favorable until he's overcome each of these issues. Here's Tyler's scenario on hard mode:

50%, you create a million Earths in noninteracting universes. 50 percent, it all disappears. Would you play that game? For how many rounds? Each round multiplies the set of Earths a millionfold.

I still wouldn't play. My next objection is that it's terrible for SBF to make this decision for everyone, when I'm confident almost the entire world would disagree. He's imposing his preferences on the rest of the world, and it'll probably kill them. That sounds like the definition of evil.

That's still a weak objection. It doesn't work in the least convenient world, because Tyler could reframe the scenario again:

50%, you create a million Earths in noninteracting universes. 50 percent, it all disappears. In a planetwide election, 60% of people agreed to play. You are a senior official with veto power. If you don't exercise your veto, then Earth will take the risk.

I won't answer this one. I'm out of objections. I hope I'd do the right thing, but I'm not sure what that is.

Did these ideas affect the FTX crash?

I think so. I predict with 30% confidence that, by end of 2027, a public report on the FTX collapse by a US Government agency will identify SBF or Caroline Ellison's attitude towards risk as a necessary cause of the FTX crash and will specifically cite their writings on double-or-nothing bets.

By "necessary cause" I mean that, if SBF and Caroline did not have these beliefs, then the crash would not have occurred. For my prediction, it's sufficient for the report to imply this causality. It doesn't have to be stated directly.

I have about 80% confidence in the underlying reality (risk attitude was a necessary cause of the crash), but that's not a verifiable prediction.

Of course, it's not the only necessary cause. The fraudulent loans to Alameda are certainly another one!

Conclusions

A fractional Kelly Bet gets excellent results for the vast majority of risk preferences. I endorse using 0.2 to 0.5 Kelly to calibrate personal risk-taking in your life.

I don't endorse risk neutrality for the very largest EA orgs/donors. I think this cashes out as a factual disagreement with SBF. I'm probably just estimating larger diminishing returns than he is.

For everyone else, I literally endorse wagering 100% of your funds to be donated on a bet that, after accounting for tax implications, amounts to a 51% double-or-nothing. Obviously, not in situations with big negative externalities.

I disagree strongly with SBF's answer to Tyler Cowen's Earth doubling problem, but I don't know what to do once the easy escapes are closed off. I'm curious if someone does.

Cross-posted to EA Forum

[1] Risk neutral preferences didn't really affect the decision here. We would've gotten to the same decision (yes on 60%, no on 51%) using the Kelly Criterion on the total assets promised to EA.

19 comments

Comments sorted by top scores.

comment by aphyer · 2023-01-01T00:12:21.277Z · LW(p) · GW(p)

I think it might be wise to not trust the stated principles of people who commit large-scale frauds.

Replies from: grant-demaree

↑ comment by Grant Demaree (grant-demaree) · 2023-01-01T00:57:55.509Z · LW(p) · GW(p)

It doesn't matter who said an idea. I'd rather just consider each idea on its own merits

Replies from: Dagon, Slider

↑ comment by Dagon · 2023-01-01T02:29:48.854Z · LW(p) · GW(p)

Unfortunately, ideas don't have many merits to consider in the pure abstract (ok, they do, but they're not the important merits. The important consideration is how well it works for decisions you are likely to face). You need to evaluate applications of ideas, as embodied by actions and consequences.

For that evaluation, the actions of people who most strongly espouse an idea are a data point to the utility of the idea.

comment by interstice · 2022-12-31T19:35:37.388Z · LW(p) · GW(p)

I disagree strongly with SBF’s answer to Tyler Cowen’s Earth doubling problem, but I don’t know what to do once the easy escapes are closed off. I’m curious if someone does.

Have a bounded utility function?

Replies from: grant-demaree

↑ comment by Grant Demaree (grant-demaree) · 2022-12-31T19:42:13.618Z · LW(p) · GW(p)

I don't think that solves it. A bounded utility function would stop you from doing infinite doublings, but it still doesn't prevent some finite number of doublings in the million-Earths case

That is, if the first round multiplies Earth a millionfold, then you just have to agree that a million Earths is at least twice as good as one Earth

Replies from: interstice

↑ comment by interstice · 2023-01-01T08:06:06.072Z · LW(p) · GW(p)

Right. But just doing a finite number of million-fold-increase bets doesn't seem so crazy to me. I think this is confounded a bit by the resources in the universe being mind-bogglingly large already, so it feels hard to imagine doubling the future utility. As a thought experiment, consider the choice between the following futures: (a) guarantee of 100 million years of flourishing human civilization, but no post-humans or leaving the solar system, (b) 50% chance extinction, 50% chance intergalactic colonization and transhumanism. To me option (b) feels more intuitively appealing.

Replies from: grant-demaree

↑ comment by Grant Demaree (grant-demaree) · 2023-01-01T19:18:34.234Z · LW(p) · GW(p)

I agree. I think this basically resolves the issue. Once you've added a bunch of caveats:

- The bet is mind-bogglingly favorable. More like the million-to-one, and less like the 51% doubling

- The bet reflects the preferences of most of the world. It's not a unilateral action

- You're very confident that the results will actually happen (we have good reason to believe that the new Earths will definitely be created)

Then it's actually fine to take the bet. At that point, our natural aversion is based on our inability to comprehend the vast scale of a million Earths. I still want to say no, but I'd probably be a yes at reflective equilibrium

Therefore... there's not much of a dilemma anymore

comment by Morpheus · 2023-01-01T02:09:46.256Z · LW(p) · GW(p)

A fractional Kelly Bet gets excellent results for the vast majority of risk preferences. I endorse using 0.2 to 0.5 Kelly to calibrate personal risk-taking in your life.

Why not normal Kelly?

Replies from: grant-demaree

↑ comment by Grant Demaree (grant-demaree) · 2023-01-01T19:29:21.697Z · LW(p) · GW(p)

Because maximizing the geometric rate of return, irrespective of the risk of ruin, doesn't reflect most people's true preferences

In the scenario above with the red and blue lines, the full Kelly has a 9.3% chance of losing at least half your money, but the .4 Kelly only has a 0.58% chance of getting an outcome at least that bad

comment by Slider · 2022-12-31T15:55:14.696Z · LW(p) · GW(p)

I do wonder whether there is a strategy that would be preferable to an individual resident. Say that after you have 4 or more coins you always take 1 coin into a safe out of which you will not further bet but only buy medicine. And then on the first loss cash out. Even if we terminate at say 54, some people survive and it is not a total loss. But going full risk-neutral when we lose, we totally lose.

Stated another way: if you make full bets, you obviously reach the state of having saved everybody faster, if you reach it at all. But given that you want to reach the state of having saved everyone, what bet pattern risks the fewest lives?

Replies from: grant-demaree

↑ comment by Grant Demaree (grant-demaree) · 2022-12-31T17:31:04.917Z · LW(p) · GW(p)

There are definitely bet patterns superior to risking everything -- but only if you're allowed to play a very large number of rounds

If the pharmacist is only willing to play 10 rounds, then there's no way to beat betting everything every round

As the number of rounds you play approaches infinity (assuming you can bet fractions of a coin), your chance of saving everyone approaches 1. But this takes a huge number of rounds. Even at 10,000 rounds, the best possible strategy only gives each person around a 1 in 20 chance of survival

comment by philh · 2023-01-04T16:18:43.066Z · LW(p) · GW(p)

If you keep playing until you’ve saved everyone or go broke, each resident gets a 0.51^10 = 1 in 840 chance of survival.

That's if you bet everything every time. If you bet 1 gold coin every time, I think you get about a 4% chance of saving everyone. (Per http://www.columbia.edu/~ks20/FE-Notes/4700-07-Notes-GR.pdf I think it would be $\frac{1 - (0.49/0.51)}{1 - (0.49/0.51)^{1024}} \approx 0.039$.) I don't think you can do better than that without fractional coins - Kelly would let you get there faster-on-average, if you start betting multiple coins when your bankroll is high enough, but I think not more reliably.
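The one-coin-at-a-time figure can be checked numerically with the standard gambler's-ruin formula. This is a minimal sketch assuming a fixed 1-coin bet each round, play that stops at 0 or 1,024 coins, and a 51% win chance; the function name is hypothetical.

```python
def prob_reach_target(start: int = 1, target: int = 1024,
                      p_win: float = 0.51) -> float:
    """Gambler's-ruin probability of reaching `target` before 0,
    betting exactly 1 unit per round."""
    q = 1 - p_win
    if p_win == q:
        # Fair coin: the chance is just the share of the target you start with.
        return start / target
    ratio = q / p_win
    return (1 - ratio ** start) / (1 - ratio ** target)


print(f"{prob_reach_target():.4f}")
```

With a target this far away the denominator is essentially 1, so the answer is governed almost entirely by the first few flips.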

The Kelly Criterion sneaks in the assumption that you want to maximize the geometric rate of return.

So, I'm not sure what the "Kelly Criterion" even is - a criterion is a means of judging something, so is the criterion "a bet of size is better than any other bet size"? Or is it "the best bet size is the one that causes ..."? Or something else? Wikipedia says the criterion is a formula, which sounds like not-a-criterion.... Without knowing what it is, I'm not sure whether I agree it sneaks in that assumption.

But to rephrase, it sounds like you're saying "the argument for Kelly rests on wanting to maximize geometric rate of return, and if you don't want to do that, Kelly might not be right for you"?

I kind of disagree [LW · GW]. To me, the biggest argument to bet Kelly is that in the sufficiently far future, with very high probability, it gives you more money than any non-Kelly bettor. Which in many situations is equivalent to maximizing geometric rate of return, but not always; and it's much more closely connected to what people care about.

It looks like with a 60% double-or-nothing, 100 rounds isn't enough to reach "very high probability" (eyeballing, the Kelly bettor has more somewhere between 60 and 80% of the time?); but what does the graph look like at 1,000 rounds, or 1,000,000? I'd guess that at 1,000 rounds, almost everyone would prefer the Kelly distribution over the .4 Kelly one; and I'd be quite surprised if that wasn't the case at 1,000,000.
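The question about longer horizons can be explored with an exact calculation. This is a sketch under an added assumption: both bettors face the same sequence of coin flips, so who is ahead depends only on the number of wins. The function names are hypothetical.

```python
import math


def log_binom_pmf(k: int, n: int, p: float) -> float:
    """Log of the Binomial(n, p) probability of exactly k wins."""
    log_comb = math.lgamma(n + 1) - math.lgamma(k + 1) - math.lgamma(n - k + 1)
    return log_comb + k * math.log(p) + (n - k) * math.log(1 - p)


def prob_full_kelly_ahead(rounds: int, p_win: float = 0.6,
                          full: float = 0.20, partial: float = 0.08) -> float:
    """P(full-Kelly bankroll > fractional-Kelly bankroll) on the same flips."""
    # Each win helps the bigger bettor; each loss hurts them more.
    gain_per_win = math.log((1 + full) / (1 + partial))
    cost_per_loss = math.log((1 - partial) / (1 - full))
    total = 0.0
    for wins in range(rounds + 1):
        if wins * gain_per_win > (rounds - wins) * cost_per_loss:
            total += math.exp(log_binom_pmf(wins, rounds, p_win))
    return total


for n in (100, 1_000):
    print(n, round(prob_full_kelly_ahead(n), 4))
```

This only measures how often full Kelly ends up ahead, not by how much, so it does not by itself settle which distribution someone would prefer.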

(In the parasite example, there is no strategy that gives you this desideratum without fractional coins. Proof: any strategy that places at least one bet has at least a 49% chance of ending up with less money than the place-no-bets strategy; but that strategy has a 51% chance of ending up with less money than the place-exactly-one-bet strategy. But also, this desideratum is different from "maximize probability of saving everyone".)

Most people also have a preference for reducing the risk of losing much of their starting capital. Betting a fraction of the Kelly Bet gives a slightly lower rate of return but a massively lower risk of ruin.

This seems to be a nonstandard definition of "ruin". Under the framework you seem to be using here, neither the Kelly nor the fractional-Kelly bettor will ever hit 0; they'll always be able to keep playing, with a chance of getting back their original capital and then some.

We could decide that "ruin" means having less than some amount of money. Assuming the starting capital is not-ruined, it may be that the Kelly bettor will always have a higher risk of ever becoming ruined than a fractional-Kelly bettor. (Certainly, it'll be higher than for the 0-Kelly bettor who never bets.) It may even be - I'm not sure about this - that at some very far future time, the Kelly bettor has a higher probability of currently being ruined. But if so it'll be a higher very small probability. And a ruined Kelly bettor will be faster on average to become un-ruined than a ruined fractional-Kelly bettor.

Practically, 0.2 to 0.5 Kelly seems to satisfy most people’s real-world preferences.

In what situations does it seem this way to you?

comment by Dagon · 2022-12-31T23:22:40.991Z · LW(p) · GW(p)

I think a missing part to a lot of these ponderings and theories is a bit of epistemic humility. There is a distinct lack of questioning of the actual likelihood of the wager's payout. Especially at extremes, it's just impossible to calibrate probabilities accurately enough to make fine-grained expectation decisions.

In regions where you have near-linear utility, and the lower bound (zero) is just part of your evaluative range, still linear (the gold coins example), you probably SHOULD be pure-EV-maximizing. In sequences where the bounds are undefined and the zero-bound is special (you stop playing), Kelly takes over.

But either way, in situations of very distant (in probability, quantity, or time) outcomes, you can't calculate closely enough to make purely-rational optimal decisions. And it's VERY reasonable to discount the distant parts more than the closer parts - not a utility discount, but a certainty discount. I don't know if the universe is perverse, but many agents are adversarial and I just don't believe a 51% coinflip in my favor is ACTUALLY getting paid out that way.

And this is magnified for short-term risks traded against long-term benefits.

I disagree strongly with SBF's answer to Tyler Cowen's Earth doubling problem, but I don't know what to do once the easy escapes are closed off. I'm curious if someone does.

I don't disagree in principle, but I don't think there's any practical way to believe the scenario. Just the evidence I'd need to believe that "creating 100 earths in noninteracting universes, conditional on a behavior inside this universe" is kind of overwhelming. Destroying this earth, I can believe much more easily (and I have well over 100x more credence that it would happen in the "lose" condition than that the payout would happen in the "win" condition).

I predict with 30% confidence that, by end of 2027, a public report on the FTX collapse by a US Government agency will identify SBF or Caroline Ellison's attitude towards risk as a necessary cause of the FTX crash and will specifically cite their writings on double-or-nothing bets.

I'd give that much less than 30%, if you mean "court or well-respected media report". If you include blogs and speculative media, then it's guaranteed to be claimed, but still pretty unlikely to be true. That attitude may be a contributing factor, or it may just be a smokescreen and not actually believed at all. But it's at best secondary to the real cause, which is criminally arrogant negligence that SBF and his company were so much smarter than everyone else and didn't have to actually pay attention or manage the risks that were keeping others from making all this obviously-easy money.

comment by avturchin · 2022-12-31T15:16:43.258Z · LW(p) · GW(p)

If MWI is true, Earth is constantly doubling, so there is no reason to "double Earth"

Replies from: Slider

↑ comment by Slider · 2022-12-31T15:32:54.474Z · LW(p) · GW(p)

It is not clear to me that two differing versions of half the amplitude means that value is going up.

Replies from: avturchin, TAG

↑ comment by avturchin · 2023-01-01T11:49:19.061Z · LW(p) · GW(p)

If this is true, then doubling the Earth's amplitude will also suffice to double the value.

And maybe we can do it by performing observations less often (if we think that measurement is what causes world splitting – there are different interpretations of MWI). In that case meditation will be really good: fewer observations, less world splitting, more amplitude in our timeline.

Replies from: Slider

↑ comment by Slider · 2023-01-01T12:01:34.896Z · LW(p) · GW(p)

Wigner's friend shows that this does not really help the external world.

With less observation, one agent can influence more amplitude but can't be specific about it. With more observation, two agents individually have less "scope" of amplitude; together they cover the same range, and they can be specific about their control.