Abstractions are not Natural

post by Alfred Harwood · 2024-11-04T11:10:09.023Z · LW · GW · 21 comments

(This was inspired by a conversation with Alex Altair [LW · GW] and other fellows as part of the agent foundations fellowship [LW · GW], funded by the LTFF)

(Also: after I had essentially finished this piece, I was pointed toward the post Natural abstractions are observer-dependent [LW · GW] which covers a lot of similar ground. I've decided to post this one anyway because it comes at things from a slightly different angle.)

Here [LW · GW] is a nice summary statement of the Natural Abstractions Hypothesis (NAH):

The Natural Abstraction Hypothesis, proposed by John Wentworth, states that there exist abstractions (relatively low-dimensional summaries which capture information relevant for prediction) which are "natural" in the sense that we should expect a wide variety of cognitive systems to converge on using them.

I think that this is not true and that whenever cognitive systems converge on using the same abstractions this is almost entirely due to similarities present in the systems themselves, rather than any fact about the world being 'naturally abstractable'.

I tried to explain my view in a conversation and didn't do a very good job, so this is a second attempt.

To start, I'll attempt to answer the following question:

Suppose we had two cognitive systems which did not share the same abstractions. Under what circumstances would we consider this a refutation of the NAH?

Systems must have similar observational apparatus

Imagine two cognitive systems observing the same view of the world with the following distinction: the first system receives its observations through a colour camera and the second system receives its observations through an otherwise identical black-and-white camera. Suppose the two systems have identical goals and we allow them both the same freedom to explore and interact with the world. After letting them do this for a while we quiz them both about their models of the world (either by asking them directly or through some interpretability techniques if the systems are neural nets). If we found that the first system had an abstraction for 'blue' but the second system did not, would we consider this evidence against the NAH? Or more specifically, would we consider this evidence that 'blue' or 'colour' were not 'natural' abstractions? Probably not, since it is obvious that the lack of a 'blue' abstraction in the second system comes from its observational apparatus, not from any feature of 'abstractions' or 'the world'.
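As a rough illustration of the camera example, here is a minimal Python sketch of how a black-and-white sensor can erase a distinction that a colour sensor preserves. The Rec. 601 luma weights are a common greyscale convention and are an assumption of this sketch, as are the two example pixel values; nothing here is taken from an actual experiment.

```python
# Rec. 601 luma weights: a common convention for converting colour to
# greyscale. Using them here is an assumption of this sketch.
LUMA_WEIGHTS = (0.299, 0.587, 0.114)


def to_greyscale(rgb):
    """Collapse an (R, G, B) pixel into a single brightness value (0-255)."""
    return round(sum(weight * channel for weight, channel in zip(LUMA_WEIGHTS, rgb)))


# Two hypothetical pixels chosen for illustration.
BLUE = (0, 0, 255)
DARK_GREY = (29, 29, 29)

# The colour camera delivers different observations for the two pixels...
distinguishable_in_colour = BLUE != DARK_GREY

# ...but the black-and-white camera maps both to the same brightness,
# so no downstream processing can recover which one was 'blue'.
distinguishable_in_grey = to_greyscale(BLUE) != to_greyscale(DARK_GREY)

print(distinguishable_in_colour, distinguishable_in_grey)
```

Whatever the second system learns, it has to learn it from observations in which these two pixels are identical, which is the sense in which the missing 'blue' abstraction comes from the apparatus rather than from the world.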

More generally, I suspect that for any abstraction formed by a system making observations of a world, one could create a system which fails to form that abstraction when observing the same world by giving it a different observational apparatus. If you are not convinced, look around you and think about how many 'natural'-seeming abstractions you would have failed to develop if you were born with senses significantly lower resolution than the ones you have. To take an extreme example, a blind deaf person who only had a sense of smell would presumably form different abstractions to a sighted person who could not smell, even if they are in the same underlying 'world'.

Examples like these, however, would (I suspect) not be taken as counterexamples to the NAH by most people. Implicit in the NAH is that for two systems to converge on abstractions they must be making observations using apparatus that is at least roughly similar, in the sense that it must allow approximately the same information to be transmitted from the environment to the system. (I'm going to use the word 'similar' loosely throughout this post.)

Systems must be interacting with similar worlds

A Sentinel Islander probably hasn't formed the abstraction of 'laptop computer', but this isn't evidence against the NAH. He hasn't interacted with anything that someone from the developed world would associate with the abstraction 'laptop computer', so it's not surprising if he doesn't converge on this abstraction. If he moved to the city, got a job as a programmer, used a laptop 10 hours a day and still didn't have the abstraction of 'laptop computer', then it would be evidence against the NAH (or at least: evidence that 'laptop computer' is not a natural abstraction).

The NAH is not 'all systems will form the same abstractions regardless of anything'; it is closer to 'if two systems are both presented with the same/similar data, then they will form the same abstractions' [1].

This means that 'two systems failing to share abstractions' is not evidence against the NAH unless both systems are interacting with similar environments.

Systems must be subject to similar selection pressure/constraints

Abstractions often come about through selection pressure and constraints. In particular, they are in some sense efficient ways of describing the world, so they are useful when computational power is limited. If a system can perfectly model every detail of the world without abstracting, then it can get away without using abstractions. Part of the reason that humans use abstractions is that our brains are finite-sized and use finite amounts of energy.

To avoid the problems discussed in previous sections, let's restrict ourselves only to situations where systems are interacting with the same environment, through the same observational apparatus.

Here's a toy environment:

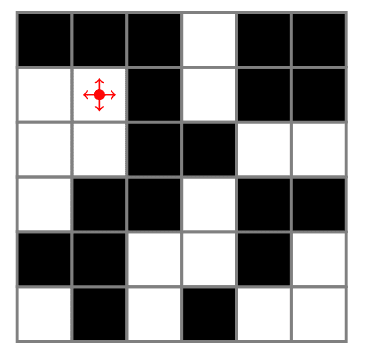

Imagine a 6-by-6 pixel environment where pixels can either be white or black. An agent can observe the full 36 pixels at once. It can move a cursor (represented by a red dot) up, down, left or right one square at a time and knows where the cursor is. Apart from moving the cursor, it can take a single action which flips the colour of the square that the cursor is currently on (from white to black or black to white). An agent will be left to its own devices for 1 million timesteps and then will be given a score equal to the number of black pixels in the top half of the environment plus the number of white pixels in the bottom half. After that, the environment is reset to a random configuration with the cursor in the top left corner and the game is played again.
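To make the rules concrete, here is a minimal Python sketch of the environment as described. The class name `PixelGame`, the action names, and the choice to keep the cursor in place when it tries to move off the edge are all assumptions of this sketch; the description above does not say what happens at the boundary.

```python
import random

SIZE = 6
WHITE, BLACK = 0, 1
# Cursor moves as (row change, column change); row 0 is the top of the grid.
MOVES = {"up": (-1, 0), "down": (1, 0), "left": (0, -1), "right": (0, 1)}


class PixelGame:
    """Toy 6-by-6 pixel environment (a sketch, not a reference implementation)."""

    def __init__(self, seed=None):
        rng = random.Random(seed)
        # Reset to a random configuration with the cursor in the top-left corner.
        self.grid = [[rng.choice((WHITE, BLACK)) for _ in range(SIZE)]
                     for _ in range(SIZE)]
        self.cursor = (0, 0)

    def step(self, action):
        row, col = self.cursor
        if action == "flip":
            # Flip the colour of the pixel under the cursor.
            self.grid[row][col] = BLACK if self.grid[row][col] == WHITE else WHITE
        else:
            d_row, d_col = MOVES[action]
            # Assumption: moving off the edge leaves the cursor where it is.
            new_row = min(max(row + d_row, 0), SIZE - 1)
            new_col = min(max(col + d_col, 0), SIZE - 1)
            self.cursor = (new_row, new_col)

    def score(self):
        # Black pixels in the top half plus white pixels in the bottom half.
        top = sum(cell == BLACK for row in self.grid[:SIZE // 2] for cell in row)
        bottom = sum(cell == WHITE for row in self.grid[SIZE // 2:] for cell in row)
        return top + bottom
```

A perfect score is 36, reached when the top three rows are entirely black and the bottom three entirely white.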

It would be fairly straightforward to train a neural net to produce a program which would navigate this game/environment and get a perfect score. Here is one way in which a program might learn to implement a perfect strategy (apologies for bad pseudocode):

    while(x):
        if cursor_position == (1,1) and pixel(1,1) == white:
            flip_colour
            move_nextpixel
        else if cursor_position == (1,1) and pixel(1,1) == black:
            move_nextpixel
        if cursor_position == (1,2) and pixel(1,2) == white:
            flip_colour
            move_nextpixel
        ...

... and so on for all 36 pixels. In words: this program has explicitly coded which action it should take in every possible situation (a strategy sometimes known as a 'lookup table'). There's nothing wrong with this strategy, except that it takes a lot of memory. If we trained a neural net using a process which selected for short programs we might end up with a program that looks like this:

    while(x):
        if cursor_position in top_half and pixel(cursor_position) == white:
            flip_colour
            move_nextpixel
        else if cursor_position in top_half and pixel(cursor_position) == black:
            move_nextpixel
        if cursor_position in bottom_half and pixel(cursor_position) == black:
            flip_colour
            move_nextpixel
        else if cursor_position in bottom_half and pixel(cursor_position) == white:
            move_nextpixel

In this code, the program is using an abstraction. Instead of enumerating all possible pixels, it 'abstracts' them into two categories, 'top_half' (where it needs pixels to be black) and 'bottom_half' (where it needs the pixels to be white), which keep the useful information about the pixels while discarding 'low level' information about the exact coordinates of each pixel (the code defining the top_half and bottom_half abstractions is omitted but hopefully you get the idea).

Now imagine we trained two ML systems on this game, one with no selection pressure to produce short programs and the other where long programs were heavily penalised. The system with no selection pressure produces a program similar to the first piece of pseudocode, where each pixel is treated individually, and the system subject to selection pressure produces a program similar to the second piece of pseudocode which makes use of the top_half/bottom_half abstraction to shorten the code. The first system fails to converge on the same abstractions as the second system.
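The contrast between the two programs can be made concrete with a small Python sketch. It is not the output of any training run: the names `LOOKUP_ROWS`, `lookup_target`, `abstract_target` and `play` are hypothetical, and the sweep over pixels stands in for the cursor movement. The point is only that both strategies reach the same final grid, while one stores 36 separate facts and the other stores a single rule about two regions.

```python
SIZE = 6

# Strategy 1: a literal lookup table with one entry per pixel ("B" = black,
# "W" = white). Nothing in it mentions 'top' or 'bottom'.
LOOKUP_ROWS = [
    "BBBBBB",
    "BBBBBB",
    "BBBBBB",
    "WWWWWW",
    "WWWWWW",
    "WWWWWW",
]
LOOKUP_TABLE = {(row, col): colour
                for row, line in enumerate(LOOKUP_ROWS)
                for col, colour in enumerate(line)}


def lookup_target(position):
    """Desired colour according to the per-pixel table."""
    return LOOKUP_TABLE[position]


def abstract_target(position):
    """Desired colour according to the top_half/bottom_half abstraction."""
    row, _ = position
    region = "top_half" if row < SIZE // 2 else "bottom_half"
    return "B" if region == "top_half" else "W"


def play(grid, target_for):
    """Visit every pixel in turn and flip any pixel with the wrong colour."""
    flips = 0
    for row in range(SIZE):
        for col in range(SIZE):
            wanted = target_for((row, col))
            if grid[row][col] != wanted:
                grid[row][col] = wanted
                flips += 1
    return flips


grid_1 = [["W"] * SIZE for _ in range(SIZE)]
grid_2 = [["W"] * SIZE for _ in range(SIZE)]
flips_1 = play(grid_1, lookup_target)
flips_2 = play(grid_2, abstract_target)
print(grid_1 == grid_2, len(LOOKUP_TABLE))
```

Behaviourally the two strategies are indistinguishable on this game; the difference lies entirely in how the strategy is represented, which is what a penalty on program length would select on.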

If we observed this, would it constitute a counterexample to the NAH? I suspect defenders of the NAH would reply 'No, the difference between the two systems comes from the difference in selection pressure. If the two systems were subject to similar selection pressures, then they would converge on the same abstractions'.

Note that 'selection pressure' also includes physical constraints on the systems, such as constraints on size, memory, processing speed etc., all of which can change the abstractions available to a system. A tarantula probably doesn't have a good abstraction for 'Bulgaria'. Even if we showed it Bulgarian history lectures and, in an unprecedented landslide victory, it was elected president of Bulgaria, it still wouldn't have a good abstraction for 'Bulgaria' (or, if it did, it probably would not be the same abstraction as humans have).

This would presumably not constitute a refutation of the NAH - it's just the case that a spider doesn't have the information-processing hardware to produce such an abstraction.

So cognitive systems must be subject to similar physical constraints and selection pressures in order to converge on similar abstractions. To recap, our current version of the NAH states that two cognitive systems will converge on the same abstractions if

- they have similar observational apparatus...

- ... and are interacting with similar environments...

- ...and are subject to similar selection pressures and physical constraints.

Systems must have similar utility functions [2]

One reason to form certain abstractions is because they are useful for achieving one's goals/maximizing one's utility function. Mice presumably have an abstraction for 'the kind of vibrations a cat makes when it is approaching me from behind' because such an abstraction is useful for fulfilling their (sub)goal of 'not being eaten by a cat'. Honeybees have an abstraction of 'the dance that another bee does to indicate that there are nectar-bearing flowers 40m away' because it is useful for their (sub)goal of 'collecting nectar'.

Humans do not naturally converge on these abstractions because they are not useful to us. Does this provide evidence against the NAH? I'm guessing, but NAH advocates might say something like 'but humans can learn both of these abstractions quite easily; just by hearing a description of the honeybee dance, humans can acquire the abstraction that the bees have. This is actually evidence in favour of the NAH - a human can easily converge on the same abstraction as a honeybee, which is a completely different type of cognitive system'.

My response to this would be that I will only converge on the same abstractions as honeybees if my utility function explicitly has a term which values 'understanding honeybee abstractions'. And even for humans who place great value on understanding honeybee abstractions, it still took years of study to understand honeybee dances.

Is the NAH saying 'two cognitive systems will converge on the same set of abstractions, provided that one of the systems explicitly values and works towards understanding the abstractions the other system is using'? If so, I wish people would stop saying that the NAH says that systems will 'naturally converge' or using other suggestive language which implies that systems will share abstractions by default without explicitly aiming to converge.

The honeybee example is a bit tricky because, on top of having different utility functions, honeybees also have different observational apparatus, and are subject to different selection pressures and constraints on their processing power. To get rid of these confounders, here is an example where systems are the same in every respect except for their utility functions, and this leads them to develop different abstractions.

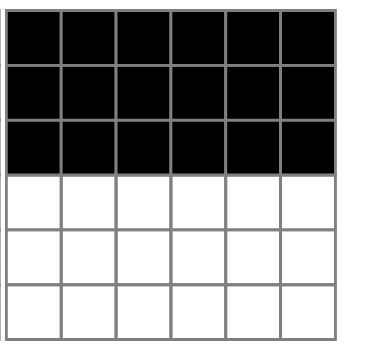

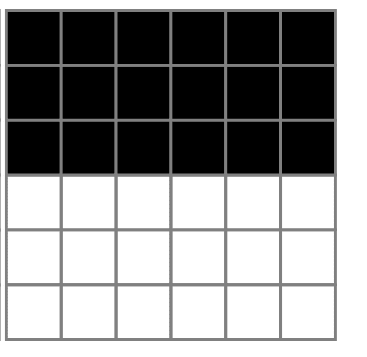

Consider the environment of the pixel game from the previous section. Suppose there are two systems playing this game, which are identical in all respects except their utility functions. They observe the same world in the same way, and they are both subject to the same constraints, including the constraints that their program for navigating the world must be 'small' (ie. it cannot just be a lookup table for every possible situation). But they have different utility functions. System A uses a utility function , which gives one point for every pixel in the top half of the environment which ends up black and one point for every pixel in the bottom half of the environment which ends up white.

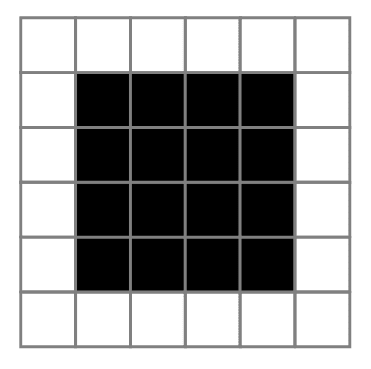

System B on the other hand uses a utility function , which gives one point for every pixel on the outer rim of the environment which ends up white and one point for every pixel in the inner 4x4 square which ends up black.

The two systems are then allowed to explore the environment and learn strategies which maximize their utility functions while subjected to the same constraints. I suspect that the system using would end up with abstractions for the 'top half' and 'bottom half' of the environment while the system using would not end up with these abstractions, because they are not useful for achieving On the other hand, system B would end up with an abstraction corresponding to 'middle square' and 'outer rim' because these abstractions are useful for achieving .

So two systems require similar utility functions in order to converge on similar abstractions.
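One way to make 'useful for achieving a utility function' checkable in this toy setting is to ask whether an abstraction's label alone determines which colour is rewarded. The Python sketch below does this for the two scoring rules described above. The function names (`target_a`, `target_b`, `half`, `ring`, `is_sufficient`) and the sufficiency criterion itself are assumptions of this sketch, not something taken from the natural abstractions literature.

```python
SIZE = 6
PIXELS = [(row, col) for row in range(SIZE) for col in range(SIZE)]


def target_a(pixel):
    """Rewarded colour under system A's utility: top half black, bottom half white."""
    row, _ = pixel
    return "black" if row < SIZE // 2 else "white"


def target_b(pixel):
    """Rewarded colour under system B's utility: outer rim white, inner 4x4 black."""
    row, col = pixel
    on_rim = row in (0, SIZE - 1) or col in (0, SIZE - 1)
    return "white" if on_rim else "black"


def half(pixel):
    """Candidate abstraction: which half of the grid the pixel is in."""
    return "top_half" if pixel[0] < SIZE // 2 else "bottom_half"


def ring(pixel):
    """Candidate abstraction: outer rim versus middle square."""
    row, col = pixel
    on_rim = row in (0, SIZE - 1) or col in (0, SIZE - 1)
    return "outer_rim" if on_rim else "middle_square"


def is_sufficient(abstraction, target):
    """True if knowing only the abstract label fixes the rewarded colour."""
    colours_by_label = {}
    for pixel in PIXELS:
        colours_by_label.setdefault(abstraction(pixel), set()).add(target(pixel))
    return all(len(colours) == 1 for colours in colours_by_label.values())


for name, abstraction in (("half", half), ("ring", ring)):
    print(name, is_sufficient(abstraction, target_a), is_sufficient(abstraction, target_b))
```

Under this criterion each abstraction is sufficient for exactly one of the two utilities, so a system under pressure to keep its program small has a reason to adopt one and no reason to adopt the other.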

(Side note: utility functions are similar to selection pressure in that we can describe 'having a utility function U' as a selection pressure 'selecting for strategies/cognitive representations which result in U being maximized'. I think that utility functions merit their own section for clarity but I wouldn't be mad if someone wanted to bundle them up in the previous section.)

Moving forward with the Natural Abstraction Hypothesis

If we don't want the NAH to be refuted by one of the counterexamples in the above sections, I will tentatively suggest some (not mutually exclusive) options for moving forward with the NAH.

Option 1

First, we could add lots of caveats and change the statement of the NAH. Recall the informal statement used at the start of this post:

there exist abstractions ... which are "natural" in the sense that we should expect a wide variety of cognitive systems to converge on using them

We would have to modify this to something like:

there exist abstractions ... which are "natural" in the sense that we should expect a wide variety of cognitive systems to converge on using them provided that those cognitive systems:

- have similar observational apparatus,

- and are interacting with similar environments,

- and are subject to similar physical constraints and selection pressures,

- and have similar utility functions.

This is fine, I guess, but it seems to me that we're stretching the use of the phrase 'wide variety of cognitive systems' if we then put all of these constraints on the kinds of systems to which our statement applies.

This statement of the NAH is also dangerously close to what I would call the 'Trivial Abstractions Hypothesis' (TAH):

there exist abstractions ... which are "natural" in the sense that we should expect a wide variety of cognitive systems to converge on using them provided that those cognitive systems:

- are exactly the same in every respect.

which is not a very interesting hypothesis!

Option 2

The other way to salvage the NAH is to home in on the phrase 'there exist abstractions'. One could claim that none of the counterexamples I gave in this post are 'true' natural abstractions. The hypothesis is just that some such abstractions exist. If someone takes this view, I would be interested to know: what are these abstractions? Are there any such abstractions which survive changes in observational apparatus/selection pressure/utility function?

If I was in a combative mood [3] I would say something like: show me any abstraction and I will create a cognitive system which will not hold this abstraction by tweaking one of the conditions (observational apparatus/utility function etc.) I described above.

Option 3

Alternatively, one could properly quantify what 'wide variety' and 'similar' actually mean in a way which gels with the original spirit of the NAH. When I suggested that different utility functions lead to different abstractions, Alex made the following suggestion for reconciling this with the NAH (not an exact quote or fully fleshed-out). He suggested that for two systems, you could make small changes to their utility functions and this would only change the abstractions the systems adopted minimally, but to change the abstractions in a large way would require utility functions to be exponentially different. Of course, we would need to be specific about how we quantify the similarity of two sets of abstractions and the similarity of two utility functions, but this provides a sense in which a set of abstractions could be 'natural' while still allowing different systems to have different abstractions. One can always come up with a super weird utility function that induces strange abstractions in systems which hold it, but this would in some sense be contrived and complex and 'unnatural'. Something similar could also be done in terms of quantifying other ways in which systems need to be 'similar' in order to share the same abstractions.
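As a sketch of what 'being specific' could look like in the pixel game, the Python below picks one possible pair of measures. Both are assumptions made for illustration: the distance between two utility functions is the number of pixels whose rewarded colour differs, and the abstraction induced by a utility is taken to be the partition of pixels by rewarded colour, compared using the fraction of pixel pairs that one partition groups together and the other separates (a Rand-style distance). This toy says nothing about whether the relationship is exponential; it only shows how such a claim could be measured.

```python
from itertools import combinations

SIZE = 6
PIXELS = [(row, col) for row in range(SIZE) for col in range(SIZE)]


def top_bottom_targets():
    """Rewarded colour per pixel: top half black, bottom half white."""
    return {(r, c): ("black" if r < SIZE // 2 else "white") for r, c in PIXELS}


def rim_inner_targets():
    """Rewarded colour per pixel: outer rim white, inner 4x4 square black."""
    edge = (0, SIZE - 1)
    return {(r, c): ("white" if r in edge or c in edge else "black")
            for r, c in PIXELS}


def utility_distance(targets_1, targets_2):
    """Number of pixels on which the two utilities reward different colours."""
    return sum(targets_1[p] != targets_2[p] for p in PIXELS)


def abstraction_distance(targets_1, targets_2):
    """Fraction of pixel pairs grouped together by one partition but not the other."""
    pairs = list(combinations(PIXELS, 2))
    disagreements = sum(
        (targets_1[p] == targets_1[q]) != (targets_2[p] == targets_2[q])
        for p, q in pairs
    )
    return disagreements / len(pairs)


utility_a = top_bottom_targets()
utility_b = rim_inner_targets()

# A small perturbation of the first utility: one corner pixel now rewards white.
utility_a_tweaked = dict(utility_a)
utility_a_tweaked[(0, 0)] = "white"

for label, other in (("tweaked", utility_a_tweaked), ("rim/inner", utility_b)):
    print(label, utility_distance(utility_a, other),
          abstraction_distance(utility_a, other))
```

With these particular measures, the one-pixel tweak moves the induced partition only slightly, while the rim/inner utility moves it a long way. Other choices of measure are possible and might give a different picture.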

Has anyone done any work like this? In my initial foray into the natural abstractions literature I haven't seen anything similar to this but maybe it's there and I missed it. It seems promising!

Option 4

This option is to claim that the universe abstracts well, in a way that suggests some 'objective' set of abstractions but hold back on the claim that most systems will converge on this objective set of abstractions. As far as I can tell, a lot of the technical work on Natural Abstractions (such as work on Koopman-Pitman-Darmois) falls under this category.

There might be some objective sense in which general relativity is the correct way of thinking about gravity, but that doesn't mean all intelligent agents will converge on believing it. For example, an agent might just not care about understanding gravity, or be born before the prerequisite math was developed, or be born with a brain that struggles with the math required, or only be interested in pursuing goals which can be modelled entirely using Newtonian mechanics. Similarly, there might be an 'objective' set of abstractions present in the natural world, but this doesn't automatically mean that all cognitive systems will converge on using this set of abstractions.

If you wanted to go further and prove that a wide variety of systems will use this set of abstractions, you would then need to do an additional piece of work which proves that the objective set of abstractions is also the 'most useful' in some operational sense.

As an example of work in this direction, one could try proving that, unless a utility function is in terms of this objective/natural set of abstractions, it is not possible to maximize it (or at least: it is not possible to maximize it any better than some 'random' strategy). Under this view, it would still be possible to create an agent with a utility function which cared about 'unnatural' abstractions but this agent would not be successful at achieving its goals. We could then prove something like a selection theorem along the lines of 'if an agent is actually successful in achieving its goals, it must be using a certain set of abstractions and we must be able to frame those goals in terms of this set of abstractions'.

This sounds interesting to me. I would be interested to hear if there is any work that has already been done along these lines!

- ^ [1]

- ^ [2] I'm going to use 'utility function' in a very loose sense here, interchangeable with 'goal'/'reward function'/'objective'. I don't think this matters for the meat of the argument.

- ^ [3] Don't worry, I'm not!

21 comments

Comments sorted by top scores.

comment by johnswentworth · 2024-11-04T17:33:03.173Z · LW(p) · GW(p)

Walking through your first four sections (out of order):

- Systems definitely need to be interacting with mostly-the-same environment in order for convergence to kick in. Insofar as systems are selected on different environments and end up using different abstractions as a result, that doesn't say much about NAH.

- Systems do not need to have similar observational apparatus, but the more different the observational apparatus the more I'd expect that convergence requires relatively-high capabilities. For instance: humans can't see infrared/UV/microwave/radio, but as human capabilities increased all of those became useful abstractions for us.

- Systems do not need to be subject to similar selection pressures/constraints or have similar utility functions; a lack of convergence among different pressures/constraints/utility is one of the most canonical things which would falsify NAH. That said, the pressures/constraints/utility (along with the environment) do need to incentivize fairly general-purpose capabilities, and the system needs to actually achieve those capabilities.

More general comment: the NAH says that there's a specific, discrete set of abstractions in any given environment which are "natural" for agents interacting with that environment. The reason that "general-purpose capabilities" are relevant in the above is that full generality and capability requires being able to use ~all those natural abstractions (possibly picking them up on the fly, sometimes). But a narrower or less-capable agent will still typically use some subset of those natural abstractions, and factors like e.g. similar observational apparatus or similar pressures/utility will tend to push for more similar subsets among weaker agents. Even in that regime, nontrivial NAH predictions come from the discreteness of the set of natural abstractions; we don't expect to find agents e.g. using a continuum of abstractions.

↑ comment by Alfred Harwood · 2024-11-05T14:23:47.917Z · LW(p) · GW(p)

Thanks for taking the time to explain this. This clears a lot of things up.

Let me see if I understand. So one reason that an agent might develop an abstraction is that it has a utility function that deals with that abstraction (if my utility function is ‘maximize the number of trees’, it's helpful to have an abstraction for ‘trees’). But the NAH goes further than this and says that, even if an agent had a very ‘unnatural’ utility function which didn’t deal with abstractions (e.g. it was something very fine-grained like ‘I value this atom being in this exact position and this atom being in a different position etc…’) it would still, for instrumental reasons, end up using the ‘natural’ set of abstractions because the natural abstractions are in some sense the only ‘proper’ set of abstractions for interacting with the world. Similarly, while there might be perceptual systems/brains/etc. which favour using certain unnatural abstractions, once agents become capable enough to start pursuing complex goals (or rather goals requiring a high level of generality), the universe will force them to use the natural abstractions (or else fail to achieve their goals). Does this sound right?

Presumably it's possible to define some ‘unnatural’ abstractions. Would the argument be that unnatural abstractions are just in practice not useful, or is it that the universe is such that it's ~impossible to model the world using unnatural abstractions?

↑ comment by johnswentworth · 2024-11-05T17:11:06.955Z · LW(p) · GW(p)

All dead-on up until this:

... the universe will force them to use the natural abstractions (or else fail to achieve their goals). [...] Would the argument be that unnatural abstractions are just in practice not useful, or is it that the universe is such that it's ~impossible to model the world using unnatural abstractions?

It's not quite that it's impossible to model the world without the use of natural abstractions. Rather, it's instrumentally far "cheaper" to use the natural abstractions (in some sense). Rather than routing through natural abstractions, a system with a highly capable world model could instead e.g. use exponentially large amounts of compute (e.g. doing full quantum-level simulation), or might need enormous amounts of data (e.g. exponentially many training cycles), or both. So we expect to see basically-all highly capable systems use natural abstractions in practice.

↑ comment by Raemon · 2024-11-07T06:58:57.812Z · LW(p) · GW(p)

I'm assuming "natural abstraction" is also a scalar property. Reading this paragraph, I refactored the concept in my mind to "some abstractions tend to be cheaper to abstract than others. agents will converge to using cheaper abstractions. Many cheapness properties generalize reasonably well across agents/observation-systems/environments, but, all of those could in theory come apart."

And the Strong NAH would be "cheap-to-abstract-ness will be very punctuated, or something" (i.e. you might expect less of a smooth gradient of cheapnesses across abstractions)

↑ comment by johnswentworth · 2024-11-07T17:49:49.764Z · LW(p) · GW(p)

The way I think of it, it's not quite that some abstractions are cheaper to use than others, but rather:

- One can in-principle reason at the "low(er) level", i.e. just not use any given abstraction. That reasoning is correct but costly.

- One can also just be wrong, e.g. use an abstraction which doesn't actually match the world and/or one's own lower level model. Then predictions will be wrong, actions will be suboptimal, etc.

- Reasoning which is both cheap and correct routes through natural abstractions. There are some degrees of freedom insofar as a given system could use some natural abstractions but not others, or be wrong about some things but not others.

↑ comment by Alfred Harwood · 2024-11-06T22:12:35.739Z · LW(p) · GW(p)

Got it, that makes sense. I think I was trying to get at something like this when I was talking about constraints/selection pressure (a system has less need to use abstractions if its compute is unconstrained or there is no selection pressure in the 'produce short/quick programs' direction) but your explanation makes this clearer. Thanks again for clearing this up!

comment by Jonas Hallgren · 2024-11-04T14:44:02.619Z · LW(p) · GW(p)

Okay, what I'm picking up here is that you feel that the natural abstractions hypothesis is quite trivial and that it seems like it is naively trying to say something about how cognition works similar to how physics works. Yet this is obviously not true: since development in humans and other animals clearly happens in different ways, why would their mental representations converge? (Do correct me if I misunderstood.)

Firstly, there's something called the good regulator theorem in cybernetics and our boy that you're talking about, Mr Wentworth, has a post on making it better [LW · GW] that might be useful for you to understand some of the foundations of what he's thinking about.

Okay, why is this useful preamble? Well, if there's convergence in useful ways of describing a system then there's likely some degree of internal convergence in the mind of the agent observing the problem. Essentially this is what the regulator theorem is about (imo)

So when it comes to the theory, the heavy lifting here is actually not really done by the Natural Abstractions Hypothesis part (that is, the convergence part) but rather by the Redundant Information Hypothesis [LW · GW].

It is proving things about the distribution of environments, as well as power laws in reality, that forms the foundation of the theory, rather than just stating that "minds will converge".

This is at least my understanding of NAH, does that make sense or what do you think about that?

↑ comment by Alfred Harwood · 2024-11-04T16:53:36.465Z · LW(p) · GW(p)

Thanks for taking the time to explain this to me! I would like to read your links before responding to the meat of your comment, but I wanted to note something before going forward because there is a pattern I've noticed in both my verbal conversations on this subject and the comments so far.

I say something like 'lots of systems don't seem to converge on the same abstractions' and then someone else says 'yeah, I agree obviously' and then starts talking about another feature of the NAH while not taking this as evidence against the NAH.

But most posts on the NAH explicitly mention something like the claim that many systems will converge on similar abstractions [1]. I find this really confusing!

Going forward it might be useful to taboo the phrase 'the Natural Abstraction Hypothesis' (?) and just discuss what we think is true about the world.

Your comment that it's a claim about 'proving things about the distribution of environments' is helpful. To help me understand what people mean by the NAH, could you tell me what would (in your view) constitute strong evidence against the NAH? (If the fact that we can point to systems which haven't converged on using the same abstractions doesn't count.)

- ^

Natural Abstractions: Key Claims, Theorems and Critiques [LW · GW]: 'many cognitive systems learn similar abstractions',

Testing the Natural Abstraction Hypothesis: Project Intro [LW · GW]: 'a wide variety of cognitive architectures will learn to use approximately the same high-level abstract objects/concepts to reason about the world',

The Natural Abstraction Hypothesis: Implications and Evidence [LW · GW]: 'there exist abstractions (relatively low-dimensional summaries which capture information relevant for prediction) which are "natural" in the sense that we should expect a wide variety of cognitive systems to converge on using them.'

↑ comment by Jonas Hallgren · 2024-11-04T17:15:26.362Z · LW(p) · GW(p)

But, to help me understand what people mean by the NAH could you tell me what would (in your view) constitute strong evidence against the NAH? (If the fact that we can point to systems which haven't converged on using the same abstractions doesn't count)

Yes sir!

So for me it is about looking at a specific type of system or a specific type of system dynamics that encodes the axioms required for the NAH to be true.

So, it is more the claim that "there is a specific set of mathematical axioms that can be used in order to get convergence towards similar ontologies and these are applicable in AI systems."

For example, if one takes the Active Inference lens on looking at concepts in the world, we generally define the boundaries between concepts as Markov blankets. Surprisingly or not, Markov blankets are pretty great for describing not only biological systems but also AI and some economic systems. The key underlying invariant is that these are all optimisation systems.

p(NAH|Optimisation System).

So if we, for example, with the perspective of Markov blankets or the "natural latents [LW · GW]" (which are functionals that work like Markov blankets), don't see convergence in how different AI systems represent reality, then I would say that the NAH has been disproven or that it is evidence against it.

I do however think that this exists on a spectrum and that it isn't fully true or false, it is true for a restricted set of assumptions, the question being how restricted that is.

I see it more as a useful frame of viewing agent cognition processes rather than something I'm willing to bet my life on. I do think it is pointing towards a core problem similar to what ARC Theory are working on but in a different way, understanding cognition of AI systems.

comment by yrimon (yehuda-rimon) · 2024-11-04T12:16:23.741Z · LW(p) · GW(p)

provided that those cognitive systems:

- have similar observational apparatus,

- and are interacting with similar environment,

- and are subject to similar physical constraints and selection pressures,

- and have similar utility functions.

The fact of the matter is that humans communicate. They learn to communicate on the basis of some combination of their internal similarities (in terms of goals and perception) and their shared environment. The natural abstraction hypothesis says that the shared environment accounts for more rather than less of it. I think of the NAH as a result of instrumental convergence - the shared environment ends up having a small number of levers that control a lot of the long term conditions in the environment, so the (instrumental) utility functions and environmental pressures are similar for beings with long term goals - they want to control the levers. The claim then is exactly that a shared environment provides most of the above.

Additionally, the operative question is what exactly it means for an LLM to be alien to us: does it converge to using enough human concepts for us to understand it, and if so, how quickly?

comment by Martin Randall (martin-randall) · 2024-11-14T02:16:15.862Z · LW(p) · GW(p)

I appreciate the clarity of the pixel game as a concrete thought experiment. Its clarity makes it easier for me to see where I disagree with your understanding of the Natural Abstraction Hypothesis.

The Natural Abstraction Hypothesis is about the abstractions available in Nature, that is to say, the environment. So we have to decide where to draw the boundary around Nature. Options:

- Nature is just the pixel game itself (Cartesian)

- Nature is the pixel game and the agent(s) flipping pixels (Embedded)

- Nature is the pixel game and the utility function(s) but not the decision algorithms (Hybrid)

In the Cartesian frame, none of "top half", "bottom half", "outer rim", and "middle square" are Natural Abstractions, because they're not in Nature, they're in the utility functions.

In the Hybrid and Embedded frames, when System A is playing the game, then "top half" and "bottom half" are Natural Abstractions, but "outer rim" and "middle square" are not. The opposite is true when System B is playing the game.
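One way to make the three frames concrete is to treat Nature as a set of components and call an abstraction Natural when the component it lives in falls inside that set. The sketch below is only an illustration of that reading: the names `ABSTRACTION_SOURCE`, `nature`, and `natural_abstractions` are hypothetical, and the assignment of "top half"/"bottom half" to System A's utility function and "outer rim"/"middle square" to System B's is an assumption taken from the description above.

```python
# Hypothetical encoding of the three frames; all names are illustrative.
# Assumption: each abstraction "lives in" the utility function of one system.
ABSTRACTION_SOURCE = {
    "top half": "utility_A",
    "bottom half": "utility_A",
    "outer rim": "utility_B",
    "middle square": "utility_B",
}

FRAMES = ("cartesian", "hybrid", "embedded")


def nature(frame, players):
    """Return the set of components that count as Nature under a frame."""
    if frame not in FRAMES:
        raise ValueError(f"unknown frame: {frame}")
    parts = {"pixel_game"}  # the game itself is always part of Nature
    if frame == "cartesian":
        return parts  # agents and their utilities are outside Nature
    for p in players:
        parts.add(f"utility_{p}")  # Hybrid and Embedded include utilities
        if frame == "embedded":
            parts.add(f"decision_algorithm_{p}")  # Embedded adds the agent
    return parts


def natural_abstractions(frame, players):
    """Abstractions whose source component lies inside Nature."""
    parts = nature(frame, players)
    return {name for name, src in ABSTRACTION_SOURCE.items() if src in parts}


print(natural_abstractions("cartesian", ["A"]))
print(natural_abstractions("hybrid", ["A"]))
print(natural_abstractions("embedded", ["B"]))
```

Under these assumptions the Cartesian call returns an empty set, while the Hybrid and Embedded calls return only the abstractions tied to whichever system is playing.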

Let's make this a multi-player game, and have both systems playing on the same board. In that case all of "top half", "bottom half", "outer rim", and "middle square" are Natural Abstractions. We expect system A to learn "outer rim" and "middle square" as it needs to predict the actions of system B, at least given sufficient learning capabilities. I think this is a clean counter-example to your claim:

Two systems require similar utility functions in order to converge on similar abstractions.

Replies from: martin-randall

↑ comment by Martin Randall (martin-randall) · 2024-11-14T03:49:43.770Z · LW(p) · GW(p)

Let's expand on this line of argument and look at your example of bee waggle-dances. You question whether the abstractions represented by the various dances are natural. I agree! Using a Cartesian frame that treats bees and humans as separate agents, not part of Nature, they are not Natural Abstractions. With an Embedded frame they are a Natural Abstraction for anyone seeking to understand bees, but in a trivial way. As you say, "one of the systems explicitly values and works towards understanding the abstractions the other system is using".

Also, the meter is not a natural abstraction, which we can see by observing other cultures using yards, cubits, and stadia. If we re-ran cultural evolution, we'd expect to see different measurements of distance chosen. The Natural Abstraction isn't the meter, it's Distance. Related concepts like relative distance are also Natural Abstractions. If we re-ran cultural evolution, we would still think that trees are taller than grass.

I'm not a bee expert, but Wikipedia says:

In the case of Apis mellifera ligustica, the round dance is performed until the resource is about 10 meters away from the hive, transitional dances are performed when the resource is at a distance of 20 to 30 meters away from the hive, and finally, when it is located at distances greater than 40 meters from the hive, the waggle dance is performed

The dance doesn't actually mean "greater than 40 meters", because bees don't use the metric system. There is some distance, the Waggle Distance, where bees switch from a transitional dance to a waggle dance. Claude says, with low confidence, that the Waggle Distance varies based on energy expenditure. In strong winds, the Waggle Distance goes down.

Humans also have ways of communicating energy expenditure or effort. I don't know enough about bees or humans to know if there is a shared abstraction of Effort here. It may be that the Waggle Distance is bee-specific. And that's an important limitation on the NAH: it says, as you quote, "there exist abstractions which are natural", but I think we should also believe the Artificial Abstraction Hypothesis (AAH), which says that there exist abstractions which are not natural.

This confusion is on display in the discussion around My AI Model Delta Compared To Yudkowsky [LW · GW], where Yudkowsky is quoted as apparently rejecting the NAH:

The AI does not think like you do, the AI doesn't have thoughts built up from the same concepts you use, it is utterly alien on a staggering scale. Nobody knows what the hell GPT-3 is thinking, not only because the matrices are opaque, but because the stuff within that opaque container is, very likely, incredibly alien - nothing that would translate well into comprehensible human thinking, even if we could see past the giant wall of floating-point numbers to what lay behind.

But then in a comment on that post he appears to partially endorse the NAH:

I think that the AI's internal ontology is liable to have some noticeable alignments to human ontology w/r/t the purely predictive aspects of the natural world; it wouldn't surprise me to find distinct thoughts in there about electrons.

But also endorses the AAH:

As the internal ontology takes on any reflective aspects, parts of the representation that mix with facts about the AI's internals, I expect to find much larger differences -- not just that the AI has a different concept boundary around "easy to understand", say, but that it maybe doesn't have any such internal notion as "easy to understand" at all, because easiness isn't in the environment and the AI doesn't have any such thing as "effort".

comment by deepthoughtlife · 2024-11-04T19:35:25.661Z · LW(p) · GW(p)

Honestly, this post seems very confused to me. You are clearly thinking about this in an unproductive manner. (Also a bit overtly hostile.)

The idea that there are no natural abstractions is deeply silly. To gesture at a brief proof, consider the counting numbers '1' '2' '3' '4' etc as applied to objects. There is no doubt these are natural abstractions. See also 'on land', 'underwater', 'in the sky' etc. Others include things like 'empty' vs 'full' vs 'partially full and partially empty' as well as 'bigger', 'smaller', 'lighter', 'heavier' etc.

The utility functions (not that we actually have them) obviously don't need to be related. The domain of the abstraction does need to be one that the intelligence is actually trying to abstract, but in many cases, that is literally all that is required for the natural abstraction to be eventually discovered with enough time. It is 'natural abstraction' not 'that thing everyone already knows'.

I don't need to care about honeybees to understand the abstractions around them 'dancing' to 'communicate' the 'location' of 'food' because we already abstracted all those things naturally despite very different data. Also, honeybees aren't actually smart enough to be doing abstraction, they naturally do these things which match the abstractions because the process which made honeybees settled on them too (despite not even being intelligent and not actually abstracting things either).

Replies from: Alfred Harwood, papetoast

↑ comment by Alfred Harwood · 2024-11-04T23:24:14.741Z · LW(p) · GW(p)

It's late where I am now so I'm going to read carefully and respond to comments tomorrow, but before I go to bed I want to quickly respond to your claim that you found the post hostile because I don't want to leave it hanging.

I wanted to express my disagreements/misunderstandings/whatever as clearly as I could but had no intention to express hostility. I bear no hostility towards anyone reading this, especially people who have worked hard thinking about important issues like AI alignment. Apologies to you and anyone else who found the post hostile.

Replies from: Raemon, deepthoughtlife, lelapin

↑ comment by deepthoughtlife · 2024-11-06T05:29:50.179Z · LW(p) · GW(p)

When reading the piece, it seemed to assume far too much (and many of the assumptions are ones I obviously disagree with). I would call many of the assumptions made a relative of the false dichotomy (though I don't know what it is called when you present more than two possibilities as exhaustive but they really aren't). If you were more open in your writing to the idea that you don't necessarily know what the believers in natural abstractions mean, and that the possibilities mentioned were not exhaustive, I probably would have had a less negative reaction.

When combined with a dismissive tone, many (me included) will read it as hostile, regardless of actual intent (though frustration is actually just as good a possibility for why someone would write in that manner, and genuine confusion over what people believe is also likely). People are always on the lookout for potential hostility it seems (probably a safety related instinct) and usually err on the side of seeing it (though some overcorrect against the instinct instead).

I'm sure I come across as hostile reasonably often when I write, though that is rarely my intent.

↑ comment by Jonathan Claybrough (lelapin) · 2024-11-05T19:46:42.343Z · LW(p) · GW(p)

I don't actually think your post was hostile, but I think I get where deepthoughtlife is coming from. At the least, I can share how I felt reading this post and point out why, since you seem keen on avoiding the negative side. Btw I don't think you can avoid causing any frustration in readers, they are too diverse, so don't worry too much about it either.

The title of the piece is strongly worded and there's no epistemic status disclaimer to state this is exploratory, so I actually came in expecting much stronger arguments. Your post is good as an exposition of your thoughts and a conversation starter, but it's not a good counterargument to NAH imo, so shouldn't be worded as such. Like deepthoughtlife, I feel your post is confused re NAH, which is totally fine when stated as such, but a bit grating when I came in expecting more rigor or knowledge of NAH.

Here's a reaction to the first part :

- in "Systems must have similar observational apparatus" you argue that different apparatus lead to different abstractions and claim a blind deaf person is such an example, yet in practice blind deaf people can manipulate all the abstractions others can (with perhaps a different inner representation). That's what general intelligence is about. You can check out this wiki page and video for some of how it's done: https://en.wikipedia.org/wiki/Tadoma . The point is that all the abstractions can be understood and must be understood by a general intelligence trying to act effectively, and in practice Helen Keller could learn to speak by using senses other than hearing, in the same way we learn all of physics despite limited native instruments.

I think I had similar reactions to other parts, feeling they were missing the point about NAH and some background assumptions.

Thanks for posting!

↑ comment by Martin Randall (martin-randall) · 2024-11-14T02:49:49.940Z · LW(p) · GW(p)

I appreciate the brevity of the title as it stands. It's normal for a title to summarize the thesis of a post or paper and this is also standard practice on LessWrong. For example:

- The sun is big but superintelligences will not spare the Earth a little sunlight [LW · GW].

- The point of trade [LW · GW]

- There's no fire alarm for AGI [LW · GW]

The introductory paragraphs sufficiently described the epistemic status of the author for my purposes. Overall, I found the post easier to engage with because it made its arguments without hedging.

↑ comment by Alfred Harwood · 2024-11-06T22:24:25.947Z · LW(p) · GW(p)

Your reaction seems fair, thanks for your thoughts! It's a good suggestion to add an epistemic status - I'll be sure to add one next time I write something like this.

↑ comment by papetoast · 2024-11-07T06:14:38.512Z · LW(p) · GW(p)

Agreed on the examples of natural abstractions. I held a couple abstraction examples in my mind (e.g. atom, food, agent) while reading the post and found that it never really managed to attack these truly very general (dare I say natural) abstractions.

Replies from: deepthoughtlife

↑ comment by deepthoughtlife · 2024-11-08T05:25:27.869Z · LW(p) · GW(p)

The good thing about existence proofs is that you really just have to find an example. Sometimes, I can do that.