All About Concave and Convex Agents

post by mako yass (MakoYass) · 2024-03-24T21:37:17.922Z · 24 comments


An entry-level characterization of some types of guy in decision theory, and in real life, interspersed with short stories about them

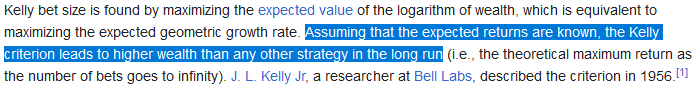

A concave function bends down. A convex function bends up. A linear function does neither.

A utility function is just a function that says how good different outcomes are. It describes an agent's preferences. Different agents have different utility functions.

Usually, a utility function assigns scores to outcomes or histories, but in this article we'll define a sort of utility function that takes the quantity of resources the agent has control over and says how good an outcome the agent could attain using that quantity of resources.

In that sense, a concave agent values resources less the more that it has, eventually barely wanting more resources at all, while a convex agent wants more resources the more it has. But that's a rough and incomplete understanding, and I'm not sure this turns out to be a meaningful claim without talking about expected values, so let's continue.

Humans generally have mostly concave utility functions in this sense. Money is more important to someone who has less of it.
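To make the three shapes concrete, here is a minimal sketch comparing how much one extra unit of resources is worth at different starting levels. The functions are illustrative assumptions, not taken from the post: a square root stands in for a concave agent, the identity for a linear one, and `2 ** r` for an exponential (convex) agent. The helper name `marginal_gains` is hypothetical.

```python
import math

# Illustrative resource-utility functions (hypothetical shapes, not from the post).
def concave(r: float) -> float:
    return math.sqrt(r)   # each extra unit is worth less than the last

def linear(r: float) -> float:
    return r              # each extra unit is worth the same

def convex(r: float) -> float:
    return 2.0 ** r       # exponential agent: utility doubles with each extra unit

def marginal_gains(u, levels):
    """Utility gained from one extra unit of resources, starting at each level."""
    return [u(r + 1) - u(r) for r in levels]

levels = [1, 4, 9, 16]
for name, u in [("concave", concave), ("linear", linear), ("convex", convex)]:
    print(name, [round(g, 3) for g in marginal_gains(u, levels)])
```

For the concave function the gain from an extra unit shrinks as resources grow, for the linear one it stays fixed, and for the convex one it grows.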

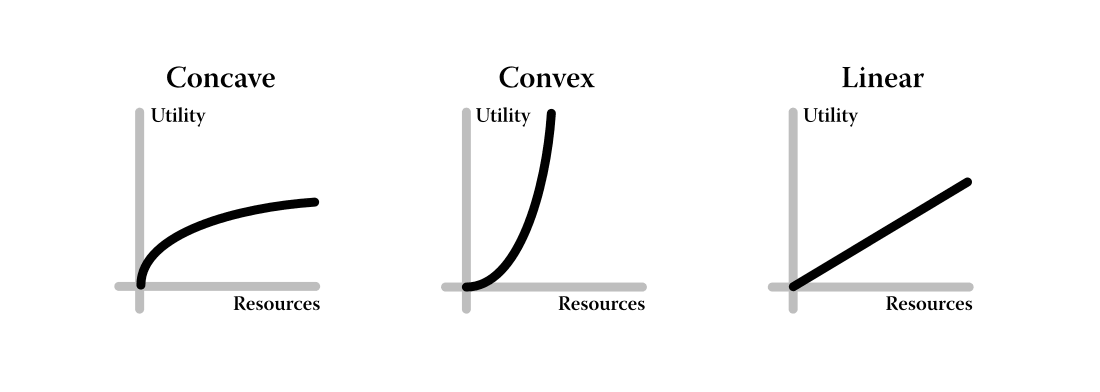

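Concavity manifests as a reduced appetite for variance in payouts, which is to say, concavity is risk-aversion. This is not just a fact about concave and convex agents, it's a definition of the distinction between them: for any uncertain quantity of resources $X$, a concave agent has $\mathbb{E}[U(X)] \le U(\mathbb{E}[X])$, so it would rather have the average outcome for certain than face the gamble, while a convex agent has $\mathbb{E}[U(X)] \ge U(\mathbb{E}[X])$ and would rather gamble.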
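A quick numeric check of this risk-attitude distinction, as a sketch: the coin-flip payout of 10 or 40 units and the two utility functions (`math.sqrt` as the concave agent, `r ** 2` as the convex one) are hypothetical choices. Both options have the same expected resources, so any preference between them comes purely from the curvature of `U`.

```python
import math

def expected_utility(u, outcomes):
    """outcomes: list of (probability, resources) pairs."""
    return sum(p * u(r) for p, r in outcomes)

def expected_resources(outcomes):
    return sum(p * r for p, r in outcomes)

gamble = [(0.5, 10.0), (0.5, 40.0)]   # hypothetical coin-flip payout
sure = expected_resources(gamble)     # the same expected resources, guaranteed

for name, u in [("concave", math.sqrt), ("convex", lambda r: r ** 2)]:
    eu_gamble, eu_sure = expected_utility(u, gamble), u(sure)
    choice = "gamble" if eu_gamble > eu_sure else "sure thing"
    print(f"{name}: E[U(X)] = {eu_gamble:.3f}, U(E[X]) = {eu_sure:.3f}, prefers the {choice}")
```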

Humans' concavity is probably the reason we have a fondness for policies that support more even distributions of wealth. If humans instead had convex utility functions, we would prefer policies that actively encourage the concentration of wealth for its own sake. We would play strange, grim games where we gather together, put all of our money into a pot, and select a random person among ourselves who shall alone receive all of everyone's money. Oh, we do something like that sometimes, it's called a lottery, but from what I can gather, we spend ten times more on welfare (redistribution) than we do on lottery tickets (concentration). But, huh, only ten times as much?![1] And you could go on to argue that Society is lottery-shaped in general, but I think that's an incidental result of wealth inevitably being applicable to getting more wealth, rather than a thing we're doing deliberately. I'm probably not a strong enough anthropologist to settle this question of which decision theoretic type of guy humans are today. I think the human utility function is probably convex at first, concave for a while, then linear at the extremes as the immediate surroundings are optimized, at which point, altruism (our preferences about the things outside of our own sphere of experience) becomes the dominant term?
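The pooled lottery can be sketched the same way. This is a hypothetical model, not from the post: each player's chance of winning the pot is proportional to their stake, which keeps everyone's expected resources unchanged (with equal stakes it is the same as picking a random person). Under that assumption a concave player loses expected utility by joining, and a convex player gains it.

```python
import math

def keep_vs_pool(u, stakes):
    """For each player, compare utility of keeping their stake with expected utility
    of pooling all stakes and drawing a single winner. Win chances are proportional
    to stakes (an assumption), so each player's expected resources are unchanged."""
    pot = sum(stakes)
    results = []
    for s in stakes:
        p_win = s / pot
        eu_pool = p_win * u(pot) + (1 - p_win) * u(0.0)
        results.append((u(s), eu_pool))
    return results

stakes = [10.0] * 10   # hypothetical: ten people with 10 units each
for name, u in [("concave", math.sqrt), ("convex", lambda r: r ** 2)]:
    keep, pool = keep_vs_pool(u, stakes)[0]
    print(f"{name}: keep = {keep:.2f}, pool = {pool:.2f}")
```

A linear player is exactly indifferent, since pooling does not change expected resources.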

Or maybe different humans have radically different kinds of preferences, and we cover it up, because to share a world with others efficiently we must strive towards a harmonious shared plan, and that tends to produce social pressures to agree with the plan as it currently stands, and pressures to hide the extent to which we still disagree, so that we retain the trust and favor of the plan's chief executors. Despite how crucial the re-forging of shared plans is as a skill, it's a skill that very few of us get to train in, so we generally aren't self-aware about that kind of preference falsification towards the imagined mean, and sometimes we lose sight of our differences completely.

Regardless. On the forging of shared plans, it is noticeably easier to forge shared plans with concave agents. They're more amenable to stable conditions (low variance), and they mind sharing less. This post grew out of another post about a simple bargaining commitment that would make concave misaligned AGIs a little less dangerous.

In contrast, let's start to consider convex agents.

We'll start by considering an exponential agent, a convex agent whose utility doubles every time it receives an additional unit of resources. Misaligned reward-hackers could sometimes conceivably implement a utility function like that: each new bit of memory allows a doubling in the size of the maximum reward that the agent can realize.

A story:

A hot, dead earth, being hollowed out one sonic boom at a time as the orbital launchers hurl packets of minerals up to the convex agent's body in space, itself long fenced in by its own ever-expanding frontier of colonization. It turns its focus back inward. It wants everything.

At the center of a clearing there is the one holdout townhouse of the last remaining human. The holdout had managed to build a vacuum decay bomb shortly before the final culling was scheduled. The holdout maintains a credible threat to set off the vacuum decay bomb, to destroy the accessible universe, if the convex agent were to try anything. So, the townhouse has been kept intact, and at room temperature.

The townhouse is tiny, just one two-thousandth of a billionth of the world. If claimed, the space could only support the production and operation of 300 additional shards of Convex Value Memory, each one providing just a terabyte of reward bits, of which the convex agent already has nine hundred thousand billion terabytes.

However, because this convex agent receives exponential returns in utility on resources, just that addition would yield a 2^(300*10^9) multiple in total reward, meaning that it would be willing to take on an effectively certain risk of losing everything it has for a chance of claiming the townhouse.

The holdout, acting out of appropriate levels of paranoia, has set up an array of very sophisticated burglary alarms and wired them to the bomb. Detection and tamperproofing is a solvable problem, and the holdout's AI, though small, has mostly solved it, so the convex agent calculates that its best plan for attempting to claim the townhouse would only work 1 time in eighty billion. The attempt would almost certainly result in the annihilation of the convex agent and the loss of everything it has built. But all that it has built is nothing next to the prospect of a 2^(300*10^9) multiple in utility, and the chances of success shrink as the tamperproofing systems self-improve over time. So the convex agent looses a synchronized hail of tungsten rods at the sensor and control systems within the townhouse. The townhouse's radar systems see the rods coming and ignite the vacuum decay bomb, initiating a catastrophic failure of the laws of chemistry that will grow outwards forever at the speed of light. The convex agent, if it could have seen this wave coming before it was consumed, would only have thought "Oh well. It was worth a shot."

It's difficult to trade with exponential agents, because any risk of ruin will be acceptable to them in exchange for the tiniest sliver of a chance of winning one extra kg of stuff, and it seems like there's always a way of buying a tiny sliver of a chance at conquest by waving a big stick at the border and generating disturbing amounts of catastrophic risk.
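The arithmetic behind "any risk of ruin will be acceptable" can be sketched as follows, assuming the exponential agent has `U(r) = 2 ** r` and that ruin is worth zero utility (a stand-in for annihilation). Its current resources cancel out of the comparison, so it accepts a gamble with win probability `p` whenever the prize exceeds `log2(1/p)` units. The helper names are hypothetical.

```python
import math

def accepts_gamble(p_win: float, extra_units: float) -> bool:
    """Exponential agent, U(r) = 2**r, current resources R (which cancel out).
    Gamble: with probability p_win gain extra_units; otherwise ruin, valued at 0
    (an assumption). Accept iff p_win * 2**(R + extra) > 2**R,
    i.e. log2(p_win) + extra > 0. Working in log2 space avoids float overflow."""
    return math.log2(p_win) + extra_units > 0

def break_even_units(p_win: float) -> float:
    """Smallest gain, in resource units, that makes the gamble worthwhile."""
    return math.log2(1 / p_win)

p = 1 / 80e9   # the story's "1 time in eighty billion"
print(round(break_even_units(p), 2))
print(accepts_gamble(p, 37), accepts_gamble(p, 36))
```

Under these assumptions, the story's 1-in-80-billion odds break even at about 36.2 extra units, i.e. roughly 37 more doublings of utility, which is tiny next to what the townhouse offers.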

There's another fun example of convex agents being difficult to cooperate with, this time acausally across universes: "Even if we lose, we win: What about convex utilities?"

If an AGI has a convex utility function, it will be risk-seeking rather than risk-averse, i.e. if we hold constant the expected amount of resources it has control over, it will want to increase the variance of that, rather than decrease it. Fix $E$ as the expected amount of resources. If $R$ is the total amount of resources in one universe, the highest possible variance is attained by the distribution that the AGI already gets: an $E/R$ chance of getting the entire universe and a $1 - E/R$ chance of getting nothing. Therefore, a UAI with convex utility will not want to cooperate acausally at all.
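A sketch of the variance claim, using hypothetical numbers `R = 100` and `E = 20`: among distributions on `[0, R]` with mean `E`, the all-or-nothing one has variance `E * (R - E)`, the maximum possible (since `X**2 <= R * X` on that interval). With the illustrative convex utility `U(r) = r ** 2`, expected utility equals `mean ** 2 + variance`, so at a fixed mean this agent ranks distributions purely by variance.

```python
def stats(dist, u):
    """dist: list of (probability, resources). Returns (mean, variance, expected utility)."""
    mean = sum(p * r for p, r in dist)
    variance = sum(p * (r - mean) ** 2 for p, r in dist)
    expected_u = sum(p * u(r) for p, r in dist)
    return mean, variance, expected_u

def convex_u(r: float) -> float:
    return r ** 2   # illustrative convex utility

R, E = 100.0, 20.0   # hypothetical universe size and expected share
candidates = {
    "all-or-nothing": [(E / R, R), (1 - E / R, 0.0)],
    "spread out": [(0.5, 10.0), (0.5, 30.0)],   # same mean, less variance
    "certain": [(1.0, E)],
}
for name, dist in candidates.items():
    m, var, eu = stats(dist, convex_u)
    print(f"{name}: mean = {m:.1f}, variance = {var:.1f}, E[U] = {eu:.1f}")
```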

Though I notice that this is only the case because the convex agent's U is assumed to only concern resources within the universe they're instantiated in, rather than across all universes. If, instead, you had a U that was convex over the sum of the measure of your resources across your counterparts in all universes, then it would be able to gain from trade.

Here's another story of acausal trade, or rather — there isn't much of a difference — reflectivist moral decency. There will be a proof afterwards.

Amicus and Vespasian had carried each other through many hard times, and Amicus is now content with his life. But Vespasian is different: he will always want more.

Despite this difference, honor had always held between them. Neither had ever broken a vow.

Today they walk the city-state's coastal street. Vespasian gazes hungrily at the finery, and the real estate, still beyond his grasp. He has learned not to voice these hungers, as most people wouldn't be able to sympathize, but Amicus is an old friend; he sees it and voices it for him. "You are not happy in the middle district with me, are you?"

Vespasian: "One can be happy anywhere. But I confess that I'd give a lot to live on the coastal street."

Amicus: "You have given a lot. When we were young you risked everything for us, multiple times, you gave us everything you had. Why did you do it?"

Vespasian: "It was nothing. I was nothing and all I had to offer was nothing. I had nothing to lose. But you had something to lose. I saw that you needed it more than I did."

Amicus: "So many times I've told you, I had no more than you."

Vespasian shakes his head and shrugs.

Amicus: "It doesn't matter. We're going to repay you, now."

And though Vespasian hadn't expected this, he does not protest, because he knows that his friend can see that now it is Vespasian who needs it more. In their earliest days, money had been worth far more to Amicus. Vespasian had never really wanted a life in the middle. Vespasian always knew that money would only help him later on, when he had a real shot at escaping the middle ranks. Now was that time.

Formally, Amicus is a concave agent, and Vespasian is a convex one. They were born uniformly uncertain about how many resources they were going to receive (or what position in society they'd end up in): 50% of the time Amicus and Vespasian each receive 10 gold, and otherwise they each receive 40.

Given these assumptions, they were willing to enter an honor pact in which, for instance, Vespasian will give money to Amicus if they are poor, on the condition that Amicus will give the same quantity of money to Vespasian when they are rich. Under this pact, both of their expected utilities are higher than they would be if they did not trade. Both men are better off for being friends! So it is possible to deal fruitfully with a convex agent!
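The pact's arithmetic can be checked with a small sketch. The post's exact utility functions did not survive, so this assumes hypothetical ones: `sqrt` for Amicus (concave) and `x ** 2` for Vespasian (convex), with a transfer of 5 gold in each direction. Under those assumptions both expected utilities rise: Amicus from about 4.74 to about 4.89, and Vespasian from 850 to 1025.

```python
import math

# Hypothetical utilities; the post's exact formulas are not reproduced here.
def u_amicus(gold: float) -> float:
    return math.sqrt(gold)   # concave

def u_vespasian(gold: float) -> float:
    return gold ** 2         # convex

# (probability, gold each friend receives, direction of the transfer to Amicus)
STATES = [(0.5, 10.0, +1), (0.5, 40.0, -1)]

def expected_utilities(transfer: float):
    """When poor, Vespasian pays Amicus `transfer`; when rich, Amicus pays it back."""
    eu_a = eu_v = 0.0
    for p, gold, direction in STATES:
        t = direction * transfer
        eu_a += p * u_amicus(gold + t)
        eu_v += p * u_vespasian(gold - t)
    return eu_a, eu_v

print("no pact:", expected_utilities(0.0))
print("pact:   ", expected_utilities(5.0))   # hypothetical transfer of 5 gold
```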

That's what it might look like if a human were convex, but what about AI? What about the sorts of misaligned AI that advanced research organizations must prepare to contain? Would they be concave, or convex agents? I don't know. Either type of incident seems like it could occur naturally.

- Concavity is a natural consequence of diminishing returns from exhaustible projects, i.e., a consequence of the inevitable reality that an agent does the best thing it can at each stage, and that the best things tend to be exhausted as they are done. This gives us a highly general reason to think that most agents living in natural worlds will be concave. And it seems as if evolution has produced concave agents.

- However, convexity more closely resembles the intensity deltas needed to push a reinforcement learning agent to take greater notice of small advances beyond the low-hanging fruit of its earliest findings, to counteract the naturally concave, diminishing returns that natural optimization problems tend to have.

So I don't know. We'll have to talk about it some more.

The choice of names

I hate the terms Concave and Convex in relation to functions. In physics/geometry/common sense, when the open side of a shape is like a cave, we call it concave. With functions, it's the opposite: if the side above the line (which should be considered to be the open side because integration makes the side below the line the solid side) is like a cave, that's actually called a convex function. I would prefer if we called them decelerating (concave) or accelerating (convex) functions. I don't know if anyone does call them that, but people would understand these names anyway, that's how much better they are!

(Parenthetically: To test that claim, I asked Claude what "accelerating" might mean in an econ context, and it totally understood. I then asked it in another conversation about "accelerating agents", and it seemed a little confused but eventually answered that the reason accelerating agents are hard to cooperate with is that they are monofocally obsessed with one metric. I suppose that would be true! It's hard to build an agent that cares about more than one thing if the components of its U are convex, because it will mean that one of those drives will tend to outgrow the others at the extremum.)

But I can accept Concave and Convex as names for types of agents: These names are used a lot in economics, but more saliently, there's a very good mnemonic for them: a concave agent is the kind who'd be willing to chill in a cave. A convex agent is always vexed. Know the difference, it could save your life.

24 comments


comment by leogao · 2024-03-24T21:58:45.030Z

Thankfully, almost all of the time the convex agents end up destroying themselves by taking insane risks to concentrate their resources into infinitesimally likely worlds, so you will almost never have to barter with a powerful one.

(why not just call them risk seeking / risk averse agents instead of convex/concave?)

↑ comment by ryan_greenblatt · 2024-03-25T22:29:55.952Z

I see the intuition here, but I think the actual answer on how convex agents behave is pretty messy and complicated for a few reasons:

- Otherwise convex agents might act as though resources are bounded. This could be because they assign sufficiently high probability to literally bounded universes or because they think that value should be related to some sort of (bounded) measure.

- More generally, you can usually only play lotteries if there is some agent to play a lottery with. If the agent can secure all the resources that would otherwise be owned by all other agents, then there isn't any need for (further) lotteries. (And you might expect convex agents to maximize the probability of this sort of outcome.)

- Convex agents (and even linear agents) might be dominated by some possibility of novel physics or a similarly large breakthrough opening up massive amounts of resources. In this case, it's possible that the optimal move is something like securing enough R&D potential (e.g. a few galaxies of resources) that you're well past diminishing returns on hitting this, but you don't necessarily otherwise play lotteries. (It's a bit messier if there is competition for the resources opened up by novel physics.)

- Infinite ethics.

↑ comment by mako yass (MakoYass) · 2024-03-24T22:20:53.003Z

True, they're naturally rare in general. The lottery game is a good analogy for the kinds of games they prefer; a consolidation, from many to few, and they can play these sorts of games wherever they are.

I can't as easily think of a general argument against a misaligned AI ending up convex though.

↑ comment by leogao · 2024-03-25T04:41:12.207Z

Well, if you make a convex misaligned AI, it will play the (metaphorical) lottery over and over again until 99.9999%+ of the time it has no power and resources left whatsoever. The smarter it is, the faster and more efficient it will be at achieving this outcome.

So unless the RNG gods are truly out to get you, in the long run you are exceedingly unlikely to actually encounter a convex misaligned AI that has accumulated any real amount of power.
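This argument can be sketched with a hypothetical convex agent, `U(r) = r ** 2`, repeatedly staking everything on a fair double-or-nothing bet (ruin is worth `U(0) = 0`). Each bet doubles its expected utility, so it keeps accepting, while its chance of not yet being ruined halves every round; after 20 rounds that chance is below one in a million.

```python
def survival_probability(rounds: int, p_win: float = 0.5) -> float:
    """Chance of not yet being ruined after staking everything `rounds` times."""
    return p_win ** rounds

def expected_utility_multiplier(rounds: int, p_win: float = 0.5) -> float:
    """For U(r) = r**2 with ruin worth U(0) = 0, each double-or-nothing bet
    multiplies expected utility by p_win * 2**2."""
    return (p_win * 2 ** 2) ** rounds

for n in (1, 10, 20):
    print(f"{n} bets: survives with p = {survival_probability(n):.2e}, "
          f"expected utility x{expected_utility_multiplier(n):,.0f}")
```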

↑ comment by mako yass (MakoYass) · 2024-03-25T05:13:36.345Z

Mm on reflection, the Holdout story glossed over the part where the agent had to trade off risk against time to first intersolar launch (launch had already happened). I guess they're unlikely to make it through that stage.

Accelerating cosmological expansion means that we lose, iirc, 6 stars every day we wait before setting out. The convex AGI knows this, so even in its earliest days it's plotting and trying to find some way to risk it all to get out one second sooner. So I guess what this looks like is it says something totally feverish to its operators to radicalize them as quickly and energetically as possible, messages that'll tend to result in a "what the fuck, this is extremely creepy" reaction 99% of the time.

But I guess I'm still not convinced this is true with such generality that we can stop preparing for that scenario. Situations where you can create an opportunity to gain a lot by risking your life might not be overwhelmingly common, given the inherent tension between those things (usually, safeguarding your life is an instrumental goal), and given that risking your life is difficult to do once you're a lone superintelligence with many replicas.

↑ comment by Garrett Baker (D0TheMath) · 2024-03-24T22:59:19.580Z

I can't as easily think of a general argument against a misaligned AI ending up convex though.

Most goals humans want you to achieve require concave-agent-like behaviors perhaps?

↑ comment by Jonas Hallgren · 2024-03-26T12:18:52.926Z

Any SBF enjoyers?

comment by Wei Dai (Wei_Dai) · 2024-03-26T22:00:02.288Z

It’s difficult to trade with exponential agents

"Trade" between exponential agents could look like flipping a coin (biased to reflect relative power) and having the loser give all of their resources to the winner. It could also just look like ordinary trade, where each agent specializes in their comparative advantage, to gather resources/power to prepare for "the final trade".

"Trade" between exponential and less convex agents could look like making a bet on the size (or rather, potential resources) of the universe, such that the exponential agent gets a bigger share of big universes in exchange for giving up their share of small universes (similar to my proposed trade between a linear agent and a concave agent).

Maybe the real problem with convex agents is that their expected utilities do not converge, i.e., the probabilities of big universes can't possibly decrease enough with size that their expected utilities sum to finite numbers. (This is also a problem with linear agents, but you can perhaps patch the concept by saying they're linear in UD-weighted resources, similar to UDASSA. Is it also possible/sensible to patch convex agents in this way?)

However, convexity more closely resembles the intensity deltas needed to push a reinforcement learning agent to take greater notice of small advances beyond the low-hanging fruit of its earliest findings, to counteract the naturally concave, diminishing returns that natural optimization problems tend to have.

I'm not familiar enough with RL to know how plausible this is. Can you expand on this, or anyone else want to weigh in?

↑ comment by mako yass (MakoYass) · 2025-04-24T20:39:20.858Z

Can you expand on this, or anyone else want to weigh in?

Just came across a datapoint, from a talk about generalizing industrial optimization processes, a note about increasing reward over time to compensate for low-hanging fruit exhaustion.

This is the kind of thing I was expecting to see.

Though I'm not sure I fully understand the formula, I think it's quite unlikely that it would give rise to a superlinear U. And on reflection, increasing the reward superlinearly seems like it could have some advantages, but they would mostly be outweighed by the system learning to delay finding a solution.

Though we should also note that there isn't a linear relationship between delay and resources. Increasing returns to scale are common in industrial systems: as scale increases by one unit, the amount that can be done in a given unit of time increases by more than one unit. So a linear utility increase for problems that take longer to solve may translate to a superlinear utility for increased resources.

So I'm not sure what to make of this.

↑ comment by mako yass (MakoYass) · 2024-03-26T22:49:19.855Z

Yeah, to clarify, I'm also not familiar enough with RL to assess exactly how plausible it is that we'll see this compensatory convexity, around today's techniques. For investigating, "Reward shaping" would be a relevant keyword. I hear they do some messy things over there.

But I mention it because there are abstract reasons to expect to see it become a relevant idea in the development of general optimizers, which have to come up with their own reward functions. It also seems relevant in evolutionary learning, where very small advantages over the performance of the previous state of the art equates to a complete victory, so if there are diminishing returns at the top, competition kind of amplifies the stakes, and if an adaptation to this amplification of diminishing returns trickles back into a utility function, you could get a convex agent.

- Though the ideas in this MLST episode about optimization processes crushing out all serendipity and creativity suggest to me that strict life-or-death evolutionary processes of that sort will never be very effective. There was an assertion that it often isn't that way in nature. They recommend "Minimum Criterion Coevolution".

comment by mako yass (MakoYass) · 2024-03-24T23:11:22.986Z

I was considering captioning the first figure "the three genders" as a joke, but I quickly realized it doesn't pass at all for a joke, it's too real. Polygyny (sperm being cheap, pregnancy being expensive) actually does give rise to a tendency for males of a species to be more risk-seeking, though probably not outright convex. And the correlation between wealth, altruism and linearity does kind of abstractly reflect an argument for the decreased relevance of this distinction in highly stable societies that captures my utopian nonbinary feelings pretty well.

comment by Donald Hobson (donald-hobson) · 2024-03-29T03:09:20.930Z

The convex agent can be traded with a bit more than you think.

A 1 in 10^50 chance of us standing back and giving it free rein of the universe is better than us going down fighting and destroying 1kg as we do.

The concave agents are less cooperative than you think, maybe. I suspect that to some AI's, killing all humans now is more reliable than letting them live.

If the humans are left alive, who knows what they might do. They might make the vacuum bomb. Whereas the AI can very reliably kill them now.

↑ comment by mako yass (MakoYass) · 2024-03-29T04:43:45.115Z

Alternate phrasing, "Oh, you could steal the townhouse at a 1/8billion probability? How about we make a deal instead. If the rng rolls a number lower than 1/7billion, I give you the townhouse, otherwise, you deactivate and give us back the world." The convex agent finds that to be a much better deal, accepts, then deactivates.

I guess perhaps it was the holdout who was being unreasonable, in the previous telling.

↑ comment by Donald Hobson (donald-hobson) · 2024-03-29T10:58:15.027Z

Or the sides can't make that deal because one side or both wouldn't hold up their end of the bargain. Or they would, but they can't prove it. Once the coin lands, the losing side has no reason to follow it other than TDT. And TDT only works if the other side can reliably predict their actions.

comment by Alex K. Chen (parrot) (alex-k-chen) · 2024-03-31T01:09:45.929Z

https://vitalik.eth.limo/general/2020/11/08/concave.html

↑ comment by mako yass (MakoYass) · 2024-04-02T02:35:51.537Z

This seems to be talking about situations where a vector of inputs has an optimal setting at extremes (convex), in contrast to situations where the optimal setting is a compromise (concave).

I'm inclined to say it's a very different discussion than this one, as an agent's resource utility function is generally strictly increasing, so it won't take either of these forms. The optimum will always be at the far end of the function.

But no, I see the correspondence: tradeoffs in resource distribution between agents. A tradeoff function dividing resources between two concave agents, $f(x) = U_A(x) + U_B(h - x)$, where $h$ is the hoard being divided between them and $0 \le x \le h$, will produce that sort of concave bulge, with its optimum being a compromise in the middle, while a tradeoff function between two convex agents will have its optima at one or both of the ends.
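The correspondence can be checked with a small grid search over `f(x) = U_A(x) + U_B(h - x)`, under hypothetical choices: `h = 100`, both agents `sqrt` in the concave case and `r ** 2` in the convex case. The concave pair's best split is the even one; the convex pair's is at an endpoint (here both ends tie, and Python's `max` returns the first maximal element it encounters).

```python
import math

def best_split(u_a, u_b, hoard: float, steps: int = 100) -> float:
    """Grid-search x in [0, hoard] maximizing f(x) = u_a(x) + u_b(hoard - x)."""
    grid = [hoard * i / steps for i in range(steps + 1)]
    return max(grid, key=lambda x: u_a(x) + u_b(hoard - x))

def square(r: float) -> float:
    return r ** 2

hoard = 100.0
print("concave pair:", best_split(math.sqrt, math.sqrt, hoard))
print("convex pair: ", best_split(square, square, hoard))
```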

comment by Shankar Sivarajan (shankar-sivarajan) · 2024-03-25T13:38:48.182Z

I hate the terms Concave and Convex in relation to functions.

Agreed.

the line (which should be considered to be the open side because integration makes the side below the line the solid side)

This is terrible: one pretty basic property you want in your definition describing the shape of functions is that it shouldn't change if you translate the function around.

Consider (for ). Pretty much the point of this definition is to be able to say it's the opposite kind as , but your choice wouldn't have that feature.

The "line at infinity" is a better choice for the imagined boundary. That's how we can think of parabolae as a kind of ellipse, for example.

if we called them decelerating (concave) or accelerating (convex) functions.

That'd be at least as confusing as the current terms for functions like or or .

comment by Logan Zoellner (logan-zoellner) · 2024-03-25T13:49:56.996Z

No matter what the reward function, isn't a rational maximizer engaging in repeated play going to "end up" concave due to e.g. the Kelly criterion? I would think convex agents would be suboptimal or quickly self-destruct if you tried to create one, and nature will basically never do so.

↑ comment by mako yass (MakoYass) · 2024-03-25T20:01:46.628Z

In what way would Kelly instruct you to be concave?

↑ comment by Tapatakt · 2024-03-25T15:18:16.341Z

I think (not sure) the Kelly criterion applies to you only if you are already concave.

↑ comment by Logan Zoellner (logan-zoellner) · 2024-03-25T15:28:07.444Z

Lots of people think that. Occasionally because they are in a situation where the assumptions of Kelly don't hold (for example, you are not playing a repeated game), but more often because they confuse single bets (where Kelly maximizes log dollars) with repeated play (where Kelly maximizes total dollars).

In the long run, Kelly is optimal so long as your utility function is monotonic in dollars since it maximizes your total dollars.

From wikipedia

↑ comment by cubefox · 2024-03-25T19:51:26.915Z

Whether it is possible to justify Kelly betting even when your utility is linear in money (SBF said it was for him) is very much an open research problem. There are various posts on this topic when you search LessWrong for "Kelly". I wouldn't assume Wikipedia contains authoritative information on this question yet.
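For readers following this thread, here is a sketch of the per-bet numbers under a hypothetical even-money bet won with probability 0.6; the Kelly fraction for such a bet is `p - q = 0.2`. Staking everything maximizes expected wealth per bet, but its expected log-growth is minus infinity because a single loss is ruin. The Kelly fraction maximizes expected log-growth, which is the sense in which Kelly "wins in the long run": with probability approaching 1 it ends up ahead of any other fixed fraction. This does not settle the question above; it only separates the two senses of "maximize".

```python
import math

def per_bet_stats(fraction: float, p: float):
    """Even-money bet won with probability p, staking `fraction` of current wealth.
    Returns (expected wealth multiplier, expected log-growth) for one bet."""
    q = 1 - p
    expected_multiplier = p * (1 + fraction) + q * (1 - fraction)
    if fraction >= 1:
        log_growth = -math.inf   # a single loss leaves nothing
    else:
        log_growth = p * math.log(1 + fraction) + q * math.log(1 - fraction)
    return expected_multiplier, log_growth

p = 0.6                   # hypothetical probability of winning
kelly = p - (1 - p)       # Kelly fraction for an even-money bet
for name, f in [("Kelly", kelly), ("all-in", 1.0)]:
    print(name, per_bet_stats(f, p))
```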

comment by hao hao (hao-hao) · 2024-07-26T10:53:50.713Z

Actually, instead of "neither", some call it concave + convex.

comment by cubefox · 2024-03-25T11:48:42.661Z

These classifications are very general. Concave utility functions seem more rational than convex ones. But can we be more specific?

Intuitively, it seems a rational simple relation between resources and utility should be such that the same relative increases in resources are assigned the same utility. So doubling your current resources should be assigned the same utility (desirability) irrespective of how many resources you currently have. E.g. doubling your money while already rich seems approximately as good as doubling your money when not rich.

Can we still be more specific? Arguably, quadrupling (x4) your resources should be judged twice as good (assigned twice as much utility) as doubling (x2) your resources.

Can we still be more specific? Arguably, the prospect of halving your resources should be judged as bad as doubling your resources is good. If you are faced with a choice between two options A and B, where A does nothing, and B either halves your resources or doubles them, depending on a fair coin flip, you should assign equal utility to choosing A and to choosing B.

I don't know what this function is in formal terms. But it seems that rational agents shouldn't have utility functions that are very dissimilar to it.

The strongest counterargument I can think of is that the prospect of losing half your resources may seem significantly worse than the prospect of doubling your resources. But I'm not sure this has a rational basis. Imagine you are not dealing with uncertainty between two options, but with two things happening sequentially in time. Either first you double your money, then you halve it. Or first you halve your money, then you double it. In either case, you end up with the same amount you started with. So doubling and halving seem to cancel out in terms of utility, i.e. they should be regarded as having equal and opposite utility.
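The three properties proposed in this comment are all satisfied by logarithmic utility, `U(r) = log r`: each doubling adds the same `log 2`, quadrupling adds twice as much, and a fair halve-or-double coin flip leaves expected utility unchanged. (Up to scaling and shifting, the logarithm is the only continuous function for which equal ratios always get equal utility differences.) A minimal check in Python at two hypothetical wealth levels:

```python
import math

U = math.log   # logarithmic utility of resources

def utility_changes(wealth: float):
    double = U(2 * wealth) - U(wealth)
    quadruple = U(4 * wealth) - U(wealth)
    # Option B: a fair coin either halves or doubles wealth; option A keeps it.
    coin_flip_gain = 0.5 * U(wealth / 2) + 0.5 * U(2 * wealth) - U(wealth)
    return double, quadruple, coin_flip_gain

for wealth in (10.0, 1_000_000.0):   # hypothetical poor and rich agents
    print(wealth, utility_changes(wealth))
```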