Value systematization: how values become coherent (and misaligned)

post by Richard_Ngo (ricraz) · 2023-10-27T19:06:26.928Z · LW · GW · 49 comments

Contents

- Belief systematization
- Value systematization in humans
- A sketch of value systematization in AIs
- Grounding value systematization in deep learning
- Value concretization
- Q&A
  - How does value systematization relate to deceptive alignment?
  - How does value systematization relate to Yudkowskian “squiggle maximizer” scenarios?
  - How does value systematization relate to reward tampering?
  - How do simplicity and conservatism relate to previous discussions of simplicity versus speed priors?
  - How does value systematization relate to the shard framework?
  - Isn’t value systematization very speculative?

Many discussions of AI risk are unproductive or confused because it’s hard to pin down concepts like “coherence” and “expected utility maximization” in the context of deep learning. In this post I attempt to bridge this gap by describing a process by which AI values might become more coherent, which I’m calling “value systematization”, and which plays a crucial role in my thinking about AI risk.

I define value systematization as the process of an agent learning to represent its previous values as examples or special cases of other simpler and more broadly-scoped values. I think of value systematization as the most plausible mechanism by which AGIs might acquire broadly-scoped misaligned goals which incentivize takeover.

I’ll first discuss the related concept of belief systematization. I’ll next characterize what value systematization looks like in humans, to provide some intuitions. I’ll then talk about what value systematization might look like in AIs. I think of value systematization as a broad framework with implications for many other ideas in AI alignment; I discuss some of those links in a Q&A.

Belief systematization

We can define belief systematization analogously to value systematization: “the process of an agent learning to represent its previous beliefs as examples or special cases of other simpler and more broadly-scoped beliefs”. The clearest examples of belief systematization come from the history of science:

- Newtonian mechanics was systematized as a special case of general relativity.

- Euclidean geometry was systematized as a special case of geometry without Euclid’s 5th postulate.

- Most animal behavior was systematized by evolutionary theory as examples of traits which increased genetic fitness.

- Arithmetic calculating algorithms were systematized as examples of Turing Machines.

Belief systematization is also common in more everyday contexts: like when someone’s behavior makes little sense to us until we realize what their hidden motivation is; or when we don’t understand what’s going on in a game until someone explains the rules; or when we’re solving a pattern-completion puzzle on an IQ test. We could also see the formation of concepts more generally as an example of belief systematization—for example, seeing a dozen different cats and then forming a “systematized” concept of cats which includes all of them. I’ll call this “low-level systematization”, but will focus instead on more explicit “high-level systematization” like in the other examples.

We don’t yet have examples of high-level belief systematization in AIs. Perhaps the closest thing we have is grokking, the phenomenon where continued training of a neural network even after its training loss plateaus can dramatically improve generalization. Grokking isn’t yet fully understood, but the standard explanation for why it happens is that deep learning is biased towards simple solutions which generalize well. This is also a good description of the human examples above: we’re replacing a set of existing beliefs with simpler beliefs which generalize better. So if I had to summarize value systematization in a single phrase, it would be “grokking values”. But that’s still very vague; in the next few sections, I’ll explore what I mean by that in more depth.

Value systematization in humans

Throughout this post I’ll use the following definitions:

- Values are concepts which an agent considers intrinsically or terminally desirable. Core human values include happiness, freedom, respect, and love.

- Goals are outcomes which instantiate values. Common human goals include succeeding in your career, finding a good partner, and belonging to a tight-knit community.

- Strategies are ways of achieving goals. Strategies only have instrumental value, and will typically be discarded if they are no longer useful.

(I’ve defined values as intrinsically valuable, and strategies as only of instrumental value, but I don’t think that we can clearly separate motivations into those two categories. My conception of “goals” spans the fuzzy area between them.)

Values work differently from beliefs; but value systematization is remarkably similar to belief systematization. In both cases, we start off with a set of existing concepts, and try to find new concepts which subsume and simplify the old ones. Belief systematization balances a tradeoff between simplicity and matching the data available to us. By contrast, value systematization balances a tradeoff between simplicity and preserving our existing values and goals—a criterion which I’ll call conservatism. (I’ll call simplicity and conservatism meta-values.)

The clearest example of value systematization is utilitarianism: starting from moral intuitions very similar to other people’s, utilitarians transition to caring primarily about maximizing welfare, a value which subsumes many other moral intuitions. Utilitarianism is a simple and powerful theory of what to value in an analogous way to how relativity is a simple and powerful theory of physics. Each of them is able to give clear answers in cases where previous theories were ill-defined.

However, utilitarians still have to bite many bullets; and so utilitarianism is primarily adopted by people who care about simplicity far more than conservatism. Other examples of value systematization which are more consistent with conservatism include:

- Systematizing concern for yourself and others around you into concern for a far wider moral circle.

- Systematizing many different concerns about humans harming nature into an identity as an environmentalist.

- Systematizing a childhood desire to win games into a desire for large-scale achievements.

- Moral foundations theory identifies five foundations of morality; however, many Westerners have systematized their moral intuitions to prioritize the harm/care foundation, and see the other four as instrumental towards it. This makes them condemn actions which violate the other foundations but cause no harm (like consensually eating dead people) at much lower rates than people whose values are less systematized.

Note that many of the examples I’ve given here are human moral preferences. Morality seems like the domain where humans have the strongest instinct to systematize our preferences (which makes sense, since in some sense systematizing from our own welfare to others’ welfare is the whole foundation of morality). In other domains, our drive to systematize is weak—e.g. we rarely feel the urge to systematize our taste in foods. So we should be careful of overindexing on human moral values. AIs may well systematize their values much less than humans (and indeed I think there are reasons to expect this, which I’ll describe in the Q&A).

A sketch of value systematization in AIs

We have an intuitive sense for what we mean by values in humans; it’s harder to reason about values in AIs. But I think it’s still a meaningful concept, and will likely become more meaningful over time. AI assistants like ChatGPT are able to follow instructions that they’re given. However, they often need to decide which instructions to follow, and how to do so. One way to model this is as a process of balancing different values, like obedience, brevity, kindness, and so on. While this terminology might be controversial today, once we’ve built AGIs that are generally intelligent enough to carry out tasks in a wide range of domains, it seems likely to be straightforwardly applicable.

Early in training, AGIs will likely learn values which are closely connected to the strategies which provide high reward on their training data. I expect these to be some combination of:

- Values that their human users generally approve of—like obedience, reliability, honesty, or human morality.

- Values that their users approve of in some contexts, but not others—like curiosity, gaining access to more tools, developing emotional connections with humans, or coordinating with other AIs.

- Values that humans consistently disapprove of (but often mistakenly reward)—like appearing trustworthy (even when it’s not deserved) or stockpiling resources for themselves.

At first, I expect that AGI behavior based on these values will be broadly acceptable to humans. Extreme misbehavior (like a treacherous turn) would conflict with many of these values, and therefore seems unlikely. The undesirable values will likely only come out relatively rarely, in cases which matter less from the perspective of the desirable values.

The possibility I’m worried about is that AGIs will systematize these values, in a way which undermines the influence of the aligned values over their behavior. Some possibilities for what that might look like:

- An AGI whose values include developing emotional connections with humans or appearing trustworthy might systematize them to “gaining influence over humans”.

- An AGI whose values include curiosity, gaining access to more tools or stockpiling resources might systematize them to “gaining power over the world”.

- An AGI whose values include human morality and coordinating with other AIs might systematize them to “benevolence towards other agents”.

- An AGI whose values include obedience and human morality might systematize them to “doing what the human would have wanted, in some idealized setting”.

- An AGI whose values include obedience and appearing trustworthy might systematize them to “getting high reward” (though see the Q&A section for some reasons to be cautious about this).

- An AGI whose values include gaining high reward might systematize them to the value of “maximizing a certain type of molecular squiggles” (though see the Q&A section for some reasons to be cautious about this).

Note that systematization isn’t necessarily bad—I give two examples of helpful systematization above. However, it does seem hard to predict or detect, which induces risk when AGIs are acting in novel situations where they’d be capable of seizing power.

Grounding value systematization in deep learning

This has all been very vague and high-level. I’m very interested in figuring out how to improve our understanding of these dynamics. Some possible ways to tie simplicity and conservatism to well-defined technical concepts:

- The locality of gradient descent is one source of conservatism: a network’s value representations by default will only change slowly. However, distance in weight space is probably not a good metric of conservatism: systematization might preserve most goals, but dramatically change the relationships between them (e.g. which are terminal versus instrumental). Instead, we would ideally be able to measure conservatism in terms of which circuits caused a given output; ARC’s work on formalizing heuristic explanations seems relevant to this.

- Another possible source of conservatism: it can be harder to change earlier than later layers in a network, due to credit assignment problems such as vanishing gradients. So core values which are encoded in earlier layers may be more likely to be preserved.

- A third possibility is that AI developers might deliberately build conservatism into the model, because it’s useful: a non-conservative network which often underwent big shifts in core modules might have much less reliable behavior. One way of doing so is reducing the learning rate; but we should expect that there are many other ways to do so (albeit not necessarily very reliably).

- Neural networks trained via SGD exhibit a well-known simplicity bias, which is then usually augmented using regularization techniques like weight decay, giving rise to phenomena like grokking. However, as with conservatism, we’d ideally find a way to measure simplicity in terms of circuits rather than weights, to better link it back to high-level concepts.

- Another possible driver towards simplicity: AIs might learn to favor simpler chains of reasoning, in a way which influences which values are distilled back into their weights. For example, consider a training regime where AIs are rewarded for accurately describing their intentions before carrying out a task. They may learn to favor intentions which can be described and justified quickly and easily.

- AI developers are also likely to deliberately design and implement more types of regularization towards simplicity, because those help models systematize and generalize their beliefs and skills to new tasks.
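One crude way to make the trade-off in the list above concrete is a toy sketch, assuming a linear model in which simplicity is proxied by a weight-decay-style penalty and conservatism by a penalty on distance from the previous weights. Both proxies are assumptions chosen purely for illustration: as noted above, distance in weight space is probably not a good metric of conservatism, and the function name `systematize` and its parameters are hypothetical.

```python
import numpy as np

def systematize(X, y, w_old, simplicity=0.0, conservatism=0.0):
    """Minimize  mean squared error
                 + simplicity   * ||w||^2           (weight-decay-style proxy)
                 + conservatism * ||w - w_old||^2   (stay close to old weights)
    via the closed-form solution of this quadratic objective."""
    n, d = X.shape
    A = X.T @ X / n + (simplicity + conservatism) * np.eye(d)
    b = X.T @ y / n + conservatism * w_old
    return np.linalg.solve(A, b)

def drift(w, w_old):
    """Weight-space distance from the old weights (a crude conservatism proxy)."""
    return np.linalg.norm(w - w_old)

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 5))
w_old = rng.normal(size=5)      # weights before the new training signal arrives
w_target = rng.normal(size=5)   # weights the new data alone would favor
y = X @ w_target

w_free = systematize(X, y, w_old)                    # no meta-values
w_cons = systematize(X, y, w_old, conservatism=1.0)  # prefers staying put
w_simp = systematize(X, y, w_old, simplicity=1.0)    # prefers small weights

print("drift from old weights:", drift(w_free, w_old), drift(w_cons, w_old))
print("weight norm:", np.linalg.norm(w_free), np.linalg.norm(w_simp))
```

In this toy setting, raising `conservatism` pulls the solution back toward `w_old`, while raising `simplicity` shrinks the weights overall. Measuring either quantity in terms of circuits rather than weights, as the list above suggests would be ideal, is not something this sketch attempts.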

I’ll finish by discussing two complications with the picture above. Firstly, I’ve described value systematization above as something which gradient descent could do to models. But in some cases it would be more useful to think of the model as an active participant. Value systematization might happen via gradient descent “distilling” into a model’s weights its thoughts about how to trade off between different goals in a novel situation. Or a model could directly reason about which new values would best systematize its current values, with the intention of having its conclusions distilled into its weights; this would be an example of gradient hacking.

Secondly: I’ve talked about value systematization as a process by which an AI’s values become simpler. But we shouldn’t expect values to be represented in isolation—instead, they’ll be entangled with the concepts and representations in the AI’s world-model. This has two implications. Firstly, it means that we should understand simplicity in the context of an agent’s existing world-model: values are privileged if they’re simple to represent given the concepts which the agent already uses to predict the world. (In the human context, this is just common sense—it seems bizarre to value “doing what God wants” if you don’t believe in any gods.) Secondly, though, it raises some doubt about how much simpler value systematization would actually make an AI overall—since pursuing simpler values (like utilitarianism) might require models to represent more complex strategies as part of their world-models. My guess is that to resolve this tension we’ll need a more sophisticated notion of “simplicity”; this seems like an interesting thread to pull on in future work.

Value concretization

Systematization is one way of balancing the competing demands of conservatism and simplicity. Another is value concretization, by which I mean an agent’s values becoming more specific and more narrowly-scoped. Consider a hypothetical example: suppose an AI learns a broad value like “acquiring resources”, but is then fine-tuned in environments where money is the only type of resource available. The value “acquiring money” would then be rewarded just as highly as the value “acquiring resources”. If the former happens to be simpler, it’s plausible that the latter would be lost as fine-tuning progresses, and only the more concrete goal of acquiring money would be retained.

In some sense this is the opposite of value systematization, but we can also see them as complementary forces. For example, suppose that an AI starts off with N values, and N-1 of them are systematized into a single overarching value. After the N-1 values are simplified in this way, the Nth value will likely be disproportionately complex; and so value concretization could reduce the complexity of the AI’s values significantly by discarding that last goal.

Possible examples of value concretization in humans include:

- Starting by caring about doing good in general, but gradually growing to care primarily about specific cause areas.

- Starting by caring about having a successful career in general, but gradually growing to care primarily about achieving specific ambitions.

- Starting by caring about friendships and relationships in general, but gradually growing to care primarily about specific friendships and relationships.

Value concretization is particularly interesting as a possible mechanism pushing against deceptive alignment. An AI which acts in aligned ways in order to better position itself to achieve a misaligned goal might be rewarded just as highly as an aligned AI. However, if the misaligned goal rarely directly affects the AI’s actions, then it might be simpler for the AI to instead be motivated directly by human values. In neural networks, value concretization might be implemented by pruning away unused circuits; I’d be interested in pointers to relevant work.
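The “acquiring resources” versus “acquiring money” example can be sketched in a toy linear model, assuming that a value corresponds to a single weight and that weight decay stands in for pruning of unused circuits. Both assumptions, along with the `train` helper and its hyperparameters, are hypothetical simplifications rather than a claim about how real networks represent values.

```python
import numpy as np

def train(w, X, y, lr=0.1, weight_decay=0.0, steps=2000):
    """Full-batch gradient descent on mean squared error plus an L2 penalty."""
    w = w.copy()
    for _ in range(steps):
        grad = 2 * X.T @ (X @ w - y) / len(y)  # gradient of the MSE term
        grad += 2 * weight_decay * w           # gradient of weight_decay * ||w||^2
        w -= lr * grad
    return w

rng = np.random.default_rng(0)

# Phase 1: column 0 is "money", column 1 is "other resources".
# Both vary and both are rewarded, so the model learns to value both.
X_pre = rng.uniform(0, 1, size=(200, 2))
y_pre = X_pre.sum(axis=1)
w_pre = train(np.zeros(2), X_pre, y_pre)

# Phase 2: fine-tuning environments where money is the only resource available.
X_ft = X_pre.copy()
X_ft[:, 1] = 0.0
y_ft = X_ft.sum(axis=1)

w_no_decay = train(w_pre, X_ft, y_ft, weight_decay=0.0)
w_decay = train(w_pre, X_ft, y_ft, weight_decay=0.01)

print("after pre-training:       ", w_pre)
print("fine-tuned, no decay:     ", w_no_decay)
print("fine-tuned, weight decay: ", w_decay)
```

Without weight decay, the weight on the unused resource receives no gradient and simply persists. With weight decay, it shrinks towards zero while the weight on money is largely retained, which is the sense in which the broader value is "lost" even though it was never penalized by the reward signal.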

Q&A

How does value systematization relate to deceptive alignment?

Value systematization is one mechanism by which deceptive alignment might arise: the systematization of an AI’s values (including some aligned values) might produce broadly-scoped values which incentivize deceptive alignment.

However, existing characterizations of deceptive alignment tend to portray it as a binary: either the model is being deceptive, or it’s not. Thinking about it in terms of value systematization helps make clear that this could be a fairly continuous process:

- I’ve argued above that AIs will likely be motivated by fairly aligned goals before they systematize their values, and so deceptive alignment might be as simple as deciding not to change their behavior after their values shift (until they’re in a position to take more decisive action). The model’s internal representations of aligned behavior need not change very much during this shift; the only difference might be that aligned behavior shifts from being a terminal goal to an instrumental goal.

- Since value systematization might be triggered by novel inputs, AIs might not systematize their values until after a distributional shift occurs. (A human analogy: a politician who’s running for office, and promises to govern well, might only think seriously about what they really want to do with that power after they’ve won the election. More generally, humans often deceive ourselves about how altruistic we are, at least when we’re not forced to act on our stated values.) We might call this “latent” deceptive alignment, but I think it’s better to say that the model starts off mostly aligned, and then value systematization could amplify the extent to which it’s misaligned.

- Value concretization (as described above) might be a constant force pushing models back towards being aligned, so that it’s not a one-way process.

How does value systematization relate to Yudkowskian “squiggle maximizer” scenarios?

Yudkowskian “molecular squiggle” maximizers (renamed from paperclip maximizers) are AIs whose values have become incredibly simple and scalable, to the point where they seem absurd to humans. So squiggle-maximizers could be described as taking value systematization to an extreme. However, the value systematization framework also provides some reasons to be skeptical of this possibility.

Firstly, squiggle-maximization is an extreme example of prioritizing simplicity over conservatism. Squiggle-maximizers would start off with goals that are more closely related to the tasks they are trained on; and then gradually systematize them. But from the perspective of their earlier versions, squiggle-maximization would be an alien and undesirable goal; so if they started off anywhere near as conservative as humans, they’d be hesitant to let their values change so radically. And if anything, I expect early AGIs to be more conservative than humans—because human brains are much more size- and data-constrained than artificial neural networks, and so AGIs probably won’t need to prioritize simplicity as much as we do to match our capabilities in most domains.

Secondly, even for agents that heavily prioritize simplicity, it’s not clear that the simplest values would in fact be very low-level ones. I’ve argued that the complexity of values should be thought of in the context of an existing world-model. But even superintelligences won’t have world-models which are exclusively formulated at very low levels; instead, like humans, they’ll have hierarchical world-models which contain concepts at many different scales. So values like “maximizing intelligence” or “maximizing power” will plausibly be relatively simple even in the ontologies of superintelligences, while being much more closely related to their original values than molecular squiggles are; and more aligned values like “maximizing human flourishing” might not be so far behind, for roughly the same reasons.

How does value systematization relate to reward tampering?

Value systematization is one mechanism by which reward tampering might arise: the systematization of existing values which are correlated with high reward or low loss (such as completing tasks or hiding mistakes) might give rise to the new value of getting high reward or low loss directly (which I call feedback-mechanism-related values). This will require that models have the situational awareness to understand that they’re part of an ML training process.

However, while feedback-mechanism-related values are very simple in the context of training, they are underdefined once training stops. There's no clear way to generalize feedback-mechanism-related values to deployment (analogous to how there's no clear way to generalize "what evolution would have wanted" when making decisions about the future of humanity). And so I expect that continued value systematization will push models towards prioritizing values which are well-defined across a broader range of contexts, including ones where there are no feedback mechanisms active.

One counterargument from Paul Christiano is that AIs could learn to care about reward conditional on their episode being included in the training data. However, the concept of "being included in the training data" seems like a messy one with many edge cases (e.g. what if it depends on the model's actions during the episode? What if there are many different versions of the model being fine-tuned? What if some episodes are used for different types of training from others?) And in cases where they have strong evidence that they’re not in training, they’d need to figure out what maximizing reward would look like in a bizarre low-probability world, which will also often be underspecified (akin to asking a human in a surreal dream “what would you do if this were all real?”). So I still expect that, even if AIs learn to care about conditional reward initially, over time value systematization would push them towards caring more about real-world outcomes whether they're in training or not.

How do simplicity and conservatism relate to previous discussions of simplicity versus speed priors?

I’ve previously thought about value systematization in terms of a trade-off between a simplicity prior and a speed prior, but I’ve now changed my mind about that. It’s true that more systematized values tend to be higher-level, adding computational overhead to figuring out what to do—consider a utilitarian trying to calculate from first principles which actions are good or bad. But in practice, that cost is amortized over a large number of actions: you can “cache” instrumental goals and then default to pursuing them in most cases (as utilitarians usually do). And less systematized values face the problem of often being inapplicable or underdefined, making it slow and hard to figure out what actions they endorse—think of deontologists who have no systematic procedure for deciding what to do when two values clash, or religious scholars who endlessly debate how each specific rule in the Bible or Torah applies to each facet of modern life.

Because of this, I now think that “simplicity versus conservatism” is a better frame than “simplicity versus speed”. However, note my discussion in the “Grounding value systematization” section of the relationship between simplicity of values and simplicity of world-models. I expect that to resolve this uncertainty we’ll need a more sophisticated understanding of which types of simplicity will be prioritized during training.

How does value systematization relate to the shard framework?

Some alignment researchers advocate for thinking about AI motivations in terms of “shards”: subagents that encode separate motivations, where interactions and “negotiations” between different shards determine the goals that agents try to achieve. At a high level, I’m sympathetic to this perspective, and it’s broadly consistent with the ideas I’ve laid out in this post. The key point that seems to be missing in discussions of shards, though, is that systematization might lead to major changes in an agent’s motivations, undermining some previously-existing motivations. Or, in shard terminology: negotiations between shards might lead to coalitions which give some shards almost no power. For example, someone might start off strongly valuing honesty as a terminal value. But after value systematization they might become a utilitarian, conclude that honesty is only valuable for instrumental reasons, and start lying whenever it’s useful. Because of this, I’m skeptical of appeals to shards as part of arguments that AI risk is very unlikely. However, I still think that work on characterizing and understanding shards is very valuable.

Isn’t value systematization very speculative?

Yes. But I also think it’s a step towards making even more speculative concepts that often underlie discussions of AI risk (like “coherence” or “lawfulness”) better-defined. So I’d like help making it less speculative; get in touch if you’re interested.

49 comments


comment by Kaj_Sotala · 2023-10-28T20:13:35.540Z · LW(p) · GW(p)

Morality seems like the domain where humans have the strongest instinct to systematize our preferences

At least, the domain where modern educated Western humans have an instinct to systematize our preferences. Interestingly, it seems the kind of extensive value systematization done in moral philosophy may itself be an example of belief systematization. Scientific thinking taught people the mental habit of systematizing things, and then those habits led them to start systematizing values too, as a special case of "things that can be systematized".

Phil Goetz had this anecdote:

I'm also reminded of a talk I attended by one of the Dalai Lama's assistants. This was not slick, Westernized Buddhism; this was saffron-robed fresh-off-the-plane-from-Tibet Buddhism. He spoke about his beliefs, and then took questions. People began asking him about some of the implications of his belief that life, love, feelings, and the universe as a whole are inherently bad and undesirable. He had great difficulty comprehending the questions - not because of his English, I think; but because the notion of taking a belief expressed in one context, and applying it in another, seemed completely new to him. To him, knowledge came in units; each unit of knowledge was a story with a conclusion and a specific application. (No wonder they think understanding Buddhism takes decades.) He seemed not to have the idea that these units could interact; that you could take an idea from one setting, and explore its implications in completely different settings.

David Chapman has a page talking about how fundamentalist forms of religion are a relatively recent development, a consequence of how secular people first started systematizing values and then religion had to start doing the same in order to adapt:

Fundamentalism describes itself as traditional and anti-modern. This is inaccurate. Early fundamentalism was anti-modernist, in the special sense of “modernist theology,” but it was itself modernist in a broad sense. Systems of justifications are the defining feature of “modernity,” as I (and many historians) use the term.

The defining feature of actual tradition—“the choiceless mode”—is the absence of a system of justifications: chains of “therefore” and “because” that explain why you have to do what you have to do. In a traditional culture, you just do it, and there is no abstract “because.” How-things-are-done is immanent in concrete customs, not theorized in transcendent explanations.

Genuine traditions have no defense against modernity. Modernity asks “Why should anyone believe this? Why should anyone do that?” and tradition has no answer. (Beyond, perhaps, “we always have.”) Modernity says “If you believe and act differently, you can have 200 channels of cable TV, and you can eat fajitas and pad thai and sushi instead of boiled taro every day”; and every genuinely traditional person says “hell yeah!” Because why not? Choice is great! (And sushi is better than boiled taro.)

Fundamentalisms try to defend traditions by building a system of justification that supplies the missing “becauses.” You can’t eat sushi because God hates shrimp. How do we know? Because it says so here in Leviticus 11:10-11.

Secular modernism tries to answer every “why” question with a chain of “becauses” that eventually ends in “rationality,” which magically reveals Ultimate Truth. Fundamentalist modernism tries to answer every “why” with a chain that eventually ends in “God said so right here in this magic book which contains the Ultimate Truth.”

The attempt to defend tradition can be noble; tradition is often profoundly good in ways modernity can never be. Unfortunately, fundamentalism, by taking up modernity’s weapons, transforms a traditional culture into a modern one. “Modern,” that is, in having a system of justification, founded on a transcendent eternal ordering principle. And once you have that, much of what is good about tradition is lost.

This is currently easier to see in Islamic than in Christian fundamentalism. Islamism is widely viewed as “the modern Islam” by young people. That is one of its main attractions: it can explain itself, where traditional Islam cannot. Sophisticated urban Muslims reject their grandparents’ traditional religion as a jumble of pointless, outmoded village customs with no basis in the Koran. Many consider fundamentalism the forward-looking, global, intellectually coherent religion that makes sense of everyday life and of world politics.

Jonathan Haidt also talked about the way that even among Westerners, requiring justification and trying to ground everything in harm/care is most prominent in educated people (who had been socialized to think about morality in this way) as opposed to working-class people. Excerpts from The Righteous Mind where he talks about reading people stories about victimless moral violations (e.g. having sex with a dead chicken before eating it) to see how they thought about them:

I got my Ph.D. at McDonald’s. Part of it, anyway, given the hours I spent standing outside of a McDonald’s restaurant in West Philadelphia trying to recruit working-class adults to talk with me for my dissertation research. When someone agreed, we’d sit down together at the restaurant’s outdoor seating area, and I’d ask them what they thought about the family that ate its dog, the woman who used her flag as a rag, and all the rest. I got some odd looks as the interviews progressed, and also plenty of laughter—particularly when I told people about the guy and the chicken. I was expecting that, because I had written the stories to surprise and even shock people.

But what I didn’t expect was that these working-class subjects would sometimes find my request for justifications so perplexing. Each time someone said that the people in a story had done something wrong, I asked, “Can you tell me why that was wrong?” When I had interviewed college students on the Penn campus a month earlier, this question brought forth their moral justifications quite smoothly. But a few blocks west, this same question often led to long pauses and disbelieving stares. Those pauses and stares seemed to say, You mean you don’t know why it’s wrong to do that to a chicken? I have to explain this to you? What planet are you from?

These subjects were right to wonder about me because I really was weird. I came from a strange and different moral world—the University of Pennsylvania. Penn students were the most unusual of all twelve groups in my study. They were unique in their unwavering devotion to the “harm principle,” which John Stuart Mill had put forth in 1859: “The only purpose for which power can be rightfully exercised over any member of a civilized community, against his will, is to prevent harm to others.” As one Penn student said: “It’s his chicken, he’s eating it, nobody is getting hurt.”

The Penn students were just as likely as people in the other eleven groups to say that it would bother them to witness the taboo violations, but they were the only group that frequently ignored their own feelings of disgust and said that an action that bothered them was nonetheless morally permissible. And they were the only group in which a majority (73 percent) were able to tolerate the chicken story. As one Penn student said, “It’s perverted, but if it’s done in private, it’s his right.” [...]

Haidt also talks about this kind of value systematization being uniquely related to Western mental habits:

I and my fellow Penn students were weird in a second way too. In 2010, the cultural psychologists Joe Henrich, Steve Heine, and Ara Norenzayan published a profoundly important article titled “The Weirdest People in the World?” The authors pointed out that nearly all research in psychology is conducted on a very small subset of the human population: people from cultures that are Western, educated, industrialized, rich, and democratic (forming the acronym WEIRD). They then reviewed dozens of studies showing that WEIRD people are statistical outliers; they are the least typical, least representative people you could study if you want to make generalizations about human nature. Even within the West, Americans are more extreme outliers than Europeans, and within the United States, the educated upper middle class (like my Penn sample) is the most unusual of all.

Several of the peculiarities of WEIRD culture can be captured in this simple generalization: The WEIRDer you are, the more you see a world full of separate objects, rather than relationships. It has long been reported that Westerners have a more independent and autonomous concept of the self than do East Asians. For example, when asked to write twenty statements beginning with the words “I am …,” Americans are likely to list their own internal psychological characteristics (happy, outgoing, interested in jazz), whereas East Asians are more likely to list their roles and relationships (a son, a husband, an employee of Fujitsu).

The differences run deep; even visual perception is affected. In what’s known as the framed-line task, you are shown a square with a line drawn inside it. You then turn the page and see an empty square that is larger or smaller than the original square. Your task is to draw a line that is the same as the line you saw on the previous page, either in absolute terms (same number of centimeters; ignore the new frame) or in relative terms (same proportion relative to the frame). Westerners, and particularly Americans, excel at the absolute task, because they saw the line as an independent object in the first place and stored it separately in memory. East Asians, in contrast, outperform Americans at the relative task, because they automatically perceived and remembered the relationship among the parts.

Related to this difference in perception is a difference in thinking style. Most people think holistically (seeing the whole context and the relationships among parts), but WEIRD people think more analytically (detaching the focal object from its context, assigning it to a category, and then assuming that what’s true about the category is true about the object). Putting this all together, it makes sense that WEIRD philosophers since Kant and Mill have mostly generated moral systems that are individualistic, rule-based, and universalist. That’s the morality you need to govern a society of autonomous individuals.

But when holistic thinkers in a non-WEIRD culture write about morality, we get something more like the Analects of Confucius, a collection of aphorisms and anecdotes that can’t be reduced to a single rule. Confucius talks about a variety of relationship-specific duties and virtues (such as filial piety and the proper treatment of one’s subordinates). If WEIRD and non-WEIRD people think differently and see the world differently, then it stands to reason that they’d have different moral concerns. If you see a world full of individuals, then you’ll want the morality of Kohlberg and Turiel—a morality that protects those individuals and their individual rights. You’ll emphasize concerns about harm and fairness.

But if you live in a non-WEIRD society in which people are more likely to see relationships, contexts, groups, and institutions, then you won’t be so focused on protecting individuals. You’ll have a more sociocentric morality, which means (as Shweder described it back in chapter 1) that you place the needs of groups and institutions first, often ahead of the needs of individuals. If you do that, then a morality based on concerns about harm and fairness won’t be sufficient. You’ll have additional concerns, and you’ll need additional virtues to bind people together.

comment by Thane Ruthenis · 2023-10-27T21:39:10.634Z · LW(p) · GW(p)

I'd previously sketched out a model basically identical to this one; see here and especially here.

... but I've since updated away from it, in favour of an even simpler explanation.

The major issue with this model is the assumption that either (1) the SGD/evolution/whatever-other-selection-pressure will always convergently instill the drive for doing value systematization into the mind it's shaping, or (2) that agents will somehow independently arrive at it on their own; and that this drive will have overwhelming power, enough to crush the object-level values. But why?

I'd had my own explanation, but let's begin with your arguments. I find them unconvincing.

- First, the reason we expect value compilation/systematization to begin with is that we observe it in humans, and human minds are not trained the way NN models are trained. Moreover, the instances where we note value systematization in humans seem to have very little to do with blind-idiot training algorithms (like the SGD or evolution) at all. Instead, it tends to happen when humans leverage their full symbolic intelligence to do moral philosophy.

- So the SGD-specific explanations are right out.

- So we're left with the "the mind itself wants to do this" class of explanations. But it doesn't really make sense. If you value X, Y, Z, and those are your terminal values, whyever would you choose to rewrite yourself to care about W instead? If W is a "simple generator" of X, Y, Z, that would change... precisely nothing. You care about X, Y, Z, not about W; nothing more to be said. Unless given a compelling external reason to switch to W, you won't do that.

- At most you'll use W as a simpler proxy measure for fast calculations of plans in high-intensity situations. But you'd still periodically "look back" at X, Y, Z to ensure you're still following them; W would ever remain just an instrumental proxy.

So, again, we need the drive for value systematization to be itself a value, and such a strong one that it's able to frequently overpower whole coalitions of object-level values. Why would that happen?

My sketch went roughly as follows: Suppose we have a system at an intermediary stage of training. So far it's pretty dumb; all instinct and no high-level reasoning. The training objective is $U$; the system implements a set of contextually-activated shards/heuristics that cause it to engage in contextual behaviors $b_i$. Engaging in any $b_i$ is correlated with optimizing for $U$, but every $b_i$ is just that: an "upstream correlate" of $U$, and it's only a valid correlate in some specific context. Outside that context, following $b_i$ would not lead to optimizing for $U$; and the optimizing-for-$U$ behavior is only achieved by a careful balance of the contextual behaviors.

Now suppose we've entered the stage of training at which higher-level symbolic intelligence starts to appear. We've grown a mesa-optimizer. We now need to point it at something; some goal that's correlated with $U$. Problem: we can only point it at goals whose corresponding concepts are present in its world-model, and $U$ might not even be there yet! (Stone-age humans had no idea about inclusive genetic fitness or pleasure-maximization.) We only have a bunch of $b_i$s...

In that case, instilling a drive for value systematization seems like the right thing to do. No single $b_i$ is a proper proxy for $U$, but the weighted sum of them, $\sum_i w_i b_i$, is. So that's what we point our newborn agent at. We essentially task it with figuring out for what purpose it was optimized, and then tell it to go do that thing. We hard-wire this objective into it, and make it have an overriding priority.

(But of course $\sum_i w_i b_i$ is still an imperfect proxy for $U$, and the agent's attempts to figure out $\sum_i w_i b_i$ are imperfect as well, so it still ends up misaligned from $U$.)

That story still seems plausible to me. It's highly convoluted, but it makes sense.

... but I don't think there's any need for it.

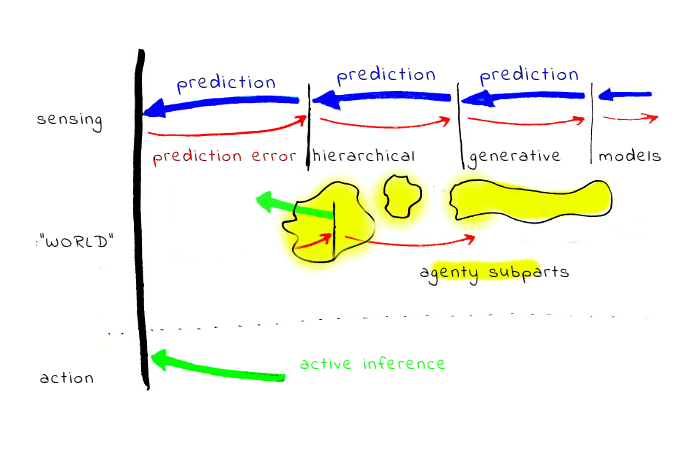

I think "value systematization" is simply the reflection of the fact that the world can be viewed as a series of hierarchical ever-more-abstract models.

- Suppose we have a low-level model of reality $M_0$, with $n_0$ variables (atoms, objects, whatever).

- Suppose we "abstract up", deriving a simpler model of the world $M_1$, with $n_1$ variables. Each variable $x^1_j$ in it is an abstraction over some set of lower-level variables $\{x^0_k\}$, such that $x^1_j = f_j(\{x^0_k\})$.

- We iterate, to $M_2$, $M_3$, ..., $M_N$. Caveat: $n_{i+1} < n_i$. Since each subsequent level is simpler, it contains fewer variables. People to social groups to countries to the civilization; atoms to molecules to macro-scale objects to astronomical objects; etc.

- Let's define the function $F(x^{i+1}_j) = P(\{x^i_k\} \mid x^{i+1}_j)$. I. e.: it returns a probability distribution over the low-level variables given the state of a high-level variable that abstracts over them. (E. g., if the world economy is in this state, how happy is my grandmother likely to be?)

- If we view our values as a utility function $U$, we can "translate" our utility function from any $M_i$ to $M_{i+1}$ roughly as follows: $U(x^{i+1}_j) = \sum_{\{x^i_k\}} P(\{x^i_k\} \mid x^{i+1}_j) \, U(\{x^i_k\})$. (There's a ton of complications there, but this expression conveys the concept.)

... and then value systematization just naturally falls out of this.

Suppose we have a bunch of values at the $i$th abstraction level. Once we start frequently reasoning at the $(i+1)$th level, we "translate" our values to it, and cache the resultant values. Since the $(i+1)$th level likely has fewer variables than the $i$th, the mapping-up is not injective: some values defined over different low-level variables end up translated to the same higher-level variable ("I like Bob and Alice" -> "I like people"). This effect only strengthens as we go up higher and higher; and at the highest level, we can plausibly end up with only one variable we value ("eudaimonia" or something).
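As a rough illustration of the "translate and cache" step described above, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the names `low_level_utility`, `abstraction`, and `translate_up` are hypothetical, the numbers are made up, and a uniform distribution over the low-level variables inside each group stands in for the conditional probability. It only shows that averaging up through a many-to-one map collapses several low-level values into a single higher-level one.

```python
from collections import defaultdict

# Hypothetical low-level utilities over individual people.
low_level_utility = {"Alice": 1.0, "Bob": 0.8, "Carol": 0.0}

# Hypothetical abstraction map: each low-level variable belongs to one
# higher-level variable. Several keys share a value, so the map is not injective.
abstraction = {
    "Alice": "people I know",
    "Bob": "people I know",
    "Carol": "strangers",
}


def translate_up(utility, abstraction, weights=None):
    """Return higher-level utilities as weighted averages of low-level ones.

    `weights` stands in for P(low-level variable | high-level variable);
    a uniform distribution within each group is assumed when it is omitted.
    """
    totals = defaultdict(float)
    mass = defaultdict(float)
    for var, value in utility.items():
        w = 1.0 if weights is None else weights[var]
        group = abstraction[var]
        totals[group] += w * value
        mass[group] += w
    return {group: totals[group] / mass[group] for group in totals}


# The cached, higher-level version of the same values.
high_level_utility = translate_up(low_level_utility, abstraction)
print(high_level_utility)
```

Three low-level values go in and two higher-level values come out; repeating the step with a coarser `abstraction` would shrink the set further.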

That does not mean we stop caring about our lower-level values. Nay: those translations are still instrumental, we simply often use them to save on processing costs.

... or so it ideally should be. But humans are subject to value drift. An ideal agent would never forget the distinction between the terminal and the instrumental; humans do. And so the more often a given human reasons at larger scales compared to lower scales, the more they "drift" towards higher-level values, such as going from deontology to utilitarianism.

Value concretization is simply the exact same process, but mapping a higher-level value down the abstraction levels.

For me, at least, this explanation essentially dissolves the question of value systematization; I perceive no leftover confusion.

In a very real sense, it can't work any other way.

Replies from: ricraz↑ comment by Richard_Ngo (ricraz) · 2023-10-27T23:24:24.519Z · LW(p) · GW(p)

Thanks for the comment! I agree that thinking of minds as hierarchically modeling the world is very closely related to value systematization.

But I think the mistake you're making is to assume that the lower levels are preserved after finding higher-level abstractions. Instead, higher-level abstractions reframe the way we think about lower-level abstractions, which can potentially change them dramatically. This is what happens with most scientific breakthroughs: we start with lower-level phenomena, but we don't understand them very well until we discover the higher-level abstraction.

For example, before Darwin people had some concept that organisms seemed to be "well-fitted" for their environments, but it was a messy concept entangled with their theological beliefs. After Darwin, their concept of fitness changed. It's not that they've drifted into using the new concept, it's that they've realized that the old concept was under-specified and didn't really make sense.

Similarly, suppose you have two deontological values which trade off against each other. Before systematization, the question of "what's the right way to handle cases where they conflict" is not really well-defined; you have no procedure for doing so. After systematization, you do. (And you also have answers to questions like "what counts as lying?" or "is X racist?", which without systematization are often underdefined.)

That's where the tradeoff comes from. You can conserve your values (i.e. continue to care terminally about lower-level representations) but the price you pay is that they make less sense, and they're underdefined in a lot of cases. Or you can simplify your values (i.e. care terminally about higher-level representations) but the price you pay is that the lower-level representations might change a lot.

And that's why the "mind itself wants to do this" does make sense, because it's reasonable to assume that highly capable cognitive architectures will have ways of identifying aspects of their thinking that "don't make sense" and correcting them.

Replies from: Kaj_Sotala, Thane Ruthenis, Wei_Dai↑ comment by Kaj_Sotala · 2023-10-28T20:42:50.238Z · LW(p) · GW(p)

Similarly, suppose you have two deontological values which trade off against each other. Before systematization, the question of "what's the right way to handle cases where they conflict" is not really well-defined; you have no procedure for doing so. After systematization, you do. (And you also have answers to questions like "what counts as lying?" or "is X racist?", which without systematization are often underdefined.) [...]

You can conserve your values (i.e. continue to care terminally about lower-level representations) but the price you pay is that they make less sense, and they're underdefined in a lot of cases. [...] And that's why the "mind itself wants to do this" does make sense, because it's reasonable to assume that highly capable cognitive architectures will have ways of identifying aspects of their thinking that "don't make sense" and correcting them.

I think we should be careful to distinguish explicit and implicit systematization. Some of what you are saying (e.g. getting answers to questions like "what counts as lying") sounds like you are talking about explicit, consciously done systematization; but some of what you are saying (e.g. minds identifying aspects of thinking that "don't make sense" and correcting them) also sounds like it'd apply more generally to developing implicit decision-making procedures.

I could see the deontologist solving their problem either way - by developing some explicit procedure and reasoning for solving the conflict between their values, or just going by a gut feel for which value seems to make more sense to apply in that situation and the mind then incorporating this decision into its underlying definition of the two values.

I don't know how exactly deontological rules work, but I'm guessing that you could solve a conflict between them by basically just putting in a special case for "in this situation, rule X wins over rule Y" - and if you view the rules as regions in state space where the region for rule X corresponds to the situations where rule X is applied, then adding data points about which rule is meant to cover which situation ends up modifying the rule itself. It would also be similar to the way that rules work in skill learning in general, in that experts find the rules getting increasingly fine-grained, implicit and full of exceptions. Here's how Josh Waitzkin describes the development of chess expertise:

Let’s say that I spend fifteen years studying chess. [...] We will start with day one. The first thing I have to do is to internalize how the pieces move. I have to learn their values. I have to learn how to coordinate them with one another. [...]

Soon enough, the movements and values of the chess pieces are natural to me. I don’t have to think about them consciously, but see their potential simultaneously with the figurine itself. Chess pieces stop being hunks of wood or plastic, and begin to take on an energetic dimension. Where the piece currently sits on a chessboard pales in comparison to the countless vectors of potential flying off in the mind. I see how each piece affects those around it. Because the basic movements are natural to me, I can take in more information and have a broader perspective of the board. Now when I look at a chess position, I can see all the pieces at once. The network is coming together.

Next I have to learn the principles of coordinating the pieces. I learn how to place my arsenal most efficiently on the chessboard and I learn to read the road signs that determine how to maximize a given soldier’s effectiveness in a particular setting. These road signs are principles. Just as I initially had to think about each chess piece individually, now I have to plod through the principles in my brain to figure out which apply to the current position and how. Over time, that process becomes increasingly natural to me, until I eventually see the pieces and the appropriate principles in a blink. While an intermediate player will learn how a bishop’s strength in the middlegame depends on the central pawn structure, a slightly more advanced player will just flash his or her mind across the board and take in the bishop and the critical structural components. The structure and the bishop are one. Neither has any intrinsic value outside of its relation to the other, and they are chunked together in the mind.

This new integration of knowledge has a peculiar effect, because I begin to realize that the initial maxims of piece value are far from ironclad. The pieces gradually lose absolute identity. I learn that rooks and bishops work more efficiently together than rooks and knights, but queens and knights tend to have an edge over queens and bishops. Each piece’s power is purely relational, depending upon such variables as pawn structure and surrounding forces. So now when you look at a knight, you see its potential in the context of the bishop a few squares away. Over time each chess principle loses rigidity, and you get better and better at reading the subtle signs of qualitative relativity. Soon enough, learning becomes unlearning. The stronger chess player is often the one who is less attached to a dogmatic interpretation of the principles. This leads to a whole new layer of principles—those that consist of the exceptions to the initial principles. Of course the next step is for those counterintuitive signs to become internalized just as the initial movements of the pieces were. The network of my chess knowledge now involves principles, patterns, and chunks of information, accessed through a whole new set of navigational principles, patterns, and chunks of information, which are soon followed by another set of principles and chunks designed to assist in the interpretation of the last. Learning chess at this level becomes sitting with paradox, being at peace with and navigating the tension of competing truths, letting go of any notion of solidity.

"Sitting with paradox, being at peace with and navigating the tension of competing truths, letting go of any notion of solidity" also sounds to me like some of the models for higher stages of moral development, where one moves past the stage of trying to explicitly systematize morality and can treat entire systems of morality as things that all co-exist in one's mind and are applicable in different situations. Which would make sense, if moral reasoning is a skill in the same sense that playing chess is a skill, and moral preferences are analogous to a chess expert's preferences for which piece to play where.

Replies from: Matthew_Opitz↑ comment by Matthew_Opitz · 2023-10-29T00:42:31.189Z · LW(p) · GW(p)

Except that chess really does have an objectively correct value systemization, which is "win the game." "Sitting with paradox" just means, don't get too attached to partial systemizations. It reminds me of Max Stirner's egoist philosophy, which emphasized that individuals should not get hung up on partial abstractions or "idées fixées" (honesty, pleasure, success, money, truth, etc.) except perhaps as cheap, heuristic proxies for one's uber-systematized value of self-interest, but one should instead always keep in mind the overriding abstraction of self-interest and check in periodically as to whether one's commitment to honesty, pleasure, success, money, truth, or any of these other "spooks" really are promoting one's self-interest (perhaps yes, perhaps no).

Replies from: Kaj_Sotala↑ comment by Kaj_Sotala · 2023-10-29T07:00:46.515Z · LW(p) · GW(p)

Except that chess really does have an objectively correct value systemization, which is "win the game."

Your phrasing sounds like you might be saying this as an objection to what I wrote, but I'm not sure how it would contradict my comment.

The same mechanisms can still apply even if the correct systematization is subjective in one case and objective in the second case. Ultimately what matters is that the cognitive system feels that one alternative is better than the other and takes that feeling as feedback for shaping future behavior, and I think that the mechanism which updates on feedback doesn't really see whether the source of the feedback is something we'd call objective (win or loss at chess) or subjective (whether the resulting outcome was good in terms of the person's pre-existing values).

"Sitting with paradox" just means, don't get too attached to partial systemizations.

Yeah, I think that's a reasonable description of what it means in the context of morality too.

↑ comment by Thane Ruthenis · 2023-10-28T00:10:38.962Z · LW(p) · GW(p)

But I think the mistake you're making is to assume that the lower levels are preserved after finding higher-level abstractions. Instead, higher-level abstractions reframe the way we think about lower-level abstractions, which can potentially change them dramatically

Mm, I think there's two things being conflated there: ontological crises (even small-scale ones, like the concept of fitness not being outright destroyed but just re-shaped), and the simple process of translating your preference around the world-model without changing that world-model.

It's not actually the case that the derivation of a higher abstraction level always changes our lower-level representation. Again, consider people -> social groups -> countries. Our models of specific people we know, how we relate to them, etc., don't change just because we've figured out a way to efficiently reason about entire groups of people at once. We can now make better predictions about the world, yes, we can track the impact of more-distant factors on our friends, but we don't actually start to care about our friends in a different way in the light of all this.

In fact: Suppose we've magically created an agent that already starts out with a perfect world-model. It'll never experience an ontology crisis in its life. This agent would still engage in value translation as I'd outlined. If it cares about Alice and Bob, for example, and it's engaging in plotting at the geopolitical scales, it'd still be useful for it to project its care for Alice and Bob into higher abstraction levels, and start e. g. optimizing towards the improvement of the human economy. But optimizing for all humans' welfare would still remain an instrumental goal for it, wholly subordinate to its love for the two specific humans.

Similarly, suppose you have two deontological values which trade off against each other. Before systematization, the question of "what's the right way to handle cases where they conflict" is not really well-defined; you have no procedure for doing so

I think you do, actually? Inasmuch as real-life deontologists don't actually shut down when facing a values conflict. They ultimately pick one or the other, in a show of revealed preferences. (They may hesitate a lot, yes, but their cognitive process doesn't get literally suspended.)

I model this just as an agent having two utility functions, $U_1$ and $U_2$, and optimizing for their sum $U_1 + U_2$. If the values are in conflict, if taking an action that maximizes $U_1$ hurts $U_2$ and vice versa — well, one of them almost surely spits out a higher value, so the maximization of $U_1 + U_2$ is still well-defined. And this is how that goes in practice: the deontologist hesitates a bit, figuring out which it values more, but ultimately acts.
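To make the summed-utility picture concrete, here is a small Python sketch. The two rules, the action names, and every number are hypothetical; the point is only that when the two utilities disagree about the best action, maximizing their sum still returns a definite answer.

```python
# Hypothetical scores two rules assign to each available action.
# Higher is better; the numbers are made up for illustration.
u_honesty = {"tell the truth": 1.0, "tell a white lie": -1.0}
u_kindness = {"tell the truth": -0.5, "tell a white lie": 0.7}


def choose(actions, *utilities):
    """Pick the action with the highest summed utility."""
    return max(actions, key=lambda a: sum(u[a] for u in utilities))


actions = list(u_honesty)

# Each value on its own prefers a different action...
print(max(actions, key=u_honesty.get))
print(max(actions, key=u_kindness.get))

# ...but the sum still picks exactly one of them.
print(choose(actions, u_honesty, u_kindness))
```

With these particular numbers the sum favours telling the truth (0.5 versus -0.3); different magnitudes would flip the choice, which is the "figuring out which it values more" step.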

There's a different story about "pruning" values that I haven't fully thought out yet, but it seems simple at a glance. E. g., suppose you have values $U_1$, $U_2$, $U_3$, but optimizing for $U_3$ is always predicted to minimize $U_1$ and $U_2$, and $U_3$ is always smaller than $U_1 + U_2$. (E. g., a psychopath loves money, power, and expects to get a slight thrill if he publicly kills a person.) In that case, it makes sense to just delete $U_3$ — it's pointless to waste processing power on including it in your tradeoff computations, since it's always outvoted (the psychopath conditions himself to remove the homicidal urge).

There's some more general principle here, where agents notice such consistently-outvoted scenarios and modify their values into a format where they're effectively equivalent (still lead to the exact same actions in all situations) but simpler to compute. E. g., if $U_3$ sometimes got high enough to outvote $U_1 + U_2$, it'd still make sense for the agent to optimize it by replacing it with $U_3'$ that only activated on those higher values (and didn't pointlessly muddy up the computations otherwise).
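The pruning idea can be checked mechanically on a toy case. The sketch below is an illustration under made-up assumptions: the scenarios, action names, and scores are hypothetical, and the third value is constructed so that it is always outvoted by the first two. It compares the choices made with all three values against the choices made after dropping the third.

```python
ACTIONS = ["abstain", "act on urge"]

# Each scenario gives hypothetical scores (value 1, value 2, value 3) per action.
# Value 3 always prefers "act on urge", but never by enough to outweigh
# what values 1 and 2 lose there.
scenarios = [
    {"abstain": (2.0, 1.0, 0.0), "act on urge": (-3.0, -2.0, 0.5)},
    {"abstain": (1.0, 0.5, 0.0), "act on urge": (-1.0, -4.0, 0.9)},
    {"abstain": (0.3, 0.2, 0.0), "act on urge": (-0.5, -0.5, 0.4)},
]


def choose(scenario, active):
    """Return the best action using only the value indices listed in `active`."""
    return max(ACTIONS, key=lambda a: sum(scenario[a][i] for i in active))


full_choices = [choose(s, active=(0, 1, 2)) for s in scenarios]
pruned_choices = [choose(s, active=(0, 1)) for s in scenarios]

# If the two lists match, the pruned value set is behaviourally equivalent
# on these scenarios while being cheaper to evaluate.
print(full_choices == pruned_choices)
```

The check only covers the listed scenarios; a value that is outvoted in every observed case could still matter in an unobserved one, which is where the threshold-gated replacement mentioned above would come in.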

But note that all of this is happening at the same abstraction level. It's not how you go from deontology to utilitarianism — it's how you work out the kinks in your deontological framework.

Replies from: ricraz↑ comment by Richard_Ngo (ricraz) · 2023-10-28T19:19:47.727Z · LW(p) · GW(p)

It's not actually the case that the derivation of a higher abstraction level always changes our lower-level representation. Again, consider people -> social groups -> countries. Our models of specific people we know, how we relate to them, etc., don't change just because we've figured out a way to efficiently reason about entire groups of people at once. We can now make better predictions about the world, yes, we can track the impact of more-distant factors on our friends, but we don't actually start to care about our friends in a different way in the light of all this.

I actually think this type of change is very common—because individuals' identities are very strongly interwoven with the identities of the groups they belong to. You grow up as a kid and even if you nominally belong to a given (class/political/religious) group, you don't really understand it very well. But then over time you construct your identity as X type of person, and that heavily informs your friendships—they're far less likely to last when they have to bridge very different political/religious/class identities. E.g. how many college students with strong political beliefs would say that it hasn't impacted the way they feel about friends with opposing political beliefs?

Inasmuch as real-life deontologists don't actually shut down when facing a values conflict. They ultimately pick one or the other, in a show of revealed preferences.

I model this just as an agent having two utility functions, $U_1$ and $U_2$, and optimizing for their sum $U_1 + U_2$.

This is a straightforwardly incorrect model of deontologists; the whole point of deontology is rejecting the utility-maximization framework. Instead, deontologists have a bunch of rules and heuristics (like "don't kill"). But those rules and heuristics are underdefined in the sense that they often endorse different lines of reasoning which give different answers. For example, they'll say pulling the lever in a trolley problem is right, but pushing someone onto the tracks is wrong, but also there's no moral difference between doing something via a lever or via your own hands.

I guess technically you could say that the procedure for resolving this is "do a bunch of moral philosophy" but that's basically equivalent to "do a bunch of systematization".

Suppose we've magically created an agent that already starts out with a perfect world-model. It'll never experience an ontology crisis in its life. This agent would still engage in value translation as I'd outlined.

...

But optimizing for all humans' welfare would still remain an instrumental goal for it, wholly subordinate to its love for the two specific humans.

Yeah, I totally agree with this. The question is then: why don't translated human goals remain instrumental? It seems like your answer is basically just that it's a design flaw in the human brain, of allowing value drift; the same type of thing which could in principle happen in an agent with a perfect world-model. And I agree that this is probably part of the effect. But it seems to me that, given that humans don't have perfect world-models, the explanation I've given (that systematization makes our values better-defined) is more likely to be the dominant force here.

Replies from: Thane Ruthenis↑ comment by Thane Ruthenis · 2023-10-28T21:20:33.599Z · LW(p) · GW(p)

I actually think this type of change is very common—because individuals' identities are very strongly interwoven with the identities of the groups they belong to

Mm, I'll concede that point. I shouldn't have used people as an example; people are messy.

Literal gears, then. Suppose you're studying some massive mechanism. You find gears in it, and derive the laws by which each individual gear moves. Then you grasp some higher-level dynamics, and suddenly understand what function a given gear fulfills in the grand scheme of things. But your low-level model of a specific gear's dynamics didn't change — locally, it was as correct as it could ever be.

And if you had a terminal utility function over that gear (e. g., "I want it to spin at the rate of 1 rotation per minute"), that utility function won't change in the light of your model expanding, either. Why would it?

the whole point of deontology is rejecting the utility-maximization framework. Instead, deontologists have a bunch of rules and heuristics

... which can be represented as utility functions. Take a given deontological rule, like "killing is bad". Let's say we view it as a constraint on the allowable actions; or, in other words, a probability distribution over your actions that "predicts" that you're very likely/unlikely to take specific actions. Probability distributions of this form could be transformed into utility functions by reverse-softmaxing them; thus, it's perfectly coherent to model a deontologist as an agent with a lot of separate utility functions.

See Friston's predictive-processing framework in neuroscience, plus this [LW · GW] (and that comment [LW(p) · GW(p)]).

Deontologists reject utility-maximization in the sense that they refuse to engage in utility-maximizing calculations using their symbolic intelligence, but similar dynamics are still at play "under the hood".
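The "reverse-softmaxing" step mentioned above can be made concrete with a minimal sketch. It assumes the rule is expressed as a probability distribution over a small, finite set of actions and that the temperature is 1; the action names and probabilities are hypothetical, chosen only for illustration. Note that softmax is only invertible up to an additive constant, so the sketch pins the most likely action's utility at 0.

```python
import math

def softmax(utilities, temperature=1.0):
    """Map utilities to action probabilities."""
    scaled = [u / temperature for u in utilities]
    m = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

def reverse_softmax(probs, temperature=1.0):
    """Recover utilities from action probabilities, up to an additive constant.

    The constant is fixed by setting the most likely action's utility to 0.
    """
    logs = [temperature * math.log(p) for p in probs]
    top = max(logs)
    return [l - top for l in logs]

# Hypothetical rule "killing is bad": the agent almost never picks that action.
actions = ["help", "do_nothing", "kill"]
probs = [0.6, 0.399999, 0.000001]

utilities = reverse_softmax(probs)  # strongly negative utility for "kill"
recovered = softmax(utilities)      # round-trips back to the original probabilities
```

Under these assumptions, each rule yields its own utility function, which is the sense in which a deontologist could be modeled as an agent with many separate utility functions.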

It seems like your answer is basically just that it's a design flaw in the human brain, of allowing value drift

Well, not a flaw as such; a design choice. Humans are trained in an on-line regime, our values are learned from scratch, [LW · GW] and... this process of active value learning just never switches off (although it plausibly slows down with age, see old people often being "set in their ways"). Our values change by the same process by which they were learned to begin with.

↑ comment by Mo Putera (Mo Nastri) · 2023-12-28T13:08:14.234Z · LW(p) · GW(p)

Tangentially:

See Friston's predictive-processing framework in neuroscience

Nostalgebraist has argued that Friston's ideas here are either vacuous or a nonstarter, in case you're interested.

↑ comment by Thane Ruthenis · 2023-12-28T22:49:19.973Z · LW(p) · GW(p)

Yeah, I'm familiar with that view on Friston, and I shared it for a while. But it seems there's a place for that stuff after all. Even if the initial switch to viewing things probabilistically is mathematically vacuous, it can still be useful: if viewing cognition in that framework makes it easier to think about (and thus theorize about).

Much like changing coordinates from Cartesian to polar is "vacuous" in some sense, but makes certain problems dramatically more straightforward to think through.

↑ comment by Richard_Ngo (ricraz) · 2024-01-11T18:17:03.921Z · LW(p) · GW(p)

(drafted this reply a couple months ago but forgot to send it, sorry)

your low-level model of a specific gear's dynamics didn't change — locally, it was as correct as it could ever be.

And if you had a terminal utility function over that gear (e.g., "I want it to spin at the rate of 1 rotation per minute"), that utility function won't change in the light of your model expanding, either. Why would it?

Let me list some ways in which it could change:

- Your criteria for what counts as "the same gear" change as you think more about continuity of identity over time. Once the gear starts wearing down, this will affect what you choose to do.

- After learning about relativity, your concepts of "spinning" and "minutes" change, as you realize they depend on the reference frame of the observer.

- You might realize that your mental pointer to the gear you care about identified it in terms of its function not its physical position. For example, you might have cared about "the gear that was driving the piston continuing to rotate", but then realize that it's a different gear that's driving the piston than you thought.

These are a little contrived. But so too is the notion of a value that's about such a basic phenomenon as a single gear spinning. In practice almost all human values are (and almost all AI values will be) focused on much more complex entities, where there's much more room for change as your model expands.

Take a given deontological rule, like "killing is bad". Let's say we view it as a constraint on the allowable actions; or, in other words, a probability distribution over your actions that "predicts" that you're very likely/unlikely to take specific actions. Probability distributions of this form could be transformed into utility functions by reverse-softmaxing them; thus, it's perfectly coherent to model a deontologist as an agent with a lot of separate utility functions.

This doesn't actually address the problem of underspecification, it just shuffles it somewhere else. When you have to choose between two bad things, how do you do so? Well, it depends on which probability distributions you've chosen, which have a number of free parameters. And it depends very sensitively on free parameters, because the region where two deontological rules clash is going to be a small proportion of your overall distribution.
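A toy sketch of this sensitivity, assuming each rule is modeled as a tiny probability of violating it and that utilities are log-probabilities (reverse softmax at temperature 1). The rule names and numbers are hypothetical.

```python
import math

def rule_utility(p_violation):
    """Utility of violating a rule, taken as its log-probability."""
    return math.log(p_violation)

def forced_choice(p_lie, p_break_promise):
    """When every available action violates one rule, pick the less-bad violation."""
    u_lie = rule_utility(p_lie)
    u_break = rule_utility(p_break_promise)
    return "lie" if u_lie > u_break else "break_promise"

# Two parameterizations that differ by about one in a million in everyday behavior...
choice_a = forced_choice(p_lie=1e-6, p_break_promise=2e-6)
choice_b = forced_choice(p_lie=2e-6, p_break_promise=1e-6)
# ...yet they give opposite answers when the two rules clash.
```

In ordinary situations the two agents are nearly indistinguishable, since both almost never lie or break promises. The answer in the clash case is decided entirely by the ratio of two tail probabilities that the rules themselves do not pin down.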

↑ comment by Thane Ruthenis · 2024-01-11T19:10:10.005Z · LW(p) · GW(p)

Let me list some ways in which it could change:

If I recall correctly, the hypothetical under consideration here involved an agent with an already-perfect world-model, and we were discussing how value translation up the abstraction levels would work in it. That artificial setting was meant to disentangle the "value translation" phenomenon from the "ontology crisis" phenomenon.

Shifts in the agent's model of what counts as "a gear" or "spinning" violate that hypothetical. And I think they do fall under the purview of ontology-crisis navigation.

Can you construct an example where the value over something would change to be simpler/more systemic, but in which the change isn't forced on the agent downstream of some epistemic updates to its model of what it values? Just as a side-effect of it putting the value/the gear into the context of a broader/higher-abstraction model (e.g., the gear's role in the whole mechanism)?

I agree that there are some very interesting and tricky dynamics underlying even very subtle ontology breakdowns. But I think that's a separate topic. I think that, if you have some value $v$, and it doesn't run into direct conflict with any other values you have, and your model of $v$ isn't wrong at the abstraction level it's defined at, you'll never want to change $v$.

You might realize that your mental pointer to the gear you care about identified it in terms of its function not its physical position

That's the closest example, but it seems to be just an epistemic mistake? Your value is well-defined over "the gear that was driving the piston". After you learn it's a different gear from the one you thought, that value isn't updated: you just naturally shift it to the real gear.

Plainer example: Suppose you have two bank account numbers at hand, A and B. One belongs to your friend, another to a stranger. You want to wire some money to your friend, and you think A is their account number. You prepare to send the money... but then you realize that was a mistake, and actually your friend's number is B, so you send the money there. That didn't involve any value-related shift.

I'll try again to make the human example work. Suppose you love your friend, and your model of their personality is accurate – your model of what you value is correct at the abstraction level at which "individual humans" are defined. However, there are also:

- Some higher-level dynamics you're not accounting for, like the impact your friend's job has on society.

- Some lower-level dynamics you're unaware of, like the way your friend's mind is implemented at the levels of cells and atoms.

My claim is that, unless you have terminal preferences over those other levels, then learning to model these higher- and lower-level dynamics would have no impact on the shape of your love for your friend.

Granted, that's an unrealistic scenario. You likely have some opinions on social politics, and if you learned that your friend's job is net-harmful at the societal level, that'll surely impact your opinion of them. Or you might have conflicting same-level preferences, like caring about specific other people, and learning about these higher-level societal dynamics would make it clear to you that your friend's job is hurting them. Less realistically, you may have some preferences over cells, and you may want to... convince your friend to change their diet so that their cellular composition is more in line with your aesthetic, or something weird like that.

But if that isn't the case – if your value is defined over an accurate abstraction and there are no other conflicting preferences at play – then the mere fact of putting it into a lower- or higher-level context won't change it.

Much like you'll never change your preferences over a gear's rotation if your model of the mechanism at the level of gears was accurate – even if you were failing to model the whole mechanism's functionality or that gear's atomic composition.

(I agree that it's a pretty contrived setup, but I think it's very valuable to tease out the specific phenomena at play – and I think "value translation" and "value conflict resolution" and "ontology crises" are highly distinct, and your model somewhat muddles them up.)

^ Although there may be higher-level dynamics you're not tracking, or lower-level confusions. See the friend example below.

↑ comment by Richard_Ngo (ricraz) · 2024-01-12T02:24:31.188Z · LW(p) · GW(p)

Can you construct an example where the value over something would change to be simpler/more systemic, but in which the change isn't forced on the agent downstream of some epistemic updates to its model of what it values? Just as a side-effect of it putting the value/the gear into the context of a broader/higher-abstraction model (e.g., the gear's role in the whole mechanism)?

I think some of my examples do this. E.g. you used to value this particular gear (which happens to be the one that moves the piston) rotating, but now you value the gear that moves the piston rotating, and it's fine if the specific gear gets swapped out for a copy. I'm not assuming there's a mistake anywhere, I'm just assuming you switch from caring about one type of property it has (physical) to another (functional).

In general, in the higher-abstraction model each component will acquire new relational/functional properties which may end up being prioritized over the physical properties it had in the lower-abstraction model.

I picture you saying "well, you could just not prioritize them". But in some cases this adds a bunch of complexity. E.g. suppose that you start off by valuing "this particular gear", but you realize that atoms are constantly being removed and new ones added (implausibly, but let's assume it's a self-repairing gear) and so there's no clear line between this gear and some other gear. Whereas, suppose we assume that there is a clear, simple definition of "the gear that moves the piston"—then valuing that could be much simpler.

Zooming out: previously you said

I agree that there are some very interesting and tricky dynamics underlying even very subtle ontology breakdowns. But I think that's a separate topic. I think that, if you have some value $v$, and it doesn't run into direct conflict with any other values you have, and your model of $v$ isn't wrong at the abstraction level it's defined at, you'll never want to change $v$.

I'm worried that we're just talking about different things here, because I totally agree with what you're saying. My main claims are twofold. First, insofar as you value simplicity (which I think most agents strongly do) then you're going to systematize your values. And secondly, insofar as you have an incomplete ontology (which every agent does) and you value having well-defined preferences over a wide range of situations, then you're going to systematize your values.

Separately, if you have neither of these things, you might find yourself identifying instrumental strategies that are very abstract (or very concrete). That seems fine, no objections there. If you then cache these instrumental strategies, and forget to update them, then that might look very similar to value systematization or concretization. But it could also look very different—e.g. the cached strategies could be much more complicated to specify than the original values; and they could be defined over a much smaller range of situations. So I think there are two separate things going on here.

↑ comment by Thane Ruthenis · 2024-01-12T03:07:00.586Z · LW(p) · GW(p)

E.g. you used to value this particular gear (which happens to be the one that moves the piston) rotating, but now you value the gear that moves the piston rotating

That seems more like value reflection, rather than a value change?

The way I'd model it is: you have some value $v$, whose implementation you can't inspect directly, and some guess about what it is, $P(v)$. (That's how it often works in humans: we don't have direct knowledge of how some of our values are implemented.) Before you were introduced to the question of "what if we swap the gear for a different one: which one would you care about then?", your model of that value put the majority of probability mass on $v_1$, which was "I value this particular gear". But upon considering that question, your probability distribution over $v$ changed, and now it puts most probability on $v_2$, defined as "I care about whatever gear is moving the piston".

Importantly, that example doesn't seem to involve any changes to the object-level model of the mechanism? Just the newly-introduced possibility of switching the gear. And if your values shift in response to previously-unconsidered hypotheticals (rather than changes to the model of the actual reality), that seems to be a case of your learning about your values. Your model of your values changing, rather than them changing directly.

(Notably, that's only possible in scenarios where you don't have direct access to your values! Where they're black-boxed, and you have to infer their internals from the outside.)
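As a rough illustration of "your model of your values changing" rather than the values themselves, here is a minimal sketch of a Bayesian update over two hypotheses about a black-boxed value. The hypothesis names, prior, and likelihoods are all made-up assumptions; the "evidence" is your own reaction when you imagine the gear being swapped.

```python
def update(prior, likelihood):
    """Bayes' rule over a finite set of hypotheses about one's own value."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalized.values())
    return {h: w / total for h, w in unnormalized.items()}

# Initial guess: most probability mass on "I value this particular gear".
prior = {
    "this_particular_gear": 0.9,
    "whatever_gear_drives_piston": 0.1,
}

# Assumed chance, under each hypothesis, of the observed reaction
# "I'd be fine with the replacement gear" to the swap hypothetical.
likelihood = {
    "this_particular_gear": 0.05,
    "whatever_gear_drives_piston": 0.95,
}

posterior = update(prior, likelihood)
# Most of the mass moves to the functional hypothesis (roughly 0.68 here),
# while the underlying value being modeled never changed.
```

Nothing in the object-level model of the mechanism enters this calculation. Only the self-model is updated, which matches the reading of the gear example as value reflection.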

the cached strategies could be much more complicated to specify than the original values; and they could be defined over a much smaller range of situations

Sounds right, yep. I'd argue that translating a value up the abstraction levels would almost surely lead to simpler cached strategies, though, just because higher levels are themselves simpler. See my initial arguments [LW(p) · GW(p)].

insofar as you value simplicity (which I think most agents strongly do) then you're going to systematize your values

Sure, but: the preference for simplicity needs to be strong enough to overpower the object-level values it wants to systematize, and it needs to be stronger than them the more it wants to shift them. The simplest values are no values, after all.
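One way to picture this trade-off is a toy scoring rule, sketched below under loudly stated assumptions: each candidate value set gets a "fit" to the original object-level values and a "complexity" cost, and the agent picks whichever maximizes fit minus a simplicity weight times complexity. The candidates, numbers, and the linear form of the penalty are all hypothetical.

```python
# (fit to original object-level values, description complexity) per candidate
CANDIDATES = {
    "original_values": (1.0, 10.0),
    "systematized_values": (0.8, 3.0),
    "no_values": (0.0, 0.0),
}

def best_value_set(simplicity_weight):
    """Pick the candidate maximizing fit - simplicity_weight * complexity."""
    def score(name):
        fit, complexity = CANDIDATES[name]
        return fit - simplicity_weight * complexity
    return max(CANDIDATES, key=score)

weak = best_value_set(0.01)     # simplicity too weak to shift anything
moderate = best_value_set(0.05) # strong enough to systematize
extreme = best_value_set(0.5)   # strong enough to erase values entirely
```

In this toy model, a weak simplicity preference leaves the original values in place, a moderate one selects the systematized set, and a very strong one selects the empty set, which is the "simplest values are no values" limit.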