An Intuitive Explanation of Solomonoff Induction

post by Alex_Altair · 2012-07-11T08:05:20.544Z · LW · GW · Legacy · 225 comments

This is the completed article that Luke wrote the first half of. My thanks go to the following for reading, editing, and commenting: Luke Muehlhauser, Louie Helm, Benjamin Noble, and Francelle Wax.

People disagree about things. Some say that television makes you dumber; others say it makes you smarter. Some scientists believe life must exist elsewhere in the universe; others believe it must not. Some say that complicated financial derivatives are essential to a modern competitive economy; others think a nation's economy will do better without them. It's hard to know what is true.

And it's hard to know how to figure out what is true. Some argue that you should assume the things you are most certain about and then deduce all other beliefs from your original beliefs. Others think you should accept at face value the most intuitive explanations of personal experience. Still others think you should generally agree with the scientific consensus until it is disproved.

Wouldn't it be nice if determining what is true was like baking a cake? What if there was a recipe for finding out what is true? All you'd have to do is follow the written directions exactly, and after the last instruction you'd inevitably find yourself with some sweet, tasty truth!

In this tutorial, we'll explain the closest thing we’ve found so far to a recipe for finding truth: Solomonoff induction.

There are some qualifications to make. To describe just one: roughly speaking, you don't have time to follow the recipe. To find the truth to even a simple question using this recipe would require you to follow one step after another until long after the heat death of the universe, and you can't do that.

But we can find shortcuts. Suppose you know that the exact recipe for baking a cake asks you to count out one molecule of H2O at a time until you have exactly 0.5 cups of water. If you did that, you might not finish the cake before the heat death of the universe. But you could approximate that part of the recipe by measuring out something very close to 0.5 cups of water, and you'd probably still end up with a pretty good cake.

Similarly, once we know the exact recipe for finding truth, we can try to approximate it in a way that allows us to finish all the steps sometime before the sun burns out.

This tutorial explains that best-we've-got-so-far recipe for finding truth, Solomonoff induction. Don’t worry, we won’t be using any equations, just qualitative descriptions.

Like Eliezer Yudkowsky's Intuitive Explanation of Bayes' Theorem and Luke Muehlhauser's Crash Course in the Neuroscience of Human Motivation, this tutorial is long. You may not have time to read it; that's fine. But if you do read it, we recommend that you read it in sections.

Contents:

Background

1. Algorithms — We’re looking for an algorithm to determine truth.

2. Induction — By “determine truth”, we mean induction.

3. Occam’s Razor — How we judge between many inductive hypotheses.

4. Probability — Probability is what we usually use in induction.

5. The Problem of Priors — Probabilities change with evidence, but where do they start?

The Solution

6. Binary Sequences — Everything can be encoded as binary.

7. All Algorithms — Hypotheses are algorithms. Turing machines describe these.

8. Solomonoff's Lightsaber — Putting it all together.

9. Formalized Science — From intuition to precision.

10. Approximations — Ongoing work towards practicality.

11. Unresolved Details — Problems, philosophical and mathematical.

Algorithms

At an early age you learned a set of precisely-defined steps — a 'recipe' or, more formally, an algorithm — that you could use to find the largest number in a list of numbers like this:

21, 18, 4, 19, 55, 12, 30

The algorithm you learned probably looked something like this:

1. Look at the first item. Note that it is the largest you've seen on this list so far. If this is the only item on the list, output it as the largest number on the list. Otherwise, proceed to step 2.

2. Look at the next item. If it is larger than the largest item noted so far, note it as the largest you've seen in this list so far. Proceed to step 3.

3. If you have not reached the end of the list, return to step 2. Otherwise, output the last noted item as the largest number in the list.

Other algorithms could be used to solve the same problem. For example, you could work your way from right to left instead of from left to right. But the point is that if you follow this algorithm exactly, and you have enough time to complete the task, you can't fail to solve the problem. You can't get confused about what one of the steps means or what the next step is. Every instruction tells you exactly what to do next, all the way through to the answer.

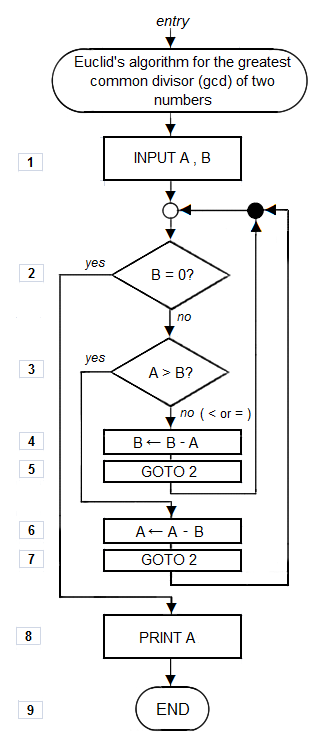

You probably learned other algorithms, too, like how to find the greatest common divisor of any two integers (see image on right).
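The image itself is not reproduced here, so as a minimal sketch we assume it shows Euclid's algorithm, the usual recipe for the greatest common divisor. The function name `gcd` is our own choice for illustration.

```python
def gcd(a, b):
    """Greatest common divisor of two integers, using Euclid's algorithm."""
    a, b = abs(a), abs(b)
    # Repeatedly replace the pair (a, b) with (b, a mod b).
    # When the remainder reaches zero, a holds the answer.
    while b != 0:
        a, b = b, a % b
    return a


print(gcd(48, 18))
print(gcd(17, 5))
```

Like the largest-number recipe, every step says exactly what to do next, and the loop is guaranteed to end because the remainder shrinks each time.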

But not just any set of instructions is a precisely-defined algorithm. Sometimes, instructions are unclear or incomplete. Consider the following instructions based on an article about the scientific method:

1. Make an observation.

2. Form a hypothesis that explains the observation.

3. Conduct an experiment that will test the hypothesis.

4. If the experimental results disconfirm the hypothesis, return to step #2 and form a hypothesis not yet used. If the experimental results confirm the hypothesis, provisionally accept the hypothesis.

This is not an algorithm.

First, many of the terms are not clearly defined. What counts as an observation? What counts as a hypothesis? What would a hypothesis need to be like in order to ‘explain’ the observation? What counts as an experiment that will ‘test’ the hypothesis? What does it mean for experimental results to ‘confirm’ or ‘disconfirm’ a hypothesis?

Second, the instructions may be incomplete. What do we do if we reach step 4 and the experimental results neither ‘confirm’ nor ‘disconfirm’ the hypothesis under consideration, but instead are in some sense ‘neutral’ toward the hypothesis? These instructions don’t tell us what to do in that case.

An algorithm is a well-defined procedure that takes some value or values as input and, after a finite series of steps, generates some value or values as output.

For example, the ‘find the largest number’ algorithm above could take the input {21, 18, 4, 19, 55, 12, 30} and would, after 13 steps, produce the following output: {55}. Or it could take the input {34} and, after 1 step, produce the output: {34}.
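To make the step counts concrete, here is a small sketch of the three-step recipe in Python. The function name `find_largest` and the way it tallies steps are our own illustrative choices; each visit to step 1, 2, or 3 counts as one step.

```python
def find_largest(numbers):
    """Follow the three-step recipe and return (largest, steps_taken)."""
    if not numbers:
        raise ValueError("the list must contain at least one number")

    largest = numbers[0]  # step 1: note the first item
    steps = 1

    for item in numbers[1:]:
        steps += 1        # step 2: compare the next item
        if item > largest:
            largest = item
        steps += 1        # step 3: check whether the list has ended

    return largest, steps


print(find_largest([21, 18, 4, 19, 55, 12, 30]))
print(find_largest([34]))
```

The seven-item list takes one visit to step 1 plus two steps for each of the six remaining items, which is where the count of 13 comes from.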

Algorithms are so precisely defined that we can construct machines that follow them. Today, the machines that follow algorithms are mostly computers. This is why all computer science students take a class in algorithms. If we construct our algorithm for truth, then we can make a computer program that finds truth: an Artificial Intelligence.

Induction

Let’s clarify what we mean. In movies, scientists will reveal “truth machines”. Input a statement, and the truth machine will tell you whether it is true or false. This is not what Solomonoff induction does. Instead, Solomonoff induction is our ultimate “induction machine”.

Whether we are a detective trying to catch a thief, a scientist trying to discover a new physical law, or a businessman attempting to understand a recent change in demand, we are all in the process of collecting information and trying to infer the underlying causes.

-Shane Legg

The problem of induction is this: We have a set of observations (or data), and we want to find the underlying causes of those observations. That is, we want to find hypotheses that explain our data. We’d like to know which hypothesis is correct, so we can use that knowledge to predict future events. Our algorithm for truth will not listen to questions and answer yes or no. Our algorithm will take in data (observations) and output the rule by which the data was created. That is, it will give us the explanation of the observations; the causes.

Suppose your data concern a large set of stock market changes and other events in the world. You’d like to know the processes responsible for the stock market price changes, because then you can predict what the stock market will do in the future, and make some money.

Or, suppose you are a parent. You come home from work to find a chair propped against the refrigerator, with the cookie jar atop the fridge a bit emptier than before. You like cookies, and you don’t want them to disappear, so you start thinking. One hypothesis that leaps to mind is that your young daughter used the chair to reach the cookies. However, many other hypotheses explain the data. Perhaps a very short thief broke into your home and stole some cookies. Perhaps your daughter put the chair in front of the fridge because the fridge door is broken and no longer stays shut, and you forgot that your friend ate a few cookies when he visited last night. Perhaps you moved the chair and ate the cookies yourself while sleepwalking the night before.

All these hypotheses are possible, but intuitively it seems like some hypotheses are more likely than others. If you’ve seen your daughter access the cookies this way before but have never been burgled, then the ‘daughter hypothesis’ seems more plausible. If some expensive things from your bedroom and living room are missing and there is hateful graffiti on your door at the eye level of a very short person, then the ‘short thief’ hypothesis becomes more plausible than before. If you suddenly remember that your friend ate a few cookies and broke the fridge door last night, the ‘broken fridge door’ hypothesis gains credibility. If you’ve never been burgled and your daughter is out of town and you have a habit of moving and eating things while sleepwalking, the ‘sleepwalking’ hypothesis becomes less bizarre.

So the weight you give to each hypothesis depends greatly on your prior knowledge. But what if you had just been hit on the head and lost all past memories, and for some reason the most urgent thing you wanted to do was to solve the mystery of the chair and cookies? Then how would you weigh the likelihood of the available hypotheses?

When you have very little data but want to compare hypotheses anyway, Occam's Razor comes to the rescue.

Occam’s Razor

Consider a different inductive problem. A computer program outputs the following sequence of numbers:

1, 3, 5, 7

Which number comes next? If you guess correctly, you’ll win $500.

In order to predict the next number in the sequence, you make a hypothesis about the process the computer is using to generate these numbers. One obvious hypothesis is that it is simply listing all the odd numbers in ascending order from 1. If that’s true, you should guess that "9" will be the next number.

But perhaps the computer is using a different algorithm to generate the numbers. Suppose that n is the step in the sequence, so that n=1 when it generated ‘1’, n=2 when it generated ‘3’, and so on. Maybe the computer used this equation to calculate each number in the sequence:

2n − 1 + (n − 1)(n − 2)(n − 3)(n − 4)

If so, the next number in the sequence will be 33. (Go ahead, check the calculations.)
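If you would rather let a computer check the calculations, here is a short sketch. The function names `odd_numbers` and `contrived` are labels we made up for the two hypotheses.

```python
def odd_numbers(n):
    """Hypothesis 1: the n-th odd number."""
    return 2 * n - 1


def contrived(n):
    """Hypothesis 2: the same formula plus a term that vanishes for n = 1..4."""
    return 2 * n - 1 + (n - 1) * (n - 2) * (n - 3) * (n - 4)


observed = [1, 3, 5, 7]

for name, hypothesis in [("odd numbers", odd_numbers), ("contrived", contrived)]:
    first_four = [hypothesis(n) for n in range(1, 5)]
    fits = first_four == observed
    print(name, "fits the data:", fits, "and predicts", hypothesis(5), "next")
```

Both hypotheses reproduce the four observed numbers exactly. They only part ways at the fifth step, where the extra product term equals 4 × 3 × 2 × 1 = 24.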

But doesn’t the first hypothesis seem more likely?

The principle behind this intuition, which goes back to William of Occam, could be stated:

Among all hypotheses consistent with the observations, the simplest is the most likely.

The principle is called Occam’s razor because it ‘shaves away’ unnecessary assumptions.

For example, think about the case of the missing cookies again. In most cases, the ‘daughter’ hypothesis seems to make fewer unnecessary assumptions than the ‘short thief’ hypothesis does. You already know you have a daughter that likes cookies and knows how to move chairs to reach cookies. But in order for the short thief hypothesis to be plausible, you have to assume that (1) a thief found a way to break in, that (2) the thief wanted inexpensive cookies from your home, that (3) the thief was, unusually, too short to reach the top of the fridge without the help of a chair, and (4) many other unnecessary assumptions.

Occam’s razor sounds right, but can it be made more precise, and can it be justified? How do we find all consistent hypotheses, and how do we judge their simplicity? We will return to those questions later. Before then, we’ll describe the area of mathematics that usually deals with reasoning: probability.

Probability

You’re a soldier in combat, crouching in a trench. You know for sure there is just one enemy soldier left on the battlefield, about 400 yards away. You also know that if the remaining enemy is a regular army troop, there’s only a small chance he could hit you with one shot from that distance. But if the remaining enemy is a sniper, then there’s a very good chance he can hit you with one shot from that distance. But snipers are rare, so it’s probably just a regular army troop.

You peek your head out of the trench, trying to get a better look.

Bam! A bullet glances off your helmet and you duck down again.

“Okay,” you think. “I know snipers are rare, but that guy just hit me with a bullet from 400 yards away. I suppose it might still be a regular army troop, but there’s a seriously good chance it’s a sniper, since he hit me from that far away.”

After another minute, you dare to take another look, and peek your head out of the trench again.

Bam! Another bullet glances off your helmet! You duck down again.

“Whoa,” you think. “It’s definitely a sniper. No matter how rare snipers are, there’s no way that guy just hit me twice in a row from that distance if he’s a regular army troop. He’s gotta be a sniper. I’d better call for support.”

This is an example of reasoning under uncertainty, of updating uncertain beliefs in response to evidence. We do it all the time.

You start with some prior beliefs, and all of them are uncertain. You are 99.99% certain the Earth revolves around the sun, 90% confident your best friend will attend your birthday party, and 40% sure that the song you're listening to on the radio was played by The Turtles.

Then, you encounter new evidence—new observations—and you update your beliefs in response.

Suppose you start out 85% confident that the one remaining enemy soldier is not a sniper. That leaves only 15% credence to the hypothesis that he is a sniper. But then, a bullet glances off your helmet — an event far more likely if the enemy soldier is a sniper than if he is not. So now you’re only 40% confident he’s a non-sniper, and 60% confident he is a sniper. Another bullet glances off your helmet, and you update again. Now you’re only 2% confident he’s a non-sniper, and 98% confident he is a sniper.
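Here is a rough sketch of that update in Python. The story never says how likely a hit is for each kind of soldier, so the two likelihoods below (85% for a sniper, 10% for a regular troop) are assumptions we picked because they reproduce the first update. The function name `update` is also ours.

```python
def update(prior_sniper, p_hit_if_sniper, p_hit_if_regular):
    """Return the probability of 'sniper' after observing one hit."""
    # Total probability of seeing a hit under either hypothesis.
    p_hit = (prior_sniper * p_hit_if_sniper
             + (1 - prior_sniper) * p_hit_if_regular)
    # Bayes' Theorem: prior times likelihood, divided by the evidence.
    return prior_sniper * p_hit_if_sniper / p_hit


P_HIT_IF_SNIPER = 0.85   # assumed, not given in the story
P_HIT_IF_REGULAR = 0.10  # assumed, not given in the story

after_one_hit = update(0.15, P_HIT_IF_SNIPER, P_HIT_IF_REGULAR)
after_two_hits = update(after_one_hit, P_HIT_IF_SNIPER, P_HIT_IF_REGULAR)

print(round(after_one_hit, 3))
print(round(after_two_hits, 3))
```

With these assumed likelihoods the first hit moves you from 15% to 60%, matching the story. The second hit lands at roughly 93% rather than 98%; reaching 98% would need a second shot that is even more lopsided in favor of the sniper hypothesis. The story's numbers are illustrative, and the direction of the update is what matters.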

Probability theory is the mathematics of reasoning with uncertainty. The keystone of this subject is called Bayes’ Theorem. It tells you how likely something is given some other knowledge. Understanding this simple theorem is more useful and important for most people than Solomonoff induction. If you haven’t learned it already, you may want to read either tutorial #1, tutorial #2, tutorial #3, or tutorial #4 on Bayes’ Theorem. The exact math of Bayes' Theorem is not required for this tutorial. We'll just describe its results qualitatively.

Bayes’ Theorem can tell us how likely a hypothesis is, given evidence (or data, or observations). This is helpful because we want to know which model of the world is correct so that we can successfully predict the future. It calculates this probability based on the prior probability of the hypothesis alone, the probability of the evidence alone, and the probability of the evidence given the hypothesis. Now we just plug the numbers in.

Of course, it’s not easy to “just plug the numbers in.” You aren’t an all-knowing god. You don’t know exactly how likely it is that the enemy soldier would hit your helmet if he’s a sniper, compared to how likely that is if he’s not a sniper. But you can do your best. With enough evidence, it will become overwhelmingly clear which hypothesis is correct.

But guesses are not well-suited to an exact algorithm, and so our quest to find an algorithm for truth-finding must continue. For now, we turn to the problem of choosing priors.

The Problem of Priors

In the example above where you're a soldier in combat, I gave you your starting probabilities: 85% confidence that the enemy soldier was not a sniper, and 15% confidence that he was. But what if you don't know your "priors"? What then?

Most situations in real life are complex, so that your “priors” (as used in Bayes’ Theorem) are actually probabilities that have been updated several times with past evidence. You had an idea that snipers were rare because you saw many soldiers, but only a few of them were snipers. Or you read a reliable report saying that snipers were rare. But what would our ideal reasoning computer do before it knew anything? What would the probabilities be set to before we turned it on? How can we determine the probability of a hypothesis before seeing any data?

The general answer is Occam’s razor: simpler hypotheses are more likely. But this isn’t rigorous. It’s usually difficult to find a measure of complexity, even for mathematical hypotheses. Is a normal curve simpler than an exponential curve? Bayesian probability theory doesn’t have anything to say about choosing priors. Thus, many standard "prior distributions" have been developed. Generally, they distribute probability equally across hypotheses. Of course this is a good approach if all the hypotheses are equally likely. But as we saw above, it seems that some hypotheses are more complex than others, and this makes them less likely than the other hypotheses. So when distributing your probability across several hypotheses, you shouldn't necessarily distribute it evenly. There’s also a growing body of work around an idea called the Maximum Entropy Principle. This principle helps you choose a prior that makes the fewest assumptions given the constraints of the problem. But this principle can’t be used to handle all possible types of hypotheses, only ones for which “entropy” can be mathematically evaluated.

We need a method that everyone can agree provides the correct priors in all situations. This helps us perform induction correctly. It also helps everyone be more honest. Since priors partly determine what people believe, they can sometimes choose priors that help “prove” what they want to prove. This can happen intentionally or unintentionally. It can also happen in formal situations, such as an academic paper defending a proposed program.

To solve the problem of priors once and for all, we'd like to have an acceptable, universal prior distribution, so that there's no vagueness in the process of induction. We need a recipe, an algorithm, for selecting our priors. For that, we turn to the subject of binary sequences.

Binary Sequences

At this point, we have collected a lot of background material. We know about algorithms, and we know we need an algorithm that does induction. We know that induction also uses Occam’s razor and probability. We know that one of the problems in probability is selecting priors. Now we’re ready to formalize it.

To start, we need a language in which we can express all problems, all data, all hypotheses. Binary is the name for representing information using only the characters '0' and '1'. In a sense, binary is the simplest possible alphabet. A two-character alphabet is the smallest alphabet that can communicate a difference. If we had an alphabet of just one character, our “sentences” would be uniform. With two, we can begin to encode information. Each 0 or 1 in a binary sequence (e.g., 01001011) can be considered the answer to a yes-or-no question.
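As a small illustration, here is one way to turn ordinary text into a binary sequence and back. The choice of UTF-8 with eight bits per byte is our assumption; any agreed-upon encoding would do. The helper names `to_bits` and `from_bits` are made up for this sketch.

```python
def to_bits(text):
    """Encode text as a string of 0s and 1s, eight bits per UTF-8 byte."""
    return "".join(format(byte, "08b") for byte in text.encode("utf-8"))


def from_bits(bits):
    """Decode a string of 0s and 1s produced by to_bits back into text."""
    chunks = (bits[i:i + 8] for i in range(0, len(bits), 8))
    return bytes(int(chunk, 2) for chunk in chunks).decode("utf-8")


print(to_bits("K"))
print(from_bits(to_bits("cookie")))
```

Under this particular encoding, the eight-bit sequence 01001011 happens to stand for the letter K.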

The earlier example of finding the largest number in a list is easy to convert to binary: just write a computer program that follows the algorithm. Every program, whatever language it is written in, is ultimately stored and run as binary. This also applies to anything you’ve ever experienced using a computer. From the greatest movie you’ve ever seen to emotional instant messaging conversations, all of it was encoded in binary.

In principle, all information can be represented in binary sequences. If this seems like an extreme claim, consider the following...

All your experiences, whether from your eyes, ears, other senses or even memories and muscle movements, occur between neurons (your nerve cells and brain cells). And it was discovered that neurons communicate using a digital signal called the action potential. Because it is digital, a neuron either sends the signal, or it doesn't. There is no half-sending the action potential. This can be translated directly into binary. An action potential is a 1, no action potential is a 0. All your sensations, thoughts, and actions can be encoded as a binary sequence over time. A really long sequence.

Or, if neuron communication turns out to be more complicated than that, we can look to a deeper level. All events in the universe follow the laws of physics. We're not quite done discovering the true laws of physics; there are some inconsistencies and unexplained phenomena. But the currently proposed laws are incredibly accurate. And they can be represented as a single binary sequence.

You might be thinking, “But I see and do multiple things simultaneously, and in the universe there are trillions of stars all burning at the same time. How can parallel events turn into a single sequence of binary?”

This is a perfectly reasonable question. It turns out that, at least formally, this poses no problem at all. The machinery we will use to deal with binary sequences can turn multiple sequences into one just by dovetailing them together and adjusting how it processes them so the results are the same. Because it is easiest to deal with a single sequence, we do this in the formal recipe. Any good implementation of Solomonoff induction will use multiple sequences just to be faster.
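The text does not pin down a particular dovetailing scheme, so the following is only one simple possibility: take one bit from each sequence in turn. It assumes the sequences have equal length, and the names `interleave` and `split` are our own.

```python
def interleave(streams):
    """Merge equal-length bit strings by taking one bit from each in turn."""
    if len({len(stream) for stream in streams}) > 1:
        raise ValueError("this sketch assumes equal-length streams")
    return "".join("".join(bits) for bits in zip(*streams))


def split(merged, count):
    """Undo interleave: recover the original streams from the merged one."""
    return [merged[i::count] for i in range(count)]


eyes = "000"
ears = "111"
merged = interleave([eyes, ears])
print(merged)
print(split(merged, 2))
```

Nothing is lost in the merge: as long as we know how many sequences went in, we can pull them apart again.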

A picture of your daughter can be represented as a sequence of ones and zeros. But a picture is not your daughter. A video of all your daughter’s actions can also be represented as a sequence of ones and zeros. But a video isn’t your daughter, either; we can’t necessarily tell if she’s thinking about cookies, or poetry. The position of all the subatomic particles that make up your daughter as she lives her entire life can be represented as a sequence of binary. And that really is your daughter.

Having a common and simple language can sometimes be the key to progress. The ancient Greek mathematician Archimedes discovered many specific results of calculus, but could not generalize the methods because he did not have the language of calculus. After this language was developed in the late 1600s, hundreds of mathematicians were able to produce new results in the field. Now, calculus forms an important base of our modern civilization.

Being able to do everything in the language of binary sequences simplifies things greatly, and gives us great power. Now we don't have to deal with complex concepts like “daughter” and “soldier.” It's all still there in the data, only as a large sequence of 0s and 1s. We can treat it all the same.

All Algorithms

Now that we have a simple way to deal with all types of data, let's look at hypotheses. Recall that we’re looking for a way to assign prior probabilities to hypotheses. (And then, when we encounter new data, we'll use Bayes' Theorem to update the probabilities we assign to those hypotheses). To be complete, and guarantee we find the real explanation for our data, we have to consider all possible hypotheses. But how could we ever find all possible explanations for our data? We could sit in a room for days, making a list of all the ways the cookies could be missing, and still not think of the possibility that our wife took some to work.

It turns out that mathematical abstraction can save the day. By using a well-tested model, and the language of binary, we can find all hypotheses.

This piece of the puzzle was discovered in 1936 by a man named Alan Turing. He created a simple, formal model of computers called “Turing machines” before anyone had ever built a computer.

In Turing's model, each machine's language is—you guessed it—binary. There is one binary sequence for the input, a second binary sequence that constantly gets worked on and re-written, and a third binary sequence for output. (This description is called a three-tape Turing machine, and is easiest to think about for Solomonoff induction. The normal description of Turing machines includes only one tape, but it turns out that they are equivalent.)

The rules that determine how the machine reacts to and changes the bits on these tapes are very simple. An example is shown on the diagram above. Basically, every Turing machine has a finite number of “states”, each of which is a little rule. These rules seem bland and boring at first, but in a few paragraphs, you’ll find out why they’re so exciting. First, the machine will start out in a certain state, with some binary on the input tape, all zeros on the work tape, and all zeros on the output tape. The rules for that first state will tell it to look at the input tape and the work tape. Depending on what bits it reads from those two tapes, the rules will say to perform certain actions. They will say to:

- feed the input tape (or not)

- write 0 or 1 to the work tape

- move the work tape left or right

- write 0 or 1 to the output tape

- feed the output tape (or not).

After that, the rules will say which state to move to next, and the process will repeat. Remember that the rules for these states are fixed; they could be written out on pieces of paper. All that changes are the tapes, and what rule the machine is currently following. The basic mathematics behind this is fairly simple to understand, and can be found in books on computational theory.
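To see how little machinery is involved, here is a toy simulator for the three-tape description above. It is a sketch under several assumptions of ours: the rule table is a Python dictionary, the machine stops when it runs out of input, and the example machine (which outputs the running parity of its input) is invented for illustration. None of the names come from Turing's paper.

```python
def run_machine(rules, input_bits, start_state, max_steps=1000):
    """Run a toy three-tape machine and return what it wrote as output.

    rules maps (state, input_bit, work_bit) to a tuple:
    (feed_input, write_work, move_work, write_output, feed_output, next_state)
    """
    work = {}       # work tape, all zeros until written
    head = 0        # position of the head on the work tape
    in_pos = 0      # position on the input tape
    output = []     # bits that have been fed out on the output tape
    state = start_state

    for _ in range(max_steps):
        if in_pos >= len(input_bits):  # assumption: stop at end of input
            break
        key = (state, int(input_bits[in_pos]), work.get(head, 0))
        feed_in, write_work, move, write_out, feed_out, state = rules[key]
        work[head] = write_work
        head += move                   # -1 is left, +1 is right
        if feed_out:                   # a written bit is kept once the tape is fed
            output.append(str(write_out))
        if feed_in:
            in_pos += 1
    return "".join(output)


# Example machine: two states that remember whether we have seen an even
# or odd number of 1s so far. The work tape is not needed for this task.
PARITY_RULES = {}
for work_bit in (0, 1):
    PARITY_RULES[("EVEN", 0, work_bit)] = (True, 0, +1, 0, True, "EVEN")
    PARITY_RULES[("EVEN", 1, work_bit)] = (True, 0, +1, 1, True, "ODD")
    PARITY_RULES[("ODD", 0, work_bit)] = (True, 0, +1, 1, True, "ODD")
    PARITY_RULES[("ODD", 1, work_bit)] = (True, 0, +1, 0, True, "EVEN")

print(run_machine(PARITY_RULES, "1101", "EVEN"))
```

The rule table never changes while the machine runs. Only the tapes and the current state do, which is exactly the point made above.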

This model is incredibly powerful. Given the right rules, Turing machines can

- calculate the square of a number,

- run a spreadsheet program,

- compress large files,

- estimate the probability of rain tomorrow,

- control the flight of an airplane,

- play chess better than a human,

- and much, much more.

You may have noticed that this sounds like a list of what regular computers do. And you would be correct; the model of Turing machines came before, and served as an invaluable guide to, the invention of electronic computers. Everything they do is within the model of Turing machines.

Even more exciting is the fact that every attempt to formalize the intuitive idea of “algorithm” or “process” has been proven to be at most as powerful as Turing machines. A system that is exactly as powerful, meaning it can simulate any Turing machine, is called Turing complete. For example, math equations using algebra have a huge range of algorithms they can express. Multiplying is an algorithm, finding the hypotenuse of a right triangle is an algorithm, and the quadratic formula is an algorithm. Turing machines can run all these algorithms, and more. That is, Turing machines can be used to calculate out all algebraic algorithms, but there are some algorithms Turing machines can run that can’t be represented by algebra. This means algebra is not Turing complete. For another example, mathematicians often invent “games” where sequences of symbols can be rewritten using certain rules. (They will then try to prove things about what sequences these rules can create.) But no matter how creative their rules, every one of them can be simulated on a Turing machine. That is, for every set of re-writing rules, there is a binary sequence you can give a Turing machine so that the machine will rewrite the sequences in the same way.

Remember how limited the states of a Turing machine are; every machine has only a finite number of states with “if” rules like in the figure above. But somehow, using these and the tape as memory, they can simulate every set of rules, every algorithm ever thought up. Even the distinctly different model of quantum computers can compute nothing that a Turing machine cannot. In the nearly 80 years since Turing’s paper, no superior systems have been found. The idea that Turing machines truly capture the idea of “algorithm” is called the Church-Turing thesis.

So the model of Turing machines covers regular computers, but that is not all. As mentioned above, the current laws of physics can be represented as a binary sequence. That is, the laws of physics are an algorithm that can be fed into a Turing machine to compute the past, present and future of the universe. This includes stars burning, the climate of the earth, the action of cells, and even the actions and thoughts of humans. Most of the power here is in the laws of physics themselves. What Turing discovered is that these can be computed by a mathematically simple machine.

As if Turing’s model wasn’t amazing enough, he went on to prove that one specific set of these rules could simulate all other sets of rules.

The computer with this special rule set is called a universal Turing machine. We simulate another chosen machine by giving the universal machine a compiler binary sequence. A compiler is a short program that translates between computer languages or, in this case, between machines. Sometimes a compiler doesn’t exist. For example, you couldn’t translate Super Mario Brothers onto a computer that only plays tic-tac-toe. But there will always be a compiler to translate onto a universal Turing machine. We place this compiler in front of the input which would have been given to the chosen machine. From one perspective, we are just giving the universal machine a single, longer sequence. But from another perspective, the universal machine is using the compiler to set itself up to simulate the chosen machine. While the universal machine (using its own, fixed rules) is processing the compiler, it will write various things on the work tape. By the time it has passed the compiler and gets to the original input sequence, the work tape will have something written on it to help simulate the chosen machine. While processing the input, it will still follow its own, fixed rules, only the binary on the work tape will guide it down a different “path” through those rules than if we had only given it the original input.

For example, say that we want to calculate the square of 42 (or in binary, 101010). Assume we know the rule set for the Turing machine which squares numbers when given the number in binary. Given all the specifics, there is an algorithmic way to find the “compiler” sequence based on these rules. Let’s say that the compiler is 1011000. Then, in order to compute the square of 42 on the universal Turing machine, we simply give it the input 1011000101010, which is just the compiler 1011000 next to the number 42 as 101010. If we want to calculate the square of 43, we just change the second part to 101011 (which is 101010 + 1). The compiler sequence doesn’t change, because it is a property of the machine we want to simulate (here, the squaring machine), not of the input to that simulated machine (here, 42).
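The bookkeeping in this example can be sketched in a few lines. This only shows how the input is assembled; the compiler string 1011000 is the made-up value from the paragraph above, and the function name is a hypothetical helper, not part of any real Turing machine toolkit.

```python
# Made-up compiler prefix from the example; a real one would be derived
# from the rule set of the squaring machine.
COMPILER = "1011000"


def universal_input(compiler: str, n: int) -> str:
    """Place the compiler in front of n written in binary."""
    return compiler + format(n, "b")


# The compiler part stays fixed; only the trailing number changes.
print(universal_input(COMPILER, 42))
print(universal_input(COMPILER, 43))
```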

In summary: algorithms are represented by Turing machines, and Turing machines are represented by inputs to the universal Turing machine. Therefore, algorithms are represented by inputs to the universal Turing machine.

In Solomonoff induction, the assumption we make about our data is that it was generated by some algorithm. That is, the hypothesis that explains the data is an algorithm. Therefore, a universal Turing machine can output the data, as long as you give the machine the correct hypothesis as input. Therefore, the set of all possible inputs to our universal Turing machine is the set of all possible hypotheses. This includes the hypothesis that the data is a list of the odd numbers, the hypothesis that the enemy soldier is a sniper, and the hypothesis that your daughter ate the cookies. This is the power of formalization and mathematics.

Solomonoff's Lightsaber

Now we can find all the hypotheses that would predict the data we have observed. This is much more powerful than the informal statement of Occam's razor. Because of its precision and completeness, this process has been jokingly dubbed “Solomonoff's Lightsaber”. Given our data, we find potential hypotheses to explain it by running every hypothesis, one at a time, through the universal Turing machine. If the output matches our data, we keep it. Otherwise, we throw it away.

By the way, this is where Solomonoff induction becomes incomputable. It would take an infinite amount of time to check every algorithm. Even more problematic, some of the algorithms will run forever without producing output, and there is no general procedure that can tell us which ones will never stop. This is known as the halting problem, and it is a deep fact of the theory of computation. It is the sheer number of algorithms, together with these pesky non-halting algorithms, that stops us from actually running Solomonoff induction.
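To make the "run every hypothesis and keep the matches" step concrete, here is a minimal sketch on a toy machine. Everything in it is an assumption for illustration: the two-bit instruction set is invented and is far from a universal Turing machine, only even-length programs are tried, and the step budget is a crude stand-in for the fact that we cannot tell in general which programs will never halt.

```python
from itertools import product


def run_toy_machine(program: str, max_output: int, max_steps: int = 1000) -> str:
    """Invented toy machine: 00 prints 0, 01 prints 1, 10 jumps to the
    start of the program, 11 halts. Stops after max_steps instructions."""
    out, pc = [], 0
    for _ in range(max_steps):
        if len(out) >= max_output or pc + 2 > len(program):
            break
        op = program[pc:pc + 2]
        if op == "10":      # jump back to the start (may loop forever)
            pc = 0
        elif op == "11":    # halt
            break
        else:               # "00" or "01": print the second bit
            out.append(op[1])
            pc += 2
    return "".join(out)


def consistent_hypotheses(data: str, max_len: int) -> list[str]:
    """Keep every program up to max_len bits whose output starts with data."""
    kept = []
    for length in range(2, max_len + 1, 2):
        for bits in product("01", repeat=length):
            program = "".join(bits)
            if run_toy_machine(program, len(data)) == data:
                kept.append(program)
    return kept


# The program "10" jumps forever and prints nothing; only the step budget
# lets the search move on to the next hypothesis.
print(consistent_hypotheses("0101", 8))
```

On the real thing there is no safe step budget: a program that has produced nothing after a million steps might still produce the right output later.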

The actual process above might seem a little underwhelming. We just check every single hypothesis? Really? Isn’t that a little mindless and inefficient? This will certainly not be how the first true AI operates. But don’t forget that before this, nobody had any idea how to do ideal induction, even in principle. Developing fundamental theories, like quantum mechanics, might seem abstract and wasteful. But history has proven that it doesn’t take long before such theories and models change the world, as quantum mechanics did with modern electronics. In the future, men and women will develop ways to approximate Solomonoff induction in a second. Perhaps they will develop methods to eliminate large numbers of hypotheses all at once. Maybe hypotheses will be broken into distinct classes. Or maybe they’ll use methods to statistically converge toward the right hypotheses.

So now, at least in theory, we have the whole list of hypotheses that might be the true cause behind our observations. These hypotheses, since they are algorithms, look like binary sequences. For example, the first few might be 01001101, 0011010110000110100100110, and 1000111110111111000111010010100001. That is, for each of these three, when you give them to the universal Turing machine as input, the output is our data. Which of these three do you think is more likely to be the true hypothesis that generated our data in the first place?

We have a list, but we're trying to come up with a probability, not just a list of possible explanations. So how do we decide what the probability is of each of these hypotheses? Imagine that the true algorithm is produced in a most unbiased way: by flipping a coin. For each bit of the hypothesis, we flip a coin. Heads will be 0, and tails will be 1. In the example above, 01001101, the coin landed heads, tails, heads, heads, tails, and so on. Because each flip of the coin has a 50% probability, each bit contributes ½ to the final probability.

Therefore an algorithm that is one bit longer is half as likely to be the true algorithm. Notice that this intuitively fits Occam's razor; a hypothesis that is 8 bits long is much more likely than a hypothesis that is 34 bits long. Why bother with extra bits? We’d need evidence to show that they were necessary.

So, why not just take the shortest hypothesis, and call that the truth? Because all of the hypotheses predict the data we have so far, and in the future we might get data to rule out the shortest one. We keep all consistent hypotheses, but weight the shorter ones with higher probability. So in our eight-bit example, the probability of 01001101 being the true algorithm is (½)^8, or 1/256. It's important to say that this isn't an absolute probability in the normal sense. It hasn't been normalized; that is, the probabilities haven't been adjusted so that they add up to 1. Normalizing is computationally much more difficult, and might not be necessary in the final implementation of Solomonoff induction. These probabilities can still be used to compare how likely different hypotheses are.
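The coin-flip weighting is easy to check by hand. This is a small sketch using exact fractions, with a hypothetical helper name; the only inputs are the 8-bit and 34-bit example hypotheses from the text.

```python
from fractions import Fraction


def prior_weight(hypothesis: str) -> Fraction:
    """Each bit is one fair coin flip, so a hypothesis of n bits gets (1/2)^n."""
    return Fraction(1, 2 ** len(hypothesis))


short = "01001101"                            # the 8-bit example
long_ = "1000111110111111000111010010100001"  # the 34-bit example

print(prior_weight(short))                         # unnormalized weight of the short one
print(prior_weight(short) / prior_weight(long_))   # how many times more likely it is
```

Because the weights are only compared against each other, the missing normalization does not affect the ratio.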

To find the probability of the evidence alone, all we have to do is add up the probability of all these hypotheses consistent with the evidence. Since any of these hypotheses could be the true one that generates the data, and they're mutually exclusive, adding them together doesn't double count any probability.
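The sum just described can be written compactly. The notation here is an assumption for illustration and does not come from the article: U is the universal machine, ℓ(p) is the length in bits of an input p, and U(p) = x* means that the output of U on p begins with the data x.

```latex
M(x) \;=\; \sum_{p \,:\, U(p) = x*} 2^{-\ell(p)}
\qquad\text{and}\qquad
M(b \mid x) \;=\; \frac{M(xb)}{M(x)}, \quad b \in \{0, 1\}
```

The first expression is the unnormalized probability of the evidence. The second is the resulting prediction for the next bit: the weight of the hypotheses that go on to output b, relative to the weight of all hypotheses consistent with x.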

Formalized Science

Let’s go back to the process above that describes the scientific method. We’ll see that Solomonoff induction is this process made into an algorithm.

1. Make an observation.

Our observation is our binary sequence of data. Only binary sequences are data, and all binary sequences can qualify as data.

2. Form a hypothesis that explains the observation.

We use a universal Turing machine to find all possible hypotheses, no fuzziness included. The hypothesis “explains” the observation if the output of the machine matches the data exactly.

3. Conduct an experiment that will test the hypothesis.

The only “experiment” is to observe the data sequence for longer, and run the universal machine for longer. The hypotheses whose output continues to match the data are the ones that pass the test.

4. If the experimental results disconfirm the hypothesis, return to step #2 and form a hypothesis not yet used. If the experimental results confirm the hypothesis, provisionally accept the hypothesis.

This step tells us to repeat the “experiment” with each binary sequence in our matching collection. However, instead of “provisionally” accepting a single hypothesis, we accept every matching hypothesis, each with a probability weight determined by its length.

Now we’ve found the truth, as best as it can be found. We’ve excluded no possibilities. There’s no place for a scientist to be biased, and we don’t need to depend on our creativity to come up with the right hypothesis or experiment. We know how to measure how complex a hypothesis is. Our only problem left is to efficiently run it.

Approximations

As mentioned before, we actually can’t run all the hypotheses to see which ones match. There are infinitely many, and some of them will never halt. So, just like our cake recipe, we need some very helpful approximations that still deliver very close to true outputs. Technically, all prediction methods are approximations of Solomonoff induction, because Solomonoff induction tries all possibilities. But most methods use a very small set of hypotheses, and many don't use good methods for estimating their probabilities. How can we more directly approximate our recipe? At present, there aren't any outstandingly fast and accurate approximations to Solomonoff induction. If there were, we would be well on our way to true AI. Below are some ideas that have been published.

In Universal Artificial Intelligence, Marcus Hutter provides a full formal approximation of Solomonoff induction which he calls AIXI-tl. This model is optimal in a technical and subtle way. Also it can always return an answer in a finite amount of time, but this time is usually extremely long and doubles with every bit of data. It would still take longer than the life of the universe before we got an answer for most questions. How can we do even better?

One general method would be to use a Turing machine that you know will always halt. Because of the halting problem, this means that you won't be testing some halting algorithms that might be the correct hypotheses. But you could still conceivably find a large set of hypotheses that all halted.

Another popular method of approximating any intractable algorithm is to use randomness. This is called a Monte Carlo method. We can’t test all hypotheses, so we have to select a subset. But we don’t want our selection process to bias the result. Therefore we could randomly generate a bunch of hypotheses to test. We could use an evolutionary algorithm, where we test this seed set of hypotheses, and keep the ones that generate data closest to ours. Then we would vary these hypotheses, run them again through the Turing machine, keep the closest fits, and continue this process until the hypotheses actually predicted our data exactly.
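The random-selection idea can be sketched on a toy machine. Everything here is an assumption for illustration: the two-bit instruction set is invented and is not a universal Turing machine, the step budget stands in for the halting problem, and plain random sampling with exact matching replaces the evolutionary loop of keeping and varying the closest fits.

```python
import random


def run_toy_machine(program: str, max_output: int, max_steps: int = 200) -> str:
    """Invented toy machine: 00 prints 0, 01 prints 1, 10 jumps to the
    start of the program, 11 halts. Stops after max_steps instructions."""
    out, pc = [], 0
    for _ in range(max_steps):
        if len(out) >= max_output or pc + 2 > len(program):
            break
        op = program[pc:pc + 2]
        if op == "10":
            pc = 0
        elif op == "11":
            break
        else:
            out.append(op[1])
            pc += 2
    return "".join(out)


def sample_hypotheses(data: str, n_samples: int, length: int, seed: int = 0) -> list[str]:
    """Draw random programs by coin flip and keep those that reproduce data."""
    rng = random.Random(seed)  # seeded only so the sketch is repeatable
    kept = set()
    for _ in range(n_samples):
        program = "".join(rng.choice("01") for _ in range(length))
        if run_toy_machine(program, len(data)) == data:
            kept.add(program)
    return sorted(kept)


print(sample_hypotheses("0101", n_samples=2000, length=8))
```

Sampling can only ever return a subset of what an exhaustive search would find, which is the price paid for not testing every hypothesis.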

The mathematician Jürgen Schmidhuber proposes a different probability weighting which also gives high probability to hypotheses that can be quickly computed. He demonstrates how this is “near-optimal”. This is vastly faster, but risks making another assumption, that faster algorithms are inherently more likely.

Unresolved Details

Solomonoff induction is an active area of research in modern mathematics. While it is universal in a broad and impressive sense, some choices can still be made in the mathematical definition. Many people also have philosophical concerns or objections to the claim that Solomonoff induction is ideal and universal.

The first question many mathematicians ask is, “Which universal Turing machine?” I have written this tutorial as if there is only one, but there are in fact infinitely many sets of rules that can simulate all other sets of rules. Just as the length of a program will depend on which programming language you write it in, the length of the hypothesis as a binary sequence will depend on which universal Turing machine you use. This means the probabilities will be different. The change, however, is very limited. There are theorems that precisely bound this effect, and it is generally agreed not to be a concern. Specifically, going between two universal machines cannot increase the hypothesis length by more than the length of the compiler from one machine to the other. This length is fixed, independent of the hypothesis, so the more data you use, the less this difference matters.
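The bound in the last two sentences can be stated in symbols. The notation is an assumption for illustration: K_U(x) is the length of the shortest input that makes machine U output x, and c_{UV} is the length of the compiler that lets U simulate V.

```latex
K_U(x) \;\le\; K_V(x) + c_{UV}
\quad\Longrightarrow\quad
2^{-K_U(x)} \;\ge\; 2^{-c_{UV}} \cdot 2^{-K_V(x)}
```

The constant c_{UV} depends only on the two machines, never on x, so switching machines rescales the weight of the shortest hypothesis by at most a fixed factor.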

Another concern is that the true hypothesis may be incomputable. There are known definitions of binary sequences which make sense, but which no Turing machine can output. Solomonoff induction would converge to this sequence, but would never predict it exactly. It is also generally agreed that nothing could ever predict this sequence, because all known predictors are equivalent to Turing machines. If this is a problem, it is similar to the problem of a finite universe; there is nothing that can be done about it.

Lastly, many people, mathematicians included, reject the ideas behind the model, such as that the universe can be represented as a binary sequence. This often delves into complex philosophical arguments, and often revolves around consciousness.

Many more details can be found the more one studies. Many mathematicians work on modified versions and extensions where the computer learns how to act as well as predict. However these open areas are resolved, Solomonoff induction has provided an invaluable model and perspective for research into solving the problem of how to find truth. (Also see Open Problems Related to Solomonoff Induction.)

Fundamental theories have an effect of unifying previously separate ideas. After Turing discovered the basics of computability theory, it was only a matter of time before these ideas made their way across philosophy and mathematics. Statisticians could only find exact probabilities in simplified situations; now they know how to find them in all situations. Scientists wondered how to really know which hypothesis was simpler; now they can find a number. Philosophers wondered how induction could be justified. Solomonoff induction gives them an answer.

So, what’s next? To build our AI truth-machine, or even to find the precise truth to a single real-world problem, we need considerable work on approximating our recipe. Obviously a scientist cannot presently download a program to tell him the complexity of his hypothesis regarding deep-sea life behavior. But now that we know how to find truth in principle, mathematicians and computer scientists will work on finding it in practice.

(How does this fit with Bayes' theorem? A followup.)

225 comments

Comments sorted by top scores.

comment by Shmi (shminux) · 2012-07-09T06:03:09.526Z · LW(p) · GW(p)

Let me dramatize your sniper example a bit with this hypothetical scenario.

You read about homeopathy, you realize that the purported theoretical underpinnings sound nonsensical, you learn that all properly conducted clinical trials show that homeopathy is no better than placebo and so estimate that the odds of it being anything but a sham are, charitably, 1 in a million. Then your mother, who is not nearly as rational, tries to convince you to take Sabadil for your allergies, because it has good product reviews. You reluctantly agree to humor her (after all, homeopathy is safe, if useless).

Lo, your hay fever subsides... and now you are stuck trying to recalculate your estimate of homeopathy being more than a sham in the face of the sheer impossibility of the sugar pills with not a single active molecule remaining after multiple dilutions having any more effect than a regular sugar pill.

What would you do?

Replies from: Vaniver, None, DaFranker, Alex_Altair, Luke_A_Somers↑ comment by Vaniver · 2012-07-15T18:00:17.466Z · LW(p) · GW(p)

Lo, your hay fever subsides... and now you are stuck trying to recalculate your estimate of homeopathy being more than a sham in the face of the sheer impossibility of the sugar pills with not a single active molecule remaining after multiple dilutions having any more effect than a regular sugar pill.

What would you do?

Test regular sugar pills, obviously. Apparently my hayfever responds to placebo, and that's awesome. High fives all around!

Replies from: shminux↑ comment by Shmi (shminux) · 2012-07-15T20:45:41.691Z · LW(p) · GW(p)

Apparently my hayfever responds to placebo

and if it does not?

Replies from: Vaniver, johnlawrenceaspden↑ comment by Vaniver · 2012-07-16T00:31:05.219Z · LW(p) · GW(p)

Increase the probability that homeopathy works for me- but also increase the probability of all other competing hypotheses except the "sugar placebo" one. The next most plausible hypothesis at that point is probably that the effort is related to my mother's recommendation. Unless I expect the knowledge gained here to extend to something else, at some point I'll probably stop trying to explain it.

At some point, though, this hypothetical veers into "I wouldn't explain X because X wouldn't happen" territory. Hypothetical results don't have to be generated in the same fashion as real results, and so may have unreal explanations.

↑ comment by johnlawrenceaspden · 2012-07-16T10:29:12.883Z · LW(p) · GW(p)

Oooh! That would be so interesting. You could do blind trials with sugar pills vs other sugar pills. You could try to make other tests which could distinguish between homeopathic sugar and non-homeopathic sugar. Then if you found some you could try to make better placebos that pass the tests. You might end up finding an ingredient in the dye in the packaging that cures your hayfever. And if not something like that, you might end up rooting out something deep!

I suspect that if you were modelling an ideal mind rather than my rubbish one, the actual priors might not shift very much, but the expected value of investigating might be enough to prompt some experimenting. Especially if the ideal mind's hayfever was bad and the homeopathic sugar was expensive.

↑ comment by [deleted] · 2012-07-12T08:03:48.480Z · LW(p) · GW(p)

Do a bayesian update.

Additional to the prior, you need:

(A) The probability that you will heal anyway

(B) The probability that you will heal if sabadil works

I have done the math for A = 0.5 and B = 0.75: the result is an update from 1/10^6 to 1.5 × 1/10^6

For A=1/1000 and B=1 the result is 0.000999

The math is explained here: http://lesswrong.com/lw/2b0/bayes_theorem_illustrated_my_way
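For readers who want to check these numbers, here is a minimal sketch of the update. The function and parameter names are hypothetical; A is read as the probability of healing if the remedy does nothing, and B as the probability of healing if it works.

```python
def posterior(prior: float, p_heal_if_works: float, p_heal_anyway: float) -> float:
    """Bayes' theorem for P(remedy works | you healed)."""
    works_and_healed = prior * p_heal_if_works
    sham_and_healed = (1 - prior) * p_heal_anyway
    return works_and_healed / (works_and_healed + sham_and_healed)


print(posterior(1e-6, p_heal_if_works=0.75, p_heal_anyway=0.5))   # first case, A = 0.5
print(posterior(1e-6, p_heal_if_works=1.0, p_heal_anyway=0.001))  # second case, A = 1/1000
```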

↑ comment by DaFranker · 2012-07-09T16:40:07.033Z · LW(p) · GW(p)

I would follow the good old principle of least assumptions: It is extremely likely that something else, the nature of which is unknown and difficult to identify within the confines of the stated problem, caused the fever to subside. This merely assumes that your prior is more reliable and that you do not know all the truths of the universe, the latter assumption being de-facto in very much all bayesian reasoning, to my understanding.

From there, you can either be "stubborn" and reject this piece of evidence due to the lack of an unknown amount of unknown data on what other possible causes there could be, or you could be strictly bayesian and update your belief accordingly. Even if you update your beliefs, since the priors of Homeopathy working is already very low, and the prior of you being cured by this specific action is also extremely low when compared to everything else that could possibly have cured you... and since you're strictly bayesian, you also update other beliefs, such as placebo curing minor ailments, "telepathic-like" thought-curing, self-healing through symbolic action, etc.

All in all, this paints a pretty solid picture of your beliefs towards homeopathy being unchanged, all things considered.

Replies from: shminux↑ comment by Shmi (shminux) · 2012-07-09T17:01:15.253Z · LW(p) · GW(p)

Following the OP's sniper example, suppose you take the same pill the next time you have a flare-up, and it helps again. Would you still stick to your prewritten bottom line of "it ought to be something else"?

Replies from: DaFranker, private_messaging↑ comment by DaFranker · 2012-07-10T19:11:47.131Z · LW(p) · GW(p)

To better frame my earlier point, suppose that you flare up six times during a season, and the six times it gets better after having applied "X" method which was statistically shown to be no better than placebo, and after rational analysis you find out that each time you applied method X you were also circumstantially applying method Y at the same time (that is, you're also applying a placebo, since unless in your entire life you have never been relieved of some ailment by taking a pill, your body/brain will remember the principle and synchronize, just like any other form of positive reinforcement training).

In other words, both P(X-works|cured) and P(Y-works|cured) are raised, but only by half, since statistically they've been shown to have the same effect, and thus your priors are that they are equally as likely of being the cure-cause, and while both could be a cure-cause, the cure-cause could also be to have applied both of them. Since those two latter possibilities end up evening out, you divide the posteriors in the two, from my understanding. I might be totally off though, since I haven't been learning about Bayes' theorem all that long and I'm very much still a novice in bayesian rationality and probability.

To make a long story short, yes, I would stick to it, because within the presented context there are potentially thousands of X, Y and Z possible cure-causes, so while the likeliness that one of them is a cure is going up really fast each time I cure myself under the same circumstances, only careful framing of said circumstances will allow anyone to really rationally establish which factors become more likely to be truly causal and which are circumstantial (or correlated in another, non-directly-causal fashion).

Since homeopathy almost invariably involves hundreds of other factors, many of which are unknown and some of which we might be completely unaware, it becomes extremely difficult to reliably test for its effectiveness in some circumstances. This is why we assign greater trust in the large-scale double-blind studies, because our own analysis is of lower comparative confidence. At least within the context of this particular sniper example.

↑ comment by private_messaging · 2012-07-10T18:16:27.354Z · LW(p) · GW(p)

The homoeopathy claims interaction of water molecules with each other leads to water memory... it can even claim that all the physics is the same (hypothesis size per Solomonoff induction is same) yet the physics works out to the stuff happening. And we haven't really ran sufficiently detailed simulation to rule it out, just arguments of uncertain validity. There's no way to estimate it's probability.

We do something different than estimating probability of homoeopathy being true. It's actually very beautiful method, very elegant solution. We say, well, let's strategically take the risk of one-in-a-million that we discard a true curing method homoeopathy with such and such clinical effect. Then we run the clinical trials, and find evidence that there's less than one-in-a-million chance that those trials happened as they were, if the homoeopathy was true. We still do not know the probability of homoeopathy, but we strategically discard it, and we know the probability of wrongfully having discarded it, we can even find upper bound on how much that strategy lost in terms of expected utility, by discarding. That's how science works. The cut off strategy can be set from utility maximization considerations (with great care to avoid pascal's wager).

Replies from: shminux, othercriteria↑ comment by Shmi (shminux) · 2012-07-10T18:37:47.603Z · LW(p) · GW(p)

So, suppose that Sabadil cured your allergies 10 times out of 10, you will not take again unless forced to, because "There's no way to estimate it's (sic) probability."? Maybe you need to reread chapter 1 of HPMOR, and brush up on how to actually change your mind.

Replies from: DaFranker, private_messaging↑ comment by DaFranker · 2012-07-10T19:28:56.681Z · LW(p) · GW(p)

If it did cure allergies 10 times out of 10, and that ALL other possible cure-causes had been eliminated as causal beforehand (including the placebo effect which is inherent to most acts of taking a homoeopathic pill, even when the patient doesn't believe it'll work, simply out of subconscious memory of being cured by taking a pill), then yes, the posterior belief in its effectiveness would shoot up.

However, "the body curing itself by wanting to and being willing to even try things we know probably won't work based on what-ifs alone" is itself a major factor, one that has also been documented.

Par contre, if it did work 10 times out of 10, then I almost definitely would take it again, since it has now been shown to be, at worst, statistically correlated with whatever actually does cure me of my symptoms, whether that's the homoeopathic treatment or not. While doing that, I would keep attempting to rationally identify the proper causal links between events.

↑ comment by private_messaging · 2012-07-10T18:48:32.202Z · LW(p) · GW(p)

The point is that there is a decision method that allows me to decide without anyone having to make a prior.

Say, the cost of trial is a, the cost (utility loss) of missing valid cure to strategy failure is b, you do the N trials , N such that a N < (the probability of trials given assumption of validity of cure) b , then you proclaim cure not working. Then you can do more trials if the cost of trial falls. You don't know the probability and you still decide in an utility-maximizing manner (on choice of strategy), because you have the estimate on the utility loss that the strategy will incur in general.

edit: clearer. Also I am not claiming it is the best possible method, it isn't, but it's a practical solution that works. You can know the probability that you will end up going uncured if the cure actually works.

↑ comment by othercriteria · 2012-07-10T19:17:40.673Z · LW(p) · GW(p)

let's strategically take the risk of one-in-a-million that we discard a true curing method homoeopathy with such and such clinical effect

Where does your choice of "such and such clinical effect" come from? Keeping your one-in-a-million chance of being wrong fixed, the scale of the clinical trials required depends on the effect size of homeopathy. If homeopathy is a guaranteed cure, it's enough to dose one incurably sick person. If it helps half of the patients, you might need to dose on the order of twenty. And so on for smaller effect sizes. The homeopathy claim is not just a single hypothesis but a compound hypothesis consisting of all these hypotheses. Choosing which of these hypotheses to entertain is a probabilistic judgment; it can't be escaped by just picking one of the hypotheses, since that's just concentrating the prior mass at one point.

Replies from: private_messaging↑ comment by private_messaging · 2012-07-10T20:45:37.472Z · LW(p) · GW(p)

It's part of the hypothesis, without it the idea is not a defined hypothesis. See falsifiability.

Replies from: othercriteria↑ comment by othercriteria · 2012-07-10T21:53:45.518Z · LW(p) · GW(p)

(Pardon the goofy notation. Don't want to deal with the LaTeX engine.)

The compound hypothesis is well-defined. Suppose that the baseline cure probability for a placebo is θ ∈ [0,1). Then hypotheses take the form H ⊂ [0,1], which have the interpretation that the cure rate for homeopathy is in H. The standing null hypothesis in this case is Hθ = { θ }. The alternative hypothesis that homeopathy works is H>θ = (θ,1] = { x : x > θ }. For any θ' ∈ H>θ, we can construct a "one-in-a-million chance of being wrong" test for the simple hypothesis Hθ' that homeopathy is effective with effect size exactly θ'. It is convenient that such tests work just as well for the hypothesis H≥θ'. However, we can't construct a test for H>θ.

Bringing in falsifiability only confuses the issue. No clinical data exist that will strictly falsify any of the hypotheses considered above. On the other hand, rejecting Hθ' seems like it should provide weak support for rejecting H>θ. My take on this is that since such a research program seems to work in practice, falsifiability doesn't fully describe how science works in this case (see Popper vs. Kuhn, Lakatos, Feyerabend, etc.).

Replies from: private_messaging↑ comment by private_messaging · 2012-07-11T06:25:57.241Z · LW(p) · GW(p)

Clinical data still exists that would allow a strategy to stop doing more tests at a specific cut-off point, as the payoff from the hypothesis being right depends on the size of the effect, and there will be clinical data at some point where the integral of payoff over lost clinical effects is small enough. It just gets fairly annoying to calculate. Taking the strategy will be similar to a gambling decision.

I do agree that there is a place for Occam's razor here, but there exists no formalism that actually lets you quantify this weak support. There's Solomonoff induction, which is uncomputable and awesome for work like putting an upper bound on how good induction can (or rather, can't) ever be.

↑ comment by Alex_Altair · 2012-07-09T15:22:41.415Z · LW(p) · GW(p)

I would do the same thing I would do if my hay fever had gone away with no treatment.

Replies from: shminux↑ comment by Shmi (shminux) · 2012-07-09T15:52:31.772Z · LW(p) · GW(p)

And what would that be? Refuse to account for new evidence?

Replies from: Alex_Altair↑ comment by Alex_Altair · 2012-07-09T16:52:31.409Z · LW(p) · GW(p)

No, I mean I would be equally confused, because that's exactly what happened. Homeopathy is literally water, so I would be just as confused if I had drunk water and my hay fever subsided.

Also, completely separately, even if you took some drug that wasn't just water, your case is anecdotal evidence. It should hold the same weight as any single case in any of the clinical trials. That means that it adds pretty much no data, and it's correct to not update much.

Replies from: shminux↑ comment by Shmi (shminux) · 2012-07-09T18:19:47.675Z · LW(p) · GW(p)

even if you took some drug that wasn't just water, your case is anecdotal evidence. It should hold the same weight as any single case in any of the clinical trials.

Ah, but that's not quite true. If a remedy helps you personally, it is less than a "single case" for clinical trial purposes, because it was not set up properly for that. You would do well to ignore it completely if you are calculating your priors for the remedy to work for you.

However, it is much more than nothing for you personally, once you have seen it working for you once. Now you have to update on the evidence. It's a different step in the Bayesian reasoning: calculating new probabilities given that a certain event (in this case one very unlikely apriori -- that the remedy works) actually happened.

↑ comment by Luke_A_Somers · 2012-07-09T14:13:00.955Z · LW(p) · GW(p)

hypothesize that they're incompetent/dishonest at diluting and left some active agent in there.

Replies from: shminux↑ comment by Shmi (shminux) · 2012-07-09T15:51:48.806Z · LW(p) · GW(p)

OK, so you take it to your chem lab and they confirm that the composition is pure sugar, as far as they can tell. How many alternatives would you keep inventing before you update your probability of it actually working?

In other words, when do you update your probability that there is a sniper out there, as opposed to "there is a regular soldier close by"?

Replies from: Luke_A_Somers, Oscar_Cunningham↑ comment by Luke_A_Somers · 2012-07-09T21:28:58.847Z · LW(p) · GW(p)

Before I update my probability of it working? The alternatives are rising to the surface because I'm updating the probability of it working.

Replies from: shminux↑ comment by Shmi (shminux) · 2012-07-10T05:58:09.828Z · LW(p) · GW(p)

OK, I suppose this makes sense. Let me phrase it differently. What personal experience (not published peer-reviewed placebo-controlled randomized studies) would cause you to be convinced that what is essentially magically prepared water is as valid a remedy as, say, Claritin?

Replies from: johnlawrenceaspden↑ comment by johnlawrenceaspden · 2012-07-15T15:35:11.001Z · LW(p) · GW(p)

Well, I hate to say this for obvious reasons, but if the magic sugar water cured my hayfever just once, I'd try it again, and if it worked again, I'd try it again. And once it had worked a few times, I'd probably keep trying it even if it occasionally failed.

If it consistently worked reliably I'd start looking for better explanations. If no-one could offer one I'd probably start believing in magic.

I guess not believing in magic is something to do with not expecting this sort of thing to happen.

Replies from: Vladimir_Nesov, SusanBrennan, private_messaging↑ comment by Vladimir_Nesov · 2012-07-15T17:11:31.107Z · LW(p) · GW(p)

Well, I hate to say this for obvious reasons, but if the magic sugar water cured my hayfever just once, I'd try it again, and if it worked again, I'd try it again.

(This tripped my positive bias sense: only testing the outcome in the presence of an intervention doesn't establish that it's doing anything. It's wrong to try again and again after something seemed to work, one should also try not doing it and see if it stops working. Scattering anti-tiger pills around town also "works": if one does that every day, there will be no tigers in the neighborhood.)

Replies from: shminux, Vaniver, johnlawrenceaspden↑ comment by Shmi (shminux) · 2012-07-15T20:57:41.592Z · LW(p) · GW(p)

Scattering anti-tiger pills around town also "works": if one does that every day, there will be no tigers in the neighborhood.

That's a bad analogy. If "anti-tiger pills" repeatedly got rid of a previously observed real tiger, you would be well advised to give the issue some thought.

Replies from: Tyrrell_McAllister↑ comment by Tyrrell_McAllister · 2012-07-15T23:35:13.359Z · LW(p) · GW(p)

That's a bad analogy. If "anti-tiger pills" repeatedly got rid of a previously observed real tiger, you would be well advised to give the issue some thought.

What's that line about how, if you treat a cold, you can get rid of it in seven days, but otherwise it lasts a week?

You would still want to check to see whether tigers disappear even when no "anti-tiger pills" are administered.
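To see why the no-pill check matters, here is a minimal sketch of the likelihood ratio between "the pills work" and "the pills are inert" after a run of tiger-free days. The function name and every probability below are illustrative assumptions, not figures from this thread.

```python
def likelihood_ratio(n_clear_days, p_clear_if_pills_work, p_clear_baseline):
    """P(data | pills work) / P(data | pills inert) for n tiger-free days with pills.

    p_clear_baseline is the chance of a tiger-free day WITHOUT pills,
    which is exactly the number the control condition is meant to measure.
    """
    return (p_clear_if_pills_work / p_clear_baseline) ** n_clear_days

# Assumed baseline: tigers almost never show up anyway, so a month of
# tiger-free days says nothing about the pills.
print(likelihood_ratio(30, 0.999, 0.999))

# Assumed baseline: a tiger used to show up every other day, so the same
# month of tiger-free days is strong evidence.
print(likelihood_ratio(30, 0.999, 0.5))
```

Under these assumptions the evidence is only as strong as the gap between the two rates, so without some estimate of the baseline the ratio cannot be computed at all.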

Replies from: Sarokrae↑ comment by Sarokrae · 2012-07-15T23:50:20.813Z · LW(p) · GW(p)

You would still want to check to see whether tigers disappear even when no "anti-tiger pills" are administered.

Depending on how likely the tiger is to eat people if it doesn't disappear, and your probability of the pills successfully repelling the tiger given that it's always got rid of the tiger, and the cost of the pill and how many people the tiger is expected to eat if it doesn't disappear? Not always.

Replies from: TGM, Tyrrell_McAllister↑ comment by TGM · 2012-07-16T00:32:21.217Z · LW(p) · GW(p)

A medical example of this is the lack of evidence for the efficacy of antihistamine against anaphylaxis. When I asked my sister (currently going through clinical school) why, she said "because if you do a study, people in the control group will die if these things work, and we have good reason to believe they do".

EDIT: I got beaten to posting this by the only other person I told about it

↑ comment by Tyrrell_McAllister · 2012-07-16T17:03:36.542Z · LW(p) · GW(p)

and your probability of the pills successfully repelling the tiger given that it's always got rid of the tiger

Yes. But the point is that this number should be negligible if you haven't seen how the tiger behaves in the absence of the pills. (All of this assumes that you do not have any causal model linking pill-presence to tiger-absence.)

This case differs from the use of antihistamine against anaphylaxis for two reasons:

There is some theoretical reason to anticipate that antihistamine would help against anaphylaxis, even if the connection hasn't been nailed down with double-blind experiments.

We have cases where people with anaphylaxis did not receive antihistamine, so we can compare cases with and without antihistamine. The observations might not have met the rigorous conditions of a scientific experiment, but that is not necessary for the evidence to be rational and to justify action.

↑ comment by Sarokrae · 2012-07-16T17:39:14.375Z · LW(p) · GW(p)

Absolutely. The precise thing that matters is the probability tigers happen if you don't use the pills. So, say, I wouldn't recommend doing the experiment if you live in areas with high densities of tigers (which you do if there's one showing up every day!) and you weren't sure what was going into the pills (tiger poison?), but would recommend doing the experiment if you lived in London and knew that the pills were just sugar.

Similarly, I'm more likely to just go for a herbal remedy that hasn't had scientific testing, but has lots of anecdotal evidence for lack of side-effects, than a homeopathic remedy with the same amount of recommendation.

↑ comment by Vaniver · 2012-07-15T17:56:29.425Z · LW(p) · GW(p)

It is positive bias (in that this isn't the best way to acquire knowledge), but there's a secondary effect: the value of knowing whether or not the magic sugar water cures his hayfever is being traded off against the value of not having hayfever.

Depending on how frequently he gets hayfever, and how long it took to go away without magic sugar water, and how bothersome it is, and how costly the magic sugar water is, it may be better to have an unexplained ritual for that portion of his life than to do informative experiments.

(And, given that the placebo effect is real, if he thinks the magic sugar water is placebo, that's reason enough to drink it without superior alternatives.)

Replies from: Sarokrae↑ comment by Sarokrae · 2012-07-15T19:56:30.280Z · LW(p) · GW(p)

Agree with this. Knowing the truth has a value and a cost (doing the experiment).

I recently heard something along the lines of: "We don't have proof that antihistamines work to treat anaphylaxis, because we haven't done the study. But the reason we haven't done the study is because we're pretty sure the control group would die."

↑ comment by johnlawrenceaspden · 2012-07-16T09:44:15.663Z · LW(p) · GW(p)

I agree, I'd try not taking it too! I had hayfever as a child, and it was bloody awful. I used to put onion juice in my eyes because it was the only thing that would provide relief. But even as a child I was curious enough to try it both ways.

↑ comment by SusanBrennan · 2012-07-15T16:13:09.940Z · LW(p) · GW(p)

The placebo effect strikes me as a decent enough explanation.

Replies from: johnlawrenceaspden↑ comment by johnlawrenceaspden · 2012-07-16T10:10:22.942Z · LW(p) · GW(p)

Maybe, but it also explains why any other thing will cure my hayfever. And shouldn't it go away if I realize it's a placebo? And if I say 'I have this one thing that cures my hayfever reliably, and no other thing does, but it has no mechanism except for the placebo effect', is that very different from 'I have this magic thing?'.

I'm not keen on explanations which don't tell me what to anticipate. But maybe I misunderstand the placebo effect. How would I tell the difference between it and magic?

Replies from: TheOtherDave, Sarokrae↑ comment by TheOtherDave · 2012-07-16T13:15:07.828Z · LW(p) · GW(p)

And if I say 'I have this one thing that cures my hayfever reliably, and no other thing does, but it has no mechanism except for the placebo effect', is that very different from 'I have this magic thing?'.

No, not very. Also, if it turns out that only this one thing works, and no other thing works, then (correcting for the usual expectation effects) that is relatively strong evidence that something more than the placebo effect is going on. Conversely, if it is the placebo effect, I would expect that a variety of substances could replace the sugar pills without changing the effect much.

Another way of putting this is, if I believe that the placebo effect is curing my hayfever, that ultimately means the power to cure my hayfever resides inside my brain and the question is how to arrange things so that that power gets applied properly. If I believe that this pill cures my hayfever (whether via "the placebo effect" or via "magic" or via "science" or whatever other dimly understood label I tack onto the process), that means the power resides outside my brain and the question is how to secure a steady supply of the pill.

Those two conditions seem pretty different to me.

↑ comment by Sarokrae · 2012-07-16T10:35:38.915Z · LW(p) · GW(p)

shouldn't it go away if I realize it's a placebo?

Apparently not. The effect might be less; I don't think the study checked. But once you know it's a placebo and the placebo works, then you're no longer taking a sugar pill expecting nothing, you're taking a sugar pill expecting to get better.

You could tell the difference between the placebo effect and magic by doing a double blind trial on yourself. e.g. Get someone to assign either "magic" pill or identical sugar pill (or solution) with a random number generator for a period where you'll be taking the drug, prepare them and put them in order for you to take on successive days, and write down the order to check later. Then don't talk to them for the period of the experiment. (If you want to talk to them you can apply your own shuffle and write down how to reverse it)
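The procedure above can be sketched in code. This is a rough illustration only: the diary scores are simulated, the balanced schedule and the permutation test are one possible way to do the final comparison (an assumption, not something specified in this thread), and all function names are made up.

```python
import random

def make_schedule(n_days, rng):
    """Helper's step: a balanced, shuffled list of 'magic'/'sugar' labels, kept hidden."""
    schedule = ["magic"] * (n_days // 2) + ["sugar"] * (n_days - n_days // 2)
    rng.shuffle(schedule)
    return schedule

def gap(labels, scores):
    """Mean relief score on 'magic' days minus the mean on 'sugar' days."""
    magic = [s for l, s in zip(labels, scores) if l == "magic"]
    sugar = [s for l, s in zip(labels, scores) if l == "sugar"]
    return sum(magic) / len(magic) - sum(sugar) / len(sugar)

def permutation_p_value(schedule, scores, rng, n_shuffles=2000):
    """Share of random relabelings whose gap is at least as large as the real one."""
    observed = gap(schedule, scores)
    labels = list(schedule)
    hits = 0
    for _ in range(n_shuffles):
        rng.shuffle(labels)  # pretend the labels had been assigned differently
        if gap(labels, scores) >= observed:
            hits += 1
    return hits / n_shuffles

rng = random.Random(0)
schedule = make_schedule(30, rng)                 # written down, not shown to you
diary = [rng.gauss(5.0, 1.0) for _ in schedule]   # simulated scores: pill does nothing
print(permutation_p_value(schedule, diary, rng))  # unblind and compare at the end
```

A small p-value would mean the "magic" days really did go better than chance relabeling explains; a large one is what you would expect if both piles are just sugar.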

Replies from: johnlawrenceaspden↑ comment by johnlawrenceaspden · 2012-07-16T10:39:13.091Z · LW(p) · GW(p)

Wait, what? I'm taking a stack of identical things and whether they work or not depends on a randomly generated list I've never seen?

Replies from: Sarokrae, TGM↑ comment by Sarokrae · 2012-07-16T10:42:57.594Z · LW(p) · GW(p)

Exactly. You write down your observations for each day and then compare them to the list to see if you felt better on days when you were taking the actual pill.

Only if it's not too costly to check, of course, and sometimes it is.

Edit: I think gwern's done a number of self-trials, though I haven't looked at his exact methodology.

Edit again: In case I haven't been clear enough, I'm proposing a method to distinguish between "sugar pills that are magic" and "regular sugar pills".

Edit3: ninja'd

↑ comment by TGM · 2012-07-16T11:38:00.784Z · LW(p) · GW(p)

If you have a selection of 'magic' sugar pills, and you want to test them for being magic vs placebo effect, you do a study comparing their efficacy to that of 'non-magic' sugar pills.

If they are magic, then you aren't comparing identical things, because only some of them have the 'magic' property.

↑ comment by private_messaging · 2012-07-16T17:56:50.476Z · LW(p) · GW(p)

Well, you need it to work better than without the magic sugar water.

My approach is: I believe that the strategy of "if the magic sugar water worked with only 1 in a million probability of 'worked' being obtained by chance without any sugar water, and if only a small number of alternative cures were also tried, then adopt the belief that magic sugar water works" is a strategy that has only a small risk of trusting in a non-working cure, but is very robust against unknown unknowns. It works even if you are living in a simulator where the beings-above mess with the internals doing all sorts of weird stuff that shouldn't happen and for which you might be tempted to set a very low prior.
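As a rough check on the "small risk" part of this strategy, here is a sketch of the chance that at least one inert cure passes the 1-in-a-million bar by luck. The function names and the count of ten cures are assumptions for illustration, and the exact formula additionally assumes the trials are independent.

```python
def false_adoption_bound(p_chance, n_cures_tried):
    """Union bound: chance that at least one inert cure 'works' by luck."""
    return min(1.0, p_chance * n_cures_tried)

def false_adoption_exact(p_chance, n_cures_tried):
    """Same quantity, assuming the trials of different cures are independent."""
    return 1.0 - (1.0 - p_chance) ** n_cures_tried

p_chance = 1e-6   # "1 in a million" from the comment above
n_cures = 10      # assumed "small number of alternative cures"
print(false_adoption_bound(p_chance, n_cures))
print(false_adoption_exact(p_chance, n_cures))
```

Note that this bounds only the false-positive rate of the rule; it says nothing about the prior, which is the point of the strategy.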

Meanwhile the strategy of "make up a very low prior, then update it in a vaguely Bayesian manner" has a past history of screwing up big time, leading to significant preventable death, e.g. when antiseptic practices invented by this guy were rejected on the grounds of 'sounds implausible', and has pretty much no robustness against unknown unknowns, and as such is grossly irrational (in the conventional sense of 'rational') even though in the magic water example it sounds like an awesomely good idea.

↑ comment by Oscar_Cunningham · 2012-07-09T21:56:16.193Z · LW(p) · GW(p)

How many alternatives would you keep inventing before you update your probability of it actually working?

Precisely the hypotheses that are more likely than homeopathy. Once I've falsified those the probability starts pouring into homeopathy. Jaynes' "Probability Theory: The Logic Of Science" explains this really well in Chapter 4 and the "telepathy" example of Chapter 5. In particular I learnt a lot by staring at Figure 4.1.
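A minimal sketch of that "pouring" effect, with three competing hypotheses and entirely made-up priors and likelihoods (none of these numbers come from Jaynes or from this thread):

```python
def normalize(weights):
    """Rescale weights so they sum to 1."""
    total = sum(weights.values())
    return {h: w / total for h, w in weights.items()}

def update(prior, likelihood):
    """Bayes' rule: posterior is proportional to prior times likelihood."""
    return normalize({h: prior[h] * likelihood[h] for h in prior})

# Assumed priors: homeopathy starts far behind the mundane explanations.
prior = normalize({
    "coincidence": 0.90,
    "contaminated with active agent": 0.0999,
    "homeopathy works": 0.0001,
})

# Observation 1 (assumed likelihoods): the remedy works several times in a row.
after_cures = update(prior, {
    "coincidence": 0.001,
    "contaminated with active agent": 0.9,
    "homeopathy works": 0.9,
})

# Observation 2 (assumed likelihoods): the lab reports pure sugar.
after_lab = update(after_cures, {
    "coincidence": 0.99,
    "contaminated with active agent": 0.01,
    "homeopathy works": 0.99,
})

for stage in (prior, after_cures, after_lab):
    print(round(stage["homeopathy works"], 4))
```

Each observation that hurts a more plausible rival leaves its lost probability to be shared among the survivors, so the homeopathy hypothesis gains even though no observation singles it out.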

comment by kilobug · 2012-07-09T13:09:38.326Z · LW(p) · GW(p)

After reading it fully, I have another, deeper problem with this (still great) article: Bayes' theorem totally disappears at the end. Hypotheses that exactly match the observations bitwise have a fixed probability (2^-n, where n is their length), and those that are off by even one bit are discarded. There is no updating of probabilities, because hypotheses are always either right or wrong. There is no concept left of a hypothesis that predicts the position of an electron using a probability distribution, and there is no room for a hypothesis like "the coin will fall heads 75% of the time, tails 25% of the time".
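For concreteness, the scheme as described above can be sketched like this. It is a toy only: the four "programs" and their bit lengths are invented for illustration, whereas real Solomonoff induction ranges over all programs for a universal Turing machine and is uncomputable.

```python
from fractions import Fraction

# Hypothetical toy "programs": (name, assumed length in bits, rule giving bit i).
HYPOTHESES = [
    ("all zeros", 3, lambda i: 0),
    ("all ones", 3, lambda i: 1),
    ("alternate 0,1", 5, lambda i: i % 2),
    ("ones after index 2", 8, lambda i: 1 if i > 2 else 0),
]

def surviving_weights(observed_bits):
    """Discard any hypothesis that misses a bit; weight the rest by 2^-length."""
    weights = {}
    for name, length, rule in HYPOTHESES:
        if all(rule(i) == bit for i, bit in enumerate(observed_bits)):
            weights[name] = Fraction(1, 2 ** length)
    return weights

def predict_next_one(observed_bits):
    """Share of the surviving weight that predicts the next bit is 1."""
    weights = surviving_weights(observed_bits)
    if not weights:
        raise ValueError("no toy hypothesis matches the observations")
    rules = {name: rule for name, _, rule in HYPOTHESES}
    n = len(observed_bits)
    ones = sum(w for name, w in weights.items() if rules[name](n) == 1)
    return ones / sum(weights.values())

print(surviving_weights([0, 0, 0]))
print(predict_next_one([0, 0, 0]))
```

In this toy version each individual hypothesis is indeed either kept at its fixed weight or thrown out; the only place a number between 0 and 1 appears is in the share of weight among the survivors.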

That's a problem both for the article itself (why even speak of Bayes' theorem if, in the end, it doesn't matter?) and for finding the "truth" about the universe in a quantum world (even if you accept MWI, what you'll actually observe in the world you end up in will still be random).