You are not too "irrational" to know your preferences.

post by DaystarEld · 2024-11-26T15:01:42.996Z · LW · GW · 50 comments

Contents

- 1) You are not too stupid to know what you want.
- 2) Feeling hurt is not a sign of irrationality.
- 3) Illegible preferences are not invalid.
- 4) Your preferences do not need to fully match your community's.
- Final Thoughts

Epistemic Status: 13 years working as a therapist for a wide variety of populations, 5 of them working with rationalists and EA clients. 7 years teaching and directing at over 20 rationality camps and workshops. This is an extremely short and colloquially written form of points that could be expanded on to fill a book, and there is plenty of nuance to practically everything here, but I am extremely confident of the core points in this frame, and have used it to help many people break out of or avoid manipulative practices.

TL;DR: Your wants and preferences are not invalidated by smarter or more “rational” people’s preferences. What feels good or bad to someone is not a monocausal result of how smart or stupid they are.

Alternative titles to this post are "Two people are enough to form a cult" and "Red flags if dating rationalists," but this stuff extends beyond romance and far beyond LW-Rationalism.

I saw forms of it as a college student among various intellectual subcultures. I saw forms of it growing up around non-intellectuals who still ascribed clear positives and negatives to the words "smart" and "stupid." I saw forms of it as a therapist working with people from a variety of nationalities. And of course, my various roles in the rationalist and EA communities have exposed me to a number of people who have been subject to some form of it from friends, romantic partners, or family... hell, most of the time I've heard it coming from someone's parents.

What I'm here to argue against is, put simply, the notion that what feels good or bad to someone is a monocausal result of how smart or stupid they are. There are a lot of false beliefs downstream of that notion, but the main one I'm focusing on here is the idea that your wants or preferences might be invalid because someone "more rational" than you said so.

Because while I've taught extensively about how to defend against "dark arts" emotional manipulation in a variety of flavors, I especially dislike seeing "rationality" used as an authoritative word to shame others into self-coercive narratives.

Rationality, as I use the word, refers to an epistemology that minimizes false beliefs and a course of action that maximally fulfills one’s goals.

If someone else tells you that something you’re doing or thinking is irrational, they need to first demonstrate that they understand your goals, and second demonstrate that they have information you don't, which may inform predictions of why your actions will fail to achieve those goals.

If they can't do that, criticizing your feelings or preferences is not the same thing as offering reasonable critique of your beliefs and actions. Feelings and preferences are not assertions that can be wrong; they’re experiences.

And I think no one should feel ashamed of their own experiential qualia, but I especially don’t want people to ignore their preferences because they're worried about not being "rational" enough.

1) You are not too stupid to know what you want.

Ash and Bryce have just had dinner together, and on the way home Ash spots an ice cream shop.

"Oo, I want to stop in for some ice cream!"

"Why?"

"Because it'll be tasty!"

"But it's just a superstimulus of sugar and dairy that you evolved to find enjoyable. It might make you gain weight, becoming less attractive, lowering your expected income, and shortening your lifespan. Also that money can be better spent on malaria nets."

"Sure, but I still want it."

"That's stupid."

I hope that most people would recognize that there's something wrong in the above conversation. Maybe Ash is perfectly happy for Bryce to talk at length about the downsides of having ice cream when they express a preference for it, or maybe they understand that Bryce has good intentions and won't take it to heart. Either way, most people would flag "that's stupid," or worse, "you're stupid," as bad communication coming from a friend or partner or family member.

But what about "That's irrational?"

Dun dun duuuuun...

Cue defensiveness, self-doubt, internal conflict. We don't want to be irrational, right? That's like, our whole thing!

Now, there are some people out there who might use this opportunity to say "Exactly, you can't be rational literally all the time! Everyone needs to be irrational sometimes, especially in pursuit of happiness!"

To them I say, "You want people to enjoy their ice cream even if it's 'irrational.' I want people to know that preferences are never 'irrational.' We are not the same."[1]

If you think the ice cream example is too easy, what about career choice? What about being monogamous vs open vs poly? What about how you receive feedback?

The key is recognizing that the preference itself is completely independent from rationality or intelligence.

If you want a partner who helps with housework, even if they make 5x more than you and their time is "worth more?"

Nothing to do with rationality.

If you wish your partner would do the dishes sometimes so you don't always do them, or as a signal that they value your time too?

Still nothing to do with rationality!

If you refuse any other solution to the dish situation and insist that they must do the dishes sometimes or else they don't love you...

Now we have stepped away from pure "wants" or preferences. Now the examination of what's rational makes sense. Your feelings can be understandable and valid, while the thoughts that arise from them can sometimes be false or unjustified... which is to say, (epistemologically) irrational.

And your assertions or ultimatums as a result can sometimes be self-defeating to your broader values or preferences... which is what I mean when I say someone is being (instrumentally) irrational.

But the fundamental preferences you have are not about rationality. Inconsistent actions can be irrational if they’re self-defeating, but “inconsistent preferences” only makes sense if you presume you’re a monolithic entity, or believe your "parts" need to all be in full agreement all the time… which I think very badly misunderstands how human brains work.

If you try different solutions and listen to arguments and ultimately decide you do not want to be with someone who does not help clean the dishes sometimes, no matter how good the relationship is otherwise, many people may find that unusual, but “irrational” presumes that you have the same values and wants as others, and why should that presumption be any more true for this than it is for ice cream flavors?

Wants are not beliefs. They are things you feel.

And no one is too stupid or irrational to know what they feel. Too disembodied or disassociated, yes! Many such cases. But knowing what you feel is not a test of epistemology.[2]

Pause and consider whether you have ever believed you were being stupid or irrational for wanting or not wanting something. Where did that belief come from? Why do you believe it?

Because what I’ve seen, over and over, is that it comes from the expectation, from yourself or others, that if you want something, all your beliefs about it must be true, or all the plans that arise to get it must be followed.

And that's obviously nonsense, on both counts.

Wants are emergent, complex forms of predicted pain and pleasure. They are either felt or they are not felt, and reason only comes in at the stage of deciding what to do about them.

So stop judging your wants, and stop listening to other people who judge them either.

2) Feeling hurt is not a sign of irrationality.

“I don’t see what you’re so upset about,” a hypothetical Bryce may say. “I’m just being honest. I don’t want you to get fat, because I care about you, and eating lots of ice cream may increase the odds that you end up at a higher weight set-point. You want me to be honest, right? I wouldn’t be a true friend if I didn’t point out when I thought you were making an error.”

I think honesty is a virtue. I genuinely believe that people who do their best to be as truthful as possible, not just in direct words but also to avoid misleading others, are demonstrating a sort of deep Goodness. More than that, I think dishonesty consequentially leads to worse worlds in almost every circumstance.

This virtue is not extended to people who repeatedly volunteer "brutal honesty" to their friends or partners or children while knowing it is likely to be painful.

My steelman of the speaker who says "you shouldn't feel hurt by this" is that they probably mean something like "it would be better for you, suffering-wise or consequentially, to not get hurt about this, and perceive it as truth untouched by stuck priors."

But that's not how humans work.

It is instrumentally valuable to be careful how and when you offer criticism. It is not just a sign of caring about others, but also of understanding the role of emotion in our cognition. "You should not be hurt by that" or "you should not find that offensive" are words said by people who want humans to work a certain way more than they care to understand how human psychology actually functions, let alone how the individual they're speaking to does.

Our feelings are, for the most part, lossy heuristics and blunt motivations for things we evolved or have been conditioned to care about.[3] They are not things to which the word "should" makes sense to attach.

Some people genuinely don't care if they are physically unattractive. Some people genuinely don't care if they are perceived as smart or competent. Some people genuinely don't care if they are "likeable."

But people who do not care about any of those things are extremely rare, because each is a dimension of evolutionary fitness. It is not a sign of strength or "rationality" to not care about them, it is a sign of either extreme abundance or extreme neurodivergence.

(And if someone is trying to explicitly hold their neurodivergent traits up as an in-all-ways-better flex on neurotypical norms, I have a different essay in mind for that sort of compartmentalized blindspot).

It isn't impossible to sometimes "reason" your way into feelings of security and abundance, but that's because our feelings of insecurity and scarcity are, again, lossy heuristics. They are not always accurate, and there are some ways to explicitly think your way through and around the catastrophizing, insecurity, fear, defensiveness, etc. that make certain words hurtful.

But they nearly all require slow, careful exploration. I have never seen them dissolve from brute force, and if someone wields "truth" like a mallet to try and force another person’s behavior or feelings into the shape better suited to their own preferences or ideals, this is fundamentally a hostile act, whether they intend it as such or not. It's the same as shouting in someone's face after they tell you to stop because you think what you're saying is just too important for them to ignore.

To be clear, if someone invites truthful claims or evaluations or feedback, there is nothing wrong with being totally honest in response, even if it might be painful to hear. If the listener gets upset after inviting honesty, that is not the speaker's fault.

But I have seen too many people perpetuating emotionally abusive relationships justify their behavior with "I'm just being honest" to let this pass without clear and unpressured signs that the person hearing constant harsh truths actually wants to hear them that way.

Even worse, if someone takes another person’s pain as evidence that they must have said something true or valuable for them to hear, they are again fundamentally misunderstanding how emotions work.

If someone accuses me of thinking or feeling something hostile that I didn’t think or feel, it might bother me. It might even hurt, if it’s someone I consider a friend. But that hurt would come from feeling unseen or uncared for, completely independent of how accurate their perception was (which by default they certainly do not have better evidence of than I do).

And of course, I should emphasize that no one is perfect. People make mistakes. Grace and forgiveness are important for people to improve, and build robust and healthy relationships. I don't mean to bash anyone who finds learning inhibition difficult, and I think "filtering" is genuinely not equally easy for everyone.

But if you express to a friend or partner or parent or child or even a stranger a clear preference against this sort of communication, and make it clear that you find it hurtful and not helpful, I think effort on their part to try and learn better ways to communicate is an integral signal of caring about you and the relationship.

If they decide they'd rather not, that it's too stifling... that is their right. Maybe the relationship just isn't meant to be, and by all means, people should self-select into the friend groups and romantic partnerships that work for them.

But if they try to justify it as a matter of virtue, or "rationality?" If you ask them to stop and they start quoting the Litany of Gendlin at you and insisting that if you were smarter you'd be thankful that they're willing to honestly tell you how unattractive or stupid you are?

That's not rationality.

I'm gatekeeping the term away from that sort of high-school negging bullshit, and if they continue to insist it is, I will suspect they are gaining negative-sum value from this form of communication which they do not want to lose.

3) Illegible preferences are not invalid.

“Well at least explain why you want the ice cream," an increasingly frustrated Bryce may say. “You have to have a reason for it, right?"

"You just want me to give a reason?"

"Yeah, it doesn't make sense to me."

"The reason is it tastes good and will make me happy."

"Those don't seem like actual reasons to have ice cream specifically. If I find you something tasty but healthier, you'd have that instead, right?"

"Maybe? But I actually just want the ice cream right now."

"Okay, but let's look at this logically..."

There’s a great discussion to be had around the ways knowledge can be legible or illegible, explicit or implicit, as well as situations where putting too much weight on legibility can lead to ignoring or discounting implicit knowledge. By default, most things we know or think we know are hard to make legible to others. For the speaker, language is a lossy medium through which to compress experiences and concepts, and for the listener, attention and memory have many imperfections that can cause further corruption of transferred knowledge/meaning.

I've got the skeleton of a book written called When to Think and When to Feel, and my hope is that it will help people understand what feelings are "for," what logical thought is "for," and why it's a bad idea to ignore either or confuse one for the other. The header above is how I would sum up about 1/5 of it.

Your preferences do not need to be legible to be valid, and you should never feel like you need to justify or defend them to others.

Models and predictions, certainly! Actions even more so, if they affect other people!

But again, things you want are just extensions of positive and negative valence qualia. "I like vanilla ice cream" and "I dislike chocolate ice cream" are not a matter of rationality or irrationality, and neither are any other preferences you have.

Accept them for what they are, and ignore people who want you not to.

"But what about—"

Yes, yes. Of course it is often helpful, for you or others, if your preferences are legible! Of course your preferences may be contradictory at times! Of course your preferences may not, in fact, all be things you endorse acting on at all times!

But they are not individually invalid just because you have other preferences, or reasons against acting on them, and they are certainly not invalid because someone else doesn't understand them. Legibility is for planning and coordination. It is not for justification.

If someone says "let's be logical about this" or tries to otherwise use "reason" to dissuade, or worse shame, a preference you have, they are not likely to be actually using reason, logic, or "rationality" to point to true things.

And if they do it specifically in a context where they are trying to change your preference to match their own, they may just be using authoritative words to manipulate you.

Please don't get mad at "logic" or "legibility," get mad at people attempting to gaslight you out of your preferences! It's not a different monster than guilt-tripping, it's the same monster wearing a "reason" skinsuit.

"Because I don't want to" is always, always, always a sufficient reason to not do something or not accept something others do that affects you.

This is not a get-out-of-jail-free card! Others may respond accordingly! Your wants do not by default override theirs! Maybe people will judge you for not being able to make your reasoning more legible to them, or feel like they can’t then cooperate with you in some circumstances. Social or legal consequences exist independent of you being true to yourself.

And, of course, you may have other preferences that could make you feel compelled to do things you predict would be unpleasant. But in every case, the first step that makes the most difference is accepting (and being willing to defend) what you want and don't want.

From there you become much more resistant to manipulations of all sorts, and can actually figure out what actions balance all your preferences, both short-term and long-term.

4) Your preferences do not need to fully match your community's.

Every culture and subculture has norms and expectations, things that get socially reinforced or punished, often through status. This is mostly not a conscious action, but the simple flow of how much people like each other; communities having norms is important, as it's the fundamental thing that makes their subculture more enjoyable for the sort of people who formed and gravitated to it in the first place.

But every norm can also act as a mechanism to pressure others into conformity, and if you let your sense of what's right or wrong be determined by a group, not only are you inviting blindspots, you're at risk of being manipulated, even by people with good intentions.

Sometimes people will come into a subculture or community and say "Hey, this place is great and all, but it would be much better if it did X instead of Y." Then maybe things get discussed and changed, or maybe most people in the community say "No thanks, we made this subculture in part to not have that sort of thing in it. We'd rather keep it as it is." If there’s enough fundamental preference mismatches there, then it might make sense to part ways with the community as a whole.[4]

These sorts of dialogue and outcomes are perfectly fine. But one of the surest signs of a High Control Group is discouraging communication with outsiders, whether by punishment or more subtly undermining the judgment of those outside the subculture. A lot of cultures have a norm of "don't air dirty laundry from the family to outsiders," and this helps hide a lot of abuse around the world.

"Don't ask your family/friends about this, they're not 'one of us,' they wouldn't understand... here, let me call our friend/priest/village elder, they can act as a neutral party!"

Is it always a red flag? Not necessarily; it is often true that subcultures have norms that work well for some people in them but not for everyone, and unfortunately larger cultures will often pathologize those differences by default. So an “inside” third-person perspective can be useful!

But both people should have a say in who gets asked, and if either side starts to insist that only their picks are smart or rational or wise enough to be trusted to have a good take, that's pretty sus. Additionally, it doesn't make any sense to do it for disagreeing preferences, as opposed to specific strategies for fulfilling preferences! Any time someone tries, substitute with “He’ll tell us if I’m right for preferring chocolate ice cream over vanilla” and see if that helps.

If instead they try to insist that you just don’t understand all the information needed to agree with them, guess what? Convincing you is their job as the supposedly more rational or better informed person. And that happens not by making you feel worse about yourself, nor by dressing up a browbeating with rationalist shibboleths, but by understanding your model of the world, identifying the different beliefs or values, finding cruxes, and so on.

If they can’t do that, why on earth should you give up on your preferences? In what bizarro world would that sort of acquiescence to someone else’s self-claimed authority be “rational?”

By all means, work to find solutions or compromises where you can, but do not let anyone else tell you that your wants are any more irrational than theirs. Not an entire community of people, nor an entire world of them. If your preferences are inherently self-destructive or self-defeating, the territory should demonstrate that, and there should be compelling examples to point to.

Sometimes preferences are too far out of reach from each other for a solution or compromise to be reached in reasonable timeframes. That sucks, but it happens. If so, the appropriate response may be to grieve, alone or together, and move on. Maybe just changing the nature of the relationship will be enough, or adjusting how much of each person's life is spent with one another.

And sometimes communities do in fact have explicit “preferences” that will cost people status just by having different ones. It might even be costly to find out what those diffuse preferences are, and especially daunting for people new to a community. But if you ultimately do discover that your preferences don’t fully line up with those, hopefully you can find others in the community who differ in the same way.

Meanwhile, if someone insists you’re just "not being rational enough" to concede to some preference or community norm? Separate entirely from all of the above, please be sure to check how representative they are in the community... and don't just trust people they selected to tell you.

Final Thoughts

As I said, this topic contains a lot of nuance that I don't have time to get into here. The frame above is one that treats values and preferences and desires as entirely separate from what’s “rational” in the spirit of the orthogonality thesis, distinguishing goals from capability-to-achieve-goals. There are other frames we could use, like one that highlights the ways our preferences, being the result of natural selection and behavioral conditioning, are thus fully rational expressions of our genetic predispositions given the complexity and superstimuli of the modern world.

My main intent here is to push back against narratives that try to shove “rationality” into particular value sets, rather than keeping it a pure expression of epistemic rigor and effective action evaluation. Believing that preferences indicate intellectual inadequacy is a subtle, and common, extension of that.

Different brains find different things enjoyable, and I wouldn't be surprised if there is some correlation between intelligence and what sorts of activities a person finds enjoyable for how long before they get bored or frustrated... but there is no value-gain in judging yourself or anyone else by generalities, or putting up with others who do.

Even if your literal job is to record preference correlations to form some Bayesian prior by which to evaluate applicants for a job or something, thinking that someone is less smart or less rational because of a preference is like judging someone’s cooking skills by whether they enjoy a fast food burger.

[1] If "I'm allowed to be irrational sometimes" is what helps you live a better, less stressful life, feel free to ignore all this and go about your day. My point is that, by my definition of rational, there are many, many circumstances where it is completely rational to decide to eat ice cream (or whatever) rather than donate to malaria nets (or whatever).

[2] I don't mean to oversimplify this, and could talk for hours on the topic. Wants are often influenced by beliefs! Feelings are often hard to put into legible words! Some people have trouble feeling anything they want as physical sensations, and some things we say we want are only things we think we should want. Beliefs and predictions that influence wants may be false or miscalibrated, but the feeling itself, the want itself, just is what it is, the same way sensations of hunger or heat just are what they are.

Also, "you either do or do not feel a want" is not the same as "you either do now or you never will." Preferences can, and inevitably often do, change over time from new experiences, or repetitions of similar experiences.

[3] A comment reminded me to highlight that this is an ongoing process. By no means are your emotional responses or preferences locked-in from some vague "past" or developmental experiences; people can and do change the way they feel about things and acquire new preferences over the course of their life. But in the moment, it is important to note that you cannot arbitrarily change your preferences.

[4] Though the implications of what it means to “leave” a community should themselves be unpacked. It implies that being part of a culture is all-or-nothing, which is more how cults operate than communities. Part of why it’s “healthier” to be part of multiple communities is that it creates a robustness against single-culture pressures. While some people might find only one community that deeply matches their ideals and preferences, it’s still extremely unlikely for one community to match every preference someone has. Instead, a healthy community should include multiple slightly different sub-cultures, so that people can shift from one to another if they feel their preferences aren’t sufficiently matched.

50 comments

Comments sorted by top scores.

comment by Jan_Kulveit · 2024-11-26T23:16:43.124Z · LW(p) · GW(p)

To add some nuance....

While I think this is a very useful frame, particularly for people who have oppressive legibility-valuing parts, and it is likely something many people would benefit from hearing, I doubt this is great as a descriptive model.

A model closer to reality, in my view, is that there isn't that sharp a difference between "wants" and "beliefs", and both "wants" and "beliefs" do update.

Wants are often represented by not very legible taste boxes, but these boxes do update upon being fed data. To continue an example from the post, let's talk about literal taste and ice cream. While whether you want or don't want or like or don't like an ice cream sounds like pure want, it can change, develop or even completely flip, based on what you do. There is the well known concept of acquired taste: maybe the first time you see a puerh ice cream on offer, it does not seem attractive. Maybe you taste it and still dislike it. But maybe, after doing it a few times, you actually start to like it. The output of the taste box changed. The box will likely also update if some flavour of ice cream is very high-status in your social environment; if you get horrible diarrhea from the meal you ate just before the ice cream; and in many other cases.

Realizing that your preferences can and do develop obviously opens the Pandora's box of actions which do change preferences.[1] The ability to do that breaks orthogonality. Feed your taste boxes slop and you may start enjoying slop. Surround yourself with people who do a lot of [x] and ... you may find you like and do [x] as well, not because someone told you "it's the rational thing to do", but because of learning, dynamics between your different parts, etc.

[1] Actually, all actions do!

↑ comment by DaystarEld · 2024-11-27T11:45:09.702Z · LW(p) · GW(p)

Completely agree, and for what it's worth, I don't think anything in the frame of my post contradicts these points.

"You either do or do not feel a want" is not the same as "you either do now or you never will," and I note that conditioning is also a cause of preferences, though I will edit to highlight that this is an ongoing process in case it sounds like I was saying it's all locked-in from some vague "past" or developmental experiences (which was not my intent).

↑ comment by SpectrumDT · 2024-11-29T12:57:08.218Z · LW(p) · GW(p)

Realizing that your preferences can and do develop obviously opens the Pandora's box of actions which do change preferences.[1] The ability to do that breaks orthogonality.

Could you please elaborate on how this "breaks orthogonality"? It is unclear to me what you think the ramifications of this are.

comment by johnswentworth · 2024-11-26T17:49:17.404Z · LW(p) · GW(p)

This post would be a LOT better with about half-a-dozen representative real examples you've run into.

Replies from: DaystarEld, avery-liu

↑ comment by DaystarEld · 2024-11-27T11:54:10.187Z · LW(p) · GW(p)

I'm a little confused. Do the examples in the post seem purely hypothetical to you? They're all real things I have encountered or heard from others:

- Whether or not it's rational to have ice cream (or other unhealthy indulgences).

- Whether or not wanting your high-earning partner to do housework is reasonable.

- Whether being hurt by unfiltered criticism or judgements is rational.

- Whether being mono vs open vs poly is a sign of rationality.

- Whether your career preference is a sign of how smart or rational you are.

Obviously not all are equally detailed, and I could always add more, but if it doesn't seem concrete enough yet, I'm not sure what else I should add or in how much detail to hit the target better.

Replies from: johnswentworth

↑ comment by johnswentworth · 2024-11-27T19:02:31.652Z · LW(p) · GW(p)

The ice cream snippets were good, but they felt too much like they were trying to be a relatively obvious not-very-controversial example of the problems you're pointing at, rather than a central/prototypical example. Which is good as an intro, but then I want to see it backed up by more central examples.

The dishes example was IMO the best in the post, more like that would be great.

Unfiltered criticism was discussed in the abstract, it wasn't really concrete enough to be an example. Walking through an example conversation (like the ice cream thing) would help.

Mono vs open vs poly would be a great example, but it needs an actual example conversation (like the ice cream thing), not just a brief mention. Same with career choice. I want to see how specifically the issues you're pointing to come up in those contexts.

(Also TBC it's an important post, and I'm glad you wrote it.)

Replies from: DaystarEld

↑ comment by DaystarEld · 2024-11-27T21:18:10.621Z · LW(p) · GW(p)

Makes sense! I probably will not have time to dedicate to do this properly over the next few days, but maybe after that.

↑ comment by KvmanThinking (avery-liu) · 2024-11-27T03:04:34.619Z · LW(p) · GW(p)

The above statement could be applied to a LOT of other posts too, not just this one.

comment by Richard_Kennaway · 2024-11-26T16:19:06.342Z · LW(p) · GW(p)

The key is recognizing that the preference itself is completely independent from rationality or intelligence.

The orthogonality thesis is also for human beings.

Replies from: jessica.liu.taylor

↑ comment by jessicata (jessica.liu.taylor) · 2024-11-27T01:46:17.908Z · LW(p) · GW(p)

I don't think so; even if it applies to the subset of hypothetical superintelligences that factor neatly into beliefs and values, humans don't seem to factorize this way (see Obliqueness Thesis, esp. argument from brain messiness).

comment by Algon · 2024-11-26T16:24:08.422Z · LW(p) · GW(p)

Reminds me of "Self-Integrity and the Drowning Child [LW · GW]" which talks about another kind of way that people in EA/rat communities are liable to hammer down parts of themselves.

comment by MinusGix · 2024-11-26T19:00:24.493Z · LW(p) · GW(p)

Beliefs and predictions that influence wants may be false or miscalibrated, but the feeling itself, the want itself, just is what it is, the same way sensations of hunger or heat just are what they are.

I think this may be part of the disconnect between me and the article. I often view the short jolt preferences (that you get from seeing an ice-cream shop) as heuristics, as effectively predictions paired with some simpler preference for "sweet things that make me feel all homey and nice". These heuristics can be trained to know how to weigh the costs, though I agree just having a "that's irrational" / "that's dumb" is a poor approach to it. Other preferences, like "I prefer these people to be happy" are not short-jolts but rather thought about and endorsed values that would take quite a bit more to shift—but are also significantly influenced by beliefs too.

Other values like "I enjoy this aesthetic" seem more central to your argument than short-jolts or considered values.

This is why you could view a smoker's preference for another cigarette as irrational: the 'core want' is just a simple preference for the general feel of smoking a cigarette, but the short-jolt preference has the added prediction of "and this will be good to do". But that added prediction is false and inconsistent with everything they know. The usual statement of "you would regret this in the future". Unfortunately, the short-jolt preference often has enough strength to get past the other preferences, which is why you want to downweight it.

So, I agree that there are various preferences where having them is disentangled from whether you're rational or not, but I also think most preferences are quite entangled with predictions about reality.

“inconsistent preferences” only makes sense if you presume you’re a monolithic entity, or believe your "parts" need to all be in full agreement all the time… which I think very badly misunderstands how human brains work.

I agree that humans can't manage this, but it does still make sense for a non-monolithic entity: you'd take an inconsistency as a sign that there's a problem, which is what people tend to do, even if it can't be fixed.

Replies from: Kaj_Sotala↑ comment by Kaj_Sotala · 2024-11-27T14:38:02.855Z · LW(p) · GW(p)

Commenting on a relatively isolated point in what you wrote; none of this affects your core point about preferences being entangled with predictions (actually it relies on it).

This is why you could view a smoker's preference for another cigarette as irrational: the 'core want' is just a simple preference for the general feel of smoking a cigarette, but the short-jolt preference has the added prediction of "and this will be good to do". But that added prediction is false and inconsistent with everything they know. The usual statement of "you would regret this in the future".

I think that the short-jolt preference's prediction is actually often correct; it's just over a shorter time horizon. The short-term preference predicts that "if I take this smoke, then I will feel better" and it is correct. The long-term preference predicts that "I will later regret taking this smoke," and it is also correct. Neither preference is irrational, they're just optimizing over different goals and timescales.

Now it would certainly be tempting to define rationality as something like "only taking actions that you endorse in the long term", but I'd be cautious of that. Some long-term preferences are genuinely that, but many of them are also optimizing for something looking good socially, while failing to model any of the genuine benefits of the socially-unpopular short-term actions.

For example, smoking a cigarette often gives smokers a temporary feeling of being in control, and if they are going out to smoke together with others, a break and some social connection. It is certainly valid to look at those benefits and judge that they are still not worth the long-term costs... but frequently the "long-term" preference may be based on something like "smoking is bad and uncool and I shouldn't do it and I should never say that there could be a valid reason to do it, for otherwise everyone will scold me".

Then by maintaining both the short-term preference (which continues the smoking habit) and the long-term preference (which might make socially-visible attempts to stop smoking), the person may be getting the benefit from smoking while also avoiding some of the social costs of continuing.

This is obviously not to say that the costs of smoking would only be social. Of course there are genuine health reasons as well. But I think that quite a few people who care about "health" actually care about not appearing low status by doing things that everyone knows are unhealthy.

Though even if that wasn't the case - how do you weigh the pleasure of a cigarette now, versus increased probability of various health issues some time in the future? It's certainly very valid to say that better health in the future outweighs the pleasure in the now, but there are also no objective criteria for why that should be; you could equally consistently put things the other way around.

So I don't think that smoking a cigarette is necessarily irrational in the sense of making an incorrect prediction. It's more like a correct but only locally optimal [LW · GW] prediction. (Though it's also valid to define rationality as something like "globally optimal behavior", or as the thing that you'd do if you got both the long-term and the short-term preference to see each other's points and then make a decision that took all the benefits and harms into consideration.)

Replies from: MinusGix, xpym↑ comment by MinusGix · 2024-11-27T17:54:33.689Z · LW(p) · GW(p)

I define rationality as "more in line with your overall values". There are problems here, because people do profess social values that they don't really hold (in some sense), but roughly it is what they would reflect on and come up with.

Someone could value the short-term more than the long-term, but I think that most don't. I'm unsure if this is a side-effect of Christianity-influenced morality or just a strong tendency of human thought.

Locally optimal is probably the correct framing, but it is still irrational relative to whatever idealized values the individual would have. Just like how a hacky approximation of a Chess engine is irrational relative to Stockfish: they both can be roughly considered to have the same goal, just one has various heuristics and short-term thinking that hampers it. These heuristics can be essential, as it runs with less processing power, but in the human mind they can be trained and tuned.

Though I do agree that smoking isn't always irrational: I would say smoking is irrational for the supermajority of human minds, however. The social negativity around smoking may be what influences them primarily, but I'd consider that just another fragment of being irrational: more than 90% of them would have a value for their health, but they are varying levels of poor at weighting the costs, and the social negativity response is easier for the mind to emulate. Especially since they might see people walking around them while they're out taking a cigarette. (Of course, the social approval is some part of a real value too; though people have preferences about which social values they give in to.)

↑ comment by xpym · 2024-11-29T10:06:38.830Z · LW(p) · GW(p)

Now it would certainly be tempting to define rationality as something like “only taking actions that you endorse in the long term”, but I’d be cautious of that.

Indeed, and there's another big reason for that - trying to always override your short-term "monkey brain" impulses just doesn't work that well for most people. That's the root of akrasia, which certainly isn't a problem that self-identified rationalists are immune to. What seems to be a better approach is to find compromises, where you develop workable long-term strategies which involve neither unlimited amounts of proverbial ice cream, nor total abstinence.

But I think that quite a few people who care about “health” actually care about not appearing low status by doing things that everyone knows are unhealthy.

Which is a good thing, in this particular case, yes? That's cultural evolution properly doing its job, as far as I'm concerned.

Replies from: Kaj_Sotala↑ comment by Kaj_Sotala · 2024-11-29T11:25:24.962Z · LW(p) · GW(p)

Indeed, and there's another big reason for that - trying to always override your short-term "monkey brain" impulses just doesn't work that well for most people.

+1.

Which is a good thing, in this particular case, yes?

Less smoking does seem better than more smoking. Though generally it doesn't seem to me like social stigma would be a very effective way of reducing unhealthy behaviors - lots of those behaviors are ubiquitous despite being somewhat low-status. I think the problem is at least threefold:

- As already mentioned, social stigma tends to cause optimization to avoid having the appearance of doing the low-status thing, instead of optimization to avoid doing the low-status thing. (To be clear, it does cause the latter too, but it doesn't cause the latter anywhere near exclusively.)

- Social stigma easily causes counter-reactions where people turn the stigmatized thing into an outright virtue, or at least start aggressively holding that it's not actually that bad.

- Shame makes things wonky in various ways. E.g. someone who feels they're out of shape may feel so much shame about the thought of doing badly if they try to exercise, they don't even try. For compulsive habits like smoking, there's often a loop where someone feels bad, turns to smoking to feel momentarily better, then feels even worse for having smoked, then because they feel even worse they are drawn even more strongly into smoking to feel momentarily better, etc.

I think generally people can maintain healthy habits much more consistently if their motivation comes from genuinely believing in the health benefits and wanting to feel better. But of course that's harder to spread on a mass scale, especially since not everyone actually feels better from healthy habits (e.g. some people feel better from exercise but some don't).

Then again, for the specific example of smoking in particular, stigma does seem to have reduced the amount of it (in part due to mechanisms like indoor smoking bans), so sometimes it does work anyway.

Replies from: xpym↑ comment by xpym · 2024-11-29T12:18:30.720Z · LW(p) · GW(p)

Though generally it doesn’t seem to me like social stigma would be a very effective way of reducing unhealthy behaviors

I agree, as far as it goes, but surely we shouldn't be quick to dismiss stigma, as uncouth as it might seem, if our social technology isn't developed enough yet to actually provide any very effective approaches instead? Humans are wired to care about status a great deal, so it's no surprise that traditional enforcement mechanisms tend to lean heavily into that.

I think generally people can maintain healthy habits much more consistently if their motivation comes from genuinely believing in the health benefits and wanting to feel better.

Humans are also wired with hyperbolic discounting, which doesn't simply go away when you brand it as an irrational bias. (I do in general feel that this community is too quick to dismiss "biases" as "irrational", they clearly were plenty useful in the evolutionary environment, and I'd guess still aren't quite as obsolete as the local consensus would have it, but that's a different discussion.)

comment by eukaryote · 2024-11-27T21:02:59.831Z · LW(p) · GW(p)

I really like this post. Thanks for explaining a complicated thing well!

I think this dynamic in relationships, especially in a more minor form, sometimes emerges from a thing where, like ... Especially if you're used to talking with your partner about brains and preferences and philosophy and rationality and etc - like, a close partner who you hang out day-to-day with is interesting! You get access to someone else making different decisions than you'd make, with different heuristics!

When you want to do something hedonic with potential downsides, you know you've thought about the tradeoffs. You're making a rational decision (of course). But this other person? Well, what's going on in their head? And you ask them and they can't immediately explain their process in a way that makes sense to you? Well, let's get into that! You care about them! What if they're making a mistake?

This isn't always bad. Sometimes this can be an interesting and helpful exploration to do together. The thing is that from the other side, this can be indistinguishable from "my partner demands I justify things that make me happy and then criticizes whatever I say", which sucks incredibly and is bad.

If you think you might be the offending partner in this particular situation, some surface-level ideas for not getting to that point:

- Get a sense of the other person, and how into this kind of thing, as applied to them, they actually are. You can ask them outright but probably also want a vibe of like "do they participate enthusiastically and non-defensively".

- People also often have boundaries or topics they're sensitive about. For instance, a lot of women have been policed obnoxiously and repeatedly about their weight and staying attractive - for the ice cream example in particular this could be a painful thing to stray into. Everyone's are different, you probably have your own, keep this in mind.

- Interrogate your own preferences vocally and curiously as often as you do theirs.

- Are you coming at it from a place of curiosity and observation? Like, you're going to support them in doing whatever they want and just go like "huh, people are so interesting, I love you in all your manifold complexity" even if you don't ultimately understand, right?

- If you think you might be doing this in the moment, pause and ask your interlocutor if they're okay with this and if they're feeling judged. Perhaps reaffirm that you're not doing this as a criticism. (If you are doing it as a criticism, that's kind of beyond the scope of this comment, but refer to the original post + ask them and yourself if this is the time and place, and if it's any of your business.)

- Remember whatever you learned from last time and don't keep having the same conversation. Also, don't do it all the time.

↑ comment by DaystarEld · 2024-11-28T16:31:05.805Z · LW(p) · GW(p)

Excellent points! Yes, this is definitely a fun and interesting thing to engage with intellectually so long as both people feel like it's being done in a way that isn't judgmental or agenda-driven. Part of why I included the paragraphs about non-filtering being hard for some people is that I know there are some brains for which this genuinely doesn't feel like it "should" be hostile or pressurey, since they don't perceive it that way... but as in all things, that's why your point about actually paying attention to what the other person says and taking it seriously is so important.

↑ comment by kave · 2024-11-27T22:32:07.517Z · LW(p) · GW(p)

as applied to them

A slight nitpick: I think this treats their liking of the activity applied to them as a scalar, but I think it's also plausibly a function of how you, the applier, go about it. Like maybe they are very into this activity as applied to them, but not in the way you do it.

Replies from: eukaryote↑ comment by SpectrumDT · 2024-11-29T13:01:16.067Z · LW(p) · GW(p)

which sucks incredibly and is bad.

Your wording here makes me curious: Are you saying the same thing twice here, or are you saying two different things? Does the phrase "X sucks" mean the same thing to you as "X is bad", or is there a distinction?

Replies from: eukaryote↑ comment by eukaryote · 2024-11-29T20:30:38.809Z · LW(p) · GW(p)

Mostly saying the same thing twice, a rhetorical flourish. I guess just really doubling down on how this is not good, in case the reader was like "well this sucks incredibly but maybe there's a good upside" and then got to the second part and was like "ah no I see now it is genuinely bad", or vice versa.

comment by Dagon · 2024-11-26T17:41:38.167Z · LW(p) · GW(p)

I'd enjoy some acknowledgement that there IS an interplay between cognitive beliefs (based on intelligent modeling of the universe and other people) and intuitive experienced emotions. "not a monocausal result of how smart or stupid they are" does not imply total lack of correlation or impact. Nor does it imply that cognitive ability to choose a framing or model is not effective in changing one's aliefs and preferences.

I'm fully onboard with countering the bullying and soldier-mindset debate techniques that smart people use against less-smart (or equally-smart but differently-educated) people. I don't buy that everyone is entitled to express and follow any preferences, including anti-social or harmful-to-others beliefs. Some things are just wrong in modern social contexts.

Replies from: DaystarEld↑ comment by DaystarEld · 2024-11-26T22:54:29.825Z · LW(p) · GW(p)

To your first point, I do believe the post covers this; specifically, the idea that e.g. frames and predictions can be mistaken, and correcting those mistakes can change emotional reactivity. Is that not what you mean?

For the second point... if they follow their preferences, they are acting, and if they are wrong it's because it causes harm, no? I do not believe preferences themselves, or expressing them, should ever be considered wrong; that seems an artifact of Puritanical norms and fears.

Replies from: xpym↑ comment by xpym · 2024-11-29T10:34:47.167Z · LW(p) · GW(p)

I do not believe preferences themselves, or expressing them, should ever be considered wrong

Suppose that you have a preference for inflicting suffering on others. You also have a preference for being a nice person, that other people enjoy the company of. Clearly those preferences would be in constant conflict, which would likely cause you discomfort. This doesn't mean that either of those preferences is "bad", in a perfectly objective cosmic sense, but such a definition of "bad" doesn't seem particularly useful.

Replies from: DaystarEld↑ comment by DaystarEld · 2024-11-29T11:09:53.405Z · LW(p) · GW(p)

The implication that the preference itself is bad only works with assumptions that the preference will cause harm, to yourself or others, even if you don't act on it. But I don't think this is always true; it's often a matter of degree or context, and how the person's inner life works.

We could certainly say it is inconvenient or dysfunctional to have a preference that causes suffering for the self or others, and maybe that's what you mean by "bad." But this still doesn't justify the assertion that "expressing" the preference is "wrong." That's the thing that feels particularly presumptuous, to me, about how preferences should be distinguished from actions.

Replies from: xpym↑ comment by xpym · 2024-11-29T12:00:50.861Z · LW(p) · GW(p)

But I don’t think this is always true

Neither do I, of course, but my impression was that you thought this was never true.

But this still doesn’t justify the assertion that “expressing” the preference is “wrong.”

I do agree that the word "wrong" doesn't feel appropriate here, something like "ill-advised" might work better instead. If you're a sadist, or a pedophile, making this widely known is unlikely to be a wise course of action.

Replies from: DaystarEld↑ comment by DaystarEld · 2024-12-15T01:03:46.954Z · LW(p) · GW(p)

Right, those words definitely seem more accurate to me!

comment by David Gross (David_Gross) · 2024-11-26T15:56:33.229Z · LW(p) · GW(p)

Wants are emergent, complex forms of pain and pleasure. They are either felt or they are not felt, and reason only comes in at the stage of deciding what to do about them.

Are you really certain that one's desires are just givens that one has no rational influence over? I'm skeptical.

https://www.lesswrong.com/posts/aQQ69PijQR2Z64m2z/notes-on-temperance#Can_we_shape_our_desires_

Replies from: DaystarEld↑ comment by DaystarEld · 2024-11-26T16:19:02.128Z · LW(p) · GW(p)

I don't see how your question contradicts my statement, nor that link. People absolutely develop in their desires over time, and can change them, but that is not the same as being able to decide, in the moment, that you do not like the taste of pizza if your tongue is having the sensory experience of enjoying it.

comment by cubefox · 2024-12-05T05:23:23.792Z · LW(p) · GW(p)

Let me politely disagree with this post. Yes, often desires ("wants") are neither rational nor irrational, but that's far from always the case. Let's begin with this:

But the fundamental preferences you have are not about rationality. Inconsistent actions can be irrational if they’re self-defeating, but “inconsistent preferences” only makes sense if you presume you’re a monolithic entity, or believe your "parts" need to all be in full agreement all the time… which I think very badly misunderstands how human brains work.

In the above quote you could simply replace "preferences" with "beliefs". The form of argument wouldn't change, except that you now say (absurdly) that beliefs, like preferences, can't be irrational. I disagree with both.

One example of irrational desires is Akrasia (weakness of will). This phenomenon occurs when you want something (eat unhealthy, procrastinate, etc) but do not want to want it. In this case the former desire is clearly instrumentally irrational. This is a frequent and often serious problem and adequately labeled "irrational".

Note that this is perfectly compatible with the brain having different parts: E.g.: The (rather stupid) cerebellum wants to procrastinate, the (smart) cortex wants to not procrastinate. When in contradiction, you should listen to your cortex rather than to your cerebellum. Or something like that. (Freud called the stupid part of the motivation system the "id" and the smart part the "super ego".)

Such irrational desires are not reducible to actions. An action can fail to obtain for many reasons (perhaps it presupposed false beliefs) but that doesn't mean the underlying desire wasn't irrational.

Wants are not beliefs. They are things you feel.

Feelings and desires/"wants" are not the same. It's the difference between hedonic and preference utilitarianism. Desires are actually more similar to beliefs, as both are necessarily about something (the thing which we believe or desire), whereas feelings can often just be had, without them being about anything. E.g. you can simply feel happy without being happy about something specific. (Philosophers call mental states that are about something "intentional states" or "propositional attitudes".)

Moreover, sets of desires, just like sets of beliefs, can be irrational ("inconsistent"). For example, if you want x to be true and also want not-x to be true. That's irrational, just like believing x while also believing not-x. A more complex example from utility theory: If $P$ describes your degrees of belief in various propositions, and $U$ describes your degrees of desire that various propositions are true, and $P(A \land B) = 0$, then $P(A \lor B)\,U(A \lor B) = P(A)\,U(A) + P(B)\,U(B)$. In other words, if you believe two propositions to be mutually exclusive, your expected desire for their disjunction should equal the sum of your expected desires for the individual propositions, a form of weighted average.
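
To make the "weighted average" reading explicit, here is a short worked sketch. The notation is an assumption made for illustration: $P$ stands for degrees of belief, $U$ for degrees of desire, and $A$, $B$ are two propositions believed to be mutually exclusive. The numbers in the last line are hypothetical.

```latex
\begin{align*}
P(A \land B) = 0 \;&\Rightarrow\; P(A \lor B) = P(A) + P(B) \\
P(A \lor B)\,U(A \lor B) &= P(A)\,U(A) + P(B)\,U(B) \\
U(A \lor B) &= \frac{P(A)}{P(A) + P(B)}\,U(A) + \frac{P(B)}{P(A) + P(B)}\,U(B)
  \qquad \text{(assuming } P(A \lor B) > 0\text{)} \\
\text{e.g. } P(A) = 0.2,\; P(B) = 0.3,\; U(A) = 10,\; U(B) = 0
  \;&\Rightarrow\; U(A \lor B) = \tfrac{0.2}{0.5}\cdot 10 + \tfrac{0.3}{0.5}\cdot 0 = 4
\end{align*}
```

The two weights sum to 1, so the desirability of the disjunction always lies between the desirabilities of the two disjuncts. A set of desires that violated this would be inconsistent in the sense described above.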

comment by Michael Cohn (michael-cohn) · 2024-11-29T14:58:17.855Z · LW(p) · GW(p)

I'm a little surprised by how you view the subtext of the ice cream example. If I imagine myself in either role, I would not interpret Bryce as saying Ash shouldn't like ice cream in some very base sense. I interpret conversations like that as meaning either:

1) "You might have a desire for X but you shouldn't indulge that desire because it has net bad consequences"

or

2) "If you knew all the negative things that X causes, it would spoil your enjoyment of it and you wouldn't be attracted to it anymore."

or

3) "If you knew all the negative things that X causes, your hedonic attraction to the better world that not-x would create would outweigh your hedonic attraction to the experience of X."

Those can all create some kind of unhealthy or manipulative dynamic but I don't see them as the same thing you're saying, which is more like 4) "it's wrong and stupid to enjoy the physical sensation of eating ice cream."

Do you agree with my reading that 1-3 are different from what you're talking about, or do you think they're included within it?

Replies from: DaystarEld↑ comment by DaystarEld · 2024-11-30T00:45:40.672Z · LW(p) · GW(p)

I agree that those are the thoughts at the surface-level of Bryce in those situations, and they are not the same as "it's wrong/stupid to enjoy eating ice cream."

But I think in many cases, they often do imply "and you are stupid/irrational if knowing these things does not spoil your enjoyment or shift your hedonic attractor." And even if Bryce genuinely doesn't feel that way, I hope they would still be very careful with their wording to avoid that implication.

Replies from: michael-cohn↑ comment by Michael Cohn (michael-cohn) · 2024-12-01T14:51:41.067Z · LW(p) · GW(p)

Thanks, that clears up a lot for me! And it makes me think that the perspective you encourage has a lot of connections to other important habits of mind, like knowing how to question automatic thoughts and system 1 conclusions without beating yourself up for having them.

comment by FlorianH (florian-habermacher) · 2024-11-26T22:52:41.480Z · LW(p) · GW(p)

Taking what you write as excuse to nerd a bit about Hyperbolic Discounting

One way to paraphrase esp. some of your ice cream example:

Hyperbolic discounting - the habit of valuing this moment a lot while abruptly (not smoothly exponentially) discounting everything coming even just a short while after - may in a technical sense be 'time inconsistent', but it's misguided to call it 'irrational' in the common usage of the term: My current self may simply care about itself distinctly more than about the future selves, even if some of these future selves are forthcoming relatively soon. It's my current self's preference structure, and preferences are not rational or irrational, basta.

I agree and had been thinking this, and I find it an interesting counterpoint to the usual description of hyperbolic discounting as 'irrational'.

It is a bit funny also as we have plenty of discussions trying to explain when/why some hyperbolic discounting may actually be "rational" (ex. here, here, here), but I've not yet seen any so fundamental (and simple) rejection of the notion of irrationality (though maybe I've just missed it so far).

(Then, with their dubious habits of using common terms in subtly misleading ways, fellow economists may rebut that we have simply defined irrationality in this debate as meaning to have non-exponential alias time-inconsistent preferences, justifying the term 'irrationality' here quasi by definition)
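
A minimal sketch of what "time inconsistent" means in the hyperbolic discounting paraphrase above, under stated assumptions: the functional forms `value / (1 + k * delay)` and `value * delta ** delay` are the textbook ones, and the reward sizes, delays, `k`, and `delta` are hypothetical values picked only for illustration. The function names are likewise made up for this example.

```python
def hyperbolic(value, delay, k=1.0):
    """Present value under hyperbolic discounting: value / (1 + k * delay)."""
    return value / (1.0 + k * delay)


def exponential(value, delay, delta=0.6):
    """Present value under exponential discounting: value * delta ** delay."""
    return value * delta ** delay


def prefers_later(discount, small, large, t_small, t_large):
    """True if the larger-later reward has the higher discounted value."""
    return discount(large, t_large) > discount(small, t_small)


SMALL, LARGE = 10.0, 15.0  # hypothetical reward sizes; LARGE arrives one period after SMALL

for name, discount in [("hyperbolic", hyperbolic), ("exponential", exponential)]:
    # Same pair of rewards, evaluated when the small one is available now
    # versus when both are still ten periods away.
    now = prefers_later(discount, SMALL, LARGE, 0, 1)
    far = prefers_later(discount, SMALL, LARGE, 10, 11)
    print(f"{name}: waits for larger reward when immediate={now}, when distant={far}")
```

With these numbers the hyperbolic discounter plans to wait for the larger reward while both are far off, then switches to the smaller one once it is immediately available. The exponential discounter ranks the two options the same way at both distances. That reversal is all "time inconsistent" refers to; whether it deserves the label "irrational" is exactly what the comment disputes.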

comment by Matt Goldenberg (mr-hire) · 2024-11-26T16:27:34.737Z · LW(p) · GW(p)

Do you think wants that arise from conscious thought processes are equally valid to wants that arise from feelings? How do you think about that?

Replies from: DaystarEld, AnthonyC↑ comment by DaystarEld · 2024-11-26T16:46:02.971Z · LW(p) · GW(p)

I think wants that arise from conscious thought are, fundamentally, wants that arise from feelings attached to those conscious thoughts. The conscious thought processes may be mistaken in many ways, but they still evoke memories or predictions that trigger emotions associated with imagined world-states, which translate to wants or not-wants.

↑ comment by AnthonyC · 2024-11-26T16:50:50.577Z · LW(p) · GW(p)

Good question. Curious to hear what the OP thinks, too.

Personally I'm not convinced that the results of a conscious process are actually "wants" in the sense described here, until they become more deeply internalized. Like, obviously if I want ice cream it's partly because at some point I consciously chose to try it and (plausibly) wanted to try it. But I don't know that I can choose to want something, as opposed to putting myself in the position of choosing something with the hope or expectation that I will come to want it.

The way I think about it, I can choose to try things, or do things. I can choose to want to want things, or want to like things. As I try and do the things I want to want and like, I may come to want them. I can use various techniques to make those subconscious changes faster, easier, or more likely. But I don't think I can choose to want things.

I do think this matters, because in the long run, choosing to not do or get the things you want, in favor of the things you consciously think you should want, or want to want, but don't, is not good for mental health.

comment by Going Durden (going-durden) · 2024-12-05T14:47:05.822Z · LW(p) · GW(p)

My take is that a lot of wants, if followed, run afoul of the Cigarette Principle: "If you smoke enough cigarettes, you will die and become unable to smoke cigarettes".

Or to expand it, following irrational wants quite often leads to outcomes so bad as to more than negate the pleasure derived from fulfilling the want, quite often to the point of making the future happiness from following such a want impossible, or very unlikely.

The problem is, the vast majority of wants, if pursued with anything less than rational moderation, lead to a form of the Cigarette Principle, the prime example being that the main cause of death in modern times is lifestyle-related cardiac failure. Thus preferences should be considered internally suspicious and examined carefully for traps, rather than reflexively defended.

People are relatively good at spotting when Thing I Want and Thing That's Good For Me are the same thing, but bad at seeing when these things are misaligned, so the best course of action is to consciously train yourself to like or unlike things based on whether they are Cigarettes in disguise or not.

comment by japancolorado (russell-white) · 2024-12-01T21:49:17.316Z · LW(p) · GW(p)

“Well at least explain why you want the ice cream," an increasingly frustrated Bryce may say. “You have to have a reason for it, right?"

"You just want me to give a reason?"

"Yeah, it doesn't make sense to me."

"The reason is it tastes good and will make me happy."

"Those don't seem like actual reasons to have ice cream specifically. If I find you something tasty but healthier, you'd have that instead, right?"

"Maybe? But I actually just want the ice cream right now."

"Okay, but let's look at this logically..."

This example seems like it's not proving the point set out; Bryce doesn't seem to be critiquing his partner's desires for something that tastes good and makes her happy (her wants), but rather the action of buying ice cream that fulfills those goals. He's assuming that she also shares a value/desire for good health (which I would say is a reasonable assumption for most people) and Bryce tries to lay out an alternate course of action that would fulfill all those values.

This seems like a specific instance of a broader issue throughout the post, which is that want is sometimes used to mean an intrinsic value and sometimes used to mean an instrumental value, making the treatment of both confused. Intrinsic/terminal values can't be irrational, like you've said, but instrumental values certainly can be. If you have a terminal value of being happy and content in life, I would say that wanting money would be an instrumentally irrational value/desire.

In the ice cream narrative above, Bryce is critiquing her instrumental values rather than her terminal values, and in theory I don't see much wrong with that. If she explains her reasoning behind choosing ice cream as a terminal value and Bryce still rejects it, or if it turns out she just intrinsically values eating ice cream, then that is a problem for their relationship.

In fact, I think it's the last two sentences that make the exchange problematic. It seems like she is expressing an illegible preference for ice cream that Bryce is just dismissing.

Replies from: DaystarEld↑ comment by DaystarEld · 2024-12-03T20:55:09.461Z · LW(p) · GW(p)

It is the case that Bryce is, ostensibly, just trying to help Ash fulfill their terminal goals while being healthier. The problem is that Bryce presumes that of the available action space, ice cream is fungible for something else that is healthier, and does not listen when Ash reasserts that ice cream itself is the thing they want.

Just because it is a safe bet that Ash will share the value/desire for good health does not mean Ash must prioritize good health in every action they take.

Replies from: russell-white↑ comment by japancolorado (russell-white) · 2024-12-04T07:21:05.395Z · LW(p) · GW(p)

I agree with that. I think that the general ick that I get from the dialogue is the presumption and general tone of Bryce. Thanks for clarifying!

comment by SpectrumDT · 2024-11-29T12:55:02.643Z · LW(p) · GW(p)

And sometimes communities do in fact have explicit “preferences” that will cost people status just by having different ones. It might even be costly to find out what those diffuse preferences are, and especially daunting for people new to a community.

Could you please give some examples of this? It is unclear to me what kind of things you are talking about here.

Replies from: DaystarEld↑ comment by DaystarEld · 2024-11-30T01:05:30.709Z · LW(p) · GW(p)

Sure. So, some workplaces have implicit cultural norms that aren't written down but are crucial for career advancement. Always being available and responding to emails quickly might be an unspoken expectation, or participating in after-work social events might not be mandatory, but skipping them would be noted and count against people looking for promotion. Certain dress codes or communication styles might be rewarded or penalized beyond their actual professional relevance.

In a community, this usually comes as a form of purity testing of some kind, but can also be related to preferences around how you socialize or what you spend your time doing. If you're in a community that thinks sex-work is low status, for example, and you want to ask if that's true... just asking might in fact be costly, because it might clue people in to your potential interest in doing it.

Does that make sense?

↑ comment by SpectrumDT · 2024-12-02T12:12:29.987Z · LW(p) · GW(p)

Yes. Thanks. Good explanation.

comment by exmateriae (Sefirosu) · 2024-11-28T16:38:18.983Z · LW(p) · GW(p)

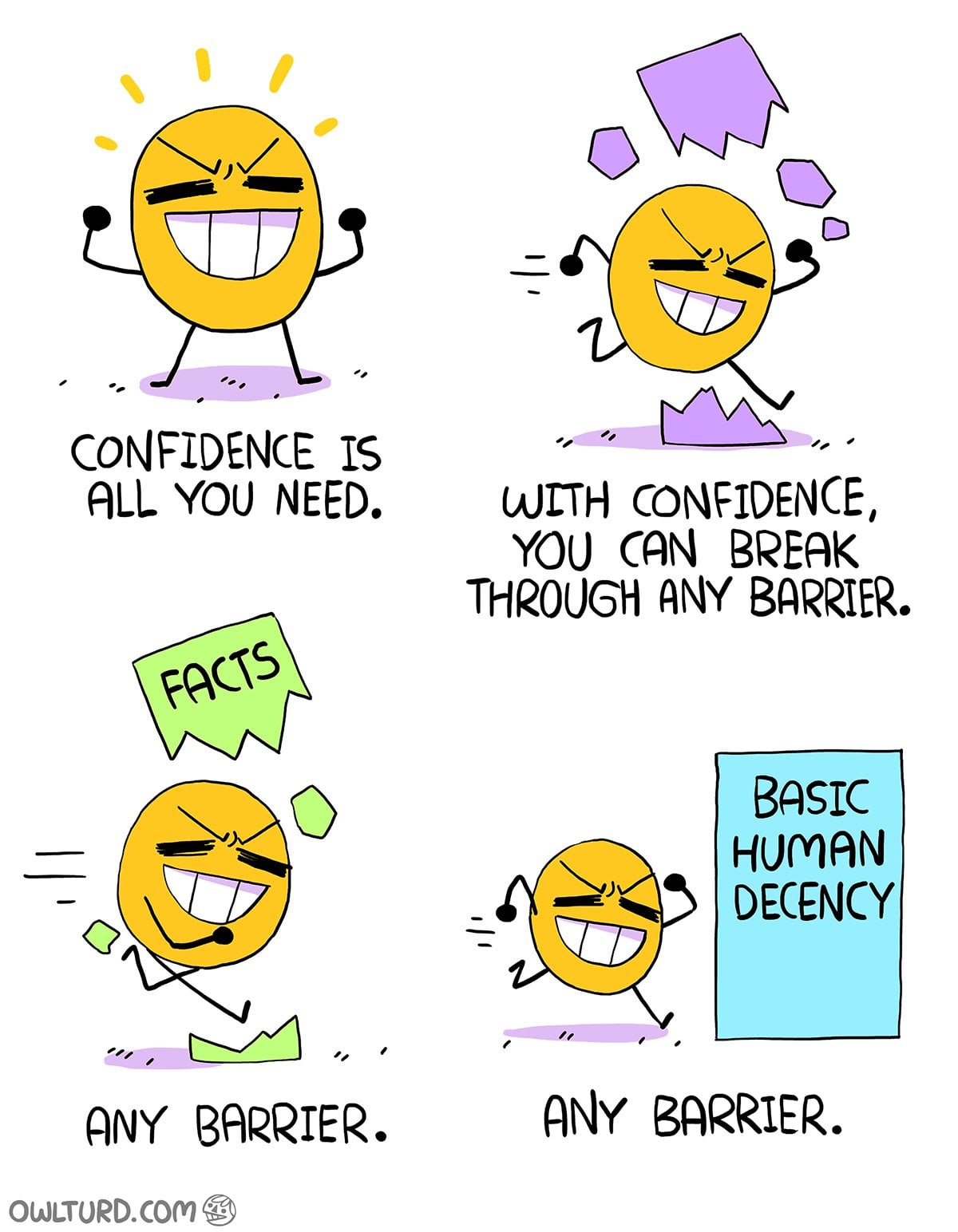

Love the drawings! I feel like more drawings would make this community easier to understand to outsiders.

I'm guilty of n°3 when people defend some hyped products while saying untrue things about how they are better than the competition. An obvious example is the claim that Apple's products are "doing something no other phone does" when the functionality in question was introduced 7 years ago on Android. It's not exactly the same, since they do not frame it as a preference but try to rationalize it, but I'll remember this next time and be nicer.

Replies from: DaystarEld↑ comment by DaystarEld · 2024-11-28T17:53:01.455Z · LW(p) · GW(p)

Ah, yeah I definitely struggle a bit sometimes with people who make objective-assertion-type-statements when promoting or defending things they enjoy. I also gain quite a lot of enjoyment from looking at various kinds of media with a critical eye; I just do my best to keep that criticism in contexts where the listener or reader wants to share it :)

comment by Joachim Bartosik (joachim-bartosik) · 2024-11-27T12:01:19.706Z · LW(p) · GW(p)

If they can’t do that, why on earth should you give up on your preferences? In what bizarro world would that sort of acquiescence to someone else’s self-claimed authority be “rational?”

Well if they consistently make recommendations that in retrospect end up looking good then maybe you're bad at understanding. Or maybe they're bad at explaining. But trusting them when you don't understand their recommendation is exploitable so maybe they're running a strategy where they deliberately make good recommendations with poor explanations so when you start trusting them they can start mixing in exploitative recommendations (which you can't tell apart because all recommendations have poor explanations).

So I'd really rather not do that in a community context. There are ways to work with that. E.g., a boss can skip some details of an employee's recommendations and, if results are bad enough, fire the employee. On the other hand, I think it's pretty common for an employee to act in their own interest. But yeah, we're talking about the principal-agent problem at that point, and tradeoffs around what's more efficient...

comment by Isekka · 2024-11-28T18:57:52.446Z · LW(p) · GW(p)

This will be the last post LessWrong ever needs about self-management/how to deal with preferences, if people now just stop posting instruction manuals for how to repress your id and fake more socially desirable preferences to LessWrong. Which of course won't happen.