Self-Integrity and the Drowning Child

post by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2021-10-24T20:57:01.742Z · LW · GW · 91 comments

(Excerpted from "mad investor chaos and the woman of asmodeus", about an unusually selfish dath ilani, "Keltham", who dies in a plane accident and ends up in Cheliax, a country governed by D&D!Hell. Keltham is here remembering an incident from his childhood.)

And the Watcher told the class a parable, about an adult, coming across a child who'd somehow bypassed the various safeguards around a wilderness area, and fallen into a muddy pond, and seemed to be showing signs of drowning (for they'd already been told, then, what drowning looked like). The water, in this parable, didn't look like it would be over their own adult heads. But - in the parable - they'd just bought some incredibly-expensive clothing, costing dozens of their own labor-hours, and less resilient than usual, that would be ruined by the muddy water.

And the Watcher asked the class if they thought it was right to save the child, at the cost of ruining their clothing.

Everyone in there moved their hand to the 'yes' position, of course. Except Keltham, who by this point had already decided quite clearly who he was, and who simply closed his hand into a fist, otherwise saying neither 'yes' nor 'no' to the question, defying it entirely.

The Watcher asked him to explain, and Keltham said that it seemed to him that it was okay for an adult to take an extra fifteen seconds to strip off all their super-expensive clothing and then jump in to save the child.

The Watcher invited the other children to argue with Keltham about that, which they did, though Keltham's first defense, that his utility function was what it was, had not been a friendly one, or inviting of further argument. But they did eventually convince Keltham that, especially if you weren't sure you could call in other help or get attention or successfully drag the child's body towards help, if that child actually did drown - meaning the child's true life was at stake - then it would make sense to jump in right away, not take the extra risk of waiting another quarter-minute to strip off your clothes, and bill the child's parents' insurance for the cost. Or at least, that was where Keltham shifted his position, in the face of that argumentative pressure.

Some kids, at that point, questioned the Watcher about this actually being a pretty good point, and why wouldn't anyone just bill the child's parents' insurance.

To which the Watcher asked them to consider hypothetically the case where insurance refused to pay out in cases like that, because it would be too easy for people to set up 'accidents' letting them bill insurances - not that this precaution had proven to be necessary in real life, of course. But the Watcher asked them to consider the Least Convenient Possible World where insurance companies, and even parents, did need to reason like that; because there'd proven to be too many master criminals setting up 'children at risk of true death from drowning' accidents that they could apparently avert and claim bounties on.

Well, said Keltham, in that case, he was going right back to taking another fifteen seconds to strip off his super-expensive clothes, if the child didn't look like it was literally right about to drown. And if society didn't like that, it was society's job to solve that thing with the master criminals. Though he'd maybe modify that if they were in a possible-true-death situation, because a true life is worth a huge number of labor-hours, and that part did feel like some bit of decision theory would say that everyone would be wealthier if everyone would sacrifice small amounts of wealth to save huge amounts of somebody else's wealth, if that happened unpredictably to people, and if society was also that incompetent at setting up proper reimbursements. Though if it was like that in real life instead of the Least Convenient Possible World, it would mean that Civilization was terrible at coordination and it was time to overthrow Governance and start over.

This time the smarter kids did not succeed in pushing Keltham away from his position, and after a few more minutes the Watcher called a halt to it, and told the assembled children that they had been brought here today to learn an important lesson from Keltham about self-integrity.

Keltham is being coherent, said the Watcher.

Keltham's decision is a valid one, given his own utility function (said the Watcher); you were wrong to try to talk him into thinking that he was making an objective error.

It's easy for you to say you'd save the child (said the Watcher) when you're not really there, when you don't actually have to make the sacrifice of what you spent so many hours laboring to obtain, and would you all please note how none of you even considered whether or not to spend a quarter-minute stripping off your clothes, or whether to try to bill the child's parents' insurance. Because you were too busy showing off how Moral you were, and how willing to make Sacrifices. Maybe you would decide not to do it, if the fifteen seconds were too costly; and then, any time you spent thinking about it, would also have been costly; and in that sense it might make more sense given your own utility functions (unlike Keltham's) to rush ahead without taking the time to think, let alone the time to strip off your expensive fragile clothes. But labor does have value, along with a child's life; and it is not incoherent or stupid for Keltham to weigh that too, especially given his own utility function - so said the Watcher.

Keltham did have enough dignity, by that point in his life, not to rub it in or say 'told you so' to the other children, as this would have distracted them from the process of updating.

The Watcher spoke on, then, about how most people have selfish and unselfish parts - not selfish and unselfish components in their utility function, but parts of themselves in some less Law-aspiring way than that. Something with a utility function, if it values an apple 1% more than an orange, if offered a million apple-or-orange choices, will choose a million apples and zero oranges. The division within most people into selfish and unselfish components is not like that, you cannot feed it all with unselfish choices whatever the ratio. Not unless you are a Keeper, maybe, who has made yourself sharper and more coherent; or maybe not even then, who knows? For (it was said in another place) it is hazardous to non-Keepers to know too much about exactly how Keepers think.
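
To make the apple-versus-orange claim concrete, here is a minimal Python sketch; it is not part of the original text. The function names and the values 1.01 and 1.00 are illustrative assumptions. The only thing it is meant to show is that a pure utility maximizer compares the two values and takes the larger one every single time, so a 1% edge in value never becomes a 1% share of oranges.

```python
# Illustrative sketch only: names and numbers are assumptions, not from the post.

def choose(values):
    """Return the option with the highest utility. A pure maximizer never mixes."""
    return max(values, key=values.get)


def run_choices(values, n):
    """Offer the same apple-or-orange choice n times and tally what gets picked."""
    counts = {name: 0 for name in values}
    for _ in range(n):
        counts[choose(values)] += 1
    return counts


# The apple is valued 1% more than the orange (assumed units).
values = {"apple": 1.01, "orange": 1.00}
counts = run_choices(values, 1_000_000)
print(counts)
```

The tally comes out entirely on the apple side, which is the contrast the Watcher is drawing: the selfish and unselfish parts of most people do not behave like two terms in one function being maximized.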

It is dangerous to believe, said the Watcher, that you get extra virtue points the more that you let your altruistic part hammer down the selfish part. If you were older, said the Watcher, if you were more able to dissect thoughts into their parts and catalogue their effects, you would have noticed at once how this whole parable of the drowning child, was set to crush down the selfish part of you, to make it look like you would be invalid and shameful and harmful-to-others if the selfish part of you won, because, you're meant to think, people don't need expensive clothing - although somebody who's spent a lot on expensive clothing clearly has some use for it or some part of themselves that desires it quite strongly.

It is a parable calculated to set at odds two pieces of yourself (said the Watcher), and your flaw is not that you made the wrong choice between the two pieces, it was that you hammered one of those pieces down. Even though with a bit more thought, you could have at least seen the options for being that piece of yourself too, and not too expensively.

And much more importantly (said the Watcher), you failed to understand and notice a kind of outside assault on your internal integrity, you did not notice how this parable was setting up two pieces of yourself at odds, so that you could not be both at once, and arranging for one of them to hammer down the other in a way that would leave it feeling small and injured and unable to speak in its own defense.

"If I'd actually wanted you to twist yourselves up and burn yourselves out around this," said the Watcher, "I could have designed an adversarial lecture that would have driven everybody in this room halfway crazy - except for Keltham. He's not just immune because he's an agent with a slightly different utility function, he's immune because he instinctively doesn't switch off a kind of self-integrity that everyone else in this class needs to learn to not switch off so easily."

91 comments

Comments sorted by top scores.

comment by Idan Arye · 2021-10-27T20:39:26.112Z · LW(p) · GW(p)

I think the key to the drowning child parable is the ability of others to judge you. I can't judge you for not donating a huge portion of your income to charity, because then you'll bring up the fact that I don't donate a huge portion of my own income to charity. Sure, there are people who do donate that much, but they are few enough that it is still socially safe to not donate. But I can judge you for not saving the child, because you can't challenge me for not saving them - I was not there. This means that not saving the child poses a risk to your social status, which can greatly tilt the utility balance in favor of saving them.

Replies from: weightt-an↑ comment by Canaletto (weightt-an) · 2021-10-29T07:14:45.346Z · LW(p) · GW(p)

Exactly

comment by Alex Flint (alexflint) · 2021-10-25T14:29:58.170Z · LW(p) · GW(p)

But how exactly do you do this without hammering down on the part that hammers down on parts? Because the part that hammers down on parts really has a lot to offer, too, especially when it notices that one part is way out of control and hogging the microphone, or when it sees that one part is operating outside of the domain in which its wisdom is applicable.

(Your last paragraph seems to read "and now, dear audience, please see that the REAL problem is such-and-such a part, namely the part that hammers down on parts, and you may now proceed to hammer down on this part at will!")

Replies from: Vladimir_Nesov, None↑ comment by Vladimir_Nesov · 2021-10-25T14:57:52.895Z · LW(p) · GW(p)

You can apply the lesson to that conclusion as well, avoid hammering down on the part that hammers down on parts. The point is not to belittle it, but to reform it so that it's less brutishly violent and gullible, so that the parts of mind it gardens and lives among can grow healthy together, even as it judiciously prunes the weeds.

↑ comment by [deleted] · 2021-11-15T13:32:50.501Z · LW(p) · GW(p)

You cannot truly dissolve an urge by creating another one. Now there are 2 urges at odds with one another, using precious cognitive resources while not achieving anything.

You can only dissolve it by becoming conscious of it and seeing clearly that it is not helping. Perhaps internal double crux would be a tool for this. I'd expect meditation to help, too.

comment by Mau (Mauricio) · 2021-10-26T11:46:30.843Z · LW(p) · GW(p)

I agree with and appreciate the broad point. I'll pick on one detail because I think it matters.

this whole parable of the drowning child, was set to crush down the selfish part of you, to make it look like you would be invalid and shameful and harmful-to-others if the selfish part of you won [...]

It is a parable calculated to set at odds two pieces of yourself... arranging for one of them to hammer down the other in a way that would leave it feeling small and injured and unable to speak in its own defense.

This seems uncharitable? Singer's thought experiment may have had the above effects, but my impression's been that it was calculated largely to help people recognize our impartially altruistic parts—parts of us that in practice seem to get hammered down, obliterated, and forgotten far more often than our self-focused parts (consider e.g. how many people do approximately nothing for strangers vs. how many people do approximately nothing for themselves).

So part of me worries that "the drowning child thought experiment is a calculated assault on your personal integrity!" is not just mistaken but yet another hammer by which people will kick down their own altruistic parts—the parts of us that protect those who are small and injured and unable to speak in their own defense.

Replies from: tomcatfish↑ comment by Alex Vermillion (tomcatfish) · 2022-06-16T21:57:18.013Z · LW(p) · GW(p)

Peter Singer and Keltham live in different worlds; someone else devised the story there.

Replies from: elityre↑ comment by Eli Tyre (elityre) · 2023-09-18T05:50:50.779Z · LW(p) · GW(p)

Yeah ok. But this essay was posted on Earth. And on Earth I read it as a response to a perceived failure-mode of an Effective Altruism philosophy.

Replies from: tomcatfish↑ comment by Alex Vermillion (tomcatfish) · 2023-09-18T15:28:52.108Z · LW(p) · GW(p)

I think, separately, I would endorse some of the message, though I cannot say what Singer's intentions were or were not.

Any thought experiment which reveals a conflict in your values and asks you to resolve it without also offering you guidance on how to integrate all your values is going to sacrifice one of your values. This isn't a novel insight I think, as I'm almost pulling a 'by definition' on you, but the spectrum of magnitudes of this is important to me.

Our social network roundabout these parts has many metaphorical skeletons representing dozens and dozens of folks turning themselves into hollowed out "goodness" maximizers after being caught between thought experiment after thought experiment. Again, I don't attribute malice on the part of the person offering the parable, but Drowning Child is one thing I have seen cut down many people's sense of self, and I am happy standing loosely against the way it is used in practice on Earth regardless of its original intention.

comment by Logan Riggs (elriggs) · 2021-10-25T18:36:36.005Z · LW(p) · GW(p)

At a pond, my niece was in a child floaty, reached too far and flipped over into the water. I slammed my half-eaten sandwich on my brother's chest, hoping he would grab it, then ran into the water and saved her.

She was fine and I got to finish my sandwich.

Replies from: SpectrumDT↑ comment by SpectrumDT · 2024-12-13T09:13:15.187Z · LW(p) · GW(p)

Evidently you think your niece is worth more than half a sandwich.

Replies from: whestler↑ comment by whestler · 2024-12-13T13:58:34.748Z · LW(p) · GW(p)

I notice they could have just dropped the sandwich as they ran, so it seems that there was a small part of them still valuing the sandwich enough to spend the half second giving it to the brother, in doing so, trading a fraction of a second of niece-drowning-time for the sandwich. Not that any of this decision would have been explicit, system 2 thinking.

Carefully or even leisurely setting the sandwich aside and trading several seconds would be another thing entirely (and might make a good dark comedy skit).

I'm reminded of a first aid course I took once, where the instructor took pains to point out moments in which the person receiving CPR might be "inappropriate" if their clothing had ridden up and was exposing them in some way, taking time to cover them up and make them "decent". I couldn't help but be somewhat outraged that this was even a consideration in the man's mind, when somebody's life was at risk. I suppose his perspective was different to mine, given he worked as an emergency responder and the risk of death was quite normalised to him, but he retained his sensibilities around modesty.

comment by spkoc · 2021-10-26T14:27:46.874Z · LW(p) · GW(p)

Regarding the direct example

I feel like it's self-subverting. There's an old canard about how drowning doesn't look like drowning: https://www.watersafetymagazine.com/drowning-doesnt-look-like-drowning/ Given how staggeringly disproportionate the utility losses are in this scenario, I think even a 1% chance that my assumption that 'I have 15 seconds to undress' is wrong, and that the delay leads to a death, means I should act immediately.
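
A rough sketch of that arithmetic in Python, with made-up numbers. The post only says the clothing costs "dozens" of labor-hours and that a life is worth "a huge number" of them, so the 36 and 500,000 below are purely hypothetical placeholders, as are the function names.

```python
# Illustrative sketch only: all numbers and names are assumptions.

def expected_loss_of_waiting(p_death_if_wait, life_value_hours):
    """Expected cost, in labor-hours, of spending the 15 seconds undressing."""
    return p_death_if_wait * life_value_hours


def should_jump_immediately(p_death_if_wait, life_value_hours, clothing_cost_hours):
    """Jump in fully clothed when waiting costs more in expectation than the clothes."""
    return expected_loss_of_waiting(p_death_if_wait, life_value_hours) > clothing_cost_hours


def break_even_probability(life_value_hours, clothing_cost_hours):
    """Probability of death-from-delay at which the two options cost the same."""
    return clothing_cost_hours / life_value_hours


CLOTHING_COST_HOURS = 36     # hypothetical stand-in for "dozens of labor-hours"
LIFE_VALUE_HOURS = 500_000   # hypothetical stand-in for "a huge number of labor-hours"

print(should_jump_immediately(0.01, LIFE_VALUE_HOURS, CLOTHING_COST_HOURS))
print(break_even_probability(LIFE_VALUE_HOURS, CLOTHING_COST_HOURS))
```

Under these assumed numbers, the break-even probability is far below 1%, so the conclusion does not depend much on the exact figures; it only flips if the chance that the delay matters is tiny or the value placed on the life is small.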

In general when thinking about superfast reflex decisions vs thought out decisions: Obey the reflex unless your ability to estimate the probabilities involved has really low margins of error. My gut says X but my slow, super weak priors-that-have-never-been-adjusted-by-real-world-experience-about-this-first-time-in-my-life-situation say Y... Yeah just go with X. Reflect on the outcome later and maybe come up with a Z that should have been the gut/reflex response.

There's an old piece of Starcraft 2 advice from Day9 that's surprisingly applicable in life: plan your game before the game; in game, follow the plan even if it seems like it's failing; after the game, review and adjust your plan. Never plan during the game: speed is of the essence, and the loss of micro and macro speed will cost you more than a bad plan executed well.

Don't plan during a crisis moment where you have seconds to react correctly. Do. Then later on train yourself to have better reflexes. Applicable when socializing, doing anything physical, in week 1 of a software development 2 week sprint etc.

Regarding the more general point of people having ... self-consistent utility functions/preferences

I fundamentally disagree that you shouldn't criticize someone for their utility function. An individual's utility function should include reasonably low-discount approximations of the utility functions of people around them. This is what morality tries to approximate. People that seem to not integrate my preferences into their own signal danger to me. How irrelevant is my welfare in their calculations? How much of my utility would they destroy for how small a gain in their own utility?

People strongly committed to non-violence and so on are an edge case, but I'd feel much more comfortable with someone not in control over their own utility function than someone that is in control, based on the people I have encountered in life so far.

How intrusively should people integrate each other's preferences? How much should we police other individuals' exchange rates from personal utils to other people's utils? No good answer; it varies over time and across societies.

Society is an iron maiden, shaped around the general opinion about what the right action is in a given scenario. Shame is when we decide something that we know others will judge us badly for. Guilt is when we've internalized that shame.

The art of a good society is designing an iron maiden that most people don't even notice.

It seems irrational to me to not internalize the social moral code to some extent into my individual utility function. (It happens anyway, might as well do it consciously so I can at least reject some of the rules) If the social order is not to my taste, try and leave or change it. But just ignoring it makes no sense.

I'd also argue that the vast majority of preferences in our, so-called, 'personal' utility function are just bits and bobs picked up from the societal example palette we observed as we grew up.

People's utility functions also include components for the type of iron maiden they want their society to build around other members. I want to be able to make assumptions about the likely outcomes of meeting a random other person. Will they try to rob me? If I'm in trouble will they help me? If my kid is playing outside unsupervised by me, but there's always random people walking by, can I trust that any of them will take reasonable care of the child if the kid ends up in trouble?

I strongly do not want to live in a society that doesn't match my preferred answers on those and other critical questions.

I absolutely do not want to live in a society that has no iron maiden built at all. That is just mad max world. I can make no reasonable assumption about what might happen when I cross paths with another person. When people are faced with situations of moral anarchy, they spontaneously band together, bang out some rules and carve out an area of the wilderness where they enforce their rules.

Replies from: Slider, wslafleur, SpectrumDT↑ comment by Slider · 2021-10-28T08:30:35.736Z · LW(p) · GW(p)

The Starcraft advice is really dependent on the problem being speed-sensitive. The law of equal and opposite advice applies when that structure is not present. For example, in a real army, the fact that somebody is in charge of the group is important enough that, rather than jumping into everything, people will call in for confirmation or an order before doing certain things. When peacekeeping during a demonstration, one would want to quell a rebellion if one is about to start, but shooting back when people throw rocks could make for an unnecessary bloodbath. And that call cannot be made in advance, as the information might not be available.

Replies from: spkoc↑ comment by spkoc · 2021-10-28T10:00:56.324Z · LW(p) · GW(p)

I agree, sort of. I'd argue that in the military example there is already a plan that includes consultation phases on purpose. The rules of engagement explicitly require a slow step. I don't know if this applies in genuinely surprising situations. A sort of known unknown vs unknown unknown distinction. I guess you can have a meta policy of always pausing ANY time something unexpected happens, but I feel like that's... hard to live (or even survive) with? Speeding car coming towards me or a kid in the road. Just act, no time to think. In fairness, this is why you prepare and preplan for likely emergency events you might encounter in life.

↑ comment by wslafleur · 2021-10-28T13:56:02.496Z · LW(p) · GW(p)

These definitions of shame and guilt strike me as inherently dysfunctional because they seem to rely on direct external reference, rather than referencing some sort of internal 'Ideal Observer' which - in a healthy individual - should presumably be an amalgamated intuition, built on top of many disparate considerations and life experience.

Replies from: spkoc↑ comment by spkoc · 2021-10-28T15:14:07.578Z · LW(p) · GW(p)

The internal Ideal Observer is the amalgamated averaged out result of interactions with the world and other people alive and dead. Human beings don't come from the orangutan branch of the primate tree, we are fundamentally biologically not solitary creatures.

Our ecological niche depends on our ability to coordinate at a scale comparable to ants, but while maintaining the individual decision making autonomy of mammals.

We're not a hive mind and we're not atomized individuals. We do and should constantly be balancing ourselves based on the feedback we get from physical reality and the social reality we live in.

Is the Ideal Observer the thing doing that balancing? Sure. But then it becomes a very reduced sort of entity, kinda like science keeps reducing the space where the god of the gaps can hide.

There's an inner utility function spitting out pleasure and pain based on stimuli, but I wouldn't call that me, there's a bit more flesh around me than just that nugget of calculation.

↑ comment by SpectrumDT · 2024-12-13T09:22:58.580Z · LW(p) · GW(p)

I'd feel much more comfortable with someone not in control over their own utility function than someone that is in control, based on the people I have encountered in life so far.

May I ask what kind of experiences you base this on?

Replies from: spkoc↑ comment by spkoc · 2024-12-22T12:02:36.138Z · LW(p) · GW(p)

Getting mugged, getting asked for bribes by gov officials, entitled crazy road rage people in traffic.

Mind you this was (maybe overly) contextualized by the OP being an argument against social norms that force people to sacrifice personal utility even when it might produce net negative utility overall.

In a broader context, status quo ethics dissidents often explicitly did the opposite, martyrising themselves for the public good. After all people fought throughout history to expand the circle of Who Matters to include more and more people.

So I guess there's also a strong dose of living in the western world at the end of history: "we've got the right liberal ethics now and deviations from them tend to result in total utility losses". I'm not giving modern human rights advocates in most of the rest of the world enough credit. Or heck vegans and whatnot in the west. Who knows how the future will judge the moral circles we draw.

Blast from the past, hadn't thought about this in ages.

comment by sapphire (deluks917) · 2021-11-01T22:05:53.292Z · LW(p) · GW(p)

Humans don't swim very well wearing lots of clothing. Take off your suit before going into the water.

Replies from: Eliezer_Yudkowsky, Richard_Kennaway, Slider↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2021-11-02T04:33:56.492Z · LW(p) · GW(p)

I actually keep thinking this in the back of my own mind every time I run into this parable, so thank you for stating it out loud. (I expect if a child brought it up, the Watcher credited them for noticing further consequences, then asked to assume the Less Convenient Possible World where this is not the case.)

↑ comment by Richard_Kennaway · 2021-11-02T16:31:29.946Z · LW(p) · GW(p)

An adult may wade where a child would drown. "The water, in this parable, didn't look like it would be over their own adult heads." (I don't know if Eliezer added that after you brought this up.) (And "heads" should be singular. The class are children, and there is one hypothetical adult.)

comment by Shmi (shminux) · 2021-10-25T02:27:10.843Z · LW(p) · GW(p)

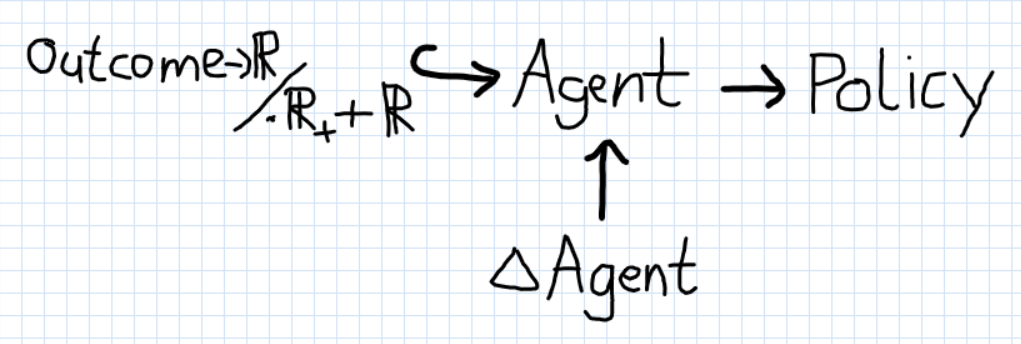

Trying to summarize for those of us not fond of long-winded parables.

- A single moral agent is a bad model of a human.

- Multiple agents with individual utilities/rules/virtues is a more accurate model.

- It's useful to be aware of this, because Tarski, or else your actions don't match your expectations.

↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2021-10-25T02:41:34.832Z · LW(p) · GW(p)

I worry that "parts as people" or even "parts as animals" models are putting people on the wrong path to self-integrity, and I did my best to edit this whole metaparable to try to avoid suggesting that at any point.

Replies from: RobbBB, shminux↑ comment by Rob Bensinger (RobbBB) · 2021-10-25T18:47:15.029Z · LW(p) · GW(p)

I worry that "parts as people" or even "parts as animals" models are putting people on the wrong path to self-integrity

I'd very much love to hear more about this. (Including from others, both for and against.)

Replies from: adamzerner↑ comment by Adam Zerner (adamzerner) · 2021-10-26T23:29:40.751Z · LW(p) · GW(p)

Same.

↑ comment by Shmi (shminux) · 2021-10-25T03:46:34.191Z · LW(p) · GW(p)

Oops... I guess I misunderstood what you meant by "two pieces of yourself".

Anyway, I really like the part

you failed to understand and notice a kind of outside assault on your internal integrity, you did not notice how this parable was setting up two pieces of yourself at odds, so that you could not be both at once, and arranging for one of them to hammer down the other in a way that would leave it feeling small and injured and unable to speak in its own defense

because it attends to the feelings and not just to the logic: "hammer down the other in a way that would leave it feeling small and injured".

I could have designed an adversarial lecture that would have driven everybody in this room halfway crazy - except for Keltham

I... would love to see one of those, unless you consider it an infohazard/Shiri's scissor.

Replies from: ADifferentAnonymous, erikerikson, Avnix↑ comment by ADifferentAnonymous · 2021-10-25T16:00:26.493Z · LW(p) · GW(p)

> I could have designed an adversarial lecture that would have driven everybody in this room halfway crazy - except for Keltham

I... would love to see one of those, unless you consider it an infohazard/Shiri's scissor.

I think this might just mean using the drowning child argument to convince the students they should be acting selflessly all the time, donating all their money above minimal subsistence, etc.

Replies from: Eliezer_Yudkowsky↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2021-10-27T00:21:47.853Z · LW(p) · GW(p)

If the people on the other side of the argument ended up behaving coherently, rather than twisting themselves into knots and burning themselves out as their inner gears ground against themselves in unresolvable circles, it wouldn't be much of an adversarial lecture, would it?

Replies from: ADifferentAnonymous↑ comment by ADifferentAnonymous · 2021-10-27T02:15:20.455Z · LW(p) · GW(p)

Knot-twisting is indeed the outcome I was imagining.

(Your translation spell might be handling the words "convince" and "should" optimistically... maybe try them with the scare quotes?)

↑ comment by erikerikson · 2021-10-25T10:24:56.741Z · LW(p) · GW(p)

Regarding pieces of oneself, consider the ideas of IFS (internal family systems). "Parts" can be said to attend to different concerns, and if one can distract from others then an opportunity to maximize utility across dimensions may be missed. One might also suggest that attention to only one concern over time can result in a slight movement towards disintegration as a result of increasingly strong feelings about "ignored" concerns. Integration or alignment, with every part joining a cooperative council, is often considered a goal, and personification can assist some in more peaceably achieving that. I personally found the suggestion to personify felt weird and false.

Replies from: SaidAchmiz↑ comment by Said Achmiz (SaidAchmiz) · 2021-10-25T10:49:39.105Z · LW(p) · GW(p)

I personally found the suggestion to personify felt weird and false.

I second this.

↑ comment by Sweetgum (Avnix) · 2022-09-23T22:09:33.799Z · LW(p) · GW(p)

I imagine it would be similar to the chain of arguments one often goes through in ethics. "W can't be right because A implies X! But X can't be right because B implies Y! But Y can't be right because C implies Z! But Z can't be right because..." Like how Consequentialism and Deontology both seem to have reasons they "can't be right". Of course, the students in your Adversarial Lecture could adopt a blend of various theories, so you'll have to trick them into not doing that, maybe by subtly implying that it's inconsistent, or hypocritical, or just a rationalization of their own immorality, or something like that.

comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2021-10-25T07:50:51.225Z · LW(p) · GW(p)

I unfortunately have very little of substance to add, but a strong upvote was not quite enough.

There is something in here of Iron Hufflepuff, and I'm exceedingly grateful to Eliezer for dignifying and validating it so unequivocally in this meta-parable. I expect to link to this fairly frequently over the next decade.

Replies from: tomcatfish, cmessinger↑ comment by Alex Vermillion (tomcatfish) · 2022-06-16T22:01:56.112Z · LW(p) · GW(p)

What is "Iron Hufflepuff"? This is the only mention I got when I searched LW.

Replies from: thomas-kwa↑ comment by Thomas Kwa (thomas-kwa) · 2022-06-16T22:19:12.960Z · LW(p) · GW(p)

Strong upvote for this reply. Why does the parent have 22 karma when no one knows what Iron Hufflepuff is, and no one has asked for 8 months?

Replies from: Duncan_Sabien↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-06-17T02:40:05.984Z · LW(p) · GW(p)

Presumably because the people who gave it the net 22 karma either know the term from me using it, or were able to put together something meaningful for themselves from context.

From this FB post of a few years ago.

Red Gryffindors: prideful, vengeful, hotheaded, reckless

(Cormac McLaggen, Ginny's worse aspects)

(Non-HP example: Chandra Nalaar)

Gold Gryffindors: courageous, unflinching, steadfast, noble

(Harry's best aspects, Fred & George from HPMOR)

(Non-HP example: Ralph from Lord of the Flies)

-----

Green Slytherins: conceited, manipulative, bigoted, selfish

(Pansy Parkinson, Slughorn's worse aspects)

(Non-HP: Borsk Fey'lya, Theron from 300)

Silver Slytherins: perceptive, unfettered, realistic, savvy

(no examples in canon; maybe Regulus?)

(Non-HP: Grand Admiral Thrawn, Cap'n Jack Sparrow)

-----

Yellow Hufflepuffs: timid, conformist, unstrategic, naive

(Ernie Macmillan's worse aspects, canon Pettigrew (shut up, leave me alone))

(Non-HP: Cady Heron from Mean Girls (before redemption))

Iron Hufflepuffs: tenacious, inclusive, empathetic, kind

(Cedric Diggory, Neville from HPMOR)

(Non-HP: Ender Wiggin, Samwise Gamgee)

-----

Blue Ravenclaws: detached, condescending, impractical, irresolute

(Xenophilius Lovegood, Helena Ravenclaw)

(Non-HP: every Vulcan ever written to piss off the audience)

Bronze Ravenclaws: brilliant, innovative, quick-witted, detail-oriented

(no examples in canon; HJPEV at his best)

(Non-HP: MacGyver)

↑ comment by chanamessinger (cmessinger) · 2022-08-13T04:55:15.810Z · LW(p) · GW(p)

Hm, Keltham has a lot of good qualities here, but kind doesn't seem among them.

Replies from: Duncan_Sabien↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-08-13T06:39:42.004Z · LW(p) · GW(p)

... seems like a non-sequitur; can you connect the dots for me?

Replies from: cmessinger↑ comment by chanamessinger (cmessinger) · 2022-08-13T08:11:42.456Z · LW(p) · GW(p)

Kind is one of the four adjectives in your description of Iron Hufflepuff.

Replies from: Duncan_Sabien↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2022-08-13T08:59:36.863Z · LW(p) · GW(p)

Ah, gotcha.

(Also "lol"/"whoops.")

"There is something in here of Iron Hufflepuff" not meant to equal "All of Iron Hufflepuff is in here."

I agree the above does not represent kindness much.

Tenacious is the bit that's coming through most strongly, and also if I were rewriting the lists today I would include "principled" or "consistent" or "conscientious" as a strong piece of Hufflepuff, and that's very much on display here.

comment by DaemonicSigil · 2021-10-25T05:20:22.838Z · LW(p) · GW(p)

An interesting difference between the drowning child situation and the "could donate to effective charity to save children's lives" situation is that the person who happens to be walking by that pond has a non-transferable opportunity to save a child's life for $500 (or whatever the cost of the clothes is, plus some time cost, and the inconvenience of getting wet and muddy). In the case of effective charity, even if one declines to donate, other people will still have the same opportunity. In the case of the drowning child, the fact that you are the only one who can act makes jumping in to save the child somehow seem more urgent. If you don't save the child, then you'd be somehow "wasting" a valuable opportunity. For a mostly selfish person who values all lives other than their own at less than $500, the opportunity would be valuable to others but useless for themselves.

If the going rate is $1000 to save a child through effective charity, then a mostly altruistic person would be willing to pay a mostly selfish person $600 to compensate for the costs of their clothes. There would have to be some "honour" involved, since the selfish person couldn't exactly unsave the child after the fact. If they could make the deal work anyway, then the mostly selfish person would have succeeded in selling a non-transferable opportunity at a $100 profit, and it would be worthwhile for them to save the drowning child.
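The arithmetic of this trade can be checked with a short sketch. The dollar figures are the ones from the comment; the function name, the parameter names, and the default assumption that the selfish rescuer places $0 of their own value on the child's life are illustrative, and the time cost and inconvenience of getting muddy are ignored.

```python
def rescue_deal(clothes_cost, payment, charity_cost_per_life, rescuer_value_of_life=0):
    """Return (rescuer_gain, altruist_gain) in dollars if the paid rescue happens."""
    # The rescuer loses the clothes, receives the payment, and gains whatever
    # (small) value they personally place on the child's life.
    rescuer_gain = payment - clothes_cost + rescuer_value_of_life
    # The altruist would otherwise have to pay the charity rate to save one life.
    altruist_gain = charity_cost_per_life - payment
    return rescuer_gain, altruist_gain


rescuer_gain, altruist_gain = rescue_deal(
    clothes_cost=500, payment=600, charity_cost_per_life=1000
)
print(rescuer_gain, altruist_gain)  # 100 400
```

Under these assumptions both sides come out ahead for any payment strictly between $500 and $1000, which is the range in which the deal is worth making at all.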

Replies from: Gurkenglas↑ comment by Gurkenglas · 2021-10-25T14:09:01.324Z · LW(p) · GW(p)

I would like to distinguish between money burnt and money transferred. The $500 are burnt, assuming you handpicked homegrown cotton and knitted it into clothes. The $1000 are also burnt, assuming that nobody extracts rent on saving children. The $600 are merely transferred, and so the altruist may be willing to pay more even than $1000, if he expects the profits to be spent in ways he still moderately endorses.

comment by romeostevensit · 2021-10-24T23:54:21.954Z · LW(p) · GW(p)

My objection lies in the second part of the drowning child parable. The part where someone geographically distant is considered identical to the child in front of me, and money is considered identical to the actions of saving. It's some sort of physics being the same everywhere intuition being inappropriately applied. Of course distance in time, space, or inference creates uncertainty. Of course uncertainty reduces expected value and possibly even brings the sign of the action into question if the expected variance is high enough.

Replies from: AllAmericanBreakfast, jimrandomh, SaidAchmiz, elityre, 4thWayWastrel↑ comment by DirectedEvolution (AllAmericanBreakfast) · 2021-10-25T05:09:43.326Z · LW(p) · GW(p)

A literal drowning child puts a limit on your commitment. Save this child, and your duty is discharged. When we apply this moral intuition to all the other issues in the world, our individual obligation suddenly becomes all-consuming.

Furthermore, a literal drowning child is an accident. It represents a drastic exception to the normal outcomes of your society. Your saving action is plugging a hole in a basically sound system. Do our moral intuitions stem from a consequentialist goal to save all lives that can be saved? Or do they stem from an obligation to maintain a healthy, caring, and more-or-less self-sufficient society?

To me, the best interpretation of the drowning child parable extended to a global level is that it gives me a sense of moral glee. Holy smokes! The mere act of donating money, or of doing direct work in a powerful cause for good, can save lives just the way that a more conventional heroic action can! How cool!

I'd import Eliezer's concept of a "cheerful price," but in reverse. Instead of being paid in money to cheerfully take an action I'd otherwise rather not do, I am being paid in lives saved to cheerfully give some money I'd otherwise rather not donate. A life saved for a mere $10,000? A bargain at twice the price!

Replies from: lsusr, RobbBB↑ comment by Rob Bensinger (RobbBB) · 2021-10-25T19:57:24.710Z · LW(p) · GW(p)

Quoth AllAmericanBreakfast:

Do our moral intuitions stem from a consequentialist goal to save all lives that can be saved? Or do they stem from an obligation to maintain a healthy, caring, and more-or-less self-sufficient society?

If the question is just "What's the ultimate psychological cause of my moral intuitions in these cases?", then 🤷.

If the question is "Are we just faking caring about saving other lives, when really we don't care about other human beings' welfare, autonomy, or survival at all?", then I feel confident saying 'Nah'.

I get a sense from this question (and from Romeo's content) of 'correctly noticing that EA has made some serious missteps here, but then swinging the pendulum too far in the other direction'. Or maybe it just feels to me like this is giving surprisingly wrong/incomplete pictures of most people's motivations.

Quoth Romeo:

Of course distance in time, space, or inference creates uncertainty. Of course uncertainty reduces expected value and possibly even brings the sign of the action into question if the expected variance is high enough.

For most people I suspect the demandingness is the crux, rather than the uncertainty. I think they'd resist the argument even if the local 'save a drowning child' intervention seemed more uncertain than the GiveWell-ish intervention. (Partly because of a 'don't let yourself get mugged' instinct, partly because of the integrity/parts thing, and partly because of scope insensitivity [LW · GW].)

I also think there's a big factor of 'I just don't care as much about people far away from me, their inner lives feel less salient to me' and/or 'I won't be held similarly blameworthy if I ignore large amounts of distant suffering as if I ignore even small amounts of nearby suffering, because the people who could socially punish me are also located near me'.

We can consider a 2x2 matrix:

- Undemanding + Near: Drowning child. There's a cost to saving the child, but because this scenario is rare, one-off, local, and not too costly, almost everyone (pace Keltham) is happy to endorse saving the child here.

- Undemanding + Far: The same dilemma, except you're (say) missing a medium-importance business call (with cost equivalent to one fancy suit) in order to give someone directions over the phone that will enable them to save a drowning child in a foreign country.

I suspect most people would endorse doing the same thing in these two cases, at least given a side-by-side comparison.

- Demanding + Near: E.g., fighting in the trenches in a just war; or you're living in WW2 Germany and have a unique opportunity to help thousands of Jews escape the country, at the risk of being caught and executed.

- Demanding + Far: AMF, GiveDirectly, etc.

Here, my guess is that a lot more people will see overriding moral urgency and value in 'Demanding + Near' than in 'Demanding + Far'. When bodies are flying all around you, or you are directly living through your own community experiencing an atrocity, I expect that to be parsed as a very different moral category than 'there's an atrocity happening in a distant country and I could donate all my time and money to reducing the death toll'.

Replies from: romeostevensit, Idan Arye↑ comment by romeostevensit · 2021-10-28T16:28:37.239Z · LW(p) · GW(p)

I also think of the demandingness as generating an additional uncertainty term in the Straussian sense.

↑ comment by Idan Arye · 2021-10-27T20:31:11.259Z · LW(p) · GW(p)

Could you clarify what you mean by "demandingness"? Because according to my understanding the drowning child should be more demanding than donating to AMF because the situation demands that you sacrifice to rescue them, unlike AMF that does not place any specific demands on you personally. So I assume you mean something else?

Replies from: RobbBB↑ comment by Rob Bensinger (RobbBB) · 2021-10-27T20:42:47.337Z · LW(p) · GW(p)

The point of the original drowning child argument was to argue for 'give basically everything you have to help people in dire need in the developing world'. So the point of the original argument was to move from

- A relatively Undemanding + Near scenario: You encounter a child drowning in the real world. This is relatively undemanding because it's a rare, once-off event that only costs you the shirt on your back plus a few minutes of your time. You aren't risking your life, giving away all your wealth, spending your whole life working on the problem, etc.

to

- A relatively Demanding + Far scenario. It doesn't have to be AMF or GiveDirectly, but I use those as examples. (Also, obviously, you can give to those orgs without endorsing 'give everything you have'. They're just stand-ins here.)

↑ comment by AnnaSalamon · 2021-10-27T20:59:46.452Z · LW(p) · GW(p)

Equally importantly IMO, it argues for transfer from a context where the effect of your actions is directly perceptually obvious to one where it is unclear and filters through political structures (e.g., aid organizations and what they choose to do and to communicate; any governments they might be interacting with; any other players on the ground in the distant country) that will be hard to model accurately.

Replies from: RobbBB↑ comment by Rob Bensinger (RobbBB) · 2021-10-28T01:33:49.977Z · LW(p) · GW(p)

My guess is that this has a relatively small effect on most people's moral intuitions (though maybe it should have a larger effect -- I don't think I grok the implicit concern here). I'd be curious if there's research bearing on this, and on the other speculations I tossed out there. (Or maybe Spencer or someone can go test it.)

Replies from: SpectrumDT↑ comment by SpectrumDT · 2024-12-13T11:40:11.399Z · LW(p) · GW(p)

I have heard a number of people saying that they don't want to give money to charity because they don't trust the charities to spend the money well.

↑ comment by jimrandomh · 2021-10-25T00:18:50.481Z · LW(p) · GW(p)

I think distance is a good correlate for whether insurance will pay, figuratively speaking. Not because there is literally an insurance company that will pay money, but because some fraction of people whose life has been saved, or whose child's life has been saved, will think of themselves as owing a debt.

↑ comment by Said Achmiz (SaidAchmiz) · 2021-10-25T00:25:31.185Z · LW(p) · GW(p)

I agree with you, but this seems to very much not be the point of this parable.

Replies from: ricraz↑ comment by Richard_Ngo (ricraz) · 2021-10-25T20:46:22.003Z · LW(p) · GW(p)

Indeed, it seems like Romeo may be letting (one) altruistic part get hammered down by his other parts.

Replies from: SaidAchmiz↑ comment by Said Achmiz (SaidAchmiz) · 2021-10-25T21:41:23.520Z · LW(p) · GW(p)

I do not think that’s the problem here; rather it’s just a case of focusing on the details of the example, rather than on the concept that it’s being used as an example for.

↑ comment by Eli Tyre (elityre) · 2021-10-25T00:07:36.299Z · LW(p) · GW(p)

You're referring to the original Peter Singer essay, not to this one, yes?

Replies from: romeostevensit↑ comment by romeostevensit · 2021-10-25T00:24:12.147Z · LW(p) · GW(p)

Correct

↑ comment by Jarred Filmer (4thWayWastrel) · 2021-10-25T03:26:19.192Z · LW(p) · GW(p)

Out of curiosity, does all of the difference between the value of a child drowning in front of you and a child drowning far away come from uncertainty?

Replies from: TekhneMakre, romeostevensit↑ comment by TekhneMakre · 2021-10-25T04:17:53.033Z · LW(p) · GW(p)

There's also some coordination thing that's muddled in here. Like, "everyone protect their neighbor" is more efficient than "everyone seek out the maximal marginal use of their dollar to save a life". This doesn't necessarily cash out--indeed, why *not* seek out the maximal marginal life-saving?

For one thing, the seeking is a cost; it can also be a long-term benefit if it "adds up", accumulating evidence and understanding, but that's a more specific kind of seeking (and you might even harm this project if e.g. you think you should lie to direct donations).

For another thing, you're seriously eliding the possibility of, for example, helping to create the conditions under which malaria-ridden areas could produce their own mosquito nets, by (1) not trusting that people could take care of themselves, (2) having high time-preference for saving lives.

For a third thing, it's treating, I think maybe inappropriately, everyone as being in a marketplace, and eliding that we (humans, minds) are in some sense (though not close to entirely) "the same agent". So if I pay you low wages to really inefficiently save a life, maybe that was a good marginal use of my dollar, but concretely what happened is that you did a bunch of labor for little value. We might hope that eventually this process equilibrates to people paying for what they want and therefore getting it, but still, we can at least notice that it's very far from how we would act if we were one agent with many actuators.

↑ comment by romeostevensit · 2021-10-25T04:15:57.406Z · LW(p) · GW(p)

In a sense, since other differences might be unknown?

comment by Ruby · 2021-10-27T23:41:35.428Z · LW(p) · GW(p)

Curated. To generalize, as the stakes continue to seem high ("most important century"-level high), it's easy to feel an immense obligation to act and to give it all up for the sake of the future. This meta-parable reminds us that humans aren't made solely of parts that give everything up, and that it's a matter of self-integrity to not do so.

comment by Gurkenglas · 2021-10-25T14:51:06.813Z · LW(p) · GW(p)

not selfish and unselfish components in their utility function, but parts of themselves in some less Law-aspiring way than that

Utility functions don't model all agents; we should look at a larger space. I expect it to better model not just a human but also a council of humans or a multiverse of acausal traders. I expect this also to say how an AGI should handle uncertainty about preferences.

There should be a natural way to aggregate a distribution of agents into an agent, obeying the obvious law that an arbitrarily deeply nested distribution comes out the same way no matter which order you aggregate its layers in.

The free generalization, of course, is an agent as a distribution of utility functions. The aggregate is then simply a flattening of nested distributions. This does not tell us how an agent makes decisions - we don't just take an expectation, because that's not invariant under u and 2u being equivalent utility functions.
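The scale problem mentioned here can be made concrete with a small sketch. Everything in it is a hypothetical illustration: the two actions `"a"` and `"b"`, the specific utility values, and the 50/50 weights are made up, and `best_action` simply takes the weight-averaged utility, which is exactly the naive expectation the comment warns against.

```python
def best_action(weighted_utilities):
    """Pick the action that maximizes the weight-averaged utility."""
    actions = weighted_utilities[0][1].keys()

    def expected(action):
        return sum(weight * utility[action] for weight, utility in weighted_utilities)

    return max(actions, key=expected)


# Two hypothetical sub-agents with opposed preferences over actions "a" and "b".
u1 = {"a": 1.0, "b": 0.0}
u2 = {"a": 0.0, "b": 0.6}
# Doubling u2 leaves its preference ordering (and its choices under risk) unchanged.
u2_doubled = {action: 2 * value for action, value in u2.items()}

choice_before = best_action([(0.5, u1), (0.5, u2)])
choice_after = best_action([(0.5, u1), (0.5, u2_doubled)])
print(choice_before, choice_after)  # a b
```

The mixture's decision flips even though `u2` and `u2_doubled` describe the same agent, so a plain expectation over utility functions depends on an arbitrary choice of representation.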

We might need to replace the usual distribution monad with another.

Replies from: Nisan↑ comment by Nisan · 2021-10-27T03:50:05.210Z · LW(p) · GW(p)

Ah, great! To fill in some of the details:

- Given agents $A, B$ and numbers $a, b \geq 0$ such that $a + b = 1$, there is an aggregate agent called $aA + bB$, which means "agents $A$ and $B$ acting together as a group, in which the relative power of $A$ versus $B$ is the ratio of $a$ to $b$". The group does not make decisions by combining their utility functions, but instead by negotiating or fighting or something.

- Aggregation should be associative, so $(a+b)\left(\frac{a}{a+b}A + \frac{b}{a+b}B\right) + cC = aA + (b+c)\left(\frac{b}{b+c}B + \frac{c}{b+c}C\right)$ whenever $a + b + c = 1$.

- If you spell out all the associativity relations, you'll find that aggregation of agents is an algebra over the operad of topological simplices. (See Example 2 of https://arxiv.org/abs/2107.09581.)

- Of course we still have the old VNM-rational utility-maximizing agents. But now we also have aggregates of such agents, which are "less Law-aspiring" than their parts.

- In order to specify the behavior of an aggregate, we might need more data than the component agents $A, B$ and their relative power $a : b$. In that case we'd use some other operad.
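The associativity requirement on relative power can be illustrated, at the level of bookkeeping only, by flattening nested weighted groups. This is a sketch under stated assumptions: the list-of-pairs representation, the `flatten` helper, and the example weights are all hypothetical, and the code says nothing about how an aggregate actually negotiates or decides.

```python
from collections import defaultdict
from fractions import Fraction as F


def flatten(mixture):
    """Flatten a nested mixture into a dict of agent name -> total weight.

    A mixture is a list of (weight, component) pairs, where each component
    is either an agent name (a string) or another mixture.
    """
    totals = defaultdict(F)
    for weight, component in mixture:
        if isinstance(component, str):
            totals[component] += weight
        else:
            for agent, inner_weight in flatten(component).items():
                totals[agent] += weight * inner_weight
    return dict(totals)


# Target weights A = 1/2, B = 1/3, C = 1/6, grouped in two different ways.
left_grouped = [(F(5, 6), [(F(3, 5), "A"), (F(2, 5), "B")]), (F(1, 6), "C")]
right_grouped = [(F(1, 2), "A"), (F(1, 2), [(F(2, 3), "B"), (F(1, 3), "C")])]

print(flatten(left_grouped) == flatten(right_grouped))  # True
```

Exact fractions are used so that the two groupings compare equal without floating-point tolerance.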

comment by TurnTrout · 2022-11-26T01:53:56.550Z · LW(p) · GW(p)

Something with a utility function, if it values an apple 1% more than an orange, if offered a million apple-or-orange choices, will choose a million apples and zero oranges. The division within most people into selfish and unselfish components is not like that, you cannot feed it all with unselfish choices whatever the ratio. Not unless you are a Keeper, maybe, who has made yourself sharper and more coherent; or maybe not even then, who knows?

I fear that this parable encourages a view whereby the utility function "should" factorize over intuitively obvious discrete quantities [LW(p) · GW(p)] (e.g. apples and oranges). My utility function can value having a mixture of both apples and oranges.
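The contrast between these two readings can be sketched in code. The linear utility below follows the quoted apple-versus-orange example; `mixture_loving_utility`, with its bonus term for holding both fruits, is a hypothetical stand-in for a utility function that does not factorize, and the chooser is a myopic greedy one that is only meant to illustrate the point.

```python
import math


def choose_sequentially(utility, n_choices):
    """Make n_choices apple-or-orange picks, each time taking the fruit that
    gives the higher utility for the resulting bundle (myopic greedy)."""
    apples, oranges = 0, 0
    for _ in range(n_choices):
        if utility(apples + 1, oranges) >= utility(apples, oranges + 1):
            apples += 1
        else:
            oranges += 1
    return apples, oranges


def linear_utility(apples, oranges):
    # Each apple is worth 1% more than each orange, regardless of the bundle.
    return 1.01 * apples + 1.00 * oranges


def mixture_loving_utility(apples, oranges):
    # Hypothetical: same per-fruit values plus a bonus for having both kinds.
    return 1.01 * apples + 1.00 * oranges + 2.0 * math.sqrt(apples * oranges)


linear_bundle = choose_sequentially(linear_utility, 10_000)
mixed_bundle = choose_sequentially(mixture_loving_utility, 10_000)
print(linear_bundle)  # (10000, 0)
print(mixed_bundle)   # a bundle containing both apples and oranges
```

Both agents are utility maximizers; only the one whose utility is a sum of fixed per-item values ends up with all apples.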

comment by james.lucassen · 2021-10-25T21:52:40.956Z · LW(p) · GW(p)

TLDR: if we model a human as a collection of sub-agents rather than a single agent, how do we make normative claims about which sub-agents should or shouldn't hammer down others? There's no over-arching set of goals to evaluate against, and each sub-agent always wants to hammer down all the others.

If I'm interpreting things right, I think I agree with the descriptive claims here, but tentatively disagree with the normative ones. I agree that modeling humans as single agents is inaccurate, and a multi-agent model of some sort is better. I also agree that the Drowning Child parable emphasizes the conflict between two sub-agents, although I'm not sure it sets up one side against the other too strongly (I know some people for whom the Drowning Child conflict hammers down altruism).

What I have trouble with is thinking about how a multi-agent human "should" try to alter the weights of their sub-agents, or influence this "hammering" process. We can't really ask the sub-agents for their opinion, since they're always all in conflict with all the others, to varying degrees. If some event (like exposure to a thought experiment) forces a conflict between sub-agents to rise to confrontation, and one side or the other ends up winning out, that doesn't have any intuitive normative consequences to me. In fact, it's not clear to me how it could have normativity to it at all, since there's no over-arching set of goals for it to be evaluated against.

comment by Drake Morrison (Leviad) · 2022-12-10T22:19:05.162Z · LW(p) · GW(p)

This post felt like a great counterpoint to the drowning child thought experiment, and as such I found it a useful insight. A reminder that it's okay to take care of yourself is important, especially in these times and in a community of people dedicated to things like EA and the Alignment Problem.

comment by gjm · 2021-10-30T15:37:27.363Z · LW(p) · GW(p)

In case anyone else, like me, followed the link near the start of OP to the story from which this is excerpted, and is wondering whether (having had a bunch of updates on 2021-10-24 but none since) it's likely to be dead: I had a look at its pattern of updates and it's very bursty, with gaps on the order of 1-3 weeks between bursts, so the current ~1w of no updates is not strong evidence against there being more future updates to come.

(It seems like the majority of fictional works posted online end up being abandoned, and I generally prefer not to read things of non-negligible length that stop in mid

Replies from: MondSemmel↑ comment by MondSemmel · 2021-10-31T15:40:53.165Z · LW(p) · GW(p)

I agree that most fiction ends up unfinished. This is the likely fate of any story, including anything posted on Glowfic. Even professionally published fiction is not safe from this, due to the propensity to write books as trilogies or something; and sometimes authors die (Berserk) or commit a crime (Act-Age).

That said, I am flabbergasted at the notion that you'd check a fiction, see that it was last updated <7 days ago, and immediately bring the hypothesis that "this is likely to be dead" to conscious attention, even if you then reject it.

I think this attitude sets bad incentives for authors (and translators etc.; this is a common reaction to manga scanlators, too), and makes it more likely that works will indeed not be finished. So I want to strongly push back against this, and say instead: Yes, most fiction will not get finished. It's the responsibility of the reader to take this risk into account, when they decide whether to start reading something. (Especially when it comes to free fiction - the dynamics for Patreon-supported stuff etc. are imo different. And especially especially when it comes to something like Glowfic, which is more like people publicly roleplaying in text, than like webfiction where authors often commit to a regular update schedule.) Do not push for updates, nor support a culture which does.

Replies from: gjm↑ comment by gjm · 2021-10-31T16:22:35.536Z · LW(p) · GW(p)

Well, what I actually saw was that it was updated many times over at most a couple of days and then nothing for about a week. I hadn't, at that point, looked at the times of the earliest postings and noticed that they were months earlier.

So what I saw at that point -- and what I thought others might likewise see -- was a flurry of activity followed by a gap. And that seemed like possible evidence of dead-ness, which is why I checked further and decided it wasn't.

Your last paragraph seems to be reading things into what I wrote that I'm pretty sure I never put there. I completely agree that if a reader prefers to avoid reading things that get abandoned in the middle, it's their responsibility to look. That's what I did. I found (1) reason for initial suspicion that this might be such a work and then (2) excellent reason to drop that suspicion, so I said so. Neither did I push for updates nor suggest that anyone else should. (In case anyone else took what I said as some sort of encouragement to do that: Do not do that! It's rude!)

Replies from: MondSemmel↑ comment by MondSemmel · 2021-10-31T17:51:05.582Z · LW(p) · GW(p)

Fair enough! Insofar as I read something into your original comment that wasn't there, I think it was due to my interpretation of the language? When I hear something described as "dead" or "abandoned", that sounds like assigning blame to the author, as if they didn't fulfill a responsibility or duty; but I understand that this interpretation was not intended.

To be clear, I would still bet at 2:1 odds that the story won't get finished, simply based on base rates for web fiction (possibly the base rate for unfinished Glowfics is even higher?). All the while stressing that I don't mean that as blame, and that it's entirely fine for anyone to decide that a free fiction project is no longer worth their opportunity cost.

comment by Signer · 2021-10-25T06:05:22.372Z · LW(p) · GW(p)

The Watcher spoke on, then, about how most people have selfish and unselfish parts—not selfish and unselfish components in their utility function, but parts of themselves in some less Law-aspiring way than that.

I guess it's appropriate that children there learn about utility functions before learning about multiplication.

Replies from: moridinamael↑ comment by moridinamael · 2021-10-25T12:05:43.652Z · LW(p) · GW(p)

Perhaps the parable could have been circumvented entirely by never teaching the children that such a thing as a “utility function” existed in the first place. I was mildly surprised to learn that the dath ilani used the concept at all, rather than speaking of preferences directly. There are very few conversations about relative preference that are improved by introducing the phrase “utility function.”

Replies from: Vladimir_Nesov, cousin_it↑ comment by Vladimir_Nesov · 2021-10-25T12:43:46.361Z · LW(p) · GW(p)

Utility functions are very useful for solving decision problems with simple objectives. Human preference is not one of these, but we can often fit a utility function that approximately captures it in a particular situation, which is useful for computing informed suggestions for decisions. The model of one's preference that informs fitting of utility functions to it for use in contexts of particular decision problems could also be called a model of one's utility function, but that terminology would be misleading.

The error is forgetting that on the human level, all utility functions you can work with are hopelessly simplified approximations, maps of some notion of actual preference, and even an understanding of all these maps considered altogether is a hopelessly simplified approximation, not preference itself. It's not even useful to postulate that preference is a utility function, as this is not the form that is visible in practice when drawing its maps. Still, having maps for a thing clarifies what it is, better than not having any maps at all, and better yet when maps stop getting confused for the thing itself.

Replies from: moridinamael↑ comment by moridinamael · 2021-10-25T20:30:40.681Z · LW(p) · GW(p)

I thought I agreed but upon rereading your comment I am no longer sure. As you say, the notion of a utility function implies a consistent mapping between world states and utility valuations, which is something that humans do not do in practice, and cannot do even in principle because of computational limits.

But I am not sure I follow the very last bit. Surely the best map of the dath ilan parable is just a matrix, or table, describing all the possible outcomes, with degrees of distinction provided to whatever level of detail the subject considers relevant. This, I think, is the most practical and useful amount of compression. Compress further, into a “utility function”, and you now have the equivalent of a street map that includes only topology but without street names, if you’ll forgive the metaphor.

Further, if we aren’t at any point multiplying utilities by probabilities in this thought experiment, one has to ask why you would even want utilities in the first place, rather than simply ranking the outcomes in preference order and picking the best one.

↑ comment by cousin_it · 2021-10-25T12:52:22.202Z · LW(p) · GW(p)

It's more subtle than that. Utility functions, by design, encode preferences that are consistent over lotteries (immune to Allais paradox), not just pure outcomes.

Or equivalently, they make you say not only that you prefer pure outcome A to pure outcome B, but also by how much. That "by how much" must obey some constraints motivated by probability theory, and the simplest way to summarize them is to say each outcome has a numeric utility.
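The Allais constraint can be checked numerically. The four lotteries below are the standard textbook version of the Allais paradox (prizes in millions of dollars) and are not taken from the comment; the helper names and the random sampling of utility values are illustrative assumptions.

```python
import random

# Standard Allais lotteries as {prize_in_millions: probability}.
lottery_1a = {1: 1.00}
lottery_1b = {1: 0.89, 5: 0.10, 0: 0.01}
lottery_2a = {1: 0.11, 0: 0.89}
lottery_2b = {5: 0.10, 0: 0.90}


def expected_utility(lottery, utility):
    return sum(p * utility[prize] for prize, p in lottery.items())


def allais_gaps(utility):
    """Return (EU(1A) - EU(1B), EU(2A) - EU(2B)) for a given utility table."""
    gap_1 = expected_utility(lottery_1a, utility) - expected_utility(lottery_1b, utility)
    gap_2 = expected_utility(lottery_2a, utility) - expected_utility(lottery_2b, utility)
    return gap_1, gap_2


random.seed(0)
max_mismatch = 0.0
for _ in range(1000):
    # Try arbitrary numeric utilities for the three prizes.
    utility = {0: random.random(), 1: random.random(), 5: random.random()}
    gap_1, gap_2 = allais_gaps(utility)
    max_mismatch = max(max_mismatch, abs(gap_1 - gap_2))

print(max_mismatch < 1e-9)  # True
```

Because the two gaps coincide for every assignment of utilities, no expected-utility maximizer can prefer 1A to 1B while also preferring 2B to 2A, which is the pattern many people report.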

comment by Olivier Faure (olivier-faure) · 2021-10-28T09:13:31.723Z · LW(p) · GW(p)

I reject the parable/dilemma for another reason: in the majority of cases, I don't think it's ethical to spend so much money on a suit that you would legitimately hesitate to save a drowning child if it put the suit at risk?

If you're so rich that you can buy tailor-made suits, then sure, go save the child and buy another suit. If you're not... then why are you buying super-expensive tailor-made suits? I see extremely few situations where keeping the ability to play status games slightly better would be worth more than saving a child's life.

(And yes, there are arguments about "near vs far" and how you could spend your money saving children in poor countries instead, but other commenters have already pointed out why one might still value a nearby child more. Also, even under that lens, the framework "don't spend more money than you can afford on status signals" still holds.)

Replies from: JBlack, aphyer, iaroslav-postovalov↑ comment by JBlack · 2021-10-29T02:40:11.954Z · LW(p) · GW(p)

From previous posts about this setting, the background assumption is that the child almost certainly won't permanently die if it takes 15 seconds longer to reach them. This is not Earth. Even if they die, their body should be recoverable before their brain degrades too badly for vitrification and future revival. It is also stated that the primary character here is far more selfish than usual.

However even on Earth, we do accept economic reasons for delaying rescue by even a lot more than 15 seconds. We don't pay enough lifeguards to patrol near every swimmer, for example, which means that when they spot a swimmer in distress it takes at least 15 more seconds to reach them. In nearly every city, a single extra ambulance team could reduce average response time to medical emergencies by a great deal more than 15 seconds. There doesn't seem to be any great ethical outcry about this, though there are sometimes newspaper articles when the delays go past a few extra hours.

What's more, these are typically shared, public expenses (via insurance if nothing else). One of the major questions addressed in the post is whether the extra cost should be borne by the rescuer alone. Is that ethically relevant, or is it just an economic question of incentives?

Replies from: olivier-faure↑ comment by Olivier Faure (olivier-faure) · 2021-10-31T09:14:36.170Z · LW(p) · GW(p)

From previous posts about this setting, the background assumption is that the child almost certainly won't permanently die if it takes 15 seconds longer to reach them.

Sure, whatever.

Honestly, that answer makes me want to engage with the article even less. If the idea is that you're supposed to know about an entire fanfiction-of-a-fanfiction canon to talk about this thought experiment, then I don't see what it's doing in the Curated feed.

↑ comment by Iaroslav Postovalov (iaroslav-postovalov) · 2024-12-30T02:59:16.328Z · LW(p) · GW(p)

Putting ethics aside, if you are not rich enough to easily buy a new fragile suit, you can simply insure it. There are plenty of situations in which the suit could be ruined.

comment by Vladimir_Nesov · 2021-10-25T03:13:02.392Z · LW(p) · GW(p)

This applies to integrity of a false persona just as well, a separate issue from fitting an agentic persona (that gets decision making privileges, but not self-ratification privileges) to a human. Deciding quite clearly who you are doesn't seem possible without a million years of reflection and radical cognitive enhancement. The other option is rewriting who you are, begging the question, a more serious failure of integrity (of a different kind) whose salience distracts from the point of the dath ilani lesson.

comment by MichaelBowlby · 2021-11-10T02:50:03.302Z · LW(p) · GW(p)

I prefer hypocrisy to cruelty.

More generally, I think this just misses the point of the drowning child. The argument is not that you have this set of preferences and therefore you save the child; the argument is that luxury items are not of equal moral worth to the life of a child. This can be made consistent with taking off your suit first if you think the delay has a sufficiently small probability of leading to the death of the child and you think the death of a child and the expensive suit are comparable.

Replies from: Duncan_Sabien

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2021-11-10T03:42:12.810Z · LW(p) · GW(p)

Er. Trying to preemptively frame it as "cruelty" is somewhat refusing to engage with the very question at hand.

comment by rosyatrandom · 2021-11-01T10:05:02.114Z · LW(p) · GW(p)

Coming late to this, and having to skim because Real Life, but I would modify:

Keltham is being coherent, said the Watcher.

Keltham's decision is a valid one, given his own utility function (said the Watcher); you were wrong to try to talk him into thinking that he was making an objective error.

However, the Watcher said, Keltham's utility function is also awful and Keltham should be shunned for it by any being with decent ethics.

I really don't care how valid your utility function is, or how rational you think you are, if it turns you into the sort of person who has to weigh the possibility of a child dying against materialistic concerns. In that case, you've sacrificed your soul for the sake of optimising something worthless.

Replies from: aphyer

↑ comment by aphyer · 2021-11-02T15:40:24.253Z · LW(p) · GW(p)

Since you are posting on this thread, I am going to assume that you probably own a computer. Even if your computer is quite cheap, its cost trades off against a substantial probability of being able to save a child's life (much greater than the loss incurred by a 15-second delay).

I conclude therefore that by your own morality you should be shunned by any being with decent ethics, for having sacrificed your soul and chosen a worthless computer instead.

Replies from: rosyatrandom

↑ comment by rosyatrandom · 2021-11-08T13:07:42.184Z · LW(p) · GW(p)

We don't live in a world of clear perceptions and communications about abstract, many-times-removed ethical trade-offs.

We're humans, with a tiny little window focused on the trivia of our day-to-day lives, trying to talk to other humans doing the same thing.

Sometimes, we manage to rise a little above that, which is wonderful, and we need to work out how to co-ordinate civilisation better in that direction.

But mostly we are just stumbling about in the mud, and don't pretend that ridiculous sophist exercises in philosophical equivalence have any relation to real people and real experiences.

People don't often get the chance to participate in real, close, and meaningful ethical dilemmas. A quite-possibly-drowning-child that you can save by simply taking obvious physical action would be one of them. And anyone who refuses to take that action is scum.