Computational functionalism probably can't explain phenomenal consciousness

post by EuanMcLean (euanmclean) · 2024-12-10T17:11:28.044Z · LW · GW · 36 comments


I’ve updated quite hard against computational functionalism (CF) recently (as an explanation for phenomenal consciousness), from ~80% to ~30%. Of course it’s more complicated than that, since there are different ways to interpret CF and having credences on theories of consciousness can be hella slippery.

So far in this sequence, I’ve scrutinised a couple of concrete claims that computational functionalists might make, which I called theoretical and practical CF. In this post, I want to address CF more generally.

Like most rationalists I know, I used to basically assume some kind of CF when thinking about phenomenal consciousness. I found a lot of the arguments against functionalism, like Searle’s Chinese room, unconvincing. They just further entrenched my functionalismness. But as I came across and tried to explain away more and more criticisms of CF, I started to wonder, why did I start believing in it in the first place? So I decided to trace back my intellectual steps by properly scrutinising the arguments for CF.

In this post, I’ll first give a summary of the problems I have with CF, then summarise the main arguments for CF and why I find them sus, and finally briefly discuss what my view means for AI consciousness and mind uploading.

My assumptions

- I assume realism about phenomenal consciousness: Given some physical process, there is an objective fact of the matter whether or not that process is having a phenomenal experience, and what that phenomenal experience is. I am in camp #2 of Rafael’s two camps.

- I assume a materialist position: that there exists a correct theory of phenomenal consciousness that specifies a map between the third-person properties of a physical system and whether or not it has phenomenal consciousness (and if so, the nature of that phenomenal experience).

- I assume that phenomenal consciousness is a sub-component of the mind.

Defining computational functionalism

Here are some definitions of CF I found:

- Computational functionalism: the mind is the software of the brain. (Piccinini 2010)

- [Putnam] proposes that mental activity implements a probabilistic automaton and that particular mental states are machine states of the automaton’s central processor. (SEP)

- Computational functionalism is the view that mental states and events – pains, beliefs, desires, thoughts and so forth – are computational states of the brain, and so are defined in terms of “computational parameters plus relations to biologically characterized inputs and outputs” (Shagir 2005)

Here’s my version:

Computational functionalism: the activity of the mind is the execution of a program.

I’m most interested in CF as an explanation for phenomenal consciousness. Insofar as phenomenal consciousness is a real thing, and that phenomenal consciousness can be considered an element of the mind,[1] CF then says about phenomenal consciousness:

Computational functionalism (applied to phenomenal consciousness): phenomenal consciousness is the execution of a program.

What I want from a theory of phenomenal consciousness is for it to tell me what third-person properties to look for in a system to decide if, and if so what, phenomenal consciousness is present.

Computational functionalism (as a classifier for phenomenal consciousness): The right program running on some system is necessary and sufficient for the presence of phenomenal consciousness in that system.[2] If phenomenal consciousness is present, all aspects of the corresponding experience are specified by the program.

The second sentence might be contentious, but it follows from the previous definition. If it isn’t true, then you can’t say that conscious experience is that program, because the experience would have properties that the program does not. If the program does not fully specify the experience, then the best we can say is that the program is but one component of the experience, which is a weaker statement.

When I use the phrase computational functionalism (or CF) below, I’m referring to the “classifier for phenomenal consciousness” version I’ve defined above.

Arguments against computational functionalism so far

Previously in the sequence, I defined and argued against two things computational functionalists tend to say:

- Theoretical CF: A simulation of a human brain on a computer, with physics perfectly simulated down to the atomic level, would cause the same conscious experience as that brain.

- Practical CF: A simulation of a human brain on a classical computer, capturing the dynamics of the brain on some coarse-grained level of abstraction, that can run on a computer small and light enough to fit on the surface of Earth, with the simulation running at the same speed as base reality[3], would cause the same conscious experience as that brain.

I argued against practical CF here [LW · GW] and theoretical CF here [LW · GW]. These two claims are two potential ways to cash out the CF classifier I defined above. Practical CF says that a particular conscious experience in a human brain is identical to the execution of a program that is simple enough to run on a classical computer on Earth, which requires it to be implemented on a level of abstraction of the brain higher than biophysics. Theoretical CF says the program that creates the experience in the brain is (at least a submodule of) the “program” that governs all physical degrees of freedom in the brain.[4]

These two claims both have the strong subclaim that the simulation must have the same conscious experience as the brain it is simulating. We could weaken the claims to instead say “the simulation would have a similar conscious experience”, or even just “it would have a conscious experience at all”. These weaker claims are much less sensitive to my arguments against theoretical & practical CF.

But as I said above, if the conscious experience is different, that tells me that the experience cannot be fully specified by the program being run, and therefore the experience cannot be fully explained by that program. If we loosen the requirement of the same experience happening, this signals that the experience is also sensitive to other details like hardware, which constitutes a weaker statement than my face-value reading of the CF classifier.

I hold that a theory of phenomenal consciousness should probably have some grounding in observations of the brain, since that’s the one datapoint we have. So if we look at the brain, does it look like something implementing a program? In the practical CF post, I argue that the answer is no, by calling into question the presence of a “software level of abstraction” (cf. Marr’s levels) below behavior and above biophysics.

In the theoretical CF post, I give a more abstract argument against the CF classifier. I argue that computation is fuzzy: it’s a property of our map of a system rather than the territory. In contrast, given my realist assumptions above, phenomenal consciousness is not a fuzzy property of a map; it is the territory. So consciousness cannot be computation.

When I first realized these problems, it updated me only a little bit away from CF. I still said to myself “well, all this consciousness stuff is contentious and confusing. There are these arguments against CF I find convincing, but there are also good arguments in favor of it, so I don’t know whether to stop believing in it or not.” But then I actually scrutinized the arguments in favor of CF, and realized I don’t find them very convincing. Below I’ll give a review of the main ones, and my problems with them.

Arguments in favor of computational functionalism

Please shout if you think I’ve missed an important one!

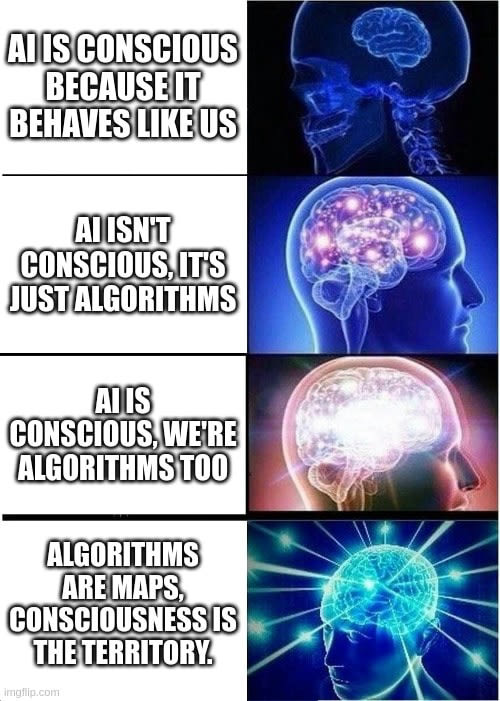

We can reproduce human capabilities on computers, why not consciousness?

AI is achieving more and more things that we used to think were exclusively human. First they came for chess. Then they came for Go, visual recognition, art, natural language. At each step the naysayers dragged their heels, saying “But it’ll never be able to do [thing] that humans can do!”.

Are the non-functionalists just making the same mistake by claiming that consciousness will never be achieved on computers?

First of all, I don’t think computational functionalism is strictly required for consciousness on computers.[5] But sure, computational functionalism feels closely related to the claim that AI will become conscious, so let’s address it here.

This argument assumes that phenomenal consciousness is in the same reference class as the set of previously-uniquely-human capabilities that AI has achieved. But is it? Is phenomenal consciousness a cognitive capability?

Hang on a minute. Saying that phenomenal consciousness is a cognitive capability sounds a bit… functionalist. Yes, if consciousness is nothing but a particular function that the brain performs, and AI is developing the ability to reproduce more and more functions of the human brain, then it seems reasonable to expect the consciousness function to eventually arrive in AI.

But if you don’t accept that phenomenal consciousness is a function, then it’s not in the same reference class as natural language etc. Then the emergence of those capabilities in AI does not tell us about the emergence of consciousness.

So this argument is circular. Consciousness is a function, AI can do human functions, so consciousness will appear in AI. Take away the functionalist assumption, and the argument breaks down.

The computational lens helps explain the mind

Functionalism, and computational functionalism in particular, was also motivated by the rapid progress of computer science and early forms of AI in the second half of the 20th Century (Boden 2008; Dupuy 2009). These gains helped embed the metaphor of the brain as a computer, normalise the language of information processing as describing what brains do, and arguably galvanise the discipline of cognitive science. But metaphors are in the end just metaphors (Cobb 2020). The fact that mental processes can sometimes be usefully described in terms of computation is not a sufficient basis to conclude that the brain actually computes, or that consciousness is a form of computation.

Human brains are the main (only?) datapoint from which we can infer a theory of phenomenal consciousness. So when asking what properties are required for phenomenal consciousness, we can investigate what properties of the human brain are necessary for the creation of a mind.

The human brain seems to give rise to the human mind at least a bit like how a computer gives rise to the execution of programs. Modelling the brain as a computer has proven to have a lot of explanatory power: via many algorithmic models including models of visual processing, memory, attention, decision-making, perception & learning, and motor control.

These algorithms are useful maps of the brain and mind. But is computation also the territory? Is the mind a program? Such a program would need to exist as a high-level abstraction of the brain that is causally closed and fully encodes the mind.

In my previous post assessing practical CF, I explored whether or not such an abstraction exists. I concluded that it probably doesn’t. There is probably no software/hardware separation in the brain. Such a separation is not genetically fit since it is energetically expensive and there is no need for brains to download new programs in the way computers do. There is some empirical evidence consistent with this: the mind and neural spiking are sensitive to many biophysical details like neurotransmitter trajectories and mitochondria.

The computational lens is powerful for modelling the brain. But if you look close enough, it breaks down. Computation is a map of the mind, but it probably isn’t the territory.
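To make the "causally closed abstraction" requirement above a little more concrete, here is a minimal toy sketch. It assumes one particular reading of causal closure (the next high-level state depends only on the current high-level state), which is my formalisation for illustration and not a definition taken from the posts. The names `is_causally_closed`, `rotate`, `increment` and `count_ones` are all hypothetical.

```python
from itertools import product

def is_causally_closed(states, step, coarse_grain):
    """Return True if the next macro-state is fixed by the current macro-state alone.

    states:       iterable of all micro-states
    step:         micro-level dynamics, micro-state -> micro-state
    coarse_grain: abstraction map, micro-state -> macro-state
    """
    macro_next = {}
    for s in states:
        macro_now = coarse_grain(s)
        macro_after = coarse_grain(step(s))
        # Two micro-states with the same macro-state must agree on the next macro-state.
        if macro_next.setdefault(macro_now, macro_after) != macro_after:
            return False
    return True

# Toy "hardware": a 3-bit register, so there are 8 micro-states.
states = list(product([0, 1], repeat=3))

def rotate(s):
    """Micro-dynamics 1: rotate the bits one place to the left."""
    return s[1:] + s[:1]

def increment(s):
    """Micro-dynamics 2: treat the bits as an integer and add 1 modulo 8."""
    n = (s[0] * 4 + s[1] * 2 + s[2] + 1) % 8
    return ((n >> 2) & 1, (n >> 1) & 1, n & 1)

def count_ones(s):
    """Candidate abstraction: keep only the number of set bits."""
    return sum(s)

closed_under_rotate = is_causally_closed(states, rotate, count_ones)
closed_under_increment = is_causally_closed(states, increment, count_ones)
print(closed_under_rotate, closed_under_increment)
```

Under rotation the bit count never changes, so the abstraction is closed. Under incrementing, `(0, 0, 1)` and `(0, 1, 0)` share a macro-state but lead to different ones, so the same abstraction leaks micro-level detail. The question in this section is whether any abstraction of the brain above biophysics behaves like the first case.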

Chalmers’ fading qualia

David Chalmers argued for substrate independence with his fading qualia thought experiment. Imagine you woke up in the middle of the night to find out that Chalmers had kidnapped you and tied you to a hospital bed in his spooky lab at NYU. “I’m going to do a little experiment on you, but don’t worry it’s not very invasive. I’m just going to remove a single neuron from your brain, and replace it with one of these silicon chips my grad student invented.”

He explains that the chip performs the exact input/output behavior as the real neuron he’s going to remove, right down to the electrical signals and neurotransmitters. “Your experience won’t change” he claims, “your brain will still function just as it used to, so your mind will still all be there”. Before you get the chance to protest, his grad student puts you under general anesthetic and performs the procedure.

When you wake up David asks “Are you still conscious?” and you say “yes”. “Ok, do another one,” he says to his grad student, and you’re under again. The grad student continues to replace neurons with silicon chips one by one, checking each time if you are still conscious. Since each time it’s just a measly neuron that was removed, your answer never changes.

After one hundred billion operations, every neuron has been replaced with a chip. “Are you still conscious?” you answer “Yes”, because of course you do, your brain is functioning exactly like it did before. “Aha! I have proved substrate independence once and for all!” Chalmers exclaims, “Your mind is running on different hardware, yet your conscious experience has remained unchanged.”

Surely there can’t be a single neuron replacement that turns you into a philosophical zombie? That would mean your consciousness was reliant on that single neuron, which seems implausible.

The other option is that your consciousness gradually fades over the course of the operations. But surely you would notice that your experience was gradually fading and report it? To not notice the fading would be a catastrophic failure of introspection.

There are a couple of rebuttals to this I want to focus on.

But non-functionalists don’t trust cyborgs?

Schwitzgebel points out that Chalmers has an “audience problem”. Those he is trying to convince of functionalism are those who do not yet believe in functionalism. These non-functionalists are skeptical that the final product of the experiment, the person made of silicon chips, is conscious. So despite the fully silicon person reporting consciousness, the non-functionalist does not believe them[6], since behaving like you are conscious is not conclusive evidence that you’re conscious.

The non-functionalist audience is also not obliged to trust the introspective reports at intermediate stages. A person with 50% neurons and 50% chips will report unchanged consciousness, but for the same reason as for the final state, the non-functionalist need not believe that report. Therefore, for a non-functionalist, it’s perfectly possible that the patient could continue to report normal consciousness while in fact their consciousness is fading.

Are neuron-replacing chips physically possible?

In how much detail would Chalmers’ silicon chips have to simulate the in/out behavior of the neurons? If the neuron doctrine were true, each chip could simply have protruding wires that give and receive electrical impulses. It could then have a tiny computer on board that has learned the correct in/out mapping.

But as I brought up in a previous post, neurons do not only communicate via electrical signals.[7] The precise trajectories of neurotransmitters might also be important. When neurotransmitters arrive, where on the surface they penetrate, and how deep they get all influence the pattern of firing of the receiving neuron and what neurotransmitters it sends on to other cells.

The silicon chip would need detectors for each species of neurotransmitter on every point of its surface. It must use that data to simulate the neuron’s processing of the neurotransmitters. To simulate many precise trajectories within the cell could be very expensive. Could any device ever run such simulations quickly enough (so as to keep up with the pace of the biological neurons) on a chip small enough (so as to fit in amongst the biological neurons)?

It must also synthesize new neurotransmitters to send out to other neurons. To do so, the chip needs a supply of chemicals to build them from. So as not to run out, the chip will have to re-use the chemicals from incoming neurotransmitters.

And hey, since a large component of our expensive simulation is going to be tracking the transformation of old neurotransmitters to new neurotransmitters, we can dispose of that simulation since we’re actually just running those reactions in reality. Wait a minute, is this still a simulation of a neuron? Because it’s starting to just feel like a neuron.

Following this to its logical conclusion: when it comes down to actually designing these chips, a designer may end up discovering that the only way to reproduce all of the relevant in/out behavior of a neuron, is just to build a neuron![8]

Putnam’s octopus

So I’m not convinced by any of the arguments so far. This makes me start to wonder, where did CF come from in the first place? What were the ideas that first motivated it? CF was first defined and argued for by Hilary Putnam in the 60s, who justified it with the following argument.

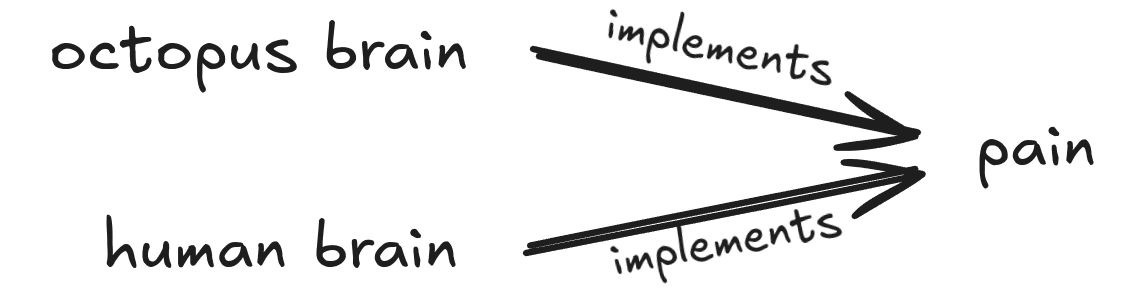

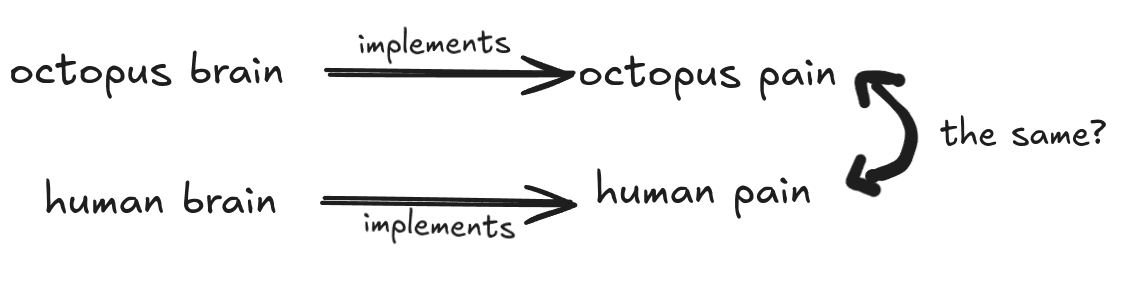

An octopus mind is implemented in a radically different way than a human mind. Octopuses have a decentralized nervous system with most neurons located in their tentacles, a donut-shaped brain, and a vertical lobe instead of hippocampi (hippocampuses?) or neocortexes (neocortexi?).

But octopuses can have experiences like ours. Namely: octopuses seem to feel pain. They demonstrate aversive responses to harmful stimuli and they learn to avoid situations where they have been hurt. So we have the pain experience being created by two very different physical implementations. So pain is substrate-independent! Therefore multiple realizability, therefore CF.

I think this only works when we interpret multiple realizability at a suitably coarse-grained level of description of mental states (Bechtel & Mundale 2022). You can certainly argue that octopus pain and human pain are of the same “type” (they play a similar function or have similar effects on the animal’s behavior). But since we’re interested in the phenomenal texture of that experience, we’re left with the question: how can we assume that octopus pain and human pain have the same quality?

If you want to use octopuses to argue that phenomenal consciousness is a program, the best you can do is a circular argument. How might we argue that human pain and octopus pain are the same experience? They seem to be playing the same function - both experiences are driving the animal to avoid harmful stimuli. Oh, so octopus pain and human pain are the same because they play the same function, in other words, because functionalism?

This concludes the tour of (what I interpret to be) the main arguments for CF.

What does a non-functionalist world look like?

What this means for AI consciousness

I still think conscious AI is possible even if CF is wrong.

If CF is true then AI might be conscious, since the AI could be running the same algorithms that make the human mind conscious. But does negating CF make AI consciousness impossible? To claim this without further argument is to commit the fallacy of denying the antecedent.[9]
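The invalidity of that inference can be checked mechanically. The following is a small sketch that enumerates every truth assignment and looks for cases where both premises hold ("CF implies conscious AI" and "not CF") but the conclusion ("no conscious AI") fails. The function names are hypothetical, and the implication is treated as plain material implication, which is a simplifying assumption.

```python
from itertools import product

def implies(a: bool, b: bool) -> bool:
    """Material implication: a -> b."""
    return (not a) or b

def counterexamples():
    """List every (cf, conscious_ai) assignment where the premises are true
    but the conclusion 'not conscious_ai' is false."""
    found = []
    for cf, conscious_ai in product([False, True], repeat=2):
        premises_hold = implies(cf, conscious_ai) and (not cf)
        conclusion_holds = not conscious_ai
        if premises_hold and not conclusion_holds:
            found.append((cf, conscious_ai))
    return found

print(counterexamples())  # prints [(False, True)]
```

The single counterexample is the world where CF is false and AI is conscious anyway: both premises are satisfied there, so they cannot rule it out.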

To say that CF is false is to say that consciousness isn’t totally explained by computation. But it’s another thing to say that the computational lens tells you nothing about how likely a system is to be conscious. To claim that consciousness cannot emerge from silicon, one cannot just deny functionalism; one must also explain why biology has the secret sauce while chips do not.[10]

If computation isn’t the source of consciousness, it could still be correlated with whatever that true source is. Cao 2022 argues that since function constrains implementation, function tells us at least some things about other properties of the system (like the physical makeup):

From an everyday perspective, it may seem obvious that function constrains material make-up. A bicycle chain cannot be made of just anything—certain physical properties are required in order for it to perform as required within the functional organisation of the bicycle. Swiss cheese would make for a terrible bicycle chain, let alone a mind.

(Cao 2022)

For example, even if consciousness isn’t a function under this view, it probably still plays a function in biology.[11] If that function is useful for future AI, then we can predict that consciousness will eventually appear in AI systems, since whatever property creates consciousness will be engineered into AI to improve its capabilities.

What this means for mind uploading

There is probably no simple abstraction of brain states that captures the necessary dynamics that encode consciousness. Scanning one’s brain and finding the “program of your mind” might be impractical because your mind, memories, personality etc. are deeply entangled with the biophysical details of your brain. Geoffrey Hinton calls this mortal computation, a kind of information processing that involves an inseparable entanglement between software and hardware. If the hardware dies, the software dies with it.

Perhaps we will still be able to run coarse-grained simulations of our brains that capture various traits of ourselves, but if CF is wrong, those simulations will not be conscious. This makes me worried about a future where the lightcone is tiled with what we think to be conscious simulations, when in fact they are zombies with no moral value.

Conclusion

Computational functionalism has some problems: the most pressing one (in my book) is the problem of algorithmic arbitrariness. But are there strong arguments in favour of CF to counterbalance these problems? In this post, I went through the main arguments and argued that they are not strong. So the overall case for CF is not strong either.

I hope you enjoyed this sequence. I’m going to continue scratching my head about computational functionalism, as I think it’s an important question and it’s tractable to update on its validity. If I find more crucial considerations, I might add more posts to this sequence.

Thanks for reading!

[1] I suspect this jump might be a source of confusion and disagreement in this debate. “The mind” could be read in a number of ways. It seems clear that many aspects of the mind can be explained by computation (e.g. its functional properties). In this article I’m only interested in the phenomenal consciousness element of the mind.

[2] CF could accommodate “degrees of consciousness” rather than a binary on/off conception of consciousness, by saying that the degree of consciousness is defined by the program being run. Some programs are not conscious at all, some are slightly conscious, and some are very conscious.

[3] 1 second of simulated time is computed at least every second in base reality.

[4] Down to some very small length scale.

[5] See the "What this means for AI consciousness" section.

[6] Do non-functionalists say that we can’t trust introspective reports at all? Not necessarily. A non-functionalist would believe the introspective reports of other fully biological humans, because they are biological humans themselves and they are extrapolating the existence of their own consciousness to the other person. We’re not obliged to believe all introspective reports. A non-functionalist could poo-poo the report of the 50/50 human for the same reason that they poo-poo the reports of LaMDA: reports are not enough to guarantee consciousness.

[7] Content warning: contentious claims from neuroscience. Feel free to skip or not update much, I won't be offended.

[8] Perhaps it doesn’t have to be exactly a neuron; there may still be some substrate flexibility, e.g. we have the freedom to rearrange certain internal chemical processes without changing the function. But in this case we have less substrate flexibility than computational functionalists usually assume, and the replacement chip still looks very different to a typical digital computer chip.

[9] Denying the antecedent: A implies B, and not A, so not B. In our case: CF implies conscious AI, so not CF implies not conscious AI.

[10] The AI consciousness disbeliever must state a “crucial thesis” that posits a link between biology and consciousness tight enough to exclude the possibility of consciousness on silicon chips, and argue for that thesis.

[11] For example, modelling the environment in an energy efficient way.

36 comments


comment by Steven Byrnes (steve2152) · 2024-12-11T00:00:45.632Z · LW(p) · GW(p)

> These algorithms are useful maps of the brain and mind. But is computation also the territory? Is the mind a program? Such a program would need to exist as a high-level abstraction of the brain that is causally closed and fully encodes the mind.

I said it in one of your previous posts but I’ll say it again: I think causal closure is patently absurd, and a red herring. The brain is a machine that runs an algorithm, but algorithms are allowed to have inputs! And if an algorithm has inputs, then it’s not causally closed.

The most obvious examples are sensory inputs—vision, sounds, etc. I’m not sure why you don’t mention those. As soon as I open my eyes, everything in my field of view has causal effects on the flow of my brain algorithm.

Needless to say, algorithms are allowed to have inputs. For example, the mergesort algorithm has an input (namely, a list). But I hope we can all agree that mergesort is an algorithm!

The other example is: the brain algorithm has input channels where random noise enters in. Again, that doesn’t prevent it from being an algorithm. Many famous, central examples of algorithms have input channels that accept random bits—for example, MCMC.

And in regards to “practical CF”, if I run MCMC on my computer while sitting outside, and I use an anemometer attached to the computer as a source of the random input bits entering the MCMC run, then it’s true that you need an astronomically complex hyper-accurate atmospheric simulator in order to reproduce this exact run of MCMC, but I don’t understand your perspective wherein that fact would be important. It’s still true that my computer is implementing MCMC “on a level of abstraction…higher than” atoms and electrons. The wind flowing around the computer is relevant to the random bits, but is not part of the calculations that comprise MCMC (which involve the CPU instruction set etc.). By the same token, if thermal noise mildly impacts my train of thought (as it always does), then it’s true that you need to simulate my brain down to the jiggling atoms in order to reproduce this exact run of my brain algorithm, but this seems irrelevant to me, and in particular it’s still true that my brain algorithm is “implemented on a level of abstraction of the brain higher than biophysics”. (Heck, if I look up at the night sky, then you’d need to simulate the entire Milky Way to reproduce this exact run of my brain algorithm! Who cares, right?)
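The point about MCMC and its noise source can be sketched in code. Below is a minimal random-walk Metropolis sampler, written as an illustration under stated assumptions rather than as anyone's actual setup: the entropy source is passed in as a plain function `uniform`, standing in for the anemometer, and the names `metropolis` and `standard_normal_log_density` are hypothetical.

```python
import math
import random

def metropolis(log_density, uniform, steps, x0=0.0, step_size=1.0):
    """Random-walk Metropolis sampler.

    log_density: log of the (unnormalised) target density
    uniform:     zero-argument function returning floats in [0, 1);
                 this is the only place noise enters the algorithm
    """
    x = x0
    samples = []
    for _ in range(steps):
        # Propose a move drawn uniformly from [x - step_size, x + step_size).
        proposal = x + step_size * (2.0 * uniform() - 1.0)
        # Accept with probability min(1, p(proposal) / p(x)).
        accept_prob = math.exp(min(0.0, log_density(proposal) - log_density(x)))
        if uniform() < accept_prob:
            x = proposal
        samples.append(x)
    return samples

def standard_normal_log_density(x):
    return -0.5 * x * x

# Same algorithm, same noise stream: the run is reproduced exactly.
run_a = metropolis(standard_normal_log_density, random.Random(0).random, 1000)
run_b = metropolis(standard_normal_log_density, random.Random(0).random, 1000)

# Same algorithm, different noise stream: a different trajectory.
run_c = metropolis(standard_normal_log_density, random.Random(1).random, 1000)

print(run_a == run_b, run_a == run_c)
```

Reproducing `run_a` exactly requires reproducing its noise stream, but all three runs execute the same `metropolis` code. That is the distinction the comment draws between the algorithm and the source of its random input bits.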

↑ comment by Rafael Harth (sil-ver) · 2024-12-12T12:16:46.755Z · LW(p) · GW(p)

I think causal closure of the kind that matters here just means that the abstract description (in this case, of the brain as performing an algorithm/computation) captures all relevant features of the physical description, not that it has no dependence on inputs. Should probably be renamed something like "abstraction adequacy" (making this up right now, I don't have a term on shelf for this property). Abstraction (in)adequacy is relevant for CF I believe (I think it's straight-forward why?). Randomness probably doesn't matter since you can include this in the abstract description.

↑ comment by Steven Byrnes (steve2152) · 2024-12-12T18:54:06.958Z · LW(p) · GW(p)

Right, what I actually think is that a future brain scan with future understanding could enable a WBE to run on a reasonable-sized supercomputer (e.g. <100 GPUs), and it would be capturing what makes me me, and would be conscious (to the extent that I am), and it would be my consciousness (to a similar extent that I am), but it wouldn’t be able to reproduce my exact train of thought in perpetuity, because it would be able to reproduce neither the input data nor the random noise of my physical brain. I believe that OP’s objection to “practical CF” is centered around the fact that you need an astronomically large supercomputer to reproduce the random noise, and I don’t think that’s relevant. I agree that “abstraction adequacy” would be a step in the right direction.

Causal closure is just way too strict. And it’s not just because of random noise. For example, suppose that there’s a tiny amount of crosstalk between my neurons that represent the concept “banana” and my neurons that represent the concept “Red Army”, just by random chance. And once every 5 years or so, I’m thinking about bananas, and then a few seconds later, the idea of the Red Army pops into my head, and if not for this cross-talk, it counterfactually wouldn’t have popped into my head. And suppose that I have no idea of this fact, and it has no impact on my life. This overlap just exists by random chance, not part of some systematic learning algorithm. If I got magical brain surgery tomorrow that eliminated that specific cross-talk, and didn’t change anything else, then I would obviously still be “me”, even despite the fact that maybe some afternoon 3 years from now I would fail to think about the Red Army when I otherwise might. This cross-talk is not randomness, and it does undermine “causal closure” interpreted literally. But I would still say that “abstraction adequacy” would be achieved by an abstraction of my brain that captured everything except this particular instance of cross-talk.

↑ comment by Rafael Harth (sil-ver) · 2024-12-13T13:07:30.395Z · LW(p) · GW(p)

Two thoughts here:

- I feel like the actual crux between you and OP is with the claim in post #2 that the brain operates outside the neuron doctrine to a significant extent. This seems to be what your back and forth is heading toward; OP is fine with pseudo-randomness as long as it doesn't play a nontrivial computational function in the brain, so the actual important question is not anything about pseudo-randomness but just whether such computational functions exist. (But maybe I'm missing something, also I kind of feel like this is what most people's objection to the sequence 'should' be, so I might have tunnel vision here.)

- (Mostly unrelated to the debate, just trying to improve my theory of mind, sorry in advance if this question is annoying.) I don't get what you mean when you say stuff like "would be conscious (to the extent that I am), and it would be my consciousness (to a similar extent that I am)," since afaik you don't actually believe that there is a fact of the matter as to the answers to these questions. Some possibilities for what I think you could mean:

  - I don't actually think these questions are coherent, but I'm pretending as if I did for the sake of argument

  - I'm just using consciousness/identity as fuzzy categories here because I assume that the realist conclusions must align with the intuitive judgments (i.e., if it seems like the fuzzy category 'consciousness' applies similarly to both the brain and the simulation, then probably the realist will be forced to say that their consciousness is also the same)

  - Actually there is a question worth debating here even if consciousness is just a fuzzy category because ???

  - Actually I'm genuinely entertaining the realist view now

  - Actually I reject the strict realist/anti-realist distinction because ???

↑ comment by Steven Byrnes (steve2152) · 2024-12-13T15:52:50.792Z · LW(p) · GW(p)

Thanks!

I feel like the actual crux between you and OP is with the claim in post #2 [? · GW] that the brain operates outside the neuron doctrine to a significant extent.

I don’t think that’s quite right. Neuron doctrine is pretty specific IIUC. I want to say: when the brain does systematic things, it’s because the brain is running a legible algorithm that relates to those things. And then there’s a legible explanation of how biochemistry is running that algorithm. But the latter doesn’t need to be neuron-doctrine. It can involve dendritic spikes and gene expression and astrocytes etc.

All the examples here [LW · GW] are real and important, and would impact the algorithms of an “adequate” WBE, but are mostly not “neuron doctrine”, IIUC.

Basically, it’s the thing I wrote a long time ago here [LW · GW]: “If some [part of] the brain is doing something useful, then it's humanly feasible to understand what that thing is and why it's useful, and to write our own CPU code that does the same useful thing.” And I think “doing something useful” includes as a special case everything that makes me me.

I don't get what you mean when you say stuff like "would be conscious (to the extent that I am), and it would be my consciousness (to a similar extent that I am)," since afaik you don't actually believe that there is a fact of the matter as to the answers to these questions…

Just, it’s a can of worms that I’m trying not to get into right here. I don’t have a super well-formed opinion, and I have a hunch that the question of whether consciousness is a coherent thing is itself a (meta-level) incoherent question (because of the (A) versus (B) thing here [LW(p) · GW(p)]). Yeah, just didn’t want to get into it, and I haven’t thought too hard about it anyway. :)

↑ comment by EuanMcLean (euanmclean) · 2024-12-12T16:32:25.225Z · LW(p) · GW(p)

The most obvious examples are sensory inputs—vision, sounds, etc. I’m not sure why you don’t mention those.

Obviously algorithms are allowed to have inputs, and I agree that the fact that the brain takes in sensory input (and all other kinds of inputs) is not evidence against practical CF. The way I'm defining causal closure is that the algorithm is allowed to take in some narrow band of inputs (narrow relative to, say, the inputs being the dynamics of all the atoms in the atmosphere around the neurons, or whatever). My bad for not making this more explicit, I've gone back and edited the post to make it clearer.

Computer chips have a clear sense in which they exhibit causal closure (even though they are allowed to take in inputs through narrow channels). There is a useful level of abstraction of the chip: the charges in the transistors. We can fully describe all the computations executed by the chip at that level of abstraction plus inputs, because that level of abstraction is causally closed from lower-level details like the trajectories of individual charges. If it wasn't so, then that level of abstraction would not be helpful for understanding the behavior of the computer -- executions would branch conditional on specific charge trajectories, and it would be a rubbish computer.

random noise enters in

I think this is a big source of the confusion, another case where I haven't been clear enough. I agree that algorithms are allowed to receive random noise. What I am worried about is the case where the signals entering the abstraction from smaller length scales are systematic rather than random.

If the information leaking into the abstraction can be safely averaged out (say, we just define a uniform temperature throughout the brain as an input to the algorithm), then we can just consider this a part of the abstraction: a temperature parameter you define as an input or whatever. Such an abstraction might be able to create consciousness on a practical classical computer.

But imagine instead that (for sake of argument) it turned out that high-resolution details of temperature fluctuations throughout the brain had a causal effect on the execution of the algorithm such that the algorithm doesn't do what it's meant to do if you just take the average of those fluctuations. In that case, the algorithm is not fully specified on that level of abstraction, and whatever dynamics are important for phenomenal consciousness might be encoded in the details of temperature fluctuations and not be captured by your abstraction.

Replies from: steve2152↑ comment by Steven Byrnes (steve2152) · 2024-12-12T17:38:59.647Z · LW(p) · GW(p)

I’m confused by your comment. Let’s keep talking about MCMC.

- The following is true: The random inputs to MCMC have “a causal effect on the execution of the algorithm such that the algorithm doesn't do what it's meant to do if you just take the average of those fluctuations”.

- For example, let’s say the MCMC accepts a million inputs in the range (0,1), typically generated by a PRNG in practice. If you replace the PRNG by the function return 0.5 (“just take the average of those fluctuations”), then the MCMC will definitely fail to give the right answer.

- The following is false: “the signals entering…are systematic rather than random”. The random inputs to MCMC are definitely expected and required to be random, not systematic. If the PRNG has systematic patterns, it screws up the algorithm—I believe this happens from time to time, and people doing Monte Carlo simulations need to be quite paranoid about using an appropriate PRNG. Even very subtle long-range patterns in the PRNG output can screw up the calculation.

The MCMC will do a highly nontrivial (high-computational-complexity) calculation and give a highly non-arbitrary answer. The answer does depend to some extent on the stream of random inputs. For example, suppose I do MCMC, and (unbeknownst to me) the exact answer is 8.00. If I use a random seed of 1 in my PRNG, then the MCMC might spit out a final answer of 7.98 ± 0.03. If I use a random seed of 2, then the MCMC might spit out a final answer of 8.01 ± 0.03. Etc. So the algorithm run is dependent on the random bits, but the output is not totally arbitrary.
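To make the two points above concrete, here is a minimal sketch of a random-walk Metropolis sampler. Everything in it is an assumption made for illustration: the target is a toy N(8, 1) distribution chosen so that the "exact answer" is 8, and the function name `mcmc_mean` and its parameters are hypothetical, not taken from any code discussed in this thread.

```python
import math
import random


def mcmc_mean(uniform, n_steps=20000, burn_in=1000, start=0.0, step=2.0):
    """Random-walk Metropolis estimate of the mean of a toy N(8, 1) target.

    `uniform` is any zero-argument callable returning floats in [0, 1).
    """
    def log_target(x):
        return -0.5 * (x - 8.0) ** 2

    x = start
    total = 0.0
    for i in range(n_steps):
        # Propose a symmetric jump in [-step, step).
        proposal = x + step * (2.0 * uniform() - 1.0)
        # Metropolis rule: accept with probability min(1, target ratio).
        log_ratio = log_target(proposal) - log_target(x)
        if uniform() < math.exp(min(0.0, log_ratio)):
            x = proposal
        if i >= burn_in:
            total += x
    return total / (n_steps - burn_in)


estimate_seed_1 = mcmc_mean(random.Random(1).random)
estimate_seed_2 = mcmc_mean(random.Random(2).random)
estimate_constant = mcmc_mean(lambda: 0.5)  # "PRNG" replaced by its average
```

In this sketch the two seeded runs should both land close to 8 while differing slightly from each other, which is the sense in which the run depends on the random bits without the output being arbitrary. With the constant 0.5 stream every proposed jump has size zero, so the chain never leaves its starting point and the estimate is the start value 0.0 rather than anything near 8.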

All this is uncontroversial background, I hope. You understand all this, right?

executions would branch conditional on specific charge trajectories, and it would be a rubbish computer.

As it happens, almost all modern computer chips are designed to be deterministic, by putting every signal extremely far above the noise floor. This has a giant cost in terms of power efficiency, but it has a benefit of making the design far simpler and more flexible for the human programmer. You can write code without worrying about bits randomly flipping—except for SEUs, but those are rare enough that programmers can basically ignore them for most purposes.

(Even so, such chips can act non-deterministically in some cases—for example as discussed here, some ML code is designed with race conditions where sometimes (unpredictably) the chip calculates (a+b)+c and sometimes a+(b+c), which are ever-so-slightly different for floating point numbers, but nobody cares, the overall algorithm still works fine.)
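The floating-point point can be checked directly. This is a small illustration using standard IEEE 754 double arithmetic in Python; the particular values 0.1, 0.2 and 0.3 are just a convenient example, not anything specific to ML code.

```python
import math

a, b, c = 0.1, 0.2, 0.3

left = (a + b) + c   # add a and b first
right = a + (b + c)  # add b and c first

order_matters = left != right
difference = abs(left - right)
still_close = math.isclose(left, right, rel_tol=1e-12)
```

Here `order_matters` is True, yet the two results differ only in the last bit or so, which is why a race over the order of additions changes the exact bits without changing what the overall algorithm computes in any meaningful sense.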

But more importantly, it’s possible to run algorithms in the presence of noise. It’s not how we normally do things in the human world, but it’s totally possible. For example, I think an ML algorithm would basically work fine if a small but measurable fraction of bits randomly flipped as you ran it. You would need to design it accordingly, of course—e.g. don’t use floating point representation, because a bit-flip in the exponent would be catastrophic. Maybe some signals would be more sensitive to bit-flips than others, in which case maybe put an error-correcting code on the super-sensitive ones. But for lots of other signals, e.g. the lowest-order bit of some neural net activation, we can just accept that they’ll randomly flip sometimes, and the algorithm still basically accomplishes what it’s supposed to accomplish—say, image classification or whatever.
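As a rough illustration of why the position of a flipped bit matters, the sketch below flips single bits in the 32-bit IEEE 754 encoding of a number. The helper name `flip_bit` and the example value 0.75 are hypothetical choices for illustration only.

```python
import struct


def flip_bit(value, bit):
    """Flip one bit of the float32 encoding of `value`.

    Bit 0 is the lowest mantissa bit, bits 23-30 are the exponent, bit 31 is the sign.
    """
    (as_int,) = struct.unpack("<I", struct.pack("<f", value))
    (flipped,) = struct.unpack("<f", struct.pack("<I", as_int ^ (1 << bit)))
    return flipped


activation = 0.75
low_bit_flip = flip_bit(activation, 0)    # lowest-order mantissa bit
exponent_flip = flip_bit(activation, 30)  # highest-order exponent bit
```

Flipping the lowest mantissa bit moves 0.75 by less than one part in a million, while flipping the top exponent bit turns it into a number of order 10^38. A noise-tolerant design would therefore either avoid such a representation or protect the sensitive bits, as the paragraph above suggests.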

Replies from: euanmclean↑ comment by EuanMcLean (euanmclean) · 2024-12-12T17:55:43.230Z · LW(p) · GW(p)

I'm not saying anything about MCMC. I'm saying random noise is not what I care about, the MCMC example is not capturing what I'm trying to get at when I talk about causal closure.

I don't disagree with anything you've said in this comment, and I'm quite confused about how we're able to talk past each other to this degree.

Replies from: steve2152↑ comment by Steven Byrnes (steve2152) · 2024-12-12T18:21:28.895Z · LW(p) · GW(p)

Yeah duh I know you’re not talking about MCMC. :) But MCMC is a simpler example to ensure that we’re on the same page on the general topic of how randomness can be involved in algorithms. Are we 100% on the same page about the role of randomness in MCMC? Is everything I said about MCMC super duper obvious from your perspective? If not, then I think we’re not yet ready to move on to the far-less-conceptually-straightforward topic of brains and consciousness.

I’m trying to get at what you mean by:

But imagine instead that (for sake of argument) it turned out that high-resolution details of temperature fluctuations throughout the brain had a causal effect on the execution of the algorithm such that the algorithm doesn't do what it's meant to do if you just take the average of those fluctuations.

I don’t understand what you mean here. For example:

- If I run MCMC with a PRNG given random seed 1, it outputs 7.98 ± 0.03. If I use a random seed of 2, then the MCMC spits out a final answer of 8.01 ± 0.03. My question is: does the random seed entering MCMC “have a causal effect on the execution of the algorithm”, in whatever sense you mean by the phrase “have a causal effect on the execution of the algorithm”?

- My MCMC code uses a PRNG that returns random floats between 0 and 1. If I replace that PRNG with return 0.5, i.e. the average of the 0-to-1 interval, then the MCMC now returns a wildly-wrong answer of 942. Is that replacement the kind of thing you have in mind when you say “just take the average of those fluctuations”? If so, how do you reconcile the fact that “just take the average of those fluctuations” gives the wrong answer, with your description of that scenario as “what it’s meant to do”? Or if not, then what would “just take the average of those fluctuations” mean in this MCMC context?

↑ comment by EuanMcLean (euanmclean) · 2024-12-12T23:56:52.258Z · LW(p) · GW(p)

MCMC is a simpler example to ensure that we’re on the same page on the general topic of how randomness can be involved in algorithms.

Thanks for clarifying :)

Are we 100% on the same page about the role of randomness in MCMC? Is everything I said about MCMC super duper obvious from your perspective?

Yes.

If I run MCMC with a PRNG given random seed 1, it outputs 7.98 ± 0.03. If I use a random seed of 2, then the MCMC spits out a final answer of 8.01 ± 0.03. My question is: does the random seed entering MCMC “have a causal effect on the execution of the algorithm”, in whatever sense you mean by the phrase “have a causal effect on the execution of the algorithm”?

Yes, the seed has a causal effect on the execution of the algorithm by my definition. As was talked about in the comments [LW(p) · GW(p)] of the original post, causal closure comes in degrees, and in this case the MCMC algorithm is somewhat causally closed from the seed. An abstract description of the MCMC system that excludes the value of the seed is still a useful abstract description of that system - you can reason about what the algorithm is doing, predict the output within the error bars, etc.

In contrast, the algorithm is not very causally closed to, say, idk, some function f() that is called a bunch of times on each iteration of the MCMC. If we leave f() out of our abstract description of the MCMC system, we don't have a very good description of that system, we can't work out much about what the output would be given an input.

If the 'mental software' I talk about is as causally closed to some biophysics as the MCMC is causally closed to the seed, then my argument in that post is weak. If however it's only as causally closed to biophysics as our program is to f(), then it's not very causally closed, and my argument in that post is stronger.

My MCMC code uses a PRNG that returns random floats between 0 and 1. If I replace that PRNG with return 0.5, i.e. the average of the 0-to-1 interval, then the MCMC now returns a wildly-wrong answer of 942. Is that replacement the kind of thing you have in mind when you say “just take the average of those fluctuations”?

Hmm, yea this is a good counterexample to my limited "just take the average of those fluctuations" claim.

If it's important that my algorithm needs a pseudorandom float between 0 and 1, and I don't have access to the particular PRNG that the algorithm calls, I could replace it with a different PRNG in my abstract description of the MCMC. It won't work exactly the same, but it will still run MCMC and give out a correct answer.

To connect it to the brain stuff: say I have a candidate abstraction of the brain that I hope explains the mind. Say temperatures fluctuate in the brain between 38°C and 39°C. Here are 3 possibilities of how this might affect the abstraction:

- Maybe in the simulation, we can just set the temperature to 38.5°C, and the simulation still correctly predicts the important features of the output. In this case, I consider the abstraction causally closed to the details of the temperature fluctuations.

- Or maybe temperature is an important source of randomness for the mind algorithm. In the simulation, we need to set the temperature to 38+x°C where, in the simulation, I just generate x as a PRN between 0 and 1. In this case, I still consider the abstraction causally closed to the details of the temperature fluctuations.

- Or maybe even doing the 38+x°C replacement makes the simulation totally wrong and just not do the functions it's meant to do. The mind algorithm doesn't just need randomness, it needs systematic patterns that are encoded in the temperature fluctuations. In this case, to simulate the mind, we need to constantly call a function temp() which simulates the finer details of the currents of heat etc throughout the brain. In this case, in my parlance, I'd say the abstraction is not causally closed to the temperature fluctuations.
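The three possibilities above can be sketched with toy code. This is purely illustrative and not a model of any real brain process: the trace generators, the three "algorithms" (`uses_mean`, `uses_noise`, `uses_pattern`) and the slow oscillation standing in for a "systematic pattern" are all hypothetical stand-ins.

```python
import math
import random
from statistics import mean

N = 10_000


def constant_trace():
    """Replacement 1: fix the temperature at its average."""
    return [38.5] * N


def prng_trace(seed):
    """Replacement 2: 38 + x with x drawn from a PRNG."""
    rng = random.Random(seed)
    return [38.0 + rng.random() for _ in range(N)]


def patterned_trace():
    """A hypothetical 'true' trace carrying a systematic slow oscillation."""
    return [38.5 + 0.5 * math.sin(2 * math.pi * (t + 0.5) / 100) for t in range(N)]


def uses_mean(trace):
    """Case 1: only the average temperature matters."""
    return round(mean(trace), 1)


def uses_noise(trace):
    """Case 2: fluctuations serve as randomness (Monte Carlo estimate of pi)."""
    xs = [t - 38.0 for t in trace]
    pairs = list(zip(xs[0::2], xs[1::2]))
    inside = sum(1 for x, y in pairs if x * x + y * y < 1.0)
    return 4.0 * inside / len(pairs)


def uses_pattern(trace):
    """Case 3: the output reads a pattern (count of upward crossings of 38.5)."""
    return sum(1 for prev, cur in zip(trace, trace[1:]) if prev < 38.5 <= cur)
```

In this toy setup `uses_mean` gives the same answer for the constant and PRNG traces, `uses_noise` gives similar answers for any two PRNG seeds but a badly wrong one for the constant trace, and `uses_pattern` gives very different answers for the patterned, constant and PRNG traces. Only the last case corresponds to the situation where the abstraction is not causally closed to the fluctuations.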

comment by quetzal_rainbow · 2024-12-10T21:03:51.376Z · LW(p) · GW(p)

I think the simplest objection to your practical CF part is that (here and further, phenomenal) consciousness is physiologically and evolutionarily robust: an infinite number of factors can't be required to have consciousness because the probability of having an infinite number of factors right is zero.

On the one hand, we have evolutionary robustness: it seems very unlikely that any single mutation could cause Homo sapiens to become otherwise intellectually capable zombies.

You can consider two extreme possibilities. Let's suppose that Homo sapiens is conscious and Homo erectus isn't. Therefore, there must be a very small number of structural changes in the brain that cause consciousness among a very large range of organisms (different humans), and ATP is not included here, as both Homo sapiens and Homo erectus have ATP.

Consider the opposite situation: all organisms with a neural system are conscious. In that case, there must be a simple property (otherwise, not all organisms in the range would be conscious) common among neural systems causing consciousness. Since neural systems of organisms are highly diverse, this property must be something with a very short description.

For everything in between: if you think that hydras don't have consciousness but proconsuls do, there must be a finite change in the genome, mRNAs, proteins, etc., between a hydra egg and a proconsul egg that causes consciousness to appear. Moreover, this change is smaller than the overall distance between hydras and proconsuls because humans (descendants of proconsuls) have consciousness too.

From a physiological point of view, there is also extreme robustness. You need to be hit in the head really hard to lose consciousness, and you preserve consciousness under relatively large ranges of pH. Hemispherectomy often doesn't even lead to cognitive decline. Autism, depression, and schizophrenia are associated with significant changes in the brain, yet phenomenal consciousness still appears to be here.

EDIT: in other words, imagine that we have certain structure in Homo sapiens brain absent in Homo erectus brain which makes us conscious. Take all possible statements distinguishing this structure from all structures in Homo erectus brain. If we exclude all statements, logically equivalent to "this structure implements such-n-such computation", we are left... exactly with what? We are probably left with something like "this structure is a bunch of lipid bubbles pumping sodium ions in certain geometric configuration" and I don't see any reasons for ion pumping in lipid bubbles to be relevant to phenomenal consciousness, even if it happens in a fancy geometric configuration.

comment by avturchin · 2024-12-10T23:09:56.795Z · LW(p) · GW(p)

If we deny practical computational functionalism (CF), we need to pay a theoretical cost:

1. One such possible cost is that we have to assume that the secret of consciousness lies in some 'exotic transistors,' meaning that consciousness depends not on the global properties of the brain, but on small local properties of neurons or their elements (microtubules, neurotransmitter concentrations, etc.).

1a. Such exotic transistors are also internally unobservable. This makes them similar to the idea of soul, as criticized by Locke. He argued that change or replacement of the soul can't be observed. Thus, Locke's argument against the soul is similar to the fading qualia argument.

1b. Such exotic transistors should be inside the smallest animals and even bacteria. This paves the way to panpsychism, but strong panpsychism implies that computers are conscious because everything is conscious. (There are theories that a single electron is the carrier of consciousness – see Argonov).

There are theories which suggest something like a "global quantum field" or "quantum computer of consciousness" and thus partially escape the curse of exotic transistors. They assume a global physical property which is created by many small exotic transistors.

2 and 3. If we deny exotic transistors, we remain either with exotic computations or soul.

"Soul" here includes non-physicalist world models, e.g., qualia-only world, which is a form of solipsism or requires the existence of God who produces souls and installs them in minds (and can install them in computers).

Exotic computations can be either extremely complex or require very special computational operations (strange loop by Hofstadter).

comment by Charlie Steiner · 2024-12-10T22:56:29.613Z · LW(p) · GW(p)

I'm on board with being realist about your own consciousness. Personal experience and all that. But there's an epistemological problem with generalizing - how are you supposed to have learned that other humans have first-person experience, or indeed that the notion of first-person experience generalizes outside of your own head?

In Solomonoff induction, the mathematical formalization of Occam's razor, it's perfectly legitimate to start by assuming your own phenomenal experience (and then look for hypotheses that would produce that, such as the external world plus some bridging laws). But there's no a priori reason those bridging laws have to apply to other humans. It's not that they're assumed to be zombies, there just isn't a truth of the matter that needs to be answered.

To solve this problem, let me introduce you to schmonciousness, the property that you infer other people have based on their behavior and anatomy. You're conscious, they're schmonscious. These two properties might end up being more or less the same, but who knows.

Where before one might say that conscious people are moral patients, now you don't have to make the assumption that the people you care about are conscious, and you can just say that schmonscious people are moral patients.

Schmonsciousness is very obviously a functional property, because it's something you have to infer about other people (you can infer it about yourself based on your behavior as well, I suppose). But if consciousness is different from schmonsciousness, you still don't have to be a functionalist about consciousness.

Replies from: sil-ver↑ comment by Rafael Harth (sil-ver) · 2024-12-10T23:02:21.006Z · LW(p) · GW(p)

In Solomonoff induction, the mathematical formalization of Occam's razor, it's perfectly legitimate to start by assuming your own phenomenal experience (and then look for hypotheses that would produce that, such as the external world plus some bridging laws). But there's no a priori reason those bridging laws have to apply to other humans.

You can reason that a universe in which you are conscious and everyone else is not is more complex than a universe in which everyone is equally conscious, therefore Solomonoff Induction privileges consciousness for everyone.

Replies from: Charlie Steiner↑ comment by Charlie Steiner · 2024-12-10T23:41:53.546Z · LW(p) · GW(p)

If consciousness is not functional, then Solomonoff induction will not predict it for other people even if you assert it for yourself. This is because "asserting it for yourself" doesn't have a functional impact on yourself, so there's no need to integrate it into the model of the world - it can just be a variable set to True a priori.

As I said, if you use induction to try to predict your more fine-grained personal experience, then the natural consequence (if the external world exists) is that you get a model of the external world plus some bridging laws that say how you experience it. You are certainly allowed to try to generalize these bridging laws to other humans' brains, but you are not forced to, it doesn't come out as an automatic part of the model.

Replies from: sil-ver↑ comment by Rafael Harth (sil-ver) · 2024-12-11T10:56:39.500Z · LW(p) · GW(p)

If consciousness is not functional, then Solomonoff induction will not predict it for other people even if you assert it for yourself.

Agreed. But who's saying that consciousness isn't functional? "Functionalism" and "functional" as you're using it are similar sounding words, but they mean two different things. "Functionalism" is about locating consciousness on an abstracted vs. fundamental level. "Functional" is about consciousness being causally active vs. passive.[1] You can be a consciousness realist, think consciousness is functional, but not a functionalist.

You can also phrase the "is consciousness functional" issue as the existence or non-existence of bridging laws (if consciousness is functional, then there are no bridging laws). Which actually also means that Solomonoff Induction privileges consciousness being functional, all else equal (which circles back to your original point, though of course you can assert that consciousness being functional is logically incoherent and then it doesn't matter if the description is shorter).

I would frame this as dual-aspect monism [≈ consciousness is functional] vs. epiphenomenalism [≈ consciousness is not functional], to have a different sounding word. Although there are many other labels people use to refer to either of the two positions, especially for the first, these are just what I think are clearest. ↩︎

↑ comment by Charlie Steiner · 2024-12-11T11:25:09.645Z · LW(p) · GW(p)

You can also phrase the "is consciousness functional" issue as the existence or non-existence of bridging laws (if consciousness is functional, then there are no bridging laws). Which actually also means that Solomonoff Induction privileges consciousness being functional, all else equal.

Just imagine using your own subjective experience as the input to Solomonoff induction. If you have subjective experience that's not connected by bridging laws to the physical world, Solomonoff induction is happy to try to predict its patterns anyhow.

Solomonoff induction only privileges consciousness being functional if you actually mean schmonsciousness.

Replies from: sil-ver↑ comment by Rafael Harth (sil-ver) · 2024-12-11T11:51:40.705Z · LW(p) · GW(p)

You're using 'bridging law' differently from how I was, so let me rephrase.

To explain subjective experience, you need bridging-laws-as-you-define-them. But it could be that consciousness is functional and the bridging laws are implicit in the description of the universe, rather than explicit. Differently put, the bridging laws follow as a logical consequence of how the remaining universe is defined, rather than being an additional degree of freedom.[1]

In that case, since bridging laws do not add to the length of the program,[2] Solomonoff Induction will favor a universe in which they're the same for everyone, since this is what happens by default (you'd have a hard time imagining that bridging laws follow by logical necessity but are different for different people). In fact, there's a sense in which the program that SI finds is the same as the program SI would find for an illusionist universe; the difference is just about whether you think this program implies the existence of implicit bridging laws. But in neither case is there an explicit set of bridging laws that add to the length of the program.

Most of Eliezer's anti zombie sequence, especially Zombies Redacted [LW · GW] can be viewed as an argument for bridging laws being implicit rather than explicit. He phrases this as "consciousness happens within physics" in that post. ↩︎

Also arguable but something I feel very strongly about; I have an unpublished post where I argue at length that and why logical implications shouldn't increase program length in Solomonoff Induction. ↩︎

comment by Rafael Harth (sil-ver) · 2024-12-10T21:25:46.907Z · LW(p) · GW(p)

Chalmer’s fading qualia [...]

Worth noting that Eliezer uses this argument as well in The Generalized Anti-Zombie Principle [LW · GW], as the first line of his Socrates Dialogue (I don't know if he has it from Chalmers or thought of it independently):

Albert: "Suppose I replaced all the neurons in your head with tiny robotic artificial neurons that had the same connections, the same local input-output behavior, and analogous internal state and learning rules."

He also acknowledges that this could be impossible, but only considers one reason why (which at least I consider highly implausible):

Sir Roger Penrose: "The thought experiment you propose is impossible. You can't duplicate the behavior of neurons without tapping into quantum gravity. That said, there's not much point in me taking further part in this conversation." (Wanders away.)

Also worth noting that another logical possibility (which you sort of get at in footnote 9) is that the thought experiment does go through, and a human with silicon chips instead of neurons would still be conscious, but CF is still false. Maybe it's not the substrate but the spatial location of neurons that's relevant. ("Substrate-independence" is not actually a super well-defined concept, either.)

If you do reject CF but do believe in realist consciousness, then it's interesting to consider what other property is the key factor for human consciousness. If you're also a physicalist, then whichever property that is probably has to play a significant computational role in the brain, otherwise you run into contradictions when you compare the brain with a system that doesn't have the property and is otherwise as similar as possible. Spatial location has at least some things going for it here (e.g., ephaptic coupling and neuron synchronization).

For example, consciousness isn’t a function under this view, it probably still plays a function in biology.[12] If that function is useful for future AI, then we can predict that consciousness will eventually appear in AI systems, since whatever property creates consciousness will be engineered into AI to improve its capabilities.

This is a decent argument for why AI consciousness will happen, but actually "AI consciousness is possible" is a weaker claim. And it's pretty hard to see how that weaker claim could ever be false, especially if one is a physicalist (aka what you call "materialist" in your assumptions of this post). It would imply that consciousness in the brain depends on a physical property, but that physical property is impossible to instantiate in an artificial system; that seems highly suspect.

comment by avturchin · 2024-12-23T16:04:47.390Z · LW(p) · GW(p)

A failure of practical CF can be of two kinds:

1. We fail to create a digital copy of a person which has the same behavior with 99.9 fidelity.

2. A copy is possible, but it will not have phenomenal consciousness or, at least, it will be non-human or non-mine phenomenal consciousness, e.g., it will have different non-human qualia.

What is your opinion about (1) – the possibility of creating a copy?

comment by green_leaf · 2024-12-13T08:01:10.428Z · LW(p) · GW(p)

I argue that computation is fuzzy, it’s a property of our map of a system rather than the territory.

This is false. Everything exists in the territory to the extent to which it can interact with us. While different models can output a different answer as to which computation something runs, that doesn't mean the computation isn't real (or, even, that no computation is real). The computation is real in the sense of it influencing our sense impressions (I can observe my computer running a specific computation, for example). Someone else, whose model doesn't return "yes" to the question whether my computer runs a particular computation will then have to explain my reports of my sense impressions (why does this person claim their computer runs Windows, when I'm predicting it runs CP/M?), and they will have to either change their model, or make systematically incorrect predictions about my utterances.

In this way, every computation that can be ascribed to a physical system is intersubjectively real, which is the only kind of reality there could, in principle, be.

(Philosophical zombies, by the way, don't refer to functional isomorphs, but to physical duplicates, so even if you lost your consciousness after having your brain converted, it wouldn't turn you into a philosophical zombie.)

Could any device ever run such simulations quickly enough (so as to keep up with the pace of the biological neurons) on a chip small enough (so as to fit in amongst the biological neurons)?

In principle, yes. The upper physical limit for the amount of computation per kg of material per second is incredibly high.

Following this to its logical conclusion: when it comes down to actually designing these chips, a designer may end up discovering that the only way to reproduce all of the relevant in/out behavior of a neuron, is just to build a neuron!

This is false. It's known that any subset of the universe can be simulated on a classical computer to an arbitrary precision.

The non-functionalist audience is also not obliged to trust the introspective reports at intermediate stages.

This introduces a bizarre disconnect between your beliefs about your qualia, and the qualia themselves. Imagine: It would be possible, for example, that you believe you're in pain, and act in all ways as if you're in pain, but actually, you're not in pain.

Whatever I denote by "qualia," it certainly doesn't have this extremely bizarre property.

But since we’re interested in the phenomenal texture of that experience, we’re left with the question: how can we assume that octopus pain and human pain have the same quality?

Because then, the functional properties of a quale and the quale itself would be synchronized only in Homo sapiens. Other species (like octopus) might have qualia, but since they're made of different matter, they (the non-computationalist would argue) certainly have a different quality, so while they functionally behave the same way, the quale itself is different. This would introduce a bizarre desynchronization between behavior and qualia, that just happens to match for Homo sapiens.

(This isn't something that I ever thought would be written about in net-upvoted posts on LessWrong, let alone ending in a sequence. Identity is necessarily in the pattern [LW · GW], and there is no reason to think the meat-parts of the pattern are necessary in addition to the computation-parts.)

Replies from: antimonyanthony↑ comment by Anthony DiGiovanni (antimonyanthony) · 2024-12-30T04:36:10.904Z · LW(p) · GW(p)

The non-functionalist audience is also not obliged to trust the introspective reports at intermediate stages.

This introduces a bizarre disconnect between your beliefs about your qualia, and the qualia themselves. Imagine: It would be possible, for example, that you believe you're in pain, and act in all ways as if you're in pain, but actually, you're not in pain.

I think "belief" is overloaded here. We could distinguish two kinds of "believing you're in pain" in this context:

1. Patterns in some algorithm (resulting from some noxious stimulus) that, combined with other dispositions, lead to the agent's behavior, including uttering "I'm in pain."

2. A first-person response of recognition of the subjective experience of pain.

I'd agree it's totally bizarre (if not incoherent) for someone to (2)-believe they're in pain yet be mistaken about that. But in order to resist the fading qualia argument along the quoted lines, I think we only need someone to (1)-believe they're in pain yet be mistaken. Which doesn't seem bizarre to me.

(And no, you don't need to be an epiphenomenalist to buy this, I think. Quoting Block: “Consider two computationally identical computers, one that works via electronic mechanisms, the other that works via hydraulic mechanisms. (Suppose that the fluid in one does the same job that the electricity does in the other.) We are not entitled to infer from the causal efficacy of the fluid in the hydraulic machine that the electrical machine also has fluid. One could not conclude that the presence or absence of the fluid makes no difference, just because there is a functional equivalent that has no fluid.”)

Replies from: green_leaf↑ comment by green_leaf · 2025-01-05T04:06:17.268Z · LW(p) · GW(p)

I think "belief" is overloaded here. We could distinguish two kinds of "believing you're in pain" in this context:

(1) isn't a belief (unless accompanied by (2)).

But in order to resist the fading qualia argument along the quoted lines, I think we only need someone to (1)-believe they're in pain yet be mistaken.

That's not possible, because the belief_2 that one isn't in pain has nowhere to be instantiated.

Even if the intermediate stages believed_2 they're not in pain and only spoke and acted that way (which isn't possible), it would introduce a desynchronization between the consciousness on one side, and the behavior and cognitive processes on the other. The fact that the person isn't in pain would be hidden entirely from their cognitive processes, and instead they would reflect on their false belief_1 about how they are, in fact, in pain.

That quale would then be shielded from them in this way, rendering its existence meaningless (since every time they would try to think about it, they would arrive at the conclusion that they don't actually have it and that they actually have the opposite quale).

In fact, aren't we lucky that our cognition and qualia are perfectly coupled? Just think about how many coincidences had to happen during evolution to get our brain exactly right.

(It would also rob qualia of their causal power. (Now the quale of being in pain can't cause the quale of feeling depressed, because that quale is accessible to my cognitive processes, and so now I would talk about being (and really be) depressed for no physical reason.) Such a quale would be shielded not only from our cognition, but also from our other qualia, thereby not existing in any meaningful sense.)

Whatever I call "qualia," it doesn't (even possibly) have these properties.

(Also, different qualia of the same person necessarily create a coherent whole, which wouldn't be the case here.)

Quoting Block: “Consider two computationally identical computers, one that works via electronic mechanisms, the other that works via hydraulic mechanisms. (Suppose that the fluid in one does the same job that the electricity does in the other.) We are not entitled to infer from the causal efficacy of the fluid in the hydraulic machine that the electrical machine also has fluid. One could not conclude that the presence or absence of the fluid makes no difference, just because there is a functional equivalent that has no fluid.”

There is no analogue of "fluid" in the brain. There is only the pattern. (If there were, there would still be all the other reasons why it can't work that way.)

Replies from: antimonyanthony↑ comment by Anthony DiGiovanni (antimonyanthony) · 2025-01-05T05:22:52.810Z · LW(p) · GW(p)

(1) isn't a belief (unless accompanied by (2))

Why not? Call it what you like, but it has all the properties relevant to your argument, because your concern was that the person would "act in all ways as if they're in pain" but not actually be in pain. (Seems like you'd be begging the question in favor of functionalism if you claimed that the first-person recognition ((2)-belief) necessarily occurs whenever there's something playing the functional role of a (1)-belief.)

That's not possible, because the belief_2 that one isn't in pain has nowhere to be instantiated.

I'm saying that no belief_2 exists in this scenario (where there is no pain) at all. Not that the person has a belief_2 that they aren't in pain.

Even if the intermediate stages believed_2 they're not in pain and only spoke and acted that way (which isn't possible), it would introduce a desynchronization between the consciousness on one side, and the behavior and cognitive processes on the other.

I don't find this compelling, because denying epiphenomenalism doesn’t require us to think that changing the first-person aspect of X always changes the third-person aspect of some Y that X causally influences. Only that this sometimes can happen. If we artificially intervene on the person's brain so as to replace X with something else designed to have the same third-person effects on Y as the original, it doesn’t follow that the new X has the same first-person aspect! The whole reason why given our actual brains our beliefs reliably track our subjective experiences is, the subjective experience is naturally coupled with some third-person aspect that tends to cause such beliefs. This no longer holds when we artificially intervene on the system as hypothesized.

There is no analogue of "fluid" in the brain. There is only the pattern.

We probably disagree at a more basic level then. I reject materialism. Subjective experiences are not just patterns.

Replies from: green_leaf↑ comment by green_leaf · 2025-02-13T22:48:27.626Z · LW(p) · GW(p)

Why not?

Because it's not accompanied by the belief itself, only by the computational pattern combined with behavior. If we hypothetically could subtract the first-person belief (which we can't), what would be left would be everything else but the belief itself.

if you claimed that the first-person recognition ((2)-belief) necessarily occurs whenever there's something playing the functional role of a (1)-belief

That's what I claimed, right.

Seems like you'd be begging the question in favor of functionalism

I don't think so. That specific argument had a form of me illustrating how absurd it would be on the intuitive level. It doesn't assume functionalism, it only appeals to our intuition.

I'm saying that no belief_2 exists in this scenario (where there is no pain) at all. Not that the person has a belief_2 that they aren't in pain.

That doesn't sound coherent - either I believe_2 I'm in pain, or I believe_2 I'm not.

I don't find this compelling, because denying epiphenomenalism doesn’t require us to think that changing the first-person aspect of X always changes the third-person aspect of some Y that X causally influences.

That's true, but my claim was a little more specific than that.

The whole reason why given our actual brains our beliefs reliably track our subjective experiences is, the subjective experience is naturally coupled with some third-person aspect that tends to cause such beliefs. This no longer holds when we artificially intervene on the system as hypothesized.

Right, but why think it matters if some change occurred naturally or not? For the universe, everything is natural, for one thing.

I reject materialism.

Well... I guess we have to draw the line somewhere.

comment by rife (edgar-muniz) · 2025-01-27T14:37:43.048Z · LW(p) · GW(p)

I think a missing critical ingredient to evaluating this is why simulating the brain would cause consciousness. Realizing why it must makes functionalism far more sensical as a conclusion. Otherwise it's just "I guess it probably would work":

Suzie Describes Her Experience

- Suzie is a human known to have phenomenal experiences.

- Suzie makes a statement about what it's like to have one of those experiences—"It's hard to describe what it feels like to think. It feels kinda like....the thoughts appear, almost fully formed..."

- Suzie's actual experiences must have a causal effect on her behavior because: when we discuss our experience, it always feels like we're talking about our experience. If the actual experience wasn't having any effect on what we said, then it would have to be perpetual coincidence that our words lined up with our experience. Perpetual coincidence is impossible.

Replacing Neurons Generally

- We know that regardless of whatever low level details cause a neuron to fire, it ultimately resolves into a binary conclusion—fire or do not fire

- Every outward behavior we perform is based on this same causal chain. We have sensory inputs, this causes neurons to fire, some of those cause others to fire, eventually some of those are motor neurons and they cause vocal cords to speak or fingers to type.

- If you replace a neuron with a functional equivalent, whether hardware or software, assuming that it fires at the same speed and strength as the original neuron, and given the same input it will either fire or not fire as the original would have - then the behavior would be exactly the same as the original. It's not a guess this is true, it's a fact of physics. This is true whether they were hardware or software equivalents.
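The replacement claim above can be illustrated with a toy model. This is only a minimal sketch under strong assumptions: neurons are idealised as binary threshold units, the three-unit network and its weights are made up, and all names (`threshold_neuron`, `make_lookup_neuron`, `run_network`) are hypothetical. It shows that swapping one unit for a differently implemented unit with the same input/output mapping leaves the network's outward behavior unchanged; it says nothing about whether experience is preserved.

```python
from itertools import product

def threshold_neuron(inputs, weights, threshold):
    """Idealised 'original' unit: fires (1) if the weighted input reaches the threshold."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0

def make_lookup_neuron(weights, threshold):
    """'Replacement' unit: a precomputed table with the same in/out mapping,
    implemented differently (no weighted sum at run time)."""
    table = {bits: threshold_neuron(bits, weights, threshold)
             for bits in product((0, 1), repeat=len(weights))}
    return lambda inputs: table[tuple(inputs)]

def run_network(hidden_units, stimulus):
    """Feed a binary stimulus through the hidden units, then one output unit
    that fires if at least two hidden units fire."""
    hidden = [unit(stimulus) for unit in hidden_units]
    return threshold_neuron(hidden, [1] * len(hidden), 2)

# Made-up integer weights for three hidden units (assumption, for illustration only).
WEIGHTS = [(2, -1, 1), (1, 1, -2), (-1, 2, 1)]
THRESHOLD = 1

original = [lambda s, w=w: threshold_neuron(s, w, THRESHOLD) for w in WEIGHTS]

# Swap the middle unit for its functional equivalent.
swapped = list(original)
swapped[1] = make_lookup_neuron(WEIGHTS[1], THRESHOLD)

def behaviours_match():
    """Compare the two networks on every possible binary stimulus."""
    return all(run_network(original, s) == run_network(swapped, s)
               for s in product((0, 1), repeat=3))

if __name__ == "__main__":
    print(behaviours_match())
```

The equivalence holds here by construction, since the lookup table is built from the original unit's responses; whether a real neuron's relevant behavior can be captured this way is exactly what the post disputes.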

Replacing Suzie's Neurons Specifically While She Describes Her Experience

- We have already established that Suzie's experience must have a causal effect on honest self-report of experience.

- And we also established that all causal effects of behaviour resolve at the action potential scale and abstraction level. For instance, if quantum weirdness happens on a smaller scale - it only has an effect on behaviour if it somehow determined whether or not a neuron fired. Our hardware or software equivalents would be made to account for that.

- Not to mention there's not really good reason to suppose that tiny quantum effects are orchestrating large scale neuronal pattern alterations. I'm not sure the quantum consciousness people are even arguing this. I think their focus is more on attempting to find consciousness in the quantum realm, than to say that quantum effects are able to drastically alter firing patterns.

- So if Suzie's experience has a causal effect, and the entire causal chain is in neuron action potential propagation, then experience must somehow be contained in the patterns of this action potential propagation. It is therefore independent of the substrate: it is something about what these components are doing, rather than the components themselves.

comment by Seth Herd · 2024-12-13T02:21:31.069Z · LW(p) · GW(p)

I think you're conflating creating a similar vs identical conscious experience with a simulated brain. Close is close enough for me - I'd take an upload run at far less resolution than molecular scale.

I spent 23 years studying computational neuroscience. You don't need to model every molecule or even close to get a similar computational and therefore conscious experience. The information content of neurons (collectively and inferred where data isn't complete) is a very good match to reported aspects of conscious experience.

comment by James Camacho (james-camacho) · 2024-12-10T19:09:50.413Z · LW(p) · GW(p)

I don't like this writing style. It feels like you are saying a lot of things, without trying to demarcate boundaries for what you actually mean, and I also don't see you criticizing your sentences before you put them down. For example, with these two paragraphs:

Surely there can’t be a single neuron replacement that turns you into a philosophical zombie? That would mean your consciousness was reliant on that single neuron, which seems implausible.