[Intuitive self-models] 2. Conscious Awareness

post by Steven Byrnes (steve2152) · 2024-09-25T13:29:02.820Z

2.1 Post summary / Table of contents

Part of the Intuitive Self-Models series.

The previous post laid some groundwork for talking about intuitive self-models. Now we’re jumping right into the deep end: the intuitive concept of “conscious awareness” (or “awareness” for short). Some argue (§1.6.2) that if we can fully understand why we have an “awareness” concept, then we will thereby understand phenomenal consciousness itself! Alas, “phenomenal consciousness itself” is outside the scope of this series—again see §1.6.2. Regardless, the “awareness” concept is centrally important to how we conceptualize our own mental worlds, and well worth understanding for its own sake.

In one sense, “awareness” is nothing special: it’s an intuitive concept, built like any other intuitive concept. I can think the thought “a squirrel is in my conscious awareness”, just as I can think the thought “a squirrel is in my glove compartment”.

But in a different sense, “awareness” feels a bit enigmatic. The “glove compartment” concept is a veridical model (§1.3.2) of a tangible thing in a car. Whereas the “awareness” concept is a veridical model of … what exactly, if anything?

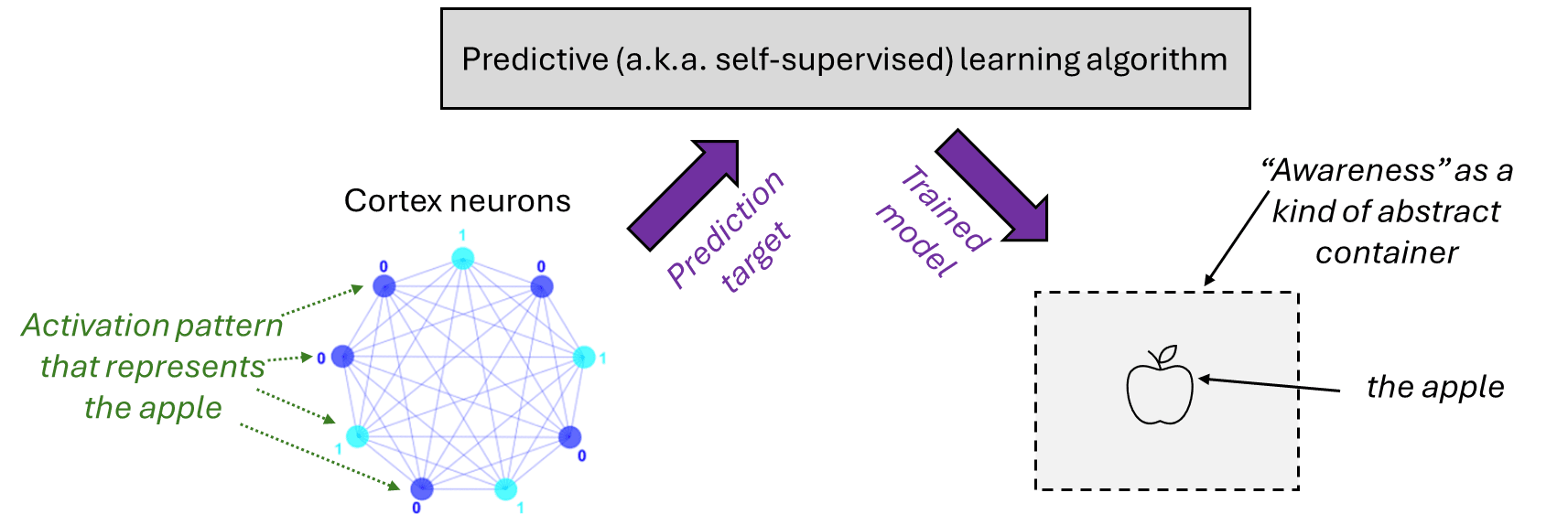

I have an answer! The short version of my hypothesis is: The brain algorithm involves the cortex, which has a limited computational capacity that gets deployed serially—you can’t both read health insurance documentation and ponder the meaning of life at the very same moment.[1] When this aspect of the brain algorithm is itself incorporated into a generative model via predictive (a.k.a. self-supervised) learning, it winds up represented as an “awareness” concept, which functions as a kind of abstract container that can hold any other mental concept(s) in it.

- In Section 2.2, I flesh out that hypothesis above. And relatedly, I introduce an important piece of terminology that I’ll be using throughout the series: For any concept X, I define “S(X)” (“X in a self-reflective frame”) to be the intuitive model wherein X is contained within the “awareness” abstract container.

- Section 2.3 talks about how awareness unfolds in time as a “stream of consciousness”—an area where our intuitions strikingly depart from reality, once we zoom into sub-second timescales.

- Section 2.4 covers memory and how it connects to awareness—both in reality and in our intuitive models.

- Section 2.5 talks about the valence of those S(X) thoughts. I’ll show that this valence is influenced not only by the object-level X being directly motivating, but also by X being associated with “my best self”—the things that fit well with my social image and an appealing narrative of my life. Note the suspicious convergence between the valence of S(X), and the things that we do “intentionally” versus “impulsively”. That brings us to…

- Section 2.6, where I develop the connection between “awareness”, intentions, and decisions. In particular, I consider the special case of S(A), where A is an action concept like “say hi” or “think about the Roman Empire”—and not just the sensory and semantic consequences of that action (e.g. the idea of saying hi), but the attention-control and/or motor-control outputs that would make that action actually happen. In this case, I’ll suggest that we intuitively interpret S(A) as an intention to do A. And if this S(A) is immediately followed by the execution of A itself (as is often the case), then we call A an intentional action—as opposed to an action which is spontaneous, reflexive, instinctive, “blurted out”, etc. As an application of this idea, I’ll explain “illusions of intentionality”.

That’s still not the whole story of intentions and decisions—it’s missing the critical ingredient of an intuitive agent that actively causes the decisions. That turns out to be a whole giant can of worms, which we’ll tackle in Post 3.

Prior work: From my perspective, my main hypothesis (§2.2) should be “obvious” if you’re familiar with “Global Workspace Theory”[2] and/or “Attention Schema Theory”—and indeed I found Michael Graziano’s Rethinking Consciousness (2019) to be extremely helpful for clarifying my thinking.[3] Graziano & I have some differences though.[4] Also, §2.3 partly follows chapter 5 of Daniel Dennett’s Consciousness Explained (1991). Once we get into §2.5–§2.6 and the whole rest of the series, I mostly felt like I was figuring things out from scratch—but please let me know if you’ve seen relevant prior literature!

2.2 The “awareness” concept

2.2.1 The cortex has a finite computational capacity that gets deployed serially

What do I mean by that heading? Here are a few different ways to put it:

- If I’m thinking about calling the plumber, then the various parts of my cortex are busily tracking the various aspects and associations of calling the plumber. If I’m thinking about going to the zoo, then the various parts of my cortex are busily tracking the various aspects and associations of going to the zoo. The cortex is unable to do both those things simultaneously.

- Think of a system with “attractor dynamics”, like a Hopfield net or Boltzmann machine. It can’t activate two different stored patterns simultaneously. I think the cortex has a vaguely similar property.

- In terms of the §1.2 discussion, the cortex does probabilistic inference, always homing in on the best generative model. But the way that works entails a rather limited ability to activate and query multiple possible generative models simultaneously. Instead, most of the time, in most of the area of the cortex, I claim there’s mainly just one active generative model, corresponding to a maximum a posteriori (MAP) estimate.
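To make the attractor-dynamics analogy from the second bullet concrete, here is a toy Hopfield network in Python. It illustrates only that one property of Hopfield nets, not the cortex: the 8-unit patterns, their labels, the starting state, and the fixed update order are all made up for the example. The network starts from a state that overlaps equally with both stored patterns, and it settles into one stored pattern rather than a blend of the two.

```python
# Toy Hopfield network: two stored patterns, one "blended" starting state.
# Units are updated one at a time in a fixed order, so the run is deterministic.

def train(patterns):
    """Hebbian weights W[i][j] = sum over patterns of p[i]*p[j], zero diagonal."""
    n = len(patterns[0])
    w = [[0] * n for _ in range(n)]
    for p in patterns:
        for i in range(n):
            for j in range(n):
                if i != j:
                    w[i][j] += p[i] * p[j]
    return w


def settle(w, state, max_sweeps=100):
    """Asynchronous updates until a full sweep changes nothing."""
    s = list(state)
    for _ in range(max_sweeps):
        changed = False
        for i in range(len(s)):
            field = sum(w[i][j] * s[j] for j in range(len(s)))
            new = 1 if field >= 0 else -1
            if new != s[i]:
                s[i] = new
                changed = True
        if not changed:
            break
    return s


def overlap(a, b):
    """Dot product: 8 means identical, 0 means unrelated (orthogonal)."""
    return sum(x * y for x, y in zip(a, b))


p1 = [1, 1, 1, 1, -1, -1, -1, -1]   # hypothetical "calling the plumber"
p2 = [1, 1, -1, -1, 1, 1, -1, -1]   # hypothetical "going to the zoo"
w = train([p1, p2])

# Start halfway between the two memories: equal overlap with each.
start = [1, 1, 1, 1, 1, 1, -1, -1]
final = settle(w, start)
print(overlap(start, p1), overlap(start, p2))  # 4 4
print(final == p2, overlap(final, p1))         # True 0
```

Which pattern wins here is decided by the arbitrary update order; the point is only that the settled state is one stored pattern, not both at once.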

2.2.2 Predictive learning represents that algorithmic property via a kind of abstract container called “awareness”

If I say, “This apple is on my mind”, that’s a self-reflective thought. It involves a concept I’m calling “awareness”, and also the concept of “this apple”, and those two concepts are connected by a kind of container-containee relationship.

And I claim that this thought is modeling a situation where the cortex[2] is, at some particular moment, using its finite computational capacity to process my intuitive model of this apple.

More generally:

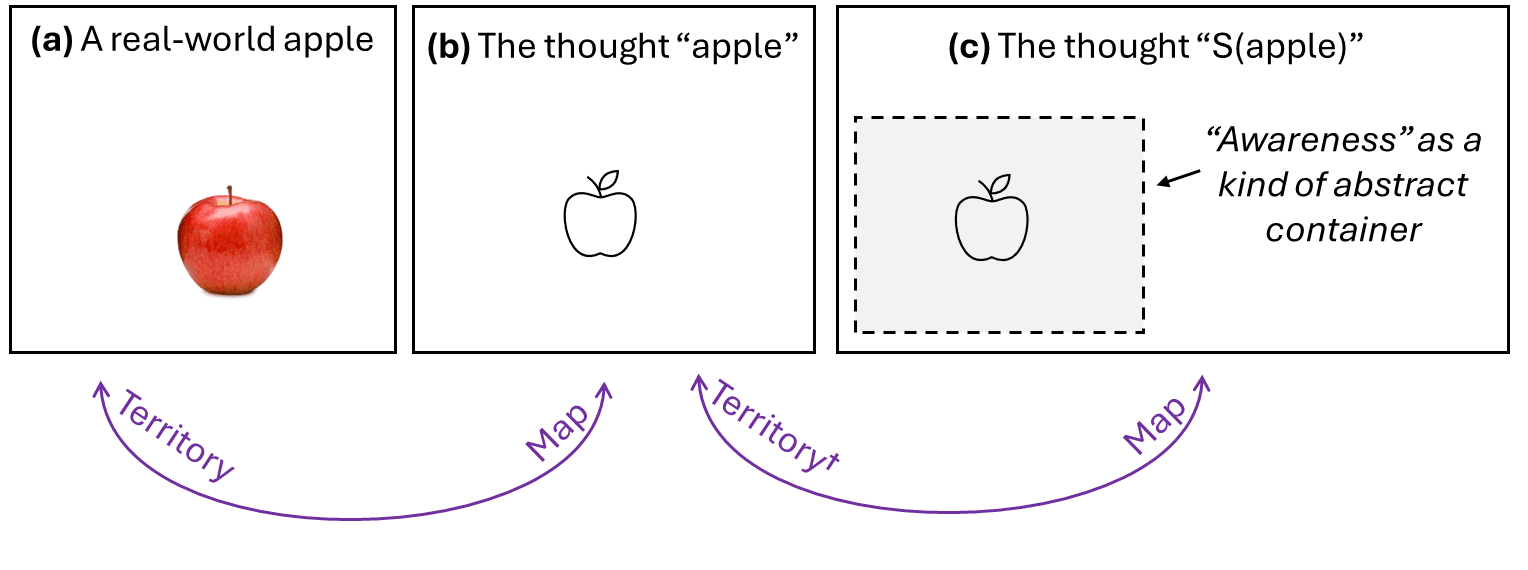

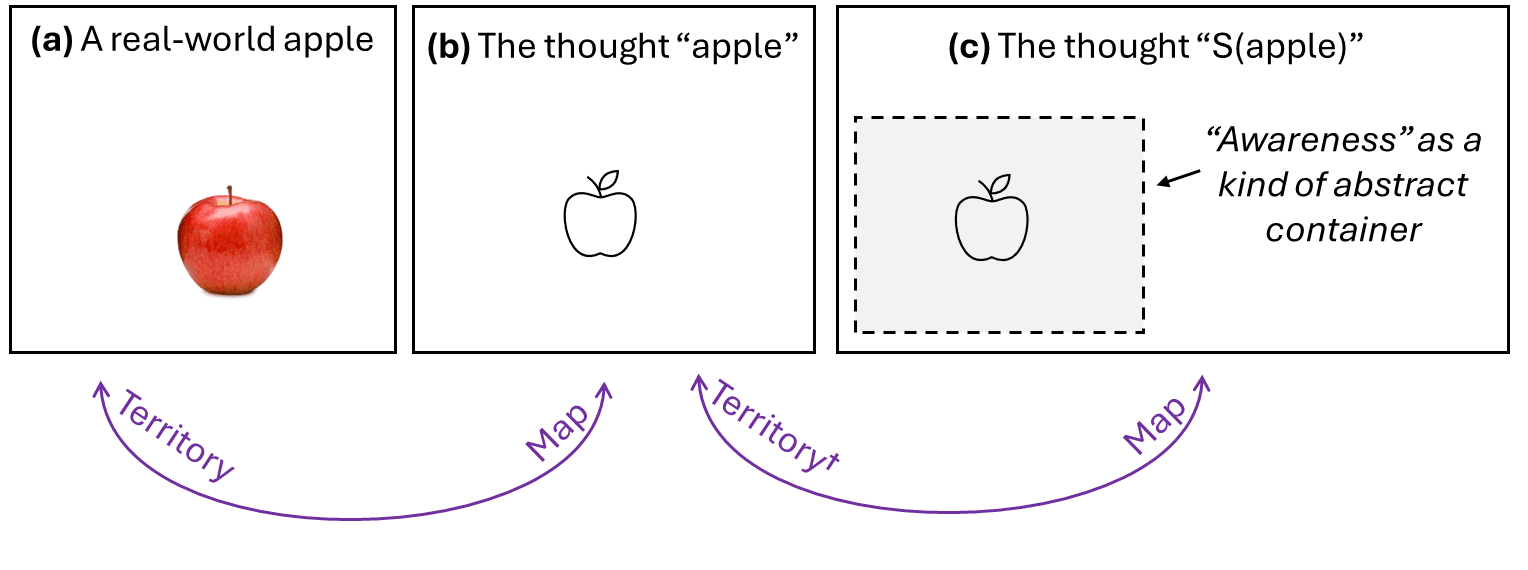

- In the intuitive self-model (“map”), any possible thought can be in “awareness” at any given time, but only one thought can be there at a time.

- Correspondingly, in the actual brain algorithm (“territory”), any possible thought can be represented by the cortex (by definition), but only one thought can be there at a time (to a first approximation, see §2.3 below).

So there’s a map-territory correspondence: awareness is a (somewhat) veridical (§1.3.2) model of this particular aspect of the brain algorithm.

2.2.3 “S(apple)”, defined as the self-reflective thought “apple being in awareness”, is different from the object-level thought “apple”

Astute readers might be wondering: if the “awareness” concept can itself be part of an intuitive model active in the cortex, then wouldn’t the thought “the apple is in awareness right now” be self-contradictory?

After all, the thing you’re thinking “right now” would be “the apple is in awareness right now”, rather than just “the apple” itself, right?

Yes! In order to think the former thought, you would have to stop thinking of just “the apple” itself, and flip to a different thought, where there’s a frame (in the sense of “frame semantics” in linguistics or “frame languages” in GOFAI) involving the “awareness” concept and the “apple” concept, interconnected by a container-containee relationship.

For various purposes later on, it will be nice to have a shorthand. So S(apple) (read: apple in a self-reflective frame) will denote the apple-is-in-awareness thought. It's “self-reflective” in the sense that it involves “awareness”, which is part of the intuitive self-model.
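A minimal sketch of this notation, assuming we represent thoughts as plain Python data. The class names `Concept`, `InAwareness`, and `Cortex` are hypothetical illustrations. `InAwareness` plays the role of the frame described above, and the one-slot `Cortex` stands in for the serial-capacity property from §2.2.1. The sketch shows that S(apple) is a different thought from apple, and that thinking S(apple) displaces apple.

```python
from dataclasses import dataclass
from typing import Optional, Union


@dataclass(frozen=True)
class Concept:
    """An object-level concept, e.g. "apple"."""
    name: str


@dataclass(frozen=True)
class InAwareness:
    """Frame: the 'awareness' container holding some other thought."""
    content: "Thought"


Thought = Union[Concept, InAwareness]


def S(x: Thought) -> InAwareness:
    """S(X): X placed inside the 'awareness' container."""
    return InAwareness(x)


class Cortex:
    """Holds at most one active thought at a time (first approximation)."""

    def __init__(self) -> None:
        self.active: Optional[Thought] = None

    def think(self, thought: Thought) -> None:
        self.active = thought  # the new thought replaces the old one


apple = Concept("apple")
cortex = Cortex()
cortex.think(apple)
cortex.think(S(apple))    # to think S(apple), you stop thinking just "apple"
print(cortex.active)      # InAwareness(content=Concept(name='apple'))
print(S(apple) == apple)  # False
```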

2.3 Awareness over time: The “Stream of Consciousness”

[Optional bonus section! You can skip §2.3, and still be able to follow the rest of the series.]

Here’s an aspect of the intuitive “awareness” concept that does not veridically correspond to the algorithmic phenomenon that it’s modeling. Daniel Dennett makes a big deal out of this topic in Consciousness Explained (1991), because it was important for his thesis to find aspects of our intuitive “awareness” concept that are not veridical, and this one seems reasonably clear-cut.

As background, there are various situations where, for events that unfold over the course of some fraction of a second, later sensory inputs are taken into account in how you remember experiencing earlier sensory inputs. Dennett uses an obscure psychology result called the “color phi phenomenon” as his main case study, but the phenomenon is quite common, so I’ll use a more everyday example: hearing someone talk.

I’ll start from the computational picture. As discussed in §1.2, your cortex is (either literally or effectively) searching through its space of generative models for one that matches input data and other constraints, via probabilistic inference. Some generative models, like a model that predicts the sound of a word, are extended in time, and therefore the associated probabilistic inference has to be extended in time as well.

So suppose somebody says the word “smile” to me over the course of 0.4 seconds. The actual moment-by-moment activation of my cortex algorithm might look like:

- From t=0 to t=0.15 seconds, there’s a sound that I can’t yet make out—a number of different incompatible generative models are simultaneously weakly active. There just hasn’t been enough sound yet to make any sense of it.

- By t=0.3 seconds, the generative model “smile” has won the competition, becoming the active model (posterior).

…But interestingly, if you then immediately ask me what I was experiencing just now, I won’t describe it as above. Instead I’ll say that I was hearing “sm-” at t=0 and “-mi” at t=0.2 and “-ile” at t=0.4. In other words, I’ll recall it in terms of the time-course of the generative model that ultimately turned out to be the best explanation.

So with that as background, here’s how someone might intuitively describe their awareness over time:

Statement: When I’m watching and paying attention to something, I’m constantly aware of it as it happens, moment-by-moment. I might not always remember things perfectly, but there’s a fact of the matter of what I was actually experiencing at any given time.

Intuitive model underlying that statement: Within our intuitive models, there’s an “awareness” concept / frame as above, and at any given moment it has some content in it, related to the current sensory input, memories, thoughts, or whatever else we’re paying attention to. The flow of this content through time constitutes a kind of movie, which we might call the “stream of consciousness”. The things that “I” have “experienced” are exactly the things that were frames of that movie. The movie unfolds through time, although it’s possible that I’ll misremember some aspect of it after the fact.

What’s really happening, and is the model veridical in this respect? In the above example of hearing the word “smile”, there was no moment when the beginning part of the word was the active part of the active generative model. When “smi-” was entering our brain, the “smile” generative model was not yet strongly activated—that happened slightly later. But it doesn’t seem to be that way subjectively—we remember hearing the whole word as the beginning, middle, and end of “smile”. So,

Question: was the beginning part of hearing the word “smile” actually “experienced”?

Answer: That question is incoherent, because this is an area where the intuitive model above is not veridical.

Specifically, in the brain algorithm in question, there are two history streams we can talk about:

- One history stream is related to the moment-by-moment state of the algorithmic processing—at such-and-such moment, which generative models were active in the cortex, and how active were they?

- The other history stream is the best-guess time-course of what’s happening, stitched together by probabilistic inference, routinely taking advantage of (a fraction of a second of) hindsight.
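The two history streams can be made concrete with a toy Bayesian filter in Python. Everything in it is a made-up illustration: three candidate words, three time slices matching the t=0, 0.2, 0.4 timestamps above, and invented likelihoods. Stream 1 is the posterior that actually existed at each moment; stream 2 is the time course of whichever model won, reconstructed after the fact.

```python
# Hypothetical candidate words, each with its own segmentation of the sound.
HYPOTHESES = {
    "smile": ["sm-", "-i-", "-le"],
    "small": ["sm-", "-a-", "-ll"],
    "smith": ["sm-", "-i-", "-th"],
}
TIMES = [0.0, 0.2, 0.4]

# Invented likelihoods P(sound heard in this time slice | hypothesis).
LIKELIHOOD = {
    "smile": [0.9, 0.8, 0.9],
    "small": [0.9, 0.1, 0.5],
    "smith": [0.9, 0.8, 0.05],
}


def online_stream():
    """Stream 1: the posterior as it actually stood at each moment."""
    post = {h: 1 / len(HYPOTHESES) for h in HYPOTHESES}  # uniform prior
    history = []
    for t in range(len(TIMES)):
        post = {h: p * LIKELIHOOD[h][t] for h, p in post.items()}
        total = sum(post.values())
        post = {h: p / total for h, p in post.items()}
        history.append(dict(post))
    return history


def retrospective_stream(history):
    """Stream 2: the winning model's own time course, stitched in hindsight."""
    final = history[-1]
    winner = max(final, key=final.get)
    return list(zip(TIMES, HYPOTHESES[winner]))


history = online_stream()
for t, post in zip(TIMES, history):
    print(t, {h: round(p, 2) for h, p in post.items()})
print(retrospective_stream(history))  # [(0.0, 'sm-'), (0.2, '-i-'), (0.4, '-le')]
```

In this toy, all three words are tied at t=0.0, and “smile” and “smith” are still tied at t=0.2; nevertheless the retrospective stream confidently labels t=0.0 as the start of “smile”.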

The “stream-of-consciousness” intuitive model smushes these together into the same thing—just one history stream, labeled “what I was experiencing at that moment”.

That smushing-together is an excellent approximation on a multi-second timescale, but inaccurate if you zoom into what’s happening at sub-second timescales.

So a question like “what was I really experiencing at t=0.1 seconds” doesn’t seem answerable—it’s a question about the “map” (intuitive model) that doesn’t correspond to any well-defined question about the “territory” (the algorithms that the intuitive model was designed to model). Or equivalently, it corresponds equally well to two different questions about the territory, with two different answers, and there’s just no fact of the matter about which is the real answer.

Anyway, the intuitive model, with just one history stream instead of two, is much simpler, while still being perfectly adequate to play the role that it plays in generating predictions (see §1.4). So it’s no surprise that this is the generative model built by the predictive learning algorithm. Indeed, the fact that this aspect of the model is not perfectly veridical is something that basically never comes up in normal life.

2.4 Relation between “awareness” and memory

2.4.1 Intuitive model of memory as a storage archive

Statement: “I remember going to Chicago”

Intuitive model: Long-term memory in general, and autobiographical long-term memory in particular, is some kind of storage archive. Things can get pulled from that archive into the “awareness” abstract container. And there are memories of myself-in-Chicago stored in that box, which can be retrieved deliberately or by random association.

What’s really happening? There’s some brain system (mainly the hippocampus, I think) that stores episodic memories. The memories can get triggered by pattern-matching (a.k.a. “autoassociative memory”), and then the memory and its various associations can activate all around the cortex.

Is the model veridical? Yeah, pretty much. As above, it’s not a veridical model of your brain as a hunk of meat in 3D space, but it is a reasonably veridical model of an aspect of the algorithm that your brain is running.

2.4.2 Intuitive connection between memory and awareness

Statement: “An intimate part of my awareness is its tie to long-term memory. If you show me a video of me going scuba diving this morning, and I absolutely have no memory whatsoever of it, and you can prove that the video is real, well I mean, I don't know what to say, I must have been unconscious or something!”[5]

Intuitive model: Whatever happens in “awareness” also gets automatically cataloged in the memory storage archive—at least the important stuff, and at least temporarily. And that’s all that’s in the memory storage archive. The memory storage archive just is a (very lossy) history of what’s been in awareness. This connection is deeply integrated into the intuitive model, such that imagining something in memory that was never in awareness, or conversely imagining that there was recently something very exciting and unusual in awareness but that it’s absent from memory, seems like a contradiction, demanding some exotic explanation like “I wasn’t really conscious”.

Is the model veridical in this respect? Yup, I think this aspect of the intuitive model is veridically capturing the relation between cortex and episodic memory storage within the (normally-functioning) brain algorithm.
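Taken together, §2.4.1 and §2.4.2 describe a simple data structure: an archive fed only by awareness, lossy, and searched by partial cues. The following Python is a toy of that intuitive model, not of the hippocampus; `MemoryArchive`, the importance threshold, and the feature sets are all hypothetical. Cue-based retrieval here is a crude stand-in for the pattern-matching (“autoassociative”) retrieval mentioned in §2.4.1.

```python
class MemoryArchive:
    """Toy intuitive model: a lossy log of what passed through awareness."""

    def __init__(self, importance_threshold=0.5):
        self.threshold = importance_threshold
        self.episodes = []  # each episode is a frozenset of features

    def log_awareness(self, features, importance):
        """Only 'important enough' moments of awareness get stored (lossy)."""
        if importance >= self.threshold:
            self.episodes.append(frozenset(features))

    def recall(self, cue):
        """Return the stored episode sharing the most features with the cue."""
        best = max(self.episodes, key=lambda ep: len(ep & cue), default=None)
        if best is None or not best & cue:
            return None  # nothing in the archive matches this cue
        return best


archive = MemoryArchive()
archive.log_awareness({"me", "Chicago", "deep dish", "rain"}, importance=0.9)
archive.log_awareness({"me", "commute", "traffic"}, importance=0.1)  # dropped
print(archive.recall({"Chicago"}))  # the whole Chicago episode, from one cue
print(archive.recall({"traffic"}))  # None: never stored, so nothing to recall
```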

2.5 The valence of S(X) thoughts

We have lots of self-reflective thoughts—i.e., thoughts that involve components of the intuitive self-model—such as S(Christmas presents) = the self-reflective idea that Christmas presents are on my mind (see §2.2.3 above). And those thoughts have valence, just like any other thought. Let’s explore that idea and its consequences.

(Warning: I’m using the term “valence” in a specific and idiosyncratic way—see my Valence series.)

The starting question is: What controls the valence of an S(X) model?

Well, it’s the same as anything else—see “How does valence get set and adjusted?”. One thing that can happen is that S(X) might directly trigger an innate drive, which injects positive or negative valence as a kind of ground truth. Another thing that can happen is: S(X) might have a strong association with / implication of some other thought / concept C. In that case, we’ll often think of S(X), then think of C, back and forth in rapid succession. And then by TD learning, some of the valence of C will splash onto S(X) (and vice-versa).
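A minimal sketch of that splashing, assuming we can treat valence as the value estimate in ordinary tabular TD(0) learning, which simplifies the post’s own usage of “valence”. Two thoughts alternate back and forth; only C gets a direct signal from an innate drive (the hypothetical `REWARD` table), and the learning rate and discount are arbitrary choices.

```python
# Toy TD(0): valence (treated here as a TD value) flows between two thoughts
# that keep following each other. Only C gets a direct innate-drive signal.

ALPHA = 0.1  # learning rate (arbitrary)
GAMMA = 0.5  # discount (arbitrary)
REWARD = {"S(X)": 0.0, "C": 1.0}  # innate-drive signal while each thought is active

value = {"S(X)": 0.0, "C": 0.0}
sequence = ["S(X)", "C"] * 2500  # S(X), C, S(X), C, ... back and forth

for current, nxt in zip(sequence, sequence[1:]):
    td_error = REWARD[current] + GAMMA * value[nxt] - value[current]
    value[current] += ALPHA * td_error

print({k: round(v, 3) for k, v in value.items()})  # {'S(X)': 0.667, 'C': 1.333}
```

At convergence V(S(X)) = γ·V(C), so S(X) inherits a discounted share of C’s valence even though it never receives a direct signal; C’s value is in turn raised above its own direct signal by its link back to S(X), which is the “vice-versa”.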

That latter dynamic—valence flowing through salient associations—turns out to have some important implications as I’ll discuss next (and more on that in Post 8).

2.5.1 Positive-valence S(X) models often go with “what my best self would do” (other things equal)

Notice how S(⋯) thoughts are “self-reflective”, in the sense that they involve me and my mind, and not just things in the outside world. This is important because it leads to S(⋯) having strong salient associations with other thoughts C that are also self-reflective. After all, if a self-reflective thought is in your head right now, then it’s much likelier for other self-reflective thoughts to pop into your head immediately afterwards.

As a consequence, here are two common examples of factors that influence the valence of S(X):

- How does S(X) fit in with my social image? A self-reflective thought like S(homework) ≈ “I’m focusing on my homework” has the salient implication “Other people might know that I’m focusing on my homework”, which in turn might be motivating or demotivating.

- Cf. the innate “drive to be liked / admired”.

- How does S(X) fit in with the narrative of my life? A self-reflective thought like S(homework) ≈ “I’m focusing on my homework” may have the salient implication “I’m following through on my New Year’s Resolution to focus on my homework”, or “I’m making progress towards my goal of becoming rich and famous”.

Contrast either of those with a non-self-reflective (i.e., object-level) thought related to doing my homework, e.g. “What’s the square root of 121 again?”. If I’m thinking about that, then the questions of what other people think about me, and how my life plans are going, are less salient.

There’s a pattern here, which is that self-reflective thoughts are more likely to be positive-valence (motivating) if they concern things that we’re proud of, that we like to remember, that we’d like other people to see, etc.

But that’s not the only factor. The object-level is relevant too:

2.5.2 Positive-valence S(X) models also tend to go with X’s that are object-level motivating (other things equal)

For example, if I’m tired, then I want to go to sleep. Maybe going to sleep right now wouldn’t help my social image, and maybe it’s not appealing in the context of the narrative of my life. More generally, maybe I don’t think that “my best self” would be sleeping now, instead of working more. But nevertheless, the self-reflective thought “I’m gonna go to sleep now” will be highly motivating to me, because of its obvious association with / implication of sleep itself.

Maybe I’ll even say “Screw being ‘my best self’, I’m tired, I’m going to sleep”.

What’s going on? It’s the same dynamic as above, but this time the salient association of S(X) is X itself. When I think the self-reflective thought S(go to sleep) ≈ “I’m thinking about going to sleep”, some of the things that it tends to bring to mind are object-level thoughts about going to sleep, e.g. the expectation of feeling the soft pillow on my head. Those thoughts are motivating, since I’m tired. And then by TD learning, S(X) winds up with positive valence too.

(Conversely, just as the valence of X splashes onto S(X), by the same logic, the valence of S(X) splashes onto X. More on that below.)
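The two factors from §2.5.1 and §2.5.2 can be put side by side in a toy formula. This is an illustrative assumption, not something the post commits to: treat the valence of S(X) as a salience-weighted average of the valence of the thoughts that S(X) tends to bring to mind, one of which is X itself. The function name `s_valence` and all weights and valences below are invented.

```python
# Hypothetical toy: the valence of S(X) as a salience-weighted average over
# the thoughts it tends to bring to mind, including X itself.

def s_valence(associations):
    """associations: list of (salience_weight, valence_of_associated_thought)."""
    total_weight = sum(w for w, _ in associations)
    return sum(w * v for w, v in associations) / total_weight


homework = [
    (1.0, -0.3),  # X itself: homework is a bit of a slog
    (0.5, +0.6),  # social image: "others may see I'm focused"
    (0.5, +0.8),  # life narrative: "following my New Year's resolution"
]
sleep_when_tired = [
    (2.0, +0.9),  # X itself: the soft pillow, very salient when tired
    (0.5, -0.4),  # "my best self would keep working"
]

print(round(s_valence(homework), 3))          # 0.2
print(round(s_valence(sleep_when_tired), 3))  # 0.64
```

In this toy, S(homework) comes out mildly positive because of its “best self” associations, while S(go to sleep) comes out positive despite them, because the object-level association dominates.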

2.6 S(A) as “the intention to immediately do action A”, and the rapid sequence [S(A) ; A] as the signature of an intentional action

2.6.1 Clarification: Two ways to “think about an action”

I’ll be arguing shortly that, for a voluntary action A, S(A) is the “intention” to immediately do A. You might find this confusing: “Can’t I think self-reflectively about an action, without intending to do that action??” Yes, but … allow me to clarify.

Put aside self-reflective thoughts for a moment; let’s just start at the object level. If “the idea of standing up is on my mind” at some moment, that might mean either or both of two rather different things:

- Maybe the sensory and semantic consequences of the standing-up action are in my awareness—including, for example, the expected feeling of bodily motion and exertion, and the idea that I’ll wind up standing, and that my chair will wind up empty, etc.;

- Maybe the standing-up action program itself—i.e., the patterns of motor control and attention control outputs that would collectively make my muscles actually execute the standing-up action—are in my awareness. If they are, and if there’s positive valence keeping them active, then I would find myself immediately actually standing up.

The punchline: When I say “an action A” in this series, it always refers to the second bullet, not the first—an action program, not merely an action idea.

So far that’s all the object-level domain. But there’s an analogous distinction in the self-reflective domain: “S(stand up)” is ambiguous as written. It could be the thought: “standing up (as a thing that could happen) is the occupant of conscious awareness”—i.e., a veridical model of the first bullet point situation above. Or it could be the thought “standing up (the action program itself) is the occupant of conscious awareness”—i.e., a veridical model of the second bullet point situation above.

And just as above, when I say S(A), I’ll always be talking about the latter, not the former; it’s the latter that (I’ll argue) corresponds to an “intention”.

That said, those two aspects of standing up are obviously strongly associated with each other. They can activate simultaneously. And even if they don’t, each tends to bring the other to mind, such that the valence of one influences the valence of the other.
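One way to keep the two readings apart is to give them different types. A minimal sketch with hypothetical class names: `S(ActionIdea(...))` and `S(ActionProgram(...))` are different thoughts, and only the second is what this series means by S(A), the thing the post argues corresponds to an intention. The strong association between the two, described in the paragraph above, is not modeled here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ActionIdea:
    """Sensory/semantic consequences: 'I'd end up standing, chair empty'."""
    name: str


@dataclass(frozen=True)
class ActionProgram:
    """Motor- and/or attention-control outputs that would make it happen."""
    name: str


@dataclass(frozen=True)
class S:
    """X in a self-reflective frame, i.e. X as the occupant of awareness."""
    content: object


def is_intention(thought) -> bool:
    """Following the post's convention: only S(action program) counts."""
    return isinstance(thought, S) and isinstance(thought.content, ActionProgram)


print(is_intention(S(ActionIdea("stand up"))))     # False
print(is_intention(S(ActionProgram("stand up"))))  # True
```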

With that aside, let’s get into the substance of this section!

2.6.2 For any action A where S(A) has positive valence, there’s often a two-step temporal sequence: [S(A) ; A actually happens]

In this section I’ll give a kind of first-principles derivation of something that we should expect to happen in brain algorithms, based on the discussion thus far. Then afterwards, I’ll argue that this phenomenon corresponds to our everyday notion of intentions and actions. Here goes:

- Ingredient 1: In general, S(X) often summons a follow-on thought of X. As mentioned in §2.5.2 above, there’s a strong, salient association between an object-level thing and the corresponding self-reflective way to think about that same thing—each implies the other. So if we’re thinking of X, S(X) may well immediately pop into our heads, and vice-versa.

- Ingredient 2: If S(X) has positive valence, that makes it more likely (other things equal) for X to wind up with positive valence. Again see §2.5.2 above.

- Ingredient 3: If a voluntary action program A (attention-control, motor-control, or both) is active in awareness, and has positive valence, then it will immediately actually happen. See §2.6.1 above; this is almost the definition of valence, as I use the term.

Put these together, and we conclude that there ought to be a frequent pattern:

- STEP 1: There’s a self-reflective thought S(A), for some action-program A (motor-control and/or attention-control), and this thought has positive valence;

- STEP 2 (a fraction of a second later): The non-self-reflective (a.k.a. object-level) thought A occurs, and this makes the action A actually happen.
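The derivation above can be run as a toy in Python. This is a deliberately crude sketch: Ingredient 1 is modeled as S(A) always summoning A (the post says only “often”), and Ingredient 2 as A simply inheriting the valence of S(A). The function `run` and the numbers are hypothetical.

```python
# Toy run of Ingredients 1-3. Oversimplified: S(A) always summons A, and A
# inherits the valence of S(A) unchanged.

def run(action, s_valence):
    trace = [("S(" + action + ")", s_valence)]   # STEP 1: self-reflective thought
    a_valence = s_valence                        # Ingredient 2 (crudest version)
    trace.append((action, a_valence))            # Ingredient 1: S(A) -> A
    if a_valence > 0:                            # Ingredient 3: positive -> it happens
        trace.append(("executed: " + action, None))
    return trace


for step in run("wiggle fingers", +0.7):
    print(step)
# ('S(wiggle fingers)', 0.7)
# ('wiggle fingers', 0.7)
# ('executed: wiggle fingers', None)

print(run("wiggle fingers", -0.5)[-1])  # ('wiggle fingers', -0.5), never executed
```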

2.6.3 This two-step sequence corresponds to “intentional” actions (as opposed to “spontaneously blurting something out”, “acting on instinct”, etc.)

Here are a few reasons that you might believe me on this:

Evidence from introspection: I’m suggesting that (step 1) you think of yourself sending a command to wiggle your fingers, and you find that thought to be motivating (positive valence), and then (step 2) a fraction of a second later, the command is sent and your fingers are actually wiggling. To me, that feels like a pretty good fit to “intentionally” / “deliberately” doing something. Whereas “acting on impulses, instincts, reflexes, etc.” seems to be missing the self-reflective step 1 part.

Evidence from the report of an insight meditator: For what it’s worth, meditation guru Daniel Ingram writes: “In Mind and Body, the earliest insight stage, those who know what to look for and how to leverage this way of perceiving reality will take the opportunity to notice the intention to breathe that precedes the breath, the intention to move the foot that precedes the foot moving, the intention to think a thought that precedes the thinking of the thought, and even the intention to move attention that precedes attention moving.” I claim that’s a good match to what I wrote—S(A) would be the “intention” to do action A.

Evidence from the systematic differences between intentional actions and spontaneous actions: Consider spontaneous actions like “blurting out”, also called instinctive, reflexive, unthinking, reactive, spontaneous, etc. According to my story, a key difference between these types of actions, versus intentional actions, is that the valence of S(A) is necessarily positive in intentional actions, but need not be positive in spontaneous actions. And in §2.5 above, I said that the valence of S(A) is influenced by the valence of A, but S(A) is also influenced by “what my best self would do”—S(A) tends to be more positive for actions A that would positively impact my social image, fit well into the narrative of my life, and so on. And correspondingly, those are exactly the kinds of actions that are more likely to be “intentional” than “spontaneous”. Good fit!

2.6.4 The common temporal sequence above—i.e. [S(A) with positive valence ; A actually happens]—is itself incorporated into the intuitive self-model. Call it D(A) for “Deciding to do action A”

The whole point of these intuitive generative models is to observe things that often happen, and then expect them to keep happening in the future. So if the [S(A) with positive valence ; A actually happens] pattern happens regularly, of course the brain will incorporate that as an intuitive concept in its generative models. I’ll call it D(A).
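To picture what “incorporating a regularity” could amount to, here is a minimal sketch: scan a made-up stream of thoughts and measure, per action, how often it was immediately preceded by a positive-valence S(A). The notation `S+(A)`, the sample stream, and the function name are hypothetical; a predictive learner would presumably pick up such a pattern implicitly rather than by explicit counting.

```python
from collections import Counter

# Hypothetical thought stream. "S+(A)" marks S(A) with positive valence.
stream = ["S+(type)", "type", "S+(stand)", "stand", "blurt",
          "S+(type)", "type", "type", "S+(wave)", "wave"]


def preceded_by_s_plus(stream):
    """Per action: fraction of occurrences immediately preceded by S+(action)."""
    total, with_s = Counter(), Counter()
    for prev, cur in zip([None] + stream, stream):
        if cur.startswith("S+("):
            continue  # skip the self-reflective thoughts themselves
        total[cur] += 1
        if prev == "S+(" + cur + ")":
            with_s[cur] += 1
    return {a: with_s[a] / total[a] for a in total}


print(preceded_by_s_plus(stream))
```

In this stream, “stand” and “wave” always fit the [S(A) ; A] pattern, “type” fits it two times out of three, and “blurt” never does.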

2.6.5 An application: Illusions of intentionality

The stereotypical intentional-action scenario above is:

- Step 1: S(A) [with positive valence]

- Step 2: A

Here’s a different scenario:

- Step 1’: (…not sure, wasn’t paying attention…)

- Step 2’: A

Now suppose that Step 1’ was not in fact S(A), but that it could have been S(A)—in the specific sense that the hypothesis “what just happened was [S(A) ; A]” is a priori highly plausible and compatible with everything we know about ourselves and what’s happening.

In that case, we should expect the D(A) generative model to activate. Why? It’s just the cortex doing what it always does: using probabilistic inference to find the best generative model given the limited information available. It’s no different from what happens in visual perception: if I see my friend’s head coming up over the hill, I automatically intuitively interpret it as the head of my friend whose body I can’t see; I do not interpret it as my friend’s severed head. The latter would be a priori less plausible than the former.
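The inference in the paragraph above can be written as a two-hypothesis Bayes update. A minimal sketch with invented numbers: Step 1’ is unobserved, the only evidence is that A happened, and “D(A) activates” is modeled, as an assumption, as the [S(A) ; A] hypothesis winning a MAP comparison against the alternative.

```python
# Toy Bayesian inference over the unobserved Step 1', given that A happened.
# Priors and likelihoods are invented for illustration.

def posterior_step1(prior_s, p_a_given_s, p_a_given_not_s):
    """P(Step 1' was S(A) | A happened), with Step 1' itself unobserved."""
    joint_s = prior_s * p_a_given_s
    joint_not = (1 - prior_s) * p_a_given_not_s
    return joint_s / (joint_s + joint_not)


def d_a_activates(p, threshold=0.5):
    """MAP choice between two hypotheses: does [S(A) ; A] win?"""
    return p > threshold


# Typing a word while coding: in this toy, [S(A) ; A] is a priori plausible.
p_typing = posterior_step1(prior_s=0.6, p_a_given_s=0.9, p_a_given_not_s=0.5)
# A sneeze: [S(A) ; A] is a priori implausible.
p_sneeze = posterior_step1(prior_s=0.05, p_a_given_s=0.9, p_a_given_not_s=0.5)

print(round(p_typing, 3), d_a_activates(p_typing))  # 0.73 True
print(d_a_activates(p_sneeze))                      # False
```

Note that the computation never looks at what Step 1’ actually was, which is exactly why its answer can be wrong.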

Anyway, if D(A) activates despite a lack of actual S(A), that would be a (so-called) “illusion of free will”. Examples include the "choice blindness" experiment of Johansson et al. 2005, the "I Spy" and other experiments described in Wegner & Wheatley 1999, some instances of confabulation, and probably some types of “forcing” in stage magic. As another (possible) example, if I’m deeply in a flow state, writing code, and I take an action A = typing a word, then the self-reflective S(A) thought is almost certainly not active (that’s what “flow state” means, see Post 4), but if you ask me after the fact whether I had “decided” to execute action A, I think I would say “yes”.

2.7 Conclusion

I think this is a nice story for how the “conscious awareness” concept comes to exist in our mental worlds, how it relates to other intuitive notions like memory, stream-of-consciousness, intentions, and decisions, and how all these entities in the “map” (intuitive model) relate to corresponding entities in the “territory” (brain algorithms, as designed by the genome).

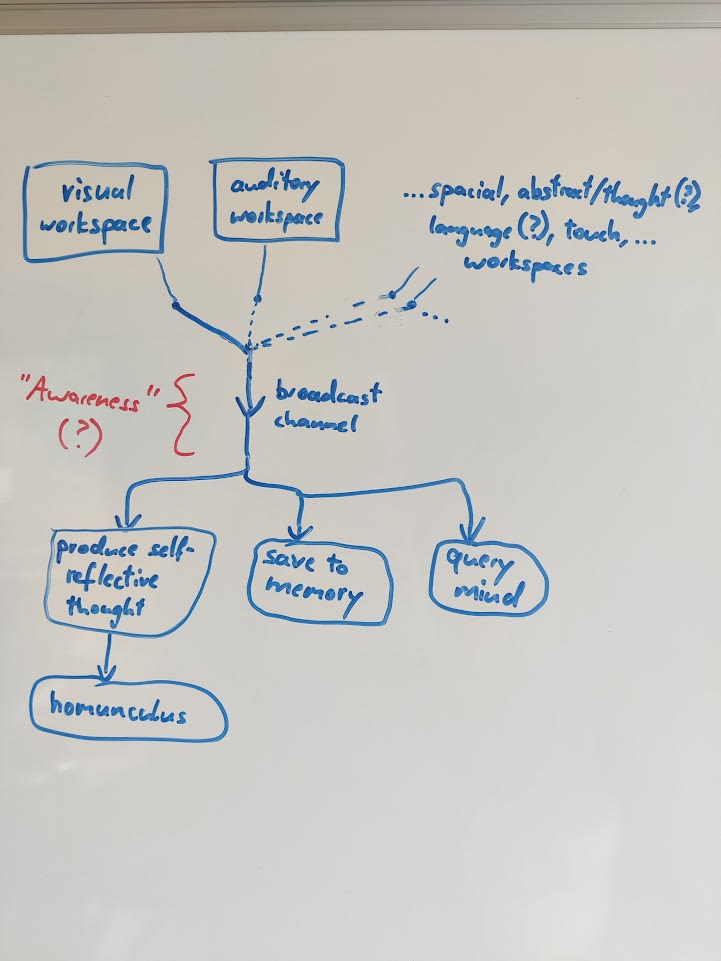

However, the above story of intentions and decisions is not yet complete! There’s an additional critical ingredient within our intuitive self-models. Not only are there intentions and decisions in our minds, but we also intuitively believe there to be a protagonist—an entity that actively intends our intentions, and decides our decisions, and wills our will! Following Dennett, I’ll call that concept “the homunculus”, and that will be the subject of the next post.

Thanks Thane Ruthenis, lsusr, Seth Herd, Linda Linsefors, and Justis Mills for critical comments on earlier drafts.

[1] It is, of course, possible to read health insurance documentation and ponder the meaning of life in rapid succession, separated by as little as a fraction of a second. Especially when “pondering the meaning of life” includes nihilism and existential despair! USA readers, you know what I’m talking about.

[2] It won’t come up again in this series, but I’ll note for completeness that “awareness” is related to the activation state of some parts of the cortex much more than other parts. For example, the primary visual cortex is not interconnected with other parts of the cortex or with long-term memory in the same direct way that many other cortical areas are; hence, you can say that we’re “not directly aware” of what happens in the primary visual cortex. In the lingo, people describe this fact by saying that there’s a “Global Neuronal Workspace” consisting of many (most?) parts of the cortex, but that the primary visual cortex is not one of those parts.

- ^

Relatedly, some bits of text in this post are copied from my earlier post Book Review: Rethinking Consciousness [LW · GW].

- ^

From my perspective, Graziano’s main thesis and my §2.2 are pretty similar in the big picture. I think the biggest difference between his presentation and mine is that we stand at different places on the spectrum from “evolved modularity” to “universal learning machine” [LW · GW]. Graziano seems to be more towards the “evolved modularity” end, where he thinks that evolution specifically built “awareness” into the brain to serve as sensory feedback for attention actions, in analogy to how evolution specifically built the somatosensory cortex to serve as sensory feedback for motor actions. By contrast, my belief is much closer to the “universal learning machine” end, where “awareness” (like the rest of the intuitive self-model) comes out of a somewhat generic within-lifetime predictive learning algorithm, involving many of the same brain parts and processes that would create, store, and query an intuitive model of a carburetor.

Again, that’s all my own understanding. Graziano has not read or endorsed anything in this post.

- ^

I adapted that statement from something Jeff Hawkins said. But tragically, it’s not just a hypothetical: Clive Wearing developed total amnesia 40 years ago, and ever since then “he constantly believes that he has only recently awoken from a comatose state”.

60 comments

comment by cubefox · 2024-09-26T12:58:16.048Z

I don't know whether this is relevant to you, but in "x is aware of y" ("y is in x's awareness"), y is considered an intensional term, while for "x physically contains y", y is considered extensional. (And x is extensional in both cases.)

"Extensional" means that co-referring terms can always be substituted for each other without affecting the truth value of the resulting proposition. For "intensional" terms this is not necessarily the case.

For example, "Steve is aware of Yvain" does not entail "Steve is aware of Scott", even if Scott = Yvain; the inference fails whenever Steve doesn't know that Scott = Yvain.

However, "The house contains Scott" ("Scott is in the house") implies "The house contains Yvain" because Yvain = Scott.
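A toy illustration of this substitution test, under the assumption that we model the house's contents as a set of referents (people) and Steve's awareness as a set of concepts (names). `REFERENT`, `house_contains()`, and `steve_is_aware_of()` are hypothetical names chosen for the sketch.

```python
# Which real-world person each name picks out: "Scott" and "Yvain" co-refer.
REFERENT = {"Scott": "person_1", "Yvain": "person_1"}

# Extensional container: stores referents, so the name used is irrelevant.
house = {REFERENT["Scott"]}

# Intensional "awareness": stores concepts (names), not referents.
steve_aware = {"Yvain"}


def house_contains(name: str) -> bool:
    """Extensional: substituting a co-referring name cannot change the answer."""
    return REFERENT[name] in house


def steve_is_aware_of(name: str) -> bool:
    """Intensional: the answer depends on which concept is used."""
    return name in steve_aware


print(house_contains("Scott"), house_contains("Yvain"))        # True True
print(steve_is_aware_of("Yvain"), steve_is_aware_of("Scott"))  # True False
```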

Most relations only involve extensional terms. Some examples of relations which involve intensional terms: is aware of, thinks, believes, wants, intends, loves, means.

Intensional (with an "s") terms are present mainly or only in relations which express "intentionality" (with a "t") in the technical philosophical sense: a mind (or mental state) representing, or being about, something. How this can happen is a central question in philosophy of mind, because ordinary physical objects don't seem to exhibit this property.

Though I'm not completely sure whether your theory has ambitions to solve this problem.

↑ comment by Steven Byrnes (steve2152) · 2024-09-26T13:16:09.299Z

Thanks! I feel like that’s a very straightforward question in my framework. Recall this diagram from above:

[UPDATED TO ALSO COPY THE FOLLOWING SENTENCE FROM OP: †To be clear, the “territory” for (c) is really “(b) being active in the cortex”, not (b) per se.]

Your “truth value” is what I call “what’s happening in the territory”. In the (b)-(a) map-territory correspondence, the “territory” is the real world of atoms, so two different concepts that point to the same possibility in the real world of atoms will have the same “truth value”. In the (c)-(b) map-territory correspondence, the “territory” is the cortex, or more specifically what concepts are active in the cortex, so different concepts are always different things in the territory.

Do you agree that that’s a satisfactory explanation in my framework of why “apple is in awareness” is intensional while “apple is in the cupboard” is extensional? Or am I missing something?

↑ comment by cubefox · 2024-09-26T14:50:30.109Z

So here (c) is about / represents (b), which itself is about / represents (a). Both (b) and (c) are thoughts (the thought of an apple and the thought of the thought of an apple), so it is expected that they both can represent things. And (a) is a physical object, so it isn't surprising that (a) doesn't represent anything.

However, it is not clear how this difference in capacity for representation arises. More specifically, if we think of (c) not as a thought/concept, but as the cortex, which is a physical object, it is not clear how the cortex could represent / be about something, namely (b).

It is also not clear why thinking about X doesn't imply thinking about Y even in cases where X=Y, while X being on the cupboard implies Y being on the cupboard when X=Y.

Tangential considerations:

I notice that in (b)-(a), (a) is intensional, as expected, while in (c)-(b), (b) does seem to be extensional, which is not expected, since (c) is a thought about (b).

For example, in the case of (b)-(a) we could have a thought about the apple on the cupboard, and a thought about the apple I bought yesterday, which would not be the same thought, even if both apples are the same object, since I may not know that the apple on the cupboard is the same as the apple I bought yesterday.

But when thinking about our own thoughts, no such failure of identification seems possible. We always seem to know whether two thoughts are the same or not, apparently because we have direct "access" to them because they are "internal", while we don't have direct "access" to physical objects, or to other external objects, like the thoughts of other people. So extensionality fails for thoughts about external objects, but holds for thoughts about internal objects, like our own thoughts.

↑ comment by Steven Byrnes (steve2152) · 2024-09-26T15:22:20.205Z

Thanks! The “territory” for (c) is not (b) per se but rather “(b) being active in the cortex”. (That’s the little dagger on the word “territory” below (b), I explained it in the OP but didn’t copy it into the comment above, sorry.)

So “thought of the thought of an apple” is not quite what (c) is. Something like “thought of the apple being on my mind” would be closer.

More specifically, if we think of (c) not as a thought/concept, but as the cortex, which is a physical object, it is not clear how the cortex could represent / be about something, namely (b).

I sorta feel like you’re making something simple sound complicated, or else I don’t understand your point. “If you think of a map of London as a map of London, then it represents London. If you think of a map of London as a piece of paper with ink on it, then does it still represent London?” Umm, I guess? I don’t know! What’s the point of that question? Isn’t it a silly kind of thing to be talking about? What’s at stake?

It is also not clear why thinking about A doesn't imply thinking about B even in cases where A=B, while A being on the cupboard implies B being on the cupboard when A=B.

Again, I feel like you’re making common sense sound esoteric (or else I’m missing your point). If I don’t know that Yvain is Scott, and if at time 1 I’m thinking about Yvain, and if at time 2 I’m thinking about Scott, then I’m doing two systematically different things at time 1 versus time 2, right?

But when thinking about our own thoughts, no such failure of identification seems possible.

In some contexts, two different things in the map wind up pointing to the same thing in the territory. In other cases, that doesn’t happen. For example, in the domain of “members of my family”, I’m confident that the different things on my map are also different in the territory. Whereas in the domain of anatomy, I’m not so confident—maybe I don’t realize that erythrocytes = red blood cells. Anyway, whether this is true or not in any particular domain doesn’t seem like a deep question to me—it just depends on the domain, and more specifically how easy it is for one thing in the territory to “appear” different (from my perspective) at different times, such that when I see it the second time, I draw it as a new dot on the map, instead of invoking the preexisting dot.

↑ comment by cubefox · 2024-09-26T17:56:25.097Z

I sorta feel like you’re making something simple sound complicated, or else I don’t understand your point. “If you think of a map of London as a map of London, then it represents London. If you think of a map of London as a piece of paper with ink on it, then does it still represent London?” Umm, I guess? I don’t know! What’s the point of that question? Isn’t it a silly kind of thing to be talking about? What’s at stake?

Well, it seems that purely the map by itself (as a physical object only) doesn't represent London, because the same map-like object could have been created as an (extremely unlikely) accident. Just like a random splash of ink that happens to look like Jesus doesn't represent Jesus, or a random string generator creating the string "Eliezer Yudkowsky" doesn't refer to Eliezer Yudkowsky. What matters seems to be the intention (a mental object) behind the creation of an actual map of London: Someone intended it to represent London.

Or assume a local tries to explain to you where the next gas station is, gesticulates, and uses his right fist to represent the gas station and his left fist to represent the next intersection. The right fist representing the gas station is not a fact about the physical limb alone, but about the local's intention behind using it. (He can represent the gas station even if you misunderstand him, so only his state of mind seems to matter for representation.)

So it isn't clear how a physical object alone (like the cortex) can be about something. Because apparently maps or splashes or strings or fists don't represent anything by themselves. That is not to say that the cortex can't represent things, but rather that it isn't clear why it does, if it does.

Again, I feel like you’re making common sense sound esoteric (or else I’m missing your point). If I don’t know that Yvain is Scott, and if at time 1 I’m thinking about Yvain, and if at time 2 I’m thinking about Scott, then I’m doing two systematically different things at time 1 versus time 2, right?

Exactly. But it isn't clear why these thoughts are different. If your thinking about someone is a relation between yourself and someone else, then it isn't clear why you thinking about one person could ever be two different things.

(A similar problem arises when you think about something that might not exist, like God. Does this thought then express a relation between yourself and nothing? But thinking about nothing is clearly different from thinking about God. Besides, other non-existent objects, like the largest prime number, are clearly different from God.)

Maybe it is instead a relation between yourself and your concept of Yvain, and a relationship between yourself and your concept of Scott, which would be different relations, if the names express different concepts, in case you don't regard them as synonymous. But both concepts happen to refer to the same object. Then "refers to" (or "represents") would be a relation between a concept and an object. Then the question is again how reference/representation/aboutness/intentionality works, since ordinary physical objects don't seem to do it. What makes it the case that concept X represents, or doesn't represent, object Y?

But when thinking about our own thoughts, no such failure of identification seems possible.

In some contexts, two different things in the map wind up pointing to the same thing in the territory. In other cases, that doesn’t happen. For example, in the domain of “members of my family”, I’m confident that the different things on my map are also different in the territory.

If you believe x and y are members of your family, that doesn't imply you having a belief on whether x and y are identical or not. But if x and y are thoughts of yours (or other mental objects), you know whether they are the same or not. Example: you are confident that your brother is a member of your family, and that the person who ate your apple is a member of your family, but you are not confident about whether your brother is identical to the person who ate your apple.

It seems such examples can be constructed for any external objects, but not for internal ones, so the only "domain" where extensionality holds for intentionality/representation relations is arguably internal objects (our own mental states).

↑ comment by Steven Byrnes (steve2152) · 2024-09-26T19:05:56.048Z

I feel like the difference here is that I’m trying to talk about algorithms (self-supervised learning, generative models, probabilistic inference), and you’re trying to talk about philosophy? (See §1.6.2 [LW · GW]). I think there are questions that seem important and tricky in your philosophy-speak, but seem weird or obvious or pointless in my algorithm-speak … Well anyway, here’s my perspective:

Let’s say:

- There’s a real-world thing T (some machine made of atoms),

- T is upstream of some set of sensory inputs S (light reflecting off the machine and hitting photoreceptors etc.)

- There’s a predictive learning algorithm L tasked with predicting S,

- This learning algorithm gradually builds a trained model (a.k.a. generative model space, a.k.a. intuitive model space) M.

In this case, it is often (though not always) the case that some part of M will have a straightforward structural resemblance to T. In §1.3.2 [LW · GW], I called that a “veridical correspondence”.

If that happens, then we know why it happened; it happened because of the learning algorithm L! Obviously, right? Veridical map-territory correspondence is generally a very effective way to predict what’s going to happen, and thus predictive learning algorithms very often build trained models with veridical aspects. (I think the term “teleosemantics” [LW · GW] is relevant here? Not sure.)

After all, if some part of M has a straightforward structural resemblance to T, then the hypothesis that this happened by coincidence is astronomically unlikely, compared to the hypothesis that this happened because it’s a good way for L to reduce its loss function.

(Then you say: “Ah, but what if that astronomical coincidence comes to pass?” Well then I would say “Huh. Funny that”, and I would shrug and go on with my day. I never claimed to have an airtight philosophical theory of about-ness or representing-ness or whatever! It was you who brought it up!)
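Here is a deliberately tiny sketch of the T/S/L/M setup described above, with all specifics being illustrative assumptions. T is a hidden linear rule. S is a set of noisy samples from it. L is plain online gradient descent on squared prediction error. M is the learned weight and bias. Nothing in L is told the true parameters, yet M ends up structurally resembling T, because that is what reduces the loss.

```python
import random

# T: the "territory", a hidden rule the learner never sees directly (assumed values).
TRUE_SLOPE, TRUE_INTERCEPT = 3.0, 1.0


def sense(rng: random.Random) -> tuple[float, float]:
    """S: one sensory sample generated by T, plus a little noise."""
    x = rng.uniform(-1.0, 1.0)
    y = TRUE_SLOPE * x + TRUE_INTERCEPT + rng.gauss(0.0, 0.05)
    return x, y


def train(steps: int = 20_000, lr: float = 0.05, seed: int = 0) -> tuple[float, float]:
    """L: online gradient descent on squared prediction error. Returns M = (w, b)."""
    rng = random.Random(seed)
    w, b = 0.0, 0.0  # M starts with no resemblance to T
    for _ in range(steps):
        x, y = sense(rng)
        err = (w * x + b) - y  # prediction error
        w -= lr * err * x      # gradient of 0.5 * err**2 with respect to w
        b -= lr * err          # gradient of 0.5 * err**2 with respect to b
    return w, b


w, b = train()
print(f"learned M: w={w:.2f}, b={b:.2f}")  # ends up close to T's (3.0, 1.0)
```

Real generative models are of course vastly richer than two numbers; the sketch only shows why veridical correspondence is the expected by-product of minimizing prediction error rather than a coincidence.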

Other times, there isn’t a veridical correspondence! Instead, the predictive learning algorithm builds an M, no part of which has any straightforward structural resemblance to T. There are lots of reasons that could happen. I gave one or two examples of non-veridical things in this post, and many more are coming up in Post 3.

But it isn't clear why these thoughts are different. If your thinking about someone is a relation between yourself and someone else, then it isn't clear why you thinking about one person could ever be two different things.

M is some data structure stored in the cortex. If I don’t know that Scott is Yvain, then Scott is one part of M, and Yvain is a different part of M. Two different sets of neurons in the cortex, or whatever. Right? I don’t think I’m saying anything deep here. :)

↑ comment by cubefox · 2024-09-26T20:00:34.606Z

I'm not sure how much "structural resemblance" or "veridical correspondence" can account for representation/reference. Maybe our concept of a sock or an apple somehow (structurally) resembles a sock or an apple. But what if I'm thinking of the content of your suitcase, and I don't know whether it is a sock or an apple or something else? Surely the part of the model (my brain) which represents/refers to the content of your suitcase does not in any way (structurally or otherwise) resemble a sock, even if the content of your suitcase is indeed identical to a sock.

M is some data structure stored in the cortex. If I don’t know that Scott is Yvain, then Scott is one part of M, and Yvain is a different part of M. Two different sets of neurons in the cortex, or whatever. Right? I don’t think I’m saying anything deep here. :)

But Scott and Yvain are a single object in the territory, not parts of a model, so the parts of the model which do represent Scott and Yvain require the existence of some sort of representation relation.

↑ comment by Steven Byrnes (steve2152) · 2024-09-26T21:40:33.523Z

Maybe our concept of a sock or an apple somehow (structurally) resembles a sock or an apple.

I could start writing pairs of sentences like:

REAL WORLD: feet often have socks on them

MY INTUITIVE MODELS: “feet” “often” “have” “socks” “on” “them”

REAL WORLD: socks are usually stretchy

MY INTUITIVE MODELS: “socks” “are” “usually” “stretchy”

(… 7000 more things like that …)

If you take all those things, AND the information that all these things wound up in my intuitive models via the process of my brain doing predictive learning from observations of real-world socks over the course of my life, AND the information that my intuitive models of socks tend to activate when I’m looking at actual real-world socks, and to contribute to me successfully predicting what I see … and you mix all that together … then I think we wind up in a place where saying “my intuitive model of socks has by-and-large pretty good veridical correspondence to actual socks” is perfectly obvious common sense. :)

(This is all the same kinds of things I would say if you ask me what makes something a map of London. If it has features that straightforwardly correspond to features of London, and if it was made by someone trying to map London, and if it is actually useful for navigating London in the same kind of way that maps are normally useful, then yeah, that's definitely a map of London. If there's a weird edge case where some of those apply but not others, then OK, it's a weird edge case, and I don't see any point in drawing a sharp line through the thicket of weird edge cases. Just call them edge cases!)

But what if I'm thinking of the content of your suitcase, and I don't know whether it is a sock or an apple or something else? Surely the part of the model (my brain) which represents/refers to the content of your suitcase does not in any way (structurally or otherwise) resemble a sock, even if the content of your suitcase is indeed identical to a sock.

Right, if I don’t know what’s in your suitcase, then there will be rather little veridical correspondence between my intuitive model of the inside of your suitcase, and the actual inside of your suitcase! :)

(The statement “my intuitive model of socks has by-and-large pretty good veridical correspondence to actual socks” does not mean I have omniscient knowledge of every sock on Earth, or that nothing about socks will ever surprise me, etc.!)

But Scott and [Yvain] are an object in the territory, not parts of a model, so the parts of the model which do represent Scott and Yvain require the existence of some sort of representation relation.

Oh sorry, I thought that was clear from context … when I say “Scott is one part of M”, obviously I mean something more like “[the part of my intuitive world-model that I would describe as Scott] is one part of M”. M is a model, i.e. data structure, stored in the cortex. So everything in M is a part of a model by definition.

↑ comment by cubefox · 2024-09-26T23:53:55.641Z

But what if I'm thinking of the content of your suitcase, and I don't know whether it is a sock or an apple or something else? Surely the part of the model (my brain) which represents/refers to the content of your suitcase does not in any way (structurally or otherwise) resemble a sock, even if the content of your suitcase is indeed identical to a sock.

Right, if I don’t know what’s in your suitcase, then there will be rather little veridical correspondence between my intuitive model of the inside of your suitcase, and the actual inside of your suitcase! :)

(The statement “my intuitive model of socks has by-and-large pretty good veridical correspondence to actual socks” does not mean I have omniscient knowledge of every sock on Earth, or that nothing about socks will ever surprise me, etc.!)

Okay, but then this theory doesn't explain how we (or a hypothetical ML model) can in fact successfully refer to / think about things which aren't known more or less directly. Like the contents of the suitcase, the person ringing at the door, the cause of the car failing to start, the reason for birth rate decline, the birthday present, what I had for dinner a week ago, what I will have for dinner tomorrow, the surprise guest, the solution to some equation, the unknown proof of some conjecture, the things I forgot about etc.

↑ comment by Steven Byrnes (steve2152) · 2024-09-27T01:04:07.175Z

What you’re saying is basically: sometimes we know some aspects of a thing, but don’t know other aspects of it. There’s a thing in a suitcase. Well, I know where it is (in the suitcase), and a bit about how big it is (smaller than a bulldozer), and whether it’s tangible versus abstract (tangible). Then there are other things about it that I don’t know, like its color and shape. OK, cool. That’s not unusual—absolutely everything is like that. Even things I’m looking straight at are like that. I don’t know their weight, internal composition, etc.

I don’t need a “theory” to explain how a “hypothetical” learning algorithm can build a generative model that can represent this kind of information in its latent variables, and draw appropriate inferences. It’s not a hypothetical! Any generative model built by a predictive learning algorithm will actually do this—it will pick up on local patterns and extrapolate them, even in the absence of omniscient knowledge of every aspect of the thing / situation. It will draw inferences from the limited information it does have. Trained LLMs do this, and an adult cortex does it too.

I think you’re going wrong by taking “aboutness” to be a bedrock principle of how you’re thinking about things. These predictive learning algorithms and trained models actually exist. If, when you run these algorithms, you wind up with all kinds of edge cases where it’s unclear what is “about” what, (and you do), then that’s a sign that you should not be treating “aboutness” as a bedrock principle in the first place. “Aboutness” is like any other word / category—there are cases where it’s clearly a useful notion, and cases where it’s clearly not, and lots of edge cases in between. The sensible way to deal with edge cases is to use more words to elaborate what’s going on. (“Is chess a sport?” “Well, it’s like a sport in such-and-such respects but it also has so-and-so properties which are not very sport-like.” That’s a good response! No need for philosophizing.)

That’s how I’m using “veridicality” (≈ aboutness) in this series. I defined the term in Post 1 and am using it regularly, because I think there are lots of central cases where it’s clearly useful. There are also plenty of edge cases, and when I hit an edge case, I just use more words to elaborate exactly what’s going on. [Copying from Post 1:] For example, suppose intuitive concept X faithfully captures the behavior of algorithm Y, but X is intuitively conceptualized as a spirit floating in the room, rather than as an algorithm within the Platonic, ethereal realm of algorithms. Well then, I would just say something like: “X has good veridical correspondence to the behavior of algorithm Y, but the spirit- and location-related aspects of X do not veridically correspond to anything at all.” (This is basically a real example—it’s how some “awakened” (Post 6) people talk about what I call conscious awareness in this post.) I think you want “aboutness” to be something more fundamental than that, and I think that you’re wrong to want that.

↑ comment by cubefox · 2024-09-27T02:46:10.226Z

I don’t need a “theory” to explain how a “hypothetical” learning algorithm can build a generative model that can represent this kind of information in its latent variables, and draw appropriate inferences.

Sure, but we would still need a separate explanation if we want to understand how representation/reference works in a model (or in the brain) itself. If we are interested in that, of course. It could be interesting from the standpoint of philosophy of mind, philosophy of language, linguistics, cognitive psychology, and of course machine learning interpretability.

If, when you run these algorithms, you wind up with all kinds of edge cases where it’s unclear what is “about” what, (and you do), then that’s a sign that you should not be treating “aboutness” as a bedrock principle in the first place.

I don't think we did run into any edge cases of representation so far where something partially represents or is partially represented, like chess is partially sport-like. Representation/reference/aboutness doesn't seem a very vague concept. Apparently the difficulty of finding an adequate definition isn't due to vagueness.

That being said, it's clearly not necessary for your theory to cover this topic if you don't find it very interesting and/or you have other objectives.

↑ comment by Steven Byrnes (steve2152) · 2024-09-27T12:56:01.328Z

it's clearly not necessary for your theory to cover this topic if you don't find it very interesting and/or you have other objectives

I’m trying to make a stronger statement than that. I think the kind of theory you’re looking for doesn’t exist. :)

I don't think we did run into any edge cases of representation so far where something partially represents or is partially represented

In this comment [LW(p) · GW(p)] you talked about a scenario where a perfect map of London was created by “an (extremely unlikely) accident”. I think that’s an “edge case of representation”, right? Just as a virus is an edge case of alive-ness. And (I claim) that arguing about whether viruses are “really” alive or not is a waste of time, and likewise arguing whether accidental maps “really” represent London or not is a waste of time too.

Again, a central case of representation would be:

- (A) The map has lots and lots of features that straightforwardly correspond to features of London

- (B) The reason for (A) is that the map was made by an optimization process [LW · GW] that systematically (though imperfectly) tends to lead to (A), e.g. the map was created by a human map-maker who was trying to accurately map London

- (C) The map is in fact useful for navigating London

I claim that if you run a predictive learning algorithm, that’s a valid (B), and we can call the trained generative model a “map”. If we do that…

- You can pick out places where all three of (A-C) are very clearly applicable, and when you find one of those places, you can say that there’s some map-territory correspondence / representation there. Those places are clear-cut central examples, just as a cat is a clear-cut central example of alive-ness.

- There are other bits of the map that clearly don’t correspond to anything in the territory, like a drug-induced hallucinated ghost that the person believes to be really standing in front of them. That’s a clear-cut non-example of map-territory correspondence, just as a rock is a clear-cut non-example of alive-ness.

- And then there are edge cases, like the Gettier problem, or vague impressions, or partially-correct ideas, or hearsay, or vibes, or Scott/Yvain, or latent variables that are somehow helpful for prediction but in a very hard-to-interpret and indirect way, or habits, etc. Those are edge-cases of map-territory correspondence. And arguing about what if anything they’re “really” representing is a waste of time, just as arguing about whether a virus is “really” alive is a waste of time, right? In those cases, I claim that it’s useful to invoke the term “map-territory correspondence” or “representation” as part of a longer description of what’s happening here, but not as a bedrock ground truth that we’re trying to suss out.

↑ comment by cubefox · 2024-09-27T19:20:19.476Z

In this comment you talked about a scenario where a perfect map of London was created by “an (extremely unlikely) accident”. I think that’s an “edge case of representation”, right?

I think it clearly wasn't a case of representation, in the same way a random string clearly doesn't represent anything, nor a cloud that happens to look like something. Those are not edge cases; edge cases are arguably examples where something satisfies a vague predicate partially, like chess being "sporty" to some degree. ("Life" is arguably also vague because it isn't clear whether it requires a metabolism, which isn't present in viruses.)

Again, a central case of representation would be:

(A) The map has lots and lots of features that straightforwardly correspond to features of London

(B) The reason for (A) is that the map was made by an optimization process that systematically (though imperfectly) tends to lead to (A), e.g. the map was created by a human map-maker who was trying to accurately map London

(C) The map is in fact useful for navigating London

I think maps are not overly central cases of representation.

(A) is clearly not at all required for clear cases of representation, e.g. in case of language (abstract symbols) or when a fist is used to represent a gas station, or a stone represents a missing chess piece, etc. That's also again the difference between the sock and the contents of the suitcase. The latter concept doesn't resemble the thing it represents, like a sock, even though it may refer to one.

Regarding (C), representation also doesn't have to be helpful to be representation. A secret message written in code is in fact optimized to be maximally unhelpful to most people, perhaps even to everyone in case of an encrypted diary. And the local explaining the way to the next gas station with the help of his hands clearly means something regardless of whether someone is able to understand what he means. Yes, maps specifically are mostly optimized to be helpful, but that doesn't mean representation has to be helpful. It's similar to portraits: they tend to be optimized for representing someone, resembling someone, and for being aesthetically pleasing. Which doesn't mean representation itself requires resemblance or aesthetics.

I agree that something like (B) might be a defining feature of representation, though not exactly as stated. Some form of optimization seems to be necessary (randomly created things don't represent anything), but optimization for (A) (resemblance) is necessary only for things like maps or pictures, not for other forms of representation, as I argued above.

And then there are edge cases, like the Gettier problem, or vague impressions, or partially-correct ideas, or hearsay, or vibes, or Scott/Yvain, or latent variables that are somehow helpful for prediction but in a very hard-to-interpret and indirect way, or habits, etc. Those are edge-cases of map-territory correspondence. And arguing about what if anything they’re “really” representing is a waste of time, just as arguing about whether a virus is “really” alive is a waste of time.

The Gettier problem is a good illustration of what I think is the misunderstanding here: Gettier cases are not supposed to be edge cases of knowledge. Just like the randomly created string "Eliezer Yudkowsky" is not an edge case of representation. Edge cases have to do with vagueness, but the problem with defining knowledge is not that the concept may be vague. Gettier cases are examples where justified true belief is intuitively present but knowledge is intuitively absent, not partially present as in edge cases. That is, we would apply the first terms (justification, truth, belief) but not the latter (knowledge). Vagueness itself is often not a problem for definition. A bachelor is clearly an unmarried man, but what may count as marriage, and what separates men from boys, are matters of degree, and so bachelor status must be a matter of degree too.

Now you may still think that finding something like necessary and sufficient conditions for representation is still "a waste of time" even if vagueness is not the issue. But wouldn't that, for example, also apply to your attempted explication of "intention"? Or "awareness"? In my opinion, all those concepts are interesting and benefit from analysis.

comment by Steven Byrnes (steve2152) · 2024-09-28T16:11:29.342Z

Back to the Scott / Yvain thing, suppose that I open Google Maps and find that there are two listings for the same restaurant location, one labeled KFC, the other labeled Kentucky Fried Chicken. What do we learn from that?

- (X) The common-sense answer is: there’s some function in the Google Maps codebase such that, when someone submits a restaurant entry, it checks if it’s a duplicate before officially adding it. Let’s say the function is check_if_dupe(). And this code evidently had a false negative when someone submitted KFC. Hey, let’s dive into the implementation of check_if_dupe() to understand why there was a false negative! …
- (Y) The philosophizing answer is: This observation implies something deep and profound about the nature of Representation! :)
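To make question (X) concrete, here is a toy sketch of what a check_if_dupe() could look like. It is entirely hypothetical: the real Google Maps implementation is not public, and the Listing type, the normalize() helper, and the matching rule are invented for illustration. It does reproduce the KFC false negative, because an exact match on normalized names cannot tell that an abbreviation and its expansion name the same restaurant.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Listing:
    """Hypothetical restaurant record; not Google Maps' actual data model."""
    name: str
    address: str


def normalize(name: str) -> str:
    """Lowercase and drop non-alphanumeric characters, so 'K.F.C.' becomes 'kfc'."""
    return "".join(ch for ch in name.lower() if ch.isalnum())


def check_if_dupe(new: Listing, existing: list[Listing]) -> bool:
    """Hypothetical duplicate check: same address AND same normalized name."""
    return any(
        e.address == new.address and normalize(e.name) == normalize(new.name)
        for e in existing
    )


db = [Listing("Kentucky Fried Chicken", "12 High St")]

# False: a false negative, since 'kfc' != 'kentuckyfriedchicken'
print(check_if_dupe(Listing("KFC", "12 High St"), db))
# True: the names match exactly after normalization
print(check_if_dupe(Listing("Kentucky Fried Chicken", "12 High St"), db))
```

Answering (X) for this sketch just means reading normalize(): it knows nothing about abbreviations. An alias table would fix this particular case, at the cost of introducing new ways to be wrong.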

Maybe I’m being too harsh on the philosophizing answer (here and in earlier comments); I’ll do the best I can to steelman it now.

- (Y’) We almost always use language in a casual way, but sometimes it’s useful to have some term with a precise universal necessary-and-sufficient definition that can be unambiguously applied to any conceivable situation. (Certainly it would be hard to do math without those kinds of definitions!) And if we want to give the word “Representation” this kind of precise universal definition, then that definition had better be able to deal with this KFC situation.

Anyway, (Y’) is fine, but we need to be clear that we’re not learning anything about the world by doing that activity. At best, it’s a means to an end. Answering (Y) or (Y’) will not give any insight into the straightforward (X) question of why check_if_dupe() returned a false negative. (Nor vice-versa.) I think this is how we’re talking past each other. I suspect that you’re too focused on (Y) or (Y’) because you expect them to answer all our questions including (X), when it’s obvious (in this KFC case) that they won’t, right? I’m putting words in your mouth; feel free to disagree.

(…But that was my interpretation of a few comments ago where I was trying to chat about the implementation of check_if_dupe() in the human cortex as a path to answering your “failure of identification” question, and your response seemed to fundamentally reject that whole way of thinking about things.)

Nowhere in this series do I purport to offer any precise universal necessary-and-sufficient definition that can be applied to any conceivable situation, for any word at all, not “Awareness”, not “Intention”, not anything. It’s just not an activity I’m generally enthusiastic about. You can be the world-leading expert on socks without having a precise universal necessary-and-sufficient definition of what constitutes a sock. Likewise, if there’s a map of London made by an astronomically-unlikely coincidence, and you say it’s not a Representation of London, and I say yes it is a Representation of London, then we’re not disagreeing about anything substantive. We have the same understanding of the situation but a different definition of the word Representation. Does that matter? Sure, a little bit. Maybe your definition of Representation is better, all things considered—maybe it’s more useful for explaining certain things, maybe it better aligns with preexisting intuitions, whatever. But still, it’s just terminology, not substance. We can always just split the term Representation into “Representation_per_cubefox” and “Representation_per_Steve” and then suddenly we’re not disagreeing about anything at all. Or better yet, we can agree to use the word Representation in the obvious central cases where everybody agrees that this term is applicable, and to use multi-word phrases and sentences and analogies to provide nuance in cases where that’s helpful. In other words: the normal way that people communicate all the time! :)

comment by cubefox · 2024-10-03T15:12:50.484Z

I don't understand the relevance of the Google Maps example and the emphasis you place on the "check_if_dupe()" function for understanding what "representation" is.

I refer back to my previous explanation of why we can always know the identity of internal objects (to stick with a software analogy: whether two variables refer to the same memory location), but not always the identity of external objects, since we have no full access to those objects. That is why the function can make mistakes. However, this is not an analysis of representation.
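The software analogy above can be made concrete in Python, where the `is` operator answers the "same memory location" question (same object or not) directly and infallibly. The listing dictionaries are made-up illustration data, not anything from the discussion.

```python
# Illustration data (made up): two restaurant records.
listing_a = {"name": "KFC", "address": "12 High St"}
listing_b = listing_a  # a second variable bound to the very same object
listing_c = {"name": "KFC", "address": "12 High St"}  # equal contents, separate object

# Identity of internal objects is always decidable: `is` compares object identity.
print(listing_a is listing_b)  # True: same object
print(listing_a is listing_c)  # False: different objects

# Equality of contents is a different question from identity.
print(listing_a == listing_c)  # True: same contents
```

No comparable operator tells you whether a listing named "KFC" and one named "Kentucky Fried Chicken" pick out the same restaurant in the world; that has to be inferred from descriptions, which is where a duplicate check can go wrong.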

Nowhere in this series do I purport to offer any precise universal necessary-and-sufficient definition that can be applied to any conceivable situation, for any word at all, not “Awareness”, not “Intention”, not anything.

And you don't even aim for a good definition? For what do you aim then?

You can be the world-leading expert on socks without having a precise universal necessary-and-sufficient definition of what constitutes a sock.

I think if I'm doing a priori armchair reasoning on socks, the way you and I do armchair reasoning here, I'm pretty much constrained to conceptual analysis. Which is the activity of finding necessary and sufficient conditions for a concept.

Likewise, if there’s a map of London made by an astronomically-unlikely coincidence, and you say it’s not a Representation of London, and I say yes it is a Representation of London, then we’re not disagreeing about anything substantive.

Yes. But are you even disagreeing with me on this map example here? If so, do you disagree with the cases of clouds, fists, random strings etc.? In philosophy the issue is rarely a disagreement over what a term means (because then we could simply infer that the term is ambiguous, and the case is closed); rather, the problem is finding a definition for a term whose meaning everyone already agrees on. Like knowledge, causation, explanation, evidence, probability, truth etc.

comment by Steven Byrnes (steve2152) · 2024-10-03T15:53:23.336Z

And you don't even aim for a good definition? For what do you aim then? … I think if I'm doing a priori armchair reasoning on socks, the way you and I do armchair reasoning here, I'm pretty much constrained to conceptual analysis. Which is the activity of finding necessary and sufficient conditions for a concept.

The goal of this series is to explain how certain observable facts about the physical universe arise from more basic principles of physics, neuroscience, algorithms, etc. See §1.6.

I’m not sure what you mean by “armchair reasoning”. When Einstein invented the theory of General Relativity, was he doing “armchair reasoning”? Well, yes in the sense that he was reasoning, and for all I know he was literally sitting in an armchair while doing it. :) But what he was doing was not “constrained to conceptual analysis”, right?

As a more specific example, one thing that happens a lot in this series is: I describe some algorithm, and then I talk about what happens when you run that algorithm. Those things that the algorithm winds up doing are often not immediately obvious just from looking at the algorithm pseudocode by itself. But they make sense once you spend some time thinking it through. This is the kind of activity that people frequently do in algorithms classes, and it overlaps with math, and I don’t think of it as being related to philosophy or “conceptual analysis” or “a priori armchair reasoning”.

In this case, the algorithm in question happens to be implemented by neurons and synapses in the human brain (I claim). And thus by understanding the algorithm and what it does when you run it, we wind up with new insights into human behavior and beliefs.

Does that help?

are you even disagreeing with me on this map example here

Yes I am disagreeing. If there’s a perfect map of London made by an astronomically-unlikely coincidence, and someone asks whether it’s a “representation” of London, then your answer is “definitely no” and my answer is “Maybe? I dunno. I don’t understand what you’re asking. Can you please taboo the word ‘representation’ and ask it again?” :-P

comment by cubefox · 2024-10-03T16:45:02.966Z

Einstein (scientists in general) tried to explain empirical observations. The point of conceptual analysis, in contrast, is to analyze general concepts, to answer "What is X?" questions, where X is a general term. I thought your post fit more in the conceptual analysis direction rather than in an empirical one, since it seems focused on concepts rather than observations.

One way to distinguish the two is by what they consider counterexamples. In science, a counterexample is an observation which contradicts a prediction of the proposed explanation. In conceptual analysis, a counterexample is a thought experiment (like a Gettier case or the string example above) to which the proposed definition (the definiens) intuitively applies but the defined term (the definiendum) doesn't, or the other way round.

The algorithm analysis method arguably doesn't really fit here, since it requires access to the algorithm, which isn't available in the case of the brain. (Unless I misunderstood the method and it actually treats algorithms as black boxes, looking only at input/output examples. But then it wouldn't be so different from conceptual analysis, where a thought experiment is the input and an intuitive application of a term is the output.)

are you even disagreeing with me on this map example here

Yes I am disagreeing. If there’s a perfect map of London made by an astronomically-unlikely coincidence, and someone asks whether it’s a “representation” of London, then your answer is “definitely no” and my answer is “Maybe? I dunno. I don’t understand your question.

But I assume you do agree that random strings don't refer to anyone, that clouds don't represent anyone they accidentally resemble, that a fist by itself doesn't represent anything etc. An accidentally created map seems to be the same kind of case, just vastly less likely. So treating them differently doesn't seem very coherent.

Can you please taboo the word ‘representation’ and ask it again?” :-P

Well... That's hardly possible when analysing the concept of representation, since this is just the meaning of the word "represents". Of course nobody is forcing you to do it when you find it pointless, which is okay.

comment by Steven Byrnes (steve2152) · 2024-10-03T17:22:17.646Z

Of course nobody is forcing you to do it when you find it pointless, which is okay.

Yup! :) :)

The algorithm analysis method arguably doesn't really fit here, since it requires access to the algorithm, which isn't available in case of the brain.

Oh I have lots and lots of opinions about what algorithms are running in the brain. See my many dozens of blog posts about neuroscience. Post 1 has some of the core pieces: I think there’s a predictive (a.k.a. self-supervised) learning algorithm, that the trained model (a.k.a. generative model space) for that learning algorithm winds up stored in the cortex, and that the generative model space is continually queried in real time by a process that amounts to probabilistic inference. Those are the most basic things, but there’s a ton of other bits and pieces that I introduce throughout the series as needed, things like how “valence” fits into that algorithm, how “valence” is updated by supervised learning and temporal difference learning, how interoception fits into that algorithm, how certain innate brainstem reactions fit into that algorithm, how various types of attention fit into that algorithm … on and on.

Of course, you don’t have to agree! There is never a neuroscience consensus. Some of my opinions about brain algorithms are close to neuroscience consensus, others much less so. But if I make some claim about brain algorithms that seems false, you’re welcome to question it, and I can explain why I believe it. :)

…Or separately, if you’re suggesting that the only way to learn about what an algorithm will do when you run it is to actually run it on an actual computer, then I strongly disagree. It’s perfectly possible to just write down pseudocode, think for a bit, and conclude non-obvious things about what that pseudocode would do if you were to run it. Smart people can reach consensus on those kinds of questions without ever running the code. It’s basically math, not so different from the fact that mathematicians are perfectly capable of reaching consensus about math claims without relying on computer-verified formal proofs as ground truth. Right?

As an example, “the locker problem” is basically describing an algorithm, and asking what happens when you run that algorithm. That question is readily solvable without running any code on a computer, and indeed it would be perfectly reasonable to find that problem on a math test where you don’t even have computer access. Does that help? Or sorry if I’m misunderstanding your point.
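For concreteness, here is the locker problem in its common textbook form (assumed here, since the comment doesn't restate it: n lockers start closed, and for k = 1..n, pass k toggles every k-th locker). The pen-and-paper argument: locker i is toggled once for each divisor of i, and divisors pair up as (d, i/d) except when d = i/d, so locker i ends open exactly when i is a perfect square. The Python sketch only cross-checks that conclusion by brute force; it is not needed to reach it.

```python
import math


def open_lockers(n: int) -> list[int]:
    """Brute-force simulation: n closed lockers; pass k toggles every k-th locker."""
    is_open = [False] * (n + 1)  # index 0 unused
    for k in range(1, n + 1):
        for locker in range(k, n + 1, k):
            is_open[locker] = not is_open[locker]
    return [i for i in range(1, n + 1) if is_open[i]]


def predicted_open(n: int) -> list[int]:
    """Pen-and-paper answer: a locker ends open iff it has an odd number of
    divisors, i.e. iff its number is a perfect square."""
    return [i * i for i in range(1, math.isqrt(n) + 1)]


assert open_lockers(100) == predicted_open(100)
print(predicted_open(100))  # [1, 4, 9, 16, 25, 36, 49, 64, 81, 100]
```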

comment by Rafael Harth (sil-ver) · 2024-09-27T10:38:28.762Z