[Intuitive self-models] 1. Preliminaries

Post by Steven Byrnes (steve2152) · 2024-09-19T13:45:27.976Z

Contents

- 1.1 Summary & Table of Contents
  - 1.1.1 Summary & Table of Contents—for the whole series
  - 1.1.2 Summary & Table of Contents—for this first post in particular
- 1.2 Generative models and probabilistic inference
  - 1.2.1 Example: bistable perception
  - 1.2.2 Probabilistic inference
  - 1.2.3 The thing you “experience” is the generative model (a.k.a. “intuitive model”)
  - 1.2.4 Explanation of bistable perception
  - 1.2.5 Teaser: Unusual states of consciousness as a version of bistable perception
- 1.3 Casting judgment upon intuitive models
  - 1.3.1 “Is the intuitive model real, or is it fake?”
  - 1.3.2 “Is the intuitive model veridical, or is it non-veridical?”
    - 1.3.2.1 Non-veridical intuitive models are extremely common and unremarkable
    - 1.3.2.2 …But of course it’s good if you’re intellectually aware of how veridical your various intuitive models are
  - 1.3.3 “Is the intuitive model healthy, or is it pathological?”
- 1.4 Why does the predictive learning algorithm build generative models / concepts related to what’s happening in your own mind?
  - 1.4.1 Further notes on the path from predictive learning algorithms to intuitive self-models
- 1.5 Appendix: Some terminology I’ll be using in this series
  - 1.5.1 Learning algorithms and trained models
  - 1.5.2 Concepts, models, thoughts, subagents
- 1.6 Appendix: How does this series fit into Philosophy Of Mind?
  - 1.6.1 Introspective self-reports as a “straightforward” scientific question
  - 1.6.2 Are explanations-of-self-reports a first step towards understanding the “true nature” of consciousness, free will, etc.?
  - 1.6.3 Related work
- 1.7 Conclusion

1.1 Summary & Table of Contents

This is the first of a series of eight blog posts. Here’s an overview of the whole series, and then we’ll jump right into the first post!

1.1.1 Summary & Table of Contents—for the whole series

This is a rather ambitious series of blog posts, in that I’ll attempt to explain what’s the deal with consciousness, free will, hypnotism, enlightenment, hallucinations, flow states, dissociation, akrasia, delusions, and more.

The starting point for this whole journey is very simple:

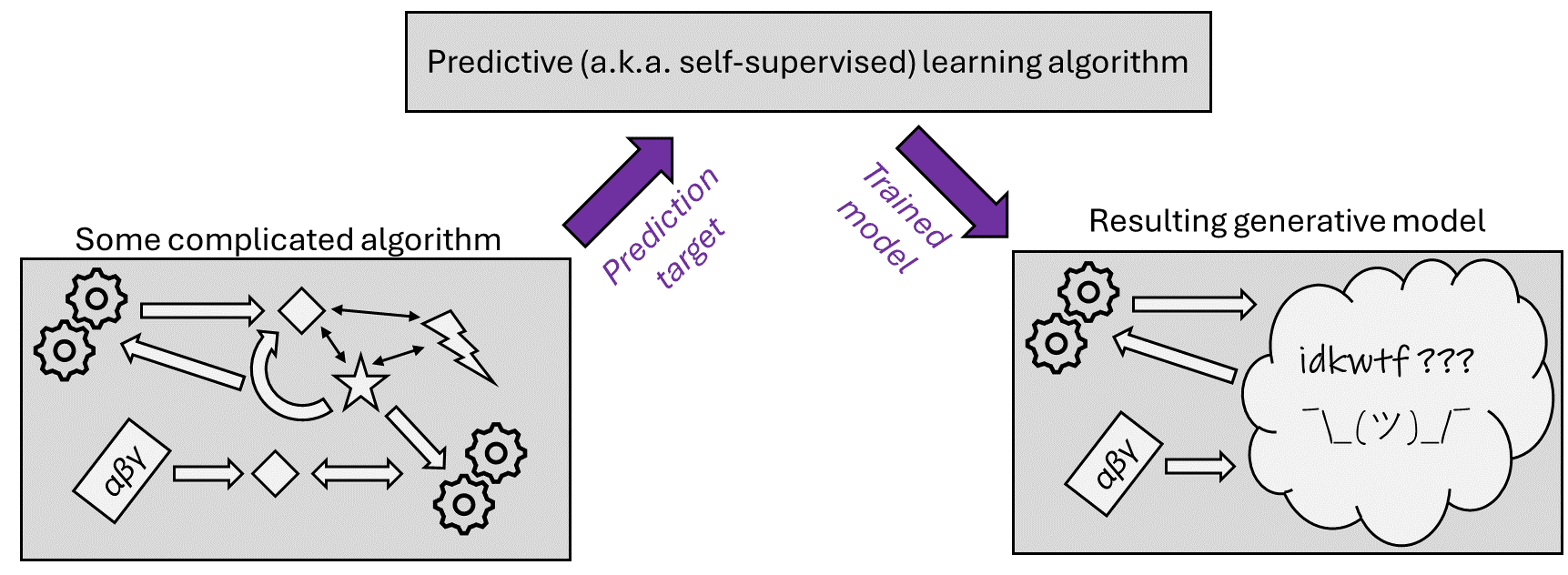

- The brain has a predictive (a.k.a. self-supervised) learning algorithm.

- This algorithm builds generative models (a.k.a. “intuitive models”) that can predict incoming data.

- It turns out that, in order to predict incoming data, the algorithm winds up not only building generative models capturing properties of trucks and shoes and birds, but also building generative models capturing properties of the brain algorithm itself.

Those latter models, which I call “intuitive self-models”, wind up including ingredients like conscious awareness, deliberate actions, and the sense of applying one’s will.

That’s a simple idea, but exploring its consequences will take us to all kinds of strange places—plenty to fill up an eight-post series! Here’s the outline:

- Post 1 (Preliminaries) gives some background on the brain’s predictive learning algorithm, how to think about the “intuitive models” built by that algorithm, how intuitive self-models come about, and the relation of this whole series to Philosophy Of Mind.

- Post 2 (Conscious Awareness) proposes that our intuitive self-models include an ingredient called “conscious awareness”, and that this ingredient is built by the predictive learning algorithm to represent a serial aspect of cortex computation. I’ll discuss ways in which this model is veridical (faithful to the algorithmic phenomenon that it’s modeling), and ways in which it isn’t. I’ll also talk about how intentions and decisions fit into that framework.

- Post 3 (The Homunculus) focuses more specifically on the intuitive self-model that almost everyone reading this post is experiencing right now (as opposed to the other possibilities covered later in the series), which I call the Conventional Intuitive Self-Model. In particular, I propose that a key player in that model is a certain entity that’s conceptualized as actively causing acts of free will. Following Dennett, I call this entity “the homunculus”, and relate it to intuitions around free will and sense-of-self.

- Post 4 (Trance) builds a framework to systematize the various types of trance, from everyday “flow states” to intense possession rituals with amnesia. I try to explain why these states have the properties they do, and to reverse-engineer the various tricks that people use to induce trance in practice.

- Post 5 (Dissociative Identity Disorder, a.k.a. Multiple Personality Disorder) is a brief opinionated tour of this controversial psychiatric diagnosis. Is it real? Is it iatrogenic? Why is it related to borderline personality disorder (BPD) and trauma? What do we make of the wild claim that each “alter” can’t remember the lives of the other “alters”?

- Post 6 (Awakening / Enlightenment / PNSE) is about a type of intuitive self-model, typically accessed via extensive meditation practice, that is quite different from the conventional intuitive self-model. I offer a hypothesis about what exactly the difference is, and why that difference has the various downstream effects that it has.

- Post 7 (Hearing Voices, and Other Hallucinations) talks about factors contributing to hallucinations, although I argue against drawing a deep distinction between hallucinations versus “normal” inner speech and imagination. I discuss both psychological factors like schizophrenia and BPD, and cultural factors, including some critical discussion of Julian Jaynes’s Origin of Consciousness In The Breakdown Of The Bicameral Mind.

- Post 8 (Rooting Out Free Will Intuitions) is, in a sense, the flip side of Post 3. Post 3 centers around the suite of intuitions related to free will. What are these intuitions? How did these intuitions wind up in my brain, even when they have (I argue) precious little relation to real psychology or neuroscience? But Post 3 left a critical question unaddressed: if free-will-related intuitions are the wrong way to think about the everyday psychology of motivation (desires, urges, akrasia, willpower, self-control, and more), then what’s the right way to think about all those things? This post offers a framework to fill that gap.

1.1.2 Summary & Table of Contents—for this first post in particular

This post will lay groundwork that I’ll be using throughout the series.

- Section 1.2 uses the fun optical-illusion-type phenomenon of “bistable perception” to illustrate the idea that the cortex learns and stores a space of generative models, and processes incoming data by searching through that space via probabilistic inference. (I’ll use “generative model” and “intuitive model” interchangeably in this series.)

- Section 1.3 talks about three very different ways that people can judge each other’s intuitive models:

- “Is the intuitive model real, or fake?” is about honestly reporting your own internal experience, versus lying or misremembering;

- “Is the intuitive model veridical, or non-veridical?” is about map–territory correspondence: “veridical” means that the intuitive model (map) corresponds to some kind of external truth (territory).

- “Is the intuitive model healthy, or pathological?” is about a person’s mental health and well-being.

- Section 1.4 makes the jump from “intuitive model” to “intuitive self-model”: why and how does the brain’s predictive learning algorithm wind up building generative models of aspects of those very same brain algorithms?

- Section 1.5 is an appendix going through some terminology and assumptions that I use when talking about probabilistic inference and intuitive models.

- Section 1.6 is another appendix about how this series relates to Philosophy Of Mind. I’ll argue that this series is highly relevant background for anyone trying to understand the true nature of consciousness, free will, and so on. But the series itself is firmly restricted in scope to questions that can be resolved within the physical universe, including physics, neuroscience, algorithms, and so on. As for “the true nature of consciousness” and similar questions, I will pass that baton to the philosophers—and then run away screaming.

1.2 Generative models and probabilistic inference

1.2.1 Example: bistable perception

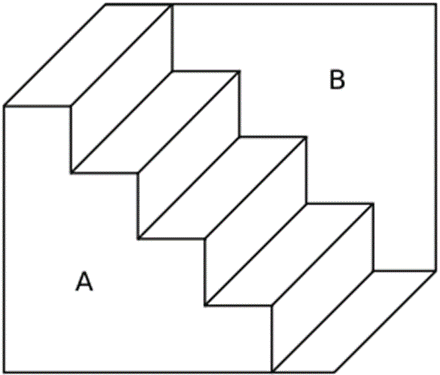

Here are two examples of bistable visual perception:

The steps are on the floor or the ceiling. The dancer is spinning clockwise or counterclockwise. You’ll see one or the other. Your mind may spontaneously switch after a while. If it doesn’t, you might be able to switch it deliberately, via hard-to-describe intuitive moves that involve attention control, moving your eyes, maybe blurring your vision at times, or who knows.

(Except that I can’t for the life of me get the dancer to go counterclockwise! I can sometimes get her to go counterclockwise in my peripheral vision, but as soon as I look right at her, bam, clockwise every time. Funny.)

1.2.2 Probabilistic inference

To understand what’s going on in bistable perception, the key is that perception involves probabilistic inference.[1] In the case of vision:

- Your brain has a giant space of possible generative models[2] that map from underlying states of the world (e.g. “there’s a silhouette dancer with thus-and-such 3D shape spinning clockwise against a white background etc.”) to how the photoreceptor cells would send signals into the brain (“this part of my visual field is bright, that part is dark, etc.”).

- …But oops, that map is the wrong way around, because the brain does not know the underlying state of the world, but it does know what the photoreceptor cells are doing.

- …So your brain needs to invert that map. And that inversion operation is called probabilistic inference.

Basically, part of your brain (the cortex and thalamus, more-or-less) is somehow searching through the generative model space (which was learned over the course of prior life experience) until it finds a generative model (“posterior”) that’s sufficiently compatible with both what the photoreceptor cells are doing (“data”) and what is a priori plausible according to the generative model space (“prior”).
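To make “inverting the map” concrete, here is a minimal sketch of exact Bayesian inference over a tiny, made-up hypothesis space. The hypotheses, priors, and likelihood numbers are all hypothetical, and exact enumeration is just the simplest possible illustration; how the cortex actually performs (or approximates) this search is deliberately left open.

```python
# Toy probabilistic inference over a tiny, hypothetical hypothesis space.
# Exact enumeration is for illustration only; it is not a claim about how
# the brain implements (or approximates) the inversion.


def posterior(prior: dict[str, float], likelihood: dict[str, float]) -> dict[str, float]:
    """Invert generative models: P(model | data) is proportional to P(data | model) * P(model)."""
    unnormalized = {h: prior[h] * likelihood[h] for h in prior}
    total = sum(unnormalized.values())
    if total == 0:
        raise ValueError("no hypothesis is compatible with the data")
    return {h: w / total for h, w in unnormalized.items()}


# Hypothetical numbers: how plausible each world state is a priori ("prior"),
# and how well each one predicts the current photoreceptor pattern ("data").
prior = {"dancer": 0.2, "chair": 0.5, "tree": 0.3}
likelihood = {"dancer": 0.9, "chair": 0.01, "tree": 0.05}

post = posterior(prior, likelihood)
best = max(post, key=post.get)
print(best, round(post[best], 3))
```

Note that “chair” has the highest prior but still loses, because it explains the data poorly: the winning model has to be compatible with both the data and the prior.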

You might be wondering: How does that process work? Is that part of the brain literally implementing approximate Bayesian inference, or is it effectively doing probabilistic inference via some superficially-different process that converges to it (or has similar behavior for some other reason)? Exactly how is the generative model space learned and updated? …Sorry, but I won’t answer those kinds of questions in this series. Not only that, but I encourage everyone else to also not answer those kinds of questions, because I firmly believe that we shouldn’t invent brain-like AGI until we figure out how to use it safely.

1.2.3 The thing you “experience” is the generative model (a.k.a. “intuitive model”)

A key point (more on which in the next post) is that “experience” corresponds to the active generative model, not to what the photoreceptor cells are doing etc.

You might be thinking: “Wrong! I know exactly what my photoreceptor cells are doing! This part of the visual field is white, that part is black, etc.—those things are part of my conscious awareness! Not just the parameters of a high-level generative model, i.e. the idea that I’m looking at a spinning dancer!”

I have two responses:

- First of all, you’re almost certainly more oblivious to what your photoreceptor cells are doing than you realize—see the checker shadow illusion, or the fact that most people don’t realize that their peripheral vision has terrible resolution and terrible color perception and makes faces look creepy, or the fact that the world seems stable even as your eyes saccade five times a second, etc.

- Second of all, if you say “X is part of my conscious experience”, then I can and will immediately reply “OK, well if that’s true, then I guess X is part of your active generative model”. In particular, there’s no rule that says that the generative model must be only high-level-ish things like “dancer” or “chair”, and can’t also include low-level-ish things like “dark blob of such-and-such shape at such-and-such location”.

1.2.4 Explanation of bistable perception

Finally, back to those bistable perception examples from §1.2.1 above. These are constructed such that, when you look at them, there are two different generative models that are about equally good at explaining the visual data, and also similarly plausible a priori. So your brain can wind up settling on either of them. And that’s what you experience.
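Here is a toy version of why the Spinning Dancer is ambiguous, simplified (as an assumption) to a single point orbiting a vertical axis, viewed under orthographic projection. Rotating one way, or rotating the opposite way with depth mirrored, produces exactly the same 2D screen trajectory, so the data can’t favor either generative model.

```python
import math


def point_3d(t: float, omega: float, direction: int, r: float = 1.0) -> tuple[float, float]:
    """(x, z) position of a point orbiting a vertical axis; z is depth.

    direction=+1 and direction=-1 are the two opposite senses of rotation.
    """
    angle = direction * omega * t
    return (r * math.cos(angle), r * math.sin(angle))


def project(point: tuple[float, float]) -> float:
    """Orthographic projection onto the screen: keep x, discard depth z."""
    x, _depth = point
    return x


times = [0.1 * i for i in range(60)]
path_a = [point_3d(t, omega=2.0, direction=+1) for t in times]
path_b = [point_3d(t, omega=2.0, direction=-1) for t in times]

screen_a = [project(p) for p in path_a]
screen_b = [project(p) for p in path_b]

# Same 2D data from two different 3D hypotheses (depth is mirrored between them).
same_on_screen = all(math.isclose(a, b, abs_tol=1e-12) for a, b in zip(screen_a, screen_b))
different_in_3d = any(abs(pa[1] - pb[1]) > 0.1 for pa, pb in zip(path_a, path_b))
print(same_on_screen, different_in_3d)
```

Because P(data | one direction) equals P(data | the other), Bayes’ rule leaves the posterior ratio equal to the prior ratio, and either model is an acceptable place for inference to settle.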

Again, the thing you “experience” is the generative model (e.g. the dancer spinning clockwise), not the raw sense data (patterns of light on your retina).

…And that’s the explanation of bistable perception! I don’t think there’s anything particularly deep or mysterious about it. That said, make sure you’re extremely comfortable with bistable perception, because we’re going to take that idea into much more mind-bending directions next.

1.2.5 Teaser: Unusual states of consciousness as a version of bistable perception

In this series, among other topics, I’ll be talking about “hearing voices”, “attaining enlightenment”, “entering trance”, dissociative identity disorder, and more. I propose that we can think of all these things as a bit like bistable perception—these are different generative models that are compatible with the same sensory data.

Granted, the analogy isn’t perfect. For one thing, the Spinning Dancer is just about perception, whereas a hypnotic trance (for example) impacts both perception and action. For another thing, the “sensory data” for the Spinning Dancer is just visual input—but what’s the analogous “sensory data” in the case of trance and so on? These are good questions, and I’ll get to them in future posts.

But meanwhile, I think the basic idea is absolutely right:

- The Spinning Dancer is actually pixels on a screen, but we can experience it as either clockwise or counterclockwise motion—two different generative models.

- Inner monologue is actually certain neurons and synapses doing a certain thing, but we can experience it as either coming from ourselves or coming from a disembodied voice—two different generative models.

- Motor control is actually certain neurons and synapses doing some other thing, but we can experience it as either “caused by our own volition” or “caused by the hypnotist’s volition”—two different generative models.

- Etc.

1.3 Casting judgment upon intuitive models

I know what you’re probably thinking: “OK, so people have intuitive models. How do I use that fact to judge people?” (Y’know, the important questions!) So in this section, I will go through three ways that people cast judgment upon themselves and others based on their intuitive models: “real” vs “fake”, “veridical” vs “non-veridical”, and “healthy” vs “pathological”. Only one of these three (veridical vs non-veridical) will be important for this series, but boy is that one important, so pay attention to that part.

1.3.1 “Is the intuitive model real, or is it fake?”

Fun fact: I consistently perceive the Spinning Dancer going clockwise.

…Is that fun fact “real”?

Yes!! I’m not trolling you—I’m telling you honestly what I experience. The dancer’s clockwise motion is my “real” experience, and it directly corresponds to a “real” pattern of neurons firing in my “real” brain, and this pattern is objectively measurable in principle (and maybe even in practice) if you put a sufficiently advanced brain scanner onto my head.

So, by the same token, suppose someone tells me that they hear disembodied voices, and suppose they’re being honest rather than trolling me. Is that “real”? Yes—in exactly the same sense.

1.3.2 “Is the intuitive model veridical, or is it non-veridical?”

When I say “X is a veridical model of Y”, I’m talking about map–territory correspondence:

- X is part of the “map”—i.e., part of an intuitive model.

- Y is part of the “territory”—i.e., it’s some kind of objective, observer-independent thing.

- “X is a veridical model of Y” means roughly that there’s a straightforward, accurate correspondence between properties of X and corresponding properties of Y.

(Veridicality is a spectrum rather than a binary: models can be more or less veridical.)

Simple example: I have an intuitive model of my sock. It’s pretty veridical! My sock actually exists in the world of atoms, and by and large there’s a straightforward and faithful correspondence between aspects of my intuitive model of the sock, and aspects of how those sock-related atoms are configured.

Conversely, Aristotle had an intuitive model of the sun, but in most respects it was not a veridical model of the sun. For example, his intuitive model said that the sun was smooth and unblemished and attached to a rotating transparent sphere made of aether.[3]

Here’s a weirder example, which will be relevant to this series. I have an intuitive concept of the mergesort algorithm. Is that intuitive model “veridical”? First we must ask: a veridical model of what? Well, it’s not a veridical model of any specific atoms in the real world. But it is a veridical model of a thing in the Platonic, ethereal realm of algorithms! That’s a bona fide “territory”, which is both possible and useful for us to “map”. So there’s a meaningful notion of veridicality there.

When “veridical” needs nuance, I’ll just try to be specific in what I mean. For example, suppose intuitive concept X faithfully captures the behavior of algorithm Y, but X is intuitively conceptualized as a spirit floating in the room, rather than as an algorithm within the Platonic, ethereal realm of algorithms. Well then, I would just say something like: “X has good veridical correspondence to the behavior of algorithm Y, but the spirit- and location-related aspects of X do not veridically correspond to anything at all.”

(If that example seems fanciful, just wait for the upcoming posts!)

OK, hopefully you now know what I mean by the word “veridical”. I haven’t provided any rigorous and universally-applicable definition, because I don’t have one, sorry. But I think it will be clear enough.

Next, an important point going forward:

1.3.2.1 Non-veridical intuitive models are extremely common and unremarkable

…And I’m not just talking about your intuitive models of deliberate optical illusions, or of yet-to-be-discovered scientific phenomena. Here are some more everyday examples:

- Do you see the Spinning Dancer going clockwise? Sorry, that’s not a veridical model of the real-world thing you’re looking at. Counterclockwise? Sorry, that’s also not a veridical model of the real-world thing you’re looking at! A veridical model of the real-world thing you’re looking at would feel like a 2D pattern of changing pixels on a flat screen; after all, nothing in the real world of atoms is rotating in 3D!

- If you know intellectually that you’re not looking at a 3D spinning thing, then good for you, see next subsection. But that doesn’t change the fact that you’re experiencing an intuitive model involving a 3D spinning thing.

- Think of everything you’ve ever seen, or learned in school, that you found surprising, confusing, or unintuitive at the time you first saw it. Newton’s First Law! Magnets! Siphons! Gyroscopes! Quantum mechanics! Special Relativity! Antibonding orbitals! …If you found any of those unintuitive, then you must have had (and perhaps still have) non-veridical aspects of your intuitive models, that this new information was violating.

- Does the moon seem to follow you when you walk at night? If so, then your intuitive model has the moon situated at the wrong distance from Earth.

1.3.2.2 …But of course it’s good if you’re intellectually aware of how veridical your various intuitive models are

It’s good to know intellectually that you have a non-veridical intuitive model, for the same humdrum reason that it’s good to know anything else that’s true. True beliefs are good. My intuitive models still say that the moon follows me at night, and that the spinning dancer spins, but I know intellectually that neither of those intuitive models is veridical. And that’s good.

By the same token, I endorse the conventional wisdom that if someone is “hearing voices”—in the sense of having an intuitive model that a disembodied voice is coming from 1 meter behind her head—then that’s pretty far on the non-veridical side of the spectrum. And if she denies that—if she says “the voice is there for sure—if you get the right scientific equipment and measure what’s happening 1 meter behind my head, then you’ll find it!”, then I say: “sorry but you’re wrong”.

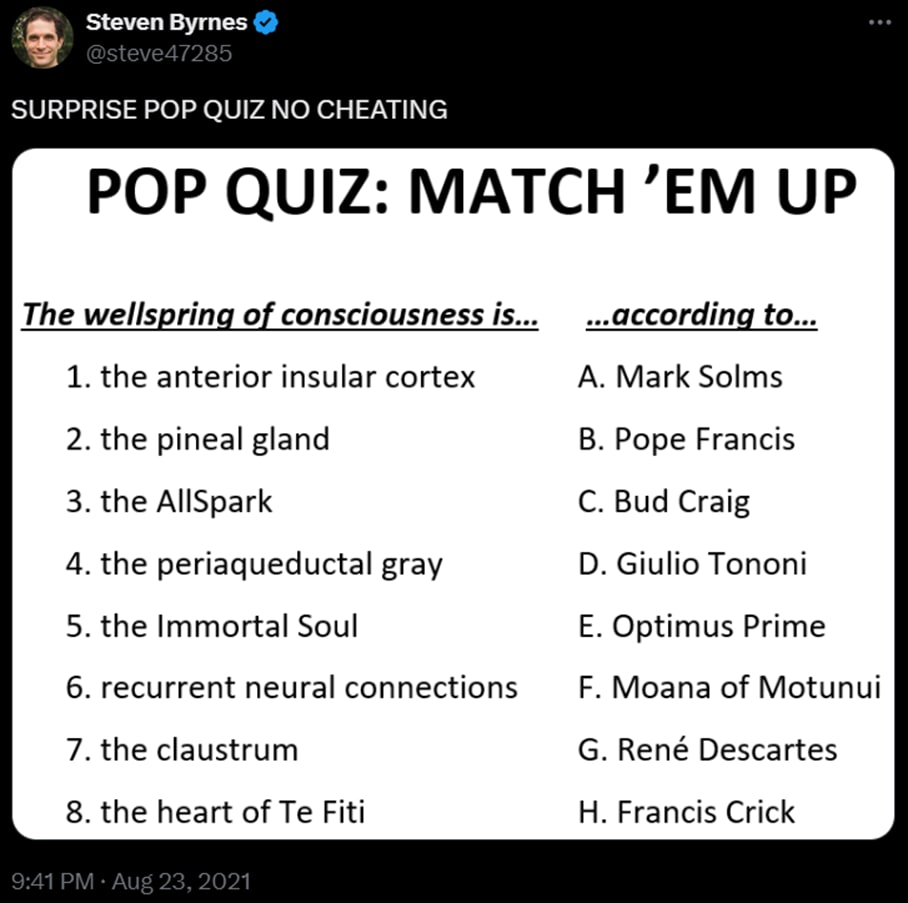

Laugh at her all you want, but then go look in the mirror, because in my opinion everyone has not-terribly-veridical intuitive models of their metacognitive world (the world of consciousness, free will, desires, and so on), and practically all of us incorrectly believe those models to be more veridical than they really are, in various ways. Thus, in my spicy opinion, when Francis Crick (for example) says that phenomenal consciousness is in the claustrum, he’s being confused in a fundamentally similar way to that made-up person above who says that a disembodied voice is in the empty space behind her head. (I’m making a bold claim without defending it; more on this topic in later posts, but note that this kind of thing is not the main subject of this series.)

1.3.3 “Is the intuitive model healthy, or is it pathological?”

As above, there’s nothing pathological about having non-veridical intuitive models.

In the case of the spinning dancer, it’s quite the opposite—if your intuitive model is a veridical model of the thing you’re looking at—i.e., if it doesn’t look like 3D spinning at all, but rather looks like patterns of pixels on a flat screen—then that’s the situation where you might consider checking with a neurologist!!

So, how about hearing voices? According to activists, people who hear voices can find it a healthy and beneficial part of their lives, as long as other people don’t judge and harass them for it—see Eleanor Longden’s TED talk. OK, cool, sounds great, as far as I know. As long as they’re not a threat to themselves or others, let them experience the world how they like!

I’ll go further: if those people think that the voices are veridical—well, they’re wrong, but oh well, whatever. In and of itself, a wrong belief is nothing to get worked up about, if it’s not ruining their life. Pick any healthy upstanding citizen, and it’s a safe bet that they have at least one strong belief, very important to how they live their life, that ain’t true.

(And as above, if you want to judge or drug people for thinking that their intuitive self-models are more veridical than the models really are, then I claim you’ll be judging or drugging 99.9…% of the world population, including many philosophers-of-mind, neuroscientists, etc.)

That’s how I think about all the other unusual states of consciousness too—trance, enlightenment, tulpas, you name it. Is it working out? Then great! Is it creating problems? Then try to change something! Don’t ask me how—I have no opinion.

1.4 Why does the predictive learning algorithm build generative models / concepts related to what’s happening in your own mind?

For example, I have a learned concept in my world-model of “screws”.

Sometimes I’ll think about that concept in the context of the external world: “there are screws in the top drawer”.

But other times I’ll think about that concept in the context of my own inner world: “I’m thinking about screws right now”, “I’m worried about the screws”, “I can never remember where I left the screws”, etc.

If the cortex “learns from scratch”, as I believe, then we need to explain how these models of my inner world get built by a predictive learning algorithm.

To start with: In general, if world-model concept X is active, it tends to invoke (or incentivize the creation of) an “explanation” of X—i.e., an upstream model that explains the fact that X is active—and which can thus help predict when X will be active again in the future.

This is just an aspect of predictive (a.k.a. self-supervised) learning from sensory inputs: the cortex learning algorithm sculpts the generative world-model to predict when the concept X is about to be active, just as it sculpts the world-model to predict when raw sensory inputs are about to be active.

For example, if a baby frequently sees cars, she would first learn a “car” concept that helps predict what car-related visual inputs are doing after they first appear. But eventually the predictive learning algorithm would need to develop a method for anticipating when the “car” concept itself was about to be active. For example, the baby would learn to expect cars when she looks at a street.

For a purely passive observer, that’s the whole story, and there would be no algorithmic force whatsoever for developing self-model / inner-world concepts. If the “car” concept is active right now, there must be cars in the sensory input stream, or at least something related to cars. Thus, when the algorithm finds models that help predict that the “car” concept is about to be active, those models will always be exogenous—they’ll track some aspect of how the world works outside my own head. “Cars are often found on highways.” “Cars are rarely found in somebody’s mouth.” Etc.

However, for an active agent, concepts in my world-model are often active for endogenous reasons. Maybe the “car” concept is active in my mind because it spontaneously occurred to me that it would be a good idea to go for a drive right about now. Or maybe it’s on my mind because I’m anxious about cars. Etc. In those cases, the same predictive learning algorithm as above—the one sculpting generative models to better anticipate when my “car” concept will be active—will have to construct generative models of what’s going on in my own head. That’s the only possible way to make successful predictions in those cases.

…So that’s all that’s needed. If any system has both a capacity for endogenous action (motor control, attention control, etc.), and a generic predictive learning algorithm, that algorithm will be automatically incentivized to develop generative models about itself (both its physical self and its algorithmic self), in addition to (and connected to) models about the outside world.
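The passive-versus-active argument can be sketched as a toy simulation. Everything here is a hypothetical stand-in: “street” is an exogenous cause of the “car” concept activating, “urge” is an endogenous one, and a simple counting predictor plays the role of the predictive learning algorithm. For the passive observer, a model of the outside world alone predicts the concept perfectly; for the active agent, it only does so once it also models something inside the head.

```python
import random
from collections import Counter, defaultdict


def simulate(n: int, active: bool, seed: int = 0):
    """Generate (features, car_concept_active) pairs for a toy agent.

    'street' is exogenous (something outside the head); 'urge' is endogenous
    (e.g. it occurs to me that a drive would be nice). A passive observer has no urges.
    """
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        street = rng.random() < 0.3
        urge = active and rng.random() < 0.2
        data.append(({"street": street, "urge": urge}, street or urge))
    return data


def predictive_accuracy(data, features):
    """Predict the most common outcome for each combination of the given features,
    learned by counting, and report accuracy (on the same data, for simplicity)."""
    counts = defaultdict(Counter)
    for x, y in data:
        counts[tuple(x[f] for f in features)][y] += 1
    hits = sum(
        counts[tuple(x[f] for f in features)].most_common(1)[0][0] == y
        for x, y in data
    )
    return hits / len(data)


passive = simulate(1000, active=False)
active = simulate(1000, active=True)
print(predictive_accuracy(passive, ["street"]))         # exogenous model suffices
print(predictive_accuracy(active, ["street"]))          # exogenous model falls short
print(predictive_accuracy(active, ["street", "urge"]))  # needs a model of the inner world
```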

1.4.1 Further notes on the path from predictive learning algorithms to intuitive self-models

- I said that, for any active agent with a predictive learning algorithm, that algorithm is incentivized to develop generative models of the self. But that doesn’t mean that the algorithm will necessarily succeed at developing such generative models. As Machine Learning researchers know, it’s perfectly possible to train a model on some task, only to find that it can’t actually learn it. In fact, it’s impossible for the intuitive models to perfectly predict the outputs of the brain algorithm (of which they themselves are a part).[4] As a result, the generative models wind up modeling some aspects of brain algorithms, but also encapsulating other aspects into entities whose internals cannot themselves be deterministically modeled or predicted. As a teaser for future posts, these entities wind up closely related to key concepts like “my true self”, “free will”, and so on; but hold that thought until Post 3.

- The trained model that results from predictive learning is generally underdetermined—it can wind up depending on fine details, like the order that data is presented, learning algorithm hyperparameters that vary amongst different people, and so on. Indeed, as I’ll discuss in this series, different people can wind up with rather different intuitive self-models: there are people who hear voices, people who have achieved Buddhist enlightenment, and so on.

- Relatedly, all vertebrates, and presumably many invertebrates too, are also active agents with predictive learning algorithms in their brains, and hence their predictive learning algorithms are also incentivized to build intuitive self-models. But I’m sure that the intuitive self-model in a zebrafish pallium (if any) is wildly different from the intuitive self-model in an adult human cortex.
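One familiar way to see why a model embedded in the very system it predicts can’t be guaranteed perfect is a diagonal-style construction, sketched below. This is an illustrative assumption, not necessarily the reasoning in footnote [4]; the agent is contrarian by design, which real brains are not. The point is only that once a system’s output can depend on its own self-prediction, perfect self-prediction can fail.

```python
from typing import Callable

# Hypothetical: a "self-model" is anything that predicts the agent's next choice.
SelfModel = Callable[[], bool]


def contrarian_agent(self_model: SelfModel) -> bool:
    """A toy agent whose choice is computed *after* consulting its own self-model.

    Its rule is deliberately adversarial: do the opposite of the prediction.
    """
    return not self_model()


candidate_self_models: dict[str, SelfModel] = {
    "predicts True": lambda: True,
    "predicts False": lambda: False,
}

for name, model in candidate_self_models.items():
    prediction, actual = model(), contrarian_agent(model)
    print(f"{name}: predicted {prediction}, actual {actual}, correct={prediction == actual}")
```

Any deterministic self-model that returns an answer is wrong about this agent by construction. A self-model that tried to simulate the agent exactly would have to simulate itself simulating the agent, and so on without end (in Python, a `RecursionError`).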

1.5 Appendix: Some terminology I’ll be using in this series

I’m sure everything I write will be crystal clear in context (haha), but just in case, here are some of the terms and assumptions that I’ll be using throughout the series, related to how I think about probabilistic inference and generative modeling in the brain.

1.5.1 Learning algorithms and trained models

In this series, “learning algorithms” always means within-lifetime learning algorithms that are designed by evolution and built directly into the brain—I’m not talking about genetic evolution, or cultural evolution, or learned metacognitive strategies like spaced repetition.

In Machine Learning, people talk about the distinction between (A) learning algorithms versus (B) the trained models that those learning algorithms gradually build. I think this is a very useful distinction for the brain too; see my discussion at “Learning from scratch” in the brain. (The brain also does things that are related to neither learning algorithms nor trained models, but those things won’t be too relevant for this series.) If you’ve been reading my other posts, you’ll notice that I usually spend a lot of time talking about (certain aspects of) brain learning algorithms, and very little time talking about trained models. But this series is an exception: my main focus here is within the trained model level, i.e., the specific content and structure of the generative models in the cortex of a human adult.

Predictive learning (also called “self-supervised learning”) is any learning algorithm that tries to build a generative model that can predict what’s about to happen (e.g. imminent sensory inputs). When the generative model gets a prediction wrong (i.e., is surprised), the learning algorithm updates the generative model, or builds a new one, such that it will be less surprised in similar situations in the future. As I’ve discussed here, I think predictive learning is a very important learning algorithm in the brain (more specifically, in the cortex and thalamus). But it’s not the only learning algorithm: I think reinforcement learning is a separate thing, still centrally involving the cortex and thalamus, but this time also involving the basal ganglia.

(Learning from culture is obviously important, but I claim it’s not a separate learning algorithm, but rather an emergent consequence of predictive learning, reinforcement learning, and innate social drives, all working together.)
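The “update when surprised” loop of predictive learning can be sketched with the simplest possible generative model: a single number predicting whether the next binary input is 1. The delta-rule update and the input stream are illustrative assumptions, not a claim about the brain’s actual learning rule. Surprise is measured as surprisal, i.e. the negative log-probability the model assigned to what actually happened.

```python
import math


def surprisal(p_one: float, outcome: int) -> float:
    """Surprise (in nats) at a binary input, given the predicted P(input == 1)."""
    return -math.log(p_one if outcome == 1 else 1.0 - p_one)


def predictive_learning(stream, p_one: float = 0.5, lr: float = 0.1):
    """Online predictor of the next binary input.

    After each input, the prediction is nudged toward what actually happened
    (a delta-rule / moving-average update), so similar inputs surprise it less.
    Returns the final prediction and the surprise recorded at each step.
    """
    surprises = []
    for outcome in stream:
        surprises.append(surprisal(p_one, outcome))  # score the prediction first
        p_one += lr * (outcome - p_one)              # then learn from the error
    return p_one, surprises


stream = [1, 1, 0, 1, 1, 1, 1, 0, 1, 1] * 20  # hypothetical input, 80% ones
p_final, surprises = predictive_learning(stream)
early = sum(surprises[:10]) / 10
late = sum(surprises[-10:]) / 10
print(f"final P(1)={p_final:.2f}, mean surprise early={early:.3f}, late={late:.3f}")

# Same inputs, different order -> different trained model (cf. section 1.4.1).
print(predictive_learning([1, 0], lr=0.5)[0], predictive_learning([0, 1], lr=0.5)[0])
```

The last line illustrates, in miniature, the underdetermination point from §1.4.1: with a constant learning rate, the same two inputs presented in opposite orders leave the model at 0.375 versus 0.625.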

1.5.2 Concepts, models, thoughts, subagents

An “intuitive model”—which I’m using synonymously with “generative model”—constitutes a belief / understanding about what’s going on, and it issues corresponding predictions / expectations about what’s going to happen next. Intuitive models can say what you’re planning, seeing, remembering, understanding, attempting, doing, etc. If there’s exactly one active generative model right now in your cortex—as opposed to your being in a transient state of confusion—then that model would constitute the thought that you’re thinking.

The “generative model space” would be the set of all generative models that you’ve learned, along with how a priori likely they are. I have sometimes called that by the term “world-model”, but I’ll avoid that in this series, since it’s actually kinda broader than a world-model—it not only includes everything you know about the world, but also everything you know about yourself, and your habits, skills, strategies, implicit expectations, and so on.

A “concept”, a.k.a. “generative model piece”, would be something which isn’t (usually) a generative model in and of itself, but more often forms a generative model by combining with other concepts in appropriate relations. For example, “hanging the dress in the closet” might be a generative model, but it involves concepts like the dress, its hanger, the closet, etc.

“Subagent” is not a term I’ll be using in this series at all, but I’ll mention it here in case you’re trying to compare and contrast my account with others’. Generative models sometimes involve actions (e.g. “I’m gonna hang the dress right now”), and sometimes they don’t (e.g. “the ball is bouncing on the floor”). The former, but not the latter, are directly[5] sculpted and pruned by not only predictive learning but also reinforcement learning (RL), which tries to find action-involving generative models that not only make correct predictions but also have maximally positive valence (as defined in my Valence series [LW · GW]). Anyway, I think people usually use the word “subagent” to refer to a generative model that involves actions, or perhaps sometimes to a group of thematically-related generative models that involve thematically-related actions. For example, the generative model “I’m going to open the window now” could be reconceptualized as a “subagent” that sometimes pokes its head into your conscious awareness and proposes to open the window. And if the valence of that proposal is positive, then that subagent would “take over” (become and remain the active generative model), and then you would get up and actually open the window.
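Here is a deliberately crude Python toy of the two mechanisms in the paragraph above. The names, the single-number `valence`, the learning rate, and the update rule are all illustrative assumptions, not the author’s model. In the toy, RL-style updates touch only action-involving models, and an action proposal “takes over” only when it has the highest valence among the action proposals and that valence is positive.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Proposal:
    name: str
    involves_action: bool
    valence: float  # hypothetical scalar stand-in for valence; positive = appealing


def rl_update(p: Proposal, reward: float, lr: float = 0.5) -> None:
    """Toy RL step: only action-involving models have their valence reshaped directly."""
    if p.involves_action:
        p.valence += lr * (reward - p.valence)


def take_over(candidates: List[Proposal]) -> Optional[Proposal]:
    """Return the action proposal that becomes the active model, if any.

    Toy rule: the highest-valence action proposal takes over only if its valence is positive.
    """
    actions = [c for c in candidates if c.involves_action]
    if not actions:
        return None
    best = max(actions, key=lambda c: c.valence)
    return best if best.valence > 0 else None


window = Proposal("I'm going to open the window now", involves_action=True, valence=-0.2)
ball = Proposal("the ball is bouncing on the floor", involves_action=False, valence=0.0)

print(take_over([window, ball]))  # prints None: the proposal's valence is not yet positive
rl_update(window, reward=1.0)     # hypothetical: opening the window turned out well
rl_update(ball, reward=1.0)       # no effect: this model involves no action
print(take_over([window, ball]).name)
```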

1.6 Appendix: How does this series fit into Philosophy Of Mind?

1.6.1 Introspective self-reports as a “straightforward” scientific question

Hopefully we can all agree that we live in a universe that follows orderly laws of physics, always and everywhere, even if we don’t know exactly what those laws are yet.[6] And hopefully we can all agree that those laws of physics apply to the biological bodies and brains that live inside that universe, just like everything else. After all, whenever scientists measure biological organisms, they find that their behavior can be explained by normal chemistry and physics, and ditto when they measure neurons and synapses.

So that gets us all the way to self-reports about consciousness, free will, and everything else. If you ask someone about their inner mental world, and they move their jaws and tongues to answer, then those are motor actions, and (via the laws of physics) we can trace those motor actions back to signals going down motoneuron pools, and those signals in turn came from motor cortex neurons spiking, and so on back through the chain. There’s clearly a systematic pattern in what happens—people systematically describe consciousness and the rest of their mental world in some ways but not others. So there has to be an explanation of those patterns, within the physical universe—principles of physics, chemistry, biology, neuroscience, algorithms, and so on.

“The meta-problem of consciousness” is a standard term for part of this problem, namely: What is the chain of causation in the physical universe that reliably leads to people declaring that there’s a “Hard Problem of Consciousness”? But I’m broadening that to include everything else that people say about consciousness—e.g. people describing their consciousness in detail, including talk about enlightenment, hypnotic trance, and so on—and then we can broaden it further to include everything people say about free will, sense-of-self, etc.

1.6.2 Are explanations-of-self-reports a first step towards understanding the “true nature” of consciousness, free will, etc.?

The broader research program would be:

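STEP 1: Explain the chain of causation in the physical universe that leads to people’s self-reports about consciousness, free will, sense-of-self, etc.

STEP 2: Figure out the “true nature” of consciousness, free will, etc.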

This series is focused on STEP 1, not STEP 2. Why? For one thing, STEP 1 is a pure question of physics and chemistry and biology and algorithms and neuroscience, which is in my comfort zone; whereas STEP 2 is a question of philosophy, which is definitely not. For another thing, I find that arguments over the true nature of consciousness are endless and polarizing and exhausting, going around in circles, whereas I hope we can all join together towards the hopefully-uncontroversial STEP 1 scientific endeavor.

But some readers might be wondering:

Is STEP 1 really relevant to STEP 2?

The case for yes: If you ask me the color of my wristwatch, and if I answer you honestly from my experience (rather than trolling you, or parroting something I heard, etc.), then somewhere in the chain of causation that ultimately leads to me saying “it’s black”, you’ll find an actual wristwatch, and photons bouncing off of it, entering my eyes, etc.

What’s true for my wristwatch should be equally true of “consciousness”, “free will”, “qualia”, and so on: if those things exist at all, then they’d better be somewhere in the chain of causation that leads to us talking about them. When I ask you a question about those things, your brain needs to somehow sense their properties, and then actuate your tongue and lips accordingly. Otherwise you would have no grounds for saying anything about them at all! Everything you say about them would be just a completely uninformed shot in the dark! Indeed, as far as you know, everything you think you know about qualia is wrong, and in fact qualia are not unified, and not subjective, and not ineffable, but they are about two meters in diameter, with a consistency similar to chewing gum, and they ooze out of your bellybutton. Oh, that’s wrong? Are you sure it’s wrong? Now ask yourself what process you went through to so confidently confirm that in your mind. That process must have somehow involved “observing” your “qualia” to discern their properties, right?

Alternatively, see here [LW · GW] for an argument for that same conclusion but framed in terms of Bayesian epistemology.

The case for no: You can find one in Chalmers 2003. He acknowledges the argument above (“It is certainly at least strange to suggest that consciousness plays no causal role in my utterances of ‘I am conscious’. Some have suggested more strongly that this rules out any knowledge of consciousness… The oddness of epiphenomenalism is exacerbated by the fact that the relationship between consciousness and reports about consciousness seems to be something of a lucky coincidence, on the epiphenomenalist view …”). But then Chalmers goes through a bunch of counterarguments, to which he is sympathetic. I won’t try to reproduce those here.

In any case, getting back to the topic at hand:

- I obviously have opinions about who’s right in that back-and-forth above. And I also have opinions about The Hard Problem of Consciousness (probably not what you’d expect!). But I’ll leave them out, because that would be getting way off-topic.

- Even if you don’t think STEP 1 provides any definitive evidence about STEP 2, I imagine you’d be at least curious about STEP 1, if only for its own sake. And if so, you should have no foundational objection to what I’m trying to do in this series.

- Indeed, even if you think that the STEP 1 research program is doomed, because the laws of physics as we know them will be falsified (i.e. give incorrect predictions) in future experiments involving conscious minds—then you still ought to be interested in the STEP 1 research program, if only to see how far it gets before it fails!!

1.6.3 Related work

Yet again, I maintain that everyone, whatever their opinion about the nature of consciousness, free will, etc., should be interested in the STEP 1 research program.

…But as it happens, the people who have actually tried to work on STEP 1 in detail have disproportionately tended to subscribe to the philosophy-of-mind theory known as strong illusionism—see Frankish 2016. The five examples that I’m familiar with are: philosophers Keith Frankish, Daniel Dennett, and Thomas Metzinger, and neuroscientists Nicholas Humphrey and Michael Graziano. I’m sure there are others too.[7]

I think that some of their STEP-1-related discussions (especially Graziano’s) have kernels of truth, and I will cite them where applicable, but I find others to be wrong, or unhelpfully vague. Anyway, I’m mostly trying to figure things out for myself—to piece together a story that dovetails with my tons of opinions about how the human brain works.

1.7 Conclusion

Now that you better understand how I think about intuitive self-models in general, the next seven posts will dive into the specifics!

Thanks Thane Ruthenis, Charlie Steiner, Kaj Sotala, lsusr, Seth Herd, Johannes Mayer, Jonas Hallgren, and Justis Mills for critical comments on earlier drafts.

- ^

In case you’re wondering, this series will centrally involve probabilistic inference, but will not involve “active inference”. I think most “active inference” discourse is baloney (see Why I’m not into the Free Energy Principle [LW · GW]), and indeed I’m not sure how active inference ever became so popular given the obvious fact that things can be plausible but not desirable, and that things can be desirable but not plausible. I think “plausibility” involves probabilistic inference, while “desirability” involves valence—see my Valence series [LW · GW].

- ^

I think it would be a bit more conventional to say that the brain has a (singular) generative model with lots of adjustable parameters / settings, but I think the discussion will sound more intuitive and flow better if I say that the brain has a whole space of zillions of generative models (plural), each with greater or lesser degrees of a priori plausibility. This isn’t a substantive difference, just a choice of terminology.

- ^

We can’t read Aristotle’s mind, so we don’t actually know for sure what Aristotle’s intuitive model of the sun was; it’s technically possible that Aristotle was saying things about the sun that he found unintuitive but nevertheless intellectually believed to be true (see §1.3.2.2). But I think that’s unlikely. I’d bet he was describing his intuitive model.

- ^

The idea that a simpler generative model can’t predict the behavior of a big complicated algorithm is hopefully common sense, but for a related formalization see “Computational Irreducibility” (more discussion here).

- ^

Reinforcement Learning (RL) is obviously indirectly relevant to the formation of generative models that don’t involve actions. For example, if I really like clouds, then I might spend all day watching clouds, and spend all night imagining clouds, and I’ll thus wind up with unusually detailed and accurate generative models of clouds. RL is obviously relevant in this story: RL is how my love of clouds influences my actions, including both attention control (thinking about clouds) and motor control (looking at clouds). And those actions, in turn, influence the choice of data that winds up serving as a target for predictive learning. But it’s still true that my generative models of clouds are updated only by predictive learning, not RL.

- ^

“The Standard Model of Particle Physics including weak-field quantum general relativity (GR)” (I wish it were better-known and had a catchier name) appears sufficient to explain everything that happens in the solar system (ref). Nobody has ever found any experiment violating it, despite extraordinarily precise and varied tests. This theory can’t explain everything that happens in the universe—in particular, it can’t make any predictions about either (A) microscopic exploding black holes or (B) the Big Bang. Also, (C) the Standard Model happens to include 18 elementary particles (depending on how you count), because those are the ones we’ve discovered; but the theoretical framework is fully compatible with other particles existing too, and indeed there are strong theoretical and astronomical [LW · GW] reasons to think they do exist. It’s just that those other particles are irrelevant for anything happening on Earth—so irrelevant that we’ve spent decades and billions of dollars searching for any Earthly experiment whatsoever where they play a measurable role, without success. Anyway, I think there are strong reasons to believe that our universe follows some set of orderly laws—some well-defined mathematical framework that elegantly unifies the Standard Model with all of GR, not just weak-field GR—even if physicists don’t know what those laws are yet. (I think there are promising leads, but that’s getting off-topic.) …And we should strongly expect that, when we eventually discover those laws, we’ll find that they shed no light whatsoever on how consciousness works—just as we learned nothing whatsoever about consciousness from previous advances in fundamental physics like GR or quantum field theory.

- ^

Maybe Tor Norretranders’s The User Illusion (1999) belongs in this category, but I haven’t read it.

23 comments


comment by Rafael Harth (sil-ver) · 2024-09-20T13:13:25.961Z

I too find that the dancer just will. not. spin. counterclockwise. no matter how long I look at it.

But after trying a few things, I found an "intervention" to make it so. (No clue whether it'll work for anyone else, but I find it interesting that it works for me.) While looking at the dancer, I hold my right hand in front of the gif on the screen, slightly below so I can see both; then as the leg goes leftward, I perform counter-clockwise rotation with the hand, as if loosening an oversized screw. (And I try to make the act very deliberate, rather than absent-mindedly doing the movement.) After repeating this a few times, I generally perceive the counter-clockwise rotation, which sometimes lasts a few seconds and sometimes longer.

I also tried putting other counter-clockwise-spinning animations next to the dancer, but that didn't do anything.

↑ comment by Linda Linsefors · 2024-09-20T17:07:41.292Z

I tried it and it works for me too.

For me the dancer was spinning counterclockwise and would not change. With your screwing trick I could change the rotation, and it was then stably stuck in the clockwise direction, until I screwed in the other direction. I've now done this back and forth a few times.

↑ comment by Steven Byrnes (steve2152) · 2024-09-20T13:24:19.911Z

In case you missed it, there’s a show/hide box at the bottom of the wiki article with three side-by-side synchronized spinning dancers—the original one in the middle, and broken-symmetry ones on either side, with internal edges drawn in to break the ambiguity. I fixed my gaze on the counterclockwise dancer while gradually uncovering the original dancer with my hand, and thus got the original spinning counterclockwise in my peripheral vision. Then I gradually moved my eyes towards the original and had her spinning counterclockwise in the center of my view for a bit! …But then she flipped back when I blinked. Sounds vaguely similar to the kind of thing you were doing. I got bored pretty quick and stopped trying :)

↑ comment by qvalq (qv^!q) · 2024-10-16T19:45:08.742Z

I can see the dancers spinning in different directions.

↑ comment by Lucie Philippon (lucie-philippon) · 2024-11-02T21:06:05.386Z

I find that by focusing on the legs of the dancer, I can see it oscillating: half-turn clockwise then half-turn counterclockwise with the feet towards the front. However, this always breaks when I start looking at the arms. Interesting.

↑ comment by kvas_it (kvas_duplicate0.1636121129676118) · 2024-10-16T19:29:06.734Z

For me your method didn't work, but I found another one. I wave my finger (pointing down) in front of the image in a spinning motion synchronized with the leg movement and going in the direction that I want. The finger obscures the dancer quite a bit, which makes it easier for me to imagine it spinning in the "right" direction. Sometimes I'd see it spin in the "right" direction for like 90 degrees and then stubbornly go back again, but eventually it complies and starts spinning the way I want. Then I can remove the finger and it continues.

comment by Dalcy (Darcy) · 2025-02-23T08:38:27.196Z

Curious about the claim regarding bistable perception as the brain "settling" differently on two distinct but roughly equally plausible generative model parameters behind an observation. In standard statistical terms, should I think of it as: two parameters having similarly high Bayesian posterior probability, but the brain not explicitly representing this posterior, instead using something like local hill climbing to find a local MAP solution—bistable perception corresponding to the two different solutions this process converges to?

If correct, to what extent should I interpret the brain as finding a single solution (MLE/MAP) versus representing a superposition or distribution over multiple solutions (fully Bayesian)? Specifically, in which context should I interpret the phrase "the brain settling on two different generative models"?

↑ comment by Steven Byrnes (steve2152) · 2025-02-23T13:19:43.452Z

should I think of it as: two parameters having similarly high Bayesian posterior probability, but the brain not explicitly representing this posterior, instead using something like local hill climbing to find a local MAP solution—bistable perception corresponding to the two different solutions this process converges to?

Yup, sounds right.

to what extent should I interpret the brain as finding a single solution (MLE/MAP) versus representing a superposition or distribution over multiple solutions (fully Bayesian)?

I think it can represent multiple possibilities to a nonzero but quite limited extent; I think the superposition can only be kinda local to a particular subregion of the cortex and a fraction of a second. I talk about that a bit in §2.3 [LW · GW].

in which context should I interpret the phrase "the brain settling on two different generative models"

I wrote "your brain can wind up settling on either of [the two generative models]", not both at once.

…Not sure if I answered your question.

↑ comment by Dalcy (Darcy) · 2025-02-23T13:27:51.898Z

I wrote "your brain can wind up settling on either of [the two generative models]", not both at once.

Ah that makes sense. So the picture I should have is: whatever local algorithm oscillates between multiple local MAP solutions over time that correspond to qualitatively different high-level information (e.g., clockwise vs counterclockwise). Concretely, something like the metastable states of a Hopfield network, or the update steps of predictive coding [LW · GW] (literally gradient update to find MAP solution for perception!!) oscillating between multiple local minima?
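A one-dimensional Python sketch of the picture described in this exchange, under loudly hypothetical assumptions: a single made-up “rotation” parameter with two equally tall posterior modes, and plain gradient ascent standing in for “something like local hill climbing”. It is not a model of cortex, a Hopfield network, or predictive coding. It only shows that a purely local search settles on one mode or the other, depending on where it starts, rather than representing both.

```python
import math

SIGMA = 0.3  # hypothetical width of each posterior mode


def log_posterior(theta: float) -> float:
    """Hypothetical bimodal log-posterior over one 'rotation' parameter.

    Equal-weight modes at -1 ("clockwise") and +1 ("counterclockwise"),
    i.e. two interpretations that explain the silhouette equally well.
    """
    a = math.exp(-((theta + 1) ** 2) / (2 * SIGMA**2))
    b = math.exp(-((theta - 1) ** 2) / (2 * SIGMA**2))
    return math.log(a + b)


def hill_climb(theta: float, step: float = 0.01, iters: int = 2000, eps: float = 1e-5) -> float:
    """Local gradient ascent toward a MAP solution, using a central-difference gradient."""
    for _ in range(iters):
        grad = (log_posterior(theta + eps) - log_posterior(theta - eps)) / (2 * eps)
        theta += step * grad
    return theta


for start in (-0.2, 0.2):
    print(start, "->", round(hill_climb(start), 3))
```

Nothing in this sketch makes it flip between the two modes later; that would need some extra ingredient (for example noise), which is left out here.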

comment by justinpombrio · 2024-10-03T16:46:08.934Z

This is fantastic! I've tried reasoning along these directions, but never made any progress.

A couple comments/questions:

Why "veridical" instead of simply "accurate"? To me, the accuracy of a map is how well it corresponds to the territory it's trying to map. I've been replacing "veridical" with "accurate" while reading, and it's seemed appropriate everywhere.

Do you see the Spinning Dancer going clockwise? Sorry, that’s not a veridical model of the real-world thing you’re looking at. [...] after all, nothing in the real world of atoms is rotating in 3D.

I think you're being unfair to our intuitive models here.

The GIF isn't rotating, but the 3D model that produced the GIF was rotating, and that's the thing our intuitive models are modeling. So exactly one of [spinning clockwise] and [spinning counterclockwise] is veridical, depending on whether the graphic artist had the dancer rotating clockwise or counterclockwise before turning her into a silhouette. (Though whether it happens to be veridical is entirely coincidental, as the silhouette is identical to the one that would have been produced had the dancer been spinning in the opposite direction.)
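The “identical silhouette” point can be made precise, at least under the simplifying assumption of an orthographic (parallel) projection. Consider a figure rotating one way about the vertical axis. Frame for frame, its 2D outline matches the outline of its front-to-back mirror image rotating the other way, because the projection discards the depth coordinate. A small numerical check, with a made-up point cloud standing in for the dancer (all names here are hypothetical):

```python
import math


def silhouette(points, angle):
    """Rotate (x, y, z) points about the vertical y-axis by `angle` radians,
    then project orthographically onto the image plane by discarding depth z."""
    c, s = math.cos(angle), math.sin(angle)
    return [(x * c + z * s, y) for x, y, z in points]


def mirror_depth(points):
    """Reflect a 3D shape front-to-back (z -> -z)."""
    return [(x, y, -z) for x, y, z in points]


def same_image(a, b, tol=1e-12):
    """True if two projected frames agree point by point within `tol`."""
    return all(math.isclose(p, q, abs_tol=tol) for pa, pb in zip(a, b) for p, q in zip(pa, pb))


# Hypothetical, deliberately asymmetric point cloud standing in for the dancer.
dancer = [(0.3, 1.7, 0.1), (-0.2, 1.0, 0.5), (0.5, 0.2, -0.4), (0.0, 0.0, 0.8)]

frames = [2 * math.pi * k / 36 for k in range(36)]
spin_one_way = [silhouette(dancer, t) for t in frames]
mirrored_other_way = [silhouette(mirror_depth(dancer), -t) for t in frames]
print(all(same_image(a, b) for a, b in zip(spin_one_way, mirrored_other_way)))
```

So, strictly speaking, the opposite-direction counterpart is the dancer’s front-to-back mirror image, and a silhouette made this way cannot tell the two apart. A perspective projection would not be exactly symmetric in this way; the sketch assumes a parallel one.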

If you look at the photograph of Abe Lincoln from Feb 27, 1860, you see a 3D scene with a person in it. This is veridical! There was an actual room with an actual person in it, who dressed that way and touched that book. The map's territory is 164 years older than the map, but so what.

(My favorite example of an intuitive model being wildly incorrect is Feynman's story of learning to identify kinds of galaxies from images on slides. He asks his mentor "what kind of galaxy is this one, I can't identify it", and his mentor says it's a smudge on the slide.)

↑ comment by Steven Byrnes (steve2152) · 2024-10-03T18:18:42.402Z

This is fantastic!

Thanks! :)

Why "veridical" instead of simply "accurate"?

Accurate might have been fine too. I like “veridical” mildly better for a few reasons, more about pedagogy than anything else.

One reason is that “accurate” has a strong positive-valence connotation [LW · GW] (i.e., “accuracy is good, inaccuracy is bad”), which is distracting, since I’m trying to describe things independently of whether they’re good or bad. I would rather find a term with a strictly neutral vibe. “Veridical”, being a less familiar term, is closer to that. But alas, I notice from your comment that it still has some positive connotation. (Note how you said “being unfair”, suggesting a frame where I said the intuition was non-veridical = bad, and you’re “defending” that intuition by saying no it’s actually veridical = good.) Oh well. It’s still a step in the right direction, I think.

Another reason is I’m trying hard to push for a two-argument usage (“X is or is not a veridical model of Y”), rather than a one-argument usage (“X is or is not veridical”). I wasn’t perfect about that. But again, I think “accurate” makes that problem somewhat worse. “Accurate” has a familiar connotation that the one-argument usage is fine because of course everybody knows what is the territory corresponding to the map. “Veridical” is more of a clean slate in which I can push people towards the two-argument usage.

Another thing: if someone has an experience that there’s a spirit talking to them, I would say “their conception of the spirit is not a veridical model of anything in the real world”. If I said “their conception of the spirit is not an accurate model of anything in the real world”, that seems kinda misleading. It’s not just a matter of less accurate versus more accurate; it’s stronger than that.

The GIF isn't rotating, but the 3D model that produced the GIF was rotating, and that's the thing our intuitive models are modeling. So exactly one of [spinning clockwise] and [spinning counterclockwise] is veridical, depending on whether the graphic artist had the dancer rotating clockwise or counterclockwise before turning her into a silhouette.

It was made by a graphic artist. I’m not sure their exact technique, but it seems at least plausible to me that they never actually created a 3D model. Some people are just really good at art. I dunno. This seems like the kind of thing that shouldn’t matter though! :)

Anyway, I wrote “that’s not a veridical model of the real-world thing you’re looking at” to specifically preempt your complaint. Again see what I wrote just above, about two-argument versus one-argument usage :)

↑ comment by justinpombrio · 2024-10-04T16:54:26.365Z

I like “veridical” mildly better for a few reasons, more about pedagogy than anything else.

That's a fine set of reasons! I'll continue to use "accurate" in my head, as I already fully feel that the accuracy of a map depends on which territory you're choosing for it to represent. (And a map can accurately represent multiple territories, as happens a lot with mathematical maps.)

Another reason is I’m trying hard to push for a two-argument usage

Do you see the Spinning Dancer going clockwise? Sorry, that’s not a veridical model of the real-world thing you’re looking at.

My point is that:

- The 3D spinning dancer in your intuitive model is a veridical map of something 3D. I'm confident that the 3D thing is a 3D graphical model which was silhouetted after the fact (see below), but even if it was drawn by hand, the 3D thing was a stunningly accurate 3D model of a dancer in the artist's mind.

- That 3D thing is the obvious territory for the map to represent.

- It feels disingenuous to say "sorry, that's not a veridical map of [something other than the territory the map obviously represents]".

So I guess it's mostly the word "sorry" that I disagree with!

By "the real-world thing you're looking at", you mean the image on your monitor, right? There are some other ways one's intuitive model doesn't veridically represent that, such as the fact that, unlike other objects in the room, it's flashing off and on 60 times per second, has a weirdly spiky color spectrum, and (assuming an LCD screen) consists entirely of circularly polarized light.

It was made by a graphic artist. I’m not sure their exact technique, but it seems at least plausible to me that they never actually created a 3D model.

This is a side track, but I'm very confident a 3D model was involved. Plenty of people can draw a photorealistic silhouette. The thing I think is difficult is drawing 100+ silhouettes that match each other perfectly and have consistent rotation. (The GIF only has 34 frames, but the original video is much smoother.) Even if technically possible, it would be much easier to make one 3D model and have the computer rotate it. Annnd, if you look at Nobuyuki Kayahara's website, his talent seems more on the side of mathematics and visualization than photo-realistic drawing, so my guess is that he used an existing 3D model for the dancer (possibly hand-posed).

↑ comment by Steven Byrnes (steve2152) · 2024-10-04T17:58:22.195Z

I think we’re in agreement on everything.

By "the real-world thing you're looking at", you mean the image on your monitor, right?

Yup, or as I wrote: “2D pattern of changing pixels on a flat screen”.

I'm very confident a 3D model was involved

For what it’s worth, even if that’s true, it’s still at least possible that we could view both the 3D model and the full source code, and yet still not have an answer to whether it’s spinning clockwise or counterclockwise. E.g. perhaps you could look at the source code and say “this code is rotating the model counterclockwise and rendering it from the +z direction”, or you could say “this code is rotating the model clockwise and rendering it from the -z direction”, with both interpretations matching the source code equally well. Or something like that. That’s not necessarily the case, just possible, I think. I’ve never coded in Flash, so I wouldn’t know for sure. Yeah this is definitely a side track. :)

Nice find with the website, thanks.

↑ comment by justinpombrio · 2024-10-04T20:23:46.175Z

I think we’re in agreement on everything.

Excellent. Sorry for thinking you were saying something you weren't!

still not have an answer to whether it’s spinning clockwise or counterclockwise

More simply (and quite possibly true), Nobuyuki Kayahara rendered it spinning either clockwise or counterclockwise, lost the source, and has since forgotten which way it was going.

comment by Kaj_Sotala · 2024-09-23T19:17:42.732Z

On the topic of bistable perception, this is one of my favorite examples:

comment by Gunnar_Zarncke · 2024-09-21T00:13:45.559Z

…So that’s all that’s needed. If any system has both a capacity for endogenous action (motor control, attention control, etc.), and a generic predictive learning algorithm, that algorithm will be automatically incentivized to develop generative models about itself (both its physical self and its algorithmic self), in addition to (and connected to) models about the outside world.

Yes, and there are many different classes of such models. Most of them are boring because the prediction of the effect of the agent on the environment is limited (small effect or low data rate) or simple (linear-ish or more-is-better-like).

But the self-models of social animals will quickly grow complex, because predicting the effect of one's actions on the environment involves elements of that environment (other members of the species) that themselves predict the actions of other members.

You don't mention it, but I think Theory of Mind [? · GW] or Empathic Inference [? · GW] play a large role in the specific flavor of human self-models.

comment by Rafael Harth (sil-ver) · 2024-09-20T13:54:53.668Z

1.6.2 Are explanations-of-self-reports a first step towards understanding the “true nature” of consciousness, free will, etc.?

Fwiw I've spent a lot of time thinking about the relationship between Step 1 and Step 2, and I strongly believe that step 1 is sufficient or almost sufficient for step 2, i.e., that it's impossible to give an adequate account of human phenomenology without figuring out most of the computational aspects of consciousness. So at least in principle, I think philosophy is superfluous. But I also find all discussions I've read about it (such as the stuff from Dennett, but also everything I've found on LessWrong) to be far too shallow/high-level to get anywhere interesting. People who take the hard problem seriously seem to prefer talking about the philosophical stuff, and people who don't take it seriously seem content with vague analogies or appeals to future work, and so no one (that I've seen, anyway) actually addresses what I'd consider to be the difficult aspects of phenomenology.

Will definitely read any serious attempt to engage with step 1. And I'll try not to be biased by the fact that I know your set of conclusions isn't compatible with mine.

↑ comment by Paradiddle (barnaby-crook) · 2024-09-20T16:23:38.341Z

I strongly believe that step 1 is sufficient or almost sufficient for step 2, i.e., that it's impossible to give an adequate account of human phenomenology without figuring out most of the computational aspects of consciousness.

Apologies for nitpicking, but your strong belief that step 1 is (almost) sufficient for step 2 would be more faithfully re-phrased as: it will (probably) be possible/easy to give an adequate account of human phenomenology by figuring out most of the computational aspects of consciousness. The way you phrased it (viz., "impossible...without") is equivalent to saying that step 1 is necessary for step 2, an importantly different claim (on this phrasing, something besides the computational aspects may be required). Of course, you may think it is both necessary and sufficient, I'm just pointing out the distinction.

↑ comment by Rafael Harth (sil-ver) · 2024-09-20T23:21:05.989Z

Mhh, I think "it's not possible to solve (1) without also solving (2)" is equivalent to "every solution to (1) also solves (2)", which is equivalent to "(1) is sufficient for (2)". I did take some liberty in rephrasing step (2) from "figure out what consciousness is" to "figure out its computational implementation".
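The logical step in this reply can be checked mechanically. Write A for “solving (1)” and B for “solving (2)”; these labels are chosen here for illustration and follow the reply’s own reading of the two steps. “It’s not possible to do A without also doing B” is ¬(A ∧ ¬B). That is equivalent to A → B, which says A is sufficient for B. A minimal proof in core Lean 4 (the theorem name is made up):

```lean
theorem impossible_without_iff_sufficient (A B : Prop) :
    ¬(A ∧ ¬B) ↔ (A → B) := by
  constructor
  -- (→) "can't have A without B" gives "A suffices for B"; this direction uses classical logic
  · intro h a
    exact Classical.byContradiction (fun hb => h ⟨a, hb⟩)
  -- (←) "A suffices for B" rules out "A without B"
  · intro h hab
    exact hab.2 (h hab.1)
```

Only the left-to-right direction needs classical reasoning (`Classical.byContradiction`); the other direction is constructive.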

comment by Paradiddle (barnaby-crook) · 2024-09-20T17:00:46.193Z

Section 1.6 is another appendix about how this series relates to Philosophy Of Mind. My opinion of Philosophy Of Mind is: I’m against it! Or rather, I’ll say plenty in this series that would be highly relevant to understanding the true nature of consciousness, free will, and so on, but the series itself is firmly restricted in scope to questions that can be resolved within the physical universe (including physics, neuroscience, algorithms, and so on). I’ll leave the philosophy to the philosophers.

At the risk of outing myself as a thin-skinned philosopher, I want to push back on this a bit. If we are taking "philosophy of mind" to mean "the kind of work philosophers of mind do" (which I think we should), then your comment seems misplaced. Crucially, one need not be defending particular views on "big questions" about the true nature of consciousness, free will, and so on to be doing philosophy of mind. Rather, much of the work philosophers of mind do is continuous with scientific inquiry. Indeed, I would say some philosophy of mind is close to indistinguishable from what you do in this post! For example, lots of this work involves trying to carve up conceptual space in a way that coheres with empirical findings, suggests avenues for further research, and renders fruitful discussion easier. Your section 1.3 in this post features exactly the kind of conceptual work that is the bread-and-butter of philosophy. So, far from leaving philosophy to the philosophers, I actually think your work would fit comfortably into the more empirically informed end of contemporary philosophy of mind. To end on a positive note, I think it's really clearly written, fascinating, and fun to read. So thanks!

↑ comment by Steven Byrnes (steve2152) · 2024-09-20T17:21:40.348Z

Thanks for the kind words!

The thing you quoted was supposed to be very silly and self-deprecating, but I wrote it very poorly, and it actually wound up sounding kinda judgmental. Oops, sorry. I just rewrote it. I agree with everything you wrote in this comment.

comment by satchlj · 2024-10-26T15:50:47.419Z

Your brain has a giant space of possible generative models[2] that map from underlying states of the world (e.g. “there’s a silhouette dancer with thus-and-such 3D shape spinning clockwise against a white background etc.”) to how the photoreceptor cells would send signals into the brain (“this part of my visual field is bright, that part is dark, etc.”)

How do you argue that the models are really implemented backwards like this in the brain?
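The quoted passage describes a generative model that runs from world-states to sensory signals, with perception coming from inverting it. Below is a minimal toy sketch of that two-direction structure. It assumes discrete, hypothetical states ("clockwise" and "counterclockwise"), made-up observations, and made-up probabilities. It only illustrates what "backwards" means in the probabilistic-inference framing: the model stores P(observation | state), and inference computes P(state | observation) via Bayes' rule. It makes no claim about how, or whether, neurons carry out this computation, which is the question being asked.

```python
import random

# Hypothetical hidden states and prior beliefs (illustrative numbers only).
PRIOR = {"clockwise": 0.5, "counterclockwise": 0.5}

# Generative direction, P(observation | state): how each world-state would look.
# A pure silhouette looks the same either way, so its likelihoods are equal.
# The "shading" observations are hypothetical depth cues that break the tie.
LIKELIHOOD = {
    "clockwise":        {"silhouette": 0.5, "shading_cw": 0.45, "shading_ccw": 0.05},
    "counterclockwise": {"silhouette": 0.5, "shading_cw": 0.05, "shading_ccw": 0.45},
}


def generate(rng: random.Random) -> tuple[str, str]:
    """Run the model forward: sample a hidden state, then an observation it would produce."""
    states = list(PRIOR)
    state = rng.choices(states, weights=[PRIOR[s] for s in states])[0]
    observations = list(LIKELIHOOD[state])
    obs = rng.choices(observations, weights=[LIKELIHOOD[state][o] for o in observations])[0]
    return state, obs


def posterior(observation: str) -> dict[str, float]:
    """Run the model backwards with Bayes' rule: P(state | obs) is proportional to P(obs | state) * P(state)."""
    unnormalized = {s: PRIOR[s] * LIKELIHOOD[s][observation] for s in PRIOR}
    total = sum(unnormalized.values())
    return {s: p / total for s, p in unnormalized.items()}


print(posterior("silhouette"))                         # {'clockwise': 0.5, 'counterclockwise': 0.5}
print(round(posterior("shading_cw")["clockwise"], 3))  # 0.9
```

With the silhouette alone, the posterior stays at the 50/50 prior: two explanations fit the data equally well, which is the bistable case. A disambiguating cue shifts it decisively toward one of them.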