[Intuitive self-models] 7. Hearing Voices, and Other Hallucinations

post by Steven Byrnes (steve2152) · 2024-10-29T13:36:16.325Z · 2 comments

Contents

- 7.1 Post summary / Table of contents
- 7.2 Against an axiomatic bright-line distinction between “hallucinations” and normal inner speech & imagination
- 7.3 Culture and psychology can both influence intuitive self-models
- 7.4 Hallucinations via culture
- 7.4.1 Hearing voices as a cultural norm
- 7.4.2 Sorry Julian Jaynes, but hearing the voice of a god does not make you an “automaton” under “hallucinatory control”
- 7.4.2.1 The counterargument from common sense
- 7.4.2.2 The counterargument from archaeological and historical evidence
- 7.4.2.3 The counterargument from psychology
- 7.4.3 “Hallucinogenic aids”
- 7.4.3.1 Desensitization to hallucinogenic aids as a possible explanation of shifts in culture and iconography around 1000 BC
- 7.5 Hallucinations and delusions via disjointed cognition (schizophrenia & mania)
- 7.6 Hallucinations and delusions via strong emotions (BPD & psychotic depression)
- Conclusion

7.1 Post summary / Table of contents

Part of the Intuitive Self-Models series.

The main thrust of this post is an opinionated discussion of the book The Origin of Consciousness in the Breakdown of the Bicameral Mind by Julian Jaynes (1976) in §7.4, and then some discussion of how hallucinations and delusions arise from schizophrenia and mania (§7.5), and from psychotic depression and BPD (§7.6).

But first—what exactly do hallucinations have to do with intuitive self-models? A whole lot, it turns out!

We’ve seen throughout this series that different states of consciousness can have overlapping mechanisms. Deep trance states (Post 4) mechanistically have a lot in common with Dissociative Identity Disorder (Post 5). Lucid trance states (Post 4) mechanistically have a lot in common with tulpas (§4.4.1.2), and tulpas in turn shade into the kinds of hallucinations that I’ll be discussing in this post. Indeed, if you’ve been paying close attention to the series so far, you might even be feeling some crumbling of the seemingly bedrock-deep wall separating everyday free will (where decisions seem to be caused by the self, or more specifically, the “homunculus” of Post 3) from trance (where decisions seem to be caused by a spirit or hypnotist). After all, an imagined spirit does not veridically (§1.3.2) correspond to anything objective in the real world—but then, neither does the homunculus (§3.6)!

I’ll extend that theme in Section 7.2 by arguing against a bright-line distinction between everyday inner speech and imagination (which seem to be caused by the homunculus) and hallucinations (which seem to appear spontaneously, or perhaps to be caused by God, etc.). This is an important distinction “in the map”, but I’ll argue that it doesn’t always correspond to an important distinction “in the territory” (§1.3.2).

Section 7.3 then offers a framework, rooted in probabilistic inference (§1.2), for thinking about how culture and an individual’s psychology can each influence intuitive self-models in general, and hallucinations in particular.

…And that sets the stage for Section 7.4, where I talk about hearing voices as a cultural norm, including some discussion of Julian Jaynes’s The Origin of Consciousness in the Breakdown of the Bicameral Mind. I come down strongly against his suggestion that there were ever cultures full of “automatons” under “hallucinatory control”—not only does his historical evidence not hold up, but it’s based on deeply confused psychological thinking. On the other hand, I’m open-minded to Jaynes’s claim that cultures sometimes treat certain sculptures, drawings, etc. as “hallucinogenic aids”, and I offer a hypothesis that desensitization to those “hallucinogenic aids” might explain corresponding shifts in rituals and iconography.

Then we move on from culture to individual psychology as a cause of hallucinations, tackling schizophrenia and mania in Section 7.5, and psychotic depression and borderline personality disorder (BPD, which is correlated with hearing voices) in Section 7.6.

(Other causes of hallucination, like seizures and hallucinogenic drugs, are not particularly related to the themes of this series,[1] so I’m declaring them out of scope.)

7.2 Against an axiomatic bright-line distinction between “hallucinations” and normal inner speech & imagination

Sometimes I “talk to myself”, and correspondingly there are imagined words in my head. Or sometimes I “imagine a scene”, and correspondingly there are imagined images in my mind’s eye.

Other times, there are imagined words in my head, or imagined images in my mind’s eye, that don’t seem to be internally generated.

We call the former “inner speech” or “imagination”. We call the latter “hallucinations”.[1] I’m suggesting that this is not a fundamental distinction. Instead it’s an intuitive self-modeling difference: the former seems to be caused by the homunculus (§3.5), and the latter seems to be caused by something else. It’s a distinction “in the map”, not “in the territory”.

Well, I don’t want to go too far in equating these two categories. After all, it’s probabilistic inference (§1.2), not random chance, that leads to some imagined words seeming to be caused by the homunculus, and others not. Hence, there have to be systematic differences between those two categories.

But it’s equally important not to go too far in treating those two categories as totally different things. For example, people report that hallucinated voices say things, and they don’t have introspective access to where those words came from. But with self-generated inner speech, you also don’t have introspective access to where those words came from!! In the latter case, it doesn’t feel like there’s an unresolved mystery about where those words came from, because we have the “vitalistic force” intuition (§3.3), which masquerades as an explanation. But really, it’s not an explanation at all!

Anyway, I will use the term “hallucination” in this post, but I don’t intend the widespread connotation that the way we think of normal inner speech and imagination is veridical (as defined in §1.3.2) while the way we think of hallucinations is not.

7.3 Culture and psychology can both influence intuitive self-models

Back in Post 1, I talked about bistable perception, such as the spinning dancer, and explained it in terms of probabilistic inference. Then I suggested in §1.4 that the same phenomenon arises in the domain of intuitive self-models.

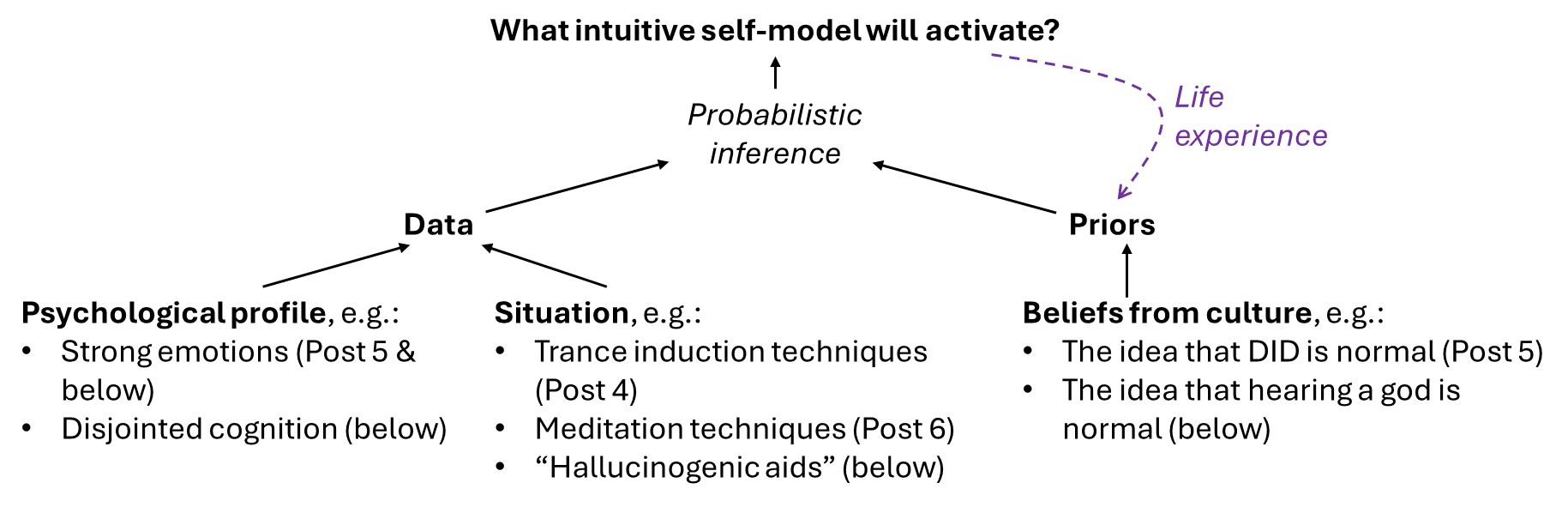

For example, if auditory areas are currently representing the phrase “go away”, then this can be intuitively modeled as the result of the homunculus causing internal speech, or it can be intuitively modeled as the result of a god speaking. Which will it be? Here’s a diagram with some of the major factors, and examples where those factors have come up in this series (in general, not just for hearing voices):

One of the takeaways from this diagram is that psychological profile (left column) and culture (mainly center and right columns) are both influencing what happens. Thus:

- If there’s a strong cultural norm of not hearing voices (as in my own culture), then relatively few people will regularly hear voices, and those who do will be especially likely to have predisposing psychological factors (e.g. schizophrenia).

- Conversely, if there’s a strong cultural norm of hearing voices all the time, then a broader cross-section of society will do so.
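To make the base-rate logic of these two bullets concrete, here is a minimal Bayes’-rule sketch in Python. All the numbers (a 1% prevalence of some predisposing factor, and the per-culture probabilities of regularly hearing voices) are made-up assumptions for illustration, not data, and the function names are hypothetical.

```python
def voice_hearing_rate(prevalence, p_if_predisposed, p_otherwise):
    """Fraction of the whole population who regularly hear voices."""
    return prevalence * p_if_predisposed + (1 - prevalence) * p_otherwise


def share_predisposed(prevalence, p_if_predisposed, p_otherwise):
    """P(predisposed | hears voices), by Bayes' rule."""
    joint = prevalence * p_if_predisposed
    return joint / voice_hearing_rate(prevalence, p_if_predisposed, p_otherwise)


PREVALENCE = 0.01  # hypothetical share of people with a predisposing factor

# Hypothetical (P(voices | predisposed), P(voices | not predisposed)) per culture
CULTURES = {
    "strong norm against voices": (0.30, 0.001),
    "strong norm of hearing voices": (0.80, 0.30),
}

for name, (p_pred, p_other) in CULTURES.items():
    rate = voice_hearing_rate(PREVALENCE, p_pred, p_other)
    share = share_predisposed(PREVALENCE, p_pred, p_other)
    print(f"{name}: {rate:.1%} hear voices; {share:.0%} of them predisposed")
```

Under these assumed numbers, the anti-voice culture has far fewer voice-hearers, but roughly three quarters of them are predisposed, versus only a few percent in the pro-voice culture. The exact figures mean nothing; only the direction of the effect matters.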

The next section will cover some of the cultural factors, and then I’ll talk a bit about psychological factors in §7.5–7.6.

7.4 Hallucinations via culture

7.4.1 Hearing voices as a cultural norm

My own culture has a widespread belief that voices don’t exist and hearing them is weird and pathological. (That belief might be bad, but that’s off-topic for this series; see §1.3.3.) Even so, lots of people in my culture hear voices today. And my impression (not sure how to prove or quantify it[2]) is that hearing voices is far more common in cultures or subcultures where hearing voices is culturally expected and normalized, such as renewalist Christians who run workshops on how to hear the voice of God, or Haitian Vodou, which involves ceremonies like:

[The initiation rite] includes a lav tèt ("head washing") to prepare the initiate for having the lwa [spirit] enter and reside in their head. Vodouists believe that one of the two parts of the human soul, the gwo bonnanj, is removed from the initiate's head, thus making space for the lwa [spirit] to enter and reside there…

If you’ve read this far in the series (especially Post 4), you should have no problem accounting for these kinds of hallucinations in terms of intuitive self-models. Sometimes thoughts or intentions arise, or actions are taken, which in our culture would normally be intuitively modeled as “caused by the homunculus” (Post 3). But they can alternatively be intuitively modeled as “caused by a god”.

7.4.2 Sorry Julian Jaynes, but hearing the voice of a god does not make you an “automaton” under “hallucinatory control”

In Julian Jaynes’s book,[3] he claims that people in so-called “bicameral cultures” (including the Near East before ca. 1300 BC, and other places at other times) were “automatons” unable to think for themselves, make sophisticated plans, and so on, instead acting as unthinking slaves to the hallucinated voices of gods:

We would say [Hector] has had an hallucination. So has Achilles. The Trojan War was directed by hallucinations. And the soldiers who were so directed were not at all like us. They were noble automatons who knew not what they did.

…

Not subjectively conscious, unable to deceive or to narratize out the deception of others, the Inca and his lords were captured like helpless automatons.

…

It is very difficult to imagine doing the things that these laws say men did in the eighteenth century B.C. without having a subjective consciousness in which to plan and devise, deceive and hope. But it should be remembered how rudimentary all this was…

…

I have endeavored in these two chapters to examine the record of a huge time span to reveal the plausibility that man and his early civilizations had a profoundly different mentality from our own, that in fact men and women were not conscious as are we, were not responsible for their actions, and therefore cannot be given the credit or blame for anything that was done over these vast millennia of time; that instead each person had a part of his nervous system which was divine, by which he was ordered about like any slave, a voice or voices which indeed were what we call volition and empowered what they commanded and were related to the hallucinated voices of others in a carefully established hierarchy.

Anyway, from my perspective, this idea is obviously utter nonsense. But I’ll dwell on it a bit, since it leads into some important points of psychology and motivation that tie into larger themes of this series.

7.4.2.1 The counterargument from common sense

I mean, go chat with those perfectly normal Haitian Vodou practitioners and renewalist Christians whom I mentioned in the previous subsection. There you have it. Right?

…OK, I imagine that Jaynes defenders would say that this is missing the point, because the renewalist Christians etc. are merely “partly bicameral”, unlike the “fully bicameral” ancient cultures that he discusses in his book. Or something like that?

Even then, if being “partly bicameral” has negligible impact on motivation or competence, that should surely count as some reason for skepticism about Jaynes’s wild pronouncements about “fully bicameral” people, I would think.

7.4.2.2 The counterargument from archaeological and historical evidence

Here are a few examples I noticed:

- Contrary to Jaynes’s claim that sophisticated planning, understanding, and deception didn’t exist in so-called “bicameral” cultures, Alexey Guzey here finds lots of evidence for such behaviors long before Jaynes would say that such behaviors were psychologically possible—including coups starting around 1800 BC, and examples from Hammurabi’s Code and other sources.

- The way that Jaynes describes Pizarro’s conquest of the Inca (“…Not subjectively conscious, unable to deceive or to narratize out the deception of others, the Inca and his lords were captured like helpless automatons…”) is wildly inconsistent with the brief account here by my friend who read a history book on that topic.

- The periodic “collapse” of early states is explained by Jaynes in terms of “hallucinatory control”, but I’ve seen at least one other plausible-to-me explanation for that same phenomenon.[4]

- Pre-1300 BC peoples did lots of things that certainly seem to require sophisticated planning, inventiveness, and coordination—from building monuments (pyramids, Stonehenge, etc.) to inventing and refining technologies (metallurgy, glassmaking, shipbuilding, irrigation, etc.), to governance mechanisms like citizen assemblies.

7.4.2.3 The counterargument from psychology

Finally, the part that I care most about.

I’ll say much more about this in the next post (§8.2), but one of the recurring themes in this series is that people are inclined to vastly overstate the extent to which motivations are entwined with intuitive self-models. Indeed, this is the fourth post in a row where that theme has come up! To be clear, the connection between intuitive self-models and motivations is not zero! But it’s much less than most people seem to think. I think the error comes from a specific difficulty in comprehending other intuitive models—recall “The Parable of Caesar and Lightning” in §6.3.4.1.

In §7.2 above, I suggested that there isn’t necessarily a deep distinction between normal inner speech and imagination on the one hand, and words and images that seem to be put into one’s head by a god on the other. In either case, we can ask the same question: why is it one set of words instead of a different set? Well, there must have been some underlying motivation or expectation. It might be an object-level motivation: if you’re hungry, then the words might be about how to eat. Alternatively, it might be more indirect: the words might be what you implicitly expect the god to say. But then there would still have to be an independent motivation to do what the god says. This is parallel to the discussion in §4.5.4: you can only be hypnotized by someone whom you already want to obey—someone whose directions you would feel intrinsically motivated to follow even if you weren’t in a trance. Maybe you admire the hypnotist, or maybe you fear them, who knows. But there has to be something. And so it is with a hallucinated god voice.

Indeed, “a god” is far less “in control” than the hypnotist! For a hypnotist, there’s at least a fact of the matter about what the hypnotist wants you to do. For a god, there’s very little constraint on “what the god wants”, so the all-powerful force of motivated reasoning / rationalization will rapidly sculpt “what the god wants” into agreement with whatever happens to be intrinsically motivating to the person. In other words, “the god” would wind up making essentially the same suggestions that a conventional inner voice would make, for essentially the same reasons, resulting in essentially the same behavior.
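One way to cartoon this argument (my own assumption, not something stated in the source) is a precision-weighted average: treat “what the god or hypnotist wants” as a belief pulled between an external anchor and the person’s intrinsic motivation, each weighted by how tightly it is pinned down. The one-dimensional “action” axis, the function name, and all numbers below are hypothetical.

```python
def imputed_want(external_target, external_precision,
                 intrinsic_target, intrinsic_precision):
    """Precision-weighted compromise between an external anchor and intrinsic motivation.

    Higher precision means that source is more tightly pinned down, so it
    pulls the compromise harder toward its own target.
    """
    total = external_precision + intrinsic_precision
    return (external_precision * external_target
            + intrinsic_precision * intrinsic_target) / total


INTRINSIC = 0.0   # hypothetical: what the person intrinsically wants to do
SUGGESTION = 1.0  # hypothetical: what the external source "says"

# A god's wishes are barely constrained; a hypnotist's are observable facts.
god = imputed_want(SUGGESTION, 0.1, INTRINSIC, 1.0)
hypnotist = imputed_want(SUGGESTION, 4.0, INTRINSIC, 1.0)
print(f"god pulls behavior to {god:.2f}, hypnotist to {hypnotist:.2f}")
```

In this toy picture, a weakly constrained “god” ends up close to wherever intrinsic motivation already points, while a hypnotist, whose wishes are an observable fact, keeps more pull.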

So, a god is far less “in control” than a hypnotist. And actually, even hypnotists don’t have complete freedom of suggestion! If the hypnotist keeps suggesting to do intrinsically-unpleasant things, then “the idea of doing what the hypnotist wants” will gradually lose its motivation, breaking the trance. This is closely related to how, if I trust and respect my therapist, and she asks me to do something I wouldn’t normally want to do, then I’ll nevertheless probably feel motivated to do that, all things considered; but if she keeps asking me to do unpleasant things, then I’ll eventually start feeling that the therapy is a pain, and stop feeling motivated to follow her suggestions.[5]

7.4.3 “Hallucinogenic aids”

A less-insane claim made by Jaynes is his proposal that a major purpose of many ancient (pre-1300 BC) Near East cultural artifacts, from figurines to sculptures to monumental buildings, was to induce those looking at them to hear the voice of a corresponding god—i.e., they were (what he calls) “hallucinogenic aids”. He presents lots of evidence for this hypothesis, mainly based on the writing of those cultures. I’m in no position to judge if he’s fairly reporting the evidence. Despite everything above, I’m going to provisionally take his word for this part, until somebody tells me otherwise.

How might “hallucinogenic aids” work in terms of intuitive self-models? In §4.5.3, I proposed that one method of inducing trance is to “Seek out perceptual illusions where the most salient intuitive explanation of what’s happening is that a different agent is the direct cause of motor or attentional actions.”

It’s the same idea here. If you’re looking at a sculpture of a god, with the strong cultural expectation that the god should be speaking to you in your head, then things that the god might say are especially likely to pop into your head, and you’re especially likely to feel intuitively like those words were caused by the god.

Particularly important ingredients for a hallucinogenic aid seem to include:

- (1) inducing physiological arousal,

- (2) inducing a feeling that you have low status in relation to the hallucinogenic aid, and

- (3) inducing a feeling that you’re looking at a living person.

If you go through Jaynes’s various examples, they tend to push some or all of those buttons. There’s a term, “sense of awe”, that encompasses both (1) & (2), and I guess a strong enough “sense of awe” can be sufficient even without (3)—Jaynes cites intriguing evidence that “the mountains themselves were hallucinatory to the Hittites”. The role of (2) (i.e. status) is relevant for the same reasons as in §4.5.4; people are always motivated to listen to a person / god whom they see as high-status, and to do what they ask, and this positive valence makes it more likely for such thoughts to arise in the first place. Eye contact is an important part of (3) (and also of (1) and (2)), and Jaynes correspondingly documents lots of sculptures with larger-than-life eyes.

Jaynes also proposes that these particular cultures seem to have had some upheaval in the centuries around 1000 BC, where (among other things) they seem to have mostly stopped using hallucinogenic aids. For example, he says that an older monument might center around a god-idol sitting on a throne, while a more recent monument might center around an empty pedestal, on which a god could land “when or if he ever … did return to the city”.

Again, not knowing any better, I’ll provisionally take Jaynes’s word for it that there was in fact a systematic change along those lines, in these particular cultures at these particular times. If so, what caused it? Jaynes throws out a bunch of ideas, but doesn’t mention the one that I find most plausible:

7.4.3.1 Desensitization to hallucinogenic aids as a possible explanation of shifts in culture and iconography around 1000 BC

Consider what happens when you look at a photo hanging on your wall. Nothing. You don’t feel a thing. It’s just a picture on the wall. But imagine growing up in a culture with no photos, and indeed no realistic face depictions of any kind. Then every photorealistic face that you’ve ever seen in your life is an actual person’s face (plus dim reflections of your own face off of puddles). Then imagine, as an adult, seeing a photo for the first time in your life. It would “seem alive”—it would be mesmerizing, fascinating, uncanny, creepy.

Accordingly, the anthropological and historical record is full of people treating images as having some magic or sacred power, from the European folklore myth that vampires don’t have mirror-reflections, to the second commandment in Judaism (against graven images) and similar things in other traditions, to the photography-related taboos in some traditional cultures. But we don’t treat images that way! We’re fully desensitized. Photos do not “feel alive” to us; they feel inanimate.

Switch to neuroscience. I think we have an innate “sense of sociality” in our brainstem (or maybe hypothalamus), analogous to how (I claim) fear-of-heights is triggered by an innate brainstem “sense” that we’re standing over a precipice. The “sense of sociality” tends to get triggered by seeing and hearing live people. It also activates (by generalization) when we’re in the same room as other people, even when we’re not directly looking at them.

By default (thanks to genetically hardcoded heuristics in the brainstem, and/or learned models that generalize from those heuristics), this “sense of sociality” will also get triggered by looking at photos, realistic big-eyed sculptures, and so on. But that default can get overridden by a “desensitization” process. In my case, early in life, I saw lots of photos in lots of situations that lacked any other evidence that I was in a social situation (e.g. low physiological arousal). So my brain eventually learned that looking at a photo ought not to trigger the “sense of sociality”. This process is closely akin to exposure therapy for fear-of-heights; see methods and corresponding neuroscientific speculations here.
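As a hedged illustration of the desensitization idea, here is a delta-rule (Rescorla–Wagner-style) update in Python. That learning rule is a swapped-in stand-in, not the author’s model: the “sense of sociality” response to a face-like image is a single number that each exposure nudges toward whatever social evidence accompanied it (1 = genuinely social context, 0 = none). The starting strength, learning rate, and exposure counts are hypothetical.

```python
def desensitize(initial_strength, exposures, learning_rate=0.1):
    """Return the response strength after each exposure (delta rule).

    Each exposure moves the strength a fraction `learning_rate` of the way
    toward the social evidence observed alongside the image.
    """
    strength = initial_strength
    history = [strength]
    for social_evidence in exposures:
        strength += learning_rate * (social_evidence - strength)
        history.append(strength)
    return history


INNATE_DEFAULT = 0.9  # hypothetical generalization from real faces

# Modern life: many photos seen in plainly non-social settings.
photos = desensitize(INNATE_DEFAULT, [0.0] * 50)
# An idol seen only during high-arousal rituals (hypothetical).
ritual_idol = desensitize(INNATE_DEFAULT, [1.0] * 50)

print(f"photo after 50 exposures: {photos[-1]:.3f}")
print(f"ritual idol after 50 exposures: {ritual_idol[-1]:.3f}")
```

The second case corresponds to a culture that only lets people interact with an aid in contexts where it actually “works”: the response never gets the non-social evidence it would need to fade.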

Back to archaeology. I figure it’s sometimes possible for cultures to avoid widespread desensitization to hallucinogenic aids. Presumably that would involve having a clear idea of what constitutes a proper hallucinogenic aid, and making sure that people only interact with hallucinogenic aids in contexts where they would in fact work successfully as hallucinogenic aids.

And maybe, in this particular region at this particular time, avoiding desensitization got progressively harder. I don’t know exactly why that would be, but it seems like the kind of thing that could easily happen. Something about bigger and more complex cities? Or maybe the increasing use of writing, which is an abundant source of pictures and imagined sounds stripped of any sense of alive-ness? Well anyway, if desensitization happened, then the idols would have stopped “feeling alive”, and it’s only natural that people would have thrown them out and started saying that the gods had ascended to heaven instead.

OK, that’s all I want to say about Julian Jaynes. To round out the post, here are a couple of quick discussions of other sources of hallucinations, which arise from psychology rather than culture.

7.5 Hallucinations and delusions via disjointed cognition (schizophrenia & mania)

My working model of psychosis (in schizophrenia) is still the one I described a year ago in Model of psychosis, take 2. Partly copying from there:

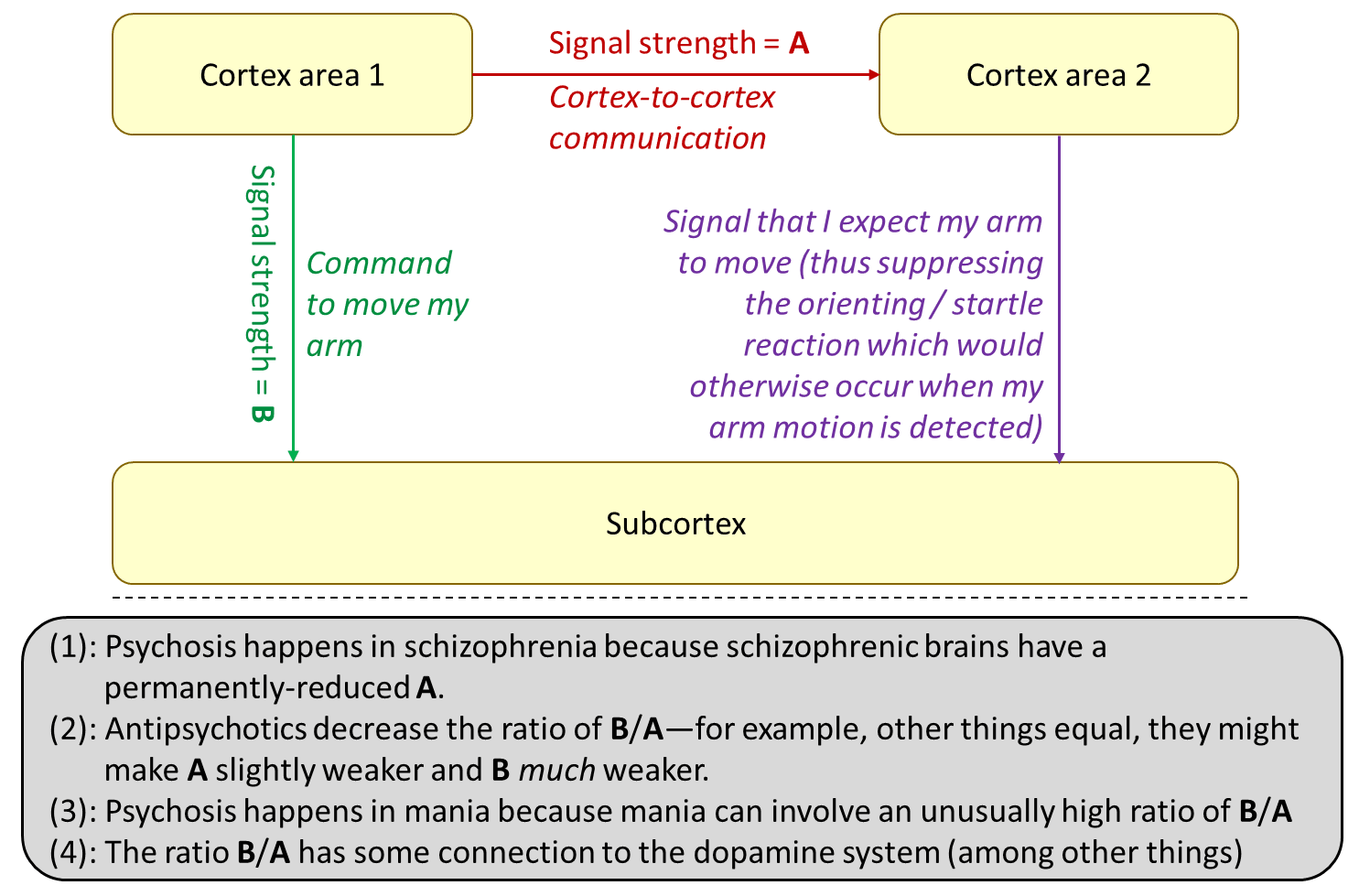

The cortex has different areas—motor areas, tactile areas, visual areas, homeostatic control areas, and so on.

One thing that happens is: those different areas talk to each other, enabling them to trade information and “reach consensus” by getting into a self-consistent configuration. That’s A in the diagram.

Another thing that happens is: those different areas send output signals to non-cortex (“subcortical”) areas in the brain and body—the motor cortex sends motor commands to brainstem motor areas and down the spine, the visual cortex sends signals to the brainstem superior colliculus, and so on. That’s B in the diagram.

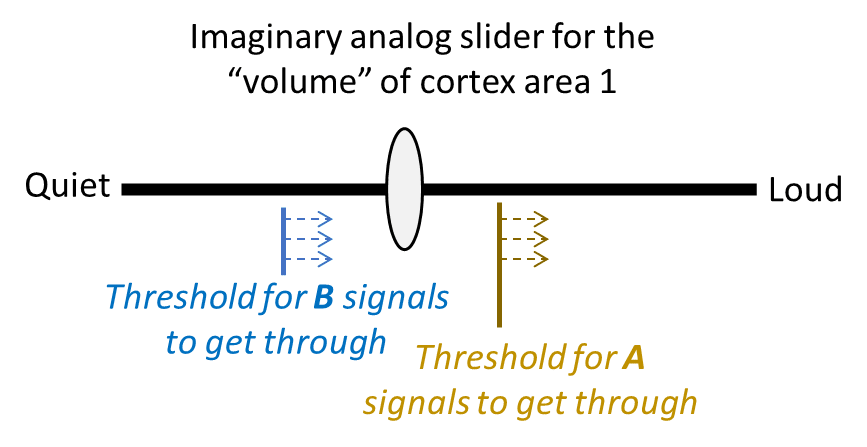

Now, think of a part of the cortex as having an adjustable “volume”, in terms of how strongly and clearly it is announcing what it’s up to right now. For example, if the thought crosses your mind that maybe you might move your finger, then you might find (if you look closely and/or use scientific equipment) that your finger twitches a bit, whereas if you strongly intend to move your finger, then your finger will actually move.

Anyway, imagine an analog slider for the “volume” of some part of cortex (“Cortex area 1” in that diagram).

- Mark the volume threshold where Cortex Area 1 is “shouting” loud enough that its A messages start getting through and having an effect.

- Separately, mark the volume threshold where Cortex Area 1 is “shouting” loud enough that its B messages start getting through and having an effect.

To avoid psychosis, we want the A mark to be at a lower volume setting than the B mark. That way, there will be no possible “volume level” in which the B messages are transmitting but the A messages are not. Such a volume level would put us in a situation where different cortex areas are sending output signals into the brainstem and body in an independent, uncoordinated way, each area oblivious to what the other areas are doing. I’m claiming that this situation can lead to psychotic delusions and hallucinations.
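The threshold argument can be checked mechanically. Below is a toy sketch in Python, assuming (hypothetically) that volume is a number in [0, 1] and that each message type gets through once the volume reaches its mark; it lists the sampled volume levels at which B messages transmit while A messages do not.

```python
def messages_through(volume, a_mark, b_mark):
    """Which output channels of one cortex area get through at this volume."""
    return {"A": volume >= a_mark, "B": volume >= b_mark}


def uncoordinated_volumes(a_mark, b_mark, steps=101):
    """Sampled volumes in [0, 1] where B (subcortical output) fires but A (cortex-to-cortex) does not."""
    risky = []
    for i in range(steps):
        volume = i / (steps - 1)
        through = messages_through(volume, a_mark, b_mark)
        if through["B"] and not through["A"]:
            risky.append(volume)
    return risky


# A mark below B mark: no uncoordinated window exists.
print(uncoordinated_volumes(a_mark=0.3, b_mark=0.6))  # []
# A mark above B mark: sampled volumes from 0.3 up to (not including) 0.6 are uncoordinated.
window = uncoordinated_volumes(a_mark=0.6, b_mark=0.3)
print(min(window), max(window))
```

The design point is just the ordering: whenever the A mark sits at or below the B mark, any volume loud enough for B is also loud enough for A, so the window is empty.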

What does this have to do with intuitive self-models? Well, we learn early in life to recognize the tell-tale signs of self-generated actions, as opposed to actions caused by some external force. And when different cortex areas are sending output signals into the brainstem and body in an independent, uncoordinated way, that tends not to match those tell-tale signs of self-generated actions. So the usual probabilistic inference process (§1.2) fits these observations to some different intuitive model, where the actions are not self-generated (i.e., not caused by the homunculus).

In the diagram above, I used “command to move my arm” as an example. By default, when my brainstem notices my arm moving unexpectedly, it fires an orienting / startle reflex—imagine having your arm resting on an armrest, and the armrest suddenly starts moving. Now, when it’s my own motor cortex initiating the arm movement, then that shouldn’t be “unexpected”, and hence shouldn’t lead to a startle. However, if different parts of the cortex are sending output signals independently, each oblivious to what the other parts are doing, then a key prediction signal won’t get sent down into the brainstem, and thus the motion will in fact be “unexpected” from the brainstem’s perspective. The resulting suite of sensations, including the startle, will be pretty different from how self-generated motor actions feel, and so it will be conceptualized differently, perhaps as a “delusion of control”.
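Here is a cartoon of that comparator logic in Python. It is a hedged sketch, not a neuroscience model: the class and function names are hypothetical, the “prediction signal” is reduced to a single boolean, and the probabilistic inference of §1.2 is collapsed into a deterministic rule for brevity.

```python
from dataclasses import dataclass


@dataclass
class MotorEvent:
    movement_observed: bool
    prediction_sent: bool  # hypothetical stand-in for the key prediction signal


def issue_arm_command(cortex_coordinated: bool) -> MotorEvent:
    """The arm moves; the prediction reaches the brainstem only if cortex areas are coordinated."""
    return MotorEvent(movement_observed=True, prediction_sent=cortex_coordinated)


def brainstem_reaction(event: MotorEvent) -> str:
    """Startle when movement arrives without a matching prediction."""
    if event.movement_observed and not event.prediction_sent:
        return "startle"
    return "none"


def intuitive_attribution(event: MotorEvent) -> str:
    """Toy inference: self-generated actions are the ones that don't startle."""
    if brainstem_reaction(event) == "startle":
        return "not me (e.g. delusion of control)"
    return "caused by the homunculus"


for coordinated in (True, False):
    event = issue_arm_command(coordinated)
    print(coordinated, brainstem_reaction(event), "->", intuitive_attribution(event))
```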

That’s just one example. The same idea works equally well if I replace “command to move my arm” with “command to do a certain inner speech act”, in which case the result is an auditory hallucination. Or it could be a “command to visually imagine something”, in which case the result is a visual hallucination. Or it could be some visceromotor signal that causes physiological arousal, perhaps leading to a delusion of reference, and so on.

7.6 Hallucinations and delusions via strong emotions (BPD & psychotic depression)

This is a second, unrelated reason that psychology can lead to hallucinations, with the help of intuitive self-models.

In §5.3, I talked about Borderline Personality Disorder (BPD), and suggested (see Lorien Psychiatry reference page) that we should think of BPD as basically a condition wherein emotions are unusually strong. I related BPD to Dissociative Identity Disorder (DID), via the simple idea that angry-me, anxious-me, traumatized-me, etc. are always somewhat different, but in people with BPD they’re super different, which can be a first step toward full-blown DID (especially when it’s also being egged on by culture and/or psychologists).

Fun fact: “between 50% and 90% of patients with BPD report hearing voices that other people do not hear” (ref). Why would that be? I’m guessing that it’s basically a similar explanation. Let’s leave aside DID and assume there’s just one homunculus. If that homunculus is currently conceptualized as being in emotion state E₁, and then an inner-speech-involving thought θ appears which is highly charged in a very different emotion state E₂, then it might feel intuitively implausible that the homunculus summoned θ; so instead θ might be intuitively modeled as having come from a disembodied voice.
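A minimal probabilistic sketch of this guess, in Python. Everything specific here is an assumption for illustration: emotion states as hypothetical (valence, arousal) points, a likelihood for “the homunculus summoned θ” that falls off exponentially with the distance between E₁ and E₂, a flat likelihood for “a disembodied voice”, and made-up priors.

```python
import math


def p_self_generated(e1, e2, prior_self=0.95, scale=0.25, voice_likelihood=0.1):
    """Posterior that thought θ (charged in state e2) was summoned by the homunculus (in state e1)."""
    mismatch = math.dist(e1, e2)  # Euclidean distance between emotion states
    like_self = math.exp(-mismatch / scale)
    self_term = prior_self * like_self
    voice_term = (1 - prior_self) * voice_likelihood
    return self_term / (self_term + voice_term)


calm = (0.5, 0.2)                # hypothetical E1: mildly positive, low arousal
slightly_different = (0.4, 0.3)  # hypothetical nearby state
furious = (-0.9, 0.9)            # hypothetical E2: very negative, high arousal

print(f"{p_self_generated(calm, slightly_different):.2f}")
print(f"{p_self_generated(calm, furious):.2f}")
```

Only the direction of the effect is meant seriously: if emotions swing harder (as suggested for BPD), large E₁/E₂ mismatches come up more often, and each one tilts the inference away from “the homunculus did it”.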

(Here’s an alternative theory, which isn’t mutually exclusive: Anxiety and other aversive emotions come with involuntary attention (§6.5.2.1). If involuntary attention is the actual explanation of why thought θ was summoned, then that’s inconsistent with “the homunculus did it”. See related discussion in §3.5.2.)

So that’s BPD. What about psychotic depression? My current guess is that the (usually auditory) hallucinations in psychotic depression tend to happen for the same reason as in BPD above: sudden bursts of very strong negative emotions.

I think the delusions in psychotic depression are kinda different from the hallucinations, and generally unrelated to this series.[6]

Conclusion

Thus ends our six-post whirlwind tour of some different intuitive self-models. The next post will round out the series by suggesting that people tend to be really bad at thinking about how motivation works in the various unusual self-models that I’ve been discussing (trance, DID, awakening, and hallucinations). I suggest that the problem is that we’re tied down by those non-veridical intuitions described in Post 3, the ones related to “vitalistic force”, “wanting”, the “homunculus”, and free will more generally. If we’re going to jettison those deeply misleading intuitions, then what do we replace them with? I have ideas!

Thanks Johannes Mayer and Justis Mills for critical comments on earlier drafts.

[1]

My current understanding is that all visual imagination, and some visual hallucinations, fundamentally work through attention-control. Those are the ones I’m talking about in this post. Other visual hallucinations, such as those caused by certain seizures or hallucinogenic drugs, have a very different mechanism—they mess around with visual processing areas directly. I won’t be talking about the latter kind of hallucination in this series. I think those latter hallucinations would be “detailed” in a way that the former kind is not, especially for people on the aphantasia spectrum.

Similar comments apply to auditory and other kinds of hallucinations.

[2]

In an earlier post I cited a self-described anthropologist on reddit who wrote: “I work with Haitian Vodou practitioners where … hearing the lwa tell you to do something or give you important guidance is as everyday normal as hearing your phone ring.” But I would like a better and more detailed source than that!

[3]

I won’t be covering every aspect of Julian Jaynes’s book, just a couple topics where I have something to say. For other aspects, see the book itself, along with helpful reviews / responses by Scott Alexander (and corresponding rebuttal by a Jaynes partisan) and by Kevin Simler.

[4]

“[James C.] Scott thinks of these collapses not as disasters or mysteries but as the expected order of things. It is a minor miracle that some guy in a palace can get everyone to stay on his fields and work for him and pay him taxes, and no surprise when this situation stops holding. These collapses rarely involved great loss of life. They could just be a simple transition from “a bunch of farming towns pay taxes to the state center” to “a bunch of farming towns are no longer paying taxes to the state center”.” Source: Scott Alexander book review of Against the Grain.

[5]

See also the example I quoted in §4.5.4 where a hypnotized person was clearly pushing back against her hypnotist, when the hypnotist suggested that she do a thing that she didn’t want to do: “She was quite reluctant to make this effort, eventually starting to do it with a final plea: ‘Do you really want me to do this? I’ll do it if you say so.’” …Admittedly, that’s a bit of a weird case, because the thing that she didn’t want to do was to act as if she were not hypnotized! But still, she did push back while under hypnosis, and I think that’s an interesting datapoint.

[6]

My current understanding of psychotic depression delusions is as follows: The biggest manifestation of psychotic depression is not hallucinations but rather delusions. However, the particular kinds of delusions in psychotic depression don’t seem to be fundamentally different from the everyday phenomenon of emotions warping beliefs. For example, if I’m feeling really down, then maybe I’ll say to myself “everybody hates me”, and maybe I’ll even believe it. In CBT lingo, they call this “emotional reasoning”. In math lingo, I call this “probabilistic inference, but treating my own emotional state as one of the sources of evidence” (related discussion). Anyway, I guess that if “emotional reasoning” becomes strong enough, clinicians will stop calling it “emotional reasoning” and start calling it “psychotic delusions”. But I think that’s a difference of degree, not kind. I’m not an expert and am open to feedback.
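The “difference of degree, not kind” point can be sketched in log-odds form, in Python. This assumes (hypothetically) that one’s mood contributes a log-likelihood ratio proportional to its intensity; the prior, intensities, and weight are made up for illustration.

```python
import math


def logit(p):
    return math.log(p / (1 - p))


def sigmoid(x):
    return 1 / (1 + math.exp(-x))


def belief_after_mood(prior, mood_intensity, weight=1.0):
    """Bayesian update where one's own mood counts as evidence (log-odds form)."""
    return sigmoid(logit(prior) + weight * mood_intensity)


PRIOR = 0.05  # hypothetical prior on "everybody hates me"
for intensity in (0, 1, 3, 6):
    print(intensity, round(belief_after_mood(PRIOR, intensity), 3))
```

Nothing in the formula changes between the mild and intense cases; belief just slides continuously toward certainty as the mood gets stronger, with no point where a new mechanism kicks in.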

2 comments


comment by Gunnar_Zarncke · 2024-10-29T14:42:02.096Z

Congrats again for the sequence! It all fits together nicely.

While it makes sense to exclude hallucinogenic drugs and seizures, at least hallucinogenic drugs seem to fit into the pattern if I understand the effect correctly.

Auditory hallucinations, top-down processing and language perception - this paper says that imbalances in top-down cortical regulation are responsible for auditory hallucinations:

Participants who reported AH in the week preceding the test had a higher false alarm rate in their auditory perception compared with those without such (recent) experiences.

And this page Models of psychedelic drug action: modulation of cortical-subcortical circuits says that hallucinogenic drugs lead to such imbalances. So it is plausibly the same mechanism.

comment by AprilSR · 2024-10-29T13:54:31.424Z

Switch to neuroscience. I think we have an innate “sense of sociality” in our brainstem (or maybe hypothalamus), analogous to how (I claim) fear-of-heights is triggered by an innate brainstem “sense” that we’re standing over a precipice.

I think lately I've noticed that how much written text triggers this for me varies a bit over time?