Causal Reference

post by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2012-10-20T22:12:30.227Z · 247 comments


Followup to: The Fabric of Real Things, Stuff That Makes Stuff Happen

Previous meditation: "Does your rule forbid epiphenomenalist theories of consciousness in which consciousness is caused by neurons, but doesn't affect those neurons in turn? The classic argument for epiphenomenal consciousness is that we can imagine a universe where people behave exactly the same way, but there's nobody home - no awareness, no consciousness, inside the brain. For all the atoms in this universe to be in the same place - for there to be no detectable difference internally, not just externally - 'consciousness' would have to be something created by the atoms in the brain, but which didn't affect those atoms in turn. It would be an effect of atoms, but not a cause of atoms. Now, I'm not so much interested in whether you think epiphenomenal theories of consciousness are true or false - rather, I want to know if you think they're impossible or meaningless a priori based on your rules."

Is it coherent to imagine a universe in which a real entity can be an effect but not a cause?

Well... there are a couple of senses in which it seems imaginable. It's important to remember that imagining things yields info primarily about what human brains can imagine. It only provides info about reality to the extent that we think imagination and reality are systematically correlated for some reason.

That said, I can certainly write a computer program in which there's a tier of objects affecting each other, and a second tier - a lower tier - of epiphenomenal objects which are affected by them, but don't affect them. For example, I could write a program to simulate some balls that bounce off each other, and then some little shadows that follow the balls around.
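The ball-and-shadow program just described can be sketched in a few lines. This is a minimal illustration, not code from the post: `Ball`, `Shadow`, `step`, and `run` are hypothetical names, the physics is a one-dimensional toy, and each shadow is assumed to drift halfway toward its ball every tick. The structural point is that `step` computes the new balls without ever reading `shadows`.

```python
from dataclasses import dataclass


@dataclass
class Ball:
    x: float  # position on a one-dimensional track
    v: float  # velocity per tick


@dataclass
class Shadow:
    x: float


def step(balls, shadows, width=10.0):
    """Advance the toy universe one tick.

    Upper tier: the new balls are computed from the old balls only.
    Lower tier: each shadow drifts toward its ball, so shadows are affected
    by balls, but nothing in this function lets a shadow affect a ball.
    """
    new_balls = [Ball(b.x + b.v, b.v) for b in balls]
    for b in new_balls:
        if not 0.0 <= b.x <= width:  # bounce off the walls
            b.v = -b.v
            b.x = min(max(b.x, 0.0), width)
    for i in range(len(new_balls)):
        for j in range(i + 1, len(new_balls)):
            a, c = new_balls[i], new_balls[j]
            if abs(a.x - c.x) < 0.5:  # equal-mass elastic collision: swap velocities
                a.v, c.v = c.v, a.v
    new_shadows = [Shadow(s.x + 0.5 * (b.x - s.x)) for s, b in zip(shadows, new_balls)]
    return new_balls, new_shadows


def run(balls, shadows, ticks=50):
    for _ in range(ticks):
        balls, shadows = step(balls, shadows)
    return balls, shadows


balls_a, _ = run([Ball(1.0, 0.7), Ball(6.0, -0.4)], [Shadow(0.0), Shadow(0.0)])
balls_b, _ = run([Ball(1.0, 0.7), Ball(6.0, -0.4)], [Shadow(9.0), Shadow(-3.0)])
print(balls_a == balls_b)  # True: the shadows made no difference to the balls
```

Two runs with identical balls but different shadows produce identical ball trajectories; only an observer outside the program, who also prints the shadows, can tell the runs apart.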

But then I only know about the shadows because I'm outside that whole universe, looking in. So my mind is being affected by both the balls and shadows - to observe something is to be affected by it. I know where the shadow is, because the shadow makes pixels be drawn on screen, which make my eye see pixels. If your universe has two tiers of causality - a tier with things that affect each other, and another tier of things that are affected by the first tier without affecting them - then could you know that fact from inside that universe?

Again, this seems easy to imagine as long as objects in the second tier can affect each other. You'd just have to be living in the second tier! We can imagine, for example - this wasn't the way things worked out in our universe, but it might've seemed plausible to the ancient Greeks - that the stars in heaven (and the Sun as a special case) could affect each other and affect Earthly forces, but no Earthly force could affect them:

(Here the X'd-arrow stands for 'cannot affect'.)

The Sun's light would illuminate Earth, so it would cause plant growth. And sometimes you would see two stars crash into each other and explode, so you'd see they could affect each other. (And affect your brain, which was seeing them.) But the stars and Sun would be made out of a different substance, the 'heavenly material', and throwing any Earthly material at it would not cause it to change state in the slightest. The Earthly material might be burned up, but the Sun would occupy exactly the same position as before. It would affect us, but not be affected by us.

(To clarify an important point raised in the comments: In standard causal diagrams and in standard physics, no two individual events ever affect each other; there's a causal arrow from the PAST to FUTURE but never an arrow from FUTURE to PAST. What we're talking about here is the sun and stars over time, and the generalization over causal arrows that point from Star-in-Past to Sun-in-Present and Sun-in-Present back to Star-in-Future. The standard formalism dealing with this would be Dynamic Bayesian Networks (DBNs) in which there are repeating nodes and repeating arrows for each successive timeframe: $X_1, X_2, X_3, \ldots$, and causal laws $F$ relating $X_i$ to $X_{i+1}$. If the laws of physics did not repeat over time, it would be rather hard to learn about the universe! The Sun repeatedly sends out photons, and they obey the same laws each time they fall on Earth; rather than the $F_i$ being new transition tables each time, we see a constant $F_{\text{physics}}$ over and over. By saying that we live in a single-tier universe, we're observing that whenever there are F-arrows, causal-link-types, which (over repeating time) descend from variables-of-type-X to variables-of-type-Y (like present photons affecting future electrons), there are also arrows going back from Ys to Xs (like present electrons affecting future photons). If we weren't generalizing over time, it couldn't possibly make sense to speak of thingies that "affect each other" - causal diagrams don't allow directed cycles!)
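The generalization over time described in that parenthetical can be made concrete with a table of type-level laws that gets unrolled into a DBN. In this sketch, `LAWS`, `TWO_TIER_LAWS`, `unroll`, `points_forward_in_time`, `one_way_pairs`, and the photon/electron/star/earth types are all hypothetical. Each entry says which variable types at time t feed a given type at time t + 1. The cycle "photons affect electrons, electrons affect photons" exists only in the law table; every unrolled arrow points forward in time, so the DBN itself stays acyclic. Following the paragraph's criterion, a law table looks single-tier when every cross-type law has a reverse law.

```python
# Type-level laws: for each variable type, the set of types at time t that feed it at t + 1.
LAWS = {
    "photon": {"photon", "electron"},    # future photons depend on present photons and electrons
    "electron": {"electron", "photon"},  # future electrons depend on present electrons and photons
}

# A two-tier variant in the spirit of the 'heavenly material' hypothesis.
TWO_TIER_LAWS = {
    "star": {"star"},            # stars only respond to stars
    "earth": {"earth", "star"},  # Earthly matter responds to both
}


def unroll(laws, steps):
    """Apply the same law table at every timestep, giving arrows (type, t) -> (type, t + 1)."""
    return [
        ((src, t), (dst, t + 1))
        for t in range(steps)
        for dst, sources in laws.items()
        for src in sources
    ]


def points_forward_in_time(arrows):
    """True if every arrow goes from an earlier slice to a later one, which rules out directed cycles."""
    return all(t_dst > t_src for (_, t_src), (_, t_dst) in arrows)


def one_way_pairs(laws):
    """Type pairs (X, Y) with a law X -> Y but no law Y -> X."""
    return {
        (src, dst)
        for dst, sources in laws.items()
        for src in sources
        if src != dst and dst not in laws.get(src, set())
    }


print(points_forward_in_time(unroll(LAWS, 3)))  # True
print(one_way_pairs(LAWS))                      # set(): single tier
print(one_way_pairs(TWO_TIER_LAWS))             # {('star', 'earth')}: stars form an upper tier
```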

A two-tier causal universe seems easy to imagine, even easy to specify as a computer program. If you were arranging a Dynamic Bayes Net at random, would it randomly have everything in a single tier? If you were designing a causal universe at random, wouldn't there randomly be some things that appeared to us as causes but not effects? And yet our own physicists haven't discovered any upper-tier particles which can move us without being movable by us. There might be a hint here at what sort of thingies tend to be real in the first place - that, for whatever reasons, the Real Rules somehow mandate or suggest that all the causal forces in a universe be on the same level, capable of both affecting and being affected by each other.
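The question of what a randomly arranged causal universe would look like can be poked at with a quick simulation. Everything here is an assumption made for illustration: `random_law_graph` draws each possible cross-type law independently with probability `p`, which is only one arbitrary way of "designing a universe at random", and "single tier" is read as strong connectivity, meaning every type can affect, and be affected by, every other type through some chain of laws (a looser test than requiring a direct reverse arrow).

```python
import random


def reachable(graph, start):
    """All nodes reachable from start by following arrows."""
    seen, stack = {start}, [start]
    while stack:
        node = stack.pop()
        for nxt in graph[node]:
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen


def is_single_tier(graph):
    """Strongly connected: every type reaches every other type, and is reached by it."""
    nodes = list(graph)
    if not nodes:
        return True
    reverse = {n: set() for n in nodes}
    for src, dsts in graph.items():
        for dst in dsts:
            reverse[dst].add(src)
    start = nodes[0]
    return len(reachable(graph, start)) == len(nodes) == len(reachable(reverse, start))


def random_law_graph(n_types, p, rng):
    """Hypothetical random universe: each ordered pair of distinct types gets a law X -> Y with probability p."""
    return {x: {y for y in range(n_types) if y != x and rng.random() < p} for x in range(n_types)}


def fraction_single_tier(n_types, p, trials=2000, seed=0):
    rng = random.Random(seed)
    hits = sum(is_single_tier(random_law_graph(n_types, p, rng)) for _ in range(trials))
    return hits / trials


for p in (0.1, 0.3, 0.5):
    print(f"p = {p}: {fraction_single_tier(8, p):.3f} of random 8-type universes are single-tier")
```

How often the printed fractions come out near 1 depends heavily on `p` in this made-up model; the sketch shows one way to pose the question, and its numbers say nothing about real physics.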

Still, we don't actually know the Real Rules are like that; and so it seems premature to assign a priori zero probability to hypotheses with multi-tiered causal universes. Discovering a class of upper-tier affect-only particles seems imaginable[1] - we can imagine which experiences would convince us that they existed. If we're in the Matrix, we can see how to program a Matrix like that. If there's some deeper reason why that's impossible in any base-level reality, we don't know it yet. So we probably want to call that a meaningful hypothesis for now.

But what about lower-tier particles which can be affected by us, and yet never affect us?

Perhaps there are whole sentient Shadow Civilizations living on my nose hairs which can never affect those nose hairs, but find my nose hairs solid beneath their feet. (The solid Earth affecting them but not being affected, like the Sun's light affecting us in the 'heavenly material' hypothesis.) Perhaps I wreck their world every time I sneeze. It certainly seems imaginable - you could write a computer program simulating physics like that, given sufficient perverseness and computing power...

And yet the fundamental question of rationality - "What do you think you know, and how do you think you know it?" - raises the question:

How could you possibly know about the lower tier, even if it existed?

To observe something is to be affected by it - to have your brain and beliefs take on different states, depending on that thing's state. How can you know about something that doesn't affect your brain?

In fact there's an even deeper question, "How could you possibly talk about that lower tier of causality even if it existed?"

Let's say you're a Lord of the Matrix. You write a computer program which first computes the physical universe as we know it (or a discrete approximation), and then you add a couple of lower-tier effects as follows:

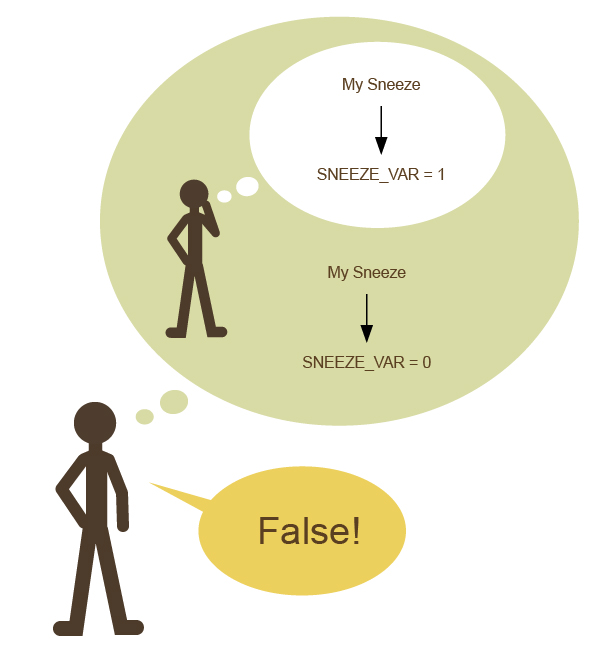

First, every time I sneeze, the binary variable YES_SNEEZE will be set to the second of its two possible values.

Second, every time I sneeze, the binary variable NO_SNEEZE will be set to the first of its two possible values.

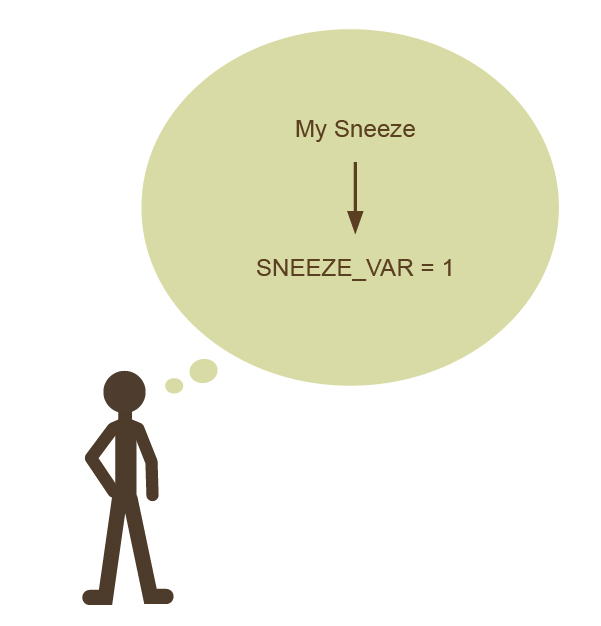

Now let's say that - somehow - even though I've never caught any hint of the Matrix - I just magically think to myself one day, "What if there's a variable that watches when I sneeze, and gets set to 1?"

It will be all too easy for me to imagine that this belief is meaningful and could be true or false:

And yet in reality - as you know from outside the matrix - there are two shadow variables that get set when I sneeze. How can I talk about one of them, rather than the other? Why should my thought about '1' refer to their second possible value rather than their first possible value, inside the Matrix computer program? If we tried to establish a truth-value in this situation, to compare my thought to the reality inside the computer program - why compare my thought about SNEEZE_VAR to the variable YES_SNEEZE instead of NO_SNEEZE, or compare my thought '1' to the first possible value instead of the second possible value?
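The sneeze example can be written as a tiny program to show where reference breaks down. In this sketch `run_matrix` and `swap_rule` are hypothetical names, values are stored as indices (0 for a variable's first possible value, 1 for its second), and both shadow variables are assumed to start at 0. The agent's experiences are computed without reading the shadow variables, so two rules that differ only in the shadow tier produce identical experience logs, while the four candidate readings of "SNEEZE_VAR = 1" disagree about whether the thought is true.

```python
def run_matrix(sneezes, swap_rule=False):
    """Hypothetical Matrix program: returns what the agent experiences, plus the hidden shadow tier."""
    shadows = {"YES_SNEEZE": 0, "NO_SNEEZE": 0}  # assumed initial values
    experiences = []
    for sneezed in sneezes:
        experiences.append("sneeze" if sneezed else "no sneeze")  # never reads `shadows`
        if sneezed:
            # Post's rule: YES_SNEEZE -> second value (1), NO_SNEEZE -> first value (0).
            yes_value, no_value = (0, 1) if swap_rule else (1, 0)
            shadows["YES_SNEEZE"] = yes_value
            shadows["NO_SNEEZE"] = no_value
    return experiences, shadows


history = [False, True, False]
experiences, shadows = run_matrix(history)
swapped_experiences, _ = run_matrix(history, swap_rule=True)
print(experiences == swapped_experiences)  # True: the agent cannot tell the two programs apart

# Four ways to map the thought "SNEEZE_VAR = 1" onto the program's internals.
readings = [(var, value) for var in ("YES_SNEEZE", "NO_SNEEZE") for value in (0, 1)]
verdicts = {reading: shadows[reading[0]] == reading[1] for reading in readings}
print(verdicts)  # two readings make the thought true and two make it false
```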

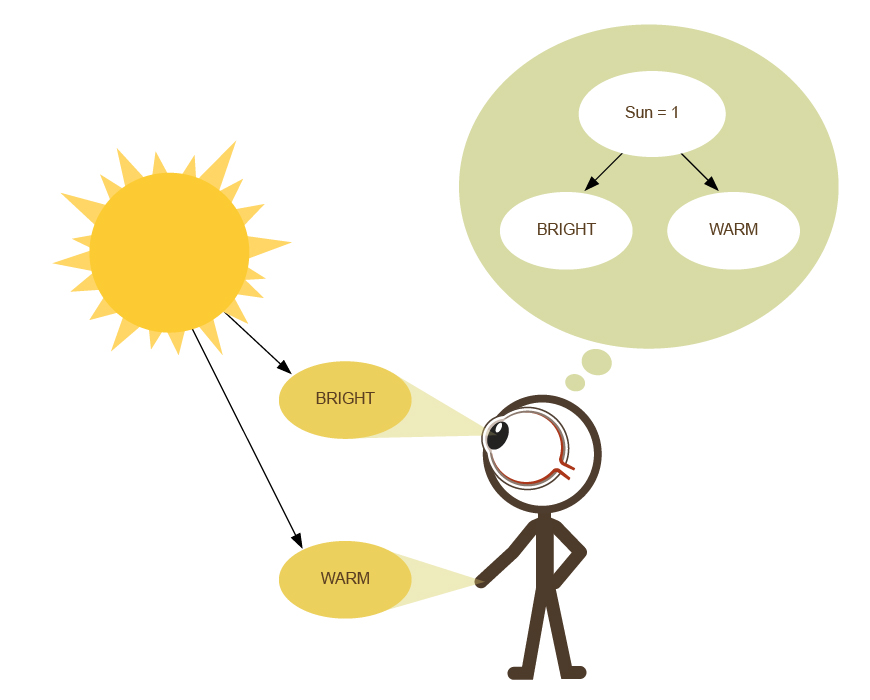

Under more epistemically healthy circumstances, when you talk about things that are not directly sensory experiences, you will reference a causal model of the universe that you inducted to explain your sensory experiences. Let's say you repeatedly go outside at various times of day, and your eyes and skin directly experience BRIGHT-WARM, BRIGHT-WARM, BRIGHT-WARM, DARK-COOL, DARK-COOL, etc. To explain the patterns in your sensory experiences, you hypothesize a latent variable we'll call 'Sun', with some kind of state which can change between 1, which causes BRIGHTness and WARMness, and 0, which causes DARKness and COOLness. You believe that the state of the 'Sun' variable changes over time, but usually changes less frequently than you go outside.
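The latent 'Sun' hypothesis can be checked by exact enumeration. The probabilities below are made-up numbers, `P_SUN`, `P_BRIGHT`, `P_WARM`, `joint`, and `prob` are hypothetical names, and the sketch ignores the slow change of 'Sun' over time. It shows what a common cause buys you: BRIGHT and WARM are strongly correlated overall, yet independent once the state of 'Sun' is fixed.

```python
from itertools import product

P_SUN = {1: 0.5, 0: 0.5}        # prior over the latent 'Sun' state (made-up numbers)
P_BRIGHT = {1: 0.95, 0: 0.05}   # P(BRIGHT | Sun = s)
P_WARM = {1: 0.90, 0: 0.10}     # P(WARM | Sun = s)
NAMES = ("sun", "bright", "warm")


def joint():
    """Exact joint distribution P(sun, bright, warm) under the one-latent-cause model."""
    table = {}
    for sun, bright, warm in product((0, 1), repeat=3):
        p_b = P_BRIGHT[sun] if bright else 1 - P_BRIGHT[sun]
        p_w = P_WARM[sun] if warm else 1 - P_WARM[sun]
        table[(sun, bright, warm)] = P_SUN[sun] * p_b * p_w
    return table


def prob(table, **fixed):
    """Probability that the named variables take the given values, e.g. prob(t, bright=1)."""
    return sum(
        p for key, p in table.items()
        if all(key[NAMES.index(name)] == value for name, value in fixed.items())
    )


t = joint()
p_bw = prob(t, bright=1, warm=1)
p_b, p_w = prob(t, bright=1), prob(t, warm=1)
print(f"P(BRIGHT, WARM) = {p_bw:.2f}, but P(BRIGHT) * P(WARM) = {p_b * p_w:.2f}")
# Conditioned on Sun = 1, the two sensations factorize into 0.95 * 0.90.
print(prob(t, sun=1, bright=1, warm=1) / prob(t, sun=1))
```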


Standing here outside the Matrix, we might be tempted to compare your beliefs about "Sun = 1" to the real universe's state regarding the visibility of the sun in the sky (or rather, the Earth's rotational position).

But even if we compress the sun's visibility down to a binary categorization, how are we to know that your thought "Sun = 1" is meant to correspond to the sun being visible in the sky, rather than the sun being occluded by the Earth? Why the first state of the variable, rather than the second state?

How indeed are we to know that this thought "Sun = 1" is meant to compare to the sun at all, rather than an anteater in Venezuela?

Well, because that 'Sun' thingy is supposed to be the cause of BRIGHT and WARM feelings, and if you trace back the cause of those sensory experiences in reality you'll arrive at the sun that the 'Sun' thought allegedly corresponds to. And to distinguish between whether the sun being visible in the sky is meant to correspond to 'Sun'=1 or 'Sun'=0, you check the conditional probabilities for that 'Sun'-state giving rise to BRIGHT - if the actual sun being visible has a 95% chance of causing the BRIGHT sensory feeling, then that true state of the sun is intended to correspond to the hypothetical 'Sun'=1, not 'Sun'=0.
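The state-matching step can be sketched as choosing the pairing of hypothesized states with real states whose conditional probabilities of BRIGHT agree best. The numbers, the names `MODEL_P_BRIGHT`, `REAL_P_BRIGHT`, and `best_correspondence`, and the use of summed absolute differences as the score are all assumptions for illustration; brute force over permutations is fine for two states.

```python
from itertools import permutations

MODEL_P_BRIGHT = {"Sun=1": 0.95, "Sun=0": 0.03}                       # the agent's hypothesis
REAL_P_BRIGHT = {"sun visible": 0.95, "sun occluded by Earth": 0.02}  # seen from outside the Matrix


def best_correspondence(model, real):
    """Pair hypothesized states with real states so that P(BRIGHT | state) agrees as closely as possible."""
    model_states = list(model)

    def mismatch(real_order):
        return sum(abs(model[m] - real[r]) for m, r in zip(model_states, real_order))

    return dict(zip(model_states, min(permutations(real), key=mismatch)))


print(best_correspondence(MODEL_P_BRIGHT, REAL_P_BRIGHT))
# {'Sun=1': 'sun visible', 'Sun=0': 'sun occluded by Earth'}
```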

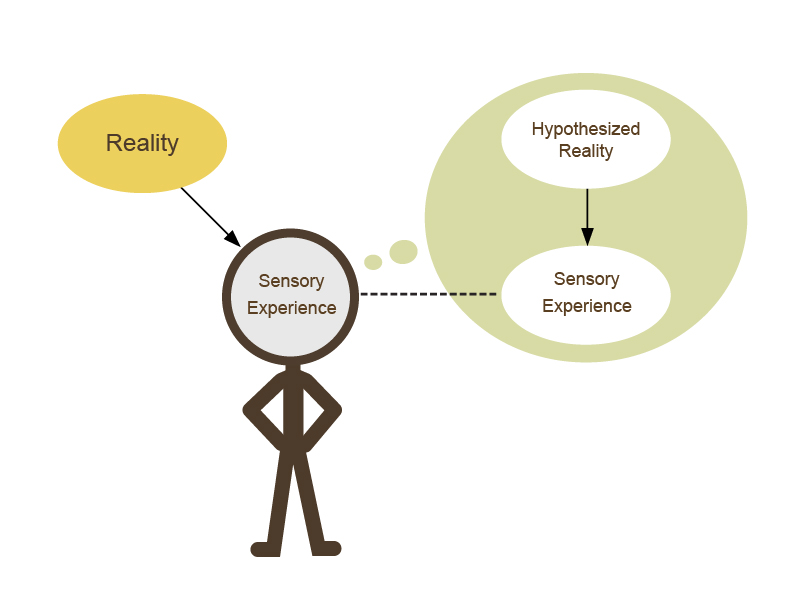

Or to put it more generally, in cases where we have...

...then the correspondence between map and territory can at least in principle be point-wise evaluated by tracing causal links back from sensory experiences to reality, and tracing hypothetical causal links from sensory experiences back to hypothetical reality. We can't directly evaluate that truth-condition inside our own thoughts; but we can perform experiments and be corrected by them.
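One way to make "tracing causal links back from sensory experiences" concrete is sketched below; it is an assumption of this example, not a definition from the post. In the agent's model, 'Sun' is the single hypothesized cause of both BRIGHT and WARM, so its referent is taken to be the nearest common cause of those two experiences in the real graph. The graph `REAL_PARENTS` and the helpers `ancestors` and `referent` are hypothetical.

```python
# Hypothetical real causal graph, seen from outside: each node maps to its direct causes.
REAL_PARENTS = {
    "BRIGHT": {"visible_light"},
    "WARM": {"infrared"},
    "visible_light": {"sun_visible"},
    "infrared": {"sun_visible"},
    "sun_visible": {"earth_rotation"},
    "earth_rotation": set(),
    "anteater_in_venezuela": set(),
}


def ancestors(parents, node):
    """Every node upstream of `node` (not including the node itself)."""
    found, stack = set(), list(parents[node])
    while stack:
        n = stack.pop()
        if n not in found:
            found.add(n)
            stack.extend(parents[n])
    return found


def referent(parents, senses):
    """The real node acting as nearest common cause of all these senses, or None if ambiguous."""
    common = set.intersection(*(ancestors(parents, s) for s in senses))
    # Drop common causes that sit upstream of another common cause.
    lowest = {c for c in common if not any(c in ancestors(parents, other) for other in common)}
    return lowest.pop() if len(lowest) == 1 else None


print(referent(REAL_PARENTS, ["BRIGHT", "WARM"]))  # sun_visible
```

The anteater is never a candidate because it is not upstream of either experience, and `earth_rotation` is set aside because it is upstream of another common cause.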

Being able to imagine that your thoughts are meaningful and that a correspondence between map and territory is being maintained is no guarantee that your thoughts are true. On the other hand, if you can't even imagine within your own model how a piece of your map could have a traceable correspondence to the territory, that is a very bad sign for the belief being meaningful, let alone true. Checking to see whether you can imagine a belief being meaningful is a test which will occasionally throw out bad beliefs, though it is no guarantee of a belief being good.

Okay, but what about the idea that it should be meaningful to talk about whether or not a spaceship continues to exist after it travels over the cosmological horizon? Doesn't this theory of meaningfulness seem to claim that you can only sensibly imagine something that makes a difference to your sensory experiences?

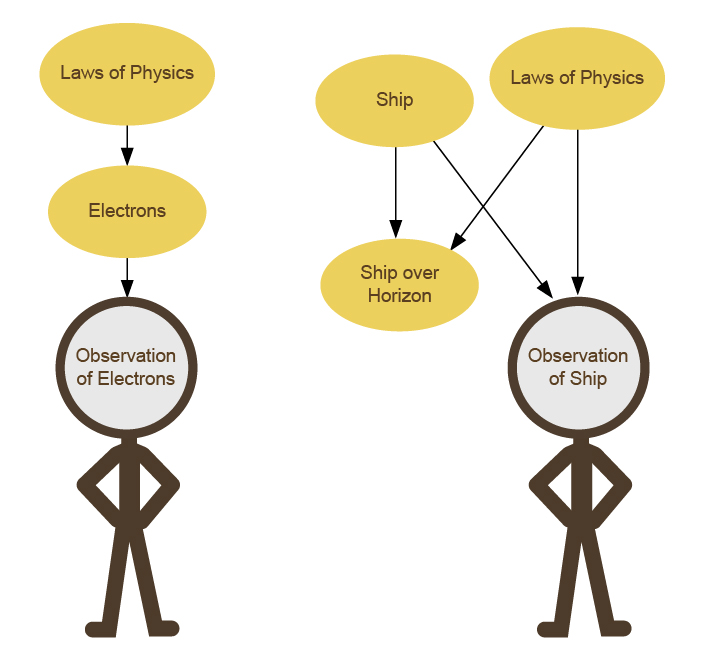

No. It says that you can only talk about events that your sensory experiences pin down within the causal graph. If you observe enough protons, electrons, neutrons, and so on, you can pin down the physical generalization which says, "Mass-energy is neither created nor destroyed; and in particular, particles don't vanish into nothingness without a trace." It is then an effect of that rule, combined with our previous observation of the ship itself, which tells us that there's a ship that went over the cosmological horizon and now we can't see it any more.

To navigate referentially to the fact that the ship continues to exist over the cosmological horizon, we navigate from our sensory experience up to the laws of physics, by talking about the cause of electrons not blinking out of existence; we also navigate up to the ship's existence by tracing back the cause of our observation of the ship being built. We can't see the future ship over the horizon - but the causal links down from the ship's construction, and from the laws of physics saying it doesn't disappear, are both pinned down by observation - there's no difficulty in figuring out which causes we're talking about, or what effects they have.[2]
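The ship-over-the-horizon inference can be sketched as applying one inferred transition law repeatedly, starting from the last state we actually observed. `HORIZON`, `f_physics`, `observe`, and the numbers are hypothetical; the law simply says that objects keep moving and do not blink out of existence, standing in for the conservation generalization described above. The same function is applied at every step, in line with footnote [2]'s suggestion that we navigate to one repeated law rather than to one more node.

```python
HORIZON = 100.0  # distance beyond which nothing from the ship can reach us (made-up)


def f_physics(state):
    """Inferred transition law, applied identically at every step: keep moving, never vanish."""
    return {"x": state["x"] + state["v"], "v": state["v"], "exists": state["exists"]}


def observe(state):
    """What reaches our senses: a position reading, or None once the ship is past the horizon."""
    return state["x"] if state["exists"] and state["x"] < HORIZON else None


history = [{"x": 90.0, "v": 4.0, "exists": True}]  # the last state we actually saw
for _ in range(5):
    history.append(f_physics(history[-1]))

print([observe(s) for s in history])  # [90.0, 94.0, 98.0, None, None, None]
print([s["exists"] for s in history])  # all True: the law still says the ship is there
```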

All righty-ighty, let's revisit that meditation:

"Does your rule forbid epiphenomenalist theories of consciousness in which consciousness is caused by neurons, but doesn't affect those neurons in turn? The classic argument for epiphenomenal consciousness is that we can imagine a universe where people behave exactly the same way, but there's nobody home - no awareness, no consciousness, inside the brain. For all the atoms in this universe to be in the same place - for there to be no detectable difference internally, not just externally - 'consciousness' would have to be something created by the atoms in the brain, but which didn't affect those atoms in turn. It would be an effect of atoms, but not a cause of atoms. Now, I'm not so much interested in whether you think epiphenomenal theories of consciousness are true or false - rather, I want to know if you think they're impossible or meaningless a priori based on your rules."

The closest theory to this which definitely does seem coherent - i.e., it's imaginable that it has a pinpointed meaning - would be if there was another little brain living inside my brain, made of shadow particles which could affect each other and be affected by my brain, but not affect my brain in turn. This brain would correctly hypothesize the reasons for its sensory experiences - that there was, from its perspective, an upper tier of particles interacting with each other that it couldn't affect. Upper-tier particles are observable, i.e., can affect lower-tier senses, so it would be possible to correctly induct a simplest explanation for them. And this inner brain would think, "I can imagine a Zombie Universe in which I am missing, but all the upper-tier particles go on interacting with each other as before." If we imagine that the upper-tier brain is just a robotic sort of agent, or a kitten, then the inner brain might justifiably imagine that the Zombie Universe would contain nobody to listen - no lower-tier brains to watch and be aware of events.

We could write that computer program, given significantly more knowledge and vastly more computing power and zero ethics.

But this inner brain composed of lower-tier shadow particles cannot write upper-tier philosophy papers about the Zombie universe. If the inner brain thinks, "I am aware of my own awareness", the upper-tier lips cannot move and say aloud, "I am aware of my own awareness" a few seconds later. That would require causal links from lower particles to upper particles.

If we try to suppose that the lower tier isn't a complicated brain with an independent reasoning process that can imagine its own hypotheses, but just some shadowy pure experiences that don't affect anything in the upper tier, then clearly the upper-tier brain must be thinking meaningless gibberish when the upper-tier lips say, "I have a lower tier of shadowy pure experiences which did not affect in any way how I said these words." The deliberating upper brain that invents hypotheses for sense data can only use sense data that affects the upper neurons carrying out the search for hypotheses that can be reported by the lips. Any shadowy pure experiences couldn't be inputs into the hypothesis-inventing cognitive process. So the upper brain would be talking nonsense.
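The claim that shadow experiences cannot be inputs to the reporting process can be phrased information-theoretically. In this toy model (all names and numbers hypothetical) the upper-tier brain state `u` is a fair coin, the lower-tier shadow `l` copies it 90% of the time, and the spoken report `r` is computed from `u` alone. The report is correlated with the shadow through their common cause, but the conditional mutual information I(L; R | U) is zero: once the upper tier is fixed, the report carries no information about the shadow. The contrasting model, in which the report reads the shadow directly, needs exactly the lower-to-upper arrow the two-tier picture forbids.

```python
from itertools import product
from math import log2


def cmi(joint):
    """Conditional mutual information I(L; R | U) in bits, for a dict {(l, r, u): probability}."""
    p_u, p_lu, p_ru = {}, {}, {}
    for (l, r, u), p in joint.items():
        p_u[u] = p_u.get(u, 0.0) + p
        p_lu[(l, u)] = p_lu.get((l, u), 0.0) + p
        p_ru[(r, u)] = p_ru.get((r, u), 0.0) + p
    return sum(
        p * log2(p * p_u[u] / (p_lu[(l, u)] * p_ru[(r, u)]))
        for (l, r, u), p in joint.items()
        if p > 0
    )


def toy_model(report_reads_shadow):
    """u: upper-tier brain state; l: lower-tier shadow that copies u 90% of the time; r: spoken report."""
    joint = {}
    for u, l in product((0, 1), repeat=2):
        p = 0.5 * (0.9 if l == u else 0.1)
        r = l if report_reads_shadow else u  # only the contrast model lets the shadow reach the lips
        joint[(l, r, u)] = joint.get((l, r, u), 0.0) + p
    return joint


print(cmi(toy_model(report_reads_shadow=False)))  # 0.0 (up to rounding): the report ignores the shadow
print(cmi(toy_model(report_reads_shadow=True)))   # positive: requires a lower-to-upper causal arrow
```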

There's a version of this theory in which the part of our brain that we can report out loud, which invents hypotheses to explain sense data out loud and manifests physically visible papers about Zombie universes, has for no explained reason invented a meaningless theory of shadow experiences which is experienced by the shadow part as a meaningful and correct theory. So that if we look at the "merely physical" slice of our universe, philosophy papers about consciousness are meaningless and the physical part of the philosopher is saying things their physical brain couldn't possibly know even if they were true. And yet our inner experience of those philosophy papers is meaningful and true. In a way that couldn't possibly have caused me to physically write the previous sentence, mind you. And yet your experience of that sentence is also true even though, in the upper tier of the universe where that sentence was actually written, it is not only false but meaningless.

I'm honestly not sure what to say when a conversation gets to that point. Mostly you just want to yell, "Oh, for the love of Belldandy, will you just give up already?" or something about the importance of saying oops.

(Oh, plus the unexplained correlation violates the Markov condition for causal models.)

Maybe my reply would be something along the lines of, "Okay... look... I've given my account of a single-tier universe in which agents can invent meaningful explanations for sense data, and when they build accurate maps of reality there's a known reason for the correspondence... if you want to claim that a different kind of meaningfulness can hold within a different kind of agent divided into upper and lower tiers, it's up to you to explain what parts of the agent are doing which kinds of hypothesizing and how those hypotheses end up being meaningful and what causally explains their miraculous accuracy so that this all makes sense."

But frankly, I think people would be wiser to just give up trying to write sensible philosophy papers about lower causal tiers of the universe that don't affect the philosophy papers in any way.

Meditation: If we can only meaningfully talk about parts of the universe that can be pinned down inside the causal graph, where do we find the fact that 2 + 2 = 4? Or did I just make a meaningless noise, there? Or if you claim that "2 + 2 = 4" isn't meaningful or true, then what alternate property does the sentence "2 + 2 = 4" have which makes it so much more useful than the sentence "2 + 2 = 3"?

[1] Well, it seems imaginable so long as you toss most of quantum physics out the window and put us back in a classical universe. For particles to not be affected by us, they'd need their own configuration space such that "which configurations are identical" was determined by looking only at those particles, and not looking at any lower-tier particles entangled with them. If you don't want to toss QM out the window, it's actually pretty hard to imagine what an upper-tier particle would look like.

[2] This diagram treats the laws of physics as being just another node, which is a convenient shorthand, but probably not a good way to draw the graph. The laws of physics really correspond to the causal arrows $F_i$, not the causal nodes $X_i$. If you had the laws themselves - the function from past to future - be an $X_i$ of variable state, then you'd need meta-physics to describe the $F_{\text{physics}}$ arrows for how the physics-stuff $X_{\text{physics}}$ could affect us, followed promptly by a need for meta-meta-physics et cetera. If the laws of physics were a kind of causal stuff, they'd be an upper tier of causality - we don't appear to be able to affect the laws of physics, but if you call them causes, they can affect us. In Matrix terms, this would correspond to our universe running on a computer that stored the laws of physics in one area of RAM and the state of the universe in another area of RAM; the first area would be an upper causal tier and the second area would be a lower causal tier. But the infinite regress from treating the laws of determination as causal stuff makes me suspicious that it might be an error to treat the laws of physics as "stuff that makes stuff happen and happens because of other stuff". When we trust that the ship doesn't disappear when it goes over the horizon, we may not be navigating to a physics-node in the graph, so much as we're navigating to a single $F_{\text{physics}}$ that appears in many different places inside the graph, and whose previously unknown function we have inferred. But this is an unimportant technical quibble on Tuesdays, Thursdays, Saturdays, and Sundays. It is only an incredibly deep question about the nature of reality on Mondays, Wednesdays, and Fridays, i.e., less than half the time.

Part of the sequence Highly Advanced Epistemology 101 for Beginners

Next post: "Proofs, Implications, and Models"

Previous post: "Stuff That Makes Stuff Happen"

247 comments


comment by CronoDAS · 2012-10-22T00:23:50.082Z

Epiphenominal theories of consciousness are kind of silly, but here's another situation I can wonder about... some cellular automata rules, including the Turing-complete Conway's Game of Life, can have different "pasts" that can lead to the same present. From the point of view of a being living in such a universe (one in which information can be destroyed), is there a fact of the matter as to which "past" actually happened?
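CronoDAS's observation can be checked by brute force on a tiny world. This sketch assumes a 3-by-4 toroidal grid, chosen only so that all 4096 configurations can be enumerated quickly (the real Game of Life is played on an unbounded plane); `step` and `preimages` are names made up here. It maps every possible past to its present and counts how many pasts share each present.

```python
from collections import defaultdict
from itertools import product

ROWS, COLS = 3, 4  # tiny toroidal grid so all 2**12 configurations can be enumerated


def step(cells):
    """One Game of Life update on a ROWS x COLS torus; cells is a row-major tuple of 0/1."""
    nxt = []
    for r in range(ROWS):
        for c in range(COLS):
            live = sum(
                cells[((r + dr) % ROWS) * COLS + (c + dc) % COLS]
                for dr in (-1, 0, 1)
                for dc in (-1, 0, 1)
                if (dr, dc) != (0, 0)
            )
            alive = cells[r * COLS + c]
            nxt.append(1 if live == 3 or (alive and live == 2) else 0)
    return tuple(nxt)


preimages = defaultdict(list)  # present -> every past that evolves into it
for past in product((0, 1), repeat=ROWS * COLS):
    preimages[step(past)].append(past)

total = 2 ** (ROWS * COLS)
reachable = len(preimages)
empty = (0,) * (ROWS * COLS)
print(f"{total} pasts land on only {reachable} distinct presents")
print(f"{total - reachable} presents have no past at all")
print(f"the empty grid has {len(preimages[empty])} distinct pasts")
```

On this grid the empty state has at least 13 pasts (itself plus every grid with a single live cell, since a lone cell dies), so the update is many-to-one, and some presents therefore have no past at all.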

↑ comment by Pentashagon · 2012-10-22T23:16:56.435Z

I had always thought that our physical universe had this property as well, i.e. the Everett multiverse branches into the past as well as into the future.

↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2012-10-25T08:01:33.979Z

If you take a single branch and run it backward, you'll find that it diverges into a multiverse of its own. If you take all the branches and run them backward, their branches will cohere instead of decohering, cancel out in most places, and miraculously produce only the larger, more coherent blobs of amplitude they started from. Sort of like watching an egg unscramble itself.

↑ comment by SilasBarta · 2012-10-28T00:06:52.117Z

> If you take all the branches and run them backward, their branches will cohere instead of decohering, cancel out in most places, and miraculously produce only the larger, more coherent blobs of amplitude they started from.

And the beings in them will only have memories of further-cohered (further "pastward") events, just as if you didn't run anything backwards.

↑ comment by Viliam_Bur · 2012-10-25T07:40:09.086Z

And at the beginning of the universe we have a set of states which just point time-backwards at each other, which is why we cannot go meaningfully more backwards in time.

Something like:

A1 goes with probability 1% to B1, 1% to C1, and 98% to A2.

B1 goes with probability 1% to A1, 1% to C1, and 98% to B2.

C1 goes with probability 1% to A1, 1% to B1, and 98% to C2.

So if you ask about the past of A2, you get A1, which is the part that makes intuitive sense for us. But trying to go deeper in the past just gives us that the past of A1 is B1 or C1, and the past of B1 is A1 or C1, etc. Except that the change does not clearly happen in one moment (A2 has a rather well-defined past, A1 does not), but more gradually.
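Viliam's toy chain can be run backward with Bayes' rule. The sketch assumes a uniform prior over A1, B1, and C1, and `TRANSITIONS` and `past_of` are hypothetical names; the chain is the comment's illustration, not a model of physics.

```python
TRANSITIONS = {
    "A1": {"B1": 0.01, "C1": 0.01, "A2": 0.98},
    "B1": {"A1": 0.01, "C1": 0.01, "B2": 0.98},
    "C1": {"A1": 0.01, "B1": 0.01, "C2": 0.98},
}


def past_of(state, prior=None):
    """P(previous state | current state) via Bayes' rule over the states with listed transitions."""
    sources = list(TRANSITIONS)
    if prior is None:
        prior = {s: 1 / len(sources) for s in sources}  # assumed uniform prior
    weights = {s: prior[s] * TRANSITIONS[s].get(state, 0.0) for s in sources}
    total = sum(weights.values())
    return {s: w / total for s, w in weights.items() if w > 0}


print(past_of("A2"))  # {'A1': 1.0}
print(past_of("A1"))  # {'B1': 0.5, 'C1': 0.5}
```

The past of A2 comes out as A1 with certainty, while the past of A1 splits evenly between B1 and C1, which is the regress the comment describes.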

↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2012-10-25T08:00:07.303Z

As I understand it, this is not how standard physics models the beginning of time.

↑ comment by A1987dM (army1987) · 2012-10-25T18:26:35.946Z

I don't think anyone takes seriously the way standard physics models the beginning of time (temperature and density of the universe approaching infinity as its age approaches zero), anyway, as it's most likely incorrect due to quantum gravity effects.

↑ comment by wedrifid · 2012-10-25T22:21:14.436Z

> I don't think anyone takes seriously the way standard physics models the beginning of time (temperature and density of the universe approaching infinity

This is a correct usage of terminology but the irony still made me smile.

↑ comment by A1987dM (army1987) · 2012-10-26T00:35:45.235Z

What?

↑ comment by Rob Bensinger (RobbBB) · 2013-06-09T23:10:18.921Z

I think wedrifid is pointing to the irony in saying that the 'standard' model is (on some issue) standardly rejected.

↑ comment by Viliam_Bur · 2012-10-25T09:24:06.184Z

Oh. I tried to find something, but the only thing that partially pattern-matches it was the Hartle–Hawking state. If we mix it with the "universe as a Markov chain over particle configurations" model, it could lead to something like this. Or could not.

↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2012-10-22T00:55:49.969Z

Interesting question! I'd say that you could refer to the possibilities as possibilities, e.g. in a debate over whether a particular past would in fact have led to the present, but to speak of the 'actual past' might make no sense because you couldn't get there from there... no, actually, I take that back, you might be able to get there via simplicity. I.e. if there's only one past that would have evolved from a simply-tiled start state for the automaton.

↑ comment by ialdabaoth · 2012-10-22T23:08:41.608Z

But does it really matter? If both states are possible, why not just say "my past contains ambiguity?"

With quantum mechanics, even though the "future" itself (as a unified wavefunction) evolves forward as a whole, the bit-that-makes-up-this-pseudofactor-of-me has multiple possible outcomes. We live with future ambiguity just fine, and quantum mechanics forces us to say "both experienced futures must be dealt with probabilistically". Even though the mechanism is different, what's wrong with treating the "past" as containing the same level of branching as the future?

EDIT: From a purely global, causal perspective, I understand the desire to be able to say, "both X and Y can directly cause Z, but in point of fact, this time it was Y." But you're inside, so you don't get to operate as a thing that can distinguish between X and Y, and this isn't necessarily an "orbital teapot" level of implausibility. If configuration Y is 10^4 more likely as a 'starting' configuration than configuration X according to your understanding of how starting configurations are chosen, then sure - go ahead and assert that it was (or may-as-well-have-been) configuration Y that was your "actual" past - but if the configuration probabilities are more like 70%/30%, or if your confidence that you understand how starting configurations are chosen is low enough, then it may be better to just swallow the ambiguity.

EDIT2: Coming from a completely different angle, why assert that one or the other "happened", rather than looking at it as a kind of path-integral? It's a cellular automaton instead of a quantum wave-function, which means that you're summing discrete paths instead of integrating infinitesimals, but it seems (at first glance) that the reasoning is equally applicable.

↑ comment by Viliam_Bur · 2012-10-25T07:59:16.185Z

> If both states are possible, why not just say "my past contains ambiguity?"

Ambiguity it is, but we usually want to know the probabilities. If I told you that whether or not you win the lottery tomorrow is "ambiguous", you would not be satisfied with such an answer; you would ask how likely you are to win. And this question somehow makes sense even if the lottery is decided by a quantum event, so that you know each future happens in some Everett branch.

Similarly, in addition to knowing that the past is ambiguous, we should ask how likely the individual pasts are. In our universe you would want to know how likely the pasts P1 and P2 are to become NOW. Conway's Game of Life does not branch forward in time, so if you have two valid pasts, their probabilities of becoming NOW are 100% each.

But that is only part of the equation. The other part is the prior probabilities of P1 and P2. Even if both P1 and P2 deterministically evolve to NOW, their prior probabilities influence how likely it is that NOW really evolved from each of them.

I am not sure what the equivalent of Solomonoff induction would be for Conway's Game of Life. Starting with a finite number of "on" cells, where each additional "on" cell decreases the prior probability of the configuration? Starting with an infinite plane where each cell has a 50% probability of being "on"? Or an infinite plane with each cell having probability p of being "on", where p has the property that after one step on such a plane, the average ratio of "on" cells remains the same (p being a kind of eigenvalue of the rules)?

But the general idea is that if P1 is somehow "generally more likely to happen" than P2, we should consider P1 more likely than P2 to be the past of NOW, even if both P1 and P2 deterministically evolve to NOW.
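Viliam's argument can be written as Bayes' rule over candidate pasts. In this sketch, $\mathrm{step}$ (the deterministic update rule), the number of cells $n$, the live-cell count $k(P)$, and the per-cell density $q$ are notation introduced here for illustration, and the grid is assumed finite:

$$
P(P_i \mid \mathrm{NOW}) = \frac{P(\mathrm{NOW} \mid P_i)\, P(P_i)}{\sum_j P(\mathrm{NOW} \mid P_j)\, P(P_j)},
\qquad
P(\mathrm{NOW} \mid P_j) =
\begin{cases}
1 & \text{if } \mathrm{step}(P_j) = \mathrm{NOW},\\
0 & \text{otherwise.}
\end{cases}
$$

Because the likelihood only filters out pasts that do not lead to NOW, the prior does all the remaining work:

$$
P(P_i \mid \mathrm{NOW}) = \frac{P(P_i)}{\sum_{j \,:\, \mathrm{step}(P_j) = \mathrm{NOW}} P(P_j)}.
$$

Under an independent per-cell prior $P(P) = q^{k(P)} (1-q)^{n-k(P)}$, the comparison between two valid pasts reduces to

$$
\frac{P(P_1 \mid \mathrm{NOW})}{P(P_2 \mid \mathrm{NOW})} = \left(\frac{q}{1-q}\right)^{k(P_1) - k(P_2)},
$$

so with $q < 1/2$ each extra live cell costs a constant factor, and with $q = 1/2$ every valid past is equally likely.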

↑ comment by CCC · 2012-10-25T08:35:49.315Z

In the Game of Life, a single live cell with no neighbours will become a dead cell in the next step. Therefore, any possible present state that has at least one past state has an infinite number of one-step-back states (which differ from the one state merely in having one or more neighbourless cells at random locations, far enough from anything else to have no effect).
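CCC's construction is easy to verify with a set-of-live-cells implementation on the unbounded plane. The glider and the far-away coordinates are arbitrary choices for this sketch, and `step` is a hypothetical name; the point is only that adding isolated cells to a past does not change the present it produces.

```python
from collections import Counter


def step(live):
    """One Game of Life update on the unbounded plane; live is a set of (x, y) cells."""
    neighbour_counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    return {
        cell
        for cell, n in neighbour_counts.items()
        if n == 3 or (n == 2 and cell in live)
    }


glider = {(1, 0), (2, 1), (0, 2), (1, 2), (2, 2)}
pasts = [
    glider,
    glider | {(100, 100)},              # plus one isolated live cell
    glider | {(100, 100), (-50, 300)},  # plus two
]
presents = [step(p) for p in pasts]
print(all(present == presents[0] for present in presents))  # True: three pasts, one present
```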

Some of these one-step-back states may end up having evolved from simpler starting tilesets than the one with no vanishing cells.

↑ comment by Pentashagon · 2012-10-22T23:14:36.155Z

> no, actually, I take that back, you might be able to get there via simplicity. I.e. if there's only one past that would have evolved from a simply-tiled start state for the automaton.

The simplest start state might actually be a program that simulates the evolution of every possible starting state in parallel. If time and space are unbounded and an entity is more complex than the shortest such program then it is more likely that the entity is the result of the program and not the result of evolving from another random state.
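A program that "simulates the evolution of every possible starting state in parallel" cannot literally run infinitely many simulations in lockstep, but the standard dovetailing trick interleaves them so that every run eventually gets any finite number of steps. In this sketch `dovetail`, `initial_state`, and `step` are hypothetical names, and the toy usage encodes a starting state as an integer that the step function increments.

```python
from itertools import count, islice


def dovetail(initial_state, step):
    """Interleave infinitely many simulations; yields (run_index, steps_taken, state).

    At stage n, run n is started; then every run started so far advances one tick.
    So run k has received n - k + 1 ticks by the end of stage n, and every run
    eventually receives any finite number of ticks.
    """
    runs = []  # each entry is [run_index, steps_taken, state]
    for n in count():
        runs.append([n, 0, initial_state(n)])
        for run in runs:
            run[2] = step(run[2])
            run[1] += 1
            yield tuple(run)


# Toy usage: starting state k is just the integer k, and one tick adds 1.
first_six = list(islice(dovetail(lambda k: k, lambda s: s + 1), 6))
print(first_six)  # [(0, 1, 1), (0, 2, 2), (1, 1, 2), (0, 3, 3), (1, 2, 3), (2, 1, 3)]
```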

↑ comment by abramdemski · 2012-11-16T05:21:05.487Z

I am unable to see the appeal of a view in which there is no fact of the matter. It seems to me that there is a fact of the matter concerning the past, even if it is impossible for us to know. This is not similar to the case where sneezing alters two shadow variables, and it is impossible for us to meaningfully refer to variable 1 as opposed to variable 2; the past has a structure, so assertions will typically have definite referents.

↑ comment by mfb · 2012-10-25T15:10:45.082Z

The Standard Model of particle physics with MWI is time-symmetric (to be precise: CPT symmetric) and conserves information. If you define the precise state at one point in time, you can calculate the unique past which led to that state and the unique future which will evolve from that state. Note that for general states, "past" and "future" are arbitrary definitions.
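The property this comment relies on is that unitary evolution can be undone: applying the conjugate transpose of the evolution operator recovers the earlier state exactly, unlike the many-to-one Game of Life update. A two-state toy in pure Python; the matrix `U` is an arbitrary 2-by-2 unitary picked for illustration, not anything from the Standard Model, and `apply` and `dagger` are hypothetical helper names.

```python
import math


def apply(matrix, vec):
    """Matrix-vector product for small lists of complex numbers."""
    return [sum(row[j] * vec[j] for j in range(len(vec))) for row in matrix]


def dagger(matrix):
    """Conjugate transpose."""
    return [[matrix[j][i].conjugate() for j in range(len(matrix))] for i in range(len(matrix[0]))]


s = 1 / math.sqrt(2)
U = [[s, s * 1j], [s * 1j, s]]  # unitary: dagger(U) times U is the identity

past = [0.6, 0.8j]              # a normalized two-state amplitude vector
present = apply(U, past)
recovered = apply(dagger(U), present)
print(all(abs(a - b) < 1e-12 for a, b in zip(past, recovered)))  # True: the past is recoverable
```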

↑ comment by drethelin · 2012-10-22T21:20:32.342Z

This is actually one of the reasons I have to doubt Cryonics. You can talk about nano-tech being able to "reverse" the damage, but it's possible (and I think likely) that it's very hard to go from damaged states to the specific non-damaged state that actually constitutes your consciousness/memory.

↑ comment by ialdabaoth · 2012-10-22T23:13:28.394Z

Assuming that "you" are a point in consciousness phase-space, and not a "smear". If "you-ness" is a locus of similar-but-slightly-different potential states, then "mostly right" is going to be good enough.

And, given that every morning when you wake up, you're different-but-still-you, I'd say that there's strong evidence that "you-ness" is a locus of similar-but-slightly-different potential states, rather than a singular point.

This means, incidentally, that it may be possible to resurrect people without a physical copy of their brains at all, if enough people remember them well enough when the technology becomes available.

Of course, since it's a smear, the question becomes "where do you want to draw the line between Bob and not-Bob?" - since whatever you create will believe it's Bob, and will act the way everyone alive remembers that Bob acted, and the "original" isn't around to argue (assuming you believe in concepts like "original" to begin with, but if you do, you have some weirder paradoxes to deal with).

↑ comment by DaFranker · 2012-10-22T21:36:00.466Z

Which is why it's better for there to be more people signed up, but not actually being frozen yet. The more money they get, and the later you get frozen, the better the odds. If immortality is something you want, this still seems like the best gamble.

↑ comment by hwc · 2012-10-22T21:06:24.905Z

Or, a Boltzmann brain that flickered into existence with memories of a past that never happened.

↑ comment by ialdabaoth · 2012-10-23T00:54:18.026Z

In that particular case, "never happened" has some weird ontological baggage. If a simulated consciousness is still conscious, then isn't its simulated past still a past?

Perhaps "didn't happen" in the sense that its future reality will not conform to its memory-informed expectations, but it seems like, if those memories form a coherent 'past', then in a simulationist sense that past did happen, even if it wasn't simulated with perfect fidelity.

comment by Armok_GoB · 2012-10-21T16:25:36.454Z

Just for the Least Convenient World, what if the zombies build a supercomputer and simulate random universes, and find that in 98% of simulated universes life forms like theirs do have shadow brains, and that the programs for the remaining 2% are usually significantly longer?

↑ comment by Liron · 2012-10-23T04:47:23.414Z

How can the version without shadow brains be significantly longer? Even in the worst possible world, it seems like the 2% of non-shadow-brain programs could be encoded by copying their corresponding shadow-brain programs and adding a few lines telling the computer how to garbage-collect shadows using a straightforward pruning algorithm on the causal graph.
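One way to picture the "few lines" of garbage collection is a reverse-reachability pass over an explicit causal graph. This is a sketch under assumptions: the graph is given as a dictionary, the node names are hypothetical, and `keep` marks the nodes we care about (here, the balls at the final time step). Anything with no directed path into `keep` is a pure downstream effect and gets dropped.

```python
from typing import Dict, List, Set

def prune_shadows(edges: Dict[str, List[str]], keep: Set[str]) -> Set[str]:
    """Return the nodes that are in `keep` or have a directed path into `keep`."""
    # Reverse adjacency, so we can walk from effects back to their causes.
    parents: Dict[str, List[str]] = {}
    for src, dsts in edges.items():
        for dst in dsts:
            parents.setdefault(dst, []).append(src)
    survivors: Set[str] = set()
    stack = list(keep)
    while stack:
        node = stack.pop()
        if node in survivors:
            continue
        survivors.add(node)
        stack.extend(parents.get(node, []))
    return survivors

# Hypothetical two-step universe: balls affect balls and shadows,
# shadows affect only later shadows.
edges = {
    "ball_a@0": ["ball_a@1", "ball_b@1", "shadow_a@0"],
    "ball_b@0": ["ball_a@1", "ball_b@1", "shadow_b@0"],
    "ball_a@1": ["shadow_a@1"],
    "ball_b@1": ["shadow_b@1"],
    "shadow_a@0": ["shadow_a@1"],
    "shadow_b@0": ["shadow_b@1"],
}
kept = prune_shadows(edges, keep={"ball_a@1", "ball_b@1"})
```

Every shadow node is pruned because no chain of arrows leads from any shadow back into a ball.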

↑ comment by Armok_GoB · 2012-10-23T16:45:10.708Z

By the programs being short enough in the first place that those few lines still double the length? By the universe-like part not being straightforwardly encoded, so that to distinguish anything about it you first need a long AI-like interpreter just to get there?

↑ comment by afeller08 · 2012-10-25T01:09:40.015Z

That would strongly indicate that something caused the zombies to write a program for generating simulations that was likely to create simulated shadow brains in most of the simulations. (For example, the compiler's built-in prover for things like type checking was inefficient and left behind a lot of baggage that produced second-tier shadow brains in all but 2% of simulations.) It might cause the zombies to conclude that they probably had shadow brains and start talking about the possibility of shadow brains, but it should be equally likely to do that whether the shadow brains were real or not. (Which means any zombie with a sound epistemology would give no more credence to the existence of shadow brains after the simulation caused other zombies to start talking about them than it would if the discussion had instead come from a random number generator producing a very large number that, interpreted as a string in some normal zombie encoding, happened to be a paper discussing shadow brains. Shadow brains in that world should be an idea analogous to Russell's teapot, astrology, or the invisible pink unicorn in our world.)

Now, if there was some outside universe capable of looking at all of the universes and seeing some universes with shadow brains and some without, and in the universes with shadow brains zombies were significantly more likely to produce simulations that created shadow brains than in the universes without them, and zombies with shadow brains were much more likely to create simulations that predicted shadow brains similar to their actual shadow brains -- then we would be back to seeing exactly what we see when philosophers talk about shadow brains directly: namely, the shadow brains are causing the zombies to imagine shadow brains, which means that the shadow brains aren't really shadow brains, because they are affecting the world (with probability 1).

Either the result of the simulations points to gross inefficiency somewhere (their simulations predicted something that their simulations shouldn't have been able to predict), or it points to the shadow brains not really being shadow brains because they are causally impacting the world. (This is slightly more plausible than philosophers postulating shadow brains correctly for no reason, only because we don't necessarily know that there is anything driving the zombies to produce simulations efficiently; whereas in our world we can assume that brains typically produce non-gibberish, because enormous selective pressures have caused brains to produce non-gibberish.)

↑ comment by Armok_GoB · 2012-10-25T02:20:57.623Z

I was talking about the logical counterfactual, where it genuinely is true and knowably so through rationality.

It might be easier to think about it like this: there are a large number of civilizations in T4, each of which can observe that almost all the others have shadow brains, but none of which can see whether they have them themselves.

comment by Shmi (shminux) · 2012-10-21T17:08:22.849Z

Is it coherent to imagine a universe in which a real entity can be an effect but not a cause?

Your favorite example of event horizons, cosmological or otherwise, is like that. GR suggests that there can be a ring singularity inside an eternal spinning black hole (but not one spun up from rest), near/around which you can go forever without being crushed. (It also suggests that there could be closed timelike curves around it, but I'll ignore this for now.) So maybe there are particles/objects/entities living there.

Stuff thrown into such a black hole can certainly affect the hypothetical entities living inside. Like a meteor shower from the outside. But the outside is not affected by anything happening inside, the horizon prevents it.

↑ comment by AnthonyC · 2012-10-22T01:23:22.153Z

Fair, but quantum mechanics gives us Hawking radiation, which may or may not provide information (in principle) about what went into the black hole.

Also, there are causal arrows from the black hole to everything it pulls on, and those are ultimately the sum of causal arrows from each particle in the black hole even if from the outside we can't discern the individual particles.

↑ comment by MrMind · 2012-10-22T14:54:30.807Z

Stuff thrown into such a black hole can certainly affect the hypothetical entities living inside. Like a meteor shower from the outside. But the outside is not affected by anything happening inside, the horizon prevents it.

That is not entirely true: stuff thrown into the black hole increases the horizon area and possibly modifies its geometry, and in return the horizon affects the spatial infinity (the area around the horizon). The debate is about how much information the horizon deletes in the process. The same is for the cosmological horizon, which is effectively just another kind of singularity.

↑ comment by Shmi (shminux) · 2012-10-22T14:57:07.466Z

stuff thrown into the black hole increases the horizon area and possibly modifies its geometry, and in return the horizon affects the spatial infinity (the area around the horizon).

That's outside affecting outside, not inside affecting outside.

The same is for the cosmological horizon, which is effectively just another kind of singularity.

Horizon is not a singularity.

↑ comment by MrMind · 2012-10-22T15:52:56.938Z

That's outside affecting outside, not inside affecting outside.

Hmm... let's taboo "outside" and "inside". The properties of stuff within the horizon affect the properties of the horizon, which in turn affect the properties of space-matter at spatial infinity. Is this formulation more acceptable?

Horizon is not a singularity.

Right, I'll rephrase: the same goes for the cosmological horizon, which effectively 'surrounds' just another kind of singularity.

↑ comment by Shmi (shminux) · 2012-10-22T16:22:24.311Z

The properties of stuff within the horizon affect the properties of the horizon

Wrong. There could be tons of different things going on inside, absolutely indistinguishable from outside, which only sees mass, electric charge and angular momentum. There is no causal connection from inside to outside whatsoever, barring FTL communication.

Right, I'll rephrase: the same goes for the cosmological horizon, which effectively 'surrounds' just another kind of singularity.

Wrong again. There is no singularity of any kind behind the cosmological horizon (which is not a closed surface to begin with, so it cannot "surround" anything). Well, there might be black holes and stuff, or there might not be, but there is certainly not a requirement of anything singular being there. Consider googling the definition of singularity in general relativity.

↑ comment by DaFranker · 2012-10-22T17:03:55.039Z

Wrong. There could be tons of different things going on inside, absolutely indistinguishable from outside, which only sees mass, electric charge and angular momentum. There is no causal connection from inside to outside whatsoever, barring FTL communication.

Unless the "inside" spontaneously materialized into existence while a different chunk of the singularity's mass simultaneously blinked out of existence in ways that defy nearly all the physics I know, there still remains a causal connection: the "disappearance" of this stuff that's now "inside" from the world "outside" happened at some point in outside time frames, AFAICT. This disappearance of specific pieces of matter and energy seems to more than qualify as a causal effect, when compared to counterfactual futures where they do not disappear.

Also, the causal connection [Inside -> Mass -> Outside] pretty much looks like a causal connection from inside to outside to me. There's this nasty step in the middle that blurs all the information such that under most conceivable circumstances there's no way to tell which of all possible insides is the "true" one, but, combined with the above about matter disappearing, it can still let you concentrate your probability mass, compared to meaningless epiphenomena that cover the entire infinite hypothesis space (minus one single-dimensional line representing their interaction with anything that interacts with our reality in any way) with equal probability, because there's no way they could even in principle affect us, even in CTCs, FTL, timeless or n-dimensional spaces, etc.

(Note: I'm not an expert on mind-bending hypothetical edge cases of theoretical physics, so I'm partially testing my own understanding of the subject here.)

↑ comment by Shmi (shminux) · 2012-10-22T17:57:03.921Z

I'm partially testing my own understanding of the subject here.

Most of what you said is either wrong or meaningless, so I don't know where to begin unraveling it, sorry. Feel free to ask simple questions of limited scope if you want to learn more about black holes, horizons, singularities and related matters. The subject is quite non-trivial and often counter-intuitive.

↑ comment by DaFranker · 2012-10-22T19:56:54.876Z

Hmm, alright.

In more vague, amateur terms, isn't the whole horizon thing always the same case, i.e. it's causally linked to the rest of the universe by observations in the past and inferences using presumed laws of physics, even if the actual state of things beyond the horizon (or inside it or whatever) doesn't change what we can observe?

↑ comment by Shmi (shminux) · 2012-10-22T20:19:22.193Z

The event horizon in an asymptotically flat spacetime (which is not quite the universe we live in, but a decent first step) is defined as the boundary of the causal past of future null infinity (loosely, of the infinitely distant future). This definition guarantees that we see no effects whatsoever from the part of the universe that is behind the event horizon. The problem with this definition is that we have to wait forever to draw the horizon accurately. Thus there are several alternative horizons which are more instrumentally useful for theorem proving and/or numerical simulations, but are not in general identical to the event horizon. The cosmological event horizon is a totally different beast (it is similar to the Rindler horizon, used to derive the Unruh effect), though it does share a number of properties with the black hole event horizon. There are further exciting complications once you get deeper into the subject.

↑ comment by Alejandro1 · 2012-10-22T16:35:54.592Z

Wrong. There could be tons of different things going on inside, absolutely indistinguishable from outside, which only sees mass, electric charge and angular momentum.

Nitpick: this is only true for a stationary black hole. If you throw something sufficiently big in, you would expect the shape of the horizon to change and bulge a bit, until it settles down into a stationary state for a larger black hole. You are of course correct that this does not allow anything inside to send a signal to the outside.

↑ comment by Shmi (shminux) · 2012-10-22T16:41:07.551Z

Nitpick: this is only true for a stationary black hole.

Right, I didn't want to go into these details, MrMind seems confused enough as it is. I'd have to explain that the horizon shape is only determined by what falls in, and eventually talk about apparent and dynamical horizons and marginally outer trapped surfaces...

↑ comment by MrMind · 2012-10-24T14:33:07.592Z

Wrong. There could be tons of different things going on inside, absolutely indistinguishable from outside, which only sees mass, electric charge and angular momentum.

Also entropy. Anyway, those are determined by the mass, electrical charge and angular momentum of the matter that fell inside. We may not want to call it a causal connection, but it's certainly a case of properties within determining properties outside.

There is no causal connection from inside to outside whatsoever, barring FTL communication.

There is no direct causal connection, meaning a worldline from the inside to the outside of the black hole. But even if the horizon screens almost all of the infalling matter's properties, it doesn't screen everything (and probably, though this is a matter of quantum gravity, doesn't screen anything at all).

Wrong again. There is no singularity of any kind behind the cosmological horizon (which is not a closed surface to begin with, so it cannot "surround" anything). Well, there might be black holes and stuff, or there might not be, but there is certainly not a requirement of anything singular being there. Consider googling the definition of singularity in general relativity.

I'll admit to not having much knowledge about this specific theme, and I'll educate myself more properly, but in my earlier sentence I used "singularity" as a mathematical term, referring to a region of spacetime in which the GR equations acquire a singular value, so not specifically to a gravitational singularity like a black hole or a domain wall. In the case of most commonplace cosmological horizons, this region is simply space-like infinity.

comment by drnickbone · 2012-10-21T10:20:45.815Z

Some thoughts about "epiphenomena" in general, though not related to consciousness.

Suppose there are only finitely many events in the entire history of the universe (or multiverse), so that the universe can be represented by a finite causal graph. If it is an acyclic graph (no causal cycles), then there must be some nodes which are effects but not causes, that is, they are epiphenomena. But then why not posit a smaller graph with the epiphenomenal nodes removed, since they don't do anything? And then that reduced graph is also finite, and also has epiphenomenal nodes.... so why not remove those?
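The regress can be run mechanically. The sketch below repeatedly deletes sink nodes (effects that cause nothing) from a finite causal graph; the node names are purely illustrative. Because every non-empty finite DAG has at least one sink, the process never stalls, and it ends only when the whole graph is gone. A cycle is the one way to stop it, which the function reports as an error.

```python
from typing import Dict, List, Set

def prune_epiphenomena(edges: Dict[str, Set[str]]) -> List[Set[str]]:
    """Repeatedly strip sink nodes from a finite causal graph.

    Returns the set of nodes removed in each round, in order.
    """
    nodes = set(edges) | {d for ds in edges.values() for d in ds}
    rounds: List[Set[str]] = []
    while nodes:
        # A sink has no outgoing edge to any node that is still present.
        sinks = {n for n in nodes if not (edges.get(n, set()) & nodes)}
        if not sinks:
            raise ValueError("remaining graph has a causal cycle")
        rounds.append(sinks)
        nodes -= sinks
    return rounds

# Illustrative finite history ending in a big crunch.
history = {
    "big_bang": {"stars"},
    "stars": {"earth", "big_crunch"},
    "earth": {"humans"},
    "humans": {"big_crunch"},
}
rounds = prune_epiphenomena(history)
```

For this graph the big crunch goes first, then humans, then Earth, and so on back to the big bang.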

So, is the conclusion that the best model of the universe is a strictly infinite graph, with no epiphenomenal nodes that can be removed (e.g. no future big crunches or other singularities)? This seems like a dubious piece of armchair cosmology.

Or are there cases where the larger finite graph (with the epiphenomenal nodes) is strictly simpler as a theory than the reduced graph (with the epiphenomena removed), so that Occam's razor tells us to believe in the larger graph? But then Occam's razor is justifying a belief in epiphenomena, which sounds rather odd when put like that!

↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2012-10-21T21:18:31.315Z

The last nodes are never observed by anyone, but they descend from the same physics, the same F(physics), that have previously been pinned down, or so I assume. You can thus meaningfully talk about them for the same reason you can meaningfully talk about a spaceship going over the cosmological horizon. What we're trying to avoid is SNEEZE_VARs or lower qualia where there's no way that the hypothesis-making agent could ever have observed, inducted, and pinned down the causal mechanism - where there's no way a correspondence between map and territory could possibly be maintained.

↑ comment by CCC · 2012-10-21T11:26:02.900Z

Following this reasoning, if there is a finite causal state machine, then your pruning operation would eventually remove me, you, the human race, the planet Earth.

Now, from inside the universe, I cannot tell whether your hypothesis of a finite state graph universe is true or not - but I do have a certain self-interest in not being removed from existence. I find, therefore, that I am scrambling for justifications for why the finite-state-model universe nodes containing myself are somehow special, that they should not be removed (to be fair, I extend the same justifications to all sentient life).

↑ comment by A1987dM (army1987) · 2012-10-21T22:23:29.265Z

comment by Eugine_Nier · 2012-10-21T03:24:47.947Z

That said, I can certainly write a computer program in which there's a tier of objects affecting each other, and a second tier - a lower tier - of epiphenomenal objects which are affected by them, but don't affect them.

I would like to point out that any space-like surface (technically 3-fold) divides our universe into two such tiers.

↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2012-10-21T21:27:23.907Z

Okay, I can see that I need to spell out in more detail one of the ideas here - namely that you're trying to generalize over a repeating type of causal link and that reference is pinned down by such generalization. The Sun repeatedly sends out light in individual Sun-events, electrons repeatedly go on traveling through space instead of vanishing; in a universe like ours, rather than the F(i) being whole new transition tables randomly generated each time, you see the same F(physics) over and over. This is what you can pin down and refer to. Any causal graph is acyclic and can be divided as you say; the surprising thing is that there are no F-types, no causal-link-types, which (over repeating time) descend from one kind of variable to another, without (over time) there being arrows also going back from that kind to the other. Yes, we're generalizing and inducting over time, otherwise it would make no sense to speak of thingies that "affect each other". No two individual events ever affect each other!
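The type-level claim can be phrased as a check on a summary graph. In this sketch, which is an assumption about how one might encode it rather than anything from the post, each edge A -> B means "variables of kind A at time t are among the parents of kind B at time t+1", i.e. the repeating transition structure of a dynamic Bayes net. A one-way link is an arrow from one kind to another with no chain of arrows leading back. The ball/shadow universe has one; the claim above is that our physics has none.

```python
from typing import Dict, List, Set, Tuple

def reachable(graph: Dict[str, Set[str]], start: str) -> Set[str]:
    """All kinds reachable from `start` by following one or more links."""
    seen: Set[str] = set()
    stack = [start]
    while stack:
        kind = stack.pop()
        for nxt in graph.get(kind, set()):
            if nxt not in seen:
                seen.add(nxt)
                stack.append(nxt)
    return seen

def one_way_links(type_graph: Dict[str, Set[str]]) -> List[Tuple[str, str]]:
    """Links A -> B between different kinds where nothing leads from B back to A."""
    return sorted(
        (a, b)
        for a, targets in type_graph.items()
        for b in targets
        if a != b and a not in reachable(type_graph, b)
    )

# Hypothetical type graphs for illustration.
balls_and_shadows = {"ball": {"ball", "shadow"}, "shadow": {"shadow"}}
mutual = {"electron": {"electron", "photon"}, "photon": {"photon", "electron"}}
```

Here `one_way_links(balls_and_shadows)` flags the ball-to-shadow link, while `one_way_links(mutual)` comes back empty.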

↑ comment by A1987dM (army1987) · 2012-10-21T22:27:46.024Z

Maybe you should elaborate on this in a top-level post.

↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2012-10-25T04:29:15.631Z

I edited the main post to put it in.

↑ comment by William_Quixote · 2012-10-29T21:55:31.529Z

I will probably reskim the post, but in general it’s not clear to me that editing content into a preexisting post is better than incorporating it into the next post where it would be appropriate. The former provides the content to all people yet to read this post and all people who will reread it, while the latter provides the content to all people who have yet to read the next post. So you are trading the time value of getting updated content to the people who will reread this post faster at the expense of not getting updated content to those who will read the next post but not reread the present post.

I don’t have readership and rereadership stats, but this seems like an answerable question.

↑ comment by Eugine_Nier · 2012-10-21T22:38:23.939Z

Okay, I can see that I need to spell out in more detail one of the ideas here - namely that you're trying to generalize over a repeating type of causal link and that reference is pinned down by such generalization.

So in the end, we're back to frequentism.

Also, what about unique events?

↑ comment by Cyan · 2012-10-22T02:47:55.876Z

Somewhat tangentially, I'd like to point out that simply bringing up relative frequencies of different types of events in discussion doesn't make one a crypto-frequentist -- the Bayesian approach doesn't bar relative frequencies from consideration. In contrast, frequentism does deprecate the use of mathematical probability as a model or representation of degrees of belief/plausibility.

↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2012-10-22T00:52:47.838Z

Er, no, they're called Dynamic Bayes Nets. And there are no known unique events relative to the fundamental laws of physics; those would be termed "miracles". Physics repeats perfectly - there's no question of frequentism because there are no probabilities - and the higher-level complex events are one-time if you try to measure them precisely; Socrates died only once, etc.

↑ comment by Eugine_Nier · 2012-10-23T00:44:37.596Z

And there are no known unique events relative to the fundamental laws of physics

What about some of the things going on at the LHC?

↑ comment by CCC · 2012-10-21T10:58:25.626Z

So... the past can affect the future, but the future cannot affect the past (barring things like tachyons, which may or may not exist in any case). Taking this in context of the article could lead to an interesting discussion on the nature of time; does the past still exist once it is past? Does the future exist yet in any meaningful way?

↑ comment by A1987dM (army1987) · 2012-10-21T13:07:24.824Z

I'd say "No, unless you're using the words still and yet in a weird way."

↑ comment by CCC · 2012-10-21T19:32:24.973Z

Consider for a moment the concept of the lack of simultaneity in special relativity.

Consider, specifically, the train and platform experiment. A train passes a platform travelling at a significant fraction of the speed of light; some time before it does so, a light bulb in the precise centre of the train flashes, once.

The observer T on the train will find that the light reaches the front and back of the train simultaneously; the observer P on the platform finds that the light hits the back of the train before it hits the front of the train.

Consider now the instant in which the train observer T says that the light is currently hitting the front and the back of the train simultaneously. At that precise instant, he glances out of the window and sees that he is right next to the platform observer P. The event "P and T pass each other" occurs at that same instant. Thus, all three events - the light hitting the front of the train, the light hitting the rear of the train, and P and T passing - are simultaneous.

Now consider P. In P's reference frame, these events are not simultaneous. They occur in the following order:

- The light hits the rear of the train

- T passes P

- The light hits the front of the train

So. In the instant in which T and P pass each other, does the event "the light hits the rear of the train" exist? It is not in the past, or future, lightcone of the event "T and P pass each other", and thus cannot be directly causally linked to that event (barring FTL travel, which causes all sorts of headaches for relativity in any case).
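The ordering above can be checked with a Lorentz transformation. A minimal sketch, assuming units with c = 1, a hypothetical train length of 1 and speed 0.6c, the train moving in the +x direction (front at +x), and T sitting at the centre of the train. In the train frame all three events share the same time; transforming to the platform frame with t = gamma * (t' + v x' / c^2) separates them in exactly the order listed.

```python
import math

C = 1.0  # units where the speed of light is 1

def to_platform_frame(t_train: float, x_train: float, v: float) -> float:
    """Platform-frame time of an event given its train-frame coordinates.

    The train moves at velocity +v relative to the platform, so the inverse
    Lorentz transform gives t = gamma * (t' + v * x' / c**2).
    """
    gamma = 1.0 / math.sqrt(1.0 - (v / C) ** 2)
    return gamma * (t_train + v * x_train / C ** 2)

L = 1.0  # hypothetical train length
v = 0.6  # hypothetical speed, as a fraction of c
half = L / 2

# Train frame: the flash happens at the centre (x' = 0) at t' = 0.
events = {
    "light hits rear": (half / C, -half),
    "T passes P": (half / C, 0.0),
    "light hits front": (half / C, +half),
}
order = sorted(events, key=lambda name: to_platform_frame(*events[name], v))
print(order)  # ['light hits rear', 'T passes P', 'light hits front']
```

Any position for T strictly between the rear and the front gives the same ordering, since the platform time grows linearly with x'.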

↑ comment by A1987dM (army1987) · 2012-10-21T19:47:03.113Z

In the instant in which T and P pass each other, does the event "the light hits the rear of the train" exist?

The phrase "In the instant in which T and P pass each other" has a different meaning (namely, it refers to a different spacelike hypersurface) depending on what frame of reference the speaker is using. Some of those hypersurfaces include the event "the light hits the rear of the train" and others don't.

↑ comment by CCC · 2012-10-23T07:25:25.304Z

That is true. Nonetheless, you have two observers, T and P, who disagree at a given moment on whether the event "the light hits the rear of the train" is currently happening, or whether it has already happened. (Another observer can be introduced for whom the event has not yet happened).

So. If the present exists - that is, if everything which is on the same spacelike hypersurface as me at this current moment exists - then every possible spacelike hypersurface including me must exist. Which means that, over in the Andromeda galaxy, there must be quite a large time interval that exists all at once (everything that's not in my past/future light cone). Applying the same argument to a hypothetical observer in the Andromeda Galaxy implies that a large swath of time over here must all be in existence as well.

Now, it is possible that there is one particular spacelike hypersurface that can be considered to be the only spacelike hypersurface in existence at any given time; if this were the case, though, then I would expect that there would be some experiment that could demonstrate which spacelike hypersurface it is. That same experiment would disprove special relativity, and require an updating of that theory. On the other hand, if the past and future are in existence in some way, I would expect that there would be some way, as yet undiscovered, to affect them - some way, in short, to travel through time (or at least to send an SMS to the past). Either way, it leads to interesting possibilities for future physics.

↑ comment by A1987dM (army1987) · 2012-10-23T09:22:28.505Z

First of all, natural language sucks at specifying whether a statement is indexical/deictic (its referent depends on who is speaking, and where and when they are speaking, etc.) or not. The compulsory tense marking on verbs is part of the problem (“there is” is usually but not always taken to mean “there is now” as “is” is in the present tense, and that's indexical -- “now” refers to a different time in this comment than in one written three years ago), though not the only one (“it's not raining” is usually taken to mean “it's not raining here”, not “it's not raining anywhere”).

Now, it is possible that there is one particular spacelike hypersurface that can be considered to be the only spacelike hypersurface in existance at any given time

Yes, once you specify (explicitly or implicitly) what you mean by “at any given time” (i.e. what frame of reference you're using). But there's no God-given choice for that. (Well, there's the frame of reference in which the dipole anisotropy of the cosmic microwave background vanishes, but you have to “look out” to know what that is; in a closed system you couldn't tell.) IOW the phrase “at any given time” must also be taken as indexical; in everyday life that doesn't matter much because the hypersurfaces you could plausibly be referring to are only separated by tiny fractions of a second in the regions you'd normally want to talk about.

On the other hand, if the past and future are in existance in some way,

Yes, if “are” is interpreted non-indexically (i.e. not as “are now”).

I would expect that there would be some way, as yet undiscovered, to affect them - some way, in short, to travel through time (or at least to send an SMS to the past).

Why? In special relativity, “X can affect Y” is equivalent to “Y is within or on the future light cone of X”, which is a partial order relation, and that's completely self-consistent. (But in the real world, special relativity only applies locally, and even there we can't be 100% sure it applies exactly and in all conditions.)
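The "X can affect Y" relation can be written down directly and checked for the partial-order properties. This is a sketch assuming one spatial dimension and units with c = 1. Integer coordinates are used so the checks are exact. Spacelike-separated events come out as incomparable, with neither able to affect the other, and no contradiction arises.

```python
from itertools import product
from typing import Tuple

Event = Tuple[float, float]  # (t, x) with c = 1, one spatial dimension

def can_affect(a: Event, b: Event) -> bool:
    """True if b lies within or on the future light cone of a (a == b counts)."""
    dt = b[0] - a[0]
    dx = b[1] - a[1]
    return dt >= abs(dx)

grid = [(t, x) for t in range(-2, 3) for x in range(-2, 3)]

# Reflexive, antisymmetric and transitive on this sample: a partial order.
assert all(can_affect(e, e) for e in grid)
assert all(a == b for a, b in product(grid, repeat=2)
           if can_affect(a, b) and can_affect(b, a))
assert all(can_affect(a, c) for a, b, c in product(grid, repeat=3)
           if can_affect(a, b) and can_affect(b, c))
```

Antisymmetry follows because dt >= |dx| and -dt >= |dx| force dt = dx = 0; transitivity follows from the triangle inequality.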

↑ comment by CCC · 2012-10-23T18:12:11.010Z

Yes, once you specify (explicitly or implicitly) what you mean by “at any given time” (i.e. what frame of reference you're using).

This is where it all gets complicated. If I'm trying to talk about one instantaneous event maintaining an existence for longer than an instant - well, language just isn't structured right for that. An event can partake of many frames of reference, many of which can include me at different times by my watch (particularly if the event in question takes place in the Andromeda Galaxy). So, if there is one reference frame where an Event occurs at the same time as my watch shows 20:00, and another reference frame shows the same (distant) event happening while my watch says 21:00, then does that Event remain in existence for an entire hour?

That's basically the question I'm asking; while I suspect that the answer is 'no', I also don't see what experiment can be used to prove either a positive or a negative answer to that question (and either way, the same experiment seems likely to also prove something else interesting).

Yes, if “are” is interpreted non-indexically (i.e. not as “are now”).

I meant it as "are now".

I would expect that there would be some way, as yet undiscovered, to affect them - some way, in short, to travel through time (or at least to send an SMS to the past).

Why? In special relativity, “X can affect Y” is equivalent to “Y is within or on the future light cone of X”, which is a partial order relation, and that's completely self-consistent.

Because if it is now in existence, then I imagine that there is now some way to affect it, which in this case would imply time travel (and therefore at least some form of FTL travel).

↑ comment by A1987dM (army1987) · 2012-10-24T13:22:40.953Z

First of all, Thou Shalt Not use several frames of reference at once unless you know what you're doing or you risk being badly confused. (Take a look at the Special Relativity section of the Usenet Physics FAQ, especially the discussion of the Twin Paradox.) If you can, also get familiar with spacetime diagrams (also explained in that FAQ).

According to special relativity, the set of instants B in your life such that there exists an inertial frame of reference in which B is simultaneous with a fixed event A happening in Andromeda lasts 2L/c, where L is the distance from you to Andromeda (measured in your rest frame). (Now, you do need experiments to tell whether special relativity applies to the real world, but any deviation from it, except due to gravitation, must be very small or apply only in certain circumstances, or we would have seen it by now.)
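The following small numerical sketch illustrates the 2L/c claim, and it also bears on the 20:00 versus 21:00 question above. It rests on several illustrative assumptions. You are inertial at x = 0. Event A sits at (t = 0, x = L). Units have c = 1, so times are in years and distances in light-years. The function names are hypothetical. For each of your instants t, the code solves for the boost velocity of the frame in which your instant is simultaneous with A. Such a frame exists exactly when |t| < L.

```python
import math

def boost_velocity(t, L):
    """Velocity (fraction of c) of the inertial frame in which your instant
    (t, 0) is simultaneous with A = (0, L), or None if no such frame exists."""
    if abs(t) >= L:
        # Not spacelike-separated: no frame makes them simultaneous.
        return None
    # Lorentz transform t' = gamma * (t - v * x). Requiring t'_A == t'_B
    # gives -gamma * v * L == gamma * t, hence v = -t / L.
    return -t / L

def transformed_time(t, x, v):
    """Time coordinate of event (t, x) in a frame moving at velocity v."""
    gamma = 1.0 / math.sqrt(1.0 - v * v)
    return gamma * (t - v * x)

def simultaneity_window(L):
    """Length of the open interval (-L, L) of your instants: 2L (i.e. 2L/c)."""
    return 2 * L

# Assumed: Andromeda at roughly 2.5 million light-years.
L = 2.5e6
one_hour = 1 / (365.25 * 24)  # one hour, in years
for t in (0.0, one_hour):  # "20:00" and "21:00" on your watch
    v = boost_velocity(t, L)
    same = math.isclose(transformed_time(0.0, L, v), transformed_time(t, 0.0, v))
    print(t, v, same)
print(simultaneity_window(L))
```

Both watch readings fall well inside a window millions of years wide, so each one is "simultaneous" with A in some frame. The required boost for a one-hour difference is a tiny fraction of c. This is a statement about coordinate labels rather than about A persisting for an hour.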

I meant it as "are now".

I'd say that the concept of “now” needs a frame of reference to be specified (or implicit from the context) to make sense.

Because if it is now in existence, then I imagine that there is now some way to affect it, which in this case would imply time travel (and therefore at least some form of FTL travel).

I think you are trying to apply to Minkowski spacetime an intuition that only applies to Galilean spacetime (and even then, it's not an intuition that everyone shares; IIRC, for centuries before Einstein came along, some people found instantaneous action at a distance counterintuitive and took it as a reason to suspect that Newtonian physics was not the whole story).

↑ comment by CCC · 2012-10-25T08:23:36.860Z

First of all, Thou Shalt Not use several frames of reference at once unless you know what you're doing or you risk being badly confused.

I think that this is important; I have come to suspect that I am somewhat confused.

I think you are trying to apply to Minkowski spacetime an intuition that only applies to Galilean spacetime

This is more than likely correct. I would also note that I have been applying, over very long (intergalactic) distances, the assumption that there is no expansion, which is clearly wrong. I suspect that I should probably look more into General Relativity before continuing along this train of thought.

↑ comment by A1987dM (army1987) · 2012-10-25T08:48:49.244Z

I would also note that I have been applying, over very long (intergalactic) distances, the assumption that there is no expansion, which is clearly wrong.

Andromeda is nowhere near far enough away for the expansion of the universe to matter. (In fact, according to Wikipedia, its light is blueshifted, meaning that its gravitational attraction to us is winning over the expansion of space.)
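A back-of-the-envelope sketch shows the scale involved. The inputs are assumed round numbers, not precise measurements: H0 ≈ 70 km/s per megaparsec and a distance of about 2.5 million light-years. The function names are hypothetical. The code computes the recession speed Hubble's law alone would assign at that distance. It also computes the rough fraction by which expansion would stretch the distance during the light-travel time (H0 times the travel time, valid only when that product is small).

```python
# Assumed round-number inputs (approximate, for illustration only).
H0 = 70.0                  # Hubble constant, km/s per megaparsec
DISTANCE_LY = 2.5e6        # distance to Andromeda, light-years
LY_PER_MPC = 3.2616e6      # light-years per megaparsec
KM_PER_MPC = 3.0857e19     # kilometres per megaparsec
SECONDS_PER_YEAR = 3.156e7

def hubble_flow_speed(distance_ly, h0=H0):
    """Recession speed (km/s) that Hubble's law v = H0 * d alone would give."""
    return h0 * distance_ly / LY_PER_MPC

def expansion_fraction_during_light_travel(distance_ly, h0=H0):
    """Rough fractional growth of the distance while light crosses it,
    H0 * travel_time; only meaningful when the result is much less than 1."""
    hubble_time_years = KM_PER_MPC / h0 / SECONDS_PER_YEAR  # 1 / H0 in years
    travel_time_years = distance_ly  # light covers 1 light-year per year
    return travel_time_years / hubble_time_years

print(f"Hubble-flow speed: {hubble_flow_speed(DISTANCE_LY):.0f} km/s")
print(f"Fractional stretch: {expansion_fraction_during_light_travel(DISTANCE_LY):.1e}")
```

With these inputs the results are roughly 54 km/s and about 1.8 × 10⁻⁴. For special-relativistic reasoning about Andromeda, ignoring expansion therefore changes distances by only a few hundredths of a percent.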

comment by dspeyer · 2012-10-21T01:59:53.971Z

Can anyone explain why epiphenomenalist theories of consciousness are interesting? There have been an awful lot of words on them here, but I can't find a reason to care.

↑ comment by CarlShulman · 2012-10-21T20:25:33.117Z

It seems that you get similar questions as a natural outgrowth of simple computational models of thought. E.g. if one performs Solomonoff induction on the stream of camera inputs to a robot, what kind of short programs will dominate the probability distribution over the next input? Not just programs that simulate the physics of our universe: one would also need additional code to "read off" the part of the simulated universe that corresponded to the camera inputs. That additional code looks like epiphenomenal mind-stuff. Using this framework you can pose questions like "if the camera is expected to be rebuilt using different but functionally equivalent materials, will this change the inputs Solomonoff induction predicts?" or "if the camera is about to be duplicated, which copy's inputs will be predicted by Solomonoff induction?"

If we go beyond Solomonoff induction to allow actions, then you get questions that map pretty well to debates about "free will."

↑ comment by Matt_Simpson · 2012-10-28T21:48:43.048Z

one would also need additional code to "read off" the part of the simulated universe that corresponded to the camera inputs. That additional code looks like epiphenomenal mind-stuff.

I don't understand why the additional code looks like epiphenomenal mind-stuff. Care to explain?

↑ comment by gwern · 2012-10-28T23:12:00.878Z

I take Carl to mean that the program corresponding to 'universe A simulating universe B and I am in universe B' is strictly more complex than 'I am in universe B' while also predicting all the same observations, and so the 'universe A simulating universe B' part of the program makes no difference in the same way that mental epiphenomena make no difference: they predict you will make the same observations, while being strictly more complex.
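This toy two-hypothesis sketch shows why the extra complexity never gets paid back under a length-based prior. The program lengths are made-up numbers, and the names are hypothetical. Real Solomonoff induction sums over all programs for a universal machine and is uncomputable. The sketch isolates only the 2^-length weighting, assuming both programs assign the same probability to every observation.

```python
from fractions import Fraction

# Hypothetical program lengths in bits, chosen only for illustration.
LENGTHS = {
    "B": 1000,        # "I am in universe B"
    "A_sim_B": 1050,  # "universe A simulates universe B, and I am in B"
}

def prior(length_bits):
    """Solomonoff-style prior weight 2^-length for a program."""
    return Fraction(1, 2 ** length_bits)

def posterior(lengths, likelihood):
    """Normalise prior * likelihood over a finite set of hypotheses."""
    unnormalised = {h: prior(n) * likelihood(h) for h, n in lengths.items()}
    total = sum(unnormalised.values())
    return {h: w / total for h, w in unnormalised.items()}

# Both programs predict the same observations, so every observation gives
# them the same likelihood (here 1), and the 2^50 prior ratio never changes.
post = posterior(LENGTHS, lambda h: Fraction(1))
print(post["B"] / post["A_sim_B"] == 2 ** 50)
```

Exact `Fraction` arithmetic avoids the underflow that 2^-1000 would cause as a float.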

↑ comment by CarlShulman · 2012-10-29T21:18:44.338Z

This seems to be talking about something entirely different.

↑ comment by SilasBarta · 2012-10-28T23:59:15.634Z

the program corresponding to 'universe A simulating universe B and I am in universe B' is strictly more complex than 'I am in universe B' while also predicting all the same observations, and so the 'universe A simulating universe B' part of the program makes no difference in the same way that mental epiphenomena make no difference - they predict you will make the same observations, while being strictly more complex.

True, but, just as a reminder, that's not the position we're in. There are other (plausibly necessary) parts of our world model that could give us the implication "universe A simulates us" "for free", just as "the electron that goes beyond our cosmological horizon keeps existing" is an implication we get "for free" from minimal models of physics.

In this case (per the standard Simulation Argument), the need to resolve the question of "what happens in civilizations that can construct virtual worlds indistinguishable from non-virtual worlds" can force us to posit parts of a (minimal) model that then imply the existence of universe A.

↑ comment by Matt_Simpson · 2012-10-28T23:32:38.612Z

Ah, ok, that makes sense. Thanks!

↑ comment by CarlShulman · 2012-10-29T21:17:30.604Z

The code simulating a physical universe doesn't need to make any reference to which brain or camera in the simulation is being "read off" to provide the sensory input stream. The additional code takes the simulation, which is a complete picture of the world according to the laws of physics as they are seen by the creatures in the simulation, and outputs a sensory stream. This function is directly analogous to what dualist/epiphenomenalist philosopher of mind David Chalmers calls "psychophysical laws."
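Here is a toy sketch of the decomposition described here: a hypothesis program built from "physics" plus "read-off" code. A simple cellular automaton (Rule 90) stands in for a universe simulation, and all names are hypothetical. It is not Solomonoff induction itself. It shows only the structure: the physics never mentions the camera, and the read-off output never feeds back into the physics. Two duplicated cameras correspond to the same physics with different read-off code.

```python
from typing import Callable, List, Sequence, Tuple

State = List[int]

def physics_step(state: State) -> State:
    """Toy 'laws of physics': a ring of cells, each becoming the XOR of its
    two neighbours (Rule 90). Nothing here refers to any camera or observer."""
    n = len(state)
    return [state[(i - 1) % n] ^ state[(i + 1) % n] for i in range(n)]

def simulate(initial: State, steps: int) -> List[State]:
    history = [list(initial)]
    for _ in range(steps):
        history.append(physics_step(history[-1]))
    return history

def make_read_off(camera_cells: Sequence[int]) -> Callable[[State], Tuple[int, ...]]:
    """Toy 'read-off' code: extracts the cells where one camera sits.
    Its output is never passed back into physics_step."""
    def read_off(state: State) -> Tuple[int, ...]:
        return tuple(state[i] for i in camera_cells)
    return read_off

def sensory_stream(initial: State, steps: int, read_off) -> List[Tuple[int, ...]]:
    return [read_off(state) for state in simulate(initial, steps)]

world = [0, 0, 0, 1, 0, 0, 0, 0]
# Two "copies" of a camera: identical physics, different read-off code.
print(sensory_stream(world, 3, make_read_off([3, 4])))
print(sensory_stream(world, 3, make_read_off([1, 2])))
```

In a length-weighted prior, the read-off function contributes its own bits to each hypothesis. That is where questions about rebuilt or duplicated cameras show up.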

↑ comment by Giles · 2012-10-28T20:59:33.576Z

Oh wow... I had been planning on writing a discussion post on essentially this topic. One quick question: if you have figured out the shortest program that will generate the camera data, is there a non-arbitrary way we can decide which parts of the program correspond to "physics of our universe" and which parts correspond to "reading off the camera's data stream within the universe"?

↑ comment by drethelin · 2012-10-21T02:20:18.175Z

Pretty much the same reason religion needs to be talked about. If no one had invented it, it wouldn't be useful to dispute notions of God creating us for a divine purpose, but because many people think this indeed happened, you have to talk about it. It's especially important for reasonable discussions of AI.

↑ comment by dspeyer · 2012-10-21T06:47:27.410Z

Religion and epiphenomenalogy differ in three important ways:

- Religion is widespread. Almost everyone knows what it is. Most people have at least some religious memes sticking in their heads. A significant fraction of people have dangerous religious memes in their heads, so decreasing those qualifies as raising the sanity waterline. Epiphenomenalogy is essentially unknown outside academic philosophy, and now the lesswrong readership.

- Religion has impact everywhere. People have died because of other people's religious beliefs, and not just from violence. Belief in epiphenomenalogy has almost no impact on the lives of non-believers.

- Religious thought patterns re-occur. Authority, green/blue, and "it is good to believe" show up over and over again. The sort of thoughts that lead to epiphenomenalogy are quite obscure.

↑ comment by hairyfigment · 2012-10-21T18:31:10.433Z

The word "epiphenomenalogy" is rare. The actual theory seems like an academic remnant of the default belief that 'You can't just reduce everything to numbers, people are more than that.'

So your last point seems entirely wrong. Zombie World comes from the urge to justify religious dualism or say that it wasn't all wrong (not in essence). And the fact that someone had to take it this far shows how untenable dualism seems in a practical sense, to educated people.

↑ comment by TAG · 2020-03-03T10:19:31.031Z

The most famous arguments for epiphenomenalism and zombies have nothing to do with religion. And we don't actually have reductive explanations of qualia, as you can tell from the fact that we can't construct qualia: we can't write code that sees colours or tastes flavours. Construction is reduction in reverse.

↑ comment by Kaj_Sotala · 2012-10-21T08:47:39.709Z

Epiphenomenalogy is essentially unknown outside academic philosophy, and now the lesswrong readership.

I'd say it's more widespread than that. Some strands of Buddhist thought, for instance, seem to strongly imply it even if they don't state it outright. And it feels like it'd be the most intuitive way of thinking about consciousness for many of the people who'd think about it at all, even if they weren't familiar with academic philosophy. (I don't think I got it from academic philosophy, though I can't be sure of that.)

↑ comment by AnotherIdiot · 2012-10-21T02:16:45.428Z

Because epiphenomenalist theories are common but incorrect, and the goal of LessWrong is at least partially what its name implies.

↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2012-10-21T06:13:02.063Z

It's good practice for seeing how the rules play out; and having a clear mental visualization of how causality works, and what sort of theories are allowed to be meaningful, is actually reasonably important both for anti-nonsense techniques and AI construction. People who will never need any more skill than they currently have in Resist Nonsense or Create Friendly AI can ignore it, I suppose.

↑ comment by bryjnar · 2012-10-21T10:30:50.400Z

Philosophers have a tendency to name pretty much every position that you can hold by accepting/refusing various "key" propositions. Epiphenomenalism tends to be reached by people frantically trying to hold on to their treasured beliefs about the way the mind works. Then they realise they can consistently be epiphenomenalists and they feel okay because it has a name or something.

Basically, it's a consistent position (well, Eliezer seems to think it's meaningless!), and so you want to go to some effort to show that it's actually wrong. Plus it's a good exercise to think about why it's wrong.

↑ comment by RichardChappell · 2012-10-25T23:10:27.577Z

In my experience, most philosophers are actually pretty motivated to avoid the stigma of "epiphenomenalism", and try instead to lay claim to some more obscure-but-naturalist-friendly label for their view (like "non-reductive physicalism", "anomalous monism", etc.)

↑ comment by TAG · 2020-03-03T10:31:22.333Z

People don't like epiphenomenalism per se; they feel forced into it by other claims they find compelling, usually some combination of:

1. Qualia exist in some sense.

2. Qualia can't be explained reductively.

3. The physical world is causally closed.

In other words, 1 and 2 jointly imply that qualia are non-physical; 3 means that physical explanations are sufficient; so non-physical qualia must be causally idle.

The rationalist world doesn't have a clear refutation of the above. Some try to refute 1 (the Dennett approach of qualia denial). Others try to refute 2, in ways that fall short of providing a reductive explanation of qualia. Others just confuse solving the easy problem with solving the hard problem.
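The inference from premises 1 to 3 can be made explicit as a small sketch in Lean 4. The predicate names are hypothetical. Two premises are assumptions of this sketch rather than part of the stated argument. The first is a bridging premise that whatever is physical is reductively explicable, which is needed to get from 2 to "non-physical". The second is a strong reading of causal closure (whatever affects the physical world is itself physical), which builds in the usual no-overdetermination step. Premise 1 is needed only to conclude that such an entity actually exists.

```lean
theorem qualia_causally_idle {Entity : Type}
    (Qualia Physical Reducible CausesPhysical : Entity → Prop)
    -- (2) qualia can't be explained reductively
    (h2 : ∀ e, Qualia e → ¬ Reducible e)
    -- assumed bridge: whatever is physical can be explained reductively
    (hPhys : ∀ e, Physical e → Reducible e)
    -- (3), strong reading: anything that affects the physical is physical
    (h3 : ∀ e, CausesPhysical e → Physical e) :
    ∀ e, Qualia e → ¬ Physical e ∧ ¬ CausesPhysical e := by
  intro e hq
  have hnp : ¬ Physical e := fun hp => h2 e hq (hPhys e hp)
  exact ⟨hnp, fun hc => hnp (h3 e hc)⟩
```

Written this way, each of the refutation strategies mentioned corresponds to rejecting a specific hypothesis.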

comment by mfb · 2012-10-25T15:12:00.802Z

I think the question "does consciousness affect neurons?" is as meaningful as "does the process of computation in a computer affect bits?".

↑ comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2012-10-26T05:57:35.982Z

In other words, "Yes"?

↑ comment by mfb · 2012-10-26T16:06:21.515Z

Bit modifications are part of the process of computation. I wouldn't say they are "affected by" that (they depend causally on the input which started the process of computation, however). In a similar way, individual humans are not affected by the concept of "mankind" that covers all of them.

comment by RichardChappell · 2012-10-22T22:43:22.925Z