Time and Energy Costs to Erase a Bit

post by DaemonicSigil · 2023-05-06T23:29:42.909Z · LW · GW · 32 commentsContents

The argument for ~50kT Simple straightforward argument for ~kT Computational Study: Time costs and energy costs for reliable bit erasure Choosing a model for the flow rate of particles between states Code Standard Landauer bit erasure with no energy wall Double well from Landauer's paper Moving the particle between states. Cheap reliable erasure for about kT energy cost Isn't this really time-expensive? None 32 comments

Landauer derived a limit on the amount of energy dissipated in order to erase a bit. This limit is , where is Boltzmann's constant and is the temperature of the environment.

However, recently [LW(p) · GW(p)] some [LW(p) · GW(p)] questions [LW(p) · GW(p)] have been raised as to what situations this limit applies to. Maybe using energy only gives you a 50% chance of erasing the bit, which is nearly useless. Does suffice to allow us to erase a bit with high reliability, or is much more energy (eg. ) required to do this? We'll analyze the energy cost to reliably erase a bit in this post, while also considering the time taken to do so.

The argument for ~

First let's examine the argument for . Consider that the bit needs to represented with two possible states. For simplicity, let's say we have a particle which can be in either of the two states. To keep the particle from jumping from one state to the other, there needs to be an energy wall between the two states. And this wall needs to be high enough that the particle doesn't spontaneously jump between them due to thermal noise. If we want a decent reliability (say probability of error), then giving the wall a height of something like should be more than sufficient, since the chance of jumping the wall goes with .

Now to erase a bit, we raise the energy of the state on one side of the wall. If the bit is a 1, then the energy of the particle increases until it is able to jump the wall and land on the other side. (Or if the bit is a 0, then the particle just stays on the other side and nothing happens.) Since we have to raise the particle quite high for it to be able to jump the wall, nearly the entire height of the wall, the energy cost is equal to nearly the height of the wall. So if the bit had a 50% chance of being a 1, then the expected energy cost to erase a bit in this scenario is about (we don't have to pay any energy cost to raise the energy of a state if the particle is not currently occupying that state).

Simple straightforward argument for ~

First note that we only need the energy wall between states to be high while we care about the value of the bit and are doing computations with it. Once it comes time to erase a bit, we no longer care at all about whether the bit gets flipped by thermal noise. We're going to be erasing it in any case.

So consider a computer made out of 2 parts: The CPU and the Radiator. The CPU is a futuristic reversible computer. It's actively cooled to a few Kelvin, which uses some energy, but it's well-insulated enough that the quantity of energy required is relatively small. The CPU isn't responsible for erasing any information. Erasing information at low temperature would be proportionally cheaper, but the energy cost for a refrigerator to pull out the heat produced by the erasures would cancel out the benefit of the low temperature.

Whenever the CPU needs to erase a bit, it sends that bit to the Radiator, which is responsible for doing the erasure. The Radiator is exposed to the room temperature environment, and the heat produced by erasing all the bits flows from the Radiator to the environment. If the environment is at temperature and the CPU is at temperature , then the energy wall required to keep bits stable in the CPU is something like . But lifting a particle by is a perfectly reasonable energy cost, on the same order as the in additional energy needed to fully complete the erasure.

Computational Study: Time costs and energy costs for reliable bit erasure

Here we consider the problem of erasing a bit in more detail. We won't worry about having a separately cooled CPU in this model. We'll show that even at constant temperature , it's possible to erase a bit with high reliability in a reasonable amount of time for a cost of approximately .

Choosing a model for the flow rate of particles between states

In this model, we'll have discrete states, indexed by , each with a definite energy level . We'll study a process that runs from to , and we have control of the energy level of each state, written or collectively . In this model, information is represented by the position of a single particle, which can be in any of the states. We have a probability distribution over states which varies with time, .

States can be either connected or disconnected. We represent this by defining . If state is connected to state , then . Otherwise, . We also have a characteristic time constant , which represents how fast the particle tends to jump between states (due to thermal noise from the environment).

We'll use the following equation for how varies with time, assuming we're given .

This formula results in a probability distribution for a particle's state that converges to the Boltzmann distribution over time (at least if the states form a connected graph). In particular, note that for two states that are connected, we have the following steady state condition:

There are some other possible formulas which also satisfy this condition, but this one is a particularly good model because it limits the maximum flow rate between states to , which is a relatively pessimistic assumption for the time cost to erase a bit. This formula is also essentially a continuous-time version of the transition probabilities that show up in the Metropolis-Hastings algorithm. Finally, this formula gives flow rates that match those that Landauer gives for his double-well model. (I'm happy to elaborate on this in a comment if anyone asks.)

As for energy costs to complete an operation, the formula for expected energy cost looks like this:

This just says that the energy cost of changing the energy of a state by is equal to the probability that the particle is in that state times .

Code

This simulation computes the time and energy cost to erase a bit with error probability .

import numpy as np

import matplotlib.pyplot as plt

# constants:

dt = 0.01

def renormalize_probs(p):

p = np.maximum(p, 0.)

return p/p.sum()

def calc_energy_cost(p0, get_energies, connection_matrix, reliability_threshold=1e-15, dest_state=0):

""" diagonal elements of connection matrix should be 0 """

p = p0

t = 0.

total_cost = 0.

E_hist = [get_energies(t)]

p_hist = [p0.copy()]

cost_hist = [0.]

while np.sum(p[:dest_state]) + np.sum(p[dest_state + 1:]) > reliability_threshold:

t += dt

E = get_energies(t)

dE = E - E_hist[-1]

total_cost += np.sum(dE*p)

E_diff = E.reshape((1, -1)) - E.reshape((-1, 1))

flow_matrix = connection_matrix*np.exp(np.minimum(E_diff, 0)) # flow from j to i

dp_dt = flow_matrix - np.diag(flow_matrix.sum(0))

p += dt*(dp_dt @ p)

p = renormalize_probs(p)

E_hist.append(E)

p_hist.append(p)

cost_hist.append(total_cost)

# print result

print("time cost:", t)

print("energy cost", total_cost)

# plot result

fig, axes = plt.subplots(3)

ax1, ax2, ax3 = axes

ax1.set(ylabel="E_i"); ax2.set(ylabel="p_i"); ax3.set(ylabel="E_cost", xlabel="t")

t_data = dt * np.arange(len(cost_hist))

E_data = np.stack(E_hist, axis=1)

p_data = np.stack(p_hist, axis=1)

for i in range(len(p0)):

ax1.plot(t_data, E_data[i])

ax2.plot(t_data, p_data[i])

ax3.plot(t_data, cost_hist, color="k")

plt.show()

return total_cost

def line_connect_matrix(n):

""" construct the connection matrix corresponding to a line graph of size n """

ans = np.zeros((n, n))

for i in range(n-1):

ans[i, i+1] = 1.

ans[i+1, i] = 1.

return ans

# standard Landauer bit erasure

a = 0.25

def get_energies_basic(t):

return np.array([0., a*t])

print("\nStandard Landauer bit erasure (no wall to preserve bit identity)")

calc_energy_cost(np.array([0.5, 0.5]), get_energies_basic, line_connect_matrix(2))

# Landauer double well

E_wall = 40

a = 2.0

def get_energies_double_well(t):

return np.array([0., E_wall, min(a*t, E_wall)])

print("\ndouble well model from Landauer's paper")

calc_energy_cost(np.array([0.5, 0., 0.5]), get_energies_double_well, line_connect_matrix(3))

# particle swap

a = 0.2

E_0 = 40

def get_energies_swap(t):

E = a*t

return np.array([max(E_0 - E, 0), max(E - E_0, 0)])

print("\nSwapping a particle from one state to another")

calc_energy_cost(np.array([0., 1.]), get_energies_swap, line_connect_matrix(2))

# two stage erasure

a = 0.1

E_0 = 40

t_e = 850 # time where we stop swapping and start erasuing

E_1 = a*t_e - E_0

def get_energies_swap(t):

if t <= t_e: # swap

E = a*t

return np.array([max(E - E_0, 0), max(E_0 - E, 0), max(E_0 - E, 0), max(E - E_0, 0)])

else: # standard Landauer bit erasure

t -= t_e

return np.array([E_1, 0., t*a, E_1])

print("\nCheap and reliable two stage erasure")

calc_energy_cost(np.array([0.5, 0., 0., 0.5]), get_energies_swap, line_connect_matrix(4), dest_state=1)

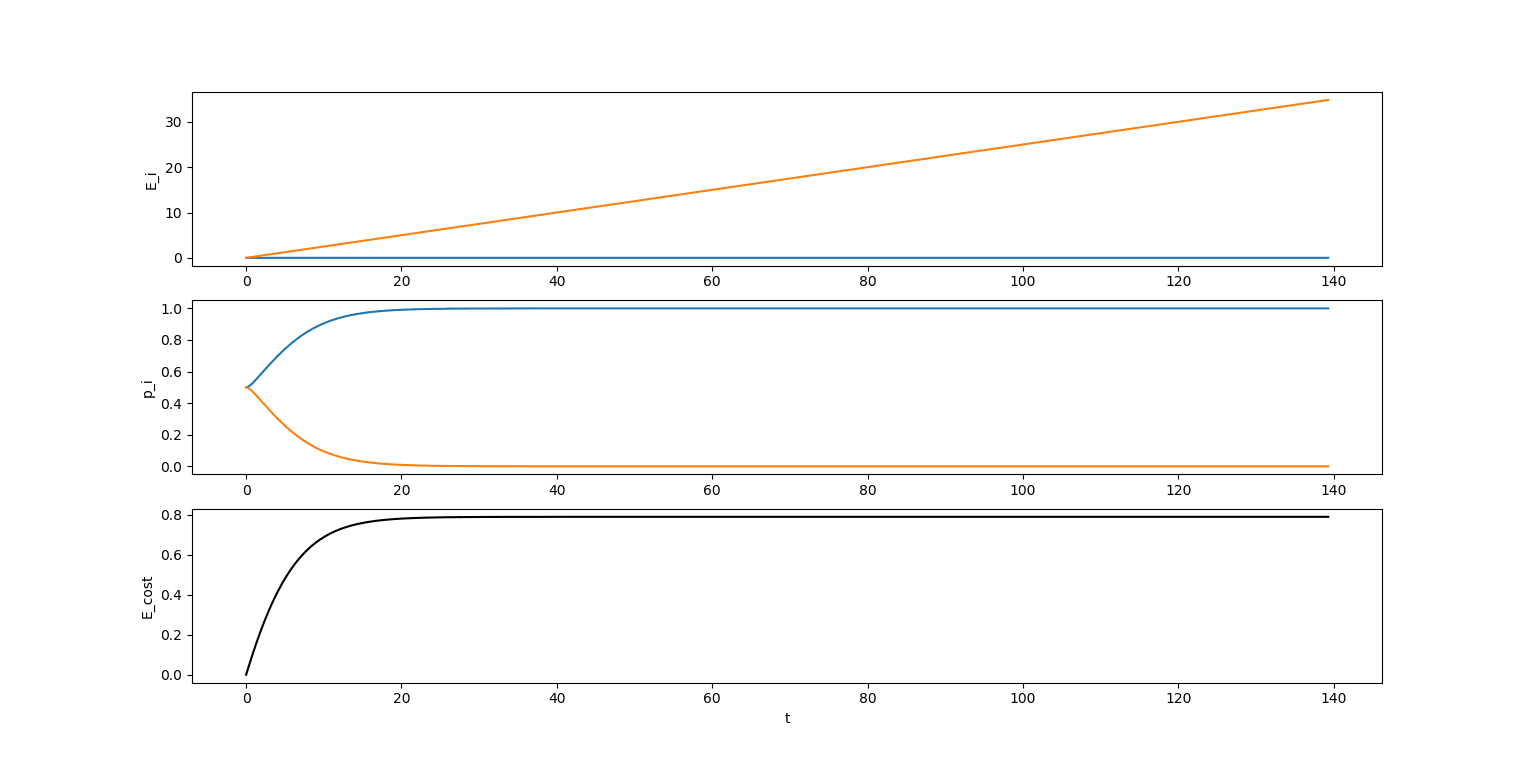

Standard Landauer bit erasure with no energy wall

This costs in theory if we do it so slowly that the distribution of particle position always matches the Boltzmann distribution. In practice it's a little bit more energy, but still quite close. Energy cost: ~ Time cost: ~

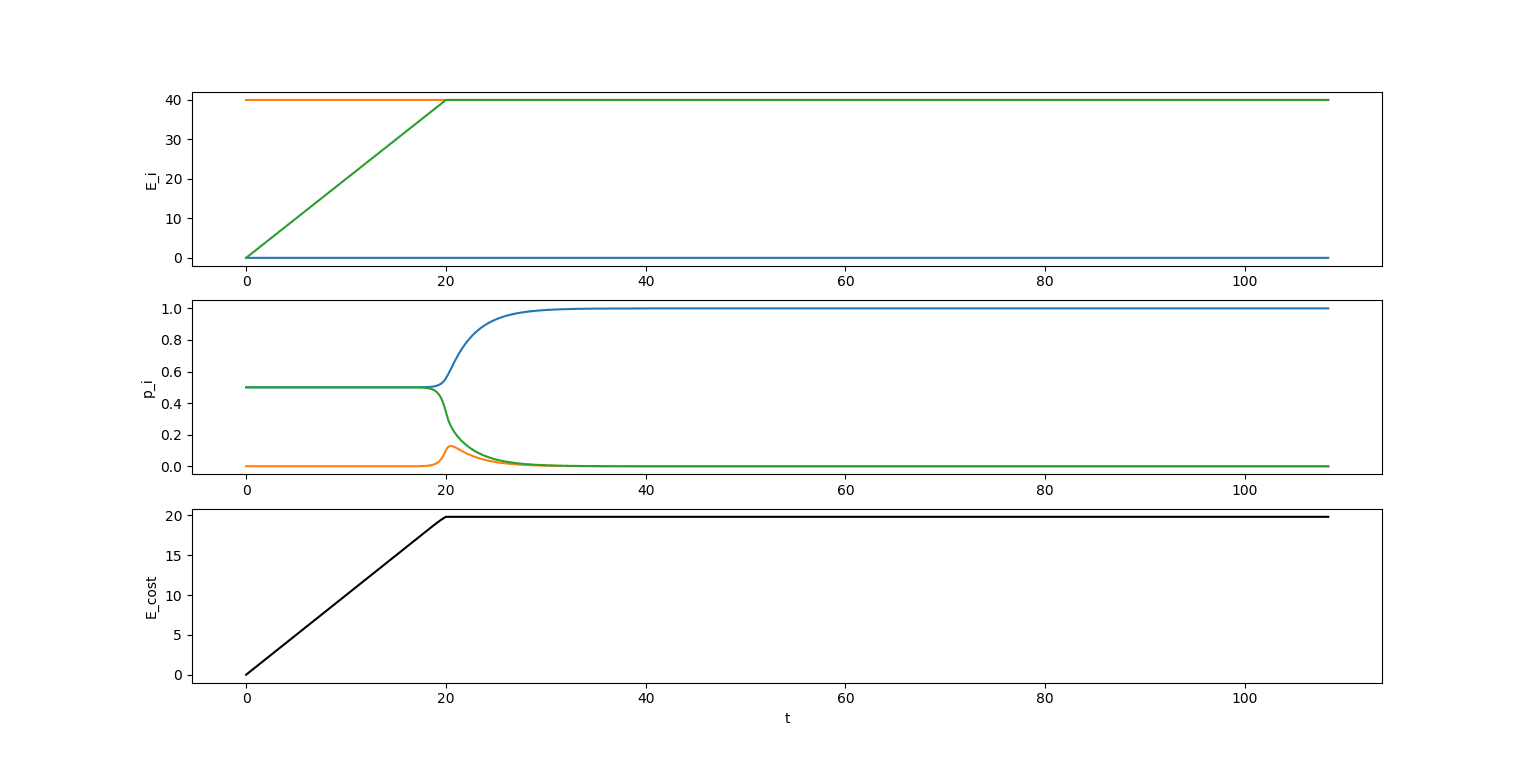

Double well from Landauer's paper

Here we can see a much higher energy cost of ~. But at least there's an energy wall to conserve our bit from being corrupted by noise.

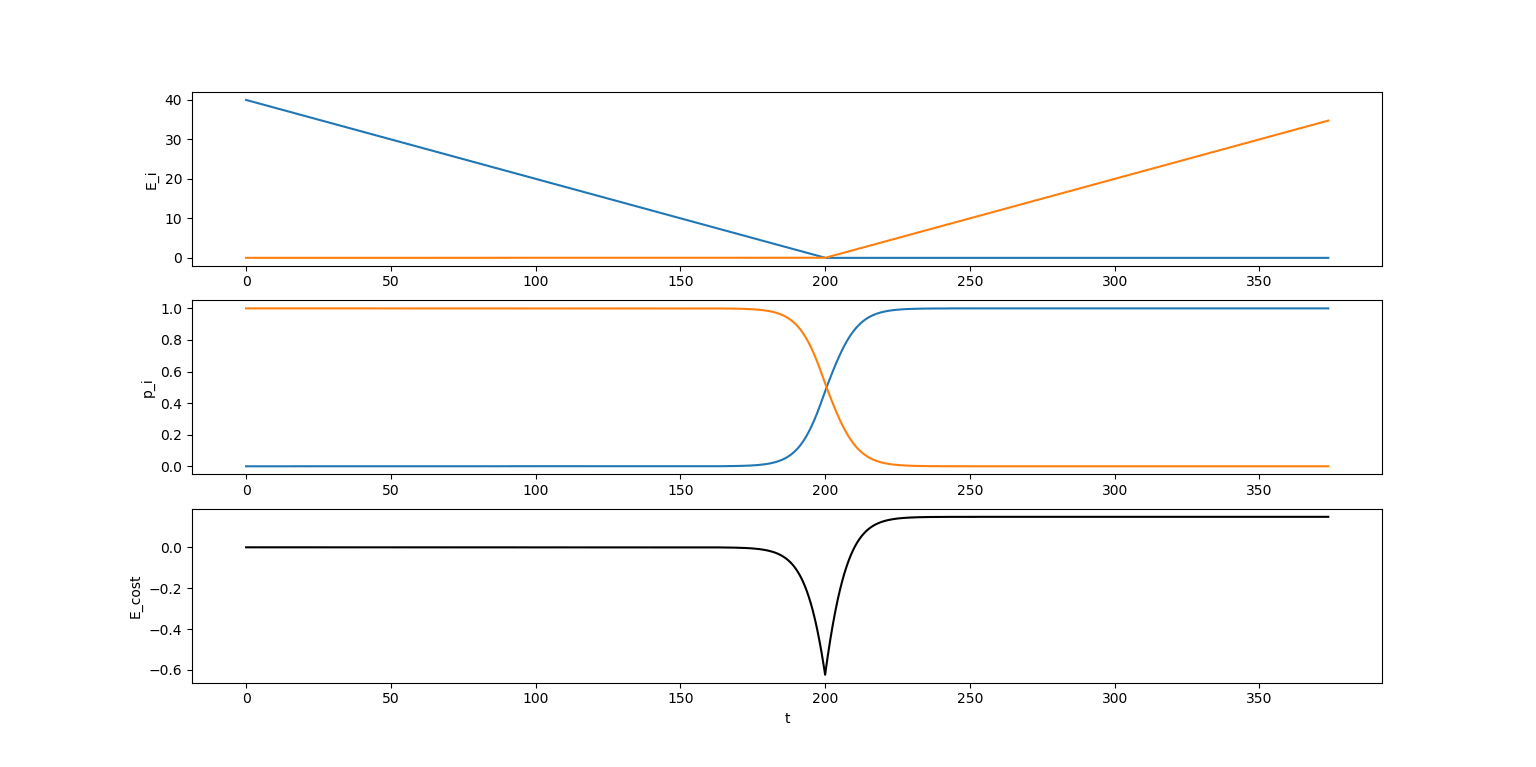

Moving the particle between states.

As a warmup to being able to erase a bit reliably (starting from an initial state with an energy wall), we first calculate the cost to move a particle from one state to another (the destination state starts out with high energy so that its chances of containing the particle are initially almost 0). This should be completely free in the limit of infinite time, but has a (quite cheap) cost of ~ for this particular case. Time cost: ~

From the graph, you can see that we first generate some energy lowering the destination state, then spend it back on lifting the starting state to force the particle into the destination state. This is not perfectly efficient, so there's still a small net energy cost.

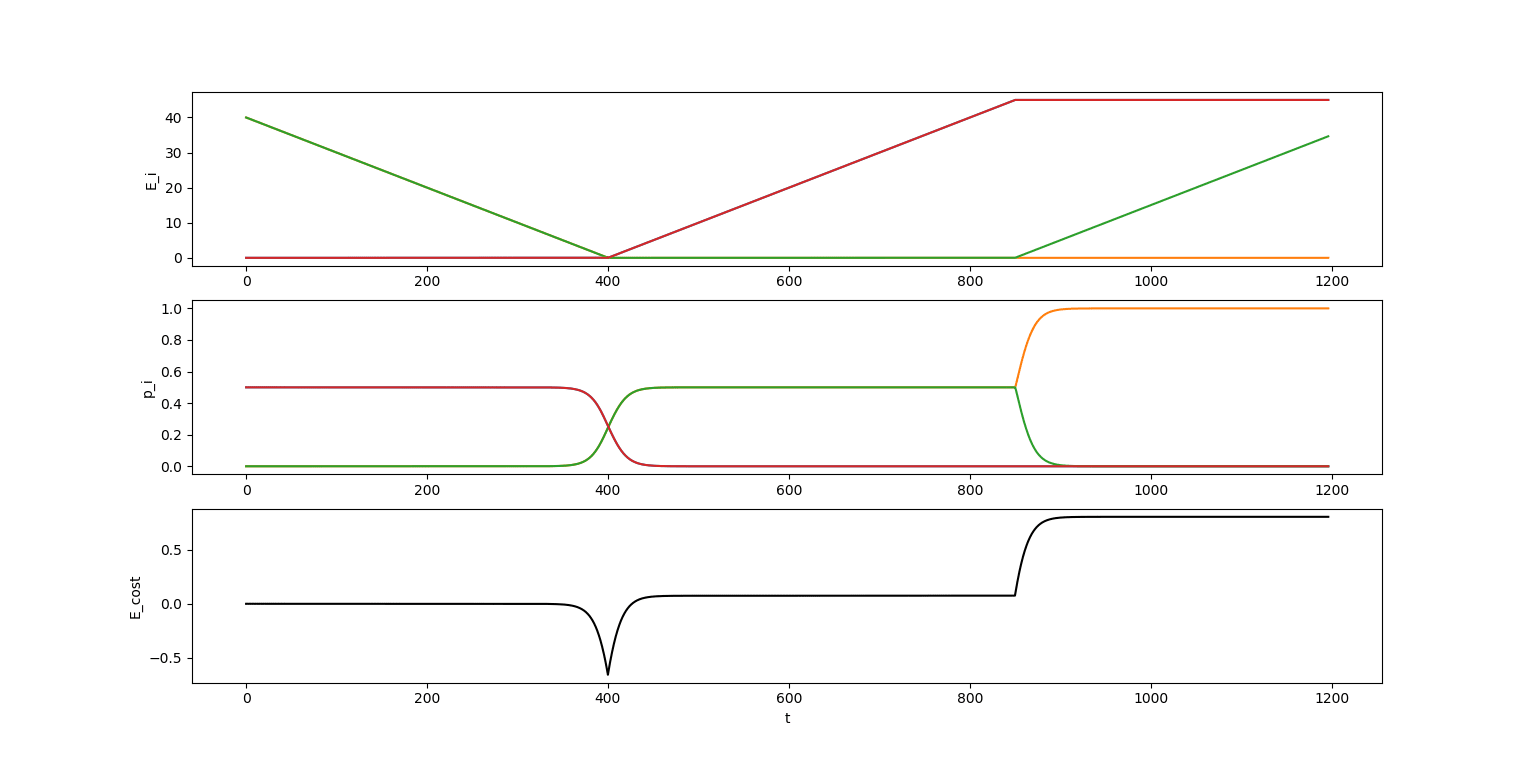

Cheap reliable erasure for about energy cost

There's four states now, connected in a row with two states in the middle and a state on each end. The two middle states start out being very high energy to form an energy wall just like we had for Landauer's double well. The outer two states are what we use to represent the bit. So we're starting out from a bit representation that's very reliable and could absolutely be used for computation.

The first stage of erasure is to move the particle from the outer two states to the inner two states following the "moving particle between states" procedure described above. Because of the symmetry, there's no dissipation caused when the particle jumps between the middle two states. After this operation, there's no longer an energy wall, and the particle is hanging out in the two middle states. The second stage of erasure is that we just perform a standard cheap Landauer erasure (the first case we looked at).

Energy cost: ~. Time cost: ~

Isn't this really time-expensive?

I mean, not that much. It would be bad if time required went as the inverse of error probability, so we'd expect to need time. In that case, we really could say that reliable bit erasure takes infinite time in the limit. Instead we're getting something much more reasonable, seems like maybe (this is my sense just from a bit of messing around).

Q: The constant factor is pretty bad, though, isn't it? Since we dealing with hundreds of ?

A1: First of all, I made the energies simple piecewise linear functions of time for ease of programming, but this is definitely not the most optimal thing to do. You want to move energies more slowly when those energies are very close to each other, and more quickly when they're very different. I bet the improvement could be pretty significant if we actually controlled the energies optimally.

A2: Even if we don't keep them at different temperatures, the CPU/Radiator distinction is still useful for thinking about futuristic reversible computers. The key figures for the CPU performance include both the throughput of operations and also the serial speed of operations, while for the Radiator the things that matters are the throughput and energy cost of bit erasures. Having to put up with a 100x slowdown in operation time would be really terrible for CPU serial operation speed. But this analysis doesn't pertain to CPU operation speed, just to Radiator bit erasure speed, where this can be compensated simply by putting 100x as many bit erasing modules in the Radiator. This has some materials costs, but making the Radiator less efficient also has materials costs since it has to be that much larger to radiate the heat away, not to mention the energy costs which are per-computation and not amortized.

32 comments

Comments sorted by top scores.

comment by Michael Frank (michael-frank) · 2023-07-02T23:18:50.335Z · LW(p) · GW(p)

Hi, not to spend a lot of time here, but someone called my attention to the fact that I was mentioned in the comments. Just a few things:

-

In the comment of mine where I was quoted, I was talking about conventional architectures wherein one erases bits by simply discarding the signal energy, and where the signals themselves have enough associated energy (e.g. an energy difference between 0 and 1 states, or an energy barrier between the states) to be reliably distinguished despite thermal noise. Yes, theoretically one can do somewhat better than this (i.e., closer to the kT ln 2 minimum) with more complicated erasure protocols, but these do generally come at a cost in terms of time. Also, most treatments of these protocols ignore the energy requirements for operating the control mechanisms, which depending on their nature can themselves be substantial. For realistic engineering purposes, one should really analyze a very concrete exemplar mechanism in much more detail; otherwise there are always valid questions that can be raised about whether the analysis that one has done is actually realistic.

-

That being said, regarding the details and analysis and optimization of alternative erasure protocols, there is a lot of existing published and preprint literature on this topic, some of it quite recent, so I would encourage anyone interested in this topic to begin by spending some time surveying what’s already been done on this before spending a ton of time reinventing the wheel. Start by spending a few minutes doing some relevant keyword searches on Google Scholar. Following citations and backward cites, etc. Nowadays you can use AIs to help you quickly absorb the gist of papers you find, so “doing your homework” in terms of background research is easier than ever.

-

All this aside, from my POV, optimizing bit erasure is less interesting than reversible computing, since RC can in theory do even better by avoiding erasure or greatly reducing the number of bit erasures that are even needed. Of course, reversible computing has its own overheads, and my above comments about needing to analyze concrete mechanisms in detail in order to be more relevant for engineering purposes also apply to it. Lots of work still needs to be done to prove any of these ideas to be truly practical. I’d certainly encourage anyone who’s interested to get involved, since we’re never going to see these things happen until a lot more people seriously start working on it. And sadly AI is still a long way from being able to do serious engineering innovation all on its own — but I think humans who understand how to engage AI effectively on challenging problems could make great strides.

Cheers… ~Mike Frank

Replies from: DaemonicSigil↑ comment by DaemonicSigil · 2023-07-04T06:04:27.001Z · LW(p) · GW(p)

Hi Dr. Frank, thanks for weighing in.

This post is definitely towards the theoretical side of the theory-engineering spectrum. So the question it tries to answer is much more "does the fact that the laws of physics are reversible rule this out?" rather than "can we actually build something that does this?". It's sounding to me like lowish cost reliable erasure is not in principle ruled out by reversible physics (eg. this paper gives where is the time required and is some constant), and so the engineering questions are what will be decisive. Hopefully reversible computing will be luckier than nuclear fusion power in terms of the engineering obstacles it runs into.

I don't have too much else to add right now, I'll have to take your advice of looking through the literature and seeing what's out there.

comment by jacob_cannell · 2023-05-07T05:00:40.849Z · LW(p) · GW(p)

However, recently some questions have been raised as to what situations this limit applies to. Maybe using energy only gives you a 50% chance of erasing the bit, which is nearly useless.

You make it sound like 1. these 'questions' were somehow recent, and 2. that I was somehow one of the first to raise them. I think you are somewhat misrepresenting Landauer's paper.

I mean just the abstract says:

This logical irreversibility is associated with physical irreversibility and requires a minimal heat generation, per machine cycle, typically of the order of kT for each irreversible function. This dissipation serves the purpose of standardizing signals and making them independent of their exact logical history. Two simple, but representative, models of bistable devices are subjected to a more detailed analysis of switching kinetics to yield the relationship between speed and energy dissipation, and to estimate the effects of errors induced by thermal fluctuations.

The "order kT" is a minimal rough lower bound, he then presents a more detailed analysis with a tighter bound:

A third source of error consists of the fact that even if the system is allowed to relax completely during switching there would still be a fraction of the ensemble of the order exp( -2A/kT) left in the unfavored initial state. (Assuming A>>kT.)

Anyway regardless there is mostly a consensus around , I'll round up more citations in my next post.

Switching events change bits, and erasures are always bit changes and thus switching events. The system is probabilistic, and thus equivalent to a Quantum CA or a probabilistic CA for classical computation.

There is absolutely nothing in physics that is deterministic, every bit transition is probabilistic.

Whenever the CPU needs to erase a bit, it sends that bit to the Radiator, which is responsible for doing the erasure. The Radiator is exposed to the room temperature environment, and the heat produced by erasing all the bits flows from the Radiator to the environment.

The CPU can not deterministically send a bit to the Radiator/environment. That is a probabilistic operation (ie minimally a 1 bit decision, and thus a 1 bit switching event). The bit particle ends up somewhere in the Radiator/environment with probability , and it ends up in the CPU with probability . Everything is probabilistic.

Given that mistake I'm not reading the rest in detail yet, but I believe what you are doing is calculating the mean cost of erasing bits, averaged over many bits or trials, and then confusing that with reliable erasure of a single specific bit, at a single specific time. Yes, if you have a large number of bits/trials, and you switch them all to 0 with 75% probability, and average out the total cost of successful erasures, you'll get ~ per erasure, but that is a very different thing then highly reliable single bit erasure as required to lift a system up to a useful implementation of deterministic logic circuits. (However, it is a useful representation for analog operations, and I use something similar for estimating synapse energy costs in brain efficiency).

I challenge you to design a physical way to erase a single specific bit reliably (whp) with only ~ energy/noise SNR. You don't need all the states in your model, as bit erasure just means we narrow the state space by half, so it might as well have been two states to begin with. Then just look at the probability you end up in either half, as a function of the energy/noise SNR.

Replies from: DaemonicSigil↑ comment by DaemonicSigil · 2023-05-07T08:04:05.820Z · LW(p) · GW(p)

You make it sound like 1. these 'questions' were somehow recent, and 2. that I was somehow one of the first to raise them. I think you are somewhat misrepresenting Landauer's paper.

I've only read Landauer and Frank, and my readings of both still don't have them making the same claim as you. As far as I can tell, Landauer just says the double well model is "representative", not universal to the point where it imposes a tighter limit on all possible ways of doing computation.

Anyway regardless there is mostly a consensus around exp(−ΔE/KBT), I'll round up more citations in my next post.

I already believe this formula for probability ratios between states as a function of the energy difference between those states, I'm not questioning Boltzmann and Gibbs! But the between states is not at all the same thing as the spent to complete a particular operation.

The CPU can not deterministically send a bit to the Radiator/environment.

Are you trying to get into issues of "sometimes we want to erase a bit, sometimes we don't, and the exact times at which we do want to erase a bit themselves constitute information that we probably also want to erase" here? One nice feature of Landauer's double well erase procedure compared to the more efficient one I describe here is that all the energy spent setting a bit to 0 is only spent when the bit happens to be a 1. Setting 0 to 0 is free in Landauer's double well procedure, whereas in my procedure, the expected energy cost of doing the procedure is the same whether the bit starts out as a 0 or as a 1. Is this a major concern for you?

The bit particle ends up somewhere in the Radiator/environment with probability exp(−ΔE/KBT), and it ends up in the CPU with probability 1−exp(−ΔE/KBT)

The bit (represented by which state is occupied by the particle) is distinct from the particle itself: there's no reason we'd strongly desire that the particle itself end up in the Radiator, just the information about where the particle started. (I think I maybe created some confusion here when writing this by accidentally implying that the energy wall in the Radiator had to be the same as the energy wall in the CPU. In fact, there's no reason they have to be the same at all.)

In any case, remember that the fundamental thermodynamic limit on just moving a bit from place to place is 0. Thermal diffusion is not the only way we know of to move particles around. (Or more abstractly, and more importantly to this discussion, it's not the only way to move information around.) To take an example using quantum mechanics, consider this Hamiltonian:

Wait for an appropriate amount of time, and it will move a bit stored in the first two states (not energy eigenstates, but the basis states used in the matrix itself, to be clear) to the second 2 states.

Yes, if you have a large number of bits/trials, and you switch them all to 0 with 75% probability, and average out the total cost of successful erasures, you'll get ~kTlog2 per erasure, but that is a very different thing then highly reliable single bit erasure as required to lift a system up to a useful implementation of deterministic logic circuits.

Look at the graphs, the probability trace for the destination state asymptotes to 1, not 0.75.

I challenge you to design a physical way to erase a single specific bit reliably (whp) with only ~kTlog2 energy/noise SNR. You don't need all the states in your model, as bit erasure just means we narrow the state space by half, so it might as well have been two states to begin with. Then just look at the probability you end up in either half, as a function of the energy/noise SNR.

I think you want this section [LW · GW] of the post. It has 4 states, since I'm spending 2 states on modelling the energy wall, but if you want a very rough "two state" description of what's going on here, I'm first lowering the energy wall between the states, and then raising up one of the states to about , but slowly enough that the particle has time to leave the state before I spend a bunch of energy lifting the particle itself all the way up to .

Replies from: jacob_cannell↑ comment by jacob_cannell · 2023-05-07T19:24:00.020Z · LW(p) · GW(p)

I've only read Landauer and Frank, and my readings of both still don't have them making the same claim as you. As far as I can tell, Landauer just says the double well model is "representative", not universal to the point where it imposes a tighter limit on all possible ways of doing computation.

See Zhirnov here[1] and Cavin here[2]. As for Mike Frank, you probably just have not read enough of his work (even though I am a near-term reversible computing skeptic, Mike Frank is as well, and I'm generally a huge fan of his work, and have read a large chunk of his extensive output).

As just a single example, here is a very clear relevant quote:

Reversible computing becomes important at a small scale because bit energies are becoming smaller, and are approaching the point (around 1 electron-volt) where they will not be able to be made much smaller without running into severe reliability problems due to thermal noise. But when you manipulate bits in an irreversible fashion, this essentially involves dissipating the entire bit energy to waste heat on each operation. Improvements in computer energy efficiency in the past have been driven mainly by reductions in bit energies, so if bit energy stops decreasing, it’s a big problem – the energy efficiency of traditional irreversible technology will level off and stop improving. Note this observation depends only on very fundamental thermodynamic considerations of thermal noise, and so it’s not affected by the details of whatever clever alternative nanoscale devices you may come up with. Your device technology could be based on superconductors, carbon nanotubes, nanophotonics, quantum interference transistors, I don’t care. As long as it’s a reliable, irreversible device, it will have to dissipate at least on the order of 1 electron volt (about 40 kT) per bit-operation in a room-temperature envronment, full stop, end of story.

Don't take this the wrong way, but if you actually have a way to do irreversible erasures whp at energy << 40kT, that is a publish-worthy result! Maybe you do - but I'm not seeing that yet, so far it just seems you are pushing the real work of erasure into components of the system you aren't modeling fully yet (which is the main downfall of most of the adiabatic logic RC techniques - the best current proposals for RC are drexler/merkle mechanical logic and Frank/etc's asynchronous ballistic RC).

I already believe this formula for probability ratios between states as a function of the energy difference between those states, I'm not questioning Boltzmann and Gibbs! But the between states is not at all the same thing as the spent to complete a particular operation.

Erasure is just a bit moving outside of the computational system, but otherwise is exactly the same as moving a bit inside the system. There is only one rule/relationship here about bit transitions, and it is probabilistic. The boundary between the computer and the environment is just conceptual anyway, in reality there is just one computer and no erasure.

In any case, remember that the fundamental thermodynamic limit on just moving a bit from place to place is 0

Only at zero temp and or with zero reliability. You give an arbitrary example of a Hamiltonian where you've already assumed your conclusion. Let's focus this discussion exclusively on classical physics/systems, unless you believe your argument only applies due to QM effects.

I think you want this section of the post. It has 4 states, since I'm spending 2 states on modelling the energy wall, but if you want a very rough "two state" description of what's going on here, I'm first lowering the energy wall between the states, and then raising up one of the states to about

You can't just magically reliably raise/lower the energy wall, as reliably lowering the energy wall is obviously now just moving a new bit, which you need to either 1. keep track of (reversible computing), or 2. forget/erase and thus pay .

As for energy costs to complete an operation, the formula for expected energy cost looks like this:

You also are also computing an expected average energy cost, which means you are not modeling reliable erasure of a single bit, as I already mentioned.

For us not to be talking past each other, you need to focus discussion on the probability of erasing a single specific bit from the system (the probability the bit was erased, which really just means it transitioned from computational state/location A to untracked state/location B) as a function of energy/noise SNR. This function can not be an expectation (we aren't talking about the mean energy to erase a bit, that is not the form the function needs).

So we need:

p(erasure) =

You can not introduce any other state changes without also describing their bit transition probabilities (you can not manipulate the well/wall in any way without paying the appropriate costs) and tracking those bits.

The expected cost of erasure is completely irrelevant, we are talking specifically and only about the probability of erasure success, for a single specific bit, for a single specific compute cycle of a machine (although we can assume thermal equilibrium).

↑ comment by DaemonicSigil · 2023-05-08T06:20:15.780Z · LW(p) · GW(p)

Don't take this the wrong way, but if you actually have a way to do irreversible erasures whp at energy << 40kT, that is a publish-worthy result!

Yeah, that Frank quote is intriguing. I was previously suspecting that if I talked to an actual expert about this they'd be saying: "yeah yeah, we know all this rudimentary stuff already, stop wasting my time". It would be exciting if this was a new result.

For us not to be talking past each other, you need to focus discussion on the probability of erasing a single specific bit from the system [...] The expected cost of erasure is completely irrelevant, we are talking specifically and only about the probability of erasure success, for a single specific bit, for a single specific compute cycle of a machine (although we can assume thermal equilibrium).

Yes, I'm indeed claiming to get high reliability erasures every time we do a bit erase operation. The error probabilities are , etc. The expectation is over energy cost, so the operation sometimes dissipates more energy and sometimes less, but the bit is always erased (unless you're in one of the unlucky cases, but the chance of that is ). If you look at the graph, you'll see that the probability trace for successful erasure asymptotes to 1. (At the point where the graph ends, it's reached .)

You can't just magically reliably raise/lower the energy wall, as reliably lowering the 40kT energy wall is obviously now just moving a new bit, which you need to either 1. keep track of (reversible computing), or 2. forget/erase and thus pay 40kT.

This isn't obvious at all to me, so I think you'll need to explain your objection here. Raising and lowering the energies of states isn't associated with any extra information: It happens exactly the same way on every bit erase cycle. There's no extra information produced that needs to be erased. If there were, then it would also prove [LW · GW] Landauer's analysis of the double well is incorrect, since Landauer needs to lift the energy of the 1 state so that the particle will diffuse over to the 0 state. If I looked at what the particle was doing before deciding how to move the wall, that would be a different story, but both Landauer and I are moving the state energies around in exactly the same way each time, so we don't have to worry about creating an infinite recursive turtle stack of extra bits to erase.

Only at zero temp and or with zero reliability. You give an arbitrary example of a Hamiltonian where you've already assumed your conclusion. Let's focus this discussion exclusively on classical physics/systems, unless you believe your argument only applies due to QM effects.

My argument does apply in the classical case too. See here [LW · GW] to cover moving a particle between states for a very low energy cost even in an environment with lots of thermal noise. To translate that into moving a bit, do the same thing for two states in parallel (with energy wall in between for high reliability).

Erasure is just a bit moving outside of the computational system, but otherwise is exactly the same as moving a bit inside the system.

Yes, but the analogy says that if I move the bit from one part of the system to the other, I have to give the associated energy cost to that part of the system. I don't have to dissipate it to the environment. I only have to dissipate energy to the environment if I'm trying to send a bit to the environment. Hence why erasure is costly while bit movement between internal computer parts is not. (Speaking in terms of the fundamental thermodynamic limits, of course.)

(There's a relatively minor technical point here, which is that parts of the computer are generally not assumed to be in thermal equilibrium, though the environment is. So the parts of the computer can contain unused negentropy inside them, and they can use that to accept a bit, rather forcing me to send them energy. It's not a free lunch, of course, a resource is still used, namely the negentropy, so the importance of the point is relatively minor.)

Replies from: jacob_cannell↑ comment by jacob_cannell · 2023-05-08T17:15:02.312Z · LW(p) · GW(p)

I've looked over your math/code a bit and I don't understand this in particular:

""" diagonal elements of connection matrix should be 0 """

And looking at your steady state condition it looks like it converges to a transition probability of 1 if the energy levels are the same? Which doesn't seem right, the convergence should be only to 0.5 - if we consider two possible locations/states the particle could be in, and they have the same energy, at equilibrium they have the same probability. Perhaps your connections are symmetric and normalization makes it equivalent, but that isn't clear if so.

Regardless, the code comment above seems to indicate you are not allowing the particle to stay in its starting state, which seems probably fatal.

Erasure is just a bit moving outside of the computational system, but otherwise is exactly the same as moving a bit inside the system.

Yes, but the analogy says that if I move the bit from one part of the system to the other, I have to give the associated energy cost to that part of the system. I don't have to dissipate it to the environment. I only have to dissipate energy to the environment if I'm trying to send a bit to the environment.

If there are two locations/states for the particle: A and B, then the probability distribution converges to the Boltzmann based on relative energy levels. If there is no energy gap between A and B, the probability is 50:50.

If we assume that both A and B are stable states whp then they must be lower potential energy favored states with a gap of vs nearby background states. Moving the particle up and out of one such well and into the other simply requires energy .

Any such potential energy wells are only created by yet other particles which themselves must also be in equally stable low energy configurations for the well to be stable, and so the argument applies recursively - manipulating the wells is just equivalent to moving particles out of wells.

Consider a simple minimal example of a computer built from dominoes. The starting up "0" state of a domino must have energy to be stable against noise so (imagine a continuous windstorm/earthquake). The down "1" state is typically even more stable with energy ~. A small force imparting on order to an input domino can then start a propagating flow of computation which results in perhaps half of the dominoes in the lowest energy 1 state.

The energy cost of one such cycle is very low as it's just a constant on order , but only because it hasn't yet erased any bits. In this system clearing the computational garbage by erasing all the 1 bits requires on order ~ per bit for moving each 1 bit back to the starting 0 state, and in general it requires at least to move a particle/bit out of some static low energy local minima (well) which must be lower than the background for stability.

Reversible computers avoid erasure costs by some combination of propagating bits ballistically through moving particles/waves and/or logically reversing time and uncomputing bits, but they don't reduce the energy cost of reliable erasure.

Replies from: DaemonicSigil, M. Y. Zuo↑ comment by DaemonicSigil · 2023-05-09T05:42:34.450Z · LW(p) · GW(p)

The connection matrix is symmetric and has entries that are 0 or 1, and it represents a graph (you can put in entries that are fractions to represent a case where states are partially connected (i.e. connected but the maximum flow rate between them is lower than )). Non-zero diagonal entries represent a state being connected to itself, which is kind of pointless since the flow is going to be 0 in any case.

And looking at your steady state condition it looks like it converges to a transition probability of 1 if the energy levels are the same?

For , the steady state condition is:

The normalized distribution which satisfies this requirement is .

Regardless, the code comment above seems to indicate you are not allowing the particle to stay in its starting state, which seems probably fatal.

It's a continuous-time differential equation, so the outward flow of probability is continuous in the model. In a small timestep , most particles are not going to change which state they're in.

Any such potential energy wells are only created by yet other particles which themselves must also be in equally stable low energy configurations for the well to be stable, and so the argument applies recursively - manipulating the wells is just equivalent to moving particles out of wells.

I ask again: If any manipulation of wells recursively creates another bit that we have to erase, then what's the difference between my well manipulations and Landauer's? Or for that matter, your domino resetting device? Why doesn't you model imply that all 3 get mired in an eternal cycle of "create a bit to erase a bit"?

Our initial particle has an entropy associated with it, since it has two possible states, and these allow it to represent a bit. The other particles producing the energy wells do not need to have any entropy, they can be sitting in a single state at the bottom of their own well with high energy walls all around. Because those parts of the device require no information, they can operate ballistically. To take a concrete model, we can build a big wheel with charges glued to various points along its circumference. The wheel spins and charges move past the set of states where we're keeping our particle, and depending on which charge is close, the energy of each states is raised and lowered. If we've designed a good bearing for the wheel, then the slowing of the wheel will be entirely due to its interaction with the particle.

Replies from: jacob_cannell↑ comment by jacob_cannell · 2023-05-09T07:59:33.603Z · LW(p) · GW(p)

It's a continuous-time differential equation, so the outward flow of probability is continuous in the model. In a small timestep , most particles are not going to change which state they're in.

This still seem fatal, as it implies that any arbitrarily tiny transition energy will converge to the bit exiting the bit well, which obviously is not physically correct (and contradicts the claim that the bit well was stable against noise). Once you include the required diagonals I think your mechanism no longer works.

I ask again: If any manipulation of wells recursively creates another bit that we have to erase, then what's the difference between my well manipulations and Landauer's? Or for that matter, your domino resetting device? Why doesn't you model imply that all 3 get mired in an eternal cycle of "create a bit to erase a bit"?

The difference is the domino resetting device (or any practical actual current CMOS computer, or bio neural network etc) erases/thermalizes bits, so it doesn't have to continue keeping track of them.

None of the published reversible computers designs I've seen claim to erase reliably for much less than 1eV btw. That's not the focus at all, instead they save energy by eliminating unnecessary erasures.

If we've designed a good bearing for the wheel, then the slowing of the wheel will be entirely due to its interaction with the particle.

Therein is the problem as this slowing of the wheel will need be about per bit to reliably eject bits.

To actually reliably erase all the bits, the wheel needs to eject them from their low energy wells, which thus requires per bit, because each bit had to be in a well with energy that much lower than the neighborhood. So to the extent your wheel works it's just equivalent to the domino erasure mechanism (which could be having all the dominoes linked to axle rods and levers to a motor etc).

You could try to use the wheel to implement a temporary well, but that doesn't work because it is not sufficient to simply lower the well as that doesn't give the particle enough energy to move out of its location reliably.

You can move bits around ballistically for free (within some limits on not being able to aim them very precisely), but again moving bits around is not erasing them.

Replies from: DaemonicSigil↑ comment by DaemonicSigil · 2023-05-10T08:35:51.974Z · LW(p) · GW(p)

This still seem fatal, as it implies that any arbitrarily tiny transition energy will converge to the bit exiting the bit well, rather than staying put against noise until the erasure signal, which contradicts the model.

I'm not 100% sure what you're trying to say here, so forgive me if this is a wrong interpretation. The point is that a given energy wall height gives you a certain length of time in which to do computations. The flow out of a state can be suppressed exponentially by energy wall height, so in practice this can be a really long time. Also, flow out of a state is compensated by flow back into that state from other states so that overall things converge to the Boltzmann distribution. This isn't relevant to how long it takes a bit to thermalize, though.

Also, "erasure signals" aren't a thing in my model, see below.

The difference is the domino resetting device (or any practical actual current CMOS computer, or bio neural network etc) erases/thermalizes bits, so it doesn't have to continue keeping track of them.

My procedure also erases/thermalizes bits so that they no longer need to be tracked, that's the entire point of it. I think I'm having a hard time understanding what you're getting at here.

This interaction now just moves a bit around, and we now need a full model of the wheel to see where that bit went. Energy/information is conserved and can't be destroyed. Any time you lose track of it in your model you have erased/thermalized it, so I can just keep asking where the ~1eV bit went.

The particle jumps around between the states because it has some coupling to the environment, and the environment is full of thermal noise / fluctuations. Because physics is reversible, we could in theory chase the bit into the environment: In principle you can always keep track of where the bit went (at least if you're Laplace's demon). But let's say that once we've put the bit into the environment, that's good enough.

But we still used some energy to do that. We drained some energy from our battery to accomplish this task (maybe it's a conventional battery or capacitor, or maybe it's something more exotic, like a spinning flywheel).

The next thing you might worry about is that I cheated at the bit-erasing task by allowing some information to escape to the battery instead of the environment. Obviously there's some information going into the environment, since coupling to the environment is the entire reason the particle is jumping between states. But maybe the battery is picking up most of the information. That would be bad! But it seems like it could happen, since the amount of energy drawn by the device is random, and then that introduces randomness into the level of energy reserves left in the battery.

First, a meditation: Consider the double slit experiment. Momentum is conserved, so if the photon is refracted through the lower slit and heads back up to hit the detector, some downward momentum must have been transferred to the card with the slits. Likewise, if the photon goes through the upper slit, the card must have gained some upward momentum. Quantum mechanics is very sensitive to leaked information. If even a single bit of information (which slit the photon went though) were leaked to the environment, then the interference pattern would disappear. Why doesn't the "which way" information encoded in the momentum of the card have this effect?

Anyways, think about erasing a lot of bits. By the central limit theorem, the ratio of variance in the amount of energy left in the battery relative to the number of bits erased goes to zero.

Or say we already have a lot of thermal uncertainty about the amount of energy stored in the battery. Say it's a Gaussian distribution. This has entropy:

If performing 1 erasure costs a small quantity of energy with similarly small variance , then the change in entropy of the battery is going to look like:

The larger the uncertainty in battery energy, the less entropy a little additional information is going to carry.

In any case, note that Landauer's double-well erase procedure also has an energy cost that varies randomly. If the bit happens to be a 1, then it draws ~1eV, if the bit happens to be a 0 then it's free. Neither Landauer nor I are secretly depending on being able to shuffle information into the battery, though.

Your device also no longer makes sense to me - how does it erase a bit (or not) on demand from an incoming wire signal? As the wheel now controls the bit well and the erasure, and the wheel isn't controlled by the input signal, the device no longer works (at least not without yet more complexity).

My model (obviously other models are possible) of a reversible computer looks like a reversible circuit with some small number of irreversible gates. Those gates produce bits that need to be erased on every clock tick (the clock can be synced with the spinning flywheel). In order to do long serial computations, the rest of the output bits are looped back around to the input of the circuit so that more computation can be done on them in the next clock tick. So the flywheel bit erasing device just needs to be able to erase the bits coming out of the circuit on each clock tick, and there's always the same number of them. No need for an extra separate "erasure on demand" signal / feature.

You can move bits around ballistically for free (within some limits on not being able to aim them very precisely), but again moving bits around is not erasing them.

Yes, I also agree that moving bits around is not erasing them.

Replies from: jacob_cannell↑ comment by jacob_cannell · 2023-05-10T19:27:04.720Z · LW(p) · GW(p)

Also, "erasure signals" aren't a thing in my model, see below.

You need an erasure signal to erase a bit.

Let's step back for a second and more formally define "erasure" and "bit".

I see several meanings of "bit":

- A True Bit - A bit in computer RAM/registers etc - read/write controlled by arbitrary signal bits on signal lines.

- A signal line bit propagating on a wire

- A temporary 'bit' which is not necessary for 1 or 2 above, and thus isn't really an irreducible bit

I believe to the extent your spinning wheel thing works, it is only 'erasing' type 3 non-bits, which is irrelevant. You need to show erasure of at least type-2, and really type-1 bits.

A reversible computer can already (in simplified theory at least) avoid all type 2 and 3 erasures completely, so it's irrelevant how much energy they use. You need to show reliable type 1 bit erasure, or you should change your post to add disclaimers that your claimed 'erasure' doesn't actually work for bits in RAM, and can't actually reduce energy use for a reversible computer.

The entire wheel apparatus in your scheme is just a complex way of reversible propagation of bits on signal lines. We could just simplify that down to a single particle propagating on the signal line.

Yes, I also agree that moving bits around is not erasing them.

Right, and your flywheel thing so far is just moving an (irreducible) bit.

Replies from: DaemonicSigil↑ comment by DaemonicSigil · 2023-05-12T06:30:09.990Z · LW(p) · GW(p)

Generally, I don't see a problem with using my procedure to erase a register in a computer. Seems like it would work fine for that, just like Landauer's double well procedure would. It would also work fine to erase data directly in RAM, though I personally don't think anyone's going to ever build a reversible computer that works that way. Anyways, what I'm saying is my procedure would suffice to erase a Cannellian Type-1 bit.

The point of the wheel example is that you claimed that: "manipulating the wells is just equivalent to moving particles out of wells". The wheel device provides a counterexample, since it changes the energy levels of the various states just by spinning. (It's also an example of a situation where an "erasure signal" is not necessary to erase a bit, since it erases a bit on every clock tick.)

But I'm in general totally fine with having an erasure signal. When I say it's not in my model, I mean that the basic erasure procedure I describe is independent of that kind of external detail.

Right, and your flywheel thing so far is just moving an (irreducible) bit.

Where is it moving to in your opinion? I provided you an argument that the answer isn't the wheel. What's left other than the environment?

Just also replying to a chunk of your earlier comment:

To actually reliably erase all the bits, the wheel needs to eject them from their low energy wells, which thus requires ΔE≈40KBT per bit, because each bit had to be in a well with energy that much lower than the neighborhood. So to the extent your wheel works it's just equivalent to the domino erasure mechanism (which could be having all the dominoes linked to axle rods and levers to a motor etc).

You could try to use the wheel to implement a temporary well, but that doesn't work because it is not sufficient to simply lower the well as that doesn't give the particle enough energy to move out of its location reliably.

After lowering the wall between the two states, I do then actually raise up one of the states high enough to kick the particle out of it with high reliability. The reason this doesn't cost the full is that as the energy of the state gets higher, the probability of the particle occupying that state is already decreasing. I only pay any energy cost if the particle happens to be occupying the state I'm currently lifting. So you do an integral to get the expected energy cost of kicking this particle all the way out, and it comes out to way less than . In fact, it comes out to exactly if we're moving so slowly that the particle is always distributed over states according to the Boltzmann distribution. But if you want to finish quickly, it comes out to a little bit more.

Replies from: jacob_cannell↑ comment by jacob_cannell · 2023-05-15T13:55:26.813Z · LW(p) · GW(p)

Generally, I don't see a problem with using my procedure to erase a register in a computer. Seems like it would work fine for that, just like Landauer's double well procedure would. It would also work fine to erase data directly in RAM,

You haven't described any mechanism to do this yet.

Your flywheel thing (to the extent I now understand it), just seems to be equivalent to representing a signal bit moving down a wire, which could just be simplified to a single minimal particle. You haven't described anything that is capable of true bit erasure.

True bit erasure would require having a control signal which then controls the wheel, or something like that.

Replies from: DaemonicSigil↑ comment by DaemonicSigil · 2023-05-16T01:42:53.338Z · LW(p) · GW(p)

You haven't described any mechanism to do this yet.

However you're imagining Landauer's double well procedure raises the energy level of one of the wells conditional on a control signal, you should be able to imagine my procedure being implemented in a similar way. What obstacle do you run into when you try to do this?

Just to give an explicit example, though, you can imagine the control signal being used to determine the position of the particle that encodes the bit. You have some states close to the wheel, and some far away. Far away states aren't affected by the spinning of the wheel and if the particle is in the far states rather than the near states, then the wheel isn't slowed down and the bit isn't erased.

Choosing whether the particle goes into the near states or the far states conditional on a control bit is a reversible process, of course, so that completes the implementation.

Replies from: jacob_cannell↑ comment by jacob_cannell · 2023-05-16T03:52:54.017Z · LW(p) · GW(p)

However you're imagining Landauer's double well procedure raises the energy level of one of the wells conditional on a control signal, you should be able to imagine my procedure being implemented in a similar way.

I do not imagine it this way, as trying to manipulate the barrier just punts the issue. It's simpler to imagine static wells which the particle/bit moves between, as in dominoes.

Just to give an explicit example, though, you can imagine the control signal being used to determine the position of the particle that encodes the bit.

This is not a true memory bit storage mechanism unless the particle is in some sort of loop in a low energy potential well about 1eV lower than the background. The control signal could come at any time or never and the bit must be stable for very long periods of time.

"Determining the position of the particle" requires deflecting it into 1 of 2 paths with high reliability, which requires energy on order 1eV. If you can reliably alter the particle's path - you no longer need the wheel, as one path can just exit the device or thermalize to erase. Any attempt to recover the energy of the erased particle is a dead end which just punts the problem to a new mechanism for erasure.

The gate which is formed from this process has 2 unique bit inputs (memory bit, control wire bit) and only 1 unique bit output (the control wire bit), as the other is erased. It is fundamentally irreversible - it doesn't matter how many complex additional mechanisms (flywheels) you tack on, they are just distractions.

Replies from: DaemonicSigil↑ comment by DaemonicSigil · 2023-05-16T07:03:02.945Z · LW(p) · GW(p)

I do not imagine it this way, as trying to manipulate the barrier just punts the issue. It's simpler to imagine static wells which the particle/bit moves between, as in dominoes.

A machine which turns a domino right side up is in fact raising the energy of the "domino tipped over" state. If the domino stayed tipped over, the mechanical arm pushing the domino up would at some point be intersecting with the domino, a state of very high energy indeed. Unless you're imagining some other way for the domino resetter to function?

This is not a true memory bit storage mechanism unless the particle is in some sort of loop in a low energy potential well about 1eV lower than the background. [...] "Determining the position of the particle" requires deflecting it into 1 of 2 paths with high reliability, which requires energy on order 1eV.

Yes, you need to be 1eV below extraneous nearby states to be doing anything sensible at all. This is an energy difference and not an energy cost. You're not dissipating any energy because the particle is not occupying those states. Determining the position of the particle based on a control bit is a reversible operation. If you want to argue that it must cost 1eV, you need to explain either why the operation is not actually reversible, or why it must have a thermodynamically unavoidable energy cost anyways, despite being reversible.

The control signal could come at any time or never and the bit must be stable for very long periods of time.

So the particle is in the far away states by default and the control signal kicks it into the nearby states when the bit has to be erased.

If you can reliably alter the particle's path - you no longer need the wheel, as one path can just exit the device or thermalize to erase. Any attempt to recover the energy of the erased particle is a dead end which just punts the problem to a new mechanism for erasure.

I'm certainly not saying the wheel part is necessary, it's just a particular example I gave. I do think it's allowed by thermodynamics to reliably and costlessly alter the particle's path in ways that are reversible. I'm not trying to recover the energy from the erased bit (I assume you meant "bit" here, as particles aren't erased). I'm spending the (on average), and then that's the end of it.

The gate which is formed from this process has 2 unique bit inputs (memory bit, control wire bit) and only 1 unique bit output (the control wire bit), as the other is erased. It is fundamentally irreversible - it doesn't matter how many complex additional mechanisms (flywheels) you tack on, they are just distractions.

No, the whole point of controllable erasures is that sometimes the bit isn't erased. So we need 2 outputs, one for the control wire as you say, and one for the original bit since sometimes the erasure signal is 0 and so the bit isn't erased. The gate is still irreversible, of course, but it seems like your mental model of the situation here is quite different than mine, so I'm pointing out the difference in the hopes of some clarification.

↑ comment by M. Y. Zuo · 2023-05-08T21:38:58.802Z · LW(p) · GW(p)

Can you explain why an ideal reversible device avoiding erasure costs wouldn't reduce the energy cost of reliable erasure?

The Mike Frank quote mentioned previously seems to limit itself to irreversible devices :

Replies from: jacob_cannellAs long as it’s a reliable, irreversible device, it will have to dissipate at least on the order of 1 electron volt (about 40 kT) per bit-operation in a room-temperature envronment, full stop, end of story.

↑ comment by jacob_cannell · 2023-05-08T22:01:08.805Z · LW(p) · GW(p)

Erasure is not reversible so this is a non question. An irreversible computer is then one that uses irreversible logic and gates at all levels, so it's erasing bits everywhere just to compute everything, even though that is not required in theory. So a reversible computer doesn't save energy by reducing the cost of each bit erasure, it saves energy by reducing the large quantity of unnecessary bit erasures. (But that turns out to be very hard to do and comes at a price)

Replies from: joseph-van-name↑ comment by Joseph Van Name (joseph-van-name) · 2023-05-10T22:15:15.229Z · LW(p) · GW(p)

It may be possible to still use reversible computation to even save the amount of energy required per bit deletion. It will be possible to use reversible computation to transform an unreliable but energy efficient bit deletion device into a reliable and energy efficient bit deletion device. We first need to take the random garbage data and send it through the efficient but unreliable bit deletion process. For example, if we have 10^9 bits of random data, then after the bit deletion process, 90% of those bits will be 1's and the rest will be 0's. One can then send this data through a data compression algorithm, and after compression, one will have about 0.47*10^9 bits of random data.

But I do not know if this is the best way of getting the energy efficiency of deletion close to Landauer's limit.

Replies from: jacob_cannell↑ comment by jacob_cannell · 2023-05-11T01:16:20.660Z · LW(p) · GW(p)

We first need to take the random garbage data and send it through the efficient but unreliable bit deletion process.

I don't actually see a way of doing this. The problem is that storing a bit reliably requires that it is in a deep low energy potential well, lower by about 1eV than the local neighborhood.

So if you try to use only 0.1 eV to eject the bit, you only get a tiny probability of success, and you need to apply about 1eV for 50% success, > 1eV to eject it reliably, etc.

So the problem is that a reliable bit is - by requirement - one that requires large energy on order 1eV to move out of it's stable low energy local minimum (as otherwise it wouldn't persist reliably against noise).

Replies from: joseph-van-name↑ comment by Joseph Van Name (joseph-van-name) · 2023-05-11T15:51:52.220Z · LW(p) · GW(p)

I am a mathematician and not a physicist, so I am not confident in my ability to look for weaknesses in my own reasoning about physics.

It seems like we need to be able to move a bit from an irreversible low reliability high efficiency mode with a low barrier to a reversible mode with a high barrier where we can perform reliable reversible computation. It looks like we need a way of reversibly lowering the barrier when we need to perform the unreliable deletion process followed by reversibly raising the barrier when we need to perform the reversible operations. Is there a way to reversibly raise and lower the barrier from 0.1 eV to 1eV or higher?

I am pretty sure that it should not be too difficult to make a good enough simulation of this to verify that everything works out correctly and is reversible except for the bit deletion.

Replies from: jacob_cannell↑ comment by jacob_cannell · 2023-05-15T13:57:22.497Z · LW(p) · GW(p)

Lowering the barrier just punts the problem to a new system component. See the long comment exchange with DaemonicSignal, where he ends up using a flywheel mechanism which is only capable of erasing non-bits (ie it doesn't implement actual bit erasure).

Replies from: joseph-van-name↑ comment by Joseph Van Name (joseph-van-name) · 2023-05-15T17:24:32.900Z · LW(p) · GW(p)

Yes. A flywheel seems like a reasonable idea for a mechanism for raising and lowering a potential barrier. We just need to make sure that the flywheel is completely reversible. I was thinking about having a flywheel that hooks up to cams and followers that can mechanically raise and lower a physical barrier. This is probably an engineering challenge that will be about as difficult as the problem of making reversible computing hardware in the first place. It therefore seems like there are at least two problems of reversible computation that need to be overcome:

- The most obvious problem is the problem of doing energy efficient purely reversible calculations.

- The other problem is the problem of deleting a large quantity of bits in a way that does not consume much more than k*T*ln(2) energy per bit deleted. This will likely include the problem of reversibly raising and lowering an energy barrier so that we have a high energy barrier for reversible operations and so that we have a low energy barrier for bit deletions.

It seems like the few people who are working on reversible computation are focused on (1) while I have not heard anything on (2). I am a mathematician and not a researcher in these kinds of mechanisms, so I should end this post by saying that more research on this topic is needed. I would like for there to be research backed up by simulations that show that k*T*ln(2) per bit deletion is possible. I would be satisfied if these simulations were classical, mechanical simulations but which are reversible (you can run the simulation in reverse) and they incorporate thermal noise and the laws of thermodynamics.

5/21/2023 I just checked the 1970 paper 'Minimal Energy Dissipation in Logic' by Keyes and Landauer, and that gives another way of getting the energy efficiency of computation close to k*T*ln(2). This paper also uses the raising and lowering of a potential barrier.

comment by Joseph Van Name (joseph-van-name) · 2023-05-10T20:24:55.546Z · LW(p) · GW(p)

Reversible computation will not only make the energy efficiency of computation far below Landauer's limit , but reversible computation can also take an unreliable but highly energy efficient bit deletion process and turn it into a very reliable and energy efficient energy efficient process. Let me explain.

Suppose that one has bits of garbage information that one wants to delete. We can safely assume that about half of these bits are 0's and the other half of these bits are 1's. Now let . Suppose that we have an unreliable bit deletion process where after the process of bit deletion, about of these bits will be 's and the rest of the bits will be 's. Then these data will have a Shannon entropy of . This means that one will be able to run these data through a reversible compression algorithm that consumes a negligible amount of energy thanks to energy efficient reversible computing, and after compression, the data will be about bits long, and one would have about bits of reliably deleted information. Reversible computation (in particular, reversible data compression algorithms) can even bring the energy efficiency of irreversible bit deletions down to per bit deleted.

comment by qjh (juehang) · 2023-05-10T08:53:25.233Z · LW(p) · GW(p)

I don't buy your ~kT argument. You can make the temperature ratio arbitrarily large, and hence the energy arbitrarily small, as far as I understand your argument.

With your model, I don't understand why the energy 'generated' when swapping isn't thermalised (lost to heat). When you drop the energy of the destination state and the particle moves from your origin to your destination state, the energy 'generated' seems analogous to that from bit erasure; after all, bit erasure is moving a particle between states (50% of the time). If you have a mechanism for harvesting energy, you can always use it.

I think there's a more thermodynamically-sound ~kT argument. When you zero a bit by raising energy to cross a ~nkT barrier, if the bit is in 1, a ~nkT photon (or whatever other boson) is emitted. The carnot efficiency for harnessing this nkT energy source is (1-1/n), so only ~kT energy is lost.

Replies from: DaemonicSigil↑ comment by DaemonicSigil · 2023-05-10T15:56:40.970Z · LW(p) · GW(p)

I don't buy your ~kT argument. You can make the temperature ratio arbitrarily large, and hence the energy arbitrarily small, as far as I understand your argument.

There's some intrinsic energy required to erase a bit, . This is true no matter how large the temperature ratio is. The point is that we'd normally be paying some extra energy cost in addition to this in order to get the particle over the energy wall, and it's this that we can make arbitrarily small by changing the temperature ratio.

Overall, I'd say that the "simple straightforward argument" serves mostly as an intuition pump for the idea that a high energy wall is needed only during computation and not erasure. It won't convince a skeptical reader, the computational study is in the post because it's actually pretty important.

With your model, I don't understand why the energy 'generated' when swapping isn't thermalised (lost to heat). When you drop the energy of the destination state and the particle moves from your origin to your destination state, the energy 'generated' seems analogous to that from bit erasure; after all, bit erasure is moving a particle between states (50% of the time). If you have a mechanism for harvesting energy, you can always use it.

Basically, there's two ways the energy of the particle can be lowered:

-

Lower the energy of a state while the particle is sitting in that state. This energy is recaptured by you.

-

Due to interaction with the environment, which has lots of thermal noise, the particle jumps from a high-energy state to a low energy state. This energy is dissipated and not recaptured by you.

When we're lowering the energy of the destination state, we're recapturing energy through mechanism 1. The process is basically the reverse of a Landauer erasure. We're accepting a bit of thermal noise from the environment in exchange for being given a little less than of energy. Then in the reverse phase we spend a little more than to push that bit back into the environment.

Anyways, this model can be shown to still respect the Landauer limit. See this [LW · GW]. If you perform the process very slowly such that the probability distribution over states matches the Boltzmann distribution then the energy cost integral gives .

Replies from: juehang↑ comment by qjh (juehang) · 2023-05-10T21:05:33.356Z · LW(p) · GW(p)

How would you experimentally realise mechanism 1? It still feels like you need an additional mechanism to capture the energy, and it doesn't necessarily seems easier to experimentally realise.

With regards to 2, you don't necessarily need a thermal bath to jump states, right? You can just emit a photon or something. Even in the limit where you can fully harvest energy, thermodynamics is fully preserved. If all the energy is thermalised, you actually cannot necessarily recover Landauer's principle; my understanding is that because of thermodynamics, even if you don't thermalise all of that energy immediately and somehow harvest it, you still can't exceed Landauer's principle.

Replies from: DaemonicSigil↑ comment by DaemonicSigil · 2023-05-12T05:45:50.966Z · LW(p) · GW(p)

How would you experimentally realise mechanism 1? It still feels like you need an additional mechanism to capture the energy, and it doesn't necessarily seems easier to experimentally realise.

One example (probably not the easiest thing to implement in practice) is the charge-patterned wheel that I mentioned in my thread with Jacob. If we're lowing the energy of a state while the particle is in that state, then the potential gradient puts a force on the particle which pulls back on the wheel, increasing its energy.

With regards to 2, you don't necessarily need a thermal bath to jump states, right? You can just emit a photon or something.

If you're emitting into vacuum (no other photons there at all), then that's like having access to a thermal bath of temperature 0. Erasure can be done for arbitrarily low cost under such conditions. If the vacuum has temperature higher than 0, then it has some photons in it and occasionally one of them will come along and knock into our particle. So then we pretty much have a thermal bath again.

comment by Muireall · 2023-05-09T02:01:56.051Z · LW(p) · GW(p)

First note that we only need the energy wall between states to be high while we care about the value of the bit and are doing computations with it. Once it comes time to erase a bit, we no longer care at all about whether the bit gets flipped by thermal noise. We're going to be erasing it in any case.

So consider a computer made out of 2 parts: The CPU and the Radiator. The CPU is a futuristic reversible computer. It's actively cooled to a few Kelvin, which uses some energy, but it's well-insulated enough that the quantity of energy required is relatively small. The CPU isn't responsible for erasing any information. Erasing information at low temperature would be proportionally cheaper, but the energy cost for a refrigerator to pull out the heat produced by the erasures would cancel out the benefit of the low temperature.

Whenever the CPU needs to erase a bit, it sends that bit to the Radiator, which is responsible for doing the erasure. The Radiator is exposed to the room temperature environment, and the heat produced by erasing all the bits flows from the Radiator to the environment. If the environment is at temperature and the CPU is at temperature , then the energy wall required to keep bits stable in the CPU is something like . But lifting a particle by is a perfectly reasonable energy cost, on the same order as the in additional energy needed to fully complete the erasure.

If the CPU is insulated, it needs to be insulated from the Radiator. You've just pushed the need for a barrier between states to a barrier between the CPU and Radiator. If you want to be able to turn that barrier on and off at will, you're back to Landauer's analysis.

Replies from: DaemonicSigil↑ comment by DaemonicSigil · 2023-05-09T06:11:06.812Z · LW(p) · GW(p)

I think this comment is based on a misunderstanding of what an insulator is. Heat isn't a particle that you need to throw up an energy barrier to block. Heat is energy, or more specifically, energy that carries information (in particular random information, aka thermal noise). How well insulated two systems are is a function of how they interact. A good way of thinking of it is that the systems both have a lot of internal degrees of freedom, plus each has some "edge" degrees of freedom. The systems interact with each other through their edge degrees of freedom, and the exact nature of this interaction determines how fast heat can flow between them. A stronger interaction typically translates to a faster rate of heat flow.

(Ironically, if we write down the interaction using a Hamiltonian, scaling up the Hamiltonian both increases the between its eigenvalues, and makes the interaction stronger (i.e. increases the rate of heat flow). So higher translates to having a worse insulator.)

Replies from: Muireall↑ comment by Muireall · 2023-05-09T10:28:35.987Z · LW(p) · GW(p)

What I mean is that "the bit is in the radiator" is another state of the system where a two-level subsystem corresponding to the bit is coupled to a high-temperature bath. There's some transition rate between it and "the bit is in the CPU" determined by the radiator temperature and energy barrier between states. In particular, you need the same kind of energy "wall" as between computational states, except that it needs to be made large compared to the bath temperature to avoid randomly flipping your computational bit and requiring your active cooling to remove more energy.

This seems to be more or less the same thing that jacob_cannell is saying in different words, if that helps. From your responses it seems your internal picture is not commensurate with ours in a number of places, but clarifying this one way or the other would be a step in the right direction.

Replies from: DaemonicSigil↑ comment by DaemonicSigil · 2023-05-10T07:14:12.769Z · LW(p) · GW(p)

Okay, thanks for clarifying. Treating "the bit is in the radiator" as a state of the system still seems a little like a strange way of phrasing it under my model. I would say something like "we have a particle in the CPU and a particle in the Radiator, each of which has 2 states so it can represent a bit. So the combined system has the states being: 00, 01, 10, 11. Moving the bit between the CPU and the Radiator looks like performing the following reversible mapping: 00 -> 00, 01 -> 10, 10 -> 01, 11 -> 11. (i.e. the bits are just swapped). The CPU and Radiator need to be coupled to achieve this mapping. Now to actually have moved a bit from the CPU to the radiator, rather than just have swapped two bits, something needs to be true about the probability distribution over states. In particular, we need the bit in the radiator to be 0 with near certainty. (i.e. P(01) and P(11) are approximately 0) This is the entire reason why the Radiator needs to have a mechanism to erase bits, so it can have a particle that's almost certainly in state 0 and then that negentropy can be sent back to the CPU for use in computations.

So it sounds like you're concerned with the question of "after we erase a bit, how do we keep it from being corrupted by thermal noise before it's sent back to the CPU?" The categories of solution to this would be:

-

Keep it well isolated enough and send it back quick enough after the erasure is completed that there's no opportunity for it to be corrupted.

-

Stick up a high energy wall between the states so the bit can persist for a long time.

Either one of these would be fine from my perspective, I guess you and Jacob would say that we have to go with 2, and if you have that assumption then even the simple straightforward argument depends on being able to manipulate the energy wall without cost. I do think you can manipulate energy walls without cost, though. See my discussion with Jacob, etc.