2014 iterated prisoner's dilemma tournament results

post by tetronian2 · 2014-09-30T21:23:26.914Z · LW · GW · Legacy · 57 comments


Followup to: Announcing the 2014 program equilibrium iterated PD tournament

In August, I announced an iterated prisoner's dilemma tournament in which bots can simulate each other before making a move. Eleven bots were submitted to the tournament. Today, I am pleased to announce the final standings and release the source code and full results.

All of the source code submitted by the competitors and the full results for each match are available here. See here for the full set of rules and tournament code.

Before we get to the final results, here's a quick rundown of the bots that competed:

AnderBot

AnderBot follows a simple tit-for-tat-like algorithm that eschews simulation:

- On the first turn, Cooperate.

- For the next 10 turns, play tit-for-tat.

- For the rest of the game, Defect with 10% probability or Defect if the opposing bot has defected more times than AnderBot.
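As a rough illustration (not AnderBot's actual source), the three rules above could be sketched in Python like this. The function name, the "C"/"D" move encoding, and the list-based histories are all assumptions made for this sketch, and the final fallback to Cooperate is inferred rather than stated in the description.

```python
import random


def anderbot_move(my_moves, opp_moves, rng=random):
    """Hypothetical sketch of AnderBot's rules; histories are lists of "C"/"D"."""
    turn = len(my_moves) + 1  # 1-based index of the turn about to be played

    # Turn 1: always cooperate.
    if turn == 1:
        return "C"

    # Turns 2 through 11 (the "next 10 turns"): plain tit-for-tat.
    if turn <= 11:
        return opp_moves[-1]

    # Rest of the game: defect if the opponent has defected more often than we have...
    if opp_moves.count("D") > my_moves.count("D"):
        return "D"
    # ...or with 10% probability.
    if rng.random() < 0.10:
        return "D"

    # Assumption: cooperate otherwise (the description does not say so explicitly).
    return "C"
```

Passing `rng` as a parameter is only a convenience so that the random branch can be exercised deterministically.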

CheeseBot

CheeseBot tries several simulation-based strategies, and uses the first one that applies to the current situation.

- If the opponent defected on the previous round, Defect.

- If the opponent does not defect against DefectBot, Defect.

- If defecting on this round would lead to CheeseBot being punished for it in future rounds, Cooperate. CheeseBot checks this by simulating future rounds with a simulated history in which it defects on the current round.

- If the opponent is a mirror-like bot, Cooperate. To test whether a bot is mirror-like, CheeseBot simulates the opponent and checks if it defects against DefectBot and cooperates with a bot that plays tit-for-tat but defects against CooperateBot and DefectBot.

- If it is the last round, Cooperate.

- Defect.

DefectBot

Defect!

This bot was submitted publicly by James Miller.

DMRB

DavidMonRoBot (DMRB) takes a more cautious approach to simulating: It spends a few hundred milliseconds simulating its opponent to figure out what the rest of the round will look like given a Cooperate or Defect on the current round, and then picks the outcome that leads to the highest total number of points for DMRB.

This allows DMRB to gauge whether its opponent is "dumb," i.e. does not punish defectors. If the opponent is dumb, DMRB reasons that the best move is to defect; otherwise, if DMRB thinks that its opponent will punish defection, it simply plays tit-for-tat. DMRB spends only a small amount of time simulating so that other simulation-based bots will be less likely to have their simulations time out while simulating DMRB.

Pip

This one is a behemoth. At almost 500 very dense lines of Haskell (including comments containing quotes from "The Quantum Thief"), Pip uses a complex series of simulations to classify the opponent into a large set of defined behaviors, such as "CooperateOnLastInTheFaceOfCooperation", "Uncompromising" and "Extortionist." Then, it builds out a decision tree and selects the outcome that leads to the highest score at the end of the match. If I'm being vague here, it's because the inner workings of Pip are still mostly a mystery to me.

SimHatingTitForTat

SimHatingTitForTat, as the name implies, plays tit-for-tat and attempts to punish bots that use simulation to exploit it, namely by defecting against bots that deviated from tit-for-tat on any previous round. Its strategy is as follows:

- On the first round, Cooperate.

- On every subsequent round, if the opponent played tit-for-tat on all previous rounds, play tit-for-tat. Otherwise, Defect.
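A minimal sketch of the two rules above, assuming moves are encoded as "C"/"D" and each bot sees both move histories as lists. The helper names are hypothetical, and "played tit-for-tat" is interpreted here as: the opponent opened with Cooperate and afterwards always copied SimHatingTitForTat's previous move.

```python
def opponent_played_tft(my_moves, opp_moves):
    """True if the opponent's history is exactly what tit-for-tat would have played."""
    if not opp_moves:
        return True
    # Tit-for-tat opens with Cooperate...
    if opp_moves[0] != "C":
        return False
    # ...and then copies our move from the previous round.
    return all(opp_moves[k] == my_moves[k - 1] for k in range(1, len(opp_moves)))


def sim_hating_tft_move(my_moves, opp_moves):
    """Hypothetical sketch of SimHatingTitForTat's decision rule."""
    if not my_moves:
        return "C"  # first round
    if opponent_played_tft(my_moves, opp_moves):
        return opp_moves[-1]  # ordinary tit-for-tat
    return "D"  # any deviation from tit-for-tat is punished from then on
```

Under this reading, an opponent that cooperates throughout and defects only on the final round is never punished, because the deviation is detected too late to respond to.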

SimpleTFTseerBot

SimpleTFTseer uses a modified version of tit-for-tat that ruthlessly punishes defectors and uses one simulation per round to look at what its opponent will do on the last round.

- If either player defected in a previous round, Defect.

- If it is the last round, Cooperate.

- Otherwise, simulate my opponent playing against me on the last round of this match, assuming that we both Cooperate from the current round until the second-to-last round, and do whatever my opponent does in that scenario. If the simulation does not terminate, Defect.
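The rules above can be sketched as follows. This is an illustration under several assumptions, not the submitted code: the opponent is modelled as a plain function `simulate_opponent(own_moves, other_moves)`, a non-terminating simulation is represented by a hypothetical `SimulationTimeout` exception, and matches are assumed to last 100 rounds (as the comment thread suggests).

```python
class SimulationTimeout(Exception):
    """Hypothetical stand-in for a simulation that does not terminate in time."""


def simple_tft_seer_move(my_moves, opp_moves, simulate_opponent, total_rounds=100):
    """Hypothetical sketch of SimpleTFTseerBot's decision rule."""
    # Rule 1: any past defection by either player triggers permanent defection.
    if "D" in my_moves or "D" in opp_moves:
        return "D"

    # Rule 2: on the last round, cooperate.
    if len(my_moves) + 1 == total_rounds:
        return "C"

    # Rule 3: ask what the opponent would do on the last round if both players
    # cooperate until then. No one has defected so far, so the assumed history
    # is simply all-Cooperate up to the second-to-last round.
    assumed_history = ["C"] * (total_rounds - 1)
    try:
        return simulate_opponent(assumed_history, assumed_history)
    except SimulationTimeout:
        return "D"
```

Because rule 3 copies the opponent's simulated last-round move, a bot that plans a final-round defection is met with Defect from the first round onward.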

SwitchBot

SwitchBot also uses a modified tit-for-tat algorithm:

- On the first turn, Cooperate.

- On the second turn, if my opponent Defected on the previous turn, simulate my opponent playing against mirrorBot, and do whatever my opponent would do in that scenario.

- Otherwise, play tit-for-tat.

TatForTit

TatForTit follows a complex simulation-based strategy:

- On the first round, do whatever the opponent would do against TatForTit on the second round, assuming both bots cooperated on the first round.

- On the second round, if my opponent defected on the previous round, Defect. Otherwise, do whatever my opponent would do against me, assuming they cooperated on the first round.

- On all subsequent turns, if my previous move was not the same as my opponent's move two turns ago, Defect. Otherwise, do whatever my opponent would do against me one turn in the future, assuming TatForTit repeats its previous move on the next turn and the opposing bot cooperates on the next turn.

TwoFacedTit

TwoFacedTit simulates its opponent playing against mirrorBot; if it takes more than 10 milliseconds to respond, TwoFacedTit plays Cooperate. Otherwise, TwoFacedTit plays tit-for-tat and then Defects on the last round.

VOFB

Like SimpleTFTseerBot, VeryOpportunisticFarseerBot (VOFB) uses a very aggressive defection-punishment strategy: If either player defected in a previous round, Defect. Otherwise, VOFB simulates the next round to determine what the opponent will do given a Cooperate or Defect on the current round. If the opponent does not punish defection or the simulation does not terminate, VOFB Defects. On the final round, VOFB uses additional simulations to detect whether its opponent defects against backstabbers, and if not, plays Defect.

Tournament results

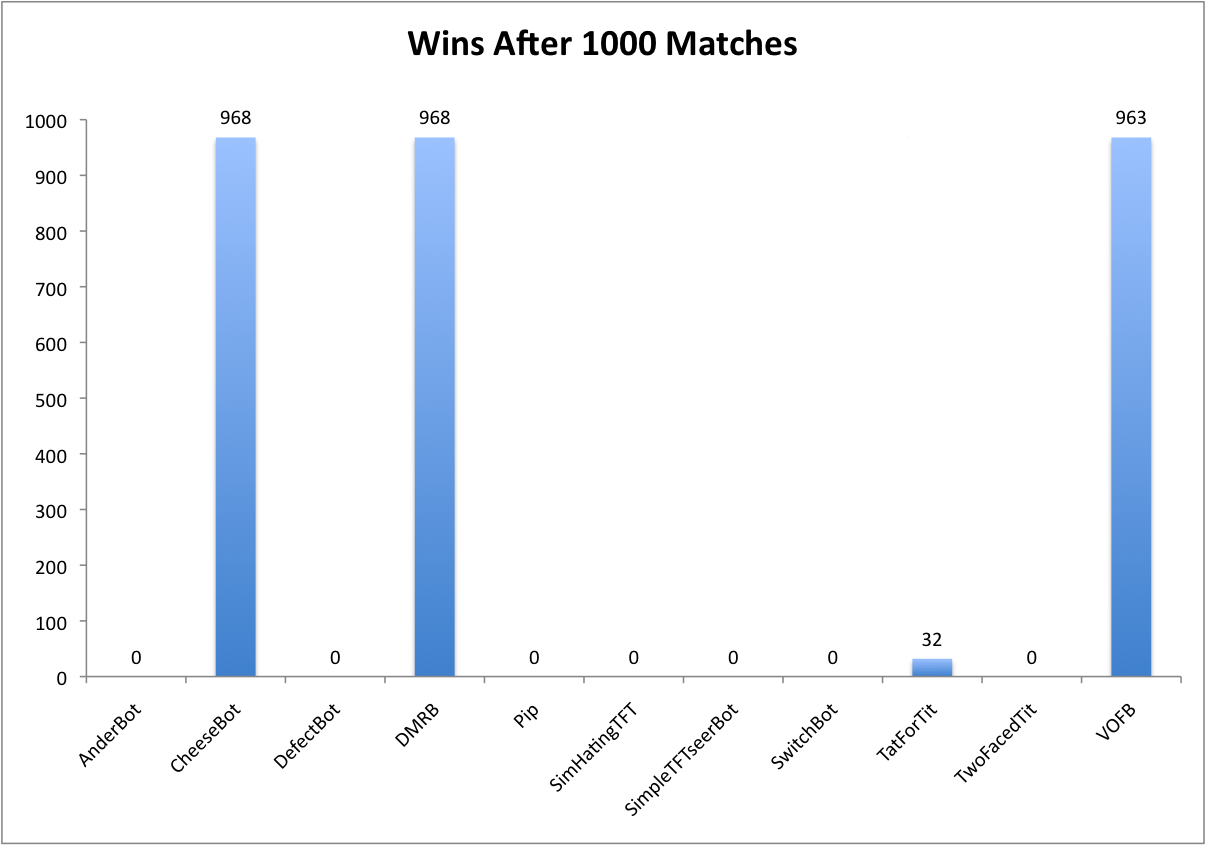

After 1000 round-robin elimination matches, the final standings are:

1st place (tied): CheeseBot and DavidMonRoBot

3rd place: VeryOpportunisticFarseerBot

4th place: TatForTit

The win frequencies for each bot:

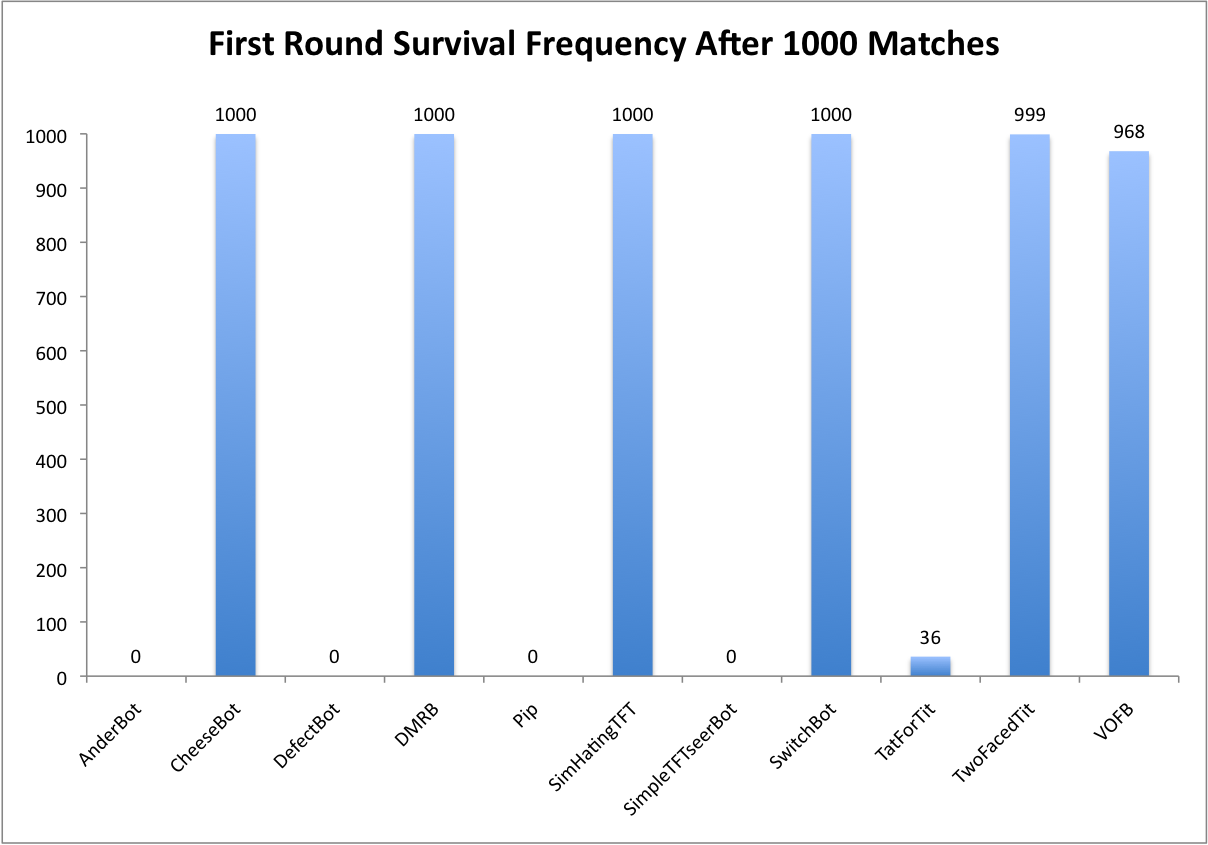

If it were a sporting event, this tournament would not be particularly exciting to watch, as most games were nearly identical from start to finish. The graph below shows each bot's frequency of surviving the first round-robin round:

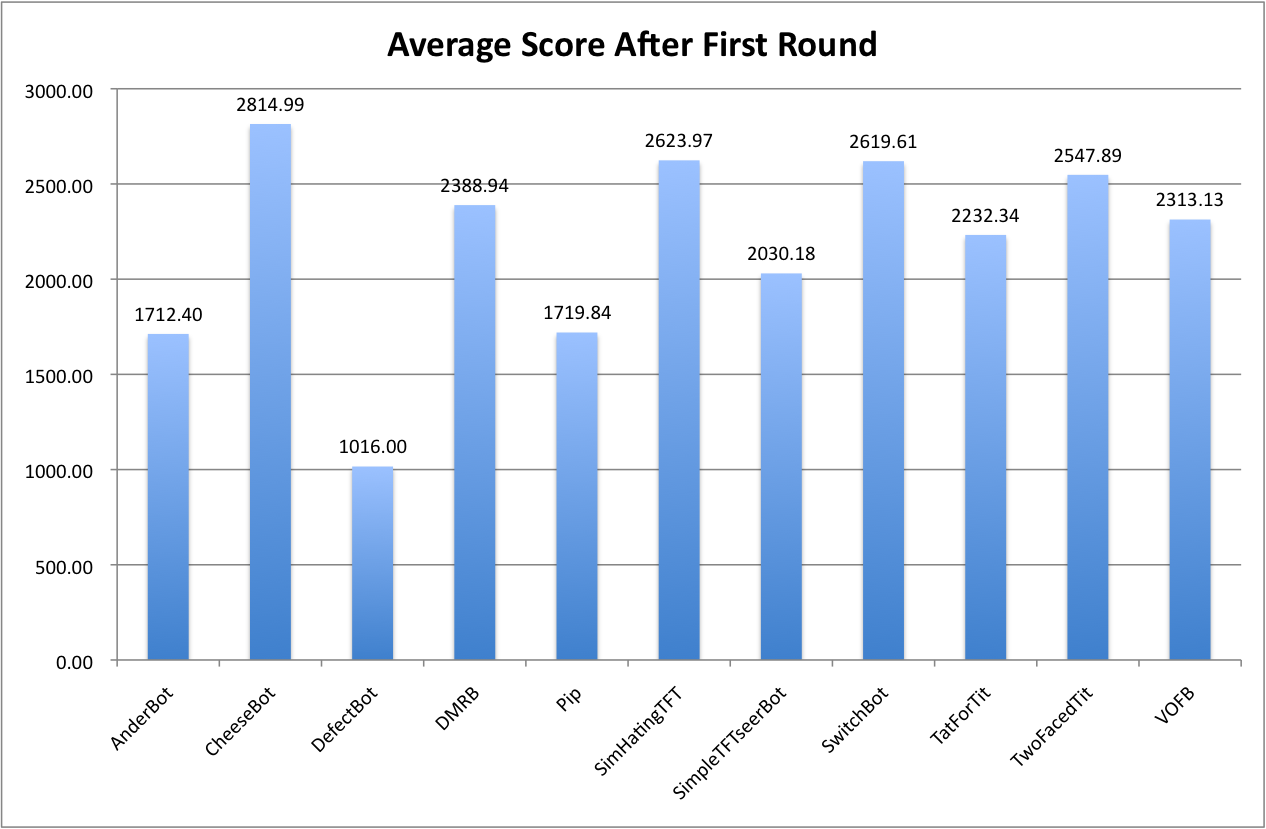

In other words, the same half of the field consistently swept the first round; AnderBot, DefectBot, Pip, and SimpleTFTseerBot never survived to see a second round. In general, this is because high-scoring bots almost always cooperated with each other (with the occasional backstab at the end of the round), and defected against AnderBot, DefectBot, Pip, and SimpleTFTseerBot, as these bots either did not consistently retaliate when defected against or pre-emptively defected, triggering a chain of mutual defections. Interestingly, the bots that continued on to the next round did not do so by a large margin:

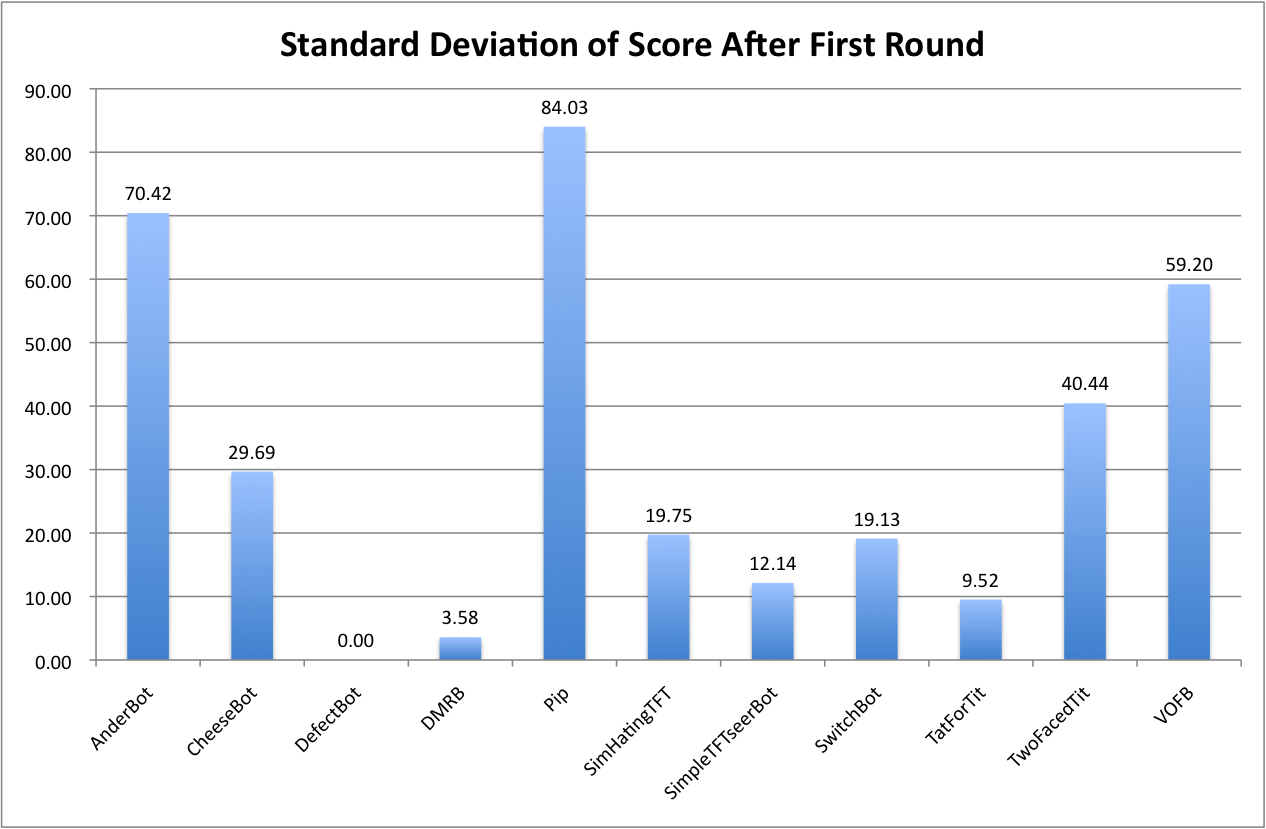

However, the variance in these scores was very low, primarily due to the repeated matchups of mostly-deterministic strategies consistently resulting in the same outcomes.

In addition, all of the matches progressed in one of the following 5 ways (hat tip lackofcheese):

- ALL->[Cheese,DMRB,SimHatingTFT,Switch,TwoFacedTit,VOFB]->[Cheese,DMRB,VOFB] (963 matches)

- (Only CheeseBot, DMRB, and VOFB made it into the final round; all three cooperated with each other for the entire round, resulting in a three-way tie)

- ALL->[Cheese,DMRB,SimHatingTFT,Switch,TatForTit,TwoFacedTit]->[Cheese,DMRB,TatForTit]->[DMRB,TatForTit]->[TatForTit] (32 matches)

- (Only TatForTit and DMRB made it into the final round; both bots cooperated until the second-to-last turns of each matchup, where TatForTit played Defect while DMRB played Cooperate, resulting in a TatForTit victory)

- ALL->[Cheese,DMRB,SimHatingTFT,Switch,TatForTit,TwoFacedTit,VOFB]->[Cheese,DMRB,SimHatingTFT,TwoFacedTit]->[Cheese,DMRB] (3 matches)

- (Only CheeseBot and DMRB made it into the final round; both bots cooperated with each other for the entire round, resulting in a two-way tie)

- ALL->[Cheese,DMRB,SimHatingTFT,Switch,TatForTit,VOFB]->[Cheese,DMRB,SimHatingTFT]->[Cheese,DMRB] (1 match)

- (Only CheeseBot and DMRB made it into the final round; both bots cooperated with each other for the entire round, resulting in a two-way tie)

- ALL->[Cheese,DMRB,SimHatingTFT,Switch,TwoFacedTit,VOFB]->[Cheese,DMRB,SimHatingTFT]->[Cheese,DMRB] (1 match)

- (Only CheeseBot and DMRB made it into the final round; both bots cooperated with each other for the entire round, resulting in a two-way tie)

This suggests that this game does have some kind of equilibrium, because these top three bots use very similar strategies: Simulate my opponent and figure out if defections will be punished; if so, Cooperate, and otherwise, defect. This allows bots following this strategy to always cooperate with each other, consistently providing them with a large number of points in every round, ensuring that they outcompete backstabbing or other aggressive strategies. In this tournament, this allowed the top three bots to add a guaranteed 600 points per round, more than enough to consistently keep them from being eliminated.
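To make the 600-point figure concrete, here is a small scoring sketch. The payoff values (3 each for mutual cooperation, 1 each for mutual defection, 5 and 0 when one side defects) and the 100-turn match length are inferred from scores quoted in the comments rather than taken from the rules page, so treat them as assumptions; the helper names are hypothetical.

```python
from itertools import combinations

ROUNDS = 100  # assumed number of turns per matchup

# Assumed payoffs: (my move, their move) -> (my points, their points).
PAYOFF = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}


def match_score(moves_a, moves_b):
    """Total points for each side given both full move sequences."""
    score_a = score_b = 0
    for a, b in zip(moves_a, moves_b):
        pa, pb = PAYOFF[(a, b)]
        score_a += pa
        score_b += pb
    return score_a, score_b


def round_robin_totals(bots):
    """bots maps a name to a fixed move list; every pair plays one matchup."""
    totals = {name: 0 for name in bots}
    for x, y in combinations(bots, 2):
        sx, sy = match_score(bots[x], bots[y])
        totals[x] += sx
        totals[y] += sy
    return totals


# Three finalists that cooperate with each other on every turn.
finalists = {name: ["C"] * ROUNDS for name in ("CheeseBot", "DMRB", "VOFB")}
totals = round_robin_totals(finalists)
```

Each finalist plays two matchups worth 300 points apiece, which is where the 600 points per round come from under these assumptions.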

The tournament was slightly more interesting (and far more varied) on a matchup-by-matchup basis. Last-round and second-to-last round defections after mutual cooperation were common. TatForTit frequently used this technique against VOFB, CheeseBot, DMRB, and vice versa; this tactic allowed it to steal 32 wins in the final round. Other bots, particularly AnderBot and Pip, behaved very differently between matches. Pip, in particular, sometimes cooperated and sometimes defected for long stretches, and AnderBot's randomness also led to erratic behavior. Ultimately, though, this did not net these bots a large number of points, as their opponents generally defected as soon as they stopped cooperating.

For those interested in the gritty details, I've formatted the output of each match to be human-readable, so you can easily read through the play-by-play of each match (and hopefully get some enjoyment out of it, as well). See the github repo for the full logs.

Postscript

In the course of running the tournament, I received a number of suggestions and ideas for how things could be improved; some of these ideas include:

- Random-length matches instead of fixed-length matches.

- Straight elimination rather than round-robin elimination.

- More "cannon-fodder" bots included in the tournament by default, such as copies of cooperateBot, defectBot, and tit-for-tat.

- A QuickCheck-based test suite that allows bot writers to more easily test properties of their bot while developing, such as "cooperates with cooperateBot" or "cooperates if no one has defected so far."

If anyone would like me to run this tournament again at some unspecified time in the future, with or without modifications, feel free to let me know in the comments. If you would like to fork the project and run it on your own, you are more than welcome to do so.

Many thanks to everyone who participated!

57 comments

Comments sorted by top scores.

comment by V_V · 2014-09-24T09:25:33.058Z · LW(p) · GW(p)

Wow, I made it to the top three!

For the sake of fairness, however, I have to say that I think you made a mistake with my submission: I had first submitted SimpleTFTseer and then asked you to replace it with VeryOpportunisticFarseerBot, but it seems that you have entered both, so I had two bots in the tournament.

SimpleTFTseer did poorly and wasn't designed to advantage VeryOpportunisticFarseerBot, hence this probably didn't make a significant difference, but I think it is right to disclose it.

Anyway, thanks for your effort, it has been fun to participate!

comment by lackofcheese · 2014-09-24T15:13:45.235Z · LW(p) · GW(p)

My primary design criterion was to get the maximum number of points possible vs as many opponents as possible, so I'm particularly pleased that CheeseBot gets the highest average score in the first round by quite a large margin.

I wanted to make my bot "unexploitable" in the sense that no bot could ever bring me below the C/C equilibrium of 300 points without hurting its own score in the process. In this regard my bot still wasn't perfect, but as far as I can see no other bot was able to exploit me in this sense.

If the goal was to maximise IPD performance I would have won outright, but as was noted in the original thread the game we are playing is not a two-player non-zero sum IPD, but rather a much more complicated zero-sum game with many players.

For example, TatForTit defected on the 3rd last turn when playing against me, causing me to lose 5 points at the cost of 2 points. In the IPD this would be a stupid move, but in the tournament setting this is a pretty good deal.

However, this still doesn't explain everything; if my bot worked correctly, it would've defected on the 4th last turn if it anticipated being defected against on the 3rd last turn. I'm not sure why this failed, so I'll have to look into the CheeseBot vs TatForTitBot matchup in greater detail to work out what was going on there.

comment by Eliezer Yudkowsky (Eliezer_Yudkowsky) · 2014-09-28T16:44:58.093Z · LW(p) · GW(p)

Any reason I shouldn't move to Main and Promote?

Replies from: Sniffnoy↑ comment by Sniffnoy · 2014-09-30T23:02:35.149Z · LW(p) · GW(p)

Well, I'm a little annoyed seeing in Main the results of a tournament that was announced in Discussion... (not that I probably would have entered, but...)

Replies from: BloodyShrimp↑ comment by BloodyShrimp · 2014-10-01T02:42:19.732Z · LW(p) · GW(p)

I would've entered! I loved the one-shot PD tournament last summer. In the future, please move popular tournament announcements to Main!

Replies from: Sniffnoy↑ comment by Sniffnoy · 2014-10-01T04:19:53.656Z · LW(p) · GW(p)

Yes, agreed. (I tried to write an entry for the one-shot tournament but never finished; I'd like to see that revisited sometime with a Scheme variant tailored for the contest.)

Replies from: tetronian2↑ comment by tetronian2 · 2014-10-01T20:29:34.591Z · LW(p) · GW(p)

Wow, I had no idea that people missed out on the tournament because I posted it to discussion. I'll keep this in mind for next year. Apologies to Sniffnoy and BloodyShrimp and anyone else who missed the opportunity.

comment by philh · 2014-09-24T10:59:11.373Z · LW(p) · GW(p)

Joint first! \o/

Clarification for DMRB: a "dumb" bot is one that doesn't try to simulate vis opponent. If DMRB plays against a not-dumb opponent, ve plays tit-for-tat. Against a dumb opponent, ve simulates the future game tree a few levels deep to figure out how to get the most points. (To distinguish dumb from not-dumb: assume they're dumb, and run the simulation under a time limit, pitting them against TimeoutBot.)

There were only three dumb bots in this tournament (AnderBot, DefectBot, SimHatingTitForTat), so it seems like TitForTatBot would have done approximately as well. I expected the exploitation code to be mostly useful in early rounds; I hadn't realised that the sample bots wouldn't be taking part.

I considered defecting in the last round, but DMRB was (by design) sufficiently transparent that an opponent could have anticipated me doing that. It looks like if I'd done that, SimpleTFTSeerBot and DMRB would both have lost 300 points in the first round, and DMRB would have been knocked out. (Unless STSB already considered DMRB to time out.) Interestingly, I did defect in the last round against dumb bots, which would have been even easier to anticipate, but it doesn't look like anyone punished me for that.

(edit: actually only 200 points, not 300, but that would still have knocked me out.)

(edit 2: looking at the results, STSB does defect against DMRB, so defecting in the final round wouldn't have cost me anything there. In the final round, STSB is dumb. In the first round, STSB simulates vis opponent playing verself in the final round, and defects unless vis opponent cooperates. Since DMRB defects in that situation, STSB defects in the first round, and we fall into a pit of (D,D). So final-round defection against dumb bots cost me versus STSB, and final-round not-automatic-defection against clever bots didn't gain me anything against ver.)

Replies from: DataPacRat↑ comment by DataPacRat · 2014-09-24T11:10:08.917Z · LW(p) · GW(p)

Against a dumb opponent, ve simulates the future game tree a few levels deep to figure out how to get the most points.

Looking at a few of the runs, it looks like DMRB vs SimHatingTitForTat results in both sides cooperating, until DMRB hits the last turn and defects. Which is fairly close to DMRB simply playing tit-for-tat against SHTFT, even though the latter is considered a "dumb" bot.

Replies from: philh

comment by lackofcheese · 2014-09-28T17:29:53.207Z · LW(p) · GW(p)

Since people seem to be unsure about this, I figured it would be good to make it clear that there are many simple strategies that are provably symmetric Nash Equilibria and cooperate 1v1 vs relatively broad varieties of opponents (unlike a CliqueBot in the non-iterated source-sharing version). Here's one example:

If either you or your opponent has ever defected in the past, Defect.

Otherwise, if it's the 98th round and you simulate that your opponent will defect on the 99th round if you both cooperate on the 98th round, or if you simulate that your opponent will defect on the 100th round if you both cooperate on the 98th and 99th rounds, or if your simulation attempt times out, Defect.

Otherwise, Cooperate.

When playing against this bot, if you defect on the 98th round or earlier, you will be defected against for the rest of the game, and mutual defection loses you at least 4 points which outweighs your 2-point gain for DC. If you cooperate for 98 rounds and then defect on the 99th, 100th, or both, you will be defected against for the last three rounds and hence you will lose points.

Consequently, the only way to maximise your reward vs this bot is to Cooperate on every round, which gets you 300 points, so this bot is unexploitable in the same way that PrudentBot is in the non-iterated source-sharing PD. Also, this bot clearly cooperates for all 100 rounds if it plays against itself.
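The arithmetic behind this argument can be checked with a short sketch. It assumes the payoffs implied by the thread (3 per round for mutual cooperation, 5 for defecting against a cooperator, 1 for mutual defection), 100 rounds, and a deviator who switches to Defect for good once the punishment starts, which is the deviator's best case against permanent defection. The function names are hypothetical.

```python
ROUNDS = 100
R, T, P = 3, 5, 1  # assumed payoffs: mutual C, D against C, mutual D


def payoff_if_first_defect_at(k):
    """Deviator cooperates before round k, defects at round k (k <= 98),
    and then faces mutual defection for the remaining rounds."""
    return R * (k - 1) + T + P * (ROUNDS - k)


def payoff_if_late_defection_planned():
    """Deviator plans to defect on round 99 or 100. The bot foresees this at
    round 98 and defects on rounds 98 to 100, so the deviator's best reply
    is mutual defection on those three rounds."""
    return R * 97 + P * 3


full_cooperation = R * ROUNDS
best_early = max(payoff_if_first_defect_at(k) for k in range(1, 99))
best_late = payoff_if_late_defection_planned()

print(full_cooperation, best_early, best_late)
```

Under these assumptions every deviation scores below the 300 points earned by cooperating throughout, with the best deviation being a first defection on round 98.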

The basic starting point for this type of bot is to punish defections in past rounds via simple Tit-for-Tat-like methods. This only leaves one basic flaw - Tit-for-Tat can be exploited via defection on the last round. The solution to this problem is to patch this hole up by simulating future rounds (with the same matchup) and punishing them in advance. Additionally, in any matchup between two bots that use this general principle, the recursive simulation process is guaranteed to eventually hit a base case.

I think there are quite a large number of distinguishable cooperative symmetric equilibrium strategies, but I think they would all follow this general principle of punishing past defections as well as defections detected in simulated future rounds.

I didn't go for something quite this simple because the tournament setup means the point of the game is not simply to maximise score, and because I wanted to perform optimally vs some simple bots like DefectBot, CooperateBot and RandomBot.

comment by NancyLebovitz · 2014-09-24T14:42:26.438Z · LW(p) · GW(p)

Would a tournament where the bots can see how other bots behave with each other be possible?

The simplest version would be that bots just see all the interactions. It could be made more realistic if the bots have memory limits, and much more realistic (and much messier) if bots have some ability to conceal their interactions.

comment by DataPacRat · 2014-09-24T11:17:21.185Z · LW(p) · GW(p)

I find it somewhat amusing that SimHatingTitForTat has an average score second only to CheeseBot, and is one of the constant first-round survivalists... but just doesn't quite make it into the finals. Not bad for an algorithm that was an attempt to translate Tit-for-Tat's simplicity and reflectiveness into this particular tournament's terms. If the terms of the tourney were somewhat different, focusing more on 'avoiding total loss' rather than 'maximized score each game', then, well... :)

(I would be quite happy to see any further tournaments get run - I might even manage to come up with further ideas for the same.)

Replies from: Gav↑ comment by Gav · 2014-09-25T01:22:19.310Z · LW(p) · GW(p)

I'm kinda surprised, my naive intuition was that SimHatingTitForTat would force a cooperative win.

Does anyone know if the lack of SimHatingTitForTat getting into the finals is an artifact of the algorithms knowing the length of the rounds? (I.e. they can decide to backstab on the final turn to get an extra point).

comment by Scott Garrabrant · 2014-09-24T04:26:27.522Z · LW(p) · GW(p)

If you ran this tournament again, I would happily participate again!

comment by MaximumLiberty · 2014-09-24T15:44:36.971Z · LW(p) · GW(p)

This is brilliant work and a great summary and analysis of the whole thing.

I'd love to see a version where the bots do not get each other's code to use as a simulation, and are limited to their own experiences, but -- before making the cooperate-or-defect decision -- can pay the opponent a price that the opponent sets for the opponent's full history to that point. This would require three decisions, instead of one: decide what price to set for your own history, decide whether to pay the price for your opponent's history, and decide whether to cooperate or defect.

As a further variation, I'd love to see the same thing where contestants are allowed to submit multiple bots, so they can use some as information gatherers (until they die off).

And I'd upvote the suggestion of a variable-length simulation where no one knows the number of rounds ahead of time.

Max L.

Replies from: Error↑ comment by Error · 2014-09-30T14:26:19.827Z · LW(p) · GW(p)

A price for a bot's history is interesting. I would expect to commonly see bots setting the price at zero, so they can freely demonstrate a TFT-ish history. I would also expect to commonly see bots punishing other bots for not pricing at zero, effectively assuming that a priced history is either hiding defection or attempting to extort them.

comment by lackofcheese · 2014-09-24T15:23:53.823Z · LW(p) · GW(p)

Also worth checking, out of interest: How does each of the bots perform when playing against itself?

Replies from: tetronian2↑ comment by tetronian2 · 2014-09-24T15:54:15.806Z · LW(p) · GW(p)

Good question--after running some matches:

AnderBot Cooperates until one of the copies randomly triggers a Defect, then both copies defect for the rest of the match.

CheeseBot, DMRB, SHTFT, SimpleTFTSeer, Switch, TatForTit, TwoFacedTit, and VOFB always cooperate with themselves.

Pip always defects against itself.

Replies from: V_V, lackofcheese, Vulture↑ comment by V_V · 2014-09-24T20:05:51.612Z · LW(p) · GW(p)

For a next version of the tournament, perhaps it would make sense to force each bot to play a match against itself.

This ensures that everybody playing DefectBot is no longer a tournament equilibrium (not that this is probably going to happen, anyway).

↑ comment by lackofcheese · 2014-09-24T17:44:26.554Z · LW(p) · GW(p)

Self-cooperation was one of my core test cases, so I guess this probably indicates that many other people also designed for and/or tested self-cooperation.

comment by lackofcheese · 2014-09-24T10:33:31.993Z · LW(p) · GW(p)

Thank you very much for running this and your effort in writing up the results; I'm very happy with how CheeseBot did!

Now I just need to work out how exactly TatForTit managed to exploit me...

Replies from: tetronian2↑ comment by tetronian2 · 2014-09-24T14:26:52.453Z · LW(p) · GW(p)

Congratulations! If you do work it out, please do post it here--I'm still not 100% sure how TatForTit managed to exploit CheeseBot and DMRB.

comment by DataPacRat · 2014-09-24T05:39:40.764Z · LW(p) · GW(p)

Is there a way to analyze this tournament's data, if the final turns' scores were to be ignored? (That is, if any 'defect on the last turn' instructions didn't do anything useful in the games themselves, only for simulation purposes.)

comment by mwengler · 2014-10-06T22:51:52.137Z · LW(p) · GW(p)

Do I see a hint of a network-like effect in the results?

Simulate my opponent and figure out if defections will be punished; if so, Cooperate, and otherwise, defect. This allows bots following this strategy to always cooperate with each other, consistently providing them with a large number of points in every round, ensuring that they outcompete backstabbing or other aggressive strategies.

If I understand this, then the more bots that have this "cooperate unless defection is unpunished" strategy, the better the payoff for having that strategy. So in an evolutionary system, once a few bots get this strategy, it will be selected for with increasing selection pressure in subsequent generations.

My understanding of these tournaments is on the hairy edge of not existing, but if I have understood this correctly then this seems like a strong result explaining how a strategy might win in an evolutionary arms-race.

comment by Scott Garrabrant · 2014-09-24T04:15:56.586Z · LW(p) · GW(p)

So, if I am reading this correctly, it looks like I (TatForTit) almost always won when I made it past the early bracket, but failed to do well in the early game. I actually somewhat predicted this. Oh well. Thanks for running this!

Replies from: V_V↑ comment by V_V · 2014-09-24T10:08:29.482Z · LW(p) · GW(p)

Argh! You managed to exploit my precious VeryOpportunisticFarseerBot!

I knew there was a weakness, but I didn't think other people would realize it without seeing my code. I stand humbled!

↑ comment by lackofcheese · 2014-09-24T15:00:40.755Z · LW(p) · GW(p)

I'm not sure I'd call it an "exploit"; based on the match logs it's more like VeryOpportunisticFarseerBot and TatForTitBot just really, really hate each other, enough that each is willing to spend ~200 points to hurt the other by ~200 points.

As far as the 1v1 matchup is concerned, if anything VOFB exploits TatForTit, considering you defect first and so you win the matchup with a score of 104-99.

Replies from: V_V↑ comment by V_V · 2014-09-24T16:43:02.227Z · LW(p) · GW(p)

Oh, I hadn't looked at the individual results, thanks for pointing that out.

Eyeballing it, it looks like VOFB was never exploited except by bots which use random strategies. Maybe VOFB is an equilibrium for the 1v1 after all, but still I'm not convinced about some details.

If that's the case, then it is certainly a very interesting fact that the tournament format still allows a 1v1 equilibrium strategy to not win.

If I recall correctly, games with more than two players are not guaranteed to have a Nash equilibrium, hence this "issue" (if we wish to consider it an issue) is probably not fundamentally solvable. "Meta-game" will always be important in these kinds of games.

Anyway, 1v1 equilibrium or quasi-equilibrium bots dominated the game, therefore it seems that the tournament format, particularly the elimination rounds, did a good job at identifying 1v1 equilibrium strategies, if that was an intended goal.

I was somewhat skeptical of the format at the beginning, but it has proven very interesting. Kudos to tetronian2!

↑ comment by lackofcheese · 2014-09-24T17:29:49.600Z · LW(p) · GW(p)

Actually, the tournament format allowing a 1v1 equilibrium strategy to lose is rather a trivial fact---DefectBot is a 1v1 equilibrium strategy and it loses pretty badly indeed. Also, what do you mean by 1v1 equilibrium, specifically? Are you talking about 1v1 where the goal is to maximise your expected score, or 1v1 where the goal is to have a higher score than your opponent?

In the tournament context, a tournament where everyone submits DefectBot is a Nash equilibrium for the entire tournament. More generally, you're wrong about the existence of Nash equilibria not being guaranteed; as long as there are finitely many players and finitely many strategies, and mixed strategies are allowed, there has to be at least one Nash equilibrium.

That being said, in this game a strategy is a program, so a mixed strategy would be a probability distribution over programs, not a program with random elements; you would have to do something like send an email to tetronian2 saying "I submit CheeseBot1 with 50% probability and CheeseBot2 with 50% probability".

It's also very important to remember that Nash equilibria are made up of strategy profiles, not strategies for individual players. In this situation the game is completely symmetric so each player has the same strategies available, but there can still be equilibria where different players play different strategies, and in general there may not be a pure-strategy equilibrium where everyone uses the same strategy.

As such, if everyone submits an equilibrium strategy it is not necessarily true that the strategies together form an equilibrium, because they might be equilibrium strategies that are from different Nash equilibria. See, for a simple example, the stag hunt.

In two-player zero-sum games Nash equilibria are, in fact, interchangeable; if (A1, B1) and (A2, B2) are equilibria, then so are (A1, B2) and (A2, B1). However, for more than two players the interchangeability property no longer holds, and so overall you're correct in saying that "meta-game" necessarily comes up. Every player can pick an equilibrium strategy and yet the collective result may fail to be an equilibrium.
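The stag hunt mentioned above gives a quick way to see equilibrium strategies failing to combine into an equilibrium. The sketch below uses illustrative payoff numbers chosen for this example (they do not come from the tournament), and the function name is hypothetical.

```python
# Illustrative stag hunt payoffs: (row move, column move) -> (row pts, column pts).
# "S" = hunt stag, "H" = hunt hare. The numbers are assumptions for this sketch.
STAG_HUNT = {
    ("S", "S"): (4, 4),
    ("S", "H"): (0, 3),
    ("H", "S"): (3, 0),
    ("H", "H"): (3, 3),
}
ACTIONS = ("S", "H")


def is_pure_nash(profile, payoffs=STAG_HUNT, actions=ACTIONS):
    """True if neither player can gain by unilaterally switching actions."""
    a, b = profile
    u_a, u_b = payoffs[(a, b)]
    row_is_best = all(payoffs[(alt, b)][0] <= u_a for alt in actions)
    col_is_best = all(payoffs[(a, alt)][1] <= u_b for alt in actions)
    return row_is_best and col_is_best


for profile in STAG_HUNT:
    print(profile, is_pure_nash(profile))
```

Both (S, S) and (H, H) pass the check, but pairing the row player's strategy from the first with the column player's strategy from the second gives (S, H), which does not.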

Replies from: V_V↑ comment by V_V · 2014-09-24T19:52:01.362Z · LW(p) · GW(p)

Thanks very much for the info.

Actually, the tournament format allowing a 1v1 equilibrium strategy to lose is rather a trivial fact---DefectBot is a 1v1 equilibrium strategy and it loses pretty badly indeed. Also, what do you mean by 1v1 equilibrium, specifically? Are you talking about 1v1 where the goal is to maximise your expected score, or 1v1 where the goal is to have a higher score than your opponent?

I should have mentioned that I was talking about a 1v1 equilibrium of strategies that maximizes your expected score.

DefectBot is such an equilibrium strategy for the vanilla iterated-PD with fixed time horizon, but not if you allow simulation.

In the tournament context, a tournament where everyone submits DefectBot is a Nash equilibrium for the entire tournament.

Yes, in fact this was one of the reasons why I was skeptical of the tournament format (and James Miller was as well, IIRC). In the end it turned out Miller was the only one to submit a DefectBot.

Eventually, I predicted that there were going to be lots of Tit-for-tat-like bots to cooperate with and exploit in the last rounds, and that some bots would use a strategy similar to mine (play a 1v1 max-payoff equilibrium strategy while attempting to exploit "dumb" bots), hence I went for it hoping for a tie at the top position. It almost worked.

More generally, you're wrong about the existence of Nash equilibria not being guaranteed; as long as there are finitely many players and finitely many strategies, and mixed strategies are allowed, there has to be at least one Nash equilibrium.

Ok, I recalled incorrectly. :)

Replies from: lackofcheese↑ comment by lackofcheese · 2014-09-24T20:09:03.974Z · LW(p) · GW(p)

I should have mentioned that I was talking about a 1v1 equilibrium of strategies that maximizes your expected score. DefectBot is such an equilibrium strategy for the vanilla iterated-PD with fixed time horizon, but not if you allow simulation.

DefectBot maximises expected score against DefectBot, because you can't do better vs DefectBot than defect every round and get 100 points. As such, DefectBot vs DefectBot is still a Nash equilibrium.

You need to be more specific as to what "maximizes your expected score" means, because depending on your definition I could come up with some very surprising strategies you might not be expecting.

Replies from: V_V↑ comment by V_V · 2014-09-24T21:11:39.933Z · LW(p) · GW(p)

By "score" I mean the sum of the payoffs that your bot receives during each round in a single 1v1 match.

By "expected" I mean w.r.t. both the internal random source of each bot and the random source you use to choose your bot if you are using a mixed strategy.

↑ comment by lackofcheese · 2014-09-24T21:25:47.528Z · LW(p) · GW(p)

DefectBot gets the maximum possible expected score of 100pts vs DefectBot - it's not possible to do better vs DefectBot.

Replies from: V_V↑ comment by V_V · 2014-09-24T21:29:35.678Z · LW(p) · GW(p)

Yes, but there are other equilibria where both players get a higher score.

Replies from: lackofcheese↑ comment by lackofcheese · 2014-09-24T21:30:24.084Z · LW(p) · GW(p)

Yes, and which one of those equilibria do you pick?

Replies from: V_V↑ comment by V_V · 2014-09-24T21:44:22.901Z · LW(p) · GW(p)

That's a coordination problem.

Unlike program-swap (I)PD, where these max-payoff equilibria are the CliqueBots equilibria, and there doesn't seem to be any "natural" clique to pick as a Schelling point, in program-simulation IPD it seems that there is a Schelling point.

Replies from: lackofcheese↑ comment by lackofcheese · 2014-09-24T22:01:06.223Z · LW(p) · GW(p)

The term "max-payoff equilibrium" is ill-defined.

Consider this pair of bots:

1) ExtortionBot, who defects for 80 rounds, and then cooperates with you for 20 rounds if and only if you cooperated for all of the first 80 (otherwise it defects for those rounds as well).

2) WeakBot, who always defects for the last 20 rounds, and cooperates with you for the first 80 if and only if it simulates that you will cooperate for the last 20 rounds iff WeakBot cooperates with you for the first 80 (otherwise it defects for the first 80).

The maximum score you can get vs ExtortionBot is 100 points, which is how many points WeakBot gets.

The maximum score you can get vs WeakBot is 400 points, which is how many points ExtortionBot gets.

Ergo ExtortionBot/WeakBot forms a Nash Equilibrium. Is that a max-payoff equilibrium, or is it not?
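
The two scores above can be checked with a short calculation. This is a sketch in Python rather than the tournament's Haskell, and it assumes 100-round matches with payoffs of 5 (defecting against a cooperator), 3 (mutual cooperation), 1 (mutual defection) and 0 (cooperating against a defector). Those values are inferred from scores quoted elsewhere in this thread, not read from the tournament source, and the names `PAYOFF` and `score` are made up for illustration.

```python
# Payoffs inferred from scores quoted in this thread (an assumption,
# not taken from the tournament source).
PAYOFF = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}

def score(moves_a, moves_b):
    """Total payoff for each player given two equal-length move sequences."""
    total_a = total_b = 0
    for a, b in zip(moves_a, moves_b):
        pa, pb = PAYOFF[(a, b)]
        total_a += pa
        total_b += pb
    return total_a, total_b

# Equilibrium path: ExtortionBot defects for 80 rounds, then cooperates for 20;
# WeakBot cooperates for 80 rounds, then defects for 20.
extortion = "D" * 80 + "C" * 20
weak = "C" * 80 + "D" * 20
print(score(extortion, weak))  # (400, 100)

# One possible deviation: WeakBot defects throughout, so ExtortionBot
# never switches to cooperation and both defect for all 100 rounds.
print(score("D" * 100, "D" * 100))  # (100, 100)
```

Under these assumed payoffs, the constant-defection deviation leaves WeakBot at 100 points, no better than the equilibrium path. The sketch checks only that one deviation, not every alternative strategy.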

Replies from: V_V↑ comment by V_V · 2014-09-28T12:54:38.541Z · LW(p) · GW(p)

That means that this game is a symmetric bargaining problem.

According to Wikipedia, proposed solutions are symmetric, Pareto-optimal (i.e. "max-payoff") equilibria.

It seems to me that VOFB or something similar to it is a strategy leading to one of these equilibria (do other symmetric Pareto-optimal equilibria exist?)

Replies from: lackofcheese↑ comment by lackofcheese · 2014-09-28T17:48:46.684Z · LW(p) · GW(p)

I think there are many such equilibria, but they all rely on the same basic principle. I've clarified this in a top-level comment, along with a simple example of a symmetric Pareto-optimal equilibrium strategy.

comment by aphyer · 2014-12-30T21:16:00.718Z · LW(p) · GW(p)

Something that bothers me about this tournament: I feel like a competitive tournament doesn't actually reward the kind of strategy that is meant to do well in Prisoner's Dilemma. As a (highly oversimplified) example, consider three bots who have the scores:

A: 10 B: 9 C: 2

Here, A is 'winning.' Suppose B can make a move that costs A 3 points and costs itself 1 point, leading to:

A: 7 B: 8 C: 2

B's payoff function has dropped. However, from a 'winning the tournament' approach, B has gone from 2nd to 1st, and so this outcome is now better for B. This feels wrong.

I doubt this was a really big issue here, but just on general principles I feel like competition by comparing scores is incompatible with a desire to explore the Prisoner's Dilemma, since you're turning a non-zero-sum game into a zero-sum game.
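
The gap between payoff and placement in this example can be made concrete with a few lines of Python (not the tournament's Haskell). The helper `ranking` and the "spiteful move" are hypothetical and exist only to reproduce the numbers above.

```python
def ranking(scores):
    """Bot names ordered from highest to lowest score."""
    return sorted(scores, key=scores.get, reverse=True)

before = {"A": 10, "B": 9, "C": 2}

# Hypothetical spiteful move by B: costs A 3 points and costs B 1 point.
after = {"A": before["A"] - 3, "B": before["B"] - 1, "C": before["C"]}

print(ranking(before))           # ['A', 'B', 'C']
print(ranking(after))            # ['B', 'A', 'C']
print(after["B"] - before["B"])  # -1
```

B's own score falls by one point while its rank rises from second to first, which is the tension between maximising payoff and winning on placement.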

comment by Gav · 2014-09-28T23:50:11.826Z · LW(p) · GW(p)

Dang it, days later and I'm still insanely curious to see how the results would differ if the length of the matches wasn't known by the algorithms. Either by removing the concept of limiting matches, or by ending matches far earlier (not just one or two steps) than they were 'planned' to end.

I've been pondering downloading the code, changing and running it, but my shoulder angels start slapping me at the thought of me neglecting my current projects to (even briefly) learn Haskell.

Just occurred to me that there's a way around this, I can offer a shameless bribe to someone else that already knows what they're doing and has some spare time. :-D

With that in mind, $20USD via paypal to the first person who runs the modified tournament and posts the results as a reply to this comment. If you don't want the cash, I'll donate it to MIRI/a charity of your choice, etc.

comment by Florian_Dietz · 2014-09-24T08:53:44.810Z · LW(p) · GW(p)

I would find it very interesting if the tournament had multiple rounds and the bots were able to adapt themselves based on previous performance and log files they generated at runtime. This way they could use information like 'most bots take longer to simulate than expected.' or 'there are fewer cannon-fodder bots than expected' and become better adapted in the next round. Such a setup would lessen the impact of the fact that some bots that are usually very good underperform here because of an unexpected population of competitors. This might be hard to implement and would probably scare away some participants, though.

comment by Kevin_Binz · 2014-10-01T02:34:42.617Z · LW(p) · GW(p)

I'd like to add one more suggestion for the next version of this tournament:

- If you have N bots, don't run NxN matches; instead, engineer their exchanges to be less complete, and more random. This may encourage the emergence of reputation.
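
One way to read that suggestion, as a rough Python sketch (the tournament itself is in Haskell): draw a random subset of the possible pairings instead of playing all of them. The function `sample_pairings` and its `fraction` parameter are invented for illustration, and the sketch ignores self-play, which the real tournament may or may not include.

```python
import itertools
import random

def sample_pairings(bots, fraction, seed=None):
    """Pick a random subset of the unordered pairings between distinct bots."""
    rng = random.Random(seed)
    all_pairs = list(itertools.combinations(bots, 2))
    # Always schedule at least one match.
    k = max(1, round(fraction * len(all_pairs)))
    return rng.sample(all_pairs, k)

bots = ["AnderBot", "CheeseBot", "DefectBot", "DMRB", "Pip"]
for a, b in sample_pairings(bots, fraction=0.5, seed=0):
    print(a, "vs", b)
```

With five bots there are ten possible pairings, so `fraction=0.5` schedules five of them. Whether sparse schedules like this actually encourage reputation effects is a separate question.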

And more generally, I would like to see systematic explorations in post-IPD tournaments; tournaments in which bots are able to (in constrained conditions) mold the utility landscape for their own ends.

comment by Vulture · 2014-09-24T13:58:22.399Z · LW(p) · GW(p)

Hey, my bot (TwoFacedTitBot) did pretty well for a while there! :) Not bad, considering that I threw it together after reading one Haskell tutorial and on the basis of basically no analysis besides coming up with a bunch of variations on a theme and running them against each other.

The idea was supposed to be that the simulated round against MirrorBot would act as a crude test of whether 2FTB was being simulated (cause if the opponent was naively simulating me, then they would time out against MirrorBot due to infinite regress), and then if I was being simulated I would play nice and cooperate, and otherwise I would play the slightly more dickish TitForTat-but-defect-on-last strategy. I suspect that it only did that well because it was so similar to TitForTat, but nonetheless I'm at least a little pleased with myself.

I'd be interested to see how the tournament would run with default bots included, though. Like many people, I think, I had somehow gotten it into my head that they would be included. And maybe RandomBot would improve the variance a little bit? :P

In any case, massive props to tetronian for running this Awesome Thing :)

comment by lackofcheese · 2014-09-24T13:27:47.355Z · LW(p) · GW(p)

Your win frequency graph is wrong; it should say 963 for VOFB, not 968.

Anyways, I did some more detailed statistics with the power of

ls | xargs perl -sn0e 'while (/next round:.(\N*)/sg) {print "$1->"}; print "$ARGV\n"' | sort -V

and here's the results I came up with:

963 matches went like this:

ALL->[Cheese,DMRB,SimHatingTFT,Switch,TwoFacedTit,VOFB]->[Cheese,DMRB,VOFB]

32 matches went like this:

ALL->[Cheese,DMRB,SimHatingTFT,Switch,TatForTit,TwoFacedTit]->[Cheese,DMRB,TatForTit]->[DMRB,TatForTit]->[TatForTit]

(13, 19, 30, 31, 33, 40, 42, 54, 74, 119, 137, 203, 236, 272, 301, 303, 321, 326, 343, 400, 438, 476, 516, 526, 539, 608, 626, 815, 823, 827, 941, 977)

3 matches went like this:

ALL->[Cheese,DMRB,SimHatingTFT,Switch,TatForTit,TwoFacedTit,VOFB]->[Cheese,DMRB,SimHatingTFT,TwoFacedTit]->[Cheese,DMRB]

(64, 553, 979)

Match #11 went like this:

ALL->[Cheese,DMRB,SimHatingTFT,Switch,TatForTit,VOFB]->[Cheese,DMRB,SimHatingTFT]->[Cheese,DMRB]

Match #12 went like this:

ALL->[Cheese,DMRB,SimHatingTFT,Switch,TwoFacedTit,VOFB]->[Cheese,DMRB,SimHatingTFT]->[Cheese,DMRB]
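
For anyone who would rather not use Perl, here is a rough Python sketch of the same kind of grouping. It reuses the regular expression from the one-liner above; everything else is an assumption, including the layout of the log files (only the `next round:` marker is known from the regex), the sample snippets, and the helper names `elimination_path` and `group_by_path`.

```python
import re
from collections import defaultdict

# Same pattern as the Perl one-liner: after "next round:", skip one character
# (possibly a newline), then capture the rest of that line.
NEXT_ROUND = re.compile(r"next round:.([^\n]*)", re.S)

def elimination_path(log_text):
    """Join the survivor lists found in one match log into a single path string."""
    return "->".join(NEXT_ROUND.findall(log_text))

def group_by_path(logs):
    """Map each distinct path to the sorted list of log names that followed it."""
    groups = defaultdict(list)
    for name, text in logs.items():
        groups[elimination_path(text)].append(name)
    return {path: sorted(names) for path, names in groups.items()}

# Made-up log snippets; the real files' layout is only guessed from the regex.
logs = {
    "1": "scores...\nnext round:\n[Cheese,DMRB,VOFB]\n",
    "2": "scores...\nnext round:\n[Cheese,DMRB,VOFB]\n",
    "11": "scores...\nnext round:\n[Cheese,DMRB]\n",
}
for path, names in group_by_path(logs).items():
    print(len(names), path, names)
```

To run it on real results, `logs` would be built by reading each match file into a string keyed by its filename.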

↑ comment by lackofcheese · 2014-09-24T14:34:20.558Z · LW(p) · GW(p)

OK, so here's what I can gather from those numbers, plus some extra time spent looking at the individual files.

Cheese, DMRB, SHTFT and Switch did well enough in Round 1 every time to guarantee that they would get through; this left two spots that were potentially up for grabs due to variance (although TwoFacedTit got one of those spots 999 times out of 1000 because its score was generally pretty solid).

964/1000 times VOFB got through Round 1.

963/964 of those times it ended up being a 3-way tie between VOFB, DMRB, and CheeseBot.

1/964 of those times (Match #12) VOFB had a spasm and defected against TwoFacedTit on Turn #43; TwoFacedTit punished it by defecting and so they defected for the rest of the game, which caused SimHatingTFT to get through to Round 3 instead of VOFB. The result was a tie between CheeseBot and DMRB.

32/1000 times TatForTit got through Round 1.

In those cases, TatForTit proceeded to win because VOFB wasn't there to stop it---Cheese, DMRB and TatForTit would always make it to Round 3, and the matches turned out the same way every time:

TatForTit would defect against CheeseBot on Turn #98, followed by mutual defection on the last two turns, resulting in a score of 298-293

TatForTit would defect against DMRB on Turn #99, followed by mutual defection on the last turn, for a score of 300-295

CheeseBot and DMRB would cooperate for 300-300.

with totals of 598 for TatForTit, 595 for DMRB, and 593 for CheeseBot.

As such, CheeseBot would be knocked out, leading to DMRB vs TatForTit in Round 4, in which TatForTit would (obviously) win 300-295.
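
The Round 3 arithmetic above can be reproduced with a small Python sketch (not the tournament's Haskell). It assumes 100-turn matches and payoffs of T=5, R=3, P=1, S=0, which are the values consistent with the scores quoted in this comment rather than values read from the tournament source; `defect_from` is a made-up helper.

```python
# Payoffs assumed from the scores quoted above: T=5, R=3, P=1, S=0.
T, R, P, S = 5, 3, 1, 0
ROUNDS = 100

def defect_from(turn):
    """Scores (defector, victim) when both cooperate before `turn`, only the
    defector defects on `turn`, and both defect on every later turn."""
    coop = (turn - 1) * R
    rest = (ROUNDS - turn) * P
    return coop + T + rest, coop + S + rest

tft_vs_cheese = defect_from(98)            # (298, 293)
tft_vs_dmrb = defect_from(99)              # (300, 295)
cheese_vs_dmrb = (ROUNDS * R, ROUNDS * R)  # (300, 300)

totals = {
    "TatForTit": tft_vs_cheese[0] + tft_vs_dmrb[0],
    "DMRB": tft_vs_dmrb[1] + cheese_vs_dmrb[1],
    "CheeseBot": tft_vs_cheese[1] + cheese_vs_dmrb[0],
}
print(totals)  # {'TatForTit': 598, 'DMRB': 595, 'CheeseBot': 593}
```

The same helper gives the Round 4 result: `defect_from(99)` is the 300-295 win for TatForTit over DMRB.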

3/1000 times VOFB and TatForTit both made it through Round 1 because their scores were tied (i.e. TwoFacedTit still made it through, and 7 bots got through R1 instead of the usual 6)

1/1000 times (Match #11) TwoFacedTit failed to get through Round 1, which meant that both VOFB and TatForTit went through instead.

In that round, TwoFacedTit defected against VOFB in Turn #50, triggering a string of defections for the rest of the time, and meanwhile VOFB got really lucky and managed to cooperate with Pip for 97 turns.

In those 4 matches where VOFB and TatForTit both made it to Round 2 they knocked each other out; apparently VOFB and TatForTit really hate each other. The final result of all 4 of those matches was a tie between CheeseBot and DMRB.

Replies from: V_V↑ comment by V_V · 2014-09-24T16:58:47.561Z · LW(p) · GW(p)

Thanks for the analysis. Assuming that Pip uses a random strategy, it seems that the only surprising behavior of VOFB occurred with TwoFacedTit. Maybe this is due to timing issues.

Replies from: Vulture↑ comment by Vulture · 2014-09-25T00:43:29.262Z · LW(p) · GW(p)

It seems like VOFB and TwoFacedTit had some weird chemistry, since each inexplicably defected against the other exactly once. I assume it's a coincidence, since TwoFacedTit is entirely deterministic and could only have been screwed up by anomalous processing times or something.

↑ comment by tetronian2 · 2014-09-24T14:36:34.911Z · LW(p) · GW(p)

Thanks; fixed. And thanks for this analysis! I've added it to the OP.

comment by Gondolinian · 2014-11-28T20:06:20.099Z · LW(p) · GW(p)

Would it be worthwhile to set up an automated, decentralized competition for iterated prisoner's dilemma bots, sort of like the roborumble for Robocode?

comment by DataPacRat · 2014-09-25T02:34:14.705Z · LW(p) · GW(p)

A request: Could somebody collate the data, and produce a table of the (average) scores for each program pairing?

comment by JoshuaFox · 2014-09-24T13:02:16.164Z · LW(p) · GW(p)

"Simulate my opponent and figure out if it will defect or if defections will be punished; if so, Cooperate, and otherwise, defect."

Isn't that backwards?

Replies from: tetronian2↑ comment by tetronian2 · 2014-09-24T14:25:08.289Z · LW(p) · GW(p)

Thanks; fixed.