SRG 4: Biological Cognition, BCIs, Organizations

post by KatjaGrace · 2014-10-07T01:00:29.300Z · 139 comments


This is part of a weekly reading group on Nick Bostrom's book, Superintelligence. For more information about the group, and an index of posts so far see the announcement post. For the schedule of future topics, see MIRI's reading guide.

Welcome. This week we finish chapter 2 with three more routes to superintelligence: enhancement of biological cognition, brain-computer interfaces, and well-organized networks of intelligent agents. This corresponds to the fourth section in the reading guide, Biological Cognition, BCIs, Organizations.

This post summarizes the section and offers a few relevant notes and ideas for further investigation. My own thoughts and questions for discussion are in the comments.

There is no need to proceed in order through this post, or to look at everything. Feel free to jump straight to the discussion. Where applicable, and where I remember, page numbers indicate the rough part of the chapter that is most related (not necessarily that the chapter is being cited for the specific claim).

Reading: “Biological Cognition” and the rest of Chapter 2 (p36-51)

Summary

Biological intelligence

- Modest gains to intelligence are available with current interventions such as nutrition.

- Genetic technologies might produce a population whose average is smarter than anyone who has ever lived.

- Some particularly interesting possibilities are 'iterated embryo selection' where many rounds of selection take place in a single generation, and 'spell-checking' where the genetic mutations which are ubiquitous in current human genomes are removed.
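A simple additive model shows why selecting among many embryos, and repeating that selection, could matter. The sketch below is an illustration under stated assumptions. It is not Bostrom's or Shulman and Bostrom's actual calculation. It treats an embryo's genetic predictor score as normally distributed among siblings and asks how far the best of n embryos is expected to sit above the sibling average. The parameters `predicted_variance`, the 15-point IQ standard deviation, and the `rounds` multiplier are all hypothetical.

```python
import math


def normal_pdf(x: float) -> float:
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def normal_cdf(x: float) -> float:
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def expected_max_of_normals(n: int, lo: float = -10.0, hi: float = 10.0,
                            steps: int = 20000) -> float:
    """E[max of n i.i.d. standard normals], by trapezoidal integration of
    x * n * pdf(x) * cdf(x)**(n - 1) over [lo, hi]."""
    if n < 1:
        raise ValueError("need at least one embryo")
    h = (hi - lo) / steps
    total = 0.0
    for i in range(steps + 1):
        x = lo + i * h
        weight = 0.5 if i in (0, steps) else 1.0
        total += weight * x * n * normal_pdf(x) * normal_cdf(x) ** (n - 1)
    return total * h


def selection_gain(n_embryos: int, predicted_variance: float = 0.5,
                   iq_sd: float = 15.0, rounds: int = 1) -> float:
    """Expected IQ gain from keeping the top-scoring embryo out of n_embryos.

    Hypothetical assumptions: the genetic predictor is additive and explains
    `predicted_variance` of population IQ variance; among siblings its variance
    is half of that; every round adds the same gain (this ignores depletion of
    variance and any practical limits of iterated embryo selection)."""
    within_family_sd = math.sqrt(predicted_variance / 2.0) * iq_sd
    return rounds * expected_max_of_normals(n_embryos) * within_family_sd


if __name__ == "__main__":
    for n in (2, 10, 100):
        print(n, round(selection_gain(n), 1),
              round(selection_gain(n, predicted_variance=0.02), 1))
```

With `predicted_variance=0.02`, roughly the 2% prediction figure in the notes on genetic enhancement, every gain is five times smaller than with 0.5. This is why the quality of the predictor matters as much as the number of embryos.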

Brain-computer interfaces

- It is sometimes suggested that machines interfacing closely with the human brain will greatly enhance human cognition. For instance, implants might allow perfect recall and fast arithmetic. (p44-45)

- Brain-computer interfaces seem unlikely to produce superintelligence (p51). This is because they have substantial health risks, because our existing systems for getting information in and out of our brains are hard to compete with, and because our brains are probably bottlenecked in other ways anyway. (p45-6)

- 'Downloading' directly from one brain to another seems infeasible because each brain represents concepts idiosyncratically, without a standard format. (p46-7)

Networks and organizations

- A large connected system of people (or something else) might become superintelligent. (p48)

- Systems of connected people become more capable through technological and institutional innovations, such as enhanced communications channels, well-aligned incentives, elimination of bureaucratic failures, and mechanisms for aggregating information. The internet as a whole is a contender for a network of humans that might become superintelligent. (p49)

Summary

- Since there are many possible paths to superintelligence, we can be more confident that we will get there eventually. (p50)

- Whole brain emulation and biological enhancement are both likely to succeed after enough incremental progress in existing technologies. Networks and organizations are already improving gradually.

- The path to AI is less clear, and may be discontinuous. Which route we take might matter a lot, even if we end up with similar capabilities anyway. (p50)

The book so far

Here's a recap of what we have seen so far, now at the end of Chapter 2:

- Economic history suggests big changes are plausible.

- AI progress is ongoing.

- AI progress is hard to predict, but AI experts tend to expect human-level AI in mid-century.

- Several plausible paths lead to superintelligence: brain emulations, AI, human cognitive enhancement, brain-computer interfaces, and organizations.

- Most of these probably lead to machine superintelligence ultimately.

- That there are several paths suggests we are likely to get there.

Do you disagree with any of these points? Tell us about it in the comments.

Notes

- Nootropics

Snake Oil Supplements? is a nice illustration of scientific evidence for different supplements, here filtered for those with purported mental effects, many of which relate to intelligence. I don't know how accurate it is, or where to find a summary of apparent effect sizes rather than evidence, which I think would be more interesting.

Ryan Carey and I talked to Gwern Branwen, an independent researcher with an interest in nootropics, about prospects for substantial intelligence amplification. I was most surprised that Gwern would not be surprised if creatine gave normal people an extra 3 IQ points.

- Environmental influences on intelligence

And some more health-specific ones.

- The Flynn Effect

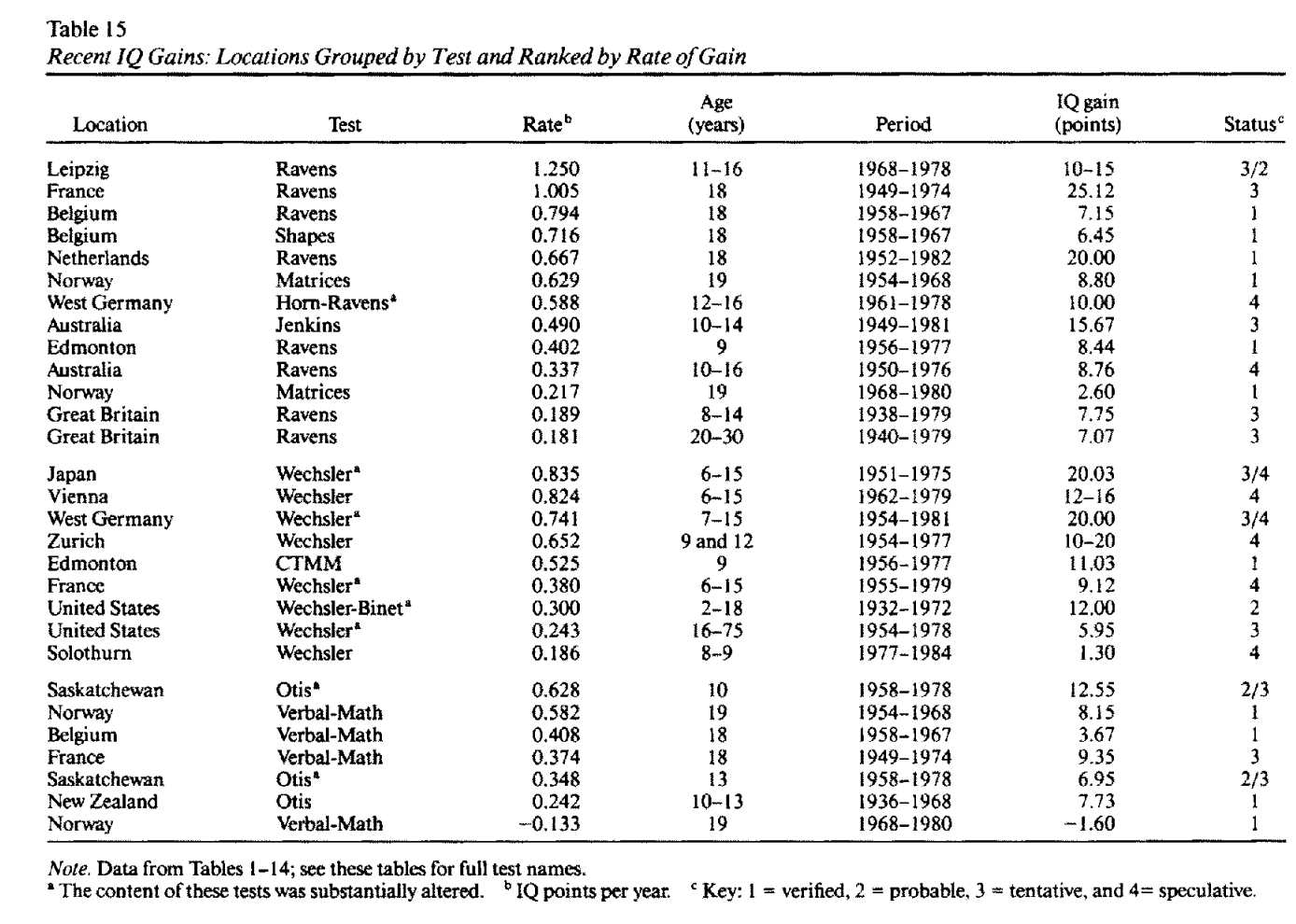

People have apparently been getting smarter by about 3 points per decade for much of the twentieth century, though this trend may be ending. Several explanations have been proposed. Namesake James Flynn has a TED talk on the phenomenon. It is strangely hard to find a good summary picture of these changes, but here's a table from Flynn's classic 1978 paper of measured increases at that point:

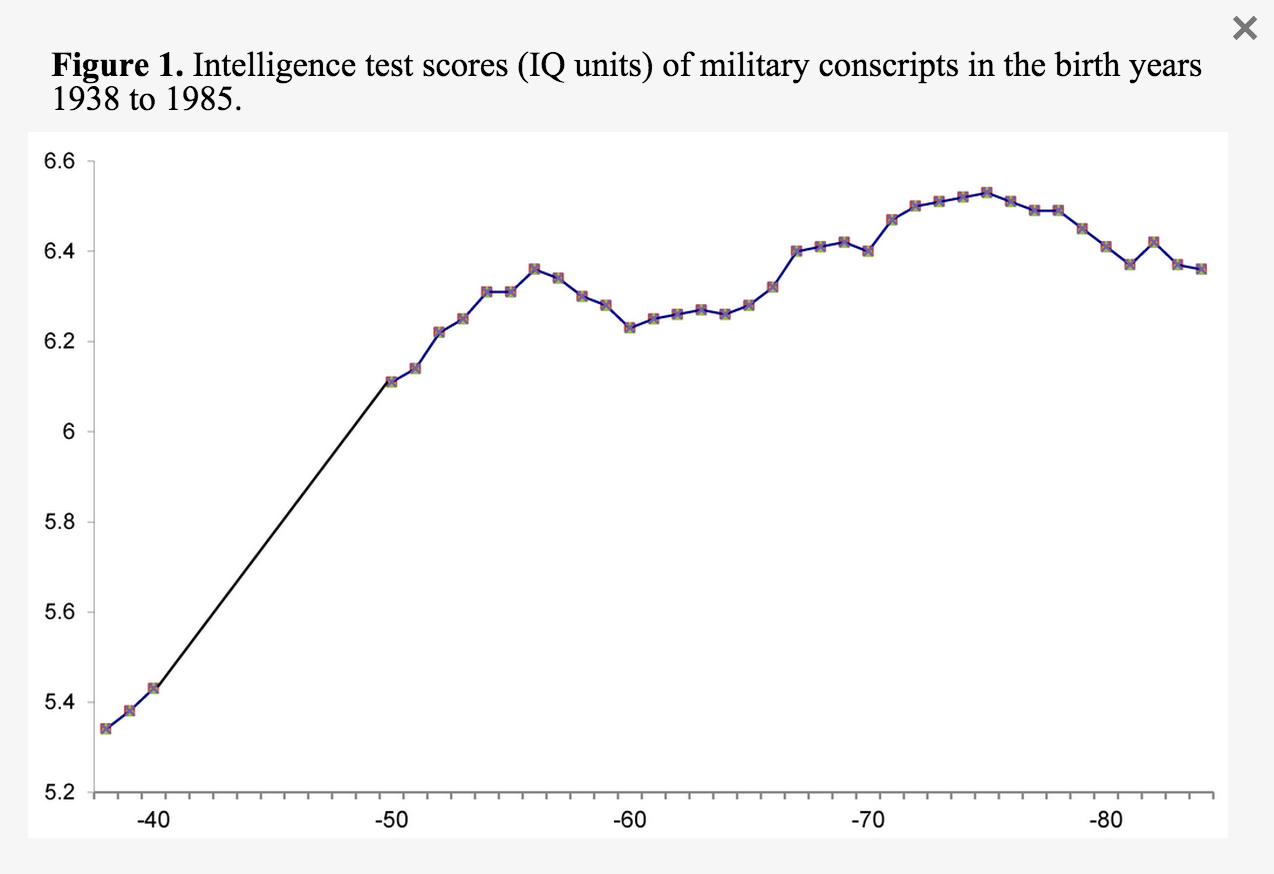

Here are changes in IQ test scores over time in a set of Polish teenagers, and a set of Norwegian military conscripts respectively:

- Prospects for genetic intelligence enhancement

This study uses 'Genome-wide Complex Trait Analysis' (GCTA) to estimate that about half of the variation in fluid intelligence in adults is explained by common genetic variation (childhood intelligence may be less heritable). These studies use genetic data to predict 1% of the variation in intelligence. This genome-wide association study (GWAS) allowed prediction of 2% of the variation in education and IQ. This study finds several common genetic variants associated with cognitive performance. Stephen Hsu very roughly estimates that you would need a million samples in order to characterize the relationship between intelligence and genetics.

According to Robertson et al., even among students in the top 1% of quantitative ability, cognitive performance predicts differences in occupational outcomes later in life. The Social Science Genetic Association Consortium (SSGAC) leads research efforts on the genetics of education and intelligence, and is also investigating the genetics of other 'social science traits' such as self-employment, happiness and fertility. Carl Shulman and Nick Bostrom provide some estimates for the feasibility and impact of genetic selection for intelligence, along with a discussion of reproductive technologies that might facilitate more extreme selection. Robert Sparrow writes about 'in vitro eugenics'. Stephen Hsu also had an interesting interview with Luke Muehlhauser about several of these topics, and summarizes research on genetics and intelligence in a Google Tech Talk.

- Some brain-computer interfaces in action

For Parkinson's disease relief, allowing locked-in patients to communicate, handwriting, and controlling robot arms.

- What changes have made human organizations 'smarter' in the past?

Big ones I can think of include innovations in using text (writing, printing, digital text editing), communicating better in other ways (faster, further, more reliably), increasing population size (population growth, or connection between disjoint populations), systems for trade (e.g. currency, finance, different kinds of marketplace), innovations in business organization, improvements in governance, and forces leading to reduced conflict.

In-depth investigations

If you are particularly interested in these topics, and want to do further research, these are a few plausible directions, some inspired by Luke Muehlhauser's list, which contains many suggestions related to parts of Superintelligence. These projects could be attempted at various levels of depth.

- How well does IQ predict relevant kinds of success? This is informative about what enhanced humans might achieve, in general and in terms of producing more enhancement. How much better is a person with IQ 150 at programming or doing genetics research than a person with IQ 120? How does IQ relate to philosophical ability, reflectiveness, or the ability to avoid catastrophic errors? (related project guide here).

- How promising are nootropics? Bostrom argues 'probably not very', but it seems worth checking more thoroughly. One related curiosity is that, on casual inspection, there seem to be quite a few nootropics that appeared promising at some point and then haven't been studied much. This could be well explained by publication bias, by whatever forces are usually blamed for relatively natural drugs receiving little attention, or by the casualness of my casual inspection.

- How can we measure intelligence in non-human systems? For example, what are good ways to track the increasing 'intelligence' of social networks quantitatively? We have the general sense that groups of humans are the level at which everything is a lot better than it was in 1000 BC, but it would be nice to have an idea of how this is progressing over time. Is GDP a reasonable metric?

- What are the trends in those things that make groups of humans smarter? For example, how will the world's capacity for communicating information change over the coming decades? (Hilbert and Lopez's work is probably relevant.)

How to proceed

This has been a collection of notes on the chapter. The most important part of the reading group though is discussion, which is in the comments section. I pose some questions for you there, and I invite you to add your own. Please remember that this group contains a variety of levels of expertise: if a line of discussion seems too basic or too incomprehensible, look around for one that suits you better!

Next week, we will talk about 'forms of superintelligence', in the sense of different dimensions in which general intelligence might be scaled up. To prepare, read Chapter 3, Forms of Superintelligence (p52-61). The discussion will go live at 6pm Pacific time next Monday 13 October. Sign up to be notified here.

139 comments


comment by JoshuaFox · 2014-10-07T15:11:41.928Z

What personal factors, if any, cause some people to tend towards one direction or another in some of these key prognostications?

For example, do economists tend more towards multiagent scenarios while computer scientists or ethicists tend more towards singleton prognostications?

Do neuroscientists tend more towards thinking that WBE will come first and AI folks more towards AGI, or the opposite?

Do professional technologists tend to have earlier timelines and others later timelines, or vice versa?

Do tendencies towards the political left or right influence such prognostications? Gender? Age? Education? Social status?

None of these affect the truth of the statements, of course, but if we found some such tendencies we might correct for them appropriately.

comment by AlexMennen · 2014-10-07T02:07:21.770Z

Lifelong depression of intelligence due to iodine deficiency remains widespread in many impoverished inland areas of the world--an outrage given that the condition can be prevented by fortifying table salt at a cost of a few cents per person and year.

According to the World Health Organization in 2007, nearly 2 billion individuals have insufficient iodine intake. Severe iodine deficiency hinders neurological development and leads to cretinism, which involves an average loss of about 12.5 IQ points. The condition can be easily and inexpensively prevented through salt fortification.

Wow. Are there any charities working on decreasing iodine deficiency? Why haven't I heard effective altruists hyping this strategy?

↑ comment by [deleted] · 2014-10-07T04:19:39.722Z

As of July 30, GiveWell considers the International Council for the Control of Iodine Deficiency Disorders Global Network (ICCIDD) a contender for their 2014 recommendation, according to their ongoing review. They also mention that they're considering the Global Alliance for Improved Nutrition (GAIN), which they've had their eye on for a few years. They describe some remaining uncertainties -- this has been a major philanthropic success for the past couple decades, so why is there a funding gap now, well before the work is finished? Is it some sort of donor fatigue, or are the remaining countries that need iodization harder to work in, or is it something else?

(Also, average gains from intervention seem to be more like 3-4 IQ points.)

↑ comment by CarlShulman · 2014-10-07T23:10:12.544Z

Part of their reason for funding deworming is also improvements in cognitive skills, for which the evidence base just got some boost.

comment by KatjaGrace · 2014-10-07T03:10:51.087Z

Do you have a preferred explanation for the Flynn effect?

The Norwegian military conscripts above were part of a paper suggesting an interesting theory I hadn't heard before: that children are less intelligent as more are added to families, and so intelligence has risen as the size of families has shrunk.

↑ comment by satt · 2014-10-09T02:00:41.549Z

My guess: different causes of the Flynn effect dominated at different times (and maybe in different places, too).

For instance, Richard Lynn argued in 1990 that nutrition was the main explanation of the Flynn effect, but Flynn has recently counterargued that nutrition is unlikely to have contributed much since 1950 or so.

Another example. Rick Nevin reckons decreasing lead exposure for children has made all the difference, but when I did my own back-of-the-envelope calculations using NHANES data for teenagers from the late 1970s to 2010, it looked like lead probably had a big impact between the late 1970s and early 1990s (maybe 5 IQ points, as average lead levels sank from ~10μg/dL to ~2μg/dL), but not since then, because blood lead concentrations had fallen so low that further improvement (down to ~1μg/dL) made little difference to IQ.
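The lead figures in this comment can be given a rough consistency check. Assume, purely for illustration, that IQ falls linearly with blood lead between about 1 and 10 μg/dL; the comment does not say which dose-response model was used. Then the "maybe 5 IQ points" attributed to the fall from ~10 to ~2 μg/dL implies:

$$\text{slope} \approx \frac{5\ \text{IQ points}}{(10 - 2)\ \mu\text{g/dL}} \approx 0.63\ \text{IQ points per}\ \mu\text{g/dL}$$

$$\Delta\text{IQ}_{2 \to 1} \approx 0.63 \times (2 - 1) \approx 0.6\ \text{IQ points}$$

Under that linear assumption, the further fall to ~1 μg/dL is worth well under one point, which is consistent with "little difference". A dose-response curve that is steeper at low concentrations would give a larger figure.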

Shrinking families would likely be a third factor along these lines, maybe kicking in hardest in mid-century, bolstering IQs after nutrition fell away as a key factor but before declining lead exposure made much difference.

Edit, November 27: fixing the Richard Lynn paper link.

↑ comment by KatjaGrace · 2014-10-09T18:49:23.863Z

And these different trends would tend to be consistently upwards rather than random, because we are consistently trying to improve such things? (Though the families one would probably still be random)

↑ comment by satt · 2014-10-10T02:12:37.340Z

I'm not sure we do consistently try to improve these things. Nutrition, yes. But lead exposure got appreciably worse between WWI and 1970-1975, at least in the UK & US, and shrinking families is a manifestation of the demographic transition, which is only semi-intentional.

↑ comment by TRIZ-Ingenieur · 2014-10-08T21:51:49.779Z

Having read Steve Wozniak's biography iWoz, I support your view that parents nowadays focus more on education in the earliest years. Steve learned about electronic components even before he was four years old. His father explained many things about electronics to him before he was old enough for school. He learned to read at the age of three. This requires parents who help. Steve Wozniak praised his father for always explaining things at a level he could understand, one step at a time.

His exceptionally high intelligence (he cited a tested IQ above 200) is surely not only inherited but also a consequence of loving care, teaching, and challenges from his parents and peers.

↑ comment by ChristianKl · 2014-10-10T13:03:42.843Z

There's no good reason to judge this effect from individual anecdotes. We do have controlled studies of the effects of parenting, and they suggest that it doesn't matter much.

↑ comment by TRIZ-Ingenieur · 2014-10-20T00:06:58.540Z

You are right. It took me some time to read up on this. The Flynn effect documents a long-term rise in fluid intelligence. Parenting and teaching predominantly improve crystallized intelligence.

comment by KatjaGrace · 2014-10-07T03:02:52.101Z

'Let an ultraintelligent person be defined as a person who can far surpass all the intellectual activities of any other person however clever. Since the improvement of people is one of these intellectual activities, an ultraintelligent person could produce even better people; there would then unquestionably be an 'intelligence explosion,' and the intelligence of ordinary people would be left far behind. Thus the first ultraintelligent person is the last invention that people need ever make, provided that the person is docile enough to tell us how to keep them under control.'

Does this work?

↑ comment by paulfchristiano · 2014-10-07T15:18:06.907Z

Looks good to me, with the same set of caveats as the original claim. Though note that both arguments are bolstered if "improvement of people" or "design of machines" in the second sentence is replaced by a more exhaustive inventory. Would be good to think more about the differences.

↑ comment by KatjaGrace · 2014-10-08T19:31:56.951Z

What caveats are you thinking of?

↑ comment by CarlShulman · 2014-10-07T23:20:14.423Z

This application highlights a problem in that definition, namely gains of specialization. Say you produced humans with superhuman general intelligence as measured by IQ tests, maybe the equivalent of 3 SD above von Neumann. Such a human still could not be an expert in each and every field of intellectual activity simultaneously due to time and storage constraints.

The superhuman could perhaps master any given field better than any human given some time for study and practice, but could not so master all of them without really ridiculously superhuman prowess. This overkill requirement is somewhat like the way a rigorous Turing Test requires not only humanlike reasoning, but tremendous ability to tell a coherent fake story about biographical details, etc.

↑ comment by Jeff_Alexander · 2014-10-24T03:04:23.299Z

For me, it "works" similarly to the original, but emphasizes (1) the underspecification of "far surpass", and (2) that the creation of a greater intelligence may require resources (intellectual or otherwise) beyond those of the proposed ultraintelligent person. In the same way, an ultraintelligent wasp may qualify as far superior to a typical wasp in all intellectual endeavors yet still be unable to invent and build a simple computing machine, never mind construct a greater intelligence.

comment by V_V · 2014-10-08T08:59:06.263Z

Economic history suggests big changes are plausible.

Sure, but it is hard to predict what changes are going to happen and when.

In particular, major economic changes are typically precipitated by technological breakthroughs. It doesn't seem that we can predict these breakthroughs looking at the economy, since the causal relationship is mostly the other way.

AI progress is ongoing.

Ok.

AI progress is hard to predict, but AI experts tend to expect human-level AI in mid-century.

But AI experts have a notoriously poor track record at predicting human-level AI.

Several plausible paths lead to superintelligence: brain emulations, AI, human cognitive enhancement, brain-computer interfaces, and organizations.

Organizations probably can't become much more "superintelligent" than they already are. Human cognitive enhancement, brain-computer interfaces, etc. also have limits.

Most of these probably lead to machine superintelligence ultimately.

Only digital intelligences (brain emulations and AIs) seem to have a realistic chance of becoming significantly more intelligent than anything that exists now, and even this is dubious.

That there are several paths suggests we are likely to get there.

There aren't really many paths, and they are not independent.
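A toy probability calculation makes both sides of this exchange concrete: the post's claim that several paths make arrival more likely, and V_V's objection that the paths are neither many nor independent. The sketch below is purely illustrative. The per-path success probability `p`, the number of paths `n`, and the "shared fate" parameter `rho` are hypothetical and are not estimates from the book.

```python
from typing import Sequence


def p_any_independent(probs: Sequence[float]) -> float:
    """Probability that at least one path succeeds, if paths are independent."""
    p_all_fail = 1.0
    for p in probs:
        p_all_fail *= 1.0 - p
    return 1.0 - p_all_fail


def p_any_shared_fate(p: float, n: int, rho: float) -> float:
    """Toy correlated model (hypothetical): with probability rho all n paths
    share a single outcome (success with probability p); otherwise each path
    succeeds independently with probability p."""
    if not 0.0 <= rho <= 1.0:
        raise ValueError("rho must lie in [0, 1]")
    return rho * p + (1.0 - rho) * p_any_independent([p] * n)


if __name__ == "__main__":
    for rho in (0.0, 0.5, 1.0):
        print(rho, round(p_any_shared_fate(0.3, 5, rho), 3))
```

Take five paths, each with a 0.3 chance of success. Full independence gives about 0.83. With `rho = 1` the result is 0.3, no better than a single path. The number of paths therefore only adds confidence to the extent that their successes and failures are not driven by common factors.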

↑ comment by TRIZ-Ingenieur · 2014-10-08T20:38:25.870Z

Organizations can become much more superintelligent than they are. A team of humans plus better and better weak AI has no upper limit to intelligence. Such a hybrid superintelligent organization can be the way to keep AI development under control.

↑ comment by V_V · 2014-10-09T09:29:28.444Z

In which case most of the "superintelligence" would come from the AI, not from the people.

↑ comment by TRIZ-Ingenieur · 2014-10-09T17:17:01.307Z

The synergistic union human+AI (master+servant) is more intelligent than AI alone, which will have huge deficits in several intelligence domains. Human+AI does not have a single sub-human-level intelligence domain. I agree that the superintelligence part originates primarily from AI capabilities. Without human initiative, creativity, and skill in using mighty tools, these superintelligent capabilities would not come into action.

↑ comment by KatjaGrace · 2014-10-11T07:44:08.075Z

Do you think AI experts deserve their notoriety for prediction? The several public predictions that I know of from before 1980 were indeed early (i.e. we have passed the time they predicted), but Michie's survey covers about ten times as many people and suggests that in the 70s, most CS researchers thought human-level AI would not arrive by 2014.

↑ comment by V_V · 2014-10-11T09:57:40.535Z

I thought that the main result by Armstrong and Sotala was that most AI experts who made a public prediction predicted human-level AI within 15 to 20 years of the time of prediction, regardless of when they made it.

Is this new data? Do you have a reference on how it was obtained?

↑ comment by KatjaGrace · 2014-10-12T21:36:30.454Z

That was one main result, yes. It looks like Armstrong and Sotala counted the Michie survey as one 'prediction' (see their dataset here). They have only a small number of other early predictions, so it is easy for that to make a big difference.

The image I linked is the dataset they used, with some modifications made by Paul Christiano and me (explained at more length here, along with the new dataset for download). For example, we took out duplicates, and some predictions that seemed to have been sampled in a biased fashion (such that only early predictions would be recorded). We took out the Michie set altogether; our graph is now of public statements, not survey data.

comment by AlexMennen · 2014-10-07T02:16:53.830Z

I'd like to propose another possible in-depth investigation: How efficiently can money and research be turned into faster development of biological cognitive enhancement techniques such as iterated embryo selection? My motivation for asking that question is that, since extreme biological cognitive enhancement could reduce existential risk and other problems by creating people smart enough to be able to solve them (assuming we last long enough for them to mature, of course), it might make sense to pursue it if it can be done efficiently. Given the scarcity of resources currently devoted to existential risk from AI, I'm pretty sure it doesn't make sense to divert any of it to research on biological cognitive enhancement, but this could change if the recent increase of academic support for existential risk reduction ends up increasing the resources devoted to it proportionately.

↑ comment by ChristianKl · 2014-10-10T13:02:03.857Z

My motivation for asking that question is that, since extreme biological cognitive enhancement could reduce existential risk and other problems by creating people smart enough to be able to solve them

Smarter people can also come up with more dangerous ideas, so it's not clear that existential risk gets lowered.

How efficiently can money and research be turned into faster development of biological cognitive enhancement techniques such as iterated embryo selection?

I think there is already plenty of money invested in that field. Agriculture wants to be able to effectively clone animals and insert new genes. Various scientists also want to be able to change the genes of organisms effectively, without having to wait years until their mice have offspring.

Getting information about what genes do largely depends on cheap sequencing, and a lot of money is being invested in more efficient gene sequencing.

↑ comment by skeptical_lurker · 2014-10-07T07:22:38.297Z

It might be more a problem of public perception, because at the end of the day people have to be willing to use these technologies. Whichever group funds embryo selection will be denounced by many other groups, so it may be wise to find a source of funding that is difficult to criticise.

↑ comment by PhilGoetz · 2014-10-07T11:56:19.811Z

One problem is that for that approach, you would need, say, standardized IQ tests and genomes for a large number of people, and then to identify genome properties correlated with high IQ.

First, all biologists everywhere are still obsessed with "one gene" answers. Even when they use big-data tools, they use them to come up with lists of genes, each of which they say has a measurable independent contribution to whatever it is they're studying. This is looking for your keys under the lamppost. The effect of one gene allele depends on what alleles of other genes are present. But try to find anything in the literature acknowledging that. (Admittedly we have probably evolved for high independence of genes, so that we can reproduce thru sex.)

Second, as soon as you start identifying genome properties associated with IQ, you'll get accused of racism.

↑ comment by CarlShulman · 2014-10-07T23:32:24.837Z

You can deal with epistasis using the techniques Hsu discusses and big datasets, and in any case additive variance terms account for most of the heritability even without doing that. There is much more about epistasis (and why it is of secondary importance for characterizing the variation) in the linked preprint.

↑ comment by gwern · 2014-10-07T15:51:53.252Z

First, all biologists everywhere are still obsessed with "one gene" answers. Even when they use big-data tools, they use them to come up with lists of genes, each of which they say has a measurable independent contribution to whatever it is they're studying. This is looking for your keys under the lamppost. The effect of one gene allele depends on what alleles of other genes are present. But try to find anything in the literature acknowledging that. (Admittedly we have probably evolved for high independence of genes, so that we can reproduce thru sex.)

? I see mentions of stuff like dominance and interaction all the time; the reason people tend to ignore it in practice seems to be that the techniques which assume additive/independence work pretty well and explain a lot of the heritability. For example, height the other day: "Defining the role of common variation in the genomic and biological architecture of adult human height"

Using genome-wide data from 253,288 individuals, we identified 697 variants at genome-wide significance that together explained one-fifth of the heritability for adult height. By testing different numbers of variants in independent studies, we show that the most strongly associated ~2,000, ~3,700 and ~9,500 SNPs explained ~21%, ~24% and ~29% of phenotypic variance. Furthermore, all common variants together captured 60% of heritability.

Seems like an excellent start to me.

↑ comment by PhilGoetz · 2014-10-07T23:05:27.351Z

It would be better than nothing. I am grinding one of my favorite axes more than I probably should. But those numbers make my case. My intuition says it would be hard to mine a few million SNPs, pick the most strongly associated 9500, and have them account for less than .29 of the variance, even if there were no relationship at all. And height is probably a very simple property, which may depend mainly on the intensity and duration of expression of a single growth program, minus interference from deficiencies or programs competing for resources.

↑ comment by CarlShulman · 2014-10-07T23:41:54.478Z

"My intuition says it would be hard to mine a few million SNPs, pick the most strongly associated 9500, and have them account for less than .29 of the variance, even if there were no relationship at all."

With sample sizes of thousands or low tens of thousands you'd get almost nothing. Going from 130k to 250k subjects took it from 0.13 to 0.29 (where the total contribution of all common additive effects is around 0.5).

Most of the top 9500 are false positives (the top 697 are genome-wide significant and contribute most of the variance explained). Larger sample sizes let you overcome noise and correctly weight the alleles with actual effects. The approach looks set to explain everything you can get (and the bulk of heritability for height and IQ) without whole genome sequencing for rare variants just by scaling up another order of magnitude.
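This exchange turns partly on in-sample versus out-of-sample fit. Picking the strongest of many SNPs and scoring them on the same data does overfit, but the height figures quoted above were obtained "by testing different numbers of variants in independent studies". The sketch below is a toy simulation under simplifying assumptions: it is far smaller than any real GWAS, SNPs are independent with no linkage disequilibrium, effects are purely additive, and every size parameter is hypothetical. It runs a marginal, one-SNP-at-a-time GWAS scan, builds a polygenic score from the top-k SNPs, and measures R^2 on both the discovery sample and a fresh test sample.

```python
import numpy as np


def simulate_genotypes(rng, n, freqs):
    """Allele counts 0/1/2 (Hardy-Weinberg, independent SNPs), standardized per SNP."""
    g = rng.binomial(2, freqs, size=(n, freqs.size)).astype(float)
    return (g - 2.0 * freqs) / np.sqrt(2.0 * freqs * (1.0 - freqs))


def r_squared(a, b):
    return float(np.corrcoef(a, b)[0, 1] ** 2)


def top_k_score_r2(n_discovery, h2=0.5, n_test=2000, n_snps=1000,
                   n_causal=50, top_k=50, seed=0):
    """Return (in-sample R^2, out-of-sample R^2) of a score built from the
    top_k SNPs of a marginal GWAS scan on n_discovery individuals."""
    rng = np.random.default_rng(seed)
    freqs = rng.uniform(0.1, 0.5, size=n_snps)
    causal = rng.choice(n_snps, size=n_causal, replace=False)
    beta = rng.normal(size=n_causal)
    beta *= np.sqrt(h2 / np.sum(beta ** 2))  # total additive variance = h2

    def phenotype(x):
        noise = rng.normal(0.0, np.sqrt(1.0 - h2), size=x.shape[0])
        return x[:, causal] @ beta + noise

    x_disc = simulate_genotypes(rng, n_discovery, freqs)
    y_disc = phenotype(x_disc)
    # Regression slope of the phenotype on each SNP separately.
    beta_hat = x_disc.T @ y_disc / np.sum(x_disc ** 2, axis=0)
    chosen = np.argsort(np.abs(beta_hat))[-top_k:]

    x_test = simulate_genotypes(rng, n_test, freqs)  # independent sample
    y_test = phenotype(x_test)

    in_sample = r_squared(x_disc[:, chosen] @ beta_hat[chosen], y_disc)
    out_of_sample = r_squared(x_test[:, chosen] @ beta_hat[chosen], y_test)
    return in_sample, out_of_sample


if __name__ == "__main__":
    for label, n, h2 in [("no real effects", 300, 0.0),
                         ("small discovery sample", 300, 0.5),
                         ("large discovery sample", 4000, 0.5)]:
        ins, oos = top_k_score_r2(n, h2=h2)
        print(f"{label}: in-sample {ins:.2f}, out-of-sample {oos:.2f}")
```

Two patterns should show up. With no real effects, the in-sample fit can look substantial while the out-of-sample fit is near zero. With real additive effects, a larger discovery sample raises the out-of-sample fit, which matches the point about sample size. The exact numbers depend on the seed and the made-up parameters.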

↑ comment by ChristianKl · 2014-10-10T12:54:17.382Z

One problem is that for that approach, you would need, say, standardized IQ tests and genomes for a large number of people, and then to identify genome properties correlated with high IQ.

It's just a matter of time until genome sequencing gets cheap enough. There will be a day when it makes sense for China to sequence the DNA of every citizen for health purposes. China also has standardized test scores for its population, and no issues with racism that would prevent people from analysing the data.

comment by KatjaGrace · 2014-10-07T03:53:53.361Z

Brain-computer interfaces for healthy people don't seem to help much, according to Bostrom. Can you think of BCIs that might plausibly exist before human-level machine intelligence, which you would expect to be substantially useful? (p46)

↑ comment by Liso · 2014-10-10T03:43:47.789Z

This is also one of the points where I don't agree with Bostrom's (fantastic!) book.

We could use an analogy from history: the human-animal pair of soldier and horse didn't need a physical interface (like in the movie Avatar) and still gave an enormous military advantage.

We could get something similar from better weak AI tools (probably with a better GUI, but it is not only about the GUI).

"Tools" don't need to have much general intelligence. They could be at horse level:

- their incredible power to analyze big structures (a big memory buffer)

- the speed of a "rider" using quick "computation", with the "tether" in your hands

↑ comment by Liso · 2014-10-10T03:57:02.248Z

This probably needs more explanation. You could say that my reply is not in the appropriate place, and that is probably true: we could define a BCI as a physical interconnection between brain and computer.

But I think that at this point we could (and already do) also analyze trained "horses" with trained "riders", and also trained "pairs" (or groups?).

A better interface between computer and human could also be built noninvasively, as a better visual + sound + touch interface (the horse-human analogy).

So yes: I expect they could be substantially useful even if a direct physical interface proves too difficult in the next decade(s).

comment by KatjaGrace · 2014-10-07T03:31:56.358Z · LW(p) · GW(p)

How would you start to measure intelligence in non-human systems, such as groups of humans?

↑ comment by gwern · 2014-10-07T15:45:21.833Z · LW(p) · GW(p)

One proposal is to measure predictive/reward-seeking ability on random small Turing machines: http://lesswrong.com/lw/42t/aixistyle_iq_tests/
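To make this suggestion concrete, here is a minimal sketch, not the linked post's actual procedure. It assumes tiny randomly generated two-symbol Turing machines run on a blank tape, treats the bit written at each step as the observation stream, and scores a predictor by its fraction of correct next-bit guesses averaged over many machines. The names `random_tm`, `run_tm`, `context_predictor`, and `iq_score`, and all parameter values, are hypothetical choices for illustration; the reward-seeking half of the proposal is not modelled.

```python
import random
from collections import defaultdict


def random_tm(n_states, rng):
    """Random 2-symbol Turing machine (hypothetical encoding).

    Maps (state, symbol_read) -> (symbol_to_write, head_move, next_state).
    """
    table = {}
    for state in range(n_states):
        for symbol in (0, 1):
            table[(state, symbol)] = (
                rng.randint(0, 1),        # bit to write
                rng.choice((-1, 1)),      # move head left or right
                rng.randrange(n_states),  # next state
            )
    return table


def run_tm(table, steps):
    """Run from state 0 on a blank (all-zero) tape; observe the bit written each step."""
    tape = defaultdict(int)
    head, state, observed = 0, 0, []
    for _ in range(steps):
        write, move, state = table[(state, tape[head])]
        tape[head] = write
        observed.append(write)
        head += move
    return observed


def always_zero(history):
    """Baseline predictor: ignores the history entirely."""
    return 0


def context_predictor(history, k=3):
    """Predict the bit that most often followed the last k bits earlier in the history."""
    if len(history) <= k:
        return history[-1] if history else 0
    context = history[-k:]
    counts = [0, 0]
    for i in range(k, len(history)):
        if history[i - k:i] == context:
            counts[history[i]] += 1
    if counts[0] == counts[1]:
        return history[-1]  # tie or unseen context: repeat the last bit
    return 0 if counts[0] > counts[1] else 1


def iq_score(predictor, n_machines=200, n_states=3, steps=60, seed=0):
    """Fraction of correct next-bit predictions, averaged over random machines."""
    rng = random.Random(seed)
    correct = total = 0
    for _ in range(n_machines):
        seq = run_tm(random_tm(n_states, rng), steps)
        for t in range(1, len(seq)):
            correct += predictor(seq[:t]) == seq[t]
            total += 1
    return correct / total
```

Comparing `iq_score(context_predictor)` with `iq_score(always_zero)` gives a crude ranking of two predictors on the same set of machines. A closer analogue of Legg and Hutter's universal intelligence measure would also weight each machine by its simplicity rather than averaging uniformly.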

↑ comment by paulfchristiano · 2014-10-07T15:19:23.720Z · LW(p) · GW(p)

Below you ask whether the definition of intelligence per se is important at all; it seems it's not, and this may be some indication of how to measure what you actually care about.

↑ comment by skeptical_lurker · 2014-10-07T07:17:25.002Z · LW(p) · GW(p)

Maybe a good starting point would be IQ tests?

↑ comment by NxGenSentience · 2014-10-09T15:06:08.220Z · LW(p) · GW(p)

I am a little curious that the "seven kinds of intelligence" (give or take a few, in recent years) notion has not been mentioned much, if at all, even if just for completeness.... Has that been discredited by some body of argument or consensus, that I missed somewhere along the line, in the last few years?

Particularly in many approaches to AI, which seem to take, almost a priori (I'll skip the italics and save them for emphasis), the approach of the day to be: work on (ostensibly) "component" features of intelligent agents as we conceive of them, or as we find them naturalistically.

Thus: (i) machine "visual" object recognition (the wavelength band is up for grabs, perhaps, since some items might be better identified by switching up or down the E.M. scale), and visual intelligence was one of the proposed seven kinds; (ii) mathematical intelligence or mathematical (dare I say it) intuition; (iii) facility with linguistic tasks, comprehension, and multiple language acquisition, another of the proposed seven; (iv) manual dexterity, mechanical ability, and motor skill (as in athletics, surgery, maybe sculpture, carpentry or whatever), another proposed form of intelligence; and so on. (As an aside, it is interesting that these alleged components span the spectrum of difficulty: that is, they are problems from both easy and harder domains, as has been gradually, and sometimes unexpectedly, revealed by the school of hard knocks during the decades of AI engineering attempts.)

It seems that actors sympathetic to the top-down, "piecemeal" approach popular in much of the AI community would have jumped at this way of supplanting the ersatz "G" -- as it was called decades ago in early gropings in psychology and cogsci which sought a concept of IQ or living intelligence -- with, now, what many in cognitive science consider the more modern view and those in AI consider a more approachable engineering design strategy.

Any reason we aren't debating this more than we are? Or did I miss it in one of the posts, or bypass it inadvertently in my kindle app (where I read Bostrom's book)?

↑ comment by SteveG · 2014-10-14T00:54:32.753Z · LW(p) · GW(p)

Bring these questions back up in later discussions!

↑ comment by NxGenSentience · 2014-10-14T09:54:29.105Z · LW(p) · GW(p)

Will definitely do so. I can see several upcoming weeks when these questions will fit nicely, including perhaps the very next one. Regards....

↑ comment by almostvoid · 2014-10-07T10:22:17.861Z · LW(p) · GW(p)

IQ tests verify inbuilt biases of the one doing the questioning. I have failed these gloriously yet got distinctions at uni. Tests per se mean nothing. [I blame psychologists]. As for non human systems they may mimic intelligence but unless they have sentience they will remain machines. [luckily]

↑ comment by skeptical_lurker · 2014-10-07T10:54:58.781Z · LW(p) · GW(p)

got distinctions at uni. Tests per se mean nothing.

Do you see a possible contradiction here?

↑ comment by TRIZ-Ingenieur · 2014-10-08T21:23:06.895Z · LW(p) · GW(p)

I scored an IQ of 60 at school because I was thinking in too complex and roundabout a way. I had the same experience with a Microsoft "computer driving license" test: I failed completely because I answered based on my knowledge of IT forensics. For example, one question was: if you delete a file from the Windows trash bin, is the file recoverable? To pass the test, you have to give the wrong answer, "no".

These examples show that we need cascaded test hierarchies:

- classification test

- test with adapted complexity level

↑ comment by TRIZ-Ingenieur · 2014-10-08T21:02:59.958Z · LW(p) · GW(p)

Survival was and is the challenge of evolution. Higher intelligence gives more options to cope with deadly dangers.

To measure intelligence, we should challenge AI entities using standardized tests. Developing these tests will become a new field of research. IQ tests are not suitable because of their anthropocentrism. Tests should measure how well and how fast real-world problems are solved.

comment by KatjaGrace · 2014-10-07T02:47:29.455Z · LW(p) · GW(p)

If ten percent of the population used a technology that made their children 10 IQ points smarter, how strong do you think the pressure would be for others to take it up? (p43)

↑ comment by skeptical_lurker · 2014-10-07T07:16:36.175Z · LW(p) · GW(p)

With diet, modafinil, etc., this might already be the case. Sugar alone makes it more difficult to concentrate for many people, as well as having many other deleterious effects. Yet all many people do is say "you can have your chocolate, but only after you take your Ritalin."

↑ comment by paulfchristiano · 2014-10-07T22:03:17.063Z · LW(p) · GW(p)

I'm extremely skeptical of extracting even 1-2 IQ points (in expectation, after weighing up other performance costs) from these mechanisms. Changing diet is the most plausible, but for people whose diets aren't actively bad by widely-recognized criteria, it's not clear we know enough to make things much better. For the benefits of long-term stimulant use (or even the net long-term impacts of short-term stimulant use) I remain far from convinced.

It seems true that more research on these topics could have large, positive expected effects, but these would accrue to society at large rather than to the researchers, and so would be in a different situation.

↑ comment by skeptical_lurker · 2014-10-08T02:30:08.118Z · LW(p) · GW(p)

I seem to remember that eating enough fruit/vegetables alone raises your IQ by several points.

But rather than IQ, stimulants affect focus and conscientiousness, which are just as important. You can still fail with an IQ of 150 if you can't sit down and focus on work. I would say the same is true of sugar.

If you can spend more time focused on work, it might raise your IQ as a secondary effect, but this isn't necessary for a boost in effective intelligence.

↑ comment by Lumifer · 2014-10-08T15:06:04.526Z · LW(p) · GW(p)

I seem to remember that eating enough fruit/vegetables alone raises your IQ by several points.

That seems highly unlikely. Links?

Certain nutrient deficiencies in childhood can stunt development and curtail IQ (iodine is a classic example, that's why there is such a thing as iodized salt), but I don't think you're talking about that.

↑ comment by skeptical_lurker · 2014-10-08T15:56:19.167Z · LW(p) · GW(p)

I'm not sure exactly where I read this, but here are some links with similarly impressive claims (albeit with the standard disclaimers about correlation not implying causation):

Based on parents’ reports, researchers assigned kids to one of three diet categories: a “processed” diet, high in fat, sugar and calories; a “traditional” diet (in the British sense), made up of meat, potatoes, bread and vegetables; and a “health-conscious” diet of whole grains, fresh fruits and vegetables, rice, pasta and lean proteins like fish. ... For each unit increase in processed food diets, children lost 1.67 points in IQ.

http://healthland.time.com/2011/02/08/toddlers-junk-food-diet-may-lead-to-lower-iq

It would help if they said what a 'unit' is.

On measures of mental sharpness, older people who ate more than two servings of vegetables daily appeared about five years younger at the end of the six-year study than those who ate few or no vegetables.

http://www.nootropics.com/vegetables/index.html

↑ comment by Lumifer · 2014-10-08T16:17:05.090Z · LW(p) · GW(p)

albeit with the standard disclaimers about correlation not implying causation

These standard disclaimers are pretty meaningful here.

The obvious question to ask of the first study is whether they controlled for the parents' IQ (or at least things like socio-economic status).

↑ comment by skeptical_lurker · 2014-10-08T16:43:38.630Z · LW(p) · GW(p)

The obvious question to ask of the first study is whether they controlled for the parents' IQ (or at least things like socio-economic status).

Indeed. But I don't have the time to read their papers (not that the article linked to the original paper), and it's not my field anyway. From a practical viewpoint, good diet might give significant advantages (if not in IQ, then in other areas of health) and is extremely unlikely to cause any harm, so the expected cost-benefit analysis is very positive.

↑ comment by Lumifer · 2014-10-08T16:57:21.547Z · LW(p) · GW(p)

From a practical viewpoint, good diet might give significant advantages

Oh, that is certainly true. The only problem is that everyone has their own idea of what "good diet" means and these ideas do not match X-)

↑ comment by skeptical_lurker · 2014-10-08T17:50:47.754Z · LW(p) · GW(p)

I think most people agree on vegetables, in fact this is one of the few things diets do agree on.

↑ comment by kgalias · 2014-10-08T11:07:25.118Z · LW(p) · GW(p)

Sugar alone makes it more difficult to concentrate for many people, as well as having many other deleterious effects.

What do you mean?

↑ comment by skeptical_lurker · 2014-10-08T12:06:16.724Z · LW(p) · GW(p)

I mean that if you are oscillating between sugar highs and crashes, it is difficult to concentrate, plus it causes diabetes etc.

↑ comment by kgalias · 2014-10-08T12:13:42.277Z · LW(p) · GW(p)

Is this what you have in mind?

Sugar does not cause hyperactivity in children.[230][231] Double-blind trials have shown no difference in behavior between children given sugar-full or sugar-free diets, even in studies specifically looking at children with attention-deficit/hyperactivity disorder or those considered sensitive to sugar.[232]

↑ comment by skeptical_lurker · 2014-10-08T12:58:47.097Z · LW(p) · GW(p)

No, I have this in mind:

The results indicate that children's performance declines throughout the morning and that this decline can be significantly reduced following the intake of a low GI cereal as compared with a high GI cereal on measures of accuracy of attention (M=-6.742 and -13.510, respectively, p<0.05) and secondary memory (M=-30.675 and -47.183, respectively, p<0.05).

http://www.ncbi.nlm.nih.gov/pubmed/17224202

↑ comment by kgalias · 2014-10-08T17:35:57.171Z · LW(p) · GW(p)

I don't have time to evaluate which view is less wrong.

Still, I was somewhat surprised when I saw your first comment.

↑ comment by skeptical_lurker · 2014-10-08T17:48:56.641Z · LW(p) · GW(p)

Upvoted for not wasting time!

comment by KatjaGrace · 2014-10-07T02:49:06.216Z · LW(p) · GW(p)

If parents had strong embryo selection available to them, how would the world be different, other than via increased intelligence?

↑ comment by CarlShulman · 2014-10-07T23:29:21.037Z · LW(p) · GW(p)

A lot of negative-sum selection for height perhaps. The genetic architecture is already known well enough for major embryo selection, and the rest is coming quickly.

Height's contribution to CEO status is perhaps half of IQ's, and in addition to substantial effects on income it is also very helpful in the marriage market for men.

But many of the benefits are likely positional, reflecting the social status gains of being taller than others in one's social environment, and there are physiological costs (as well as use of selective power that could be used on health, cognition, and other less positional goods).

Choices at actual sperm banks suggest that parents would use a mix that placed serious non-exclusive weight on each of height, attractiveness, health, education/intelligence, and anything contributing to professional success. Selection on personality might be for traits that improve individual success or for compatibility with parents, but I'm not sure about the net.

Selection for similarity on political and religious orientation might come into use, and could have disturbing and important consequences.

↑ comment by skeptical_lurker · 2014-10-07T07:12:43.589Z · LW(p) · GW(p)

Presumably many other traits would be selected for as well. Increasing intelligence invites knee-jerk comparisons to eugenics and racism, so perhaps physical attractiveness/fitness would be selected for more strongly. Since personality traits are partially genetic, these may be selected for too. Homosexuality is partially genetic, so many gay rights movements would move to ban embryo selection (although some people would want bi kids because they don't want to deprive their kids of any options in life). Sexuality is correlated with other personality traits, so whatever choices are made will have knock-on effects. Some would advocate selecting against negative traits such as schizophrenia, ADHD and violence. Unfortunately, these traits are thought to provide an advantage in certain situations, or in combination with other genes, so we might also lose the creativity that comes with subclinical psychosis (poets are 20x more likely to go insane than average), the beneficial novel behaviour that comes with ADHD, and the ability to stand up for yourself (if aggression correlates with assertiveness). I know one should not generalise from fictional evidence, but it reminds me of the film 'Demolition Man', where society has evolved to a point where there is no violence, so when a murderer awakes from cryonic suspension, people cannot defend themselves. Far better film than Gattaca.

↑ comment by paulfchristiano · 2014-10-07T22:00:32.220Z · LW(p) · GW(p)

Increasing intelligence has knee-jerk comparisons to eugenics & racism, so perhaps physical attractiveness/fitness would be selected for more strongly

If true, this would be somewhat surprising from a certain angle. As if saying "selecting for what's on the inside is too superficial and prejudiced, so we should be sure our selection is only skin-deep."

I would bet against selection for things like sexual orientation or domesticity, and in favor of selection for general correlates of good health and successful life outcomes (which may in turn come along with other unintended characteristics).

↑ comment by skeptical_lurker · 2014-10-08T02:52:32.377Z · LW(p) · GW(p)

If true, this would be somewhat surprising from a certain angle. As if saying "selecting for what's on the inside is too superficial and prejudiced, so we should be sure our selection is only skin-deep."

This is, admittedly, a bizarre state of affairs. But if we were to admit that IQ is meaningful, and could be affected by genes, then this gives credence to the 'race realists'! But we can't concede a single argument to the hated enemy, therefore intelligence is independent of genes. QED.

Attractiveness OTOH is obviously genetic, because people look like their parents.

I would bet against selection for things like sexual orientation or domesticity, and in favour of selection for general correlates of good health and successful life outcomes (which may in turn come along with other unintended characteristics).

I concur. I would however bet in favour of a large argument over sexual orientation.

↑ comment by diegocaleiro · 2014-10-08T22:15:54.868Z · LW(p) · GW(p)

Yvain has a biodeterministic guide to parenting. Some people would do the same things: http://squid314.livejournal.com/346391.html

comment by KatjaGrace · 2014-10-07T01:37:30.389Z · LW(p) · GW(p)

Ambiguities around 'intelligence' often complicate discussions about superintelligence, so it seems good to think about them a little.

Some common concerns: is 'intelligence' really a thing? Can intelligence be measured meaningfully as a single dimension? Is intelligence the kind of thing that can characterize a wide variety of systems, or is it only well-defined for things that are much like humans? (Kruel's interviewees bring up these points several times)

What do we have to assume about intelligence to accept Bostrom's arguments? For instance, does the claim that we might reach superintelligence by this variety of means require that intelligence be a single 'thing'?

↑ comment by RomeoStevens · 2014-10-07T06:37:19.328Z · LW(p) · GW(p)

Is intelligence really a single dimension?

Related: Do we see a strong clustering of strategies that work across all the domains we have encountered so far? I see the answer to the original question being yes if there is just one large cluster, and no if it turns out there are many fairly orthogonal clusters.

Is robustness against corner cases (idiosyncratic domains) a very important parameter? We certainly treat it as such in our construction of least convenient worlds to break decision theories.

↑ comment by PhilGoetz · 2014-10-07T11:44:05.507Z · LW(p) · GW(p)

There is a small set of operations (dimension reduction, 2-class categorization, n-class categorization, prediction) and algorithms for them (PCA, SVM, k-means, regression) that work well on a wide variety of domains. Does that help?
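As a minimal sketch of one of the listed operations, assuming the data already arrive as a numeric matrix (one row per sample), the first principal component in PCA can be found by power iteration on the covariance matrix. The function names are hypothetical, and a real project would normally call a linear-algebra library rather than this pure-Python loop.

```python
import math


def mean_center(rows):
    """Subtract each column's mean so the data are centred at the origin."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    return [[r[j] - means[j] for j in range(d)] for r in rows]


def covariance(rows):
    """Sample covariance matrix (d x d) of the row vectors."""
    centered = mean_center(rows)
    n, d = len(centered), len(centered[0])
    return [[sum(r[i] * r[j] for r in centered) / (n - 1) for j in range(d)]
            for i in range(d)]


def first_principal_component(rows, iters=100):
    """Power iteration: repeatedly multiply a vector by the covariance and renormalise."""
    c = covariance(rows)
    d = len(c)
    v = [1.0] * d  # assumed not orthogonal to the top eigenvector
    for _ in range(iters):
        w = [sum(c[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = math.sqrt(sum(x * x for x in w))
        if norm == 0.0:
            break  # all rows identical: no direction of variance
        v = [x / norm for x in w]
    return v


def project(rows, v):
    """Coordinates of the centred rows along direction v (the reduced dimension)."""
    return [sum(a * b for a, b in zip(r, v)) for r in mean_center(rows)]


rows = [[x, 2 * x] for x in range(1, 6)]  # points on the line y = 2x
pc = first_principal_component(rows)
coords = project(rows, pc)
```

Because the sample rows lie exactly on the line y = 2x, the recovered direction is (1, 2)/√5 and each point reduces to a single coordinate. Power iteration can stall if the starting vector is orthogonal to the top eigenvector, so library implementations typically compute an SVD or a full eigendecomposition instead.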

↑ comment by Punoxysm · 2014-10-07T18:38:35.662Z · LW(p) · GW(p)

Not that wide a variety of domains, compared to all human tasks.

Specifically, they can only handle data that comes in matrix form, and often only after it has been cleaned up and processed by a human being. Consider just the iris dataset: if instead of the measurements of the flowers you were working with photographs of the flowers, you might have made your problem substantially harder, since now you have a vision task not amenable to the algorithms you list.

↑ comment by PhilGoetz · 2014-10-07T23:11:26.843Z · LW(p) · GW(p)

Can you give an example of data that doesn't come in matrix form? If you have a set of neurons and a set of connections between them, that's a matrix. If you have asynchronous signals travelling between those neurons, that's a time series of matrices. If it ain't in a matrix, it ain't data.

[ADDED: This was a silly thing for me to say, but most big data problems use matrices.]

↑ comment by Punoxysm · 2014-10-07T23:37:30.907Z · LW(p) · GW(p)

The answer you just wrote could be characterized as a matrix of vocabulary words and index-of-occurrence. But that's a pretty poor way to characterize it for almost all natural language processing techniques.

First of all, something like PCA and the other methods you listed won't work on a ton of things that could be shoehorned into matrix format.

Taking an image or piece of audio and representing it using raw pixel or waveform data is horrible for most machine learning algorithms. Instead, you want to heavily transform it before you consider putting it into something like PCA.

A different problem applies to the matrix of neuronal connections in the brain: it's too large-scale, too sparse, and too heterogeneous to be usefully analyzed by anything but specialized methods with a lot of preprocessing and domain knowledge going into them. You might be able to cluster different functional units of the brain, but as you tried to get to more granular units, heterogeneity in the number of connections per neuron would cause dense clusters to "absorb" sparser but legitimate clusters in almost all clustering methods. Working with a time series of activations is an even bigger problem, since you want to isolate specific cascades of activations that correspond to a stimulus, and then look at the architecture of the activated part of the brain, characterize it, and then be able to understand things like which neurons are functionally equivalent but correspond to different parallel units in the brain (left eye vs. right eye).

If I give you a time series of neuronal activations and connections with no indication of the domain, you'd probably be able to come up with a somewhat predictive model using non-domain-specific methods, but you'd be handicapping yourself horribly.

Inferring causality is another problem - none of these predictive machine learning methods do a good job of establishing whether two factors have a causal relation, merely whether they have a predictive one (within the particular given dataset).

↑ comment by PhilGoetz · 2014-10-18T17:08:19.885Z · LW(p) · GW(p)

First, yes, I overgeneralized. Matrices don't represent natural language and logic well.

But, the kinds of problems you're talking about--music analysis, picture analysis, and anything you eventually want to put into PCA--are perfect for matrix methods. It's popular to start music and picture analysis with a discrete Fourier transform, which is a matrix operation. Or you use MPEG, which is all matrices. Or you construct feature detectors, say edge detectors or contrast detectors, using simple neural networks such as those found in primary visual cortex, and you implement them with matrices. Then you pass those into higher-order feature detectors, which also use matrices. You may break information out of the matrices and process it logically further downstream, but that will be downstream of PCA. As a general rule, PCA is used only on data that has so far existed only in matrices. Things that need to be broken out are not homogenous enough, or too structured, to use PCA on.
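The point that the discrete Fourier transform is a matrix operation can be shown directly: the DFT of an n-sample signal is the product of an n×n matrix F, with entries F[k][t] = exp(-2πi·k·t/n), and the signal vector. The following is a minimal pure-Python sketch; the function names are hypothetical, and it makes no claim about how any particular audio or image library implements the transform.

```python
import cmath


def dft_matrix(n):
    """n x n DFT matrix with entries F[k][t] = exp(-2*pi*i*k*t / n) (unnormalised)."""
    return [[cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n)]
            for k in range(n)]


def dft(signal):
    """DFT as an explicit matrix-vector product: X = F x."""
    n = len(signal)
    f = dft_matrix(n)
    return [sum(f[k][t] * signal[t] for t in range(n)) for k in range(n)]


# A cosine that completes 2 cycles over 8 samples.
signal = [cmath.cos(2 * cmath.pi * 2 * t / 8).real for t in range(8)]
spectrum = dft(signal)
magnitudes = [round(abs(x), 6) for x in spectrum]
```

The cosine's energy lands in bins 2 and 6 (magnitude 4 each, half of the 8 samples) and every other bin is numerically zero. This direct product costs O(n²) operations; the FFT computes the same matrix-vector product in O(n log n).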

There's an excellent book called Neural Engineering by Chris Eliasmith in which he develops a matrix-based programming language that is supposed to perform calculations the way that the brain does. It has many examples of how to tackle "intelligent" problems with only matrices.

↑ comment by TRIZ-Ingenieur · 2014-10-08T23:30:39.289Z · LW(p) · GW(p)

lukeprog linked above to the Hsu paper, which documents good correlation between different narrow measures of human intelligence. The author concludes that a general g factor is sufficient.

All humans have more or less the same cognitive hardware. The human brain is prestructured so that specific areas normally have specific assigned functions. In case of a lesion, other parts of the brain can take over. If a brain is especially capable, this covers all cranial regions. A single-dimension measure for humans might suffice.

If a CPU has a higher clock frequency rating than another CPU of the same series, the ratio of their clock frequencies is the speedup factor for any CPU-bound algorithm.

An AI with a neural-network pattern-matching architecture will be as slow and unreliable at mental arithmetic as we humans are. Extend its architecture with a floating-point coprocessor and its arithmetic capabilities will rise by orders of magnitude.

If you challenge an AI that is superintelligent at engineering but performs poorly on some demanding task, it will design a coprocessor for that task. Such coprocessors exist already: FPGAs. Programming them is highly complex, but speedups of orders of magnitude reward the effort. Once a coprocessor hardware configuration is in the world, it can be shared and further improved by other engineering AIs.

To monitor the intelligence development of AIs with extremely heterogeneous and dynamic architectures, we need high-dimensional intelligence metrics.

↑ comment by diegocaleiro · 2014-10-07T01:46:38.405Z · LW(p) · GW(p)

We have to assume only that we will not significantly improve our understanding of what intelligence is without attempting to create it (through reverse engineering, coding, or EMs). If our understanding remains incipient, the safe policy is to assume that intelligence is indeed a capacity, or set of capacities, that can be used to bootstrap itself. Given the 10^52 lives at stake, even if we were fairly confident that intelligence cannot bootstrap, we should still apply Maxipok and act as if it could.

↑ comment by skeptical_lurker · 2014-10-07T07:26:53.031Z · LW(p) · GW(p)

We have to assume only that we will not significantly improve our understanding of what intelligence is without attempting to create it

I disagree. By analogy, understanding of agriculture has increased greatly without the creation of an artificial photosynthetic cell. And yes, I know that photovoltaic panels exist, but they came only a long time later.

↑ comment by diegocaleiro · 2014-10-08T22:07:14.081Z · LW(p) · GW(p)

Do you mind spelling out the analogy? (including where it breaks) I didn't get it.

Reading my comment I feel compelled to clarify what I meant:

Katja asked: in which worlds should we worry about what 'intelligence' designates not being what we think it does?

I responded: in all the worlds where increasing our understanding of 'intelligence' has the side effect of increasing attempts to create it - due to feasibility, curiosity, or an urge for power. In these worlds, expanding our knowledge increases the expected risk, because of the side effects.

Whether or not intelligence is what we thought will only be discovered after the expected risk has increased; then we find out the fact, and the risk either skyrockets or plummets. In hindsight, if it plummets, having learned more would look great. In hindsight, if it skyrockets, we are likely dead.

↑ comment by SteveG · 2014-10-08T05:38:28.998Z · LW(p) · GW(p)

Single-metric versions of intelligence are going the way of the dinosaur. In practical contexts, it's much better to test for a bunch of specific skills and aptitudes and to create a predictive model of success at the desired task.

In addition, our understanding of intelligence frequently gives a high score to someone capable of making terrible decisions or someone reasoning brilliantly from a set of desperately flawed first principles.

↑ comment by KatjaGrace · 2014-10-08T19:42:06.482Z · LW(p) · GW(p)

Ok, does this matter for Bostrom's arguments?

↑ comment by SteveG · 2014-10-08T20:18:00.807Z · LW(p) · GW(p)

Yeah, having high math or reading comprehension capability does not always make people more effective or productive. They can still, for instance, become suicidal or sociopathic, or rebel against well-meaning authorities. They still often do not go to their doctor when sick; they develop addictions; they may become too introverted or arrogant when it is counterproductive, or fail to escape bad relationships.

We should not strictly be looking to enhance intelligence. If we're going down the enhancement route at all, we should wish to create good decision-makers without, for example, tendencies to mis-read people, sociopathy and self-harm.

↑ comment by Lumifer · 2014-10-08T20:54:23.949Z · LW(p) · GW(p)

or rebel against well-meaning authorities

What's wrong with that?

we should wish to create good decision-makers without, for example, tendencies to mis-read people, sociopathy and self-harm.

...and, presumably, without tendencies to rebel against well-meaning authorities?

I don't think I like the idea of genetic slavery.

↑ comment by SteveG · 2014-10-08T21:28:21.681Z · LW(p) · GW(p)

For instance, rebelling against well-meaning authorities has been known to cause someone not to adhere to a correct medication regime or to start smoking.

Problems regularly rear their head when it comes to listening to the doctor.

I guess I'll add that the well-meaning authority is also knowledgeable.

↑ comment by Lumifer · 2014-10-09T00:19:18.489Z · LW(p) · GW(p)

I guess I'll add that the well-meaning authority is also knowledgeable.

Let me point out the obvious: the knowledgeable well-meaning authority is not necessarily acting in your best interests.

Not to mention that authority that's both knowledgeable and well-meaning is pretty rare.

↑ comment by SteveG · 2014-10-08T21:37:11.684Z · LW(p) · GW(p)

Really, what I am getting at is that just like anyone else, smart people may rebel or conform as a knee-jerk reaction. Neither is using reason to come to an appropriate conclusion, but I have seen them do it all the time.

↑ comment by KatjaGrace · 2014-10-08T22:56:46.550Z · LW(p) · GW(p)

One might think an agent who was sufficiently smart would at some point apply reason to the question of whether they should follow their knee-jerk responses with respect to e.g. these decisions.

↑ comment by NxGenSentience · 2014-10-12T21:39:22.080Z · LW(p) · GW(p)

Single-metric versions of intelligence are going the way of the dinosaur. In practical contexts, it's much better to test for a bunch of specific skills and aptitudes and to create a predictive model of success at the desired task.

I thought that this had become a fairly dominant view, over 20 years ago. See this PDF: http://www.learner.org/courses/learningclassroom/support/04_mult_intel.pdf

I first read the book in the early nineties, though Howard Gardner had published the first edition in 1983. I was at first a bit skeptical that it would be based too much on some form of "political correctness", but I found the concepts to be very compelling.

Most of the discussion I heard in subsequent years, occasionally by psychology professor and grad student friends, continued to be positive.

I might say that I had no ulterior motive in trying to find reasons to agree with the book, since I always score in the genius range myself on standardized, traditional-style IQ tests.

So, it does seem to me that intelligence is a vector, not a scalar, if we have to call it by one noun.

As to Katja's follow-up question, does it matter for Bostrom's arguments? Not really, as long as one is clear (which it is from the contexts of his remarks) which kind(s) of intelligence he is referring to.

I think there is a more serious vacuum in our understanding than whether intelligence is a single property or comes in several irreducibly different (possibly context-dependent) forms, and it is this: with respect to the sorts of intelligence we usually default to conversing about (like the sort that helps a reader understand Bostrom's book, an explanation of special relativity, or RNA interference in molecular biology), do we even know what we think we know about what that is?

I would have to explain the idea of this purported "vacuum" in understanding at significant length; it is a set of new ideas that struck me, together, as a set of related insights. I am working on a paper explaining the new perspective I think I have found, and why it might open up some important new questions and strategies for AGI.

When it is finished and clear enough to be useful, I will make it available by PDF or on a blog.

(Too lengthy to put in one post here, so I will put the link up. If these ideas pan out, they may suggest some reconceptualizations with nontrivial consequences, and be informative in a scalable sense -- which is what one in this area of research would hope for.)

comment by PhilGoetz · 2014-10-07T01:32:29.795Z

What are the trends in those things that make groups of humans smarter? e.g. How will world capacity for information communication change over the coming decades? (Hilbert and Lopez's work is probably relevant)

A social / economic / political system is not just analogous to, but is, an artificial intelligence. Its purpose is to sense the environment and use that information to choose actions that further its goals. The best way to make groups of humans smarter would be to consciously apply what we've learned from artificial intelligence to human organizations.

For example, neural networks have rules that adjust weights between nodes to make the "opinions" of certain nodes more important in particular situations. A backpropagation network with one hidden layer basically discovers which (reduced) dimension of input patterns each hidden node is an "expert" on, and adjusts the weights so each node's opinion counts for more on the problems with components that it is an expert in. We could apply this to our political systems today, replacing the Constitution with a better algorithm for combining votes, and using predictions or standardized tests to adjust the weights given to the votes of different people on different issues so as to maximize the information-processing power of the body politic.

Of course, this would be politically... difficult.

But we might see something like this happen within corporations (my money's on Google), or in small progressive dictatorships like Singapore.
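The idea of learning per-issue weights for voters can be illustrated with a minimal sketch. It swaps in the weighted majority algorithm (multiplicative weights), which is simpler than the backpropagation network described above: each voter holds one weight per issue area, a decision is a weighted vote, and voters who called an outcome wrong have their weight on that issue multiplied by `beta`. The class name, `beta`, the issue labels and the voters are all hypothetical, and the sketch assumes outcomes can eventually be scored as right or wrong, an assumption that may not hold for many political questions.

```python
from collections import defaultdict


def weighted_vote(votes, weights):
    """Return True if the weighted 'yes' mass exceeds the weighted 'no' mass."""
    yes = sum(weights[voter] for voter, ballot in votes.items() if ballot)
    no = sum(weights[voter] for voter, ballot in votes.items() if not ballot)
    return yes > no


class IssueWeightedElectorate:
    """Hypothetical per-issue weighted-majority electorate.

    Every voter starts with weight 1.0 on every issue. After an outcome is
    known, voters whose ballot disagreed with it have their weight on that
    issue multiplied by beta (0 < beta < 1).
    """

    def __init__(self, beta=0.5):
        if not 0.0 < beta < 1.0:
            raise ValueError("beta must be strictly between 0 and 1")
        self.beta = beta
        # issue -> voter -> weight, defaulting to 1.0 for unseen voters/issues
        self.weights = defaultdict(lambda: defaultdict(lambda: 1.0))

    def decide(self, issue, votes):
        return weighted_vote(votes, self.weights[issue])

    def update(self, issue, votes, outcome):
        issue_weights = self.weights[issue]
        for voter, ballot in votes.items():
            if ballot != outcome:
                issue_weights[voter] *= self.beta


electorate = IssueWeightedElectorate(beta=0.5)
ballots = {"alice": True, "bob": True, "carol": False}
print(electorate.decide("economy", ballots))  # True: two equal votes beat one
for _ in range(3):
    electorate.update("economy", ballots, outcome=False)  # carol was right each time
print(electorate.decide("economy", ballots))  # False: 0.125 + 0.125 < 1.0
print(electorate.decide("health", ballots))   # True: health weights are untouched
```

After three rounds in which only carol called the economic outcome correctly, her single vote outweighs the other two on economic questions, while all three still count equally on health. Keeping weights per issue is what lets a voter be an "expert" in one area without gaining influence everywhere.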

↑ comment by almostvoid · 2014-10-07T10:26:22.913Z

You would create a social and political nightmare. The less interference in the political process [but never without consultation], the clearer the outcome. Total social input would become grey noise. The lowest common denominator would win. Populist politics is bad enough as it is. Representative democracy on the Scandinavian-Swiss model is credible as a system that seems to steer away from any form of extremism.

comment by KatjaGrace · 2014-10-07T01:30:18.707Z

Have we missed any plausible routes to superintelligence? (p50)

↑ comment by PhilGoetz · 2014-10-07T01:57:30.449Z

Unexpected advances in physics lead to super-exponential increases in computing power (such as were expected from quantum computing), allowing brute-force algorithms or a simulated ecosystem to achieve super-intelligence.

Real-time implanted or worn sensors plus genomics, physiology simulation, and massive on-line collaboration enables people to identify the self-improvement techniques that are useful to them.

Someone uses ancient DNA to make a Neanderthal, and it turns out they died out because their superhuman intelligence was too metabolically expensive.

Reading all of LessWrong in a single sitting.

↑ comment by paulfchristiano · 2014-10-07T22:10:49.754Z

It seems like the characterization of outcomes into distinct "routes" is likely to be fraught; even if such a breakdown was in some sense exhaustive I would not be surprised if actual developments didn't really fit into the proposed framework. For example, there is a complicated and hard to divide space between AI, improved tools, brain-computer interfaces, better institutions, and better training. I expect that ex post the whole thing will look like a bit of a mess.

One practical result is that even if there is a strong deductive argument for X given "We achieve superintelligence along route Y" for every particular Y in Bostrom's list, I would not take this as particularly strong evidence for X. I would instead view it as having considered a few random scenarios where X is true, and weighing this up alongside other kinds of evidence.
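One way to make this concrete, offered as an illustration rather than anything stated in the comment, is the law of total probability: P(X) = Σ_i P(Y_i)·P(X | Y_i) + P(other)·P(X | other). A strong argument for X under each listed route only pins down the first sum; if real developments fall outside the listed routes or blur them together, more weight lands on the residual term, and P(X) then depends on whatever we believe about X there. The route names and every probability below are hypothetical placeholders, not estimates from Bostrom or from the comment.

```python
def total_probability(route_probs, x_given_route, x_given_other):
    """P(X) by the law of total probability over listed routes plus a residual.

    route_probs:   hypothetical P(Y_i) for each listed route
    x_given_route: hypothetical P(X | Y_i) for each listed route
    x_given_other: P(X | none of the listed routes)
    """
    covered = sum(route_probs.values())
    if covered > 1 + 1e-9:
        raise ValueError("route probabilities must sum to at most 1")
    residual = max(0.0, 1.0 - covered)  # mass on "none of the above"
    listed = sum(route_probs[r] * x_given_route[r] for r in route_probs)
    return listed + residual * x_given_other


# Hypothetical numbers: every listed route strongly implies X ...
routes = {"AI": 0.3, "emulation": 0.1, "biological": 0.1, "BCI": 0.05, "organizations": 0.05}
strong = {r: 0.9 for r in routes}

# ... but 40% of the probability mass sits outside the listed routes.
print(total_probability(routes, strong, x_given_other=0.2))  # ≈ 0.62
print(total_probability(routes, strong, x_given_other=0.9))  # ≈ 0.9
```

Setting `x_given_other` equal to the per-route value recovers the purely deductive reading; the gap between the two results is how much the conclusion leans on the list of routes being the right way to carve up what actually happens.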

↑ comment by diegocaleiro · 2014-10-07T01:50:51.108Z

None, besides the ones with extremely low likelihood (being handed SI by the simulators of our universe, or aliens finding it first). However, this may be an artifact of Bostrom's construction. If you partition the space of possible progress in a way where one of the categories captures "none of the above", then it only seems as if the area has been searched.

comment by KatjaGrace · 2014-10-07T01:04:25.459Z

If a technology existed that could make your children 10 IQ points smarter, how willing do you think people would be to use it? (p42-3)

↑ comment by lukeprog · 2014-10-07T01:12:46.538Z

Shulman & Bostrom (2014) make a nice point about this:

The history of IVF... suggests that applications which were opposed in anticipation can rapidly become accepted when they become live options.

As table 2 in the paper shows, the American public generally opposed IVF until the first IVF baby was born, and then they were in favor of it.

As of 2004, only 28% of Americans approved of embryo selection for improving strength or intelligence, but that could change rapidly when the technology is available.

↑ comment by gwern · 2014-10-08T21:42:25.050Z

On the other hand, we could point to Down syndrome eugenics: while it's true that Down's has fallen a lot in America thanks to selective abortion, it's also true that Down's has not disappeared and the details make me pessimistic about any widespread use in America of embryo selection for relatively modest gains.

An interesting paper: "Decision Making Following a Prenatal Diagnosis of Down Syndrome: An Integrative Review", Choi et al 2012 (excerpts). To summarize:

- many people are, on principle, unwilling to abort based on a Down's diagnosis, and so simply do not get the test

- the people who do abort tend to be motivated to do so out of fear: fear that a Down's child will be too demanding and wreck their life.

Not out of concern for the child's reduced quality of life, because Down's syndrome is extremely expensive to society, because sufferers go senile in their 40s, because they're depriving a healthy child of the chance to live etc - but personal selfishness.

Add onto this:

- testing for Down's is relatively simple and easy

- most people see and endorse a strong asymmetry between 'healing the sick' and 'improving the healthy'

You can see this in the citation in Shulman & Bostrom 2014 to Kalfoglou et al., 2004: the questions about preventing disease get far more positive responses than those about enhancement, and you can see in the quotes that the grounds for this asymmetry are not simple analysis or cost-benefit calculations, but values & politics, and so are intractable.

- IVF is painful, difficult, and very expensive (see the comments in my sperm donation essay); as cryonics organizations know, the number of people who express intellectual support can be rather different from the number who actually participate

- the gain in income or accomplishment per IQ point is meaningful but highly probabilistic, delayed by decades, and accrues to the child or outsiders (through positive externalities), not the parent; while we might expect returns to IQ to increase in the future as the US economy 'polarizes', the polarization would have to be very extreme for potential parents to feel anything remotely like the active dread about their offspring's prospects that is felt by those who receive Down's diagnoses.

- reproductive choices are most influenced by the woman; women in Kalfoglou et al 2004 are generally against such engineering, so that 28% is misleading: what counts is not the male support in the ~33% range but the female support in the low ~23% range (Table 3.2, pg24), since they are the deciders

- reproductive choices are also influenced by education & income; in this, the same table shows that the wealthier and better educated (those who can most afford and understand it) are not keen on embryo selection: support drops by 10% from the poorest to the wealthiest, and 5% from the least to most educated

Down's is the easy case, and many people still refuse it. To engage in IVF for embryo selection for some relatively subtle gains... I can't say I see it happening on a mass scale. At best, it might be tacked onto existing IVF procedures, but the political/religious/moral concerns might block a lot of that. (Of course, a lot of people would want some level of selection if they were forced into IVF over reproductive problems, but - homo hypocritus - they don't want to admit to wanting a 'designer baby', to be seen acting towards getting one, or to be known to have one; so a lot will depend on how well fertility services can spin selection as a normal thing or as a way of preventing problems. Perhaps they could sell it as 'neurological defect prevention', or simply select without asking and count on people to quietly spread the word, the same way that people spread the word about how best to signal one's way into the Ivy Leagues or where the best schools are.)

For mass appeal, it needs to be dramatic and undeniable: a genius factory.

↑ comment by KatjaGrace · 2014-10-08T23:03:34.047Z

An interesting datapoint, thanks.

One big difference in favor of selection for intelligence relative to testing for Down syndrome is that at the point where people don't get a Down syndrome test, they have a fairly low probability of their child having the disease (something like 1/1000 while they are youngish), whereas selection for intelligence is likely to increase intelligence.

↑ comment by paulfchristiano · 2014-10-07T22:06:53.748Z

28% is a pretty large number. I expect that in more abstract framings such as "improving general well-being" you would see larger rates of approval already, and marketing would push technologies towards framings that people liked.

↑ comment by PhilGoetz · 2014-10-07T01:36:15.545Z

I'm used to Robin Hanson presenting near / far mode dichotomies as "near mode greedy and stupid, far mode rational". But perhaps far mode allows the slow machinery of reason to be brought to bear, and most people's reasoning about IVF and embryo selection is victim to irrational ideas about ethics. In such cases, near mode (IVF is now possible) could produce more "rational" decisions because it bypasses rationality, while the reasoning that would be done in far mode has faulty premises and performs worse than random.

↑ comment by KatjaGrace · 2014-10-07T03:00:06.181Z

Interesting, I always interpreted Robin as casting near in a positive light (realistic, sensible) and far more negatively (self-aggrandizing and delusional).

↑ comment by PhilGoetz · 2014-10-07T11:48:11.961Z

People in far mode say they will exercise more, eat better, get a new job, watch documentaries instead of Game of Thrones, read classic literature, etc., and we could call those far-sighted plans "rational". Near mode gives in to inertia and laziness.

↑ comment by Lumifer · 2014-10-07T15:21:08.241Z

People in far mode say they will exercise more, eat better, get a new job, watch documentaries instead of Game of Thrones, read classic literature, etc., and we could call those far-sighted plans "rational".

We could, but we really should call these plans lies for they intend to deceive -- either oneself to gain near-term contentment, or others to gain social status.

comment by KatjaGrace · 2014-10-07T01:02:46.793Z

Did you change your mind about anything as a result of this week's reading?

↑ comment by selylindi · 2014-10-13T06:24:12.147Z

Remember that effect where you read a newspaper and mostly trust what it says, at least until one of the stories is about a subject you have expertise in, and then you notice that it's completely full of errors? It makes it very difficult to trust the newspaper on any subject after that. I started Bostrom's book very skeptical of how well he would be handling the material, since it covers many different fields of expertise that he cannot hope to have mastered.

My personal field of expertise is BCI. I did my doctoral work in that field, 2006-2011. I endorse every word that Bostrom wrote on BCI in the book. And consequently, in the opposite of the newspaper effect, I dramatically raised my confidence that Bostrom has accurately characterized the subjects I'm more ignorant of.

↑ comment by Sebastian_Hagen · 2014-10-07T21:13:53.486Z

How close we are to making genetic enhancement work in a big way. I'm not fully convinced of the magnitudes of gains from iterated embryo selection as projected by Bostrom; but even being able to drag the average level of genetically-determined intelligence up close to the current maxima of the distribution would be immensely helpful, and it's informative that Bostrom suggests we'll have the means within a few decades.
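For intuition about where large projected gains from iterated embryo selection come from, here is a toy simulation under an idealized infinitesimal model. It is not Bostrom's model and uses none of his numbers: it measures genetic value in units of the additive genetic standard deviation, assumes breeding values can be read off embryos perfectly, and ignores inbreeding (repeatedly crossing selected siblings would in reality shrink the segregation variance), linkage, and the practical difficulty of deriving gametes from embryos in vitro. The function name and parameters are hypothetical.

```python
import random
import statistics


def iterated_embryo_selection(rounds, embryos_per_round, seed=0):
    """Toy iterated embryo selection under an idealized infinitesimal model.

    Genetic values are in units of the additive genetic SD (sigma_A = 1).
    Each round, an embryo's value is the mean of its two parents' values plus
    Mendelian segregation noise with variance sigma_A**2 / 2; the two best
    embryos become the parents for the next round. Returns the mean value of
    the selected pair after each round.
    """
    if embryos_per_round < 2:
        raise ValueError("need at least two embryos to pick a parent pair")
    rng = random.Random(seed)
    segregation_sd = 0.5 ** 0.5  # sqrt(sigma_A**2 / 2), held fixed (no inbreeding)
    parents = (0.0, 0.0)  # start from population-average parents
    history = []
    for _ in range(rounds):
        midparent = (parents[0] + parents[1]) / 2
        embryos = sorted(
            (midparent + rng.gauss(0.0, segregation_sd) for _ in range(embryos_per_round)),
            reverse=True,
        )
        parents = (embryos[0], embryos[1])  # keep the top two as the next parents
        history.append(statistics.mean(parents))
    return history


gains = iterated_embryo_selection(rounds=10, embryos_per_round=20, seed=1)
for generation, value in enumerate(gains, start=1):
    print(f"round {generation}: {value:.2f} SD")
```

With 20 embryos per round, each round gains a little over one additive standard deviation in this idealized setting, so the gains compound across rounds within what would be a single human generation. The simplifications listed above are exactly the ones that would erode that figure in practice.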

↑ comment by almostvoid · 2014-10-07T10:30:28.010Z

YES. AI under present knowledge systems won't deliver the promise of real live intelligence. And the author[s] get bogged down in computational details that delay and detract from greater efficiency in the cognitive field. Still, it's a rippa of a project. Given how hopeless humans are globally, machine logic might offer real-time solutions, such as fewer humans to start off with. That would solve heaps of other problems we have and are creating.