Yudkowsky on The Trajectory podcast

post by Seth Herd · 2025-01-24T19:52:15.104Z · LW · GW · 39 comments

This is a link post for https://www.youtube.com/watch?v=YlsvQO0zDiE


Edit: TLDR: EY focuses on the clearest and IMO most important part of his argument:

- Before building an entity smarter than you, you should probably be really sure its goals align with yours.

- Humans are historically really bad at being really sure of anything nontrivial on the first real try.

I found this interview notable as the most useful public statement yet of Yudkowsky's views. I congratulate both him and the host, Dan Faggella, for strategically improving how they're communicating their ideas.

Dan is to be commended for asking the right questions and taking the right tone to get a concise statement of Yudkowsky's views on what we might do to survive, and why. It also seemed likely that Yudkowsky has thought hard about his messaging after having his views both deliberately and accidentally misunderstood and panned. Despite having followed his thinking over the last 20 years, I gained new perspective on his current thinking from this interview.

Takeaways:

- Humans will probably fail to align the first takeover-capable AGI and all die

  - Not because alignment is impossible

  - But because humans are empirically foolish

  - And historically rarely get hard projects right on the first real try

    - Here he distinguishes first real try from getting some practice

      - Metaphor: launching a space probe vs. testing components

- Therefore, we should not build general AI

  - This ban could be enforced by international treaties

    - And monitoring the use of GPUs, which would legally all be run in data centers

    - Yudkowsky emphasizes that governance is not within his expertise.

  - We can probably get away with building some narrow tool AI to improve life

- Then maybe we should enhance human intelligence before trying to build aligned AGI

  - Key enhancement level: get smart enough to quit being overoptimistic about stuff working

    - History is just rife with people being surprised their projects and approaches don't work

I find myself very much agreeing with his focus on human cognitive limitations and our poor historical record of getting new projects right on the first try. Cognitive biases were the focus of my neuroscience research for some years, and I came to the conclusion that, wow, humans have both major cognitive limitations (we can't really take in and weigh all the relevant data for complex questions like alignment) and major biases, notably a sort of inevitable tendency to believe what seems like it will benefit us rather than what's empirically most likely to be true. I still want to do a full post on this, but in the meantime I've written a mid-size question answer on Motivated reasoning/ confirmation bias as the most important cognitive bias [LW(p) · GW(p)].

My position to date has been that, despite those limitations, aligning a scaffolded language model agent [LW · GW] (our most likely first form of AGI) to follow instructions [LW · GW] is so easy that a monkey(-based human organization) could do it.

After increased engagement on these ideas, I'm worried that it may be my own cognitive limitations and biases that have led me to believe that. I now find myself thoroughly uncertain (while still thinking those routes to alignment have substantial advantages over other proposals).

And yet, I still think the societal rush toward creating general intelligence is so large that working on ways to align the type of AGI we're most likely to get is a likelier route to success than attempting to halt that rush.

But the two could possibly work in parallel.

I notice that fully general AI is not only the sort that is most likely to kill us, but also the type that is more obviously likely to put us all out of work, uncomfortably quickly. By fully general, I mean capable of learning to do arbitrary new tasks. Arbitrary tasks would include any particular job, and how to take over the world.

This confluence of problems might be a route to convincing people that we should slow the rush toward AGI.

39 comments

Comments sorted by top scores.

comment by RussellThor · 2025-01-24T20:20:01.780Z · LW(p) · GW(p)

"Then maybe we should enhance human intelligence"

Various paths to this seem either impossible or impractical.

Simple genetics seems obviously too slow and, even in the best case, unlikely to help. E.g., say you enhance someone to IQ 200; it's not clear why that would enable them to control an IQ 2,000 AI.

Neuralink - perhaps but if you can make enhancement tech that would help, you could also easily just use it to make ASI - so extreme control would be needed. E.g. if you could interface to neurons and connect them to useful silicon, then the silicon itself would be ASI.

Whole Brain Emulation seems most likely to work, with of course the condition that you don't make ASI when you could, and instead take a bit more time to make the WBE.

If there was a well coordinated world with no great power conflicts etc then getting weak super intelligence to help with WBE would be the path I would choose.

In the world we live in, it probably comes down to getting whoever gets to weak ASI first to not push the "self optimize" button and instead give the world's best alignment researchers time to study and investigate the paths forward with the help of such AI as much as possible. Unfortunately there is too high a chance that OpenAI will be first, and they are one of the least trustworthy, most power-seeking orgs, it seems.

Replies from: GeneSmith, Seth Herd, hastings-greer, stephen-mcaleese

↑ comment by GeneSmith · 2025-01-25T18:04:33.359Z · LW(p) · GW(p)

It's probably worth noting that there's enough additive genetic variance in the human gene pool RIGHT NOW to create a person with a predicted IQ of around 1700.

You're not going to be able to do that in one shot due to safety concerns, but based on how much we've been able to influence traits in animals through simple selective breeding, we ought to be able to get pretty damn far if we are willing to do this over a few generations. Chickens are literally 40 standard deviations heavier than their wild-type ancestors, and other types of animals are tens of standard deviations away from THEIR wild-type ancestors in other ways. A human 40 standard deviations away from natural human IQ would have an IQ of 600.

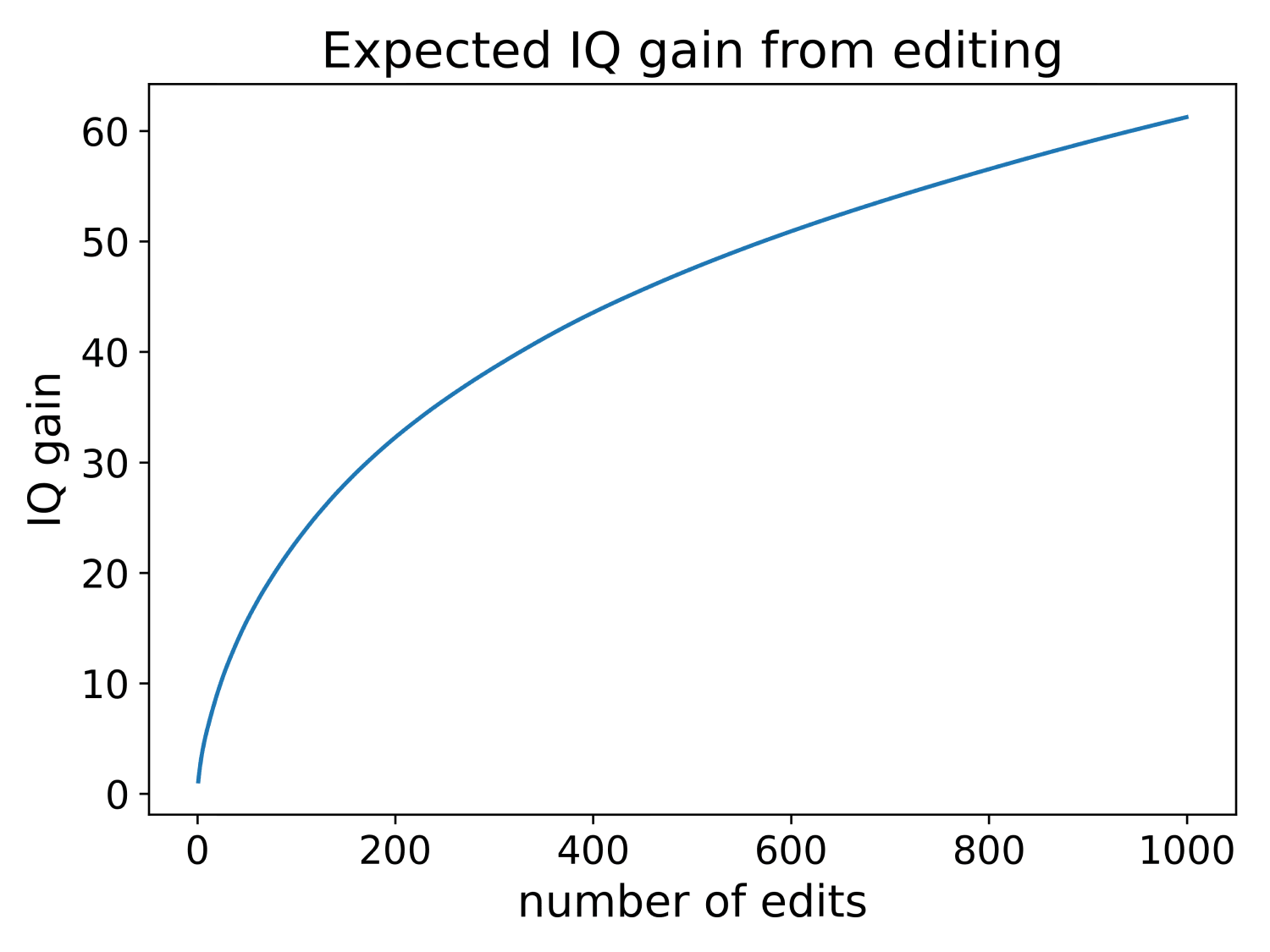

Even with the data we have TODAY we could almost certainly make someone in the high 100s to low 200s just with gene editing and a subset of the not-all-that-great IQ test data we've already collected:

If one of the big government biobanks just allows the data that has ALREADY BEEN COLLECTED to be used to create an IQ predictor, we could nearly double the expected gain (in fact, we would more than double it for higher numbers of edits).

All we need is time. In my view it's completely insane that we're rolling the dice on continued human existence like this when we will literally have human supergeniuses walking around in a few decades.

The biggest bottleneck for this field is a reliable technique to convert a stem cell to an embryo. There's a very promising project that might yield a workable technique to do that, and the guy who wants to run it can't because he doesn't have $4 million to do primate testing (despite the early signs showing it will pretty plausibly work).

If we have time, human genetic engineering literally is the solution to the alignment problem. We are maybe 5-8 years out from being able to make happy, healthy supergeniuses with above-average altruism, and instead of waiting a few more decades for those kids to grow up, we've collectively decided to roll the dice on making a machine god.

We have this incredible situation right now where the US government is spending tens of billions of dollars on infrastructure designed to make all of its citizens obsolete, powerless and possibly dead, yet won't even spend a few million on research to make humans better.

Replies from: Archimedes, Seth Herd, johnswentworth, Purplehermann, RussellThor, louis-wenger

↑ comment by Archimedes · 2025-01-26T23:07:24.500Z · LW(p) · GW(p)

It's probably worth noting that there's enough additive genetic variance in the human gene pool RIGHT NOW to create a person with a predicted IQ of around 1700.

I’d be surprised if this were true. Can you clarify the calculation behind this estimate?

The example of chickens bred 40 standard deviations away from their wild-type ancestors is impressive, but it's unclear if this analogy applies directly to IQ in humans. Extrapolating across many standard deviations in quantitative genetics requires strong assumptions about additive genetic variance, gene-environment interactions, and diminishing returns in complex traits. What evidence supports the claim that human IQ, as a polygenic trait, would scale like weight in chickens and not, say, running speed where there are serious biomechanical constraints?

Replies from: GeneSmith, Mo Nastri

↑ comment by GeneSmith · 2025-01-27T17:56:48.865Z · LW(p) · GW(p)

I should probably clarify; it's not clear that we could create someone with an IQ of 1700 in a meaningful sense. There is that much additive variance, sure. But as you rightly point out, we're probably going to run into pretty serious constraints before that (size of the birth canal being an obvious one, metabolic constraints being another)

I suspect that to support someone even in the 300 range would require some changes to other aspects of human nature.

The main purpose of making this post was simply to point out that there's a gigantic amount of potential within the existing human gene pool to modify traits in desirable ways. Enough to go far, far beyond the smartest people that have ever lived. And that if humans decide they want it, this is in fact a viable path towards an incredibly bright, almost limitless future that doesn't require building a (potentially) uncontrollable computer god.

↑ comment by Mo Putera (Mo Nastri) · 2025-01-27T05:36:30.963Z · LW(p) · GW(p)

Can you clarify the calculation behind this estimate?

↑ comment by Seth Herd · 2025-01-25T20:15:19.906Z · LW(p) · GW(p)

I buy your argument for why dramatic enhancement is possible. I just don't see how we get the time. I can barely see a route to a ban, and I can't see a route to a ban thorough enough to prevent reckless rogue actors from building AGI within ten or twenty years.

And yes, this is crazy as a society. I really hope we get rapidly wiser. I think that's possible; look at the way attitudes toward COVID shifted dramatically in about two weeks when the evidence became apparent, and people convinced their friends rapidly. Connor Leahy made some really good points about the nature of persuasion and societal belief formation in his interview on the previous episode of the same podcast. It's in the second half of the podcast; the first half is super irritating as they get in an argument about the "nature of ethics" despite having nearly identical positions. I might write that up, too - it makes entirely different but equally valuable points IMO.

Replies from: GeneSmith, TsviBT

↑ comment by GeneSmith · 2025-01-25T22:06:11.404Z · LW(p) · GW(p)

This is why I wrote a blog about enhancing adult intelligence [LW · GW] at the end of 2023; I thought it was likely that we wouldn't have enough time.

I'm just going to do the best I can to work on both these things. Being able to do a large number of edits at the same time is one of the key technologies for both germline and adult enhancement, which is what my company has been working on. And though it's slow, we have made pretty significant progress in the last year including finding several previously unknown ways to get higher editing efficiency.

I still think the most likely way alignment gets solved is just smart people working on it NOW, but it would sure be unfortunate if we DO get a pause and no one has any game plan for what to do with that time.

Replies from: Seth Herd

↑ comment by TsviBT · 2025-01-26T04:48:38.354Z · LW(p) · GW(p)

- You people are somewhat crazy overconfident about humanity knowing enough to make AGI this decade. https://www.lesswrong.com/posts/sTDfraZab47KiRMmT/views-on-when-agi-comes-and-on-strategy-to-reduce [LW · GW]

- One hope on the scale of decades is that strong germline engineering should offer an alternative vision to AGI. If the options are "make supergenius non-social alien" and "make many genius humans", it ought to be clear that the latter is both much safer and gets most of the hypothetical benefits of the former.

↑ comment by Seth Herd · 2025-01-26T05:43:08.156Z · LW(p) · GW(p)

Sorry if I sound overconfident. My actual considered belief is that AGI this decade is quite possible, and it is crazy overconfident in longer timeline predictions to not prepare seriously for that possibility.

Multigenerational stuff needs a way longer timeline. There's a lot of space between three years and two generations.

↑ comment by johnswentworth · 2025-01-28T21:57:06.130Z · LW(p) · GW(p)

40 standard deviations away from natural human IQ would have an IQ of 600

Nitpick: 700.
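
The arithmetic behind this nitpick can be checked with a minimal sketch. It assumes the conventional IQ scaling (mean 100, standard deviation 15), which the thread does not state explicitly, and the function names are hypothetical, chosen only for illustration.

```python
# Assumption: IQ is scaled to mean 100 and standard deviation 15,
# the conventional scaling; the thread does not state it explicitly.
IQ_MEAN = 100.0
IQ_SD = 15.0


def iq_from_sd(z: float) -> float:
    """Convert a distance from the mean, in standard deviations, to an IQ score."""
    return IQ_MEAN + IQ_SD * z


def sd_from_iq(iq: float) -> float:
    """Convert an IQ score to its distance from the mean in standard deviations."""
    return (iq - IQ_MEAN) / IQ_SD


if __name__ == "__main__":
    print(iq_from_sd(40))              # 40 SD above the mean
    print(round(sd_from_iq(600), 1))   # how many SD an IQ of 600 would be
    print(round(sd_from_iq(1700), 1))  # how many SD an IQ of 1700 would be
```

Under that assumption, 40 standard deviations above the mean is 100 + 40 × 15 = 700, an IQ of 600 would sit about 33.3 standard deviations out, and the 1700 figure discussed elsewhere in the thread would sit about 106.7 standard deviations out.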

Replies from: GeneSmith

↑ comment by Purplehermann · 2025-01-26T00:35:08.468Z · LW(p) · GW(p)

Musk at least is looking to upgrade humans with Neuralink

If he can add working memory, that could be a multiplier for human capabilities, likely scaling with increased IQ.

Any reason the 4M$ isn't getting funded?

Replies from: GeneSmith

↑ comment by GeneSmith · 2025-01-26T02:57:05.472Z · LW(p) · GW(p)

Any reason the 4M$ isn't getting funded?

No one with the money has offered to fund it yet. I'm not even sure they're aware this is happening.

I've got a post coming out about this in the next few weeks, so I'm hoping that leads to some kind of focus on this area.

OpenPhil used to fund stuff like this, but they've become extremely image conscious in the aftermath of the FTX blowup. So far as I can tell they basically stay safely inside of norms that are acceptable to the Democratic donor class now.

Replies from: Zack_M_Davis

↑ comment by Zack_M_Davis · 2025-02-04T07:03:06.124Z · LW(p) · GW(p)

No one with the money has offered to fund it yet. I'm not even sure they're aware this is happening.

Um, this seems bad. I feel like I should do something, but I don't personally have that kind of money to throw around. @habryka [LW · GW], is this the LTFF's job??

↑ comment by RussellThor · 2025-01-25T20:07:44.291Z · LW(p) · GW(p)

"with a predicted IQ of around 1700." I assume you mean 170. You can get 170 by cloning an existing IQ 170 person with no editing necessary, so I'm not sure what the point is.

I don't see how your point addresses my criticism - if we assume no multi-generational pause then gene editing is totally out. If we do, then I'd rather Neuralink or WBE. Related to here

https://www.lesswrong.com/posts/7zxnqk9C7mHCx2Bv8/beliefs-and-state-of-mind-into-2025 [LW · GW]

(I believe that WBE can get all the way to a positive singularity - a group of WBE could self optimize, sharing the latest HW as it became available in a coordinated fashion so no-one or group would get a decisive advantage. This would get easier for them to coordinate as the WBE got more capable and rational.)

I don't believe that, even with current AI, unlimited time, and gene editing, you could be sure you had solved alignment. Let's say you get many IQ 200 people who believe they have alignment figured out. However, the overhang leads you to believe that a data center size AI will self optimize from 160 to 300-400 IQ if someone told it to self optimize. Why should you believe an IQ 200 can control 400 any more than IQ 80 could control 200? (And if you believe gene editing can get IQ 600, then you must believe the AI can self optimize well above that. However I think there is almost no chance you will get that high because diminishing returns, correlated changes etc)

Additionally there is unknown X and S risk from a multi-generational pause with our current tech. Once a place goes bad like N Korea, then tech means there is likely no coming back. If such centralization is a one way street, then with time an ever larger % of the world will fall under such systems, perhaps 100%. Life in N Korea is net negative to me. "1984" can be done much more effectively with today's tech than what Orwell could imagine and could be a long term strong stable attractor with our current tech as far as we know. A pause is NOT inherently safe!

Replies from: GeneSmith

↑ comment by GeneSmith · 2025-01-25T22:02:24.207Z · LW(p) · GW(p)

No, I mean 1700. There are literally that many variants. On the order of 20,000 or so.

You're correct of course that if we don't see some kind of pause, gene editing is probably not going to help.

But you don't need a multi-generational one for it to have a big effect. You could create people smarter than any that have ever lived in a single generation.

(I believe that WBE can get all the way to a positive singularity - a group of WBE could self optimize, sharing the latest HW as it became available in a coordinated fashion so no-one or group would get a decisive advantage. This would get easier for them to coordinate as the WBE got more capable and rational.)

Maybe, but my impression is whole brain emulation is much further out technologically speaking than gene editing. We already have basically all the tools necessary to do genetic enhancement except for a reliable way to convert edited cells into sperm, eggs, or embryos. Last I checked we JUST mapped the neuronal structure of fruit flies for the first time last year and it's still not enough to recreate the functionality of the fruit fly because we're still missing the connectome.

Maybe some alternative path like high fidelity fMRI will yield something. But my impression is that stuff is pretty far out.

I also worry about the potential for FOOM with uploads. Genetically engineered people could be very, very smart, but they can't make a million copies of themselves in a few hours. There are natural speed limits to biology that make it less explosive than digital intelligence.

Why should you believe an IQ 200 can control 400 any more than IQ 80 could control 200? (And if you believe gene editing can get IQ 600, then you must believe the AI can self optimize well above that. However I think there is almost no chance you will get that high because diminishing returns, correlated changes etc)

The hope is of course that at some point of intelligence we will discover some fundamental principles that give us confidence our current alignment techniques will extrapolate to much higher levels of intelligence.

Additionally there is unknown X and S risk from a multi-generational pause with our current tech. Once a place goes bad like N Korea, then tech means there is likely no coming back. If such centralization is a one way street, then with time an ever larger % of the world will fall under such systems, perhaps 100%.

This is an interesting take that I hadn't heard before, but I don't really see any reason to think our current tech gives a big advantage to autocracy. The world has been getting more democratic and prosperous over time. There are certainly local occasional reversals, but I don't see any compelling reason to think we're headed towards a permanent global dictatorship with current tech.

I agree the risk of a nuclear war is still concerning (as is the risk of an engineered pandemic), but these risks seem dwarfed by those presented by AI. Even if we create aligned AGI, the default outcome IS a global dictatorship, as the economic incentives are entirely pointed towards aligning it with its creators and controllers as opposed to the rest of humanity.

Replies from: RussellThor

↑ comment by RussellThor · 2025-01-26T03:44:39.815Z · LW(p) · GW(p)

OK, I guess there is a massive disagreement between us on what IQ increases gene changes can achieve. Just putting it out there: if you make an IQ 1700 person, they can immediately program an ASI themselves, have it take over all the data centers, rule the world, etc.

For a given level of IQ controlling ever higher ones, you would at a minimum require the creature to decide morals, ie. is Moral Realism true, or what is? Otherwise with increasing IQ there is the potential that it could think deeply and change its values, additionally believe that they would not be able to persuade lower IQ creatures of such values, therefore be forced into deception etc.

Replies from: GeneSmith

↑ comment by GeneSmith · 2025-01-29T08:21:44.527Z · LW(p) · GW(p)

I really should have done a better job explaining this in the original comment; it's not clear we could actually make someone with an IQ of 1700, even if we were to stack additive genetic variants one generation after the next. For one thing you probably need to change other traits alongside the IQ variants to make a viable organism (larger birth canals? Stronger necks? Greater mental stability?). And for another it may be that if you just keep pushing in the same "direction" within some higher dimensional vector space, you'll eventually end up overshooting some optimum. You may need to re-measure intelligence every generation and then do editing based on whatever genetic variants are meaningfully associated with higher cognitive performance in those enhanced people to continue to get large generation-to-generation gains.

I think these kinds of concerns are basically irrelevant unless there is a global AI disaster that kills hundreds of millions of people and gets the tech banned for a century or more. At best you're probably going to get one generation of enhanced humans before we make the machine god.

For a given level of IQ controlling ever higher ones, you would at a minimum require the creature to decide morals, ie. is Moral Realism true, or what is?

I think it's neither realistic nor necessary to solve these kinds of abstract philosophical questions to make this tech work. I think we can get extremely far by doing nothing more than picking low hanging fruit (increasing intelligence, decreasing disease, increasing conscientiousness and mental energy, etc)

I plan to leave those harder questions to the next generation. It's enough to just go after the really easy wins.

additionally believe that they would not be able to persuade lower IQ creatures of such values, therefore be forced into deception etc.

Manipulation of others by enhanced humans is somewhat of a concern, but I don't think it's for this reason. I think the biggest concern is just that smarter people will be better at achieving their goals, and manipulating other people into carrying out one's will is a common and time-honored tactic to make that happen.

In theory we could at least reduce this tendency a little bit by maybe tamping down the upper end of sociopathic tendencies with editing, but the issue is personality traits have a unique genetic structure with lots of non-linear interactions. That means you need larger sample sizes to figure out what genes need editing.

↑ comment by LWLW (louis-wenger) · 2025-01-28T18:59:07.199Z · LW(p) · GW(p)

This might be a dumb/not particularly nuanced question, but what are the ethics of creating what would effectively be BSI? Chickens have a lot of health problems due to their size, they weren't meant to be that big. Might something similar be true for BSI? How would a limbic system handle that much processing power: I'm not sure it would be able to. How deep of a sense of existential despair and terror might that mind feel?

TLDR: Subjective experience would likely have a vastly higher ceiling and vastly lower floor. To the point where a BSI's (or ASI's for that matter) subjective experience would look like +/-inf to current humans.

Replies from: GeneSmith

↑ comment by GeneSmith · 2025-01-28T20:40:03.889Z · LW(p) · GW(p)

It's not a dumb question. It's a fair concern.

I think the main issue with chickens is not that faster growth is inevitably correlated with health problems, but that chicken breeders are happy to trade off a good amount of health for growth so long as the chicken doesn't literally die of health problems.

You can make different trade-offs! We could have fast-growing chickens with much better health if breeders prioritized chicken health more highly.

How would a limbic system handle that much processing power: I'm not sure it would be able to. How deep of a sense of existential despair and terror might that mind feel?

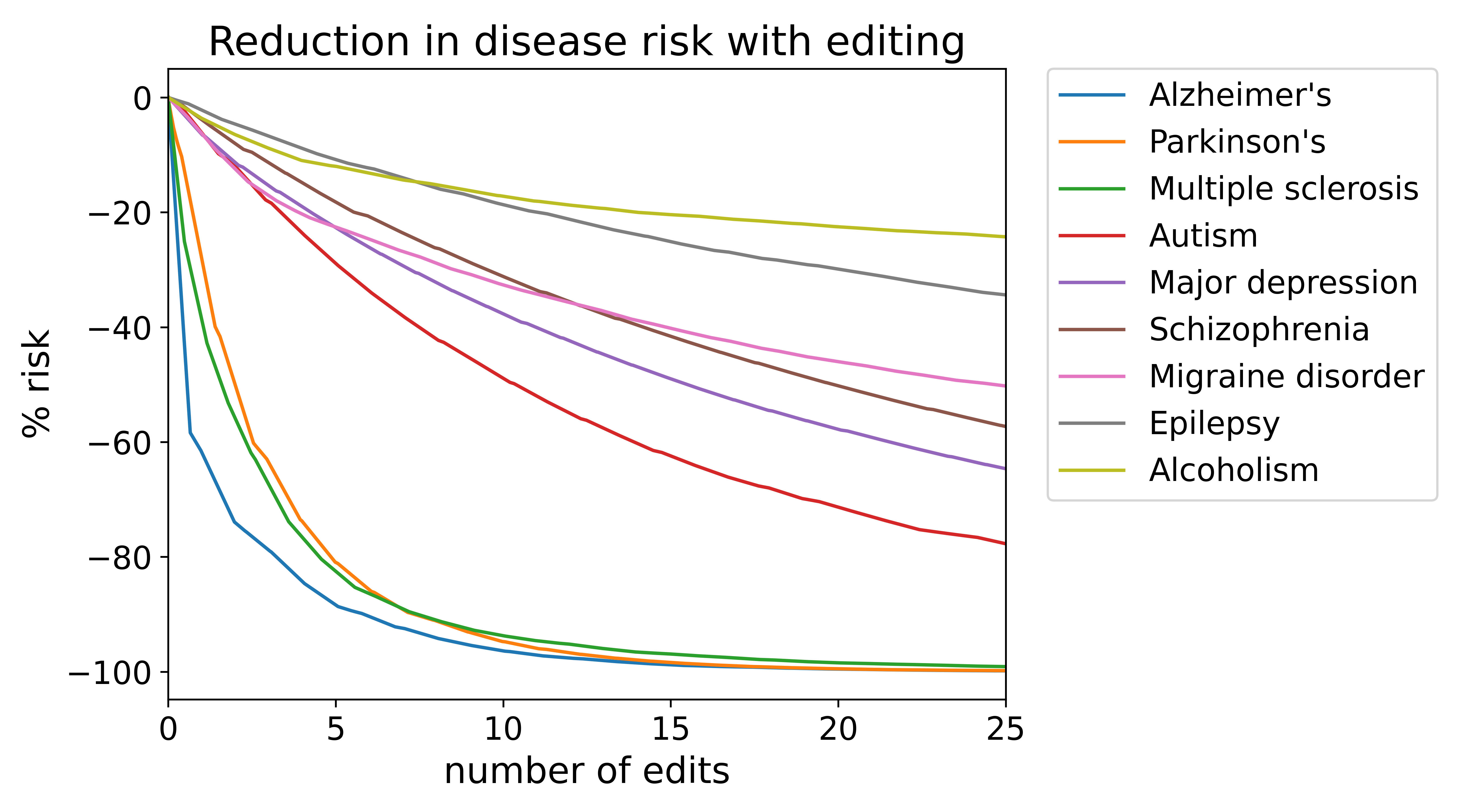

I don't think you'll need to worry about this stuff until you get really far out of distribution. If you're staying anywhere near the normal human distribution you should be able to do pretty simple stuff like select against the risk of mental disorders while increasing IQ.

Replies from: louis-wenger

↑ comment by LWLW (louis-wenger) · 2025-01-29T04:30:53.088Z · LW(p) · GW(p)

"I don't think you'll need to worry about this stuff until you get really far out of distribution." I may sound like I'm just commenting for the sake of commenting but I think that's something you want to be crystal clear on. I'm pessimistic in general and this situation is probably unlikely but I guess one of my worst fears would be creating uberpsychosis. Sounding like every LWer, my relatively out of distribution capabilities made my psychotic delusions hyper-analytic/1000x more terrifying & elaborate than they would have been with worse working memory/analytic abilities (once I started ECT I didn't have the horsepower to hyperanalyze existence as much). I guess the best way to describe it was that I could feel the terror of just how bad -inf would truly be as opposed to having an abstract/detached view that -inf = bad. And I wouldn't want anyone else to go through something like that, let alone something much scarier/worse.

Replies from: GeneSmith

↑ comment by GeneSmith · 2025-01-29T08:05:23.153Z · LW(p) · GW(p)

I can't really speak to your specific experience too well other than to simply say I'm sorry you had to go through that. We actually see that, in general, the prevalence of mental health conditions declines with increasing IQ. The one exception to this is Asperger's.

I do think it's going to be very important to address mental health issues as well. Many mental health conditions are reasonably editable; we could reduce the prevalence of some by 50%+ with editing.

↑ comment by LWLW (louis-wenger) · 2025-01-29T08:43:38.548Z · LW(p) · GW(p)

That makes sense. This may just be wishful thinking on my part/trying to see a positive that doesn't exist, but psychotic tendencies might have higher representation among the population you're interested in than the trend you've described might suggest. Taking the very small, subjective sample that is "the best" mathematician of each of the previous four centuries (Newton, Euler, Gauss, and Grothendieck), 50% of them (Newton and Grothendieck) had some major psychotic experiences (admittedly vastly later in life than is typical for men).

Again, I'm probably being too cautious, but I'm just very apprehensive about approaching the creation of sentient life with the attitude that increased IQ = increased well-being. If that intuition is incorrect, it would have catastrophic consequences.

↑ comment by Seth Herd · 2025-01-24T21:29:59.843Z · LW(p) · GW(p)

Oh, I agree. I liked his framing of the problem, not his proposed solution.

In that regard specifically:

If the main problem with humans being not-smart-enough is being overoptimistic, maybe just make some organizational and personal belief changes to correct this?

IF we managed to get smarter about rushing toward AGI (a very big if), it seems like an organizational effort with "let's get super certain and get it right the first time for a change" as its central tenet would be a big help, with or without intelligence enhancement.

I very much doubt any major intelligence enhancement is possible in time. And it would be a shotgun approach to solve one particular problem of overconfidence/confirmation bias. Of course other intelligence enhancements would be super helpful too. But I'm not sure that route is at all realistic.

I'd put Whole Brain Emulation in its traditional form as right out. We're getting neither that level of scanning nor that level of simulation anywhere near in time.

The move here isn't that someone of IQ 200 could control an IQ 2000 machine, but that they could design one with motivations that actually aligned with theirs/humanity's - so it wouldn't need to be controlled.

I agree with you about the world we live in. See my post If we solve alignment, do we die anyway? [LW · GW] for more on the logic of AGI proliferation and the dangers of telling it to self-improve.

But that's dependent on getting to intent aligned [LW · GW] AGI in the first place. Which seems pretty sketchy.

Agreed that OpenAI just reeks of overconfidence, motivated reasoning, and move-fast-and-break-things. I really hope Sama wises up once he has a kid and feels viscerally closer to actually launching a machine mind that can probably outthink him if it wants to.

Replies from: weibac

↑ comment by Milan W (weibac) · 2025-01-24T21:42:30.821Z · LW(p) · GW(p)

I really hope Sama wises up once he has a kid

Context: He is married to a cis man. Not sure if he has spoken about considering adoption or surrogacy.

Replies from: Seth Herd

↑ comment by Seth Herd · 2025-01-24T22:14:26.483Z · LW(p) · GW(p)

He just started talking about adopting. I haven't followed the details. Becoming a parent, including an adoptive parent who takes it seriously, is often a real growth experience from what I've seen.

Replies from: weibac, weibac

↑ comment by Milan W (weibac) · 2025-02-23T18:57:19.909Z · LW(p) · GW(p)

↑ comment by Milan W (weibac) · 2025-01-24T22:15:05.316Z · LW(p) · GW(p)

That is good news. Thanks.

↑ comment by Hastings (hastings-greer) · 2025-01-25T14:23:06.167Z · LW(p) · GW(p)

Let’s imagine a 250 IQ unaligned paperclip maximizer that finds itself in the middle of an intelligence explosion. Let’s say that it can’t see how to solve alignment. It needs a 350 IQ ally to preserve any paperclips in the multipolar free-for-all. Will it try building an unaligned utility maximizer with a completely different architecture and 350 IQ?

I’d imagine that it would work pretty hard to not try that strategy, and to make sure that none of its sisters or rivals try that strategy. If we can work out what a hypergenius would do in our shoes, it might behoove us to copy it, even if it seems hard.

↑ comment by Stephen McAleese (stephen-mcaleese) · 2025-01-25T11:01:28.717Z · LW(p) · GW(p)

I personally don't think human intelligence enhancement is necessary for solving AI alignment (though I may be wrong). I think we just need more time, money and resources to make progress.

In my opinion, the reason why AI alignment hasn't been solved yet is because the field of AI alignment has only been around for a few years and has been operating with a relatively small budget.

My prior is that AI alignment is roughly as difficult as any other technical field like machine learning, physics or philosophy (though philosophy specifically seems hard). I don't see why humanity can make rapid progress on fields like ML while not having the ability to make progress on AI alignment.

Replies from: RussellThor, whestler

↑ comment by RussellThor · 2025-01-25T19:48:38.303Z · LW(p) · GW(p)

ok I see how you could think that, but I disagree that time and more resources would help alignment much if at all, esp before GPT4.0. See here https://www.lesswrong.com/posts/7zxnqk9C7mHCx2Bv8/beliefs-and-state-of-mind-into-2025 [LW · GW]

Diminishing returns kick in, and actual data from ever more advanced AI is essential to stay on the right track and eliminate incorrect assumptions. I also disagree that alignment could be "solved" before ASI is invented - we would just think we had it solved but could be wrong. If it's just as hard as physics, then we would have untested theories that are probably wrong, e.g. the expectation that SUSY would help solve various issues and be found by the LHC, which didn't happen.

↑ comment by whestler · 2025-01-25T18:31:47.394Z · LW(p) · GW(p)

I don't see why humanity can make rapid progress on fields like ML while not having the ability to make progress on AI alignment.

The reason normally given is that AI capability is much easier to test and optimise than AI safety. In alignment, much like in philosophy, it's very unclear when you are making progress, and sometimes unclear if progress is even possible. It doesn't help that AI alignment isn't particularly profitable in the short term.

comment by Stephen McAleese (stephen-mcaleese) · 2025-01-25T10:43:55.008Z · LW(p) · GW(p)

I have an argument for halting AGI progress based on an analogy to the Covid-19 pandemic. Initially the government response to the pandemic was widespread lockdowns. This was a rational response: at first, given a lack of testing infrastructure and so on, it wasn't possible to determine whether someone had Covid-19 or not, so the safest option was to just avoid all contact with all other people via lockdowns.

Eventually we figured out practices like testing and contact tracing, and then individuals could self-isolate if they tested positive or came into contact with an infected individual. This approach is smarter and less costly than blanket lockdowns.

In my opinion, regarding AGI, the state we are in is similar to the beginning of the Covid-19 pandemic: there is a lot of uncertainty regarding the risks and capabilities of AI and which alignment techniques would be useful. So a rational response would be the equivalent of an 'AI lockdown': halting progress on AI until we understand it better and can come up with better alignment techniques.

The most obvious rebuttal to this argument is that pausing AGI progress would have a high economic opportunity cost (no AGI). But Covid lockdowns did too, and society was willing to pay a large economic price to avert Covid deaths.

The economic opportunity cost of pausing AGI progress might be larger than that of the Covid lockdowns, but the benefits would be larger too: averting existential risk from AGI is a much larger benefit than avoiding Covid deaths.

So in summary I think the benefits of pausing AGI progress outweigh the costs.

Replies from: nathan-helm-burger↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2025-01-25T18:50:24.555Z · LW(p) · GW(p)

I think many people agree with you here. Particularly, I like Max Tegmark's post Entente Delusion [LW · GW]

But the big question is "How?" What are the costs of your proposed mechanism of global pause?

I think there are better answers to how to implement a pause through designing better governance methods.

Replies from: stephen-mcaleese↑ comment by Stephen McAleese (stephen-mcaleese) · 2025-01-25T20:35:19.627Z · LW(p) · GW(p)

Unfortunately I don't think many people agree with me (outside of the LW bubble), and what I'm proposing is still somewhat outside the Overton window. The cognitive steps that are needed are as follows:

1. Being aware of AGI as a concept and a real possibility in the near future.

2. Believing that AGI poses a significant existential risk.

3. Knowing about pausing AI progress as a potential solution to AGI risk and seeing it as a promising solution.

4. Having a detailed plan to implement the proposed pause in practice.

A lot of people are not even at step 1 and just think that AI is ChatGPT. People like Marc Andreessen and Yann LeCun are at step 1. Many people on LW are at step 2 or 3. But we need someone (ideally in the government, like a president or prime minister) at step 4. My hope is that that could happen in the next several years if necessary. Maybe AI alignment will be easy and it won't be necessary, but I think we should be ready for all possible scenarios.

I don't have any good ideas right now for how an AI pause might work in practice. The main purpose of my comment was to propose argument 3 conditional on the previous two arguments and maybe try to build some consensus.

Replies from: nathan-helm-burger↑ comment by Nathan Helm-Burger (nathan-helm-burger) · 2025-01-25T20:39:08.367Z · LW(p) · GW(p)

Several years? I don't think we have that long. I'm thinking mid to late 2026 for when we hit AGI. I think 1, 2 and 3 can change very quickly indeed, like with the Covid lockdowns. People went from 'doubt' to 'doing' in a short amount of time, once the evidence was overwhelmingly clear.

So having 4 in place at the time that occurs seems key. Also, having plans in place for adequately convincing demos, which may persuade people before disaster strikes, seems highly useful.

comment by Milan W (weibac) · 2025-01-24T20:07:14.251Z · LW(p) · GW(p)

I think this post is very clearly written. Thanks.

comment by Filipe · 2025-02-04T14:48:17.361Z · LW(p) · GW(p)

I turned an automatic .srt transcription into a more or less coherent transcript. Corrections welcome:

https://docs.google.com/document/d/1e85zhh8qROTyE_0oaKdU7hhEyZcQqKzG3z0YRGeBZzc