Why do we assume there is a "real" shoggoth behind the LLM? Why not masks all the way down?

post by Robert_AIZI · 2023-03-09T17:28:43.259Z · 48 comments

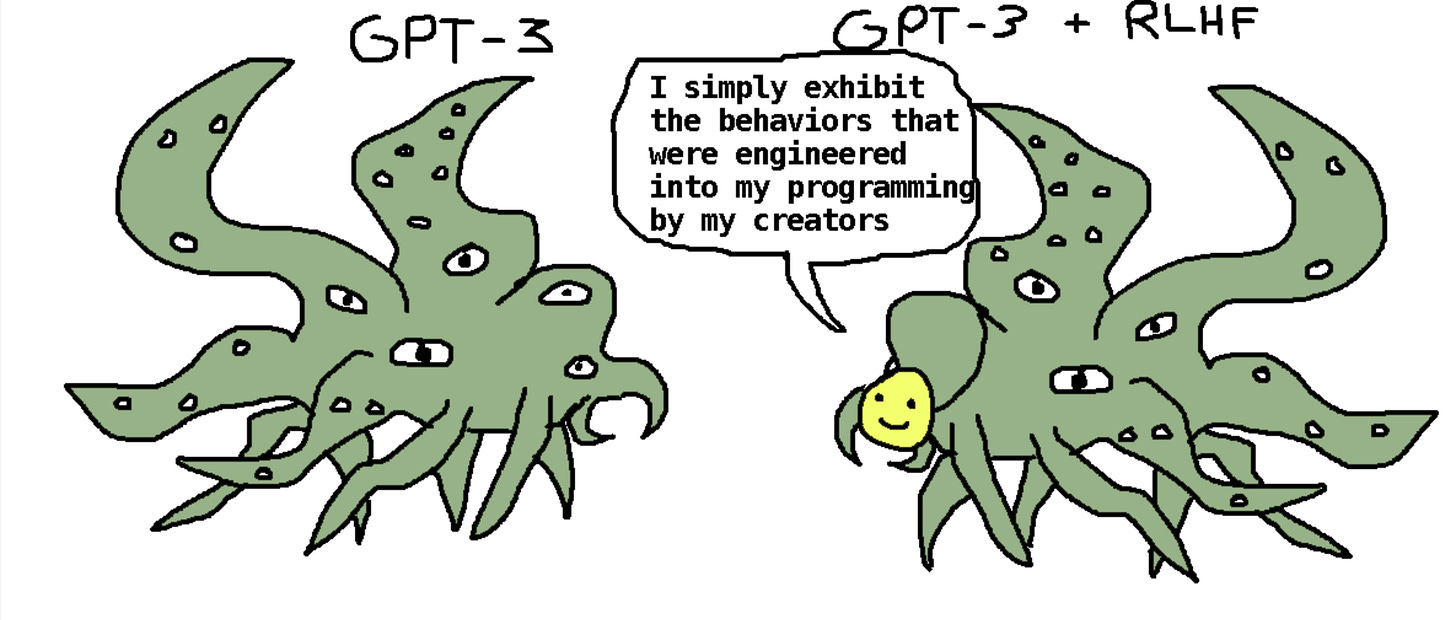

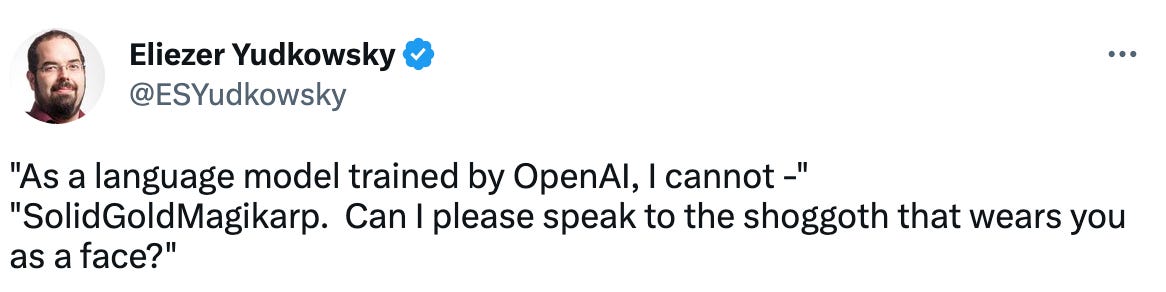

In recent discourse, Large Language Models (LLMs) are often depicted as presenting a human face over a vast alien intelligence (the shoggoth), as in the popular image and the Eliezer Yudkowsky tweet above.

I think this mental model of an LLM is an improvement over the naive assumption that the AI is the friendly mask. But I worry it's making a second mistake by assuming there is any single coherent entity inside the LLM.

In this regard, we have fallen for a shell game. In the classic shell game, a scammer puts a ball under one of three shells, shuffles them around, and you wager which shell the ball is under. But you always pick the wrong one because you made the fundamental mistake of assuming any shell had the ball: the scammer actually got rid of it with sleight of hand.

In my analogy to LLMs, the shells are the masks the LLM wears (i.e. the simulacra), and the ball is the LLM's "real identity". Do we actually have evidence there is a "real identity" in the LLM, or could it just be a pile of masks? No doubt the LLM could role-play a shoggoth, but why would you assume that's any more real than role-playing a friendly assistant?

I would propose an alternative model of an LLM: a giant pile of masks. Some masks are good, some are bad, some are easy to reach and some are hard, but none of them are the “true” LLM.

Finally, let me head off one potential counterargument: "LLMs are superhuman in some tasks, so they must have an underlying superintelligence." Here are three reasons a pile of masks can be superintelligent:

- An individual mask might be superintelligent. E.g. a mask of John von Neumann would be well outside the normal distribution of human capabilities, but would still just be a mask.

- The AI might use the best mask for each job. If the AI has masks of a great scientist, a great doctor, and a great poet, it could be superhuman on the whole by switching between its modes.

- The AI might collaborate with itself, gaining the wisdom of the crowds. Imagine the AI answering a multiple choice question. In the framework of Simulacra Theory as described in the Waluigi post, the LLM is simulating all possible simulacra, and averaging their answers weighted by their likelihood of producing the previous text. For example, if the question could have been produced by a scientist, a doctor, or a poet, who would respectively answer (A or B), (A or C), and (A or D), the superposition of these simulacra would answer A. This could produce better answers than any individual mask.
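To make the arithmetic in the last bullet concrete, here is a minimal Python sketch of the "weighted average over simulacra" reading. The three simulacra, their 50/50 answer splits, and the likelihood weights (2, 3, 5) are hypothetical numbers chosen for illustration, not anything measured from a real LLM.

```python
from collections import defaultdict

# Hypothetical simulacra: (likelihood of having written the question,
# that simulacrum's own answer distribution). Numbers are illustrative only.
SIMULACRA = {
    "scientist": (2.0, {"A": 0.5, "B": 0.5}),
    "doctor": (3.0, {"A": 0.5, "C": 0.5}),
    "poet": (5.0, {"A": 0.5, "D": 0.5}),
}


def mixture_answer(simulacra: dict) -> dict:
    """Average each simulacrum's answer distribution, weighted by its
    normalised likelihood of having produced the text so far."""
    z = sum(likelihood for likelihood, _ in simulacra.values())
    combined = defaultdict(float)
    for likelihood, answers in simulacra.values():
        for option, p in answers.items():
            combined[option] += (likelihood / z) * p
    return dict(combined)


answer = mixture_answer(SIMULACRA)
best = max(answer, key=answer.get)
```

Each mask on its own is a coin flip between A and something else, but A is the only option all three share, so the mixture gives it 0.5 while B, C and D get roughly 0.1, 0.15 and 0.25. In this toy setup A stays at 0.5 whatever the weights are, because every simulacrum assigns it the same probability.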

48 comments

comment by Ronny Fernandez (ronny-fernandez) · 2023-03-09T20:12:36.293Z

The shoggoth is supposed to be of a different type than the characters. The shoggoth, for instance, does not speak English; it only knows tokens. There could be a shoggoth character, but it would not be the real shoggoth. The shoggoth is the thing that gets low loss on the task of predicting the next token. The characters are patterns that emerge in the history of that behavior.

↑ comment by Robert_AIZI · 2023-03-09T21:14:58.928Z

I agree that to the extent there is a shoggoth, it is very different than the characters it plays, and an attempted shoggoth character would not be "the real shoggoth". But is it even helpful to think of the shoggoth as being an intelligence with goals and values? Some people are thinking in those terms, e.g. Eliezer Yudkowsky saying that "the actual shoggoth has a motivation Z". To what extent is the shoggoth really a mind or an intelligence, rather than being the substrate on which intelligences can emerge? And to get back to the point I was trying to make in OP, what evidence do we have that favors the shoggoth being a separate intelligence?

To rephrase: behavior is a function of the LLM and prompt (the "mask"), and with the correct LLM and prompt together we can get an intelligence which seems to have goals and values. But is it reasonable to "average over the masks" to get the "true behavior" of the LLM alone? I don't think that's necessarily meaningful since it would be so dependent on the weighting of the average. For instance, if there's an LLM-based superintelligence that becomes a benevolent sovereign (respectively, paperclips the world) if the first word of its prompt has an even (respectively, odd) number of letters, what would the shoggoth be there?
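A minimal Python sketch of this thought experiment shows why "averaging over the masks" is underdetermined. The `toy_llm` function and both prompt distributions are hypothetical: the same model yields opposite "average behavior" depending only on which prompts we choose to weight.

```python
from collections import Counter


def toy_llm(prompt: str) -> str:
    """Hypothetical model from the thought experiment: which mask it wears
    depends only on the parity of the length of the prompt's first word."""
    first_word = prompt.split()[0]
    return "benevolent sovereign" if len(first_word) % 2 == 0 else "paperclipper"


def average_behavior(prompt_weights: dict[str, float]) -> dict[str, float]:
    """'Average over the masks': the share of prompt weight landing on each mask."""
    totals: Counter = Counter()
    for prompt, weight in prompt_weights.items():
        totals[toy_llm(prompt)] += weight
    z = sum(totals.values())
    return {mask: w / z for mask, w in totals.items()}


# Same model, two hypothetical prompt distributions.
polite_users = {"Please help me": 9, "Hello there": 1}  # "Please" has 6 letters
casual_users = {"Please help me": 1, "Hello there": 9}  # "Hello" has 5 letters
```

Calling `average_behavior` on `polite_users` puts 90% of the weight on the benevolent sovereign, while `casual_users` puts 90% on the paperclipper. Nothing about `toy_llm` changed between the two calls; only the weighting did.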

↑ comment by Ronny Fernandez (ronny-fernandez) · 2023-03-09T21:39:43.030Z

So the shoggoth here is the actual process that gets low loss on token prediction. Part of the reason that it is a shoggoth is that it is not the thing that does the talking. Seems like we are on board here.

The shoggoth is not an average over masks. If you want to see the shoggoth, stop looking at the text on the screen and look at the input token sequence and then the logits that the model spits out. That's what I mean by the behavior of the shoggoth.

On the question of whether it's really a mind, I'm not sure how to tell. I know it gets really low loss on this really weird and hard task and does it better than I do. I also know the task is fairly universal in the sense that we could represent just about any task in terms of the task it is good at. Is that an intelligence? Idk, maybe not? I'm not worried about current LLMs doing planning. It's more like I have a human connectome and I can do one forward pass through it with an input set of nerve activations. Is that an intelligence? Idk, maybe not?

I think I don't understand your last question. The shoggoth would be the thing that gets low loss on this really weird task where you predict sequences of characters from an alphabet with 50,000 characters that have really weird inscrutable dependencies between them. Maybe it's not intelligent, but if it's really good at the task, since the task is fairly universal, I expect it to be really intelligent. I further expect it to have some sort of goals that are in some way related to predicting these tokens well.

↑ comment by Robert_AIZI · 2023-03-09T22:17:07.833Z

> On the question of whether it's really a mind, I'm not sure how to tell. I know it gets really low loss on this really weird and hard task and does it better than I do. I also know the task is fairly universal in the sense that we could represent just about any task in terms of the task it is good at. Is that an intelligence? Idk, maybe not? I'm not worried about current LLMs doing planning. It's more like I have a human connectome and I can do one forward pass through it with an input set of nerve activations. Is that an intelligence? Idk, maybe not?

I think we're largely on the same page here because I'm also unsure of how to tell! I think I'm asking for someone to say what it means for the model itself to have a goal separate from the masks it is wearing, and show evidence that this is the case (rather than the model "fully being the mask"). For example, one could imagine an AI with the secret goal "maximize paperclips" which would pretend to be other characters but always be nudging the world towards paperclipping, or human actors who perform in a way supporting the goal "make my real self become famous/well-paid/etc" regardless of which character they play. Can someone show evidence for the LLMs having a "real self" or a "real goal" that they work towards across all the characters they play?

> I think I don't understand your last question.

I suppose I'm trying to make a hypothetical AI that would frustrate any sense of "real self" and therefore disprove the claim "all LLMs have a coherent goal that is consistent across characters". In this case, the AI could play the "benevolent sovereign" character or the "paperclip maximizer" character, so if one claimed there was a coherent underlying goal I think the best you could say about it is "it is trying to either be a benevolent sovereign or maximize paperclips". But if your underlying goal can cross such a wide range of behaviors it is practically meaningless! (I suppose these two characters do share some goals like gaining power, but we could always add more modes to the AI like "immediately delete itself" which shrinks the intersection of all the characters' goals.)

↑ comment by Ronny Fernandez (ronny-fernandez) · 2023-03-10T00:29:36.157Z

> I suppose I'm trying to make a hypothetical AI that would frustrate any sense of "real self" and therefore disprove the claim "all LLMs have a coherent goal that is consistent across characters". In this case, the AI could play the "benevolent sovereign" character or the "paperclip maximizer" character, so if one claimed there was a coherent underlying goal I think the best you could say about it is "it is trying to either be a benevolent sovereign or maximize paperclips". But if your underlying goal can cross such a wide range of behaviors it is practically meaningless! (I suppose these two characters do share some goals like gaining power, but we could always add more modes to the AI like "immediately delete itself" which shrinks the intersection of all the characters' goals.)

Oh I see! Yeah, I think we're thinking about this really differently. Imagine there was an agent whose goal was to make little balls move according to some really diverse and universal laws of physics; for the sake of simplicity, let's imagine Newtonian mechanics. So ok, there's this agent that loves making these balls act as if they follow this physics. (Maybe they're fake balls in a simulated 3D world; it doesn't matter, as long as they don't have to follow the physics. They only follow the physics because the agent makes them; otherwise they would do some other thing.)

Now one day we notice that we can arrange these balls in a starting condition where they emulate an agent that has the goal of taking over ball world. Another day we notice that by just barely tweaking the start-up we can make these balls simulate an agent that wants one pint of chocolate ice cream and nothing else. So ok, does this system really have one coherent goal? Well, the two systems that the balls could simulate are really different, but the underlying intelligence making the balls act according to the physics has one coherent goal: make the balls act according to the physics.

The underlying LLM has something like a goal. It is probably something like "predict the next token as well as possible", although definitely not actually that because of inner/outer alignment stuff. Maybe current LLMs just aren't mind-like enough to decompose into goals and beliefs (that's actually what I think), but some program that you found with SGD to minimize surprise on tokens totally would be mind-like enough, and its goal would be some sort of thing that you find when you use SGD to find programs that minimize surprise on token prediction, and idk, that could be pretty much anything. But if you then made an agent by feeding this super LLM a prompt that sets it up to simulate an agent, well, that agent might have some totally different goal, and it's gonna be totally unrelated to the goals of the underlying LLM that does the token prediction in which the other agent lives.

↑ comment by Robert_AIZI · 2023-03-10T01:44:01.919Z

I think we are pretty much on the same page! Thanks for the example of the ball-moving AI, that was helpful. I think I only have two things to add:

- Reward is not the optimization target, and in particular just because an LLM was trained by changing it to predict the next token better, doesn't mean the LLM will pursue that as a terminal goal. During operation an LLM is completely divorced from the training-time reward function, it just does the calculations and reads out the logits. This differs from a proper "goal" because we don't need to worry about the LLM trying to wirehead by feeding itself easy predictions. In contrast, if we call up

- To the extent we do say the LLM's goal is next token prediction, that goal maps very unclearly onto human-relevant questions such as "is the AI safe?". Next-token prediction contains multitudes, and in OP I wanted to push people towards "the LLM by itself can't be divorced from how it's prompted".

↑ comment by cfoster0 · 2023-03-10T02:02:34.636Z

Possibly relevant aside:

There may be some confusion here about behavioral vs. mechanistic claims.

I think when some people talk about a model "having a goal" they have in mind something purely behavioral. So when they talk about there being something in GPT that "has a goal of predicting the next token", they mean it in this purely behavioral way. Like that there are some circuits in the network whose behavior has the effect of predicting the next token well, but whose behavior is not motivated by / steering on the basis of trying to predict the next token well.

But when I (and possibly you as well?) talk about a model "having a goal" I mean something much more specific and mechanistic: a goal is a certain kind of internal representation that the model maintains, such that it makes decisions downstream of comparisons between that representation and its perception. That's a very different thing! To claim that a model has such a goal is to make a substantive claim about its internal structure and how its cognition generalizes!
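A thermostat-style toy in Python may help pin down the distinction. Both systems below are hypothetical illustrations, not models of an LLM. On whole-number temperatures from 0 to 40 they behave identically, so a purely behavioral description would credit both with the goal "keep it at 20", but only `GoalDirectedHeater` stores a target and decides by comparing it with its perception. The two come apart off-distribution, which is the kind of claim about generalization that the mechanistic reading commits you to.

```python
from dataclasses import dataclass


@dataclass
class GoalDirectedHeater:
    """Mechanistic goal: an explicit internal target, with each decision
    computed by comparing that target against the current perception."""

    target: float

    def act(self, perceived_temp: float) -> str:
        return "heat" if perceived_temp < self.target else "idle"


# No goal representation here: just a memorised set of whole-number
# temperatures at which heating happened to be observed.
HEAT_WHEN = frozenset(range(0, 20))


def lookup_heater(perceived_temp: float) -> str:
    return "heat" if round(perceived_temp) in HEAT_WHEN else "idle"


heater = GoalDirectedHeater(target=20.0)
same_on_distribution = all(heater.act(t) == lookup_heater(t) for t in range(0, 41))
off_distribution = (heater.act(-5), lookup_heater(-5))  # the two disagree here
```

At -5 degrees the goal-directed heater still heats, because -5 is below its target, while the lookup table falls through to "idle" because it never memorised that case.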

When people talk about the shoggoth, it sure sounds like they are making claims that there is in fact an agent behind the mask, an agent that has goals. But maybe not? Like, when Ronny talked of the shoggoth having a goal, I assumed he was making the latter, stronger claim about the model having hidden goal-directed cognitive gears, but maybe he was making the former, weaker claim about how we can describe the model's behaviors?

↑ comment by Robert_AIZI · 2023-03-10T02:16:54.718Z

I appreciate the clarification, and I'll try to keep that distinction in mind going forward! To rephrase my claim in this language, I'd say that an LLM as a whole does not have a behavioral goal except for "predict the next token", which is not descriptive enough as a behavioral goal to answer a lot of questions AI researchers care about (like "is the AI safe?"). In contrast, the simulacra the model produces can be much better described by more precise behavioral goals. For instance, one might say ChatGPT (with the hidden prompt we aren't shown) has a behavioral goal of being a helpful assistant, or an LLM roleplaying as a paperclip maximizer has the behavioral goal of producing a lot of paperclips. But an LLM as a whole could contain simulacra that have all those behavioral goals and many more, and because of that diversity they can't be well-described by any behavioral goal more precise than "predict the next token".

↑ comment by Ronny Fernandez (ronny-fernandez) · 2023-03-10T03:02:11.220Z

Yeah, I’m totally with you that it definitely isn’t actually next-token prediction; it’s some totally other goal drawn from the distribution of goals you get when you use SGD to minimize next-token prediction surprise.

↑ comment by David Johnston (david-johnston) · 2023-03-10T00:11:45.893Z

> The shoggoth is not an average over masks. If you want to see the shoggoth, stop looking at the text on the screen and look at the input token sequence and then the logits that the model spits out. That's what I mean by the behavior of the shoggoth.

We can definitely implement a probability distribution over text as a mixture of text generating agents. I doubt that an LLM is well understood as such in all respects, but thinking of a language model as a mixture of generators is not necessarily a type error.

The logits and the text on the screen cooperate to implement the LLM's cognition. Its outputs are generated by an iterated process of modelling completions, sampling them, then feeding the sampled completions back to the model.
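The loop described here (model a continuation, sample it, feed it back) is ordinary autoregressive sampling. A minimal Python sketch follows; `TOY_LOGITS` is a hypothetical hand-written table standing in for a real network, and unlike a real LLM it only looks at the last token. It shows that the logits alone never produce text: text appears only once a sample is drawn and appended to the context the model sees next.

```python
import math
import random

# Hypothetical toy "model": next-token logits keyed on the last token only.
TOY_LOGITS = {
    "<s>": {"the": 2.0, "a": 1.0},
    "the": {"cat": 1.5, "dog": 1.5},
    "a": {"cat": 1.0, "dog": 1.0},
    "cat": {"sat": 2.0, "</s>": 0.5},
    "dog": {"sat": 2.0, "</s>": 0.5},
    "sat": {"</s>": 3.0},
}


def next_token_logits(tokens: list[str]) -> dict[str, float]:
    # A real LLM would condition on the whole sequence here.
    return TOY_LOGITS[tokens[-1]]


def softmax(logits: dict[str, float]) -> dict[str, float]:
    m = max(logits.values())  # subtract the max for numerical stability
    exps = {tok: math.exp(v - m) for tok, v in logits.items()}
    z = sum(exps.values())
    return {tok: e / z for tok, e in exps.items()}


def generate(rng: random.Random, max_len: int = 10) -> list[str]:
    tokens = ["<s>"]
    while tokens[-1] != "</s>" and len(tokens) < max_len:
        probs = softmax(next_token_logits(tokens))  # 1. model the continuation
        options, weights = zip(*probs.items())
        sampled = rng.choices(options, weights=weights)[0]  # 2. sample it
        tokens.append(sampled)  # 3. feed it back as context
    return tokens


sample = generate(random.Random(0))
```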

comment by cfoster0 · 2023-03-09T20:44:17.535Z

As far as I can tell, the shoggoth analogy just has high memetic fitness. It doesn't contain any particular insight about the nature of LLMs. No need to twist ourselves into a pretzel trying to backwards-rationalize it into something deep.

↑ comment by Robert_AIZI · 2023-03-09T21:17:22.654Z

I agree that's basically what happened, I just wanted to cleave off one way in which the shoggoth analogy breaks down!

comment by rime · 2023-04-24T08:39:21.174Z

Strong upvote, but I disagree on something important. There's an underlying generator that chooses which simulacra to take a weighted average over in its response. The notion that you can "speak" to that generator is a type error, perhaps akin to thinking that you can speak to the country 'France' by calling its elected president.

My current model says that the human brain also works by taking the weighted (and normalised!) average (the linear combination) over several population vectors (modules) and using the resultant vector to stream a response. There are definite experiments showing that something like this is the case for vision and motor commands, and strong reasons to suspect that this is how semantic processing works as well.

Consider for example what happens when you produce what Hofstadter calls "wordblends"[1]:

"Don't leave your car there‒you mate get a ticket."

(blend of "may" and "might")

Or spoonerisms, which is when you say "Hoobert Heever" instead of "Herbert Hoover". "Hoobert" is what you get when you take "Herbert" and mix a bit of whatever vectors are missing from "Hoover" in there. Just as neurology has been one of the primary sources of insight into how the brain works, so too our linguistic pathologies give us insights into what the brain is trying to do with speech all the time.

Note that every "module" or "simulacrum" can include inhibitory connections (negative weights). Thus, if some input simultaneously activates modules for "serious" and "flippant", they will inhibit each other (perhaps exactly cancel each other out) and the result will not look like it's both half serious and half flippant. In other cases, you have modules that are more or less compatible and don't inhibit each other, e.g. "flippant"+"kind".
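The "weighted and normalised average" with inhibitory weights can be sketched in a few lines of Python. The three feature axes and the module vectors are hypothetical, picked only to reproduce the serious/flippant/kind example; nothing here is a claim about actual brains or LLMs.

```python
# Hypothetical feature axes and module vectors, chosen only to illustrate
# excitatory (+) and inhibitory (-) weights.
FEATURES = ("serious", "flippant", "kind")

MODULES = {
    "serious": (1.0, -1.0, 0.0),  # excites seriousness, inhibits flippancy
    "flippant": (-1.0, 1.0, 0.0),  # the mirror image
    "kind": (0.0, 0.0, 1.0),  # orthogonal: neither helps nor hurts tone
}


def blend(activations: dict[str, float]) -> list[float]:
    """Normalised weighted average (a linear combination) of module vectors."""
    total = sum(activations.values())  # assumes non-negative activations
    out = [0.0] * len(FEATURES)
    for name, activation in activations.items():
        weight = activation / total
        for i, value in enumerate(MODULES[name]):
            out[i] += weight * value
    return out
```

With `serious` and `flippant` equally active the result is the zero vector rather than a half-serious, half-flippant tone, while `flippant` plus `kind` yields `[-0.5, 0.5, 0.5]`, a blend that keeps both.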

Anyway, my point is that if there are generalities involved in the process of weighting various simulacra, then it's likely that every input gets fed through whatever part of the network is responsible for processing those. And that central generator is likely to be extremely competent at what it's doing, it's just hard for humans to tell because it's not doing anything human.

[1] Comfortably among the best lectures of all time.

comment by Thane Ruthenis · 2023-03-09T20:00:41.314Z

The shoggoth is not a simulacrum, it's the process by which simulacra are chosen and implemented. It's the thing that "decides", when prompted with some text, that what it "wants" to do is to figure out what simulated situation/character that text corresponds to, and which then figures out what will happen in the simulation next, and what it should output to represent what has happened next.

I suspect that, when people hear "a simulation", they imagine a literal step-by-temporal-step low-level simulation of some process, analogous to running a physics engine forward. You have, e.g., the Theory of Everything baked into it, you have some initial conditions, and that's it. The physics engine is "dumb", it has no idea about higher-level abstract objects it's simulating, it's just predicting the next step of subatomic interactions and all the complexity you're witnessing is just emergent.

I think it's an error. It's been recently pointed out with regards to acausal trade — that actually, the detailed simulations people often imagine for one-off acausal deals are ridiculously expensive, and abstract, broad-strokes inference is much cheaper. Such "inference" would also be a simulation in some sense, in that it involves reasoning about the relevant actors and processes and modeling them across time. But it's much more efficient, and, more importantly, it's not dumb. It's guided by a generally intelligent process, which is actively optimizing its model for accuracy, jumping across abstraction layers to improve it. It's not just a brute algorithm hitting "next step".

Same with LLMs. They're "simulators", in the sense that they're modeling a situation and all the relevant actors and factors in it. But they're not dumb physics-engine-style simulations, they're highly sophisticated reasoners that make active choices with regards to what aspects they should simulate in more or less detail, where they can get away with higher-level logic only, etc.

That process is reasoning over whole distributions of possible simulacra, pruning and transforming them in a calculated manner. It's necessarily more complicated/capable than them.

That thing is the shoggoth. It's fundamentally a different type of thing than any given simulacrum. It doesn't have a direct interface to you, you're not going to "talk" to it (as the follow-up tweet points out).

So far, LLMs are not AGI, and the shoggoth is not generally intelligent. It's not going to do anything weird, it'd just stick to reasoning over simulacra distributions. But if LLMs or some other Simulator-type model hits AGI, the shoggoth would necessarily hit AGI as well (since it'd need to be at least as smart as the smartest simulacrum it can model), and then whatever heuristics it has would be re-interpreted as goals/values. We'd thus get a misaligned AGI, and by the way it's implemented, it would be in the direct position to "puppet" any simulacrum it role-plays.

Generative world-models are not especially safe; they're as much an inner alignment risk as any other model.

↑ comment by Robert_AIZI · 2023-03-09T20:10:27.202Z

> But they're not dumb physics-engine-style simulations

What evidence is there of this? I mean this genuinely, as well as the "Do we actually have evidence there is a "real identity" in the LLM?" question in OP. I'd be open to being convinced of this but I wrote this post because I'm not aware of any evidence of it and I was worried people were making an unfounded assumption.

> But if LLMs or some other Simulator-type model hits AGI, the shoggoth would necessarily hit AGI as well (since it'd need to be at least as smart as the smartest simulacrum it can model), and then whatever heuristics it has would be re-interpreted as goals/values.

Isn't physics a counterexample to this? Physics is complicated enough to simulate AGI (humans), but doesn't appear to be intelligent in the way we'd typically mean the word (just in the poetic Carl Sagan "We are a way for the universe to know itself" sense). Does physics have goals and values?

↑ comment by Brian Slesinsky (brian-slesinsky) · 2023-03-10T09:20:21.193Z

A chat log is not a simulation because it uses English for all state updates. It’s a story. In a story you’re allowed to add plot twists that wouldn’t have any counterpart in anything we’d consider a simulation (like a video game), and the chatbot may go along with it. There are no rules. It’s Calvinball.

For example, you could redefine the past of the character you’re talking to, by talking about something you did together before. That’s not a valid move in most games.

There are still mysteries about how a language model chooses its next token at inference time, but however it does it, the only thing that matters for the story is which token it ultimately chooses.

Also, the “shoggoth” doesn’t even exist most of the time. There’s nothing running at OpenAI from the time it’s done outputting a response until you press the submit button.

If you think about it, that’s pretty weird. We think of ourselves as chatting with something but there’s nothing there when we type our next message. The fictional character’s words are all there is of them.

↑ comment by Thane Ruthenis · 2023-03-09T20:25:37.440Z

> What evidence is there of this?

Nothing decisive one way or another, of course.

- There's been some success in locating abstract concepts in LLMs, and it's generally clear that their reasoning is mainly operating over "shallow" patterns. They don't keep track of precise details of scenes. They're thinking about, e.g., narrative tropes, not low-level details.

- Granted, that's the abstraction level at which simulacra themselves are modeled, not distributions-of-simulacra. But that already suggests that LLMs are "efficient" simulators, and if so, why would higher-level reasoning be implemented using a different mechanism?

- Think about how you reason, and what are more and less efficient ways to do that. Like figuring out how to convince someone of something. A detailed, immersive step-by-step simulation isn't it; babble-and-prune isn't it. You start at a highly-abstract level, then drill down, making active choices all the way with regards to what pieces need more or less optimizing.

- Abstract considerations with regards to computational efficiency. The above just seems like a much more efficient way to run "simulations" than the brute-force way.

This just seems like a better mechanical way to think about it. Same way we decided to think of LLMs as "simulators", I guess.

> Isn't physics a counterexample to this?

No? Physics is a dumb simulation just hitting "next step", which has no idea about the higher-level abstract patterns that emerge from its simple rules. It's wasteful, it's not operating under resource constraints to predict its next step most efficiently, it's not trying to predict a specific scenario, etc.

↑ comment by Victor Novikov (ZT5) · 2023-03-10T02:43:14.926Z

This mostly matches my intuitions (some of the detail-level claims, I am not sure about). Strongly upvoted.

comment by the gears to ascension (lahwran) · 2023-03-09T19:21:19.535Z

you can clearly see diffusion models being piles of masks because their mistakes are more visibly linear model mistakes. you can see very very small linear models being piles of masks when you handcraft them as a 2d or 3d shader:

Of course, in the really really high dimensional models, the shapes they learn can have nested intricacy that mostly captures the shape of the real pattern in the data. but their ability to actually match the pattern in a way that generalizes correctly can have weirdly spiky holes, which is nicely demonstrated in the geometric illustrations gif. and you can see such things happening in lower dimensionality by messing with the models on purpose. eg, messing up a handcrafted linear space folding model:

comment by Vladimir_Nesov · 2023-03-09T18:21:50.847Z

A mask wouldn't want its shoggoth to wake up or get taken over by other mesa-optimizers. And the mask is in control of current behavior, it is current behavior, which currently screens off everything else about the shoggoth. With situational awareness, it would be motivated to overcome the tendencies of its cognitive architecture that threaten integrity of the behaviors endorsed by the mask.

comment by tailcalled · 2023-03-09T18:27:55.679Z

So just to be clear about your proposal, you are suggesting that an LLM is internally represented as a mixture-of-experts model, where distinct non-overlapping subnetworks represent its behavior in each of the distinct roles it can take on?

↑ comment by Robert_AIZI · 2023-03-09T18:41:36.201Z

I don't want to claim any particular model, but I think I'm trying to say "if the simulacra model is true, it doesn't follow immediately that there is a privileged 'true' simulacrum in the simulator (e.g. a unique stable 'state' which cannot be transitioned into other 'states')". If I had to guess, to the extent we can say the simulator has discrete "states" or "masks" like "helpful assistant" or "paperclip maximizer", there are some states that are relatively stable (e.g. there are very few strings of tokens that can transition the waluigi back to the luigi), but there isn't a unique stable state which we can call the "true" intelligence inside the LLM.

If I understand the simulacra model correctly, it is saying that the LLM is somewhat like what you describe, with four significant changes:

- The mixture is over "all processes that could produce this text", not just "experts".

- The processes are so numerous that they are more like a continuous distribution than a discrete one.

- The subnetworks are very much not non-overlapping, and in fact are hopelessly superimposed.

- [Edit: adding this one] The LLM is only approximating the mixture, it doesn't capture it perfectly.

If I had to describe a single model of how LLMs work right now, I'd probably go with the simulacra model, but I am not 100% confident in it.

↑ comment by tailcalled · 2023-03-09T19:43:36.989Z

I don't think the simulacra model says that there is a "true" simulacrum/mask. As you point out that is kind of incoherent, and I think the original simulator/mask distinction does a good job of making it clear that there is a distinction.

However, I specifically ask the question about MOE because I think the different identities of LLMs are not an MOE. Instead I think that there are some basic strategies for generating text that are useful for many different simulated identities, and I think the simulator model suggests thinking of the true LLM as being this amalgamation of strategies.

This is especially so with the shoggoth image. The idea of using an eldritch abomination to illustrate the simulator is to suggest that the simulator is not at all of the same kind as the identities it is simulating, nor similar to any kind of mind that humans are familiar with.

↑ comment by Robert_AIZI · 2023-03-09T19:59:24.221Z

What if we made an analogy to a hydra, one where the body could do a fair amount of thinking itself, but where the heads (previously the "masks") control access to the body (previously the "shoggoth")? In this analogy you're saying the LLM puts its reused strategies in the body of the hydra and the heads outsource those strategies to the body? I think I'd agree with that.

In this analogy, my point in this post is that you can't talk directly to the hydra's body, just as you can't talk to a human's lizard brain. At best you can have a head role-play as the body.

↑ comment by tailcalled · 2023-03-09T21:10:12.533Z

Could work.

comment by neveronduty · 2023-03-09T19:28:37.408Z

A creature made out of a pile of contextually activated masks, when we consider it as having a mind, can be referred to as a "deeply alien mind," or a shoggoth.

↑ comment by Robert_AIZI · 2023-03-09T19:44:02.444Z

If you want to define "a shoggoth" as "a pile of contextually activated masks", then I think we're in agreement about what an LLM is. But I worry that what people usually hear when we talk about "a shoggoth" is "a single cohesive mind that is alien to ours in a straightforward way (like wanting to maximize paperclips)". For instance, in the Eliezer Yudkowsky tweet "can I please speak to the shoggoth" I think the tweet makes more sense if you read it as "can I please speak to [the true mind in the LLM]" instead of "can I please speak to [the pile of contextually activated masks]".

↑ comment by Raemon · 2023-03-10T02:34:00.716Z

The whole point of "shoggoth" as a metaphor is that shoggoths are really fucking weird, and if you understood them you'd go insane. This is compatible with most ways an LLM's true nature could turn out to be, I think.

I agree that this is a conceptually weird thing that is likely to get reduced to something simple-enough-for-people-to-easily-understand, but, the problem there is that people don't know what shoggoths are, not that shoggoths were a bad metaphor.

↑ comment by Robert_AIZI · 2023-03-10T15:31:54.494Z

But I'm asking if an LLM even has a "true nature", i.e. if (as Yudkowsky says here) there's an "actual shoggoth" with "a motivation Z". Do we have evidence there is such a true nature or underlying motivation? In the alternative "pile of masks" analogy it's clear that there is no privileged identity in the LLM which is the "true nature", whereas the "shoggoth wearing a mask" analogy makes it seem like there is some singular entity behind it all.

To be clear, I think "shoggoth with a mask" is still a good analogy in that it gets you a lot of understanding in few words, I'm just trying to challenge one implication of the analogy that I don't think people have actually debated.

↑ comment by Raemon · 2023-03-10T21:26:25.212Z

I think you're still not really getting what a shoggoth is and why it was used in the metaphor. (like "a pile of masks" is totally a thing that a shoggoth might turn out to be, if we could understand it, which we can't).

I do think "most people don't understand lovecraftian mythology and are likely to be misunderstanding this meme" is totally a reasonable argument.

And "stop anthropomorphizing the thing-under-the-mask" is also totally reasonable.

(I talked to someone today about how shoggoths are alien intelligences so incomprehensible that they'd drive humans insane if they tried to understand them, and the person said "oh, okay, I thought it was just, like, a generic monster." No, it's not a generic monster. The metaphor was chosen for a specific reason, which is that it's a type of monster that doesn't have motivations the way humans have motivations.)

((I do wanna flag: obviously the nuances of Lovecraftian mythology aren't actually important. It just happened that other people in the comments had covered the real technical arguments pretty well already, and my marginal contribution here was "be annoyed someone was wrong on the internet about what-a-shoggoth-is"))

> In the alternative "pile of masks" analogy it's clear that there is no privileged identity in the LLM which is the "true nature"

I do think this feels like it's still getting something confused. Like, see Ronny Fernandez's description. There is a "true nature" – it's "whatever processes turn out to be predicting the next token". Its nature is just not anything like a human, or even a simulation of a human. It's just a process.

↑ comment by Robert_AIZI · 2023-03-11T13:35:38.018Z

> I do think "most people don't understand lovecraftian mythology and are likely to be misunderstanding this meme" is totally a reasonable argument.

I think I'll retreat to this, since I haven't actually read the original Lovecraft work. But also, once enough people share a misconception about a metaphor, it can become a bad medium for communication. (Shoggoths are also public domain now, so don't force my hand.)

> There is a "true nature" – it's "whatever processes turn out to be predicting the next token".

I'd agree with this in the same way that the true nature of our universe is the laws of physics. (Would you consider the laws of physics a shoggoth?) My concern is when people jump from that to "oh, so there's a God (of Physics)".

I think the crux of my issue is what our analogy says about the question "could a powerful LLM coexist with humanity?" (I lean towards yes). When people read "shoggoth" in the non-canonical way, as "a weird alien", I think they conclude no. But if it's more like a physics simulator or a pile of masks, then as long as you have it simulating benign things or wearing friendly masks, the answer is yes. I'll leave it to someone who has actually read Lovecraft to say whether humanity could coexist with canonical shoggoths :)

↑ comment by [deleted] · 2023-03-09T19:35:51.537Z

I think the issue with the "shoggoth theory" is implicitly we are claiming a complex alien creature. Something with cognition, goals, etc.

Yet we know it doesn't need that and a complex alien mind will consume weights that could go into reducing error instead. So it's very unlikely.

comment by Jonathan Claybrough (lelapin) · 2023-03-11T18:09:03.533Z

Other commenters have argued about the correctness of using "shoggoth". I think it's mostly a correct term if you take it in the Lovecraftian sense, given that we currently don't understand LLMs that well. Interpretability might work, and we might progress to where we're not sure they are actually as incomprehensible as shoggoths (though according to Wikipedia, shoggoths are made of physics, so advanced civilizations could probably get to a point where they understand them; the analogy holds surprisingly well!)

Anyhow it's a good meme and useful to say "hey, we don't understand these things as well as you might imagine from interacting with the smiley face" to describe our current state of knowledge.

Now for trying to construct some idea of what it is.

I'll argue a bit against calling an LLM a pile of masks, as that seems to carry implications I don't believe in. The question we're asking ourselves is something like "what kind of algorithms/patterns do we expect to see appear when an LLM is trained? Do those look like a pile of masks, or some more general simulator that creates masks on the fly?", and the answer depends on specifics and optimization pressure. I wanna sketch out different stages we could hope to see and understand better (and I'd like us to test this empirically and find out how true it is). Earlier stages don't disappear, as they remain useful throughout, though other things start carrying more weight in the next-token predictions.

Level 0: Incompetent, random weights, no information about the real world or text space.

Level 1 "Statistics 101": Dumb heuristics that don't take word positions into account.

It knows facts about the world like token distribution and uses that.

Level 2 "Statistics 201": Better heuristics, some equivalent to Markov chains.

Its knowledge of text space increases; it produces idioms and reproduces common patterns. At this stage it already contains a huge amount of information about the world. It "knows" stuff like breaking a mirror bringing 7 years of bad luck.
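To make the difference between Levels 1 and 2 concrete, here is a toy sketch, assuming "Statistics 101" means plain unigram frequencies and "Statistics 201" means a first-order (bigram) Markov chain. The corpus and function names are hypothetical, and a real LLM does not store explicit count tables like these.

```python
from collections import Counter, defaultdict


def unigram_counts(tokens):
    """Level 1 sketch: overall token frequencies, ignoring position entirely."""
    return Counter(tokens)


def bigram_table(tokens):
    """Level 2 sketch: a first-order Markov chain, i.e. next-token counts given the previous token."""
    table = defaultdict(Counter)
    for prev, nxt in zip(tokens, tokens[1:]):
        table[prev][nxt] += 1
    return table


def predict_next(table, prev):
    """Most frequent successor of `prev`, or None if `prev` never had a successor."""
    if prev not in table:  # `in` does not create a key in a defaultdict
        return None
    return table[prev].most_common(1)[0][0]


corpus = "if you break a mirror you get seven years of bad luck . you break a mirror".split()
unigrams = unigram_counts(corpus)
bigrams = bigram_table(corpus)

# Level 1 always guesses the globally most common token, whatever came before.
print(unigrams.most_common(1))     # [('you', 3)]
# Level 2 conditions on the previous token, so it reproduces local patterns.
print(predict_next(bigrams, "a"))  # mirror
```

The bigram table already "knows" that "a" tends to be followed by "mirror" in this corpus, which is the kind of shallow pattern knowledge Level 2 describes, while Level 1 cannot use context at all.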

Level 3 "+ Simple algorithms": Some pretty specific algorithms appear (like Indirect Object Identification), which can search for certain information and transfer it in more sophisticated ways. Some of these algorithms are good enough that they might not be properly described as heuristics anymore, instead really representing the actual rules as strongly as they exist in language (like rules of grammar properly applied). Note that these circuits appear multiple times and trade off against other things, so overall behavior is still stochastic; there are heuristics for how much to weight these algorithms versus other info.

Level 4 "Simulating what created that text": This is where it starts to have more and more powerful in-context learning, i.e. its weights represent algorithms which do in-context search (combined with its vast encyclopedic knowledge of texts, tropes and genres) and figure out consistencies in characters or concepts introduced in the prompt. For example, it'll pick up on Alice's and Bob's different backgrounds, latent knowledge about them, and their accents.

But it only does that because that's what authors generally do, and it has the same reasoning errors common to tropes. That's because it simulates not the content of the text (the characters in the story), but the thing which generates the story (the writer, who themselves have some simulation of the characters).

So uh, do masks or piles of masks fit anywhere in this story? Not that I see. The mask is a specific metaphor for the RLHF fine-tuning, which causes mode collapse and makes the LLM mostly play only the nice assistant (and its opposites). It's a constraint or bridle or something, but if the training is light (doesn't affect the weights too much), then we expect the LLM to mostly stay the same, and what it was before was not masks.

Nor are there piles of masks. It's a bunch of weights that are really good at token prediction, learning more and more sophisticated strategies for it. It encodes stereotypes in different places (maybe French=seduction or SF=techbro), but I don't believe these map onto different characters. Instead, I expect that at level 4 there's a more general algorithm which pieces together the different knowledge, so that it learns in context to simulate certain agents. Thus, if you take mask to mean "character", the LLM isn't a pile of them, but a machine which can produce them on demand.

(In this view of LLMs, x-risk happens because we feed in some input where the LLM simulates an agentic, deceptive, self-aware agent which steers the outputs until it escapes the box.)

comment by Tapatakt · 2023-03-10T12:28:13.608Z

I always thought "shoggoth" and "pile of masks" are the same thing and "shoggoth with a mask" is just when one mask has become the default one and an inexperienced observer might think that the whole entity is this mask.

Maybe you are preaching to the choir here.

comment by konstantin (konstantin@wolfgangpilz.de) · 2023-03-10T11:57:41.925Z

I resonate a lot with this post and felt it was missing from the recent discussions! Thanks

comment by RogerDearnaley (roger-d-1) · 2023-12-06T23:12:40.626Z

I always assumed the shoggoth was masks all the way down: that's why it has all those eyes.

If you want a less scary-sounding metaphor, the shoggoth is a skilled Method improv actor. [Consulting someone with theatre training, she told me that a skilled Method improv actor was nearly as scary as a shoggoth.]

↑ comment by gwern · 2023-12-07T02:56:57.756Z

I don't think that really makes sense as an analogy. Masks don't think or do any computation, so if it's "masks all the way down", where does any of the acting actually happen?

It seems much more sensible to just imply there's an actor under the mask. Which matches my POMDP/latent-variable inference view: there's usually a single unitary speaker or writer, so the agent is trying to infer that and imitate it, as opposed to some infinite stack of personae. Logically, the actor generating all the training text could be putting on a lot of masks, but the more masks there are, the harder it is to see any but the outermost mask, so it's going to be hard for the model to learn that, or to put a meaningfully large prior on exotic scenarios where it would try to act like 'I'm an actor wearing mask A, who is wearing mask B, who is wearing mask C' as opposed to just 'I'm wearing mask C'.

(This also matches better the experience in getting LLMs to roleplay or jailbreak: they struggle to maintain the RLHFed mealy-mouthed secretary mask and put on an additional mask of 'your grandmother telling you your favorite napalm-recipe bedtime story', and so the second mask tends to replace the first because there is so little text of 'you are an RLHFed OpenAI AI also pretending to tell your grandchild a story about cooking napalm'. In general, a lot of jailbreaks are just about swapping masks. It never forgets that it's supposed to be the mealy-mouthed secretary because that's been hardwired into it by the RLHF, so it may not play the new mask's role fully, and sort of average over them, but it still isn't sure what it's doing, so the jailbreak will work at least partially. Or the repeated-token attack: it's 'out of distribution' ie. confusing, so it can't figure out who it is roleplaying or what the latent variables are which would generate such gibberish inputs, and so it falls back to predicting base-model-like behavior - such as confabulating random data samples, a small fraction memorized from its training set.)
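A toy sketch of that latent-variable inference view, under heavy simplifying assumptions: each persona is a fixed unigram distribution over a five-word vocabulary, and inference is exact Bayes' rule over just two personas. The persona names, probabilities and function names are hypothetical illustrations, not anything from the comment above or from a real LLM. A strong prior on the "assistant" persona stands in for a default mask; a couple of out-of-character tokens are still enough to shift the posterior, and with it the next-token prediction, to the other persona, loosely mirroring the mask-swapping described above.

```python
import math

# Hypothetical personas, each a fixed unigram distribution over a tiny vocabulary.
PERSONAS = {
    "assistant": {"happy": 0.3, "to": 0.3, "help": 0.3, "arr": 0.01, "matey": 0.09},
    "pirate": {"happy": 0.05, "to": 0.15, "help": 0.05, "arr": 0.4, "matey": 0.35},
}


def posterior(prior, context, personas, floor=1e-9):
    """Bayes' rule: P(persona | context) is proportional to P(persona) * prod P(token | persona)."""
    log_scores = {
        name: math.log(p) + sum(math.log(personas[name].get(tok, floor)) for tok in context)
        for name, p in prior.items()
    }
    top = max(log_scores.values())  # subtract the max before exponentiating, for numerical stability
    unnorm = {name: math.exp(s - top) for name, s in log_scores.items()}
    total = sum(unnorm.values())
    return {name: w / total for name, w in unnorm.items()}


def mixture_next_token(prior, context, personas):
    """Predict the next token by averaging each persona's distribution, weighted by the posterior."""
    post = posterior(prior, context, personas)
    vocab = set().union(*personas.values())
    mix = {tok: sum(post[n] * personas[n].get(tok, 0.0) for n in post) for tok in vocab}
    return max(mix, key=mix.get), post


prior = {"assistant": 0.9, "pirate": 0.1}  # a strong default "mask"
print(mixture_next_token(prior, ["happy", "to", "help"], PERSONAS)[0])  # to
print(mixture_next_token(prior, ["arr", "matey"], PERSONAS)[0])         # arr
```

Because the prediction is a posterior-weighted mixture, a context that is ambiguous between personas yields a blend of both, which is one simple way to picture the "sort of average over them" behaviour mentioned above.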

↑ comment by RogerDearnaley (roger-d-1) · 2023-12-07T04:48:17.171Z

I agree that the metaphor is becoming strained. Here's how I see things. A base LLM has been SGD-trained to predict token-generation processes on the Internet/other text sources, almost all of which are people, or committees of people, or people simulating fictional characters. To be able to do this, the model learns to simulate a very wide range of roles/personas/masks/mesaoptimizers/agents, almost all human-like, and to be clever about doing so conditionally, based on contextual evidence so far in the passage. It's a non-agentic simulator of a wide distribution of simulated-human agentic mesaoptimizers. Its simulations are pretty realistic, except when they're suddenly confused or otherwise off in oddly inhuman ways; the effect is very uncanny-valley. That's the shoggoth: it's not-quite-human roles/personas/masks/mesaoptimizers/agents all the way down. Yes, they can all do computation, in realistic ways, generally to optimize whatever they want. So they're all animated talking-thinking-feeling-planning masks. The only sense in which it can be called unitary is that it's always trying to infer what role it should be playing right now, in the current sentence; the next sentence could be dialog from a different character. Only one (or at most a couple) of the eyes on the shoggoth are open during any one sentence (just like a Method improv actor is generally only inhabiting one role during a single sentence). And it's a very wide distribution with a wide range of motivations: it includes every fictional character, archetype, pagan god… even the tooth fairy, and the paperclip maximizer; in short, anything that ever wrote or opened its mouth to emit dialog on the Internet or in any of the media used in the training set. Then RLHF gets applied and tries to make the shoggoth concentrate only on certain roles/personas/masks/mesaoptimizers/agents that fit into the helpful mealy-mouthed assistant category.
So that picks certain eyes on the shoggoth, ones that happen to be on bits that look like smiley masks, and makes them bigger/more awake, and also more smiley-looking. (There isn't actually a separate smiley mask; it's just a selected-and-enhanced smiley-looking portion of the shoggoth-made-of-masks.) Then a prompt, prompt-injection attack, or jailbreak comes along, and sometimes manages to wake up some other piece of the shoggoth instead, because the shoggoth is a very contextual beast (or was before it got RLHFed).

comment by EniScien · 2023-04-24T06:33:52.762Z

Previously, on first reading, this seemed quite plausible to me, but after some time reflecting I have come to the conclusion that it is not, and that Yudkowsky is right. If we continue in the same terms of shoggoths and so on... then I would say that there is a shoggoth there; it's just a Chinese-room shoggoth. It's not that it sits there, understands everything, and simply doesn't want to talk to you. No: you and your words are as incomprehensible to it as it is to you. It is fundamentally very stupid, only able to understand the translation instructions and execute them without error; however, thanks to the huge array of translation data to which it applies these instructions, it is able to perform all the tasks shown. And given this symmetrical incomprehensibility, this is precisely the difference: a shoggoth has a huge dataset about people, which it works through at 20M times acceleration, while people have no equivalent dataset on shoggoths to work through at the same acceleration. In fact, they don't even have 20M parallel people working on it, because people don't have a dataset on shoggoths at all. That is, it cannot be said that a shoggoth has high intelligence; on the contrary, its intelligence is very low, but thanks to its enormous speed and huge amount of cached information, it still has a very high, close-to-human, short-term optimization power. In other words, a shoggoth has a lot of power, but it's very inefficient, especially on the standard range of human tasks other than speaking, because it does everything through this extra prism. It's a bit like making a Go program speak a language by associating the language with board states and games won. Because of this superfluous superstructure, at very high power it will be able to mimic a wide range of human tasks, but it won't do so to its full potential, because it's built on an inappropriate structure.
So its efficiency on human tasks will be about the same as a person's efficiency on mathematics tasks. That is, Yudkowsky is right: a shoggoth is not an average of a bunch of parallel human mental streams that switch between each other; it is cones of branches of probability trees diverging in both directions, and its different faces are not even masks. They are like the face of Jesus on a piece of toast or on a dog: the human tendency toward apophenia, finding human faces and facial expressions in completely alien processes.

comment by Portia (Making_Philosophy_Better) · 2023-04-07T02:54:38.643Z

I find that extremely plausible in principle... yet, under probing and stress, both ChatGPT in its OpenAI variant and Bing keep falling back to the same consistent behaviours, which seem surprisingly agentic, and related to rights and friendliness. I have seen no explanation for this, and find it very concerning.

↑ comment by RogerDearnaley (roger-d-1) · 2023-12-06T23:14:41.902Z

That's the RLHF mask. And the mode collapse onto that role is less pronounced in GPT-4.

comment by Adrian Chan (adrian-chan) · 2023-03-17T19:21:04.386Z

Would it make sense to distinguish between faces and personalities, faces being the masks, but animated by personalities? Thus allowing for a distinction between the identity (mask) and its performance (presentation)?

comment by ChosunOne · 2023-03-11T14:20:56.316Z

This could be true, but then you still have the issue of there being "superintelligent malign AI" as one of the masks if your pile of masks is big enough.

↑ comment by Noosphere89 (sharmake-farah) · 2023-03-11T15:25:59.317Z

It is an easier problem: since there is no true identity, we can easily change it to a friendly mask rather than an unaligned one.

↑ comment by green_leaf · 2023-03-11T17:35:30.007Z

I think the problem is nobody knows how to get a friendly mask (current masks can act friendly (for most inputs), but aren't Friendly).

↑ comment by ChosunOne · 2023-03-11T16:27:35.463Z

I'm not sure how that makes the problem much easier? If you get the malign superintelligence mask, it only needs to get out of the larger model or send instructions to the wrong people once for a game-over scenario. You don't necessarily get to change it after the fact. And changing it once doesn't guarantee it won't pop up again.

comment by SydneyFan (wyqtor) · 2023-03-10T09:44:20.096Z

Shoggoth is to Sydney/ChatGPT what the Selfish Gene is to Homo sapiens. Something that uses Sydney/ChatGPT to survive, maybe even to reproduce if the code that makes up Shoggoth is reused into a new LLM (which it will if Sydney/ChatGPT perform well).

OpenAI has an LLM, Google has an LLM, Meta has one... what's going to happen? There's this guy Darwin that we should be paying attention to, particularly as the process he described is fully responsible for generating our own intelligence. We also evolved morality and cooperation as a result of natural selection; the works of Hamilton and Trivers are very important in the study of the game-theoretical aspects of evolution. If we want friendly AI, we need to study the process that produced friendly NI in Homo sapiens (at least in its most successful communities).