Power Lies Trembling: a three-book review

post by Richard_Ngo (ricraz) · 2025-02-22T22:57:59.720Z · LW · GW · 24 comments

This is a link post for https://www.mindthefuture.info/p/power-lies-trembling

Contents

- The revolutionary’s handbook
- From explaining coups to explaining everything
- From explaining everything to influencing everything
- Becoming a knight of faith
- 24 comments

In a previous book review I described exclusive nightclubs as the particle colliders of sociology—places where you can reliably observe extreme forces collide. If so, military coups are the supernovae of sociology. They’re huge, rare, sudden events that, if studied carefully, provide deep insight about what lies underneath the veneer of normality around us.

That’s the conclusion I take away from Naunihal Singh’s book Seizing Power: the Strategic Logic of Military Coups. It’s not a conclusion that Singh himself draws: his book is careful and academic (though much more readable than most academic books). His analysis focuses on Ghana, a country which experienced ten coup attempts between 1966 and 1983 alone. Singh spent a year in Ghana carrying out hundreds of hours of interviews with people on both sides of these coups, which led him to formulate a new model of how coups work.

I’ll start by describing Singh’s model of coups. Then I’ll explain how the dynamics of his model also apply to everything else, with reference to Timur Kuran’s excellent book on preference falsification, Private Truths, Public Lies. In particular, I’ll explain threshold models of social behavior, which I find extremely insightful for understanding social dynamics.

Both of these books contain excellent sociological analyses. But they’re less useful as guides for how one should personally respond to the dynamics they describe. I think that’s because in sociology you’re always part of the system you’re trying to affect, so you can never take a fully objective, analytical stance towards it. Instead, acting effectively also requires the right emotional and philosophical stance. So to finish the post I’ll explore such a stance—specifically the philosophy of faith laid out by Soren Kierkegaard in his book Fear and Trembling.

The revolutionary’s handbook

What makes coups succeed or fail? Even if you haven’t thought much about this, you probably implicitly believe in one of two standard academic models of them. The first is coups as elections. In this model, people side with the coup if they’re sufficiently unhappy with the current regime—and if enough people side with the coup, then the revolutionaries will win. This model helps explain why popular uprisings like the Arab Spring can be so successful even when they start off with little military force on their side. The second is coups as battles. In this model, winning coups is about seizing key targets in order to co-opt the “nervous system” of the existing government. This model (whose key ideas are outlined in Luttwak’s influential book on coups) explains why coups depend so heavily on secrecy, and often succeed or fail based on their initial strikes.

Singh rejects both of these models, and puts forward a third: coups as coordination games. The core insight of this model is that, above all, military officers want to join the side that will win—both to ensure their and their troops’ survival, and to minimize unnecessary bloodshed overall. Given this, their own preferences about which side they’d prefer to win are less important than their expectations about which side other people will support. This explains why very unpopular dictators can still hold onto power for a long time (even though the coups as elections model predicts they’d quickly be deposed): because everyone expecting everyone else to side with the dictator is a stable equilibrium.

It also explains why the targets that revolutionaries focus on are often not ones with military importance (as predicted by the coups as battles model) but rather targets of symbolic importance, like parliaments and palaces—since holding them is a costly signal of strength. Another key type of target often seized by revolutionaries is broadcasting facilities, especially radio stations. Why? Under the coups as battles model, it’s so they can coordinate their forces (and disrupt the coordination of the existing regime’s forces). Meanwhile the coups as elections model suggests that revolutionaries should use broadcasts to persuade people that they’re better than the old regime. Instead, according to Singh, what we most often observe is revolutionaries publicly broadcasting claims that they’ve already won—or (when already having won is too implausible to be taken seriously) that their victory is inevitable.

It’s easy to see why, if you believed those claims, you’d side with the coup. But, crucially, such claims can succeed without actually persuading anyone! If you believe that others are gullible enough to fall for those claims, you should fall in line. Or if you believe that others believe that you will believe those claims, then they will fall in line and so you should too. In other words, coups are an incredibly unstable situation where everyone is trying to predict everyone else’s predictions about everyone else’s predictions about everyone else’s predictions about everyone else’s… about who will win. Once the balance starts tipping one way, it will quickly accelerate. And so each side’s key priority is making themselves the Schelling point for coordination via managing public information (i.e. information that everyone knows everyone else has) about what’s happening. (This can be formally modeled as a Keynesian beauty contest. Much more on this in follow-up posts.)

Singh calls the process of creating self-fulfilling common knowledge “making a fact”. I find this a very useful term, which also applies to more mundane situations: e.g. taking the lead in a social context can make a fact that you’re now in charge. Indeed, one of the most interesting parts of Singh’s book was a description of how coups can happen via managing the social dynamics of meetings of powerful people (e.g. all the generals in an army). People rarely want to be the first to defend a given side, especially in high-stakes situations. So if you start the meeting with a few people confidently expressing support for a coup, and then ask if anyone objects, the resulting silence can make the fact that everyone supports the coup. This strategy can succeed even if almost all the people in the meeting oppose the coup: if none of them dares to say so in the meeting, it’s very hard to rally them afterwards against what’s now become the common-knowledge default option.

One of Singh’s case studies hammers home how powerful meetings are for common knowledge creation. In 1978, essentially all the senior leaders in the Ghanaian military wanted to remove President Acheampong. However, they couldn’t create common knowledge of this, because it would be too suspicious for them to all meet without the President. Eventually Acheampong accidentally sealed his fate by sending a letter to a general criticizing the military command structure, which the general used as a pretext to call a series of meetings culminating in a bloodless coup in the President’s office.

Meetings are powerful not just because they get the key people in the same place, but also because they can be run quickly. The longer a coup takes, the less of a fait accompli it appears, and the more room there is for doubt to creep in. Singh ends the book with a fascinating case study of the 1991 coup attempt by Soviet generals against Gorbachev and Yeltsin. Even accounting for cherry-picking, it’s impressive how well this coup lines up with the “coups as coordination games” model. The conspirators included almost all of the senior members of the current government, and timed their strike for when both Gorbachev and Yeltsin were on vacation—but made the mistake of allowing Yeltsin to flee to the Russian parliament. From there he made a series of speeches asserting his moral legitimacy, while his allies spread rumors that the coup was falling apart. Despite having Yeltsin surrounded with overwhelming military force, bickering and distrust amongst the conspirators delayed their assault on the parliament long enough for them to become demoralized, at which point the coup essentially fizzled out.

Another of Singh’s most striking case studies was of a low-level Ghanaian soldier, Jerry Rawlings, who carried out a successful coup with fewer than a dozen armed troops. He was able to succeed in large part because the government had shown weakness by airing warnings about the threat Rawlings posed, and pleas not to cooperate with him. This may seem absurd, but Singh does a great job characterizing what it’s like to be a soldier confronted by revolutionaries in the fog of war, hearing all sorts of rumors that something big is happening, but with no real idea how many people are supporting the coup. In that situation, by far the easiest option is to stand aside, lest you find yourself standing alone against the new government. And the more people stand aside, the more snowballing social proof the revolutionaries have. So our takeaway from the Soviet coup attempt shouldn’t be that making a fact is inherently difficult, just that rank and firepower are no substitute for information control.

I don’t think of Singh as totally disproving the two other theories of coups—they probably all describe complementary dynamics. For example, if the Soviet generals had captured Yeltsin in their initial strike, he wouldn’t have had the chance to win the subsequent coordination game. And though Singh gives a lot of good historical analysis, he’s light on advance predictions. But Singh’s model is still powerful enough that it should constrain our expectations in many ways. For example, I’d predict based on Singh’s theory that radio will still be important for coups in developing countries, even now that it’s no longer the main news source for most people. The internet can convey much more information much more quickly, but radio is still better for creating common knowledge, in part because of its limitations (like having a fixed small number of channels). If you think of other predictions which help distinguish these three theories of coups, do let me know.

From explaining coups to explaining everything

Singh limits himself to explaining the dynamics of coups. But once he points them out, it’s easy to start seeing them everywhere. What if everything is a coordination game?

That’s essentially the thesis of Timur Kuran’s book Private Truths, Public Lies. Kuran argues that a big factor affecting which beliefs people express on basically all political topics is their desire to conform to the opinions expressed by others around them—a dynamic known as preference falsification. Preference falsification can allow positions to maintain dominance even as they become very unpopular. But it also creates a reservoir of pent-up energy that, when unleashed, can lead public opinion to change very rapidly—a process known as a preference cascade.

The most extreme preference cascades come during coups when common knowledge tips towards one side winning (as described above). But Kuran chronicles many other examples, most notably the history of race relations in America. In his telling, both the end of slavery and the end of segregation happened significantly after white American opinion had tipped against them—because people didn’t know that other people had also changed their minds. “According to one study [in the 70s], 18 percent of the whites favored segregation, but as many as 47 percent believed that most did so.” And so change, when it came, was very sudden: “In the span of a single decade, the 1960s, the United States traveled from government-supported discrimination against blacks to the prohibition of all color-based discrimination, and from there to government-promoted discrimination in favor of blacks.”

According to Kuran, this shift unfortunately wasn’t a reversion from preference falsification to honesty, but rather an overshoot into a new regime of preference falsification. Writing in 1995, he claims that “white Americans are overwhelmingly opposed to special privileges for blacks. But they show extreme caution in expressing themselves publicly, for fear of being labeled as racists.” This fear has entrenched affirmative action ever more firmly over the decades since then, until the very recent and very sudden rise of MAGA.

Kuran’s other main examples are communism and the Indian caste system. His case studies are interesting, but the most valuable part of the book for me was his exposition of a formal model of preference falsification and preference cascades: threshold models of social behavior. For a thorough explanation of them, see this blog post by Eric Neyman (who calls visual representations of threshold models social behavior curves). Here I’ll just give an abbreviated introduction by stealing some of Eric’s graphs.

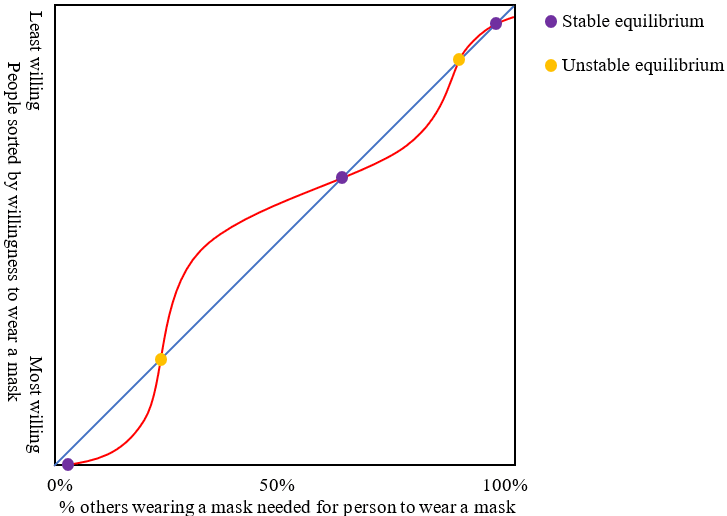

The basic idea is that threshold models describe how people’s willingness to do something depends on how many other people are doing it. Most people have some threshold at which they’ll change their public position, which is determined by a combination of their own personal preferences and the amount of pressure they feel to conform to others. For example, the graph below is a hypothetical social behavior curve of what percentage of people would wear facemasks in public, as a function of how many people they see already wearing masks. (The axis labels are a little confusing—you could also think of the x and y axes as “mask-wearers at current timestep” and “mask-wearers at next timestep” respectively.)

On this graph, if 35% of people currently wear masks, then once this fact becomes known around 50% of people would want to wear masks. This means that 35% of people wearing masks is not an equilibrium: if the number of mask-wearers starts at 35%, it will increase over time. More generally, whenever the percentage of people wearing a mask corresponds to a point on the social behavior curve above the y=x diagonal, then the number of mask-wearers will increase; when below y=x, it’ll decrease. So the equilibria are places where the curve intersects y=x. But only equilibria where the curve crosses y=x from above to below (moving left to right) are stable; those where it crosses from below to above are unstable (like a pen balanced on its tip), with any slight deviation sending them spiraling away towards the nearest stable equilibrium.

I recommend staring at the graph above until that last paragraph feels obvious.
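For readers who prefer code to graphs, here is a minimal sketch of the same reasoning. The curve is a made-up piecewise-linear one (the `KNOTS` values are my assumption, chosen only so that 35% maps to 50% as in the example above; they are not taken from Eric's graph), and the stability test assumes the curve never slopes downward.

```python
# Hypothetical social behavior curve, given as knots of
# (share of people acting now, share who would act at the next timestep).
KNOTS = [(0.0, 0.05), (0.2, 0.12), (0.35, 0.50), (0.7, 0.78), (1.0, 0.80)]

def curve(x):
    """Piecewise-linear interpolation between the knots, for x in [0, 1]."""
    for (x0, y0), (x1, y1) in zip(KNOTS, KNOTS[1:]):
        if x0 <= x <= x1:
            return y0 + (y1 - y0) * (x - x0) / (x1 - x0)
    raise ValueError("share must lie in [0, 1]")

def iterate(x, steps=200):
    """Apply the curve repeatedly: today's share determines tomorrow's."""
    for _ in range(steps):
        x = curve(x)
    return x

def equilibria(grid=1000, iters=60):
    """Find crossings of y = x and label each one stable or unstable."""
    gap = lambda x: curve(x) - x  # positive where the curve is above y = x
    found = []
    for i in range(grid):
        lo, hi = i / grid, (i + 1) / grid
        above_left = gap(lo) > 0
        if above_left == (gap(hi) > 0):
            continue  # no crossing inside this grid cell
        for _ in range(iters):  # bisect to locate the crossing
            mid = (lo + hi) / 2
            if (gap(mid) > 0) == above_left:
                lo = mid
            else:
                hi = mid
        # Above the diagonal on the left means the curve crosses downward: stable.
        kind = "stable" if above_left else "unstable"
        found.append((round((lo + hi) / 2, 4), kind))
    return found

print(equilibria())
for start in (0.20, 0.26, 0.35):
    print(start, "->", round(iterate(start), 4))
```

With these particular knots there are three crossings: a stable one near 8%, an unstable one near 25%, and a stable one near 79%. Starting points just below the unstable crossing drift down to the low equilibrium, and those just above it climb to the high one.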

I find the core insights of threshold models extremely valuable; I think of them as sociology’s analogue to supply and demand curves in economics. They give us simple models of moral panics, respectability cascades, echo chambers, the euphemism treadmill, and a multitude of other sociological phenomena—including coups.

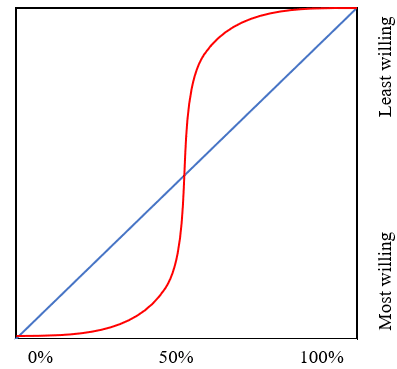

We can model coups as an extreme case where the only stable equilibria are the ones where everyone supports one side or everyone supports the other, because the pressure to be on the winning side is so strong. This implies that coups have an s-shaped social behavior curve, with a very unstable equilibrium in the middle—something like the diagram below. The steepness of the curve around the unstable equilibrium reflects the fact that, once people figure out which side of the tipping point they’re on, support for that side snowballs very quickly.
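As a rough numerical illustration of that tipping behavior, the sketch below uses a logistic function as a stand-in for the s-shaped curve. The logistic form, the midpoint of 50%, and the steepness of 20 are all assumptions of mine, not something taken from Singh or Kuran.

```python
import math

def s_curve(x, midpoint=0.5, steepness=20.0):
    """Hypothetical s-shaped curve: share backing the coup at the next timestep."""
    return 1.0 / (1.0 + math.exp(-steepness * (x - midpoint)))

def trajectory(x, steps=10):
    """Record the share of supporters at each timestep."""
    path = [x]
    for _ in range(steps):
        x = s_curve(x)
        path.append(x)
    return path

# Two starting points on either side of the unstable midpoint.
for start in (0.49, 0.51):
    print(start, [round(p, 3) for p in trajectory(start, steps=6)])
```

Under these assumptions, a start of 49% collapses to almost no support within a handful of steps, while 51% snowballs to near-unanimous support just as fast. Raising `steepness` makes the snowball faster still.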

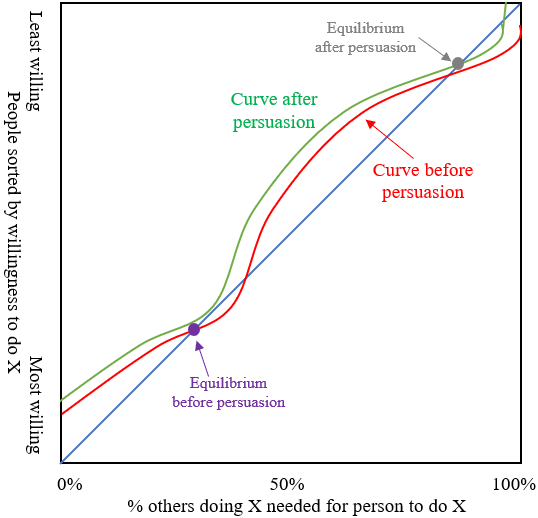

This diagram illustrates that shifting the curve a few percent left or right has highly nonlinear effects. For most possible starting points, it won’t have any effect. But if we start off near an intersection, then even a small shift could totally change the final outcome. You can see an illustration of this possibility (again from Eric’s blog post) below—it models a persuasive argument which makes people willing to support something with 5 percentage points less social proof, thereby shifting the equilibrium a long way. The historical record tells us that courageous individuals defying social consensus can work in practice, but now it works in theory too.
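The nonlinearity can be checked with a small experiment. The sketch below again assumes a logistic curve with midpoint 50% and steepness 20 (my choice of illustration), and models the persuasive argument as a hypothetical `shift` of 0.05: each person now behaves as if they had seen 5 percentage points more support than they actually did.

```python
import math

def s_curve(x, midpoint=0.5, steepness=20.0):
    """Hypothetical s-shaped social behavior curve (logistic form assumed)."""
    return 1.0 / (1.0 + math.exp(-steepness * (x - midpoint)))

def final_share(start, shift=0.0, steps=100):
    """Long-run share of supporters when the curve is shifted left by `shift`."""
    x = start
    for _ in range(steps):
        x = s_curve(x + shift)
    return x

# Which starting points end low without the argument but high with it?
starts = [i / 100 for i in range(101)]
flipped = [s for s in starts
           if final_share(s) <= 0.5 < final_share(s, shift=0.05)]
print(flipped)
```

Only a narrow band of starting points just below the original tipping point changes its final outcome. Everywhere else, the shift makes essentially no difference to where things end up.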

Having said all that, I don’t want to oversell threshold models. They’re still very simple, which means that they miss some important factors:

- They only model a binary choice between supporting and opposing something, whereas most people are noncommittal on most issues by default (especially in high-stakes situations like coups). But adding in this third option makes the math much more complicated—e.g. it introduces the possibility of cycles, meaning there might not be any equilibria.

- Realistically, support and opposition aren’t limited to discrete values, but can range continuously from weak to strong. So perhaps we should think of social behavior curves in terms of average level of support rather than number of supporters.

- Threshold models are memoryless: the next timestep depends only on the current timestep. This means that they can’t describe, for example, the momentum that builds up after behavior consistently shifts in one direction.

- Threshold models treat all people symmetrically. By contrast, belief propagation models track how preferences cascade through a network of people, where each person is primarily responding to local social incentives. Such models are more realistic than simple threshold models.

I’d be very interested to hear about extensions to threshold models which avoid these limitations.

From explaining everything to influencing everything

How should understanding the prevalence of preference falsification change our behavior? Most straightforwardly, it should predispose us to express our true beliefs more even in controversial cases—because there might be far more people who agree with us than it appears. And as described above, threshold models give an intuition for how even a small change in people’s willingness to express a view can trigger big shifts.

However, there’s also a way in which threshold models can easily be misleading. In the diagram above, we modeled persuasion as an act of shifting the curve. But the most important aspect of persuasion is often not your argument itself, but rather the social proof you provide by defending a conclusion. And so in many cases it’s more realistic to think of your argument, not as translating the entire curve, but as merely increasing the number of advocates for X by one.
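To see what "increasing the number of advocates by one" can and can't do, here is a small discrete sketch in the style of Granovetter's classic riot example. Each person has a threshold: the number of participants they need to see before joining. The two populations (`ladder` and `gapped`) are invented for illustration.

```python
def cascade(thresholds):
    """Return the final number of participants.

    thresholds[i] is how many participants person i must see before joining;
    a threshold of 0 means they act unconditionally.
    """
    n = 0
    while True:
        new_n = sum(1 for t in thresholds if t <= n)
        if new_n == n:
            return n
        n = new_n

# Hypothetical population 1: thresholds 1, 2, ..., 100 (a perfect ladder).
ladder = list(range(1, 101))
# Hypothetical population 2: the same ladder, but everyone needs one more person.
gapped = list(range(2, 102))

print(cascade(ladder))        # nobody moves first
print(cascade([0] + ladder))  # one unconditional advocate is added
print(cascade([0] + gapped))  # the same advocate, facing a gap
```

In the ladder population a single unconditional advocate pulls in everyone, one person at a time. In the gapped population the same act leaves the advocate standing alone. The effect of adding one supporter depends entirely on whether someone else's threshold sits right next to the current level of support.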

There’s a more general point here. It’s tempting to think that you can estimate the social behavior curve, then decide how you’ll act based on that. But everyone else’s choices are based on their predictions of you, and you’re constantly leaking information about your decision-making process. So you can’t generate credences about how others will decide, then use them to make your decision, because your eventual decision is heavily correlated with other people’s decisions. You’re not just intervening on the curve, you are the curve.

More precisely, social behavior is a domain where the correlations between people’s decisions are strong enough to make causal decision theory misleading. Instead it’s necessary to use either evidential decision theory or functional decision theory. Both of these track the non-causal dependencies between your decision and other people’s decisions. In particular, both of them involve a step where you reason “if I do something, then it’s more likely that others will do the same thing”—even when they have no way of finding out about your final decision before making theirs. So you’re not searching for a decision which causes good things to happen; instead you’re searching for a desirable fixed point for simultaneous correlated decisions by many people.

I’ve put this in cold, rational language. But what we’re talking about is nothing less than a leap of faith. Imagine sitting at home, trying to decide whether to join a coup to depose a hated ruler. Imagine that if enough of you show up on the streets at once, loudly and confidently, then you’ll succeed—but that if there are only a few of you, or you seem scared or uncertain, then the regime won’t be cowed, and will arrest or kill all of you. Imagine your fate depending on something you can’t control at all except via the fact that if you have faith, others are more likely to have faith too. It’s a terrifying, gut-wrenching feeling.

Perhaps the most eloquent depiction of this feeling comes from Soren Kierkegaard in his book Fear and Trembling. Kierkegaard is moved beyond words by the story of Abraham, who is not only willing to sacrifice his only son on God’s command—but somehow, even as he’s doing it, still believes against all reason that everything will turn out alright. Kierkegaard struggles to describe this level of pure faith as anything but absurd. Yet it’s this absurdity that is at the heart of social coordination—because you can never fully reason through what happens when other people predict your predictions of their predictions of… To cut through that, you need to simply decide, and hope that your decision will somehow change everyone else’s decision. You walk out your door to possible death because you believe, absurdly, that doing so will make other people simultaneously walk out of the doors of their houses all across the city.

A modern near-synonym for “leap of faith” is “hyperstition”: an idea that you bring about by believing in it. This is Nick Land’s term, which he seems to use primarily for larger-scale memeplexes—like capitalism, the ideology of progress, or AGI. Deciding whether or not to believe in these hyperstitions has some similarity to deciding whether or not to join a coup, but the former are much harder to reason about by virtue of their scale. We can think of hyperstitions as forming the background landscape of psychosocial reality: the commanding heights of ideology, the shifting sands of public opinion, and the moral mountain off which we may—or may not—take a leap into the sea of faith.

Becoming a knight of faith

Unfortunately, the mere realization that social reality is composed of hyperstitions doesn’t give you social superpowers, any more than knowing Newtonian mechanics makes you a world-class baseball player. So how can you decide when and how to actually swing for the fences? I’ll describe the tension between having too much and too little faith by contrasting three archetypes: the pragmatist, the knight of resignation, and the knight of faith.

The pragmatist treats faith as a decision like any other. They figure out the expected value of having faith—i.e. of adopting an “irrationally” strong belief—and go for it if and only if it seems valuable enough. Doing that analysis is difficult: it requires the ability to identify big opportunities, judge people’s expectations, and know how your beliefs affect common knowledge. In other words, it requires skill at politics, which I’ll talk about much more in a follow-up post.

But while pragmatic political skill can get you a long way, it eventually hits a ceiling—because the world is watching not just what you do but also your reasons for doing it. If your choice is a pragmatic one, others will be able to tell—from your gait, your expression, your voice, your phrasing, and of course how your position evolves over time. They’ll know that you’re the sort of person who will change your mind if the cost/benefit calculus changes. And so they’ll know that they won’t truly be able to rely on you—that you don’t have sincere faith.

Imagine, by contrast, someone capable of fighting for a cause no matter how many others support them, no matter how hopeless it seems. Even if such a person never actually needs to fight alone, the common knowledge that they would makes them a nail in the fabric of social reality. They anchor the social behavior curve not merely by adding one more supporter to their side, but by being an immutable fixed point around which everyone knows (that everyone knows that everyone knows…) that they must navigate.

The archetype that Kierkegaard calls the knight of resignation achieves this by being resigned to the worst-case outcome. They gather the requisite courage by suppressing their hope, by convincing themselves that they have nothing to lose. They walk out their door having accepted death, with a kind of weaponized despair.

The grim determination of the knight of resignation is more reliable than pragmatism. But if you won’t let yourself think about the possibility of success, it’s very difficult to reason well about how it can be achieved, or to inspire others to pursue it. So what makes Kierkegaard fear and tremble is not the knight of resignation, but the knight of faith—the person who looks at the worst-case scenario directly, and (like the knight of resignation) sees no causal mechanism by which his faith will save him, but (like Abraham) believes that he will be saved anyway. That’s the kind of person who could found a movement, or a country, or a religion. It's Washington stepping down from the presidency after two terms, and Churchill holding out against Nazi Germany, and Gandhi committing to non-violence, and Navalny returning to Russia—each one making themselves a beacon that others can’t help but feel inspired by.

What’s the difference between being a knight of faith, and simply falling into wishful thinking or delusion? How can we avoid having faith in the wrong things, when the whole point of faith is that we haven’t pragmatically reasoned our way into it? Kierkegaard has no good answer for this—he seems to be falling back on the idea that if there’s anything worth having faith in, it’s God. But from the modern atheist perspective, we have no such surety, and even Abraham seems like he’s making a mistake. So on what basis should we decide when to have faith?

I don’t think there’s any simple recipe for making such a decision. But it’s closely related to the difference between positive motivations (like love or excitement) and negative motivations (like fear or despair). Ultimately I think of faith as a coordination mechanism grounded in values that are shared across many people, like moral principles or group identities. When you act out of positive motivation towards those values, others will be able to recognize the parts of you that also arise in them, which then become a Schelling point for coordination. That’s much harder when you act out of pragmatic interests that few others share—especially personal fear. (If you act out of fear for your group’s interests, then others may still recognize themselves in you—but you’ll also create a neurotic and self-destructive movement.)

I talk at length about how to replace negative motivation with positive motivation in this series of posts. Of course, it’s much easier said than done. Negative motivations are titanic psychological forces which steer most decisions most people make. But replacing them is worth the effort, because it unlocks a deep integrity: the ability to cooperate with different parts of yourself all the way down, without relying on deception or coercion. And that in turn allows you to cooperate with copies of those parts that live in other people, to act as more than just yourself. You become an appendage of a distributed agent, held together by a sense of justice or fairness or goodness that is shared across many bodies, that moves each one of them in synchrony as they take to the streets, with the knight of faith in the lead.

24 comments


comment by romeostevensit · 2025-02-24T05:25:54.883Z · LW(p) · GW(p)

Book reviews that bring in very substantive content from other relevant books are probably the type of post I find the most consistently valuable.

comment by Knight Lee (Max Lee) · 2025-02-23T09:13:36.612Z · LW(p) · GW(p)

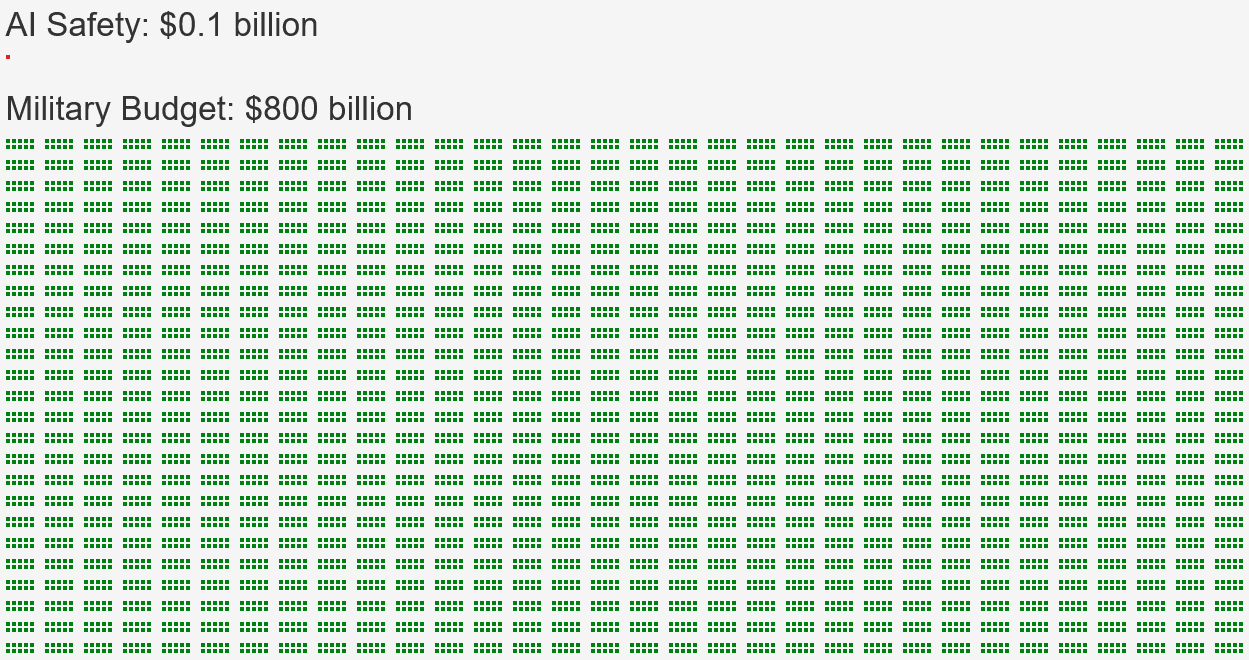

I think society is very inconsistent about AI risk because the "Schelling point" is that people feel free to believe in a sizable probability of extinction from AI without looking crazy, but nobody dares argue for the massive sacrifices (spending or regulation or diplomacy) which actually fit those probabilities.

The best guess by basically every group of people is that, with 2%-12% probability, AI will cause a catastrophe (kill 10% of people). At these probabilities, AI safety should be an equal priority to the military!

Yet at the same time, nobody is doing anything about it. Because they all observe everyone else doing nothing about it. Each person thinks the reason that "everyone else" is doing nothing, is that they figured out good reasons to ignore AI risk. But the truth is that "everyone else" is doing nothing for the same reason that they are doing nothing. Everyone else is just following everyone else.

This "everyone following everyone else" inertia is very slowly changing, as governments start giving a bit of lip-service and small amounts of funding to organizations which are half working on AI Notkilleveryoneism. But this kind of change is slow and tends to take decades. Meanwhile many AGI timelines are less than one decade.

↑ comment by Wofsen · 2025-04-18T20:49:16.434Z · LW(p) · GW(p)

Have you incorporated Government Notkilleveryoneism into your model? Rational people interested in not dying ought to invest in AI safety in proportion to the likelihood that they expect to be killed by AI. Rational governments ought to invest in AI safety in proportion to the likelihood they expect to be killed by AI. But, as we see from this article, what kills governments is not what kills people.

The government of Acheampong is in more danger from a miscalculated letter than from an AI-caused catastrophe that kills 10% of people or more, so long as important people like Acheampong are not among the 10%. The government of Acheampong, as a mimetic agent, should not care about alignment as much as it should care about having a military; governments maintain standing armies for protection against threats from within and without their borders.

You do not have to expect a government to irrationally invest in AI alignment anyway. Private investment in AI in 2024 was $130 billion. There is plenty of private money which could dramatically increase funding for AI safety. Yet it has not happened. Here are some potential explanations.

1) People do not think there is AI risk, because other people do not think there is AI risk, the issue you mentioned.

2) When people must put their money where their mouth is, their revealed preference is that they see no AI risk.

3) People think there is AI risk, but see no way to invest in mitigating AI risk. They would rather invest in flood insurance.

If option 3 is the case, Mr. Lee, you should create AI catastrophe insurance. You can charge a premium akin to high-risk life insurance. You can invest some of your revenue in assets you think will survive the AI catastrophe and then distribute these to policyholders or policyholders' next of kin in case of catastrophe, and you can invest the rest in AI safety. If there is an AI catastrophe, you will be physically prepared. If there is not an AI catastrophe, you will profit handsomely from the success of your AI safety investments and the service you would have done in a counterfactual world. You said yourself "nobody is doing anything about it." This is your chance to do something. Good luck. I'm excited to hear how it goes.

↑ comment by Knight Lee (Max Lee) · 2025-04-18T23:27:36.830Z · LW(p) · GW(p)

:) thank you so much for your thoughts.

Unfortunately, my model of the world is that if AI kills "more than 10%," it's probably going to be everyone and everything, so the insurance won't work according to my beliefs.

I only defined AI catastrophe as "killing more than 10%" because it's what the survey by Karger et al. asked the participants.

I don't believe in option 2, because if you asked people to bet against AI risk with unfavourable odds, they probably wouldn't feel too confident against AI risk.

↑ comment by Da_Peach · 2025-04-18T13:19:11.655Z · LW(p) · GW(p)

I think this particular issue has less to do with public sentiment & more to do with problems that require solutions which would inconvenience you today for a better tomorrow.

Like climate change: it is an issue everyone recognizes will massively impact the future negatively (to the point where multiple forecasts suggest trillions of dollars of losses). Still, since fixing this issue will cause prices of everyday goods to rise significantly and force people into switching to green alternatives en masse, no one advocates for solutions. News articles get released each year stating record high temperatures & natural disaster rates; people complain that the seasons have been getting more extreme each passing year (for example, the monsoon in Northern India has been really inconsistent for several years now - talking from personal experience). Yet, changes are gradual (carbon tax is still wildly unpopular, and even though the public sentiment around electric cars has been getting tame, people still don't advocate for anti-car measures).

Compare this to Y2K; it was known that the "bug" would be massively catastrophic, and even though it was massively expensive to fix, they did fix it. Why? Because the issue didn't affect the lives of common folks substantively.

Though my model is certainly not all-encompassing, like how the problem of CFCs causing Ozone depletion in the upper atmosphere was largely solved, even though it very much did impact people's everyday lives & did cost a lot of money & even required global co-operation. I guess there is a tipping point on the inconvenience caused v/s perceived threat graph when people start mobilizing for the issue.

PS: It's funny how I ended at the crux of the original article, you should now be able to apply threshold modelling for this issue, since perceived threat largely depends on how many other people (in your local sphere) are shouting that this issue needs everyone's attention.

Replies from: Max Lee↑ comment by Knight Lee (Max Lee) · 2025-04-19T19:18:34.171Z · LW(p) · GW(p)

You're very right, in addition to people not working on AI risk because they don't see others working on it, you also have the problem that people aren't interested in working on future risk to begin with.

I do think that military spending is a form of addressing future risks, and people are capable of spending a lot on it.

I guess military spending doesn't inconvenience you today, because there already are a lot of people working in the military, who would lose their jobs if you reduced military spending. So politicians would actually make more people lose their jobs if they reduced military spending.

Hmm. But now that you bring up climate change, I do think that there is hope... because some countries do regulate a lot and spend a lot on climate change, at least in Europe. And it is a complex scientific topic which started with the experts.

Maybe the AI Notkilleveryone movement should study what worked and failed for the green movement.

Replies from: Da_Peach↑ comment by Da_Peach · 2025-04-20T07:41:36.437Z · LW(p) · GW(p)

That's an interesting idea. The military would undoubtedly care about AI alignment — they'd want their systems to operate strictly within set parameters. But the more important question is: do we even want the military to be investing in AI at all? Because that path likely leads to AI-driven warfare. Personally, I'd rather live in a world without autonomous robotic combat or AI-based cyberwarfare.

But as always, I will pray that some institution (like the EU) leads the charge & starts instilling it into people's heads that this is a problem we must solve.

Replies from: Max Lee↑ comment by Knight Lee (Max Lee) · 2025-04-20T08:32:40.730Z · LW(p) · GW(p)

Oops, I didn't mean we should involve the military in AI alignment. I meant the military is an example of something working on future threats, suggesting that humans are capable of working on future threats.

I think the main thing holding back institutions is that public opinion does not believe in AI risk. I'm not sure how to change that.

Replies from: Da_Peach↑ comment by Da_Peach · 2025-04-20T09:06:10.417Z · LW(p) · GW(p)

To sway public opinion about AI safety, let us consider the case of nuclear warfare—a domain where long-term safety became a serious institutional concern. Nuclear technology wasn’t always surrounded by protocols, safeguards, and watchdogs. In the early days, it was a raw demonstration of power: the bombs dropped on Hiroshima and Nagasaki were enough to show the sheer magnitude of destruction possible. That spectacle shocked the global conscience. It didn’t take long before nation after nation realized that this wasn't just a powerful new toy, but an existential threat. As more countries acquired nuclear capabilities, the world recognized the urgent need for checks, treaties, and oversight. What began as an arms race slowly transformed into a field of serious, respected research and diplomacy—nuclear safety became a field in its own right.

The point is: public concern only follows recognition of risk. AI safety, like nuclear safety, will only be taken seriously when people see it as more than sci-fi paranoia. For that shift to happen, we need respected institutions to champion the threat. Right now, it’s mostly academics raising the alarm. But the public—especially the media and politicians—won’t engage until the danger is demonstrated or convincingly explained. Unfortunately for the AI safety issue, evidence of AI misalignment causing significant trouble will probably mean it's too late.

Adding fuel to this fire is the fact that politicians aren't gonna campaign about AI safety if the corpos in your country don't want to & your enemies are already neck and neck in AI dev.

In my subjective opinion, we need the AI variant of Hiroshima. But I'm not too keen on this idea, for it is a rather dreadful thought.

Edit: I should clarify what I mean by "the AI variant of Hiroshima." I don't think a large-scale inhuman military operation is necessary (as I already said, I don't want AI warfare). What I mean instead is something that causes significant damage & makes it to newspaper headlines worldwide. Examples: strong evidence that AI swayed the presidential election one way; a gigantic economic crash caused by a rogue AI (not the AI bubble bursting); millions of jobs being lost in a short timeframe because of one revolutionary model, which then snaps because of misalignment; etc. These are still dreadful, but at least no human lives are lost & it gets the point across that AI safety is an existential issue.

Replies from: Max Lee↑ comment by Knight Lee (Max Lee) · 2025-04-20T18:04:14.387Z · LW(p) · GW(p)

I think different kinds of risks have different "distributions" of how much damage they do. For example, the majority of car crashes cause no injuries (but damage to the cars), a smaller number cause injuries and some cause fatalities, and the worst ones can cause multiple fatalities.

For other risks like structural failures (of buildings, dams, etc.) the distribution has a longer tail: in the worst case very many people can die. But the distribution still tapers off towards greater numbers of fatalities, and people sort of have a good idea of how bad it can get before the worst version happens.

For risks like war, the distribution has an even longer tail, and people are often caught by surprise how bad they can get.

But for AI risk, the distribution of damage caused is very weird. You have one distribution for AI causing harm due to its lack of common sense, where it might harm a few people, or possibly cause one death. Yet you have another distribution for AI taking over the world, with a high probability of killing everyone, a high probability of failing (and doing zero damage), and only a tiny bit of probability in between.

It's very very hard to learn from experience in this case. Even the biggest wars tend to surprise everyone (despite having a relatively more predictable distribution).

comment by habryka (habryka4) · 2025-04-15T20:44:45.622Z · LW(p) · GW(p)

Promoted to curated: I quite liked this post. The basic model feels like one I've seen explained in a bunch of other places, but I did quite like the graphs and the pedagogical approach taken in this post, and I also think book reviews continue to be one of the best ways to introduce new ideas.

Replies from: ricraz↑ comment by Richard_Ngo (ricraz) · 2025-04-15T22:00:35.745Z · LW(p) · GW(p)

Thanks! Note that Eric Neyman gets credit for the graphs.

Out of curiosity, what are the other sources that explain this? Any worth reading for other insights?

Replies from: criticalpoints↑ comment by criticalpoints · 2025-04-23T03:13:09.686Z · LW(p) · GW(p)

I'll second that I liked this post, but it felt mostly familiar to me. I've seen these ideas discussed in other game theory posts on Lesswrong.

In any discussion of Schelling points, the natural follow-up question is always: "Wait...is everything secretly a Keynesian Beauty Contest?!?"

comment by samuelshadrach (xpostah) · 2025-02-24T18:29:17.097Z · LW(p) · GW(p)

I love this post.

1. Another important detail to track is what the leader says in private versus what they say in public. Typically you may want to first acquire data and attempt to trigger these cascades in private and in smaller groups, before you try triggering them across your nation or planet.

2. I also think the Internet is going to shift these dynamics, by forcing private spheres of life to shrink or even become non-existent, and by increasing the number of events that are in public and therefore have potential to trigger these cascades.

For example, someone might just be experimenting with an unusual ideology or relationship or lifestyle in their private life, but if they and 50 others end up posting about this on YouTube, and a bunch of people end up reviewing it favourably, it could quickly become a worldwide phenomenon practised by millions.

A corollary is that if someone powerful wants their current ideas to not be threatened by competing ideas they may need to even more aggressively shut down anyone trying anything else in public, even if it’s only being tried at a small scale. Shutting down the more popular ideas might just mean more public attention gets freed up and directed to the less popular ideas.

I am not sure a monopoly on violence is sufficient to shut down ideas in today’s world. People still smuggle hard drives and phones into North Korea for example. A real-time system to smuggle information could change the dynamics of military coups in the future. Note that this information could even include information about people’s preferences, who sides with who, trustworthy video proofs, leaks of private information of the elites etc.

P.S. Shameless plug but I’m currently a full-time researcher trying to figure this stuff out. (Implications of the internet.) Feel free to read my website and reach out if interested.

comment by jasoncrawford · 2025-04-19T23:13:07.462Z · LW(p) · GW(p)

This pairs well with Scott Alexander's Kolmogorov Complicity And The Parable Of Lightning

comment by danielechlin · 2025-03-01T18:44:48.738Z · LW(p) · GW(p)

Camus specifically criticized that Kierkegaard leap of faith in Myth of Sisyphus. Would be curious if you've read it and if it makes more sense to you than me lol. Camus basically thinks you don't need to make any ultimate philosophical leap of faith. I'm more motivated by the weaker but still useful half of his argument which is just that nihilism doesn't imply unhappiness, depression, or say you shouldn't try to make sense of things. Those are all as wrong as leaping into God faith.

comment by Purplehermann · 2025-02-24T11:00:06.268Z · LW(p) · GW(p)

I found the graph confusing: why is one set of points unstable and the other stable?

Replies from: Measure↑ comment by Measure · 2025-02-24T14:50:58.900Z · LW(p) · GW(p)

Think of the derivative of the red curve. It represents something like "for each marginal person who switched their behavior, how many total people would switch after counting the social effects of seeing that person's switch". If the slope is less than one, then small effects have even-smaller social effects and fizzle out without a significant change. If the slope is greater than one, then small effects compound, radically shifting the overall expression of support.
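The slope argument above can be sketched numerically. This is a minimal illustration under a strong simplifying assumption: the slope is treated as constant, so each new switcher induces `slope` further switchers in the next round (the real curve is not a straight line). The function name `cascade` and the population cap of 1000 are hypothetical choices made for the example, not anything taken from the post.

```python
def cascade(initial_switchers, slope, population=1000, max_rounds=200):
    """Total number of switchers after repeated rounds of social influence.

    Assumption: `slope` is constant, so every round produces `slope` times
    as many new switchers as the round before it.
    """
    total = float(initial_switchers)
    new = float(initial_switchers)
    for _ in range(max_rounds):
        new *= slope              # social effect of the previous round
        if new < 1e-9:            # the effect has fizzled out
            break
        total += new
        if total >= population:   # everyone has switched
            return float(population)
    return total


fizzle = cascade(10, slope=0.5)   # slope below one: converges near 20
runaway = cascade(10, slope=1.5)  # slope above one: hits the population cap
print(fizzle, runaway)
```

With a slope below one the total is a convergent geometric series, so ten initial switchers only ever become about twenty. With a slope above one the same ten switchers grow until the whole population has switched.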

comment by PoignardAzur · 2025-04-19T09:27:03.046Z · LW(p) · GW(p)

One aspect of this I'm curious about is the role of propaganda, and especially russian-bot-style propaganda.

Under the belief cascade model, the goal may not be to make arguments that persuade people, so much as it is to occupy the space, to create a shared reality of "Everyone who comments under this Youtube video agrees that X". That shared reality discourages people from posting contrary opinions, and creates the appearance of unanimity.

I wonder if sociologists have ever tried to test how susceptible propaganda is to cascade dynamics.

comment by YonatanK (jonathan-kallay) · 2025-04-18T17:58:43.790Z · LW(p) · GW(p)

Richard, reading this piece with consideration of other pieces you've written/delivered about Effective Altruism, such as your Lessons from my time in Effective Altruism [EA · GW] and your recent provocative talk at EA Global Boston [LW · GW], leads me to wonder what it is (if anything) that leads you to self-identify as an Effective Altruist? There may not be any explicit EA shibboleth, but it seems to me to nevertheless entail a set of particular methods, and once you have moved beyond enough of them it may not make any sense to call oneself an Effective Altruist.

My mental model of EA has it intentionally operating at the margins, in the same way that arbitrageurs do, maximizing returns (specifically social ones) to a small number of actors willing to act not just in contrary fashion to but largely in isolation from the larger population. Once we recognize the wisdom of faith it seems to me we are moving back into the normie fold, and in that integration or synthesis the EA exclusivity habit may be a hindrance.

comment by JMiller · 2025-04-18T13:24:33.126Z · LW(p) · GW(p)

I really enjoyed this post, Richard! The object level message is inspiring, but I also found myself happily surprised at the synthesis of three books which, on their surface, are about quite different subjects! Just throwing my +1 here as someone interested in reading more posts like this.

comment by YonatanK (jonathan-kallay) · 2025-04-16T22:58:55.473Z · LW(p) · GW(p)

A relevant, very recent opinion piece that has been syndicated around the country, explaining the universal value of faith:

https://www.latimes.com/opinion/story/2025-03-29/it-is-not-faith-that-divides-us

comment by Afterimage · 2025-04-16T02:16:08.897Z · LW(p) · GW(p)

Great article, I found the decision theory behind "if they think I think they think..." very interesting. I'm a bit confused about the knight of faith. In my mental model, people who look like the knight of faith aren't accepting the situation is hopeless, but rather powering on through some combination of mentally minimizing barriers, pinning hopes on small odds and wishful thinking.

For example, let's put it in the context of flipping 10 coins.

Rationalist - I'm expecting 5 heads

My model of knight of faith - I'm expecting 10 heads because there's a slight chance and I really need it to be true.

Knight of faith as described - I'm expecting 20 heads and I'm going to base my decisions on 20 heads actually happening.

Maybe that's an obvious distinction, but in that case why bring up the knight of faith instead of just focusing on the power of wishful thinking in some situations?
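To put rough numbers on the three stances, here is a minimal Python sketch of the binomial arithmetic, assuming the coins are fair and independent. The function name `prob_heads` is only illustrative.

```python
from math import comb


def prob_heads(k, n=10, p=0.5):
    """Probability of exactly k heads in n independent flips with P(heads) = p."""
    if k < 0 or k > n:
        return 0.0  # more heads than coins is impossible
    return comb(n, k) * p**k * (1 - p) ** (n - k)


expected_heads = 10 * 0.5   # the "rationalist" expectation
p_five = prob_heads(5)      # most likely single outcome
p_ten = prob_heads(10)      # the "slight chance" case
p_twenty = prob_heads(20)   # impossible with 10 coins

print(expected_heads, p_five, p_ten, p_twenty)
```

Under those assumptions, 10 heads has probability 1/1024, while 20 heads from 10 coins has probability exactly zero, which is the gap between the second and third stances.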