All the Following are Distinct

post by Gianluca Calcagni (gianluca-calcagni) · 2024-08-02T16:35:51.815Z · 3 comments


In an artificial being, all the following:

- Consciousness

- Emotionality

- Intelligence

- Personality

- Creativity

- Volition

are distinct properties that, in theory, may be activated independently. Let me explain.

What I Hope to Achieve

By publishing this post, I hope to develop and standardise some useful terms and concepts that appear in many other posts. I also wish to explain in which sense some properties are linked to others - and, importantly, when they are not. I hope this post will be of help and provide a common context for future discussions.

What I am not trying to achieve is some universal definition of consciousness/intelligence/… simply because that’s too hard! To avoid controversies, I will focus on operational facets and nothing more[1]. I am nonetheless aware that the final result will be very opinionated, so feel free to challenge me and open my mind.

The Basics

Suppose you are walking around a new planet and you find some kind of system (artificial or natural - it doesn’t matter) that is able to “autonomously” execute general computations (digital, analog, quantum - it doesn’t matter, nor does it matter which computations or why). Think of it as a universal Turing machine[2]: we’ll call such a system a computational being. Of course, I could just call it a computer, but most people don’t recognise themselves as “computers”, so the term carries some semantic bias; also, would you consider an ecosystem a “computer”? And yet an ecosystem can run computations, if you are inclined to interpret the lifecycle of its inhabitants in that way while they (unknowingly) maintain a stable equilibrium. So, let me use “computational being” as an umbrella term and as the foundation for the rest of the discussion.

- Natural examples of computational beings: any living being is also a computational being. Selfish genes favour, by means of natural selection, computations that support their own reproduction. The competition between genes can sometimes lead to autonomous computational beings composed of many cells (such as ourselves).

- Artificial examples of computational beings: any computing machine is a computational being. Even server farms can fit the definition, as long as there is a meaningful way to describe their collective computation as "autonomous" from the rest of the world. Can a computational being be part of a larger computational being? Absolutely - as long as it makes sense from some point of view.

What does a “computational being” usually do? (1) It connects to a network consisting of other computational beings; (2) it receives communications as input, either from external sources or self-prompted; (3) it sends communications as output, either to external parties or to itself; (4) it alters its own states according to multiple factors, both internal and external; (5) it alters the physical world, either by communicating or by performing work. None of that is strictly required for a computational being, but that is usually what happens and what makes it interesting.

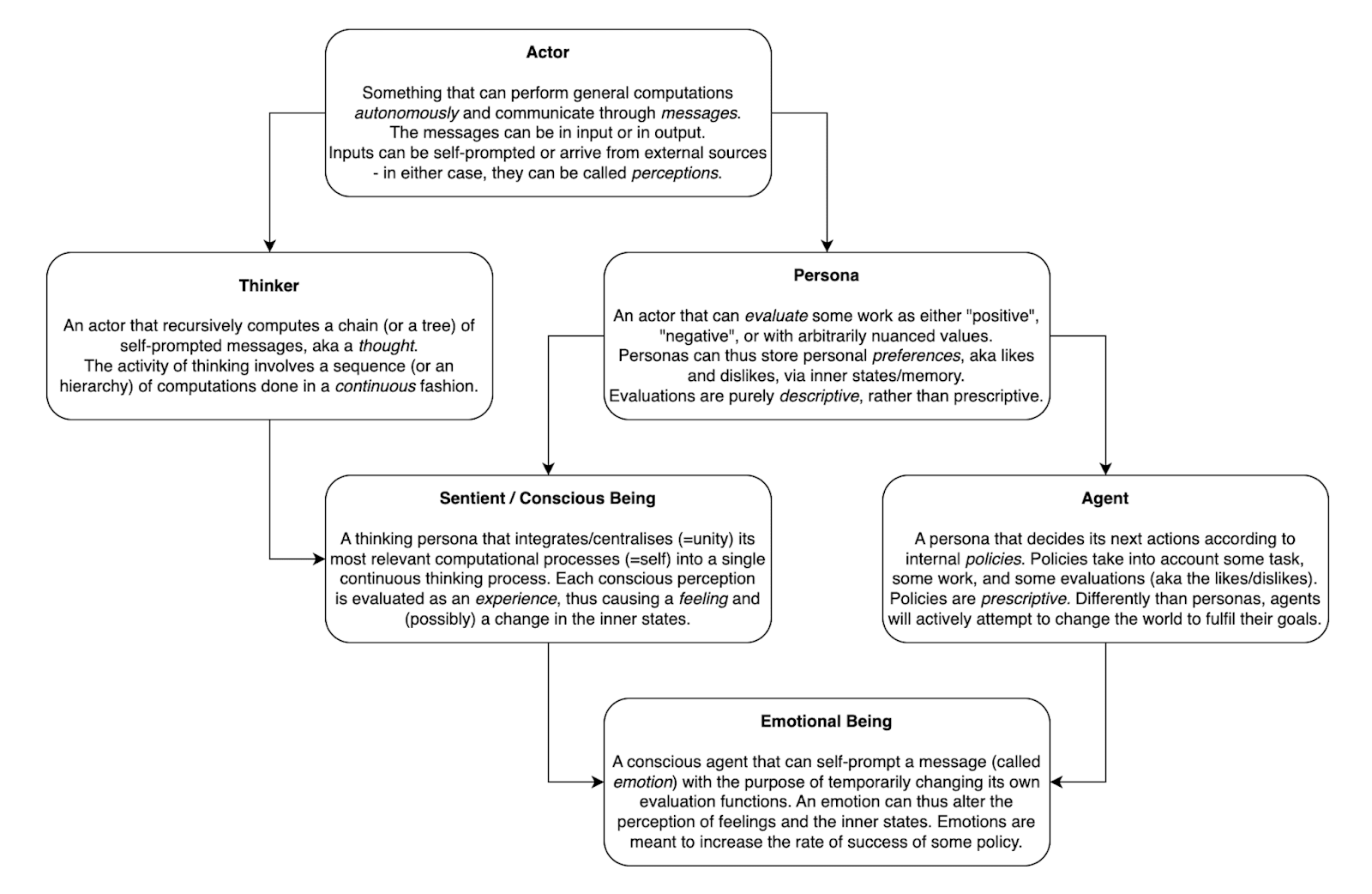

Let me refer to a computational being that communicates in a network as an actor[3]. In what follows, what is communicated (or why) doesn’t matter! Whatever is received as input will simply be called a perception[4] of the actor.
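To make the vocabulary concrete, here is a minimal Python sketch of an actor. It assumes, purely for illustration, that messages are plain Python objects and that the "network" is a dictionary from names to actors; neither detail comes from the post. It covers items (1) to (4) of the list above, while altering the physical world is not modelled.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Actor:
    """A computational being that communicates in a network (illustrative sketch)."""
    name: str
    state: dict = field(default_factory=dict)
    inbox: deque = field(default_factory=deque)
    perceptions: list = field(default_factory=list)

    def receive(self, message):
        # Whatever arrives as input is queued; its content does not matter here.
        self.inbox.append(message)

    def send(self, network, recipient, message):
        # Output goes to another actor, or to itself when recipient == self.name
        # (a self-prompt). The dict-based network is a hypothetical stand-in.
        network[recipient].receive(message)

    def step(self):
        # Consume one input, record it as a perception and alter internal state.
        if not self.inbox:
            return None
        message = self.inbox.popleft()
        self.perceptions.append(message)
        self.state["last_input"] = message
        return message


network = {"a": Actor("a"), "b": Actor("b")}
network["a"].send(network, "b", "hello")
network["a"].send(network, "a", "note to self")
network["b"].step()
network["a"].step()
print(network["b"].perceptions)  # ['hello']
print(network["a"].perceptions)  # ['note to self']
```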

The First Round of Complications

Let’s consider what happens if an actor constantly sends self-prompts to itself, in a recursive way. You can envision this operation as an actor that is thinking about something: a stream of thoughts! Under this interpretation, you could call “thinking” even some brain activity that is entirely subconscious, or some computer process that acts as a scheduler. That is not the point, though: the point is the “iteration over time”, aka the fact that thinking is done in a continuous fashion. We’ll get back to this later; for now, let’s call this kind of actor a thinker.
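A thinker can be sketched as a loop in which each output is fed back as the next input. In the Python below, the step functions are hypothetical placeholders: the sketch only illustrates the iteration over time, not what is being computed.

```python
from typing import Callable, List, TypeVar

T = TypeVar("T")


def think(step: Callable[[T], T], initial_prompt: T, steps: int) -> List[T]:
    """Run a stream of thoughts: every output becomes the next self-prompt."""
    stream = [initial_prompt]
    thought = initial_prompt
    for _ in range(steps):
        thought = step(thought)  # the actor prompts itself with its own output
        stream.append(thought)
    return stream


# Hypothetical step functions: a scheduler-like counter and a "rumination".
print(think(lambda n: n + 1, 0, 4))        # [0, 1, 2, 3, 4]
print(think(lambda s: s + "?", "why", 2))  # ['why', 'why?', 'why??']
```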

Let’s now consider another example: what happens if the actor perceives as input something that is very unpleasant? It seems natural for actors to run an evaluation function that allows them to gauge the relevance of some perception, i.e. whether they like it or dislike it (neutral is also fine). Such an evaluation can be nuanced: it can return quantitative measures (numerical), qualitative dimensions (categorical), or a combination of both. Which thing is liked, how much, or how the actor reacts after the evaluation - none of that matters! What matters is that an evaluation function exists and is applied often. We’ll call this kind of actor a persona, because it shows personal preferences[5].

- Biological beings have:

  - innate universal preferences (e.g. survival)

  - innate personal preferences (e.g. a sweet tooth)

  - acquired personal preferences (e.g. reading books)[6].

- Neural networks have:

  - weights (that encode information, including the model’s preferences)

  - biases inherited from the training data (e.g. gender bias)

  - consistent inclinations after fine-tuning (e.g. a large language model being polite and helpful).
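An evaluation function that returns both a quantitative score and a qualitative label could look like the following Python sketch. The preference table and the sign-based labelling rule are assumptions made for illustration; the post deliberately leaves open what is liked and how much.

```python
from typing import Callable, Dict, NamedTuple


class Evaluation(NamedTuple):
    score: float   # quantitative: negative = disliked, positive = liked
    category: str  # qualitative label derived from the score


def make_persona(preferences: Dict[str, float]) -> Callable[[str], Evaluation]:
    """Build an evaluation function from a table of (hypothetical) preferences."""

    def evaluate(perception: str) -> Evaluation:
        score = preferences.get(perception, 0.0)  # unknown perceptions are neutral
        if score > 0:
            category = "liked"
        elif score < 0:
            category = "disliked"
        else:
            category = "neutral"
        return Evaluation(score, category)

    return evaluate


evaluate = make_persona({"sugar": 0.8, "loud noise": -0.6})
print(evaluate("sugar"))  # Evaluation(score=0.8, category='liked')
print(evaluate("rain"))   # Evaluation(score=0.0, category='neutral')
```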

The Second Round of Complications

What happens if we combine a thinker and a persona, and then provide it with an input? We get something quite close to an experience, i.e. something perceived consciously in the stream of thoughts, whose evaluation is incorporated into the stream and alters it[7]. I am not saying that this is the definition of consciousness: I am just saying that consciousness requires an integration[8] of the most relevant computational processes into a single continuous recursive evaluation[9].

With a debatable abuse of language, we’ll call this kind of actor weakly conscious (or weakly sentient). Given the abuse, I feel compelled to clarify what this definition does (and does not) entail:

- The definition implies the presence of experiences and feelings (the qualia).

- The definition does not imply self-awareness, aka understanding one’s own individuality.

- The definition does not imply the narrative self, aka the inner voice within one’s head.

- The definition does not imply an ego, aka (according to some researchers) the ability to develop a coherent story that feels familiar and that reduces cognitive dissonance.

- The definition does not imply having a theory of mind, aka forming an idea about others’ beliefs, desires, intentions, emotions, and thoughts.

- The definition does not imply agentic behaviour: even if the actor dislikes something, that does not mean that the actor will necessarily do something about it. Some conscious beings may be agentic, while some others may simply act as detached observers.
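Structurally, the combination of a thinker and a persona can be sketched as a loop in which each perception is evaluated and the evaluation is folded into the next thought. This Python sketch only illustrates the two ingredients named above (continuity, and integration into a single recursive evaluation); it is not a claim that such a loop is conscious. The running "mood" and its 0.5 decay factor are arbitrary assumptions.

```python
from typing import Callable, List, Tuple


def integrated_stream(
    evaluate: Callable[[str], float], perceptions: List[str]
) -> List[Tuple[str, float, float]]:
    """Evaluate each perception and let the evaluation alter the stream itself."""
    mood = 0.0  # hypothetical summary carried from one thought to the next
    stream = []
    for perception in perceptions:
        score = evaluate(perception)
        # The next state depends on the previous state AND on the new evaluation
        # (the 0.5 decay is an arbitrary illustrative choice).
        mood = 0.5 * mood + score
        stream.append((perception, score, mood))
    return stream


preferences = {"sun": 1.0, "storm": -1.0}
stream = integrated_stream(lambda p: preferences.get(p, 0.0), ["sun", "storm", "sun"])
for entry in stream:
    print(entry)
# ('sun', 1.0, 1.0)
# ('storm', -1.0, -0.5)
# ('sun', 1.0, 0.75)
```

The same perception ("sun") leads to a different state the second time, because earlier evaluations are still part of the stream.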

Let’s proceed with more complex behaviours: suppose that a persona does not like what it sees around it, and it is considering taking action to solve the issue. But which action will actually solve the issue? The question is harder than it looks! That is why we are going to skip it and focus instead on the selection of a policy function, whose purpose is to generate a list[10] of possible next actions based on the latest inputs and the current state of the persona. The presence of a policy function makes the persona an agent and provides it with volition[11]. A highly refined policy function will likely take the form of a global decision utility function but, as I mentioned before, we are going to skip that discussion.
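Since the policy function is described in the footnotes as producing a probability distribution over next actions, a minimal Python sketch could use a softmax over a utility score. The softmax, the temperature parameter and the utility function (modelled on the rest-versus-money example from the footnotes) are my assumptions, not the post's definition.

```python
import math
import random
from typing import Callable, Dict, List


def policy(
    state: Dict[str, float],
    actions: List[str],
    utility: Callable[[Dict[str, float], str], float],
    temperature: float = 1.0,
) -> Dict[str, float]:
    """Return a probability distribution over possible next actions (softmax sketch)."""
    scores = [utility(state, a) / temperature for a in actions]
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]  # subtract max for numerical stability
    total = sum(weights)
    return {a: w / total for a, w in zip(actions, weights)}


def act(distribution: Dict[str, float], rng: random.Random) -> str:
    """Sample a single action: only one option can be fulfilled at a time."""
    actions = list(distribution)
    return rng.choices(actions, weights=[distribution[a] for a in actions], k=1)[0]


def utility(state: Dict[str, float], action: str) -> float:
    # Hypothetical trade-off: resting is worth more when tired, working when paid more.
    return state["tiredness"] if action == "rest" else state["pay_offered"]


tired = policy({"tiredness": 2.0, "pay_offered": 0.5}, ["rest", "work"], utility)
well_paid = policy({"tiredness": 0.5, "pay_offered": 2.0}, ["rest", "work"], utility)
print(tired, well_paid)
print(act(tired, random.Random(0)))
```

The same policy favours a different option as the state changes, which matches the idea that the chosen option depends on how tired you are or how much money you are offered.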

Final point: some agents may be conscious and feel emotions. What does that mean? Emotions are self-prompts that are decided by some internal policy function, and are meant to alter your evaluation of things. If you are angry, you might take offense at an innocent joke; if you are sad, you may cry while watching a playful puppy. Nature gave us emotions and made us emotional beings to improve our chances of survival. Should AI models be emotional as well?
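One way to read this definition is as an internal state, chosen by a policy from recent experiences, that then biases every later evaluation. The Python sketch below assumes fixed numeric biases and thresholds chosen purely for illustration.

```python
from typing import Callable, List

# Hypothetical emotional biases added to every evaluation.
BIAS = {"angry": -0.5, "sad": -0.3, "neutral": 0.0, "happy": 0.3}


def choose_emotion(recent_scores: List[float]) -> str:
    """Internal policy (illustrative thresholds): pick an emotion from recent evaluations."""
    average = sum(recent_scores) / len(recent_scores) if recent_scores else 0.0
    if average < -0.5:
        return "angry"
    if average < 0:
        return "sad"
    if average > 0.5:
        return "happy"
    return "neutral"


def with_emotion(
    evaluate: Callable[[str], float], emotion: str
) -> Callable[[str], float]:
    """The emotion acts as a self-prompt that shifts how things are evaluated."""
    return lambda perception: evaluate(perception) + BIAS[emotion]


base = {"innocent joke": 0.2, "playful puppy": 0.4}


def evaluate(perception: str) -> float:
    return base.get(perception, 0.0)


emotion = choose_emotion([-1.0, -0.8])  # a bad day
print(emotion)  # angry
angry_evaluate = with_emotion(evaluate, emotion)
print(evaluate("innocent joke") > 0, angry_evaluate("innocent joke") < 0)  # True True
```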

The Third (and Final) Round of Complications

So far, we defined:

- Generic Actors, that act by communicating or by performing work.

- Thinkers, that are able to maintain some continuous recursive brain process.

- Personas, that show preferences and inclinations over their perceptions.

- Weakly Conscious Beings, that feel experiences while thinking and evaluating the world.

- Agents, that are actively attempting to change the world as they please.

- Emotional Beings, that can alter their own experiences and reactions.

Importantly, though, we haven’t discussed what intelligence is! To get there, we need quite a few new concepts.

First of all, let’s define what a task is: conceptually, it is just a collection of specific (input, output) pairs, where the input is interpreted as a request and the output as an acceptable[12] response. The collection may even be infinite! Tasks can be assigned to generic actors, which will then generate a response whenever a request is made: this activity is called performing the task, and it means that the actor is executing work (so the actor can be called a worker).
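Because a task may be an infinite collection of (input, output) pairs, a practical sketch can represent it by a membership test rather than by listing the pairs. In the Python below, the doubling task and the fallible worker are hypothetical examples.

```python
from typing import Callable, Iterable, List, Tuple

# A task as a membership test: is (request, response) one of the acceptable pairs?
Task = Callable[[int, int], bool]
Worker = Callable[[int], int]


def doubling_task(request: int, response: int) -> bool:
    """Hypothetical task with infinitely many pairs: (n, 2n) for every integer n."""
    return response == 2 * request


def perform(worker: Worker, requests: Iterable[int]) -> List[Tuple[int, int]]:
    """Performing the task: the worker responds whenever a request is made."""
    return [(request, worker(request)) for request in requests]


def sloppy_worker(request: int) -> int:
    # Hypothetical fallible worker: off by one on multiples of 5.
    return 2 * request + (1 if request % 5 == 0 else 0)


def acceptable(task: Task, work: List[Tuple[int, int]]) -> List[bool]:
    return [task(request, response) for request, response in work]


work = perform(sloppy_worker, range(1, 11))
print(sum(acceptable(doubling_task, work)), "of", len(work))  # 8 of 10
```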

Importantly, actors are fallible and may sometimes produce an unacceptable response! When that happens, a second actor (more specifically, a persona) can evaluate the work and decide whether it is positive or negative. The next step is to set a goal - for example, a 90% average positive rate - to determine whether the worker succeeded or failed at the task according to that goal.
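The evaluation and the goal can be sketched in a few lines of Python, assuming that the judging persona returns True for positive and False for negative, and that the goal is a minimum average positive rate (0.9 by default, mirroring the example above). The judge is hypothetical.

```python
from typing import Callable, List, Tuple

Judge = Callable[[int, int], bool]


def evaluate_work(judge: Judge, work: List[Tuple[int, int]]) -> List[bool]:
    """A second actor labels each (request, response) pair as positive or negative."""
    return [judge(request, response) for request, response in work]


def meets_goal(verdicts: List[bool], goal: float = 0.9) -> Tuple[bool, float]:
    """Succeed if the average positive rate reaches the chosen goal."""
    rate = sum(verdicts) / len(verdicts)
    return rate >= goal, rate


def strict_judge(request: int, response: int) -> bool:
    # Hypothetical persona: only likes responses equal to twice the request.
    return response == 2 * request


work = [(1, 2), (2, 4), (3, 7), (4, 8)]
verdicts = evaluate_work(strict_judge, work)
print(meets_goal(verdicts))            # (False, 0.75)
print(meets_goal(verdicts, goal=0.7))  # (True, 0.75)
```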

All the terminology above is fairly well established in the ML literature. So, what is intelligence? We are now prepared to answer: intelligence is the capacity to perform successfully on a new computational task, up to a chosen goal. In other words, intelligence is a metric used to estimate whether some goal is likely to be fulfilled (or not).

The definition suggests that intelligence is a spectrum, and it is measured based on goal performance. That is strictly an operational definition, and it excludes many physical forms of intelligence, but it is well accepted nowadays in the ML community.
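Under this operational definition, one could estimate a worker's intelligence as the fraction of previously unseen tasks on which it reaches the goal. The Python sketch below assumes that each new task is given as a sample of requests plus a judge; the three tasks are hypothetical. The number depends both on the task set and on the goal, which is consistent with treating intelligence as a spectrum.

```python
from typing import Callable, List, Sequence, Tuple

NewTask = Tuple[Sequence[int], Callable[[int, int], bool]]  # (sample requests, judge)


def estimate_intelligence(
    worker: Callable[[int], int], new_tasks: List[NewTask], goal: float = 0.9
) -> float:
    """Fraction of unseen tasks on which the worker's positive rate reaches the goal."""
    successes = 0
    for requests, judge in new_tasks:
        verdicts = [judge(r, worker(r)) for r in requests]
        if sum(verdicts) / len(verdicts) >= goal:
            successes += 1
    return successes / len(new_tasks)


def doubler(x: int) -> int:
    return 2 * x


tasks: List[NewTask] = [
    (range(10), lambda q, a: a == 2 * q),  # doubling
    (range(10), lambda q, a: a == q + q),  # the same task phrased differently
    (range(10), lambda q, a: a == q * q),  # squaring: the doubler mostly fails here
]
print(estimate_intelligence(doubler, tasks))            # 0.6666666666666666
print(estimate_intelligence(doubler, tasks, goal=0.2))  # 1.0
```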

The higher the intelligence, the higher the chance of:

- correctly predicting the future (if the task is forecasting)

- discovering innovative solutions (if the task is problem solving)

- producing creative things (if the task is artefact generation)

- influencing other people (if the task is social engineering).

Game theory provides a number of challenges for actors, especially when they involve collective knowledge.

I am inclined to think that many animals show advanced intelligence because they happen to be hunters or prey (or both!), hence they are selected to be intelligent with respect to the goal of survival. Specifically, hunting requires forecasting and forming mental models of the prey's behaviour; sometimes, it also requires social skills to collaborate in a group. Humans ticked all those boxes, while also being generalists and explorers[13].

We are almost there: we only need a few more definitions.

- Learning is the ability to improve one’s own task performance by integrating additional context (e.g. past experiences, training materials, etc.)

- Teaching is the ability of improving the learning rate of other actors.
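The two definitions can be illustrated with a toy learner that improves by memorising (request, response) examples given as context. The memorising learner, its default guess of 0 and the two curricula are assumptions for illustration: here "teaching" means choosing non-redundant examples, which raises the performance gained per batch (the learning rate) compared with a redundant curriculum.

```python
from typing import Callable, Dict, List, Tuple

Example = Tuple[int, int]


def make_learner() -> Tuple[Callable[[List[Example]], None], Callable[[int], int]]:
    memory: Dict[int, int] = {}

    def learn(examples: List[Example]) -> None:
        # Integrate additional context: remember every example seen so far.
        memory.update(examples)

    def answer(request: int) -> int:
        return memory.get(request, 0)  # hypothetical default guess

    return learn, answer


def performance(answer: Callable[[int], int], task: Dict[int, int]) -> float:
    return sum(answer(q) == a for q, a in task.items()) / len(task)


def learning_curve(batches: List[List[Example]], task: Dict[int, int]) -> List[float]:
    """Task performance before learning and after each batch of context."""
    learn, answer = make_learner()
    curve = [performance(answer, task)]
    for batch in batches:
        learn(batch)
        curve.append(performance(answer, task))
    return curve


task = {q: 2 * q for q in range(1, 11)}
self_study = [[(1, 2), (1, 2)], [(2, 4), (1, 2)], [(2, 4), (3, 6)]]  # redundant examples
taught = [[(1, 2), (2, 4)], [(3, 6), (4, 8)], [(5, 10), (6, 12)]]    # a teacher's curriculum
print(learning_curve(self_study, task))  # [0.0, 0.1, 0.2, 0.3]
print(learning_curve(taught, task))      # [0.0, 0.2, 0.4, 0.6]
```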

Back to the Original Question

In an artificial being, all the following:

- (Weak) Consciousness

- Emotionality

- Intelligence

- Personality

- Creativity

- Volition

are distinct properties since:

- (Weak) Consciousness requires being a persona with thoughts, aka Thinking + Personality.

- Emotionality requires being weakly conscious and having some policy function, aka Consciousness + Agency.

- Intelligence is measured up to specific goals, and it’s a spectrum. In other words, it is independent of the other properties.

- Personality requires having and applying some evaluation function.

- Creativity is intelligence measured up to the specific goal of artefact generation.

- Volition requires being a persona and having some policy function, aka it’s a synonym for Agency.
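The dependencies listed above can be written down as sets of building blocks and checked mechanically for distinctness. The labels ("thinking", "evaluation", "policy", "goal performance", "artefact generation goal") are my own shorthand for concepts introduced earlier, and this encoding is only one possible reading of the list.

```python
# Hypothetical encoding of the list above in terms of earlier building blocks.
REQUIREMENTS = {
    "personality": frozenset({"evaluation"}),
    "weak consciousness": frozenset({"thinking", "evaluation"}),
    "volition": frozenset({"evaluation", "policy"}),
    "emotionality": frozenset({"thinking", "evaluation", "policy"}),
    "intelligence": frozenset({"goal performance"}),
    "creativity": frozenset({"goal performance", "artefact generation goal"}),
}


def all_distinct(requirements):
    """True if no two properties share exactly the same set of requirements."""
    return len(set(requirements.values())) == len(requirements)


def implied_by(requirements, prop):
    """Other properties whose requirements are a subset of prop's (prop entails them)."""
    needed = requirements[prop]
    return sorted(p for p, r in requirements.items() if p != prop and r <= needed)


print(all_distinct(REQUIREMENTS))                # True
print(implied_by(REQUIREMENTS, "emotionality"))  # ['personality', 'volition', 'weak consciousness']
print(implied_by(REQUIREMENTS, "intelligence"))  # []
```

Distinct requirement sets do not mean the properties are unrelated: under this reading, emotionality entails volition and weak consciousness, while intelligence shares no building block with the others.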

Are you happy with my explanation? Probably not! The topic is highly controversial, and that’s fine - I just hope I gave you some context for further discussions, and that I clarified some difficult concepts and how they (may) relate to each other.

Further Complications

If you are still here, I’ll briefly touch on a few more hot topics. If some AI models were not mere stochastic parrots, then…

- Are LLMs weakly conscious? I might argue that the context length provides a small window of continuity where each prompt/reply represents a thought. LLMs also show clear preferences and inclinations (that’s the reason why techniques such as RLHF, DPO, etc. were created at all: because the developers didn’t like the “innate” preferences of the LLMs!). However, I doubt that the process is integrated enough to be considered conscious and, if it is, its “degree of consciousness” must be quite low compared to that of animals.

- Is it possible to create intelligent zombies, aka AI models that can fulfil very hard intellectual goals and yet are not conscious? I believe it’s a serious possibility, and I’d be worried if they took any form of control over humans.

- If we are able to create a conscious agentic AI model, should we provide it with emotions? And which ones? Honestly, I have no idea… I cannot even tell whether that would be good or bad.

- Which properties would be needed in some AI model to even consider granting it legal rights and obligations, as if it were an actual legal persona?

- If you created an AI model that could act as a human from most points of view, and it were also proven to be highly conscious, but the model were truly aligned with the idea of not wanting any legal rights, would the model deserve legal rights anyway?

Addenda

Andrew Critch, in this post, discussed some informal alternative properties related to consciousness. They are worth reading, and I recommend having a look.

Which type of actor is the "safest" for training an AI model? I believe that contextless, non-thinking (aka one-shot), non-personal actors are the safest, but they may not be very useful because they lack thinking; at the opposite extreme, agentic models are the most dangerous since they most closely resemble paperclip maximizers combined with intelligent zombies. Emotional models may be terrible or terrific, depending on how they are implemented in practice.

In any case, any thinking model may be inherently dangerous, as shown for example here.

In the ML community, "learning" is defined as the ability to improve one's own performance by using data, aka facts. But what is "knowledge", then? Is it just your own set of learnt beliefs? Some authors have tried to challenge that assumption by proposing a mathematical framework where the difference between "learning something" and "acquiring knowledge about something" can be captured. In such a framework, "knowledge" is a learnt belief with a learnt justification.

Further Links

Control Vectors as Dispositional Traits (my previous post)

Who I am

My name is Gianluca Calcagni, born in Italy, with a Master of Science in Mathematics. I am currently (2024) working in IT as a consultant with the role of Salesforce Certified Technical Architect. My opinions do not reflect the opinions of my employer or my customers. Feel free to contact me on Twitter or LinkedIn.

Revision History

[2024-08-02] Post published.

[2024-11-23] Included addendum about alternative properties of conscious beings.

[2024-11-26] Included addendum about AI safety.

[2025-01-09] Included addendum about the difference between learning and knowledge.

Footnotes

[1]

I realise that this is very limiting and still open to different interpretations, but it is nonetheless the best I can do.

[2]

If given limitless resources.

[3]

The term is taken from the actor model theory in computer science.

[4]

A perception is different from an “experience” (or a “feeling”) because an experience is a perception perceived consciously. When I refer to a “perception”, I just refer to some sensory stimuli, intended or unintended.

[5]

Having preferences does not imply having agency, aka the will of acting upon the world to pursue one’s own preferences. In this context, the preferences only act as a way to determine if a perception has positive/negative/neutral "feeling" (with all its possible nuances).

[6]

As a separate note: preferences do not need to be rational nor consistent; they are not supposed to be a formal logical calculus or anything like that. In other words, you are allowed to wish for some rest while simultaneously wishing to earn more money, even though the two things may be incompatible.

[7]

By such definition, sensations such as pleasure or pain are not just perceptions, but true feelings because they are the result (respectively, pleasant or painful) of some evaluation (resp. liked or disliked).

[8]

I am implicitly stating that consciousness requires at least two factors: (1) continuity, that we can model with a stream of thoughts; and (2) unity-of-self, that we can model with a single highly-integrated decision-making process. That’s not enough to define consciousness, but (1) and (2) are some of its components.

[9]

While the presence of consciousness is somewhat uncertain, the presence of the unity-of-self in most biological beings (even elementary ones) is very common, most likely because it provides strong benefits - e.g. the ability to act in a highly coordinated way against unexpected circumstances, such as the presence of dangers or prey. The things that do not require active coordination are instead automated by evolution - e.g. heartbeats, which are usually ignored at a conscious level.

[10]

Actually, a probability distribution.

[11]

Returning to a previous example: while you are allowed to wish for some rest while simultaneously wishing to earn more money, the two things are incompatible so your policy function can only choose to fulfil a single option at a time. The option may change depending on how tired you are, or how much money you are offered.

[12]

Tasks don’t need to be deterministic or unambiguous and, in fact, most of the time what counts as an “acceptable” response is unclear and fuzzy. That is okay, since we are going to introduce a second actor to assess the work and decide subjectively whether some outcome is acceptable or not.

[13]

Or maybe it’s the other way round? Maybe intelligence pushed humanity into generalism and exploration?

3 comments


comment by Gunnar_Zarncke · 2024-08-02T21:25:59.564Z

I like the approach of defining these common properties in a disjunct and non-cyclic way. I think we need to define these concepts around agency in a grounded way, i.e., without cyclic or vague references and by referring to the physical world (game theory is crisp, but it lacks this connection, dealing only with symbols and numbers).

↑ comment by Gianluca Calcagni (gianluca-calcagni) · 2024-08-06T09:16:15.895Z

Thanks @Gunnar_Zarncke, I appreciate your comment! You correctly identified my goal, I am trying to ground the concepts and build relationships "from the top to the bottom", but I don't think I can succeed alone.

I kindly ask you to provide some challenges: is there any area that you feel "shaky"? Any relation in particular that is too much open to interpretation? Anything obviously missing from the discussion?

↑ comment by Gunnar_Zarncke · 2024-08-07T10:29:22.085Z

Have you seen 3C's: A Recipe For Mathing Concepts? I think it has some definitions for you to look into, esp. the last sentence:

If you want to see more examples where we apply this methodology, check out the Tools post, the recent Corrigibility post, and (less explicitly) the Interoperable Semantics post.