Money Pump Arguments assume Memoryless Agents. Isn't this Unrealistic?

post by Dalcy (Darcy) · 2024-08-16T04:16:23.159Z · LW · GW · 3 comments

This is a question post.

Contents

- Answers
  - Richard_Kennaway (6)
  - Charlie Steiner (5)
- 3 comments

I have been reading about money pump arguments for justifying the VNM axioms, and I'm already stuck at the part where Gustafsson justifies acyclicity. Namely, he seems to assume that agents have no memory. Why does this make sense?[1] To elaborate:

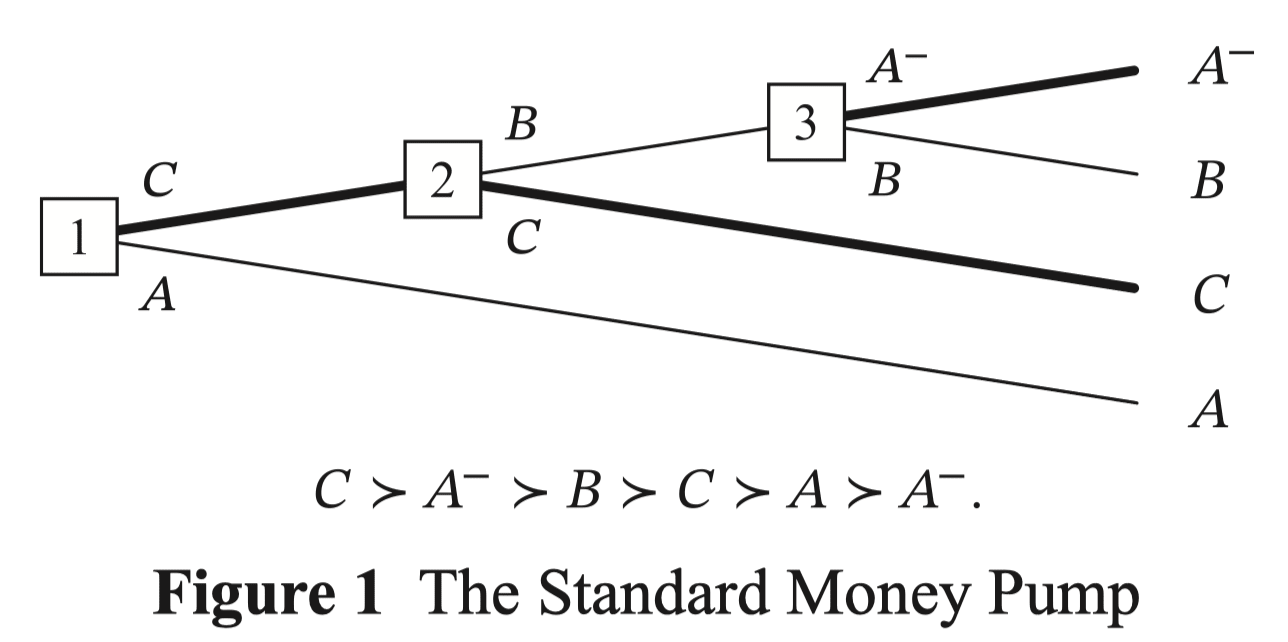

The standard money pump argument looks like this.

Let's assume (1) $A \succ B \succ C \succ A$, and that we have a souring $A^-$ of $A$ such that it satisfies (2) $A^- \succ B$ and (3) $A \succ A^-$.[2] Then if you start out with $A$, at each of the nodes you'll trade for $C$, $B$, and $A^-$, so you'll end up having paid to get back a worse version of what you started with.
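A minimal Python sketch of the myopic run may help, assuming the cyclic preferences A ≻ B ≻ C ≻ A plus a souring A⁻ with A⁻ ≻ B and A ≻ A⁻. The encodings are illustrative choices, not Gustafsson's: the relation is stored as an arbitrary set of ordered pairs (so nothing like transitivity is built in), the souring is written as the string `"A-"`, and each node is modelled as a single trade offer.

```python
# Sketch of the myopic money pump. Assumptions (illustrative, not from the source):
# a preference relation is just a set of ordered pairs (x, y) meaning "x is strictly
# preferred to y", so no transitivity or acyclicity is built in; "A-" is the souring of A.
PREFERS = {
    ("A", "B"), ("B", "C"), ("C", "A"),  # (1) the cycle A > B > C > A
    ("A-", "B"),                          # (2) the souring is still preferred to B
    ("A", "A-"),                          # (3) A is preferred to its souring
}


def strictly_prefers(x: str, y: str) -> bool:
    return (x, y) in PREFERS


def myopic_run(start: str, offers: list[str]) -> list[str]:
    """Walk the tree node by node. At each node, swap the current holding for the
    offer iff the offer is strictly preferred, ignoring every later node."""
    holding = start
    held = [holding]
    for offer in offers:
        if strictly_prefers(offer, holding):
            holding = offer
        held.append(holding)
    return held


path = myopic_run("A", ["C", "B", "A-"])
print(path)                             # ['A', 'C', 'B', 'A-']
print(strictly_prefers("A", path[-1]))  # True: the agent ends up worse off than it started
```

Every individual swap is an upgrade by the agent's own lights; only the sequence as a whole leaves it worse off.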

This makes sense, until you realize that the agent here implicitly believes that their current choice is the last choice they'll ever make, i.e. they're behaving myopically.

Notice that without such a restriction, there is the obvious strategy of: "look at the full tree, only pick leaf nodes that aren't in the state of having been money pumped, and stick to the plan of only reaching that node."

- In practice, this looks fairly reasonable: "Yes, I do in fact prefer onions to pineapples to mushrooms to onions on my pizza. So what? If I know that you're trying to money pump me, I'll just refuse the trade. I may end up locally choosing options that I disprefer, but I don't care since there is no rule that I must be myopic, and I will end up with a preferred outcome at the end."

I'd say myopic agents are unnatural (as Gustafsson notes) because we want to assume the agent has full knowledge of all the trades that are available to them. Otherwise, a defect (i.e., getting money-pumped) could be attributed not to their preferences but to their incomplete knowledge of the world.

So he goes on to consider less restrictive agents, such as sophisticated[3] and minimally sophisticated[4] agents, for which the above setup fails; but there are modified setups that still make a money pump possible as long as the agents have cyclic preferences.

However, all of these agents still follow one critical assumption:

Decision-Tree Separability: The rational status of the options at a choice node does not depend on other parts of the decision tree than those that can be reached from that node.

This means that agents have no memory, which rules out my earlier strategy of "looking at the final outcome and committing to a plan". This still seems very restrictive.
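One way to see what separability rules out is to give the same final node, a choice between keeping B and swapping it for A⁻, to an agent whose choice function only receives the options reachable from that node. The Python sketch below assumes the same toy preferences as in the question (A ≻ B ≻ C ≻ A, A⁻ ≻ B, A ≻ A⁻) and a simple "pick an undominated option" rule; both are illustrative choices, not Gustafsson's formalism.

```python
# Illustrative sketch: under Decision-Tree Separability the choice at a node is a
# function of the options reachable from that node only, never of the path taken.
PREFERS = {("A", "B"), ("B", "C"), ("C", "A"), ("A-", "B"), ("A", "A-")}


def separable_choice(options: tuple[str, ...]) -> str:
    # Assumed rule: pick the first option that no available option is strictly
    # preferred to. (Raises IndexError if every option is dominated.)
    undominated = [x for x in options if not any((y, x) in PREFERS for y in options)]
    return undominated[0]


final_node = ("B", "A-")  # keep B, or swap it for A-
histories = {
    "started with B": ["B"],
    "started with A": ["A", "C", "B"],  # arrived here by trading A -> C -> B
}

results = {}
for label, held in histories.items():
    choice = separable_choice(final_node)  # `held` is never consulted
    worse_than_before = any((h, choice) in PREFERS for h in held)
    results[label] = (choice, worse_than_before)
    print(label, results[label])
# started with B ('A-', False)
# started with A ('A-', True)
```

Because the function never sees `held`, it makes the same choice on both paths, and on the second path that choice completes the pump. Letting the choice depend on `held` is the kind of memory the question is asking about.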

Can anyone give a better explanation as to why such money pump arguments (with very unrealistic assumptions) are considered good arguments for the normativity of acyclic preferences as a rationality principle?

[1] To be clear, I'm not claiming "... therefore I think cyclic preferences are actually okay." I understand that the point of formalizing money pump arguments is to capture our intuitive notion of something being wrong when someone has cyclic preferences. I'm more questioning the formalism and its assumptions.

[2] Note (3) doesn't follow from (2), because $\succ$ here is a general binary relation. We are trying to derive all the nice properties, like acyclicity and transitivity.

[3] Agents that can, starting from their current node, use backward induction to locally take the most preferred path.

[4] A modification of sophisticated agents such that they no longer need to predict that they will act rationally at nodes that can only be reached through irrational decisions.

Answers

Yes, it is unrealistic. The problem is that no-one has devised something more complex that everyone agrees on, leaving EU (expected utility) theory as the only Schelling point in the field.

With the VNM axioms (and all similar setups for deriving utility functions), there are no decision trees and no history. Decisions are one-off, and assumed to be determined by a preference relation which considers only the two options presented. There is no past and no future for the agent making these "decisions".

I put quote marks there because a decision in the real world leads to actions which change the world in some way according to the agent's intentions. Actions are followed by more decisions and actions, and so on. All of this is excluded from the VNM framework, in which there are no "decisions", only preferences. EU theory hardly merits being called a decision theory, for all that the SEP article on decision theory talks almost exclusively about EU and only dips a toe into the waters of sequential decisions, recording how philosophers have made rather heavy weather even of the simple story of Ulysses and the sirens.

If you try to extend the VNM framework to the real world of sequential decisions and strategies, you end up making the "outcomes" be all possible configurations of the whole of your future light-cone. The preference relation is defined on all pairs of these configurations. The agent's "actions" are all the possible sequences of its individual actions. But who can know how their actions will affect their entire future light-cone, or what actions will become available to them in their far future?

I do not think that EU theory can usefully be extended in this way. It is a spherical cow too far.

If we only had the idea of generalized preferences that allow cycles, it would be necessary to invent a new idea that was acyclic, because that's a much more useful mental tool for talking about systems that choose based on predicting the impact of their choices. Or more loosely, that are "trying to achieve things in the world."

The force of money-pump arguments comes from taking for granted that you want to describe the facets of a system that are about achieving things in the world.

If you want to describe other facets of a system, that aren't about achieving things in the world, feel free to depart from talking about them in terms of a preference ordering.

I don't think fighting the hypothetical by introducing memory is actually all that interesting. Sure, if you set up the environment such that the agent can never be presented with a new choice, you can't money-pump it, but that doesn't dispose of the underlying question of whether you should model this agent as having a preference ordering over outcomes for the purposes of decision-making. The memoryless setting is just a toy problem that illustrates some key issues.

↑ comment by [deleted] · 2024-08-16T08:40:22.299Z

> Or more loosely, that are "trying to achieve things in the world."

I think this is too loose, to the point of being potentially misleading to someone who is not entirely familiar with the arguments around EU maximization. I have railed against such language in the past, and I will continue to do so, because I think it communicates the wrong type of intuition by making the conclusion seem more natural and self-evident (and less dependent on the boilerplate) than it actually is.[1]

By "trying to achieve things in the world," you really mean "selecting the option that maximizes the expected value of the world-state you will find yourself in, given uncertainty, in a one-shot game." But, at a colloquial level, someone who is trying to optimize over universe-histories or trajectories would likely also get assigned the attribute of "trying to achieve things in the world", but now the conclusions reached by money-pump arguments are far less interesting and important.
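A toy Python version of the universe-histories point may help. It assumes the three-offer tree from the question (an agent starting with A is offered C, then B, then A⁻) and describes each trajectory as a tuple of accept/decline moves. A utility function defined over whole trajectories can simply assign the highest utility to the trajectory the agent actually took, so even the money-pumped path counts as optimal; the encoding is only an illustration.

```python
from itertools import product

# Assumed toy tree: starting with A, the agent is offered C, then B, then A-.
# A trajectory records, for each offer, whether it was accepted (1) or declined (0).
START = "A"
OFFERS = ["C", "B", "A-"]
TRAJECTORIES = list(product([0, 1], repeat=len(OFFERS)))


def final_holding(moves: tuple[int, ...]) -> str:
    holding = START
    for offer, accepted in zip(OFFERS, moves):
        if accepted:
            holding = offer
    return holding


# Observed behavior: accept every offer, i.e. get money-pumped.
observed = (1, 1, 1)


def utility_over_trajectories(moves: tuple[int, ...]) -> float:
    # Hypothetical utility on whole histories that simply rewards the observed one.
    return 1.0 if moves == observed else 0.0


best = max(TRAJECTORIES, key=utility_over_trajectories)
print(best, final_holding(best))  # (1, 1, 1) A-
```

Nothing in this utility function is inconsistent, which is why, once outcomes are whole trajectories, a money-pump argument says much less about what the agent will do.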

> it doesn't dispose of the underlying question of whether you should model this agent as having a preference ordering over outcomes for the purposes of decision-making

The money-pump arguments don't dispose of this question either; they just assume that the answer is "yes" (to be clear, "preference ordering over outcomes" here refers to outcomes as lottery-outcomes instead of world-state-outcomes, since knowing your ordering over the latter tells you very little decision-relevant stuff about the former).

[1] This conforms with the old (paraphrased) adage that "If you don't tell someone that an idea is hard/unnatural, they will come away with the wrong impression that it's easy and natural, and then integrate this mistaken understanding into their world-model", which works well enough until they hit a situation where rigor and deep understanding become crucial.

A basic illustration is introductory proof-based math courses: if students are shown a dozen examples of induction working and no examples of it failing to produce a proof, they will initially think everything can be solved by induction, which will hurt their grade on the midterm when a problem needs a method other than induction.

3 comments

comment by [deleted] · 2024-08-16T08:03:29.575Z

These topics (although not this exact question in its present form, AFAICT) have been discussed on LW over the past few years. A few highlights that seem relevant here:

- Rohin Shah has explained that if the domain of definition of the utility function is the collection of universe-histories as opposed to the collection of (analyzed-at-present-time) world states, then a consequentialist agent can display any external behavior whatsoever. (Note that @johnswentworth has criticized the conclusions that are often reached as supposed corollaries of this, but he did so in a comment on a now-deleted post that I can no longer link; the closest available link I have is this.)

- Steve Byrnes has distinguished between preferences over future states and preferences over trajectories, and has argued that future powerful AIs will have preferences over both distant-future world-states and other stuff like trajectories.

- EJT has explained that "agents can make themselves immune to all possible money-pumps for completeness by acting in accordance with the following policy: ‘if I previously turned down some option X, I will not choose any option that I strictly disprefer to X.’ Acting in accordance with this policy need never require an agent to act against any of their preferences."

- My own question post from a couple of months ago on the implications of coherence arguments for agentic behavior got a ton of engagement and contains other important links.
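EJT's policy from the list above is easy to state as code. The Python sketch below assumes a toy money pump for completeness: the agent strictly prefers A to its souring A⁻ and has no preference either way between B and A, or between B and A⁻. Having turned down A at the first node, it faces B and A⁻ at the second; its preferences alone permit either, and the policy (which consults an earlier node, so it is not separable in Gustafsson's sense) rules out A⁻. The encoding and function names are illustrative assumptions.

```python
# Toy incomplete preferences (assumed): A is strictly preferred to its souring A-,
# and B is incomparable to both; only the listed pair is in the relation.
PREFERS = {("A", "A-")}


def permissible(options: list[str]) -> list[str]:
    # Options that no other available option is strictly preferred to.
    return [x for x in options if not any((y, x) in PREFERS for y in options)]


def choose_with_policy(options: list[str], turned_down: list[str]) -> str:
    # Policy quoted above: never choose an option strictly dispreferred to
    # something previously turned down.
    allowed = [
        x for x in permissible(options)
        if not any((t, x) in PREFERS for t in turned_down)
    ]
    return allowed[0]


second_node = ["A-", "B"]
print(permissible(second_node))                # ['A-', 'B']: preferences alone allow either
print(choose_with_policy(second_node, ["A"]))  # B: the policy rules out A-
```

Choosing B never goes against the agent's preferences, since it has none between B and A⁻; that is the sense of the quoted claim.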

↑ comment by habryka (habryka4) · 2024-08-16T08:09:10.502Z

> now-deleted post that I can no longer link

Do you remember the title of the post? I can probably link to a comment-only version of the post.

↑ comment by [deleted] · 2024-08-16T08:12:45.662Z

"Every system is equivalent to some utility maximizer. So, why are we still alive?"

(I think this links to Richard Kennaway's comment on the post instead of Wentworth's, but it was the link that I was most easily able to find)