"Fractal Strategy" workshop report

post by Raemon · 2024-04-06T21:26:53.263Z · LW · GW · 23 comments

Contents

tl;dr on results

Workshop Outline

Beforehand:

Day 1: Practice skills on quick-feedback exercises

Day 2: Big picture strategic thinking

Day 3: Choose your own short exercises, and object-level work

Day 4: Consolidation

Core Workshop Skills

"Metastrategic Brainstorming"

"2+ Plans at 3 Strategic Levels"

"Fluent, Cruxy Operationalization"

Feedback

"What changes did you make to your plan?"

What's the most money you'd have paid for this workshop?

What's the most money you'd have paid for the idealized version of this workshop that lives in your head, tailored for you?

Overall, how worthwhile was the workshop compared to what else you could have been doing? (scale of 1-7)

Rating individual activities/skills

My own updates

Would you like to attend a Ray Rationality Workshop?

(or: what are your cruxes for doing so?)

23 comments

I just ran a workshop teaching the rationality [LW · GW] concepts [LW · GW] I've developed this year.

If you're interested in paying money for a similar workshop, please fill out this form.

Six months ago, I started thinking about improving rationality.

Originally my frame was "deliberate practice for confusing problems". For the past two months, I've been iterating on which skills seemed useful to me personally, and which I might convey to others in a short period of time.

I settled into the frame "what skills are necessary for finding and pivoting to 10x better plans?". It's the area I most needed rationality for, myself, and it seemed generalizable to a lot of people I know.

I ended up with 5-10 skills I used on a regular basis, and I put together a workshop aiming to teach those skills in an immersive bootcamp environment. The skills wove together into a framework I'm tentatively calling "Fractal Strategy", although I'm not thrilled with that name.

Basically, whenever I spend a bunch of resources on something, I...

- Explicitly ask "what are my goals?"

- Generate 2-5 plans at 3 different strategic levels

- Identify my cruxes for choosing between plans

- Fluently operationalize fatebook predictions about those cruxes

- Check if I can cheaply reduce uncertainty on my cruxes

The framework applies to multiple timescales. I invest more in this meta-process when making expensive, longterm plans. But I often find it useful to do a quick version of it even on the ~30-60 minute timescale.

I put together a workshop, aiming to:

- help people improve their current, object level plan

- help people improve their overall planmaking/OODA-loop process

tl;dr on results

I didn't obviously succeed at #1 (I think people made some reasonable plan updates, but not enough to immediately say an equivalent of "Hot Damn, look at that graph". See the Feedback section for more detail).

I think many people made conceptual and practical updates to their planning process, but it's too early to tell if it'll stick, or help.

Nonetheless, everyone at the workshop said it seemed like at least as good a use of their time as what they'd normally have been doing. I asked "how much would you have paid for this?" and the average answer was $800 (range from $300 to $1,500).

When I was applying these techniques to myself, it took me more like ~3 weeks to update my plans in a significant way. My guess is that the mature version of the workshop comes with more explicit followup-coaching.

Workshop Outline

First, here's a quick overview of what happened.

Beforehand:

- People sent me a short writeup of their current plans for the next 1-2 weeks, and broader plans for the next 1-6 months.

Day 1: Practice skills on quick-feedback exercises

- Everyone installs the fatebook chrome/firefox extension

- Solve a puzzle with Dots and a Grid with an unspecified goal

- Solve a GPQA question with 95% confidence

- Try to one-shot a Baba is You puzzle [LW · GW]

- For both of those puzzles (Baba and GPQA), ask "How could I have thought that faster? [LW · GW]"

- Play a videogame like Luck Be a Landlord, and make fermi-calculations about your choices within the game.

- For all exercises, make lots of fatebook predictions about how the exercise will go.

Day 2: Big picture strategic thinking

- Work through a series of prompts about your big picture plans.

- Write up at least two different big-picture plans that seem compelling

- Think about short-feedback exercises you could do on Day 3

Day 3: Choose your own short exercises, and object-level work

- Morning: Do concrete exercises/games/puzzles that require some kind of meta-planning skill, and that feel useful to you.

- Afternoon: Do object-level work on your best alternative big picture plan:

- You get to practice "applying the method" on the ~hour timescale

- You flesh out your second favorite plan, helping you treat it as "more real"

Day 4: Consolidation

- Write up your plan for the next week (considering at least two alternative plans or frames)

- Review how the workshop went together

- Consolidate your takeaways and Murphyjitsu [LW · GW].

- What practices do you hope to still be trying a week, month, or year from now? Do you predict you'll actually stick with them? Do you endorse that? What can you do to help make things stick?

- Fill out a feedback form

Most of the workshop had people working independently, with me and Eli Tyre cycling through, chatting with people about what they were currently working on.

I expect to change the workshop structure a fair amount if/when I run it again, but, I feel pretty good about the overall approach. The simplest change I'd make is dropping the "Fermi calculations on Luck Be a Landlord" section, unless I can think of a better way to build on it. (I have aspirations of learning/teaching how to make real fermi estimates about your plans, but this feels like my weakest skill in the skill tree)

Core Workshop Skills

A good explanation of each concept at the workshop would be an entire sequence of posts. I hope to write that sequence, but for now, here are some less obvious things I want to spell out.

"Metastrategic Brainstorming"

I didn't actually do a great job teaching this at the workshop, but, a core building block skill upon which all others rest, is:

"Be able to generate lots of ideas for strategies to try."

If you get stuck, or if you have a suspicion there might be a much better path towards solving a problem, you should be able to generate lots of ideas on how to approach the problem. If you can't, you should be able to generate metastrategies that would help you fix that.

This is building off of the "Babble [LW · GW]"-esque skillset. (See the Babble challenges [LW · GW], which encourage generating 50 ways of accomplishing things, to develop the muscle of "not getting stuck.")

A few examples of what I mean:

- Break a problem into simpler pieces

- Take a nap, or get a drink of water

- Ask yourself what a smart friend would do

I have more suggestions for good meta-strategies that often work for me. However, I prefer not to give people many strategies at first: I think one of the most important skills is to figure out how to generate new strategies, in novel situations, where none of your existing strategies is quite the right fit.

"2+ Plans at 3 Strategic Levels"

The central move of the workshop is "come up with at least 2 plans, at multiple strategic levels."

Reasons I think this is important include:

Escaping local optima. If you want to find plans that are 10x better than your current plan, you probably have to cast a fairly broad search process.

Don't get trapped by tunnel vision. It's easier to notice it's time to pivot if you've explicitly considered your best alternatives. Leave yourself a line of retreat [LW · GW]. Avoid "idea scarcity [LW · GW]."

Practice having lots of good ideas. Strategic creativity is a muscle you can train. If it feels like a struggle to come up with more than one good idea, I think it's probably worthwhile to invest in more practice in metastrategic brainstorming. (Meanwhile, if you're good at it, it shouldn't take long)

For context, when I'm doing this process for myself, even on the hour-long timescale, I basically always am able to generate 2 alternative ways to achieve my goal that feel "real". And, quite often when I force myself to generate alternatives, I discover a better way to accomplish my goal than whatever I was going to do by default. This process takes a couple minutes, so it pays for itself on smallish timescales.

Originally, when I first conceptualized this I wrote down "have at least 2 plans at basically every level of meta." As soon as I wrote it I was like "well, that's too many meta levels. That can't possibly be practical." But what can be practical is to track:

- The object level goal you're currently planning around

- The higher level strategic awareness that informs your current plan

- The narrower tactical level of how to implement your plans.

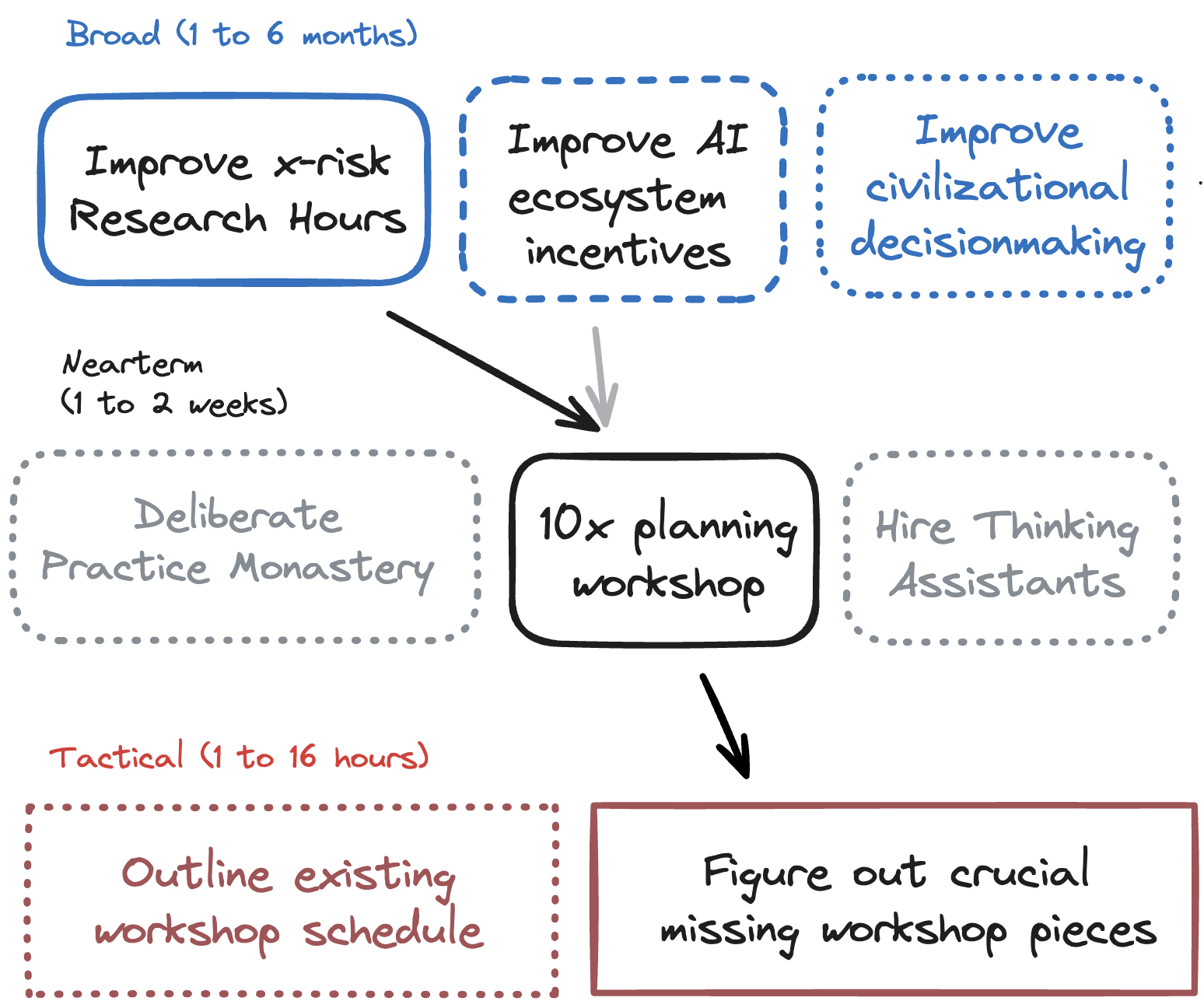

I'll follow up more on this in a future post, but here's an example of what this looked like for me, when I was planning for the workshop and it was about 2 weeks away:

In this example, I had previously decided to run the 10x planning workshop. The most natural thing to do was write out the outline of the existing pieces I had and put them into a schedule. But, I was pretty sure I could get away with doing that at the last minute. Meanwhile, the workshop still had some major unsolved conceptual problems to sort out, that I wanted to allocate some spacious time towards figuring out.

"Fluent, Cruxy Operationalization"

Since 2020, I've been interested in calibration training for "real life." I had done simple calibration games, but the exercises felt contrived and fake and I wasn't sure they generalized to the real world.

I've intermittently practiced since then. But, only 3 weeks ago did I feel like I "got good enough to matter."

The problem with making predictions is that the most natural-to-predict things aren't actually that helpful. Forming a useful prediction is clunky and labor intensive. If it's clunky and labor-intensive, it can't be part of a day-to-day process. And if it can't be part of a day-to-day process that connects with your real goals, it's hard to practice enough to get calibrated on things that matter.

I have a lot of tacit, hard-to-convey knowledge about "how to make a good prediction-operationalization". For now, some prompts I find helpful are:

- What would I observe (physically, in the world) if Plan A turned out to fail?

- What would I observe if Plan A turned out to not matter as much as I thought?

- What would I observe in worlds where Plan B turned out to be way better than plan A?

- What's the ideal (even if stupidly expensive) experiment I could run that would inform my plan?

I also have a couple "basic predictions" that are usually relevant to most plans, such as:

- How likely am I to be "surprised in a surprising-way", which ends up being strategically relevant?

- How likely am I to "operationally screw up" my plan? (i.e. the basic plan was good but I made a basic error).

Note that most of the immediate value comes from the operationalization itself, as opposed to putting a probability on it. Often, simply asking the right question is enough to prompt your internal surprise-anticipator [LW · GW] to notice "oh, yeah I basically don't expect this to work" or "oh, when you put it like that, obviously X will happen."

But, longterm, a major point is to get calibrated. It's unclear to me how much calibration "transfers" between domains, but I think that at least if you practice predicting and calibrating on "whatever sorts of plans you actually make", you'll get more grounded intuitions in those domains, which help your internal surprise anticipator be more confidently calibrated.

A major point of the workshop is to just grind on making cruxy-predictions for 4 days, and hopefully reach some kind of "fluency escape velocity", where it feels easy enough that you'll keep doing it.
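To make "getting calibrated" concrete, here is a minimal sketch of how a batch of resolved predictions could be scored. It is not how Fatebook works internally: the function names, the five-bucket split, and the example predictions are all assumptions made up for illustration.

```python
from collections import defaultdict


def brier_score(predictions):
    """Mean squared gap between stated probability and outcome (0 is perfect)."""
    return sum((p - float(hit)) ** 2 for p, hit in predictions) / len(predictions)


def calibration_table(predictions, n_buckets=5):
    """Group predictions by stated probability and compare stated vs. observed rates.

    Returns a list of (mean stated probability, observed frequency, count) rows.
    """
    buckets = defaultdict(list)
    for p, hit in predictions:
        # Clamp so that p == 1.0 lands in the top bucket.
        index = min(int(p * n_buckets), n_buckets - 1)
        buckets[index].append((p, hit))
    table = []
    for index in sorted(buckets):
        rows = buckets[index]
        mean_stated = sum(p for p, _ in rows) / len(rows)
        observed = sum(1 for _, hit in rows if hit) / len(rows)
        table.append((round(mean_stated, 2), round(observed, 2), len(rows)))
    return table


# Hypothetical resolved predictions: (stated probability, did it come true?).
example_predictions = [
    (0.9, True), (0.9, True), (0.9, False), (0.95, True),
    (0.7, True), (0.7, False),
    (0.3, False), (0.3, True),
]

score = brier_score(example_predictions)
table = calibration_table(example_predictions)
for stated, observed, count in table:
    print(f"stated ~{stated:.2f}  observed {observed:.2f}  (n={count})")
```

A well-calibrated predictor's "stated" and "observed" columns roughly match once each bucket holds enough predictions. With only a handful of predictions per bucket, as in this toy data, the gaps are mostly noise.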

Feedback

Nine people came to the workshop. Two of them dropped out over the course of the workshop. I've got substantial feedback from the other seven, and a few quick notes from the remaining two.

The most obvious effects I was aiming at with the workshop were:

- Help people come up with better object level plans.

- Help people learn skills, or change their planning/OODA-loop process

Before people came to the workshop, I asked them to submit a few paragraphs about their current ~6 month plans. Some people had explicit plans, some people had "processes they expected to follow" (which would hopefully later generate more fleshed out plans), and some people didn't have much in the way of plans.

Here are feedback questions I asked, and some anonymized and/or aggregated answers:

"What changes did you make to your plan?"

I think nobody made a major change to a longterm plan (although I don't know if that was actually a realistic goal for one workshop).

But answers included:

- Two people said "I basically no longer have an object level plan". (They both spent much of the workshop thinking about what their meta level planning process should look like)

- One person said "My original plan is now a substep in my new plan, which I might not take for a month or two."

- One person said "I'm placing a much higher premium on maintaining optionality"

- One person didn't make changes to their ~6 month plan, but it seemed like the workshop helped them realize they really need a vacation, which came up during the "make your plan for next week" phase.

- Two people upweighted some ideas they'd been thinking about

What's the most money you'd have paid for this workshop?

Average was ~$800. Answers were:

- $300-400

- ~$300 (would pay $2000 more for more help/attention/coaching)

- $800

- $1500, maybe $2000

- $1500, $2500 if at a better time/place

- ~$500, maybe more if they had more disposable income

I think there were some fairly distinct clusters here, where the people who got more value were also at stages of their life where the "planmaking skillset" was obviously a bottleneck for them.

What's the most money you'd have paid for the idealized version of this workshop that lives in your head, tailored for you?

Average ~$5000.

- $3,500

- $10,000

- ~$3,500

- $4,000

- $5,000 ($10,000 if they had more discretionary income)

- $5,000

- $2,000 (maybe $3,000 if their company was paying)

Overall, how worthwhile was the workshop compared to what else you could have been doing? (scale of 1-7)

Average was 5.5 (where 4 was "about the same").

Results were: 5, 4, 5, 7, 6, 7, 6, 4.
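As a quick check of that average, the arithmetic can be reproduced from the eight ratings listed above. The variable names are just illustrative.

```python
from statistics import mean

# Ratings as reported above, on a 1-7 scale where 4 means "about the same".
ratings = [5, 4, 5, 7, 6, 7, 6, 4]

average = mean(ratings)
above_neutral = sum(1 for r in ratings if r > 4)

print(f"mean rating: {average}")
print(f"{above_neutral} of {len(ratings)} ratings were above 'about the same'")
```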

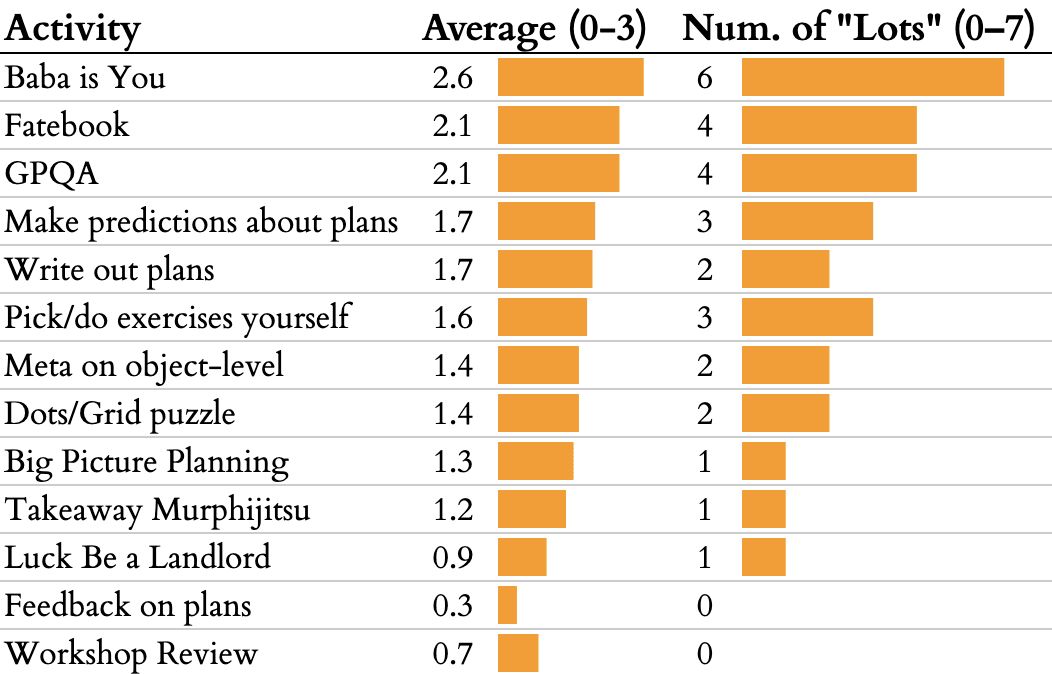

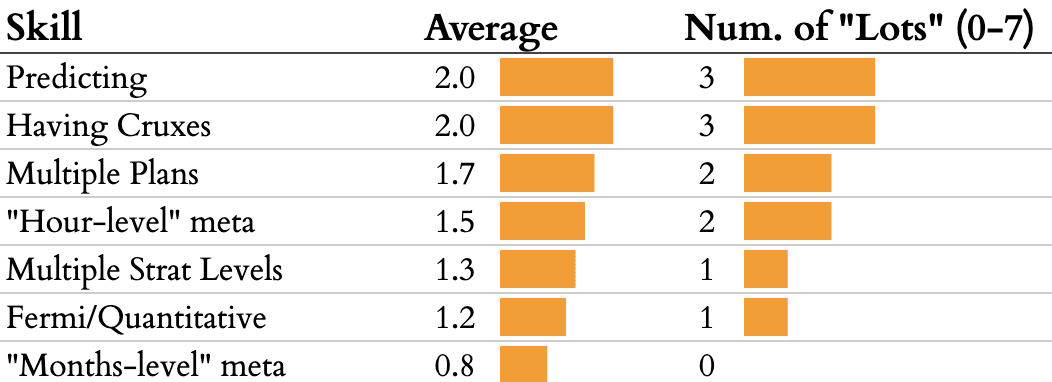

Rating individual activities/skills

I had people rate activities and skills, with options "not valuable", "somewhat/mixed valuable", or "lots!". When reviewing that feedback, I counted "somewhat/mixed" as 1 point, and "lots!" as 3 points.
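A minimal sketch of that scoring scheme follows. The activity names and responses are invented placeholders, not the actual feedback data, and treating "not valuable" as 0 points is an assumption (only the 1-point and 3-point values are stated above).

```python
from collections import Counter

# Point values: 1 and 3 come from the description above;
# 0 for "not valuable" is an assumption.
POINTS = {"not valuable": 0, "somewhat/mixed": 1, "lots!": 3}


def activity_score(responses):
    """Total points for one activity, given a list of response labels."""
    counts = Counter(responses)
    return sum(POINTS[label] * n for label, n in counts.items())


# Hypothetical responses, for illustration only.
example_responses = {
    "Activity A": ["lots!", "lots!", "somewhat/mixed", "not valuable"],
    "Activity B": ["somewhat/mixed", "somewhat/mixed", "not valuable", "not valuable"],
}

scores = {name: activity_score(r) for name, r in example_responses.items()}
ranked = sorted(scores, key=scores.get, reverse=True)

for name in ranked:
    print(f"{name}: {scores[name]} points")
```

Weighting "lots!" at 3 rather than 2 means an activity that a few people found very valuable can outrank one that everyone found mildly useful.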

Here was how the activities rated

My own updates

I feel like this was a solid Draft 1 of the workshop format. Eli Tyre, who I hired to collaborate on it, says his experience is that most "workshops" tend to cohere in the third iteration.

Major things I'd like to change are:

- Figure out which skills end up actually helping people, and focus more on those.

- Figure out how to teach quantitative estimating (i.e. fermi-calculations) that allow you to compare plans. (I feel like I got some handle on this recently, but it's still pretty messy)

- Find better prompts to help people with operationalizing predictions.

- Make a better environment for people giving each other nuanced feedback on their plans. (I think this requires selecting participants for having useful technical background, so they can actually engage with the details).

- Do better at creating a safe space for people to really question their life plan, and/or confront very difficult things. (Related: teach "grieving [LW · GW]")

My overall sense was that for most people the different skills didn't really gel into a cohesive framework (though 2 people found it quite cohesive, and another 2 people said something like "I can see the edges of how it might fit together but haven't really grokked it"). I came up with the "fractal strategy" framing the day before the workshop, and then was kinda scrambling to figure out how to convey it clearly over the 4 days.

I came up with better phrasings over the course of the workshop, but I think there's a bunch of room to improve there.

One interesting thing is that the person who was "the most experienced planmaker" IMO, still got benefit from "semi-structured deliberate practice on the skills they found most important." AKA "basically the original version of my plan."

Would you like to attend a Ray Rationality Workshop?

(or: what are your cruxes for doing so?)

Whether I continue working on this depends largely on whether people are willing to pay for it at sustainable prices. "People" can either be individuals, or grantmakers who want to subsidize upskilling for x-risk researchers (and similar).

Reasons I want to charge money, rather than provide this at subsidized rates:

- "Lightcone just actually does need to pay the bills somehow, and running workshops like this isn't free."

- These workshops only actually make sense if they are really changing people's plans/skills in radical ways. "Make sure they seem valuable enough that people are willing to pay for them" is a good way to check that this is actually worth doing.

- As Andrew Critch [LW · GW] notes, there are some good selection effects on charging money, such that attendees are people who have most of the pieces necessary to achieve cool things, and you're filling in a few missing pieces that hopefully can unlock a lot of value.

The workshop format I'm actually most excited about is "team workshop", where an organization sends a team of decisionmakers together. I feel more optimistic about this resulting in "actual new plans that people act on", since:

- Everyone on the team will actually share context with each other, and they can evolve their planmaking together based on what seems actually likely to work.

- Since they're an existing org with some resources, they're more likely to be able to put longterm plans into action.

If you're interested in any of that enough to pay money for it, fill out this interest form.

Meanwhile, I'll be trying to write up posts that go into more detail about what I learned here.

23 comments

Comments sorted by top scores.

comment by Algon · 2024-04-12T14:47:05.733Z · LW(p) · GW(p)

I saw an interesting thread about how to strategically choose a problem & plan to make progress on it. It was motivated by the idea that you don't get taught how to choose good problems to work on in academia, so the authors wrote a paper on just that. This sorta reminded me of your project to teach people how to 10x their OODA looping, so I wanted to bring it to your attention @Raemon [LW · GW].

Replies from: Raemon

comment by winstonBosan · 2024-04-07T02:18:03.655Z · LW(p) · GW(p)

I am curious, what were other "visions" of this workshop that you generated in the pre-planning stage?

And now that you have done the workshop, which part of the previous visions might you incorporate into later workshops?

↑ comment by Raemon · 2024-04-08T05:28:30.938Z · LW(p) · GW(p)

I'm hoping to go into more detail in the examples for the "Having 2+ plans at 3 levels of meta" post. But, when I was generating visions, it mostly wasn't at the "workshop" level. Here's what actually happened:

- I started out thinking "MATS [LW · GW] is coming to Lighthaven (the event center where I work). MATS is one of the biggest influxes of new people into the community, and I would like to experiment with rationality training on them while they're here."

- My starting vision was:

- run an initial single-afternoon workshop early in the MATS program based on the Thinking Physics [LW · GW] exercises I ran last summer.

- invite MATS students for beta-testing various other stuff I'd come up with, which I'd iterate on based on their needs and interests

- try to teach them some kind of research-relevant skills that would help them go on to be successful alignment researchers

- I hoped the longterm result of this would be, in future years, we might have a "pre-MATS" program, where aspiring alignment researchers come for (somewhere between 1 week and 3 months), for a "cognitive boot camp", and then during MATS there'd be coaching sessions that helped keep ideas fresh in their mind while they did object level work.

- I got some pushback from a colleague who believed:

- there wasn't a sufficient filter on MATS students such that it seemed very promising to try and teach all of them

- In general, it's just really hard to teach deep cognitive mindsets, people seem to either already have the mindsets, or they don't, and tons of effort teaching them doesn't help. It also seems like meaningfully contributing to alignment research requires those hard-to-teach mindsets.

- At best, the program would still be expected to take 3 months, and it would take maybe 1-2 years to develop that 3-month program. That's a lot of time on everyone's part, enough that it seemed to them more like a "back to the drawing board" moment than an "iterate a bit more" moment.

- They felt more promise in "teaching one particular skill that seemed important, that many people didn't seem to be able to do at all."

- I disagreed with the collaborator, but did grudgingly admit to myself "well, the whole reason I'm optimistic about this idea is I think people could be way better at making plans. If this program is real, I should be able to make better plans. What better plans could I make?"

Then I sat and thought for an hour, and came up with a bunch of interventions in the class of "improve researcher hours":

- Targeted interventions for established researchers I thought were already helping.

- Instead of trying to teach everyone, figure out which researchers I already thought were good, and see if they had any blindspots, skill gaps or other problems that seemed fixable.

- Get everyone Thinking Assistants.

- There's a range of jobs that go from "person who just sorta stares at you and helps you focus" to "person who notices your habits and suggests metacognitive improvements" to "actual research assistant." Different people might need different versions, but, my impression is at least some people benefit tremendously from this. I know of one senior researcher who got an assistant and felt that their productivity went up 2-4x.

- Get everyone once-a-week coaches.

- cheaper than full-time assistants, and might still be good, in particular for independent researchers who don't otherwise have managers.

- Figure out particular skills that one can learn quickly rather than requiring 3 months of practice.

- a skill that came up that felt promising was teaching "hamming nature", or, "actually frequently asking yourself 'is this the most important thing I could be working on?'"

I also considered other types of plans like:

- Go back and build LessWrong features, maybe like "good distillation on the Alignment Forum that made it easier for people to get up to speed."

- Go figure out what's actually happening in the Policy World and what I can do to help with that.

- Help with compute governance.

I actually ended up doing parts of many of those plans. Most notably I switched towards thinking of it as "teach a cluster of interrelated skills in a short time period." I've also integrated "weekly followup coaching" into something more like a mainline plan, in tandem with the 5-day workshop. (I'm not currently acting on it because it's expensive and I'm still iterating, but I think of it as necessary and a good compromise between '3 month bootcamp' and 'just throw one workshop at them and pray')

I've also followed up with a senior researcher and found at least some potential traction on helping them with some stuff, though it's early and hard to tell how that went.

comment by meedstrom · 2025-01-11T12:13:40.005Z · LW(p) · GW(p)

Gonna reuse the term "fluency escape velocity"!

A major point of the workshop is to just grind on making cruxy-predictions for 4 days, and hopefully reach some kind of "fluency escape velocity", where it feels easy enough that you'll keep doing it.

Fits my experience with a lot of mental skills, because it often takes me many months or years after reading about a skill before I actually reach a point where I've stacked up enough experience with it that it becomes fluent / natural / a tool in my toolkit.

comment by Towards_Keeperhood (Simon Skade) · 2024-09-25T19:35:55.420Z · LW(p) · GW(p)

- Solve a GPQA question with 95% confidence

Does this mean think about a feasible-seeming GPQA question until you assign a 95% probability that your guess for the answer is correct, or what exactly do you mean by this? (Seems slightly weird to me to do that the same day people start calibration training. I'd guess most people to be wrong more often than 5% if they do that but idk.)

What was your motivation behind this exercise?

(<50% I will try this particular exercise, but) can you give a bit more precise instructions: Should I choose a problem in a field I know well or where I don't know much? Can I use GPT-o1 or just Google or nothing? Where exactly can I see the questions and answers?

Replies from: Raemon

↑ comment by Raemon · 2024-09-25T21:36:37.991Z · LW(p) · GW(p)

You think about it until you are 95% confident. Yep, this is pretty hard, but I think calibration on this sort of thing is pretty different from many other types of calibration and it's not that helpful to have practiced in advance. Basically the entire weekend is doing hard puzzles (and real world planning), and making Fatebook predictions about it, to start training that skill in realistic/meaningful circumstances.

The idea behind 95% confidence is that you have thought about the problem until you think you really thoroughly understand it, rather than getting pretty close and saying "well, good enough"

(See Exercise: Solve "Thinking Physics" [LW · GW] for some more thoughts)

In the Thinking Physics exercise (which I ended up not doing in this workshop but probably will in future ones), the rules are "no internet or getting help at all, except for when pairing with a specific partner who has also just started thinking about it."

In GPQA, the rules are "you can use the internet, but no LLMs" (both because LLMs feel a bit more cheating-y, but more importantly because it violates the 'don't leak this to LLMs' policy you are supposed to sign before downloading GPQA).

Replies from: Raemon

↑ comment by Raemon · 2024-09-25T23:06:25.090Z · LW(p) · GW(p)

Also: when I normally do this exercise, I give people unlimited time (because it's really not supposed to be about rushing)

During the workshop context, there is some practicality of 'well, we do kinda need to keep moving to the next session' so I typically give a 1.5 or 2 hour time window, which in practice is enough for some people but not others.

comment by Dweomite · 2024-04-12T22:09:22.492Z · LW(p) · GW(p)

I feel confused about how Fermi estimates were meant to apply to Luck Be a Landlord. I think you'd need error bars much smaller than 10x to make good moves at most points in the game.

Replies from: Raemon

↑ comment by Raemon · 2024-04-12T23:01:47.268Z · LW(p) · GW(p)

I think I do mostly mean "rough quantitative estimates", rather than specifically targeting Fermi-style orders of magnitude. (though I think it's sort of in-the-spirit-of-fermi to adapt the amount of precision you're targeting to the domain?)

The sort of thing I was aiming for here was: "okay, so this card gives me N coins on average by default, but it'd be better if there were other cards synergizing with it. How likely are other cards to synergize? How large are the likely synergies? How many cards are there, total, and how quickly am I likely to land on a synergizing card?"

(This is all in the frame of one-shotting the game, i.e. you trying to maximize score on first play through, inferring any mechanics based on the limited information you're presented with)
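As a rough illustration of that kind of estimate, here is a minimal sketch of an expected-value comparison between two cards. Every number, the function name, and the independence assumption are made up for the example; none of it is taken from the game's actual rules.

```python
def expected_coins_per_turn(base_coins, synergy_bonus, p_synergy_per_pick, picks_so_far):
    """Rough expected payout of a card, allowing for a possible synergy.

    Assumes each pick independently has the same chance of synergizing,
    and that one synergizing card is enough to unlock the full bonus.
    """
    p_at_least_one = 1 - (1 - p_synergy_per_pick) ** picks_so_far
    return base_coins + p_at_least_one * synergy_bonus


# Hypothetical comparison: a plain card worth 2 coins per turn, versus a
# 1-coin card that gains 2 extra coins once a synergizing card shows up.
plain_card = expected_coins_per_turn(2, 0, 0.0, 5)
synergy_card_early = expected_coins_per_turn(1, 2, 0.1, 5)
synergy_card_late = expected_coins_per_turn(1, 2, 0.1, 20)

print(f"plain card:            {plain_card:.2f}")
print(f"synergy card, 5 picks:  {synergy_card_early:.2f}")
print(f"synergy card, 20 picks: {synergy_card_late:.2f}")
```

Under these invented numbers the synergy card only overtakes the plain card if you expect enough further picks, which is the kind of crux a Fatebook prediction could then target.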

One reason I personally found Luck Be a Landlord valuable is it's "quantitative estimates on easy mode, where it's fairly pre-determined what common units of currency you're measuring everything in."

My own experience was:

- trying to do fermi-estimates on things like "which of these research-hour interventions seem best? How do I measure researcher hours? If researcher-hours are not equal, what makes some better or worse?"

- trying to one-shot Luck Be a Landlord

- trying to one-shot the game Polytopia (which is more strategically rich than Luck Be a Landlord, and figuring out what common currencies make sense is more of a question)

- ... I haven't yet gone back to try and do more object-level, real-world messy fermi calculations, but, I feel better positioned to do so.

↑ comment by Dweomite · 2024-04-13T01:08:14.316Z · LW(p) · GW(p)

OK. So the thing that jumps out at me here is that most of the variables you're trying to estimate (how likely are cards to synergize, how large are those synergies, etc.) are going to be determined mostly by human psychology and cultural norms, to the point where your observations of the game itself may play only a minor role until you get close-to-complete information. This is the sort of strategy I call "reading the designer's mind."

The frequency of synergies is going to be some compromise between what the designer thought would be fun and what the designer thought was "normal" based on similar games they've played. The number of cards is going to be some compromise between how motivated the designer was to do the work of adding more cards and how many cards customers expect to get when buying a game of this type. Etc.

As an extreme example of what I mean, consider book games, where the player simply reads a paragraph of narrative text describing what's happening, chooses an option off a list, and then reads a paragraph describing the consequences of that choice. Unlike other games, where there are formal systematic rules describing how to combine an action and its circumstances to determine the outcome, in these games your choice just does whatever the designer wrote in the corresponding box, which can be anything they want.

I occasionally see people praise this format for offering consequences that truly make sense within the game-world (instead of relying on a simplified abstract model that doesn't capture every nuance of the fictional world), but I consider that to be a shallow illusion. You can try to guess the best choice by reasoning out the probable consequences based on what you know of the game's world, but the answers weren't actually generated by that world (or any high-fidelity simulation of it). In practice you'll make better guesses by relying on story tropes and rules of drama, because odds are quite high that the designer also relied on them (consciously or not). Attempting to construct a more-than-superficial model of the story's world is often counter-productive.

And no matter how good you are, you can always lose just because the designer was in a bad mood when they wrote that particular paragraph.

Strategy games like Luck Be A Landlord operate on simple and knowable rules, rather than the inscrutable whims of a human author (which is what makes them strategy games). But the particular variables you listed aren't the outputs of those rules, they're the inputs that the designer fed into them. You're trying to guess the one part of the game that can't be modeled without modeling the game's designer.

I'm not quite sure how much this matters for teaching purposes, but I suspect it matters rather a lot. Humans are unusual systems in several ways, and people who are trying to predict human behavior often deploy models that they don't use to predict anything else.

What do you think?

↑ comment by Raemon · 2024-04-13T02:49:21.545Z · LW(p) · GW(p)

Basically: yep, a lot of skills here are game-design-specific and don't transfer. But I think a bunch of other skills do transfer, particularly in a context where you only play Luck Be a Landlord once (as well as 2-3 other one-shot games, and non-game puzzles), and then follow it up the next day by applying the skills in more real-world domains.

Few people are playing videogames to one-shot them, and doing so requires a different set of mental muscles than normal. Usually if you play Luck Be a Landlord, you'll play it once or twice just to get a feel for how the game works, and by the time you sit down and say "okay, now, how does this game actually work?" you'll already have been exposed to the rough distribution of cards, etc.

In one-shotting, you need to actually spell out your assumptions and known unknowns, and make guesses about unknown unknowns. (Especially at this workshop, where the one-shotting comes with "take 5 minutes per turn, make as many Fatebook predictions as you can for the first 3 turns, and then for the next 3 turns try to make two quantitative comparisons".)

The main point here is to build up a scaffolding of those mental muscles, such that the next day when you ask "okay, now, make a quantitative evaluation between [these two research agendas] or [these two product directions] or [this product direction and this research agenda]", you're not scrambling to think about both the immense complexity of the messy details and also the basics of how to do a quantitative estimate in a strategic environment.

↑ comment by Dweomite · 2024-04-13T04:09:13.705Z · LW(p) · GW(p)

I'm kinda arguing that the skills relevant to the one-shot context are less transferable, not more.

It might also be that they happen to be the skills you need, or that everyone already has the skills you'd learn from many-shotting the game, and so focusing on those skills is more valuable even if they're less transferable.

But "do I think the game designer would have chosen to make this particular combo stronger or weaker than that combo?" does not seem to me like the kind of prompt that leads to a lot of skills that transfer outside games.

↑ comment by Raemon · 2024-04-13T04:25:08.576Z · LW(p) · GW(p)

I'm not quite sure what things you're contrasting here.

The skills I care about are:

- making predictions (instead of just doing stuff without reflecting on what else is likely to happen)

- thinking about which things are going to be strategically relevant

- thinking about what resources you have available and how they fit together

- thinking about how to quantitatively compare your various options

And it'd be nice to train thinking about that in a context without the artificialness of gaming, but I don't have great alternatives. In my mind, the question is "what would be a better way to train those skills?", and "are simple strategy games useful enough to be worth training on, if I don't have better short-feedback-cycle options?"

(I can't tell from your phrasing so far if you were oriented around those questions, or some other one)

↑ comment by Dweomite · 2024-04-13T16:23:50.637Z · LW(p) · GW(p)

Oh, hm. I suppose I was thinking in terms of better-or-worse quantitative estimates--"how close was your estimate to the true value?"--and you're thinking more in terms of "did you remember to make any quantitative estimate at all?"

And so I was thinking the one-shot context was relevant mostly because the numerical values of the variables were unknown, but you're thinking it's more because you don't yet have a model that tells you which variables to pay attention to or how those variables matter?

↑ comment by Raemon · 2024-04-13T17:57:40.755Z · LW(p) · GW(p)

Yeah.

"did you remember to make any quantitative estimate at all?"

I'm actually meaning to ask the question "did your estimate help you strategically?" So, if you get two estimates wildly wrong, but they still had the right relative ranking and you picked the right card to draft, that's a win.
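As a toy illustration of that distinction (a minimal sketch: the card names and all the numbers are made up for the example, not taken from Luck Be a Landlord or the workshop), here is a case where every estimate is far off in absolute terms but the ranking, and therefore the draft pick, still matches:

```python
# Hypothetical "true" value of each draft option, and a player's rough estimates.
# All names and numbers here are invented for illustration.
true_value = {"card_a": 12.0, "card_b": 5.0, "card_c": 8.0}
estimate = {"card_a": 40.0, "card_b": 15.0, "card_c": 30.0}


def best_option(values):
    """Return the name of the option with the highest value."""
    return max(values, key=values.get)


def ranking(values):
    """Return option names sorted from highest to lowest value."""
    return sorted(values, key=values.get, reverse=True)


# Absolute error of each estimate: all of them are badly off.
abs_errors = {name: abs(estimate[name] - true_value[name]) for name in true_value}

# Strategic usefulness: did the estimates point at the same choice?
same_pick = best_option(estimate) == best_option(true_value)
same_ranking = ranking(estimate) == ranking(true_value)

print("absolute errors:", abs_errors)
print("same pick:", same_pick, "| same ranking:", same_ranking)
```

Scoring by `abs_errors` would call these estimates bad; scoring by `same_pick` counts them as a win, which is the sense of "helped you strategically" meant here.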

Also important: what matters here is not whether you got the answer right or wrong, it's whether you learned a useful thing in the process that transfers. (Like, you might end up getting the answer completely wrong, but if you can learn something about your thought process that you can improve on, that's a bigger win.)

↑ comment by Dweomite · 2024-04-17T00:22:40.678Z · LW(p) · GW(p)

I have an intuition that you're partly getting at something fundamental, and also an intuition that you're partly going down a blind alley, and I've been trying to pick apart why I think that.

I think that "did your estimate help you strategically?" has a substantial dependence on the "reading the designer's mind" stuff I was talking about above. For instance, I've made extremely useful strategic guesses in a lot of games using heuristics like:

- Critical hits tend to be over-valued because they're flashy

- Abilities with large numbers appearing as actual text tend to be over-valued, because big numbers have psychological weight separate from their actual utility

- Support roles, and especially healing, tend to be under-valued, for several different reasons that all ultimately ground out in human psychology

All of these are great shortcuts to finding good strategies in a game, but they all exploit the fact that some human being attempted to balance the game, and that that human had a bunch of human biases.

I think if you had some sort of tournament about one-shotting Luck Be A Landlord, the winner would mostly be determined by mastery of these sorts of heuristics, which mostly doesn't transfer to other domains.

However, I can also see some applicability for various lower-level, highly-general skills like identifying instrumental and terminal values, gears-based modeling, quantitative reasoning, noticing things you don't know (then forming hypotheses and performing tests), and so forth. Standard rationality stuff.

Different games emphasize different skills. I know you were looking for specific things like resource management and value-of-information, presumably in an attempt to emphasize skills you were more interested in.

I think "reading the designer's mind" is a useful category for a group of skills that is valuable in many games but that you're probably less interested in, and so minimizing it should probably be one of the criteria you use to select which games to include in exercises.

I already gave the example of book games as revolving almost entirely around reading the designer's mind. One example at the opposite extreme would be a game where the rules and content are fully-known in advance...though that might be problematic for your exercise for other reasons.

It might be helpful to look for abstract themes or non-traditional themes, which will have less associational baggage.

I feel like it ought to be possible to deliberately design a game to reward the player mostly for things other than reading the designer's mind, even in a one-shot context, but I'm unsure how to systematically do that (without going to the extreme of perfect information).

↑ comment by Raemon · 2024-04-21T21:07:21.036Z · LW(p) · GW(p)

One thing to remember is I (mostly) am advocating playing each game only once, and doing a variety of games/puzzles/activities, many of which should just be "real-world" activities, as well as plenty of deliberate Day Job stuff. Some of them should focus on resource management, and some of that should be "games" that have quick feedback loops, but it sounds like you're imagining it being more focused on the goodhartable versions of that than I think it is.

(Also, I think multiplayer games where all the information is known are somewhat of an antidote to these particular failure modes? Even when all the information is known, there's still uncertainty about how the pieces combine together, and there's some kind of brute-reality-fact about "well, the other players figured it out better than you".)

↑ comment by Dweomite · 2024-04-22T03:16:11.471Z · LW(p) · GW(p)

In principle, any game where the player has a full specification of how the game works is immune to this specific failure mode, whether it's multiplayer or not. (I say "in principle" because this depends on the player actually using the info; I predict most people playing Slay the Spire for the first time will not read the full list of cards before they start, even if they can.)

The one-shot nature makes me more concerned about this specific issue, rather than less. In a many-shot context, you get opportunities to empirically learn info that you'd otherwise need to "read the designer's mind" to guess.

Mixing in "real-world" activities presumably helps.

If it were restricted only to games, then playing a variety of games seems to me like it would help a little but not that much (except to the extent that you add in games that don't have this problem in the first place). Heuristics for reading the designer's mind often apply to multiple game genres (partly, but not solely, because approx. all genres now have "RPG" in their metaphorical DNA), and even if different heuristics are required it's not clear that would help much if each individual heuristic is still oriented around mind-reading.

comment by Dalcy (Darcy) · 2024-04-07T00:58:27.993Z · LW(p) · GW(p)

btw there's no input box for the "How much would you pay for each of these?" question.
