Extrapolating GPT-N performance

post by Lukas Finnveden (Lanrian) · 2020-12-18

Brown et al. (2020) (which describes the development of GPT-3) contains measurements of how 8 transformers of different sizes perform on several different benchmarks. In this post, I project how performance could improve for larger models, and give an overview of issues that may appear when scaling up. Note that these benchmarks are for ‘downstream tasks’ that are different from the training task (which is to predict the next token); these extrapolations thus cannot be directly read off the scaling laws in OpenAI’s Scaling Laws for Neural Language Models (Kaplan et al., 2020) or Scaling Laws for Autoregressive Generative Modelling (Henighan et al., 2020).

(If you don’t care about methodology or explanations, the final graphs are in Comparisons and limits.)

Methodology

Brown et al. report benchmark performance for 8 different model sizes. However, these models were not trained in a compute-optimal fashion. Instead, all models were trained on 300B tokens (one word is roughly 1.4 tokens), which is an inefficiently large amount of data for the smaller models. Since we’re interested in the best performance we can get for a given amount of compute, and these models weren’t compute-optimally trained, we cannot extrapolate these results on the basis of model size.

Instead, I fit a trend for how benchmark performance (measured in % accuracy) depends on the cross-entropy loss that the models get when predicting the next token on the validation set. I then use the scaling laws from Scaling Laws for Neural Language Models to extrapolate this loss. This is explained in the Appendix.

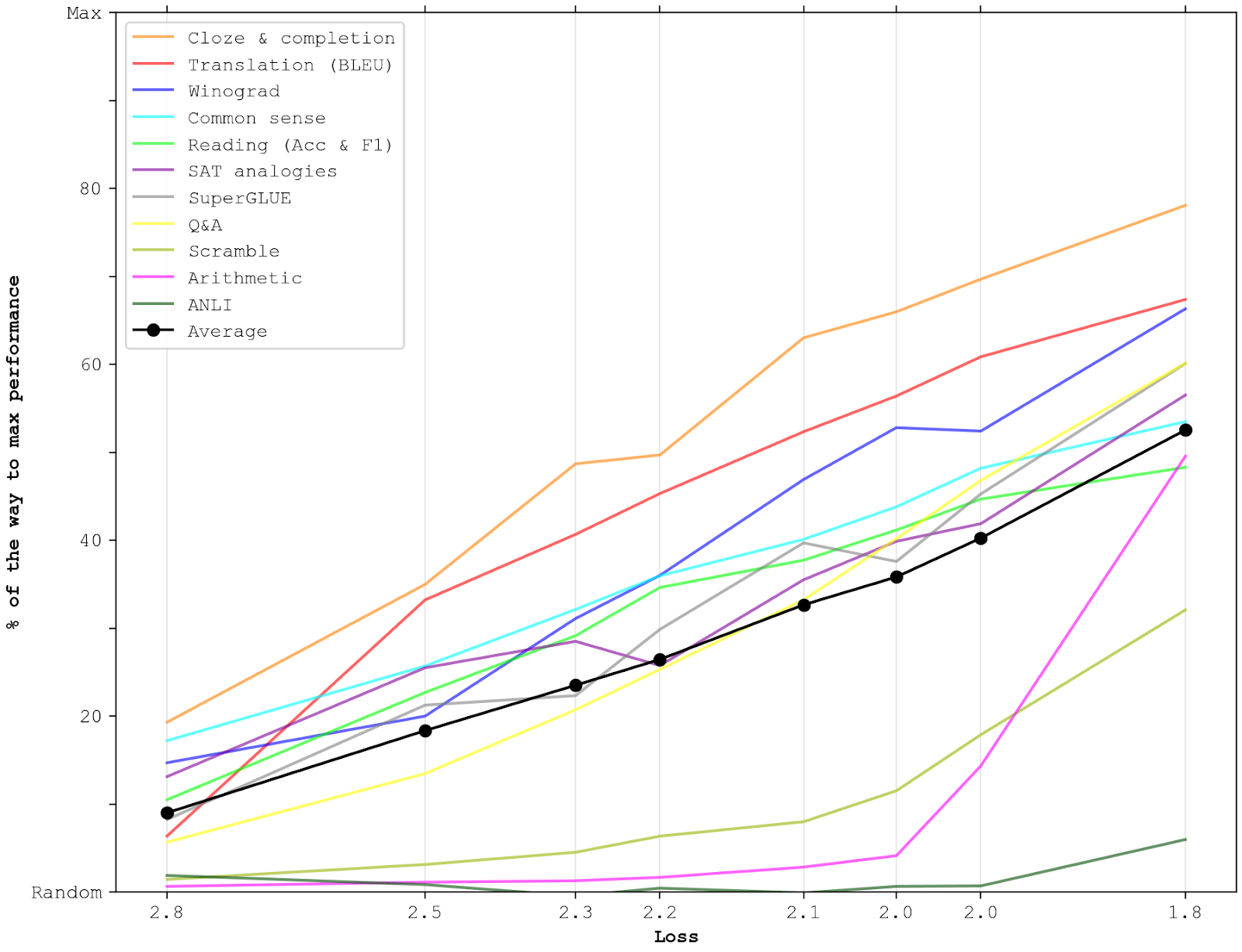

Plotting against loss

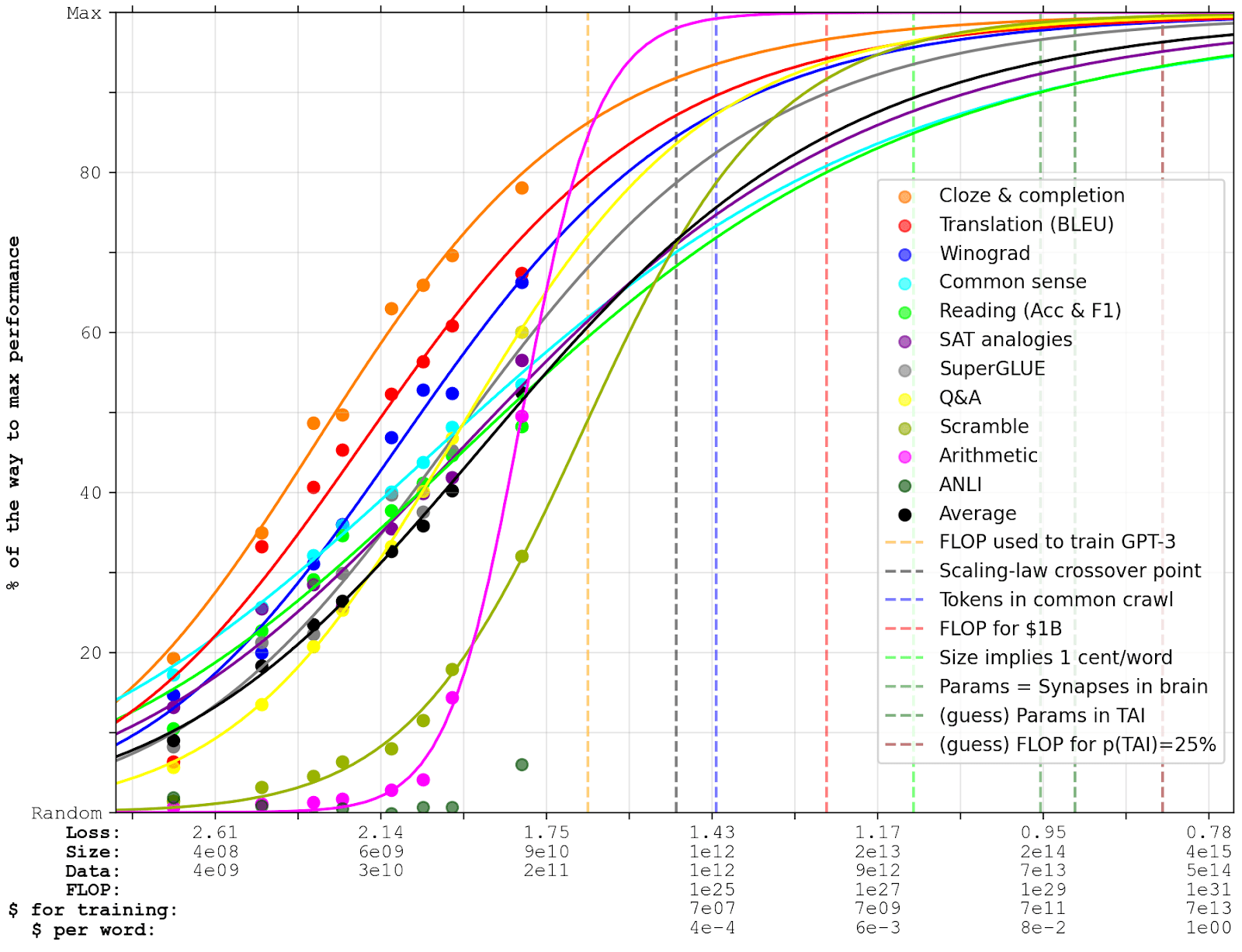

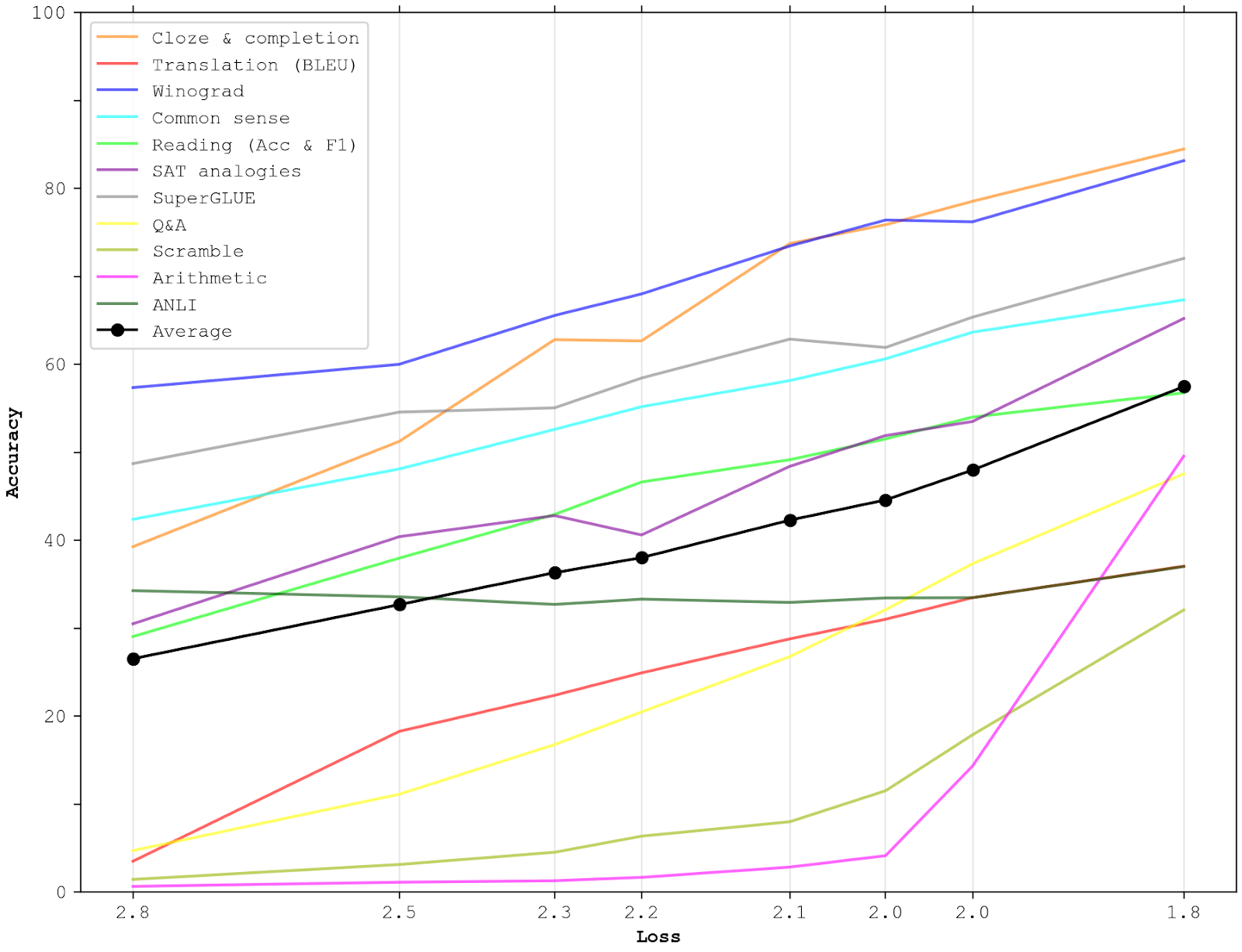

In order to get a sense of how GPT-3 performs on different types of tasks, I separately report few-shot progress on each of the 11 different categories discussed in Brown et al. For a fair comparison, I normalize the accuracy of each category between random performance and maximum performance; i.e., for each data point, I subtract the performance that a model would get if it responded randomly (or only responded with the most common answer), and divide by the difference between maximum performance and random performance. The black line represents the average accuracy of all categories. This implicitly gives less weight to benchmarks in larger categories, which I think is good; see the Appendix for more discussion about this and the normalization procedure.
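The normalization and category averaging described above can be sketched as follows. This is a minimal illustration, not the code used for the graphs: the function names and the example accuracies are made up, and the maximum is assumed to be 100% unless stated otherwise.

```python
def normalize_accuracy(accuracy, baseline, maximum=1.0):
    """Rescale accuracy so that the random/most-common-answer baseline maps to 0
    and the maximum achievable score maps to 1."""
    if maximum <= baseline:
        raise ValueError("maximum must exceed baseline")
    return (accuracy - baseline) / (maximum - baseline)


def category_average(categories):
    """Average within each category first, then across categories, so that a
    benchmark in a large category gets less weight than one in a small category."""
    per_category = [sum(scores) / len(scores) for scores in categories.values()]
    return sum(per_category) / len(per_category)


# Hypothetical numbers, purely for illustration (not from Brown et al.).
example = {
    # two four-way multiple-choice benchmarks: random guessing scores 25%
    "multiple_choice": [normalize_accuracy(0.55, 0.25), normalize_accuracy(0.70, 0.25)],
    # one yes/no benchmark: random guessing scores 50%
    "yes_no": [normalize_accuracy(0.80, 0.50)],
}
overall = category_average(example)
```

Averaging per category first is what makes each benchmark in a crowded category count for less than a benchmark that has a category to itself.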

Note that the x-axis is logarithmic. For reference, the 4th model (at a loss of 2.2) is similar to GPT-2’s size (1.5e9 parameters).

Overall, I think the models’ performance is surprisingly similar across many quite different categories. Most of them look reasonably linear, improve at similar rates, and both start and end at similar points. This is partly because all tasks are selected for being appropriately difficult for current language models, but it’s still interesting that GPT-3’s novel few-shot way of tackling them doesn’t lead to more disparities. The main outliers are Scramble, Arithmetic, and ANLI (Adversarial Natural Language Inference); this is discussed more below.

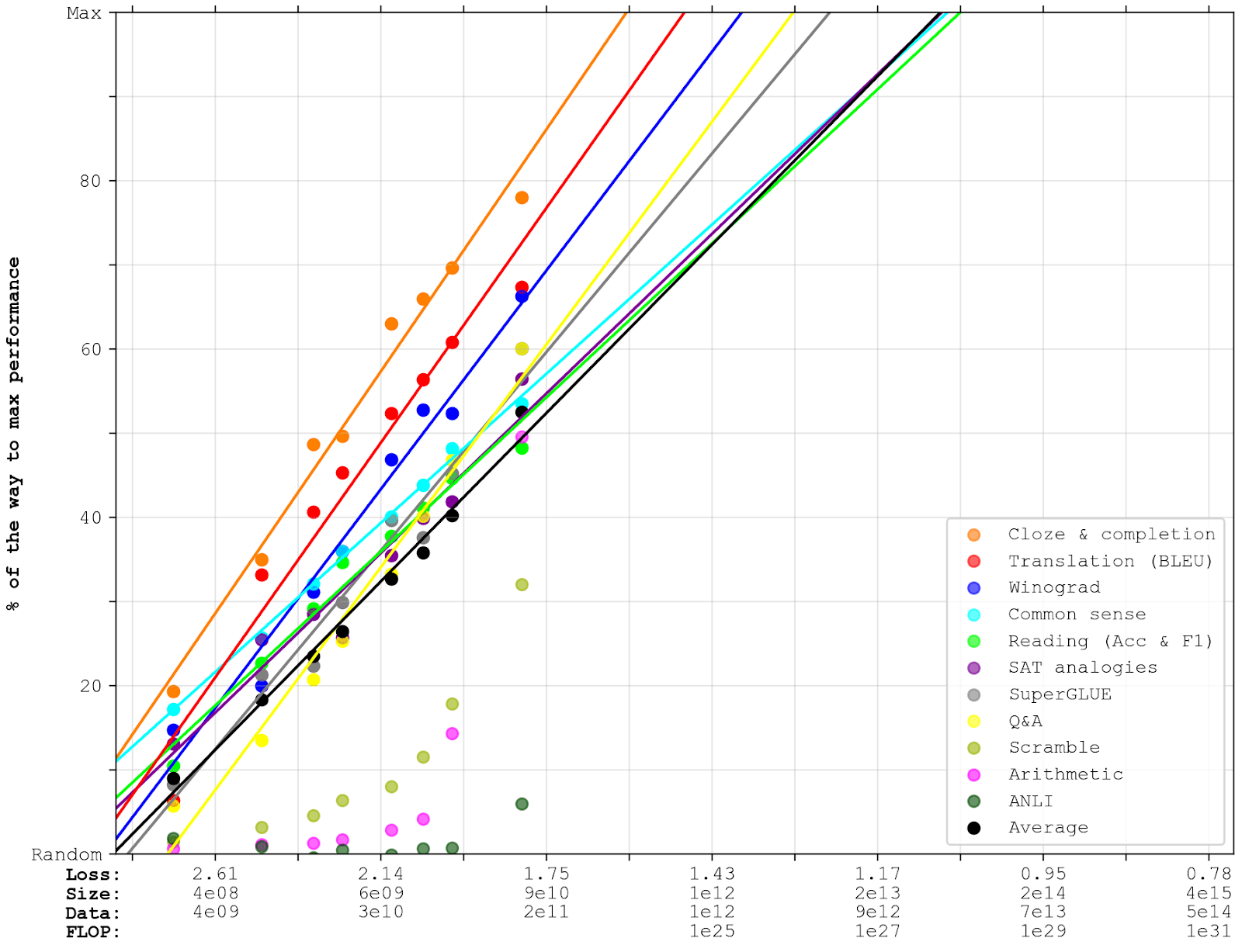

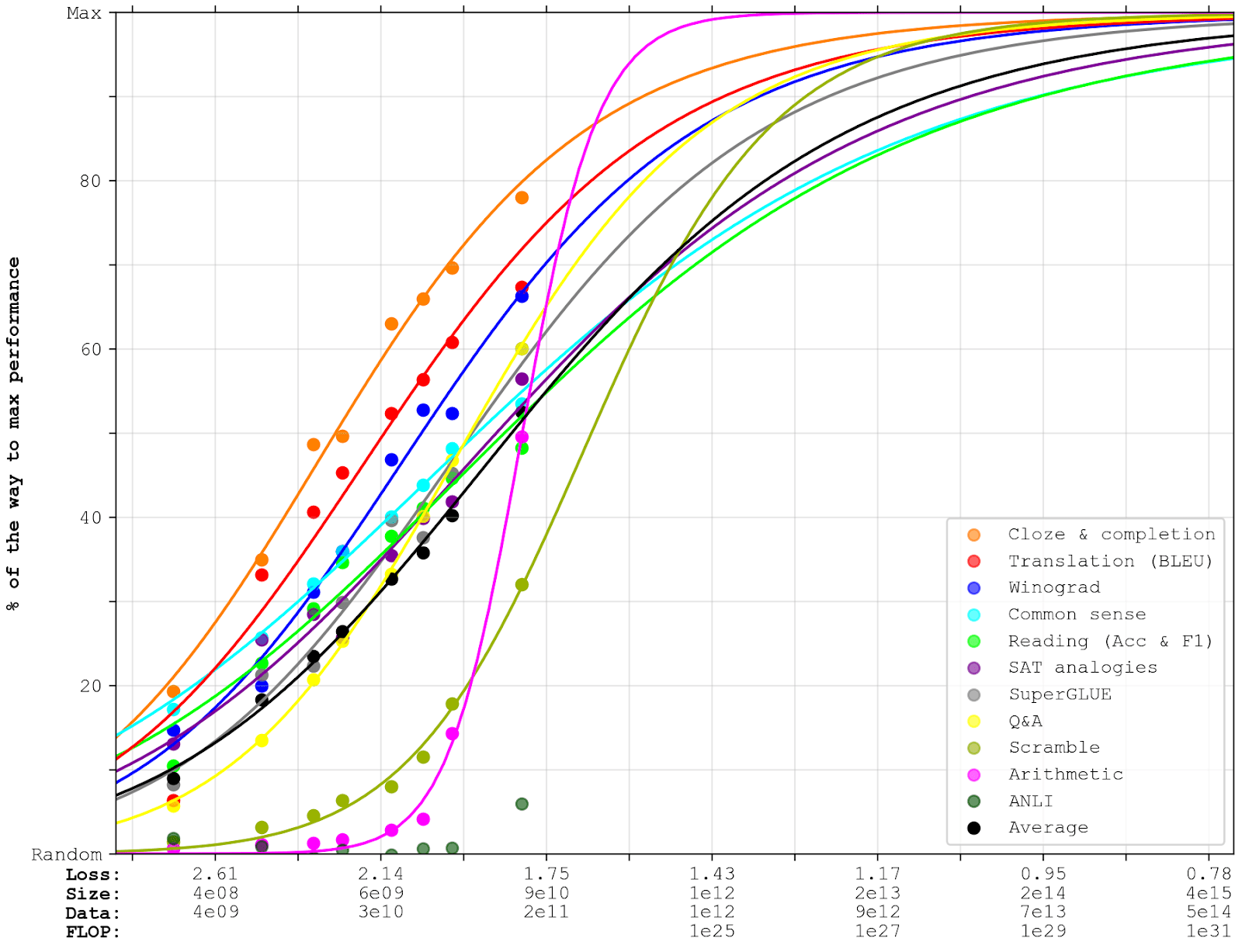

Extrapolating

In general, on linear-log plots like the ones above, where the y-axis is a score between 0 and 1, I expect improvements to follow some sort of s-curve. First, they perform at the level of random guessing, then they improve exponentially as they start assembling heuristics (as on the scramble and arithmetic tasks) and finally they slowly converge to the upper bound set by the irreducible entropy.

Note that, if the network converges towards the irreducible error like a negative exponential (on a plot with reducible error on the y-axis), it would be a straight line on a plot with the logarithm of the reducible error on the y-axis. Since the x-axis is also logarithmic, this would be a straight line on a log-log plot, i.e. a power-law between the reducible error and the reducible loss. In addition, since the reducible loss is related to the data, model size, and compute via power laws, their logarithms are linearly related to each other. This means that we can (with linear adjustments) add logarithms of these to the x-axis, and that a similar argument applies to them. Thus, converging to the irreducible error like a negative exponential corresponds to a power law between reducible error and each of those inputs (data, model size, and compute).
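The argument in the previous paragraph can be written out explicitly. This is a sketch under assumed notation, none of which appears in the original: E is the reducible error, L_r is the reducible loss, u = -ln L_r is the horizontal coordinate (increasing as loss falls), and A, k, N_c and α_N are positive constants. The power-law form for L_r in terms of model size N is the shape used in Kaplan et al.; the same steps apply to data and compute.

```latex
\begin{align*}
E(u) &= A\,e^{-k u}, \qquad u = -\ln L_r
  && \text{(negative exponential in the plotted coordinate)} \\
\ln E &= \ln A + k \ln L_r
  && \text{(straight line on a log-log plot)} \\
E &= A\,L_r^{\,k}
  && \text{(power law in the reducible loss)} \\
L_r &= \left(\frac{N_c}{N}\right)^{\alpha_N}
  \;\Longrightarrow\; E = A\left(\frac{N_c}{N}\right)^{k\,\alpha_N}
  && \text{(power law in model size } N\text{)}
\end{align*}
```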

Unfortunately, with noisy data, it’s hard to predict when such an s-curve will hit its inflection point unless you have many data points after it (see here). Since we don’t, I will fit linear curves and sigmoid curves.

- On most datasets, I think the linear curves will overestimate how soon they’ll reach milestones above 90%, since I expect performance improvements to start slowing down before then. However, I wouldn’t be shocked if they were a decent prediction up until that point. The exceptions to this are ANLI, arithmetic, and scramble, which are clearly not on linear trends; I have opted to not extrapolate them linearly (though they’re still included in the average).

- I think sigmoid curves – i.e., s-curves between 0% and 100% with an inflection point at 50% – are more sensible as a median guess of performance improvements. My best guess is that they’re more likely to underestimate performance than overestimate performance, because the curves look quite linear right now, and I give some weight to the chance that they’ll continue like that until they get much closer to maximum performance (say, around 80-90%), while the sigmoids assume they’ll bend quite soon. This is really just speculation, though, and it could go either way. For sigmoids, I extrapolate all benchmarks except ANLI.
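To make the two kinds of fit concrete, here is a minimal sketch of fitting a straight line and a 0%-to-100% sigmoid to normalized scores as a function of log-loss. It is not necessarily the fitting procedure used for the graphs: the sigmoid is fit with a simple logit transform followed by least squares, and the data points are synthetic (generated from a known sigmoid with made-up parameters), not the values from Brown et al.

```python
import math


def least_squares_line(xs, ys):
    """Ordinary least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def sigmoid(log_loss, a, b):
    """S-curve between 0 and 1 with its inflection point at 50%."""
    return 1 / (1 + math.exp(-(a + b * log_loss)))


def fit_sigmoid(log_losses, scores):
    """Fit the sigmoid by drawing a least-squares line through the logits."""
    logits = [math.log(s / (1 - s)) for s in scores]
    b, a = least_squares_line(log_losses, logits)
    return a, b


# Synthetic data: eight hypothetical validation losses and scores from a known sigmoid.
A_TRUE, B_TRUE = 2.0, -10.0
losses = [3.0, 2.7, 2.4, 2.2, 2.0, 1.9, 1.8, 1.73]
log_losses = [math.log10(loss) for loss in losses]
scores = [sigmoid(x, A_TRUE, B_TRUE) for x in log_losses]

lin_slope, lin_intercept = least_squares_line(log_losses, scores)
a_fit, b_fit = fit_sigmoid(log_losses, scores)

# The fitted sigmoid crosses 50% where a + b * log10(loss) = 0.
loss_at_half = 10 ** (-a_fit / b_fit)
```

With real, noisy data the two fits can diverge substantially once extrapolated, which is why both are worth looking at.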

For extrapolating size, data, and compute constraints, I use a scaling law that predicts loss via the number of parameters and the available data. This doesn’t directly give the floating point operations (FLOP) necessary for a certain performance, since it’s not clear how many epochs the models need to train on each data point to perform optimally. However, some arguments suggest that models will soon become so large that they’ll fully update on data the first time they see it, and overfit if they’re trained for multiple epochs. This is predicted to happen after ~1e12 parameters, so I assume that models only train for one epoch after this (which corresponds to 6ND FLOP, where N is the model size and D is the number of tokens). See the Appendix for more details.
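As a quick illustration of the 6ND rule of thumb, the snippet below computes the training FLOP for a single pass over the data. The function name is illustrative; the 175e9 parameter count is GPT-3's size as reported by Brown et al., and 300e9 is the token count quoted earlier in this post.

```python
def training_flop(n_params, n_tokens):
    """Approximate training compute for one pass over the data: 6 * N * D FLOP."""
    return 6 * n_params * n_tokens


# GPT-3-sized example: N = 175e9 parameters, D = 300e9 tokens.
gpt3_like = training_flop(175e9, 300e9)
print(f"{gpt3_like:.2e} FLOP")
```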

Here are the extrapolations:

Extrapolations like these get a lot less reliable the further you extend them, and since we’re unlikely to beat many of these benchmarks by the next 100x increase in compute, the important predictions will be quite shaky. We don’t have much else to go on, though, so I’ll assume that the graphs above are roughly right, and see where that takes us. In Comparisons and limits, I’ll discuss how much we could afford to scale models like these. But first, I’ll discuss:

How impressive are the benchmarks?

The reason that I’m interested in these benchmarks is that they can say something about when transformative AI will arrive. There are two different perspectives on this question:

- Should we expect a scaled-up version of GPT-3 to be generally more intelligent than humans across a vast range of domains? If not, what does language models’ performance tell us about when such an AI can be expected?

- Will a scaled-up version of GPT-3 be able to perform economically useful tasks? Do we know concrete jobs that it could automate, or assistance it could provide?

The former perspective seems more useful if you expect AI to transform society once we have a single, generally intelligent model that we can deploy in a wide range of scenarios. The latter perspective seems more useful if you expect AI to transform society by automating one task at a time, with specialised models, as in Comprehensive AI Services (though note that massively scaling up language models trained on everything is already in tension with my impression of CAIS).

So what can the benchmarks tell us, from each of these perspectives?

To begin with, it’s important to note that it’s really hard to tell how impressive a benchmark is. When looking at a benchmark, we can at best tell what reasoning we would use to solve it (and even this isn’t fully transparent to us). From this, it is tempting to predict that a task won’t be beaten until a machine can replicate that type of reasoning. However, it’s common that benchmarks get solved surprisingly fast due to hidden statistical regularities. This often happens in image classification, which explains why adversarial examples are so prevalent, as argued in Adversarial Examples Are Not Bugs, They Are Features.

This issue is also common among NLP tasks – sufficiently common that many of today’s benchmarks are filtered to only include questions that a tested language model couldn’t answer correctly. While this is an effective approach for continuously generating more challenging datasets, it makes the relationship between benchmarks taken from any one time and the kind of things we care about (like ability to perform economically useful tasks, or the ability to reason in a human-like way) quite unclear.

As a consequence of this, I wouldn’t be very impressed by a fine-tuned language model reaching human performance on any one of these datasets. However, I think a single model reaching human performance on almost all of them with ≤100 examples from each (provided few-shot style) would be substantially more impressive, for a few reasons. Firstly, GPT-3 already seems extremely impressive, qualitatively. When looking at the kind of results gathered here, it seems like the benchmark performance underestimates GPT-3’s impressiveness, which suggests that it isn’t solving them in an overly narrow way. Secondly, with fewer examples, it’s less easy to pick up on spurious statistical regularities. Finally, if all these tasks could consistently be solved, that would indicate that a lot more tasks could be solved with ≤100 examples, including some economically useful ones. Given enough tasks like that, we no longer care exactly how GPT-3 does it.

What are the benchmarks about?

(See footnotes for examples.)

- Translation is about translating between English and another language (GPT-3 was tested on Romanian, German, and French).

- The Q&A and partly common sense benchmarks are mostly about memorising facts and presenting them in response to quite clear questions[1]. This seems very useful if GPT-3 can connect it with everything else it knows, to incorporate it for separate tasks, but not terribly useful otherwise.

- Many of the reading comprehension tasks are about reading a paragraph and then answering questions about it; often by answering yes or no and/or citing a short section of the paragraph. GPT-3 doesn’t perform terribly well on this compared to the SOTA, perhaps because it’s quite different from what it’s been trained to do; and I imagine that fine-tuned SOTA systems can leverage quite a lot of heuristics about what parts of the text tend to be good to pick out, where to start and end them, etc.

- Similar to reading comprehension, the cloze and completion tasks test understanding of a given paragraph, except they do this by asking GPT-3 to end a paragraph with the right word[2], or to pick the right ending sentence[3]. GPT-3 currently does really well on these tasks, both when compared to other methods and in absolute terms, as visible on the graphs above. This is presumably because it’s very similar to the task that GPT-3 was trained on.

- The Winograd tasks also test understanding, but by asking which word a particular pronoun refers to[4].

- A couple of tasks make use of more unique capabilities. For example, one of the reading comprehension tasks often requires application of in-context arithmetic[5] and some of the common sense reasoning tasks directly appeal to tricky knowledge of physical reality[6].

- As mentioned above, many of the benchmarks have been filtered to only include questions that a language model failed to answer. ANLI (short for Adversarial Natural Language Inference) does this to an unusual degree. The task is to answer whether a hypothesis is consistent with a description[7], and the dataset is generated over 3 rounds. Each round, a transformer is trained on all questions from previous rounds, whereupon workers are asked to generate questions that fool the newly trained transformer. I wouldn’t have predicted beforehand that GPT-like models would do quite so badly on this dataset, but I assume it is because of this adversarial procedure. In the end, it seems like the fully sized GPT-3 just barely manages to start on an s-curve.

- Finally, the scramble task is about shuffling around letters in the right way, and arithmetic is about adding, subtracting, dividing, and multiplying numbers. The main interesting thing about these tasks is that performance doesn’t improve at all in the beginning, and then starts improving very fast. This is some evidence that we might expect non-linear improvements on particular tasks, though I mostly interpret it as these tasks being quite narrow, such that when a model starts getting the trick, it’s quite easy to systematically get right.

Evidence for human-level AI

What capabilities would strong performance on these benchmarks imply? None of them stretches the limits of human ability, so no level of performance would give direct evidence for super-human performance. Similarly, I don’t think any level of performance on these benchmarks would give much direct evidence about ability to e.g. form longer term plans, deeply understand particular humans or to generate novel scientific ideas (though I don’t want to dismiss the possibility that systems would improve on these skills, if massively scaled up). Overall, my best guess is that a scaled-up language model that could beat these benchmarks would still be a lot worse than humans at a lot of important tasks (though we should prepare for the possibility that some simple variation would be very capable).

However, I think there’s another way these benchmarks can provide evidence for when we’ll get human-level AI, which relies on a model presented in Ajeya Cotra’s Draft report on AI timelines. (As emphasized in that link, the report is still a draft, and the numbers are in flux. All numbers that I cite from it in this post may have changed by the time you read this.) I recommend reading the report (and/or Rohin’s summary in the comments and/or my guesstimate replication), but to summarize briefly: The report’s most central model estimates the number of parameters that a neural network would need to become ~human-equivalent on a given task, and uses scaling laws to estimate how many samples such a network would need to be trained on (using current ML methods). Then, it assumes that each “sample” requires FLOP proportional to the amount of data required to tell whether a given perturbation to the model improves or worsens performance (the task’s effective horizon length). GPT-3’s effective horizon length is a single token, which would take ~¼ of a second for a human to process; while e.g. a meta-learning task may require several days’ worth of data to tell whether a strategy is working or not, so it might have a ~100,000x longer horizon length.

This model predicts that a neural network needs similarly many parameters to become human-equivalent at short horizon lengths and long horizon lengths (the only difference being training time). Insofar as we accept this assumption, we can get an estimate of how many parameters a model needs to become ~human-equivalent at a task of any horizon length by answering when they’ll become ~human-equivalent at short horizon lengths.

Horizon length is a tricky concept, and I'm very unsure how to think about it. Indeed, I'm even unsure to what extent it's a coherent and important variable that we should be paying attention to. But if the horizon length model is correct, the important question is: How does near-optimal performance on these benchmarks compare with being human-level on tasks with a horizon length of 1 token?

Most obviously, you could argue that the former would underestimate the latter, since the benchmarks are only a small fraction of all possible short-horizon tasks. Indeed, as closer-to-optimal performance is approached, these benchmarks will presumably be filtered for harder and harder examples, so it would be premature to say that the current instantiation of these benchmarks represents human-level ability.

In addition, these tasks are limited to language, while humans can also do many other short-horizon tasks, like image or audio recognition[8]. One approach would be to measure what fraction f of the human brain is involved in language processing, and then assume that a model that could do all short-horizon tasks would be 1/f times as large as one that can only do language. However, I’m not sure that’s fair, because we don’t actually care about getting a model that’s human-level on everything – if we can get one that only works when fed language, that won’t be a big limitation (especially as we already have AIs that are decent at parsing images and audio into text, if not quite as robust as humans). If we compare the fraction of the brain dedicated to short-horizon language parsing with whatever fraction of the brain is dedicated to important tasks like strategic planning, meta-learning, and generating new scientific insights, I have no idea which one would be larger. Ultimately, I think that would be a more relevant comparison for what we care about.

Furthermore, there are some reasons why these benchmarks could overestimate the difficulty of short-horizon tasks. In particular, you may think that the hardest available benchmarks used to represent 1-token horizon lengths, but that these have been gradually selected away in favor of increasingly narrow benchmarks that AI particularly struggles with, but that would very rarely be used in a real world context. There’s no good reason to expect neural networks to become human-equivalent at all tasks at the same time, so there will probably be some tasks that they remain subhuman at far beyond the point of them being transformative. I don’t think this is a problem for current benchmarks, but I think it could become relevant soon if we keep filtering tasks for difficulty.

Perhaps more importantly, this particular way of achieving human-parity on short horizon lengths (scaling GPT-like models and demonstrating tasks few-shot style) may be far inferior to some other way of doing it. If a group of researchers cared a lot about this particular challenge, it’s possible that they could find much better ways of doing it within a few years[9].

Overall, I think that near-optimal performance on these benchmarks would somewhat underestimate the difficulty of achieving human-level performance on 1-token horizon lengths. However, since I’m only considering one single pathway to doing this, I think the model as a whole is slightly more likely to overestimate the parameter-requirements than to underestimate them.

Economically useful tasks

Less conceptually fraught, we can ask whether to expect systems with near-optimal benchmark performance to be able to do economically useful tasks. Here, my basic expectation is that such a system could quite easily be adapted to automating lots of specific tasks, including the ones that Cotra mentions as examples of short-horizon tasks here:

- Customer service and telemarketing: Each interaction with a customer is brief, but ML is often required to handle the diversity of accents, filter out noise, understand how different words can refer to the same concept, deal with customization requests, etc. This is currently being automated for drive-thru order taking by the startup Apprente (acquired by McDonald’s).

- Personal assistant work: This could include scheduling, suggesting and booking good venues for meetings such as restaurants, sending routine emails, handling routine shopping or booking medical and dental appointments based on an understanding of user needs, and so on.

- Research assistant work: This could involve things like copy-editing for grammar and style (e.g. Grammarly), hunting down citations on the web and including them in the right format, more flexible and high-level versions of “search and replace”, assisting with writing routine code or finding errors in code, looking up relevant papers online, summarizing papers or conversations, etc.

Some of the benchmarks directly give evidence about these tasks, most clearly unambiguous understanding of ambiguous text, ability to memorise answers to large numbers of questions, and ability to search text for information (and understand when it isn’t available, so that you need to use a human). Writing code isn’t directly related to any of the benchmarks, but given how well it already works, I assume that it’s similarly difficult to other natural language tasks, and would improve in line with them.

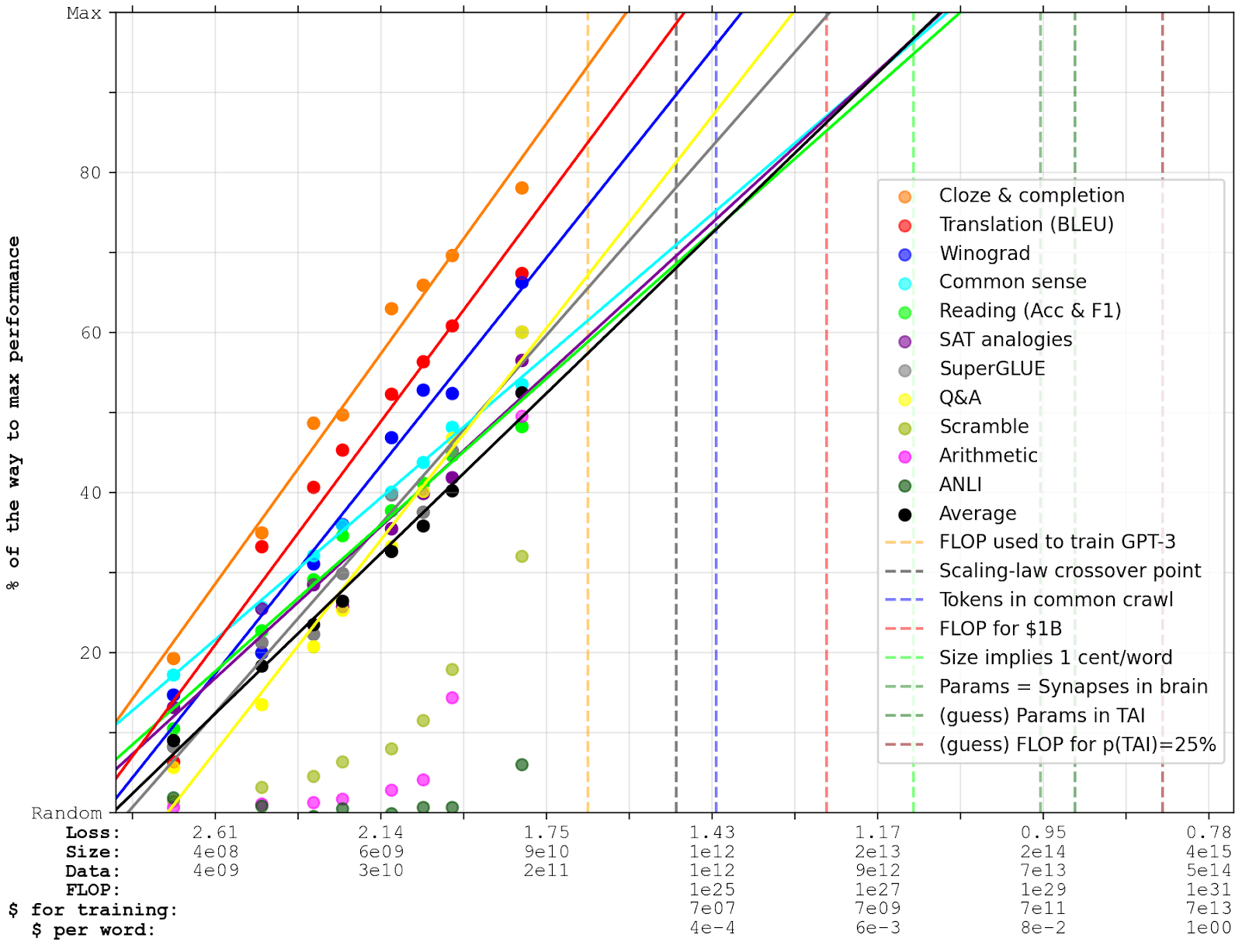

Comparisons and limits

Finally, I’ve augmented the x-axis with some reference estimates. I’ve estimated cost for training by multiplying the FLOP with current compute prices; and I’ve estimated cost per word during inference from the current pricing of GPT-3 (adjusting for network size). I have also added some dashed lines where interesting milestones are passed (with explanations below):

(Edit: I made a version of this graph with new scaling laws and with some new, larger language models here.)

In order:

- The orange line marks the FLOP used to train GPT-3, which is ~6x larger than what the inferred FLOP of the right-most data points would be. As explained in the Appendix, this is because GPT-3 is small enough that it needs multiple epochs to fully benefit from the data it’s trained on. I expect the projection to be more accurate after the black line, after which the scaling law I use starts predicting higher compute-requirements than other scaling laws (again, see the Appendix).

- According to Brown et al., there are a bit less than 1e12 words in the Common Crawl (the largest publicly available text dataset), which means there are a bit more than 1e12 tokens (blue line). What other data could we use, beyond this point?

- We could use more internet data. Going by Wikipedia, expanding to other languages would only give a factor ~2. However, Common Crawl claims to have “petabytes of data”, while Google claims to have well over 100 petabytes in their search index, suggesting they may have 10-100x more words, if a similar fraction of that data is usable text. However, on average, further data extracted from the internet would likely be lower-quality than what has been used so far.

- As of last year, Google Books contained more than 40 million titles. If each of these had 90,000 words, that would be ~4e12 words (of high quality).

- We could start training on video. I think the total number of words spoken on YouTube is around 1 trillion, so just using speech-to-text wouldn’t add much, but if you could usefully train on predicting pixels, that could add enormous amounts of data. I definitely think this data would be less information-rich per byte, though, which could reduce efficiency of training by a lot. Perhaps the right encoding scheme could ameliorate that problem.

- If they wanted to, certain companies could use non-public data generated by individuals. For example, 3e10 emails are sent every year, indicating that Google could get a lot of words if they trained on Gmail data. Similarly, I suspect they could get some mileage out of words written in Google Docs or words spoken over Google Meet.

Overall, I haven’t found a knock-down argument that data won’t be a bottleneck, but there seem to be enough plausible avenues that I think we could scale at least 10x past the Common Crawl, if there’s sufficient economic interest. Even after that I would be surprised if we completely ran out of useful data, but I wouldn’t be shocked if training became up to ~10x more expensive from being forced to switch to some less efficient source.

- The red line marks the FLOP that could be bought for $1B, assuming 2.4e17 FLOP/$.[10] (Training costs are also written on the x-axis.) I think this is within an order of magnitude of the total investments in OpenAI[11], and close to DeepMind’s yearly spending. Google’s total yearly R&D spending is closer to $30B, and Google’s total cash-on-hand is ~$130B. One important adjustment to bear in mind is that hardware is getting cheaper:

- Over the last 40-50 years, the price of FLOP/s has fallen by 10x every ~3-4 years.[12]

- Over the last 12 years, the price of FLOP/s has fallen by 10x just once.[13]

- As a measure of gains from hardware specialisation, over the last 5 years, the price of fused multiply-add operations/s (which are especially useful for deep learning) has fallen by about 10x[14]. This sort of growth from specialisation can’t carry on forever, but it could be indicative of near-term gains from specialisation.

Cotra’s best guess is that hardware prices will fall by 10x every 8-9 years. I think faster progress is plausible, given the possibility of further specialisation and the older historical trend, but I’m pretty uncertain.

- The light green line marks the point where reading or writing one word would cost 1 cent, if cost were to linearly increase with size from today's 250 tokens / cent. (Cost/word is also written on the x-axis.) For reference, this would be as expensive as paying someone $10/hour if they read/wrote 15 words per minute, while freelance writers typically charge 3-30 cents per word. As long as GPT-N was subhuman on all tasks, I think this could seriously limit the usefulness of applying it to many small, menial tasks. However, hardware and algorithmic progress could substantially ameliorate this problem. Note that the cost of inference scales in proportion with the size, while the total training costs scale in proportion to size*data, which is proportional to size^1.74. This means that if the price per FLOP is reduced by 10x, and we train with 10x more FLOP, the total inference costs are reduced by a factor ~3.[15]

- There are roughly 2e14 synapses in the human brain (source), which is approximately analogous to the number of parameters in neural networks (green line).

- The dark green line marks the median estimate for the number of parameters in a transformative model, according to Ajeya Cotra’s model[16]. Notably, this is quite close to when the benchmarks approach optimal performance. The 80% confidence interval is between 3e11 and 1e18 parameters, going all the way from the size of GPT-3 to well beyond the edge of my graph.

- Finally, the last dashed line marks the number of FLOP for which Cotra’s current model predicts that 2020 methods would have a 25% chance of yielding TAI, taking into account that the effective horizon length may be longer than a single token.
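Several of the milestones above rest on simple arithmetic, which the following sketch reproduces. All inputs are figures quoted in the list; the function and constant names are illustrative, and the inference-cost calculation assumes (as stated above) that training FLOP is proportional to size^1.74 and that cost per token is proportional to model size divided by the improvement in compute price.

```python
FLOP_PER_DOLLAR = 2.4e17   # compute price assumed for the red line
SIZE_EXPONENT = 1.74       # training FLOP is proportional to size ** 1.74


def google_books_words(titles=40e6, words_per_title=90_000):
    """Rough word count if every title had the same (assumed) length."""
    return titles * words_per_title


def flop_for_budget(dollars, flop_per_dollar=FLOP_PER_DOLLAR):
    """FLOP that a given training budget buys at a fixed price."""
    return dollars * flop_per_dollar


def cents_per_word_for_wage(dollars_per_hour, words_per_minute):
    """Cost per word of a human paid by the hour."""
    return 100 * dollars_per_hour / (60 * words_per_minute)


def inference_cost_change(price_drop, flop_increase, exponent=SIZE_EXPONENT):
    """Factor by which per-token inference cost changes when compute gets
    `price_drop` times cheaper and training uses `flop_increase` times more FLOP."""
    size_growth = flop_increase ** (1 / exponent)
    return size_growth / price_drop


books = google_books_words()                # 3.6e12, i.e. ~4e12 words
budget_flop = flop_for_budget(1e9)          # FLOP bought for $1B
human_cost = cents_per_word_for_wage(10, 15)
cost_factor = inference_cost_change(10, 10)
```

Under these assumptions, `human_cost` comes out slightly above 1 cent per word, and `cost_factor` is a bit under 0.4, matching the "factor ~3" reduction in inference cost.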

Finally, it’s important to note that algorithmic advances are real and important. GPT-3 still uses a somewhat novel and unoptimised architecture, and I’d be unsurprised if we got architectures or training methods that were one or two orders of magnitude more compute-efficient in the next 5 years.

Takeaways and conclusions

Overall, these are some takeaways I have from the above graphs. They are all tentative, and written in the spirit of exposing beliefs to the light of day so that they can turn to ash. I encourage you to draw your own conclusions (and to write comments that incinerate mine).

- On benchmark performance, GPT-3 seems to be in line with performance predicted by smaller sizes, and doesn’t seem to particularly break or accelerate the trend.

- While it sharply increases performance on arithmetic and scramble tasks in particular, I suspect this is because they are narrow tasks which are easy once you understand the trick. If future transformative tasks are similarly narrow, we might be surprised by further scaling; but insofar as we expect most value to come from good performance on a wide range of tasks, I’m updating towards a smaller probability of being very surprised by scaling alone (i.e., I don’t want to rule out sudden, surprising algorithmic progress).

- Of course, sudden increases in spending can still cause sudden increases in performance. GPT-3 is arguably an example of this.

- Given the steady trend, it also seems less likely to suddenly stop.

- Close-to-optimal performance on these benchmarks seems like it’s at least ~3 orders of magnitude compute away (costing around $1B at current prices). This means that I’d be somewhat surprised if a 100x scaling brought us there immediately; but another 100x scaling after that might do it (for reference, a 10,000x increase in compute would correspond to a bit more than 100x increase in size, which is the difference between GPT-2 and GPT-3). If we kept scaling these models naively, I’d think it’s more likely than not that we’d get there after increasing the training FLOP by ~5-6 orders of magnitude (costing $100B-$1T at current prices).

- Taking into account both software improvements and potential bottlenecks like data, I’d be inclined to update that downwards, maybe an order of magnitude or so (for a total cost of ~$10-100B). Given hardware improvements in the next 5-10 years, I would expect that to fall further to ~$1-10B.

- I think this would be more than sufficient for automating the tasks mentioned above – though rolling out changes in practice could still take years.

- (Note that some of these tasks could be automated with today’s model sizes, already, if sufficient engineering work were spent to fine-tune them properly. I’m making the claim that automation will quite easily be doable by this point, if it hasn’t already been done[17].)

- Assuming that hardware and algorithmic progress have reduced the cost of inference by at least 10x, this will cost less than 1 cent per word.

- I think this would probably not be enough to automate the majority of human economic activity or otherwise completely transform society (but I think we should be investing substantial resources in preparing for that eventuality).

- If I adopt the framework from Ajeya Cotra’s draft report – where a model with the right number of parameters can become ~human-equivalent at tasks with a certain horizon length if trained on the right number of data points of that horizon length – I’m inclined to treat these extrapolations as a guess for how many parameters will be required for ~human-equivalence. Given that Cotra’s model’s median number of parameters is close to my best guess of where near-optimal performance is achieved, the extrapolations do not contradict the model’s estimates, and constitute some evidence for the median being roughly right.

I’m grateful to Max Daniel and Hjalmar Wijk for comments on this post, and to Joseph Carlsmith, Daniel Kokotajlo, Daniel Eth, Carolyn Ashurst and Jacob Lagerros for comments on earlier versions.

Appendix

In this appendix, I more thoroughly describe why we can’t fit plots to the size of models directly, why I average over categories rather than over all benchmarks, and why I chose the scaling laws I did. Feel free to skip to whatever section you’re most interested in.

Why not plot against size?

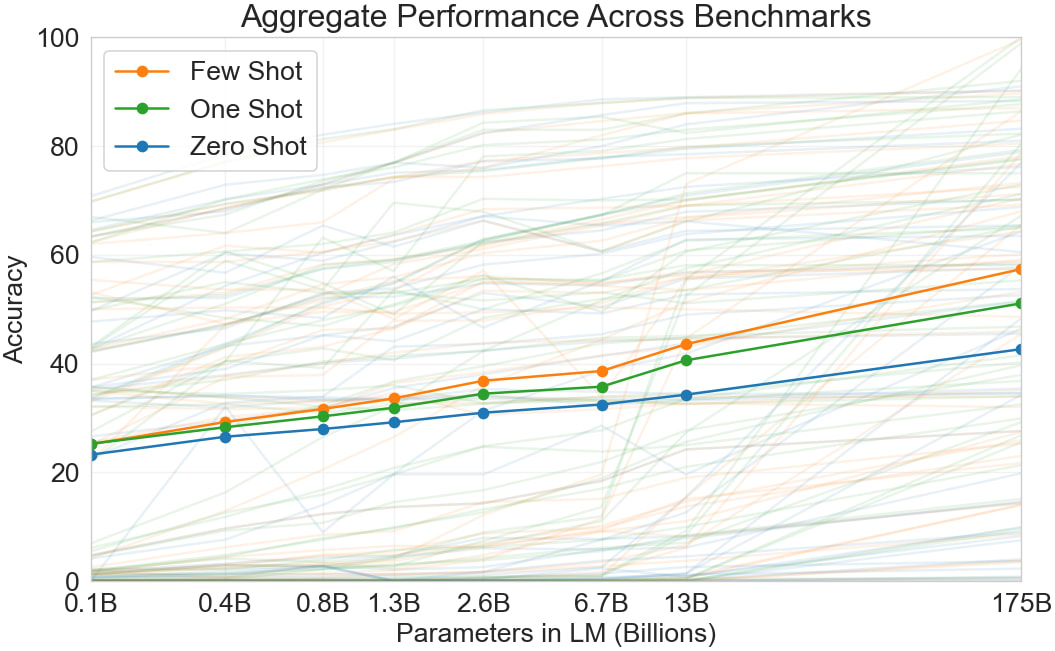

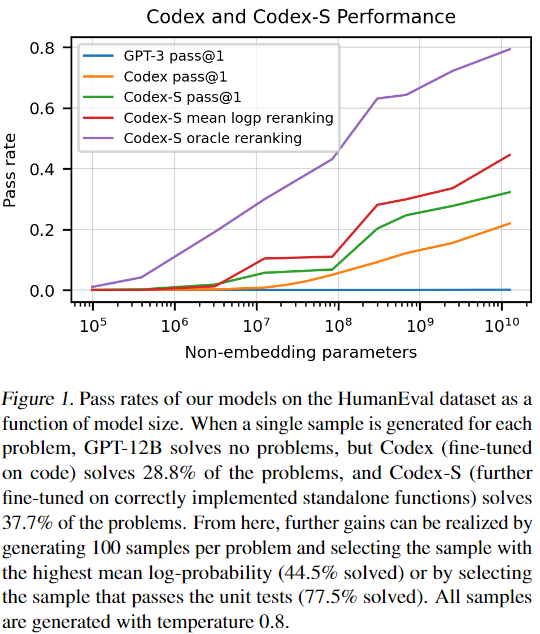

Brown et al. train models of 8 different sizes on 300 billion tokens each, and report their performance on a number of benchmarks in Figure 1.3:

Each faint line depicts the accuracy on a certain benchmark as a function of model size. The thick lines depict the average accuracy across all benchmarks, for few-shot, one-shot, and zero-shot evaluation respectively.

However, when extrapolating the performance, what we care about is the best performance (measured by the validation loss) that we can get for a given amount of compute, if we choose model size and number of data points optimally. For this, it’s a bad idea to fit performance to the model size (as in the graph above), because all models were trained on 300B tokens. For the small models, this is an inefficiently large amount of data, which means that they’re disproportionately good compared to the largest model, which only barely receives the optimal amount of data. Thus, naively extrapolating results based on model size would underestimate how much larger model sizes improve performance when optimally trained. If we fit a linear trend, it would underestimate the slope and overestimate the intercept.

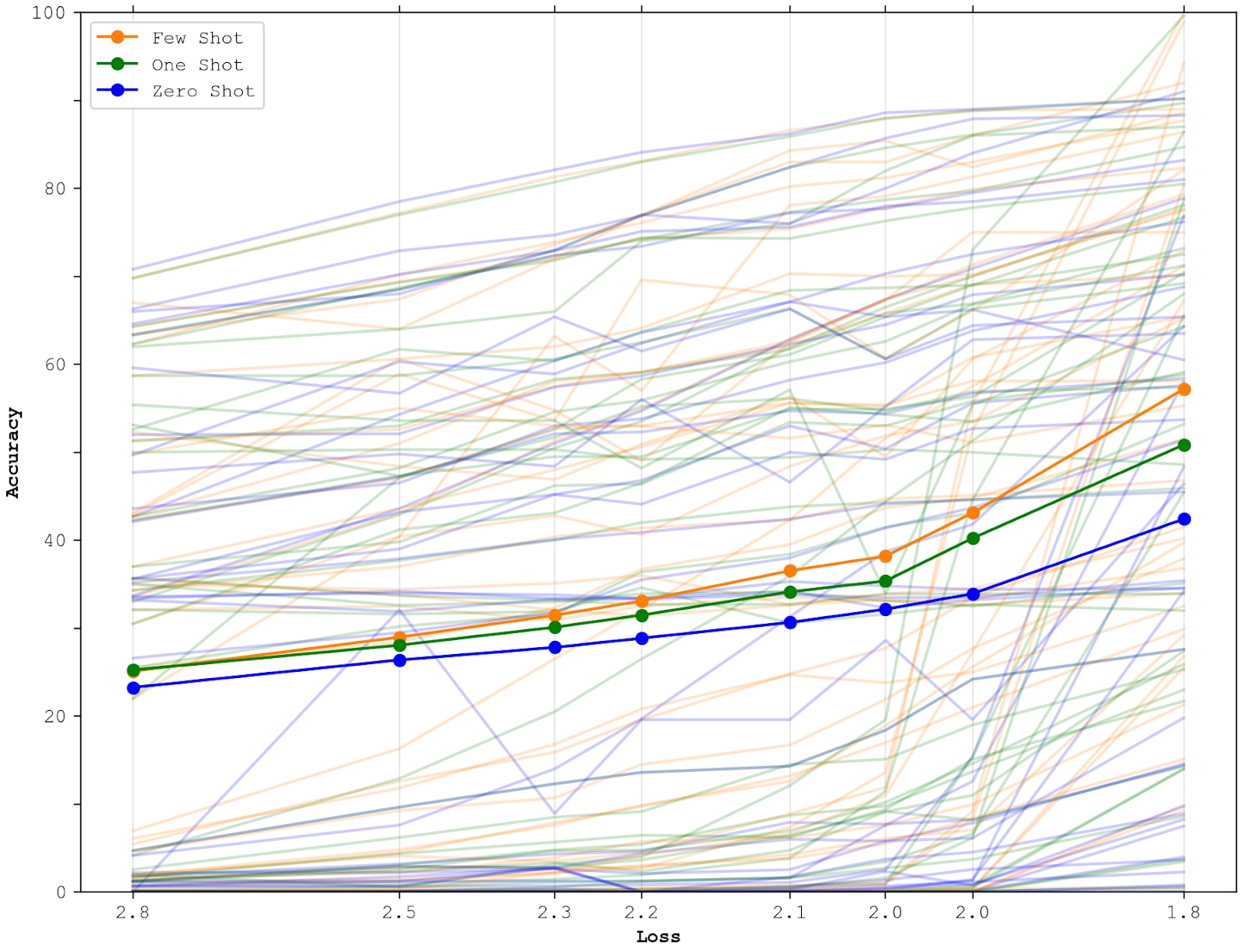

Why plot against loss?

To get around the problems with plotting against size, I fit a trend for how benchmark performance depends on the cross-entropy loss that the models get when predicting the next token on the validation set (which can be read off from Figure 4.1 in Brown et al.). I then use scaling laws to extrapolate how expensive it will be to get lower loss (and by extension better benchmark performance).

The crucial assumption that this procedure makes is that – in the context of training GPT-like transformers of various sizes on various amounts of data – text-prediction cross-entropy loss is a good proxy for downstream task performance. In particular, my procedure would fail if small models trained on large amounts of data were systematically better or worse at downstream tasks than large models trained on small amounts of data, even if both models were exactly as good at text prediction. I’m quite happy to make this assumption, because it does seem like lower loss on text prediction is an excellent predictor of downstream task performance, and small deviations on single benchmarks hopefully average out.

Note that I’m not assuming anything else about the relationship between task performance and loss. For example, I am not assuming that improvements will be equally fast on all tasks.

In all graphs in this post, I fit trends to the logarithm of the loss, in particular. This is because the loss is related as a power-law to many other interesting quantities, like parameters, data, and compute (see the next section); which means that the logarithm of the loss has a linear relationship with the logarithm of those quantities. In particular, this means that having the logarithm of the loss on the x-axis directly corresponds to having logarithms of these other quantities on the x-axis, via simple linear adjustments. It seems very natural to fit benchmark performance against the logarithm of e.g. compute, which is why I prefer this to fitting it to the loss linearly.
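To make the log-linear point concrete, here is a minimal Python sketch. The function name and the power-law constants (`c_crit`, `alpha`) are made up for illustration and are not the fitted values from Kaplan et al.; the sketch only shows that a power law in compute becomes a straight line once both axes are logarithmic.

```python
import math


def loss_from_compute(compute, c_crit=1.0, alpha=0.05):
    """Hypothetical power law L(C) = (c_crit / C) ** alpha (illustrative constants)."""
    return (c_crit / compute) ** alpha


# Compute budgets spaced one order of magnitude apart.
computes = [10.0 ** k for k in range(1, 6)]
log_compute = [math.log10(c) for c in computes]
log_loss = [math.log10(loss_from_compute(c)) for c in computes]

# Slope between consecutive points in log-log space.
slopes = [
    (log_loss[i + 1] - log_loss[i]) / (log_compute[i + 1] - log_compute[i])
    for i in range(len(computes) - 1)
]
print(slopes)  # every slope is (up to rounding) equal to -alpha
```

Because the slope is constant, moving a fixed distance along a log-loss axis corresponds to a fixed multiplicative change in compute, which is why the two x-axes differ only by a linear adjustment.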

One potential issue with fitting trends to the loss is that the loss will eventually have to stop at some non-zero value, since there is some irreducible entropy in natural language. However, if the log-loss trends start bending soon, I suspect that downstream task performance will not stop improving; instead, I suspect that the benchmark trends would carry on as a function of training compute roughly as before, eventually slowly converging to their own irreducible entropy. Another way of saying this is that – insofar as there’s a relationship between text prediction loss and benchmark performance – I expect that relationship to be best captured as a steady trend between reducible prediction loss and reducible benchmark performance; and I expect both to be fairly steady as a function of training compute (as showcased in OpenAI’s Scaling Laws for Autoregressive Generative Modelling).

Here’s the aggregation that OpenAI does, but with my adjusted x-axis:

This graph looks similar to OpenAI’s, although the acceleration at the end is more pronounced. However, we can do better by separating into different categories, and taking the average across categories. The following graph only depicts few-shot performance.

As you can see, the resulting graph has a much less sharp acceleration at the end. The reason for this is that the arithmetic task category has more benchmarks than any other category (10 benchmarks vs a mean of ~4.5 across all categories), which means that its sudden acceleration impacts the average disproportionately. I think weighting each category equally is a better solution, though it’s hardly ideal. For example, it counts SuperGLUE and SAT analogies equally, despite the former being an 8-benchmark standard test suite and the latter being a single unusual benchmark.
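The difference between the two ways of averaging can be sketched in a few lines of Python. The category names and scores below are invented purely to show the effect (they are not numbers from Brown et al.), and the function names are hypothetical.

```python
from statistics import mean


def benchmark_mean(scores_by_category):
    """Average over every benchmark, so large categories dominate."""
    all_scores = [s for scores in scores_by_category.values() for s in scores]
    return mean(all_scores)


def category_mean(scores_by_category):
    """Average within each category first, then across categories."""
    return mean([mean(scores) for scores in scores_by_category.values()])


# Made-up accuracies: one large category that has suddenly become easy.
example = {
    "arithmetic": [0.9] * 10,
    "reading": [0.5, 0.5],
    "translation": [0.3, 0.3, 0.3],
}

print(benchmark_mean(example))  # pulled up by the 10 arithmetic benchmarks
print(category_mean(example))   # each category counts once
```

In this toy example the per-benchmark average is noticeably higher than the per-category average, which is the same kind of distortion that a 10-benchmark category could introduce.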

In the main post above, I use a normalised version of this last graph. My normalization is quite rough. The main effect is just to adjust the minimum performance of benchmarks with multi-choice tasks, but when human performance is reported, I assume that maximum performance is ~5% above. For translation BLEU, I couldn’t find good data, so I somewhat arbitrarily guessed 55% as maximum possible performance.
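One way such a rough normalisation could look is sketched below. This is an assumption about the general shape (a linear rescaling between a floor and a ceiling), not the exact procedure used for the graphs; the function names, the 4-choice task and the accuracy figures are all hypothetical.

```python
def chance_floor(n_choices):
    """Assumed minimum performance on a multiple-choice task: random guessing."""
    return 1.0 / n_choices


def ceiling_from_human(human_accuracy, margin=0.05):
    """Assumed maximum performance: ~5% above reported human accuracy, capped at 100%."""
    return min(1.0, human_accuracy + margin)


def normalise(accuracy, floor, ceiling):
    """Linearly rescale accuracy so that floor maps to 0 and ceiling maps to 1."""
    return (accuracy - floor) / (ceiling - floor)


# Hypothetical 4-choice benchmark: model at 70%, humans at 90%.
floor = chance_floor(4)               # 0.25
ceiling = ceiling_from_human(0.90)    # roughly 0.95
print(normalise(0.70, floor, ceiling))
```

For a benchmark like translation BLEU, the same `normalise` call would be used with a floor of 0 and the guessed ceiling of 0.55.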

What scaling laws to use?

What scaling law best predicts how much compute we’ll need to reach a given loss? Two plausible candidates from Kaplan et al. are:

- The compute-optimal scaling law L(C), which assumes that we have unlimited data to train on. For any amount of compute C, this scaling law gives us the minimum achievable loss L, if we choose model size and training time optimally.

- The data-constrained scaling law L(N,D), which for a given model size N tells us the loss L we get if we train until convergence on D tokens.

Intuitively, we would expect the first law to be better. However, it is highly unclear whether it will hold for larger model sizes, because if we extrapolate both of these laws forward, we soon encounter a contradiction (initially described in section 6.3 of Kaplan et al.):

To train until convergence on D tokens, we need to train on each token at least once. Training a transformer of size N on D tokens requires ~6ND FLOP. Thus, if we choose N and D to minimise the product 6ND for a given loss L(N,D), we can get a lower bound on the FLOP necessary to achieve that loss.

However, this lower bound eventually predicts that we will need more compute than the compute-optimal scaling law L(C) does, mostly because L(C) predicts that you can scale the number of tokens you train on much slower than L(N,D) does. The point where these curves first coincide is around ~1 trillion parameters, and it’s marked as the crossover point in my section Comparisons and limits. The best hypothesis for why this happens is that, as you scale model size, the model gets better at updating on each datapoint, and needs fewer epochs to converge. L(C) picks up on this trend, while L(N,D) doesn’t, since it always trains until convergence. However, this trend cannot continue forever, since the model cannot converge in less than one epoch. Thus, if this hypothesis is correct, scaling will eventually be best predicted by L(N,D), running a single epoch with 6ND FLOP. For a more thorough explanation of this, see section 6 of OpenAI’s Scaling Laws for Autoregressive Generative Modelling, or this summary [LW · GW] by nostalgebraist.

It’s possible that this relationship will keep underestimating compute-requirements, if it takes surprisingly long to reach the single epoch steady state. However, it seems unlikely to underestimate compute requirements by more than 6x, since that’s the ratio between the compute that GPT-3 was trained on and the predicted minimum compute necessary to reach GPT-3’s loss.

(Of course, it’s also possible that something unpredictable will happen at the place where these novel, hypothesized extrapolations start contradicting each other.)
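As a quick sanity check of the ~6ND rule of thumb used in this section, here is a minimal Python sketch applied to GPT-3. It assumes GPT-3's 175 billion parameters and the 300B training tokens mentioned earlier; the function name is hypothetical, and the result is only an approximation of the true training compute.

```python
def training_flop(n_params, n_tokens):
    """Approximate training compute for a transformer: ~6 FLOP per parameter per token."""
    return 6 * n_params * n_tokens


gpt3_params = 175e9   # GPT-3's parameter count
gpt3_tokens = 300e9   # tokens each model in Brown et al. was trained on

gpt3_flop = training_flop(gpt3_params, gpt3_tokens)
print(f"{gpt3_flop:.2e} FLOP")
```

The same function gives the single-epoch lower bound for any other pair (N, D), which is how the bound on compute for a given loss is constructed.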

Adapting the scaling law

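The scaling law I use has the form

$$L(N, D) = \left[\left(\frac{N_C}{N}\right)^{\alpha_N/\alpha_D} + \frac{D_C}{D}\right]^{\alpha_D}.$$

To simultaneously minimise the loss L and the product 6ND, the data should be scaled as:

$$D = \frac{\alpha_D}{\alpha_N}\, D_C \left(\frac{N}{N_C}\right)^{\alpha_N/\alpha_D}$$

Plugging this into the original formula, I get the loss as a function of N and of D:

$$L(N) = \left(1 + \frac{\alpha_N}{\alpha_D}\right)^{\alpha_D} \left(\frac{N_C}{N}\right)^{\alpha_N}, \qquad L(D) = \left[\left(1 + \frac{\alpha_D}{\alpha_N}\right) \frac{D_C}{D}\right]^{\alpha_D}$$

By taking the inverse of these, I get the appropriate N and D from the loss:

$$N(L) = N_C \left(1 + \frac{\alpha_N}{\alpha_D}\right)^{\alpha_D/\alpha_N} L^{-1/\alpha_N}, \qquad D(L) = D_C \left(1 + \frac{\alpha_D}{\alpha_N}\right) L^{-1/\alpha_D}$$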

As noted above, the FLOP necessary for training until convergence is predicted to eventually be 6N(L)D(L).
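The chain from a target loss to a FLOP estimate can be sketched in Python as follows. This is a sketch under stated assumptions, not a definitive implementation: it assumes the L(N,D) form from Kaplan et al., L = [(N_C/N)^(α_N/α_D) + D_C/D]^α_D, and a compute-minimising data schedule derived from it. The constants are the values recalled from the L(N,D) fit in Kaplan et al. and should be checked against the paper; all function names are hypothetical.

```python
# Assumed constants from the L(N, D) fit in Kaplan et al. (verify before relying on them).
ALPHA_N = 0.076
ALPHA_D = 0.103
N_C = 6.4e13
D_C = 1.8e13


def loss(n_params, n_tokens):
    """Data-constrained scaling law L(N, D)."""
    return ((N_C / n_params) ** (ALPHA_N / ALPHA_D) + D_C / n_tokens) ** ALPHA_D


def optimal_tokens(n_params):
    """Tokens D that minimise N * D at fixed loss, for a given model size N."""
    return (ALPHA_D / ALPHA_N) * D_C * (n_params / N_C) ** (ALPHA_N / ALPHA_D)


def params_for_loss(target_loss):
    """Model size N(L) needed to reach target_loss along the optimal schedule."""
    return N_C * (1 + ALPHA_N / ALPHA_D) ** (ALPHA_D / ALPHA_N) * target_loss ** (-1 / ALPHA_N)


def tokens_for_loss(target_loss):
    """Training tokens D(L) needed to reach target_loss along the optimal schedule."""
    return D_C * (1 + ALPHA_D / ALPHA_N) * target_loss ** (-1 / ALPHA_D)


def flop_for_loss(target_loss):
    """Single-epoch compute estimate 6 * N(L) * D(L)."""
    return 6 * params_for_loss(target_loss) * tokens_for_loss(target_loss)


target = 1.8  # an arbitrary example loss in nats per token
n, d = params_for_loss(target), tokens_for_loss(target)
print(f"N = {n:.2e}, D = {d:.2e}, FLOP = {flop_for_loss(target):.2e}")
```

Under these constants, compute grows as N^(1 + α_N/α_D) ≈ N^1.74 along the optimal schedule, so a 10x increase in compute corresponds to roughly a 10^(1/1.74) increase in model size.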

I use the values of $N_C$, $D_C$, $\alpha_N$, and $\alpha_D$ from page 11 of Kaplan et al. There are also some values in Figure 1, derived in a slightly different way. If I use these instead, my final FLOP estimates are about 2-5x larger, which can be treated as a lower bound on the uncertainty in these extrapolations.

(As an aside: If you’re familiar with the details of Ajeya Cotra’s draft report on AI timelines [LW · GW], this extrapolation corresponds to her target accuracy law with q ≈ 0.47.)

Notes

TriviaQA: The Dodecanese Campaign of WWII that was an attempt by the Allied forces to capture islands in the Aegean Sea was the inspiration for which acclaimed 1961 commando film?

Answer: The Guns of Navarone ↩︎

LAMBADA: “Yes, I thought I was going to lose the baby.” “I was scared too,” he stated, sincerity flooding his eyes. “You were?” “Yes, of course. Why do you even ask?” “This baby wasn’t exactly planned for.” “Do you honestly think that I would want you to have a ____ ?”

Answer: miscarriage ↩︎

HellaSwag: A woman is outside with a bucket and a dog. The dog is running around trying to avoid a bath. She…

A. rinses the bucket off with soap and blow dry the dog’s head.

B. uses a hose to keep it from getting soapy.

C. gets the dog wet, then it runs away again.

D. gets into a bath tub with the dog. ↩︎

Winogrande: Robert woke up at 9:00am while Samuel woke up at 6:00am, so he had less time to get ready for school.

Answer: Robert ↩︎

DROP: That year, his Untitled (1981), a painting of a haloed, black-headed man with a bright red skeletal body, depicted amit the artists signature scrawls, was sold by Robert Lehrman for $16.3 million, well above its $12 million high estimate.

How many more dollars was the Untitled (1981) painting sold for than the 12 million dollar estimation?

Answer: 4300000 ↩︎

PIQA: How do I find something I lost on the carpet?

A. Put a solid seal on the end of your vacuum and turn it on.

B. Put a hair net on the end of your vacuum and turn it on. ↩︎

ANLI: A melee weapon is any weapon used in direct hand-to-hand combat; by contrast with ranged weapons which act at a distance. The term “melee” originates in the 1640s from the French word “mĕlée”, which refers to hand-to-hand combat, a close quarters battle, a brawl, a confused fight, etc. Melee weapons can be broadly divided into three categories.

Hypothesis: Melee weapons are good for ranged and hand-to-hand combat.

Answer: Contradicted ↩︎

Although a complicating factor is that humans can process a lot more visual data than verbal data per second, so image-recognition should plausibly be counted as having a longer horizon length than GPT-3. I’m not sure how to unify these types of differences with the subjective second unit in Cotra’s report. ↩︎

Note that Cotra’s model is ultimately trying to estimate 2020 training computation requirements. By definition, this requires that researchers mostly rely on 2020 algorithmic knowledge, but allows for 2-5 years to design the best solution. ↩︎

Based on the appendix to Ajeya Cotra’s draft report [LW · GW], and adjusted upwards 2x, because I think transformers have quite good utilization ↩︎

OpenAI started out with $1B, and their biggest investment since then was Microsoft giving them another $1B. ↩︎

See Ajeya Cotra’s appendix and Asya Bergal’s Recent trends in GPU price per FLOPS. Note that, if we measure trends in active prices (rather than release prices) over the last 9 years, we would expect a 10x cost reduction to take 17 years instead. ↩︎

From Asya Bergal’s Recent trends in GPU price per FLOPS. ↩︎

Since size is only increased by $10^{1/1.74}$, while costs are reduced by 10, yielding $10/10^{1/1.74} \approx 2.66$. ↩︎

The model first estimates the FLOP/s of a human brain; then adds an order of magnitude because NNs will plausibly be less efficient, and finally transforms FLOP/s to parameters via the current ratio between the FLOP that a neural network uses to analyze ~1 second of data, and the parameters of said neural network. ↩︎

Slightly more generally: I’m pointing out one particular path to automating these tasks, but presumably, we will in fact automate these tasks using the cheapest path of all those available to us. Thus, this is necessarily a (very shaky) estimate of an upper bound. ↩︎

31 comments

Comments sorted by top scores.

comment by gwern · 2020-12-20T00:10:21.967Z · LW(p) · GW(p)

Finally, the scramble task is about shuffling around letters in the right way, and arithmetic is about adding, subtracting, dividing, and multiplying numbers. The main interesting thing about these tasks is that performance doesn’t improve at all in the beginning, and then starts improving very fast. This is some evidence that we might expect non-linear improvements on particular tasks, though I mostly interpret it as these tasks being quite narrow, such that when a model starts getting the trick, it’s quite easy to systematically get right.

To beat my usual drum: I think the Arithmetic/Scramble task curves are just due to BPEs. The 'trick' here is not that scrambling or arithmetic are actually all that difficult, but that it needs to memorize enough of the encrypted number/word representations to finally crack the BPE code and then once it's done that, the task itself is straightforward. The cause for this phase transition or 'breakthrough', so to speak, is seeing through the scrambled BPE representations. I predict that using tricks like rewriting numbers to individual digits or BPE-dropout to expose all possible tokenizations, or better yet, character-level representations, would show much smoother learning curves and that much smaller models would achieve the GPT-3-175b performance. (Does this make arithmetic more or less interesting? Depends on how many tasks you think have 'tricks' or pons asinorums. It's worth noting that the existence of plateaus and breakthroughs is widely noted in the development of human children. They may seem to grow steadily each day, but this is the net over a lot of stacked sigmoids and plateaus/breakthroughs.)

comment by julianjm · 2020-12-20T07:37:38.978Z · LW(p) · GW(p)

Hi — new here. I'm an NLP researcher, and for background, I would guess I fall on the skeptical side of the scaling hypothesis by LW standards. I was pointed to this by abergal. Here is some feedback:

1. On the question of economic value:

AFAIK, there are many factors other than raw performance on a benchmark (relating to interpretability, accountability/explainability, and integration with surrounding software) which may dominate the question of economic viability of AI systems, at least in the sub-99.99% accuracy regime (depending on application domain). Examples of what I mean:

1a. Even when humans are used to perform a task, and even when they perform it very effectively, they are often required to participate in rule-making, provide rule-consistent rationales for their decisions, and stand accountable (somehow) for their decisions. A great explicit example of this is the job of judges in the US justice system, but I think this is true to a lesser extent in most jobs. This is a more complex task than mapping input->output and it's not clear to me how approximating the i/o mapping relates to the ability of machines to replace humans in general (though surely it will be true for some tasks). Also, when automating, all accountability for decisions is concentrated on the creators and operators of the machine; since the liability for mistakes is higher, I think the reliability standards for adoption may also be higher.

1b. Integration with traditional software presents difficulties which also mean a very high bar for AI-based automation. Traditional software relies on reasoning with abstractions; failure of abstractions (i.e., the real-world semantics of an API contract) can have nonlinear effects on the outcome in a software system. So the ML component has to be accurate enough for its API to be relatively iron-clad. An example of how this actually looks in practice might be Andrej Karpathy's group in Tesla, based on what he said in this talk

1c. For applications like task-oriented dialog, it's not enough to just output the right kind of text to a user; the right API actions must be taken and their output used to drive the conversation. Integration with software is hard, and advances in representations that facilitate this (for example, Semantic Machines's use of dataflow graphs) already may be a bottleneck for automation of these kinds of tasks. Once these bottlenecks are opened up, it's possible that the machine learning problems at issue may become much easier than the fully-general problem, as more of the business logic can be offloaded to traditional software. A parody-level example of this issue is Google Duplex, a sophisticated system that mimics a human making a reservation over the phone, which could be rendered unnecessary by adoption of reasonable software standards for making reservations online. Though perhaps that's also evidence that even our apparent bottlenecks are pretty vulnerable to brute-forcing with ML... (though I have not heard reports of how well it actually works in practice)

Anyway, the upshot of these points is that I suspect the regime of economic viability for lots of "general" AI tasks is either going to be in the very high regime near convergence (where your extrapolations might break down), or we're already near or past it and it's actually more-or-less constrained by other things (like social solutions for accountability issues, non-ML progress in software, or efficiency of current markets at producing software solutions). Another way of saying this is that I take benchmark performance trends with a big grain of salt as an indicator of automation potential.

2. On "how impressive are the benchmarks":

It's not obvious to me that being able to solve many tasks quite well is clearly of more general value than solving a few tasks much better, at least in this case. While it seems to be an indicator of generality, in the particular case of GPT-3's few-shot learning setting, the output is controlled by the language modeling objective. This means that even though the model may not catch on to the same statistical regularities as task-specific trained models do from their datasets, it essentially must rely on statistical regularities that are in common between the language modeling supervision and the downstream task. It stands to reason that this may impose a lower ceiling on model performance than human performance, or that in the task-specific supervised case.

I don't know about Ajeya's report or the long/short horizon distinction, so I'm not sure if what I'm about to say is right, but: it seems to me that in your analysis relating to that report, GPT-3's status as a general-purpose "few-shot learner" is taken for granted. There is the alternative interpretation that it is simply a general-purpose language model, where even a Bayes-optimal language model may indeed be far from Bayes-optimal on a task (again assuming GPT-3's few-shot learning paradigm). (Also mind that the Bayes-optimal language model will vary based on its training data.) So it's not clear to me whether arguments about general-purpose learners would apply to GPT-3 as a few-shot learner, especially in the regime of near-human-level performance which is at issue here. They may apply better in the fine-tuned setting where the supervision more closely matches the task, but then we run again into the potential problem of insufficiently general solutions. And in that setting, SuperGLUE is already basically at human-level performance with the T5 model, but T5 fine-tuning doesn't seem to quite be at the point of "transformative AI", at least any more than BERT or the rest of the latest wave of progress. Rather, the limits of the benchmark have probably been more-or-less hit.

3. On the discussion of scaling up & limitations:

Especially as far as downstream task performance is concerned, data filtering could potentially play a role here. I would expect scaling laws to obtain w.r.t. dataset size when the distribution is similar, but there have been cases where filtering (thereby reducing dataset size) has been essential to learning a better model, at least in machine translation (in fact there was a whole shared task on it — this deals with extremely noisy corpora, but I'm pretty sure I've seen work that even showed gains from filtering what was thought to be "clean" training sets; unfortunately, I can't find it again...). It might not be as much of an issue here, because of the sheer model size and context length (so, maybe the noisy examples could be cordoned off in their own part of feature space and don't do anything worse than wasting time), but I'm not aware of any work looking at the data cleaning aspect. However there is work looking at domain adaptation in LM pretraining. So anyway, the model may just "figure it out" in the course of learning, but if not, especially if there were a general scheme to weigh and seek out input examples based on perceived quality towards learning a good/causally accurate representation of language (as in, e.g., Invariant Risk Minimization — though more progress is needed in this area) then the laws may end up looking different. At the same time the laws may also end up looking different just due to using different corpora; for example, Common Crawl afaik has all kinds of garbage in it. In general, collecting bigger and bigger corpora might mean scraping the bottom of the barrel and hitting a barrier due to quality issues. Alternatively, going massively multilingual (or multimodal) might alleviate that issue enough to hit some critical regime of high performance, depending on the level of cross-lingual and cross-modal generalization at play.

Replies from: julianjm, Lanrian, Lanrian

↑ comment by julianjm · 2020-12-20T22:09:48.690Z · LW(p) · GW(p)

Oh yeah, one more thing which I think actually might be the most important point. On a lot of these benchmarks — at the very least, on SuperGLUE — "human-level performance" is a much weaker requirement than "human equivalence." Human performance isn't necessarily an indicator of irreducible entropy in the underlying task. To a large extent, it just reflects ambiguity or coarseness in the dataset specification. A big part of this is the artificial setting of the data annotation, which is unfortunately kind of necessary in a lot of cases when the goal is characterizing abstract language understanding. On the long tail of examples that require more careful reasoning, in the absence of an underlying business reason or extra context to guide people's judgments, they end up interpreting or construing the inputs differently, or applying the annotation guidelines differently, and disagreeing with each other on the output. Human-equivalence would mean sensitivity to all of the issues that lead a human to decide on one interpretation over another, but in the IID performance evaluation setting, these issues all basically wash out looking like noise. Then as long as the model can throw out a reasonable guess of one of the plausible labels in these cases, it will be hard to distinguish from a human. NLI/RTE are great examples of this; it is a notoriously difficult problem to specify, and recent work has shown a great deal of disagreement between annotators in this task setting (including bi-modal distributions indicative of explicit ambiguities in construal), to the point that it seems like supervised models probably have already more or less hit the noise ceiling, at least on the SNLI/MultiNLI datasets.

So for the most part when looking at these kinds of datasets — particularly data annotated in an artificial setting intended to capture something abstract and general about language — maximum performance on IID test sets is best seen not as the point of irreducible entropy (i.e., maximum performance on a task), but as a "noise ceiling" beyond which the particular dataset is incapable of distinguishing between models. See Schlangen for more on the relationship between data and task.

It's an open question how to more carefully and accurately benchmark something resembling human-equivalence, but pretty good concrete examples of how one might try to do this include Contrast Sets and adversarial evaluation. Contrast sets look at model behavior under perturbation of inputs, testing the sensitivity of the decision function to certain changes; adversarial evaluation involves explicitly searching for evaluation items on which humans agree and the model is incorrect. In theory, these sorts of evaluations will do a much better job of evaluating model robustness under distribution shift or in the course of interaction with real users; they pose what amounts to a much tighter constraint on the decision function learned by the model. Indeed, in practice models do much worse under these evaluations than traditional IID ones (at least, for many tasks). This is expected, since pretty much all models these days are trained using empirical risk minimization (i.e., the IID assumption). GPT-3's few-shot learning setting is partly interesting because it does not use the IID assumption, instead using a language modeling assumption (which lets it leverage lots of what it learned, but may indeed impose other constraints on its output).

But still, ANLI is an example of an approach to adversarial evaluation, and it is far at the bottom of your graphs in terms of GPT-3's performance. Also notice that running GPT-3 on ANLI is not technically an adversarial evaluation; the full evaluation process for ANLI would involve (human) searching for examples that GPT-3 will get wrong, evaluating on those, feeding them back in for training, etc.; even the modest uptick at the end for GPT-3 might disappear when doing a true adversarial evaluation.

So all that is to say I would also look at the relationship between human-level performance on a benchmark and human-equivalent performance on a task with a big grain of salt. Most of our datasets are really bad at assessing human-equivalence in the high-performance regime, and our models only recently got good enough for this to become a problem (and it is a problem which is now the subject of a lot of attention in the field). This is much less of an issue when you're using supervised ML for some business purpose where your training and test sets are essentially the same distribution and IID, and your labels directly manifest your business need. But it's a big problem for "general language understanding."

Replies from: Lanrian

↑ comment by Lukas Finnveden (Lanrian) · 2020-12-21T00:26:40.461Z · LW(p) · GW(p)

Thanks! I agree that if we required GPT-N to beat humans on every benchmark question that we could throw at them, then we would have a much more difficult task.

I don't think this matters much in practice, though, because humans and ML are really differently designed, so we're bound to be randomly better at some things and randomly worse at some things. By the time ML is better than humans at all things, I think they'll already be vastly better at most things. And I care more about the point when ML will first surpass humans at most things. This is most clearly true when considering all possible tasks (e.g. "when will AIs beat humans at surviving on a savannah in a band of hunter-gatherers?"), but I think it's also true when considering questions of varying difficulty in a fairly narrow benchmark. Looking at the linked papers, I think contrastive learning seems like a fair challenge; but I suspect that enough rounds of ANLI could yield questions that would be very rare in a normal setting [1].

To make that a little bit more precise, I want to answer the question "When will transformative AI be created?". Exactly what group of AI technologies would or wouldn't be transformative is an open question, but I think one good candidate is AI that can do the vast majority of economically important tasks cheaper than a human. If I then adopt the horizon-length frame (which I find plausible but not clearly correct), the relevant question for GPT-N becomes "When will GPT-N be able to perform (for less cost than a human) the vast majority of all economically relevant sub-tasks with a 1-token horizon length?"

This is an annoyingly vague question, for sure. However, I currently suspect it's more fruitful to think about this from the perspective of "How high reliability do we need for typical jobs? How expensive would it be to make GPT-N that reliable?" than to think about this from the perspective of "When will we be unable to generate questions that GPT-N fails at?"

Another lens on this is to look at tasks that have metrics other than how well AI can imitate humans. Computers beat us at chess in the 90s, but I think humans are still better in some situations, since human-AI teams do better than AIs alone. If we had evaluated chess engines on the metric of beating humans in every situation, we would have overestimated the point at which AIs beat us at chess by at least 20 years.

(Though in the case of GPT-N, this analogy is complicated by the fact that GPT-3 doesn't have any training signal other than imitating humans.)

Though being concerned about safety, I would be delighted if people became very serious about adversarial testing. ↩︎

↑ comment by julianjm · 2020-12-21T04:43:17.323Z · LW(p) · GW(p)

I guess my main concern here is — besides everything I wrote in my reply to you below — basically that reliability of GPT-N on simple, multiclass classification tasks lacking broader context may not be representative of its reliability in real-world automation settings. If we're to take SuperGLUE as representative, well.. it's already basically solved.

One of the problems here is that when you have the noise ceiling set so low, like it is in SuperGLUE, reaching human performance does not mean the model is reliable. It means the humans aren't. It means you wouldn't even trust a human to do this task if you really cared about the result. Coming up with tasks where humans can be reliable is actually quite difficult! And making humans reliable in the real world usually depends on them having an understanding of the rules they are to follow and the business stakes involved in their decisions — much broader context that is very difficult to distill into artificial annotation tasks.

So when it comes to reliable automation, it's not clear to me that just looking at human performance on difficult benchmarks is a reasonable indicator. You'd want to look at reliability on tasks with clear economic viability, where the threshold of viability is clear. But the process of faithfully distilling economically viable tasks into benchmarks is a huge part of the difficulty in automation in the first place. And I have a feeling that where you can do this successfully, you might find that the task is either already subject to automation, or doesn't necessarily require huge advances in ML in order to become viable.

↑ comment by Lukas Finnveden (Lanrian) · 2020-12-20T23:15:41.101Z · LW(p) · GW(p)

Thank you, this is very useful! To start out with responding to 1:

1a. Even when humans are used to perform a task, and even when they perform it very effectively, they are often required to participate in rule-making, provide rule-consistent rationales for their decisions, and stand accountable (somehow) for their decisions

I agree this is a thing for judges and other high-level decisions, but I'm not sure how important it is for other tasks. We have automated a lot of things in the past couple hundred years with unaccountable machines and unaccountable software, and the main difference with ML seems to be that it's less interpretable. Insofar as humans like having reasons for failures, I'm willing to accept this as one reason that reliability standards could be a bit higher for ML, but I doubt it would be drastically higher. I'd love a real example (outside of criminal justice) where this is a bottleneck. I'd guess that some countries will have harsh regulations for self-driving cars, but that does have a real risk of killing people, so it's still tougher than most applications.

1b. Integration with traditional software presents difficulties which also mean a very high bar for AI-based automation. (...) example of how this actually looks in practice might be Andrej Karpathy's group in Tesla, based on what he said in this talk.

I liked the talk! I take it as evidence that it's really hard to get >99.99% accuracy, which is a big problem when your neural network is piloting a metric ton of metal at high speeds in public spaces. I'm not sure how important reliability is in other domains, though. Your point "failure of abstractions can have nonlinear effects on the outcome in a software system" is convincing for situations when ML is deeply connected with other applications. I bet there's a lot of cool stuff that ML could do there, so the last 0.01% accuracy could definitely be pretty important. An error rate of 0.1%-1% seems fine for a lot of other tasks, though, including all examples in Economically useful tasks.

- For ordering expensive stuff, you want high reliability. But for ordering cheap stuff, 0.1%-1% error rate should be ok? That corresponds to getting the wrong order once a year if you order something every day.

- 0.1%-1% error rate also seems fine for personal assistant work, especially since you can just personally double-check any important emails before they're sent, or schedule any especially important meeting yourself.

- Same thing for research assistant work (which – looking at the tasks – actually seems useful to a lot of non-researchers too). Finding 99% of all relevant papers is great; identifying 99% of trivial errors in your code is great; writing routine code that's correct 99% of the time is great (you can just read through it or test it); reading summaries that have an error 1% of the time is a bit annoying, but still very useful.
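As a quick check of the arithmetic in the first bullet, here is a minimal sketch. It assumes one order per day and independent errors; both are simplifications for illustration, and the function names are made up for this example.

```python
def expected_errors_per_year(error_rate, actions_per_day=1, days=365):
    """Expected number of wrong outcomes in a year (assumes independent errors)."""
    return error_rate * actions_per_day * days


def prob_at_least_one_error(error_rate, actions_per_day=1, days=365):
    """Probability of seeing one or more errors in a year (assumes independent errors)."""
    n = actions_per_day * days
    return 1 - (1 - error_rate) ** n


if __name__ == "__main__":
    for rate in (0.001, 0.01):
        print(
            f"error rate {rate:.1%}: "
            f"{expected_errors_per_year(rate):.2f} expected errors/year, "
            f"P(at least one) = {prob_at_least_one_error(rate):.2f}"
        )
```

Under these assumptions, a 0.1% error rate gives roughly one wrong order every three years and a 1% error rate gives three or four per year, so "once a year" falls inside the quoted range (at an error rate of about 0.27%).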

(Note that a lot of existing services have less than 99% reliability, e.g. the box on top of google search, google translate, spell check, etc.)

Also, many benchmarks are already filtered for being difficult and ambiguous, so I expect 90% performance on most of them to correspond to at least 99% performance in ordinary interactions. I'd be curious if you (and other people) agree with these intuitions?

Re API actions: Hm, this seems a lot easier than natural language to me. Even if finetuning a model to interact with APIs is an annoying engineering task, it seems like it should be doable in less than a year once we have a system that can handle most of the ambiguities of natural language (and benchmarks directly test the ability to systematically respond in a very specific way to a vague input). As with google duplex, the difficulty of interacting with APIs is upper-bounded by the difficulty of interacting with human interfaces (though to be fair, interactions with humans can be more forgiving than interfaces-for-humans).

↑ comment by julianjm · 2020-12-21T04:05:05.049Z · LW(p) · GW(p)

On 1a:

Insofar as humans like having reasons for failures, I'm willing to accept this as one reason that reliability standards could be a bit higher for ML, but I doubt it would be drastically higher. I'd love a real example (outside of criminal justice) where this is a bottleneck.

Take for example writing news / journalistic articles. Indistinguishability from human-written articles is used as evidence for GPT's abilities. The abilities are impressive here, but the task at hand for the original writer is not to write an article that looks human, but one that reports the news. This means deciding what is newsworthy, aggregating evidence, contacting sources, and summarizing and reporting the information accurately. In addition to finding and summarizing information (which can be reasonably thought of as a mapping from input -> output), there is also the interactive process of interfacing with sources: deciding who to reach out to, what to ask them, which sources to trust on what, and how to report and contextualize what they tell you in an article (forgetting of course the complexity of goal-oriented dialogue when interviewing them). This process involves a great many rules: mutual understanding with sources about how their information will be represented, an understanding of when to disclose sources and when not to, careful epistemics when it comes to drawing conclusions on the basis of the evidence they provide and representing the point of view of the news outlet, etc.; it also involves building relationships with sources and with other news outlets, conforming to copyright standards, etc.; and the news outlet has a stake in (and accountability for) all of these elements of the process, which is incumbent on the journalist. Perhaps you could try and record all elements of this process and treat it all as training data, but the task here is so multimodal, stateful, and long-horizon that it's really unclear (at least to me) how to reduce it to an I/O format amenable to ML that doesn't essentially require replicating the I/O interface of a whole human. Reducing it to an ML problem seems itself like a big research problem (and one having more to do with knowledge representation and traditional software than ML).

If you put aside these more complex features of the problem, the task reduces to basically paraphrasing and regurgitating the information already available in other printed sources. And for all intents and purposes, that's already easily automated (and pretty much is in practice), whether by direct republication of AP reports or plagiarism of articles onto clickbait websites. Perhaps improved automation in retrieval, articulation, and summarization of information can make the journalist more efficient and productive, but what percentage of their work will actually be automated? All of the really important stuff is the stuff where it's not obvious how to automate. So it's very hard for me to see how the journalist would be replaced, or how their role would be transformed, without a lot more progress on things other than function approximation.

I think similar concerns apply to management, accounting, auditing, engineering, programming, social services, education, etc. It doesn't seem to me that we're near the end of any of these fields being eaten even by traditional software. And I can imagine many ways in which ML can serve as a productivity booster in these fields, but concerns like the ones I highlighted for journalism make it harder for me to see how AI of the sort that can sweep ML benchmarks can play a singular role in automation without being deployed alongside a slate of other advances.

On 1b and economically useful tasks: you mention customer service, personal assistant, and research assistant work.

For ordering expensive stuff, you want high reliability. But for ordering cheap stuff, 0.1%-1% error rate should be ok? That corresponds to getting the wrong order once a year if you order something every day.

I think I see what you're saying here for routine tasks like ordering at a drive-through. At the same time, it isn't that hard to automate ordering using touch screens, which quite a few restaurants are doing as well (though admittedly I don't know of this happening in drive-throughs). I guess if this function was totally automated by default then these places could reduce their staff a bit. But beyond the restaurant setting, retail ordering, logistics, and delivery already seem pretty heavily automated by, e.g., the likes of Amazon. For more complex, less routine kinds of customer service, I'll admit that I don't know exactly what challenges are involved, but this falls basically into the category of interfacing between a human and a complex API (where it's usually not obvious how to structure the API; will revisit that below). So it's hard for me to see what exactly could be "transformative" here.

For personal assistant and research assistant work, it also seems to me that an incredible amount of this is already automated. In both of these settings there is not necessarily an objective ground truth against which you can measure accuracy; it's more about achieving outcomes that are favored by the user. Consider the organization of our digital world by companies like Google, TripAdvisor, Yelp, Google, Semantic Scholar, ...and did I mention Google? If you're flexible with what kind of outcomes you're willing to accept, simply Googling your question gets you extremely far. As ML advancements continue, outcomes will continue to improve, but at what point are the results any more "transformative" than they already have been? Would it be the point at which the user is blindly willing to trust the judgment of the system, and no longer inclined to sift through multiple results, re-query, and evaluate for themselves? That certainly already happens for me sometimes, and seems to me to a large extent to be a factor of the level of transparency in the system and trust that the user has in it to align with their goals (as well as how painful the interface is to use — a perverse incentive if you were to optimize directly for this notion of "transformative"). This is why, for example, I'm more likely to go with a Consumer Reports recommendation than the first Google result. But even then I read plenty for more details, and I give Consumer Reports credence based on the assumption that their report is derived from actual, real-world experience with a product. Can that be automated? We'd need robots testing and rating products. Again, here, I'm not sure exactly what "transformation" by powerful function approximation alone would look like.

Also, many benchmarks are already filtered for being difficult and ambiguous, so I expect 90% performance on most of them to correspond to at least 99% performance in ordinary interactions. I'd be curious if you (and other people) agree with these intuitions?

No, I disagree. Benchmarks are filtered for being easy to use, and useful for measuring progress — the ones that gain wide adoption are the ones where 1) models are easy to implement, 2) basic solutions serve as a reasonable starting point, and 3) gains are likely to be possible from incremental advances. So they should be difficult, but not too difficult. If it is too hard to implement models or make progress, nobody will use the benchmark. If it is too easy, it'll be out of date way too fast. Only very recently has this started to change with adversarial filtering and evaluation, and the tasks have gotten much more ambitious, because of advances in ML. But even many of these ambitious datasets turn out ultimately to be gameable, and the degree to which filtering/adversarial methods work is variable & depends on task and methodology. NLI was notorious for this issue: models would get high scores on datasets like MultiNLI, but then you play with one of these models for no more than 5 minutes to find that it's completely broken and inconsistent even for seemingly-easy examples. There's reason to think ANLI improves on this issue at least somewhat (and indeed it proves to be tougher on models). But still, a lot of the tasks which are harder are ultimately artificial: things like SWAG, (adversarial) NLI, Winogrande, etc. are simplified tasks which have unclear economic value.

In SuperGLUE, tasks were chosen where there seemed to be a lot of headroom remaining between a BERT baseline and human performance, but there was another constraint: all of the tasks had to be formulated as relatively simple multiclass classification problems over sentences or sentence pairs. This constraint was to facilitate the development of general models which can easily be tested on all of the tasks in the benchmark using the same API, bringing the focus away from task-specific engineering. But in practice many things you want to do with language in an ML system, and especially things like dialog, have much more complex APIs.

Re API actions: Hm, this seems a lot easier than natural language to me. Even if finetuning a model to interact with APIs is an annoying engineering task, it seems like it should be doable in less than a year once we have a system that can handle most of the ambiguities of natural language (and benchmarks directly test the ability to systematically respond in a very specific way to a vague input). As with google duplex, the difficulty of interacting with APIs is upper-bounded by the difficulty of interacting with human interfaces (though to be fair, interactions with humans can be more forgiving than interfaces-for-humans).

I don't think this is really about "solving the ambiguity in NL" versus "solving API actions". The problem is mapping between the two in a way that naturally follows the structure of the conversation, drives it along, and produces the desired ultimate outcome in a business context. This kind of task is much more complex than multiclass classification which is used in pretty much all benchmarks, because the size of the output space is exponential in the size of the API surface; it's outputting full, executable programs.
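To make the output-space comparison concrete, here is a rough sketch. The toy API below (three hypothetical actions, each with a few arguments of made-up cardinality) is purely an assumption for illustration and does not describe any real system. It counts distinct "programs" as sequences of fully specified API calls, to set against the handful of labels in a classification task.

```python
from math import prod

# Hypothetical toy API: action name -> number of possible values per argument.
# All names and sizes are invented for illustration.
TOY_API = {
    "search_flights": [50, 50, 30],  # origin, destination, date
    "book_flight": [100, 4],         # flight id, seat class
    "send_email": [20, 10],          # recipient, template
}


def num_single_calls(api):
    """Number of distinct fully specified calls (one action plus argument values)."""
    return sum(prod(arg_sizes) for arg_sizes in api.values())


def num_programs(api, max_len):
    """Number of distinct call sequences of length 1..max_len."""
    calls = num_single_calls(api)
    return sum(calls ** length for length in range(1, max_len + 1))


if __name__ == "__main__":
    num_labels = 3  # e.g. entailment / neutral / contradiction in an NLI task
    print("classification labels:", num_labels)
    print("distinct single API calls:", num_single_calls(TOY_API))
    for max_len in (1, 2, 3):
        print(f"programs up to length {max_len}:", num_programs(TOY_API, max_len))
```

In this toy setup the count is multiplied by each extra argument's cardinality and grows exponentially with program length, whereas the label set for a classification benchmark stays fixed.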