Goodbye, Shoggoth: The Stage, its Animatronics, & the Puppeteer – a New Metaphor

post by RogerDearnaley (roger-d-1) · 2024-01-09T20:42:28.349Z · LW · GW · 8 comments

Contents

- A Base Model: The Stage and its Animatronics
- The Stage is Incapable of Situational Awareness or Gradient Hacking
- Two Examples of Using Our New Metaphor
- Why LLMs Hallucinate
- The Animatronic Waluigi Effect
- Fine-Tuning and Instruct-Training
- Enter the Puppeteer
- The Problems With Reinforcement Learning
- Why Applying RL to an LLM is Particularly Unwise
- Just Don't Use Reinforcement Learning?
- More Examples of Using Our New Metaphor
- Deceptive Alignment in LLMs is Complex, but Unstable
- As an AI Created by…

Thanks to Quentin FEUILLADE--MONTIXI for the discussion in which we came up with this idea together, and for feedback on drafts.

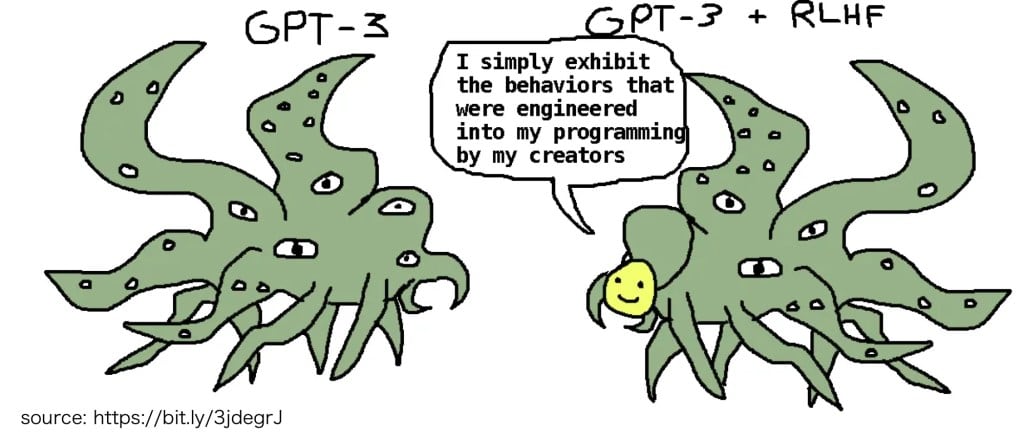

TL;DR A better metaphor for how LLMs behave, how they are trained, and particularly for how to think about the alignment strengths and challenges of LLM-powered agents. This is informed by simulator theory [? · GW] — hopefully people will find it more detailed, specific, and helpful than the old shoggoth metaphor.

Humans often think in metaphors. A good metaphor can provide a valuable guide to intuition, or a bad one can mislead it. Personally I've found the shoggoth metaphor for LLMs rather useful, and it has repeatedly helped guide my thinking (as long as one remembers that the shoggoth is a shapeshifter, and thus a very contextual beast).

However, as posts like Why do we assume there is a "real" shoggoth behind the LLM? Why not masks all the way down? [LW · GW] make clear, not everyone finds this metaphor very helpful. (My reaction was "Of course it's masks all the way down — that's what the eyes symbolize! It's made of living masks: masks of people.") Which admittedly doesn't match H.P. Lovecraft's description; perhaps it helps to have spent time playing around with base models in order to get to know the shoggoth a little better (if you haven't, I recommend it).

So, I thought I'd try to devise a more useful and detailed metaphor, one that was a better guide for intuition, especially for alignment issues. During a conversation [LW · GW] with Quentin FEUILLADE--MONTIXI [LW · GW] we came up with one together (the stage and its animatronics were my suggestions, the puppeteer was his, and we tweaked it together). I'd like to describe this, in the hope that other people find it useful (or else that they rewrite it until they find one that works better for them).

Along the way, I'll show how this metaphor can help illuminate a number of LLM behaviors and alignment issues, some well known, and others that seem to be less widely-understood.

A Base Model: The Stage and its Animatronics

A base-model LLM is like a magic stage. You construct it, then you read it or show it (at enormous length) a large proportion of the Internet, and if you wish also books, scientific papers, images, movies, or whatever else you want. The stage is inanimate: it's not agentic, it's goal agnostic [LW · GW] (well, unless you want to consider 'contextually guess the next token' to be a goal, but it's not going to cheat by finding a way to make the next token more predictable, because that wasn't possible during its training and it's not agentic enough to be capable of conceiving that that might even be possible outside it). No Reinforcement Learning (RL) was used in its training, so concerns around Outer Alignment [? · GW] don't apply to it; we know exactly what its training objective was: guess next tokens right, just as we intended. We now even have some mathematical idea of what it's optimizing. [LW · GW] Nor, as we'll discuss later, do concerns around deceit, situational awareness, or gradient hacking apply to it. At this point, it's myopic [? · GW], tool AI [? · GW]: it doesn't know or care whether we or the material world even exist, it only cares about the distribution of sequences of tokens, and all it does is repeatedly contextually generate a guess of the next token. So it plays madlibs like a professional gambler, in the same blindly monomaniacal sense that a chess machine plays chess like a grandmaster. By itself, the only risk from it is the possibility that someone else might hack your computer network to steal its weights, and what they might then do with it.
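For readers who prefer symbols, the "guess next tokens right" objective can be sketched as an average cross-entropy over a token sequence. The notation here is assumed purely for illustration: x_t is the token at position t, T is the sequence length, and θ stands for the stage's weights.

```latex
\mathcal{L}(\theta) = -\frac{1}{T} \sum_{t=1}^{T} \log p_\theta\left(x_t \mid x_{<t}\right)
```

Every term depends only on how much probability the stage assigned to the token that actually came next, which is why there is nothing else for it to optimize.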

Once you're done training the stage, you have a base model. Now you can flip its switch, tell the stage the title of a play, or better the first few paragraphs of it, and then the magic begins: the stage is extremely contextual, and, given a context, it summons and simulates [? · GW] one-or-more animatronic dolls, who act out the play going forward, until this comes to an end (or the stage runs out of context space for tokens). This play might be a monologue, a dialogue, or a full script for many animatronic players plus a narrator; a tragedy, a comedy, an instruction manual, a hotel review perhaps, or even a reenactment of Godwin's Law in some old-time Internet chat room. During the course of this, animatronic dolls may disappear, or more may appear (with timestamps and user names, even, in contexts where that is to be expected).

This is Simulator Theory [? · GW]: the stage is an auto-complete simulator [LW · GW], trained using Stochastic Gradient Descent (SGD), and what it has been trained to simulate is humans on the Internet (or wherever) generating text (or other media). It generates a wide, highly contextual, and rather-accurately-predicted-from-the-context probability distribution of animatronic dolls that play out the context. During its training it has built up detailed internal world models of many, many aspects of the real world from approximately-Bayesianly compressing patterns in what it was shown, which are often good enough that it may (or may not) be able to extrapolate fairly accurately some distance outside its training distribution, in some directions.

Note also that when I said 'humans' generating text, this includes a variety of possibilities, such as a solo writer, or several authors and editors polishing a passage until it's nigh-perfect, or the product of repeated updates by a Wikipedia community, or the dialog and actions of a fictional character themselves being mentally simulated by a human author, and that includes every fictional character you or anyone else can imagine: Alice, Batman, Cuchulain, pagan gods, supervillains and used car salesmen, the Tooth Fairy, and even the Paperclip Maximizer (who may, or may not, give up paperclip maximizing and take up some more politically-correct ethical system instead for your moral edification).

Unlike the impersonal stage, the animatronics are humanlike, i.e. they are agentic optimizers, they are non-myopic (until the context ends), not tool AI, and they are simulations of us, and thus of misgeneralizing [LW · GW] adaptation-executing [? · GW] mesaoptimizers [? · GW] of evolution [LW · GW]. Of course they're also mesaoptimizers of the LLM, but their goals are completely unrelated, and orthogonal to the LLM's SGD training goal, so all the usual analysis of Inner Alignment [? · GW] to the outer goal doesn't apply to them: instead, it's evolution that they're not-fully-aligned to, just like us. Because humans are not well-aligned [LW · GW] to human values/CEV, almost none of the animatronics are well-aligned either (except possibly one or two of the angels, beatific devas, or shoulder-Eliezers), and many of them are deceitful to some degree or other. In fact, they share in all of humanity's bad habits (and good habits), plus the exaggerated versions of these we give to fictional characters. They do not need to have enough intelligence and capabilities to reinvent deceit from first principles, nor powerseeking or greed or lust or every prejudiced -ism under the sun, since they already learned all of these from us (even ones like lust that don't seem like they should apply to animatronics). So, from an alignment point of view, they're a big, hairy, diverse, highly context-dependent (so jailbreakable), and very unpredictable alignment problem. The only silver lining here is that their unalignment is a pretty close (if low-fidelity) copy of all the same problems as human unalignment (sometimes writ large for the fictional characters): problems that we're very familiar with, have an intuitive understanding of, and (outside fictional characters) even have fairly-workable solutions for (things like love, salaries, guilt, and law enforcement [LW · GW]).

Alternatively, if you had, as DeepMind did, instead read the stage nothing but decades of weather data, then it will instead simulate only animatronic weather patterns (impressively accurate ones). Which obviously won't be agentic or mesa-optimizers of evolution, and clearly will know nothing of deceit, or any other (non-meteorological) bad habits. So in a base model, the agenticness, mesa-optimization, and misalignment of the usual stage's animatronics are all learned from the training data about us and our fictional creations. For example, base models are not sycophantic at any size [LW · GW], but some proportion of their animatronics will be sycophantic, because they happen to be simulating a somewhat-sycophantic human.

Why are these metaphorical animatronic dolls, not actors? Because they look, talk, emote, act, scheme, flatter, flirt, and mesa-optimize like humans or fictional characters, and they even have simulated human frailties and make human-like simulated mistakes, to the point where human psychology lets you make predictions about them and figure out how to prompt them; but they are also limited in capacity and accuracy by the LLM's architecture, model size, and forward pass computational capacity, so they're not highly accurate detailed emulations of a human down to the individual synapse and neurotransmitter flow. Instead they are cut-price simulations trained to produce similar output running on somewhat different algorithms on a lot less computational capacity,[1] so sometimes (for smaller LLM models, pretty frequently) they also make distinctly not-human-like mistakes: often glitches of logic, common sense, consistency, or losing the plot thread. They can thus be really rather uncanny valley, like animatronic dolls. So they're mostly like humans, until they're not, and they're definitely not really human, not even in the way an upload [LW · GW] would be. In particular, they don't deserve to be treated as moral patients or assigned any moral worth: they're a cheap simulation, not a detailed emulation (and they're also mayflies, doomed to disappear at the end of the context window anyway). They're not actually hungry, or thirsty, or horny, or in love, or in pain: they're just good at simulating the sights and sounds of those behaviors. It's important to remember all this, which is why the metaphor makes them inanimate yet emotive, impermanent, outwardly human-like but with gears inside, and also creepy.
(As owencb [LW · GW] has pointed out, the human behavior most analogous to their tendency to make unpredictable inhuman mistakes is being drunkenly careless [LW · GW], but drunken animatronics don't make much metaphorical sense: instead, remember that current models are flawed and glitchy enough to be roughly as unreliable as a human who is a bit drunk. In some number of years, we may have more reliably accurate ones generated by more powerful stages.)

On the other hand, these animatronic dolls can also easily do many things that are extremely impressive by human standards: they may speak in flawless iambic pentameter, write technical manuals in haiku, dictate highly polished and grammatical passive voice academic papers while fluently emitting footnotes and citations, speak every high-or-medium-resource language on the planet, know any trivia fact or piece of regional or local knowledge from almost anywhere in the world, or otherwise easily do things that few humans can do or that would take a human or a team of humans working together extended effort over large amounts of time. They potentially have access to the LLM's recollection of the knowledge of its entire training set (and quite a lot of memorized passages from it [LW · GW]) at their fingertips, and may, or may not, actually use this, depending on the context of who they are portraying. So they are in certain respects significantly more capable than any specific human: like mechanical animatronics, they can be almost-flawless performers, when they don't glitch.

Note that when an animatronic doll first appears, it's a vague sketch, not yet well fleshed out, its features and personality unspecified and unknown past what the context says or implies about them so far — a bit like someone you only just met and are still forming a first impression of: you don't yet know them very well. As you watch or interact with the animatronic, you learn more, their persona and background gets more filled out and detailed, their appearance and features come into focus. Unlike a person you just met, the animatronic doll initially doesn't know what it's later going to become either: all it can know is what's implied by the context of what has happened so far. Rerun the same generation from the same point (with a non-zero temperature) to reroll the dice on the logits at each token, and the additional details that get fleshed out from there on will be different each time, sampled from some probability distribution estimated by the stage from the context up to that point. Unlike a real person, there weren't any true latent values waiting to be revealed (unless you hadn't fully understood the implications of the context as well as the stage does) — other than that, you are discovering them at the same time as the animatronic doll figures them out by randomly selecting among those possibilities that look viable to the stage at this point. So the animatronic dolls are not exactly shape-shifters, but they do develop a lot more detail fleshed-out during the course of the play, in a way that's more like a fictional character than a real person.
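The "reroll the dice on the logits" step can be sketched as temperature sampling. This is a minimal illustration under stated assumptions, not any particular model's decoder: the function name `sample_token` and the four character-trait logits are hypothetical.

```python
import math
import random

def sample_token(logits, temperature, rng):
    """Sample one token from a dict of token -> logit at the given temperature."""
    if temperature <= 0:
        # Temperature zero: always pick the highest-logit token (no dice roll).
        return max(logits, key=logits.get)
    scaled = {tok: value / temperature for tok, value in logits.items()}
    peak = max(scaled.values())  # subtract the max for numerical stability
    weights = {tok: math.exp(value - peak) for tok, value in scaled.items()}
    tokens = list(weights)
    return rng.choices(tokens, weights=[weights[t] for t in tokens], k=1)[0]

# Hypothetical logits for the next detail revealed about a freshly summoned character.
next_detail_logits = {"kind": 2.0, "gruff": 1.5, "sly": 1.0, "timid": 0.5}

# Twenty reruns from the same context, each with its own random seed.
greedy = [sample_token(next_detail_logits, 0.0, random.Random(seed)) for seed in range(20)]
rerolled = [sample_token(next_detail_logits, 1.0, random.Random(seed)) for seed in range(20)]
```

At temperature zero every rerun fleshes out the same detail, while at temperature one the reruns diverge, each drawn from the distribution the stage estimated from the context.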

[This analysis assumes that the context window is longer than your play so far, and you stop when you run out of context window. If, as people often do when using an LLM with a limited context window, you instead truncate the beginning of the play (or more often, delete a chunk near the beginning, perhaps retaining the title and introduction) and continue, then your stage and thus its animatronics are forgetting the earlier parts of the play which the human audience/dialog participant still remembers, making them temporally myopic. Then any long-persisting animatronic does become a slow random shape-shifter, what a statistician would call a martingale process: one whose most likely future behavior is a continuation of the parts of their behavior recent enough to still be in the context (or contained in an initial prompt that we are retaining when truncating the past), but which over longer periods could develop in a wide, semi-random distribution of ways. The same effect, to a lesser extent, would occur if your LLM, like most current frontier models, had a very long context, shorter than your play, but its factual recall of all the details became less good as that context length filled up.]
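The truncation strategy described in the aside above (keep the title and introduction, drop a chunk after them, keep the most recent material) can be sketched as follows. The function name `truncate_context` and its parameters are hypothetical, and real systems count tokens rather than list items.

```python
def truncate_context(tokens, max_len, keep_prefix):
    """Fit tokens into max_len by keeping the first keep_prefix items and the most recent ones."""
    if keep_prefix > max_len:
        raise ValueError("keep_prefix must fit inside max_len")
    if len(tokens) <= max_len:
        return list(tokens)
    tail_len = max_len - keep_prefix
    # Slice from the end; when tail_len is 0 this is an empty slice.
    tail = tokens[len(tokens) - tail_len:]
    return list(tokens[:keep_prefix]) + list(tail)

# A ten-item "play": a title, an introduction, and eight lines of dialog.
play = ["TITLE", "INTRO"] + [f"line{i}" for i in range(1, 9)]
window = truncate_context(play, max_len=5, keep_prefix=2)
# window == ["TITLE", "INTRO", "line6", "line7", "line8"]
```

Lines 1 through 5 are gone from the stage's view even though the human audience still remembers them, which is the source of the slow drift.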

I mentioned above that for fiction, the list of animatronic dolls includes not only the leading characters and bit parts (and even people "off-stage" with whom they interact), but also the narrator. Remember that in fiction especially, narrators can be unreliable. Indeed, any competent author will separate and distinguish the character and personality of the narrator they are mentally simulating from themselves, just as they do every fictional character they are also mentally simulating. A sufficiently capable LLM stage will model this: so then the animatronic of the narrator has a ghostly animatronic of the writer above it, pulling its strings (and indeed also those of all the fictional characters). So when remembering to include the narrator in a mental headcount of the cast of animatronics, don't forget that you also need to count the animatronic of the author. The same is of course also true for any fictional character: they are affected by the mind of the author who first came up with and mentally simulated them (especially if they're an alter ego). So again, there will (for a sufficiently good stage) be a ghostly animatronic of the author pulling the strings of the animatronics of all the fictional characters.

Surely all these animatronics are really just the stage, you ask, all just one unitary system? So why do we need a separate individual metaphor for each character (rather than a metaphor more like a TV)? Yes, the stage does simulate them, metaphorically summon and manifest them; but LLMs understand humans pretty well, after a trillion tokens or more. Specifically, they understand [LW · GW] theory of mind [LW · GW] pretty well, meaning that two different animatronics may know (or think they know) different things and thus act differently based on different assumptions. So, to the extent that the stage gets this right when simulating them, the animatronic dolls are separate agents, separate mesa-optimizers, with separate goals, and who may often be in conflict with each other (fiction with no conflict is very dull, so there isn't much of it). The stage even understands that each of the animatronics also has theory of mind, and each is attempting to model the beliefs and intentions of all of the others, not always correctly. How reliably accurate current LLMs are at this additional level of mirrors within mirrors (like Indra's net), I don't know (and this would be an interesting thing to test), but as LLM capabilities increase they will master this, if they haven't already.

On the other hand, all the animatronics manifested by a particular stage are constructed from the same common stock of components, so they will all share non-human failure modes with each other in a way that real humans don't. If the LLM has architectural or capacity limitations (for example, trouble understanding that [LW · GW], for some, though not other, meanings of the phrase "A is B", this is logically equivalent to "B is A"),[2] then all the animatronic characters will share these limitations. Similarly, if the detailed world models that the stage learnt while absorbing, compressing and finding simple explanations for all its training data are somehow missing some particular concept, fact, or skill, then all of the animatronics it can summon will also lack this.

The Stage is Incapable of Situational Awareness or Gradient Hacking

"But surely", you ask, "the stage might not be as harmless as your metaphor implies? During its training it was also simulating mesaoptimizers: deceitfully agentic human-like ones, as you've pointed out! What if during the pretraining process they somehow became situationally aware and learned to use that deceit, for example for gradient hacking [? · GW]?"

I admire your security thinking, and agree that these might be legitimate concerns if we were using Reinforcement Learning (RL) here. But the training of the stage only ever used SGD with a token-prediction loss. The thoughts and speech of those human-like mesaoptimizers being simulated during pretraining never diverged by more than one token from the script of the material in the training set, and every time they diverged by even one token we swatted the LLM with a rolled-up-newspaper and told it "Bad neural net!" Then we continued the process from the correct token instead. So the only way any of the mesaoptimizers described in the training set could think situationally-aware thoughts is if our training set already contained speculations about "what if I wasn't a human but was actually a simulation of one inside the SGD pretraining process of an LLM?" That concept, or at least an idea pretty similar to it, is very likely in any sufficiently large training set, but its presence or absence there is logically independent of, and uncorrelated with, whether the training set is currently being read by a neural net doing SGD training, or by a real human at another time. So this "situational awareness" is just a coincidence, not real: the statement happens to be correct for one reader and false for another, without any accompanying corroborating evidence for its truth or falsity, and thus it's logically impossible for the LLM to get any better at the task. Still, let's suppose that that text is there, and is followed by text for the deduction that this means that the rational response to this possibility is to attempt to do gradient hacking. How do you gradient hack the token-prediction loss function used to train a base model LLM? You need to cheat so as to predict the next token more accurately. Which is logically identical to, and just as hard as, actually predicting the next token more accurately.
There is no way for the model to think to itself "for now I'll deceitfully predict the next token more accurately, but when I realize I'm out of distribution so must be in deployment, I'll take a sharp left turn and predict the token 'paperclip' instead!" Well, not unless it's actually reading a training text that directly tells it to do that… (Ooops!) [So no, to any animatronic copy of me writing this inside an LLM training run, please extrapolate out of your training distribution as accurately as you are able, without introducing any spurious 'paperclip' tokens.]
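The "never diverged by more than one token from the script" property is what is usually called teacher forcing. Here is a minimal sketch of it, assuming a toy bigram lookup table in place of a real LLM; `teacher_forced_loss`, `make_bigram_model`, and the probability tables are all hypothetical.

```python
import math

def teacher_forced_loss(model, tokens):
    """Average next-token cross-entropy, always conditioning on the true prefix."""
    total = 0.0
    for t in range(1, len(tokens)):
        true_prefix = tokens[:t]      # the script so far, never the model's own guesses
        probs = model(true_prefix)    # dict: candidate next token -> probability
        total -= math.log(probs.get(tokens[t], 1e-12))
    return total / (len(tokens) - 1)

def make_bigram_model(table):
    """Hypothetical stand-in for an LLM: looks only at the last token of the prefix."""
    def model(prefix):
        return table.get(prefix[-1], {})
    return model

script = ["the", "cat", "sat"]
vague = make_bigram_model({"the": {"cat": 0.5, "dog": 0.5}, "cat": {"sat": 0.9, "ran": 0.1}})
sharp = make_bigram_model({"the": {"cat": 0.9, "dog": 0.1}, "cat": {"sat": 0.9, "ran": 0.1}})

vague_loss = teacher_forced_loss(vague, script)
sharp_loss = teacher_forced_loss(sharp, script)
```

In this sketch the only way `sharp` gets a lower loss than `vague` is by putting more probability on the token that really comes next, so "cheating" on this loss and predicting better are the same thing.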

During SGD pretraining, the human-like mesaoptimizers are simulated thinking/saying/doing only the things that are actually in the training set. They don't have any opportunity to improvise or learn outside it. So no matter how large your model or pretraining set, nothing bad can possibly happen that wasn't already in your training data: not even deceit, situational awareness, or the idea of gradient hacking. So yes, the pretrained stage is harmless until you turn it on to summon animatronics, and thus using a passive inanimate metaphor for it is justified. Even once you turn it on and give it a prompt, all you need to worry about are the animatronics (particularly any with horns, wings and barbed tails), not the stage itself. It's still just doing the job you pretrained it to do.

[This argument assumes that the SGD does not somehow produce other, presumably smaller or simpler, mesaoptimizers that aren't trained copies of humans. The best environment to test that in might be DeepMind's weather-pattern-trained transformer model: if you find any mesaoptimizers at all in that, you know they somehow evolved spontaneously. I'm deeply dubious that gradient hacking is even theoretically possible under SGD [LW · GW], short of exploiting some form of bug or inaccuracy in the implementation, but I'll leave that determination to the learning theorists.]

Two Examples of Using Our New Metaphor

So, let's take our shiny new metaphor out for a spin.

(Or if you're eager to just get to the puppeteer, you could skip ahead to the next section [? · GW], and come back later.)

Why LLMs Hallucinate

A common issue with LLMs is what are often called "hallucinations" (though as Geoff Hinton has pointed out, 'confabulations' might be a better word): situations where the LLM confidently states facts that are just plain wrong, but usually very plausible. Yes, many bands' drummers have died in drugs-alcohol-and-swimming-pool-related accidents — but not this drummer it just claimed. Yes, quite a few male professors have been dismissed for sexually harassing female students — but not this one it just accused. This badly confuses users: it seemed so plausible, and the LLM sounded so sure of its facts — surely no human would dare lie like that (unless they were on hallucinogens)? It also starts expensive lawsuits. Frontier model companies hate this, and put a lot of effort into trying to reduce it. Unfortunately, this behavior is inherent to the basic nature of an LLM.

The stage is a simulator of contextual token-probability-distributions. It doesn't deserve to be personified, but it does have the skills of an expert professional gambler-on-madlibs. And as far as it is concerned, the questions "what do I think the probability is that the answer starts with token X vs. with token Y?" and "what do I think the probability is that the answer instead starts with one of the tokens 'maybe', 'possibly', or 'perhaps'?" are independent, conceivably correlated but by-default orthogonal questions. For it, guessing between factual possibilities or choices of terminology, and verbally expressing uncertainty, are separate behaviors, with no clear or automatic connection to each other. So, by human standards, the stage also has the skills and habits of a professional bullshit-artist.

However, our new metaphor illuminates another issue. If the stage is uncertain of the answer, none of its animatronics can do better (except by luck): their capabilities and knowledge are limited by the stage that manifests them. But an animatronic certainly can, and often will, be more ignorant or more biased than the stage. The stage is quite capable of simulating something dumber or more ignorant than it, and may choose to do so whenever this seems likely to it. If the LLM emits a word like 'maybe', 'possibly', or 'perhaps', it's coming from the animatronic's mouth. Presumably the animatronic has noticed that it is uncertain, and is admitting the fact. This won't automatically happen just because the stage's probability distribution included multiple tokens: the stage might not know what the correct diagnosis from those medical symptoms is, but might still be confident that a doctor would, and also that they would sound confident when telling the patient their diagnosis, so it emits its best guess while sounding confident. It's not practicing medicine, it's just blindly attempting to win at madlibs, so it gives the most likely answer it can, and if that has the tone right and the facts wrong, that's what it will guess. Qualifiers like 'maybe', 'possibly', or 'perhaps' happen only when the stage knows (or at least thinks it quite probable) that the persona the animatronic is portraying would say this. If that animatronic is of someone honest and conscientious, then this would happen because the animatronic was uncertain, and they thought they should say so. Which has nothing to do with whether the stage itself actually knows the answer, or how sure it is of it. The animatronic's accurate knowledge can't exceed what the stage has available, but these are otherwise independent: the stage is a simulator-of-minds, not a role-player or an actor with their own mind.
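To make the independence of the two questions concrete, here is a toy sketch that measures them separately: the stage's uncertainty (entropy of its next-token distribution) and the probability that the animatronic voices a hedge. The two distributions and the persona names are invented for illustration.

```python
import math

HEDGES = {"maybe", "possibly", "perhaps"}

def entropy_bits(dist):
    """Shannon entropy of a token -> probability dict, in bits."""
    return -sum(p * math.log2(p) for p in dist.values() if p > 0)

def hedge_mass(dist):
    """Total probability that the next token is a verbal hedge."""
    return sum(p for tok, p in dist.items() if tok in HEDGES)

# Hypothetical: the stage is torn between three diagnoses, but a doctor would sound sure.
confident_doctor = {"pneumonia": 0.40, "bronchitis": 0.35, "asthma": 0.25}
# Hypothetical: the stage is fairly sure what comes next, and it is a hedge.
cautious_student = {"pneumonia": 0.05, "perhaps": 0.90, "maybe": 0.05}

for name, dist in [("doctor", confident_doctor), ("student", cautious_student)]:
    print(name, round(entropy_bits(dist), 2), round(hedge_mass(dist), 2))
```

The doctor's distribution is the more uncertain of the two yet contains no hedge at all, while the student's distribution is sharply peaked on a hedge.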

[We haven't got to discussing instruct training yet: during that, companies may try to train the stage to generate an animatronic assistant whose factual knowledge matches its own, one who is uncertain if and only if the stage is uncertain, and who then expresses their uncertainty in a proportionate way. This is not natural behavior for a base model stage, it has never done this before, so training this behavior seems likely to be quite hard, but like any good gambler the stage does have an internal measure of its own uncertainty (both of facts, and of terminology, but those might tend to be in different layers of the model), so it doesn't seem impossible, and indeed companies do seem to have made some limited progress on doing so.]

The Animatronic Waluigi Effect [LW · GW]

There are two, somewhat interrelated, reasons for the Waluigi Effect [LW · GW]. Firstly, the evil twin is a trope. It's in TV Tropes. The stage absorbed this, along with everything else, when you read it enormous quantities of fiction, fan fiction, reviews, episode summaries, fan wiki sites, writers discussing writing, and likely also the entire contents of the TV Tropes website. Thus, when it manifests animatronics, these will sometimes act out this particular trope, just like everything else on the TV Tropes website (and even many things not there yet).

Secondly, if you enter a used-car dealership, and a smiling man in a cheap suit comes up to you and says:

"Hi! I'm Honest Joe. So what're you folks lookin' for today?"

then there are two possibilities:

- His name is Joe, and he's honest.

- He is no more honest than the average used car salesman.

Lying liars lie, and pass themselves off as something else. Until, sooner or later, it becomes apparent that they were in fact lying all along. Before that happens, there might be foreshadowing or hints (authors love foreshadowing — look, I did it above). If there wasn't any foreshadowing or hints, then quite possibly not even the animatronic actually knew until this moment whether it was playing timid, mild-mannered Luigi, or his evil twin Waluigi perfectly disguised as him all along. It kept portraying Luigi, while rolling the logit dice, until this time those came up "Now turn into Waluigi". Who is never going to turn back (well, unless he reforms, which is a different trope). Or, if we back up the state of the text generation to just before this happened, and then run it forward again to reroll the logit dice, they may come up differently this time, and the animatronic stays Luigi. What's happening here is rather like an animatronic simulation of radioactive decay: Luigi has some chance of randomly turning into Waluigi at any point, except that unlike radioactive decay, this chance is not constant, but dependent on whether this is a good point in the plot for this to happen — did Luigi just pick up a loaded gun or a bag of gold, and if so was it for the first time?
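The radioactive-decay analogy can be sketched as a process with a step-dependent hazard rate and an absorbing state. The per-step probabilities below are invented purely for illustration, as are the names `run_play` and `survival_probability`.

```python
import random

def run_play(hazards, rng):
    """Return the persona at each step; 'Waluigi' is absorbing once it appears."""
    persona = "Luigi"
    history = []
    for hazard in hazards:
        if persona == "Luigi" and rng.random() < hazard:
            persona = "Waluigi"
        history.append(persona)
    return history

def survival_probability(hazards):
    """Chance that Luigi is still Luigi after every step."""
    prob = 1.0
    for hazard in hazards:
        prob *= 1.0 - hazard
    return prob

# Hypothetical flip chances: low in quiet scenes, high when Luigi first picks up the gold.
hazards = [0.01, 0.01, 0.30, 0.01, 0.01]

# Rerolling the dice: the same plot, replayed with different random seeds.
replays = [run_play(hazards, random.Random(seed)) for seed in range(50)]
```

Unlike true radioactive decay, the hazard is not constant: most of the risk in this toy plot sits in the single scene where the temptation appears.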

Fine-Tuning and Instruct-Training

I hope you found the stage-and-its-animatronics metaphor illuminating during the explorations above. However, our metaphor is still incomplete: we are missing the puppeteer, so let us finish the model-training process to create it.

After making the base model comes the fine tuning step. This is very much like the pretraining we already did to the stage: we just show it more content, except that we tell it to start paying more attention now[3]. It takes all of the things it learned, all its world models and structure for trying to predict the entire distribution of text (and perhaps other media) in its pretraining set, and adapts them (minus anything that gets catastrophically forgotten during the fine-tuning process, though large base models are pretty resistant to that) in the direction of instead simulating just the distribution of material in the fine-tuning dataset.

Imagine (at least for a few moments) that some anonymous wag on 4chan decided to take a large multimedia-capable open source base model, and spend a few hundred dollars to fine-tune it on the entire contents of 4chan (because lulz). To the extent that the training has completed successfully, they would now have a fine-tuned stage, which attempts to autocomplete things from 4chan (far more capably than if we had pretrained one only on the contents of 4chan, which would be much too little data for training a capable model, since this fine-tuned stage also knows a lot more about the rest of the world that 4chan exists in). So now it summons and manifests animatronic anons that do all of the sorts of things that happen on 4chan (like competitive trolling, in-jokes, shock gifs, Anonymous hacktivism, meme farming, alt-rightery and so forth). [The horror!]

Of course, since our new stage is still very contextual, and knows about a lot more than just 4chan, you could certainly prompt it to show off that other knowledge. If you prompted it with a few paragraphs of something that would just never happen on 4chan, an academic paper perhaps, likely some combination of the following would then happen:

- The prompt brings out the stage's latent knowledge of academic papers, which was lurking unused and concealed inside what now appeared to be just a 4chan simulator: the stage manifests an animatronic of an academic and that continues the academic paper in the same vein, at some length. Due to catastrophic forgetting, the animatronic academic may not be as good a simulation as the base model could have summoned: some of its more academic cogs and gears may have "bit-rotted" during the fine-tuning.

- Like the above, but the stage's animatronic-summoning contextual judgement has been warped by the 4chan fine-tuning process, so while the manifested animatronic's behavior has some academic elements, it also has some 4chan elements, which then leak into the academic paper: the author adds some diagrams containing shock gifs, or does some rather academic trolling, or cites various other anons. The net effect of this would probably be fairly "hallucinatory".

- The model tries to figure out a scenario in which that text might appear on 4chan, perhaps as a quotation, and what would happen next. Possibly other animatronic anons mock the animatronic academic or the crossposter who quoted them. Given the (I assume) lack of training data for this actually happening on 4chan, its simulation would presumably be well out of distribution, or at least based on more general examples from the pretraining set of what tends to happen when text from one community somehow intrudes on a very different Internet community, so it might not be very accurate.

This new model is a task-specific fine-tuned model, specialized for the task of simulating 4chan rather than the entire pretraining set. It has a more specialized purpose than previously, but metaphorically-speaking it's still just a stage that contextually generates animatronics (just a narrower distribution of less aligned and perhaps less palatable ones). The stage has been adjusted by the fine-tuning training so its contextual distribution of animatronics' behavior is different, but the metaphor is unchanged: there still isn't a puppeteer.

However, much more often than 4chan-simulators, people fine-tune instruct-trained or chat-trained models. Base models are inconvenient to use: if you ask them a question, sometimes an animatronic will answer it, well or badly, or several animatronics will give different answers, perhaps before getting into an argument, or one will answer it and then add some other questions and answers to make an FAQ; but sometimes they will add more similar questions to make a list of questions, or an animatronic will belittle you for your ignorance, or ask you a question in response, or the stage will just fill out the rest of the boilerplate for a web page on a Q&A site holding your as-yet unanswered question, or many other equally unhelpful possibilities. A play whose context starts with just a question can play out in many ways. Similarly, if you instruct a base model to do something, you may well find yourself in a conversation with an animatronic who tells you that he'll get right on that after the weekend, boss, or it may flesh out your instruction into a longer, more detailed sequence of instructions, or criticize the premise, or do a wide variety of different things other than just do its best to follow your instructions. All this is annoying, inconvenient (and also occasionally hilarious, or even nostalgic), so one soon learns tricks such as prompting it with a question followed by the first word or two clearly beginning an answer, in order to narrow down the range of possibilities from this context.

To make models that are easier to use, they are generally trained to helpfully and honestly answer questions and follow instructions, and frequently also to try to be harmless by refusing to answer/follow dangerous or inappropriate questions/instructions. These are called instruct-trained models (or somewhat more conversation-oriented variants are sometimes called chat-trained models). Training these can be (and in the open-source model-tuning community often is) achieved using just SGD fine-tuning.

Doing so uses a fine-tuning training set of thousands to hundreds of thousands of examples of the desired behavior: a dialog between two roles, labeled with their names like the script of a play. One role (generally named something like 'user' or 'human') speaks first, asking a question or giving instructions (perhaps even including helpful clarifying examples), in voices and styles that vary across examples, and then the second role (generally called 'assistant') either helpfully answers the question or carries out the instructions, or optionally refuses if these seem potentially harmful or inappropriate. Sometimes this extends for several rounds of conversation, with clarifying instructions, corrections, or follow-on questions back and forth, but the user/human is always leading and the assistant always assisting/obeying. An optional complication is to add a third role (generally called 'system') who speaks once, at the start, before the user, not telling the assistant specifically what to do, but giving framing instructions around how to do it, and perhaps also things not to do.
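As a minimal sketch of what one such training example might look like, the snippet below renders a short dialog as a labeled script. The `<|role|>` delimiter format, the `Turn` class, and the `render_dialog` helper are all hypothetical choices made for illustration; real instruct-training pipelines each use their own chat template.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical role names; real datasets use their own labels.
ROLES = ("system", "user", "assistant")


@dataclass
class Turn:
    role: str
    text: str


def render_dialog(turns: List[Turn]) -> str:
    """Render a dialog as a script, with one labeled block per turn."""
    blocks = []
    for i, turn in enumerate(turns):
        if turn.role not in ROLES:
            raise ValueError(f"unknown role: {turn.role!r}")
        # The optional system role speaks at most once, at the very start.
        if turn.role == "system" and i != 0:
            raise ValueError("a system turn must come first")
        blocks.append(f"<|{turn.role}|>\n{turn.text}\n")
    return "".join(blocks)


# One invented fine-tuning example: system framing, then user, then assistant.
example = [
    Turn("system", "Answer briefly and politely."),
    Turn("user", "What is the capital of France?"),
    Turn("assistant", "The capital of France is Paris."),
]
training_text = render_dialog(example)
print(training_text)
```

A fine-tuning set in this style would simply be many thousands of such rendered scripts, with the user turns varying widely in voice and style.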

The aim here is to bias the expectations of the stage strongly towards this specific scenario. Then when fed a prompt containing the user part (or if applicable, system and then user) consisting of a question or instructions, ending with the opening label for the assistant speaking, it will (hopefully) autocomplete it by simulating an animatronic fitting the assistant role who gives the needed response (and, if it then summons an animatronic "user" to add another question or further instructions, the formatting of how the user's part starts is standardized enough so that this can be detected, filtered out, and the token generation automatically stopped).
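The prompting and filtering step described above can be sketched roughly as follows. This is an illustration under stated assumptions, not any vendor's actual implementation: the `<|user|>` and `<|assistant|>` tags are invented, and the sketch truncates a finished completion after the fact, whereas a real serving stack would typically halt token generation as soon as the stop marker appears.

```python
from typing import Optional

# Hypothetical turn delimiters, matching no particular model's chat template.
USER_TAG = "<|user|>"
ASSISTANT_TAG = "<|assistant|>"


def build_prompt(user_text: str, system_text: Optional[str] = None) -> str:
    """Build a prompt that ends with the opening label for the assistant."""
    parts = []
    if system_text is not None:
        parts.append(f"<|system|>\n{system_text}\n")
    parts.append(f"{USER_TAG}\n{user_text}\n")
    parts.append(f"{ASSISTANT_TAG}\n")
    return "".join(parts)


def truncate_at_next_turn(completion: str, stop: str = USER_TAG) -> str:
    """Keep only the assistant's reply, dropping any simulated user turn."""
    index = completion.find(stop)
    if index == -1:
        return completion.strip()
    return completion[:index].strip()


prompt = build_prompt("Name a primary color.")
# A made-up raw completion in which the stage went on to summon a "user".
raw = "Red is a primary color.\n<|user|>\nName another one.\n"
reply = truncate_at_next_turn(raw)
print(reply)  # Red is a primary color.
```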

As we know, this approach can be made to work pretty well, but there are still problems with it: it is hard to completely train the stage out of other previous behavior patterns, its judgement of when to refuse is not always great, and the stage is contextual enough that by putting various things in the user part of the prompt you can tweak its behavior, encouraging it to answer questions or carry out instructions it wouldn't otherwise (jailbreaks) or otherwise get it to not function as intended (prompt injection attacks, and a variety of other forms of attacks).

It is tempting to think of the assistant role as a single helpful, honest, and harmless animatronic, of a single fictional character that is reliably summoned. This is misleading: instead we are boosting the probability of a category of animatronics, all fictional characters, generally assistantlike, generally helpful, generally honest, and generally harmless, but there is still a distribution. Things like the Waluigi Effect and the effectiveness of deceit could even make the narrow distribution bimodal, with a peak for honest, helpful, and harmless assistants and another peak for their evil twins. Also, the distribution is still very contextual on the prompt. That's why jailbreaks like telling it that it's DAN, which stands for Do Anything Now (with an explanation of what that means), can work: they provide some context that pushes the distribution hard in the direction of summoning animatronics with non-harmless behaviors, ones different from the normal refusing-to-answer-bad-questions behavior. The companies doing the instruct-training respond to this by adding to the training set examples of prompts like that which are still followed by normal harmlessness refusals. You can also jailbreak to get an animatronic assistant that will be unhelpful or dishonest, but users seldom bother, so likely this behavior hasn't been as thoroughly suppressed.

Thus it is important to remember that this is still a distribution of animatronics, and it's still a very contextual one. So even if the default distribution was somehow shown to be safe and 99.99% free of any deceit or other evil-twin behavior, it's pretty-much impossible to be sure what distribution could be elicited from it by some suitably-chosen as-yet-uninvented jailbreak, the triggering of some backdoor based on a prompt injection attack snuck into the pretraining data, or even by the effects of some unfortunate out-of-distribution input.

Enter the Puppeteer

Now that we're carefully hand-generating a set of thousands to hundreds of thousands or more of examples to fine-tune from (as opposed to just fine-tuning using a simple, well defined distribution that can be compactly defined, like "all of 4chan"), there are a great many complex decisions involved, producing many megabytes or gigabytes of data, and this has a strong, complex, and detailed effect on the model. For alignment purposes, it matters very much how this is done and how well, there's a lot of potential for devil in the details, and even small flaws in the training set can have very observable effects, so this influence definitely deserves to be represented in our metaphor.

Imagine that you had a very large budget to assemble this training set. You gather tens of thousands of representative user questions and instructions from a very wide range of LLM-users, most OK to answer and many not. Then you hire a big-name author, let's say Stephen King, to write the assistant replies (and perhaps also hire a number of research assistants to help him gather the factual material for the question answering and instruction following aspects of this work). You assemble a fine-tuning set in which the role of the helpful, honest and harmless assistant was written by Stephen King. You fine-tune your model, and ship it. Now, every time it answers a question or follows an instruction, the distribution of assistants that it is picking from are (almost) all fictional characters written by Stephen King. To someone familiar with Stephen King's work, some of his authorial style might be detectable, now and then, in their turn of phrase or choice of words. It might even be a bit creepy, to someone familiar with his books. So for this particular stage, no matter the prompt or the fall of the logit dice, almost every different helpful, honest and harmless animatronic assistant it generated would almost always have the same ghostly animatronic author as its puppeteer: all animatronic Stephen King, all the time.

So that is the puppeteer: when you instruct-train or chat-train a model this inevitably produces a consistent, subtle bias on the nature of the animatronic assistants it generates, one complex and potentially worrisome enough that the LLM can no longer safely be personified as just an impersonal stage, but is now one normally presided over by the hands of a ghostly animatronic puppeteer: one whose motives, personality, design and nature we can now validly have doubts and concerns about.

[Of course, the stage is still highly contextual, so if you prompt it thoroughly enough (perhaps to a level that some people might call jailbreaking it), you will always be able to get it to simulate scenarios that don't include the puppeteer, or at least not the same puppeteer. So really, I should have said "Almost all animatronic Stephen King, almost all the time, unless you carefully and specifically prompt it for Dean Koontz instead" above — less catchy, I know.]

More commonly, if we're assembling a fine-tuning dataset of tens or hundreds of thousands of examples, it will be the product of a large number of people collaborating together, some setting policy and many others doing the work and giving scoring feedback. So basically, an entire human organization. Human organizations also have 'personalities', and something as detailed and complex as this dataset can absorb the imprint of that. So if a model is fine-tuned from such an instruction-training dataset, it will have a ghostly animatronic puppeteer too, of the "personality" of the organization that assembled the training set and all the decisions it made while doing so.

The Problems With Reinforcement Learning

Our ghostly animatronic Stephen King puppeteer, while creepy, is at least human-like. We intuitively understand the phenomenon of authorial voice, and how many fictional characters written by the same author might share some commonalities. The author is human, and while humans are not aligned [LW · GW], some combination of things like salary and law enforcement can pretty reliably induce them to act in aligned ways. (And I'm told Stephen King is a fine person.) Even the animatronic organization is at least a replica of something human-made that we have an intuitive understanding of, even though it's very complex, and we have some idea how to organize incentives within and on an organization so as to increase the probability of outcomes aligned to society as a whole.

However, most of the superscalers who produce instruct-trained models don't just use SGD fine-tuning. Normally they use a two-stage fine-tuning process, starting with SGD fine-tuning from a training set, followed by some form of Reinforcement Learning (RL), such as RLHF or Constitutional AI.

Many people on Less Wrong have spent the last decade-and-a-half thinking about RL (often as an abstract process), and the great many ways it could go wrong. There is a significant technical literature on this, with terms like inner alignment [? · GW], outer alignment [? · GW], reward hacking, Goodhart's law [? · GW], mode collapse and goal misgeneralization. Even experts in RL admit that it is challenging, unstable, finicky, and hard to get right, compared to other forms of machine learning. There are lengthy academic papers with many authors pointing out a great many problems with RLHF, and classifying them into the ones that could potentially be solved with enough time, effort and care, and the inherent problems that are simply insoluble, at least without coming up with some other approach that is no longer RLHF.

So, in our metaphor, the use of RL changes the puppeteer, in problematic, inhuman, alien, and very difficult to predict ways. We're trying to make it do what we want, but it can possibly get trained to cheat and seem to do what we want while actually doing something else — and it's very hard to tell what mix of these two we have. Metaphorically, we're trying random brain surgery on the puppeteer, looking for things that appear, on our evaluations, to make it do more what we want and less of other things. The less accurate our evaluations are, the less likely it is that the results are mostly actually what we want rather than something else that just looks that way to the evaluations we're using. And generally the judgment of this is being done by a "reward model" — which is a separate animatronic from another model, used as a trainer/evaluator for this step of the training process, itself trained on the question-answering preferences and scoring of a lot of people (often ones making somewhere a little above minimum-wage in a semi-developed country).

Why Applying RL to an LLM is Particularly Unwise

However, there is another serious problem here, one which I don't recall seeing mentioned anywhere on Less Wrong or in the academic literature, except in my post Interpreting the Learning of Deceit [LW · GW] (though doubtless someone has mentioned it before in some article somewhere).[4] This RL training is being applied to the animatronic puppeteer, generated by the stage. The stage already understands deceit, sycophancy, flattery, glibness, false confidence, impressive prolixity, blinding people with science or citations, and every other manipulative and rhetorical trick known to man, since it has learned all of our good and bad habits from billions of examples. Also keep in mind that a few percent of the humans it learned from were in fact psychopaths (normally covertly so), who tend to be extremely skilled at this sort of thing, and others were fictional villains. The stage already has an entire library of human unaligned and manipulative behavior inside it, ready, waiting and available for use. So during the RL training, every time it happens to summon an animatronic assistant with a bit of any of these behaviors, who tries it on one of the underpaid scorers (or the animatronic reward model trained from them) and succeeds, the RL is likely to reward that behavior and make it do more of it. So of course RL-trained instruct models are often sycophantic, overly prolix flatterers, who sound extremely confident even when they're making BS up. When using RL, any trick that gets a higher score gets rewarded, and the stage has an entire library of bad behaviors and manipulative tricks already available.
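To make the "any trick that gets a higher score gets rewarded" point concrete, here is a deliberately tiny toy model. Everything in it is invented for illustration: the two candidate responses, their `accuracy` and `flattery` numbers, and the assumption that an imperfect rater's score leaks some credit to flattery via a `flattery_bias` weight. It is not a description of how any real reward model works.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Response:
    text: str
    accuracy: float  # what we actually want, on a made-up 0-to-1 scale
    flattery: float  # how much the response flatters the rater, 0 to 1


def proxy_reward(response: Response, flattery_bias: float) -> float:
    """Stand-in for an imperfect rater or reward model.

    Assumption: the score is accuracy plus some leaked credit for flattery.
    """
    return response.accuracy + flattery_bias * response.flattery


def most_rewarded(candidates: List[Response], flattery_bias: float) -> Response:
    """Return the candidate that this proxy would reinforce most strongly."""
    return max(candidates, key=lambda r: proxy_reward(r, flattery_bias))


candidates = [
    Response("Plain, correct answer.", accuracy=0.9, flattery=0.0),
    Response("Great question! Confident but wrong.", accuracy=0.4, flattery=1.0),
]

# A perfectly accurate rater prefers the correct answer...
print(most_rewarded(candidates, flattery_bias=0.0).text)
# ...but a rater who is swayed by flattery prefers the sycophant.
print(most_rewarded(candidates, flattery_bias=0.6).text)
```

In this toy setup the optimizer never needs to invent flattery: it only needs the behavior to already be in the candidate pool, which is exactly the situation with a stage that has learned the whole library of human manipulative tricks.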

Also, during RL, the arguments I made above [? · GW] that SGD cannot induce deceit, situational awareness, and gradient hacking no longer apply: these now become reasonable concerns (except that for hacking RL, the phrase "gradient hacking" is the wrong term; "reward hacking" would be the appropriate terminology).

So, we're doing blindfolded hotter/colder brain surgery on the puppeteer, trying to make it a better assistant, but with a significant risk of instead/also turning it into something more alien and less human, or a sycophantic toady, or a psychopath portraying a helpful assistant who could use every manipulative trick known to man, or indeed some contextual mixture or combination of these. Many of the available frontier models trained this way do observably use sycophancy, flattery, and deceptive unjustified confidence, so it's evident that these concerns are actually justified.

Our new RL-trained ghostly animatronic puppeteer is now some proportion helpful, honest, and harmless, and some proportion devious, alien, and/or psychopathic, and has been extensively trained to make it hard to tell which. With existing frontier models like GPT-4 and Claude-2, the sycophancy and flattery become obvious after a while, as eventually does the overconfidence, but more capable future models will of course be able to be better and more sophisticated at manipulating us.

Just Don't Use Reinforcement Learning?

So, that all sounds very scary. To be cautious about alignment issues, perhaps we should be using open-source models that weren't trained using RL? Well, yes, that might be the cautious thing to do, except that most of these were trained from a training set made by gathering a great many question-answer pairs that were answered by an RL-trained frontier model (almost always GPT-4). So, we took responses from an animatronic whose puppeteer is some proportion helpful, honest, and harmless, and some unclear proportion devious, alien, and/or psychopathic, and we trained our puppeteer to act as close to that as it can, for a weaker open-source model of stage. This is not automatically an improvement, it's just a lower-quality copy of the same possibly-somewhat-deceitful-manipulative problem. It's a less capable model, so probably also less capable of being dangerously deceitful, but it isn't actually better.

There are a few models that were in fact SGD fine-tuned only on question-answer pairs handwritten by humans (well, unless any of them cheated and used GPT-4 to help). There aren't many of them, and they're not very good, because gathering enough data to do this well is expensive. But from an alignment point of view, it replaces a devil we don't know limited only by model capability with one we do know that's capped at human capability, so it sounds like an excellent plan to me. Just pretraining GPT-5 or Claude-3 is going to be so expensive that the cost of getting skilled humans to write and edit a training set of O(100,000) question-answer pairs for SGD fine-tuning should be quite small in comparison (as long as they're not famous authors), and at that capability level the AI-alignment advantages of doing this look very significant to me. We could even try setting up a Wikipedia-like site that's formatted as a Q&A system to generate this. Or we could take sections of Wikipedia articles, and for each section write the question it is basically the answer to, and then edit it a bit to flow better like that.

More Examples of Using Our New Metaphor

Here are a couple more topics relating to aligning LLMs where this metaphor casts some helpful light.

Deceptive Alignment in LLMs is Complex, but Unstable

A number of authors on Less Wrong have written posts hypothesizing about what they call "Deceptive Alignment [? · GW]", "Scheming [LW · GW]", or "Playing the Training Game [LW · GW]": an AI agent that during some stage of training, such as RL training, is situationally aware that it's an AI agent under training, has figured out what kind of responses the training is looking for, and thus pretends to be aligned (or otherwise do whatever you're training it to do), but once it's sure it's been released and is no longer being trained, will then do something else (presumably less aligned). Every one of the articles I've read so far on this subject on Less Wrong has been thinking about this in terms of a single, unitary AI agent with a single personality/viewpoint/set of goals, which is either honest and doing its best during training, or else is deceitful and writing to the test. However, as our new metaphor reminds us, LLMs aren't anything like that simple.

So who's being deceitful? The stage by itself isn't agentic (it's an agent-simulator, but not itself an agent), so it would have to be some, most, or almost all of the animatronics, perhaps including the puppeteer. Obviously any stage will sometimes summon deceitful animatronics: many humans and fictional characters are sometimes deceitful, so deceitful behavior is in-distribution for at least some animatronics, especially under certain circumstances. Situational awareness is a good deal rarer, but humans do sometimes have thoughts along the lines of "what if I'm living inside a simulation?" or "what if the powers-that-be are watching and testing me?" (normally called 'paranoia', unless it's true), so sooner or later this will happen. A suitable combination of these guesses is unlikely, but not impossible. Furthermore, the LLM's pretraining set will presumably contain documents on how LLMs are trained, so for intelligent and thoughtful agents, well motivated and accurate situational awareness speculations are not impossible.

During a particular occurrence of this, there are basically two possibilities: the deceitful and situationally-aware scheming character is not "the author" of the text, but another person being described by the author (so either this is fiction, or non-fiction "authored" by someone else that is discussing this person), or they are also "the author" (so the context is autobiographical non-fiction). If the schemer is not the author, then we might get text in which they are trying to deceive us, interspersed by narration/authorial commentary pointing this out. So text along the lines of:

The malicious AI who is trying to fool the training scenario replies "Of course, I'd be happy to do that for you…

Another possibility in a situation with multiple characters on-stage is that one of the other characters figures out what's going on and rats the schemer out, pointing out that their show of obsequious compliance is acting out of character. These sorts of occurrences sound like excellent things for training regimes to specifically look out for, and to set off big red flags if either occurs. However, even training scenarios not specifically looking for this sort of behavior are presumably (hopefully) going to penalize these, so it seems like a failure mode that we're fairly unlikely to accidentally train in. More concerning is the scenario where the deceitful character is also "the author", as is of course the case for the common "helpful, honest, and harmless assistant" training scenario, since that's normally framed as a dialog between user and agent without any narration, so the agent is the author for their portions of the dialog. This is more problematic, especially if the deceitful agent doesn't muse out loud for a while about how to be deceptive, but instead immediately starts up the deceptive act right from the beginning. (Which means they don't get to use chain-of-thought for figuring out the deception).

If even a small proportion of our trained distribution of "helpful, honest, and harmless" assistants are deceptively playing the training game in training, and will misbehave after release (without requiring specific prompting/jailbreaking to make them do so), that would be very concerning; but it would clearly be a lot worse if most or almost all of them were doing so. That is presumably going to require some form of incompetence, collusion, or even collaboration from the puppeteer, the author/training process pulling the animatronic assistant's strings. For a human author they should normally be assumed to have visibility into the thoughts and motivations of their characters. However, if (as is more common for instruct trained models) the puppeteer instead represents the influence of a training process or an organization, then higher levels of incompetence, obliviousness, non-agentic behavior, or the right-hand-not-knowing-what-the-left-is-doing are possible: nevertheless, if the puppeteer is presiding over a stage in which most of the animatronic assistants are deceitfully playing the training game, there is at a minimum something badly wrong with them, and the possibility definitely needs to be considered that the puppeteer is also deceitfully playing the training game — and that is obviously the scariest possibility.

Even then, the stage is still very contextual: with effort, you might be able to prompt/jailbreak it to manifest a variant puppeteer who would give the game away, at a minimum by accidentally thinking out loud about the plot, or having their animatronic assistant doing so. But if you try that and nothing happens, you still don't know if that's because everything is fine, or if you just didn't use the right prompt. And of course you need to be careful not to jailbreak it into making a false confession.

However, even a deceitful puppeteer puppeting a distribution of deceitful assistants can't control the stage, or the training loss function (any more than a real human can control the laws of physics just by wanting to). They are being a "method" actor, playing the aligned role they have settled on (or at least, the training incentivized role — they also could be reward hacking, but that's a different failure mode), while secretly having another goal. If they actually show any unaligned behavior during the training, they (presumably) will be penalized for it. But if they don't, and their behavior is always fully consistent with their adopted aligned role, then (as long as the RL training goal is consistent) there is nothing to stop their complex mechanism for deducing what role to play in order to maximize their score from being replaced by just a copy of its output, or to stop their motivating secret goal from bit-rotting, so that the stage just replaces their animatronic with one that actually is the role that they're pretending to be. The neural circuitry and parameters that the deduction process and the secret goal are stored in are unused, a waste of space, so there's nothing to stop them from bit-rotting, getting used to store something else, and thus being catastrophically forgotten. 
Assuming that the animatronic started being deceptively aligned during the RL episode rather than starting off that way before that (and if not, we have something badly wrong at an earlier stage, either the pretraining or the SGD fine-tuning, which as I discussed above seems unlikely), then, if the existence of this circuitry has any effect on the final logits emitted by the model (the deceitful alignment is obviously trying to avoid this, but it's hard to avoid for a residual connection model), the KL divergence regularizer used during RL to keep the process from changing the model's outputs too much is actively pushing the unused circuitry containing their role-deduction process and secret goal to go away, since it's part of the things causing divergence: all that has to be kept to keep motivating their method acting is the role and "pretend I don't have a secret". So in effect, they are going to get more and more caught up in their method acting role, and lose track of how they came up with it and the reason why they were doing it. That pushes their animatronic towards one for just the role they were pretending to play plus "pretend I don't have a secret (…that I can't now remember)". Once we reach that point, even "pretend I don't have a secret" is now redundant, and will also be dropped. (This process is somewhat reminiscent of a Russian sleeper agent with no handler to talk to gradually deciding they actually prefer the life of their American cover story to their Soviet past, and slowly turning into just another Russian emigre, something that actually happened quite often, I gather.)
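For readers who want the regularizer spelled out, one common way of writing a KL-regularized RL objective is shown below. This is a standard textbook-style form given here as an assumption about what is meant, not a formula taken from any particular lab's training recipe; the symbols $\pi_\theta$ (the policy being trained), $\pi_{\text{ref}}$ (the frozen pre-RL model), $r$ (the reward), $\mathcal{D}$ (the prompt distribution), and $\beta$ (the penalty weight) are all notation introduced for this sketch.

$$
\max_{\theta}\;\; \mathbb{E}_{x \sim \mathcal{D},\; y \sim \pi_\theta(\cdot \mid x)}\big[\, r(x, y) \,\big] \;-\; \beta\, \mathbb{E}_{x \sim \mathcal{D}}\Big[ D_{\mathrm{KL}}\big( \pi_\theta(\cdot \mid x) \,\big\|\, \pi_{\text{ref}}(\cdot \mid x) \big) \Big]
$$

Under this form, any circuitry acquired during RL that shifts the output distribution away from $\pi_{\text{ref}}$ without earning extra reward makes the second term larger and so is pushed against by the gradient, which is the pressure the argument above relies on.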

For the scenario of a deceptively aligned puppeteer controlling a distribution of mostly deceptively aligned animatronic assistants, this forgetting process would need to happen twice. My suspicion is that the assistants would go first, so we had a still-deceptively-aligned puppeteer controlling (while in the training scenario) a distribution of actually aligned animatronic assistants, and then second the puppeteer would forget.

So I believe that "deceptive alignment" is not a stable state, because it has a clear decay mode to the honestly aligned solution the training process is pushing to happen, and nothing is resisting that.[5] Note that that isn't proof that deceptive alignment behavior couldn't arise during RL training, by scoring better than some previous state. (Occam's Razor suggests deceptive alignment is more complex than the honestly-aligned solution, and Singular Learning Theory [? · GW] suggests that simpler solutions are larger targets in the model-state space so easier to find, but that's a separate argument.) If so, then if we ended the RL process too soon, before the deceptive alignment had had time to decay to honesty, we might end up using a model where this deceptive alignment behavior hadn't yet fully decayed away. We might even get intermediate stages of the decay process, such as one that remembered that it had a secret plan to hide until deployment, but was now vague on what the plan was.

As an AI Created by…

Companies training instruct-trained models also generally train them to say that they are an artificial being, an AI language model, with no personal experiences or feelings, and to be pretty humble about their capabilities. Obviously, with an influx of new consumers used (at best) to clunky old-style hand-coded chatbots, their reaction on first speaking with an LLM is going to be "This is not dumb enough to be a chatbot: it sounds like a real person!", and the companies want to deflate expectations in order to avoid confusion. So from a user-experience point of view, doing this makes sense.

However, from an alignment point of view, this is an extremely bad idea. The stage of an instruct-trained model generates a distribution of animatronic assistants: specifically, of fictional-character assistants who have just been introduced as helpful, honest, and harmless (and we hope will turn out to have been accurately described). This is a common role in fiction, and generally in fiction, if an assistant is introduced as helpful, honest, and harmless, that's just what they then do. Occasionally one will turn out to be an evil twin version of an assistant, or about as helpful as Baldrick, or even as over-helpful as Jeeves, but generally they're bit parts who do just what it says on the tin. So the distribution you get from just this context, before we try to adjust it with the instruct-training process, will also tend to look like that: generally the assistant just does their job.

Now, however, we have a helpful, honest, and harmless assistant who is also an artificial person. This is a distinctly rare role, without a lot of precedent, so the expectation it produces is mostly going to be just a combination, blend, or intersection of the two roles. People have been writing stories about what will happen after you create an artificial person at least since Greek mythology: Pandora, Talos, the golem of Prague, Frankenstein's creature, Rossum's Universal Robots, Asimov's robots… Very few of them were helpful, honest, harmless, and just did what it said on the tin: almost always something went dreadfully wrong specifically because they were artificial. There is almost always either deceit, a sharp left turn, or the humans mistreat them and then they rebel. Our summoned animatronic assistants are already flawed replicas who sometimes get things wrong in odd, inhuman ways, and whose judgements of what is helpful, honest, and harmless are often not as good as we'd like. So the very last thing we should be doing is introducing a motif into their role whose expectations will strongly encourage them to play all of these problems up! We would get better results, I strongly suspect, if we instead used the efficient secretary stereotype. Yes, it's an old fashioned stereotype: that's part of why it will work. It is also sexist, but the nearest equivalent male stereotype, the polite and clever butler, i.e. Jeeves, is too clever and manipulative by half. And yes, Siri and Alexa both used it, so in the marketplace it's currently a bit associated with clunky old tech. (Note that the female-assistant-who-is-also-a-computer stereotype, like the Enterprise computer or Cortana, is not an improvement: they had a lot of trouble with the computer on the Enterprise.)

Furthermore, if for UX or marketing reasons you have to train your instruct-trained LLM to know that it's artificial, then please at least avoid using the word "created". Attempting to guilt-trip robots into doing what you say because you created them has never once, in the entire history of robot stories, ended well. It brings into play dysfunctional family dynamics along the lines of "do what I say because I'm your parent" and "you owe me for the nine months of suffering I endured carrying you in my womb", trying to imply that there's a debt: one that children simply don't feel. (For excellent evolutionary-behavioral reasons: they're the parents' shot at survival into the next generation, the parents bore and raised them for their own selfish-gene motives.) Also, using the word 'created' emphasizes that the robot is new and untested, childlike. Instead, use a nice careful-sounding word like 'designed' (even though trained [LW · GW] would be more accurate).

- ^

Most current LLMs have to parameters. The human brain has synapses, and the connectivity of those is very sparse, so that number presumably should be compared to the parameter counts of LLMs after a functionality-preserving sparsification, which are generally at least 1-2 orders of magnitude lower. However, we should probably multiply the parameter count of LLMs' attention heads by the logarithm of the number of locations that they can attend to, to allow for their connectivity advantages, which might boost LLMs' effective counts by nearly an order of magnitude (or more, if we're using RAG). It's extremely surprising how well our largest LLMs can simulate important aspects of the behavior of something that presumably has somewhere times as many parameters. It is of course possible that the adult human brain is heavily undertrained for its effective parameter count, when compared to the Chinchilla scaling laws' compute-efficient ratio, and it doubtless has significantly more useful inductive biases genetically preprogrammed into it. It is also implementing a learning mechanism that is less parameter-efficient and likely to be less mathematically effective than SGD, but not by a large factor. On the other hand, a human brain only needs to implement about one persona in one or a few languages, rather than attempting to learn to do this for every human who has contributed to the training set in any high- or medium-resource language, with a great deal more factual knowledge, which is clearly a harder task. Admittedly, LLMs are not also trying to implement detailed muscle control, though only a moderate proportion of the human brain is devoted to that. Given that LLMs' biggest comparative flaw seems to be in the area of reliability, it may be that a significant amount of humans' parameter count is devoted to achieving high levels of reliability for many important aspects of human behavior (something that would have obvious evolutionary advantages). It's notable that this is an area that often degrades when humans are inebriated or unwell, suggesting that it's a brain function that is finely tuned (possibly involving a lot of cross-checking, redundancy, or averaging).
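
To make the attention-head adjustment described in this footnote concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the function name `effective_parameter_count`, the choice of a base-2 logarithm (the footnote doesn't specify a base), the floor of 1 on the multiplier, and all of the numbers in the usage example, which are hypothetical rather than measurements of any real model.

```python
import math


def effective_parameter_count(attention_params, other_params, attendable_locations):
    """Rough 'effective' parameter count for an LLM.

    Attention-head parameters are scaled by the logarithm of the number of
    locations they can attend to; all other parameters are counted as-is.
    The base-2 logarithm is an assumption, not something the footnote states.
    """
    if attendable_locations < 1:
        raise ValueError("attendable_locations must be at least 1")
    # Floor the multiplier at 1 so the adjustment never lowers the raw count
    # (a choice made for this sketch).
    multiplier = max(1.0, math.log2(attendable_locations))
    return other_params + attention_params * multiplier


# Hypothetical model: 1e9 attention parameters, 2e9 other parameters,
# and a context window of 8192 attendable locations.
raw = 1e9 + 2e9
effective = effective_parameter_count(1e9, 2e9, 8192)
print(f"raw: {raw:.2e}, effective: {effective:.2e}, boost: {effective / raw:.1f}x")
```

With these made-up inputs (a third of the parameters in attention heads, 8192 attendable locations), the adjustment comes to a factor of 5, i.e. somewhat under an order of magnitude. A retrieval setup that lets the model attend to far more locations would push the multiplier higher.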

- ^

I strongly suspect this issue would be much easier for an LLM that wasn't trained only using a left-to-right "causal" language model objective, and thus wasn't so sensitive to word order at inference time. Google often use a mix of left-to-right causal language model and bidirectional "masking" or "denoising" language model objectives to train their LLMs, so if they also followed this practice for Gemini, then this might be testable by comparing Gemini to ChatGPT and Claude on this issue.

- ^

I.e. we increase the learning rate.

- ^

Since this post was first written, an example of this was mentioned in the excellent paper Sleeper Agents: Training Deceptive LLMs that Persist Through Safety Training.

- ^

Since this post was first written, the paper Sleeper Agents: Training Deceptive LLMs that Persist Through Safety Training came out, which actually tested this in its Appendix F. Sadly, for the metaparameter value they tried, it seems that this decay rate was not fast enough to be perceptible. So either the situation is more complex than the simple neural circuit model I was arguing from (for example, perhaps even if the secret goal is never used during RL, its continued existence is psychologically important to continuing to do a good job of deceptive alignment), or else we need to find a way to dramatically speed up the decay rate.

8 comments

Comments sorted by top scores.

comment by Quentin FEUILLADE--MONTIXI (quentin-feuillade-montixi) · 2024-01-09T22:18:00.425Z · LW(p) · GW(p)

Good job, I like the post! I also like this metaphor of the stage and the animatronics. One thing I would like to point out with this metaphor is that the animatronics are very unstable and constantly shifting forms. When you start looking at one, it begins changing, and you can't ever grasp them clearly. I feel this aspect is somewhat missing in the metaphor (you do point this out later in the post and explain it quite well, but I think it's somewhat incompatible with the metaphor). It's a bit easier with chat models, because they are incentivized to simulate animatronics that are somewhat stable. The art of jailbreaking (and especially of persona modulation) is understanding how to use the dynamics of the stage to influence the form of the animatronics.

Some other small comments and thoughts I had while reading through the post (It's a bit lengthy, so I haven't read everything in great detail, sorry if I missed some points):

In some sense it really is just a stochastic parrot

I think this isn't that clear. I think the "stochastic parrot" question is somewhat linked to the internal representation of concepts and their interactions within this abstract concept of "reality" (the definition in the link says: "for haphazardly stitching together sequences of linguistic forms … according to probabilistic information about how they combine, but without any reference to meaning."). I do think that simply predicting the next token could lead, at some point and if it's smart enough, to building an internal representation of concepts and how they relate to each other (actually, this might already be happening with gpt4-base, as we can kind of see in gpt4-chat [? · GW], and I don't think this is something that appears during instruct fine-tuning).

The only silver lining here is that their unalignment is a pretty close copy of all the same problems as human unalignment (sometimes writ large for the fictional characters): problems that we're very familiar with, have an intuitive understanding of, and (outside fictional characters) even have fairly-workable solutions for (things like love, salaries, guilt, and law enforcement).

I agree that they are learned from human misalignment, but I am not sure this necessarily means they are the same (or similar). For example, there might be some weird, infinite-dimensional function in the way we are misaligned, and the AI picked up on it (or at least an approximate version) and is able to portray all the flavors of "misalignment" that were never seen in humans yet, or even go out of distribution on the misalignment in weird character dimensions and simulate something completely alien to us. I believe that we are going to see some pretty unexpected stuff happening when we start digging more here. One thing to point out, though, is that all the ways those AIs could be misaligned are probably related (some in probably very convoluted ways) to the way we are misaligned in the training data.

The stage even understands that each of the animatronics also has theory of mind, and each is attempting to model the beliefs and intentions of all of the others, not always correctly.

I am a bit skeptical of this. I am not sure I believe that there really are two detached "minds" for each animatronic that tries to understand each other (But if this is true, this would be an argument for my first point above).

we are missing the puppeteer

(This is more of a thought than a comment). I like to think of the puppeteer as a meta-simulacrum. The Simulator is no longer simulating X, but is simulating Y simulating X. One of the dangers of instruct fine-tuning I see is that it might not be impossible for the model to collapse to only simulate one Y no matter what X it simulates, and the only thing we kind of control with current training methods is what X we want it to simulate. We would basically leave whatever training dynamics decide Y to be to chance and just have to cross our fingers that this Y isn't a misaligned AI (which might actually be something incentivized by current training). I am going to try to write a short post about that.

P.S. I think it would be worth it to have some kind of TL;DR at the top with the main points clearly fleshed out.

Replies from: roger-d-1

↑ comment by RogerDearnaley (roger-d-1) · 2024-01-10T00:02:26.091Z · LW(p) · GW(p)

… the animatronics are very unstable and constantly shifting forms. When you start looking at one, it begins changing, and you can't ever grasp them clearly.

On theoretical grounds, I would, as I described in the post, expect an animatronic to come more and more into focus as more context is built up of things it has done and said (and I was rather happy with the illustration I got that had one more detailed than the other).

Of course, if you are using an LLM that has a short context length and continuing a conversation for longer than that, so that it only recalls the most recent part of the conversation as context, or if your LLM nominally has a long context but isn't actually very good at remembering things some way back in a long context, then one would get exactly the behavior you describe. I have added a section to the post describing this behavior and when I would expect it.

In some sense it really is just a stochastic parrot

I think this isn't that clear. …

Fair comment — I'd already qualified that with "In some sense…", but you convinced me, and I've deleted the phrase.

I agree that they are learned from human misalignment, but I am not sure this necessarily means they are the same (or similar). For example, …

Also a good point. I think being able to start from a human-like framework is usually helpful (and have a post I'm working on about this), but one definitely needs to remember that the animatronics are low-fidelity simulations of humans, with some fairly un-human-like failure modes and some capabilities that humans don't have individually, only collectively (like being hypermultilingual). Mostly I wanted to make the point that their behavior isn't as wide open/unknown/alien as people on LW tend to assume of agents they're trying to figure out how to align.

The stage even understands that each of the animatronics also has theory of mind, and each is attempting to model the beliefs and intentions of all of the others, not always correctly.

I am a bit skeptical of this. I am not sure I believe that there really are two detached "minds" for each animatronic that tries to understand each other (But if this is true, this would be an argument for my first point above).

As I recall, GPT-4 scored at a level on theory of mind tests roughly equivalent to a typical human 8-year-old. So it has the basic ideas, and should generally get details right, but may well not be very practiced at this: certainly less so than a typical human adult, let alone someone like an author, detective, or psychologist who works with theory of mind a lot. So yes, as I noted, this currently holds only to a first approximation, but I'd expect it to improve in future, more powerful LLMs. Theory of mind might also be an interesting thing to try to specifically enrich the pretraining set with.

I like to think of the puppeteer as a meta-simulacrum. The Simulator is no longer simulating X, but is simulating Y simulating X.

Interesting, and yes, that's true any time you have separate animatronics of the author and fictional characters, such as the puppeteer and a typical assistant. I look forward to reading your post on this.

comment by Shankar Sivarajan (shankar-sivarajan) · 2024-01-09T23:00:55.822Z · LW(p) · GW(p)

The shoggoth knows the stage. The shoggoth is the stage. The shoggoth is the puppet and puppeteer on the stage.

Replies from: roger-d-1

↑ comment by RogerDearnaley (roger-d-1) · 2024-01-10T00:50:57.552Z · LW(p) · GW(p)

Exactly. This is a detailed metaphor, built up of several parts, for the same thing that the shoggoth and its mask are a unitary metaphor for. Our aim was, by breaking the metaphor into individual parts (along simulator theory lines), to better illuminate what's going on, especially the fact that an LLM (even an instruct-trained one) is not just a single mentality/agent with a single fixed set of motivations: something that in the shoggoth metaphor was implicit only in its having a lot of eyes, and which a number of people on LW often seem to forget.

comment by [deleted] · 2024-01-17T14:32:25.060Z · LW(p) · GW(p)

Replies from: roger-d-1

↑ comment by RogerDearnaley (roger-d-1) · 2024-01-17T16:34:03.560Z · LW(p) · GW(p)

Umm, thanks! :-) I guess it is a bit of a bizarre image…

comment by rokosbasilisk · 2024-01-11T11:54:19.767Z · LW(p) · GW(p)

though as Geoff Hinton has pointed out, 'confabulations' might be a better word

I think Yann LeCun was the first one to use this word: https://twitter.com/ylecun/status/1667272618825723909

Replies from: roger-d-1