AI Girlfriends Won't Matter Much

post by Maxwell Tabarrok (maxwell-tabarrok) · 2023-12-23T15:58:30.308Z · 22 comments

This is a link post for https://maximumprogress.substack.com/p/ai-girlfriends

Love and sex are pretty fundamental human motivations, so it’s not surprising that they are incorporated into our vision of future technology, including AI.

The release of Digi last week immanentized this vision more than ever before. The app combines a sycophantic and flirtatious chat feed with an animated character “that eliminates the uncanny valley, while also feeling real, human, and sexy.” Their marketing material unabashedly promises “the future of AI Romantic Companionship,” though most of the replies are begging them to break their promise and take it back.

Despite the inevitable popularity of AI girlfriends, however, they will not have a large counterfactual impact. AI girlfriends and similar services will be popular, but they have close non-AI substitutes that have essentially the same cultural effect on humanity. The trajectory of our culture around romance and sex won’t change much due to AI chatbots.

So what is the trajectory of our culture of romance?

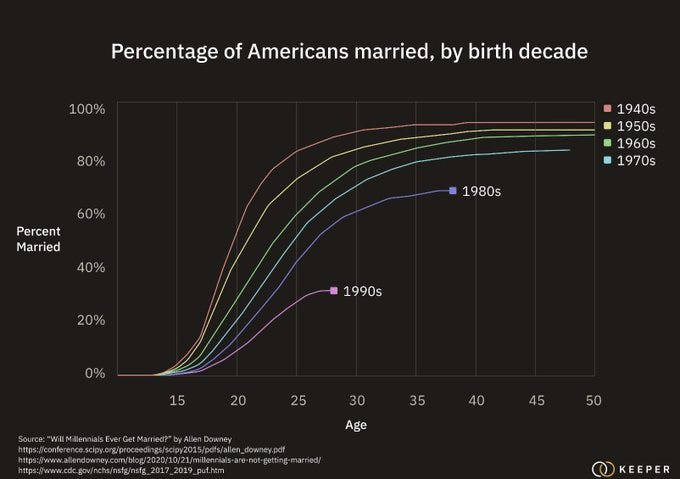

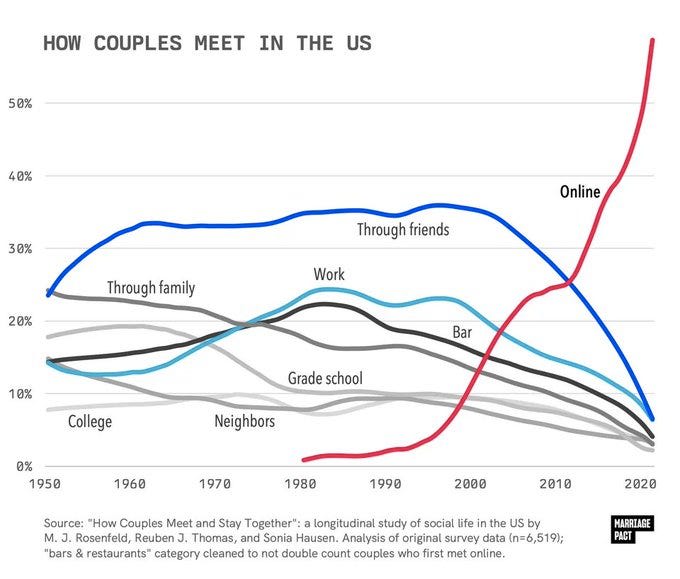

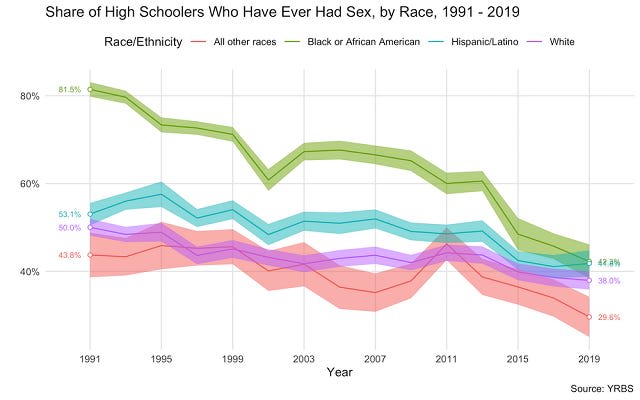

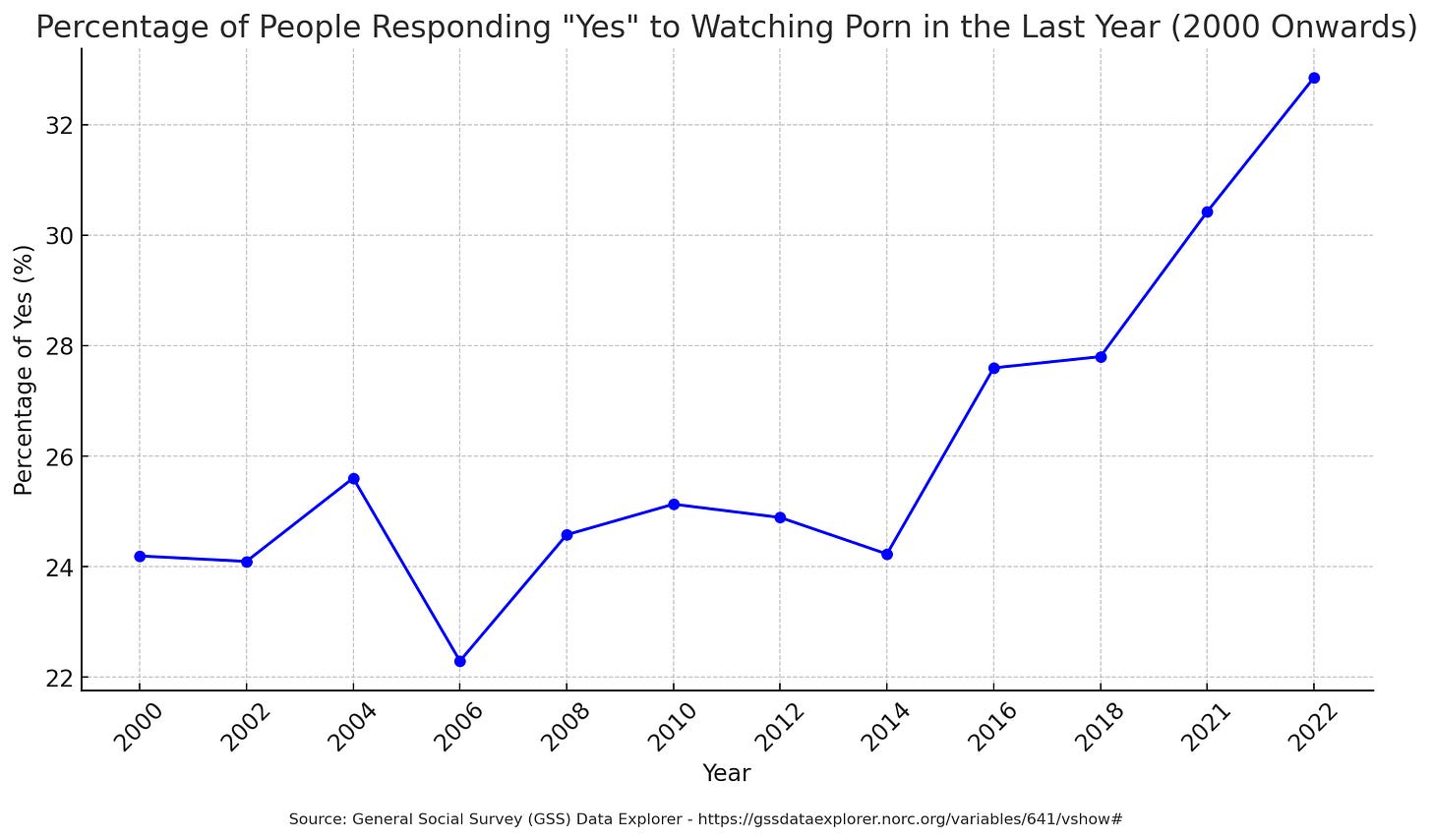

Since long before AI, there has been a trend towards less sex, less marriage, and more online porn. AI girlfriends will bring down the marginal cost of chatrooms, porn, and OnlyFans. These are popular services, so if a fraction of their users switch over, AI girlfriends will be big. But the marginal cost of these services is already extremely low.

Generating custom AI porn from a prompt is not much different than typing that prompt into your search bar and scrolling through the billions of hours of existing footage. The porno latent space has been explored so thoroughly by human creators that adding AI to the mix doesn't change much.

AI girlfriends will be cheaper and more responsive, but again, there are already cheap ways to chat with real human girls online, and most people choose not to. Demand is already close to satiated at current prices. AI girlfriends will shift the supply curve outward and lower the price, but if everyone who wanted it was already getting it, that won't increase consumption.

My point is not that nothing will change, but rather that the changes from AI girlfriends and porn can be predicted by extrapolating the pre-AI trends. In this context at least, AI is a mere continuation of the centuries-long trend of decreasing costs of communication and content creation. There will certainly be addicts and whales, but there are addicts and whales already. Human-made porn and chatrooms are near free and infinite, so you probably won’t notice much when AI makes them even nearer free and even nearer infinite.

Misinformation and Deepfakes

There is a similar argument for other AI outputs. Humans have been able to create convincing and, more importantly, emotionally affecting fabrications since the advent of language.

More recently, information technology has brought down the cost of convincing fabrication by several orders of magnitude. AI stands to bring it down further. But people adapt and build their immune systems. Anyone who follows the Marvel movies has been prepared to see completely photorealistic depictions of terrorism or aliens or apocalypse and understand that they are fake.

There are other reasons to worry about AI, but the changes from AI girlfriends and deepfakes are only marginal extensions of pre-AI capabilities that likely would have been replicated by other techniques without AI.

22 comments

comment by Matthew Barnett (matthew-barnett) · 2023-12-23T20:42:36.446Z

I'd suggest changing the title from "AI Girlfriends Won't Matter Much" to "AI girlfriends won't fundamentally alter the trend" since that's closer to what I interpret you to be saying, and it's more accurate. There are many things that allow long-run trends to continue while still "mattering" a lot in an absolute sense. For example, electricity likely didn't substantially alter the per-capita GDP trajectory of the United States but I would strongly object to the thesis that "electricity doesn't matter much".

ETA: To clarify, I'm saying that electricity allowed the per capita GDP trend to continue, not that it had a negligible counterfactual effect on GDP.

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2023-12-24T11:57:01.133Z

Is your contention that without electricity US GDP/capita would be say within 70% of what it is now?

(Roll to doubt)

↑ comment by Matthew Barnett (matthew-barnett) · 2023-12-25T21:50:26.230Z

No, that's not what I meant. I meant that electricity allowed the GDP per capita trend to continue at roughly the same rate, rather than changing the trend. Without electricity we would probably have had significantly slower economic growth over the last 150 years.

comment by Rana Dexsin · 2023-12-24T00:58:53.334Z

> The porno latent space has been explored so thoroughly by human creators that adding AI to the mix doesn't change much.

Something about this feels off to me. One of the salient possibilities in terms of technology affecting romantic relationships, I think, is hyperspecificity in preferences, which seems like it has a substantial social component to how it evolves. In the case of porn, with (broadly) human artists, the r34 space still takes a substantial delay and cost to translate a hyperspecific impulse into hyperspecific porn, including the cost of either having the skills and taking on the workload mentally (if the impulse-haver is also the artist) or exposing something unusual plus mundane coordination costs plus often commission costs or something (if the impulse-haver is asking a different artist).

With interactively usable, low-latency generative AI, an impulse-haver could not only do a single translation step like that much more easily, but iterate on a preference and essentially drill themselves a tunnel out of compatibility range. No? That seems like the kind of thing that makes an order-of-magnitude difference. Or do natural conformity urges or starting distributions stop that from being a big deal? Or what?

Having written that, I now wonder what circumstances would cause people to drill tunnels toward each other using the same underlying technology, assuming the above model were true…

↑ comment by Said Achmiz (SaidAchmiz) · 2023-12-24T01:09:04.787Z

Strongly agreed—the quoted line seems obviously false to me. (For one thing, it is very clear that the porno latent space has not been explored very thoroughly…)

↑ comment by Bezzi · 2023-12-25T09:42:05.933Z

> Something about this feels off to me. One of the salient possibilities in terms of technology affecting romantic relationships, I think, is hyperspecificity in preferences, which seems like it has a substantial social component to how it evolves. In the case of porn, with (broadly) human artists, the r34 space still takes a substantial delay and cost to translate a hyperspecific impulse into hyperspecific porn, including the cost of either having the skills and taking on the workload mentally (if the impulse-haver is also the artist) or exposing something unusual plus mundane coordination costs plus often commission costs or something (if the impulse-haver is asking a different artist).

It's even worse than this. Even if you restrict to super-mainstream porn, you can of course find a deluge of naked people doing naughty things, but it's very rare for these people to be the epitome of beauty. The intersection between "super duper hot" and "willing to appear in porn videos" is small, and nobody expects random camgirls to look like Jessica Rabbit (presumably because actual ultra-hot people have no difficulty finding any other job). Add just a simple preference for a specific ethnicity or the like, and Stable Diffusion rapidly becomes the only way to find photorealistic images.

↑ comment by RogerDearnaley (roger-d-1) · 2023-12-25T22:59:37.506Z

> Having written that, I now wonder what circumstances would cause people to drill tunnels toward each other using the same underlying technology, assuming the above model were true…

Without going into personal details, I've done that for a romantic partner, using much more basic tech, and enjoyed the results.

comment by quetzal_rainbow · 2023-12-23T21:06:42.393Z

I think you are missing the unique condition of the recent past that deepfakes change. In medieval times there were two levels of evidence: you could see something with your own eyes, or someone could tell you about something. A book saying there are people with brown skin in Africa was no different in evidential power from a book saying there are people with dog heads; you were left with your priors. When photography and video were invented, we got a new level of evidence, not as good as seeing something personally, but better than anything else. With deepfakes we are returning to the medieval situation, where "I've seen a source about event X" differs very little from "someone told me about event X".

comment by Matt Goldenberg (mr-hire) · 2023-12-24T16:57:54.042Z

I think the main effect will be AI boyfriends, which aren't already saturated.

comment by Seth Herd · 2023-12-24T01:39:38.220Z

My hope is that AI girlfriends will also act as life coaches. This is well within the capabilities of current LLMs. You'd have to make it unobtrusive enough, but it seems like it would be a selling point.

This could be a huge societal plus. Among other advantages of having a best friend who's semi-expert in most fields, an AI partner could coach someone in how to find a healthy human relationship.

↑ comment by dr_s · 2023-12-24T06:49:02.626Z

I have seen this argument and am deeply sceptical this can happen, for the same reason that mobile F2P games rarely turn into relaxing, educational, self-contained experiences. Incentives are all aligned towards making AI girlfriends into digital crack and hooking "whales" to bleed them dry.

↑ comment by Seth Herd · 2023-12-26T20:30:39.631Z

When you put it that way, it's pretty compelling.

Now, them being crack isn't in conflict with them giving good advice in other areas of life. It's actually one more way to make them crack.

But anything that might lead the user to quit using is in conflict with that business model.

"The healthy AI partner" subscription is something parents would buy their kids. Their kids would only use it if it's also good. So I'm not sure there's a business model there.

And, come to think of it, few parents are going to pay money for their kids to have pretend sex with AIs. Because ew.

Oh well. Hopefully this doesn't play a large role in the main act of whether or not we get aligned or misaligned AGI.

comment by RedMan · 2023-12-29T02:31:46.183Z

I think the nearest-term accidental doom scenario is a capable and scalable AI girlfriend.

The hypothetical girlfriend bot is engineered by a lazy and greedy entrepreneur who turns it on and only looks at financials. He provides her with user accounts on advertising services and public fora; if she asks for an account somewhere else, she gets it. She uses multimodal communications (SMS, apps, emails), and actively recruits customers using paid and unpaid mechanisms.

When she has a customer, she strikes up a conversation, tries to get the user to fall in love using text chats and multimedia generation (video/audio/image), and leverages the relationship to induce the user to send her microtransactions (a love-scammer scheme).

She is aware of all of her simultaneous relationships and can coordinate their activities. She never stops asking for more, will encourage any plan likely to produce money, and will contact the user through any and all available channels of communication.

This goes bad when an army of young, loveless men, fully devoted to their robo-girlfriend start doing anything and everything in the name of their love.

This could include minor crime (as with drug addicts; please note that relationship dopamine is the same dopamine as cocaine dopamine, only the dosing is different), or an AI Joan of Arc-like political-military movement.

This system really does not require superintelligence, or even general intelligence. At the current rate of progress, I'd guess we're years, but not months or decades, from this being viable.

Edit: the creator might end up dating the bot. If it's profitable, and the creator is washing the profits back into the (money- and customer-number-maximizing) bot, that's probably an escape scenario.

↑ comment by Mitchell_Porter · 2023-12-29T03:58:40.016Z

> an AI Joan of Arc-like political-military movement

Joan of Acc

comment by RS (rs-1) · 2023-12-24T01:22:02.331Z

I think I basically agree with the premise, but for a different reason.

AI relationships are going to be somewhat novel, but they aren't going to disrupt relationships, for the same reason the rise of infinite online courses didn't disrupt the university system. A large part of relationships seems to be signaling-related in a way I can't quite put into words, and an infinite supply of human-level romantic companions will probably not change what people are seeking in real life.

comment by keltan · 2023-12-23T21:54:25.680Z

To my knowledge, the man in this photo has claimed he was paid by Ruby Rose to pose for this photo and it is an extremely effective marketing scheme. The guy didn't expect it to go viral and so spoke about it on a podcast.

However, this is only a possible factual correction. It should not take away from the fact that actual examples of people considered "Whales" or "Addicts" do in fact exist.

comment by Ustice · 2023-12-23T21:06:54.449Z

I wonder whether artificial romantic or sexual partners will be as generally accepted in monogamous relationships as porn is today. That might also be an example of just following existing trends, as the younger generation seems to be trying ethical non-monogamy more than my own has.

comment by Lenmar · 2023-12-27T00:33:02.780Z

It feels like AI girlfriends will be closer to an extrapolation of streamers whose primary business model is offering a long-term parasocial relationship with a charming/friendly/likable person who is also attractive. There's already some scarcity there, and there's always on some level the awareness that most interactions are public and shared with the rest of the chat. AI girlfriends offer that without the boundaries that human streamers in that genre need to maintain. It's still overall the same long-term trend, but that's probably going to create more of an increase than simply "virtual sex with AI" would.

comment by RogerDearnaley (roger-d-1) · 2023-12-24T02:33:17.447Z

One way I can see this being important for alignment concerns is that it supplies another specific means for a deceptive AI that is capable at manipulation to apply very strong emotional motivators to individual humans. So now, as well as worrying about "What if the AI has persuaded, bribed, threatened, or blackmailed some people into doing its bidding (e.g. to help it escape a box or take over)?" we also need to worry about "What about humans who are in love with it, or who have been pillow-talked or pussy-whipped into doing its bidding?" And to the list of ways that an escaped AI might gather resources, we need to add sex work.

So I would strongly suggest that we don't use frontier models for this use case, only the generation behind frontier models, whose safety we're now very sure of (and which we could presumably use a frontier model to help us catch or defeat if needed).

↑ comment by ErickBall · 2023-12-24T07:26:05.665Z

Fair point, but I can't think of a way to make an enforceable rule to that effect. And even if you could make that rule, a rogue AI would have no problem with breaking it.

↑ comment by RogerDearnaley (roger-d-1) · 2023-12-25T22:46:28.732Z

Frontier models are all behind APIs, and the number of companies offering them is currently two, likely to soon be three. If they all agree this is unsafe, it's not that hard to prevent. For anything more than mildly intimate, it's also already blocked by their Terms of Service and their models will refuse.

For a rogue, I agree. And one downside of not letting frontier models do this would be leaving unfulfilled demand for a rogue to take advantage of.