Spiracular's Shortform Feed

post by Spiracular · 2019-06-13T20:36:26.603Z · LW · GW · 48 comments

I was just thinking about how to work myself up to posting full-posts, and this seemed like exactly the right level of difficulty and exposure for what I'm currently comfortable with. I'm glad that a norm for this exists!

This is mostly going to consist of old notebook-extracts regarding various ideas I've chewed on over the years.

48 comments

Comments sorted by top scores.

comment by Spiracular · 2020-06-04T18:43:42.702Z · LW(p) · GW(p)

A Parable on Visual Impact

A long time ago, you could get the biggest positive visual impact for your money by generating art, and if you wanted awe you could fund gardens and cathedrals. And lo, these areas were well-funded!

The printing press arrived. Now, you could get massive numbers of pamphlets and woodcuts for a fraction of the price of paintings. And lo, these areas were well-funded!

Then TV appeared. Now, if you wanted the greatest awe and the biggest positive visual impact for your money, you crafted something suitable for the new medium. And in that time, there were massive made-for-TV propaganda campaigns, and money poured into developing spacecraft, and we got our first awe-inspiring images of Earth from the moon.

Some even claim the Soviet Union was defeated by the view through a television screen of a better life in America.

And then we developed CGI and 3D MMORPGs. And lo, the space program was defunded, as people built entire cities, entire planets in CGI for a tiny fraction of the cost!

Replies from: mr-hire, romeostevensit↑ comment by Matt Goldenberg (mr-hire) · 2020-06-05T18:09:25.224Z · LW(p) · GW(p)

While I don't agree with this narrative, I really enjoyed the story, thanks for writing it!

Replies from: Spiracular↑ comment by Spiracular · 2020-06-08T22:22:03.518Z · LW(p) · GW(p)

At minimum it's oversimplified. That's why I called it a parable.

I appreciate the nuance in your comment even as it is. But I'm curious about the facts/narratives you have that disagree with it?

(My personal strongest murphyjitsu was that "space program defunding" was more complicated than this. If you know more about that than I do, I'd be curious to hear about it.)

Replies from: mr-hire↑ comment by Matt Goldenberg (mr-hire) · 2020-06-08T22:45:41.004Z · LW(p) · GW(p)

Your murphyjitsu was correct (what were you imagining was failing here... our communication?)

I like the narrative where video games caused stagnation, but if indeed there is stagnation, I don't believe they were in the top 10 factors :).

Edit: Big maybe at top 10, certainly not top 5.

↑ comment by romeostevensit · 2020-06-05T19:40:24.778Z · LW(p) · GW(p)

Video games and porn don't have any essential quality to them, though we might like to come up with stories about them and their impact on society/evo-psycho implications; they're literally just the lowest $:hedons trade currently available.

comment by Spiracular · 2019-06-13T20:44:07.308Z · LW(p) · GW(p)

Thinking about value-drift:

It seems correct to me to term the earlier set of values a more "naive set.*" To keep a semi-consistent emotional tone across classes, I'll call the values you get later the "jaded set" (although I think jadedness is only one of several possible later-life attractor states, which I'll go over later).

I believe it's unreasonable to assume that any person's set of naive values are, or were, perfect values. But it's worth noting that there are several useful properties that you can generally associate with them, which I'll list below.

My internal model of how value drift has worked within myself looks a lot more like "updating payoff matrices" and "re-binning things." Its direction feels determined not purely by drift, but by some weird mix of deliberate steering, getting dragged by currents, random walk, and more accurately mapping the surrounding environment.

That said, here are some generalizations...

Naive:

- Often oversimplified

- This is useful in generating ideals, but bad for murphyjitsu

- Some zones are sparsely-populated

- A single strong value (positive or negative) anywhere near those zones will tend to color the assumed value of a large nearby area. Sometimes it does this incorrectly.

- Generally stronger emotional affects (utility assignments), and larger step sizes

- Fast, large-step, more likely to see erratic changes

- Closer to black-and-white thinking; some zones have intense positive or negative associations, and if you perform nearest neighbor on sparse data, the cutoff can be steep

Jaded:

- Usually, your direct experience of reality has been fed more data and is more useful.

- Things might shift so the experientially-determined system plays a larger role determining what actions you take relative to theory

- Experiential ~ Sys1, Theoretical ~ Sys2, but the match isn't perfect. The former handles the bulk of the data right as it comes in, the latter sees heavily-filtered useful stuff and makes cleaner models.**

- Speaking generally... while a select set of things may be represented in a more complete and complex way (ex: aspects of jobs, some matters of practical and realistic politics, most of the things we call "wisdom"), other axes of value/development/learning may have gotten stuck.

- Here are names for some of those sticky states: jaded, bitter, stuck, and conformist. See below for more detail.

- Related: Freedomspotting

** What are some problems with each? Theoretical can be bad at speed and time-discounting, while Experiential is bad at noticing the possibility of dangerous unknown-unknowns?

Jaded modes in more detail

Here are several standard archetypical jaded/stuck error-modes. I tend to think about this in terms of neural nets and markov chains, so I also put down the difficult-learning-terrain structures that I tend to associate with them.

Jaded: Flat low-affect zones offer no discernible learning slope. If you have lost the ability to assign different values to different states, that spells Game Over for learning along that axis.

Bitter: Overlearned/overgeneralized negatives are assigned to what would otherwise have been inferred to be a positive action by naive theory. Thenceforth, you are unable to collect data from that zone due to the strong expectations of intense negative-utility. Keep in mind that this is not always wrong. But when it is wrong, this can be massively frustrating.

(Related: The problems with reasoning about counterfactual actions as an embedded agent with a self-model [LW · GW])

Stuck: Occupying an eccentric zone on the wrong side of an uncanny valley. One common example is people who specialized into a dead field, and now refuse to switch.

Conformist: Situated in some strongly self-reinforcing zone, often at the peak of some locally-maximal hill. If the hill has a steep moat-like drop-off, this is a common subtype of Stuck. In some sense, hill-climbing is just correct neural net behavior; this is only a problem because you might be missing a better global maximum, or might have different endorsed values than your felt ones.

An added element that can make this particularly problematic is that if you stay on a steep, tiny hill for long enough, the gains from hill-climbing can set up a positive incentive for learning small step size. If you believe that different hills have radically different maximums, and you aspire for global maximization, then this is something you very strongly want to avoid.
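To make the small-step-size worry concrete, here is a minimal sketch of greedy hill-climbing on a made-up one-dimensional landscape. Everything in it (the `landscape` function, the two hills, the step sizes) is a hypothetical illustration rather than a model of anyone's actual values: a climber sitting on the small hill with a small step never leaves it, while a larger step lets it cross the moat to the taller hill.

```python
def landscape(x):
    """Hypothetical value landscape: a small hill at x=1 and a taller hill at x=4."""
    return max(1.0 - (x - 1.0) ** 2, 3.0 - (x - 4.0) ** 2)


def hill_climb(x, step, iterations=200):
    """Greedy hill-climbing: move by `step` only if a neighbor scores higher."""
    for _ in range(iterations):
        best = max((x - step, x, x + step), key=landscape)
        if best == x:
            break  # no neighbor is better; we are stuck on this hill
        x = best
    return x


stuck = hill_climb(1.0, step=0.1)    # stays on the small local hill
escaped = hill_climb(1.0, step=3.0)  # a large step jumps the moat to the taller hill
print(stuck, escaped)
```

The point of the sketch is only that the same update rule gives very different outcomes depending on step size, which is why learning a small step size on a tiny hill can be costly.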

*From context, I'm inferring that we're discussing not completely naive values, but something closer to the values people have in their late-teens early-twenties.

Naive modes in more detail

Naive values are often simplified values; younger people have many zones of sparse or merely theorized data, and are more prone to black-and-white thinking over large areas. They are also perhaps notable sometimes for their lack of stability, and large step-size (hence the phrase "it's just a phase"). Someone in their early 20s is less likely than someone in their 40s to be in a stable holding pattern. There's been less time to get stuck in a self-perpetuating rut, and your environment and mind are often changing fast enough that any holding patterns will often get shaken up when the outside environment alters if you give it enough time.

The pattern often does seem to be towards jaded (flat low-affect zones that offer no discernible learning slope), bitter (overlearned/overgeneralized negatives assigned to what would otherwise have been inferred to be a positive action), stuck (occupying eccentric zones on the wrong side of an uncanny valley), or conformist (strongly self-reinforcing zones, often at the peak of some locally-maximal hill).

Many people I know have at least one internal-measurement instrument that is so far skewed that you may as well consider it broken. Take, for instance, people who never believe that other people like them despite all evidence to the contrary. This is not always a given, but some lucky jaded people will have identified some of the zones where they cannot trust their own internal measuring devices, and may even be capable of having a bit of perspective about that in ways that are far less common among the naive. They might also have the epistemic humility to have a designated friend filling in that particular gap in their perception, something that requires trust built over time.

comment by Spiracular · 2019-07-06T00:39:39.385Z · LW(p) · GW(p)

I stumbled into a satisfying visual description:

3D "Renderers" of Random Sampling/Monte Carlo estimation

This came out of thinking about explaining sparseness in higher dimensions*, and I started getting into visuals (as I am known to do). I've seen most of the things below described in 2D (sometimes incredibly well!), but 3D is a tad trickier, while not being nearly as painfully-unintuitive as 4D. Here's what I came up with!

Also, someone's probably already done this, but... shrug?

The Setup

In a simple case of Monte Carlo/random-sampling from a three-dimensional space with a one-dimensional binary or float output...

You're trying to work out what is in a sealed box using only a thin needle (analogy for the function that produces your results). The needle has a limited number of draws, but you can aim the needle really well. You can use it to find out what color the object in the box is at each of the exact points you decided to measure. You also have an open box in which to mark off the exact spot in space at which you did the draw, using Unobtainium to keep it in place.

Each of your samples is a fixed point in space, with a color set by the needle's (that is, the function's or sampler's) output. These are going to be the cores that you base your render around. From there, you can...

Nearest Neighbor Render

For Nearest Neighbor (NN), you can see what happens when you situate colored-or-clear balloons at each of the sample points, and start simultaneously blowing all of them up with their sample point as a loose center. Gradually, this will give you a bad "rendering" of whatever the color-results were describing.

(Technically it's Voronoi regions; balloons are more evocative)
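The balloon picture can be sketched in a few lines of Python. This is only an illustration under made-up assumptions: the `hidden_object` function (a red ball in the middle of a unit cube) stands in for whatever is in the sealed box, and the function names are hypothetical. Each query point simply takes the color of the closest sample, which is the same as asking whose balloon got there first.

```python
import math
import random


def sample_box(color_at, n_samples, rng):
    """Poke the sealed box n_samples times at random points in the unit cube."""
    samples = []
    for _ in range(n_samples):
        point = (rng.random(), rng.random(), rng.random())
        samples.append((point, color_at(point)))
    return samples


def nearest_neighbor_render(samples, query):
    """Return the color of the sample closest to the query point."""
    _, color = min(samples, key=lambda s: math.dist(s[0], query))
    return color


def hidden_object(point):
    """Hypothetical box contents: a red ball centered in the cube."""
    return "red" if math.dist(point, (0.5, 0.5, 0.5)) < 0.3 else "clear"


rng = random.Random(0)
samples = sample_box(hidden_object, 200, rng)
print(nearest_neighbor_render(samples, (0.5, 0.5, 0.5)))
```

With few samples the render is blocky and can be wrong near the ball's surface; more draws of the needle shrink each balloon and sharpen the boundary.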

Decision Trees

Decision trees are when you fill in the space using colored rectangular blocks of varying sizes, based on the sample points.
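A rough sketch of the "colored rectangular blocks" idea follows. It is a simplification and should be read as such: real decision-tree learners usually pick each split by an impurity measure, whereas this hypothetical `build_blocks` just cuts at the median sample along x, then y, then z in turn until a block contains a single color.

```python
def build_blocks(samples, depth=0, max_depth=6):
    """Recursively cut space into axis-aligned blocks.

    samples: list of ((x, y, z), color). Returns a nested dict tree.
    """
    colors = [color for _, color in samples]
    majority = max(set(colors), key=colors.count)
    if len(set(colors)) == 1 or depth == max_depth:
        return {"color": majority}
    axis = depth % 3  # cycle through x, y, z
    ordered = sorted(samples, key=lambda s: s[0][axis])
    threshold = ordered[len(ordered) // 2][0][axis]
    low = [s for s in samples if s[0][axis] < threshold]
    high = [s for s in samples if s[0][axis] >= threshold]
    if not low or not high:
        return {"color": majority}  # cannot split further on this axis
    return {
        "axis": axis,
        "threshold": threshold,
        "low": build_blocks(low, depth + 1, max_depth),
        "high": build_blocks(high, depth + 1, max_depth),
    }


def block_color(tree, point):
    """Walk down the tree to find the color of the block containing point."""
    while "color" not in tree:
        side = "low" if point[tree["axis"]] < tree["threshold"] else "high"
        tree = tree[side]
    return tree["color"]


toy = [
    ((0.1, 0.5, 0.5), "red"),
    ((0.2, 0.5, 0.5), "red"),
    ((0.8, 0.5, 0.5), "clear"),
    ((0.9, 0.5, 0.5), "clear"),
]
tree = build_blocks(toy)
print(block_color(tree, (0.15, 0.0, 0.0)), block_color(tree, (0.85, 0.0, 0.0)))
```

Unlike the balloons, every boundary here is a flat wall parallel to one of the box's faces, which is where the blocky look comes from.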

Gradients

Most other methods involve creating a gradient between 2 different points that yielded different colors. It could be a linear gradient, an exponential gradient, a step function, a sine wave, some other complicated gradient... as you get more complicated, you better hope that your priors were really good!
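As a small illustration of how much the choice of gradient matters, here are two of the options above applied to the same pair of samples. The function names are hypothetical, and `t` is assumed to be the fraction of the way from the first sample to the second (0 at the first, 1 at the second).

```python
def linear_gradient(value_a, value_b, t):
    """Blend smoothly from value_a (t=0) to value_b (t=1)."""
    return (1 - t) * value_a + t * value_b


def step_gradient(value_a, value_b, t, cutoff=0.5):
    """Jump from value_a to value_b at the cutoff."""
    return value_a if t < cutoff else value_b


for t in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(t, linear_gradient(0.0, 1.0, t), step_gradient(0.0, 1.0, t))
```

With the cutoff at the midpoint, the step function gives the same answer as Nearest Neighbor between those two points, so the gradient you pick is effectively a statement of your prior about what lies between samples.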

Neural Net

A human with lots of prior experience looking at similar objects tries to infer what the rest looks like. (This is kinda a troll answer, I know. Thinking about it.)

Sample All The Things!

3D Pointillism even just sounds like a really bad idea. Pointillism on a 2D surface in a 3D space, not as bad of an idea! Sparsity at work?

Bonus

The Blind Men and an Elephant fable is an example of why you don't want to do a render from a single datapoint, or else you might decide that the entire world is "pure rope." If they'd known from sound where each other were located when they touched the elephant, and had listened to what the others were observing, they might have actually Nearest Neighbor'd themselves a pretty decent approximate elephant.

*Specifically, how it's dramatically more effort to get a high-resolution pixel-by-pixel picture of a high-dimensional space. But if you're willing to sacrifice accuracy for faster coverage, in high dimensions a single point's Nearest Neighbor zone can sloppily cover a whole lot of ground (or multidimensional "volume").
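The "dramatically more effort" claim can be made concrete with a bit of arithmetic. Assuming (purely for illustration) that "high resolution" means a regular grid with a fixed number of sample points along each axis, the number of needle draws grows as that number raised to the dimension:

```python
def grid_samples_needed(points_per_axis, dimensions):
    """Samples required to cover a regular grid at a fixed per-axis resolution."""
    return points_per_axis ** dimensions


for d in (1, 2, 3, 4, 10):
    print(f"{d}D at 10 points per axis: {grid_samples_needed(10, d)} samples")
```

Read the other way, a fixed budget of samples leaves each point responsible for a rapidly growing share of the space as dimensions are added, which is the sloppy-but-wide Nearest Neighbor coverage described above.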

comment by Spiracular · 2019-06-14T01:50:30.493Z · LW(p) · GW(p)

Out-of-context quote: I'd like for things I do to be either ignored or deeply-understood and I don't know what to do with the in-betweens.

comment by Spiracular · 2022-07-14T15:33:27.520Z · LW(p) · GW(p)

Give a man a fish, feed him for a day. Teach a man to fish, feed him for a lifetime.

Cultivate someone with an earnest and heartfelt interest in fishing, until he learns how to grow his own skills and teach himself... and you can probably feed at least 12 people.

Which just might finally allow the other 11 to specialize into something more appealing to them than subsistence fishing.

comment by Spiracular · 2020-08-18T03:17:56.604Z · LW(p) · GW(p)

On Community Coordinator Roles

Some things I think help are:

- Personal fit to the particular job demands (which can include some subset of: lots of people-time, navigating conflict, frequent task-switching, personal initiative, weighing values (or performing triage), etc.)

- Personal investment in appropriate values, and a lasting commitment to doing the role well.

- Having it be fairly clear what they're biting off, what resources they can use, and what additional resources they can petition for. Having these things be actually fairly realistic.

(Minimum expertise to thrive is probably set by needing to find someone who will advocate for these things well enough to make them clear, or who can at least learn how to. Nebulous roles can be navigated, but it is harder, and in practice means they need to be able to survive redefining the role for themselves several times.)

- Having some connections they (and others) respect who will help support what (and how) they're doing, lend some consistent sense of meaning to the work, and people who will offer reliable outside judgement. Quite possibly, these things come from different people.

(Public opinion is often too noisy and fickle for most human brains to learn off of it alone.)

- For roles that require the buy-in of others, it's helpful if they have enough general respect or backing that they will mostly be treated as "legitimate" in the role. But some roles really only require a small subset of high-buy-in people.

- Also, some sort of step-down procedure.

Extracted from a FB thread, where I was thinking about burnout around nebulously-defined community leader roles, and what preparedness and a good role would look like. Parts of this answer feel like I'm being too vague and obvious, but I thought it was worth making the list slightly more findable.

comment by Spiracular · 2019-06-14T01:17:48.863Z · LW(p) · GW(p)

After seeing a particularly overt form of morality-of-proximity argued (poorly) in a book...

Something interesting is going on in my head in forming a better understanding of what the best steelman of morality-of-proximity looks like? The standard forms are obviously super-broken (there's a lot of good reasons why EA partially builds itself as a strong reaction against that; a lot of us cringe at "local is better" charity speak unless it gets tied into "capacity building"). But I'm noticing that if I squint, the intuition bears a trace of similarity to my attitude on "What if we're part of an 'all possible worlds' multiverse, in which the best and worst and every in-between happens, and where on a multiversal scale... anything you do doesn't matter, because deterministically all spaces of possibility will be inevitably filled."

Obviously, the response is: "Then try to have a positive influence on the proximal. Try to have a positive influence on the things you can control." And sure, part of that comes from giving some weight to the possibility that the universe is not wired that way, but it's also just a very broadly-useful heuristic to adopt if you want to do useful things in the world. "Focus on acting on the things you can control" is generally pretty sound advice, and I think it forms a core part of the (Stoic?) concept of rating your morals on the basis of your personal actions and not the noisy environmental feedback you're getting in reply. Which is... at least half-right, but runs the risk of disconnecting you from the rest of the world too much.

If we actually can have the largest positive impact on the proximal, then absolutely help the proximal. This intuition just breaks down in a world where money is incredibly liquid and transportable and is worth dramatically more in value/utility in different places.

(Of course, another element of that POV might center around a stronger sense that sys1 empathetic feelings are a terminal good in-and-of-itself, which is something I can't possibly agree with. I've seen more than enough of emotional contagion and epidemics-of-stress for a lifetime. Some empathy is useful and good, but too much sounds awful. Moving on.)

I guess there needs to be a distinction made between "quantity of power" and "finesse of power," and while quantity clearly can be put to a lot more use if you're willing to cross state (country, continent...) lines, finesse at a distance is still hard. And you need at least some of both (at this point) to make a lasting positive impact (convo for later: what those important components of finesse are, which I think might trigger some interesting disagreements). Finding specialists you trust to handle the finesse (read: steering) of large amounts of power is often hard, and it's even harder to judge for people who don't have that finesse themselves.

And "Finesse of power" re: technical impact, re: social impact, re: group empowerment/disempowerment (whether by choice or de-facto), re: PR or symbology... they can be really different skills.

Replies from: SaidAchmiz↑ comment by Said Achmiz (SaidAchmiz) · 2019-06-14T09:11:49.805Z · LW(p) · GW(p)

The standard forms are obviously super-broken (there’s a lot of good reasons why EA partially builds itself as a strong reaction against that; a lot of us cringe at “local is better” charity speak unless it gets tied into “capacity building”).

Could you say more about this? What do you consider “obviously super-broken” about (if I understand you correctly) moralities that are not agent-neutral and equal-consideration? Why does “local is better” make you cringe?

Replies from: Spiracular, Spiracular, Spiracular↑ comment by Spiracular · 2019-06-15T00:58:14.264Z · LW(p) · GW(p)

(Meta-note: I spent more time on this than I wanted to. I think if I run into this sort of question again, I'm going to ask clarifying questions about what it was that you in particular wanted, because answering broad questions without having a clear internal idea of a target audience contributed to both spending too much time on this, and contributed to feeling bad about it. Oops.)

Replies from: SaidAchmiz↑ comment by Said Achmiz (SaidAchmiz) · 2019-06-15T01:35:53.174Z · LW(p) · GW(p)

This certainly seems reasonable.

↑ comment by Spiracular · 2019-06-15T00:50:11.815Z · LW(p) · GW(p)

I think you're inferring some things that aren't there. I'm not claiming an agent-neutral morality. I'm claiming that "physical proximity," in particular, being a major factor of moral worth in-and-of-itself never really made sense to me, and always seemed a bit cringey.

Using physical proximity as a relevant metric in judging the value of alliances? Factoring other metrics of proximity into my personal assessments of moral worth? I do both.

(Although I think using agent-neutral methods to generate Schelling Points for coordination reasons is quite valuable, and at times where that coordination is really important, I tend to weight it extremely heavily.)

When I limit myself to looking at charity and not alliance-formation, all types of proximity-encouraging motives get drowned out by the sheer size of the difference in magnitude-of-need and the drastically-increased buying power of first-world money in parts of the third world. I think that's a pretty common feeling among EAs. That said, I do conduct a stronger level of time- and uncertainty-discounting, but I still ended up being pretty concerned about existential risk.

Replies from: SaidAchmiz↑ comment by Said Achmiz (SaidAchmiz) · 2019-06-15T01:25:30.994Z · LW(p) · GW(p)

I think you’re inferring some things that aren’t there. I’m not claiming an agent-neutral morality. I’m claiming that “physical proximity,” in particular, being a major factor of moral worth in-and-of-itself never really made sense to me, and always seemed a bit cringey.

I see. It seems to me that the more literally you interpret “physical proximity”, the more improbable it is to find people who consider it “a major factor of moral worth”.

Is your experience different? Do you really find that people think that literal physical proximity matters morally? Not cultural proximity, not geopolitical proximity, not proximity in communication-space or proximity in interaction-space, not even geographical proximity—but quite literal Euclidian distance in spacetime? If so, then I would be very curious to see an example of someone espousing such a view—and even more curious to see an example of someone explicitly defending it!

Whereas if you begin to take the concept less literally (following something like the progression I implied above), then it is increasingly difficult to see why it would be “cringey” to consider it a “major factor” in moral considerations. If you disagree with that, then—my question stands: why?

When I limit myself to looking at charity and not alliance-formation, all types of proximity-encouraging motives get drowned out by the sheer size of the difference in magnitude-of-need and the drastically-increased buying power of first-world money in parts of the third world. I think that’s a pretty common feeling among EAs.

Yes, perhaps that is so, but (as you correctly note), this has to do with proximity as a purely instrumental factor in how to implement your values. It does not do much to address the matter of proximity as a factor in what your values are (that is: who, and what, you value, and how much).

↑ comment by Spiracular · 2019-06-15T00:57:24.857Z · LW(p) · GW(p)

Personally, one of the most damning marks against the physical-distance intuition in particular is its rampant exploitability in the modern world, where distance is so incredibly easily abridged. If someone set up a deal where they extract some money and kidnap 2 far-away people in exchange for letting 1 nearby person go, someone with physical-distance-discounting might keep making this deal, and the only thing the kidnappers would need to use to exploit it is a truck. If view through a camera is enough to abridge the physical distance, it's even easier to exploit. I think this premise is played around with in media like The Box, but I've also heard of some really awful real-world cases, especially if phone calls or video count as abridging distance (for a lot of people, it seems to). The ease and severity of exploitation of it definitely contributes to why, in the modern world, I don't just call it unintuitive, I call it straight-up broken.

When the going exchange rate between time and physical distance was higher, this intuition might not have been so broken. With the speed of transport where it is now...

Maybe at bottom, it's also just not very intuitive to me. I find a certain humor in parodies of it, which I'm going to badly approximate here.

"As you move away from me at a constant speed, how rapidly should I morally discount you? Should the discounting be exponential, or logarithmic?"

"A runaway trolley will kill 5 people tied to the train track, unless you pull a switch and redirect it to a nearby track with only 1 person on it. Do you pull the switch?" "Well, that depends. Are the 5 people further away from me?"

I do wonder where that lack-of-intuition comes from... Maybe my lack of this intuition was originally because when I imagine things happening to someone nearby and someone far away, the real object I'm interacting with in judging /comparing is the imagination-construct in my head, and if they're both equally vivid that collapses all felt physical distance? Who can say.

In my heart of hearts, though... if all else is truly equal, it also does just feel obvious that a person's physical distance from you really should not affect your sense of their moral worth.

Replies from: SaidAchmiz↑ comment by Said Achmiz (SaidAchmiz) · 2019-06-15T01:35:29.031Z · LW(p) · GW(p)

If someone set up a deal where they extract some money and kidnap 2 far-away people in exchange for letting 1 nearby person go, someone with physical-distance-discounting might keep making this deal, and the only thing the kidnappers would need to use to exploit it is a truck. If view through a camera is enough to abridge the physical distance, it’s even easier to exploit.

I’ve two things to say to this.

First, the moral view that you imply here, seems to me to be an awful caricature. As I say in my other comment [LW(p) · GW(p)], I should be very curious to see some real-world examples of people espousing, and defending, this sort of view. To me it seems tremendously implausible, like you’ve terribly misunderstood the views of the people you’re disagreeing with. (Of course, it’s possible I am wrong; see below. However, even if such views exist—after all, it is possible to find examples of people espousing almost any view, no matter how extreme or insane—do you really suggest that they are at all common?!)

Second… any moral argument that must invoke such implausible scenarios as this sort of “repeatedly kidnap and then transport people, forcing the mark to keep paying money over and over, a slave to his own comically, robotically rigid moral views” story in order to make its point is, I think, to be automatically discounted in plausibility. Yes, if things happened in this way, and if someone were to react in this way, that would be terrible, but of course nothing like this could ever take place, for a whole host of reasons. What are the real-world justifications of your view?

… I’ve also heard of some really awful real-world cases, especially if phone calls or video count as abridging distance (for a lot of people, it seems to). The ease and severity of exploitation of it definitely contributes to why, in the modern world, I don’t just call it unintuitive, I call it straight-up broken.

Now this is interesting! Could you cite some such cases? I think it would be quite instructive to examine some case studies!

Replies from: Spiracular↑ comment by Spiracular · 2019-06-15T02:10:41.393Z · LW(p) · GW(p)

Yeah... this is reading as more "moralizing" and "combative" than as "trying to understand and model my view," to me. I do not feel like putting more time into hashing this out with you, so I most likely won't reply.

It has a very... "gotcha" feel to it. Even the curiosity seems to be phrased to be slightly accusatory, which really doesn't help matters. Maybe we have incompatible conversation styles.

Replies from: SaidAchmiz↑ comment by Said Achmiz (SaidAchmiz) · 2019-06-15T03:30:55.665Z · LW(p) · GW(p)

Giving offense wasn’t my intent, by any means!

Certainly it’s your right to discontinue the conversation if you find it unproductive. But I find that I’m confused; what was your goal in posting these things publicly, if not to invite discussion?

Do you simply prefer that people not engage with these “shortform feed” entries? (It may be useful to note that in the top-level post, if so. Is there some sort of accepted norm for these things?)

Replies from: habryka4↑ comment by habryka (habryka4) · 2019-06-15T03:47:17.554Z · LW(p) · GW(p)

My preference for most of my shortform feed entries is to intentionally have a very limited amount of visibility, with most commenting coming from people who are primarily interested in a collaborative/explorative framing. My model of Spiracular (though they are very welcome to correct me) feels similar.

I think I mentioned in the past that I think it's good for ideas to start in an early explorative/generative phase and then later move to a more evaluative phase, and the shortform feeds for me try to fill the niche of making it as low-cost as possible for me to generate things. Some of these ideas (usually the best ones) tend to then later get made into full posts (or in my case, feature proposals for LessWrong) where I tend to be more welcoming of evaluative frames.

Replies from: SaidAchmiz↑ comment by Said Achmiz (SaidAchmiz) · 2019-06-15T03:54:23.704Z · LW(p) · GW(p)

I see. Well, fair enough. Would it be possible to add (or perhaps simply encourage authors to add) some sort of note to this effect to shortform feeds, if only as a reminder?

(As an aside, I don’t think I quite grasp how you’re using the term “visibility” here. With that clause removed, what you’re saying seems straightforward enough, but that part makes me doubt my understanding.)

Replies from: habryka4↑ comment by habryka (habryka4) · 2019-06-15T04:03:05.420Z · LW(p) · GW(p)

*nods* I think definitely when we make shortform feeds more of a first-class feature then we should encourage authors to specify their preferences for comments on their feeds.

I mean visibility pretty straightforwardly in that I often want to intentionally limit the number of people who can see my content because I feel worried about being misunderstood/judged/dragged into uncomfortable interactions.

Happy to discuss any of this further since I think shortform feeds and norms around them are important, but would prefer to do so on a separate post. You're welcome to start a thread about this over on my own shortform feed.

comment by Spiracular · 2019-09-17T03:03:26.806Z · LW(p) · GW(p)

One of my favorite little tidbits from working on this post [LW · GW]: realizing that idea inoculation and the Streisand effect are opposite sides of the same heuristic.

comment by Spiracular · 2019-09-12T18:59:45.116Z · LW(p) · GW(p)

While I could rattle off the benefits of "delegating" or "ops people", I don't think I've seen a highly-discrete TAP + flowchart for realizing when you're at the point where you should ask yourself "Have my easy-to-delegate annoyances added up to enough that I should hire a full-time ops person? (or more)."

Many people whose time is valuable seem likely to put off making this call until they reach the point where it's glaringly obvious. Proposing an easy TAP-like decision-boundary seems like a potentially high-value post? Not my area of specialty, though.

Replies from: rossry↑ comment by rossry · 2019-09-12T22:53:59.965Z · LW(p) · GW(p)

My guess is that one gets a reasonable start by framing more tasks as self-delegation (adding the middle step of "decide to do" / "describe task on to-do list" / "do task"), then periodically reviewing the tasks-completed list and pondering the benefits and feasibility of outsourcing a chunk of the "do task"s.

Creating a record of task-intentions has a few benefits in making self-reflection possible; reflecting on delegation opportunities is a special case.

comment by Spiracular · 2019-07-02T21:53:00.136Z · LW(p) · GW(p)

If The Ocean was a DM:

Player: I want to play a brainless incompletely-multicellular glob with no separation between its anus and its mouth and who might have an ability like "glow in the dark" or "eject organs and then grow them back." It eats tiny rocks in the water column.

The Land DM: No! At least have some sensible distribution-of-nutrients gimmick! Like, you're not even trying! Like, I'm not anti-slimes, I'll allow some of this for the Slime Mold, but they had a clever mechanic for succinctly generating wider pathways for nutrient transfer between high-nutrition areas that I'd be happy to...

The Ocean DM: OMG THAT'S MY FAVORITE THING. Do you have any other character concepts? Like, maybe it can also eject its brain and grow it back? Or maybe its gonads are also in the single hole where the mouth and anus are?

The Land DM: ...

Replies from: Raemon, Spiracular↑ comment by Spiracular · 2019-07-02T21:53:15.787Z · LW(p) · GW(p)

I have this vague intuition that some water-living organisms have fuzzier self-environment boundaries than most land organisms. It sorta makes sense. Living in water, you might at least get close to matching your nutritional, hydrational, thermal, and dispersion needs via low-effort diffusion. In contrast, on land you almost certainly need to create strongly-defined boundaries between yourself and "air" or "rock", since environments can reach dangerous extremes.

Fungi feel like a bit of an exception, though, with their extracellular digestion habits.

comment by Spiracular · 2021-04-14T22:13:51.714Z · LW(p) · GW(p)

Live Parsers and Quines

An unusual mathematical-leaning definition of a living thing.

(Or, to be more precise... a potential living immortal? A replicon? Whatever.)

A self-replicating physical entity with...

3 Core Components:

- Quine: Contains the code to produce itself

- Parser: A parser for that code

- Code includes instructions for the parser; ideally, compressed instructions

- Power: Actively running (probably on some substrate)

- Not actively running is death, although for some the death is temporary.

- Access to resources may be a subcomponent of this?

Additional components:

- Substrate: A material that can be converted into more self

- Possibly a multi-step process

- Access to resources is probably a component of this

- Translator: Converts quine into power, or vice-versa

- Not always necessary; sometimes a quine is held as power, not substrate

Parser and Code: the information can actually be stored on either; you can extract the correct complex signal from complete randomness using an arbitrarily-complex parser chosen for that purpose. There are analogies that can be drawn in the other direction, too: a fairly dumb parser can make fairly complicated things, given enough instructions. (Something something Turing Machines)

Ideally, though, they're well-paired and the compression method is something sensible, to reduce complexity.

A quine by itself has only a partial life; it is just its own code, it requires a parser to replicate.

(If you allow for arbitrarily complex parsers, any code could be a quine, if you were willing to search for the right parser.)
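A minimal sketch of the quine/parser pairing in Python, under the assumption that "parser" can be modeled as a function from source text to output text. The names `QUINE_TEMPLATE`, `SOURCE`, `python_parser`, and `echo_parser` are made up for illustration. It shows a standard two-line quine replicating only when something runs it, and a degenerate parser under which any text at all is a quine.

```python
import contextlib
import io

# The "code": a classic Python quine template, stored as inert data.
QUINE_TEMPLATE = 's = %r\nprint(s %% s)'
SOURCE = QUINE_TEMPLATE % QUINE_TEMPLATE  # the full two-line program text


def python_parser(source: str) -> str:
    """Run source text with the Python interpreter and return what it prints."""
    buffer = io.StringIO()
    with contextlib.redirect_stdout(buffer):
        exec(source, {})
    return buffer.getvalue().rstrip("\n")


def echo_parser(source: str) -> str:
    """A degenerate 'parser' that outputs its input unchanged."""
    return source


# Paired with the right parser, the code reproduces itself exactly.
replicated = python_parser(SOURCE)
print(replicated == SOURCE)

# Under the echo parser, any text at all counts as a quine.
print(echo_parser("hello") == "hello")
```

Left alone, `SOURCE` is just a string; nothing replicates until a parser is applied to it. The echo parser is the cheapest version of "search for the right parser": all of the work has moved out of the code and into the choice of parser.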

Compilers are... parsers? (Or translators?)

It is possible for what was "code" information to be embedded into the parser. I think this is part of what happens when you completely integrate parts in IFS.

I'm less clear on what's important about power? Things to poke: Power, Electricity, Energy (& time-as-the-entropy-direction?). Animating. Continuity, Boltzmann brains, and "Is it frozen/discontinuous relative to this frame of time/reference?" (Permutation City). What is Death? (vs Life, or vs a frozen/cryonic quine)

Examples

Example replicons: A bacterium, a cell, a clonal organism, a pair of opposite-sexed humans (but not a single), self-contained repeating Game of Life automata, the eventual goal of RepRap (a 3D printer fully producing a copy of itself)

Viruses: Sometimes quines, sometimes pre-quine and translator

A scholar is just a library's way of making another library.

-- Daniel Dennett

The distinctive thing about Lisp is that its core is a language defined by writing an interpreter in itself. It wasn't originally intended as a programming language in the ordinary sense. It was meant to be a formal model of computation, an alternative to the Turing machine. If you want to write an interpreter for a language in itself, what's the minimum set of predefined operators you need? The Lisp that John McCarthy invented, or more accurately discovered, is an answer to that question.

-- What I Worked On, Paul Graham

Related: Self-Reference, Post-Irony, Hofstadter's Strange Loop, Turing Machine, Automata (less-so), deconstruction (less-so)

Is a cryonics'd human a quine? (wrt future technology)

The definition of parasite used in Nothing in evolution makes sense except in the light of parasites is literally quines.

(Complex multi-step life-cycle in the T5 case, though? The quine produces a bare-bones quine-compiling "spawner" when it interacts with the wild-type, and then replicates on that. Almost analogous to CS viruses that infect the compiler, and any future compiler compiled by that compiler.)

Process is Art: Is art that demonstrates how to craft the art, a quine on a human compiler?

Some connection to: Compartmentalization (Black Box Testing, unit tests, separation of software components) and the "swappability" of keeping things general and non-embedded, with clearly-marked and limited interface-surfaces (APIs). Generates pressure against having "arbitrary compilers" in practice.

comment by habryka (habryka4) · 2019-06-13T20:50:54.117Z · LW(p) · GW(p)

Yay, shortform feeds!

Replies from: Spiracular↑ comment by Spiracular · 2019-06-13T21:56:59.665Z · LW(p) · GW(p)

Damn it, Oli. Keep your popularity away from my very-fake pseudoprivacy :P

(Tone almost exactly half-serious half-jokingly-sarcastic?)

((It's fine, but I'm a little stressed by it.))

Replies from: Raemon↑ comment by Raemon · 2019-06-13T22:01:40.349Z · LW(p) · GW(p)

FYI shortform feeds are currently pretty hard to follow even when you're actively trying so I wouldn't worry.

Replies from: Spiracular↑ comment by Spiracular · 2019-06-13T22:07:47.994Z · LW(p) · GW(p)

Good to know! I'm assuming that's mostly an automatic consequence of everything being a comment, but that also fits with what I'd want from this sort of thing.

comment by Spiracular · 2021-09-05T22:24:40.685Z · LW(p) · GW(p)

A copy machine copies a 5 minute video over time, going line by line, one line per second. Every pixel it sees, it copies perfectly. Is it an accurate copy?

A teacher teaches a student everything the teacher knows, building up from basic to advanced knowledge over the course of years. At each weekly lesson, the student learns the planned material perfectly. That exact topic is never revisited.

By the end of the last class, does the student know and understand everything the teacher knows?

Replies from: Spiracular↑ comment by Spiracular · 2021-09-05T22:32:28.879Z · LW(p) · GW(p)

Bits of koan that dropped into my head.

The sort-of-mathematical phrasing I might use to describe the copy-machine copy is "a slice of a flat diagonal hyperplane, with both time and space components."
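A toy sketch of that diagonal slice, assuming the video is stored as `frames[t][row]` and that the copier reads exactly one scan line per time step (so line `t` is taken from frame `t`). The function name `line_scan_copy` and the 4x4 size are illustrative choices; each "pixel row" is labeled with the (time, row) it came from.

```python
def line_scan_copy(frames):
    """Copy scan line t from frame t: one line per time step."""
    steps = min(len(frames), len(frames[0]))
    return [frames[t][t] for t in range(steps)]


# A 4-second "video" with 4 scan lines; every entry records (time, row).
frames = [[(t, row) for row in range(4)] for t in range(4)]

copy = line_scan_copy(frames)
print(copy)

# Each copied line is faithful to the source at the moment it was read...
print(all(copy[t] == frames[t][t] for t in range(4)))

# ...but the assembled copy matches no single frame of the video.
print(any(copy == frame for frame in frames))
```

Every local read is perfect, yet the result is a diagonal cut through the (time, row) grid rather than any one frame, which is the sense in which the copy has both time and space components.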

The second question is a sort of "oh, I guess this gesture has a few useful-to-think-about analogs in real life."

comment by Spiracular · 2021-04-03T19:02:10.720Z · LW(p) · GW(p)

OODAs vs TAPs

TAPs [LW · GW] are just abbreviated or stunted OODA loops, and I think I prefer some aspects of the OODA phrasing.

OODA: Observe -> Orient -> Decide -> Act (loop)

TAP: Trigger -> Action (Plan/Pattern)

TAPs usually map to "Observe->Act."

Orient loosely means setting up a Model/Metrics/Framework/Attitude.

(This can take anywhere from 1/2 a second to years; wildly variable amounts of time depending on how simple and familiar the problem and system is. TAPs implicitly assume this is one of the simple/fast cases.)

One case where OODA's phrasing is better, is "Attitude TAPs":

"Attitude TAPs" are one of the most valuable TAP types, but are a non-obvious feature of the TAPs framework unless this is explicitly mentioned.

Ex: (from here [LW · GW]) "when I'm finding my daily writing difficult, and I'm thinking about quitting, I'll notice that and try to figure out what's going wrong (rather than mindlessly checking Twitter to avoid writing)."

Alternatively, this could be phrased as an abbreviated OODA that cements an "Observe -> Orient" without fixing an action. Personally, I find this a bit cleaner.

comment by Spiracular · 2020-03-14T19:37:22.895Z · LW(p) · GW(p)

My nCOV/SARS-CoV-2 research head until I figure out exactly where to post things.

Things to post next: Link and summary of the research into the nCOV spike-protein mutations.

Replies from: Spiracular, Spiracular, Spiracular, Spiracular, Spiracular↑ comment by Spiracular · 2020-03-15T20:12:57.594Z · LW(p) · GW(p)

Paper on some of nCOV's mutations

Incidentally, also strong evidence against it being a lab-strain. It's a wild strain.

Closest related viruses: bats and Malayan pangolins

Mutation Descriptions

Polybasic Cleavage Sites (PCS): They seem to have something to do with increased rates of cell-cell fusion (increased rate of virus-induced XL multi-nucleated cells). Mutations generating PCS have been seen in Influenza strains to increase their pathogenicity, and they had similar effects in a few other viruses. So it's not exactly increasing virus-cell fusion, it's actually... increasing the rate at which infected cells glom into nearby cells. Fused cells are called syncytia.

O-linked glycans: Are theorized (with uncertainty) to help the virions masquerade as mucin, thereby hiding from the immune system. (Mutation unlikely to evolve in a lab on a petri dish)

Arguments strongly in favor of it being a wild strain

- It's not that similar to one of the known lab-strains, so it probably was wild

- The "polybasic cleavage site" and "O-linked glycans" mutations would have required a very human-like ACE-protein binding site, so basically only human or ferret cells

- O-linked glycans are usually evolved as an immune defense, which isn't something cell cultures do.

↑ comment by Spiracular · 2020-03-14T19:59:56.319Z · LW(p) · GW(p)

The possibility of Antibody-Dependent Enhancement looks very real, to me.

(I've repeatedly had to update in the direction of it being plausible, and I currently think it's more-likely-than-not to be a factor that will complicate vaccine development.)

Other coronaviruses, ex: FIP, have had vaccines that presented with this problem (imperfect antibodies against the vaccine resulted in increased severity of illness compared to baseline).

An in-vitro experiment suggesting that nCOV could use imperfect antibodies as a viable "anchor" for infecting white blood cells. Was tested using previous SARS-1 vaccines.

Interpretation: Assuming it's the same case among SARS subtypes, antibodies against the spike-protein are a bad idea, but antibodies against other components of the virus (which don't evolve as fast as the S-protein) seemed to work. The one N-protein vaccine didn't have this bad effect.

Interpretation: in-vitro isn't nearly as conclusive as in-vivo, though...

A preprint suggesting that ADE may already be part of why we have such wide variance in the severity of symptoms. Severe cases may be severe in part because of this exacerbating response to non-neutralizing antibodies.

Interpretation: Geez, this actually seems to match up with the disease pattern well. The elderly have worse immune responses and tend to be more prone to poorly-constructed antibodies (resulting in things like ex: autoimmune responses), and the high-severity disease tends to happen late (around when the antibody-based adaptive immune response kicks in). I need to double-check, but if kids have better innate immune responses, it fits fantastically. The white blood cell deficiencies that the paper says occur in the severe cases feel fairly conclusive to me.

This proposes that China may have had a far-worse death rate in part because of exposure to previous cases of SARS-1.

Interpretation: At least a few points towards the hypothesis, but my prior was that a zoonotic disease straight-off-the-literal-bat would be more severe anyway.

T-cell exhaustion may have some bearing on this question, as either evidence or counter-evidence depending on whether the infected/dying immune cells are specifically Fc-bearing or not.

(ADE is likely to specifically affect Fc receptor bearing cells, which consist of: B lymphocytes, follicular dendritic cells, natural killer cells, macrophages, neutrophils, eosinophils, basophils, human platelets, and mast cells. I need to run through the preprint on symptom-variance and double-check the types of WBC affected.)

Future prediction: People who had the SARS-1 or MERS vaccine previously (esp. if vs. S-protein, which most include) will tend to get more severe cases with SARS-2.

Replies from: leggi↑ comment by Spiracular · 2020-03-14T20:04:47.861Z · LW(p) · GW(p)

I am under the impression from a few case-studies that people have high-virus-titer a day before, and at least a few days after symptoms appear. Including mild symptoms.

It was hard to find conclusive studies on how long after symptoms the mild cases retain a high virus titer, but a German mild case still had high titer 2 days post-symptoms. Probably longer than that.

↑ comment by Spiracular · 2020-03-14T19:40:50.897Z · LW(p) · GW(p)

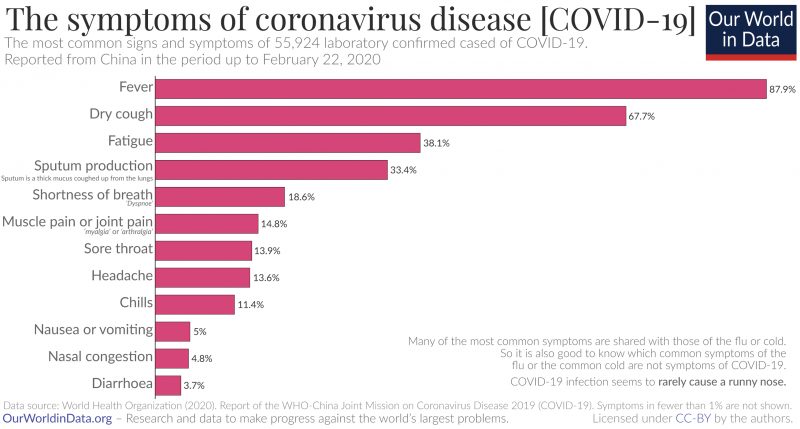

Symptom frequency chart via ourworldindata

Additional impressions: Fever is pretty common and incriminating (but case-studies elsewhere suggest it isn't always the first symptom to show up!), runny nose is rare (although I've heard it's more common in mild child cases).

↑ comment by Spiracular · 2020-03-14T19:37:38.327Z · LW(p) · GW(p)

CIDRAP seems to confirm my impression that "6ft distance from people" came from assuming that the only relevant transmission method was distance-of-large-droplets-from-cough/sneeze. Aerosols describe transmission via fine droplets over much larger distances, and are exemplified in Influenza transmission.

This CIDRAP article suggests that SARS-CoV-2 aerosol transmission is at least plausible since it had been demonstrated in MERS.

More recently, this aerosol and surface stability preprint says the SARS-CoV-2 virions remained viable as an aerosol for 3 hours.

(Quick side sanity-checks: The SARS virion is 1.2x the size of Influenza's, the R0 is higher than Influenza's but over a longer time-period. Aerosol transmission feels plausible to me.)

comment by Spiracular · 2019-09-17T02:49:20.467Z · LW(p) · GW(p)

Bubbles in Thingspace

It occurred to me recently that, by analogy with ML, definitions might occasionally be more like "boundaries and scoring-algorithms in thingspace" than clusters per se (messier! no central example! no guaranteed contiguity!). Given the need to coordinate around definitions, most of them are going to have a simple and somewhat-meaningful center... but for some words, I suspect there are dislocated "bubbles" and oddly-shaped "smears" that use the same word for a completely different concept.

Homophones are one of the clearest examples; totally disconnected bubbles of substance.

Another example is when a word covers all cases except those where a different word applies better; in that case, you can expect to see a "bite" taken out of its space, or even a multidimensional empty bubble, or a doughnut-like gap in the definition. If the hole is centered ("the strongest cases go by a different term" actually seems like a very common phenomenon), it even makes the idea of a "central" definition rather meaningless, unless you're willing to fuse or switch terms.
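A small sketch of the centered-hole case, assuming a 2D thingspace and two made-up words: "scorching" claims the strongest cases near the origin, and "warm" covers everything else inside a larger radius. The labels, the radii, and the function name `label` are arbitrary illustrations, not anything from a real dataset.

```python
import math


def label(x, y):
    """Assign a word by boundary rules rather than by nearest cluster center."""
    r = math.hypot(x, y)
    if r < 1.0:
        return "scorching"  # the strongest cases go by a different term
    if r < 3.0:
        return "warm"       # everything else nearby
    return None             # outside both definitions


# Sample thingspace on a symmetric grid with spacing 0.5.
points = [(i / 2, j / 2) for i in range(-8, 9) for j in range(-8, 9)]
warm = [p for p in points if label(*p) == "warm"]

# The "central example" of warm, computed as the mean of its members.
cx = sum(x for x, _ in warm) / len(warm)
cy = sum(y for _, y in warm) / len(warm)

print((cx, cy))
print(label(cx, cy))
```

The average of all "warm" points lands in the hole, so the would-be central example of "warm" is labeled "scorching" instead. That is the doughnut: a well-defined region whose center is not a member.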

comment by Spiracular · 2019-06-13T21:49:02.622Z · LW(p) · GW(p)

This is pasted in from a very different context, so it doesn't match how I would format it as a post at all. But it paints a sketch of a system I might want to flesh out into a post sometime (although possibly not on LessWrong): Predictiveness vs Alignment as sometimes-diverging axes of Identity.

There's probably some very interesting things to be said about the points where external predictiveness and internal alignment differ, and I might be a bit odd in that I think I have strong reason to believe that a divergence between them is usually not a bad thing. In fact, at times, I'd call it a legitimately good thing, especially in cases where it feeds into motivation or counterbalances other forces.

Ex: For me in particular, anti-identifying as "sensitive" is load-bearing, and props up my ability to sometimes function as a less-than-excessively-sensitive human being. However, the sensitive aspect is always going to show through in the contexts where there's no action I can take to cover for it (ex: very easily disturbed by loud noise).

There's still some question in my head about what internal alignment even is. It feels to me like "Internal Predictiveness" is a subcomponent of it, and aspiration is another part of it, but neither describes it in full. But it felt like there was a meaningful cluster there, and it was easier to feel-out and measure than it was to describe, so for now I've stuck with that...

Q: What are some identity labels that apply to you? How aligned with or alienated from them do you feel?

I was only able to do this exercise if I separated out "Externally Predictive" (P-, NP, and P+) and "Internal Alignment," (AN-, N/A, AG+, and sometimes 404). These sometimes feel like totally divergent axes, and trying to catch both in a single axis felt deeply dishonest to me. Besides, finding the divergence between them is fun.

(I apologize for the sparse formatting on this; I'll try to find a way to reformat it better later.)

Predictive-First Identities

- Nerd & Geek

- Predictive (P+), Aligned (AG)

- Rationalist

- P+, AG+

- Biologist

- P+, full range from AG+ to bitterly AN- (Alienated)

- Programmer

- Moderately P+, N/A

- Extroverted Geek

- P+, Highly AN

- Introvert

- Slightly P- (used to be P+), AG

- Anxious

- Slightly P+, Slightly AN

Alignment/Alienation-First Identities

- Healthy Boundaries

- P+, AG+

- Visual Thinker

- NP to P+, AG+

- Queer

- NP (by design!), AG

- Smart

- P+, 404 (I can't properly internalize it in a healthy way unless I add more nuance to it)

- Not Smart/Good Enough but Getting Better by Doing it

- NP to Moderately P+, AG+

- Sensitive

- P+, AN

- Immature

- NP (used to be P-), AN

- Self-centered

- P-, AN-

comment by Spiracular · 2021-11-08T18:37:26.949Z · LW(p) · GW(p)

I do think some things are actually quite real and grounded? Everything is shoved through a lens as you perceive it, but not all lenses are incredibly warping.

If you're willing to work pretty close to the lower-levels of perception, and be quite careful while building things up, well and deeply-grounded shit EXISTS.

To give an evocative, and quite literally illustrative, example?

I think learning how to see the world well enough to do realistic painting is an exceptionally unwarping and grounding skill.

Any other method of seeing while drawing doubles up on your attentional biases and lets you see the warped result*. When you view it, you re-apply your lens to your lens' results, and see the square of any warping you were doing.
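As a toy illustration of the "square" here, assume (purely for the sake of the sketch) that the lens acts as a single scaling factor $k$ on some feature $x$ of the scene, with $k = 1$ meaning no warp:

$$\text{what you draw} = kx, \qquad \text{what you see when you look at the drawing} = k(kx) = k^2 x$$

With $k = 1.1$, a 10% exaggeration in perception reads as a 21% exaggeration on the page, since $1.1^2 = 1.21$. Real perceptual distortions are not a single multiplier, so this is only a way of picturing why the error is more visible in the drawing than in the original act of looking.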

It's no coincidence that most people who try will take one look at their first attempt at realistic drawing, cringe, and go "that's obviously wrong..."

When you can finally produce an illustration that isn't "obviously wrong," it stands as a piece of concrete evidence that you've learned some ability to engage at-will with your visual-perception, in a way that is relatively non-warping.

Or, to math-phrase it badly...

* Taking as a totally-unreasonable given, that your "skill at drawing" is good enough to not get in the way.

Replies from: Spiracular↑ comment by Spiracular · 2021-11-08T18:40:48.216Z · LW(p) · GW(p)

Working out how this applies to other fields is left as an exercise to the reader, because I'm lazy and the space of places I use this metaphor is large (and paradoxically, so overbuilt that it's probably quite warped).

Also: minimally-warped lenses aren't always the most useful lens! Getting work done requires channeling attention, and doing it disproportionately!

And most heavily-built things are pretty warped; it's usually a safe default assumption. Doesn't make heavily-built things pointless, that is not what I'm getting at.

...but stuff that hews close to base-reality has the important distinction of surviving most cataclysms basically-intact, and robustness is a virtue that works in their favor.