Shulman and Yudkowsky on AI progress

post by Eliezer Yudkowsky (Eliezer_Yudkowsky), CarlShulman · 2021-12-03T20:05:22.552Z · LW · GW · 16 commentsContents

9.14. Carl Shulman's predictions

[Shulman][20:30]

[Yudkowsky][21:38]

[Cotra][21:44]

[Shulman][21:44]

[Yudkowsky][21:50]

[Shulman][21:59]

[Yudkowsky][22:02]

[Shulman][22:06]

[Yudkowsky][22:07]

[Shulman][22:07]

[Yudkowsky][22:09]

[Shulman][22:09]

[Yudkowsky][22:09]

[Shulman][22:09]

[Yudkowsky][22:09]

[Shulman][22:12]

[Yudkowsky][22:12]

[Shulman][22:13]

[Yudkowsky][22:13]

[Shulman][22:15]

[Yudkowsky][22:15]

[Shulman][22:15]

[Yudkowsky][22:16]

[Shulman][22:18]

[Yudkowsky][22:19]

[Shulman][22:19]

[Yudkowsky][22:21]

[Shulman][22:24]

[Yudkowsky][22:27]

[Shulman][22:28]

[Yudkowsky][22:29]

[Shulman][22:29]

[Yudkowsky][22:31]

[Shulman][22:33]

[Yudkowsky][22:34]

[Shulman][22:36]

[Yudkowsky][22:36]

[Shulman][22:37]

[Yudkowsky][22:37]

[Shulman][22:37]

[Yudkowsky][22:37]

[Shulman][22:38]

[Yudkowsky][22:39]

10. September 22 conversation

10.1. Scaling laws

[Shah][3:05]

[Bensinger][3:36]

[Cotra][10:01]

[Yudkowsky][10:24]

[Cotra][10:35]

[Yudkowsky][10:55]

[Shulman][11:09]

[Yudkowsky][11:10]

[Shulman][11:18]

[Yudkowsky][11:19]

[Shulman][11:20]

[Yudkowsky][11:21]

[Shulman][11:23]

[Yudkowsky][11:23]

[Shulman][11:25]

None

16 comments

This post is a transcript of a discussion between Carl Shulman and Eliezer Yudkowsky, following up on a conversation with Paul Christiano and Ajeya Cotra [LW · GW].

Color key:

| Chat by Carl and Eliezer | Other chat |

9.14. Carl Shulman's predictions

[Shulman][20:30] I'll interject some points re the earlier discussion about how animal data relates to the 'AI scaling to AGI' thesis. 1. In humans it's claimed the IQ-job success correlation varies by job, For a scientist or doctor it might be 0.6+, for a low complexity job more like 0.4, or more like 0.2 for simple repetitive manual labor. That presumably goes down a lot with less in the way of hands, or focused on low density foods like baleen whales or grazers. If it's 0.1 for animals like orcas or elephants, or 0.05, then there's 4-10x less fitness return to smarts. 2. But they outmass humans by more than 4-10x. Elephants 40x, orca 60x+. Metabolically (20 watts divided by BMR of the animal) the gap is somewhat smaller though, because of metabolic scaling laws (energy scales with 3/4 or maybe 2/3 power, so ). https://en.wikipedia.org/wiki/Kleiber%27s_law If dinosaurs were poikilotherms, that's a 10x difference in energy budget vs a mammal of the same size, although there is debate about their metabolism. 3. If we're looking for an innovation in birds and primates, there's some evidence of 'hardware' innovation rather than 'software.' Herculano-Houzel reports in The Human Advantage (summarizing much prior work neuron counting) different observational scaling laws for neuron number with brain mass for different animal lineages.

[Editor’s Note: Quote source is “Cellular scaling rules for primate brains.”] In rodents brain mass increases with neuron count n^1.6, whereas it's close to linear (n^1.1) in primates. For cortex neurons and cortex mass 1.7 and 1.0. In general birds and primates are outliers in neuron scaling with brain mass. Note also that bigger brains with lower neuron density have longer communication times from one side of the brain to the other. So primates and birds can have faster clock speeds for integrated thought than a large elephant or whale with similar neuron count. 4. Elephants have brain mass ~2.5x human, and 3x neurons, but 98% of those are in the cerebellum (vs 80% in or less in most animals; these are generally the tiniest neurons and seem to do a bunch of fine motor control). Human cerebral cortex has 3x the neurons of the elephant cortex (which has twice the mass). The giant cerebellum seems like controlling the very complex trunk. https://nautil.us/issue/35/boundaries/the-paradox-of-the-elephant-brain Blue whales get close to human neuron counts with much larger brains. https://en.wikipedia.org/wiki/List_of_animals_by_number_of_neurons 5. As Paul mentioned, human brain volume correlation with measures of cognitive function after correcting for measurement error on the cognitive side is in the vicinity of 0.3-0.4 (might go a bit higher after controlling for non-functional brain volume variation, lower from removing confounds). The genetic correlation with cognitive function in this study is 0.24: https://www.nature.com/articles/s41467-020-19378-5 So it accounts for a minority of genetic influences on cognitive ability. We'd also expect a bunch of genetic variance that's basically disruptive mutations in mutation-selection balance (e.g. schizophrenia seems to be a result of that, with schizophrenia alleles under negative selection, but a big mutational target, with the standing burden set by the level of fitness penalty for it; in niches with less return to cognition the mutational surface will be cleaned up less frequently and have more standing junk). Other sources of genetic variance might include allocation of attention/learning (curiosity and thinking about abstractions vs immediate sensory processing/alertness), length of childhood/learning phase, motivation to engage in chains of thought, etc. Overall I think there's some question about how to account for the full genetic variance, but mapping it onto the ML experience with model size, experience and reward functions being key looks compatible with the biological evidence. I lean towards it, although it's not cleanly and conclusively shown. Regarding economic impact of AGI, I do not buy the 'regulation strangles all big GDP boosts' story. The BEA breaks down US GDP by industry here (page 11): https://www.bea.gov/sites/default/files/2021-06/gdp1q21_3rd_1.pdf As I work through sectors and the rollout of past automation I see opportunities for large-scale rollout that is not heavily blocked by regulation. Manufacturing is still trillions of dollars, and robotic factories are permitted and produced under current law, with the limits being more about which tasks the robots work for at low enough cost (e.g. this stopped Tesla plans for more completely robotic factories). Also worth noting manufacturing is mobile and new factories are sited in friendly jurisdictions. Software to control agricultural machinery and food processing is also permitted. Warehouses are also low-regulation environments with logistics worth hundreds of billions of dollars. See Amazon's robot-heavy warehouses limited by robotics software. Driving is hundreds of billions of dollars, and Tesla has been permitted to use Autopilot, and there has been a lot of regulator enthusiasm for permitting self-driving cars with humanlike accident rates. Waymo still hasn't reached that it seems and is lowering costs. Restaurants/grocery stores/hotels are around a trillion dollars. Replacing humans in vision/voice tasks to take orders, track inventory (Amazon Go style), etc is worth hundreds of billions there and mostly permitted. Robotics cheap enough to replace low-wage labor there would also be valuable (although a lower priority than high-wage work if compute and development costs are similar). Software is close to a half trillion dollars and the internals of software development are almost wholly unregulated. Finance is over a trillion dollars, with room for AI in sales and management. Sales and marketing are big and fairly unregulated. In highly regulated and licensed professions like healthcare and legal services, you can still see a licensee mechanically administer the advice of the machine, amplifying their reach and productivity. Even in housing/construction there's still great profits to be made by improving the efficiency of what construction is allowed (a sector worth hundreds of billions). If you're talking about legions of super charismatic AI chatbots, they could be doing sales, coaching human manual labor to effectively upskill it, and providing the variety of activities discussed above. They're enough to more than double GDP, even with strong Baumol effects/cost disease, I'd say. Although of course if you have AIs that can do so much the wages of AI and hardware researchers will be super high, and so a lot of that will go into the intelligence explosion, while before that various weaknesses that prevent full automation of AI research will also mess up activity in these other sectors to varying degrees. Re discontinuity and progress curves, I think Paul is right. AI Impacts went to a lot of effort assembling datasets looking for big jumps on progress plots, and indeed nukes are an extremely high percentile for discontinuity, and were developed by the biggest spending power (yes other powers could have bet more on nukes, but didn't, and that was related to the US having more to spend and putting more in many bets), with the big gains in military power per $ coming with the hydrogen bomb and over the next decade. For measurable hardware and software progress (Elo in games, loss on defined benchmarks), you have quite continuous hardware progress, and software progress that is on the same ballpark, and not drastically jumpy (like 10 year gains in 1), moreso as you get to metrics used by bigger markets/industries. I also agree with Paul's description of the prior Go trend, and how DeepMind increased $ spent on Go software enormously. That analysis was a big part of why I bet on AlphaGo winning against Lee Sedol at the time (the rest being extrapolation from the Fan Hui version and models of DeepMind's process for deciding when to try a match). | ||

[Yudkowsky][21:38] I'm curious about how much you think these opinions have been arrived at independently by yourself, Paul, and the rest of the OpenPhil complex? | ||

[Cotra][21:44] Little of Open Phil's opinions are independent of Carl, the source of all opinions

| ||

[Shulman][21:44] I did the brain evolution stuff a long time ago independently. Paul has heard my points on that front, and came up with some parts independently. I wouldn't attribute that to anyone else in that 'complex.' On the share of the economy those are my independent views. On discontinuities, that was my impression before, but the additional AI Impacts data collection narrowed my credences. TBC on the brain stuff I had the same evolutionary concern as you, which was I investigated those explanations and they still are not fully satisfying (without more micro-level data opening the black box of non-brain volume genetic variance and evolution over time). | ||

[Yudkowsky][21:50] so... when I imagine trying to deploy this style of thought myself to predict the recent past without benefit of hindsight, it returns a lot of errors. perhaps this is because I do not know how to use this style of thought, but. for example, I feel like if I was GPT-continuing your reasoning from the great opportunities still available in the world economy, in early 2020, it would output text like: "There are many possible regulatory regimes in the world, some of which would permit rapid construction of mRNA-vaccine factories well in advance of FDA approval. Given the overall urgency of the pandemic some of those extra-USA vaccines would be sold to individuals or a few countries like Israel willing to pay high prices for them, which would provide evidence of efficacy and break the usual impulse towards regulatory uniformity among developed countries, not to mention the existence of less developed countries who could potentially pay smaller but significant amounts for vaccines. The FDA doesn't seem likely to actively ban testing; they might under a Democratic regime, but Trump is already somewhat ideologically prejudiced against the FDA and would go along with the probable advice of his advisors, or just his personal impulse, to override any FDA actions that seemed liable to prevent tests and vaccines from making the problem just go away." | ||

[Shulman][21:59] Pharmaceuticals is a top 10% regulated sector, which is seeing many startups trying to apply AI to drug design (which has faced no regulatory barriers), which fits into the ordinary observed output of the sector. Your story is about regulation failing to improve relative to normal more than it in fact did (which is a dramatic shift, although abysmal relative to what would be reasonable). That said, I did lose a 50-50 bet on US control of the pandemic under Trump (although I also correctly bet that vaccine approval and deployment would be historically unprecedently fast and successful due to the high demand). | ||

[Yudkowsky][22:02] it's not impossible that Carl/Paul-style reasoning about the future - near future, or indefinitely later future? - would start to sound more reasonable to me if you tried writing out a modal-average concrete scenario that was full of the same disasters found in history books and recent news like, maybe if hypothetically I knew how to operate this style of thinking, I would know how to add disasters automatically and adjust estimates for them; so you don't need to say that to Paul, who also hypothetically knows but I do not know how to operate this style of thinking, so I look at your description of the world economy and it seems like an endless list of cheerfully optimistic ingredients and the recipe doesn't say how many teaspoons of disaster to add or how long to cook it or how it affects the final taste | ||

[Shulman][22:06] Like when you look at historical GDP stats and AI progress they are made up of a normal rate of insanity and screwups.

| ||

[Yudkowsky][22:07] on my view of reality, I'm the one who expects business-as-usual in GDP until shortly before the world ends, if indeed business-as-usual-in-GDP changes at all, and you have an optimistic recipe for Not That which doesn't come with an example execution containing typical disasters? | ||

[Shulman][22:07] Things like failing to rush through neural network scaling over the past decade to the point of financial limitation on model size, insanity on AI safety, anti-AI regulation being driven by social media's role in politics. | ||

[Yudkowsky][22:09] failing to deploy 99% robotic cars to new cities using fences and electronic gates | ||

[Shulman][22:09] Historical growth has new technologies and stupid stuff messing it up. | ||

[Yudkowsky][22:09] so many things one could imagine doing with current tech, and yet, they are not done, anywhere on Earth | ||

[Shulman][22:09] AI is going to be incredibly powerful tech, and after a historically typical haircut it's still a lot bigger. | ||

[Yudkowsky][22:09] so some of this seems obviously driven by longer timelines in general do you have things which, if they start to happen soonish and in advance of world GDP having significantly broken upward 3 years before then, cause you to say "oh no I'm in the Eliezerverse"? | ||

[Shulman][22:12] You may be confusing my views and Paul's. | ||

[Yudkowsky][22:12] "AI is going to be incredibly powerful tech" sounds like long timelines to me, though? | ||

[Shulman][22:13] No. | ||

[Yudkowsky][22:13] like, "incredibly powerful tech for longer than 6 months which has time to enter the economy" if it's "incredibly powerful tech" in the sense of immediately killing everybody then of course we agree, but that didn't seem to be the context | ||

[Shulman][22:15] I think broadly human-level AGI means intelligence explosion/end of the world in less than a year, but tons of economic value is likely to leak out before that from the combination of worse general intelligence with AI advantages like huge experience. | ||

[Yudkowsky][22:15] my worldview permits but does not mandate a bunch of weirdly powerful shit that people can do a couple of years before the end, because that would sound like a typically messy and chaotic history-book scenario especially if it failed to help us in any way | ||

[Shulman][22:15] And the economic impact is increasing superlinearly (as later on AI can better manage its own introduction and not be held back by human complementarities on both the production side and introduction side). | ||

[Yudkowsky][22:16] my worldview also permits but does not mandate that you get up to the chimp level, chimps are not very valuable, and once you can do fully AGI thought it compounds very quickly it feels to me like the Paul view wants something narrower than that, a specific story about a great economic boom, and it sounds like the Carl view wants something that from my perspective seems similarly narrow which is why I keep asking "can you perhaps be specific about what would count as Not That and thereby point to the Eliezerverse" | ||

[Shulman][22:18] We're in the Eliezerverse with huge kinks in loss graphs on automated programming/Putnam problems. Not from scaling up inputs but from a local discovery that is much bigger in impact than the sorts of jumps we observe from things like Transformers. | ||

[Yudkowsky][22:19] ...my model of Paul didn't agree with that being a prophecy-distinguishing sign to first order (to second order, my model of Paul agrees with Carl for reasons unbeknownst to me) I don't think you need something very much bigger than Transformers to get sharp loss drops? | ||

[Shulman][22:19] not the only disagreement but that is a claim you seem to advance that seems bogus on our respective reads of the data on software advances | ||

[Yudkowsky][22:21] but, sure, "huge kinks in loss graphs on automated programming / Putnam problems" sounds like something that is, if not mandated on my model, much more likely than it is in the Paulverse. though I am a bit surprised because I would not have expected Paul to be okay betting on that. like, I thought it was an Eliezer-view unshared by Paul that this was a sign of the Eliezerverse. but okeydokey if confirmed to be clear I do not mean to predict those kinks in the next 3 years specifically they grow in probability on my model as we approach the End Times | ||

[Shulman][22:24] I also predict that AI chip usage is going to keep growing at enormous rates, and that the buyers will be getting net economic value out of them. The market is pricing NVDA (up more than 50x since 2014) at more than twice Intel because of the incredible growth rate, and it requires more crazy growth to justify the valuation (but still short of singularity). Although NVDA may be toppled by other producers. Similarly for increasing spending on model size (although slower than when model costs were <$1M). | ||

[Yudkowsky][22:27] relatively more plausible on my view, first because it's arguably already happening (which makes it easier to predict) and second because that can happen with profitable uses of AI chips which hover around on the economic fringes instead of feeding into core production cycles (waifutech) it is easy to imagine massive AI chip usage in a world which rejects economic optimism and stays economically sad while engaging in massive AI chip usage so, more plausible | ||

[Shulman][22:28] What's with the silly waifu example? That's small relative to the actual big tech company applications (where they quickly roll it into their software/web services or internal processes, which is not blocked by regulation and uses their internal expertise). Super chatbots would be used as salespeople, counselors, non-waifu entertainment. It seems randomly off from existing reality. | ||

[Yudkowsky][22:29] seems more... optimistic, Kurzweilian?... to suppose that the tech gets used correctly the way a sane person would hope it would be used | ||

[Shulman][22:29] Like this is actual current use. Hollywood and videogames alone are much bigger than anime, software is bigger than that, Amazon/Walmart logistics is bigger. | ||

[Yudkowsky][22:31] Companies using super chatbots to replace customer service they already hated and previously outsourced, with a further drop in quality, is permitted by the Dark and Gloomy Attempt To Realistically Continue History model I am on board with wondering if we'll see sufficiently advanced videogame AI, but I'd point out that, again, that doesn't cycle core production loops harder | ||

[Shulman][22:33] OK, using an example of allowable economic activity that obviously is shaving off more than an order of magnitude on potential market is just misleading compared to something like FAANGSx10. | ||

[Yudkowsky][22:34] so, like, if I was looking for places that would break upward, I would be like "universal translators that finally work" but I was also like that when GPT-2 came out and it hasn't happened even though you would think GPT-2 indicated we could get enough real understanding inside a neural network that you'd think, cognition-wise, it would suffice to do pretty good translation there are huge current economic gradients pointing to the industrialization of places that, you might think, could benefit a lot from universal seamless translation | ||

[Shulman][22:36] Current translation industry is tens of billions, English learning bigger. | ||

[Yudkowsky][22:36] Amazon logistics are an interesting point, but there's the question of how much economic benefit is produced by automating all of it at once, Amazon cannot ship 10x as much stuff if their warehouse costs go down by 10x. | ||

[Shulman][22:37] Definitely hundreds of billions of dollars of annual value created from that, e.g. by easing global outsourcing. | ||

[Yudkowsky][22:37] if one is looking for places where huge economic currents could be produced, AI taking down what was previously a basic labor market barrier, would sound as plausible to me as many other things | ||

[Shulman][22:37] Amazon has increased sales faster than it lowered logistics costs, there's still a ton of market share to take. | ||

[Yudkowsky][22:37] I am able to generate cheerful scenarios, eg if I need them for an SF short story set in the near future where billions of people are using AI tech on a daily basis and this has generated trillions in economic value | ||

[Shulman][22:38] Bedtime for me though. | ||

[Yudkowsky][22:39] I don't feel like particular cheerful scenarios like that have very much of a track record of coming true. I would not be shocked if the next GPT-jump permits that tech, and I would then not be shocked if use of AI translation actually did scale a lot. I would be much more impressed, with Earth having gone well for once and better than I expected, if that actually produced significantly more labor mobility and contributed to world GDP. I just don't actively, >50% expect things going right like that. It seems to me that more often in real life, things do not go right like that, even if it seems quite easy to imagine them going right. good night! |

10. September 22 conversation

10.1. Scaling laws

[Shah][3:05] My attempt at a reframing: Places of agreement:

Places of disagreement:

To the extent this is accurate, it doesn't seem like you really get to make a bet that resolves before the end times, since you agree on basically everything until the point at which Eliezer predicts that you get the zero-to-one transition on the underlying driver of impact. I think all else equal you probably predict that Eliezer has shorter timelines to the end times than Paul (and that's where you get things like "Eliezer predicts you don't have factory-generating factories before the end times whereas Paul does"). (Of course, all else is not equal.) | |

[Bensinger][3:36]

Eliezer said in Jan 2017 that the Caplan bet was kind of a joke: https://www.econlib.org/archives/2017/01/my_end-of-the-w.html/#comment-166919. Albeit "I suppose one might draw conclusions from the fact that, when I was humorously imagining what sort of benefit I could get from exploiting this amazing phenomenon, my System 1 thought that having the world not end before 2030 seemed like the most I could reasonably ask." | |

[Cotra][10:01] @RobBensinger sounds like the joke is that he thinks timelines are even shorter, which strengthens my claim about strong timing predictions? Now that we clarified up-thread that Eliezer's position is not that there was a giant algorithmic innovation in between chimps and humans, but rather that there was some innovation in between dinosaurs and some primate or bird that allowed the primate/bird lines to scale better, I'm now confused about why it still seems like Eliezer expects a major innovation in the future that leads to deep/general intelligence. If the evidence we have is that evolution had some innovation like this, why not think that the invention of neural nets in the 60s or the invention of backprop in the 80s or whatever was the corresponding innovation in AI development? Why put it in the future? (Unless I'm misunderstanding and Eliezer doesn't really place very high probability on "AGI is bottlenecked by an insight that lets us figure out how to get the deep intelligence instead of the shallow one"?) Also if Eliezer would count transformers and so on as the kind of big innovation that would lead to AGI, then I'm not sure we disagree. I feel like that sort of thing is factored into the software progress trends used to extrapolate progress, so projecting those forward folds in expectations of future transformers But it seems like Eliezer still expects one or a few innovations that are much larger in impact than the transformer? I'm also curious what Eliezer thinks of the claim "extrapolating trends automatically folds in the world's inadequacy and stupidness because the past trend was built from everything happening in the world including the inadequacy" | |

[Yudkowsky][10:24] Ajeya asked before, and I see I didn't answer:

If you mean the best/luckiest people, they're already there. If you mean that say Mike Blume starts getting paid $20m/yr base salary, then I cheerfully say that I'm willing to call that a narrower prediction of the Paulverse than of the Eliezerverse.

Well, of course, because now it's a headline figure and Goodhart's Law applies, and the Earlier point where this happens is where somebody trains a useless 10T param model using some much cheaper training method like MoE just to be the first to get the headline where they say they did that, if indeed that hasn't happened already. But even apart from that, a 10T param model sure sounds lots like a steady stream of headlines we've already seen, even for cases where it was doing something useful like GPT-3, so I would not feel surprised by more headlines like this. I will, however, be alarmed (not surprised) relatively more by ability improvements, than headline figure improvements, because I am not very impressed by 10T param models per se. In fact I will probably be more surprised by ability improvements after hearing the 10T figure, than my model of Paul will claim to be, because my model of Paul much more associates 10T figures with capability increases. Though I don't understand why this prediction success isn't more than counterbalanced by an implied sequence of earlier failures in which Paul's model permitted much more impressive things to happen from 1T Goodharted-headline models, that didn't actually happen, that I expected to not happen - eg the current regime with MoE headlines - so that by the time that an impressive 10T model comes along and Imaginary Paul says 'Ah yes I claim this for a success', Eliezer's reply is 'I don't understand the aspect of your theory which supposedly told you in advance that this 10T model would scale capabilities, but not all the previous 10T models or the current pointless-headline 20T models where that would be a prediction failure. From my perspective, people eventually scaled capabilities, and param-scaling techniques happened to be getting more powerful at the same time, and so of course the Earliest tech development to be impressive was one that included lots of params. It's not a coincidence, but it's also not a triumph for the param-driven theory per se, because the news stories look similar AFAICT in a timeline where it's 60% algorithms and 40% params." | |

[Cotra][10:35] MoEs have very different scaling properties, for one thing they run on way fewer FLOP/s (which is just as if not more important than params, though we use params as a shorthand when we're talking about "typical" models which tend to have small constant FLOP/param ratios). If there's a model with a similar architecture to the ones we have scaling laws about now, then at 10T params I'd expect it to have the performance that the scaling laws would expect it to have Maybe something to bet about there. Would you say 10T param GPT-N would perform worse than the scaling law extraps would predict? It seems like if we just look at a ton of scaling laws and see where they predict benchmark perf to get, then you could either bet on an upward or downward trend break and there could be a bet? Also, if "large models that aren't that impressive" is a ding against Paul's view, why isn't GPT-3 being so much better than GPT-2 which in turn was better than GPT-1 with little fundamental architecture changes not a plus? It seems like you often cite GPT-3 as evidence for your view But Paul (and Dario) at the time predicted it'd work. The scaling laws work was before GPT-3 and prospectively predicted GPT-3's perf | |

[Yudkowsky][10:55] I guess I should've mentioned that I knew MoEs ran on many fewer FLOP/s because others may not know I know that; it's an obvious charitable-Paul-interpretation but I feel like there's multiple of those and I don't know which, if any, Paul wants to claim as obvious-not-just-in-retrospect. Like, ok, sure people talk about model size. But maybe we really want to talk about gradient descent training ops; oh, wait, actually we meant to talk about gradient descent training ops with a penalty figure for ops that use lower precision, but nowhere near a 50% penalty for 16-bit instead of 32-bit; well, no, really the obvious metric is the one in which the value of a training op scales logarithmically with the total computational depth of the gradient descent (I'm making this up, it's not an actual standard anywhere), and that's why this alternate model that does a ton of gradient descent ops while making less use of the actual limiting resource of inter-GPU bandwidth is not as effective as you'd predict from the raw headline figure about gradient descent ops. And of course we don't want to count ops that are just recomputing a gradient checkpoint, ha ha, that would be silly. It's not impossible to figure out these adjustments in advance. But part of me also worries that - though this is more true of other EAs who will read this, than Paul or Carl, whose skills I do respect to some degree - that if you ran an MoE model with many fewer gradient descent ops, and it did do something impressive with 10T params that way, people would promptly do a happy dance and say "yay scaling" not "oh wait huh that was not how I thought param scaling worked". After all, somebody originally said "10T", so clearly they were right! And even with respect to Carl or Paul I worry about looking back and making "obvious" adjustments and thinking that a theory sure has been working out fine so far. To be clear, I do consider GPT-3 as noticeable evidence for Dario's view and for Paul's view. The degree to which it worked well was more narrowly a prediction of those models than mine. Thing about narrow predictions like that, if GPT-4 does not scale impressively, the theory loses significantly more Bayes points than it previously gained. Saying "this previously observed trend is very strong and will surely continue" will quite often let you pick up a few pennies in front of the steamroller, because not uncommonly, trends do continue, but then they stop and you lose more Bayes points than you previously gained. I do think of Carl and Paul as being better than this. But I also think of the average EA reading them as being fooled by this. | |

[Shulman][11:09] The scaling laws experiments held architecture fixed, and that's the basis of the prediction that GPT-3 will be along the same line that held over previous OOM, most definitely not switch to MoE/Switch Transformer with way less resources.

| |

[Yudkowsky][11:10] You can redraw your graphs afterwards so that a variant version of Moore's Law continued apace, but back in 2000, everyone sure was impressed with CPU GHz going up year after year and computers getting tangibly faster, and that version of Moore's Law sure did not continue. Maybe some people were savvier and redrew the graphs as soon as the physical obstacles became visible, but of course, other people had predicted the end of Moore's Law years and years before then. Maybe if superforecasters had been around in 2000 we would have found that they all sorted it out successfully, maybe not. So, GPT-3 was $12m to train. In May 2022 it will be 2 years since GPT-3 came out. It feels to me like the Paulian view as I know how to operate it, says that GPT-3 has now got some revenue and exhibited applications like Codex, and was on a clear trend line of promise, so somebody ought to be willing to invest $120m in training GPT-4, and then we get 4x algorithmic speedups and cost improvements since then (iirc Paul said 2x/yr above? though I can't remember if that was his viewpoint or mine?) so GPT-4 should have 40x 'oomph' in some sense, and what that translates to in terms of intuitive impact ability, I don't know. | |

[Shulman][11:18] The OAI paper had 16 months (and is probably a bit low because in the earlier data people weren't optimizing for hardware efficiency much): https://openai.com/blog/ai-and-efficiency/

Projecting this: https://arxiv.org/abs/2001.08361 | |

[Yudkowsky][11:19] 30x then. I would not be terribly surprised to find that results on benchmarks continue according to graph, and yet, GPT-4 somehow does not seem very much smarter than GPT-3 in conversation. | |

[Shulman][11:20] There are also graphs of the human impressions of sense against those benchmarks and they are well correlated. I expect that to continue too.

| |

[Yudkowsky][11:21] Stuff coming uncorrelated that way, sounds like some of the history I lived through, where people managed to make the graphs of Moore's Law seem to look steady by rejiggering the axes, and yet, between 1990 and 2000 home computers got a whole lot faster, and between 2010 and 2020 they did not. This is obviously more likely (from my perspective) to break down anywhere between GPT-3 and GPT-6, than between GPT-3 and GPT-4. Is this also part of the Carl/Paul worldview? Because I implicitly parse a lot of the arguments as assuming a necessary premise which says, "No, this continues on until doomsday and I know it Kurzweil-style." | |

[Shulman][11:23] Yeah I expect trend changes to happen, more as you go further out, and especially more when you see other things running into barriers or contradictions. Re language models there is some of that coming up with different scaling laws colliding when the models get good enough to extract almost all the info per character (unless you reconfigure to use more info-dense data). | |

[Yudkowsky][11:23] Where "this" is the Yudkowskian "the graphs are fragile and just break down one day, and their meanings are even more fragile and break down earlier". | |

[Shulman][11:25] Scaling laws working over 8 or 9 OOM makes me pretty confident of the next couple, not confident about 10 further OOM out. |

16 comments

Comments sorted by top scores.

comment by johnswentworth · 2021-12-03T20:45:43.920Z · LW(p) · GW(p)

I read this line:

I also correctly bet that vaccine approval and deployment would be historically unprecedently fast and successful due to the high demand).

... and I was like "there is no way in hell that this was unprecedentedly fast". The first likely-counterexample which sprang to mind was the 1957 influenza pandemic, so I looked it up. The timeline goes roughly like this:

The first cases were reported in Guizhou of southern China, in 1956[6][7] or in early 1957.[1][3][8][9] They were soon reported in the neighbouring province of Yunnan in late February or early March 1957.[9][10] By the middle of March, the flu had spread all over China.[9][11]

The People's Republic of China was not a member of the World Health Organization at the time (not until 1981[12]), and did not inform other countries about the outbreak.[11] The United States CDC, however, states that the flu was "first reported in Singapore in February 1957".

[...]

The microbiologist Maurice Hilleman was alarmed by pictures of those affected by the virus in Hong Kong that were published in The New York Times. He obtained samples of the virus from a US Navy doctor in Japan. The Public Health Service released the virus cultures to vaccine manufacturers on 12 May 1957, and a vaccine entered trials at Fort Ord on 26 July and Lowry Air Force Base on 29 July.[20]

The number of deaths peaked the week ending 17 October, with 600 reported in England and Wales.[18] The vaccine was available in the same month in the United Kingdom.

So it sure sounds like they were faster in 1957. (Though that article does note that the 1957 vaccine "was initially available only in limited quantities". Even so, it sounds like it took about 5 months to do what took us about 11 months in the 2020 pandemic.)

Replies from: matthew-barnett↑ comment by Matthew Barnett (matthew-barnett) · 2021-12-03T21:39:59.763Z · LW(p) · GW(p)

My understanding is that the correct line is something like, "The COVID-19 vaccines were developed and approved unprecedentedly fast, excluding influenza vaccines." If you want to find examples of short vaccine development, you don't need to go all the way back to the 1957 influenza pandemic. For the 2009 Swine flu pandemic,

Analysis of the genetic divergence of the virus in samples from different cases indicated that the virus jumped to humans in 2008, probably after June, and not later than the end of November,[38] likely around September 2008... By 19 November 2009, doses of vaccine had been administered in over 16 countries.

And more obviously, the flu shot is modified yearly to keep up-to-date with new variants. Wikipedia notes that influenza vaccines were first successfully distributed in the 1940s, after developement began in 1931.

When considering vaccines other than influenza shot, this 2017 EA forum post [EA · GW] from Peter Wildeford is informative. He tracks the development history of "important" vaccines, as he notes,

This is not intended to be an exhaustive list of all vaccines, but is intended to be exhaustive of all vaccines that would be considered "important", such as the vaccines on the WHO list of essential medicines and notable vaccines under current development.

His bottom line:

Taken together and weighing these three sources of evidence evenly, this suggests an average of 29 years for the typical vaccine.

No vaccine on his list had been researched, manufactured, and distributed in less than one year. The closest candidate is the Rabies vaccine, which had a 4 year timeline, from 1881-1885.

comment by RomanS · 2021-12-04T20:48:04.443Z · LW(p) · GW(p)

so, like, if I was looking for places that would break upward, I would be like "universal translators that finally work"

The story of DeepL might be of relevance.

DeepL has created a translator that is noticeably better than Google Translate. Their translations are often near-flawless.

The interesting thing is: DeepL is a small company that has OOMs less compute, data, and researchers than Google.

Their small team has beaten Google (!), at the Google's own game (!), by the means of algorithmic innovation.

Replies from: Owain_Evans↑ comment by Owain_Evans · 2021-12-05T15:59:17.070Z · LW(p) · GW(p)

Interesting. Can you point to a study by external researchers (not DeepL) that compares DeepL to other systems (such as Google Translate) quantitatively? After a quick search, I could only find one paper, which was just testing some tricky idioms in Spanish and didn't find significant differences between DeepL and Google. (Wikipedia links to this archived page of comparisons conducted by DeepL but there's no information about the methodology used and the difference in performance seem too big to be credible to me.)

Replies from: RomanS↑ comment by RomanS · 2021-12-05T17:21:10.618Z · LW(p) · GW(p)

The primary source of my quality assessment is my personal experience with both Google Translate and DeepL. I speak 3 languages, and often have to translate between them (2 of them are not my native languages, including English).

As I understand, making such comparisons in a quantitative manner is tricky, as there are no standardized metrics, there are many dimensions of translation quality, and the quality strongly depends on the language pair and the input text.

Google Scholar lists a bunch of papers that compare Google Translate and DeepL. I checked a few, and they're all over the place. For example, one claims that Google is better, another claims that they score the same, and yet another claims that DeepL is better.

My tentative conclusion: by quantitative metrics, DeepL is in the same league as Google Translate, and might be better by some metrics. Which is still an impressive achievement by DeepL, considering the fact that they have orders-of-magnitude less data, compute, and researchers than Google.

Replies from: matthew-barnett↑ comment by Matthew Barnett (matthew-barnett) · 2021-12-05T18:19:56.582Z · LW(p) · GW(p)

My tentative conclusion: by quantitative metrics, DeepL is in the same league as Google Translate, and might be better by some metrics. Which is still an impressive achievement by DeepL, considering the fact that they have orders-of-magnitude less data, compute, and researchers than Google.

Do they though? Google is a large company, certainty, but they might not actually give Google Translate researchers a lot of funding. Google gets revenue from translation by offering it as a cloud service, but I found this thread from 2018 where someone said,

Google Translate and Cloud Translation API are two different products doing some similar functions. It is safe to assume they have different algorithms and differences in translations are not only common but expected.

From this, it appears that there is little incentive for Google to improve the algorithms on Google Translate.

Replies from: RomanS↑ comment by RomanS · 2021-12-05T19:04:58.628Z · LW(p) · GW(p)

We could try to guesstimate how much money Google spends on the R&D for Google Translate.

Judging by these data, Google Translate has 500 million daily users, or 11% of the total Internet population worldwide.

Not sure how much it costs Google to run a service of such a scale, but I would guesstimate that it's in the order of ~$1 bln / year.

If they spend 90% of the total Translate budget on keeping the service online, and 10% on the R&D, they have ~$100 mln / year on the R&D, which is likely much more than the total income of DeepL.

It is unlikely that Google is spending ~$100 mln / year on something without a very good reason.

One of the possible reasons is training data. Judging by the same source, Google Translate generates 100 billion words / day. It means, Google gets about the same massive amount of new user-generated training data per day.

The amount is truly massive: thanks to Google Translate, Google gets the Library-of-Congress worth of new data per year, multiplied by 10.

And some of the new data is hard to get by other means (e.g. users trying to translate their personal messages from a low-resource language like Basque or Azerbaijani).

Replies from: T3t↑ comment by RobertM (T3t) · 2021-12-06T02:22:06.561Z · LW(p) · GW(p)

I think your estimate for the operating costs (mostly compute) of running a service that has 500 million daily users (with probably well under 10 requests per user on average, and pretty short average input) is too high by 4 orders of magnitude, maybe even 5. My main uncertainty is how exactly they're servicing each request (i.e. what % of requests are novel enough to actually need to get run though some more-expensive ML model instead of just returning a cached result).

5 billion requests per day translates to ~58k requests per second. You might have trouble standing up a service that handles that level of traffic on a 10k/year compute budget if you buy compute on a public cloud (i.e. AWS/GCP) but those have pretty fat margins so the direct cost to Google themselves would be a lot lower.

comment by Rob Bensinger (RobbBB) · 2021-12-03T21:03:18.878Z · LW(p) · GW(p)

Note: I've written up short summaries of each entry in this sequence so far on https://intelligence.org/late-2021-miri-conversations/, and included links to audio recordings of most of the posts.

comment by Sammy Martin (SDM) · 2021-12-09T11:49:35.651Z · LW(p) · GW(p)

Compare this,

[Shulman][22:18]

We're in the Eliezerverse with huge kinks in loss graphs on automated programming/Putnam problems.

Not from scaling up inputs but from a local discovery that is much bigger in impact than the sorts of jumps we observe from things like Transformers.

[Yudkowsky][22:21]

but, sure, "huge kinks in loss graphs on automated programming / Putnam problems" sounds like something that is, if not mandated on my model, much more likely than it is in the Paulverse. though I am a bit surprised because I would not have expected Paul to be okay betting on that.

to this,

[Rohin] To the extent this is accurate, it doesn't seem like you really get to make a bet that resolves before the end times, since you agree on basically everything until the point at which Eliezer predicts that you get the zero-to-one transition on the underlying driver of impact.

Eliezer does (at least weakly) expect more trend breaks before The End (even on metrics that aren't qualitative measures of impressiveness/intelligence, but just things like model loss functions), despite the fact that Rohin's summary of his view is (I think) roughly accurate.

What explains this? I think it's something roughly like, part of the reason Eliezer expects a sudden transition when we reach the core of generality in the first place is because he thinks that's how things usually go in the history of tech/AI progress - there's also specific reasons to think it will happen in the case of finding the core of generality, but there are also general reasons [LW(p) · GW(p)]. See e.g. this from Eliezer:

well, the Eliezerverse has more weird novel profitable things, because it has more weirdness

I take 'more weirdness' to mean something like more discoveries that induce sudden improvements out there in general.

So I think that's why his view does make (weaker) differential predictions about earlier events that we can test, not because the zero-to-one core of generality hypothesis predicts anything about narrow AI progress, but because some of the beliefs that led to that hypothesis do.

We can see there's two (connected) lines of argument and that Eliezer and Paul/Carl/Richard have different things to say on each - 1 is more localized and about seeing what we can learn about AGI specifically, and 2 is about reference class reasoning and what tech progress in general tells us about AGI:

- Specific to AGI: What can we infer from human evolution and interrogating our understanding of general intelligence (?) about whether AGI will arrive suddenly?

- Reference Class: What can we infer about AGI progress from the general record of technological progress, especially how common big impacts are when there's lots of effort and investment?

My sense is that Eliezer answers

- Big update for Eliezer's view: This tells us a lot, in particular we learn evolution got to the core of generality quickly, so AI progress will probably get there quickly as well. Plus, Humans are an existence proof for the core of generality, which suggests our default expectation should be sudden progress when we hit the core.

- Smaller update for Eliezer's view: This isn't that important - there's no necessary connection between AGI and e.g. bridges or nukes. But, you can at least see that there's not a strong consistent track record of continuous improvement once you understand the historical record the right way (plus the underlying assumption in 2 that there will be a lot of effort and investment is probably wrong as well). Nonetheless, if you avoid retrospective trend-fitting and look at progress in the most natural (qualitative?) way, you'll see that early discoveries that go from 0 to 1 are all over the place - Bitcoin, the Wright flyer, nuclear weapons are at least not crazy exceptions and quite possibly the default.

While Paul and Carl(?) answer,

- Smaller update for Paul's view: The disanalogies between AI progress and Evolution all point in the direction of AI progress being smoother than evolution (we're intelligently trying to find the capabilities we want) - we get a weak update in favour of the smooth progress view from understanding this disanalogy between AI progress and evolution, but really we don't learn much, except that there aren't any good reasons to think there are only a few paths to a large set of powerful world affecting capabilities. Also, the core of generality idea is wrong, so the idea that Humans are an existence proof for it or that evolution tells us something about how to find it is wrong.

- Big update for Paul's view: reasoning from the reference class of 'technologies where there are many opportunities for improvement and many people trying different things at once' lets us see why expecting smooth progress should be the default. It's because as long as there are lots of paths to improvement in the underlying capability landscape (which is the default because that's how the world works by default), and there are lots of people trying to make improvements in different ways, the incremental changes add up to smooth outputs.

So Eliezer's claim that Paul et al's trend-fitting must include

doing something sophisticated but wordless, where they fit a sophisticated but wordless universal model of technological permittivity to bridge lengths, then have a wordless model of cognitive scaling in the back of their minds

is sort of correct, but the model isn't really sophisticated or wordless.

The model is: as long as the underlying 'capability landscape' offers many paths to improvements, not just a few really narrow ones that swamp everything else, lots of people intelligently trying lots of different approaches will lead to lots of small discoveries that add up. Additionally, most examples of tech progress look like 'multiple ways of doing something that add up', this is confirmed by the historical record.

And then the model of cognitive scaling consists of (among other things) specific counterarguments to the claim that AGI progress is one of those cases with a few big paths to improvement (e.g. Evolution doesn't give us evidence that AGI progress will be sudden).

[Shulman]

As I work through sectors and the rollout of past automation I see opportunities for large-scale rollout that is not heavily blocked by regulation...[Long list of examples]

[Yudkowsky]

so... when I imagine trying to deploy this style of thought myself to predict the recent past without benefit of hindsight, it returns a lot of errors. perhaps this is because I do not know how to use this style of thought...

..."There are many possible regulatory regimes in the world, some of which would permit rapid construction of mRNA-vaccine factories well in advance of FDA approval. Given the overall urgency of the pandemic some of those extra-USA vaccines would be sold to individuals or a few countries like Israel willing to pay high prices for them, which would provide evidence of efficacy and break the usual impulse towards regulatory uniformity among developed countries..."

On Carl's view, it sure seems like you'd just say something like "Healthcare is very overregulated, there will be an unusually strong effort anyway in lots of countries because Covid is an emergency, so it'll be faster by some hard to predict amount but still bottlenecked by regulatory pressures." And indeed the fastest countries got there in ~10 months instead of the multiple years predicted by superforecasters, or the ~3 months it would have taken with immediate approval [EA · GW].

The obvious object-level difference between Eliezer 'applying' Carl's view to retrodict covid vaccine rollout and Carl's prediction about AI is that Carl is saying there's an enormous number of potential applications of intermediately general AI tech, and many of them aren't blocked by regulation, while Eliezer's attempted operating of Carl's view for covid vaccines is saying "There are many chances for countries with lots of regulatory barriers to do the smart thing".

The vaccine example is a different argument than AI predictions, because what Carl is saying is that there are many completely open goals for improvement like automating factories and call centres etc. not that there are many opportunities to avoid the regulatory barriers that will block everything by default.

But it seems like Eliezer is making a more outside view appeal, i.e. approach stories where big innovations are used wisely with a lot of scepticism because of our past record, even if you can tell a story about why it will be quite different this time.

comment by Gram Stone · 2021-12-04T00:58:18.951Z · LW(p) · GW(p)

Now that we clarified up-thread that Eliezer's position is not that there was a giant algorithmic innovation in between chimps and humans, but rather that there was some innovation in between dinosaurs and some primate or bird that allowed the primate/bird lines to scale better

Where was this clarified...? My Eliezer-model says "There were in fact innovations that arose in the primate and bird lines which allowed the primate and bird lines to scale better, but the primate line still didn't scale that well, so we should expect to discover algorithmic innovations, if not giant ones, during hominin evolution, and one or more of these was the core of overlapping somethingness that handles chipping handaxes but also generalizes to building spaceships."

If we're looking for an innovation in birds and primates, there's some evidence of 'hardware' innovation rather than 'software.'

For a speculative software innovation hypothesis, there seem to be cognitive adaptations that arose in the LCA of all anthropoids for mid-level visual representations e.g. glossiness, above the level of, say, lines at a particular orientation, and below the level of natural objects, which seem like an easy way to exapt into categories, then just stack more layers for generalization and semantic memory. These probably reduce cost and error by allowing the reliable identification of foraging targets at a distance. There seem to be corresponding cognitive adaptations for auditory representations that strongly correlate with foraging targets, e.g. calls of birds that also target the fruits of angiosperm trees. Maybe birds and primates were under similar selection for mid-level visual representations that easily exapt into categories, etc.

comment by lsusr · 2021-12-04T00:44:59.830Z · LW(p) · GW(p)

Whenever birds are an outlier I ask myself "is it because birds fly?" Bird cells (especially bird mitochondria) are intensely optimized for power output because flying demands a high power-to-weight ratio. I think bird cells' individually high power output produces brains that can perform more (or better) calculations per unit volume/mass.

comment by calef · 2021-12-04T01:28:46.727Z · LW(p) · GW(p)

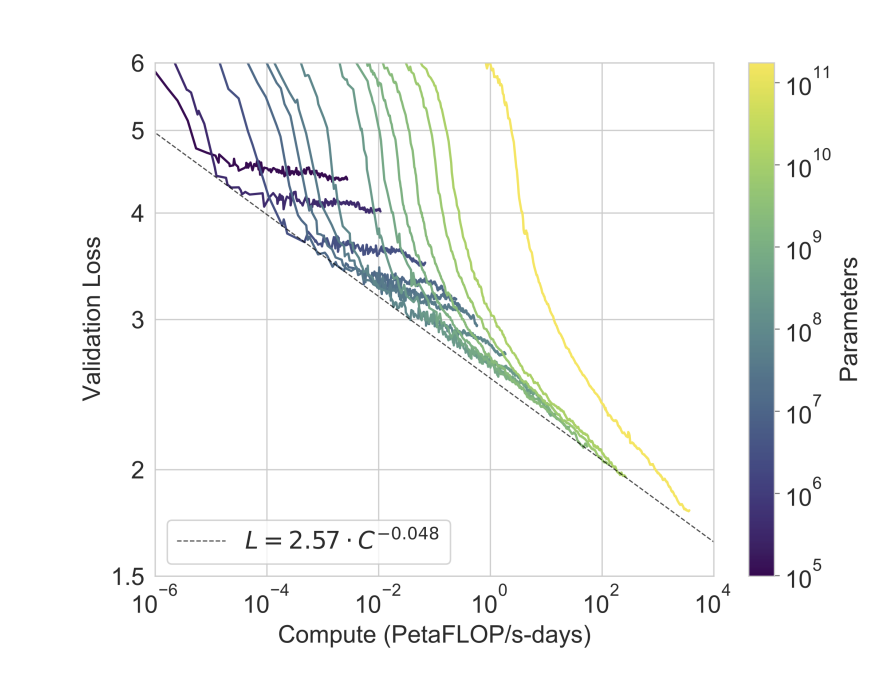

For what it's worth, the most relevant difficult-to-fall-prey-to-Goodheartian-tricks measure is probably cross entropy validation loss, as shown in this figure from the GPT-3 paper:

Serious scaling efforts are much more likely to emphasize progress here over Parameter Count Number Bigger clickbait.

Further, while this number will keep going down, we're going to crash into the entropy of human generated text at some point. Whether that's within 3 OOM or ten is anybody's guess, though.

comment by Veedrac · 2021-12-04T01:30:21.136Z · LW(p) · GW(p)

I have some comments on things Eliezer has said. I don't expect these disagreements are very important to the main questions, because I tend to agree with him overall despite it.

my worldview also permits but does not mandate that you get up to the chimp level [...]

A naïve model of intelligence is a linear axis, that puts everything on a simple trendline with one-dimensional distances. I assume most people here understand that this is a gross oversimplification. Intelligence is made of multiple pieces, which can have unique strengths. There is still such a thing as generality of intelligence, that at some point you have enough tools to dynamically apply your reasoning to a great many more things than those tools were originally adapted for. This ability does seem to have some degree of scale, in that a human is more general than a chimp is more general than a mouse, though it also seems to be fairly sharp-edged, in that the difference in generality between a human and a chimp seems much greater than between a chimp and a mouse.

Because of the great differences between computer systems and biological ones, the individual components of computer intelligence (whether necessary for generality or not) when measured relative to a human tend to jump quickly between zero, when the program doesn't have that ability, and effectively infinite, when the program has that ability. There is also a large set of relations between capabilities whereby one ability can substitute for another, typically at the cost of some large factor reduction in performance.

A traditional chess engine has several component skills, like searching moves and updating decision trees, that it does vastly better than a human. This ability feeds down into some metrics of positional understanding. Positional understanding is not a particularly strong fundamental ability of a traditional chess engine, but rather something inefficiently paid for with its other skills that it does have in excess. The same idea holds for human intelligence, where we can use our more fundamental evolved skills, like object recognition, to build more complex skills. Because we have a broad array of baseline skills, and the enough tools to combine them to fit novel tasks, we can solve a much wider array of tasks, and can transfer domains with generally less cost than computers can. Nonetheless, there exist cognitive tasks we know can be done well that are outside of human mental capability.

When I envision AI progress leading up to AGI, I don't think of a single figure of merit that increases uniformly. I think of a set of capabilities, of which some are ~0, some are ~∞, and others are derived quantities not explicitly coded in. Scale advances in NNs push the effective infinities to greater effective infinities, and by extension push up the derived quantities across the board. Fundamental algorithmic advances increase the set of capabilities at ~infinity. At some point I expect the combination of fundamental and derived quantities to capture enough facets of cognition to push generality past a tipping point. In the run up to that point, lesser levels of generality will likely make AI systems applicable to more and more extensions of their primary domains.

This seems to me like it's mostly, if not totally, a literal interpretation of the world. Yet, to finally get to the point, nowhere in my map do I have a clear interpretation of what “get up to the chimp level” means. The degree to which chimps are generally intelligent seems very specific to the base skill set that chimps have, and it seems much more likely than not that AI will approach it from a completely different angle, because their base skillset is completely different and will generalize in a completely different way. The comment that “chimps are not very valuable” does not seem to map onto any relevant comment about pre-explosion AI. I do not know what it would mean to have a chimp level AI, or even chimp level generality.

I would not be terribly surprised to find that results on benchmarks continue according to graph, and yet, GPT-4 somehow does not seem very much smarter than GPT-3 in conversation.

I would be quite surprised for a similar improvement in perplexity not to correspond to at least a similar improvement in apparent smartness, versus GPT-3 over GPT-2.

I would not be surprised for the perplexity improvement to level off, maybe not immediately but at least in some small count of generations, as it seems entirely reasonable that there are some aspects of cognition that GPT-style models can't purchase. But for perplexity to improve on cue without an apparent improvement in intelligence, while logically coherent, would imply some very weird things about either the entropy of language or the scaling of model capacity.

That is, either language has a bunch of easy to access regularity between what GPT-3 reached and what an agent with a more advanced understanding of the world could reach, distributed coincidentally in line with previous capability increases, or GPT-3 roughly caps out the semantic capabilities of the network, but extra parameters added on top are still practically identically effective at extracting a huge amount of more marginal non-semantic regularities at a rate fast enough to compete with prior model scale increases that did both.

Stuff coming uncorrelated that way, sounds like some of the history I lived through, where people managed to make the graphs of Moore's Law seem to look steady by rejiggering the axes, and yet, between 1990 and 2000 home computers got a whole lot faster, and between 2010 and 2020 they did not.

There is truth to this comment, in that Dennard scaling fell around the turn of the millenia, but the 2010s were deceptive in that home computers stagnated in performance despite Moore's Law improvements, because Intel sold you your CPUs, were stuck on an old node, being stuck on an old node prevented them from pushing out new architectures, and their monopoly position meant they never really needed to compete on price or core count either.

But Moore's Law did continue, just through TSMC, and the corresponding performance improvements were felt primarily in GPUs and mobile SoCs. Both of those have improved at a great pace. In the last three years competition has returned to the desktop CPU market, and Intel has just managed to get out of their node crisis, so CPU performance really is picking up steam again. This is true both for per-core performance, driven by architectures making use of the great many transistors available, and even moreso true of aggregate performance, what with core counts in the Steam Survey increasing from an average of 3.0 in early 2017 to an average of 5.0 in April this year.

You are correct that the scaling regimes are different now, and Dennard scaling really is dead for good, but if you look back at the original Moore's Law graphs from 1965, they never mentioned frequency, so I don't buy the claim that the graphs have been rejigged.

comment by DanielFilan · 2022-01-17T23:14:34.635Z · LW(p) · GW(p)

One thing Carl notes is that a variety of areas where AI could contribute a lot to the economy are currently pretty unregulated. But I think there's a not-crazy story where once you are within striking range of making an area way more efficient with computers, then the regulation hits. I'm not sure how to evaluate how right that is (e.g. I don't think it's the story of housing regulation), but just wanted it said.

comment by mary coca (mary-coca) · 2022-03-04T07:35:55.769Z · LW(p) · GW(p)

I want to thank you for the great article!! I definitely enjoyed every bit of it. I've bookmarked it to check out the new stuff you post.