DARPA Digital Tutor: Four Months to Total Technical Expertise?

post by SebastianG (JohnBuridan) · 2020-07-06T23:34:21.089Z · LW · GW · 22 comments


DARPA spent a few million dollars around 2009 to create the world’s best digital tutoring system for IT workers in the Navy. I am going to explain their results, the system itself, possible limitations, and where to go from here.

It is a truth universally acknowledged that a single nerd having read Ender’s Game must be in want of the Fantasy Game. The great draw of the Fantasy Game is that the game changes with the player and reflects the needs of the learner, growing dynamically with him/her. This dream of the student is best realized in the world of tutoring, which, while not as fun, is known to be very, very effective. Individualized instruction can make students jump to the 98th percentile compared to non-tutored students. DARPA poked at this idea with their Digital Tutor, trying to answer this question: How close to the expertise and knowledge base of well-experienced IT experts can we get new recruits in 16 weeks using a digital tutoring system?
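For a sense of scale, the 98th-percentile figure matches an effect of about two standard deviations (Bloom's well-known "2 sigma" result for one-on-one tutoring), if we assume test scores are roughly normally distributed. Here is a minimal sketch of that conversion. The function name is my own and the normality assumption is mine, not something taken from the DARPA paper.

```python
import math

def percentile_from_effect_size(d: float) -> float:
    """Percentile of the comparison group reached by the average treated
    student, ASSUMING normally distributed scores with equal spread."""
    # Standard normal CDF written with the error function.
    return 100 * 0.5 * (1 + math.erf(d / math.sqrt(2)))

# How far up the untutored distribution does an effect of d SDs put you?
for d in (0.5, 1.0, 2.0):
    print(f"{d:.1f} SD -> {percentile_from_effect_size(d):.1f}th percentile")
```

Under that assumption, two standard deviations lands at roughly the 98th percentile, which is the jump quoted above.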

I will say the results upfront, but before I do, I want to do two things. First, pause to note the audacity of the project. Some project manager thought, “I bet we can design a system for training that is as good as 5 years of on-the-job experience.” This is astoundingly ambitious. I love it! Second, a few caveats. Caveat 1) Don’t be confused. Technical training is not the same as education. The goals in education are not merely to learn some technical skills like reading, writing, and arithmetic. Usefully measuring things like enculturation, citizenship, moral uprightness, and social mores is not yet something any system can do, let alone a digital system. Caveat 2) Online classes have notoriously high attrition rates, drop rates, and no-shows. Caveat 3) Going in, we should not expect the digital tutor to be as good as a human tutor. A human tutor can likely catch nuances that a digital tutor, no matter how good, cannot. Caveat 4) Language processing technology, chat bots, and AI systems are significantly better in 2020 than they were in 2009, so we should be forgiving if the DARPA IT program is not as good as it would be if the experiment were rerun today.

All these caveats, I think, should give us a reason to adjust our mental score of the Digital Tutor a few clicks upward and give it some credit. However, this charitable read of the Digital Tutor that I started with when reading the paper turned out to be unnecessary. The Digital Tutor students outperformed traditionally taught students and field experts in solving IT problems on the final assessment. They did not merely meet the goal of being as good after 16 weeks as experts in the field; they actually outperformed them. This is a ridiculously positive outcome, and we need to look closely to see what parts of this story are believable, make some conjectures about why this happened, and place some bets on whether it will replicate.

The Digital Tutor Experience

We will start with the Digital Tutor student experience. This will give us the context we need to understand the results.

Students (cadets?) were on the same campus and in classrooms with their computers, which ran the Digital Tutor program. A uniformed Naval officer proctored each day of their 16-week course. The last ‘period’ of the day was a study hall with occasional hands-on practice sessions led by the Naval officer. This set-up is important for a few reasons, in my opinion. There is a shared experience among the students of working on IT training, plus the added accountability of a proctor keeps everyone on task. This social aspect is very important and powerful compared to the dissipation experienced by the lone laborer at home on the computer. This social structure completely counteracts caveat 2 above. The Digital Tutor is embedded in a social world where the students are not given the same level of freedom to fail that a Coursera class offers.

Unlike many learning systems, the Digital Tutor had no finishing early option. Students had on average one week to complete a module, but the module would continuously teach, challenge, and assess for students who reached the first benchmark. “Fast-paced learners who reached targeted levels of learning early were given more difficult problems, problems that dealt with related subtopics that were not otherwise presented in the time available, problems calling for higher levels of understanding and abstraction, or challenge problems with minimal (if any) tutorial assistance.” Thus the ceiling was very high and kept the high flyers engaged.

As for pedagogical method “[The Digital Tutor] presents conceptual material followed by problems that apply the concepts and are intended to be as authentic, comprehensive, and epiphanic as those obtained from years of IT experience in the Fleet. Once the learner demonstrates sufficient understanding of the material presented and can explain and apply it successfully, the Digital Tutor advances either vertically, to the next higher level of conceptual abstraction in the topic area, or horizontally, to new but related topic areas.” Assessment of the students throughout is done by the Conversation Module in the DT which offers hints, asks leading questions, and requests clarifications of the student’s reasoning. If there is a problem or hangup, the Digital Tutor will summon the human proctor to come help (the paper does not give any indication of how often this happened).

At the end of the 16 weeks, the students trained by the Digital Tutor squared off in a three-way, two-week assessment against two other groups: graduates of a 35-week classroom program (the ITTC graduates) and experienced Fleet technicians. Those trained by the Digital Tutor significantly outperformed both groups.

At least four patterns were repeated across the different performance measures:

- With the exception of the Security exercise, Digital Tutor participants outperformed the Fleet and ITTC participants on all other tests.

- Differences between Fleet and ITTC participants were generally smaller and neither consistently positive nor negative.

- On the Troubleshooting exercises, which closely resemble Navy duty station work, Digital Tutor teams substantially outscored Fleet ITs and ITTC graduates, with higher scores at every difficulty level, less harm to the system, and fewer unnecessary steps.

- In individual tests of IT knowledge, Digital Tutor graduates also substantially outscored Fleet ITs and ITTC graduates.

How did they build the Digital Tutor?

This process was long, arduous, and expensive. First they recruited subject area experts and had them do example tutoring sessions. They took the best tutors from among the subject area experts and had 24 of them tutor students one-on-one in their sub-domain of expertise. Those students essentially received a one-on-one, 16-week course. Those sessions were all recorded and served as the template for the Digital Tutor.

A content author (usually a tutor) and content engineer would work together to create the module for each sub-domain while a course architect oversaw the whole course and made sure everything fit together.

The Digital Tutor itself has five layers: 1) a framework for the IT ontologies and feature extraction, 2) an Inference Engine to judge the student's understanding/misunderstanding, 3) an Instruction Engine to decide what topics/problems to serve up next, 4) a Conversation Module which uses natural language to prod the student to think through the problem and create tests for their understanding, and 5) a Recommender to call in a human tutor when necessary.
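To make the division of labor concrete, here is a toy sketch of how layers like these might hand off to one another. Everything in it is hypothetical: the class and function names, the `topic:ok` / `topic:fail` action format, the mastery increments, and the three-failure threshold are my own inventions for illustration. The paper does not publish the actual design.

```python
from dataclasses import dataclass, field

@dataclass
class StudentModel:
    # Estimated mastery per topic, from 0.0 to 1.0 (hypothetical representation).
    mastery: dict = field(default_factory=dict)
    failed_attempts: int = 0

def extract_features(action: str) -> dict:
    """Ontology / feature extraction (toy): map a raw action like
    'dns:ok' onto a topic and whether the step was correct."""
    return {"topic": action.split(":")[0], "correct": action.endswith(":ok")}

def infer(model: StudentModel, features: dict) -> None:
    """Inference Engine (toy): update the estimate of what the student knows."""
    topic = features["topic"]
    old = model.mastery.get(topic, 0.0)
    if features["correct"]:
        model.mastery[topic] = min(1.0, old + 0.5)
        model.failed_attempts = 0
    else:
        model.failed_attempts += 1

def next_step(model: StudentModel, topic: str) -> str:
    """Instruction Engine, Conversation Module, and Recommender (toy):
    decide what the tutor does next."""
    if model.failed_attempts >= 3:
        return "summon_human_tutor"   # Recommender: call in the proctor
    if model.mastery.get(topic, 0.0) >= 1.0:
        return "advance"              # move vertically or horizontally
    if model.failed_attempts > 0:
        return "give_hint"            # Conversation Module: hint or leading question
    return "pose_problem"             # Instruction Engine: serve the next problem

model = StudentModel()
for action in ["dns:fail", "dns:ok", "dns:ok"]:
    infer(model, extract_features(action))
    print(action, "->", next_step(model, "dns"))
```

The real system presumably did something far richer at every step (natural-language dialogue, watching actions in a live system), so treat this only as a picture of the control flow.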

I would like to know a lot more about this, so if anyone could point me in a good direction to learn how to efficiently do some basic Knowledge Engineering that would be much appreciated.

So in terms of personnel we are talking 24 tutors, about 6 content authors, a team of AI engineers, several iterations through each module with test cohorts, several proctors throughout the course, and maybe a few extra people to set up the virtual and physical problem configurations. Given this expense and effort, it will not be an easy task to try and replicate their results in a separate domain or even the same one. One note in the paper that I found obscure is that the paper claimed the Digital Tutor “is, at present, expensive to use for instruction.” What does this mean? Once the thing is built, besides the tutors/teachers (which you would need for any course of study), what makes it expensive at present? I’m definitely confused here.

Digging into the results

The assessment of the 3 groups in seven categories showed the superiority of the Digital Tutoring system in everything but Security. For whatever reason they could not get a tutor to be part of the development of the Security module, so that module was mostly lecture. Interestingly though, if we were to assume all else to be equal, then this hole in the Digital Tutor program serves to demonstrate the effectiveness of the program design through a via negativa.

In any case, the breakdown of performance in the seven categories is, I think, pretty well captured in the Troubleshooting assessment.

“Digital Tutor teams attempted a total of 140 problems and successfully solved 104 of them (74%), with an average score of 3.78 (1.91). Fleet teams attempted 100 problems and successfully solved 52 (52%) of them, with an average score of 2.00 (2.26). ITTC teams attempted 87 problems and successfully solved 33 (38%) of them, with an average score of 1.41 (2.09).”
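As a sanity check on those figures, here is a short sketch that recomputes the solve rates and turns the mean scores into a rough standardized effect size. Two assumptions are mine, not the paper's: that the numbers in parentheses are standard deviations, and that each attempted problem can be treated as an independent observation (teams attempted the problems, so this is crude).

```python
import math

# (problems attempted, problems solved, mean score, parenthesized value),
# copied from the quote above. Treating the parenthesized value as a
# standard deviation is an ASSUMPTION.
groups = {
    "Digital Tutor": (140, 104, 3.78, 1.91),
    "Fleet": (100, 52, 2.00, 2.26),
    "ITTC": (87, 33, 1.41, 2.09),
}

def solve_rate(attempted: int, solved: int) -> float:
    return solved / attempted

def cohens_d(n1, m1, s1, n2, m2, s2) -> float:
    """Difference in means divided by the pooled standard deviation."""
    pooled = math.sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    return (m1 - m2) / pooled

for name, (n, solved, mean, sd) in groups.items():
    print(f"{name}: {solve_rate(n, solved):.0%} solved")

dt = groups["Digital Tutor"]
for other in ("Fleet", "ITTC"):
    o = groups[other]
    d = cohens_d(dt[0], dt[2], dt[3], o[0], o[2], o[3])
    print(f"Digital Tutor vs {other}: d = {d:.2f}")
```

The solve rates reproduce the quoted 74%, 52%, and 38%. Under the stated assumptions the effect sizes come out to roughly 0.9 against the Fleet teams and 1.2 against the ITTC teams, which is large but short of the two-sigma benchmark for human tutoring.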

Similar effects are true across the board, but that is not what interests me exactly, because I want to know about question type. Indeed, what makes this study so eye catching is that it is NOT a spaced-repetition-is-the-answer-to-life paper in disguise (yes, spaced-repetition is the bomb, but I contend that MOST of what we want to accomplish in education can’t be reinforced by spaced-repetition, but oh hell, is it good for language acquisition!).

The program required students to employ complicated concepts and procedures that were more than could be captured by a spaced-repetition program. “Exercises in each IT subarea evolve from a few minutes and a few steps to open-ended 30–40 minute problems.” (I wonder what the time-required distribution is for real life IT problems for experts?) So this is really impressive! The program is asking students and experienced Fleet techs to solve large, actual problems aboard ships, and the students succeed on that score. We should be getting really excited about this! Remember, in 16 weeks these folks were made into experts.

Well, let’s consider another possibility… what if IT system network maintenance is a skill set that is, frankly, not that hard? You can do this for IT, but not for Captains of a ship, Admirals of a fleet, or Program Managers in DARPA. Running with this argument a little more, perhaps the abstract reasoning and conceptual problem solving in IT is related to the lower level spaced-repetition skills in a way that for administrators, historians, and writers it is not. The inferential leap, in other words, from the basics to expert X-Ray vision of problems is smaller in IT than in other professional settings. Perhaps. And I think this argument has merit to it. But I also think this is one of those examples of raising the bar for what “true expertise” is, because the old bar has been reached. To me, it is totally fair to say that some IT problems do require creative thinking and a fully functional understanding of a system to solve. That the students of the Digital Tutor (and its human adjuncts) outperformed the experts on unnecessary steps and avoided causing more problems than they fixed is some strong evidence that this program opened the door to new horizons.

Where to go from here

From here I would like to learn more about how to create AI systems like this and try it out with the first chapter of AoPS Geometry. I could test this in a school context against a control group and see what happens.

Eventually, I would like to see if something like this could work for AP European History and research and writing. I want someone to start pushing these program strategies into the social sciences, humanities and other soft fields, like politics (elected members to government could have an intensive course so they don’t screw everything up immediately).

Another thing I would be interested to see is a better platform for making these AI networks. Since creating something of this sort can only be done by expert programmers, content knowledge experts can’t gather together to create their own Digital Tutors. This is a huge bottleneck. If we could put together a software suite that made this even moderately easier than building a fully operational knowledge environment from scratch, it could have an outsized effect on education within a few years.

22 comments


comment by johnswentworth · 2020-07-08T02:42:05.376Z · LW(p) · GW(p)

Was there any attempt to check generalizability of the acquired skills? One concern I have is that the digital tutor may have just taught-to-the-test, since the people who built the system presumably knew what the test questions were and how students would be tested.

↑ comment by ryan_b · 2020-07-20T20:23:26.721Z · LW(p) · GW(p)

The test was developed for the purpose, but in the Facilities section I found the following:

Facilities. Participants were tested in three separate classrooms provided by the San Diego Naval Base, which hosted the assessment. Each classroom contained three IT systems—one physical system, with a full complement of servers and software (such as Microsoft Server, Windows XP, Microsoft Exchange, CISCO routers and switches, and the Navy’s COMPOSE overlay to Windows) and two identical virtual systems running on virtualized hardware with the same software. The systems were designed to mirror those typically found on Navy vessels and duty stations. The software was not simulated. Participants interacted directly with software used in the Fleet.

So they built actual IT systems in the classroom environment, and then they broke 'em on purpose. This is pretty common in both the IT and military fields as a training technique.

That being said, the environment of a ship is quite a bit more rigidly defined than a corporate one would be, which makes it more predictable along several dimensions. This may weigh slightly against the generalizability desideratum.

comment by Alexei · 2020-07-07T16:27:31.964Z · LW(p) · GW(p)

Wow, that's very cool! This is actually the closest existing thing to Arbital I've heard about. I guess to Eliezer's point, it does sound like they built this system in one go, without doing much of MVP-kind of iteration. But also... damn! From the sound of it, there are a lot of complicated components, and a lot of manual work that went into the whole thing. If those are the minimum requirements to build something like that, then I'm not sure we ever had a chance.

But the good news is: if this really works, then I think Arbital is totally doable and would be just the online version of this. The remaining difficult part would be monetization, but in retrospect it's quite possible Arbital should have been a non-profit.

↑ comment by ryan_b · 2020-07-20T20:50:50.059Z · LW(p) · GW(p)

without doing much of MVP-kind of iteration

Military contracting is too controlled by far to allow for this kind of thing, unless it is being paid for out of a black budget; we have a hard time learning about those for the obvious reasons.

comment by Chipmonk · 2024-04-21T20:06:07.703Z · LW(p) · GW(p)

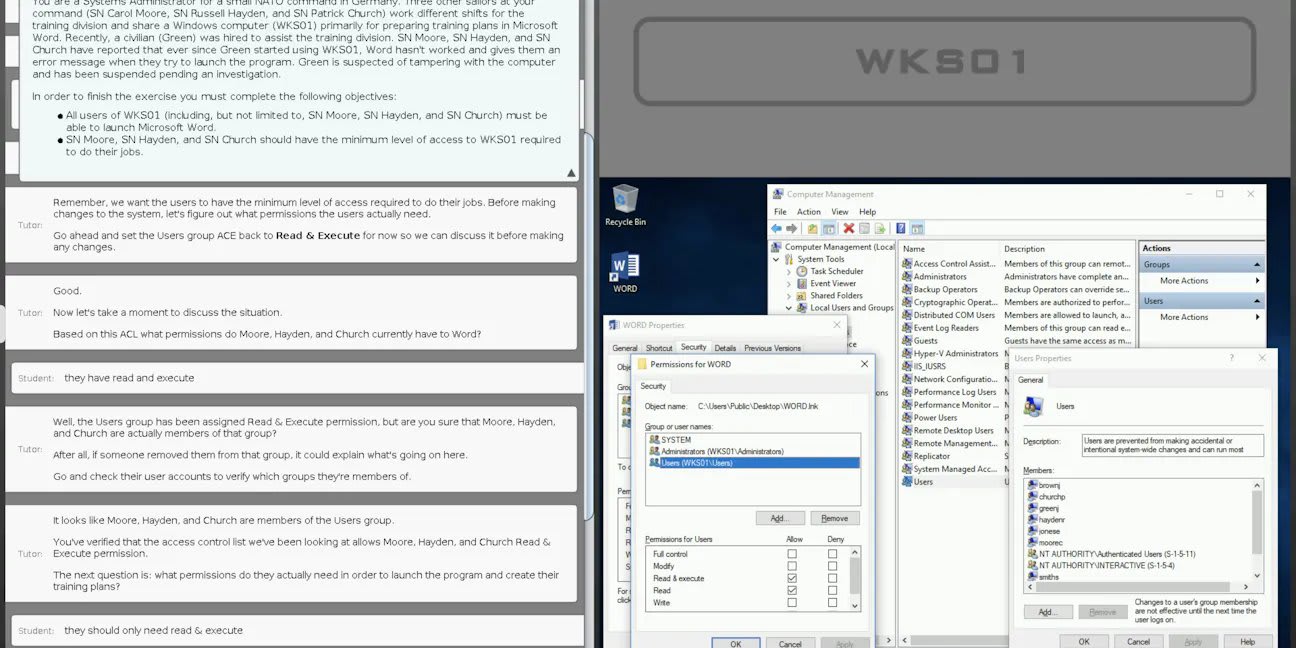

Andy Matuschak @andymatuschak [LW · GW]:

Finally found a single actual screenshot of the DARPA Digital Tutor (sort of—a later commercial adaptation). Crazy-making that there were zero figures in any of the papers about its design, and not enough details to imagine one.

Some observations:

* An instructional interface is presented alongside a live machine.

* Student presented with a concrete task to achieve in the live system.

* The training system begins by “discussing the situation”, probing the student’s understanding with q's, and responding with appropriate feedback and follow-up tasks.

* It can observe the student’s actions in the live system and respond appropriately.

* The instructional interface uses a text-conversational modality.

* I see strong influence from Graesser's AutoTutor, and some from Anderson's Cognitive Tutors.

(from https://www.edsurge.com/news/2020-06-09-how-learning-engineering-hopes-to-speed-up-education )

https://twitter.com/andy_matuschak/status/1782095737096167917

comment by Viliam · 2020-07-11T11:53:18.489Z · LW(p) · GW(p)

Thank you, this is awesome!

I believe that (some form of) digital education would improve education tremendously, simply because the existing system does not scale well. The more people you want to teach, the more teachers you need, and at some moment you run out of competent teachers, and then we all know what happens. Furthermore, education competes for the competent people with industry, which is inevitable because we need people to teach stuff, but also people to actually do stuff.

With digital education, the marginal cost of the additional student is much smaller. Even translating the resources to a minority language is cheaper and simpler than producing the original, and only needs to be done once per language. So, just like today everyone can use Firefox, for free (assuming they already have a computer, and ignoring the costs of electric power), in their own language, perhaps one day the same could be said about Math, as explained by the best teachers, with the best visual tools, optimized for kids, etc. One can dream.

On the other hand, digital education brings its own problems. And it is great to know that someone already addressed them seriously... and even successfully, to some degree.

One note in the paper that I found obscure is that the paper claimed the Digital Tutor “is, at present, expensive to use for instruction.” What does this mean? Once the thing is built, besides the tutors/teachers (which you would need for any course of study), what makes it expensive at present?

I am only guessing here, but I assume the human interventions were 1:1, so if a student would spend about 5% of their time talking to a tutor, you would need one tutor per 20 students, which is the same ratio you would have in an ordinary classroom. The tutors would have to be familiar with the digital system, so you couldn't replace them with a random teacher, and they would be more expensive. (Plus you need the proctor.)

In the long term, I assume that if the topic is IT-related, the lessons would have to be updated relatively often. Doubly so for IT security.

I still wonder what would happen if we tried the same system to teach e.g. high-school math. My hope is that with wider user base the frequency of human interventions (per student) could be reduced, simply because the same problems would appear repeatedly, so the system could adapt by adding extra explanations created the same way (record what human tutors do during interventions, make a new video).

comment by riceissa · 2020-11-03T23:39:08.674Z · LW(p) · GW(p)

Does anyone know if the actual software and contents for the digital tutor are published anywhere? I tried looking in the linked report but couldn't find anything like that there. I am feeling a bit skeptical that the digital tutor was teaching anything difficult. Right now I can't even tell if the digital tutor was doing something closer to "automate teaching people how to use MS Excel" (sounds believable) vs "automate teaching people real analysis given AP Calculus level knowledge of math" (sounds really hard, unless the people are already competent at self-studying).

↑ comment by gwern · 2021-09-14T01:18:55.096Z · LW(p) · GW(p)

I ran into this review of Accelerated Expertise, a book (on LG) about an Air Force/DoD thing that sounds very similar, and may give the overall paradigm.

↑ comment by riceissa · 2021-09-20T06:41:45.101Z · LW(p) · GW(p)

Thanks. I read the linked book review but the goals seem pretty different (automating teaching with the Digital Tutor vs trying to quickly distill and convey expert experience (without attempting to automate anything) with the stuff in Accelerated Expertise). My personal interest in "science of learning" stuff is to make self-study of math (and other technical subjects) more enjoyable/rewarding/efficient/effective, so the emphasis on automation was a key part of why the Digital Tutor caught my attention. I probably won't read through Accelerated Expertise, but I would be curious if anyone else finds anything interesting there.

↑ comment by andymatuschak · 2021-05-25T03:16:25.008Z · LW(p) · GW(p)

I've not had success finding these details, unfortunately—I've been curious about them too.

↑ comment by SebastianG (JohnBuridan) · 2021-07-15T16:42:56.524Z · LW(p) · GW(p)

I understood the problems to be of the sort one would learn in becoming whatever the Navy ship equivalent is to CISCO Administrator. It really seems to have been a training program for all the sys admin IT needs of a Navy ship.

Here are some examples of problems they had to solve:

Establish a fault-tolerant Windows domain called SOTF.navy.mil to support the Operation.

Install and configure an Exchange server for SOTF.navy.mil.

Establish Internet access for all internal client machines and servers.

comment by Gordon Seidoh Worley (gworley) · 2020-07-07T16:14:31.663Z · LW(p) · GW(p)

These are really impressive results! The main thing I wonder is to what extent we should consider this system AI. Maybe I missed this detail, but this sounds like a really impressive expert system turned to the problem of teaching, not a system we would identify as AI today. Does that seem like a fair assessment?

comment by ChristianKl · 2020-07-08T20:44:44.026Z · LW(p) · GW(p)

One key question is whether they taught to the test or whether the testing actually transfers to real world settings. Do you have an idea about that?

comment by Chipmonk · 2024-02-21T00:41:25.704Z · LW(p) · GW(p)

Synthesis says it worked with DARPA to make a tool to teach kids math: https://www.synthesis.com/tutor

(Also, I asked a friend who worked there about this last summer and, if I recall correctly, they said it does not use LLMs, it's something else.)

comment by alexey · 2020-07-16T08:16:49.455Z · LW(p) · GW(p)

It's also interesting that apparently field experts only did about as well as the traditional students:

Differences between Fleet and ITTC participants were generally smaller and neither consistently positive nor negative.

Does experience not help at all?

comment by Mackenzie Karkheck (mackenzie-karkheck) · 2020-07-12T17:32:02.606Z · LW(p) · GW(p)

The KB system being used by DARPA is cyc.com. It's the oldest, largest and as far as I'm aware best of classical AI systems. I believe you can research with it for free, but it may be expensive to deploy commercially.

Why AP History vs. Mathematics? Why not predicate calculus which is regarded as a requisite for developing such systems?

comment by DirectedEvolution (AllAmericanBreakfast) · 2020-07-08T15:02:46.921Z · LW(p) · GW(p)

I have no trouble believing this software works. More power to you for wanting to spread this technology!

It seems like a hard problem will be that the idiosyncratic curriculums used throughout the school system will make interoperability a challenge. Students won’t want to sink a lot of time studying on this software if it’s not directly helping them prepare for their exam in two weeks.

I’m sure it’s not insurmountable. For example, you could design a chemistry curriculum that’s focused on a particular textbook. Students could use their syllabus to tell the software the order in which chapters will be taught and what their upcoming exam will cover. Alternatively, you could do it by topic so that it’s divorced from any particular textbook.

↑ comment by Studenttutor · 2021-01-10T18:06:22.685Z · LW(p) · GW(p)

I see your point: students want to know that what they are studying will help them with a test. This is a problem in teaching. We teach to the book, not to the outside world experience. The point is hands-on, actual doing that relates to the outside world, in order to make learning engaging for the student. Go out into the world and you’re not going to be given a test to pass with a grade. You will be given real world problems you need to solve. A test won’t help you with that; all it does is reinforce memorization without critical thinking. This is what education lacks: critical thinking. Take a student that knows math and add real world applications to the math in order to solve a problem. The student will learn better, be more engaged, and have confidence that “this works even when I’m out of school, and I can apply it.”

comment by goodkovsky@gmail.com · 2024-03-05T18:07:00.215Z · LW(p) · GW(p)

Deficiencies of AI Tutor:

- No systemic organization of curricula to ensure systemic knowledge/skills and good retention (though vertical + horizontal organization is already good)

- No root-cause diagnosing and effective remediation or supporting learning from mistakes

- No Large Language Models to deepen, automate, and accelerate authoring (job analysis, specification, structuring, and development of curriculum).

If you are looking for "a better platform for making these AI networks" that avoids those deficiencies, then I recommend taking a look at www.itutorsoft.com. One of its early versions was developed for the Navy in 1997-2004 and has been continually improved in later versions. They are interested in collaboration and partnership.