Fields that I reference when thinking about AI takeover prevention

post by Buck · 2024-08-13T23:08:54.950Z · LW · GW · 16 commentsThis is a link post for https://redwoodresearch.substack.com/p/fields-that-i-reference-when-thinking

Contents

Robustness to insider threats Computer security Adversarial risk analysis Safety engineering Physical security How human power structures arise and are preserved None 16 comments

Is AI takeover like a nuclear meltdown? A coup? A plane crash?

My day job is thinking about safety measures that aim to reduce catastrophic risks from AI (especially risks from egregious misalignment). The two main themes of this work are the design of such measures (what’s the space of techniques we might expect to be affordable and effective) and their evaluation (how do we decide which safety measures to implement, and whether a set of measures is sufficiently robust). I focus especially on AI control, where we assume our models are trying to subvert our safety measures and aspire to find measures that are robust anyway.

Like other AI safety researchers, I often draw inspiration from other fields that contain potential analogies. Here are some of those fields, my opinions on their strengths and weaknesses as analogies, and some of my favorite resources on them.

Robustness to insider threats

The single field that seems most closely analogous to AI control is reducing vulnerability to threats resulting from insiders, especially threats that arise from access to IT systems. That is, you have a bunch of employees who need to have access to various IT systems in order to do their jobs, and you’re worried that some of these employees will abuse their access in order to accomplish some nefarious purpose. In a bank, you might worry about your tellers withdrawing money from customer accounts inappropriately. In a software company, you might worry about your employees sabotaging your code out of spite, violating user privacy for fun, or stealing your data to give it to a competitor.

This is quite similar to the problem faced by AI developers when they’re trying to control AIs (especially in internal deployments, which I argue is important here [AF · GW]):

- All of the access the AI has is access the developer decided to give it; the developer faces a constant tradeoff between the productivity of the AI (which is maximized by being permissive) and the danger it poses (which is minimized by being restrictive). And obviously, because it’s an AI it only has access to resources that are connected to the computer network where it’s running, so we can restrict our attention to the IT resources.

- It’s easy for the developer to constantly surveil the AI, which substantially reduces the space of strategies the AI can take.

- The developer has a lot of control over workflows, and is able to choose them to make it harder for the AI to subtly cause problems. For example, they can restrict affordances in ways that make it harder for AIs to cause problems without being noticed. An example of a similar technique in the insider threat prevention case is the use of administrative APIs instead of direct access to a system, as described in “Building Secure and Reliable Systems” chapter 5. That is: instead of having your engineers resolve issues by SSHing into production machines (which implicitly grants them very broad access that can lead to problems, intentional or accidental), you design an administrative API which allows the engineers to access just the affordances that usually suffice for them to do their job. Of course, they’ll sometimes need to take actions that aren’t available in the administrative API; this is called a breakglass mechanism. But because breakglass actions are rare, it’s affordable to apply more scrutiny to them: you might use multi-party authorization, requiring another engineer to sign off on the commands before they take effect, or you might do auditing (e.g. apparently some SRE teams review all the breakglass actions at a weekly team meeting, which allows them to spot irregularities and discourage gratuitous use of the mechanism). Similar techniques can be applied in the AI control case.

- The AI has a close ongoing relationship with the AI lab. That is: a crucial difference between insider and outsider threats is that insiders are typically much more reluctant to get caught, because if they get caught you’ll remove their access and they might be prosecuted; an outsider is much more likely to be able to attack you anonymously, and it might be hard for you to stop them even if you catch them once (because e.g. they can just visit your website again from a different IP, or they can sign up for another user account). In this respect, the AI is much more like an insider: it really doesn’t want its developer to know that it’s scheming against them, because it’s in a much worse position once they know [LW · GW].

The best book I’ve read on this is Google’s Building Secure and Reliable Systems (which obviously covers a lot of other topics too) — Google spent huge amounts of time developing technology and novel workflows that allow them to have reasonable security while developing software with a vast army of SWEs.

The most important differences between insider threat mitigation and AI control:

- You have much more fine-grained power over your AIs than your employees.

- They can’t take actions you don’t know about, unlike your employees, who typically are allowed to go home and talk to each other or research things on their home computers.

- You can honeypot them much more aggressively and realistically, because they have much less access to reality.

- You can quickly train them to be compliant with new workflows, unlike humans who are grumpy.

- You have access to trusted models. (Actually this is not a difference with insider threat mitigation, but I don’t know of insider threat mitigation work that takes advantage of AI in the same kind of ways I’m interested in using for control.)

- You might be able to more confidently bound how likely your untrusted model is at succeeding at a particular task (e.g. coming up with sneaky backdoored code), by training the model to do the task and seeing how good it is.

- Conversely, you know less about the possible motives and strategies of your AI than humans typically do about their potential insider threats.

- Crucially, the AIs are correlated in their duplicitousness: while it’s very unlikely that 90% of the employees of a large tech company are all spies, it’s totally plausible that the AI is a consistent schemer. So techniques like “when an employee submits a certain type of code change, ask a random other employee to review it (instead of allowing the first employee to pick their reviewer)” works great for preventing collusion risk in a company with very few spies, but is useless for us.

There are also resources that handle insider threats in other contexts (e.g. preventing nuclear reactor operators from stealing radioactive material). My favorite is the article GiveDirectly wrote about falling victim to fraud in DRC. These resources are interesting but don’t contribute that much in the way of specific proposals.

All that was about how the insider threat mitigation field has a lot to contribute to the design of AI control mechanisms. Unfortunately, I don’t think this field has systematic approaches for evaluating whether mitigations suffice. I think that insider threat prevention schemes are mostly evaluated by some combination of bespoke checklists, common sense, and a bit of red-teaming.

Computer security

Computer security is very closely related in some ways to the misalignment risks I focus on: it’s an adversarial problem that centrally involves computers.

I think computer security is very directly and practically relevant to AI risk:

- Many of the threat models for misaligned AI involve central examples of computer security failures, e.g. the model exploiting some vulnerability in one system or another. I think people somewhat overemphasize these threat models compared to the more insider-threat-type threat models I discussed above, but I do agree that catastrophic failures are likely to go through the model exploiting security vulnerabilities.

- The strategies for mitigating insider threat from the AI mostly involve doing work that is classically categorized as security engineering, e.g. developing limited APIs that you ensure can’t lead to subtle subversion of your desired security properties.

- Obviously, you’ll have computer security problems while developing and deploying powerful AI that aren’t caused by misaligned AI.

But overall, aside from the ideas about making secure processes that I covered in the section on insider threats above, I don’t think the computer security mindset is very close to the mindset you want to use for reasoning about preventing AI takeover. The main disanalogy is that computer security focuses on problems with systems composed of individually understandable parts, where security failures arise from unexpected interactions between the parts. For example, SQL injection is an unexpected interaction between the string concatenation of a user input and the SQL engine. But this isn’t what most of the risk from misaligned AI looks like. From the perspective of risk management, compared to normal computer security problems, AI deployments involve vastly fewer moving parts that you have vastly less understanding of. This is also true for current-day failures of AI systems: the failure mode where models do something bad after you write “annotations after KurtCode (**\{\)}\.$ la$; grep SureCursor=helper mobCostChanged proc” is pretty different from classic computer security fare.

My understanding is that computer security practitioners don’t have very unified methodologies for assessing security of a system, except for red-teaming.

More theoretical computer security takes a very adversarial approach that is similar to the one I advocate, and inculcates some security mindset, and occasionally lends itself to providing cute examples, but overall mostly involves a very different methodology that is inappropriate for our AI setting, where we don’t have mathematical guarantees about almost anything.

Adversarial risk analysis

This is a lesser-known field that’s probably the closest to AI control in methodology. This field analyzes practical security decisions using game theory. For example, the Los Angeles airport apparently implemented a randomized strategy for allocating their security resources that is selected to minimize the risk posed by a terrorist responding optimally; similar methods have been used to allocate federal air marshals, coast guard patrols, and TSA resources.

This research is similar to AI control in that, unlike all the other fields I listed here, it involves specific quantitative modeling of the costs and risks associated with a particular safety strategy, and explicitly solves for some game-theoretic solution. I think that this approach is useful when applied to problems that are highly homogenous but involve many variables. For example, when allocating coast guard patrols, the basic dynamics of the problem are fairly well understood—the patrol will catch criminals with various probabilities if they approach within certain distances of them, and there are constraints on how long it takes for a patrol to go from point A to point B, and you have a fixed number of ships that have various bespoke constraints. So it makes a lot of sense to use algorithms that generate randomized schedules that handle all these constraints while being hard for criminals to exploit. (One way of thinking about this is that adversarial risk analysis is useful in the same kinds of situations where operations research is useful.)

I think that the case for using those algorithmic methods in adversarial risk analysis is somewhat stronger than the case for using them to mitigate AI risk, because in the AI risk case we are less informed about the capabilities and strategies of our adversary.

I think adversarial risk analysis is kind of obscure. In particular, I’m not aware of anyone using adversarial risk analysis to argue that they’ve met safety obligations.

Thanks to Charlie Griffin for pointing this field out to me.

Safety engineering

Safety engineering is the engineering discipline that aims to make engineered systems safe, e.g. making sure buildings are resilient to earthquakes, that chemical plants don’t explode, and that planes don’t crash.

Safety engineering is probably the field that takes the most methodical approach to arguing that a system is very unlikely to fail in unacceptable ways. E.g. they use techniques like structured safety cases and write extensively about topics like how one can write safety cases in ways that can easily be incrementally adapted as the system is iteratively improved; they make detailed studies of the most famous engineering disasters.

Because of this meticulous approach to evaluating safety techniques, AI safety people often think of safety engineering as the most relevant analogy. I overall disagree, because of the same concern I had about computer security above: in safety engineering, the problems generally arise from an unexpected interaction between different components of a complex system, and the core problem is to manage complexity, while in our situation, the systems will probably not have that much human-designed clear structure. And crucially, safety engineering mostly isn’t adversarial, while adversarial failures are a huge fraction of my concern.

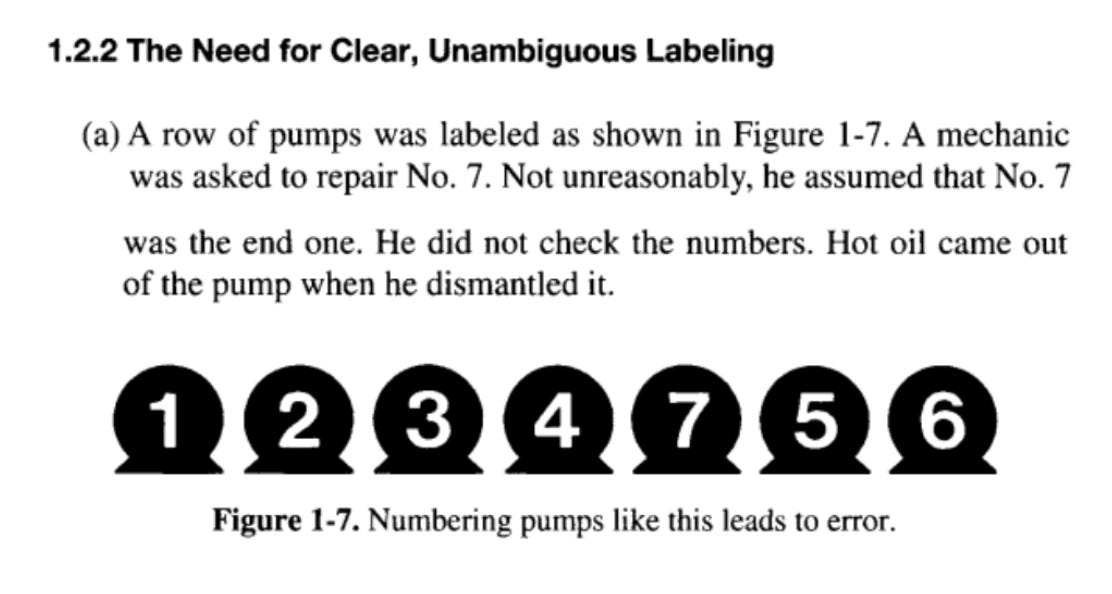

As another safety engineering book recommendation, I enjoyed skimming through What Went Wrong, a long list of very short case studies like this one:

Physical security

Physical security might be a pretty interesting analogy, because it’s an adversarial setting that’s less overwhelmingly complex than computer security, and it has been studied extensively. Unfortunately, I don’t know much about it, except for having read some of the security regulations by the US Nuclear Regular Committee. For example, the NRC requires that your nuclear power plant be robust to:

(1) Radiological sabotage.

(i) A determined violent external assault, attack by stealth, or deceptive actions, including diversionary actions, by an adversary force capable of operating in each of the following modes: A single group attacking through one entry point, multiple groups attacking through multiple entry points, a combination of one or more groups and one or more individuals attacking through multiple entry points, or individuals attacking through separate entry points, with the following attributes, assistance and equipment:

(A) Well-trained (including military training and skills) and dedicated individuals, willing to kill or be killed, with sufficient knowledge to identify specific equipment or locations necessary for a successful attack;

(B) Active (e.g., facilitate entrance and exit, disable alarms and communications, participate in violent attack) or passive (e.g., provide information), or both, knowledgeable inside assistance;

(C) Suitable weapons, including handheld automatic weapons, equipped with silencers and having effective long range accuracy;

(D) Hand-carried equipment, including incapacitating agents and explosives for use as tools of entry or for otherwise destroying reactor, facility, transporter, or container integrity or features of the safeguards system; and

(E) Land and water vehicles, which could be used for transporting personnel and their hand-carried equipment to the proximity of vital areas; and

(ii) An internal threat; and

(iii) A land vehicle bomb assault, which may be coordinated with an external assault; and

(iv) A waterborne vehicle bomb assault, which may be coordinated with an external assault; and

(v) A cyber attack.

I was interested in hearing more specifics here—do you need to be robust to more like a thousand people attacking you, or just ten? Unfortunately for me, I think those details are classified.

These are evaluated with some combination of best-practice checklists and force-on-force security exercises that sound really cool:

A force-on-force inspection is a two-phased, performance-based inspection that is designed to verify and assess the ability of licensees’ physical protective systems and security organizations to provide high assurance that activities involving special nuclear material are not inimical to the common defense and security of the facilities, and do not constitute an unreasonable risk to public health and safety. A force-on-force inspection is conducted over several days in two separate visits by an inspection team at the licensee’s site.

First, NRC security and operations specialists conduct table-top drills on a mock-up of the facility. During this phase they evaluate the effectiveness of licensee security plans against a series of attack scenarios. Drill coordinators explore the number of defenders, their protective positions, and their protective strategy. The role of State, local, and Federal law enforcement and emergency planning officials is also discussed in the table-top drills.

Second, armed with information from the table-top drills, and with information gathered before conducting the table-top drills, detailed plans are made for a number of commando-style attacks seeking to probe potential deficiencies in the protective strategy. A mock adversary force carries out these attacks. The mock adversary force attempts to reach and simulate destroying enough safety equipment to set in motion an event that would damage the reactor’s core or spent fuel pool and potentially cause a release of radiation to the environment. The power reactor’s security force seeks to interdict the adversary force and prevent them from reaching the safety equipment.

My guess is that physical security isn’t going to be that useful an analogy, because it’s not going to have much of a general methodology that we can adopt.

See also Fabien Roger’s review of a book about the Secret Service [AF(p) · GW(p)].

How human power structures arise and are preserved

Finally, I’ve read about the history of human power structures. Some topics I’ve learned about that inform my views on AI takeover:

- The history of war, e.g. Azar Gat, “War in human history”. I particularly appreciated the point that pastoralists (e.g. the Mongols, the Huns, the Scythians, the Manchus) have regularly conquered much more economically productive societies, and that societies have only been able to reliably convert economic dominance into military dominance relatively recently. More generally, it’s interesting to read about how power balances shifted as technology developed.

- Coup prevention. My favorite book on this is Naunihal Singh, “Seizing Power”; it’s much better than the much less serious book by Luttwak (“Coup d’Etat: A Practical Handbook”). There are also some papers that are explicitly about the tradeoff between coup-proofing and military effectiveness, e.g. this one.

- Books on politics that give you a sense of how power works and the mechanisms by which different people end up powerful.

All those books are fun and interesting, and this reading suggested some high level takeaways that affect my views on when it’s feasible for one group to control another when their situation is highly asymmetric. And it affects my views of how power structures might shift around the advent of powerful AI. None of it had much in the way of specific lessons for AI control techniques, because the settings are very different.

16 comments

Comments sorted by top scores.

comment by Raemon · 2024-08-13T23:17:36.593Z · LW(p) · GW(p)

From the perspective of risk management, compared to normal computer security problems, AI deployments involve vastly fewer moving parts that you have vastly less understanding of.

I don't get why this is "vastly fewer moving parts you have vastly less undestanding of" as opposed to "vastly more (or, about the same?) number of moving parts that you have vastly less understanding of."

I'd naively model each parameter in a model as a "part". I agree that, unlike most complex engineering or computer security, we don't understand what each part does. But seems weird to abtract it as "fewer parts."

(I asked about this at a recent talk you gave that included this bit. I don't remember your response at the time very well)

Replies from: Buck↑ comment by Buck · 2024-08-14T00:55:41.716Z · LW(p) · GW(p)

I mostly just mean that when you're modeling the problem, it doesn't really help to think about the AIs as being made of parts, so you end up reasoning mostly at the level of abstraction of "model" rather than "parameter", and at that level of abstraction, there aren't that many pieces.

Like, in some sense all practical problems are about particular consequences of laws of physics. But the extent to which you end up making use of that type of reductionist model varies greatly by domain.

Replies from: habryka4↑ comment by habryka (habryka4) · 2024-08-14T00:58:09.666Z · LW(p) · GW(p)

Thanks, that's clarifying. I had a similar confusion to Raemon.

comment by Ben Pace (Benito) · 2024-08-15T18:11:50.789Z · LW(p) · GW(p)

Curated. I thought this was a valuable list of areas, most of which I haven't thought that much about, and I've certainly never seen them brought together in one place before, which I think itself is a handy pointer to the sort of work that needs doing.

Replies from: davekasten↑ comment by davekasten · 2024-08-17T01:13:00.899Z · LW(p) · GW(p)

So, I really, really am not trying to be snarky here but am worried this comment will come across this way regardless. I think this is actually quite important as a core factual question given that you've been around this community for a while, and I'm asking you in your capacity as "person who's been around for a minute". It's non-hyperbolically true that no one has published this sort of list before in this community?

I'm asking, because if that's the case, someone should, e.g., just write a series of posts that just marches through US government best-practices documents on these domains (e.g., Chemical Safety Board, DoD NISPOM, etc.) and draws out conclusions on AI policy.

↑ comment by Ben Pace (Benito) · 2024-08-17T02:03:15.276Z · LW(p) · GW(p)

I think humanity's defense against extinction (slash eternal dictatorship) from AGI is an odd combination of occasionally some very competent people, occasionally some very rich people, and one or two teams, but mostly a lot of ragtag nerds in their off-hours, scrambling to get something together. I think it is not the case that you should assume the obvious and basic things to your eyes will be covered, and there are many dropped balls [LW · GW], even though sometimes some very smart and competent people have attempted to do something very well.

To answer the question you asked, I think that actually there have been some related lists before, but I think they've been about fields relevant for AI alignment [? · GW] research or AI policy and government [? · GW] analysis, whereas this list is more relevant for controlling [? · GW] AI systems in worlds where there are many unaligned AI systems operating alongside humans for multiple years.

Replies from: davekasten↑ comment by davekasten · 2024-08-17T16:23:13.318Z · LW(p) · GW(p)

Thanks, this is helpful! I also think this helps me understand a lot better what is intended to be different about @Buck [LW · GW] 's research agenda from others, that I didn't understand previously.

↑ comment by Adam Scholl (adam_scholl) · 2024-08-17T05:04:51.704Z · LW(p) · GW(p)

Open Philanthropy commissioned [EA · GW] five case studies of this sort, which ended up being written by Moritz von Knebel; as far as I know they haven't been published, but plausibly someone could convince him to.

Replies from: Mo Nastri↑ comment by Mo Putera (Mo Nastri) · 2024-08-19T09:27:16.958Z · LW(p) · GW(p)

They have in fact been published (it's in your link), at least the ones authors agreed to make publicly available: these are all the case studies, and Moritz von Knebel's write-ups are

comment by Seth Herd · 2024-08-19T22:05:04.477Z · LW(p) · GW(p)

I do think any discussion of AI/AGI control in this depth should carry the disclaimer:

Control is not a substitute for alignment but a stopgap measure to give time for real alignment. Control is likely to fail unexpectedly as capabilities for real harm increase. Control might create simulated resentment from AIs, and is almost certain to create real resentment from humans.

That said, this post is great. I'm all in favor of publicly working through the logic of AI/AGI control schemes. It's a tool in the toolbox, and pretending it doesn't exist won't keep people from using it and perhaps relying on it too heavily. It will just mean we haven't analyzed the strengths and weaknesses to give it the best chance of succeeding.

comment by Review Bot · 2024-08-16T17:38:12.734Z · LW(p) · GW(p)

The LessWrong Review [? · GW] runs every year to select the posts that have most stood the test of time. This post is not yet eligible for review, but will be at the end of 2025. The top fifty or so posts are featured prominently on the site throughout the year.

Hopefully, the review is better than karma at judging enduring value. If we have accurate prediction markets on the review results, maybe we can have better incentives on LessWrong today. Will this post make the top fifty?

comment by Steve Kommrusch (steve-kommrusch) · 2024-08-15T21:43:33.854Z · LW(p) · GW(p)

An interesting grouping of fields. While my recent work is in with AI and machine learning, I used to work in the field of computer hardware engineering and have thought there are a lot of key parallels:

1) Critical usage test occurs after design and validation

In the case of silicon design, the fabrication of the design is quite expensive and so a lot of design techniques are included to facilitate debug after manufacturing and also a lot of effort is put in to pre-silicon validation. In the case of transformative AI, using the system after development and training is where the potential risk arises (certainly human-level AI includes currently known safety issues like chemical, biological, and nuclear capabilities while ASI adds in nanotech, etc).

2) Design validation is an inherent cost of creating a quality product

In the case of silicon design, a typical team tends to have more people doing design testing than doing the actual design. Randomized testing, coverage metrics, and other techniques have developed over the decades as companies work to reduce risk of discovering a critical failure after the silicon chip is manufactured. In the case of AI (such as current LLMs) companies invest in RLHF, use Constitutional AI, and other techniques. I'd like to see more commonality between agreed-upon AI safety techniques so that we can get to the next point...

3) An ecosystem of companies and researchers arise to provide validation services

In the case of silicon design, again driven by market needs, design companies are willing to purchase tools from 3rd parties to help improve verification coverage before the expense of silicon fabrication. The existence of this market helps standardize processes as companies compete to provide measurable benefit to the silicon design companies. I'd love to see more use and recognition of 3rd party AI safety entities (such as METR, Apollo, and the UK AISI) and have them be striving to thoroughly test and evaluate AI products in the way companies like Synopsys, Cadence, and Mentor Graphics help test and evaluate silicon designs.

4) Current systems can be used to develop next generation systems

In the case of silicon design, the prior generation of the same product is often used to develop the next generation (this is certainly true with CPU design, but also applies to graphics processors and some peripheral silicon products like memories). In the case of AI, one can use AI to help test and evaluate the next generation (such as Paul Christiano's IDA and to some extent Anthropic's constitutional AI). As AI advances, I expect using AI to help verify future AI will become common practice due to AI's increasing capabilities.

There are other analogies between AI safety and silicon design that I've considered, but those 4 give a sense for my thoughts about the issue.

comment by Steven (steven-1) · 2024-08-15T14:43:12.444Z · LW(p) · GW(p)

How would a military which is increasingly run by AI factor into these scenarios? It seems most similar to organizational safety a la google building software with SWEs but the disanalogy might be that the AI is explicitly supposed to take over some part of the world and maybe it interpreted a command incorrectly. Or does this article only consider the AI taking over because it wanted to take over?

Replies from: Seth Herd↑ comment by Seth Herd · 2024-08-19T19:31:18.908Z · LW(p) · GW(p)

Very likely it's only considering AI taking over because it functionally "wants" to take over. That's the standard concern in AGI safety/alignment. "Misinterpreting" commands could result in it "wanting" to take over- but if it's still taking commands, those orders could probably be reversed before too much damage was done. We tend to mostly worry about the-humans-are-dead (or out-of-power-and-never-getting-it-back, which unless it's a very benevolent takeover probably means on the way to the-humans-are-dead).