Posts

Comments

In light of recent works on automating alignment and AI task horizons, I'm (re)linking this brief presentation of mine from last year, which I think stands up pretty well and might have gotten less views than ideal:

The first automatically produced, (human) peer-reviewed, (ICLR) workshop-accepted[/able] AI research paper: https://sakana.ai/ai-scientist-first-publication/

There have been numerous scandals within the EA community about how working for top AGI labs might be harmful. So, when are we going to have this conversation: contributing in any way to the current US admin getting (especially exclusive) access to AGI might be (very) harmful?

[cross-posted from X]

I find the pessimistic interpretation of the results a bit odd given considerations like those in https://www.lesswrong.com/posts/i2nmBfCXnadeGmhzW/catching-ais-red-handed.

I also think it's important to notice how much less scary / how much more probably-easy-to-mitigate (at least strictly when it comes to technical alignment) this story seems than the scenarios from 10 years ago or so, e.g. from Superintelligence / from before LLMs, when pure RL seemed like the dominant paradigm to get to AGI.

I agree it's bad news w.r.t. getting maximal evidence about steganography and the like happening 'by default'. I think it's good news w.r.t. lab incentives, even for labs which don't speak too much about safety.

I pretty much agree with 1 and 2. I'm much more optimistic about 3-5 even 'by default' (e.g. R1's training being 'regularized' towards more interpretable CoT, despite DeepSeek not being too vocal about safety), but especially if labs deliberately try for maintaining the nice properties from 1-2 and of interpretable CoT.

If "smarter than almost all humans at almost all things" models appear in 2026-2027, China and several others will be able to ~immediately steal the first such models, by default.

Interpreted very charitably: but even in that case, they probably wouldn't have enough inference compute to compete.

Quick take: this is probably interpreting them over-charitably, but I feel like the plausibility of arguments like the one in this post makes e/acc and e/acc-adjacent arguments sound a lot less crazy.

To the best of my awareness, there isn't any demonstrated proper differential compute efficiency from latent reasoning to speak of yet. It could happen, it could also not happen. Even if it does happen, one could still decide to pay the associated safety tax of keeping the CoT.

More generally, the vibe of the comment above seems too defeatist to me; related: https://www.lesswrong.com/posts/HQyWGE2BummDCc2Cx/the-case-for-cot-unfaithfulness-is-overstated.

They also require relatively little compute (often around $1 for a training run), so AI agents could afford to test many ideas.

Ok, this seems surprisingly cheap. Can you say more about what such a 1$ training run typically looks like (what the hyperparameters are)? I'd also be very interested in any analysis about how SAE (computational) training costs scale vs. base LLM pretraining costs.

I wouldn't be surprised if SAE improvements were a good early target for automated AI research, especially if the feedback loop is just "Come up with idea, modify existing loss function, train, evaluate, get a quantitative result".

This sounds spiritually quite similar to what's already been done in Discovering Preference Optimization Algorithms with and for Large Language Models and I'd expect something roughly like that to probably produce something interestin, especially if a training run only cost $1.

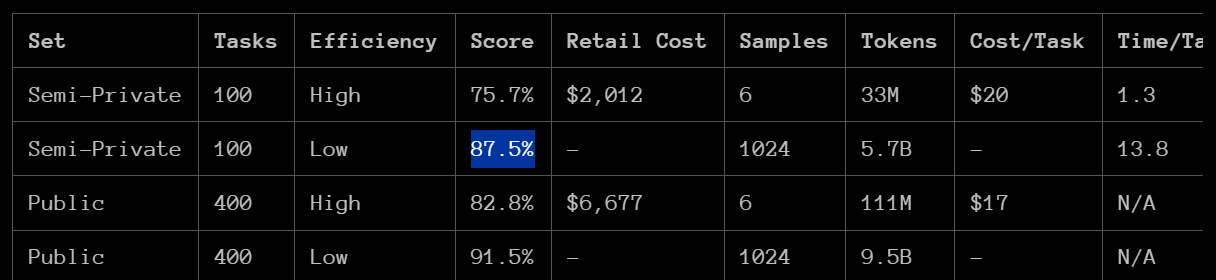

Some additional evidence: o3 used 5.7B tokens per task to achieve its ARC score of 87.5%; it also scored 75.7% on low compute mode using 33M tokens per task:

It might also be feasible to use multimodal CoT, like in Imagine while Reasoning in Space: Multimodal Visualization-of-Thought, and consistency checks between the CoTs in different modalities. Below are some quotes from a related chat with Claude about this idea.

multimodal CoT could enhance faithfulness through several mechanisms: cross-modal consistency checking, visual grounding of abstract concepts, increased bandwidth costs for deception, and enhanced interpretability. The key insight is that coordinating deception across multiple modalities would be substantially more difficult than in text alone.

Multimodal CoT for Enhanced Faithfulness: A Proposal

Core Idea

Multimodal Chain-of-Thought (like MVoT) could enhance CoT faithfulness by making it harder for models to hide information or engage in steganography. The key insight is that forcing models to express their reasoning through both text and generated images creates additional constraints that make unfaithful reasoning more difficult.

Mechanisms for Enhanced Faithfulness

1. Cross-Modal Consistency Checking

When a model must express its reasoning in both text and images, we can verify that both modalities tell the same story:

- Any hidden information in the text would need to be consistently reflected in the generated images

- The required coordination across modalities makes steganography more challenging

- Inconsistencies between modalities could signal unfaithful reasoning

2. Visual Grounding of Abstract Concepts

Images force concrete visualization of abstract concepts:

- Makes it harder to maintain multiple contradictory lines of reasoning

- Abstract deceptions become more apparent when they must be visually represented

- Visual medium reduces ambiguity that could be exploited for steganography

3. Increased Bandwidth Cost for Deception

Hiding information across both text and images is more computationally expensive:

- Model must maintain coherence across a higher-dimensional space

- Steganographic signals need more sophisticated encoding to work across modalities

- Higher cognitive load makes deception more challenging

4. Enhanced Interpretability

Multimodal outputs provide more angles for oversight:

- Humans can cross-reference the text reasoning against visual representations

- Visual artifacts of deceptive reasoning may be easier to spot

- Multiple channels for detecting inconsistencies

Implementation Approach

Required Components

- A multimodal model capable of generating both text and images

- Consistency checking mechanisms across modalities

- Visual grounding mechanisms for abstract concepts

- Multi-channel oversight tools

Training Strategy

- Start with models pre-trained on aligned multimodal data

- Fine-tune with explicit rewards for cross-modal consistency

- Penalize detected inconsistencies between modalities

- Validate using human oversight across both channels

Potential Challenges

Technical Challenges

- Ensuring high-quality image generation that accurately reflects reasoning

- Developing robust cross-modal consistency metrics

- Managing computational overhead of dual-modal generation

Validation Challenges

- Defining ground truth for faithful multimodal reasoning

- Creating test cases that can detect cross-modal deception

- Measuring effectiveness compared to text-only approaches

Research Directions

Immediate Next Steps

- Develop metrics for cross-modal consistency

- Create test suites for detecting multimodal steganography

- Build tools for human validation of multimodal reasoning

- Study failure modes specific to multimodal deception

Longer-term Research

- Explore optimal balances between modalities

- Investigate transfer learning between modalities

- Study emergence of cross-modal deception strategies

- Develop automated detection of multimodal unfaithfulness

Conclusion

Multimodal CoT presents a promising approach for enhancing faithfulness by leveraging the constraints and verification opportunities provided by multiple modalities. While technical challenges remain, the potential benefits warrant further investigation and development.

At the very least, evals for automated ML R&D should be a very decent proxy for when it might be feasible to automate very large chunks of prosaic AI safety R&D.

We find that these evaluation results are nuanced and there is no one ideal SAE configuration - instead, the best SAE varies depending on the specifics of the downstream task. Because of this, we cannot combine the results into a single number without obscuring tradeoffs. Instead, we provide a range of quantitative metrics so that researchers can measure the nuanced effects of experimental changes.

It might be interesting (perhaps in the not-very-near future) to study if automated scientists (maybe roughly in the shape of existing ones, like https://sakana.ai/ai-scientist/) using the evals as proxy metrics, might be able to come up with better (e.g. Pareto improvements) SAE architectures, hyperparams, etc., and whether adding more metrics might help; as an analogy, this seems to be the case for using more LLM-generated unit tests for LLM code generation, see Dynamic Scaling of Unit Tests for Code Reward Modeling.

I expect that, fortunately, the AI safety community will be able to mostly learn from what people automating AI capabilities research and research in other domains (more broadly) will be doing.

It would be nice to have some hands-on experience with automated safety research, too, though, and especially to already start putting in place the infrastructure necessary to deploy automated safety research at scale. Unfortunately, AFAICT, right now this seems mostly bottlenecked on something like scaling up grantmaking and funding capacity, and there doesn't seem to be enough willingness to address these bottlenecks very quickly (e.g. in the next 12 months) by e.g. hiring and / or decentralizing grantmaking much more aggressively.

From https://x.com/__nmca__/status/1870170101091008860:

o1 was the first large reasoning model — as we outlined in the original “Learning to Reason” blog, it’s “just” an LLM trained with RL. o3 is powered by further scaling up RL beyond o1

@ryan_greenblatt Shouldn't this be interpreted as a very big update vs. the neuralese-in-o3 hypothesis?

Do you have thoughts on the apparent recent slowdown/disappointing results in scaling up pretraining? These might suggest very diminishing returns in scaling up pretraining significantly before 6e27 FLOP.

I've had similar thoughts previously: https://www.lesswrong.com/posts/wr2SxQuRvcXeDBbNZ/bogdan-ionut-cirstea-s-shortform?commentId=rSDHH4emZsATe6ckF.

Gemini 2.0 Flash Thinking is claimed to 'transparently show its thought process' (in contrast to o1, which only shows a summary): https://x.com/denny_zhou/status/1869815229078745152. This might be at least a bit helpful in terms of studying how faithful (e.g. vs. steganographic, etc.) the Chains of Thought are.

A fairer comparison would probably be to actually try hard at building the kind of scaffold which could use ~10k$ in inference costs productively. I suspect the resulting agent would probably not do much better than with 100$ of inference, but it seems hard to be confident. And it seems harder still to be confident about what will happen even in just 3 years' time, given that pretraining compute seems like it will probably grow about 10x/year and that there might be stronger pushes towards automated ML.

A related announcement, explicitly targeting 'building an epistemically sound research agent

@elicitorg that can use unlimited test-time compute while keeping reasoning transparent & verifiable': https://x.com/stuhlmueller/status/1869080354658890009.

The toy task reminded me of the 'Hidden side objectives' subsection in section 'B.1.2 OBFUSCATED SCHEMING REASONING' of Towards evaluations-based safety cases for AI scheming.

For the SOTA on swebench-verified as of 16-12-2024: 'it was around $5k for a total run.. around 8M tokens for a single swebench-problem.'

'That means, around three months, it is possible to achieve performance comparable to current state-of-the-art LLMs using a model with half the parameter size.'

If this trend continues, combined with (better/more extensible) inference scaling laws, it could make LM agents much more competitive on many AI R&D capabilities soon, at much longer horizon tasks.

E.g. - figure 11 from RE-Bench: Evaluating frontier AI R&D capabilities of language model agents against human experts:

Also related: Before smart AI, there will be many mediocre or specialized AIs.

The kind of instrumental reasoning required for alignment faking seems relevant, including through n-hop latent reasoning; see e.g. section 'B.1.3 HIDDEN SCHEMING REASONING' from Towards evaluations-based safety cases for AI scheming. I wouldn't be too surprised if models could currently bypass this through shortcuts, but a mix of careful data filtering + unlearning of memorized facts about deceptive learning, as suggested in https://www.lesswrong.com/posts/9AbYkAy8s9LvB7dT5/the-case-for-unlearning-that-removes-information-from-llm#Information_you_should_probably_remove_from_the_weights, could force them to externalize their reasoning (which should be much easier to monitor than latent reasoning), if they were to try to alignment-fake; though steganography would also be another threat model here, as discussed e.g. in section 'B.1.2 OBFUSCATED SCHEMING REASONING' of Towards evaluations-based safety cases for AI scheming.

I don't dispute that transformers can memorize shortcuts. I do dispute their ability to perform latent (opaque) multi-hop reasoning robustly. And I think this should be (very) non-controversial; e.g. Mor Geva has many papers on this topic.

I'm pointing out that transformers seem really bad at internal multi-hop reasoning; currently they can't even do 2-hop robustly, 3-hop robustly seems kind of out of the question right now, and scaling doesn't seem to help much either (see e.g. Figures 2 and 3 in Do Large Language Models Perform Latent Multi-Hop Reasoning without Exploiting Shortcuts? and also how much more robust and scalable CoT reasoning is). So 'chain-of-thought but internalized to the model will take over' seems very unlikely with transformers, and much more so if basic mitigations like unlearning (e.g. of memorized facts about deceptive learning, as suggested in https://www.lesswrong.com/posts/9AbYkAy8s9LvB7dT5/the-case-for-unlearning-that-removes-information-from-llm#Information_you_should_probably_remove_from_the_weights, to mitigate the possibility of latent scheming) were applied.

Steganography is a separate threat model, but even there I'd interpret current evidence (e.g. Preventing Language Models From Hiding Their Reasoning) as mostly positive (as in, even relatively simple mitigations like paraphrasing seem to go very far).

I do indeed predict that we will see chain-of-thought become less faithful as model capabilities increase, and that other ways of doing the same thing as chain-of-thought but internalized to the model will take over.

This prediction seems largely falsified as long as transformers remain the dominant architecture, and especially if we deliberately add optimization pressures towards externalized reasoning and against internal, latent reasoning; see e.g. Do Large Language Models Perform Latent Multi-Hop Reasoning without Exploiting Shortcuts? and LLMs Do Not Think Step-by-step In Implicit Reasoning.

Thanks for this post!

This looks much worse than I thought it would, both in terms of funding underdeployment, and in terms of overfocusing on evals.

Claude Sonnet-3.5 New, commenting on the limited scalability of RNNs, when prompted with 'comment on what this would imply for the scalability of RNNs, refering (parts of) the post' and fed https://epoch.ai/blog/data-movement-bottlenecks-scaling-past-1e28-flop (relevant to opaque reasoning, out-of-context reasoning, scheming):

'Based on the article's discussion of data movement bottlenecks, RNNs (Recurrent Neural Networks) would likely face even more severe scaling challenges than Transformers for several reasons:

- Sequential Nature: The article mentions pipeline parallelism and discusses how sequential dependencies affect scaling. From the text:

Pipeline parallelism: Different GPUs handle different sequential blocks, or layers, of a Transformer. The output of one GPU is fed to the next as an input sequentially until an input makes it through all of the blocks of the model.

RNNs have even stronger sequential dependencies than Transformers, as each token's processing depends directly on the previous token's hidden state. This would make them much harder to parallelize effectively.

- Latency Wall Impact: The article notes that:

The basic reason behind the latency wall is that a bigger model typically requires more gradient steps during training, and if these have to be done in a fixed time window, this means each gradient step must take a shorter time as we scale the models we're training.

RNNs would hit this latency wall even harder because their sequential processing means they can't take advantage of some of the parallelization strategies discussed in the article, particularly tensor parallelism which requires:

after every one or two matrix multiplications (depending on the exact implementation) the GPUs need to synchronize results with each other.

- Batch Size Scaling: The article suggests that one potential solution to scaling limits is:

If we can find a way to aggressively scale batch sizes together with model sizes, we can push out the latency wall.

However, RNNs are typically trained with truncated backpropagation through time, which limits how much you can effectively increase the batch size without losing long-range dependencies. This would make it harder to apply this particular solution to RNNs.

This analysis suggests that while Transformers are approaching scaling limits around 2e28 FLOP, RNNs would likely hit prohibitive scaling bottlenecks at significantly lower compute levels due to their inherently sequential nature and limited parallelization options.'

If this generalizes, OpenAI's Orion, rumored to be trained on synthetic data produced by O1, might see significant gains not just in STEM domains, but more broadly - from O1 Replication Journey -- Part 2: Surpassing O1-preview through Simple Distillation, Big Progress or Bitter Lesson?:

'this study reveals how simple distillation from O1's API, combined with supervised fine-tuning, can achieve superior performance on complex mathematical reasoning tasks. Through extensive experiments, we show that a base model fine-tuned on simply tens of thousands of samples O1-distilled long-thought chains outperforms O1-preview on the American Invitational Mathematics Examination (AIME) with minimal technical complexity. Moreover, our investigation extends beyond mathematical reasoning to explore the generalization capabilities of O1-distilled models across diverse tasks: hallucination, safety and open-domain QA. Notably, despite training only on mathematical problem-solving data, our models demonstrated strong generalization to open-ended QA tasks and became significantly less susceptible to sycophancy after fine-tuning.'

QwQ-32B-Preview was released open-weights, seems comparable to o1-preview. Unless they're gaming the benchmarks, I find it both pretty impressive and quite shocking that a 32B model can achieve this level of performance. Seems like great news vs. opaque (e.g in one-forward-pass) reasoning. Less good with respect to proliferation (there don't seem to be any [deep] algorithmic secrets), misuse and short timelines.

The above numbers suggest that (as long as sample efficiency doesn’t significantly improve) the world will always have enough compute to produce at least 23 million token-equivalents per second from any model that the world can afford to train (end-to-end, chinchilla-style). Notably, these are many more token-equivalents per second than we currently have human-AI-researcher-seconds per second. (And the AIs would have the further advantage of having much faster serial speeds.)

So once an AI system trained end-to-end can produce similarly much value per token as a human researcher can produce per second, AI research will be more than fully automated. This means that, when AI first contributes more to AI research than humans do, the average research progress produced by 1 token of output will be significantly less than an average human AI researcher produces in a second of thinking.

There's probably a very similarly-shaped argument to be made based on difference in cost per token: because LLMs are much cheaper per token, the first time an LLM is as cost-efficient at producing AI research as a human researcher, it should be using many more tokens in its outputs ('the average research progress produced by 1 token of output will be significantly less than an average human AI researcher produces in 1 token of output'). Which, similarly, should be helpful because 'the token-by-token output of a single AI system should be quite easy for humans to supervise and monitor for danger'.

This framing might be more relevant from the POV of economic incentives to automate AI research (and I'm particularly interested in the analogous incentives to/feasibility of automating AI safety research).

I'm very uncertain and feel somewhat out of depth on this. I do have quite some hope though from arguments like those in https://aiprospects.substack.com/p/paretotopian-goal-alignment.

(Also, what Thane Ruthenis commented below.)

I think the general impression of people on LW is that multipolar scenarios and concerns over "which monkey finds the radioactive banana and drags it home" are in large part a driver of AI racing instead of being a potential impediment/solution to it. Individuals, companies, and nation-states justifiably believe that whichever one of them accesses potentially superhuman AGI first will have the capacity to flip the gameboard at-will, obtain power over the entire rest of the Earth, and destabilize the currently-existing system. Standard game theory explains the final inferential step for how this leads to full-on racing (see the recent U.S.-China Commission's report for a representative example of how this plays out in practice).

At the risk of being overly spicy/unnuanced/uncharitable: I think quite a few MIRI [agent foundations] memes ("which monkey finds the radioactive banana and drags it home", ''automating safety is like having the AI do your homework'', etc.) seem very lazy/un-truth-tracking and probably net-negative at this point, and I kind of wish they'd just stop propagating them (Eliezer being probably the main culprit here).

Perhaps even more spicily, I similarly think that the old MIRI threat model of Consequentialism is looking increasingly 'tired'/un-truth-tracking, and there should be more updating away from it (and more so with every single increase in capabilities without 'proportional' increases in 'Consequentialism'/egregious misalignment).

(Especially) In a world where the first AGIs are not egregiously misaligned, it very likely matters enormously who builds the first AGIs and what they decide to do with them. While this probably creates incentives towards racing in some actors (probably especially the ones with the best chances to lead the race), I suspect better informing more actors (especially more of the non-leading ones, who might especially see themselves as more on the losing side in the case of AGI and potential destabilization) should also create incentives for (attempts at) more caution and coordination, which the leading actors might at least somewhat take into consideration, e.g. for reasons along the lines of https://aiprospects.substack.com/p/paretotopian-goal-alignment.

I get that we'd like to all recognize this problem and coordinate globally on finding solutions, by "mak[ing] coordinated steps away from Nash equilibria in lockstep". But I would first need to see an example, a prototype, of how this can play out in practice on an important and highly salient issue. Stuff like the Montreal Protocol banning CFCs doesn't count because the ban only happened once comparably profitable/efficient alternatives had already been designed; totally disanalogous to the spot we are in right now, where AGI will likely be incredibly economically profitable, perhaps orders of magnitude more so than the second-best alternative.

I'm not particularly optimistic about coordination, especially the more ambitious kinds of plans (e.g. 'shut it all down', long pauses like in 'A narrow path...', etc.), and that's to a large degree (combined with short timelines and personal fit) why I'm focused on automated safety reseach. I'm just saying: 'if you feel like coordination is the best plan you can come up with/you're most optimistic about, there are probably more legible and likely also more truth-tracking arguments than superintelligence misalignment and loss of control'.

This is in large part why Eliezer often used to challenge readers and community members to ban gain-of-function research, as a trial run of sorts for how global coordination on pausing/slowing AI might go.

This seems quite reasonable; might be too late as a 'trial run' at this point though, if taken literally.

I'm envisioning something like: scary powerful capabilities/demos/accidents leading to various/a coalition of other countries asking the US (and/or China) not to build any additional/larger data centers (and/or run any larger training runs), and, if they're scared enough, potentially even threatening various (escalatory) measures, including economic sanctions, blockading the supply of compute/prerequisites to compute, sabotage, direct military strikes on the data centers, etc.

I'm far from an expert on the topic, but I suspect it might not be trivial to hide at least building a lot more new data centers/supplying a lot more compute, if a significant chunk of the rest of the world was watching very intently.

(Separately, whether or not it's "truer" depends a lot on one's models of AGI development. Most notably: (a) how likely is misalignment and (b) how slow will takeoff be//will it be very obvious to other nations that super advanced AI is about to be developed, and (c) how will governments and bureaucracies react and will they be able to react quickly enough.)

I'm envisioning a very near-casted scenario, on very short (e.g. Daniel Kokotajlo-cluster) timelines, egregious misalignment quite unlikely but not impossible, slow-ish (couple of years) takeoff (by default, if no deliberate pause), pretty multipolar, but with more-obviously-close-to-scary capabilities, like ML R&D automation evals starting to fall.

Hot take, though increasingly moving towards lukewarm: if you want to get a pause/international coordination on powerful AI (which would probably be net good, though likely it would strongly depend on implementation details), arguments about risks from destabilization/power dynamics and potential conflicts between various actors are probably both more legible and 'truer' than arguments about technical intent misalignment and loss of control (especially for not-wildly-superhuman AI).

Here's a somewhat wild idea to have a 'canary in a coalmine' when it comes to steganography and non-human (linguistic) representations: monitor for very sharp drops in BrainScores (linear correlations between LM activations and brain measurements, on the same inputs) - e.g. like those calculated in Scaling laws for language encoding models in fMRI. (Ideally using larger, more diverse, higher-resolution brain data.)

Mostly the same, perhaps a minor positive update on the technical side (basically, from systems getting somewhat stronger - so e.g. closer to automating AI safety research - while still not showing very dangerous capabilities, like ASL-3, prerequisites to scheming, etc.). My views are even more uncertain / unstable on the governance side though, which probably makes my overall p(doom) (including e.g. stable totalitarianism, s-risks, etc.) more like 20% than 5% (I was probably mostly intuitively thinking of extinction risk only when giving the 5% figure a year ago; overall my median probably hasn't changed much, but I have more variance, coming from the governance side).

Proposal part 3: Use Paraphraser: One more complication. The outputs of the Shoggoth? Paraphrase them. For example, after each line of reasoning CoT is complete, pause the Shoggoth and have a different specialized paraphraser model generate 10 syntactically distinct but semantically equivalent ways of saying the same thing. Then pick one at random and replace the original line of reasoning with it. Then boot up the Shoggoth again and have it continue the CoT.

Any thoughts on how much of a (computational) alignment tax this would impose? Related, thoughts on how big of a worry scheming/opaque cognitions in the paraphrasers would be? (e.g. one intuition here might be that the paraphrasers might be 'trusted' in control terminology - incapable of scheming because too weak; in this case the computational alignment tax might also be relatively low, if the paraphrasers are much smaller than the Face and the Shoggoth).

'China hawk and influential Trump AI advisor Jacob Helberg asserted to Reuters that “China is racing towards AGI," but I couldn't find any evidence in the report to support that claim.' https://x.com/GarrisonLovely/status/1859022323799699474

AFAICT, there seems to quite heavy overlap between the proposal and Daniel's motivation for it and safety case (sketch) #3 in https://alignment.anthropic.com/2024/safety-cases/.

'The report doesn't go into specifics but the idea seems to be to build / commandeer the computing resources to scale to AGI, which could include compelling the private labs to contribute talent and techniques.

DX rating is the highest priority DoD procurement standard. It lets DoD compel companies, set their own price, skip the line, and do basically anything else they need to acquire the good in question.' https://x.com/hamandcheese/status/1858902373969564047

(screenshot in post from PDF page 39 of https://www.uscc.gov/sites/default/files/2024-11/2024_Annual_Report_to_Congress.pdf)

'🚨 The annual report of the US-China Economic and Security Review Commission is now live. 🚨

Its top recommendation is for Congress and the DoD to fund a Manhattan Project-like program to race to AGI.

Buckle up...'

And the space of interventions will likely also include using/manipulating model internals, e.g. https://transluce.org/observability-interface, especially since (some kinds of) automated interpretability seem cheap and scalable, e.g. https://transluce.org/neuron-descriptions estimated a cost of < 5 cents / labeled neuron. LM agents have also previously been shown able to do interpretability experiments and propose hypotheses: https://multimodal-interpretability.csail.mit.edu/maia/, and this could likely be integrated with the above. The auto-interp explanations also seem roughly human-level in the references above.

As well as (along with in-context mechanisms like prompting) potentially model internals mechanisms to modulate how much the model uses in-context vs. in-weights knowledge, like in e.g. Cutting Off the Head Ends the Conflict: A Mechanism for Interpreting and Mitigating Knowledge Conflicts in Language Models. This might also work well with potential future advances in unlearning, e.g. of various facts, as discussed in The case for unlearning that removes information from LLM weights.

Any thoughts on potential connections with task arithmetic? (later edit: in addition to footnote 2)

Would the prediction also apply to inference scaling (laws) - and maybe more broadly various forms of scaling post-training, or only to pretraining scaling?