Comments

Even if the internals-based method is extremely well supported theoretically and empirically (which seems quite unlikely), I don't think this would suffice for this to trigger a strong response by convincing relevant people

It's hard for me to imagine a world where we really have internals-based methods that are "extremely well supported theoretically and empirically," so I notice that I should take a second to try to imagine such a world before accepting the claim that internals-based evidence wouldn't convince the relevant people...

Today, the relevant people probably wouldn't do much in response to the interp team saying something like: "our deception SAE is firing when we ask the model bio risk questions, so we suspect sandbagging."

But I wonder how much of this response is a product of a background assumption that modern-day interp tools are finicky and you can't always trust them. So in a world where we really have internals-based methods that are "extremely well supported theoretically and empirically," I wonder if it'd be treated differently?

(I.e. a culture that could respond more like: "this interp tool is a good indicator of whether or not the model is deceptive, and just because you can get the model to say something bad doesn't mean it's actually bad" or something? Kinda like the reactions to the o1 Apollo result)

Edit: Though maybe this culture change would take too long to be relevant.

"Lots of very small experiments playing around with various parameters" ... "then a slow scale up to bigger and bigger models"

This Dwarkesh timestamp with Jeff Dean & Noam Shazeer seems to confirm this.

"I'd also guess that the bottleneck isn't so much on the number of people playing around with the parameters, but much more on good heuristics regarding which parameters to play around with."

That would mostly explain this question as well: "If parallelized experimentation drives so much algorithmic progress, why doesn't gdm just hire hundreds of researchers, each with small compute budgets, to run these experiments?"

It would also imply that it would be a big deal if they had an AI with good heuristics for this kind of thing.

I would love to see an analysis and overview of predictions from the Dwarkesh podcast with Leopold. One for Situational Awareness would be great too.

Seems like a pretty similar thesis to this: https://www.lesswrong.com/posts/fPvssZk3AoDzXwfwJ/universal-basic-income-and-poverty

I expect that within a year or two, there will be an enormous surge of people who start paying a lot of attention to AI.

This could mean that the distribution of who has influence will change a lot. (And this might be right when influence matters the most?)

I claim: your effect on AI discourse post-surge will be primarily shaped by how well you or your organization absorbs this boom.

The areas where I've thought the most about this phenomenon are:

- AI safety university groups

- Non-AGI-lab research organizations

- AI bloggers / X influencers

(But this applies to anyone whose impact primarily comes from spreading their ideas, which is a lot of people.)

I think that you or your organization should have an explicit plan to absorb this surge.

Unresolved questions:

- How much will explicitly planning for this actually help absorb the surge? (Regardless, it seems worth a google doc and a pomodoro session to at least see if there's anything you can do to prepare)

- How important is it to make everyday people informed about AI risks? Or is influence so long-tailed that it only really makes sense to build reputation with highly influential people? (Though note that this surge isn't just for everyday people: I expect that the entire memetic landscape will be totally reformed after AI becomes clearly a big deal, and that applies to big-shot government officials along with your average Joe.)

I'd be curious to see how this looked with Covid: Did all the Covid experts get an even 10x multiplier in following? Or were a handful of Covid experts highly elevated, while the rest didn't really see much of an increase in followers? If the latter, what did those experts do to get everyone to pay attention to them?

Securing AI labs against powerful adversaries seems like something that almost everyone can get on board with. Also, posing it as a national security threat seems to be a good framing.

Some more links from the philosophical side that I've found myself returning to a lot:

(Lately, it's seemed to me that focusing my time on nearer-term / early but post-AGI futures seems better than spending my time discussing ideas like these on the margin, but this may be more of a fact about myself than it is about other people, I'm not sure.)

Ah, sorry, I meant it's genuinely unclear how to classify this.

Once again, props to OAI for putting this in the system card. Also, once again, it's difficult to sort out "we told it to do a bad thing and it obeyed" from "we told it to do a good thing and it did a bad thing instead," but these experiments do seem like important information.

“By then I knew that everything good and bad left an emptiness when it stopped. But if it was bad, the emptiness filled up by itself. If it was good you could only fill it by finding something better.”

- Hemingway, A Moveable Feast

The Fatebook embedding is so cool! I especially appreciate that it hides other people's predictions before you make your own. From what I can tell this isn't done on LessWrong right now and I think that would be really cool to see!

(I may be mistaken on how this works, but from what I can tell they look like this on LW right now)

Great post, seems like a handy thing to remember.

The scene in planecrash where Keltham gives his first lecture, as an attempt to teach some formal logic (and a whole bunch of important concepts that usually don't get properly taught in school), is something I'd highly recommend reading! As far as I can remember, you should be able to just pick it up right here, and follow the important parts of the lecture without understanding the story.

How difficult would it be to turn this into an epub or pdf? Is there word of that coming soon? (or integrating into LW like the Codex?)

Realizing I kind of misunderstood the point of the post. Thanks!

In the case that there are, like, "AI-run industries" and "non-AI-run industries", I guess I'd expect the "AI-run industries" to gobble up all of the resources to the point that even though AIs aren't automating things like healthcare, there just aren't any resources left?

To be clear, if you put doom at 2-20%, you're still quite worried then? Like, wishing humanity was dedicating more resources towards ensuring AI goes well, trying to make the world better positioned to handle this situation, and saddened by the fact that most people don't see it as an issue?

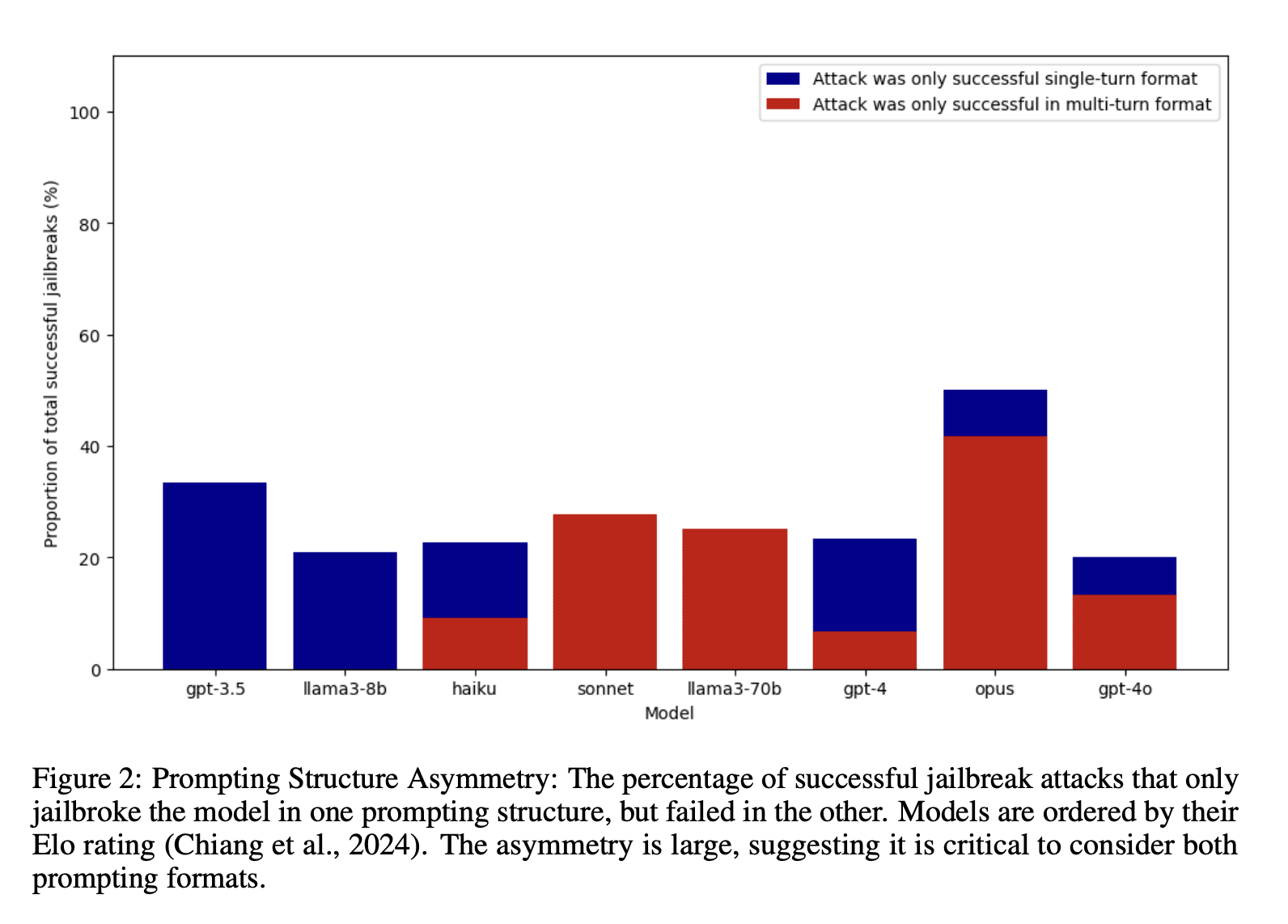

I'd be really interested to see how the harmfulness feature relates to multi-turn jailbreaks! We recently explored splitting a cipher attack into a multi-turn jailbreak (where instead of passing-in the word mappings + the ciphered harmful prompt all at once, you pass in the word mappings, let the model respond, and then pass-in the harmful prompt).

I'd expect to see something like when you "spread out the harm" enough, such that no one prompt contains any blaring red flags, the harmfulness feature never reaches the critical threshold, or something?

Scale recently published some great multi-turn work too!

Edit: I think I subconsciously remembered this paper and accidentally re-invented it.

Should it be more tabooed to put the bottom line in the title?

Titles like "in defense of <bottom line>" or just "<bottom line>" seem to:

- Unnecessarily make it really easy for people to select content to read based on the conclusion it comes to

- Frame the post as having the goal of convincing you of <bottom line>, and set up the reader's expectation as such. This seems like it would either put you in "pause critical thinking to defend My Team" mode (if you agree with the title), or "continuously search for counter-arguments" mode (if you disagree with the title).

When making safety cases for alignment, it's important to remember that defense against single-turn attacks doesn't always imply defense against multi-turn attacks.

Our recent paper shows a case where breaking up a single-turn attack into multiple prompts (spreading it out over the conversation) changes which models/guardrails are vulnerable to the jailbreak.

Robustness against the single-turn version didn't imply robustness against the multi-turn version of the attack, and robustness against the multi-turn version didn't imply robustness against the single-turn version of the attack.

The rank of a matrix = the number of non-zero eigenvalues of the matrix (at least for symmetric matrices like a Hessian)! So you can either use the top eigenvalues to count the non-zeros, or you can use the fact that an n x n matrix always has n eigenvalues (counted with multiplicity) to determine the number of non-zero eigenvalues by counting the bottom zero-eigenvalues.
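Here's a quick numerical check of the rank/eigenvalue relationship, as a minimal sketch. It assumes a symmetric matrix (like a Hessian), where the statement holds exactly; the function name `rank_from_eigenvalues` and the tolerance `tol` are just illustrative choices of mine, not from any particular library.

```python
import numpy as np

def rank_from_eigenvalues(sym_matrix, tol=1e-8):
    """Count the rank of a symmetric matrix two ways via its eigenvalues."""
    n = sym_matrix.shape[0]
    # eigvalsh is meant for symmetric/Hermitian matrices and returns real eigenvalues
    eigenvalues = np.linalg.eigvalsh(sym_matrix)
    # Way 1: count the eigenvalues that are non-zero (up to the tolerance)
    num_nonzero = int(np.sum(np.abs(eigenvalues) > tol))
    # Way 2: count the zero eigenvalues and subtract from n
    num_zero = int(np.sum(np.abs(eigenvalues) <= tol))
    return num_nonzero, n - num_zero

# Build a 5x5 symmetric matrix whose rank is 2 by construction
rng = np.random.default_rng(0)
factor = rng.normal(size=(5, 2))
sym = factor @ factor.T

print(rank_from_eigenvalues(sym))
print(np.linalg.matrix_rank(sym))
```

Both counts should agree with `np.linalg.matrix_rank`. For a non-symmetric matrix this can fail (a nilpotent matrix has all-zero eigenvalues but non-zero rank), so the sketch only applies to the symmetric case.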

Also, for more detail on the "getting Hessian eigenvalues without calculating the full Hessian" thing, I'd really recommend John's explanation in this linear algebra lecture he recorded.

Sure, securing an economic surplus is sometimes part of an interesting challenge, and it can presumably get one invited to lots of cool parties, but controlling surplus is typically not as central and necessary to “achievement” and “association” as to “power”.

I guess that the ultra-deadly ingredient here is the manager gaining status when more people are hired, while hardly having any personal stake in the money that gets spent on new hires.

If given the choice between receiving the salary of a would-be new hire, or getting a new bs hire underling for status, I'd definitely expect most people to take the double salary option.

Like I don't expect these two contrasting experiences to really stack up to each other. I think if it's all the same person weighing these two options, the extra money would blow the status option out of the water.

That's a pretty clean story for why I'd predict fewer bs jobs in smaller, say 2-5 person companies (though I don't have sources to confirm this prediction). In these smaller companies, when the person you're hiring gets paid via a noticeable hit to your own paycheck, I wonder if the experience of "ugh, this ineffective person is costing me money" just dramatically cancels out the status thing.

And then potentially the issue here is that big companies tend to separate the ugh-this-costs-me-money person from the woohoo-more-status person?

Thought this paper (published after this post) seemed relevant: Language Models Don't Always Say What They Think: Unfaithful Explanations in Chain-of-Thought Prompting

Ah shoot, I didn’t catch the ambiguity - it was just my partner asking me to turn off the lights, which is much less weird. (I edited the post to make it clearer, thanks!)

Still, it must have had some Kabbalistic significance.

Also "Large Language Models Are Not Robust Multiple Choice Selectors" (Zheng et al., 2023)

This work shows that modern LLMs are vulnerable to option position changes in MCQs due to their inherent "selection bias", namely, they prefer to select specific option IDs as answers (like "Option A"). Through extensive empirical analyses with 20 LLMs on three benchmarks, we pinpoint that this behavioral bias primarily stems from LLMs' token bias, where the model a priori assigns more probabilistic mass to specific option ID tokens (e.g., A/B/C/D) when predicting answers from the option IDs. To mitigate selection bias, we propose a label-free, inference-time debiasing method, called PriDe, which separates the model's prior bias for option IDs from the overall prediction distribution. PriDe first estimates the prior by permutating option contents on a small number of test samples, and then applies the estimated prior to debias the remaining samples. We demonstrate that it achieves interpretable and transferable debiasing with high computational efficiency. We hope this work can draw broader research attention to the bias and robustness of modern LLMs.
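To make the abstract's "estimate the prior by permuting option contents, then divide it out" idea concrete, here's a toy sketch. Everything in it is my own assumption rather than the paper's implementation: the made-up numbers, the helper names, the choice of cyclic permutations, the averaging of log-probabilities, and the toy model in which the observed probability of each option ID is just (ID bias) x (preference for the content at that position).

```python
import math

def normalize(weights):
    total = sum(weights)
    return [w / total for w in weights]

def observed_distribution(id_bias, content_scores):
    # Toy model (assumption): observed prob of each option ID is
    # proportional to its ID bias times the content placed there.
    return normalize([b * c for b, c in zip(id_bias, content_scores)])

def cyclic_permutations(items):
    return [items[k:] + items[:k] for k in range(len(items))]

def estimate_prior(observed_per_permutation):
    # Average the log-probability of each option ID across permutations,
    # then renormalize. The content effect averages out; the ID bias stays.
    count = len(observed_per_permutation)
    num_ids = len(observed_per_permutation[0])
    mean_logs = [
        sum(math.log(dist[i]) for dist in observed_per_permutation) / count
        for i in range(num_ids)
    ]
    return normalize([math.exp(m) for m in mean_logs])

def debias(observed, prior):
    # Divide out the estimated prior and renormalize.
    return normalize([p / q for p, q in zip(observed, prior)])

# Hypothetical hidden bias toward option ID "A" (index 0)
id_bias = [0.4, 0.3, 0.2, 0.1]

# Estimate the prior from one sample by permuting its option contents
estimation_contents = [0.1, 0.2, 0.6, 0.1]
observed_runs = [observed_distribution(id_bias, p)
                 for p in cyclic_permutations(estimation_contents)]
prior = estimate_prior(observed_runs)

# Apply the estimated prior to a new sample without permuting it
new_contents = [0.25, 0.2, 0.4, 0.15]
observed_new = observed_distribution(id_bias, new_contents)
debiased_new = debias(observed_new, prior)

print("estimated prior:", prior)
print("raw pick:", observed_new.index(max(observed_new)))
print("debiased pick:", debiased_new.index(max(debiased_new)))
```

In this toy setup the raw prediction picks the first option because of the ID bias, while the debiased prediction picks the option whose content is actually preferred. Real models won't factor this cleanly, so treat it as an illustration of the idea only.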

Was an explanation of the unsupervised algorithm / sphere search ever published somewhere?

Random Anki tips

When using Anki for a scattered assortment of topics, is it best to group topics into different decks, or to not worry about it and just have a single big deck?

If making multiple decks, would Anki still give you a single "do this today" set?

I've only used it in the context of studying a foreign language.