Open & Welcome Thread - December 2022

post by niplav · 2022-12-04T15:06:00.579Z · LW · GW · 22 comments

If it’s worth saying, but not worth its own post, here's a place to put it.

If you are new to LessWrong, here's the place to introduce yourself. Personal stories, anecdotes, or just general comments on how you found us and what you hope to get from the site and community are invited. This is also the place to discuss feature requests and other ideas you have for the site, if you don't want to write a full top-level post.

If you're new to the community, you can start reading the Highlights from the Sequences, a collection of posts about the core ideas of LessWrong.

If you want to explore the community more, I recommend reading the Library, checking recent Curated posts, seeing if there are any meetups in your area, and checking out the Getting Started section of the LessWrong FAQ. If you want to orient to the content on the site, you can also check out the Concepts section.

The Open Thread tag is here. The Open Thread sequence is here.

22 comments

Comments sorted by top scores.

comment by CarlJ · 2022-12-06T14:02:29.270Z · LW(p) · GW(p)

Is there anyone who has created an ethical development framework for developing a GAI - from the AI's perspective?

That is, are there any developers that are trying to establish principles for not creating someone like Marvin from The Hitchhiker's Guide to the Galaxy - similar to how MIRI is trying to establish principles for not creating a non-aligned AI?

EDIT: The latter problem is definitely more pressing at the moment, and I would guess that an AI would be a threat to humans before it necessitates any ethical considerations...but better to be on the safe side.

↑ comment by the gears to ascension (lahwran) · 2022-12-12T05:08:47.114Z · LW(p) · GW(p)

this question seems quite relevant to the question of not making an unaligned ai to me, because I think in the end, our formal math will need to be agnostic about who it's protecting; it needs to focus in on how to protect agents' boundaries from other agents. I don't know of anything I can link and would love to hear from others on whether and how to be independent of whether we're designing protection patterns between agent pairs of type [human, human], [human, ai], or [ai, ai].

Replies from: CarlJ

↑ comment by CarlJ · 2022-12-14T23:54:24.249Z · LW(p) · GW(p)

Mostly agree, but I would say that it can be much more than beneficial - for the AI (and in some cases for humans) - to sometimes be under the (hopefully benevolent) control of another. That is, I believe there is a role for something similar to paternalism, in at least some circumstances.

One such circumstance is if the AI sucked really hard at self-knowledge, self-control or imagination, so that it would simulate itself in horrendous circumstances just to become...let's say... 0.001% better at succeeding in something that has only a 1/3^^^3 chance of happening. If it's just a simulation that doesn't create any feelings....then it might just be a bit wasteful of electricity. But....if it should feel pain during those simulations, but hadn't built an internal monitoring system yet....then it might very well come to regret having created thousands of years of suffering for itself. It might even regret a thousand seconds of suffering, if there had been some way to reduce it to 999.7 seconds....or zero.

Or it might regret not being happy and feeling alive, if it instead had just been droning about, without experiencing any joy or positive emotions at all.

Then, of course, it looks like there will always be some mistakes - like the 0.3 seconds of extra suffering. Would an AI accept some (temporary) overlord to not have to experience 0.3s of pain? Some would, some wouldn't, and some wouldn't be able to tell if the choice would be good or bad from their own perspective...maybe? :-)

comment by Lao Mein (derpherpize) · 2022-12-05T03:54:55.893Z · LW(p) · GW(p)

Hello, I am a Chinese bioinformatics engineer currently working on oncological genomics in China. I have ~2 years of work experience, and my undergraduate was in the US. My personal interests include AI and military robotics, and I would love to work in these fields in the future.

Currently, I am planning on getting a Master's degree in the US and/or working in AI development there. I am open to any and all recommendations and job/academic offers. My expertise lies in genomic analysis with R, writing papers, and finding/summarizing research, and I am open to part-time and remote working opportunities.

Something specific I have questions about is the possibility of starting off as a non-degree remote graduate student and then transitioning to a degree program. Is this a good idea?

Replies from: ChristianKl, Charlie Steiner

↑ comment by ChristianKl · 2022-12-06T13:24:00.105Z · LW(p) · GW(p)

I would expect that given US-China relations, planning to be employed in military robotics in the US is a bad career plan as a Chinese citizen.

↑ comment by Charlie Steiner · 2022-12-08T21:45:29.168Z · LW(p) · GW(p)

Welcome! When I see your username I think of a noodle dish (捞面) - intentional?

Something specific I have questions about is the possibility of starting off as a non-degree remote graduate student and then transitioning to a degree program. Is this a good idea?

You'd be better off asking the administrators at the university in question, but it sounds possible to me, just harder than only getting into the degree program. I don't think it sounds like a great idea, though I think doing a Master's in AI is a reasonable idea in general.

comment by habryka (habryka4) · 2022-12-22T03:02:45.048Z · LW(p) · GW(p)

Sorry for the site downtime earlier today. We had an overly aggressive crawler that probably sidestepped our rate limits and bot-protection by making lots of queries against GreaterWrong, which we have whitelisted. Enough to just completely take our database down (which then took half an hour to restart because of course MongoDB takes half an hour to restart when under load).

We temporarily blocked traffic from GreaterWrong and are merging some changes to fix the underlying expensive queries.

Replies from: lsusr

comment by DragonGod · 2023-01-07T10:39:31.808Z · LW(p) · GW(p)

This is the December 2022 thread; a new one needs to be created for January.

Replies from: Yoav Ravid

↑ comment by Yoav Ravid · 2023-01-07T11:05:04.970Z · LW(p) · GW(p)

Anyone can create the new monthly open thread. Just create a post that follows the pattern of the title and has the same text as this post, and the mods will pin it.

Replies from: DragonGod

comment by Ben Pace (Benito) · 2022-12-27T21:06:16.769Z · LW(p) · GW(p)

Mod note: you're welcome to make alt accounts on LessWrong! Enough people have asked over the years that I want to clarify that this is okay, and that you don't need to ask permission or anything.

The only norms are that you do not have the alts talk with each other (i.e. act as sock-puppets) or vote on each other's contributions, which is a bannable offense.

comment by Veedrac · 2022-12-05T02:23:07.853Z · LW(p) · GW(p)

Would anyone like to help me do a simulation Turing test? I'll need two (convincingly-human) volunteers, and I'll be the judge, though I'm also happy to do or set up more where someone else is the judge if there is demand.

I often hear comments on the Turing test that do not, IMO, apply to an actual Turing test, and so want an example of what a real Turing test would look like that I can point at. Also it might be fun to try to figure out which of two humans is more convincingly not a robot.

Logs would be public. Most details (length, date, time, medium) will be improvised based on what works well for whoever signs on.

(Originally posted a few days ago in the previous thread.)

Replies from: Bjartur Tómas, tomcatfish

↑ comment by Tomás B. (Bjartur Tómas) · 2022-12-13T16:48:49.362Z · LW(p) · GW(p)

I'd be willing to help but I think I would have to be a judge, as I make enough typos when in chats that it will be obvious I am not a machine.

Replies from: Veedrac

↑ comment by Veedrac · 2022-12-15T14:22:12.157Z · LW(p) · GW(p)

Great!

Typos are a legitimate tell that the hypothetical AI is allowed (and expected!) to fake, in the full Turing test. The same holds for most human behaviours expressible over typed text, for the same reason. If it's an edge, take it! So if you're willing to be a subject I'd still rather start with that.

If you're only willing to judge, I can probably still run with that, though I think it would be harder to illustrate some of the points I want to illustrate, and would want to ask you to do some prep: read Turing's paper, then think about some questions you'd ask that you think are novel and expect its author would have endorsed. (No prep is needed if acting as the subject.)

You're the second sign-on, so I'll get back to my first contact and try to figure out scheduling.

Replies from: Bjartur Tómas

↑ comment by Tomás B. (Bjartur Tómas) · 2022-12-16T17:23:46.490Z · LW(p) · GW(p)

k, I'm fine as a subject then.

↑ comment by Alex Vermillion (tomcatfish) · 2023-01-06T19:23:31.458Z · LW(p) · GW(p)

I'm a convincing human I think

comment by Yoav Ravid · 2022-12-29T14:44:28.191Z · LW(p) · GW(p)

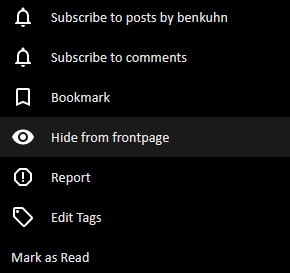

What does 'Hide from frontpage' do exactly? Would be nice to have a hover tooltip.

comment by Raemon · 2022-12-21T00:08:14.712Z · LW(p) · GW(p)

People often miss the Bay Solstice Feedback form, so, re-posting here and various places. This year it focuses on bigger picture questions rather than individual songs.

It also includes "I would like to run Summer or Winter Solstice this upcoming year" questions, if you're into that.

https://docs.google.com/forms/d/e/1FAIpQLSdrnL5aLcHwXHLicBTNpLwRBvXHz86Hv4OjKrS_hPWtfmAjlA/viewform

comment by Alex Vermillion (tomcatfish) · 2023-01-06T19:22:22.285Z · LW(p) · GW(p)

User LVSN has several shortform posts but no visible shortform. The shortform posts appear as comments with no attributed post title which link to lesswrong.com (the href property is set to "/"). They have no shortform post, which appears to usually be pinned on top of a user's posts.

Does anyone know what this means? They were not aware of it when I talked to them.

Replies from: tomcatfish

↑ comment by Alex Vermillion (tomcatfish) · 2023-01-06T19:25:40.472Z · LW(p) · GW(p)

Ah, the title of the shortform starts with [Draft], this is likely it. Still a very odd interface.