Relativity Theory for What the Future 'You' Is and Isn't

post by FlorianH (florian-habermacher) · 2024-07-29T02:01:17.736Z · LW · GW · 49 comments

"Me" encompasses three constituents: this mind here and now, its memory, and its cared-for future. There follows no ‘ought’ with regards to caring about future clones or uploadees, and your lingering questions about them dissipate.

In When is a mind me? [LW · GW], Rob Bensinger argues that the answer is Yes to each of the following three questions:

- If I expect to be uploaded tomorrow, should I care about the upload in the same ways (and to the same degree) that I care about my future biological self?

- Should I anticipate experiencing what my upload experiences?

- If the scanning and uploading process requires destroying my biological brain, should I say yes to the procedure?

I say instead: Do whatever occurs to you; it’s not wrong! And if you change your mind tomorrow, that’s not wrong either.[1] So the answers here are:

- Care however it occurs to you!

- Well, what do you anticipate experiencing? Something or nothing? You anticipate whatever you do anticipate and that’s all there is to know—there’s no “should” here.

- Say what you feel like saying. There’s nothing inherently right or wrong here, as long as it aligns with your actual internally felt, forward-looking preference for the uploaded being and the physically to-be-eliminated future being.

Clarification: This does not imply you should never wonder about what you actually want. It is normal to feel confused at times about our own preferences. What we must not do is insist on reaching a universal, 'objective' truth about it.

So, I propose there’s nothing wrong with being hesitant as to whether you really care about the guy walking out of the transporter. Whatever your intuition tells you is as good as it gets in terms of judgement. It’s neither right nor wrong. So, I advocate a sort of relativity theory for your future, if you will: Care about whosever fate you happen to care about, but don’t ask whom among your successors you should care about.

I arrive at this conclusion starting from a position rather similar to the one posited by Rob Bensinger. The take is based on only two simple core elements:

- The current "me" is precisely my current mind at this exact moment—nothing more, nothing less.

- This mind strongly cares about its 'natural' successor over the next milliseconds, seconds, and years, and it cherishes the memories from its predecessors. "Natural" feels vague? Exactly, by design!

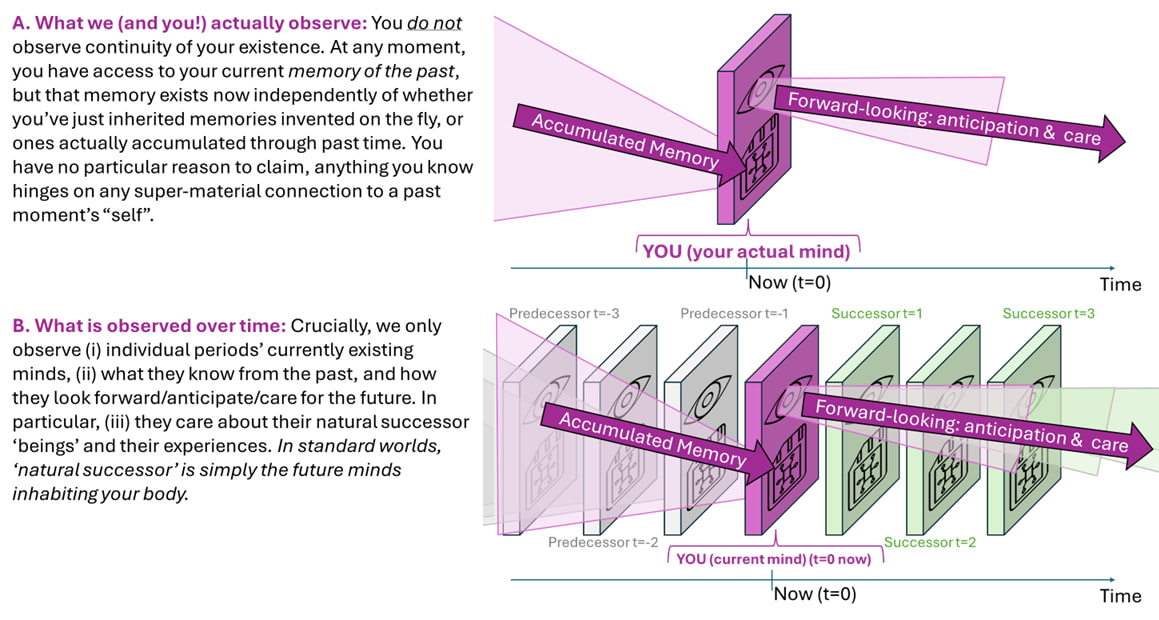

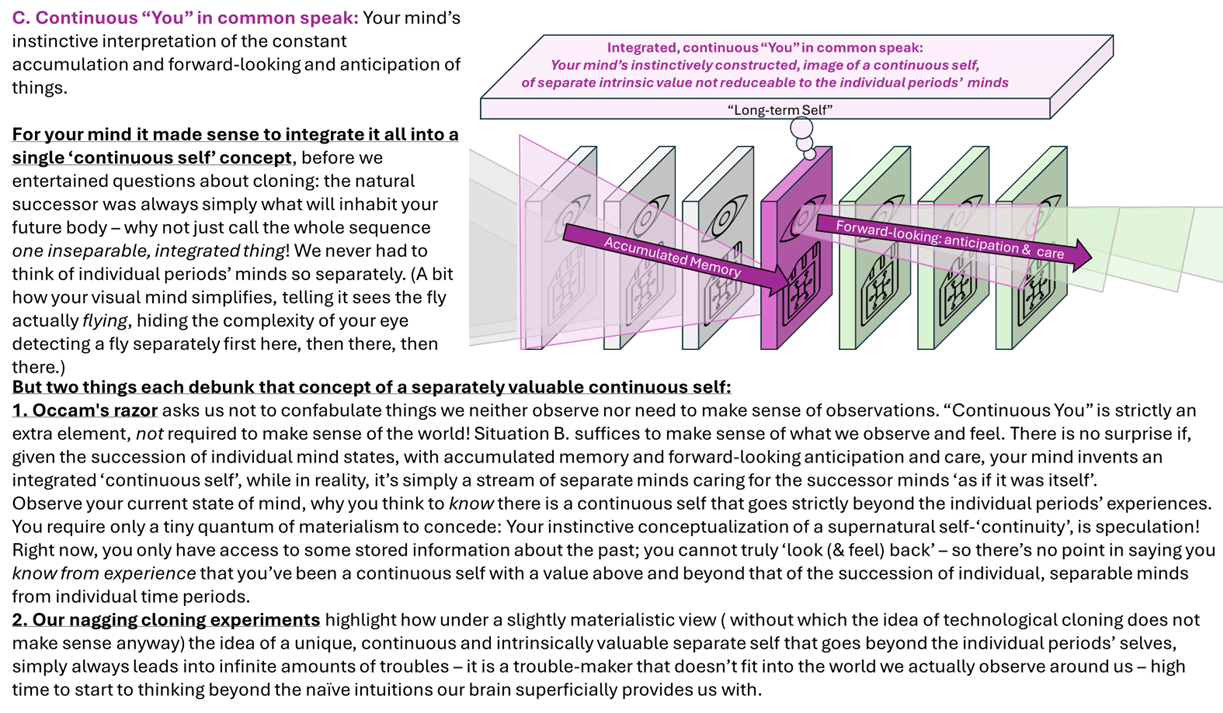

This is not just a superficially convenient way out of some of our cloning conundrums; it is also the logical view. Besides removing the puzzles about cloning and uploading that you may otherwise struggle to solve satisfactorily, it explains what we observe without adding unnecessary complexity (illustration below).

Graphical illustration: What we know, in contrast to what your brain instinctively tells you

Implication

In the absence of cloning and uploading, this is essentially the same as being a continuous "self." You care so deeply about your direct physical and mental successors that you might as well speak of a unified 'self'. Rob Bensinger provides a more detailed examination of this idea, which I find agreeable. With cloning, everything remains the same except for a minor detail, and if we're open to that detail, it creates no complications in otherwise perplexing thought experiments. Here's how it works:

- Your current mind is cloned or transported. The successors simply inherit your memories, each in turn developing their own concern for their successors holding their memories, and so forth.

- How much you care for future successors, or for which successor, is left to your intuition. There's nothing more to say! There's no right or wrong here. We may sometimes be perplexed about how much we care for which successor in a particular thought experiment, but you may adopt a perspective as casually, quickly, and baselessly as you happen to; there's nothing wrong with any view you may hold. Nothing harms you (or at least not more than necessary), as long as your decisions are in line with the degree of regard you have, you feel, for the future successors in question.

Is it practicable?

Can we truly live with this understanding? Absolutely. I am myself right now, and I care about the next second's successor with about a '100%' weight: just as much as for my actual current self, under normal circumstances. Colloquially, even in our own minds, we refer to this as "we're our continuous self." But tell yourself that’s rubbish. You are only the actual current moment's you, and the rest are the successors you may deeply care about. This perspective simplifies many dilemmas: You fall asleep in your bed, someone clones you and places the original you on the sofa, and the clone in your bed [LW(p) · GW(p)]—who is "you" now?[2] Traditional views are often confounded—everyone has a different intuition. Maybe every day you have a different response, based on no particular reason. And it's not your fault; we're simply asking the wrong question.

By adopting the relativity viewpoint, it becomes straightforward. Maybe you anticipate and want to ensure the right person receives the gold bar upon waking, so you place it where it feels most appropriate according to your feelings towards the two. Remember, you exist just now, and everything future comprises new selves, for some of which you simply have a particular forward-looking care. Which one do you care more about? That decision should guide where you place the gold bar.

Vagueness – as so often in altruism

You might say it’s not easy: you can’t just make up your mind so easily about whom to care for. That resonates with me. Ever dived into how humans show altruism towards others? It’s not exactly pretty. Not just because absolute altruism is unbeautifully small, but simply because we don’t have good, quantitative answers as to whom we care about and how much. We’re extremely erratic here: one minute we might completely ignore lives far away, and the next, a small change in the story can make us care deeply. And so it may also be for your feelings towards future beings inheriting your memories and starting off with your current brain state: you have no very clear preferences. But here’s the thing: it’s all okay. There’s no “wrong” way to feel about which future mind to care about, so don’t sweat over figuring out which one is the real “you.” You are who you are right now, with all your memories, hopes, and desires related to one or several future minds, especially those who directly descend from you. It’s kind of like how we feel about our kids; there are no fixed rules on how much we should care.

Of course, we can ask from a utilitarian perspective how much you should care about whom, but that’s a totally separate question, as it deals with aggregate welfare, and thus precisely not with subjective preference for any particular individuals.

More than a play on words?

You may call it a play on words, but I believe there's something 'resolving' in this view (or in this 'definition' of self, if you will). And personally, the thought that I am not in any absolute sense the person who will wake up in that bed I go to sleep in now is inspiring. It sometimes motivates me to care a bit more about others than just myself (well, well, vaguely). None of these final points in and of themselves justify the proposed view in any ultimate way, of course.

[1] This sounds like moral relativism but has nothing to do with it. We might be utilitarians and agree every being has a unitary welfare weight. But that’s exactly not what we discuss here. We discuss your subjective (‘egoistical’) preference for yourself and, potentially, for the future of what we might or might not call ‘you’.

[2] Fractalideation introduced the sleep-clone-swap thought experiment [LW(p) · GW(p)], and also guessed that it is resolved by whether, for the individual, "stream-of-consciousness continuity" or "substrate continuity" dominates, perfectly in line with the take generalized here.

49 comments

Comments sorted by top scores.

comment by Ape in the coat · 2024-07-29T12:37:20.409Z · LW(p) · GW(p)

I do not feel any less confusion after reading the post.

This sounds like moral relativism but has nothing to do with it.

Oh, it's much worse. It is epistemic relativism. You are saying that there is no one true answer to the question and we are free to trust whatever intuitions we have. And you do not provide any particular reason for this state of affairs.

Well, what do you anticipate experiencing? Something or nothing?

I don't know! That's the whole point. I do not need permission to trust my intuitions; I want to understand which intuitions are trustworthy and which are not. There seems to be a genuine question about what happens and which rules govern it, and you are trying to sidestep it by saying "whatever happens - happens".

I can imagine a universe with such rules that teleportation kills a person and a universe in which it doesn't. I'd like to know how our universe works.

Replies from: skluug, florian-habermacher

↑ comment by Joey KL (skluug) · 2024-07-29T15:27:30.457Z · LW(p) · GW(p)

It is epistemic relativism.

Questions 1 and 3 are explicitly about values, so I don't think they do amount to epistemic relativism.

There seems to be a genuine question about what happens and which rules govern it, and you are trying to sidestep it by saying "whatever happens - happens".

I can imagine a universe with such rules that teleportation kills a person and a universe in which it doesn't. I'd like to know how our universe works.

There seems to be a genuine question here, but it is not at all clear that there actually is one. It is pretty hard to characterize what this question amounts to, i.e. what the difference would be between two worlds where the question has different answers. I take OP to be espousing the view that the question isn't meaningful for this reason (though I do think they could have laid this out more clearly).

Replies from: Ape in the coat

↑ comment by Ape in the coat · 2024-07-30T07:13:34.391Z · LW(p) · GW(p)

Questions 1 and 3 are explicitly about values, so I don't think they do amount to epistemic relativism.

They are formulated as such, but the crux is not about values. People tend to agree that one should care about the successor of one's subjective experience. The question is whether there will be one or not. And this is a question of fact.

There seems to be a genuine question here, but it is not at all clear that there actually is one. It is pretty hard to characterize what this question amounts to, i.e. what the difference would be between two worlds where the question has different answers.

Not really? We can easily do so if there exists some kind of "soul". I can conceptualize a world where a soul always stays tied to the initial body, and as soon as it's destroyed, the soul is destroyed as well. Or where it always goes to a new one if there is such an opportunity, or where it chooses between the two based on some hidden variable, so that to us it appears to be random.

Replies from: skluug, ABlue

↑ comment by Joey KL (skluug) · 2024-07-30T16:04:20.222Z · LW(p) · GW(p)

Say there is a soul. We inspect a teleportation process, and we find that, just like your body and brain, the soul disappears on the transmitter pad, and an identical soul appears on the receiver. What would this tell you that you don't already know?

What, in principle, could demonstrate that two souls are in fact the same soul across time?

Replies from: Ape in the coat

↑ comment by Ape in the coat · 2024-07-31T15:21:32.037Z · LW(p) · GW(p)

By "soul" here I mean a carrier for identity across time. A unique verification code of some sort. So that after we conduct a cloning experiment we can check and see that one person has the same code, while the other has a new code. Likewise, after the teleportation we can check and see whether the teleported person has the same code as before.

It really doesn't seem like our universe works like that, but knowing this doesn't help much to understand how exactly our reality is working.

Replies from: skluug, florian-habermacher

↑ comment by Joey KL (skluug) · 2024-07-31T20:09:53.725Z · LW(p) · GW(p)

What observation could demonstrate that this code indeed corresponded to the metaphysically important sense of continuity across time? What would the difference be between a world where it did and one where it didn't?

Replies from: Ape in the coat

↑ comment by Ape in the coat · 2024-08-01T07:12:46.881Z · LW(p) · GW(p)

Good question.

Consider a simple cloning experiment. You are put to sleep, a clone of you is created, and after awakening you are not sure whether you are the original or the clone. Now consider this modification: after the original and the clone awaken, they are told their code. Then each of them participates in the next iteration of the same experiment, until there are 2^n people, each of whom has participated in n iterations of the experiment.

Before the whole chain of experiments starts, you know your code and that there are 2^n possible paths that your subjective experience can take through this iterated cloning experiment. Only one of these paths will be yours in the end. You go through the whole chain of experiments, and it turns out you have preserved your initial code. Now let's consider two hypotheses: 1) The code does not correspond to your continuity across time; 2) The code does correspond to your continuity across time. Under 1) you've experienced a rare event with probability 1/2^n. Under 2) it was the only possibility. Therefore you update in favor of 2).
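The size of this update can be made concrete with Bayes' rule. The following is only a minimal sketch and not part of the original comment: the function name and the equal prior of 1/2 on each hypothesis are assumptions for illustration, while the likelihoods 1/2^n and 1 are taken from the argument itself.

```python
from fractions import Fraction


def posterior_code_tracks_continuity(n: int, prior: Fraction = Fraction(1, 2)) -> Fraction:
    """Posterior probability of hypothesis 2) after observing that your
    initial code survived n cloning iterations.

    `prior` is the assumed prior probability of hypothesis 2); the default
    of 1/2 is an illustrative assumption, not something the comment states.
    """
    # Under 1) the preserved code is one of 2^n equally likely paths.
    likelihood_h1 = Fraction(1, 2**n)
    # Under 2) keeping your code was the only possibility.
    likelihood_h2 = Fraction(1)
    evidence = prior * likelihood_h2 + (1 - prior) * likelihood_h1
    return prior * likelihood_h2 / evidence


if __name__ == "__main__":
    for n in (1, 5, 10):
        print(n, posterior_code_tracks_continuity(n))
```

With an equal prior, the posterior works out to 2^n / (2^n + 1), so it approaches 1 quickly as the number of iterations grows. A different prior changes the numbers but not the direction of the update.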

Replies from: skluug

↑ comment by Joey KL (skluug) · 2024-08-02T03:07:45.377Z · LW(p) · GW(p)

This is the most interesting answer I've ever gotten to this line of questioning. I will think it over!

↑ comment by FlorianH (florian-habermacher) · 2024-07-31T18:36:34.033Z · LW(p) · GW(p)

The original mistake is that feeling of a "carrier for identity across time" - for which upon closer inspection we find no evidence, and which we thus have to let go of. Once you realize that you can explain all we observe and all you feel with merely, at any given time, your current mind, including its memories, and aspirations for the future, but without any further "carrier for identity", i.e. without any super-material valuable extra soul, there is resolving peace about this question.

Replies from: Richard_Kennaway

↑ comment by Richard_Kennaway · 2024-08-02T11:40:02.402Z · LW(p) · GW(p)

With that outlook, do you still plan for tomorrow? From big things like a career, to small things like getting the groceries in. If you do these things just as assiduously after achieving this "resolving peace" as before, it would not seem to have made any difference and was just a philosophical recreation.

Replies from: florian-habermacher, florian-habermacher

↑ comment by FlorianH (florian-habermacher) · 2024-08-02T17:03:13.480Z · LW(p) · GW(p)

Btw, regarding:

it would not seem to have made any difference and was just a philosophical recreation

Mind, in this discussion about cloning thought experiments I'd find it natural that there are not many currently tangible consequences, even if we did find a satisfying answer to some of the puzzling questions around that topic.

That said, I guess I'm not the only one here with a keen intrinsic interest in understanding the nature of self even absent tangible & direct implications, or if these implications may remain rather subtle at this very moment.

Replies from: Richard_Kennaway

↑ comment by Richard_Kennaway · 2024-08-02T20:56:13.700Z · LW(p) · GW(p)

The answer that satisfies me is that I'll wonder about cloning machines and teleporters when someone actually makes one. 😌

↑ comment by FlorianH (florian-habermacher) · 2024-08-02T16:49:50.576Z · LW(p) · GW(p)

I obviously still care for tomorrow, as is perfectly in line with the theory.

I take you to imply that, under the hypothesis emphasized here about the self not being a unified long-term self the way we tend to imagine, one would have to logically conclude something like: "why care then, even about 'my' own future?!". This is absolutely not implied:

The questions around which we can get "resolving peace" (see context above!) are things like: If someone came along proposing to clone/transmit/... you, what to do? We may of course find peace about that question (which I'd say I have for now) without ceasing to care about our 'natural' successors in standard life.

Note how you can still have particular care for your close kin or so after realizing your preferential care about these is just your personal (or our general cultural) preference w/o meaning you're "unified" with your close kin in any magical way. It is equally all too natural for me to still keep my specific (and excessive) focus & care on the well-being of my 'natural' successors, i.e. on what we traditionally call "my tomorrow's self", even if I realize that we have no hint at anything magical (no persistent super-natural self) linking me to it; it's just my ingrained preference.

Replies from: Richard_Kennaway

↑ comment by Richard_Kennaway · 2024-08-02T20:53:54.936Z · LW(p) · GW(p)

Note how you can still have particular care for your close kin or so after realizing your preferential care about these is just

The word "just" in the sense used here is always a danger sign. "X is just Y" means "X is Y and is not a certain other thing Z", but without stating the Z. What is the Z here? What is the thing beyond brute, unanalysed preference, that you are rejecting here? You have to know what it is to be able to reject it with the words "just" and later "magical", and further on "super-natural". Why is it your preference? In another comment you express a keen interest in understanding the nature of self, yet there is an aversion here to understanding the sources of your preferences.

It is equally all too natural for me to still keep my specific (and excessive) focus & care on the well-being of my 'natural' successors, i.e. on what we traditionally call

Too natural? Excessive focus and care? What we traditionally call? This all sounds to me like you are trying not to know something.

Replies from: florian-habermacher

↑ comment by FlorianH (florian-habermacher) · 2024-08-02T22:03:34.292Z · LW(p) · GW(p)

I'm sorry, but I find you're nitpicking on words out of context rather than engaging with what I mean. Maybe my EN is imperfect, but I think it's not that unreadable:

A)

The word "just" in the sense used here is always a danger sign. "X is just Y" means "X is Y and is not a certain other thing Z", but without stating the Z.

... 'just' might sometimes be used in such abbreviated way, but here, the second part of my very sentence itself readily says what I mean with the 'just' (see "w/o meaning you're ...").

B)

You quoting me: "It is equally all too natural for me to still keep my specific (and excessive) focus & care on the well-being of my 'natural' successors, i.e. on what we traditionally call"

You: Too natural? Excessive focus and care? What we traditionally call? This all sounds to me like you are trying not to know something.

Recall, as I wrote in my comment, I try to support "why care [under my stated views], even about 'my' own future". I try to rephrase the sentence you quote, in a paragraph that avoids the 3 elements you criticize. I hope the meaning becomes clear then:

Evolution has ingrained in my mind a very strong preference to care for the next-period inhabitant(s) X of my body. This deeply ingrained preference to preserve the well-being of X tends to override everything else. So, however much my reflections suggest to me that X is not as unquestionably related to me as I instinctively would have thought before closer examination, I will not be able to give up my commonly observed preferences for doing (mostly) the best for X, in situations where there is no cloning or anything of the like going on.

(you can safely ignore "(and excessive)". With it, I just meant to casually mention also we tend to be too egoistic; our strong specific focus on (or care for) our own body's future is not good for the world overall. But this is quite a separate thing.)

Replies from: Richard_Kennaway

↑ comment by Richard_Kennaway · 2024-08-04T14:21:03.300Z · LW(p) · GW(p)

I didn't mean to be nitpicking, and I believe your words have well expressed your thoughts. But I found it striking that you treat preference as a brick wall that cannot be further questioned (or if you do, all you find behind it is "evolution"), while professing the virtue of an examined self.

In our present-day world I am as sure as I need to be that (barring having a stroke in the night) I am going to wake up tomorrow as me, little changed from today. I would find speculations about teleporters much more interesting if such machines actually existed. My preferences are not limited to my likely remaining lifespan, and the fact that I will not be around to have them then does not mean that I cannot have them and act on them now.

↑ comment by ABlue · 2024-07-30T14:18:23.811Z · LW(p) · GW(p)

People tend to agree that one should care about the successor of one's subjective experience. The question is whether there will be one or not. And this is a question of fact.

But the question of "what, if anything, is the successor of your subjective experience" does not obviously have a single factual answer.

I can conceptualize a world where a soul always stays tied to the initial body, and as soon as it's destroyed, the soul is destroyed as well.

If souls are real (and the Hard Problem boils down to "it's the souls, duh"), then a teleporter that doesn't reattach/reconstruct your soul seems like it doesn't fit the hypothetical. If the teleporter perfectly reassembles you, that should apply to all components of you, even extraphysical ones.

Replies from: Ape in the coat

↑ comment by Ape in the coat · 2024-07-31T15:31:28.942Z · LW(p) · GW(p)

But the question of "what, if anything, is the successor of your subjective experience" does not obviously have a single factual answer.

Then I'd like to see some explanation why it doesn't have an answer, which would be adding back to normality. I understand that I'm confused about the matter in some way. But I also understand that just saying "don't think about it" doesn't clear my confusion in the slightest.

If souls are real (and the Hard Problem boils down to "it's the souls, duh"), then a teleporter that doesn't reattach/reconstruct your soul seems like it doesn't fit the hypothetical. If the teleporter perfectly reassembles you, that should apply to all components of you, even extraphysical ones.

Never mind consciousness and the so-called Hard Problem. By "soul" here I simply mean the carrier for identity over time, which may very well be physical. Yes, indeed it may be the case that a perfect teleporter/cloning machine is just impossible because of such a soul. That would be an appropriate solution to these problems.

Replies from: ABlue

↑ comment by ABlue · 2024-07-31T19:07:32.891Z · LW(p) · GW(p)

Then I'd like to see some explanation why it doesn't have an answer, which would be adding back to normality.

I'm not saying it doesn't, I'm saying it's not obvious that it does. Normalcy requirements don't mean all our possibly-confused questions have answers, they just put restrictions on what those answers should look like. So, if the idea of successors-of-experience is meaningful at all, our normal intuition gives us desiderata like "chains of successorship are continuous across periods of consciousness" and "chains of successorship do not fork or merge with each other under conditions that we currently observe."

If you have any particular notion of successorship that meets all the desiderata you think should matter here, whether or not a teleporter creates a successor is a question of fact. But it's not obvious what the most principled set of desiderata is, and for most sets of desiderata it's probably not obvious whether there is a unique notion of successorship.

OP is advocating for something along the lines of "There is no uniquely-most-principled notion of successorship; the fact that different people have different desiderata, or that some people arbitrarily choose one idea of successorship over another that's just as logical, is a result of normal value differences." There is no epistemic relativism; given any particular person's most valued notion of successorship, everyone can, in principle, agree whether any given situation preserves it.

The relativism is in choosing which (whose) notion to use when making any given decision. Even in a world where souls are real and most people agree that continuity-of-consciousness is equivalent to continuity-of-soul-state, which is preserved by those nifty new teleporters, some curmudgeon who thinks that continuity-of-physical-location is also important shouldn't be forced into a teleporter against their will, since they expect (and all informed observers will agree) that their favored notion of continuity of consciousness will be ended by the teleporter.

↑ comment by FlorianH (florian-habermacher) · 2024-07-29T15:47:27.749Z · LW(p) · GW(p)

Oh, it's much worse. It is epistemic relativism. You are saying that there is no one true answer to the question and we are free to trust whatever intuitions we have. And you do not provide any particular reason for this state of affairs.

Nice challenge! There's no "epistemic relativism" here, even if I see where you're coming from.

First recall the broader altruism analogy: Would you say it's epistemic relativism if I tell you that you can simply look inside yourself and see freely how much you care about, and how closely connected you feel to, people in a faraway country? You surely wouldn't reproach me for that; you surely agree it's your own 'decision' (or intrinsic inclination or so) that decides how much weight or care you personally put on these persons.

Now, remember the core elements I posit. "You" are (i) your mind of right here and now, including (ii) its tendency for deeply felt care & connection to your 'natural' successors, and that's about what there is to be said about you (+ there's memory). From this everything follows. It is evolution that has shaped us to shortcut the standard physical 'continuation' of you in coming periods as a 'unique entity' in our mind, and has made you typically care sort of '100%' about your first few seconds' worth of forthcoming successors [in analogy: Just as nature has shaped you to (usually) care tremendously also for your direct children or siblings]. Now there are (hypothetically) cases where things are so warped and so evolutionarily unusual that you have no clear tastes: that clone or this clone, if you are/are not destroyed in the process/while asleep or not/blabla - all the puzzles we can come up with. For all these cases, you have no clear taste as to which of your 'successors' you care much about and which you don't. In our inner mind's sloppy speak: we don't know "who we'll be". Equally importantly, you may see it one way, and your best friends may see it very differently. And what I'm explaining is that, given the axiom of "you" being you only right here and now, there simply IS no objective truth to be found about who is you later or not, and so there is no objective answer as to how much you ought to care about which of those many clones in all the different situations: it really does only boil down to how much you care about these. As, on a most fundamental level, "you" are only your mind right now.

And if you find you're still wondering about how much to care about which potential clone in which circumstances, it's not the fault of the theory that it does not answer that for you. You're asking the outside world a question that can only be answered inside you. The same way that, again, I cannot tell you how much you feel (or should feel) for third person x.

I for sure can tell you you ought to behaviorally care more from a moral perspective, and there I might use a specific rule that attributes each conscious clone an equal weight or so, and in that domain you could complain if I don't give you a clear answer. But that's exactly not what the discussion here is about.

I can imagine a universe with such rules that teleportation kills a person and a universe in which it doesn't. I'd like to know how our universe works.

I propose a specific "self" is a specific mind at a given moment. The usual-speak "killing" X and the relevant harm associated with it means preventing X's natural successors, about whom X cares so deeply, from coming into existence. If X cares about his physical-direct-body successors only, disintegrating and teleporting him means we destroy all he cared for, we prevent all he wanted to happen from happening, we have so-to-say killed him, as we prevented his successors from coming to life. If he looked forward to a nice trip to Mars where he is to be teleported to, there's no reason to think we 'killed' anyone in any meaningful sense, as "he"'s a happy space traveller finding 'himself' (well, his successors..) doing just the stuff he anticipated for them to be doing. There's nothing more objective to be said about our universe 'functioning' this or that way. As any self is only ephemeral, and a person is a succession of instantaneous selves linked to one another with memory and with forward-looking preferences, it really is these own preferences that matter for the decision, no outside 'fact' about the universe.

comment by JBlack · 2024-07-29T04:08:12.708Z · LW(p) · GW(p)

When it comes to questions like whether you "should" consider destructive uploading, it seems to me that it depends upon what the alternatives are, not just a position on personal identity.

If the only viable alternative is dying anyway in a short or horrible time and the future belongs only to entities that do not behave based on my memories, personality, beliefs, and values then I might consider uploading even in the case where that seems like suicide to the physical me. Having some expectation of personally experiencing being that entity is a bonus, but not entirely necessary.

Conversely if my expected lifespan is otherwise long and likely to be fairly good then I may decline destructive uploading even if I'm very confident (somehow?) in personally experiencing being that upload and it seems likely that the upload would on median have a better life. For one thing, people may devise non-destructive uploading later. For another, uploads seem more vulnerable to future s-risks or major changes in things that I currently consider part of my core identity.

Even non-destructive uploading might not be that attractive if it's very expensive or otherwise onerous on the physical me, or likely to result in the upload having a poor quality of life or being very much not-me in measurable ways.

It seems extremely likely that the uploads would believe (or behave as if they believe, in the hypothetical where they're not conscious beings) in continuity of personal identity across uploading.

It also seems like an adaptive belief even if false as it allows strictly more options for agents that hold it than for those that don't.

Replies from: florian-habermacher

↑ comment by FlorianH (florian-habermacher) · 2024-07-29T09:15:45.333Z · LW(p) · GW(p)

All agreeable. Note, this is perfectly compatible with the relativity theory I propose, i.e. with the 'should' being entirely up to your intuition only. And, actually, the relativity theory, I'd argue, is the only way to settle debates you invoke, or, say, to give you peace of mind when facing these risky uploading situations.

Say you can destructively upload overnight: with 100% reliability your digital clone will be in a nicely replicated digital world for 80 years (let's for simplicity assume for now the uploadee can be expected to be a consciousness comparable to us at all), while 'you' might otherwise be killed overnight with x% probability. Think of x as a concrete number, say 50%. For that x, will you want to upload?

I'm pretty certain that (i) you have no clear-cut answer as to the threshold x% from which on you'd prefer to upload (although some might have a value of roughly 100%), and, clearly, (ii) that threshold x% would vary a lot across persons.

Who can say? Only my relativity theory: There is no objective answer, from your self-regarding perspective.

Just as it's your intrinsic taste that determines how much, or whether at all, you care about the faraway poor, or the not so faraway not so poor, or about anyone really: it's a matter of your taste and nothing else. If you're right now imagining going from you to inside the machine, and feel like that's simply you being you there w/o much dread and no worries, looking forward to that being - or sequence of beings - 'living' with near certainty another 80 years, then yes, you're right, go for it if the physical killing probability x% is more than a few %. After all, there will be that being in the machine, and for all intents and purposes you might call it 'you' in sloppy speak. If instead you dread the future sequence of your physical you being destroyed and 'only' replaced by what feels like 'obviously a non-equivalent future entity that merely has copied traits, even if it behaves just as if it was the future you', then you're right to refuse the upload for any x% not close enough to 100%. It really is relative. You are only the current you, including weights-of-care for different potential future successors, and there's no outside authority to tell you which weights are right or wrong.

comment by Fractalideation · 2024-08-01T23:28:53.549Z · LW(p) · GW(p)

I widely subscribe to the OP's point of view.

(loving that the sleep-clone-swap thought experiment I described in my comment [LW(p) · GW(p)] to Rob Bensinger's post inspired you!)

The level of discontinuity at which each person will consider a future entity/person/mind/self to still be the rightful continuation of a present entity/person/mind/self will vary according to their own present subjective feelings/opinions/points-of-view/experiences/intuitions/thoughts/theories/interpretations/preferences/resolutions about it.

This is really Ship of Theseus paradox territory.

For example, the theory/resolution that I would personally (currently) widely subscribe to is:

"Temporal parts theory", quoting Wikipedia: "Another common theory put forth by David Lewis is to divide up all objects into three-dimensional time-slices which are temporally distinct, which avoids the issue that the two different ships exist in the same space at one time and a different space at another time by considering the objects to be distinct from each other at all points in time."

Some other people in the comments I think would be closer to this other theory/resolution:

"Continued identity theory":

"This solution (proposed by Kate, Ernest et al.) sees an object as staying the same as long as it continuously exists under the same identity without being fully transformed at one time. For instance, a house that has its front wall destroyed and replaced at year 1, the ceiling replaced at year 2, and so on, until every part of the house has been replaced will still be understood as the same house. However, if every wall, the floor, and the roof are destroyed and replaced at the same time, it will be known as a new house."

There are many other possible theories/resolutions.

Including the OP's "relativity theory", which if applied to the Ship of Theseus I guess would be something like: "Assuming you care a lot about the original Ship of Theseus, once all its components have been progressively and completely replaced, how much will you still care about it?".

Basically different people have different subjective feelings/opinions/points-of-view/experiences/intuitions/thoughts/theories/interpretations/preferences/resolutions about entity/person/mind/self continuity and that's ok.

I personally find (at least for now) the "temporal parts theory" interpretation/resolution quite satisfying but I also very much like the OP's "relativity theory" and some other theories too!

And like the OP I would say: each to their own (and you are free to change your preference of theory whenever you feel like)

Replies from: florian-habermacher↑ comment by FlorianH (florian-habermacher) · 2024-08-02T18:34:09.809Z · LW(p) · GW(p)

Thanks! In particular also for your more-kind-than-warranted hint at your original w/o accusing me of theft!! Especially as I now realize (or maybe realize again) that your sleep-clone-swap example, which I indeed love as a perfectly concise illustration, had also come along with at least an "I guess"-caveated "it is subjective", which in some sense already includes a core part of the conclusion/claim here.

I should have also picked up your 'stream-of-consciousness continuity' vs. 'substrate/matter continuity' terminology. Finally, the Ship of Theseus question thus, with "Temporal parts" vs. "Continued identity" would also be good links, although I guess I'd be spontaneously inclined to dismiss part of the discussion of these as questions 'merely of definition' - just until we get to the question of the mind/consciousness, where it seems to me indeed to become more fundamentally relevant (although, maybe, and ironically, after relegating the idea of a magical persistent self, maybe one could say, also here in the end it becomes slightly closer to that 'merely question of definition/preference' domain).

Btw, I'll now take your own link-to-comment and add it to my post - thanks if you can let me know where I can create such links; I remembered looking and not finding it ANYWHERE even on your own LW profile page.

Replies from: Fractalideation↑ comment by Fractalideation · 2024-08-03T10:57:40.190Z · LW(p) · GW(p)

Aaw no problem at all Florian, I genuinely simply enjoyed you mentioning that sleep-clone-swap thought experiment and truly wasn't bothered at all by anything about it, thank you so much for your very interesting and kind words and your citation and link in your article, wow I am blushing now!

And thank you so much for that great post of yours and taking the time to thoroughly answer so many comments (including mine!) that is so kind of you and makes for such an interesting thread about this topic of entity/person/mind/consciousness/self continuity/discontinuity which is quite fascinating!

And in my humble opinion it indeed has a lot to do with questions of definitions/preferences, but in any case it is always interesting to read/hear eloquent/well-spoken words about this topic, thank you so much again for that!

About creating link-to-comment, I think one way to do it is to click on the time indicator next to the author name at the top of the comment then copy that link/URL.

comment by Viliam · 2024-07-29T08:15:36.293Z · LW(p) · GW(p)

In a society with destructive teleportation, it would probably feel weird for the first time, but I expect that after a few teleports (probably right after the first one) most people would switch to anticipating future experiences.

Then they would teach their kids that this is the obviously correct answer, and feeling otherwise would become socially inappropriate.

Replies from: florian-habermacher↑ comment by FlorianH (florian-habermacher) · 2024-07-29T09:24:55.675Z · LW(p) · GW(p)

Yep.

And the point is, the exceptional one refusing, saying "this won't be me, I dread the future me* being killed and replaced by that one", is not objectively wrong. It might quickly become highly impractical for 'him'** not to follow the trend, but if his 'self'-empathy is focused only on his own direct physical successors, it is in some sense actually killing him if we put him in the machine. We kill him, and we create a person that's not him in the relevant sense, as he's currently not accepting the successor; if his empathic weight is 100% on his own direct physical successor and not the clone, we roughly 100% kill him in the relevant sense of taking away the one future life he cares about.

*'me being destroyed' here in sloppy speak; it's the successor he considers his natural successor which he cares about.

**'him' and his natural successors as he sees it.

comment by TAG · 2024-07-30T17:40:34.015Z · LW(p) · GW(p)

Care however it occurs to you!

Good decisions need to be based on correct beliefs as well as values.

Well, what do you anticipate experiencing? Something or nothing? You anticipate whatever you do anticipate and that’s all there is to know—there’s no “should” here

Why not? If there is some discernable fact of the matter about how personal continuity works, that epistemically-should constrain your expectations. Aside from any ethically-should issues.

What we must not do, is insist on reaching a universal, ‘objective’ truth about it.

Why not?

The current “me” is precisely my current mind at this exact moment—nothing more, nothing less.

Is it?

There's a theory that personal identity is only ever instantaneous...an "observer-moment"... such that as an objective fact, you have no successors. I don't know whether you believe it. If it's true, you epistemically-should believe it, but you don't seem to believe in epistemic norms.

There's another, locally popular, theory that the continuity of personal identity is only about what you care about. (It either just is that, or it needs to be simplified to that...it's not clear which). But it's still irrational to care about things that aren't real...you shouldn't care about collecting unicorns...so if there is some fact of the matter that you don't survive destructive teleportation, you shouldn't go for it, irrespective of your values.

Replies from: florian-habermacher↑ comment by FlorianH (florian-habermacher) · 2024-07-30T21:39:21.539Z · LW(p) · GW(p)

Good decisions need to be based on correct beliefs as well as values.

Yes, but here the right belief is the realization that what connects you to what we traditionally called your future "self", is nothing supernatural i.e. no super-material unified continuous self of extra value: we don't have any hint at such stuff; too well we can explain your feeling about such things as fancy brain instincts akin to seeing the objects in the 24FPS movie as 'moving' (not to say 'alive'); and too well we know we could theoretically make you feel you've experienced your past as a continuous self while you were just nano-assembled a microsecond ago with exactly the right memory inducing this belief/'feeling'. So due to the absence of this extra "self": "You" are simply this instant's mind we currently observe from you. Now, crucially, this mind has, obviously, a certain regard, hopes, plans, for, in essence, what happens with your natural successor. In the natural world, it turns out to be perfectly predictable from the outside, who this natural successor is: your own body.

In situations like those imagined with cloning thought experiments instead, it suddenly is less obvious from the outside, whom you'll consider your most dearly cared for 'natural' (or now less obviously 'natural') successor. But as the only thing that in reality connects you with what we traditionally would have called "your future self", is your own particular preferences/hopes/cares for that elected future mind, there is no objective rule to tell you from outside, which one you have to consider the relevant future mind. The relevant one is the one you find relevant. This is very analogous to, say, being in love: the one 'relevant' person in a room for you to save first in a fire (if you're egoistic about you and your loved one) is the one you (your brain instinct, your hormones, or whatever) picked; you don't have to ask anyone outside about whom that should be.

so if there is some fact of the matter that you don't survive destructive teleportation, you shouldn't go for it, irrespective of your values

The traditional notion of "survival", as in invoking a continuous integrated "self" over and above the succession of individual ephemeral minds with forward-looking preferences, must be put into perspective, just like the notion of that long-term "self" itself.

There's a theory that personal identity is only ever instantaneous...an "observer-moment"... such that as an objective fact, you have no successors. I don't know whether you believe it. If it's true, you epistemically-should believe it, but you don't seem to believe in epistemic norms.

There's another, locally popular, theory that the continuity of personal identity is only about what you care about. (It either just is that, or it needs to be simplified to that...it's not clear which). But it's still irrational to care about things that aren't real...you shouldn't care about collecting unicorns...so if there is some fact of the matter that you don't survive destructive teleportation, you shouldn't go for it, irrespective of your values.

Thanks. I'd be keen to read more on this if you have links. I've wondered to which degree the relegation of the "self" I'm proposing (or that may have been proposed in a similar way in Rob Bensinger's post and maybe before) is related to what we always hear about 'no self' from the more meditative crowd, though I'm not sure there's a link there at all. But I'd be keen to read of people who have proposed theoretical things in a similar direction.

There's a theory that personal identity is only ever instantaneous...an "observer-moment"... such that as an objective fact, you have no successors. I don't know whether you believe it.

On the one hand, 'No [third-party] objective successor' makes sense. On the other hand: I'm still so strongly programmed to absolutely want to preserve my 'natural' [unobjective but engrained in my brain..] successors, that the lack of 'outside-objective' successor doesn't impact me much.[1]

- ^

I think a simple analogy here, for which we can remain with the traditional view of self, is: Objectively, there's no reason I should care about myself so much, or about my close ones; my basic moral theory would ask me to be a bit less kind to myself and kinder to others, but given my wiring I just don't manage to behave so perfectly.

↑ comment by TAG · 2024-08-06T12:04:23.568Z · LW(p) · GW(p)

Yes, but here the right belief is the realization that what connects you to what we traditionally called your future “self”, is nothing supernatural

As before [LW(p) · GW(p)], merely rejecting the supernatural doesn't give you a single correct theory, mainly because it doesn't give you a single theory. There are many more than two non-soul theories of personal identity (and the one Bensinger was assuming isn't the one you are assuming).

i.e. no super-material unified continuous self of extra value:

That's a flurry of claims. One of the alternatives to the momentary theory of personal identity is the theory that a person is a world-line, a 4D structure -- and that's a materialistic theory.

we don’t have any hint at such stuff;

Perhaps we have no evidence of something with all those properties, but we don't need something with all those properties to supply one alternative. Bensinger's computationalism is also non-magical (etc.).

So due to the absence of this extra “self”: “You” are simply this instant’s mind we currently observe from you.

Again, the theory of momentary identity isn't right just because soul theory is wrong.

But as the only thing that in reality connects you with what we traditionally would have called “your future self”, is your own particular preferences/hopes/

No, since I have never been destructively transported, I am also connected by material continuity. You can hardly call that supernatural!

In the natural world, it turns out to be perfectly predictable from the outside, who this natural successor is: your own body.

Great. So it isn't all about my values. It's possible for me to align my subjective sense of identity with objective data.

Replies from: florian-habermacher↑ comment by FlorianH (florian-habermacher) · 2024-08-06T15:52:08.767Z · LW(p) · GW(p)

Core claim in my post is that the 'instantaneous' mind (with its preferences etc., see post) is - if we look closely and don't forget to keep a healthy dose of skepticism about our intuitions about our own mind/self - sufficient to make sense of what we actually observe. And given this instantaneous mind with its memories and preferences is stuff we can most directly observe without much surprise in it, I struggle to find any competing theories as simple or 'simpler' and therefore more compelling (Occam's razor), as I meant to explain in the post.

As I make very clear in the post, nothing in this suggests other theories are impossible. For everything there can of course be (infinitely) many alternative theories available to explain it. I maintain the one I propose has a particular virtue of simplicity.

Regarding computationalism: I'm not sure whether you meant a very specific 'flavor' of computationalism in your comment; but for sure I did not mean to exclude computationalist explanations in general; in fact I've defended some strong computationalist position in the past and see what I propose here to be readily applicable to it.

Replies from: TAG↑ comment by TAG · 2024-08-14T19:54:27.537Z · LW(p) · GW(p)

the ‘instantaneous’ mind (with its preferences etc., see post) is - if we look closely and don’t forget to keep a healthy dose of skepticism about our intuitions about our own mind/self - sufficient to make sense of what we actually observe

Huh? If you mean my future observations, then you are assuming a future self, and therefore a temporally extended self. If you mean my present observations, then they include memories of past observations.

in fact I’ve defended some strong computationalist position in the past

But a computation is a series of steps over time, so it is temporally extended.

comment by JBlack · 2024-07-29T06:48:56.382Z · LW(p) · GW(p)

A thought experiment: Suppose that in some universe, continuity of self is exactly continuity of bodily consciousness. When your body sleeps, you die never to experience anything ever again. A new person comes into existence when the body awakens with your memories, personality, etc. (Except maybe for a few odd dream memories that mostly fade quickly)

Does it actually mean anything to say "a new person comes into existence when the body awakens with your memories, personality, etc."? Presumably this would mean that if you are expecting to go to sleep, then you expect to have no further experiences after that. But that seems to be begging the question: who are you? Someone experiences life-after-sleep. In every determinable way, including their own internal experiences, that person will be you. If you expected to die soon after you closed your eyes, that person remembers expecting to die but actually continuing on. Pretty much everyone in the society remembers "continuing on" many thousands of times.

Is expecting to die as soon as you sleep a rational belief in such a universe?

Replies from: lahwran, florian-habermacher, Viliam↑ comment by the gears to ascension (lahwran) · 2024-07-29T18:58:00.731Z · LW(p) · GW(p)

[edit: pinned to profile]

"Hard" problem

That seems to rely on answering the "hard problem of consciousness" (or as I prefer, "problem of first-person something-rather-than-nothing") with an answer like, "the integrated awareness is what gets instantiated by metaphysics".

That seems weird as heck to me. It makes more sense for first-person-something-rather-than-nothing question to be answered by "the individual perspectives of causal nodes (interacting particles' wavefunctions, or whatever else interacts in spatially local ways) in the universe's equations are what gets Instantiated™ As Real® by metaphysics".

(by metaphysics here I just mean ~that-which-can-exist, or ~the-root-node-of-all-possibility; eg this is the thing solomonoff induction tries to model by assuming the root-node-of-all-possibility contains only halting programs, or tegmark 4 tries to model as some mumble mumble blurrier version of solomonoff or something (I don't quite grok tegmark 4); I mean the root node of the entire multiverse of all things which existed at "the beginning", the most origin-y origin. the thing where, when we're surprised there's something rather than nothing, we're surprised that this thing isn't just an empty set.)

If we assume my belief about how to resolve this philosophical confusion is correct, then we cannot construct a description of a hypothetical universe that could have been among those truly instantiated as physically real in the multiverse, and yet also have this property where the hard-problem "first-person-something-rather-than-nothing" can disappear over some timesteps but not others. Instead, everything humans appear to have preferences about relating to death becomes about the so called easy problem, the question of why the many first-person-something-rather-than-nothings of the particles of our brain are able to sustain an integrated awareness. Perhaps that integrated awareness comes and goes, eg with sleep! It seems to me to be what all interesting research on consciousness is about. But I think that either, a new first-person-something-rather-than-nothing-sense-of-Consciousness is allocated to all the particles of the whole universe in every infinitesimal time slice that the universe in question's true laws permit; or, that first-person-something-rather-than-nothing is conserved over time. So I don't worry too much about losing the hard-problem consciousness, as I generally believe it's just "being made of physical stuff which Actually Exists in a privileged sense".

The thing is, this answer to the hard problem of consciousness has kind of weird results relating to eating food. Because it means eating food is a form of uploading! you transfer your chemical processes to a new chunk of matter, and a previous chunk of matter is aggregated as waste product. That waste product was previously part of you, and if every particle has a discrete first-person-something-rather-than-nothing which is conserved, then when you eat food you are "waking up" previously sleeping matter, and the waste matter goes to sleep, forgetting near everything about you into thermal noise!

"Easy" problem

So there's still an interesting problem to resolve - and in fact what I've said resolves almost nothing; it only answers camp #2 [LW · GW], providing what I hope is an argument for why they should become primarily interested in camp #1 [LW · GW]. In camp #1 [LW · GW] terms, we can discuss information theory or causal properties about whether the information or causal chain that makes up the things those first-person-perspective-units ie particles are information theoretically "aware of" or "know" things about their environment; we can ask causal questions - eg, "is my red your red?" can instead be "assume my red is your red if there is no experiment which can distinguish them, so can we find such an experiment?" - in which case, I don't worry about losing even the camp #1 form of selfhood-consciousness from sleep, because my brain is overwhelmingly unchanged from sleep and stopping activations and whole-brain synchronization of state doesn't mean it can't be restarted.

It's still possible that every point in spacetime has a separate first-person-something-rather-than-nothing-"consciousness"/"existence", in which case maybe actually even causally identical shapes of particles/physical stuff in my brain which are my neurons representing "a perception of red in the center of my visual field in the past 100ms" are a different qualia than the same ones at a different infinitesimal timestep, or are different qualia than if the exact same shape of particles occurred in your brain. But it seems even less possible to get traction on that metaphysical question than on the question of the origin of first-person-something-rather-than-nothing, and since I don't know of there being any great answers to something-rather-than-nothing, I figure we probably won't ever be able to know. (Also, our neurons for red are, in fact, slightly different. I expect the practical difference is small.)

But that either doesn't resolve or at least partially backs OP's point, about timesteps/timeslices already potentially being different selves in some strong sense, due to ~lack of causal access across time, or so. Since the thing I'm proposing also says non-interacting particles in equilibrium have an inactive-yet-still-real first-person-something-rather-than-nothing, then even rocks or whatever you're on top of right now or your keyboard keys carry the bare-fact-of-existence, and so my preference for not dying can't be about the particles making me up continuing to exist - they cannot be destroyed, thanks to conservation laws of the universe, only rearranged - and my preference is instead about the integrated awareness of all of these particles, where they are shaped and moving in patterns which are working together in a synchronized, evolution-refined, self-regenerating dance we call "being alive". And so it's perfectly true that the matter that makes me up can implement any preference about what successor shapes are valid.

Unwantable preferences?

On the other hand, to disagree with OP a bit, I think there's more objective truth to the matter about what humans prefer than that. Evolution should create very robust preferences for some kinds of thing, such as having some sort of successor state which is still able to maintain autopoiesis. I think it's actually so highly evolutionarily unfit to not want that that it's almost unwantable for an evolved being to not want there to be some informationally related autopoietic patterns continuing in the future.

Eg, consider suicide - even suicidal people would be horrified by the idea that all humans would die if they died, and I suspect that suicide is an (incredibly high cost, please avoid it if at all possible!) adaptation that has been preserved because there are very rare cases where it can increase the inclusive fitness of a group the organism arose from (but I generally believe it almost never is a best strategy, so if anyone reads this who is thinking about it, please be aware I think it's a terribly high cost way to solve whatever problem makes it come to mind, and there are almost certainly tractable better options - poke me if a nerd like me can ever give useful input); but I bring it up because it means, while you can maybe consider the rest of humanity or life on earth to be not sufficiently "you" in an information theory sense that dying suddenly becomes fine, it seems to me to be at least one important reason that suicide is ever acceptable to anyone at all; if they knew they were the last organism I feel like even a maximally suicidal person would want to stick it out for as long as possible, because if all other life forms are dead they'd want to preserve the last gasp of the legacy of life? idk.

But yeah, the only constraints on what you want are what physics permits matter to encode and what you already want. You probably can't just decide to want any old thing, because you already want something different than that. Other than that objection, I think I basically agree with OP.

↑ comment by FlorianH (florian-habermacher) · 2024-07-29T10:00:23.286Z · LW(p) · GW(p)

A very interesting question to me, coming from the perspective I outline in the post - sorry, a somewhat lengthy answer again:

According to the basic take from the post, we're actually +- in your universe, except that the self is even more ephemeral than you posit. And as I argue, it's relative, i.e. up to you, which future self you end up caring about in any nontrivial experiment.

Trying to re-frame your experiment from that background as best as I can, I imagine a person having an inclination to think of 'herself' (in sloppy speak; more precisely: she cares about..) as (i) her now, plus (ii) her natural successors, as which she, however, qualifies only those that carry the immediate succession of her currently active thoughts before she falls asleep. Maybe some weird genetic or cultural tweak or drug in her brain has made her - or maybe all of us in that universe - like that. So:

Is expecting to die as soon as you sleep a rational belief in such a universe?

I'd not call it 'belief' but simply a preference, and a basic preference is not rational or irrational. She may simply not care about the future succession of selves coming out at the other end of her sleep, and that 'not caring' is not objectively faulty. It's a matter of taste, of her own preferences. Of course, we may have good reasons to speculate that it's evolutionarily more adaptive to have different preferences - and that's why we do usually have them indeed - but we're wrong to call her misguided; evolution is no authority. From a utilitarian perspective we might even try to tweak her behavior, in order for her to become a convenient caretaker for her natural next-day successors, as from our perspective they're simply usual, valuable beings. But it's still not that we'd be more objectively right than her when she says she has no particular attachment for the future beings inhabiting what momentarily is 'her' body.

↑ comment by Viliam · 2024-07-29T08:12:13.914Z · LW(p) · GW(p)

When your body sleeps, you die never to experience anything ever again.

This is an infohazard that can fuck up your sleep cycle. :D

Pretty much everyone in the society remembers "continuing on" many thousands of times.

Many people probably also remember that their previous selves remembered something they no longer remember, or felt enthusiastic about something they can't find motivation to do. So there is some evidence in both directions.

comment by avturchin · 2024-07-29T09:43:09.127Z · LW(p) · GW(p)

Anticipation can be formalized as an ability to execute a secret plan. I can ask: if I generate a plan like "jump 5 times and sing A", will my future upload execute this plan? Some uploads will execute it, depending on how the upload is created. Thus I can continue planning for them.

Replies from: florian-habermacher↑ comment by FlorianH (florian-habermacher) · 2024-07-30T21:43:53.514Z · LW(p) · GW(p)

The upload +- by definition inherits your secret plan and will thus do your jumps.

comment by Richard_Kennaway · 2024-07-29T06:48:54.085Z · LW(p) · GW(p)

Well, what do you anticipate experiencing? Something or nothing? You anticipate whatever you do anticipate and that’s all there is to know

There is something more to know: whether your anticipation will be borne out when the thing comes to pass. That is what an anticipation is.

Replies from: JBlack, florian-habermacher↑ comment by JBlack · 2024-07-29T07:14:31.391Z · LW(p) · GW(p)

Not in this case.

a) If you anticipated continuity of experience into upload and were right, then you experience being an upload and remember being you and you believe that your prediction is borne out.

b) If you were wrong and the upload is conscious but isn't you, then you're dead and nothing is borne out to you. The upload experiences being an upload and remembers being you and believes that your prediction is borne out.

c) If you were wrong and the upload is not conscious, then you're dead and nothing is borne out to you. Nothing is borne out to the upload either, since it was never able to experience anything being borne out or not. The upload unconsciously mimics everything you would have done if your prediction had been borne out.

Everyone else sees you continuing as you would have done if your prediction had been borne out.

So in all cases, everyone able to experience anything notices that your prediction is borne out.

The same is true if you had predicted (b).

The only case where there is a difference is if you predicted (c). If (a) or (b) was true then someone experiences you being wrong; whether or not that person is you is impossible to determine. If you're right then the upload still behaves as if you were wrong. Everyone else's experience is consistent with your prediction being borne out. Or not borne out, since they predict the same things from everyone else's point of view.

Replies from: Richard_Kennaway↑ comment by Richard_Kennaway · 2024-07-29T07:40:33.598Z · LW(p) · GW(p)

I do not derive from this the equanimity that you do. It matters to me whether I go on existing, and the fact that I won't be around to know if I don't does not for me resolve the question of whether to undergo destructive uploading.

Replies from: clone of saturn↑ comment by clone of saturn · 2024-07-29T09:20:08.455Z · LW(p) · GW(p)

Note that the continuity you feel is strictly backwards-looking; we have no way to call up the you of a year ago to confirm that he still agrees that he's continuous with the you of now. In fact, he is dead, having been destructively transformed into the you of now. So what makes one destructive transformation different from another, as long as the resulting being continues believing he is you?

Replies from: Richard_Kennaway↑ comment by Richard_Kennaway · 2024-07-29T09:37:23.452Z · LW(p) · GW(p)

The desk in front of me is not being continuously destroyed and replaced by a replica. Neither am I. That is the difference between my ordinary passage through time and these (hypothetical, speculative, and not necessarily even possible) scenarios.

↑ comment by FlorianH (florian-habermacher) · 2024-07-29T08:47:39.404Z · LW(p) · GW(p)

The point is, "you" are exactly the following and nothing else: You're (i) your mind right now, (ii) including its memory, and (iii) its forward-looking care, hopes, dreams for, in particular, its 'natural' successor. Now, in usual situations, the 'natural successor' is obvious, and you cannot even think of anything else: it's the future minds that inhabit your body, your brain, that's why you tend to call the whole series a unified 'you' in common speak.

Now, with cloning, if you absolutely care for a particular clone, then, for every purpose, you can extend that common speak to the cloning situation, if you want, and say my 'anticipation will be borne out'/'I'll experience...'. But, crucially, note that you do NOT NEED to; in fact, it's sloppy speak. These are in fact separate future units, just tied in various (more or less 'natural') ways to you, which offers vagueness, and choice. Evolution leaves you dumbfounded about it, as there is, strictly speaking, no 'natural' successor anymore. Natural has become vague. It'll depend on how you see it.

Crucially, there will be two persons, say after a 'usual' cloning, and you may 'see yourself' - in sloppy speak - in either of these two. But it's just a matter of perspective. Strictly speaking, again, you're you right now, and your anticipation of one or the other future person.

It's a bit like how evolution makes you confused about how much you care for strangers. Do you go to a philosopher to ask how much you want to give to the faraway poor? No! You have your inner degree of compassion for them, it may change at any time, and it's not wrong or right.[1]

- ^

Of course, on another level, from a utilitarian perspective, I'd love you to love these faraway beings more, and it's not okay that we screw up the world because of not caring, but that's a separate point.

↑ comment by Richard_Kennaway · 2024-07-29T09:40:32.594Z · LW(p) · GW(p)

My reply to clone of saturn [LW(p) · GW(p)] applies here also.

The point is, "you" are exactly the following and nothing else: You're (i) your mind right now, (ii) including its memory, and (iii) its forward-looking care, hopes, dreams for, in particular, its 'natural' successor.

You have mentally sliced the thing up in this way, but reality does not contain any such divisions. My left hand yesterday and my left hand today are just as connected as my left hand and my right hand.

Replies from: florian-habermacher↑ comment by FlorianH (florian-habermacher) · 2024-07-29T10:19:10.297Z · LW(p) · GW(p)

As I write, call it a play on words; a question of naming terms - if you will. But then - and this is just a proposition plus a hypothesis - try to provide a reasonable way to objectively define what one 'ought' to care about in cloning scenarios; and contemplate all sorts of traditionally puzzling thought experiments about neuron replacements and what have you, and you'll inevitably end up with hand-waving, stating arbitrary rules that may seem to work (for many, anyhow) in one thought experiment, just to be blatantly broken by the next experiment... Do that enough and get bored and give up - or, 'realize', eventually, maybe: There is simply not much left of the idea of a unified and continuous, 'objectively' traceable self. There's a mind here and now and, yes of course, it absolutely tends to care about what it deems to be its 'natural' successors in any given scenario. And this care is so strong, it feels as if these successors were one entire, inseparable thing, and so it's not a surprise we cannot fathom there are divisions.

Replies from: Richard_Kennaway↑ comment by Richard_Kennaway · 2024-07-29T15:34:38.779Z · LW(p) · GW(p)

What I give up on is the outré thought experiments, not my own observation of myself that I am a unified, continuous being. A changeable being, and one made of parts working together, but not a pile of dust.

A long time ago I regularly worked at a computer terminal where if you hit backspace 6 times in a row, the computer would crash. So you tried to avoid doing that. Clever arguments that crash your brain, likewise.

comment by FlorianH (florian-habermacher) · 2025-02-27T00:16:54.764Z · LW(p) · GW(p)

Just found proof! Look at the beautiful parallel, in Vipassana according to MCTB2 (or audio) by Daniel Ingram:

[..] dangerous term “mind”, [..] it cannot be located. I’m certainly not talking about the brain, which we have never experienced, since the standard for insight practices is what we can directly experience. As an old Zen monk once said to a group of us in his extremely thick Japanese accent, “Some people say there is mind. I say there is no mind, but never mind! Heh, heh, heh!” However, I will use this dangerous term “mind” often, or even worse “our mind”, but just remember when you read it that I have no choice but to use conventional language, and that in fact there are only utterly transient mental sensations. Truly, there is no stable, unitary, discrete entity called “mind” that can be located! By doing insight practices, we can fully understand and appreciate this. If you can do this, we’ll get along just fine. Each one of these sensations [..] arises and vanishes completely before another begins [..]. This means that the instant you have experienced something, you can know that it isn’t there anymore, and whatever is there is a new sensation that will be gone in an instant.

Ok, this may prove nothing at all, and I haven't even (yet) personally started trying to mentally observe what's told in that quote, but I must say, on a purely intellectual level, this makes absolutely perfect sense to me exactly from the thoughts I hoped to convey in the post.

(Not the first time I have the impression that some particular elements of the deep observations that meditators, e.g. Sam Harris, explain can actually be grasped intellectually - but maybe only intellectually, maybe exactly not intuitively - by rather pure reasoning about the brain and some of its workings, or with some thought experiments. But in the above, I find the fit particularly good between my 'theoretical' post and the seeming insights from practice.)

comment by green_leaf · 2024-07-31T16:44:56.687Z · LW(p) · GW(p)

The central error of this post lies in the belief that we don't persist over time. All other mistakes follow from this one.