I don't think MIRI "gave up"

post by Raemon · 2023-02-03T00:26:07.552Z · LW · GW · 64 comments

Disclaimer: I haven't run this by Nate or Eliezer, if they think it mischaracterizes them, whoops.

I have seen many people assume MIRI (or Eliezer) ((or Nate?)) has "given up", and has been telling other people to give up.

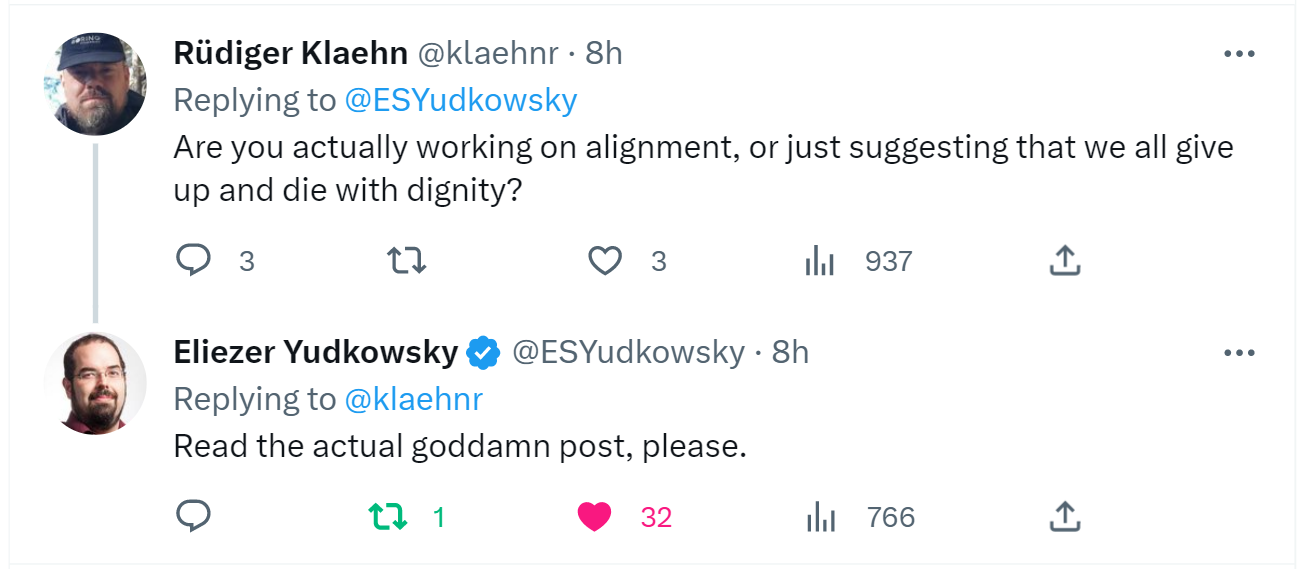

On one hand: if you're only half paying attention, and skimmed a post called "Death With Dignity", I think this is a kinda reasonable impression to have ended up with. I largely blame Eliezer for choosing a phrase which returns "support for assisted suicide" when you google it.

But, I think if you read the post in detail, it's not at all an accurate summary of what happened, and I've heard people say this who I feel like should have read the post closely enough to know better.

Eliezer and "Death With Dignity"

Q1: Does 'dying with dignity' in this context mean accepting the certainty of your death, and not childishly regretting that or trying to fight a hopeless battle?

Don't be ridiculous. How would that increase the log odds of Earth's survival?

The whole point of the post is to be a psychological framework for actually doing useful work that increases humanity's long log odds of survival. "Giving Up" clearly doesn't do that.

[left in typo that was Too Real]

[edited to add] Eliezer does go on to say:

That said, I fought hardest while it looked like we were in the more sloped region of the logistic success curve, when our survival probability seemed more around the 50% range; I borrowed against my future to do that, and burned myself out to some degree. That was a deliberate choice, which I don't regret now; it was worth trying, I would not have wanted to die having not tried, I would not have wanted Earth to die without anyone having tried. But yeah, I am taking some time partways off, and trying a little less hard, now. I've earned a lot of dignity already; and if the world is ending anyways and I can't stop it, I can afford to be a little kind to myself about that.
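For readers unfamiliar with the "log odds" and "logistic success curve" framing in that quote, here is a minimal sketch of the arithmetic. It is my own illustration, not something from Eliezer's post: it assumes survival probability is a logistic function of an abstract log-odds score, and the function names (`log_odds`, `probability`, `gain_from_one_nat`) are hypothetical. Under that assumption the slope of the curve is p(1 - p), which is steepest at 50%, so the same improvement in log odds buys far less probability when the starting odds are long.

```python
import math


def log_odds(p):
    """Log odds (logit) of a probability p, measured in nats."""
    return math.log(p / (1.0 - p))


def probability(x):
    """Inverse of log_odds: the logistic function."""
    return 1.0 / (1.0 + math.exp(-x))


def gain_from_one_nat(p):
    """Increase in probability from adding 1 nat of log odds, starting at p."""
    return probability(log_odds(p) + 1.0) - p


# Illustrative starting probabilities only; none of these are estimates from the post.
for p in (0.5, 0.1, 0.01):
    print(f"start at {p:.2f}: +1 nat of log odds adds {gain_from_one_nat(p):.4f} probability")
```

The same one-nat improvement counts equally in log-odds terms at every starting point, which is roughly why the post measures "dignity" in log odds rather than in raw probability.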

I agree this means he's "trying less heroically hard." Someone in the comments says "this seems like he's giving up a little". And... I dunno, maybe. I think I might specifically say "giving up somewhat at trying heroically hard". But I think if you round "give up a little at " to "give up" you're... saying an obvious falsehood? (I want to say "you're lying", but as previously discussed, sigh, no, it's not lying, but, I think your cognitive process is fucked up in a way that should make you sit-bolt-upright-in-alarm [LW · GW].)

I think it is a toxic worldview to think that someone should be trying maximally hard, to a degree that burns their future capacity, and that to shift from that to "work at a sustainable rate while recovering from burnout for a bit" counts as "giving up." No. Jesus Christ no. Fuck that worldview.

(I do notice that what I'm upset about here is the connotation of "giving up", and I'd be less upset if I thought people were simply saying "Eliezer/MIRI has stopped taking some actions" with no further implications. But I think people who say this are bringing in unhelpful/morally-loaded implications, and maybe equivocating between them)

Nate and "Don't go through the motions of doing stuff you don't really believe in."

I think Nate says a lot of sentences that sound closer to "give up" upon first read/listen. I haven't run this post by him, and am least confident in my claim he wouldn't self-describe as "having given up". But would still bet against it. (Meanwhile, I do think it's possible for someone to have given up without realizing it, but, don't think that's what happened here)

From his recent post Focus on the places where you feel shocked everyone's dropping the ball [LW · GW]:

Look for places where everyone's fretting about a problem that some part of you thinks it could obviously just solve.

Look around for places where something seems incompetently run, or hopelessly inept, and where some part of you thinks you can do better.

Then do it better.

[...]

Contrast this with, say, reading a bunch of people's research proposals and explicitly weighing the pros and cons of each approach so that you can work on whichever seems most justified. This has more of a flavor of taking a reasonable-sounding approach based on an argument that sounds vaguely good on paper, and less of a flavor of putting out an obvious fire that for some reason nobody else is reacting to.

I dunno, maybe activities of the vaguely-good-on-paper character will prove useful as well? But I mostly expect the good stuff to come from people working on stuff where a part of them sees some way that everybody else is just totally dropping the ball.

In the version of this mental motion I’m proposing here, you keep your eye out for ways that everyone's being totally inept and incompetent, ways that maybe you could just do the job correctly if you reached in there and mucked around yourself.

That's where I predict the good stuff will come from.

And if you don't see any such ways?

Then don't sweat it. Maybe you just can't see something that will help right now. There don't have to be ways you can help in a sizable way right now.

I don't see ways to really help in a sizable way right now. I'm keeping my eyes open, and I'm churning through a giant backlog of things that might help a nonzero amount—but I think it's important not to confuse this with taking meaningful bites out of a core problem the world is facing, and I won’t pretend to be doing the latter when I don’t see how to.

Like, keep your eye out. For sure, keep your eye out. But if nothing in the field is calling to you, and you have no part of you that says you could totally do better if you deconfused yourself some more and then handled things yourself, then it's totally respectable to do something else with your hours.

I do think this looks fairly close to "having given up." The key difference is that he is keeping his eyes open for ways to actually help in a serious way.

The point of Death With Dignity, and of MIRI's overall vibe, as I understand it, is:

Don't keep doing things out of a vague belief that you're supposed to do something.

Don't delude yourself about whether you're doing something useful.

Be able to think clearly about what actually needs doing, so that you can notice things that are actually worth doing.

(Note: I'm not sure I agree with Nate here, strategically, but that's a separate question from whether he's given up or not)

((I think reasonable people can disagree about whether the thing Nate is saying amounts to "give up", for some vague verbal-cultural clustering of what "give up" means. But, I don't think this is at all a reasonable summary of anything I've seen Eliezer say, and I think most people were responding to Death With Dignity rather than random 1-1 Nate conversations or his most recent post))

64 comments

Comments sorted by top scores.

comment by Alex_Altair · 2023-02-03T02:27:43.020Z · LW(p) · GW(p)

I don't think the statement "MIRI has given up" is true, unqualified, and I don't think I'm one of the people you're referring to who say it.

But like, Death With Dignity sure did cause me to make some updates about MIRI and Eliezer. For all intents and purposes, I go around acting the same as I would act if MIRI and Eliezer gave up. When I read people saying things like "blah blah since MIRI gave up" my reaction isn't "what? No they didn't!", my reaction is more like "mm, nod". (The post itself is probably only about half the reason for this; the other half would just be their general lack of visible activity over the last few years.)

I feel like those who are upset that Death With Dignity caused people to believe that MIRI has given up are continuing to miss some critical lesson. I think the result is much less of a failure of people to read and understand the post, and much more of a failure of the prominent people to understand how communication works.

Replies from: Raemon, Vaniver

↑ comment by Raemon · 2023-02-03T03:32:33.469Z · LW(p) · GW(p)

the other half would just be their general lack of visible activity over the last few years

This seems... quite false to me? Like, I think there's probably been more visible output from MIRI in the past year than in the previous several? Hosting and publishing the MIRI dialogues counts as output. AGI Ruin: A List of Lethalities [LW · GW] and the surrounding discourse is, like, one of the most important pieces to come out in the past several years. I think Six Dimensions of Operational Adequacy in AGI Projects [LW · GW] is technically old, but important. See everything in 2021 MIRI Conversations [? · GW] and 2022 MIRI Discussion [? · GW].

I do think MIRI "at least temporarily gave up" on personally executing on technical research agendas, or something like that, but, that's not the only type of output.

Most of MIRI's output is telling people why their plans are bad and won't work, and sure that's not very fun output, but, if you believe the set of things they believe, seems like straightforwardly good/important work.

Replies from: Duncan_Sabien, Alex_Altair, cubefox, DaemonicSigil

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2023-02-03T04:22:35.648Z · LW(p) · GW(p)

Additionally, during the past couple of years, MIRI has:

- Continuously maintained a "surviving branch" of its 2017 research agenda (currently has three full-time staff on it)

- Launched the Visible Thoughts Project, which while not a stunning exemplar of efficient success has produced two complete 1000-step runs so far, with more in the works

- Continued to fund Scott Garrabrant's Agent Foundations research, including things like collab retreats and workshops with the Topos Institute (I helped run one two weeks ago and it went quite well by all accounts)

- Continued to help with AI Impacts, although I think the credit there is more logistical/operational (approximately none of MIRI's own research capacity is involved; AI Impacts is succeeding based on its own intellectual merits)

- I don't know much about this personally, but I'm pretty sure we're funding part or all of what Vivek's up to

This is not a comprehensive list.

Replies from: Davidmanheim

↑ comment by Davidmanheim · 2023-02-06T08:08:52.008Z · LW(p) · GW(p)

Vanessa Kosoy's research has split off a little bit to push in a different direction, but it was also directly funded by MIRI for several years, and came out of the 2017 agenda.

↑ comment by Alex_Altair · 2023-02-03T04:55:43.614Z · LW(p) · GW(p)

Oh, yeah, that's totally fair. I agree that a lot of those writings are really valuable, and I've been especially pleased with how much Nate has been writing recently. I think there are a few factors that contributed to our disagreement here:

- I meant to refer to my beliefs about MIRI at the time that Death With Dignity was published, which means most of what you linked wasn't published yet. So by "last few years" I meant something like 2017-2021, which does look sparse.

- I was actually thinking about something more like "direct" alignment work. 2013-2016 was a period where MIRI was outputting much more research, hosting workshops, et cetera.

- MIRI is small enough that I often tend to think in terms of what the individual people are doing, rather than attributing it to the org, so I think of the 2021 MIRI conversations as "Eliezer yells at people" rather than "MIRI releases detailed communications about AI risk"

Anyway my overall reason for saying that was to argue that it's reasonable for people to have been updating in the "MIRI giving up" direction long before Death With Dignity.

Replies from: Vaniver, RobbBB

↑ comment by Vaniver · 2023-02-03T05:16:05.317Z · LW(p) · GW(p)

it's reasonable for people to have been updating in the "MIRI giving up" direction long before Death With Dignity.

Hmm I'm actually not as sure about this one--I think there was definitely a sense that MIRI was withdrawing to focus on research and focus less on collaboration, there was the whole "non-disclosed by default" thing, and I think a health experiment on Eliezer's part ate a year or so of intellectual output, but like, I was involved in a bunch of attempts to actively hire people / find more researchers to work with MIRI up until around the start of COVID. [I left MIRI before COVID started, and so was much less in touch with MIRI in 2020 and 2021.]

↑ comment by Rob Bensinger (RobbBB) · 2023-02-03T10:12:14.527Z · LW(p) · GW(p)

My model is that MIRI prioritized comms before 2013 or so, prioritized a mix of comms and research in 2013-2016, prioritized research in 2017-2020, and prioritized comms again starting in 2021.

(This is very crude and probably some MIRI people would characterize things totally differently.)

I don't think we "gave up" in any of those periods of time, though we changed our mind about which kinds of activities were the best use of our time.

I was actually thinking about something more like "direct" alignment work. 2013-2016 was a period where MIRI was outputting much more research, hosting workshops, et cetera.

2013-2016 had more "research output" in the sense that we were writing more stuff up, not in the sense that we were necessarily doing more research then.

I feel like your comment is blurring together two different things:

- If someone wasn't paying much attention in 2017-2020 to our strategy/plan write-ups, they might have seen fewer public write-ups from us and concluded that we've given up.

  - (I don't know that this actually happened? But I guess it might have happened some...?)

- If someone was paying some attention to our strategy/plan write-ups in 2021-2023, but was maybe misunderstanding some parts, and didn't care much about how much MIRI was publicly writing up (or did care, but only for technical results?), then they might conclude that we've given up.

Combining these two hypothetical misunderstandings into a single "MIRI 2017-2023 has given up" narrative seems very weird to me. We didn't even stop doing pre-2017 things like Agent Foundations in 2017-2023, we just did other things too.

↑ comment by cubefox · 2023-02-03T13:45:12.528Z · LW(p) · GW(p)

For what it's worth, the last big MIRI output I remember is Arbital, which unfortunately didn't get a lot of attention. But since then? Publishing lightly edited conversations doesn't seem like a substantial research output to me.

(Scott Garrabrant has done a lot of impressive foundational formal research, but it seems to me of little applicability to alignment, since it doesn't operate in the usual machine learning paradigm. It reminds me a bit of research in formal logic in the last century: people expected it to be highly relevant for AI, yet it turned out to be completely irrelevant. Not even "Bayesian" approaches to AI went anywhere. My hopes for other foundational formal research today are similarly low, except for formal work which roughly fits into the ML paradigm, like statistical learning theory.)

Replies from: D0TheMath, lahwran

↑ comment by Garrett Baker (D0TheMath) · 2023-02-04T07:46:30.379Z · LW(p) · GW(p)

I actually think his recent work on geometric rationality will be very relevant for thinking about advanced shard theories. Shards are selected using winner-take-all dynamics. Also, in worlds where ML alone does not in fact get you all the way to AGI, his work will become far more relevant than the alignment work you feel bullish on.

↑ comment by the gears to ascension (lahwran) · 2023-02-04T09:37:50.835Z · LW(p) · GW(p)

formal logic is not at all irrelevant for AI. the problem with it is that it only works once you've got low enough uncertainty weights to use it on. Once you do, it's an incredible boost to a model. And deep learning folks have known this for a while.

Replies from: cubefox

↑ comment by DaemonicSigil · 2023-02-03T07:58:50.411Z · LW(p) · GW(p)

Question about this part:

I do think MIRI "at least temporarily gave up" on personally executing on technical research agendas, or something like that, but, that's not the only type of output.

So, I'm sure various people have probably thought about this a lot, but just to ask the obvious dumb question: Are we sure that this is even a good idea?

Let's say the hope is that at some time in the future, we'll stumble across an Amazing Insight that unblocks progress on AI alignment. At that point, it's probably good to be able to execute quickly on turning that insight into actual mathematics (and then later actual corrigible AI designs, and then later actual code). It's very easy for knowledge of "how to do things" to be lost, particularly technical knowledge. [1] Humanity loses this knowledge on a generational timescale, as people die, but it's possible for institutions to lose knowledge much more quickly due to turnover. All that just to say: Maybe MIRI should keep doing some amount of technical research, just to "stay in practice".

My general impression here is that there's plenty of unfinished work in agent foundations and decision theory, things like: How do we actually write a bounded program that implements something like UDT? How do we actually do calculations with logical decision theories such that we can get answers out for basic game-theory scenarios (even something as simple as the ultimatum game is unsolved IIRC)? What are some common-sense constraints the program-value-functions should obey (eg. how should we value a program that simulates multiple other programs?)? These all seem like they are likely to be relevant to alignment, and also intrinsically worth doing.

[1] This talk is relevant: https://www.youtube.com/watch?v=ZSRHeXYDLko

Replies from: Duncan_Sabien

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2023-02-03T08:23:10.593Z · LW(p) · GW(p)

Agent Foundations research has stuttered a bit over the team going remote and its membership shifting and various other logistical hurdles, but has been continuous throughout.

There's also at least one other team (the one I provide ops support to) that has been continuous since 2017.

I think the thing Raemon is pointing at is something like "in 2020, both Nate and Eliezer would've answered 'yes' if asked whether they were regularly spending work hours every day on a direct, technical research agenda; in 2023 they would both answer 'no.'"

Replies from: RobbBB, DaemonicSigil

↑ comment by Rob Bensinger (RobbBB) · 2023-02-03T10:31:41.374Z · LW(p) · GW(p)

What Duncan said. "MIRI at least temporarily gave up on personally executing on technical research agendas" is false, though a related claim is true: "Nate and Eliezer (who are collectively a major part of MIRI's research leadership and play a huge role in the org's strategy-setting) don't currently see a technical research agenda that's promising enough for them to want to personally focus on it, or for them to want the organization to make it an overriding priority".

I do think the "temporarily" and "currently" parts of those statements are quite important: part of why the "MIRI has given up" narrative is silly is that it's rewriting history to gloss "we don't know what to do" as "we know what to do, but we don't want to do it". We don't know what to do, but if someone came up with a good idea that we could help with, we'd jump on it!

There are many negative-sounding descriptions of MIRI's state that I could see an argument for, as stylized narratives ("MIRI doesn't know what to do", "MIRI is adrift", etc.). Somehow, though, people skipped over all those perfectly serviceable pejorative options and went straight for the option that's definitely just not true?

Replies from: Viliam

↑ comment by Viliam · 2023-02-03T12:24:23.340Z · LW(p) · GW(p)

We don't know what to do, but if someone came up with a good idea that we could help with, we'd jump on it!

In that case, I think the socially expected behavior is to do some random busywork, to send a clear signal that you are not lazy.

(In a corporate environment, the usual solution is to organize a few meetings. Not sure what the equivalent is for non-profits... perhaps organizing conferences?)

Replies from: RobbBB

↑ comment by Rob Bensinger (RobbBB) · 2023-02-04T23:13:36.099Z · LW(p) · GW(p)

I don't see how that would help at all, and pure busywork is silly when you have lots of things to do that are positive-EV but probably low-impact.

MIRI "doesn't know what to do" in the sense that we don't see a strategy with macroscopic probability of saving the world, and the most-promising ones with microscopic probability are very diverse and tend to violate or side-step our current models in various ways, such that it's hard to pick actions that help much with those scenarios as a class.

That's different from MIRI "not knowing what to do" in the sense of having no ideas for local actions that are worth trying on EV grounds. (Though a lot of these look like encouraging non-MIRI people to try lots of things and build skill and models in ways that might change the strategic situation down the road.)

(Also, I'm mainly trying to describe Nate and Eliezer's views here. Other MIRI researchers are more optimistic about some of the technical work we're doing, AFAIK.)

↑ comment by DaemonicSigil · 2023-02-03T09:23:45.422Z · LW(p) · GW(p)

Ah got it, thanks for the reply!

↑ comment by Vaniver · 2023-02-03T05:09:45.278Z · LW(p) · GW(p)

From my view (formerly at MIRI, but left >2yrs ago, and so not speaking for them), I do think there's an important delusion that "MIRI's given up" does pop, which is an important element of this conversation, which Alex_Altair is maybe hinting at here. I think a lot of people viewed MIRI as "handling" AI x-risk instead of "valiantly trying to do something about" AI x-risk.

Once, at the MIRI lunch table, a shirt like this one ("Keep calm and let Nate handle it") became the topic of conversation. (MIRI still hadn't made any swag yet, and the pro-swag faction was jokingly proposing this as our official t-shirt.) Nate responded with "I think our t-shirts should say 'SEND HELP' instead."

I think the Death With Dignity post made it clear that MIRI did not think it was handling AI x-risk, and that no one else was either; more poetically, it was not wearing the "Keep calm and let Nate handle it" t-shirt and was instead wearing the "SEND HELP" t-shirt. [Except, you know, the t-shirt actually said "SEND DIGNITY" because Eliezer thought that would get better reactions than "SEND HELP".]

And so when I hear someone say that MIRI has "given up" I assume they have a model where this was the short (very lossy, not actually correct!) summary of that update.

comment by LawrenceC (LawChan) · 2023-02-03T10:20:53.330Z · LW(p) · GW(p)

As Alex Altair says above, I don't think that it's completely true that MIRI has given up. That being said, if you just include two more paragraphs below the ones you quoted from the Death with Dignity [LW · GW] post:

Q1: Does 'dying with dignity' in this context mean accepting the certainty of your death, and not childishly regretting that or trying to fight a hopeless battle?

Don't be ridiculous. How would that increase the log odds of Earth's survival?

My utility function isn't up for grabs, either. If I regret my planet's death then I regret it, and it's beneath my dignity to pretend otherwise.

That said, I fought hardest while it looked like we were in the more sloped region of the logistic success curve, when our survival probability seemed more around the 50% range; I borrowed against my future to do that, and burned myself out to some degree. That was a deliberate choice, which I don't regret now; it was worth trying, I would not have wanted to die having not tried, I would not have wanted Earth to die without anyone having tried. But yeah, I am taking some time partways off, and trying a little less hard, now. I've earned a lot of dignity already; and if the world is ending anyways and I can't stop it, I can afford to be a little kind to myself about that.

In particular, the final sentence really does read like "I'm giving up to some extent"!

Replies from: Raemon, DragonGod

↑ comment by Raemon · 2023-02-03T17:27:26.976Z · LW(p) · GW(p)

Okay, that is fair (but also I think it's pretty bullshit to summarize the next section as "gave up" too).

Added a new section of the post that explicitly argues this point, repeating it here so people reading comments can see it more easily:

Replies from: RobbBB

[edited to add] Eliezer does go on to say:

That said, I fought hardest while it looked like we were in the more sloped region of the logistic success curve, when our survival probability seemed more around the 50% range; I borrowed against my future to do that, and burned myself out to some degree. That was a deliberate choice, which I don't regret now; it was worth trying, I would not have wanted to die having not tried, I would not have wanted Earth to die without anyone having tried. But yeah, I am taking some time partways off, and trying a little less hard, now. I've earned a lot of dignity already; and if the world is ending anyways and I can't stop it, I can afford to be a little kind to myself about that.

I agree this means he's "trying less heroically hard." Someone in the comments says "this seems like he's giving up a little". And... I dunno, maybe. I think I might specifically say "giving up somewhat at trying heroically hard". But I think if you round "give up a little at " to "give up" you're... saying an obvious falsehood? (I want to say "you're lying", but as previously discussed, sigh, no, it's not lying, but, I think your cognitive process is fucked up in a way that should make you sit-bolt-upright-in-alarm [LW · GW].)

I think it is a toxic worldview to think that someone should be trying maximally hard, to a degree that burns their future capacity, and that to shift from that to "work at a sustainable rate while recovering from burnout for a bit" counts as "giving up." No. Jesus Christ no. Fuck that worldview.

(I do notice that what I'm upset about here is the connotation of "giving up", and I'd be less upset if I thought people were simply saying "Eliezer/MIRI has stopped taking some actions" with no further implications. But I think people who say this are bringing in unhelpful/morally-loaded implications, and maybe equivocating between them)

↑ comment by Rob Bensinger (RobbBB) · 2023-02-04T23:18:16.015Z · LW(p) · GW(p)

I agree with this.

(Separately, your comment and Lawrence's are conflating "Eliezer" with "MIRI". I don't mind swapping back and forth between 'Eliezer's views' and 'MIRI's views' when talking about a lot of high-level aspects of MIRI's strategy, given Eliezer's huge influence here and his huge role in deciding MIRI's strategy. But it seems weirder to swap back and forth between 'what Eliezer is currently working on' and 'what MIRI is currently working on', or 'how much effort Eliezer is putting in' and 'how much effort MIRI is putting in'.)

comment by Richard_Kennaway · 2023-02-03T09:49:04.206Z · LW(p) · GW(p)

What a person gets from the slogan "Death with Dignity" depends on what they consider to be "dignified". In the context of assisted suicide it means declaring game over and giving up. But Dignitas should have no transhumanist customers. For those, dying with dignity would mean the opposite of giving up. It would mean going for cryonic suspension when all else has failed.

In Eliezer's article, it clearly means fighting on to the end, regardless of how hopeless the prospect, but always aiming for what has the best chance of working. Dignity is measured in the improvement to log-odds, and whether that improvement was as large as could be attained, even if in the end it is not enough. Giving up is never "dignified".

But give people a catchy slogan and that is all most of them will remember.

Replies from: RobbBB, ViktoriaMalyasova

↑ comment by Rob Bensinger (RobbBB) · 2023-02-03T10:38:00.764Z · LW(p) · GW(p)

Yep. If I could go back in time, I'd make a louder bid for Eliezer to make it obvious that the "Death With Dignity" post wasn't a joke, and I'd add a bid to include some catchy synonym for "Death With Dignity", so people can better triangulate the concept via having multiple handles for it. I don't hate Death With Dignity as one of the handles, but making it the only handle seems to have caused people to mostly miss the content/denotation of the phrase, and treat it mainly as a political slogan defined by connotation/mood.

Replies from: Quadratic Reciprocity, CronoDAS, DanielFilan

↑ comment by Quadratic Reciprocity · 2023-02-14T08:42:37.500Z · LW(p) · GW(p)

I like "improving log odds of survival" as a handle. I don't like catchy concept names in this domain because they catch on more than understanding of the concept they refer to.

Replies from: Raemon

↑ comment by Raemon · 2023-02-14T18:57:09.611Z · LW(p) · GW(p)

I... don't know whether it actually accomplishes the right things but I am embarrassed at how long it took "improve log-odds of survival" to get promoted to my hypothesis space as the best handle for the concept of "improving log odds of survival [+ psychological stuff]"

Replies from: Quadratic Reciprocity

↑ comment by Quadratic Reciprocity · 2023-02-14T19:31:35.869Z · LW(p) · GW(p)

Fairs. I am also liking the concept of "sanity" and notice people use that word more now. To me, it points at some of the psychological stuff and also the vibe in the What should you change in response to an "emergency"? And AI risk [LW · GW] post.

↑ comment by CronoDAS · 2023-02-05T00:49:46.583Z · LW(p) · GW(p)

"Go Down Fighting"?

Replies from: Raemon

↑ comment by Raemon · 2023-02-14T02:40:12.231Z · LW(p) · GW(p)

A few people have suggested something like this, and I think it's failing to accomplish a thing that Death With Dignity was trying to accomplish, which was to direct people's attention to more-useful-points in strategy-space, and to think sanely while doing so.

↑ comment by DanielFilan · 2023-02-07T01:06:57.856Z · LW(p) · GW(p)

FWIW I don't think it's too late to introduce more handles.

↑ comment by ViktoriaMalyasova · 2023-02-04T23:58:16.050Z · LW(p) · GW(p)

But give people a catchy slogan and that is all most of them will remember.

Also, many people will only read the headline of your post, so it's important to make it sound unambiguous.

comment by Jeff Rose · 2023-02-04T18:13:13.982Z · LW(p) · GW(p)

If the risk from AGI is significant (and whether you think p(doom) is 1% or 10% or 100% it is unequivocally significant) and imminent (and whether your timelines are 3 years or 30 years it is pretty imminent) the problem is that an institution as small as MIRI is a significant part of the efforts to mitigate this risk, not whether or not MIRI gave up.

(I recognize that some of the interest in MIRI is the result of having a relatively small community of people focused on the AGI x-risk problem and the early prominence in that community of a couple of individuals, but that really is just a restatement of the problem).

Replies from: RobbBB

↑ comment by Rob Bensinger (RobbBB) · 2023-02-04T23:43:50.718Z · LW(p) · GW(p)

Yep! At least, I'd say "as small as MIRI and as unsuccessful at alignment work thus far". I think small groups and individual researchers might indeed crack open the problem, but no one of them is highly likely on priors to do so (at least from my perspective, having seen a few people try the really obvious approaches for a few years). So we want there to exist a much larger ecosystem of people taking a stab at it, in the hope that some of them will do surprisingly well.

It could have been that easy to pull off; it's ultimately just a technical question, and it's hard to say how difficult such questions are to solve in advance, when they've literally never been attempted before.

But it was obviously never wise for humanity to take a gamble on it being that easy (and specifically easy for the one org that happened to start talking about the problem first). And insofar as we did take that gamble, it hasn't paid out in "we now have a clear path to solving alignment".

comment by Alex Flint (alexflint) · 2023-02-03T15:17:03.307Z · LW(p) · GW(p)

I just want to acknowledge the very high emotional weight of this topic.

For about two decades, many of us in this community have been kind of following in the wake of a certain group of very competent people tackling an amazingly frightening problem. In the last couple of years, coincident with a quite rapid upsurge in AI capabilities, that dynamic has really changed. This is truly not a small thing to live through. The situation has real breadth -- it seems good to take it in for a moment, not in order to cultivate anxiety, but in order to really engage with the scope of it.

It's not a small thing at all. We're in this situation where we have AI capabilities kind of out of control. We're not exactly sure where any of the leaders we've previously relied on stand. We all have this opportunity now to take action. The opportunity is simply there. Nobody, actually, can take it away. But there is also the opportunity, truly available to everyone regardless of past actions, to falter, exactly when the world most needs us.

What matters, actually, is what, concretely, we do going forward.

comment by Raemon · 2023-02-04T23:51:10.603Z · LW(p) · GW(p)

Some more nuance to add here, since I ranted this out quickly the other day:

I definitely think organizations, and individuals, can give up without realizing they've given up. Some people said this post felt like it made it harder for them to think or talk about MIRI, pushing them to adopt some narrative and kinda yelling at them for questioning it. And I don't at all mean to do that (in response to this, I got rid of the "obviously IMO?" part of the title).

I maybe want to distinguish several types of claims you might care about:

1. "MIRI" the organization has given up

2. Most of the individuals who work (or recently worked) at MIRI have given up

3. Eliezer has given up

4. Nate has given up

5. "MIRI" the organization is in some kind of depressed and/or confused state and isn't able to coordinate with itself well and is radiating depressed / confused vibes, or is radiating something that feels like giving up.

6. MIRI has become a sort of zombie-org that should probably disband, each of the individual people there would be better off thinking of themselves as an independent thinker/researcher.

7. #5, but for Eliezer, or Nate, or most individuals at MIRI

8. MIRI has given up on technical alignment research

9. Eliezer, Nate, or any given individual has given up on technical alignment research.

I think at least some of those claims are either true, or might be true, for reasonable-definitions of "gave up", and I want people to have an easy time thinking about it for themselves. But I feel like the people I see saying "MIRI gave up" are doing some kind of epistemically screwy thing that I think warrants being called out. (note: different people are saying slightly different things and maybe mean slightly different things)

comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2023-02-03T04:14:29.235Z · LW(p) · GW(p)

Thanks; as someone who has struggled to respond productively to the "MIRI has given up" meme that is floating around, this is quite helpful and I appreciate the care taken.

Replies from: RobbBB↑ comment by Rob Bensinger (RobbBB) · 2023-02-03T10:42:56.620Z · LW(p) · GW(p)

+1. Thanks, Ray. :)

There are things I like about the "MIRI has given up" meme, because maybe it will cause some people to up their game and start taking more ownership of the problem.

But it's not true, and it's not fair to the MIRI staff who are doing the same work they've been doing for years.

It's not even fair to Nate or Eliezer personally, who have sorta temporarily given up on doing alignment work but (a) have not given up on trying to save the world, and (b) would go back to doing alignment work if they found an approach that seemed tractable to them.

comment by Raemon · 2023-02-04T01:31:03.255Z · LW(p) · GW(p)

(I changed the title of the post from "MIRI isn't 'giving up' (obviously so, IMO?)" to "It doesn't look to me like MIRI 'gave up'")

Replies from: Duncan_Sabien↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2023-02-04T01:37:21.066Z · LW(p) · GW(p)

I feel some twinge of monkey regret at something like the ... unilateral disarmament? ... of the title change, since you're using a strictly truer and less-manipulative phrasing that will be weaker in practice given others' refusal to follow suit. Feels a bit like you're hitting "cooperate" in a world where I strongly predict others will continue to hit "defect."

but

I do indeed greatly prefer the world where both sides use this superior language, so an additional +1 for being-the-change.

(I think a lot of confusion and conflict would've been avoided in the first place if more people habitually said true and defensible things like "Sure does seem to me like MIRI gave up" rather than much shakier things like "MIRI gave up".)

Replies from: Raemon↑ comment by Raemon · 2023-02-04T02:03:22.661Z · LW(p) · GW(p)

One of my reasons here is that a pet peeve of mine is people using the word "obviously" as a rhetorical bludgeon, and upon reflection it felt particularly bad to me to have used it.

Replies from: RobbBB↑ comment by Rob Bensinger (RobbBB) · 2023-02-04T23:38:01.429Z · LW(p) · GW(p)

I actually do think it's obvious -- like, you have to believe like four false things at once in order to get there, several of which are explicitly disclaimed in the very posts people are mainly citing as evidence for "MIRI gave up" (and others of which are disclaimed in the comments).

- You have to miss the part where Eliezer said that "dignity" denotes working to try to reduce the probability of existential catastrophe.

- Separately, you have to either assume that the title of the post isn't an April Fool's joke (even though it doesn't make sense, because "Death With Dignity" is not a strategy??), or you have to otherwise forget that other people work at MIRI who are not Eliezer.

- You have to assume that all the recent LW write-ups by Eliezer, Nate, and other MIRI people are just stuff we're doing for fun because we like spending our time that way, as opposed to attempts to reduce p(doom).

- You have to falsely believe that a version of Eliezer and/or MIRI that had high p(doom) would immediately give up for that reason (e.g., because you'd give up in that circumstance); or you have to believe that a version of Eliezer or MIRI that was feeling sad or burnt out would give up.

- You probably have to have false beliefs about the amount of actual technical research MIRI's doing, given that we were doing a lot less in e.g. 2014? Unless the idea is that doing research while sad means that you've given up, but doing research while happy means that you haven't given up.

- You probably have to have false beliefs about MIRI's counterfactuals? "Giving up" not only suggests that we aren't doing anything now; it suggests we aren't trying to find high-impact stuff to do, and wouldn't jump on an opportunity to do a high-impact thing if we knew of one. "GiveDirectly is pausing most of their donations to re-evaluate their strategy and figure out what to do next, because they've realized the thing they were doing wasn't cost-effectively getting money into poor people's hands" is very different from "GiveDirectly has given up on trying to alleviate poverty".

- You have to... misunderstand something sort of fundamental about MIRI's spirit? And probably misunderstand things about how doomy we already were in 2017, etc.

My main hesitation is that "giving up" is in quotation marks in the title, which in some contexts can signify scare quotes or jargon; I think it's obvious that MIRI hasn't given up, but maybe someone has a very nonstandard meaning of "gave up" (e.g., 'has no object-level strategy they're particularly bought into at the organizational level, and feels pessimistic about finding such a strategy') that is either true, or false for non-obvious reasons.

On the other hand, including "IMO" and the question mark addressed this in my books.

If we can bid for long titles, I like: MIRI isn't "giving up" (obviously so, IMO, as I understand that phrase?)

Replies from: Raemon↑ comment by Raemon · 2023-02-04T23:55:34.202Z · LW(p) · GW(p)

Yeah, it did feel obvious to me (for largely the reasons you state), and it felt like there was something important about popping the bubble of "if you actually think about it it doesn't make sense, even if it sure feels true".

But, a) I do think there is something anti-epistemically bludgeony about saying "obviously" (although I agree the "IMO?" softened it), b) at least some smart thoughtful people seem to earnestly define "giving up" differently from me (even though I still feel like something fishy was going on with their thought process)

Replies from: RobbBB↑ comment by Rob Bensinger (RobbBB) · 2023-02-05T00:05:26.225Z · LW(p) · GW(p)

Kk!

comment by Thomas Kwa (thomas-kwa) · 2023-02-03T19:03:18.736Z · LW(p) · GW(p)

Even if it has some merits, I find the "death with dignity" thing an unhelpful, mathematically flawed [LW(p) · GW(p)], and potentially emotionally damaging way to relate to the problem. Even if MIRI has not given up, I wouldn't be surprised if the general attitude of despair has substantially harmed the quality of MIRI research. Since I started as a contractor for MIRI in September, I've deliberately tried to avoid absorbing this emotional frame, and rather tried to focus on doing my job, which should be about computer science research. We'll see if this causes me problems.

comment by Rob Bensinger (RobbBB) · 2023-02-04T23:01:03.337Z · LW(p) · GW(p)

As long as we're distilling LessWrong posts into shortened versions, here's my submission.

↑ comment by TAG · 2023-02-05T03:36:23.996Z · LW(p) · GW(p)

So that's how you summarise Proust!

comment by Erik Jenner (ejenner) · 2023-02-03T06:50:34.616Z · LW(p) · GW(p)

The whole point of the post is to be a psychological framework for actually doing useful work that increases humanity's long odds of survival.

I can't decide whether "long odds" is a simple typo or a brilliant pun.

Replies from: Raemon

comment by the gears to ascension (lahwran) · 2023-02-03T07:27:09.103Z · LW(p) · GW(p)

[edit: due to the replies, I'm convinced this is yet another instance where I was harsh unnecessarily; please don't upvote above 2. I've reversed my own vote to get back to 2.]

My view has always been that MIRI had already given up in 2015. They were blindsided by alphago! If you check the papers that alphago cited, there were plenty of hints that it was about to happen; how did ai researchers miss that?

But I also generally think that their entire infinities view of ai is fundamentally doomed. As far as I can tell, all of MIRI's useful work has been post-giving-up, which, like, fair enough, if giving up on your valiant plan is what gets you to do something useful, sure, sounds good.

I just wish yudkowsky would have admitted his entire worldview was catastrophically unstable as soon as alexnet hit in 2012.

Replies from: Raemon, Duncan_Sabien↑ comment by Raemon · 2023-02-03T07:30:56.782Z · LW(p) · GW(p)

Separate from whether this is relevant/useful in general, I don't think "being wrong" has anything to do with giving up.

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2023-02-03T07:34:58.900Z · LW(p) · GW(p)

yeah maybe that's fair. perhaps what I'm bothered about is that they didn't give up sooner then, because they seem to have gotten a lot more useful as soon as they got around to giving up

Replies from: Raemon↑ comment by Raemon · 2023-02-03T07:38:16.196Z · LW(p) · GW(p)

That is an interesting point, although still downvoted for ignoring the core point of my post and continuing in the frame I'm specifically arguing against.

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2023-02-03T07:43:43.686Z · LW(p) · GW(p)

wait, I'm actually confused now. I think that was me pivoting? I'm agreeing that "giving up" wasn't a process of deciding to do nothing, but rather one of abandoning their doing-nothing plan and starting to do something? My point is, the point at which they became highly useful was the one at which they stopped trying to be the Fancy Math Heroes and instead became just fancy community organizers.

That or maybe I'm being stupid and eyes-glazed again and failing to even comprehend your meaning. Sometimes I can have remarkable difficulty seeing through the surface levels of things, to the point that I legitimately wonder if there's something physically wrong with my brain. If I'm missing something, could you rephrase it down enough that a current gen language model would understand what I missed? If not, I'll try to come back to this later.

Replies from: Raemon↑ comment by Raemon · 2023-02-03T08:36:03.517Z · LW(p) · GW(p)

Okay, it was not clear that's what you meant.

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2023-02-03T08:56:49.757Z · LW(p) · GW(p)

yeah, I guess it wasn't.

↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2023-02-03T20:40:27.861Z · LW(p) · GW(p)

They were blindsided by alphago!

I'm curious about this specifically because I was physically in the MIRI office in the week that alphago came out, and floating around conversations that included Nate, Eliezer, Anna Salamon, Scott, Critch, Malo, etc., and I would not describe the mood as "blindsided" so much as "yep, here's a bit of What Was Foretold; we didn't predict this exact thing but this is exactly the kind of thing we've been telling everyone about ad infinitum; care to make bets about how much people update or not?"

But also, there may have been e.g. written commentary that you read, that I did not read, that says "we were blindsided." i.e. I'm not saying you're wrong, I'm saying I'm surprised, and I'm curious if you can explain away my surprise.

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2023-02-03T20:48:11.461Z · LW(p) · GW(p)

I was surprised alphago happened in january 2016 rather than november 2016. Everyone in the MIRI sphere seemed surprised it happened in the 2010s rather than the 2020s or 2030s. My sense at the time was that this was because they had little to no interest in tracking SOTA of the most capable algorithms inspired by the brain, due to what I still see as excessive focus on highly abstract and generalized relational reasoning about infinities. Though it's not so bad now, and I think Discovering Agents, Kenton et al's agent foundations work at deepmind, and the followup Interpreting systems as solving POMDPs: a step towards a formal understanding of agency [LW · GW], are all incredible work on Descriptive Models of Agency [LW · GW], so now the MIRI normative agent foundations approach does have a shot. But I think that MIRI could have come up with Discovering Agents if their attitude towards deep learning had been more open-minded. And I still find it very frustrating how they respond when I tell them that ASI is incredibly soon. It's obvious to anyone who knows how to build it that there's nothing left in the way, and that there could not possibly be; anyone who is still questioning that has deeply misunderstood deep learning, and while they shouldn't update hard off my assertion, they should take a step back and realize that the model that deep learning and connectionism don't work has been incrementally shredded over time and it is absolutely reasonable to expect that exact distillations of intelligence can be discovered by connectionism. (though maybe not deep gradient backprop. We'll see about that one.)

I agree voted you because, yes, they did see it as evidence they were right about some of their predictions, but it didn't seem hard to me to predict it - all I'd been doing was, since my CFAR workshop in 2015, spending time reading lots and lots and lots of abstracts and attempting to estimate from abstracts which papers actually had real improvements to show in their bodies. Through this, I trained a mental model of the trajectory of abstracts that has held comfortably to this day and predicted almost every major transition of deep learning, including that language-grade conversation AI was soon, and I continue to believe that this mental model is easy to obtain and that the only way one fails to obtain it is stubbornness that it can't be done.

Critch can tell you I was overconfident about the trajectory in early 2016, after alphago and before attention failed to immediately solve complicated problems. It took a while before someone pointed out attention was all you need and that recurrence was (for a while) a waste of effort, and that is what allowed things to finally get really high scores on big problems, but again, similar to go, attention had been starting to work in 2015-2016 as well.

If you'd like I can try to reconstruct this history more precisely with citations.

[editing with more points complete.]

Replies from: Duncan_Sabien↑ comment by Duncan Sabien (Deactivated) (Duncan_Sabien) · 2023-02-03T20:55:04.308Z · LW(p) · GW(p)

I don't know if I need citations so much as "I'm curious what you observed that led you to store 'Everyone in the MIRI sphere seemed surprised it happened in the 2010s rather than the 2020s or 2030s.' in your brain."

Like, I don't need proof, but like ... did you hear Eliezer say something, did you see some LW posts from MIRI researchers, is this more just a vibe that you picked up from people around MIRI and you figured it was representative of the people at MIRI, etc.

Replies from: lahwran↑ comment by the gears to ascension (lahwran) · 2023-02-03T20:58:30.652Z · LW(p) · GW(p)

I don't remember, so I'll interpret this as a request for lit review (...partly just cuz I wanna). To hopefully reduce "you don't exist" feeling, I recognize this isn't quite what you asked and that I'm reinterpreting. ETA one to two days, I've been putting annoying practical human stuff off to do unnecessary lit review again today anyway.

Replies from: RobbBB↑ comment by Rob Bensinger (RobbBB) · 2023-02-04T23:59:06.113Z · LW(p) · GW(p)

From AlphaGo Zero and the Foom Debate:

I wouldn’t have predicted AlphaGo and lost money betting against the speed of its capability gains, because reality held a more extreme position than I did on the Yudkowsky-Hanson spectrum.

IMO AlphaGo happening so soon was an important update for Eliezer and a lot of MIRI. There are things about AlphaGo that matched our expectations (and e.g. caused Eliezer to write this post), but the timing was not one of them.

The parts of Gears' account I'm currently skeptical of are:

- Gears' claim (IIUC) that ~every non-stupid AI researcher who was paying much attention knew in advance that Go was going to fall in ~2015. (For some value of "non-stupid" that's a pretty large number of people, rather than just "me and two of my friends and maybe David Silver" or whatever.)

- Gears' claim that ML has been super predictable and that Gears has predicted it all so far (maybe I don't understand what Gears is saying and they mean something weaker than what I'm hearing?).

- Gears' level of confidence in predicting imminent AGI. (Seems possible, but not my current guess.)

↑ comment by the gears to ascension (lahwran) · 2023-02-05T22:22:56.094Z · LW(p) · GW(p)

Gears' claim (IIUC) that ~every non-stupid AI researcher who was paying much attention knew in advance that Go was going to fall in ~2015. (For some value of "non-stupid" that's a pretty large number of people, rather than just "me and two of my friends and maybe David Silver" or whatever.)

Specifically the ones *working on or keeping up with* go could *see it coming* enough to *make solid research bets* about what would do it. If they had read up on go, their predictive distribution over next things to try contained the thing that would work well enough to be worth scaling seriously if you wanted to build the thing that worked. What I did was, as someone not able to implement it myself at the time, read enough of the go research and general pattern of neural network successes to have a solid hunch about what it looks like to approximate a planning trajectory with a neural network. It looked very much like the people actually doing the work at facebook were on the same track. What was surprising was mostly that google funded scaling it so early, which relied on them having found an algorithm that scaled well sooner than I expected, by a bit. Also, I lost a bet about how strong it would be; after updating on the matches from when it was initially announced, I thought it would win some but lose overall, instead it won outright.

Gears' claim that ML has been super predictable and that Gears has predicted it all so far (maybe I don't understand what Gears is saying and they mean something weaker than what I'm hearing?).

I have hardly predicted all ml, but I've predicted the overall manifold of which clusters of techniques would work well and have high success at what scales and what times. Until you challenged me to do it on manifold, I'd been intentionally keeping off the record about this except when trying to explain my intuitive/pretheoretic understanding of the general manifold of ML hunchspace, which I continue to claim is not that hard to do if you keep up with abstracts and let yourself assume it's possible to form a reasonable manifold of what abstracts refine the possibility manifold. Sorry to make strong unfalsifiable claims, I'm used to it. But I think you'll hear something similar - if phrased a bit less dubiously - from deep learning researchers experienced at picking which papers to work on in the pretheoretic regime. Approximately, it's obvious to everyone who's paying attention to a particular subset what's next in that subset, but it's not necessarily obvious how much compute it'll take, whether you'll be able to find hyperparameters that work, if your version of the idea is subtly corrupt, or whether you'll be interrupted in the middle of thinking about it because boss wants a new vision model for ad ranking.

Gears' level of confidence in predicting imminent AGI. (Seems possible, but not my current guess.)

Sure, I've been the most research-trajectory optimistic person in any deep learning room for a long time, and I often wonder if that's because I'm arrogant enough to predict other people's research instead of getting my year-scale optimism burnt out by the pain of the slog of hyperparameter searching one's own ideas, so I've been more calibrated about what other people's clusters can do (and even less calibrated about my own). As a capabilities researcher, you keep getting scooped by someone else who has a bigger hyperparam search cluster! As a capabilities researcher, you keep being right about the algorithms' overall structure, but now you can't prove you knew it ahead of time in any detail! More effective capabilities researchers have this problem less, I'm certainly not one. Also, you can easily exceed my map quality by reading enough to train your intuitions about the manifold of what works - just drastically decrease your confidence in *everything* you've known since 2011 about what's hard and easy on tiny computers, treat it as a palette of inspiration for what you can build now that computers are big. Roleplay as a 2015 capabilities researcher and try to use your map of the manifold of what algorithms work to predict whether each abstract will contain a paper that lives up to its claims. Just browse the arxiv, don't look at the most popular papers, those have been filtered by what actually worked well.

Btw, call me gta or tgta or something. I'm not gears, I'm pre-theoretic map of or reference to them or something. ;)

Also, I should mention - Jessicata, jack gallagher, and poossssibly tsvi bt can tell you some of what I told them circa 2016-2017 about neural networks' trajectory. I don't know if they ever believed me until each thing was confirmed, and I don't know which things they'd remember or exactly which things were confirmed as stated, but I definitely remember arguing in person in the MIRI office on addison, in the backest back room with beanbags and a whiteboard and if I remember correctly a dripping ceiling (though that's plausibly just memory decay confusing references), that neural networks are a form of program inference that works with arbitrary complicated nonlinear programs given an acceptable network interference pattern prior, just a shitty one that needs a big network to have enough hypotheses to get it done (stated with the benefit of hindsight; it was a much lower quality claim at the time). I feel like that's been pretty thoroughly demonstrated now, though pseudo-second-order gradient descent (ADAM and friends) still has weird biases that make its results less reliable than the proper version of itself. It's so damn efficient, though, that you'd need a huge real-wattage power benefit to use something that was less informationally efficient relative to its vm.
