Basin broadness depends on the size and number of orthogonal features

post by CallumMcDougall (TheMcDouglas), Avery, Lucius Bushnaq (Lblack) · 2022-08-27T17:29:32.508Z · LW · GW · 21 commentsContents

TL;DR Introduction - why do we care about broadness? Measuring broadness Broadness and feature space Some potential implications None 21 comments

TL;DR

For neural networks trained to perfect loss, the broadness of optima in parameter space is given by the number and norm of independent orthogonal features the neural network has. The inner product that defines this "feature norm" and independence/orthogonality is the product of Hilbert space.

Introduction - why do we care about broadness?

Recently, there's been some discussion [LW · GW] of what determines the broadness of optima of neural networks in parameter space.

People care about this because the size of the optimum basin may influence how easy it is for gradient descent (or other local optimisation algorithms which you might use to train a neural network) to find the corresponding solution, since it's probably easier to stumble across a broader optimum's attractor.

People also care about this because there's some hypotheses floating around [LW · GW] saying that the broadness of optima is correlated with their generality somehow. The broader the basin of your solution is in parameter space, the more likely it seems to be that the network will successfully generalise to new data in deployment. So understanding what kinds of circuit configurations are broad seems like it might help us grasp better how AIs learn things, and how an AGI might really work internally.

Meaning, understanding what kind of solutions are broad is probably relevant to start developing general predictive theories of AI and AI training dynamics, which we'd like to have so we can answer questions like "What goals is my AGI going to develop, given that it has architecture , and I'm training it with loss function on data set ?"

Measuring broadness

Warning: calculus and linear algebra incoming!

Say we have a loss function for a neural network , with some data points and labels , and we've trained the network to perfect loss, setting the parameters to some set .

Now, lets try to look at how much the loss changes when we make small changes to the parameters . To do this, we're going to perform a polynomial expansion of up to quadratic order. This isn't going to describe the function behaviour perfectly, but within a small neighbourhood of , it'll be mostly accurate.

The first order term vanished because (since we're at an optimum). is a vector with entries, specifying how much we're perturbing each parameter in the network from its value at the optimum . is going to be a matrix with entries.

So far so good. Now, if we wanted to work out how broad the basin is by looking at this matrix, how would we do it?

The matrix is filled with second derivatives. Or in other words, curvatures.

If you only had one parameter, this matrix would be a single number (the second derivative), telling you the curvature of the parabola you can fit to the optimum. And the curvature of a parabola determines its broadness, the closer the curvature is to zero, the broader the parabola, and with it the optimum, is.

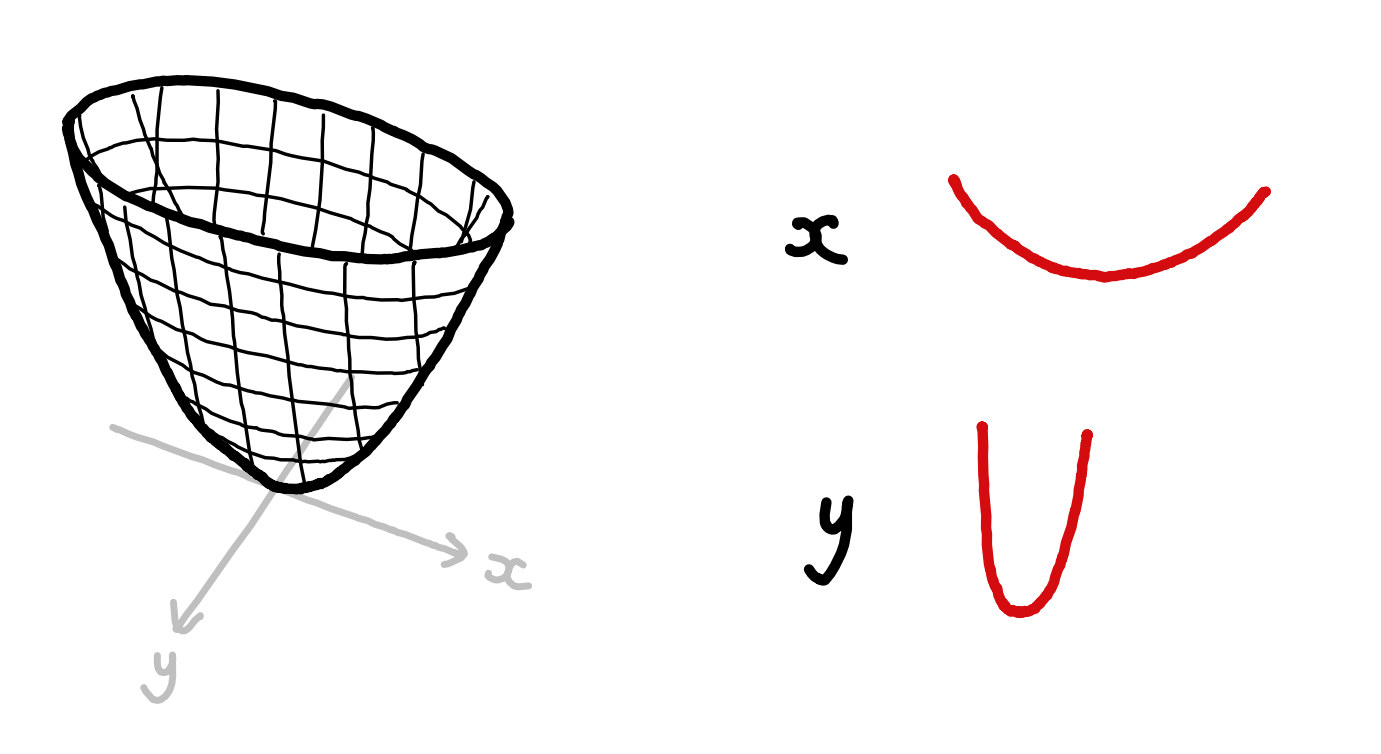

If you have two parameters, you can imagine the optimum as a little valley in the loss function landscape. The valley has two curvatures that characterise it now, but those don't need to line up with our coordinate axes and . You could, for example, have one "principal direction" of curvature lie in the direction of parameter space, and one in the direction.

To find these principal directions and their curvatures, you perform an eigendecomposition of the matrix. The two eigenvalues of the matrix will be the curvatures, and the two eigenvectors the principal directions in parameter space characterised by these curvatures. Multiplying the parabola widths we get from the two, we obtain the basin volume.

We can do this for the -dimensional case as well. If some eigenvalues of the matrix are zero, that means that at least within a small neighbourhood of the optimum, the loss doesn't drop at all if you walk along the corresponding principal direction. So each such eigenvalue effectively increases the dimensionality of the optimum, from a point to a line, a line to an area, etc.

Now that's all fine and good, but it's not very interpretable. What makes the eigenvalues of this matrix be small or large for any given network? What determines how many of them are zero? What does all this have to do with interpretability? Let's find out!

Broadness and feature space

Let's explicitly work out what that matrix, which is also called the Hessian of the network, will look like, by calculating its entries in the -th row and -th column, meaning the derivative of the loss function with respect to the -th and -th parameters of the network:

The second term vanishes again, because the loss function is required to be convex at optima, so .[2]

Look at this expression for a bit. If you've done some quantum mechanics before, this might remind you of something.

Let's go through a small example. If you have a network that takes in a single floating point number from the input , and maps it to an output using features and a constant, as in , the gradient of the output would look like:

So, each entry in the gradient corresponds to one feature of the network.

The Hessian matrix for this network would be

These are the inner products of the features over the training data set. They tell you how close to orthogonal the features are with respect to each other, and how big they are.

The idea of the inner product / norm and Hilbert space is that we treat the space of functions as a vector space, which can have basis vectors. The size and relative angles of these vectors are given by the inner product: you multiply the functions in question, and sum the result over the function domain.

This gives us a well defined notion of what constitutes "orthogonal" features: Two features are orthogonal if their norm is zero. Intuitively, this means they're separate, non-interacting components of the network mapping.

This generalises to arbitrary numbers of features in arbitrary numbers of layers, though features in earlier layers enter multiplied with derivatives of activation functions, since we're characterising their effect on the final layer.

Each constant feature is treated as multiplying a constant of . The Hessian of the network at the optimum contains the norm of the products of all the features in the network with each other.

So, back to our second order approximation of the loss function. When we find the eigendecomposition of our Hessian matrix, that corresponds to shifting into a basis in parameter space where all off-diagonals of the Hessian are zero. So, the eigenvectors of the Hessian correspond to linear combinations of network features that are orthogonal (in terms of norm) to each other.

Remember, the bigger the eigenvalues, which are the norms of these feature combinations, the bigger the curvature of the loss function is in that direction of parameter space, and the narrower the basin will be.

Eigenvalues of zero mean the network actually had less independent, orthogonal features inside it than it had parameters, giving you directions that are perfectly flat.

So: the broadness of the basin is determined by the number and size of independent features in the network. At least within second order polynomial approximation range.

That sure sounds like it's pointing out the precise mathematical notion of network "complexity", "fine-tuning" or "generality" that's relevant for the basin broadness.

It also neatly quantifies and extends the formalism found by Vivek in this [LW · GW] post. If a set of features all depend on different independent parts of the input, they are going to have an orthogonal basis of size . If a set of features depends on the same parts of the input, they are a lot more likely to span a set of dimension less than in Hilbert space, meaning more zero eigenvalues. So, information loss about the input tends to lead to basin broadness.

Some potential implications

If we leave second order approximation range, features in different layers of the network no longer interact linearly, so you can't just straightforwardly treat them as one big set of Hilbert space basis vectors.

But features in the same layer can still be treated like this, and orthogonalised by taking the vector of neuron activations in the layer times its transpose, summed over the training dataset, to form a matrix of Hilbert norms like the one we got from the Hessian. Then, finding the eigenbasis of that matrix corresponds to finding a basis of linear combinations of features in the layer that are mutually orthogonal. Once the bases of two adjacent layers are found, the weight matrix connecting the two layers can also be transformed into the new basis.

This might give us a new perspective of the network. A basis in which each element in a layer might stand for one “independent” feature in that layer, instead of a neuron. Cancellations between the signals coming from different nodes could no longer occur. The connection weights might tell you exactly how much the network “cares” about the signals coming from each element.

This stands in contrast to the usual method (where we treat the neurons as the fundamental unit of the network, and use measures from network theory to try and measure clustering among neuron groups). As we've pointed out before, how big the weights between neurons are doesn't really indicate how much signal is flowing through their connection, making this a non-optimal strategy for interpreting your network.

This makes us suspect that the orthogonal feature basis of the layers might be close to the right framing to use, if we want to quantify how many "independent" calculations a neural network performs, and how it uses these calculations to make new calculations. In other words, this this formalisation might provide the basis for the fundamentally motivated modularity measure [? · GW] we're after.

How might we test this hypothesis?

In our previous post [LW · GW], we discussed the problem of polysemanticity: when a single neuron seems to represent multiple different concepts which aren't naturally bucketed for humans. This problem suggests that individual neurons might not be the best unit of analysis, and we could do better to use features as the basic unit with which to formalise general theories of neural networks. A natural question follows - if we try out graphing the "feature flows" in neural networks with this, do polysemantic neurons mostly disappear and every orthogonalised feature means one, understandable thing?

We plan to find out soon, by experimenting with this change of basis and related ones in small neural networks. If you're interested in helping out with these experiments, or you have any other suggestions for useful experiments to run to test out these ideas, please let us know by commenting below, or sending a private LW message.

- ^

Note that we've written this with a sum (and have continued this convention for the rest of the post), but the formalisation would work equally well with an integral. Additionally, for the rest of the post we're assuming is a scalar to make the math a little less complicated, but the formalism should hold for other output types too.

- ^

How this works out when you're not at a perfect optimum and this term stays around is something I'm unsure of, and its the reason we proposed experiment 8 in the experiment list on LW. [LW · GW]

- ^

is zero because we required a perfect loss score, and loss functions are required to be convex.

21 comments

Comments sorted by top scores.

comment by tailcalled · 2022-08-27T20:10:34.710Z · LW(p) · GW(p)

Hm, this makes me wonder:

So if I understand, if you take an eigenvector with a large eigenvalue in the Hessian, then that corresponds to a feature the network has learned is important for its loss. But more specifically, it corresponds to parameters of the network (i.e. an axis in networkspace) that measure features which correlate in the imagespace.

So the eigenvector doesn't give you the features directly in imagespace, it gives you the network parameters which "measure" the feature? I wonder if one could translate this to imagespace. Taking a stab at it, given an image , definitionally is the parameter axis that measures the feature, so changes in along should be proportional to the feature's presence in ? Ergo, should measure the extent to which exhibits the feature?

Not sure if this is useful, or relevant to your post. Maybe it's something I should experiment with.

Replies from: Lblack, tailcalled, tailcalled↑ comment by Lucius Bushnaq (Lblack) · 2022-08-31T12:59:11.048Z · LW(p) · GW(p)

So the eigenvector doesn't give you the features directly in imagespace, it gives you the network parameters which "measure" the feature?

Nope, you can straightforwardly read off the feature in imagespace, I think. Remember, the eigenvector doesn't just show you which parameters "form" the feature through linear combination, it also shows you exactly what that linear combination is. If your eigenvector is (2,0,-3), that means the feature in image space looks like taking the twice the activations of the node connected to , plus -3 times the activations of .

Ergo, should measure the extent to which exhibits the feature?

We're planning to test the connection between the orthogonal features and the actual training data through something similar to this actually, yes. See this comment [LW(p) · GW(p)] and the math by John it's replying to.

Replies from: tailcalled↑ comment by tailcalled · 2022-08-31T14:38:45.090Z · LW(p) · GW(p)

Hmm, I suppose in the single-linear-layer case, your way of transferring it to imagespace is equivalent to mine, whereas in the multi-nonlinear-layer case, I am not sure which generalization is the most appropriate.

Replies from: Lblack↑ comment by Lucius Bushnaq (Lblack) · 2022-08-31T15:24:18.579Z · LW(p) · GW(p)

Your way of doing it basically approximates the network to first order in the parameter changes/second order in the loss function. That's the same as the method I'm proposing above really, except you're changing the features to account for the chain rule acting on the layers in front of them. You're effectively transforming the network into an equivalent one that has a single linear layer, with the entries of as the features.

That's fine to do when you're near a global optimum, the case discussed in the main body of this post, and for tiny changes it'll hold even generally, but for a broader understanding of the dynamics layer by layer, I think insisting on the transformation to imagespace might not be so productive.

Note that imagespace/=thing that is interpretable. You can recognise a dog head detector fine just by looking at its activations, no need to transpose it into imagespace somehow.

↑ comment by tailcalled · 2022-08-27T20:20:28.592Z · LW(p) · GW(p)

(Wait, I say "imagespace" due to thinking too much about image classifiers as the canonical example of a neural network, but of course other inputs can be given to the NN too.)

↑ comment by tailcalled · 2022-08-27T20:15:52.399Z · LW(p) · GW(p)

(And it seems like further one could identify the feature in pixelspace by taking the gradient of with respect to the pixels? Might be useful for interpretability? Not sure.)

comment by Matthias G. Mayer (matthias-georg-mayer) · 2022-09-15T03:03:06.138Z · LW(p) · GW(p)

Two features are orthogonal if their norm is zero

Just as a side note about terminology: It is a bit imprecise that you use innerproduct and norm interchangeably.

Innerproduct is the function and the norm is

comment by carboniferous_umbraculum (Spencer Becker-Kahn) · 2022-08-29T12:36:34.321Z · LW(p) · GW(p)

I wrote out the Hessian computation in a comment to one of Vivek's posts [AF(p) · GW(p)]. I actually had a few concerns with his version and I could be wrong but I also think that there are some issues here. (My notation is slightly different because for me the sum over was included in the function I called "", but it doesn't affect my main point).

I think the most concrete thing is that the function - i.e. the `input-output' function of a neural network - should in general have a vector output, but you write things like

without any further explanation or indices. In your main computation it seems like it's being treated as a scalar.

Since we never change the labels or the dataset, on can drop the explicit dependence on from our notation for . Then if the network has neurons in the final layer, the codomain of the function is (unless I've misunderstood what you are doing?). So to my mind we have:

Going through the computation in full using the chain rule (and a local minimum of the loss function ) one would get something like:

Vivek wanted to suppose that were equal to the identity matrix, or a multiple thereof, which is the case for mean squared loss. But without such an assumption, I don't think that the term

appears (this is the matrix you describe as containing "the inner products of the features over the training data set.")

Another (probably more important but higher-level) issue is basically: What is your definition of 'feature'? I could say: Have you not essentially just defined `feature' to be something like `an entry of '? Is the example not too contrived in that sense it clearly supposes that has a very special form (in particular it is linear in the variables so that the derivatives are not functions of .)

↑ comment by Lucius Bushnaq (Lblack) · 2022-08-31T12:40:24.299Z · LW(p) · GW(p)

In your main computation it seems like it's being treated as a scalar.

It's an example computation for a network with scalar outputs, yes. The math should stay the same for multi-dimensional outputs though. You should just get higher dimensional tensors instead of matrices.

Vivek wanted to suppose that were equal to the identity matrix, or a multiple thereof, which is the case for mean squared loss.

In theory, a loss function that explicitly depends on network parameters would behave differently than is assumed in this derivation, yes. But that's not how standard loss functions usually work. If a loss function did have terms like that, you should indeed get out somewhat different results.

But that seems like a thing to deal with later to me, once we've worked out the behaviour for really simple cases more.

Another (probably more important but higher-level) issue is basically: What is your definition of 'feature'? I could say: Have you not essentially just defined `feature' to be something like `an entry of '? Is the example not too contrived in that sense it clearly supposes that has a very special form (in particular it is linear in the variables so that the derivatives are not functions of .)

A feature to me is the same kind of thing it is to e.g. Chris Olah. It's the function mapping network input to the activations of some neurons, or linear combination of neurons, in the network.

I'm not assuming that the function is linear in \Theta. If it was, this whole thing wouldn't just be an approximation within second order Taylor expansion distance, it'd hold everywhere.

In multi-layer networks, what the behavioural gradient is showing you is essentially what the network would look like if you approximated it for very small parameter changes, as one big linear layer. You're calculating how the effects of changes to weights in previous layers "propagate through" with the chain rule to change what the corresponding feature would "look like" if it was in the final layer.

Obviously, that can't be quite the right way to do things outside this narrow context of interpreting the meaning of the basin near optima. Which is why we're going to try out building orthogonal sets layer by layer instead.

To be clear, none of this is a derivation showing that the L2 norm perspective is the right thing to do in any capacity. It's just a suggestive hint that it might be. We've been searching for the right definition of "feature independence" or "non-redundancy of computations" in neural networks for a while now, to get an elementary unit of neural networks that we can build our definition of computational modularity, and ultimately a whole theory of Deep Learning and network selection, on top of.

This stuff seems like a concrete problem where the math is pointing towards a particular class of operationalisations of these concepts. So I think it makes sense to try out this angle and see what happens.

Replies from: Spencer Becker-Kahn↑ comment by carboniferous_umbraculum (Spencer Becker-Kahn) · 2022-08-31T16:35:13.093Z · LW(p) · GW(p)

It's an example computation for a network with scalar outputs, yes. The math should stay the same for multi-dimensional outputs though. You should just get higher dimensional tensors instead of matrices.

I'm sorry but the fact that it is scalar output isn't explained and a network with a single neuron in the final layer is not the norm. More importantly, I am trying to explain that I think the math does not stay the same in the case where the network output is a vector (which is the usual situation in deep learning) and the loss is some unspecified function. If the network has vector output, then right after where you say "The Hessian matrix for this network would be...", you don't get a factorization like that; you can't pull out the Hessian of the loss as a scalar, it instead acts in the way that I have written - like a bilinear form for the multiplication between the rows and columns of .

A feature to me is the same kind of thing it is to e.g. Chris Olah. It's the function mapping network input to the activations of some neurons, or linear combination of neurons, in the network.

I'm not assuming that the function is linear in \Theta. If it was, this whole thing wouldn't just be an approximation within second order Taylor expansion distance, it'd hold everywhere.

OK maybe I'll try to avoid a debate about exactly what 'feature' means or means to different people, but in the example, you are clearly using . This is a linear function of the variables. (I said "Is the example not too contrived....in particular it is linear in " - I'm not sure how we have misunderstood each other, perhaps you didn't realise I meant this example as opposed to the whole post in general). But what it means is that in the next line when you write down the derivative with respect to , it is an unusually clean expression because it now doesn't depend on So again, in the crucial equation right after you say "The Hessian matrix for this network would be...", you in general get variables appearing in the matrix. It is just not as clean as this expression suggests in general.

Replies from: Lblack↑ comment by Lucius Bushnaq (Lblack) · 2022-08-31T20:19:44.322Z · LW(p) · GW(p)

I'm sorry but the fact that it is scalar output isn't explained and a network with a single neuron in the final layer is not the norm.

Fair enough, should probably add a footnote.

More importantly, I am trying to explain that I think the math does not stay the same in the case where the network output is a vector (which is the usual situation in deep learning) and the loss is some unspecified function. If the network has vector output, then right after where you say "The Hessian matrix for this network would be...", you don't get a factorization like that; you can't pull out the Hessian of the loss as a scalar, it instead acts in the way that I have written - like a bilinear form for the multiplication between the rows and columns of .

Do any practically used loss functions actually have cross terms that lead to off-diagonals like that? Because so long as the matrix stays diagonal, you're effectively just adding extra norm to features in one part of the output over the others.

Which makes sense, if your loss function is paying more attention to one part of the output than others, then perturbations to the weights of features of that part are going to have an outsized effect.

But what it means is that in the next line when you write down the derivative with respect to , it is an unusually clean expression because it now doesn't depend on

The perturbative series evaluates the network at particular values of . If your network has many layers that slowly build up an approximation of the function cos(x), to use in the final layer, it will effectively enter the behavioural gradient as cos(x), even though its construction evolves many parameters in previous layers.

Replies from: Spencer Becker-Kahn↑ comment by carboniferous_umbraculum (Spencer Becker-Kahn) · 2022-09-02T00:20:58.652Z · LW(p) · GW(p)

You're right about the loss thing; it isn't as important as I first thought it might be.

comment by tailcalled · 2022-09-02T09:06:00.695Z · LW(p) · GW(p)

Hmm, my intuition/reasoning says that the first couple of Hessian eigenvectors would control 1. the intercept/average output/position of the borders between the classes, 2. the bias-variance tradeoff if you are doing regularization (as you point out is necessary), 3. maybe (am unsure) the relative evidentiary value of different correlated features. (Likely in this order of descending eigenvalues) Does this align with your intuition/experiments?

comment by Jon Garcia · 2022-08-28T09:28:34.039Z · LW(p) · GW(p)

Talking about broad basins in this community reminds me of the claims of corrigible AI: that if you could get an AGI that is at least a little bit willing to cooperate with humans in adjusting its own preferences to better align with ours, then it would fall into a broad basin of corrigibility and become more aligned with human values over time.

I realize than the basins you're talking about here are more related to perception than value alignment, but do you think your work here could apply? In other words, if broad basins of network performance/generalization can be found by increasing the number of disentangled features available to the network, then would adding more independent measures of value help an AI to find broader basins in value space that would be more likely to contain human-aligned regions?

Maybe humans are able to align with each other so well because we have so many dimensions of value, all competing with each other to drive our behavior. A human brain might have an entire ensemble of empathy mechanisms, each making predictions about some independent, low-dimensional projection of other humans' preferences, wiring up those predictions into competing goal-generating modules.

Any one estimate of value would be prone to Goodharting, but maybe adding enough dimensions could make that easier to avoid?

Replies from: Chris_Leong, Lblack↑ comment by Chris_Leong · 2022-08-28T13:43:27.746Z · LW(p) · GW(p)

Talking about broad basins in this community reminds me of the claims of corrigible AI: that if you could get an AGI that is at least a little bit willing to cooperate with humans in adjusting its own preferences to better align with ours, then it would fall into a broad basin of corrigibility and become more aligned with human values over time

I don't suppose you could link me to a post arguing for this.

Replies from: Jon Garcia↑ comment by Jon Garcia · 2022-08-28T14:25:13.783Z · LW(p) · GW(p)

I was thinking of paulfchristiano's articles on corrigibility (https://www.lesswrong.com/posts/fkLYhTQteAu5SinAc/corrigibility [LW · GW]):

In this post I claim:

- A benign act-based agent will be robustly corrigible if we want it to be.

- A sufficiently corrigible agent will tend to become more corrigible and benign over time. Corrigibility marks out a broad basin of attraction towards acceptable outcomes.

↑ comment by Lucius Bushnaq (Lblack) · 2022-08-31T12:43:29.088Z · LW(p) · GW(p)

I think we're far off from being able to make any concrete claims about selection dynamics with this, let alone selection dynamics about things as complex and currently ill-operationalised as "goals".

I'd hope to be able to model complicated things like this once Selection Theory is more advanced, but right now this is just attempting to find angles to build up the bare basics.

comment by Garrett Baker (D0TheMath) · 2022-08-28T21:57:58.414Z · LW(p) · GW(p)

This is very very cool! At the end you say that looking at the gradient of the layer outputs is a change of basis. I don't understand why this is the case. Each layer, taking (in a fully connected network) has on the order of parameters if it outputs a vector of length , so the gradient of that vector with respect to the parameters will have entries. What did you have in mind when you said "change of basis"?

Replies from: TheMcDouglas, Lblack↑ comment by CallumMcDougall (TheMcDouglas) · 2022-08-29T04:21:51.913Z · LW(p) · GW(p)

I think the key point here is that we're applying a linear transformation to move from neuron space into feature space. Sometimes neurons and features do coincide and you can actually attribute particular concepts to neurons, but unless the neurons are a privileged basis there's no reason to expect this in general. We're taking the definition of feature here as a linear combination of neurons which represents some particular important and meaningful (and hopefully human-comprehensible) concept.

↑ comment by Lucius Bushnaq (Lblack) · 2022-08-29T06:07:19.308Z · LW(p) · GW(p)

You take the gradient with respect to any preactivation of the next layer. Shouldn't matter which one. That gets you a length n vector. Since the weights are linear, and we treat biases as an extra node of constant activation, the vector does not depend on which preactivation you chose.

The idea is to move to a basis in which there is no redundancy or cancellation between nodes, in a sense. Every node encodes one unique feature that means one unique thing.