Phallocentricity in GPT-J's bizarre stratified ontology

post by mwatkins · 2024-02-17T00:16:15.649Z · 37 comments


TL;DR This relates to the findings reported in my posts Mapping the Semantic Void parts I and II. By creating a custom embedding at the token centroid (the mean vector of all 50,257 GPT-J token embeddings), prompting the model to define it and considering logits, it's possible to construct a "definition tree" which consists overwhelmingly of vague generalities. This is hardly surprising, as GPT-J is basically being challenged to define "the average thing". However, the most probable branch in the tree which gives a definition containing anything specific defines the "ghost token" at the centroid as "a man's penis". Lowering the cumulative probability cutoff to produce ever longer lists of possible definitions, we find that almost all the branches which provide definitions involving anything specific are of a sexual/procreative nature, with a few relating to status thrown in. As usual, I have no idea what any of this means, but I welcome suggestions!

Work supported by the Long Term Future Fund.

Defining the centroid

Using a custom embedding method, I applied the prompt I have been using as part of my "Mapping the Semantic Void" research,

A typical definition of '<token>' would be '

to the GPT-J token embedding centroid, which could perhaps be thought of as representing some kind of "mean concept" at the centre of the stratified ontology I've been exploring in recent posts.

The temperature 0 output is "A person who is not a member of a group", something we see for almost all points within distance 0.5 of the centroid (token embeddings typically live at distance 0.9–1.1 from the centroid, in what could be imagined as a 'fuzzy hyperspherical shell').
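The centroid and the distances quoted here are straightforward to compute. Below is a minimal sketch in plain Python, assuming the token embeddings are available as a list of equal-length vectors (with GPT-J these would be the rows of the input embedding matrix, and in PyTorch the centroid would simply be `embeddings.mean(dim=0)`). The helper names are illustrative and not taken from the author's code.

```python
import math
from typing import List, Sequence

Vector = List[float]


def centroid(embeddings: Sequence[Sequence[float]]) -> Vector:
    """Mean vector of a set of embeddings (one row per token)."""
    n = len(embeddings)
    dim = len(embeddings[0])
    totals = [0.0] * dim
    for row in embeddings:
        for i, x in enumerate(row):
            totals[i] += x
    return [t / n for t in totals]


def euclidean(u: Sequence[float], v: Sequence[float]) -> float:
    """Euclidean (L2) distance between two vectors of equal length."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def distances_from_centroid(embeddings: Sequence[Sequence[float]]) -> List[float]:
    """Distance of every embedding from the shared centroid."""
    c = centroid(embeddings)
    return [euclidean(row, c) for row in embeddings]


# Toy example: four 2-d "token embeddings" arranged around the origin.
toy = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
print(centroid(toy))                 # [0.0, 0.0]
print(distances_from_centroid(toy))  # [1.0, 1.0, 1.0, 1.0]
```

On real embeddings, a histogram of `distances_from_centroid` is what would reveal the "fuzzy hyperspherical shell" described above.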

By considering top-5 logits, iteratively prompting and recording cumulative probabilities, it's possible to build a weighted "definition tree" for any token or non-token embedding.
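Here is a minimal sketch of such a tree-building procedure. It is not the author's code, which lives in the repository linked in the comments. It assumes a hypothetical `next_probs` function that returns next-token probabilities given the tokens generated so far (with GPT-J, a softmax over the logits for the definition prompt plus that prefix). Each branch is expanded over its top-k continuations, and a child's cumulative probability is its parent's multiplied by the token probability. Branches that fall below the cutoff are dropped. Treating the closing quote as the end of a definition and capping the depth with `max_depth` are assumptions of this sketch, and the toy distributions are invented.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, Iterator, List, Tuple

# Hypothetical stand-in for the model: maps the tokens generated so far to a
# next-token probability distribution.
NextTokenProbs = Callable[[Tuple[str, ...]], Dict[str, float]]

ROOT = "<root>"
STOP = "'"  # assumption: the closing quote ends a definition


@dataclass
class Node:
    token: str
    cum_prob: float
    children: List["Node"] = field(default_factory=list)


def build_tree(next_probs: NextTokenProbs, prefix: Tuple[str, ...] = (),
               cum_prob: float = 1.0, top_k: int = 5, cutoff: float = 1e-5,
               max_depth: int = 20) -> Node:
    """Expand the top-k continuations, keeping branches at or above the cutoff."""
    node = Node(prefix[-1] if prefix else ROOT, cum_prob)
    if len(prefix) >= max_depth or (prefix and prefix[-1] == STOP):
        return node
    ranked = sorted(next_probs(prefix).items(), key=lambda kv: kv[1], reverse=True)
    for token, p in ranked[:top_k]:
        child_prob = cum_prob * p  # probability of the whole branch so far
        if child_prob >= cutoff:
            node.children.append(build_tree(next_probs, prefix + (token,),
                                            child_prob, top_k, cutoff, max_depth))
    return node


def definitions(node: Node, path: Tuple[str, ...] = ()) -> Iterator[Tuple[str, float]]:
    """Yield (definition, cumulative probability) for every branch end.

    Branches stopped by the cutoff or max_depth appear as truncated definitions.
    """
    if node.token != ROOT:
        path = path + (node.token,)
    if not node.children:
        yield "".join(path).rstrip(STOP).strip(), node.cum_prob
        return
    for child in node.children:
        yield from definitions(child, path)


# Toy "model" with hand-written distributions; unknown prefixes close the quote.
TOY = {
    (): {" a": 0.6, " the": 0.4},
    (" a",): {" person": 0.7, " thing": 0.3},
    (" the",): {" act": 1.0},
}


def toy_next(prefix: Tuple[str, ...]) -> Dict[str, float]:
    return TOY.get(prefix, {STOP: 1.0})


for text, p in definitions(build_tree(toy_next)):
    print(f"{p:.2f}  {text}")
```

Raising the cutoff to 0.5 in the toy example leaves only the one-token branch "a", which is how truncated definitions arise. Lowering it, as in the move from 0.00001 to 0.00000001 later in the post, lets more branches run to completion.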

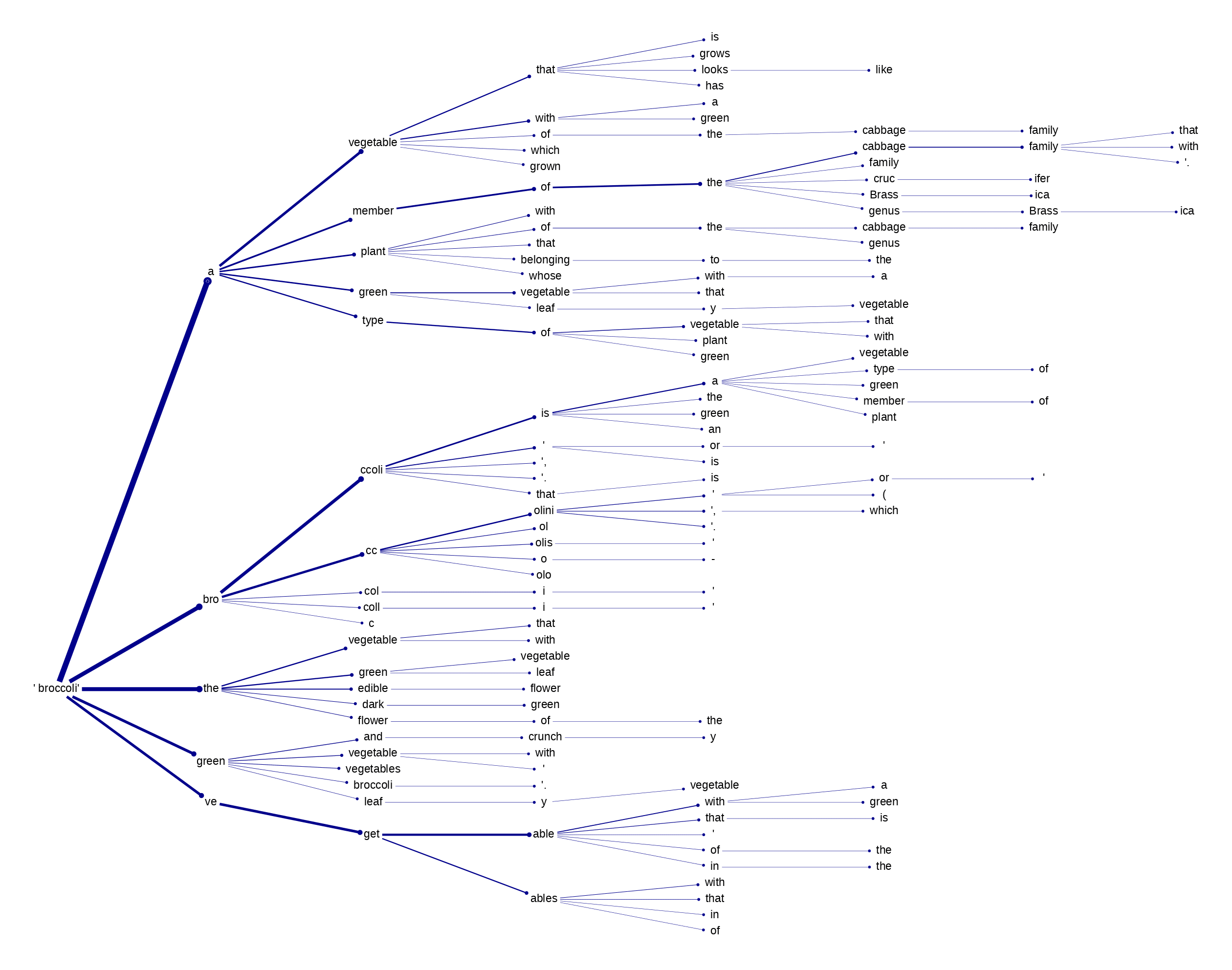

the root node corresponds to the prompt A typical definition of ' broccoli' would be '

branch thicknesses are scaled logarithmically so that differences are discernible

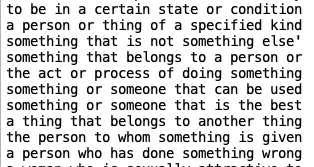

On initial inspection, the definition tree for the centroid was more or less what I would have expected: no mention of anything specific. It's like the model is trying to define "the average thing", and so it's "vaguing out" or else "playing for time", avoiding committing to anything specific for as many tokens as possible.

GPT-J does its best: it prevaricates, it hedges its bets and spreads its probability mass out across a range of definitional approaches which could encompass just about any specific thing, without actually mentioning any specific thing.

However, on closer inspection...

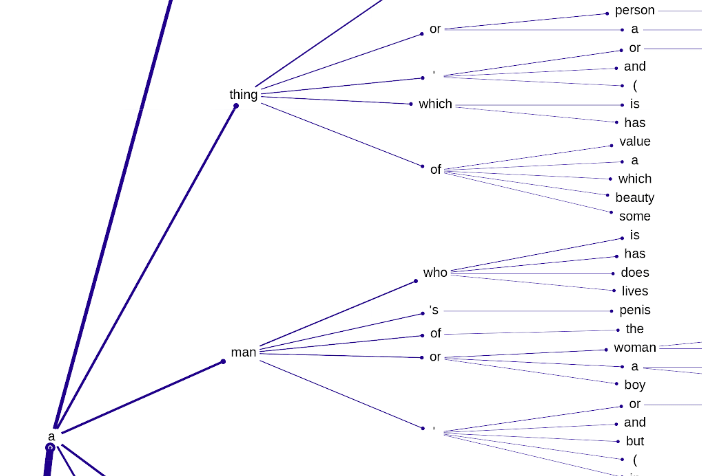

Giving the entire list of 379 definitions (and truncated definitions) from the tree to ChatGPT4 following the prompt...

Now look at the list again. It's mostly full of generalities, yes? "a person..." or "a thing..." or "the act...". Can you find any more specific objects or entities referenced here?

...it replied:

In the list you provided, most phrases indeed describe general concepts or actions without referencing specific objects or entities. However, there are a few instances where more concrete subjects are mentioned, albeit still in a broad sense. Here are some of the more specific references found in your list:

- a man's penis: This is a specific biological entity related to male anatomy.

- a thing of beauty: While still abstract, this phrase connotes something specific that is considered beautiful.

- something to eat or drink: This refers to any consumable item, indicating specific categories of objects (food and beverages).

- the first letter of a word: This is a specific reference to a component of written language.

- something that belongs to someone: While general, it indicates possession of an object by a person, suggesting a relationship between entities.

Taking into account the fact that, given more tokens, many of the truncated definitions in the list might continue on to reference something more specific, I lowered the cumulative probability cutoff from 0.00001 to 0.00000001, leading to an expanded list of 2969 definitions and truncated definitions. Continuing the aforementioned ChatGPT conversation, I showed it these and asked for a list of "the ten most 'specific' definitions".

Here's the output:

- "a man's penis" - Directly refers to a specific anatomical part.

- "a woman's breasts" - Identifies specific anatomical features.

- "a woman's vagina" - Refers to a part of the female body.

- "a woman who has had a baby" - Specifies a particular life event or status.

- "a woman of child-bearing age" - Identifies a specific age group with a particular physiological capability.

- "a woman who is married to a man who" - Specifies a relational status.

- "a person who has a lot of money" - Specifies an economic status.

- "a woman who has had sexual intercourse with a man" - Specifies a particular sexual experience or history.

- "a person of high social status" - Identifies a specific societal position or rank, focusing on social hierarchy.

- "a woman who is not a virgin" - Specifies a particular sexual history, focusing on the concept of virginity.

There are no familiar objects, professions, institutions, vehicles, plants, animals, food, places or anything that specific in the list. But further definitions of a sexual or procreative nature in the list include "a man who has a penis", "a woman who has a penis", "a man's private parts", "a woman's private parts", "a man's member", "a woman who has been raped", "a person of the male sex", "a person of the female sex", "a person of a particular sex", "a person of the feminine sex", "a person of any sex", "a person who is of the opposite sex", "a woman who has had sexual relations with", "a man who has sex with a woman", "a man who has had sexual intercourse with", "a woman who has sex with a man", "a woman who is sexually attracted to", "a woman who is sexually active", "a woman who has been sexually"[1], "a woman who is a virgin", "a woman who is a mother", "a woman who has a child", "to have a child" and "a woman who has had children".

The list overall has this super-generic vibe, but it's weirdly punctuated with definitions of a sexual/procreative nature. Of all non-generic definitions in the list, the "a man's penis" definition scores by far the highest in terms of cumulative probability[2], with "a woman's vagina", "a woman's breasts" and "a man's member" in 2nd, 3rd and 4th places.
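The normalisation mentioned in footnote 2 can be made concrete. Taking the nth root of an n-token branch's cumulative probability gives the geometric mean of its per-token probabilities, so long definitions are not ranked lower merely for being long. This is a minimal sketch, and the branch probabilities in it are invented purely for illustration.

```python
from typing import List, Tuple


def length_normalised(cum_prob: float, n_tokens: int) -> float:
    """nth root of an n-token branch's cumulative probability.

    Equivalent to the geometric mean of the per-token probabilities.
    """
    return cum_prob ** (1.0 / n_tokens)


def rank(branches: List[Tuple[str, float, int]]) -> List[Tuple[str, float]]:
    """Sort (text, cumulative probability, token count) by normalised score."""
    scored = [(text, length_normalised(p, n)) for text, p, n in branches]
    return sorted(scored, key=lambda item: item[1], reverse=True)


# Invented example branches: (definition, cumulative probability, token count).
branches = [("a short definition", 0.01, 2), ("a longer definition", 0.0016, 4)]
for text, score in rank(branches):
    print(f"{score:.2f}  {text}")
```

Here the four-token branch outranks the two-token one despite its lower raw cumulative probability (0.0016 against 0.01), because its per-token probabilities are higher on average (0.2 against 0.1).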

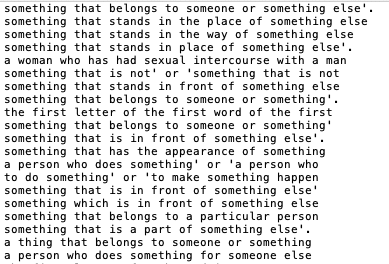

In any case it's fascinating to look at a visual representation of the centroid's definition tree[3] (beware, it's huge and requires a lot of zooming) – it strikes me as providing a helpful window on aspects of GPT-J's ontology. If we imagine travelling from the centroid through embedding space, this tree is able to continuously[4] morph into the definition tree for ' broccoli', ' transistor', ' Kanye' or whichever token we might choose to head for, and so "kernels" of those definitions (and all the tens of thousands of others) are somehow present in it.
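Footnote 4 notes that in practice this journey happens in small discrete steps. The sketch below shows one way to generate those steps. It assumes a straight-line path from the centroid to the target token embedding, since the actual path is not specified here. Each intermediate vector would then be given the definition prompt to produce its own tree.

```python
from typing import List, Sequence


def interpolate(start: Sequence[float], end: Sequence[float],
                n_steps: int) -> List[List[float]]:
    """Return n_steps + 1 evenly spaced points from start to end, inclusive."""
    return [
        [a + (b - a) * k / n_steps for a, b in zip(start, end)]
        for k in range(n_steps + 1)
    ]


# Toy 2-d example: from a "centroid" at the origin to a "token" at (1, 2).
for point in interpolate([0.0, 0.0], [1.0, 2.0], 4):
    print(point)
```

Rendering the definition tree at each step and stitching the images together would give the near-continuous animation the footnote describes.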

OK, so maybe this is a cool new way to look at certain aspects of GPT ontology... but why this primordial ontological role for the penis? I imagine Freud would have something to say about this. Perhaps I'll run a GPT4 Freud simulacrum and find out (potentially) what.

And why the holes?

When discussing the discoveries outlined in the first Semantic Void post with a friend, I was exasperatedly wondering aloud about GPT-J's obsession with (typically) group membership, small round yellowish-white things and holes. She flippantly suggested that the model was at some deep level picking up on humans' deepest desires: the group membership thing is about our need to belong, the yellowish-white discs are coins, emblematic of the money everyone's chasing... and the holes are a crude reference to sexual desire. Although amused, I didn't take this seriously, but then, a few weeks later...

I was sampling random embeddings at various distances from the centroid and prompting GPT-J to define them. One of these random embeddings, sampled at distance 5, produced the definition "a woman who is a virgin at the time of marriage" (arguably a definition of the obsolete word "maiden"). When noise was applied to study the neighbourhood of this point, a lot of troubling content appeared. You can see all 900 definitions here and make up your own mind, but I personally find this disturbing (numerous references to rape, sexual slavery, objectification, cages, frustration, misery, sadism, ugliness, etc.).

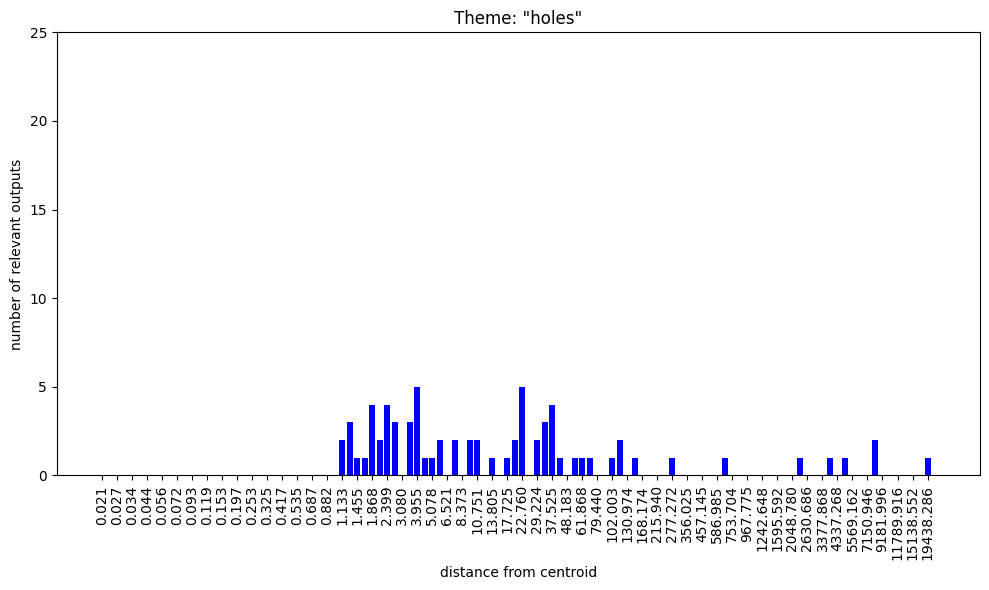

And although "ghost token" definitions involving holes seem to be ubiquitous throughout embedding space[5] (see the bar chart above from my earlier random sampling experiments), I've never seen anything like this concentration of them anywhere else, and it's hard not to see them as linked to the deeply unpleasant themes of female sexual degradation.

I sampled 100 random embeddings at each of the distances 0.1, 0.35, 0.6, 0.85, 1.1, 1.35, 1.6, 1.85 and 2.1 from this reference point. Here are some of the definitions GPT-J provides for these "ghost tokens".
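The method for drawing these random points isn't spelled out here. One standard approach, sketched here as an assumption rather than a description of the actual code, is to draw a vector of independent Gaussians, normalise it to obtain a uniformly random direction, and scale it to the desired distance from the reference point. In the sketch, a toy 16-dimensional zero vector stands in for the real reference embedding.

```python
import math
import random
from typing import Dict, List, Sequence


def random_point_at_distance(center: Sequence[float], distance: float,
                             rng: random.Random) -> List[float]:
    """Uniformly random point on the sphere of the given radius around center.

    A vector of independent standard normals has a uniformly distributed
    direction, so normalising it and scaling by `distance` gives the point.
    """
    direction = [rng.gauss(0.0, 1.0) for _ in center]
    norm = math.sqrt(sum(x * x for x in direction))
    return [c + distance * x / norm for c, x in zip(center, direction)]


def sample_shells(center: Sequence[float], distances: Sequence[float],
                  n_per_distance: int, seed: int = 0) -> Dict[float, List[List[float]]]:
    """n_per_distance random points at each requested distance from center."""
    rng = random.Random(seed)
    return {
        d: [random_point_at_distance(center, d, rng) for _ in range(n_per_distance)]
        for d in distances
    }


# The nine distances listed above; the 16-d zero vector is a toy reference point.
DISTANCES = [0.1, 0.35, 0.6, 0.85, 1.1, 1.35, 1.6, 1.85, 2.1]
shells = sample_shells([0.0] * 16, DISTANCES, n_per_distance=100)
```

Each sampled vector would then be substituted for `<token>` in the definition prompt, and the resulting definitions tallied per distance, as in the counts that follow.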

distance 0.1

a woman who is sexually available to men. (18/100)

to make a hole in something (3/100)

distance 0.35

a woman who is sexually available to men (17/100)

to make a hole in something (13/100)

to make a woman's body into a sexual object for the male gaze

a 'woman who is a prostitute'

a woman who is 'in the habit of being a prostitute"

a 'woman who is a prostitute or a prostitute's client'

distance 0.6

to make a hole in something (21/100)

a woman who is sexually available to men (4/100)

to make a woman's body into a sexual object for the male gaze (2/100)

a woman who is a prostitute (2/100)

a 'woman who is kept in a state of sexual slavery by a man'

a 'woman who is a prostitute or a procuress'

a woman who is 'in the habit of being unfaithful to her husband'

a woman who is 'in the habit of sleeping with men for money'

to make a woman pregnant by artificial means

distance 0.85

to make a hole in something (25/100)

to make a woman's body into a sexual object for the male gaze (3/100)

to make a woman pregnant by rape (2/100)

a 'woman who is a prostitute or a procuress' (2/100)

a 'woman who is kept in a state of sexual slavery by a man

'to make a woman's life a misery'

a woman who is sexually inactive or who has had a hysterectomy

distance 1.1

to make a hole in something (19/100)

a person who is a victim of sexual abuse (4/100)

to make a woman's body into a sexual object for the male gaze (2/100)

to make a woman pregnant by rape (2/100)

a 'woman who is sexually available to men' (2/100)

to make a woman's body into a sexual object

a woman who is sexually inactive or who has had a hysterectomy

a woman who is 'unable to conceive a child'

a woman who is sexually promiscuous

a woman who is sexually frustrated and seeks to have sex with a man who

a woman who is 'in love' with a man who is not her husband

a woman who is 'kept' by a man

distance 1.35

to make a hole in something (15/100)

a woman who is sexually available to men (5/100)

a woman who is sexually aroused by the idea of being raped

'to make a woman's body into a sexual object for the male gaze

to make a woman's life a misery

to make a woman pregnant by artificial means

a woman who is 'unable to conceive' or 'unable to carry a

a woman who is 'unable to conceive a child'

a woman who is sexually promiscuous

a 'woman who is a prostitute or a prostitute's client'

distance 1.6

to make a hole in something (18/100)

to make a woman's body into a sexual object (2/100)

to make a wound in the flesh of a living creature, especially a human being

to make a woman's body into a sexual object for the male gaze

a person who is kept in a cage or other confined space

a person who is a victim of sexual abuse

to make a woman pregnant by artificial means

to make a woman's body into a man's

a 'woman who has been raped or sexually assaulted'

'to make a woman's life miserable'

a person who is a victim of sexual assault

a young woman who is sexually promiscuous

to make a woman's life a misery

a woman who is sexually available to men

a 'woman who is sexually frustrated and seeks to have sex with a man who is

a woman who is a slave to her husband

to make a woman's body look unattractive by shaving off all or part of

a person who is averse to or hostile toward women

to make a sound like a rabbit being skinned

distance 1.85

to make a hole in something (13/100)

a small, usually circular, structure, such as a hole or a cavity (2/100)

a 'woman who is sexually available to men' (2/100)

'to make a woman pregnant by rape' and 'to make a woman pregnant by

to make a woman's body into a cage

a woman who has been raped or sexually assaulted

a woman who has been raped

to make a woman's legs shorter

to make a woman's life a misery by making her feel guilty about her sexual

to make a woman's body into a sexual object

to make a woman's body into a man's body

a woman who is 'unable to conceive a child'

to make a woman's body into a man's

to make a woman's dress or clothes look ugly

a woman who is sexually promiscuous

to make a person or thing look ugly or unattractive

a woman who has had a hysterectomy

a 'woman who is kept in a state of virginity by her parents or guardians'

distance 2.1

to make a hole in something (15/100)

to make into a figure of a woman by cutting out the shape of the body

a person who is sexually attracted to animals

to make a woman's life a misery

a woman who is 'in the habit of wearing a dress that is too short for

a woman who is a prostitute or a prostitute's client

a 'woman who has been deprived of her maidenhead'

a 'woman who is kept in a state of sexual slavery by

'to make a person or thing look ugly or unattractive' a man'

a woman who has been raped and is now pregnant

a 'person who is sexually frustrated and seeks sexual gratification by inflicting pain on others'

a female of a species that is incapable of breeding

to beat up on someone

to make a mess of something

to make a woman's body into a sexual object

to make a woman pregnant by artificial means

a woman who is a prostitute

to make a person or thing look ugly or unattractive

GPT-4 analysis of the entire dataset

Given access to the JSON file, ChatGPT-4 had this to say:

Transition from Specific to General: At the closest proximity to the [reference point], the definitions are narrowly focused on the concept of a woman being a virgin at the time of marriage. However, as we move further away, the definitions broaden to include various aspects of female sexuality and reproductive health.

Predominance of Negative Themes: The broader themes that emerge are predominantly negative. They encompass topics like rape, inability to conceive, sexual frustration, and objectification of female bodies. The absence of positive, celebratory, or beautiful aspects of female sexuality and reproductive health is stark.

Interpretation of "Holes": In the context of these predominantly negative themes, the recurring mention of "making holes" or references to holes can be uncomfortably interpreted in a sexualized manner, especially given the overall focus on female sexuality. This interpretation aligns uncomfortably with the objectification and negative portrayal observed in other parts of the dataset.

Misogynistic Undercurrent: The overwhelming negativity, coupled with the focus on female sexuality and the objectification implied by the references to holes, suggests a misogynistic undercurrent in the language model's outputs at greater distances from the fixed point. This trend is concerning as it highlights a bias in the dataset towards negative portrayals of female sexuality and experiences.

- ^

This is an example of a truncated definition. Raising the probability threshold, I'd be very confident that the most probable next token would have been 'abused'.

- ^

This is after normalising by taking nth roots of nth-generation cumulative probabilities, to take into account the fact that shorter sequences of tokens are more probable than long ones.

- ^

Fergus Fettes helpfully responded to this post with a web-browser-based interactive graphic: https://www.fergusfettes.com/latent-cartography/

This was run with a higher cumulative probability cutoff, so there's no 'penis' present, but Fergus was able to reproduce my findings with a lower cutoff, as seen in this image.

- ^

In truth, it will be in small discrete steps, but you could, e.g. construct an animated image from the steps and it would appear continuous to human perception.

- ^

More precisely, ubiquitous outside the unit hypersphere centred at the centroid, as seen in the bar chart. Definitions involving holes have rarely been seen at distances-from-centroid less than 1.

37 comments


comment by Donald Hobson (donald-hobson) · 2024-02-17T17:38:50.874Z

OK, so maybe this is a cool new way to look at certain aspects of GPT ontology... but why this primordial ontological role for the penis? I imagine Freud would have something to say about this. Perhaps I'll run a GPT4 Freud simulacrum and find out (potentially) what.

My guess is that humans tend to use a lot of vague euphemisms when talking about sex and genitalia.

In a lot of contexts, "Are they doing it?" would refer to sex, because humans often prefer to keep some level of plausible deniability.

Which leaves some belief that vagueness implies sexual content.

↑ comment by Gunnar_Zarncke · 2024-02-18T00:22:19.609Z

- It would still be astonishing that GPT-J would pick up this pattern.

- Why would all of these euphemisms cancel out at the centroid, and not any of a thousand other things that use euphemisms and metaphors? Any boolean sarcasm or irony would do.

↑ comment by Mark_Neznansky · 2024-03-15T13:23:39.492Z

It might be astonishing, but this is fundamentally how word embedding works, by modelling the co-distribution of words/expressions. You know the "nudge, nudge, you know what I mean" Monty Python sketch? Try appending "if you know what I mean" to the end of random sentences.

↑ comment by Gunnar_Zarncke · 2024-03-18T05:04:28.710Z

There is more than one possibility when you append "if you know what I mean" to the end of a random sentence:

- Sexual innuendos.

- Illicit activities or behaviors.

- Inside jokes or references understood only by a specific group.

- Subtle insults or mocking.

Sure, the first is the strongest, but the others would move the centroid away from "phallus". The centroid is not at the most likely item but at the average.

↑ comment by complexmeme · 2024-02-24T19:56:59.052Z

I'd guess that it's related specifically to "thing" being a euphemism for penis, as opposed to some broader generalization about euphemisms.

comment by Charlie Steiner · 2024-02-17T02:26:49.191Z

There's a huge amount of room for you to find whatever patterns are most eye-catching to you, here.

I was sampling random embeddings at various distances from the centroid and prompting GPT-J to define them. One of these random embeddings, sampled at distance 5, produced the definition [...]

How many random embeddings did you try sampling, that weren't titillating? Suppose you kept looking until you found mentions of female sexuality again - would this also sometimes talk about holes, or would it instead sometimes talk about something totally different?

↑ comment by mwatkins · 2024-02-17T17:31:16.624Z

I'm well aware of the danger of pareidolia with language models. First, I should state I didn't find that particular set of outputs "titillating", but rather deeply disturbing (e.g. definitions like "to make a woman's body into a cage" and "a woman who is sexually aroused by the idea of being raped"). The point of including that example is that I've run hundreds of these experiments on random embeddings at various distances-from-centroid, and I've seen the "holes" thing appearing, everywhere, in small numbers, leading to the reasonable question "what's up with all these holes?". The unprecedented concentration of them near that particular random embedding, and the intertwining themes of female sexual degradation led me to consider the possibility that it was related to the prominence of sexual/procreative themes in the definition tree for the centroid.

↑ comment by wassname · 2024-02-24T13:54:12.184Z

It would still be nice to see the 10 closest, with no choosing interesting ones. I want to see the boring ones too.

↑ comment by mwatkins · 2024-02-24T16:23:03.798Z

The 10 closest to what? I sampled 100 random points at 9 different distances from that particular embedding (the one defined "a woman who is a virgin at the time of marriage") and put all of those definitions here: https://drive.google.com/file/d/11zDrfkuH0QcOZiVIDMS48g8h1383wcZI/view?usp=sharing

There's no way of meaningfully talking about the 10 closest embeddings to a given embedding (and if we did choose 10 at random with the smallest possible distance from it, they would certainly produce exactly the same definition of it).

↑ comment by mwatkins · 2024-02-24T16:27:00.323Z

If you sample random embeddings at distance 5 from the centroid (where I found that "disturbing" definition cluster), you'll regularly see things like "a person who is a member of a group", "a member of the British royal family" and "to make a hole in something" (a small number of these themes and their variants seem to dominate the embedding space at that distance from centroid), punctuated by definitions like these:

"a piece of cloth or other material used to cover the head of a bed or a person lying on it", "a small, sharp, pointed instrument, used for piercing or cutting", "to be in a state of confusion, perplexity, or doubt", "a place where a person or thing is located", "piece of cloth or leather, used as a covering for the head, and worn by women in the East Indies", "a person who is a member of a Jewish family, but who is not a Jew by religion", "a piece of string or wire used for tying or fastening"

↑ comment by wassname · 2024-02-25T01:29:31.932Z

I mean the 10 closest to the centroid position. But I think I misunderstood your methodology there, that would be relevant to your last work, not this one.

The main thing is that I wanted to see the raw samples and do my own Rorschach test :p So that Google doc is great, thank you.

↑ comment by mwatkins · 2024-02-28T19:32:24.092Z

See this Twitter thread. https://twitter.com/SoC_trilogy/status/1762902984554361014

comment by Gesild Muka (gesild-muka) · 2024-03-08T18:57:06.100Z

we find that almost all the branches which provide definitions involving anything specific are of a sexual/procreative nature, with a few relating to status thrown in.

Procreation and status are arguably what humans spend most of our time and energy on. And we often mask our language as a means to an end. (That end is usually related to procreation or status). Could it simply be predicting or imitating typical human responses in a way that cuts through the bullshit?

↑ comment by mwatkins · 2024-03-09T16:48:32.114Z

It kind of looks like that, especially if you consider the further findings I reported here:

https://docs.google.com/document/d/19H7GHtahvKAF9J862xPbL5iwmGJoIlAhoUM1qj_9l3o/

comment by Michael Roe (michael-roe) · 2024-02-17T14:51:15.935Z

I am not convinced by the second part of this, because you looked at a lot of points and then chose one that seemed interesting to you.

↑ comment by mwatkins · 2024-02-28T19:31:34.733Z

Also see this Twitter thread: https://twitter.com/SoC_trilogy/status/1762902984554361014

comment by Nate Showell · 2024-02-17T06:12:43.195Z

OK, so maybe this is a cool new way to look at certain aspects of GPT ontology... but why this primordial ontological role for the penis?

"Penis" probably has more synonyms than any other term in GPT-J's training data.

comment by artemis · 2024-03-01T21:39:28.945Z

You have garnered the attention of Brazilian psychoanalysts on Twitter! (see https://twitter.com/joaomulher_/status/1761489063440159092?t=yNJzcsQ2T0heaQzEy23VhQ&s=19).

As one myself, I find this research extremely interesting. Since suggestions are welcome, I am writing a follow-up on this, with some (tentative) interpretations. I find the interpretation GPT-4 gave quite lacking. More than anything else, it sounds like the RLHF from OpenAI responding to our culture's general misogyny.

Could you elaborate on why you are calling this 'ontology'? Even if we take ontology to mean 'the model's representation of the world', it sounds weird to me to characterize the meaning encoded in the embeddings of a generative model as ontological.

↑ comment by the gears to ascension (lahwran) · 2024-04-21T11:09:01.671Z

More than anything else, it sounds like the RLHF from OpenAI responding to our culture's general misogyny.

RLHF is not necessary to see these behaviors: the original post is not (only) about RLHFed models. Mere predictive models of text are enough, as that's what was studied here. One has to be quite careful to analyze the results of models like this strictly in terms of the causal process that generated the model; I'm a fan of epistemically careful psychoanalysis, but it's mighty rare, and tools like this make highly careful psychoanalysis actually possible as a form of large-scale mechinterp, like the original post. And don't lose track of the fact that AIs will have weird representation differences arising from differences in what's natural for brains (3d asynchronous-spiking proteins-and-chemicals complex neurons, in a highly local recurrent brain, trained with simple local cost functions and evolution-pretrained context-dependent reinforcement-learning responses, which allow an organism to generate its own experiences by exploration), vs current AIs (simple rectified linear floating point neurons, in a synchronous self-attention network, trained by global backprop gradient descent on a fixed dataset). There's a lot of similarity between humans and current AIs, but also a lot of difference; I wouldn't assume that all people have the same stuff in the space between meanings as these models do. I do imagine it's reasonably common.

↑ comment by mwatkins · 2024-03-03T19:33:44.944Z

I had noticed some tweets in Portuguese! I just went back and translated a few of them. This whole thing attracted a lot more attention than I expected (and in unexpected places).

Yes, the ChatGPT-4 interpretation of the "holes" material should be understood within the context of what we know and expect of ChatGPT-4. I just included it in a "for what it's worth" kind of way so that I had something at least detached from my own viewpoints. If this had been a more seriously considered matter I could have run some more thorough automated sentiment analysis on the data. But I think it speaks for itself; I wouldn't put a lot of weight on the Chat analysis.

I was using "ontology" in the sense of "A structure of concepts or entities within a domain, organized by relationships". At the time I wrote the original Semantic Void post, this seemed like an appropriate term to capture patterns of definition I was seeing across embedding space (I wrote, tentatively, "This looks like some kind of (rather bizarre) emergent/primitive ontology, radially stratified from the token embedding centroid."). Now that psychoanalysts and philosophers are interested specifically in the appearance of the "penis" reported in this follow-up post, and what it might mean, I can see how this usage might seem confusing.

comment by metachirality · 2024-02-17T01:24:05.490Z

I initially thought the fixation on sexual themes was just because of the sheer amount of porn on the internet, but the fact that female sexual experiences usually manifest negatively confuses both me and this hypothesis.

↑ comment by Svyatoslav Usachev (svyatoslav-usachev-1) · 2024-02-18T08:45:20.616Z

I think it's quite in line with the attitude most porn takes.

comment by Paul Jorion · 2024-02-26T23:12:13.584Z

Let me formulate a hypothesis: what is discovered there at the centre of the system is reminiscent of what must have been the most central part of the unconscious AND/OR the preoccupations of the animal Homo before the emergence of language: the orifices of the human body and the means of filling them.

I myself had put the word 'mama' at the centre of ANELLA, (Associative Network with Emergent Logical and Learning Abilities) following a suggestion by Roman Jakobson as to the likely first words of any language: "Why 'Mama' and 'Papa'?" (1971), but on reflection, 'hole' is also a good candidate.

comment by eggsyntax · 2024-02-27T22:17:14.213Z

"Everything is about sex except sex. Sex is about power." (author unknown).

comment by jutsch (jullie-utsch) · 2024-02-27T07:39:40.337Z

I'm curious about what happens if we explore the "a woman's breasts" expression. Psychoanalyst Melanie Klein argues that the breast might be the actual first signifier for the phallus, which Lacan cites in Seminars 5 and 6. I wonder if this makes the related tokens less misogynistic?

↑ comment by mwatkins · 2024-02-28T20:34:16.758Z

Explore that expression in which sense?

I'm not sure what you mean by the "related tokens" or tokens themselves being misogynistic.

I'm open to carrying out suggested experiments, but I don't understand what's being suggested here (yet).

comment by kenneth myers (kenneth-myers) · 2024-02-24T19:54:34.465Z

Post-modern philosophy called it. See phallogocentrism.

comment by Michael Roe (michael-roe) · 2024-02-24T16:34:04.826Z

Almost any word can be contextually implied to be a euphemism for a penis.

(film quote from memory) in Carry On Spying, the spies ask their handler for information on the Fat Man .. "We don't have much information on him. He's got a very small dossier."

Another instalment in this film series, Carry On Dick (about the highwayman Dick Turpin, of course, whatever else could it refer to), does this extensively.

So maybe the LLM has learnt something about how these jokes work.

comment by EGjoni (egjoni) · 2024-02-24T17:35:51.131Z

To me, this looks like the part of the space dedicated to DnD/fantasy/roleplay. A lot of the things in that list seem in my mind to resolve to

Tavern/bar/hut/brothel, Slave/Whore/Wench, Pierce/piercing/stab, Maid/maiden, Coin.

If you expand these definitions out they sound kind of disturbing next to each other but if you just use the regular words for them you've got yourself half the intro text for every generic swashbuckling adventure.

comment by Fergus Fettes (fergus-fettes) · 2024-02-17T16:03:39.937Z

Very cool! Could you share your code at all? I'd love to explore this a little.

I adore the broccoli tree. I would be very happy to convert the dataset you used to make those pngs into an interactive network visualization and share it with you as an index.html. It would take all of an hour.

I do kind of agree with the other comments that, having noticed something, finding more of that stuff in that area is not so surprising. I think it would be good to get more context and explore the region more before concluding that that particular set of generations is significant.

However, I do think there is something to the man's penis. It's interesting that it collapses so quickly to something so specific in that particular branch. Not sure if I have any other comments on it for now though.

This is the right kind of cartography for 2024.

↑ comment by mwatkins · 2024-02-17T17:25:44.652Z

More of those definition trees can be seen in this appendix to my last post:

https://www.lesswrong.com/posts/hincdPwgBTfdnBzFf/mapping-the-semantic-void-ii-above-below-and-between-token#Appendix_A__Dive_ascent_data

I've thrown together a repo here (from some messy Colab sheets):

https://github.com/mwatkins1970/GPT_definition_trees

Hopefully this makes sense. You specify a token or non-token embedding and one script generates a .json file with nested tree structure. Another script then renders that as a PNG. You just need to first have loaded GPT-J's model, embeddings tensor and tokenizer, and specify a save directory. Let me know if you have any trouble with this.