The longest training run

post by Jsevillamol, Tamay, Owen D (Owen Dudney), anson.ho · 2022-08-17T17:18:40.387Z · 12 comments

This is a link post for https://epochai.org/blog/the-longest-training-run

Contents

- A simple framework for training run lengths
- Accounting for increasing dollar-budgets
- Accounting for increased algorithmic efficiency
- Accounting for hardware swapping
- Accounting for stochasticity
- Fixed deadlines
- Renting hardware
- Conclusion
- Acknowledgements

In short: Training runs of large Machine Learning systems are likely to last less than 14-15 months. This is because longer runs will be outcompeted by runs that start later and therefore use better hardware and better algorithms. [Edited 2022/09/22 to fix an error in the hardware improvements + rising investments calculation]

| Scenario | Longest training run |
|---|---|
| Hardware improvements | 3.55 years |
| Hardware improvements + Software improvements | 1.22 years |
| Hardware improvements + Rising investments | 9.12 months |
| Hardware improvements + Rising investments + Software improvements | 6.45 months |

Larger compute budgets and a better understanding of how to use compute effectively (for example, through scaling laws) are two major drivers of recent progress in Machine Learning.

There are many ways to increase your effective compute budget: better hardware, rising investments in AI R&D and improvements in algorithmic efficiency. In this article we investigate one often-overlooked but plausibly important factor: how long—in terms of wall-clock time—you are willing to train your model for.

Here we explore a simple mathematical framework for estimating the optimal duration of a training run. A researcher is tasked with training a model by some deadline, and must decide when to start their training run. The researcher is faced with a key problem: by delaying the training run, they can access better hardware, but by starting the training run sooner, they can train the model for longer.

Using estimates of the relevant parameters, we calculate the optimal training duration. We then explore six additional considerations, related to 1) how dollar-budgets for compute rise over time, 2) the rate at which algorithmic efficiency improves, 3) whether developers can upgrade their hardware during a run, 4) what would happen in a more realistic framework with stochastic growth, 5) whether it matters for the framework that labs are not explicitly optimizing for a fixed deadline and 6) what would happen if they rent instead of buy hardware.

Our conclusion depends on whether the researcher is able to upgrade their hardware stack while training. If they aren't able to upgrade hardware, optimal training runs will likely last less than 6.5 months. If the researcher can upgrade their hardware stack during training, optimal training runs will last less than 1.2 years.

These numbers are likely to be overestimates, since 1) we use a conservative estimate of software progress, 2) real-world uncertainty pushes developers towards shorter training runs and 3) renting hardware creates an incentive to wait for longer and parallelize the run close to the deadline.


A simple framework for training run lengths

Consider a researcher who wants to train a model by some deadline $T$. The researcher is deciding when to start the training run in order to maximize the amount of compute per dollar.

The researcher is faced with a key trade-off. On one hand, they want to delay the run to access improved hardware (and/or other things like larger dollar-budgets and better algorithms). On the other hand, a delay reduces the wall-clock time that the model is trained for.

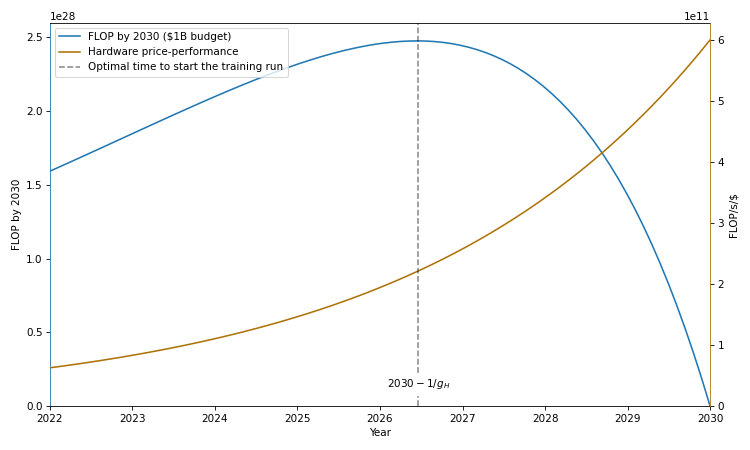

Suppose that hardware price-performance is increasing as follows:

$$H(t) = H_0 e^{g_H t}$$

where $H_0$ is the initial FLOPS per dollar and $g_H$ is the rate of yearly improvement.[1] If we start a training run at time $S$, the cumulative FLOP per dollar at time $T$ will be equal to:

$$C(S, T) = H(S)\,(T - S) = H_0 e^{g_H S}\,(T - S)$$

where $H(S)$ is the price-performance of the available hardware when we start our run (in FLOP per dollar per unit time), and $T - S$ is the amount of time since we started our run. Given a fixed dollar-budget, when should we buy our hardware and start a training run to achieve the most FLOP per dollar by a deadline $T$?

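To figure that out, we need to find the most efficient time $S^*$ to start a run that concludes by time $T$. We can find it by differentiating $C$ with respect to $S$ and setting the result equal to zero:

$$\frac{\partial C}{\partial S} = H_0 e^{g_H S}\left[g_H (T - S) - 1\right] = 0 \quad\Longrightarrow\quad T - S^* = \frac{1}{g_H}$$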

The optimal training run therefore has length $L^* = T - S^* = 1/g_H$. In previous work we estimated the rate of improvement of GPU cost-effectiveness at $g_H \approx 0.28$ per year (Hobbhahn and Besiroglu, 2022) [2]. This leads to an optimal training run of length $L^* \approx 3.55$ years.

The intuition is as follows: if you want to train a model by a deadline $T$, then, on the one hand, you want to wait as long as possible to get access to high price-performance hardware. On the other hand, by waiting, you reduce the total time available for your training run. The optimal training duration is the duration that strikes the right balance between these trade-offs.
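The optimization can be checked numerically. Below is a minimal Python sketch that assumes the smooth exponential model $H(t) = H_0 e^{g_H t}$ with $H_0 = 1$ and an arbitrary 10-year deadline. The helper names (`growth_rate_from_doubling`, `cumulative_flop_per_dollar`) are hypothetical and chosen for illustration. The sketch converts the 2.46-year doubling time from footnote [2] into $g_H$, brute-forces the start time $S$ on a fine grid, and compares the resulting run length with the closed form $1/g_H$.

```python
import math


def growth_rate_from_doubling(doubling_time_years: float) -> float:
    """Continuous yearly growth rate g such that exp(g * doubling_time) = 2."""
    return math.log(2) / doubling_time_years


def cumulative_flop_per_dollar(start: float, deadline: float, g_h: float, h0: float = 1.0) -> float:
    """C(S, T) = H0 * exp(g_H * S) * (T - S): buy hardware at S, train until T."""
    return h0 * math.exp(g_h * start) * (deadline - start)


g_h = growth_rate_from_doubling(2.46)  # hardware doubling time from footnote [2]
analytic_length = 1 / g_h              # closed-form optimum L* = 1 / g_H

# Brute-force check: scan start times S on a fine grid in [0, T] and keep the best.
deadline = 10.0  # arbitrary illustrative deadline, in years
starts = [i * deadline / 100_000 for i in range(100_001)]
best_start = max(starts, key=lambda s: cumulative_flop_per_dollar(s, deadline, g_h))
numeric_length = deadline - best_start

print(f"g_H = {g_h:.4f}/yr, closed form L* = {analytic_length:.2f} yr, grid search L* = {numeric_length:.2f} yr")
```

Both methods give a length of about 3.55 years. The result does not depend on the chosen deadline, provided the deadline is further away than $1/g_H$. Moving $T$ shifts where the optimal start time falls, but not the distance from the start to the deadline.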

This calculation rests on some assumptions:

- We are ignoring that willingness to invest in ML rises over time, so a researcher might be able to secure a larger budget if they wait

- We are ignoring that improvements in software and better understanding of scaling laws might enable researchers to deploy compute more effectively in the future

- We are assuming that practitioners will not upgrade their hardware in the middle of a run

- We are assuming that the involved quantities will improve at a predictable, deterministic rate

- We are assuming that developers optimize for a fixed deadline

- We are assuming that developers are buying their own hardware

Let's relax each of these assumptions in turn and see where they take us.

Accounting for increasing dollar-budgets

In reality, the total amount of compute invested in ML training runs has grown faster than GPU price-performance. Companies have been increasing their dollar-budgets for training ML models; hence, researchers might want to delay training ML models to access larger dollar-budgets.

Our previous work found a rate of growth of compute invested in training runs equal to $g \approx 1.32$ per year [3]. This rate of growth can be decomposed as $g = g_H + g_I$, the sum of hardware efficiency growth $g_H$ and the growth in investment $g_I$ [4], which gives $g_I \approx 1.32 - 0.28 = 1.04$.

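Following the same reasoning as above, a dollar-budget $B(S) = B_0 e^{g_I S}$ turns the objective into $C(S, T) = B_0 H_0 e^{(g_H + g_I) S}(T - S)$. The optimal training run length is therefore $L^* = 1/(g_H + g_I) = 1/g \approx 0.76$ years, i.e. about 9.1 months.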

This is much shorter than the ~3.55-year training duration we saw previously. Researchers want to wait for both better hardware and larger dollar-budgets. Dollar-budgets have been growing almost four times as quickly as hardware price-performance has been improving (roughly 1.04 versus 0.28 per year). As a result, researchers who take growing dollar-budgets into account will train their models for less than a quarter of the wall-clock time.
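The arithmetic for this case fits in a short Python sketch. It assumes, as the text does, that the 6.3-month doubling of training compute splits additively into hardware and investment growth rates, $g = g_H + g_I$. The helper name is hypothetical.

```python
import math


def growth_rate_from_doubling(doubling_time_years: float) -> float:
    """Continuous yearly growth rate g such that exp(g * doubling_time) = 2."""
    return math.log(2) / doubling_time_years


g_h = growth_rate_from_doubling(2.46)     # hardware price-performance, footnote [2]
g = growth_rate_from_doubling(6.3 / 12)   # compute in large training runs, footnote [3]
g_i = g - g_h                             # implied growth in dollar-budgets

# B(S) * H(S) grows at rate g_H + g_I, so the first-order condition gives L* = 1 / (g_H + g_I).
length_years = 1 / (g_h + g_i)
print(f"g = {g:.2f}/yr, g_I = {g_i:.2f}/yr, L* = {length_years:.2f} yr ({12 * length_years:.2f} months)")
```

Without intermediate rounding, this gives roughly 9.09 months. The 9.12 months in the summary table corresponds to rounding $L^*$ to 0.76 years before converting to months.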

Accounting for increased algorithmic efficiency

In 2020, Kaplan et al.'s paper on scaling laws for neural language models provided practitioners with a recipe for training models in a way that leverages compute effectively. Two years later, Hoffmann et al. upended the situation by releasing an updated take on scaling laws that helped spend compute even more efficiently.

Our understanding of how to effectively train models seems to be rapidly evolving. Hence, practitioners today might be dissuaded from planning a multiyear training run because advances in the field might render their efforts obsolete.

One way we can study this phenomenon is by understanding how much less compute we need today to achieve the same results as a decade ago. While partially outdated in the light of new developments, Hernandez and Brown's measurement of algorithmic efficiency remains the best work in the area.

They find a 44x improvement in algorithmic efficiency over 7 years, which translates to a rate of growth of $g_S = \ln(44)/7 \approx 0.54$ per year.

Combining this with the rate of improvement of hardware leads to a combined rate of growth of $g_H + g_S \approx 0.28 + 0.54 = 0.82$. This translates to an optimal training run length of $1/0.82 \approx 1.22$ years.

We could also combine this with the rate of growth of investments. In that case we would end up with a total rate of growth of effective compute equal to $g_H + g_I + g_S \approx 0.28 + 1.04 + 0.54 = 1.86$. This results in an optimal training run length of $1/1.86 \approx 0.54$ years, i.e. about 6.45 months.
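The four no-swapping scenarios in the summary table can be reproduced in a few lines. This Python sketch works under the article's deterministic assumptions. The investment rate is derived as $g_I = g - g_H$ from footnotes [2] and [3], and the algorithmic rate $g_S = \ln(44)/7$ comes from Hernandez and Brown. The dictionary keys are only labels.

```python
import math

LN2 = math.log(2)
g_h = LN2 / 2.46              # hardware price-performance, per year (footnote [2])
g_i = LN2 / (6.3 / 12) - g_h  # growth in dollar-budgets, per year (footnote [3] minus g_H)
g_s = math.log(44) / 7        # algorithmic efficiency: 44x over 7 years

# Without hardware swapping, each scenario's optimum is L* = 1 / (sum of the rates included).
scenarios = {
    "Hardware": g_h,
    "Hardware + Software": g_h + g_s,
    "Hardware + Investments": g_h + g_i,
    "Hardware + Investments + Software": g_h + g_i + g_s,
}

for name, rate in scenarios.items():
    years = 1 / rate
    print(f"{name:34s} rate = {rate:.2f}/yr  L* = {years:.2f} yr ({12 * years:.2f} months)")
```

The script prints roughly 3.55 years, 1.22 years, 0.76 years (about 9.1 months) and 0.54 years (about 6.45 months). Because each rate enters additively, the effect of any other factor that grows exponentially can be explored by adding its rate to the sum.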

Accounting for hardware swapping

Throughout this analysis we have assumed that ML practitioners commit to a fixed hardware infrastructure. However, in principle one could stop the current run, save the current state of the weights and the optimizer, and resume the run on a new hardware setup.

Hypothetically, a researcher could upgrade their hardware as time goes on. In practice, if our budget is fixed, this is a moot consideration. We want to spend our money at the point where we can buy the most compute per dollar before a deadline, and spending it earlier or later yields lower returns per dollar overall.

Our budget does not need to be fixed, however. As investments rise, we could use the incoming money to buy new, better hardware and grow our hardware stock.

Suppose that the amount of money available by each point in time grows as $B(t) = B_0 e^{g_I t}$. We can spend money at any time to buy state-of-the-art hardware, whose cost-efficiency $H(t) = H_0 e^{g_H t}$ has been improving all along at rate $g_H$.

There are many possible ways to spend the budget over time. However, the optimal solution will be to spend all available budget at the point that maximizes the product between hardware cost-efficiency and time remaining, and then spend any incoming money afterwards as soon as possible to get higher returns.

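Formally, the cumulative amount of FLOP that a run started at point $S$ can muster by time $T$ is equal to:

$$C(S, T) = B(S)\,H(S)\,(T - S) + \int_S^T \dot{B}(t)\,H(t)\,(T - t)\,dt$$

The first term is the budget available at $S$, spent upfront. The integral covers each later inflow of money $\dot{B}(t)$, spent immediately on the hardware available at time $t$.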

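Differentiating with respect to $S$ as before, the $\dot{B}(S)\,H(S)\,(T - S)$ terms from the upfront purchase and from the lower limit of the integral cancel, which gives us the optimal training run length:

$$\frac{\partial C}{\partial S} = B(S)\,H(S)\left[g_H (T - S) - 1\right] = 0 \quad\Longrightarrow\quad L^* = T - S^* = \frac{1}{g_H}$$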

The answer is the same as in the case where our budget is fixed, there are no rising investments and swapping hardware is not allowed. In other words, the influence of the rising budget disappears: the optimal length of the training run now depends only on the rate of hardware improvement.

This is simply because there is no additional incentive to wait for larger dollar-budgets; researchers reap the benefits of growing hardware-budgets by default. Hence, the optimal duration of a training run is the same as that found when only considering hardware price-performance improvements.
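The claim that rising budgets drop out once hardware swapping is allowed can be checked numerically. Below is a minimal Python sketch with hypothetical function names. It evaluates $C(S, T)$ for the strategy described above: spend everything upfront, then spend each inflow immediately. The integral uses the midpoint rule, and the start time is found by grid search. The growth rates follow footnotes [2] and [3]. The 10-year deadline, $B_0 = H_0 = 1$ and the step sizes are arbitrary choices for illustration.

```python
import math

LN2 = math.log(2)
G_H = LN2 / 2.46               # hardware price-performance growth, per year
G_I = LN2 / (6.3 / 12) - G_H   # growth in dollar-budgets, per year


def total_flop(start, deadline, g_h, g_i, swap=True, b0=1.0, h0=1.0, steps=400):
    """Cumulative FLOP by `deadline` for a run that starts at `start`.

    The budget accumulated so far, B(S) = b0 * exp(g_i * S), is spent at S on
    hardware with price-performance H(S) = h0 * exp(g_h * S). If `swap` is True,
    every later inflow dB/dt is spent immediately on then-current hardware and
    runs until the deadline (integral evaluated with the midpoint rule).
    """
    length = deadline - start
    upfront = b0 * h0 * math.exp((g_i + g_h) * start) * length
    if not swap:
        return upfront
    dt = length / steps
    later = 0.0
    for k in range(steps):
        t = start + (k + 0.5) * dt
        # dB/dt * H(t) = b0 * g_i * h0 * exp((g_i + g_h) * t)
        later += b0 * g_i * h0 * math.exp((g_i + g_h) * t) * (deadline - t) * dt
    return upfront + later


def best_length(g_h, g_i, swap=True, deadline=10.0, grid_step=0.01):
    """Grid-search the start time S in [0, deadline) and return T - S*."""
    n = round(deadline / grid_step)
    starts = [i * grid_step for i in range(n)]
    best_start = max(starts, key=lambda s: total_flop(s, deadline, g_h, g_i, swap))
    return deadline - best_start


swap_rising = best_length(G_H, G_I)
swap_fixed = best_length(G_H, 0.0)
no_swap_rising = best_length(G_H, G_I, swap=False)
print(f"swap, rising budget:    {swap_rising:.2f} yr (1/g_H = {1 / G_H:.2f})")
print(f"swap, fixed budget:     {swap_fixed:.2f} yr")
print(f"no swap, rising budget: {no_swap_rising:.2f} yr (1/(g_H+g_I) = {1 / (G_H + G_I):.2f})")
```

With swapping, the best run length comes out near $1/g_H \approx 3.55$ years whether the budget grows or stays fixed. Dropping the inflow term (no swapping) brings it back to about $1/(g_H + g_I) \approx 0.76$ years. The 0.01-year grid step limits precision to roughly that resolution.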

Accounting for stochasticity

In our framework we have assumed a simple deterministic setup where hardware efficiency, investments and algorithmic efficiency rise smoothly and predictably.

In reality, progress is more stochastic. New hardware might overshoot (or undershoot) expectations. Market fluctuations and the interest in your research area may affect the dollar-budget you can muster for training at any given point.

Developing a framework that incorporates stochasticity is beyond the scope of this article. However, it may be useful to consider an idea from portfolio theory: when you're not sure what will happen in the future, you don't want to lock up capital in long-term projects. This pushes training runs towards being shorter—and means that the numbers we are estimating in this article are likely on the higher side.

Fixed deadlines

One possible objection to our framework is that it assumes developers are trying to hit a fixed deadline. In reality, researchers are often happy to wait longer for results.

Nonetheless, we believe this framework is still useful. We conceptualize AI research as many labs beginning their training runs at different times.

In any given quarter, the lab that releases the most compute-intensive model will be the one whose training run came closest to the optimal length.

Even if labs are not optimizing for explicit deadlines or planning training lengths, the most compute-efficient among them will still roughly obey these rules. Labs that train for shorter and longer times than the optimum will be outclassed.

Assuming that the most compute-intensive models will also be the most impressive, this model provides a good upper bound on the training lengths of impressive models.
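A rough sense of how strongly off-optimum labs are outclassed comes from the hardware-only model. A run of length $L$ that ends at the deadline gets compute proportional to $L e^{-g_H L}$. Relative to the optimal run, its compute is therefore $g_H L\, e^{1 - g_H L}$. The Python sketch below tabulates this ratio for a few run lengths. The function name is hypothetical, and the sketch assumes labs with equal budgets that all release at the same time under the smooth exponential model used throughout.

```python
import math


def relative_compute(length_years: float, g: float) -> float:
    """Compute of a run of the given length relative to the optimal run of length 1/g.

    With deadline T and start S = T - L, C is proportional to L * exp(-g * L),
    so C(L) / C(1/g) = g * L * exp(1 - g * L).
    """
    x = g * length_years
    return x * math.exp(1 - x)


g_h = math.log(2) / 2.46  # hardware-only scenario (footnote [2])
for length in (1.0, 2.0, 3.55, 5.0, 7.0):
    share = relative_compute(length, g_h)
    print(f"{length:4.2f}-year run: {share:.0%} of the optimal run's compute")
```

Under these assumptions, a lab that trains for 2 or 7 years ends up with roughly 87% or 75% of the compute of a lab that trains for about 3.55 years. The same formula applies to the other no-swapping scenarios, with $g_H$ replaced by the combined rate.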

Renting hardware

Throughout this discussion we have assumed that labs purchase rather than rent hardware for training. This is the case for some of the top labs that usually train the largest models, such as Google and Meta. However, many others instead rent hardware from cloud computing platforms such as Amazon AWS, Lambda Labs or Google Cloud.

If hardware is rented and the training run requires only a small fraction of the available capacity, we expect our model not to apply. Hardware prices decrease over time and training runs are largely parallelizable. Labs that rent hardware therefore have a strong incentive to wait as long as possible, then train their model very briefly on a much larger number of GPUs close to their deadline (relative to the number that is optimal when hardware is purchased).

We think this is an important case to consider, as renting hardware is likely much more common in machine learning than using purchased hardware. However, we are mostly interested in the decision problems associated with training the largest models at any point in time, so we have not studied the case of renting hardware in much depth.

Conclusion

We have analyzed how continuously improving hardware, bigger budgets and rising algorithmic efficiency limit the usefulness of a longer training run.

Researchers are faced with a trade-off when deciding when to start a training run that ends at some time $T$. On one hand, they want to delay the start of this run to get access to improved hardware and/or additional factors like larger dollar-budgets and better algorithms. On the other hand, a delay reduces the time that the hardware can be deployed for. Since we have some sense of the rate at which these factors change over time, we can infer the optimal duration of ML training runs.

We find that optimally balancing these trade-offs implies that the resulting training runs should last somewhere between roughly 6.5 months and 3.6 years.

Allowing for swapping hardware removes the effect of rising budgets (since we can spend incoming money without stopping the run). This increases the optimal training run length to between 1.2 and 3.6 years.

We expect these numbers to be overestimates, since improvements are stochastic, uncertainty will push developers to avoid over-investing in single training runs, and renting hardware incentivizes developers to wait longer before starting their training run.

Furthermore, large-scale runs can be technically difficult to implement. Hardware breaks and needs to be replaced. Errors and bugs force one to discard partially completed training runs. All these factors shorten the optimal training run [5].

The biggest uncertainty in our model is the rate at which algorithmic efficiency improves. We have used an estimate from Hernandez and Brown (2020) to derive the result. This paper predates the conversation about scaling laws and uses data from computer vision rather than language models. Our sense is that (some types of) algorithmic improvements have proven to be faster than estimated in that paper, and this could further shorten the optimal training run.

In any case, we can conclude that at the current rate of hardware improvement we probably will not see runs of notable ML models over 4 years long, at least when researchers are optimizing compute per dollar.

| Scenario | Longest training run |
|---|---|
| Hardware improvements | 3.55 years |
| Hardware improvements + Software improvements | 1.22 years |
| Hardware improvements + Rising investments | 9.12 months |
| Hardware improvements + Rising investments + Software improvements | 6.45 months |

Acknowledgements

We thank Sam Ringer, Tom Davidson, Ben Cottier, Ege Erdil and Lennart Heim for discussion.

Thanks to Eduardo Roldan for preparing the graph in the post.

[1] We assume that hardware price-performance increases smoothly over time, rather than with discontinuous jumps corresponding to the release of new GPU designs or lithography techniques. We expect that under a more realistic step-function process, the key conclusions of our framework would still roughly hold (modulo optimal training durations occasionally changing by a few months to accommodate discrete generations of hardware).

[2] They find a doubling time for hardware efficiency of 2.46 years. This corresponds to a yearly growth rate of $g_H = \ln 2 / 2.46 \approx 0.28$.

[3] We found a 6.3-month doubling time for compute invested in large training runs. This is a yearly growth rate of $g = \ln 2 / (6.3/12) \approx 1.32$.

[4] In theory, we should also account for the rise in training lengths. In practice, when we looked at a few data points, training lengths appeared to be increasing linearly over time, so we believe the effect is quite small.

[5] Meta's OPT logbook illustrates this well: they report being unable to continuously train their models for more than 1-2 days on a cluster of 128 nodes due to the many failures requiring manual detection and remediation.

12 comments


comment by johnswentworth · 2022-08-17T19:03:54.544Z

Name suggestion: "The Craig Venter Principle". Back in '98, the Human Genome Project was scheduled to finish sequencing the first full human genome in another 5 years (having started in 1990). Venter started a company to do it in two years with more modern tech (specifically shotgun sequencing). That basically forced the HGP to also switch to shotgun sequencing in order to avoid public embarrassment, and the two projects finished a first draft sequence at basically the same time.

comment by NunoSempere (Radamantis) · 2022-08-17T22:48:29.859Z

Why publish this publicly? Seems like it would improve optimality of training runs?

↑ comment by Tamay · 2022-08-18T01:17:23.976Z

Good question. Some thoughts on why we did this:

- Our results suggest we won't be caught off-guard by highly capable models that were trained for years in secret, which seems strategically relevant for those concerned with risks

- We looked into whether there was any 'alpha' in these results by investigating the training durations of ML training runs, and found that models are typically trained for durations that aren't far off from what our analysis suggests might be optimal (see a snapshot of the data here)

- It independently seems highly likely that large training runs would already be optimized in this dimension, which further suggests that this has little to no action-relevance for advancing the frontier

↑ comment by Yitz (yitz) · 2022-08-18T02:51:49.078Z

Thanks for thinking it over, and I agree with your assessment that this is better public knowledge than private :)

↑ comment by NunoSempere (Radamantis) · 2022-08-18T08:46:18.113Z

Thanks Tamay!

comment by Jonathan_Graehl · 2022-08-17T17:45:35.509Z

This is good thinking. Breaking out of your framework: trainings are routinely checkpointed periodically to disk (in case of crash) and can be resumed - even across algorithmic improvements in the learning method. So some trainings will effectively be maintained through upgrades. I'd say trainings are short mostly because we haven't converged on the best model architectures and because of publication incentives. IMO benefitting from previous trainings of an evolving architecture will feature in published work over the next decade.

↑ comment by jacob_cannell · 2022-08-19T19:27:10.883Z

Was going to make nearly the same comment, so I'll just add to yours: an existing training run can benefit from hardware/software upgrades nearly as much as new training runs. Big changes to hardware & software are slow relative to these timescales. (Nvidia releases new GPU architectures on a two-year cadence, but they are mostly incremental).

New training runs benefit most from major architectural changes and especially training/data/curriculum changes.

comment by Douglas_Knight · 2022-08-19T19:12:33.728Z

Are you assuming that electricity is free? My understanding is that the cost of silicon is small compared to the cost of electricity, if you run the chip all the time, as in this article. For example, this gpu costs $60 and consumes 300 watts = 2700 kwh/year = $270/year, at $.10/kwh. This one costs 10x and consumes 3x, so its price is not negligible, but still less than a year of operation. Plus I think the data center rule of thumb is that you should multiply electricity by 2 to account for cooling costs.

This will have a very large effect on the total compute bought, numbers which only appear in the graph. The headline numbers (the optimal times) depend mainly on the exponential form of the improvement in efficiency. If the time for the cost of silicon to be cut in half is the same as the time for the amount of electricity needed to be cut in half (Moore's law vs Koomey's law), then you should get roughly the same answer. Koomey's law used to be faster, but after the breakdown in Dennard scaling, it seems to be slower.

If you want a GPU-specific version of Koomey's law, I don't know. Does that data set of GPUs have watt ratings?

comment by joshc (joshua-clymer) · 2022-08-28T02:33:04.460Z

This is because longer runs will be outcompeted by runs that start later and therefore use better hardware and better algorithms.

Wouldn't companies port their partially-trained models to new hardware? I guess the assumption here is that when more compute is available, actors will want to train larger models. I don't think this is obviously true because:

1. Data may be the bigger bottleneck. There was some discussion of this here. Making models larger doesn't help very much after a certain point compared with training them with more data.

2. If training runs are happening over months, there will be strong incentives to make use of previously trained models -- especially in a world where people are racing to build AGI. This could look like anything from slapping on more layers to developing algorithms that expand the model in all relevant dimensions as it is being trained. Here's a paper about progressive learning for vision transformers. I didn't find anything for NLP, but I also haven't looked very hard.

↑ comment by gwern · 2022-08-30T00:19:06.977Z

Not necessarily larger, but different. Presumably new hardware will have different performance characteristics than the old hardware (otherwise what's the point?); it seems unlikely that future GPUs will simply be exactly like the old GPU but using half the electricity, say. (Even in that scenario, since electricity is such a major cost, why wouldn't you then add more GPUs to your cluster to use up the new headroom?)

When we look at past changes like V100 to A100, or A100 to H100, they typically change the performance profile quite a bit: VRAM doubles or more, high-precision ops increase much less than low-precision, new numerical formats get native speed support, new specialized hardware like 'tensor cores' get added encouraging sparsity or reduced-precision, interconnects speed up (but never enough)... All of these are going to change your ideal width vs depth scaling ratios, Transformer head sizes or MoE expert sizes (trying to keep on-GPU) or the size of your model components in general, your other hyperparameters like total batch size, and so on.

Changes like precision can require architecture-level changes like more aggressive normalization or regularization (maybe your model will Just Work when you switch to mixed-precision for the performance boost - or maybe it will keep exploding until you throw in more layer normalization to keep all the numbers small), or may just not work at all at present.

You may be able to checkpoint your model and restart if a node crashes or if a minibatch diverges, but that's no panacea, DL is non-convex and different runs will end up in different places, and the seeds of decay & self-sabotage may be planted too deep in a model to be fixed: in the BigGAN paper, mooch tried extensively rolling back BigGANs that diverged, but even resetting back thousands of iterations didn't halt eventual divergence (we verified this the hard way, as hope is a cruel mistress); in the PaLM work, they found some minibatches just spike the loss, and it's not due to the individual datapoints (they had bit-for-bit reproducibility - very impressive! - and could swap out the data to check), it just sorta happens. (Why? Dunno.) There is also the learning that happens during the course of training: most recently, people were very amused/depressed to read through the Facebook OPT training logs about all the bugs, similar stories are told by anyone working on GPT-J or GPT-Neo-20b or HyperCLOVA, Anthropic and OA likewise; one particularly dramatic example I like is OA's "Rerun" DoTA2 OA5 agent - they were editing the arch & hyperparameters (in addition to keeping up with game patches) the entire time, and so at the end, they 'reran' the training process from scratch rather than upgrading progressively the same agent:

Rerun required only 20% of the training for a 98% win-rate against the final version of OpenAI Five...The ideal option would be to run Rerun-like training from the very start, but this is impossible---the OpenAI Five curve represents lessons learned that led to the final codebase, environment, etc., without which it would not be possible to train Rerun.

Quite a difference.

So, yes, you can 'just copy over' your big old model onto your shiny new cluster, but you are going to pay a price. The size of this price compared to starting from scratch will depend on how extensive the hardware+software changes are, how much coevolution is going on, how path-dependent large models turn out to be (very unclear because few people train more than one), etc, but the price will be nonzero. Your utilization will be lower because now you underuse each node's VRAM, or you could have packed in larger model-shards into each node, or you need to economize more on inter-node bandwidth, and perhaps this is a small price worth paying; or perhaps your upgrade of the optimizer mid-way permanently hobbled convergence in a way you haven't noticed such that no amount of training will help you match the from-scratch version, and every FLOP is wasted as you will never achieve the target goals.

How bad will the price be? I guess that will depend on how much software and hardware innovation, and what sort, you expect. If you are looking forward to things like binary-weight nets (which are ultra-fast because they are now just bit operations like xor or popcount), you should expect to have to throw away all your prior models, they really do not like that sort of major change, whatever sort of approach makes them work is not going to play nice with Ye Olde FP32 GPT-3 models, and even when you can convert successfully, you probably can't train them much more. Trying to save compute by transferring old models is then just throwing good compute after bad. Whereas if you are looking forward to innovations focusing on datasets and expect GPUs to remain pretty much as they are now with lots of FP16 multiplication (but nothing crazy like ternary weights or pervasive sparsity or wacky approaches like HyperNEAT-style evolved topologies or Cerebras chips or spiking neural-network hardware), then probably you can plan to just continually train and upgrade a single Chinchilla model indefinitely, and the savings from better hyperparameter tuning etc will be unimportant constant-factors like a third, let's say, which is not enough to justify retraining from scratch until you have some better reason to do so like a new arch.

Since the critical decision is to throw out the old model/arch/run, a big enough change on either hardware or software can trigger a new-run decision, in which case you then pick up the gains from the other one as well. (That is, if some new hardware comes out and your old model is not well-suited to it, then when you start a fresh model, you'll probably also roll in all the software improvements which have happened since eons ago, a year or two or so.) So there's something of a double overhang: regular progress on both streams will lead to smoother capability gains as people regularly start new models and eat up the gains on both, but if one stagnates, then that will tend to lock-in that generation of models and one will want to delay a new model as long as possible, until the marginal return from software+hardware upgrades is so large it can pay for the fully-loaded training cost in one fell swoop. The average trend might be identical, as everyone continues to optimize on the margin, but the latter scenario seems like it would be much more jagged.

(A concrete example of this might be that Stable Diffusion is having such a moment right now in part because it benefits from high-end consumer GPUs, and those GPUs very abruptly became available recently at much closer to MSRP than they have been in years, so people who have been running old image generation models on old GPUs like 1080tis are suddenly running Stable Diffusion on 3090s. I'm sure the FID/IS improvement curves aggregated across research papers are as exactly as smooth as AI Impacts or Paul Christiano would assert they are, but from the perspective of, say, artists suddenly being smacked across the face with SD images everywhere almost literally overnight when the SD model leaked a week or two ago, it sure doesn't feel smooth.)

comment by Maxime Riché (maxime-riche) · 2024-04-26T12:38:01.970Z

We could also combine this with the rate of growth of investments. In that case we would end up with a total rate of growth of effective compute equal to $g_H + g_I + g_S \approx 0.28 + 3.84 + 0.54 = 4.66$. This results in an optimal training run length of $1/4.66 \approx 0.21$ years, ie 2.52 months.

Why is g_I here 3.84, while above it is 1.03?

↑ comment by Maxime Riché (maxime-riche) · 2024-04-26T12:39:16.155Z

This is actually corrected on the Epoch website but not here (https://epochai.org/blog/the-longest-training-run)