Should there be just one western AGI project?

post by rosehadshar, Tom Davidson (tom-davidson-1) · 2024-12-03

This is a link post for https://www.forethought.org/research/should-there-be-just-one-western-agi-project

Contents

- What are the strategic implications of having one instead of several projects?

- Summary table

- Race dynamics

- Racing between western projects

- Racing between the US and China

- Why do race dynamics matter?

- Power concentration

- Why does power concentration matter?

- Infosecurity

- Why does infosecurity matter?

- What is the best path forwards, given that strategic landscape?

- Our overall take

- Our current best guess

- Overall conclusion

- Appendix: Why we don’t think centralisation is inevitable

Tom Davidson did the original thinking; Rose Hadshar helped with later thinking, structure and writing.

Some plans for AI governance involve centralising western AGI development.[1] Would this actually be a good idea? We don’t think this question has been analysed in enough detail, given how important it is. In this post, we’re going to:

1. Explore the strategic implications of having one project instead of several

2. Discuss what we think the best path forwards is, given that strategic landscape

(If at this point you’re thinking ‘this is all irrelevant, because centralisation is inevitable’, we disagree! We suggest you read the appendix, and then consider if you want to read the rest of the post.)

On the second point, we’re going to present:

- Our overall take: It’s very unclear whether centralising would be good or bad.

- Our current best guess: Centralisation is probably net bad, because of risks from power concentration (but this is very uncertain).

Overall, we think the best path forward is to increase the chances we get to good versions of either a single or multiple projects, rather than to increase the chances we get a centralised project (which could be good or bad). We’re excited about work on:

- Interventions which are robustly good whether there are one or multiple AGI projects.

- Governance structures which avoid the biggest downsides of single and/or multiple project scenarios.

What are the strategic implications of having one instead of several projects?

What should we expect to vary with the number of western AGI development projects?

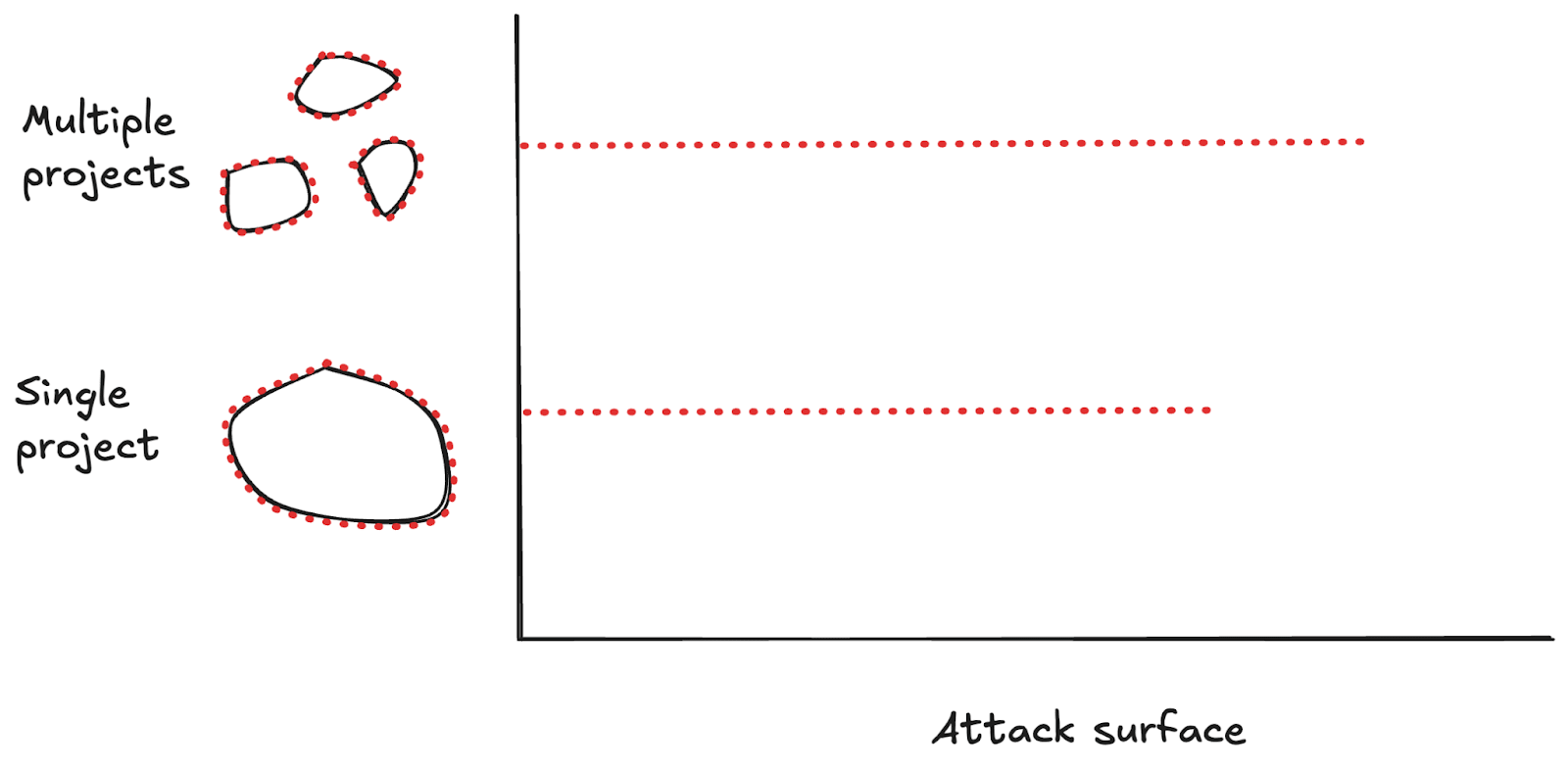

At a very abstract level, if we start out with some blobs, and then mush them into one blob, there are a few obvious things that change:

- There are fewer blobs in total. So interaction dynamics will change. In the AGI case, the important interaction dynamics to consider are:

- Race dynamics, and

- Power concentration.

- There’s less surface area. So it might be easier to protect our big blob. In the AGI case, we can think about this in terms of infosecurity.

Summary table

| Variable | Implications of one project | Uncertainties[2] |
| --- | --- | --- |
| Race dynamics | Less racing between western projects: no competing projects. Unclear implications for racing with China: the US might speed up or slow down; China might speed up too. | Do ‘races to the top’ on safety outweigh races to the bottom? How effectively can government regulation reduce racing between multiple western projects? Will the speedup from compute amalgamation outweigh other slowdowns for the US? How much will China speed up in response to US centralisation? How much stronger will infosecurity be for a centralised project? |
| Power concentration | Greater concentration of power: no other western AGI projects; less access to advanced AI for the rest of the world; greater integration with USG. | How effectively can a single project make use of market mechanisms and of checks and balances? How much will power concentrate anyway with multiple projects? |
| Infosecurity | Unclear implications for infosecurity: more resources, but USG provision or R&D breakthroughs could mitigate this for multiple projects; might provoke larger, earlier attacks. | How much bigger will a single project be? How strong can infosecurity be for multiple projects? Will a single project provoke more serious attacks? |

Race dynamics

One thing that changes if western AGI development gets centralised is that there are fewer competing AGI projects.

When there are multiple AGI projects, there are incentives to move fast to develop capabilities before your competitors do. These incentives could be strong enough to cause projects to neglect other features we care about, like safety.

What would happen to these race dynamics if the number of western AGI projects were reduced to one?

Racing between western projects

At first blush, it seems like there would be much less incentive to race between western projects if there were only one project, as there would be no competition to race against.

This effect might not be as big as it initially seems though:

- Racing between teams. There could still be some racing between teams within a single project.

- Regulation to reduce racing. Government regulation could temper racing between multiple western projects. So there are ways to reduce racing between western projects, besides centralising.

Also, competition can incentivise races to the top as well as races to the bottom. Competition could create incentives to:

- Scrutinise competitors’ systems.

- Publish technical AI safety work, to look more responsible than competitors.

- Develop safer systems, to the extent that consumers desire this and can tell the difference.

It’s not clear how races to the top and races to the bottom will net out for AGI, but the possibility of races to the top is a reason to think that racing between multiple western AGI projects wouldn’t be as negative as you’d otherwise think.

Having one project would mean less racing between western projects, but maybe not a lot less (as the counterfactual might be well-regulated projects with races to the top on safety).

Racing between the US and China

How would racing between the US and China change if the US only had one AGI project?

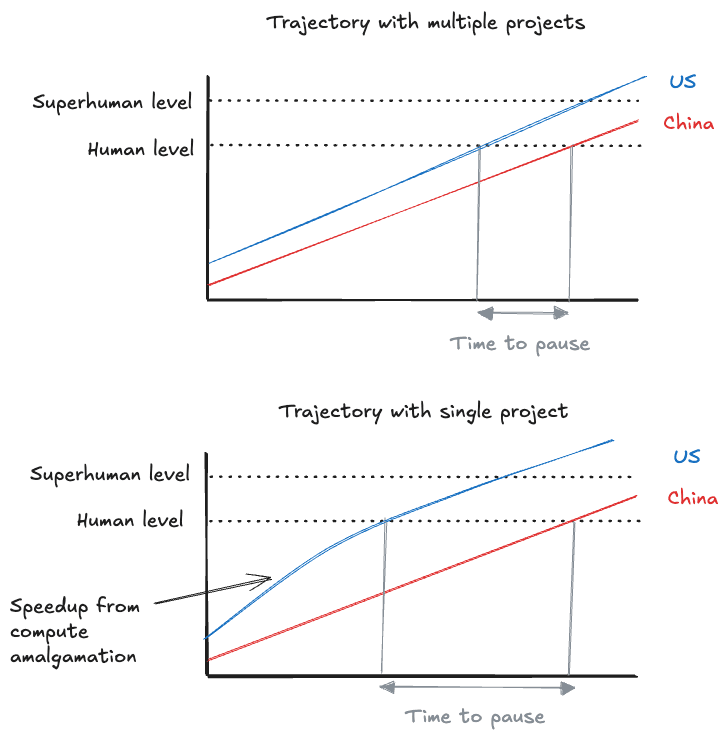

The main lever that could change the amount of racing is the size of the lead between the US and China: the bigger the US’s lead, the less incentive there is for the US to race (and the smaller the lead, the more there’s an incentive).[3]

Somewhat paradoxically, this means that speeding up US AGI development could reduce racing, as the US has a larger lead and so can afford to go more slowly later.

Speeding up US AGI development gives the US a bigger lead, which means they have more time to pause later and can afford to race less.

At first blush, it seems like centralising US AGI development would reduce racing with China, because amalgamating all western compute would speed up AGI development.

However, there are other effects which could counteract this, and it’s not obvious how they net out:

- China might speed up too. Centralising western AGI development might prompt China to do the same. So the lead might remain the same (or even get smaller, if you expect China to be faster and more efficient at centralising AGI development).

- The US might slow down for other reasons. It’s not clear how the speedup from compute amalgamation nets out with other factors which might slow the US down:

- Bureaucracy. A centralised project would probably be more bureaucratic.

- Reduced innovation. Reducing the number of projects could reduce innovation.

- Chinese attempts to slow down US AGI development. Centralising US AGI development might provoke Chinese attempts to slow the US down (for example, by blockading Taiwan).

- Centralising might make the US less likely to pause at the crucial time. If part of the reason for centralising is to develop AGI before China, it might become politically harder for the US to slow down at the crucial time even if the lead is bigger than counterfactually (because there’s a stronger narrative about racing to beat China).

- Infosecurity. Centralising western AGI development would probably make it harder for China to steal model weights, but it might also prompt China to try harder to do so. (We discuss infosecurity in more detail below.)

So it’s not clear whether having one project would increase or decrease racing between the US and China.

Why do race dynamics matter?

Racing could make it harder for AGI projects to:

- Invest in AI safety in general

- Slow down or pause at the crucial time between human-level and superintelligent AI, when AI first poses an x-risk and when AI safety and governance work is particularly valuable

This would increase AI takeover risk, risks from proliferation, and the risk of coups (as mitigating all of these risks takes time and investment).

It might also matter who wins the race, for instance if you think that some projects are more likely than others to:

- Invest in safety (reducing AI takeover risk)

- Invest in infosecurity (reducing risks from proliferation)

- Avoid robust totalitarianism

- Lead to really good futures

Many people think that this means it’s important for the US to develop AGI before China. (This is about who wins the race, not strictly about how much racing there is. But these things are related: the more likely the US is to win a race, the less intensely the US needs to race.[4])

Power concentration

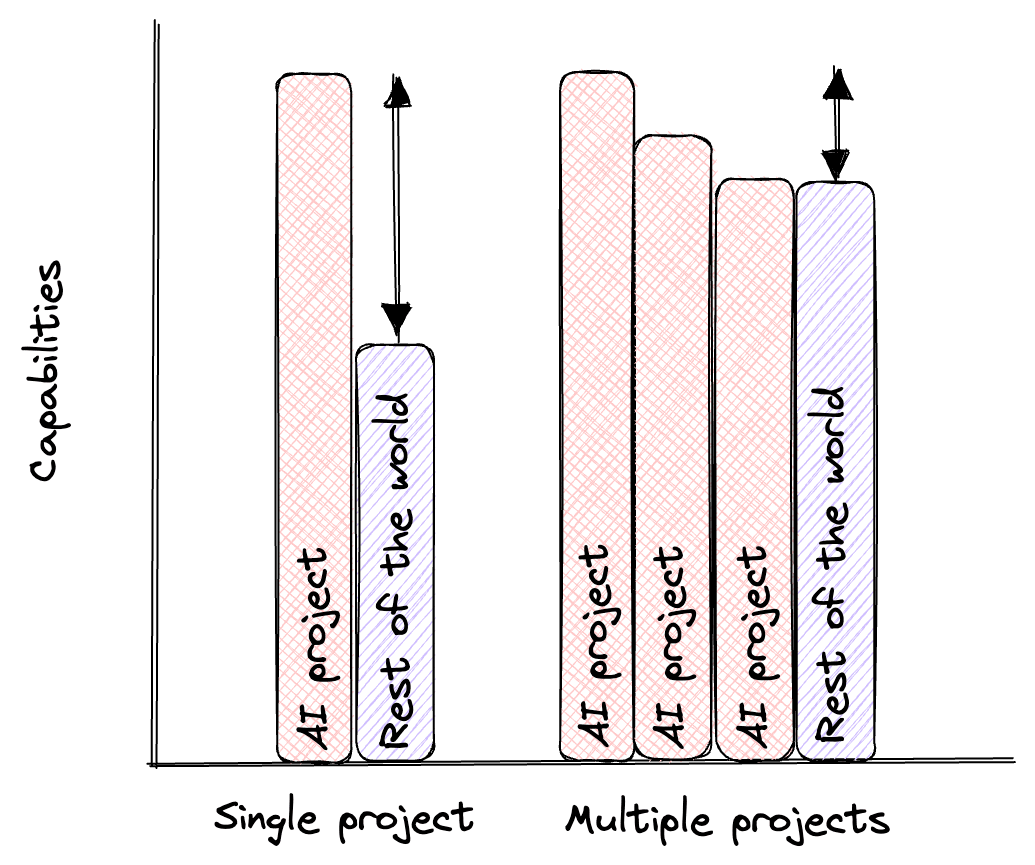

If western AGI development gets centralised, power would concentrate: the single project would have a lot more power than any individual project in a multiple project scenario.

There are a few different mechanisms by which centralising would concentrate power:

- Removing competing AGI projects. This has two different effects:

- The single project amasses more resources.

- Some of the constraints on the single project are removed:

- There are fewer actors with the technical expertise and incentives to expose malpractice.

- It removes incentives to compete on safety and on guarantees that AI systems are aligned with the interests of broader society (rather than biased towards promoting the interests of their developers).

- Reducing access to advanced AI services. Competition significantly helps people get access to advanced tech: it incentivises selling better products sooner to more people for less money. A single project wouldn’t naturally have this (very strong) incentive. So we should expect less access to advanced AI services than if there are multiple projects.

- Reducing access to these services will significantly disempower the rest of the world: we’re not talking about whether people will have access to the best chatbots or not, but whether they’ll have access to extremely powerful future capabilities which enable them to shape and improve their lives on a scale that humans haven’t previously been able to.

If multiple projects compete to sell AI services to the rest of the world, the rest of the world will be more empowered.

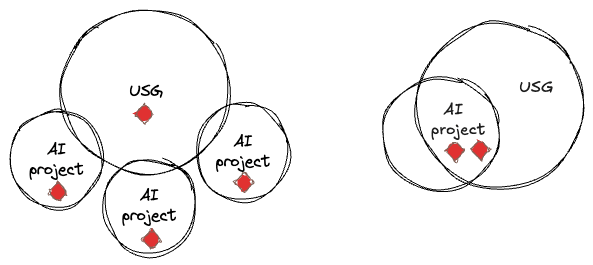

- Increasing integration with USG. We expect that AGI projects will work closely with the USG, however many projects there are. But there’s a finite amount of USG bandwidth: there’s only one President, for example. So the fewer projects there are, the more integration we should expect. This further concentrates power:

- The AGI project gets access to more non-AI resources.

- The USG and the AGI project become less independent.

With multiple projects there would be more independent centres of power (red diamonds).

How much more concentrated would power be if western AGI development were centralised?

Partly, this depends on how concentrated power would become in a multiple project scenario: if power would concentrate significantly anyway, then the additional concentration from centralisation would be less significant. (This is related to how inevitable a single project is; see the appendix.)

And partly this depends on how easy it is to reduce power concentration by designing a single project well.[5] A single project could be designed with:

- Market mechanisms, which would increase access to advanced AI services.

- For example, many companies could be allowed to fine-tune the project’s models, and compete to sell API access.

- Checks and balances to

- Limit integration with the USG.

- Increase broad transparency into its AI capabilities and risk analyses.

- Limit the formal rights and authorities of the project.

- For example, restricting the project’s rights to make money, or to take actions like paying for ads or investing in media companies or political lobbying.

But these mechanisms would be less robust than having multiple projects at reducing power concentration: any market mechanisms and checks and balances would be a matter of policy, not competitive survival, so they would be easier to go back on.

Having one project might massively increase power concentration, but also might just increase it a bit (if it’s possible to have a well-designed centralised project with market mechanisms and checks and balances).

Why does power concentration matter?

Power concentration could:

- Reduce pluralism. Power concentration means that fewer actors are empowered (with AI capabilities and resources). The many would have less influence and less chance to flourish. This is unfair, and it probably makes it less likely that humanity reflects collectively and explores many different kinds of future.

- Increase coup risk and the chance of a permanent dictatorship. At an extreme, power concentration could allow a small group to seize permanent control.

- Power concentration increases the risk of a coup in the US, because:

- It’s easier for a single project to retain privileged access to the most advanced systems.

- There are no competing western projects with similar capabilities.

- There’s no incentive for the project to sell its most advanced systems to keep up with the competition.

- It’s probably easier for a single project to install secret loyalties undetected,[6] as there are fewer independent actors with the technical expertise to expose it.[7]

- There are fewer centres of power and a single project would be more closely integrated with the USG.


- If growth is sufficiently explosive, then a coup of the USG could lead to:

- Permanent dictatorship.

- Taking over the world.


Infosecurity

Another thing that changes if western AGI development gets centralised is that there’s less attack surface:

- There are fewer security systems which could be compromised or fail.

- There might be fewer individual AI models to secure.

Some attack surface scales with the number of projects.

At the same time, a single project would probably have more resources to devote to infosecurity:

- Government resources. A single project is likely to be closely integrated with USG, and so to have access to the highest levels of government infosecurity.

- Total resources. A single project would have more total resources to spend on infosecurity than any individual project in a multiple project scenario, as it would be bigger.

So all else equal, it seems that centralising western AGI development would lead to stronger infosecurity.

But all else might not be equal:

- A single project might motivate more serious attacks, which are harder to defend against.

- It might also motivate earlier attacks, such that the single project would have less total time to get security measures into place.

- There are ways to increase the infosecurity of multiple projects:

- The USG might provide or mandate strong infosecurity for multiple projects.

- The USG might be motivated to provide this, to the extent that it wants to prevent China stealing model weights.

- If the USG set very high infosecurity standards, and put the burden on private companies to meet them, companies might be motivated to do so given the massive economic incentives.[8]

- R&D breakthroughs might lower the costs of strong infosecurity, making it easier for multiple projects to access.


- A single project could have more attack surface, if it’s sufficiently big. Some attack surface scales with the number of projects (like the number of security systems), but other kinds of attack surface scale with total size (like the number of people or buildings). If a single project were sufficiently bigger than the sum of the counterfactual multiple projects, it could have more attack surface and so be less infosecure.

If a single project is big enough, it would have more attack surface than multiple projects (as some attack surface scales with total size).

It’s not clear whether having one project would reduce the chance that the weights are stolen. We think that it would be harder to steal the weights of a single project, but the motivation to do so would also be stronger, and it’s not clear how these balance out.

Why does infosecurity matter?

The stronger infosecurity is, the harder it is for:

- Any actor to steal model weights.

- This reduces risks from proliferation: it’s harder for bad actors to get access to GCR-enabling technologies. The more it’s the case that AI enables strongly offence dominant technologies, the more important this point is.

- China in particular to steal model weights.

- This effectively increases the size of the US lead over China (as you don’t need to discount the actual lead by as much to account for the possibility of China stealing the weights), which:

- Probably reduces racing (which reduces AI takeover risk),[9] and

- Increases the chance that the US develops AGI before China.


If we’re right that centralising western AGI development would make it harder to steal the weights, but also increase the motivation to do so, then the effect of centralising might be more important for reducing proliferation risk than for preventing China stealing the weights:

- If it’s harder to steal the weights, fewer actors will be able to do so.

- China is one of the most resourced and competent actors, and would have even stronger motivation to steal the weights than other actors (because of race dynamics).

- So it’s more likely that centralising reduces proliferation risk, and less likely that it reduces the chance of China stealing the weights.
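One way to make the idea of "discounting the actual lead" concrete is a toy expected-value sketch. Everything here is an illustrative assumption rather than a claim from the analysis: the function name, the use of months as a unit, and especially the simplification that a successful theft erases the whole lead while a failed one leaves it untouched.

```python
def effective_lead_months(actual_lead_months: float, p_weights_stolen: float) -> float:
    """Expected lead after discounting for possible weight theft.

    Hypothetical toy model: with probability p_weights_stolen the lead drops
    to zero, otherwise it stays at actual_lead_months.
    """
    if actual_lead_months < 0:
        raise ValueError("actual_lead_months must be non-negative")
    if not 0.0 <= p_weights_stolen <= 1.0:
        raise ValueError("p_weights_stolen must be a probability in [0, 1]")
    return actual_lead_months * (1.0 - p_weights_stolen)


if __name__ == "__main__":
    # Illustrative numbers only: the same 12-month lead under weak vs strong infosecurity.
    print(effective_lead_months(12, 0.5))
    print(effective_lead_months(12, 0.25))
```

Under this sketch, stronger infosecurity lowers the theft probability and so raises the effective lead without changing the actual lead, which is the mechanism described above. It says nothing about whether centralisation lowers that probability on net.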

What is the best path forwards, given that strategic landscape?

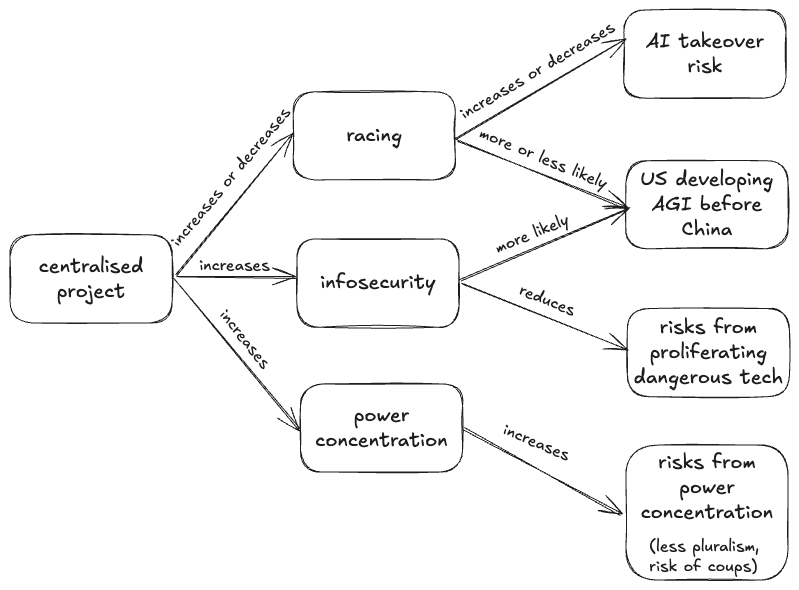

We’ve just considered a lot of different implications of having a single project instead of several. Summing up, we think that:

- It’s not clear what the sign of having a single project would be for racing between the US and China, or for infosecurity.

- Having a single project would probably lead to less racing between western companies and greater power concentration (but it’s not clear what the effect size here would be).

So, given this strategic landscape, what’s the best path forwards?

- Our overall take: It’s very unclear whether centralising would be good or bad.

- Our current best guess: Centralisation is probably net bad, because of risks from power concentration (but this is very uncertain).

Our overall take

It’s very unclear whether centralising would be good or bad.

It seems to us that whether or not western AGI development is centralised could have large strategic implications. But it’s very hard to be confident in what the implications will be. Centralising western AGI development could:

- Increase or decrease AI takeover risk, depending on whether it exacerbates racing with China or not and on how bad (or good) racing between multiple western projects would be.

- Make it more or less likely that the US develops AGI before China, depending on whether it slows the US down, how much it causes China to speed up, and how much stronger the infosecurity of a centralised project would be.

- Reduce risks from proliferating dangerous technologies by a little or a lot, depending on the attack surface of the single project, whether attacks get more serious following centralisation, and how strong the infosecurity of multiple projects would be.

- Increase risks from power concentration by a little or a lot, depending on how well-designed the centralised project would be.

It’s also unclear what the relative magnitudes of the risks are in the first place. Should we prefer a world where the US is more likely to beat China but also more likely to slide into dictatorship, or a world where it’s less likely to beat China but also less likely to become a dictatorship? If centralising increases AI takeover risk but only by a small amount, and greatly increases risks from power concentration, what should we do? The trade-offs here are really hard.

We have our own tentative opinions on this stuff (below), but our strongest take here is that it’s very unclear whether centralising would be good or bad. If you are very confident that centralising would be good, you shouldn’t be.

Our current best guess

We think that the overall effect of centralising AGI development is very uncertain, but it still seems useful to put forward concrete best guesses on the object-level, so that others can disagree and we can make progress on figuring out the answer.

Our current best guess is that centralisation is probably net bad because of risks from power concentration.

Why we think this:

- Centralising western AGI development would be an extreme concentration of power.

- There would be no competing western AGI projects, the rest of the world would have worse access to advanced AI services, and the AGI project would be more integrated with the USG.

- A single project could be designed with market mechanisms and checks and balances, but we haven’t seen plans which seem nearly robust enough to mitigate these challenges. What concretely would stop a centralised project from ignoring the checks and balances once it has huge amounts of power?

- Power concentration is a major way we could lose out on a lot of the value of the future.

- It makes it less likely that we end up with good reflective processes leading to a pluralist future.

- It increases the risk of a coup leading to permanent dictatorship.

- The benefits of centralising probably don’t outweigh these costs.

- Centralising wouldn’t significantly reduce AI takeover risk.

- Racing between the US and China would probably intensify, as centralising would prompt China to speed up AGI development and to try harder to steal model weights.

- The counterfactual wouldn’t be that bad:

- It seems pretty feasible to regulate the bad effects of racing between western AGI projects.

- Races to the top will counteract races to the bottom to some extent.


- Centralising wouldn’t significantly increase the chances that the US develops AGI before China (as it would prompt China to speed up AGI development and to try harder to steal model weights).

- Multiple western AGI projects could have good enough infosecurity to prevent proliferation or China stealing the weights.

- The USG will be able and willing to either provide or mandate strong infosecurity for multiple projects.

But because there’s so much uncertainty, we could easily be wrong. These are the main ways we are tracking that our best guess could be wrong:

- Infosecurity. It might be possible to have good enough infosecurity with a centralised project, but not with multiple projects.

- We don’t see why the USG can’t secure multiple projects in principle, but we’re not experts here.

- Regulating western racing. Absent a centralised project, regulation may be very unlikely to reduce problematic western racing.

- Good regulation is hard, especially for an embryonic field like catastrophic risks from AI.

- US-China racing. Maybe China wouldn’t speed up as much as the US would, such that centralising would increase the US lead, reduce racing, and reduce AI takeover risk.

- This could be the case if China just doesn’t have good enough compute access to compete, or if the US centralisation process is surprisingly efficient.

- Designing a single project. There might be robust mechanisms for a single project to sell advanced AI services and manage coup risk.

- We haven’t seen anything very convincing here, but we’d be excited to see more work here.

- Trade-offs between risks. The probability-weighted impacts of AI takeover or the proliferation of world-ending technologies might be high enough to dominate the probability-weighted impacts of power concentration.

- We currently doubt this, but we haven’t modelled it out, and we have lower p(doom) from misalignment than many (<10%).

- Accessible versions. A really good version of a centralised project might become accessible, or the accessible versions of multiple projects might be pretty bad.

- This seems plausible. Note that unfortunately good versions of both scenarios are probably correlated, as they both significantly depend on the USG doing a good job (of managing a single project, or of regulating multiple projects).

- Inevitability. Centralisation might be more inevitable than we thought, such that this argument is moot (see appendix).

- Inevitability is a really strong claim, and we are currently not convinced. But this might become clearer over time, and we’re not very close to the USG.

Overall conclusion

Overall, we think the best path forward is to increase the chances we get to good versions of either a single or multiple projects, rather than to increase the chances we get a centralised project (which could be good or bad).

The variation between good and bad versions of these projects seems much more significant than the variation from whether or not projects are centralised.

A centralised project could be:

- A monopoly which gives unchecked power to a small group of people who are also very influential in the USG.

- A well-designed and accountable project with market mechanisms and thorough checks and balances on power.

- Anything in between.

A multiple project scenario could be:

- Subject to poorly-targeted regulation which wastes time and safety resources, without preventing dangerous outcomes.

- Subject to well-targeted regulation which reliably prevents dangerous outcomes and supports infosecurity.

It’s hard to tell whether a centralised project is better or worse than multiple projects as an overall category; it’s easy to tell within categories which scenarios we’d prefer.

We’re excited about work on:

- Interventions which are robustly good whether there are one or multiple AGI projects. For example:

- Robust processes to prevent AIs from having secret loyalties.

- R&D into improved infosecurity.

- Governance structures which avoid the biggest downsides of single and/or multiple project scenarios. For example:

- A centralised project could be carefully designed to minimise its power, e.g. by only allowing it to do pre-training and safety testing, and requiring it to share access to near-SOTA models with multiple private companies who can fine-tune and sell access more broadly.

- Multiple projects could be carefully governed to prevent racing to the bottom on safety, e.g. by requiring approval of a centralised body to train significantly more capable AI.

For extremely helpful comments on earlier drafts, thanks to Adam Bales, Catherine Brewer, Owen Cotton-Barratt, Max Dalton, Lukas Finnveden, Ryan Greenblatt, Will MacAskill, Matthew van der Merwe, Toby Ord, Carl Shulman, Lizka Vaintrob, and others.

Appendix: Why we don’t think centralisation is inevitable

A common argument for pushing to centralise western AGI development is that centralisation is basically inevitable, and that conditional on centralisation happening at some point, it’s better to push towards good versions of a single project sooner rather than later.

We agree with the conditional, but don’t think that centralisation is inevitable.

The main arguments we’ve heard for centralisation being inevitable are:

- Economies of scale. Gains from scale might cause AGI development to centralise eventually (e.g. if training runs become too expensive/compute-intensive/energy-intensive to do otherwise).

- Inevitability of a decisive strategic advantage (DSA).[10] The leading project might get a DSA at some point due to recursive self-improvement after automating AI R&D.

- Government involvement. The national security implications of AGI might cause the USG to centralise AGI development eventually.

These arguments don’t convince us:

- Economies of scale point to fewer but not necessarily one project. There will be pressure towards fewer projects as training runs become more expensive/compute-intensive/energy-intensive. But it’s not obvious that this will push all the way to a single project:

- Ratio of revenues to costs. If revenues from AGI are more than double the costs of training AGI, then there are incentives for more projects to enter (because even if you split the revenues in half, they still cover the costs of training for two projects).

- Market inefficiencies. It could still be most efficient to have a single project even if revenues are double the costs (because less money is spent for the same returns) — but the free market isn’t always maximally efficient.

- Benefits of innovation. Alternatively, it could be more efficient to have multiple projects, because competition increases innovation by enough to outweigh the additional costs.

- Antitrust. By default, there’s strong legal pressure against monopolies.

- There are ways of preventing a decisive strategic advantage (DSA). There is a risk that recursive self-improvement after automating AI R&D could enable the leading AGI project to get a decisive strategic advantage, which would effectively centralise all power in that project. We’re very concerned by this risk, but think that there are many ways to prevent the leading project getting a DSA.

- For example:

- External oversight. Have significant external oversight into how the project trains and/or deploys AI to prevent the project from seeking influence in illegitimate ways.

- Cheap API access. Require the leader to provide cheap API access to near-SOTA models (to prevent them hoarding their capabilities or charging high monopoly prices on their unrivalled AI services).

- Weight-sharing. Require them to sell the weights of models trained with 10X less effective FLOP than their best model to other AGI projects, limiting how large a lead they can build up over competitors.

- We think countermeasures like these could bring the risk of a DSA down to very low levels, if implemented well. Even so, the leading AGI project would still be hugely powerful.


- Government involvement =/= centralisation. The government will very likely want to be heavily involved in AGI development for natsec reasons, but there are other ways of doing this besides a single project, like defence contracting and public-private partnerships.

So, while we still think that centralisation is plausible, we don’t think that it’s inevitable.
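The revenue-to-cost point in the economies-of-scale argument above is simple arithmetic, and can be sketched as follows. This is a minimal illustration under assumptions that go beyond the text: revenues are split evenly between projects, every project pays the full training cost, and the function name and figures are hypothetical.

```python
import math


def max_viable_projects(total_revenue: float, training_cost: float) -> int:
    """Largest number of projects that can each cover their own training cost.

    Hypothetical assumptions: total revenue is shared equally, and each
    project bears the full training cost on its own.
    """
    if total_revenue < 0:
        raise ValueError("total_revenue must be non-negative")
    if training_cost <= 0:
        raise ValueError("training_cost must be positive")
    # n projects each earn total_revenue / n, which covers training_cost
    # exactly when n <= total_revenue / training_cost.
    return math.floor(total_revenue / training_cost)


if __name__ == "__main__":
    # Illustrative figures in arbitrary units: revenue is 2.5x the training cost.
    print(max_viable_projects(250, 100))
```

With revenues at more than double the training cost, the sketch allows at least two projects to break even, which matches the argument that economies of scale alone need not push all the way to a single project. It ignores the market inefficiencies, innovation effects and antitrust pressure listed alongside it.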

[1] Centralising: either merging all existing AGI development projects, or shutting down all but the leading project. Either of these would require substantial US government (USG) involvement, and could involve the USG effectively nationalising the project (though there’s a spectrum here, and the lower end seems particularly likely).

Western: we’re mostly equating western with US. This is because we’re assuming that:

- Google DeepMind is effectively a US company because most of its data centres are in the US.

- Timelines are short enough that there are no plausible AGI developers outside the US and China.

We don’t think that these assumptions change our conclusions much. If western AGI projects were spread out beyond the US, then this would raise the benefits of centralising (as it’s harder to regulate racing across international borders), but also increase the harms (as centralising would be a larger concentration of power on the counterfactual) and make centralisation less likely to happen.

- ^

An uncertainty which cuts across all of these variables is what version of a centralised project/multiple project scenario we would get.

- ^

This is more likely to be true to the extent that:

1. There are winner-takes-all dynamics.

2. The actors are fully rational.

3. The perceived lead matches the actual lead.

It seems plausible that 2 and 3 just add noise, rather than systematically pushing towards more or less racing.

- ^

Even if you don’t care who wins, you might prefer to increase the US lead to reduce racing. Though as we saw above, it’s not clear that centralising western AGI development actually would increase the US lead.

- ^

There are also scenarios where having a single project reduces power concentration even without being well-designed: if failing to centralise would mean that US AGI development was so far ahead of China that the US was able to dominate, but centralising would slow the US down enough that China would also have a lot of power, then having a single project would reduce power concentration by default.

There are a lot of conditionals here, so we’re not currently putting much weight on this possibility. But we’re noting it for completeness, and in case others think there are reasons to put more weight on it.

- ^

By ‘secret loyalties’, we mean undetected biases in AI systems towards the interests of their developers or some small cabal of people. For example, AI systems which give advice which subtly tends towards the interests of this cabal, or AI systems which have backdoors.

- ^

A factor which might make it easier to install secret loyalties with multiple projects is racing: CEOs might have an easier time justifying moving fast and not installing proper checks and balances, if competition is very fierce.

- ^

Though these standards might be hard to audit, which would make compliance harder to achieve.

- ^

There are a few ways that making it harder for China to steal the model weights might not reduce racing:

- Centralising might simultaneously cause China to speed up its AGI development, and make it harder to steal the weights. It’s not clear how these effects would net out.

- What matters is the perceived size of the lead. The US could be poorly calibrated about how hard it is for China to steal the weights or about how that nets out with China speeding up AGI development, such that the US doesn’t race less even though it would be rational to do so.

- If it were very easy for China to steal the weights, this would reduce US incentives to race. (Note that this would probably be very bad for proliferation risk, and so isn’t very desirable.)

We still think that making the weights harder to steal would probably lead to less racing, as the US would feel more secure - but this is a complicated empirical question.

- ^

Bostrom defines DSA as “a level of technological and other advantages sufficient to enable it to achieve complete world domination” in Superintelligence. Tom tends to define having a DSA as controlling >99% of economic output, and being able to do so indefinitely.

72 comments

Comments sorted by top scores.

comment by Aaron_Scher · 2024-12-05T23:06:03.258Z · LW(p) · GW(p)

Thanks for writing this, I think it's an important topic which deserves more attention. This post covers many arguments, a few of which I think are much weaker than you all state. But more importantly, I think you all are missing at least one important argument. I've been meaning to write this up, and I'll use this as my excuse.

TL;DR: More independent AGI efforts means more risky “draws” from a pool of potential good and bad AIs; since a single bad draw could be catastrophic (a key claim about offense/defense), we need fewer, more controlled projects to minimize that danger.

The argument is basically an application of the Vulnerable World Hypothesis to AI development. You capture part of this argument in the discussion of Racing, but not the whole thing. So the setup is that building any particular AGI is drawing a ball from the urn of potential AIs. Some of these AIs are aligned, some are misaligned; we probably disagree about the proportions here but that's not crucial, and note that the proportion depends on a bunch of other aspects of the world, such as how good our AGI alignment research is. More AGI projects means more draws from the urn and a higher likelihood of pulling out misaligned AI systems. Importantly, I think that pulling out a misaligned AGI system is more bad than pulling out an aligned AGI system is good. I think this because I think some of the key components of the world are offense-favored.

Key assumption/claim: human extinction and human loss of control are offense-favored — if there were similarly resourced actors trying to destroy humanity as to protect it, humanity would be destroyed. I have a bunch of intuitions for why this is true, to give some sense:

- Humans are flesh bags that die easily and never come back to life. AIs will not be like this.

- Humans care a lot about not dying, their friends and families not dying, etc. I expect extorting a small number of humans in order to gain control would simply work if one could successfully make the relevant threats.

- Terrorists or others who seek to cause harm often succeed. There are many mass shootings. 8% of US presidents were assassinated in office. I don't actually know what the average death count per attempted terrorist is; I would intuitively guess it's between 0.5 and 10 (This Wikipedia article indicates it's ~10, but I think you should include attempts that totally fail, even though these are not typically counted). Terrorism is very heavy tailed, which I think probably means that more capable terrorists (i.e., AIs that are at least as good as human experts, AGI+) will have high fatality rates.

- There are some emerging technologies that so far seem more offense-favored to me. Maybe not 1000:1, but definitely not 1:1. Bio tech and engineered pandemics seem like this; autonomous weapons seem like this.

- The strategy-stealing assumption [LW · GW] seems false to me, partially for reasons listed in the linked post. I note that the linked post includes Paul listing a bunch of convincing-to-me ways in which strategy-stealing is false and then concluding that it's basically true. The claim that offense is easier than defense is sorta just a version of the strategy-stealing claim, so this bullet point isn't actually another distinct argument, just an excuse to point toward previous thinking and the various arguments there.

A couple caveats: I think killing all of humanity with current tech is pretty hard; as noted however, I think this is too high a bar because probably things like extortion are sufficient for grabbing power. Also, I think there are some defensive strategies that would actually totally work at reducing the threat from misaligned AGI systems. Most of these strategies look a lot like "centralization of AGI development", e.g., destroying advanced computing infrastructure, controlling who uses advanced computing infrastructure and how they use it, a global treaty banning advanced AI development (which might be democratically controlled but has the effect of exercising central decision making).

So circling back to the urn, if you pull out an aligned AI system, and 3 months later somebody else pulls out a misaligned AI system, I don't think pulling out the aligned AI system a little in advance buys you that much. The correct strategy to this situation is to try and make the proportion of balls weighted heavily toward aligned, AND to pull out as few as you can.

More AGI development projects means more draws from the urn because there are more actors doing this and no coordinated decision process to stop. You mention that maybe government can regulate AI developers to reduce racing. This seems like it will go poorly, and in the worlds where it goes well, I think you should maybe just call them "centralization" because they involve a central decision process deciding who can train what models when with what methods. That is, extremely involved regulations seem to effectively be centralization.

Notably, this is related but not the same as the effects from racing. More AGI projects leads to racing which leads to cutting corners on safety (higher proportion of misaligned AIs in the urn), and racing leads to more draws from the urn because of fear of losing to a competitor. But even without racing, more AGI projects means more draws from the urn.

The thing I would like to happen instead is that there is a very controlled process for drawing from the urn, where each ball is carefully inspected, and if we draw aligned AIs, we use them to do AI alignment research, i.e., increase the proportion of aligned AIs in the urn. And we don't take more draws from the urn until we're quite confident we're not going to pull out a misaligned AI. Again, this is both about reducing the risk of catastrophe each time you take a risky action, and about decreasing the number of times you have to take risky actions.

Summarizing: If you are operating in a domain where losses are very bad, you want to take fewer gambles. I think AGI and ASI development are such domains, and decentralized AGI development means more gambles are taken.
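To make the urn picture concrete, here is a minimal Python sketch. It assumes each draw is independently misaligned with the same probability, which is a simplification of the argument above; the function name `p_any_misaligned` and the example probabilities are hypothetical and chosen only for illustration.

```python
def p_any_misaligned(p_bad: float, n_draws: int) -> float:
    """Chance that at least one of n independent draws is misaligned."""
    return 1 - (1 - p_bad) ** n_draws


# Illustrative numbers only: neither value of p_bad is an estimate.
# Lowering p_bad stands in for "improving the proportion of aligned balls";
# lowering n_draws stands in for "pulling out as few balls as you can".
for p_bad in (0.05, 0.025):
    for n_draws in (1, 3, 5):
        risk = p_any_misaligned(p_bad, n_draws)
        print(f"p_bad={p_bad:.3f}, draws={n_draws}: risk of a bad draw = {risk:.1%}")
```

Under these assumptions the risk rises with every extra draw, so both levers (a better urn and fewer draws) reduce it.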

Replies from: tom-davidson-1, rosehadshar, Aaron_Scher

↑ comment by Tom Davidson (tom-davidson-1) · 2024-12-06T14:34:12.141Z · LW(p) · GW(p)

I agree with Rose's reply, and would go further. I think there are many actions that just one responsible lab could take that would completely change the game board:

- Find and share a scalable solution to alignment

- Provide compelling experimental evidence that standard training methods lead to misaligned power-seeking AI by default

- Develop and share best practices for responsible scaling that are both commercially viable and safe.

Your comment argues that "one bad apple spoils the bunch", but it's also plausible that "one good apple saves the bunch".

Replies from: Aaron_Scher

↑ comment by Aaron_Scher · 2024-12-06T19:39:56.398Z · LW(p) · GW(p)

I agree it's plausible. I continue to think that defensive strategies are harder than offensive ones, except the ones that basically look like centralized control over AGI development. For example,

- Provide compelling experimental evidence that standard training methods lead to misaligned power-seeking AI by default

Then what? The government steps in and stops other companies from scaling capabilities until big safety improvements have been made? That's centralization along many axes. Or maybe all the other key decision makers in AGI projects get convinced by evidence and reason and this buys you 1-3 years until open source / many other actors reach this level of capabilities.

Sharing an alignment solution involves companies handing over valuable IP to their competitors. I don't want to say it's impossible, but I have definitely gotten less optimistic about this in the last year. I think in the last year we have not seen a race to the top on safety, in any way. We have not seen much sharing of safety research that is relevant to products (or like, applied alignment research). We have instead mostly seen research without direct applications: interp, model organisms, weak-to-strong / scalable oversight (which is probably the closest to product relevance). Now sure, the stakes are way higher with AGI/ASI so there's a bigger incentive to share, but I don't want to be staking the future on these companies voluntarily giving up a bunch of secrets, which would be basically a 180 from their current strategy.

I fail to see how developing and sharing best practices for RSPs will shift the game board. Except insofar as it involves key insights on technical problems (e.g., alignment research that is critical for scaling) which hits the IP problem. I don't think we've seen a race to the top on making good RSPs, but we have definitely seen pressure to publish any RSP. Not enough pressure; the RSPs [LW · GW] are quite weak IMO and some frontier AI developers (Meta, xAI, maybe various Chinese orgs count) have none.

I agree that it's plausible that "one good apple saves the bunch", but I don't think it's super likely if you condition on not centralization.

Do you believe that each of the 3 things you mentioned would change the game board? I think that they are like 75%, 30%, and 20% likely to meaningfully change catastrophic risk, conditional on happening.

Replies from: tom-davidson-1

↑ comment by Tom Davidson (tom-davidson-1) · 2024-12-09T17:13:35.841Z · LW(p) · GW(p)

Quick clarification on terminology. We've used 'centralised' to mean "there's just one project doing pre-training". So having regulations that enforce good safety practice or gate-keep new training runs doesn't count. I think this is a more helpful use of the term. It directly links to the power concentration concerns we've raised. I think the best versions of non-centralisation will involve regulations like these, but that's importantly different from one project having sole control of an insanely powerful technology.

Compelling experimental evidence

Currently there's basically no empirical evidence that misaligned power-seeking emerges by default, let alone scheming. If we got strong evidence that scheming happens by default then I expect that all projects would do way more work to check for and avoid scheming, whether centralised or not. Attitudes change on all levels: project technical staff, technical leadership, regulators, open-source projects.

You can also iterate experimentally [LW · GW] to understand the conditions that cause scheming, allowing empirical progress on scheming like was never before possible.

This seems like a massive game changer to me. I truly believe that if we picked one of today's top-5 labs at random and all the others were closed, this would be meaningfully less likely to happen and that would be a big shame.

Scalable alignment solution

You're right there's IP reasons against sharing. I believe it would be in line with many companies' missions to share, but they may not. Even so, there's a lot you can do with aligned AGI. You could use it to produce compelling evidence about whether other AIs are aligned. You could find a way of proving to the world that your AI is aligned, which other labs can't replicate, giving you economic advantage. It would be interesting to explore threat models where AI takes over despite a project solving this, and it doesn't seem crazy, but I'd predict that we'd conclude the odds are better if there's 5 projects of which 2 have solved it than if there's one project with a 2/5 chance of success.

RSPs

Maybe you think everything is hopeless unless there are fundamental breakthroughs? My view is that we face severe challenges ahead, and have very tough decisions to make. But I believe that a highly competent and responsible project could likely find a way to leverage AI systems to solve AI alignment safely. Doing this isn't just about "having the right values". It's much more about being highly competent, focussed on what really matters, prioritising well, and having good processes. If just one lab figures out how to do this all in a way that is commercially competitive and viable, that's a proof of concept that developing AGI safely is possible. Excuses won't work for other labs, as we can say "well lab X did it".

Overall

I'm not confident "one apple saves the bunch". But I expect most ppl on LW to assume "one apple spoils the bunch" and I think the alternative perspective is very underrated. My synthesis would probably be that at current capability levels and in the next few years "one apple saves the bunch" wins by a large margin, but that at some point when AI is superhuman it could easily reverse bc AI gets powerful enough to design world-ending WMDs.

(Also, I wanted to include this debate in the post but we felt it would over-complicate things. I'm glad you raised it and strongly upvoted your initial comment.)

Replies from: Aaron_Scher

↑ comment by Aaron_Scher · 2024-12-09T22:07:18.288Z · LW(p) · GW(p)

Thanks for your continued engagement.

I appreciate your point about compelling experimental evidence, and I think it's important that we're currently at a point with very little of that evidence. I still feel a lot of uncertainty here, and I expect the evidence to basically always be super murky and for interpretations to be varied/controversial, but I do feel more optimistic than before reading your comment.

You could find a way of proving to the world that your AI is aligned, which other labs can't replicate, giving you economic advantage.

I don't expect this to be a very large effect. It feels similar to an argument like "company A will be better on ESG dimensions and therefore more customers will switch to using it". Doing a quick review of the literature on that, it seems like there's a small but notable change in consumer behavior for ESG-labeled products. In the AI space, it doesn't seem to me like any customers care about OpenAI's safety team disappearing (except a few folks in the AI safety world). In this particular case, I expect the technical argument needed to demonstrate that some family of AI systems are aligned while others are not is a really complicated argument; I expect fewer than 500 people would be able to actually verify such an argument (or the initial "scalable alignment solution"), maybe zero people. I realize this is a bit of a nit because you were just gesturing toward one of many ways it could be good to have an alignment solution.

I endorse arguing for alternative perspectives and appreciate you doing it. And I disagree with your synthesis here.

Replies from: tom-davidson-1

↑ comment by Tom Davidson (tom-davidson-1) · 2024-12-11T14:46:49.311Z · LW(p) · GW(p)

You could find a way of proving to the world that your AI is aligned, which other labs can't replicate, giving you economic advantage.

I don't expect this to be a very large effect. It feels similar to an argument like "company A will be better on ESG dimensions and therefore more customers will switch to using it". Doing a quick review of the literature on that, it seems like there's a small but notable change in consumer behavior for ESG-labeled products.

It seems quite different to the ESG case. Customers don't personally benefit from using a company with good ESG. They will benefit from using an aligned AI over a misaligned one.

In the AI space, it doesn't seem to me like any customers care about OpenAI's safety team disappearing (except a few folks in the AI safety world).

Again though, customers currently have no selfish reason to care.

In this particular case, I expect the technical argument needed to demonstrate that some family of AI systems are aligned while others are not is a really complicated argument; I expect fewer than 500 people would be able to actually verify such an argument (or the initial "scalable alignment solution"), maybe zero people.

It's quite common for only a very small number of ppl to have the individual ability to verify a safety case, but many more to defer to their judgement. People may defer to an AISI, or a regulatory agency.

↑ comment by rosehadshar · 2024-12-06T11:52:27.922Z · LW(p) · GW(p)

Thanks, I agree this is an important argument.

Two counterpoints:

- The more projects you have, the more attempts at alignment you have. It's not obvious to me that more draws are net bad, at least at the margin of 1 to 2 or 3.

- I'm more worried about the harms from a misaligned singleton than from a misaligned (or multiple misaligned) systems in a wider ecosystem which includes powerful aligned systems.

↑ comment by Aaron_Scher · 2024-12-05T23:09:30.913Z · LW(p) · GW(p)

While writing, I realized that this sounds a bit similar to the unilateralist's curse [EA · GW]. It's not the same, but it has parallels. I'll discuss that briefly because it's relevant to other aspects of the situation. The unilateralist's curse does not occur specifically due to multiple samplings, it occurs because different actors have different beliefs about the value/disvalue, and this variance in beliefs makes it more likely that one of those actors has a belief above the "do it" threshold. If each draw from the AGI urn had the same outcome, this would look a lot like a unilateralist's curse situation where we care about variance in the actors' beliefs. But I instead think that draws from the AGI urn are somewhat independent and the problem is just that we should incur e.g., a 5% misalignment risk as few times as we have to.

Interestingly, a similar look at variance is part of what makes the infosecurity situation much worse for multiple projects compared to a centralized AGI project: variance is bad here. I expect a single government AGI project to care about and invest in security at least as much as the average AGI company. The AGI companies have some variance in their caring and investment in security, and the lower ones will be easier to steal from. If you assume these multiple projects have similar AGI capabilities (this is a bad assumption but is basically the reason to like multiple projects for Power Concentration reasons so worth assuming here; if the different projects don't have similar capabilities, power is not very balanced), you might then think that any of the companies getting their models stolen is similarly bad to the centralized project getting its models stolen (with a time lag I suppose, because the centralized project got to that level of capability faster).

If you are hacking a centralized AGI project, say you have a 50% chance of success. If you are hacking 3 different AGI projects, you have 3 different/independent 50% chances of success. They're different because these projects have different security measures in place. Now sure, as indicated by one of the points in this blog post, maybe less effort goes into hacking each of the 3 projects (because you have to split your resources, and because there's less overall interest in stealing model weights), maybe that pushes each of these down to 33%. These numbers are obviously made up, but they would give a 1 - (0.67^3) ≈ 70% chance of success against at least one project.
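The arithmetic in the paragraph above can be checked with a short Python sketch. It assumes, as the comment does, that the attempts against each project are independent; the function name `p_any_theft` is hypothetical, and the probabilities are the made-up figures from the text rather than estimates.

```python
def p_any_theft(p_each: float, n_projects: int) -> float:
    """Chance that at least one of n independent theft attempts succeeds."""
    return 1 - (1 - p_each) ** n_projects


# One centralized project, attacked with a 50% chance of success.
centralized = p_any_theft(0.50, 1)

# Three projects, each attacked with a 50% chance of success.
three_undiluted = p_any_theft(0.50, 3)

# Three projects, with attacker effort split so each attempt drops to 33%.
three_diluted = p_any_theft(0.33, 3)

print(f"centralized:            {centralized:.1%}")
print(f"3 projects at 50% each: {three_undiluted:.1%}")
print(f"3 projects at 33% each: {three_diluted:.1%}")
```

Even with the per-project chance diluted to 33%, the combined chance stays above the single-project 50% under the independence assumption; correlated defenses or shared attackers would change the numbers.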

Unilateralist's curse is about variance in beliefs about the value of some action. The parent comment is about taking multiple independent actions that each have a risk of very bad outcomes.

comment by johnswentworth · 2024-12-03T17:00:41.931Z · LW(p) · GW(p)

I think this is missing the most important consideration: centralization would likely massively slow down capabilities progress.

Replies from: alexander-gietelink-oldenziel, tom-davidson-1

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-12-03T17:05:26.087Z · LW(p) · GW(p)

As a point of comparison - do you think the US nuclear programme was substantially slowed down because it was a centralized government programme?

Replies from: johnswentworth

↑ comment by johnswentworth · 2024-12-03T17:11:03.231Z · LW(p) · GW(p)

If you mean the Manhattan Project: no. IIUC there were basically zero Western groups and zero dollars working toward the bomb before that, so the Manhattan Project clearly sped things up. That's not really a case of "centralization" so much as doing-the-thing-at-all vs not-doing-the-thing-at-all.

If you mean fusion: yes. There were many fusion projects in the sixties, people were learning quickly. Then the field centralized, and progress slowed to a crawl.

Replies from: alexander-gietelink-oldenziel, None

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-12-03T18:13:31.932Z · LW(p) · GW(p)

The current boom in fusion energy startups seems to have been set off by deep advances in material sciences (eg. magnets), electronics, manufacturing. These bottlenecks likely were the main reason fusion energy was not possible in the 60s. On priors it is more likely that centralisation was a result rather than a cause of fusion being hard.

Replies from: johnswentworth, Douglas_Knight

↑ comment by johnswentworth · 2024-12-03T20:07:41.663Z · LW(p) · GW(p)

On my understanding, the push for centralization came from a specific faction whose pitch was basically:

- here's the scaling laws for tokamaks

- here's how much money we'd need

- ... so let's make one real big tokamak rather than spending money on lots of little research devices.

... and that faction mostly won the competition for government funding for about half a century.

The current boom accepted that faction's story at face value, but then noticed that new materials allowed the same "scale up the tokamaks" strategy to be executed on a budget achievable with private funding, and therefore they could fund projects without having to fight the faction which won the battle for government funding.

The counterfactual which I think is probably correct is that there exist entirely different designs far superior to tokamaks, which don't require that much scale in the first place, but which were never discovered because the "scale up the tokamaks" faction basically won the competition for funding and stopped most research on alternative designs from happening.

Replies from: Douglas_Knight

↑ comment by Douglas_Knight · 2024-12-06T15:59:07.714Z · LW(p) · GW(p)

In fact, many 21st century fusion companies do not use tokamaks, but use other designs from the 60s. My estimate from Wikipedia is about half.

↑ comment by Douglas_Knight · 2024-12-06T15:58:09.646Z · LW(p) · GW(p)

It is a weird claim that the current boom, concentrated in time, is the result of many advances, which were spread out over time. All these advances are being used at the same time because funders are paying for them now and not earlier. How do you know that you need all of those advances and not just some of them? People could have tried using ceramic superconductors in tokamaks in the 90s, but they didn't, because of centralization. Maybe that wouldn't be enough because you need all the other advances, but it would have yielded more useful data than the actually performed experiments with large tokamaks.

Replies from: alexander-gietelink-oldenziel

↑ comment by Alexander Gietelink Oldenziel (alexander-gietelink-oldenziel) · 2024-12-06T17:03:27.780Z · LW(p) · GW(p)

The advances build on top of each other. I am not an expert in material sciences or magnet manufacturing but I'd bet a lot of improvements & innovation have been downstream of improved computers & electronics. Neither were available in the 60s.

Replies from: Douglas_Knight

↑ comment by Douglas_Knight · 2024-12-06T19:34:39.724Z · LW(p) · GW(p)

How is this a response? Yes, advances accumulate over time, which is exactly my point and seems to me to be a rebuttal to the idea that the centralized project has been sane, let alone effective. Which advances do we need? How many do we need? Why is this the magic decade in which we have enough advances, rather than 30 years ago or 30 years hence?

In fact, the current boom does not reflect a belief that we have accumulated enough advances that if we combine them all they will work. Instead, there are many different fusion companies trying experiments to harness different advances. They all have different hypotheses and the fact that they are all contemporaries is a coincidence that you fail to explain. If there were a single bottleneck technology that they all use, that would explain it, but I don't think that's true. Computers are a particularly bad explanation because they have continuously improved: they have contributed to everything, but at different times.

Surely the reason that they are not trying to combine everything is that it takes time to assimilate advances. Some advances are new and will take time. But some are old and they could have started working on incorporating them decades ago. The failure of the centralized project to do that is extremely damning.

↑ comment by Tom Davidson (tom-davidson-1) · 2024-12-03T19:10:29.093Z · LW(p) · GW(p)

Thanks! Great point.

We do say:

Bureaucracy. A centralised project would probably be more bureaucratic.

But you're completely right that we frame this as a reason that centralisation might not increase the lead on China, and therefore frame it as a point against centralisation.

Whereas you're presumably saying that slowing down progress would buy us more time to solve alignment, and so framing it as a significant point for centralisation.

I personally don't favour bureaucracy that slows things down and reduces competence in a non-targeted way -- I think competently prioritising work to reduce AI risk during the AI transition will be important. But I think your position is reasonable here.

comment by Seth Herd · 2024-12-04T00:11:23.142Z · LW(p) · GW(p)

I was starting to draft a very similar post. I was looking through all of the comments on this short form [LW(p) · GW(p)] that posed a similar question.

I stopped writing that draft when I saw and thought about this comment [LW(p) · GW(p)]:

Something I'm worried about now is some RFK Jr/Dr. Oz equivalent being picked to lead on AI...

That is pretty clearly what would happen if a US-led effort was launched soon. So, I quit weighing the upsides against that huge downside.

It is vaguely possible that Trump could be persuaded to put such a project into responsible hands. One route to do that is working in cooperation with the EU and other allied nations. But Trump isn't likely to cede influence over such an important project as far as that. The US is far, far ahead of its allies, so cutting them in as equal partners seems unlikely.

I was thinking about writing a post called "an apollo project for AGI is a bad idea for the near future" making the above point. But it seems kind of obvious.

Trump will appoint someone who won't get and won't care about the dangers; they'll YOLO it; we'll die unless alignment turned out to be ridiculously easy. Bad idea.

Now, how to say that in policy debates? I don't know.

Replies from: AnthonyC

↑ comment by AnthonyC · 2024-12-04T13:24:51.222Z · LW(p) · GW(p)

But it seems kind of obvious.

Then you should probably write the post, if you wanted to. It is emphatically not obvious to many other people.

Replies from: Seth Herd

↑ comment by Seth Herd · 2024-12-06T02:45:09.164Z · LW(p) · GW(p)

Thank you!

I don't have time; I'm terribly slow at writing posts, and I'm behind on my actual paid work on alignment.

If you find this compelling and want to turn it into a top-level post, I'd appreciate it!

The fact that this got some disagreement votes means it's probably more debatable than I was initially thinking.

comment by Orpheus16 (akash-wasil) · 2024-12-04T17:17:45.123Z · LW(p) · GW(p)

I disagree with some of the claims made here, and I think there are several worldview assumptions that go into a lot of these claims. Examples include things like "what do we expect the trajectory to ASI to look like", "how much should we worry about AI takeover risks", "what happens if a single actor ends up controlling [aligned] ASI", "what kinds of regulations can we reasonably expect absent some sort of centralized USG project", and "how much do we expect companies to race to the top on safety absent meaningful USG involvement." (TBC though I don't think it's the responsibility of the authors to go into all of these background assumptions– I think it's good for people to present claims like this even if they don't have time/space to give their Entire Model of Everything.)

Nonetheless, I agree with the bottom-line conclusion: on the margin, I suspect it's more valuable for people to figure out how to make different worlds go well than to figure out which "world" is better. In other words, asking "how do I make Centralized World or Noncentralized World more likely to go well" rather than "which one is better: Centralized World or Noncentralized World?"

More specifically, I think more people should be thinking: "Assume the USG decides to centralize AGI development or pursue some sort of AGI Manhattan Project. At that point, the POTUS or DefSec calls you in and asks you if you have any suggestions for how to maximize the chance of this going well. What do you say?"

One part of my rationale: the decisions about whether or not to centralize will be much harder to influence than decisions about what particular kind of centralized model to go with or what the implementation details of a centralized project should look like. I imagine scenarios in which the "whether to centralize" decision is largely a policy decision that the POTUS and the POTUS's close advisors make, whereas the decision of "how do we actually do this" is something that would be delegated to people lower down the chain (who are both easier to access and more likely to be devoting a lot of time to engaging with arguments about what's desirable.)

comment by Chris_Leong · 2024-12-03T15:59:10.419Z · LW(p) · GW(p)

My take - lots of good analysis, but it has a few crucial mistakes/weaknesses that throw the conclusions into significant doubt:

The USG will be able and willing to either provide or mandate strong infosecurity for multiple projects.

I simply don't buy that the infosec for multiple such projects will be anywhere near the infosec of a single project because the overall security ends up being that of the weakest link.

Additionally, the more projects there are with a particular capability, the more folk there are who can leak information either by talking or by being spies.

The probability-weighted impacts of AI takeover or the proliferation of world-ending technologies might be high enough to dominate the probability-weighted impacts of power concentration.

Comment: We currently doubt this, but we haven’t modelled it out, and we have lower p(doom) from misalignment than many (<10%).

Seems entirely plausible to me that either one could dominate. Would love to see more analysis around this.

Reducing access to these services will significantly disempower the rest of the world: we’re not talking about whether people will have access to the best chatbots or not, but whether they’ll have access to extremely powerful future capabilities which enable them to shape and improve their lives on a scale that humans haven’t previously been able to.

If you're worried about this, I don't think you quite realise the stakes. Capabilities mostly proliferate anyway. People can wait a few more years.

Replies from: tom-davidson-1, rosehadshar↑ comment by Tom Davidson (tom-davidson-1) · 2024-12-03T19:13:07.385Z · LW(p) · GW(p)

Thanks for the pushback!

Reducing access to these services will significantly disempower the rest of the world: we’re not talking about whether people will have access to the best chatbots or not, but whether they’ll have access to extremely powerful future capabilities which enable them to shape and improve their lives on a scale that humans haven’t previously been able to.

If you're worried about this, I don't think you quite realise the stakes. Capabilities mostly proliferate anyway. People can wait a few more years.

Our worry here isn't that people won't get to enjoy AI benefits for a few years. It's that there will be a massive power imbalance between those with access to AI and those without. And that could have long-term effects.

Replies from: Chris_Leong↑ comment by Chris_Leong · 2024-12-04T10:15:56.043Z · LW(p) · GW(p)

I maintain my position that you're missing the stakes if you think that's important. Even limiting ourselves strictly to concentration of power worries, risks of totalitarianism dominate these concerns.

Replies from: rosehadshar, tom-davidson-1↑ comment by rosehadshar · 2024-12-04T15:42:56.837Z · LW(p) · GW(p)

I think that massive power imbalance (even over short periods) significantly increases the risk of totalitarianism

↑ comment by Tom Davidson (tom-davidson-1) · 2024-12-06T14:22:35.514Z · LW(p) · GW(p)

I think massive power imbalance makes it less likely that the post-AGI world is one where many different actors with different beliefs and values can experiment, interact, and reflect. And so I'd expect its long-term future to be worse

↑ comment by rosehadshar · 2024-12-05T13:51:54.965Z · LW(p) · GW(p)

On the infosec thing:

"I simply don't buy that the infosec for multiple such projects will be anywhere near the infosec of a single project because the overall security ends up being that of the weakest link."

-> nitpick: the important thing isn't how close the infosec for multiple projects is to the infosec of a single project: it's how close the infosec for multiple projects is to something like 'the threshold for good enough infosec, given risk levels and risk tolerance'. That's obviously very non-trivial to work out

-> I agree that a single project would probably have higher infosec than multiple projects (though this doesn't seem slam dunk to me and I think it does to you)

-> concretely, I currently expect that the USG would be able to provide SL4 and maybe SL5 level infosec to 2-5 projects, not just one. Why do you think this isn't the case?

"Additionally, the more projects there are with a particular capability, the more folk there are who can leak information either by talking or by being spies."

-> It's not clear to me that a single project would have fewer total people: seems likely that if US AGI development is centralised, it's part of a big beat China push, and involves throwing a lot of money and people at the problem.

comment by Gurkenglas · 2024-12-03T13:19:48.634Z · LW(p) · GW(p)

Why not just one global project?

Replies from: rosehadshar, AnthonyC, tom-davidson-1, Seth Herd, tyler-tracy↑ comment by rosehadshar · 2024-12-05T14:06:31.934Z · LW(p) · GW(p)

My main take here is that it seems really unlikely that the US and China would agree to work together on this.

↑ comment by Gurkenglas · 2024-12-05T23:49:54.592Z · LW(p) · GW(p)

Zvi's AI newsletter, latest installment https://www.lesswrong.com/posts/LBzRWoTQagRnbPWG4/ai-93-happy-tuesday, has a regular segment Pick Up the Phone arguing against this.

Replies from: rosehadshar↑ comment by rosehadshar · 2024-12-06T11:36:55.702Z · LW(p) · GW(p)

Thanks! Fwiw I agree with Zvi on "At a minimum, let’s not fire off a starting gun to a race that we might well not win, even if all of humanity wasn’t very likely to lose it, over a ‘missile gap’ style lie that we are somehow not currently in the lead."

Replies from: Gurkenglas↑ comment by Gurkenglas · 2024-12-06T14:09:28.913Z · LW(p) · GW(p)

(You can find his ten mentions of that ~hashtag via the looking glass on thezvi.substack.com. huh, less regular than I thought.)

↑ comment by AnthonyC · 2024-12-04T13:23:01.290Z · LW(p) · GW(p)

In some ways, this would be better if you can get universal buy-in, since there wouldn't be a race for completion. There might be a race for alignment to particular subgroups? Which could be better or worse, depending.

Also, securing it against bringing insights and know-how back to a clandestine single-nation competitor seems like it would be very difficult. Like, if we had this kind of project being built, do I really believe there won't be spies telling underground data centers and teams of researchers in Moscow and Washington everything it learns? And that governments will consistently put more effort into the shared project than the secret one?

Replies from: Purplehermann↑ comment by Purplehermann · 2024-12-06T12:05:53.047Z · LW(p) · GW(p)

Start small. Once you have an attractive umbrella working for a few projects, you can take in the rest of the US, then the world.

↑ comment by Tom Davidson (tom-davidson-1) · 2024-12-06T14:27:49.319Z · LW(p) · GW(p)

I think the argument for combining separate US and Chinese projects into one global project is probably stronger than the argument for centralising US development. That's because racing between US companies can potentially be handled by USG regulation, but racing between the US and China can't be similarly handled.

OTOH, the 'info security' benefits of centralisation mostly wouldn't apply

↑ comment by Tyler Tracy (tyler-tracy) · 2024-12-05T05:20:58.393Z · LW(p) · GW(p)

I like a global project idea more, but I think it still has issues.

- A global project would likely eliminate the racing concerns.

- A global project would have fewer infosec issues. Hopefully, most state actors who could steal the weights are bought into the project and wouldn't attack it.

- Power concentration seems worse since more actors would have varying interests. Some countries would likely have ideological differences and might try to seize power over the project. Various checks and balances might be able to remedy this.

comment by calebp99 · 2024-12-04T01:53:33.065Z · LW(p) · GW(p)

I liked various parts of this post and agree that this is an under-discussed but important topic. I found it a little tricky to understand the information security section. Here are a few disagreements (or possibly just confusions).

A single project might motivate more serious attacks, which are harder to defend against.

- It might also motivate earlier attacks, such that the single project would have less total time to get security measures into place.

In general, I think it's more natural to think about how expensive an attack will be and how harmful that attack would be if it were successful, rather than reasoning about when an attack will happen.

Here I am imagining that you think a single project could motivate earlier attacks because overall US adversaries are more concerned about the US's AI ambitions, or because AI progress is faster and it's more useful to steal a model. It's worth noting that stealing AI models while progress is mostly due to scaling, and while models are not directly dangerous or automating AI R&D, doesn't seem particularly harmful (in that it's unlikely to directly cause a GCR or significantly speed up the stealer's AGI project). So overall, I'm not sure whether you think the security situation is better or worse in the case of earlier attacks.

A single project could have *more* attack surface, if it's sufficiently big. Some attack surface scales with the number of projects (like the number of security systems), but other kinds of attack surface scale with total size (like the number of people or buildings). If a single project were sufficiently bigger than the sum of the counterfactual multiple projects, it could have more attack surface and so be less infosecure.

I don't really understand your model here. I think naively you should be comparing a central US project to multiple AI lab projects. My current impression is that for a fixed amount of total AI lab resources the attack surface will likely decrease (e.g. you only need to verify that one set of libraries is secure, rather than 3 somewhat different sets of libraries). If you are comparing just one frontier lab to a large single project then I agree attack surface could be larger, but that seems like the wrong comparison.

I don't understand the logic of step 2 of the following argument.

- If it's harder to steal the weights, fewer actors will be able to do so.

- China is one of the most resourced and competent actors, and would have even stronger incentives to steal the weights than other actors (because of race dynamics).

- So it's more likely that centralising reduces proliferation risk, and less likely that it reduces the chance of China stealing the weights.

I think that China has stronger incentives than many other nations to steal the model (because it is politically and financially cheaper for them) but making it harder to steal the weights still makes it more costly for China to steal the weights and therefore they are less incentivised. You seem to be saying that it makes them more incentivised to steal the weights but I don't quite follow why.

Replies from: rosehadshar↑ comment by rosehadshar · 2024-12-05T14:15:40.819Z · LW(p) · GW(p)

Thanks for these questions!

Earlier attacks: My thinking here is that centralisation might a) cause China to get serious about stealing the weights sooner, and b) therefore allow less time for building up great infosec. So it would be overall bad for infosec. (It's true the models would be weaker, so stealing the weights earlier might not matter so much. But I don't feel very confident that strong infosec would be in place before the models are dangerous, with or without centralisation.)

More attack surface: I am trying to compare multiple projects with a single project. The attack surface of a single project might be bigger if the single project itself is very large. As a toy example, imagine 3 labs with 100 employees each. But then USG centralises everything to beat China and pours loads more resources into AGI development. The centralised project has 1000 staff; the counterfactual was 300 staff spread across 3 projects.