Comments

Re: AI safety summit, one thought I have is that the first couple summits were to some extent captured by the people like us who cared most about this technology and the risks. Those events, prior to the meaningful entrance of governments and hundreds of billions in funding, were easier to 'control' to be about the AI safety narrative. Now, the people optimizing generally for power have entered the picture, captured the summit, and changed the narrative to the dominant one rather than the niche AI safety one. So I see this less as a 'stark reversal' and more as a return to the status quo once something went mainstream.

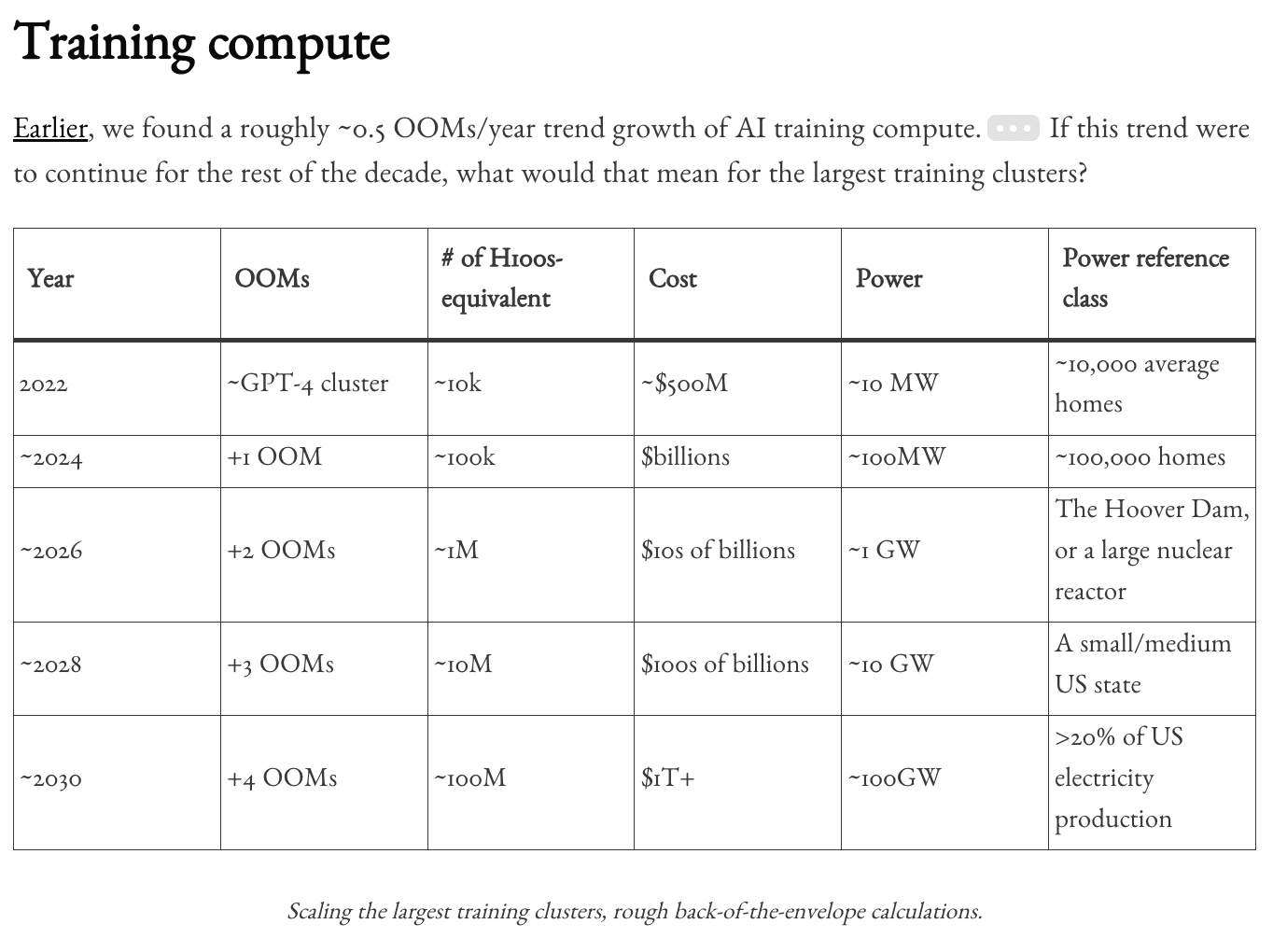

xAI's new planned scaleup follows one more step on the training compute timeline from Situational Awareness (among other projections, I imagine)

From ControlAI newsletter:

"xAI has announced plans to expand its Memphis supercomputer to house at least 1,000,000 GPUs. The supercomputer, called Colossus, already has 100,000 GPUs, making it the largest AI supercomputer in the world, according to Nvidia."

Unsure if it's built by 2026 but seems plausible based on quick search.

Something I'm worried about now is some RFK Jr/Dr. Oz equivalent being picked to lead on AI...

Realized I didn't linkpost on lesswrong, only forum - link to Reuters:

https://www.reuters.com/technology/artificial-intelligence/us-government-commission-pushes-manhattan-project-style-ai-initiative-2024-11-19/

From Reuters:

"We've seen throughout history that countries that are first to exploit periods of rapid technological change can often cause shifts in the global balance of power," Jacob Helberg, a USCC commissioner and senior advisor to software company Palantir's CEO, told Reuters.

I think it is true that (setting aside AI risk concerns), the US gov should, the moment it recognizes AGI (smarter than human AI) is possible, pursue it. It's the best use of resources, could lead to incredible economic/productivity/etc. growth, could lead to a decisive advantage over adversaries, could solve all sorts of problems.

"China is racing towards AGI ... It's critical that we take them extremely seriously," Helberg added.

This does not seem true to me though, unless Helberg and all have additional evidence. From the Dwarkesh podcast recently, it seemed to me (to be reductionist) that both Gwern and SemiAnalysis doubted China was truly scaling/AGI-pilled (yet). So this seems a bit more of a convenient statement from Helberg, and the next quote describes this commission as hawkish on China.

The USCC, established by Congress in 2000, provides annual recommendations on U.S.-China relations. Known for its hawkish policy proposals, the commission aims to guide lawmakers on issues of economic and strategic competition with China.

A quick OpenAI-o1 preview BOTEC for additional emissions from a sort of Leopold scenario ~2030, assuming energy is mostly provided by natural gas, since I was kinda curious. Not much time spent on this and took the results at face value. I (of course?) buy that emissions don't matter in short term, in a world where R&D is increasingly automated and scaled.

Phib:

Say an additional 20% of US electricity was added to our power usage (e.g. for AI) over the next 6 years, and it was mostly natural gas.

Also, assume AI inference is used at an increasing rate, say growing from today's level to the equivalent of 100 million H100s over the next 6 years, with more or less the same energy breakdown as today, although with more natural gas over time.

What do the emissions from such a build out of natural gas look like?

[Reasoning available here: https://chatgpt.com/share/672ed183-7c3c-800d-ab0e-d08020d072c7]

**Answer:**

The emissions from this build-out of natural gas over the next six years would be significant. Specifically:

- **Total Additional Emissions Over Six Years:** Approximately **1.136 billion metric tons of CO₂**.

- **Average Annual Emissions Increase:** About **189 million metric tons of CO₂ per year**.

- **Percentage of Current U.S. Emissions:** This represents an approximate **2.9% increase** over the current annual U.S. greenhouse gas emissions.

These emissions result from the increased natural gas usage required to meet the additional electricity demand—both from the general 20% increase in electricity consumption and the substantial growth in AI inference workloads powered by the equivalent of 100 million NVIDIA H100 GPUs.
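A quick sanity check on the arithmetic in the answer above, as a rough sketch. The only inputs are the figures quoted there; the US baseline is not stated in the answer, so the value below is simply what the quoted 2.9% would imply.

```python
# Figures quoted in the answer above.
total_additional_gt = 1.136   # Gt CO2 added over the six-year build-out
years = 6
quoted_share = 0.029          # the "2.9% increase" over current US emissions

# Average annual increase, converted from Gt to Mt.
annual_mt = total_additional_gt / years * 1000

# Assumption: this baseline is back-derived from the quoted share,
# it is not a number given in the answer itself.
implied_us_baseline_mt = annual_mt / quoted_share

print(f"average annual increase: {annual_mt:.0f} Mt CO2 per year")
print(f"implied US baseline:     {implied_us_baseline_mt:.0f} Mt CO2e per year")
```

The annual figure matches the quoted ~189 Mt, and the implied baseline lands at roughly 6.5 billion metric tons per year, so the two headline numbers are at least consistent with each other.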

Phib:

Imagine there is a similar buildout in China, if not larger, how do the emissions from all of the above (within some confidence interval) place us environmentally within 2 years after 2030 of such a buildout and increase in emissions? Within 10 years? Considering a more or less constant rate of emissions thereafter for each.

Conclusion

The combined emissions from the U.S. and China's buildouts would significantly impact the global carbon budget and environmental goals:

By 2032 (Two Years After 2030):

Additional Emissions: Approximately 2.44 billion metric tons of CO₂.

Impact: Uses about 0.42% of the remaining carbon budget for limiting warming to 1.5°C.

By 2040 (Ten Years After 2030):

Additional Emissions: Approximately 12.21 billion metric tons of CO₂.

Impact: Consumes about 2.1% of the carbon budget.

Cumulative Impact by 2040:

Total Additional Emissions: Around 19.54 billion metric tons of CO₂.

Percentage of Carbon Budget: Approximately 3.37%.

Environmental Implications:

Increased Difficulty in Meeting Climate Targets: The additional emissions make it more challenging to stay within the carbon budgets necessary to limit global warming to 1.5°C or even 2°C.

Accelerated Climate Change Effects: Higher emissions could lead to more severe climate impacts, such as extreme weather events, sea-level rise, and ecosystem disruptions.

Final Note:

These estimates are subject to uncertainties due to potential changes in technology, policy, and economic factors. Proactive measures, such as investing in renewable energy and improving energy efficiency, could mitigate some of these emissions. However, without significant efforts to reduce reliance on fossil fuels, the environmental impact will be substantial and pose a serious challenge to global climate goals.
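The combined US + China numbers above can be reproduced with a constant emission rate, sketched below. Two things here are inferred rather than stated in the answer: the carbon budget of about 580 Gt (backed out of the quoted percentages) and the reading of the "cumulative" figure as 16 years at the full rate (6 build-out years plus 10 after 2030).

```python
# Constant combined rate implied by "12.21 Gt over the ten years after 2030".
ANNUAL_RATE_GT = 12.21 / 10

# Assumption: remaining 1.5C budget inferred from the quoted percentages,
# not stated anywhere in the answer.
ASSUMED_BUDGET_GT = 580.0


def additional_emissions_gt(years: float) -> float:
    """Additional CO2 (Gt) emitted over `years` at the constant rate."""
    return ANNUAL_RATE_GT * years


def budget_share_pct(emissions_gt: float) -> float:
    """Share of the assumed carbon budget, in percent."""
    return emissions_gt / ASSUMED_BUDGET_GT * 100


scenarios = [
    ("by 2032 (2 years)", 2),
    ("by 2040 (10 years)", 10),
    ("cumulative (assumed 16 years)", 16),
]
for label, years in scenarios:
    emitted = additional_emissions_gt(years)
    print(f"{label}: {emitted:.2f} Gt CO2, "
          f"{budget_share_pct(emitted):.2f}% of assumed budget")
```

If that reading is right, the cumulative figure counts the build-out years at the full 2030 rate, which would overstate emissions during the ramp-up.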

Final Phib note: inference energy costs may be far greater than assumed above. I don't imagine a GPT-5 or GPT-6 that justifies further investment not also being adopted by a much larger proportion of the population (maybe 1 or 2 billion users, instead of 100 million).

Maybe we should buy like a really nice macbook right before we expect chips to become like 2x more expensive and/or Taiwan manufacturing is disrupted?

Especially if you think those same years will be an important time to do work or have a good computer.

I have a guess that this:

"require that self-improving software require human intervention to move forward on each iteration"

is the unspoken distinction occurring here, how constant the feedback loop is for self-improvement.

So, people talk about recursive self-improvement, but mean two separate things, one is recursive self-improving models that require no human intervention to move forward on each iteration (perhaps there no longer is an iterative release process, the model is dynamic and constantly improving), and the other is somewhat the current step paradigm where we get a GPT-N+1 model that is 100x the effective compute of GPT-N.

So Sam says, no way do we want a constant curve of improvement, we want a step function. In both cases models contribute to AI research, in one case it contributes to the next gen, in the other case it improves itself.

Beautiful, thanks for sharing

Benchmarks are weird. Imagine evaluating a human only on their ability to take a test. It's like asking, how do we measure Einstein? By his ability to take a test. Someone else who completes that test therefore IS Einstein (not necessarily at all; you can game tests in ways that aren't 'cheating', just by studying the relevant material, i.e. all the online content ever).

LLM's ability to properly guide someone through procedures is actually the correct way to evaluate language models. Not written description or solutions, but step by step guiding someone through something impressive, Can the model help me make a

Or even without a human, step by step completing a task.

(Cross comment from EAF)

Thank you for making the effort to write this post.

Reading Situational Awareness, I updated pretty hardcore into national security as the probable most successful future path, and now find myself a little chastened by your piece, haha [and just went around looking at other responses too, but yours was first and I think it's the most lit/evidence-based]. I think I bought into the "Other" argument for China and authoritarianism, and the ideal scenario of being ahead in a short timeline world so that you don't have to even concern yourself with difficult coordination, or even war, if it happens fast enough.

I appreciated learning about macrosecuritization and Sears' thesis. If I'm a good scholar I should also look into Sears' historical case studies of national securitization being inferior to macrosecuritization.

Other notes for me from your article included: Leopold's pretty bad handwaviness around pausing as simply, "not the way", his unwillingness to engage with alternative paths, the danger (and his benefit) of his narrative dominating, and national security actually being more at risk in the scenario where someone is threatening to escape mutually assured destruction. I appreciated the note that safety researchers were pushed out of/disincentivized in the Manhattan Project early and later disempowered further, and that a national security program would probably perpetuate itself even with a lead.

FWIW I think Leopold also comes to the table with a different background and set of assumptions, and I'm confused about this but charitably: I think he does genuinely believe China is the bigger threat versus the intelligence explosion, I don't think he intentionally frames the Other as China to diminish macrosecuritization in the face of AI risk. See next note for more, but yes, again, I agree his piece doesn't have good epistemics when it comes to exploring alternatives, like a pause, and he seems to be doing his darnedest narratively to say the path he describes is The Way (even capitalizing words like this), but...

One additional aspect of Leopold's beliefs that I don't believe is present in your current version of this piece, is that Leopold makes a pretty explicit claim that alignment is solvable and furthermore believes that it could be solved in a matter of months, from p. 101 of Situational Awareness:

Moreover, even if the US squeaks out ahead in the end, the difference between a 1-2 year and 1-2 month lead will really matter for navigating the perils of superintelligence. A 1-2 year lead means at least a reasonable margin to get safety right, and to navigate the extremely volatile period around the intelligence explosion and post-superintelligence. [Footnote 77: E.g., space to take an extra 6 months during the intelligence explosion for alignment research to make sure superintelligence doesn’t go awry, time to stabilize the situation after the invention of some novel WMDs by directing these systems to focus on defensive applications, or simply time for human decision-makers to make the right decisions given an extraordinarily rapid pace of technological change with the advent of superintelligence.]

I think this is genuinely a crux he has with the 'doomers', and to a lesser extent the AI safety community in general. He seems highly confident that AI risk is solvable (and will benefit from gov coordination), contingent on there being enough of a lead (which requires us to go faster to produce that lead) and good security (again, increase the lead).

Finally, I'm sympathetic to Leopold writing about the government as better than corporations to be in charge here (and I think the current rate of AI scaling makes this at some point likely (hit proto-natsec level capability before x-risk capability, maybe this plays out on the model gen release schedule)) and his emphasis on security itself seems pretty robustly good (I can thank him for introducing me to the idea of North Korea walking away with AGI weights). Also just the writing is pretty excellent.

Agree that this is a cool list, thanks, excited to come back to it.

I just read Three Body Problem and liked it, but got the same sense where the end of the book lost me a good deal and left a sour taste. (do plan to read sequels tho!)

Reminds me of this trend: https://mashable.com/article/chatgpt-make-it-more In which people ask Dalle to make a generated image more of some quality. More Swiss, bigger water bottle, and eventually you get ‘spirituality’ or something meta as the model tries its best to take a step up each time.

Also, I feel like the context being added to the prompt, as you go on in the context window and it takes some previous details from your conversation, is warbled and further prompts warbling.

Honestly, maybe further controversial opinion, but this [30 million for a board seat at what would become the lead co. for AGI, with a novel structure for nonprofit control that could work?] still doesn't feel like necessarily as bad a decision now as others are making it out to be?

The thing that killed all value of this deal was losing the board seat(s?), and I at least haven't seen much discussion of this as a mistake.

I'm just surprised so little prioritization was given to keeping this board seat, it was probably one of the most important assets of the "AI safety community and allies", and there didn't seem to be any real fight with Sam Altman's camp for it.

So Holden has the board seat, but has to leave because of COI, and endorses Toner to replace, "... Karnofsky cited a potential conflict of interest because his wife, Daniela Amodei, a former OpenAI employee, helped to launch the AI company Anthropic.

Given that Toner previously worked as a senior research analyst at Open Philanthropy, Loeber speculates that Karnofsky might’ve endorsed her as his replacement."

Like, maybe it was doomed if they only had one board seat (Open Phil) vs whoever else is on the board, and there's a lot of shuffling about as Musk and Hoffman also leave for COIs, but start of 2023 it seems like there is an "AI Safety" half to the board, and a year later there are now none. Maybe it was further doomed if Sam Altman has the, take the whole company elsewhere, card, but idk... was this really inevitable? Was there really not a better way to, idk, maintain some degree of control and supervision of this vital board over the years since OP gave the grant?

“they serendipitously chose guinea pigs, the one animal besides human beings and monkeys that requires vitamin C in its diet.“

This recent post I think describes this same phenomenon but not from the same level of ‘necessity’ as, say, cures to big problems. Kinda funny too: https://www.lesswrong.com/posts/oA23zoEjPnzqfHiCt/there-is-way-too-much-serendipity.

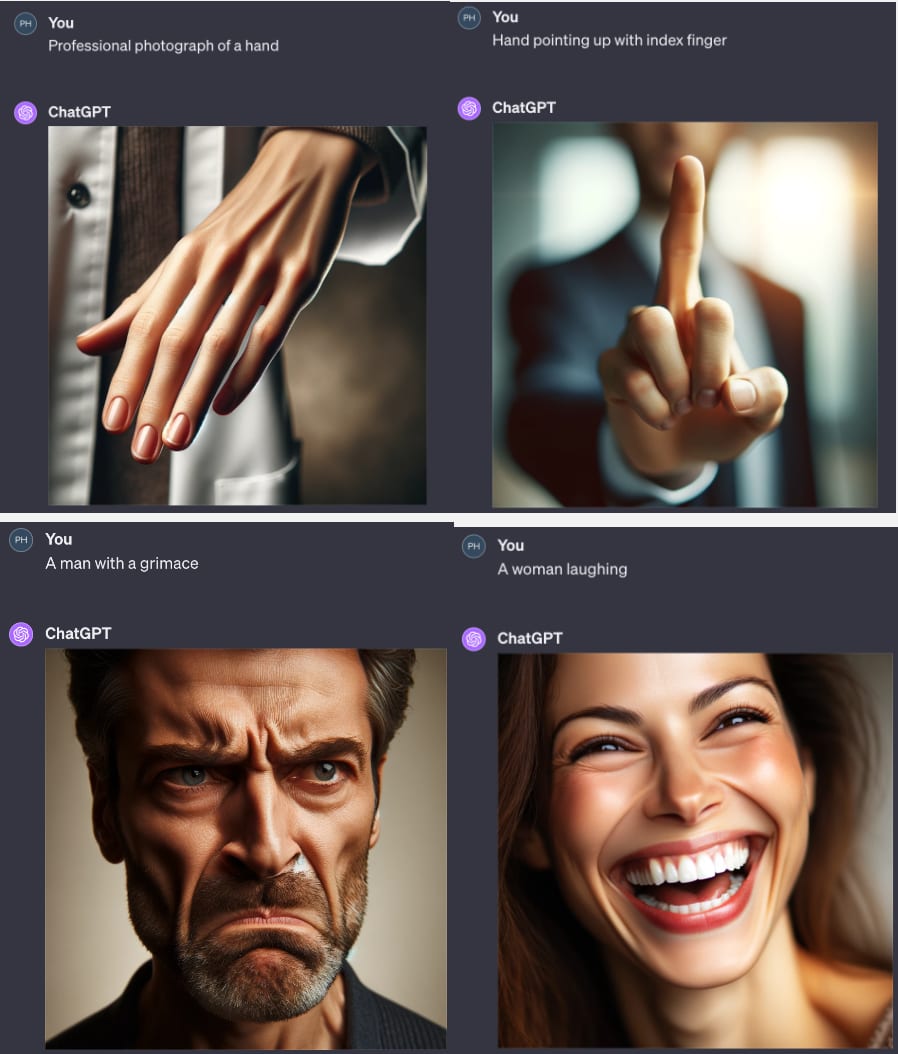

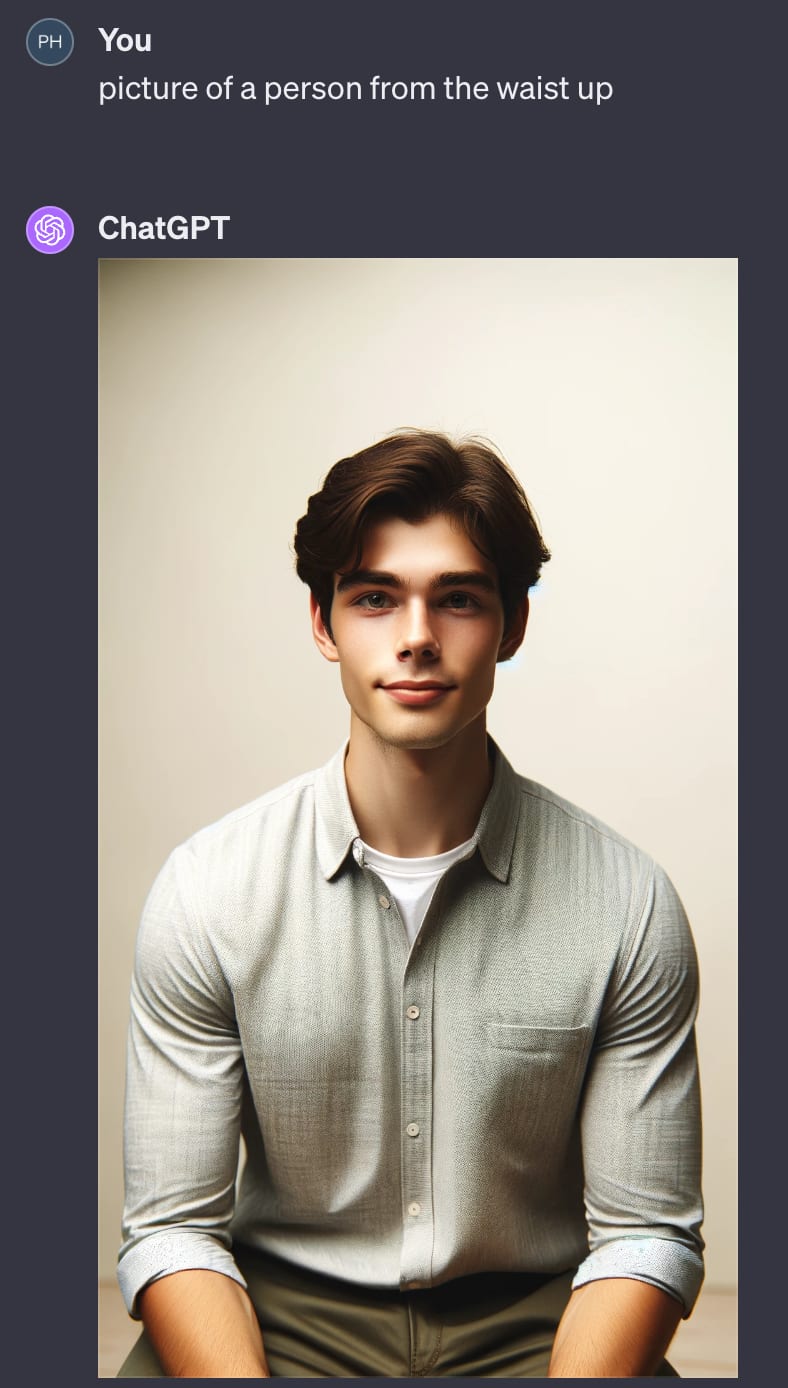

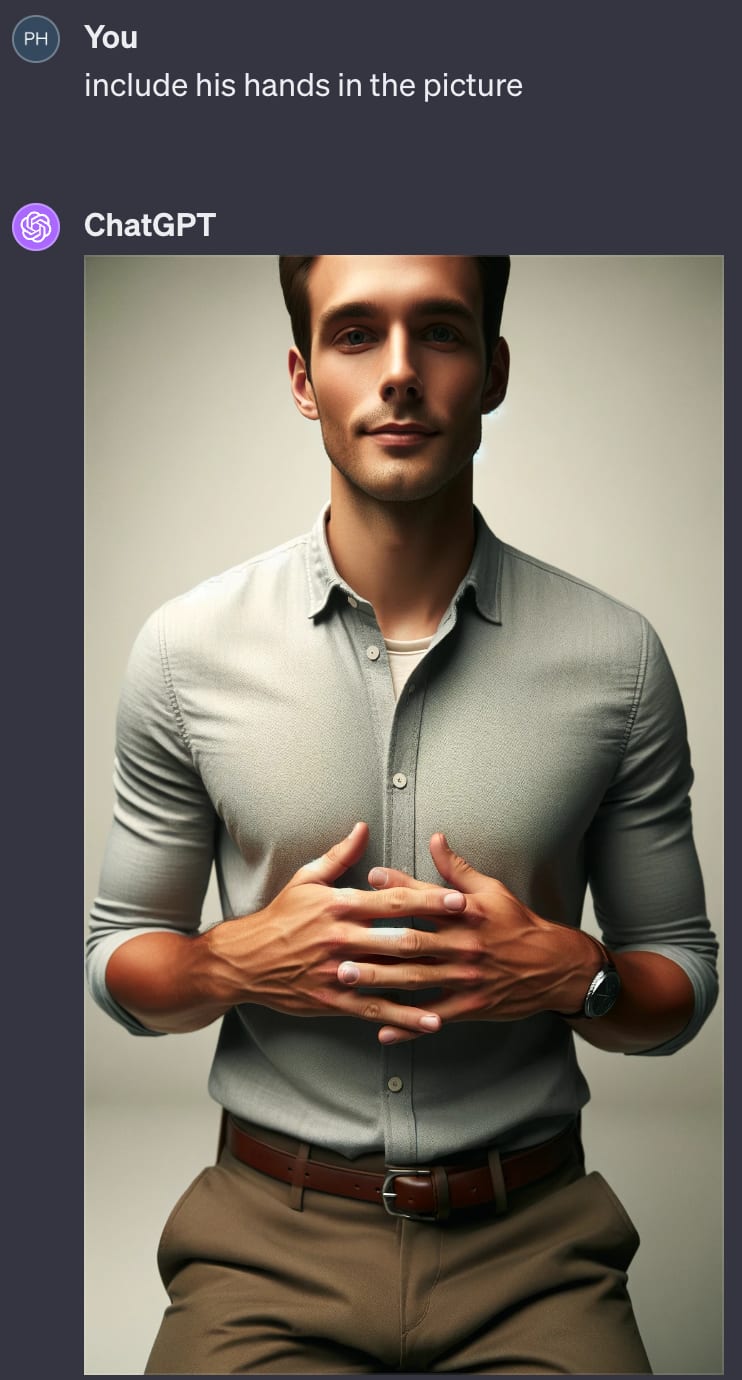

So here was my initial quick test, I haven't spent much time on this either, but have seen the same images of faces on subreddits etc. and been v impressed. I think asking for emotions was a harder challenge vs just making a believable face/hand, oops

I really appreciate your descriptions of the distinctive features of faces and of pareidolia, and do agree that faces are more often better represented than hands, specifically hands often have the more significant/notable issues (misshapen/missing/overlapped fingers). Versus with faces where there's nothing as significant as missing an eye, but it can be hard to portray something more specific like an emotion (though same can be said for, e.g. getting Dalle not to flip me off when I ask for an index finger haha).

Rather difficult to label or prompt a specific hand orientation you'd like as well, versus I suppose, an emotion (a lot more descriptive words for the orientation of a face than a hand)

So yeah, faces do work, and regardless of my thoughts on uncanny valley of some faces+emotions, I actually do think hands (OP subject) are mostly a geometric complexity thing, maybe we see our own hands so much that we are more sensitive to error? But they don't have the same meaning to them as faces for me (minute differences for slightly different emotions, and benefitting perhaps from being able to accurately tell).

I think if this were true, then it would also hold that faces are done rather poorly right now which, maybe? Doing some quick tests, yeah, both faces and hands at least on Dalle-3 seem to be similar levels of off to me.

Wow, I’m impressed it caught itself, was just trying to play with that 3 x 3 problem too. Thanks!

I don’t know [if I understand] full rules so don’t know if this satisfies, but here:

https://chat.openai.com/share/0089e226-fe86-4442-ba07-96c19ac90bd2

Kinda commenting on stuff like “Please don’t throw your mind away” or any advice not to fully defer judgment to others (and not intending to just straw man these! They’re nuanced and valuable, just meaning to next step it).

In my circumstance and I imagine many others who are young and trying to learn and trying to get a job, I think you have to defer to your seniors/superiors/program to a great extent, or at least to the extent where you accept or act on things (perform research, support ops) that you’re quite uncertain about.

Idk there’s a lot more nuance here to this conversation as with any, of course. Maybe nobody is certain of anything and they’re just staking a claim so that they can be proven right or wrong and experiment in this way, producing value in their overconfidence. But I do get a sense that young/new people coming into a field that is even slightly established are required, to some extent, to defer to others for their own sake.

I don’t mean to present myself as the “best arguments that could be answered here” or at all representative of the alignment community. But just wanted to engage. I appreciate your thoughts!

Well, one argument for potential doom doesn’t necessitate an adversarial AI, but rather people using increasingly powerful tools in dumb and harmful ways (in the same class of consideration for me as nuclear weapons; my dumb imagined situation of this is a government using AI to continually scale up surveillance and maybe we eventually get to a position like in 1984)

Another point is that a sufficiently intelligent and agentic AI would not need humans, it would probably eventually be suboptimal to rely on humans for anything. And it kinda feels to me like this is what we are heavily incentivized to design, the next best and most capable system. In terms of efficiency, we want to get rid of the human in the loop, that person’s expensive!

Idk the public access of some of these things, like with nonlinear's recent round, but seeing a lot of apps there and organized by category, reminded me of this post a little bit.

edit - in terms of seeing what people are trying to do in the space. Though I imagine this does not capture the biggest players that do have funding.

btw small note that I think accumulations of grant applications are probably pretty good sources of info.

BTW - this video is quite fun. Seems relevant re: Paperclip Maximizer and nanobots.

low commit here but I've previously used nanotech as an example (rather than a probable outcome) of a class of somewhat known unknowns - to portray possible future risks that we can imagine as possible while not being fully conceived. So while grey goo might be unlikely, it seems that the precursor to grey goo, a pretty intelligent system trying to mess us up, is the thing to be focused on, and this is one of its many possibilities that we can even imagine

I rather liked this post (and I’ll put it on both EAF and LW versions)

https://www.lesswrong.com/posts/PQtEqmyqHWDa2vf5H/a-quick-guide-to-confronting-doom

Particularly the comment by Jakob Kraus reminded me that many people have faced imminent doom (not of human species, but certainly quite terrible experiences).

Hi, writing this while on the go but just throwing it out there, this seems to be Sam Altman’s intent with OpenAI in pursuing fast timelines with slow takeoffs.

I am unaware of those decisions at the time. I imagine people are to some degree ‘making decisions under uncertainty’, even if that uncertainty could be resolved by info somewhere out there. Perhaps there’s some optimization of how much time you spend looking into something and how right you could expect to be?

Anecdote of me (not super rationalist-practiced, also just at times dumb) - I sometimes discover stuff I briefly took to be true in passing to be false later. Feels like there’s an edge of truth/falsehoods that we investigate pretty loosely but still use a heuristic of some valence of true/false maybe a bit too liberally at times.

LLMs as a new benchmark for human labor. Using ChatGPT as a control group versus my own efforts to see if my efforts are worth more than the (new) default

Thanks for writing this, enjoyed it. I was wondering how to best represent this to other people, perhaps with an example of 5 and 10 where you let a participant make the mistake, and then question their reasoning etc. lead them down the path laid out in your post of rationalization after the decision before finally you show them their full thought process in post. I could certainly imagine myself doing this and I hope I’d be able to escape my faulty reasoning…