Vote on worthwhile OpenAI topics to discuss

post by Ben Pace (Benito), jacobjacob · 2023-11-21T00:03:03.898Z · LW · GW · 55 comments


I (Ben) recently made a poll for voting on interesting disagreements to be discussed on LessWrong. It generated a lot of good topic suggestions and data about what questions folks cared about and disagreed on.

So, Jacob and I figured we'd try applying the same format to help people orient to the current OpenAI situation.

What important questions would you want to see discussed and debated here in the coming days? Suggest and vote below.

How to use the poll

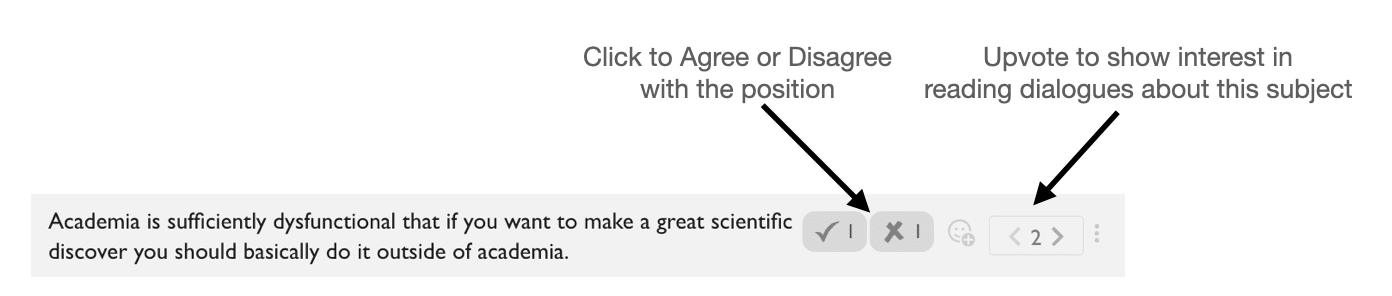

- Reacts: Click on the agree/disagree reacts to help people see how much disagreement there is on the topic.

- Karma: Upvote positions that you'd like to read discussion about.

- New Poll Option: Add new positions for people to take sides on. Please add the agree/disagree reacts to new poll options you make.

The goal is to show people where a lot of interest and disagreement lies. This can be used to find discussion and dialogue topics in the future.

55 comments

Comments sorted by top scores.

comment by Ben Pace (Benito) · 2023-11-20T21:40:36.065Z · LW(p) · GW(p)

Poll For Topics of Discussion and Disagreement

Use this thread to (a) upvote topics you're interested in reading about, (b) agree/disagree with positions, and (c) add new positions for people to vote on.

Replies from: jacobjacob, D0TheMath, jimrandomh, Unreal, jacobjacob, D0TheMath, jacobjacob, Benito, ChristianKl, TrevorWiesinger, Benito, jacobjacob, Oliver Sourbut, NicholasKross, jacobjacob, jacobjacob, Unreal, D0TheMath, Benito, Benito, Unreal, Unreal, D0TheMath, TrevorWiesinger, 1a3orn, D0TheMath, lahwran, jacobjacob, jacobjacob, TrevorWiesinger, Benito, TrevorWiesinger, TrevorWiesinger, TrevorWiesinger, jacobjacob, TrevorWiesinger, TrevorWiesinger, Oliver Sourbut, TrevorWiesinger, gesild-muka, jacobjacob, D0TheMath

↑ comment by jacobjacob · 2023-11-20T23:53:54.954Z · LW(p) · GW(p)

Open-ended: A dialogue between an OpenAI employee who signed the open letter, and someone outside opposed to the open letter, about their reasoning and the options.

(Up/down-vote if you're interested in reading discussion of this. React paperclip if you have an opinion and would be up for dialoguing)

↑ comment by Garrett Baker (D0TheMath) · 2023-11-21T00:46:45.859Z · LW(p) · GW(p)

We should expect something similar to this fiasco to happen if/when Anthropic's oversight board tries to significantly exercise their powers.

↑ comment by jimrandomh · 2023-11-21T00:45:31.763Z · LW(p) · GW(p)

Was Sam Altman acting consistently with the OpenAI charter prior to the board firing him?

↑ comment by jacobjacob · 2023-11-20T21:48:37.309Z · LW(p) · GW(p)

Open-ended: If >90% of employees leave OpenAI: what plan should Emmett Shear set for OpenAI going forwards?

(Up/down-vote if you're interested in reading discussion of this. React paperclip if you have an opinion and would be up for discussing)

↑ comment by Garrett Baker (D0TheMath) · 2023-11-21T00:47:03.612Z · LW(p) · GW(p)

We should expect something similar to this fiasco to happen if/when Anthropic's responsible scaling policies tell them to stop scaling.

↑ comment by jacobjacob · 2023-11-20T21:50:19.517Z · LW(p) · GW(p)

Open-ended: If >50% of employees end up staying at OpenAI: how, if at all, should OpenAI change its structure and direction going forwards?

(Up/down-vote if you're interested in reading discussion of this. React paperclip if you have an opinion and would be up for discussing)

↑ comment by Ben Pace (Benito) · 2023-11-20T23:38:20.195Z · LW(p) · GW(p)

The way this firing has played out so far (to Monday Nov 20th) is evidence that the non-profit board effectively was not able to fire the CEO.

↑ comment by ChristianKl · 2023-11-21T06:46:31.927Z · LW(p) · GW(p)

The secrecy in which OpenAI's board operated made it less trustworthy. Boards at places like Anthropic should update to be less secretive and more transparent.

↑ comment by trevor (TrevorWiesinger) · 2023-11-21T02:39:30.976Z · LW(p) · GW(p)

There is a >80% chance that US-China affairs (including the AI race between the US and China) are an extremely valuable or crucial lens for understanding the current conflict over OpenAI (the conflict itself, not the downstream implications), as opposed to being a merely somewhat-helpful lens.

↑ comment by Ben Pace (Benito) · 2023-11-20T21:43:37.460Z · LW(p) · GW(p)

I assign >50% to this claim: The board should be straightforward with its employees about why they fired the CEO.

↑ comment by jacobjacob · 2023-11-21T00:07:19.469Z · LW(p) · GW(p)

It would be a promising move, to reduce existential risk, for Anthropic to take over what will remain of OpenAI and consolidate efforts into a single project.

↑ comment by Oliver Sourbut · 2023-11-21T09:13:20.736Z · LW(p) · GW(p)

The mass of OpenAI employees rapidly performing identical rote token actions in public (identical tweets and open-letter swarming) is a poor indicator for their collective epistemics (e.g. manipulated, mind-killed, ...)

↑ comment by Nicholas / Heather Kross (NicholasKross) · 2023-11-21T00:40:20.633Z · LW(p) · GW(p)

OpenAI should have an internal-only directory allowing employees and leadership to write up and see each other's beliefs about AI extinction risk and alignment approaches.

↑ comment by jacobjacob · 2023-11-20T23:49:54.238Z · LW(p) · GW(p)

If the board neither abided by cooperative principles in the firing nor acted on substantial evidence to warrant the firing in line with the charter, and nonetheless were largely EA motivated, then EA should be disavowed and dismantled.

↑ comment by jacobjacob · 2023-11-20T23:42:17.200Z · LW(p) · GW(p)

The events of the OpenAI board CEO-ousting on net reduced existential risk from AGI.

↑ comment by Garrett Baker (D0TheMath) · 2023-11-21T01:25:59.820Z · LW(p) · GW(p)

Neither Microsoft nor OpenAI will have the best language model in a year.

↑ comment by Ben Pace (Benito) · 2023-11-20T21:49:33.618Z · LW(p) · GW(p)

Insofar as lawyers are recommending against the former OpenAI board speaking about what happened, the board should probably ignore them.

↑ comment by Ben Pace (Benito) · 2023-11-20T21:44:02.416Z · LW(p) · GW(p)

I assign >80% probability to this claim: the board should be straightforward with its employees about why they fired the CEO.

↑ comment by Unreal · 2023-11-21T03:01:29.727Z · LW(p) · GW(p)

The OpenAI Charter, if fully & faithfully followed and effectively stood behind, including possibly shuttering the whole project down if it came down to it, would prevent OpenAI from being a major contributor to AI x-risk. In other words, as long as people actually followed this particular Charter to the letter, it is sufficient for curtailing AI risk, at least from this one org.

↑ comment by Unreal · 2023-11-21T03:06:22.108Z · LW(p) · GW(p)

Media & Twitter reactions to OpenAI developments were largely unhelpful, specious, or net-negative for overall discourse around AI and AI Safety. We should reflect on how we can do better in the future and possibly even consider how to restructure media/Twitter/etc to lessen the issues going forward.

↑ comment by Garrett Baker (D0TheMath) · 2023-11-21T01:36:50.640Z · LW(p) · GW(p)

Your impression is that Sam Altman is deceitful, manipulative, and often lies in his professional relationships.

(Edit: Do not use the current poll result in your evaluation here)

↑ comment by trevor (TrevorWiesinger) · 2023-11-21T01:10:45.992Z · LW(p) · GW(p)

If a mass exodus happens, it will mostly be the fodder employees, and more than 30% of OpenAI's talent will remain (e.g. if the minds of Ilya and two other people contain more than 30% of OpenAI's talent, and they all stay).

↑ comment by Garrett Baker (D0TheMath) · 2023-11-21T01:05:37.783Z · LW(p) · GW(p)

There is a person or entity deploying FUD tactics in a strategically relevant way, which is driving such feelings on Twitter, in contrast to the situation just naturally or unintentionally provoking fear, uncertainty, and doubt. For example, Sam privately threatening to sue the board for libel & wrongful termination if they're more specific about why Sam was fired or don't support the dissolution of the board, and the board caving or at least taking more time.

↑ comment by the gears to ascension (lahwran) · 2023-11-21T04:09:19.168Z · LW(p) · GW(p)

This poll has too many questions of fact, and therefore questions of fact should be downvoted, so that questions of policy can be upvoted in their stead. Discuss below.

↑ comment by jacobjacob · 2023-11-20T23:58:45.742Z · LW(p) · GW(p)

If there was actually a spooky capabilities advance that convinced the board that drastic action was needed, then the board's actions were on net justified, regardless of what other dynamics were at play and whether cooperative principles were followed.

↑ comment by jacobjacob · 2023-11-20T21:44:40.911Z · LW(p) · GW(p)

It is important that the board release another public statement explaining their actions, and providing any key pieces of evidence.

↑ comment by trevor (TrevorWiesinger) · 2023-11-21T01:22:56.115Z · LW(p) · GW(p)

If most of OpenAI is absorbed into Microsoft, they will ultimately remain sovereign and resist further capture, e.g. using rationality, FDT/UDT, coordination theory, CFAR training, glowfic osmosis, or Microsoft underestimating them sufficiently to fail to capture them further, or overestimating their predictability, or being deterred, or Microsoft not having the appetite to make an attempt at all.

↑ comment by Ben Pace (Benito) · 2023-11-21T01:44:15.458Z · LW(p) · GW(p)

I assign more than 20% probability to this claim: the firing of Sam Altman was part of a plan to merge OpenAI with Anthropic.

↑ comment by trevor (TrevorWiesinger) · 2023-11-21T01:31:05.612Z · LW(p) · GW(p)

Microsoft is not actively aggressive or trying to capture OpenAI, and is largely passive in the conflict, e.g. Sam or Greg or investors approached Microsoft with the exodus and letter idea, and Microsoft was either clueless or misled about the connection between the board and EA/AI safety.

↑ comment by trevor (TrevorWiesinger) · 2023-11-21T02:53:30.212Z · LW(p) · GW(p)

↑ comment by trevor (TrevorWiesinger) · 2023-11-21T02:42:20.549Z · LW(p) · GW(p)

If >80% of Microsoft employees were signed up for Cryonics, as opposed to ~0% now, that would indicate that Microsoft is sufficiently future-conscious to make it probably net-positive for them to absorb OpenAI.

↑ comment by jacobjacob · 2023-11-21T00:00:30.045Z · LW(p) · GW(p)

New leadership should shut down OpenAI.

↑ comment by trevor (TrevorWiesinger) · 2023-11-21T00:56:06.450Z · LW(p) · GW(p)

Richard Ngo, the person, signed the letter (as opposed to a fake signature)

↑ comment by trevor (TrevorWiesinger) · 2023-11-21T00:46:11.821Z · LW(p) · GW(p)

The letter indicating ~700 employees will leave if Altman and Brockman do not return, contains >50 fake signatures.

↑ comment by Oliver Sourbut · 2023-11-21T09:12:44.903Z · LW(p) · GW(p)

The mass of OpenAI employees rapidly performing identical rote token actions in public (identical tweets and open-letter swarming) indicates a cult of personality around Sam

↑ comment by trevor (TrevorWiesinger) · 2023-11-21T01:00:20.115Z · LW(p) · GW(p)

The conflict was not started by the board, but rather the board reacting to a move made by someone else, or a discovery of a hostile plot previously initiated and advanced by someone else.

↑ comment by Gesild Muka (gesild-muka) · 2023-11-21T12:44:09.783Z · LW(p) · GW(p)

The main reason for Altman's firing was due to a scandal of a more personal nature mostly unrelated to the everyday or strategic operations of OpenAI.

↑ comment by jacobjacob · 2023-11-21T00:04:25.779Z · LW(p) · GW(p)

EAs need to aggressively recruit and fund additional ambitious Sams, to ensure there's one to sacrifice for Samsgiving November 2024.

↑ comment by Garrett Baker (D0TheMath) · 2023-11-21T00:54:42.721Z · LW(p) · GW(p)

comment by Eli Tyre (elityre) · 2023-11-21T03:56:15.262Z · LW(p) · GW(p)

Most of these are questions of fact, which I guess we could speculate about, but ultimately, most of us don't know, and those who know aren't saying.

What I would really like is interviews with people who have more context, either on the situation or on the characters of the people involved (executed with care and respect, for that second one in particular).

comment by Unreal · 2023-11-21T16:57:23.135Z · LW(p) · GW(p)

I was asked to clarify my position about why I voted 'disagree' with "I assign >50% to this claim: The board should be straightforward with its employees about why they fired the CEO."

I'm putting a maybe-unjustified high amount of trust in all the people involved, and from that, my prior is very high on "for some reason, it would be really bad, inappropriate, or wrong to discuss this in a public way." And given that OpenAI has ~800 employees, telling them would basically count as a 'public' announcement. (I would update significantly on the claim if it was only a select group of trusted employees, rather than all of them.)

To me, people seem too-biased in the direction of "this info should be public"—maybe with the assumption that "well I am personally trustworthy, and I want to know, and in fact, I should know in order to be able to assess the situation for myself." Or maybe with the assumption that the 'public' is good for keeping people accountable and ethical. Meaning that informing the public would be net helpful.

I am maybe biased in the direction of: The general public overestimates its own trustworthiness and ability to evaluate complex situations, especially without most of the relevant context.

My overall experience is that the involvement of the public makes situations worse, as a general rule.

And I think the public also overestimates their own helpfulness, post-hoc. So when things are handled in a public way, the public assesses their role in a positive light, but they rarely have ANY way to judge the counterfactual. And in fact, I basically NEVER see them even ACKNOWLEDGE the counterfactual. Which makes sense because that counterfactual is almost beyond-imagining. The public doesn't have ANY of the relevant information that would make it possible to evaluate the counterfactual.

So in the end, they just default to believing that it had to play out in the way it did, and that the public's involvement was either inevitable or good. And I do not understand where this assessment comes from, other than availability bias?

The involvement of the public, in my view, incentivizes more dishonesty, hiding, and various forms of deception. The public is usually NOT in a position to judge complex situations and lacks much of the relevant context (and also often isn't particularly clear about ethics, IMO), so people who ARE extremely thoughtful, ethically minded, high-integrity, etc. are often put in very awkward binds when it comes to trying to interface with the public. And so I believe it's better for the public not to be involved if they don't have to be.

I am a strong proponent of keeping things close to the chest and keeping things within more trusted, high-context, in-person circles, and of avoiding online involvement as much as possible for highly complex, high-touch situations. Does this mean OpenAI should keep it purely internal? No, they should have outside advisors, etc. Does this mean no employees should know what's going on? No, some of them should—the ones who are high-level, responsible, and trustworthy, and they can then share what needs to be shared with the people under them.

Maybe some people believe that all ~800 employees deserve to know why their CEO was fired. Like, as a courtesy or general good policy or something. I think it depends on the actual reason. I can envision certain reasons that don't need to be shared, and I can envision reasons that ought to be shared.

I can envision situations where sharing the reasons could potentially damage AI Safety efforts in the future. Or disable similar groups from being able to make really difficult but ethically sound choices—such as shutting down an entire company. I do not want to disable groups from being able to make extremely unpopular choices that ARE, in fact, the right thing to do.

"Well if it's the right thing to do, we, the public, would understand and not retaliate against those decision-makers or generally cause havoc" is a terrible assumption, in my view.

I am interested in brainstorming, developing, and setting up really strong and effective accountability structures for orgs like OpenAI, and I do not believe most of those effective structures will include 'keep the public informed' as a policy. More often the opposite.

Replies from: faul_sname

↑ comment by faul_sname · 2023-11-21T20:37:24.204Z · LW(p) · GW(p)

The board's initial statement said:

Mr. Altman’s departure follows a deliberative review process by the board, which concluded that he was not consistently candid in his communications with the board, hindering its ability to exercise its responsibilities. The board no longer has confidence in his ability to continue leading OpenAI.

That is already a public statement that they are firing Sam Altman for cause, and that the cause is specifically that he lied to the board about something material. That's a perfectly fine public statement to make, if Sam Altman has in fact lied to the board about something material. Even a statement to the effect of "the board stands by its decision, but we are not at liberty to comment on the particulars of the reasons for Sam Altman's departure at this time" would be better than what we've seen (because that would say "yes there was actual misconduct, no we're not going to go into more detail"). The absence of such a statement implies that maybe there was no specific misconduct though.

Replies from: Unreal

↑ comment by Unreal · 2023-11-21T20:45:29.088Z · LW(p) · GW(p)

I don't interpret that statement in the same way.

You interpreted it as 'lied to the board about something material'. But to me, it also might mean 'wasn't forthcoming enough for us to trust him' or 'speaks in misleading ways (but not necessarily on purpose)' or it might even just be somewhat coded language for 'difficult to work with + we're tired of trying to work with him'.

I don't know why you latch onto the interpretation that he definitely lied about something specific.

Replies from: faul_sname

↑ comment by faul_sname · 2023-11-21T22:35:06.432Z · LW(p) · GW(p)

I'm interpreting this specifically through the lens of "this was a public statement". The board definitely had the ability to execute steps like "ask ChatGPT for some examples of concrete scenarios that would lead a company to issue that statement". The board probably had better options than "ask ChatGPT", but that should still serve as a baseline for how informed one would expect them to be about the implications of their statement.

Here are some concrete example scenarios ChatGPT gives that might lead to that statement being given:

- Financial Performance Misrepresentation: Four years into his tenure, CEO Mr. Ceoface of FooBarCorp, a leading ERP software company, had been painting an overly rosy picture of the company's financial health in board meetings. He reported consistently high revenue projections and downplayed the mounting operational costs to keep investor confidence buoyant. However, an unexpected external audit revealed significant financial discrepancies. The actual figures showed that the company was on the brink of a financial crisis, far from the flourishing image Mr. Ceoface had portrayed. This breach of trust led to his immediate departure.

- Undisclosed Risks in Business Strategy: Mr. Ceoface, the ambitious CEO of FooBarCorp, had spearheaded a series of high-profile acquisitions to dominate the ERP market. He assured the board of minimal risk and substantial rewards. However, he failed to disclose the full extent of the debt incurred and the operational challenges of integrating these acquisitions. When several of these acquisitions began underperforming, causing a strain on the company’s resources, the board realized they had not been fully informed about the potential pitfalls, leading to a loss of confidence in Mr. Ceoface's leadership.

- Compliance and Ethical Issues: Under Mr. Ceoface's leadership, FooBarCorp had engaged in aggressive competitive practices that skirted the edges of legal and ethical norms. While these practices initially drove the company's market share upwards, Mr. Ceoface kept the board in the dark about the potential legal and ethical ramifications. The situation came to a head when a whistleblower exposed these practices, leading to public outcry and regulatory scrutiny. The board, feeling blindsided and questioning Mr. Ceoface’s judgment, decided to part ways with him.

- Personal Conduct and Conflict of Interest: Mr. Ceoface, CEO of FooBarCorp, had personal investments in several small tech startups, some of which became subcontractors and partners of FooBarCorp. He neglected to disclose these interests to the board, viewing them as harmless and separate from his role. However, when an investigative report revealed that these startups were receiving preferential treatment and contracts from FooBarCorp, the board was forced to confront Mr. Ceoface about these undisclosed conflicts of interest. His failure to maintain professional boundaries led to his immediate departure.

- Technology and Product Missteps: Eager to place FooBarCorp at the forefront of innovation, Mr. Ceoface pushed for the development of a cutting-edge, AI-driven ERP system. Despite internal concerns about its feasibility and market readiness, he continuously assured the board of its progress and potential. However, when the product was finally launched, it was plagued with technical issues and received poor feedback from key clients. The board, having been assured of its success, felt misled by Mr. Ceoface’s optimistic but unrealistic assessments, leading to a decision to replace him.

What all of these things have in common is that they involve misleading the board about something material. "Not fully candid", in the context of corporate communications, means "liar liar pants on fire", not "sometimes they make statements and those statements, while true, vaguely imply something that isn't accurate".

comment by Ben Pace (Benito) · 2023-11-21T00:14:26.009Z · LW(p) · GW(p)

I am interested in info sharing and discussion and hope this poll will help. However, I am unsure whether this poll is encouraging people to "pick their positions" too quickly, while the proverbial fog of war is still thick (I feel that way when considering agree/disagree voting on some of the poll options). I am interested in hearing if others have that reaction (via react, comment, or DM). My guess is I am unlikely to take this down but it will inform whether we do this sort of thing in the future.

Replies from: D0TheMath, NicholasKross, ryan_b, cata

↑ comment by Garrett Baker (D0TheMath) · 2023-11-21T01:07:56.666Z · LW(p) · GW(p)

Encouraging an "unsure" button by default may fix this fear.

↑ comment by Nicholas / Heather Kross (NicholasKross) · 2023-11-21T00:45:37.127Z · LW(p) · GW(p)

Anonymity for one or more actions (voting on polls? Creating options?) should maybe be on the table. Then again, it ofc can send the wrong message and create its own incentives(?).

↑ comment by cata · 2023-11-22T07:38:14.788Z · LW(p) · GW(p)

I don't feel this pressure. I just decline to answer when I don't have a very substantial opinion. I do notice myself sort of judging the people who voted on things where clearly the facts are barely in, though, which is maybe an unfortunate dynamic, since others may reasonably interpret it as "feel free to take your best guess."

comment by Nicholas / Heather Kross (NicholasKross) · 2023-11-22T07:11:10.047Z · LW(p) · GW(p)

OpenAI should have an internal-only directory allowing employees and leadership to write up and see each other's beliefs about AI extinction risk and alignment approaches.

To expand on my option a bit more: OpenAI could make space for real in-depth dissent by doing something like "a legally binding commitment to not fire (or deny severance, even for quitting employees) anyone who shares their opinion, for the next 1-2 years."

This policy is obviously easy to exploit to get salary without working, but honestly that's a low cost given the stakes. (And unlikely, too: If you already work at OpenAI, but are corrupt, surely you could do something bigger than "collect 2 years of salary while doing nothing"?)

comment by Oliver Sourbut · 2023-11-21T15:46:29.967Z · LW(p) · GW(p)

I made at least two suggestions. One got decently upvoted (so far), another downvoted into negatives (so far)... but those two were substantially similar enough that I can't see how the question of whether to discuss them as propositions could arouse such different responses! I'm also wondering what is being expressed by an explicit downvote of a poll suggestion here - as opposed to simply not upvoting (which is what I've done for suggestions I'm not excited about). Is it something like, 'I expect discussing this proposition to be actively harmful'?

Replies from: evhub