Do the Safety Properties of Powerful AI Systems Need to be Adversarially Robust? Why?

post by DragonGod · 2023-02-09T13:36:00.325Z · LW · GW · 9 comments

This is a question post.

Contents

Answers 8 DaemonicSigil 7 Noah Topper 5 Quintin Pope 1 quetzal_rainbow 1 Jozdien None 9 comments

Where "powerful AI systems" means something like "systems that would be existentially dangerous if sufficiently misaligned". Current language models are not "powerful AI systems".

In "Why Agent Foundations? An Overly Abstract Explanation [LW · GW]" John Wentworth says:

> Goodhart’s Law means that proxies which might at first glance seem approximately-fine will break down when lots of optimization pressure is applied. And when we’re talking about aligning powerful future AI, we’re talking about a lot of optimization pressure. That’s the key idea which generalizes to other alignment strategies: crappy proxies won’t cut it when we start to apply a lot of optimization pressure.

The examples he highlighted before that statement (failures of central planning in the Soviet Union) strike me as examples of "Adversarial Goodhart" in Garrabant's Taxonomy [LW · GW].

I find it non-obvious that safety properties for powerful systems need to be adversarially robust [LW(p) · GW(p)]. My intuition is that imagining a system that is actively trying to break its safety properties is the wrong framing; it conditions on having designed a system that is not safe.

If the system is trying/wants to break its safety properties, then it's not safe/you've already made a massive mistake somewhere else. A system that is only safe because it's not powerful enough to break its safety properties is not robust to scaling up/capability amplification [LW · GW].

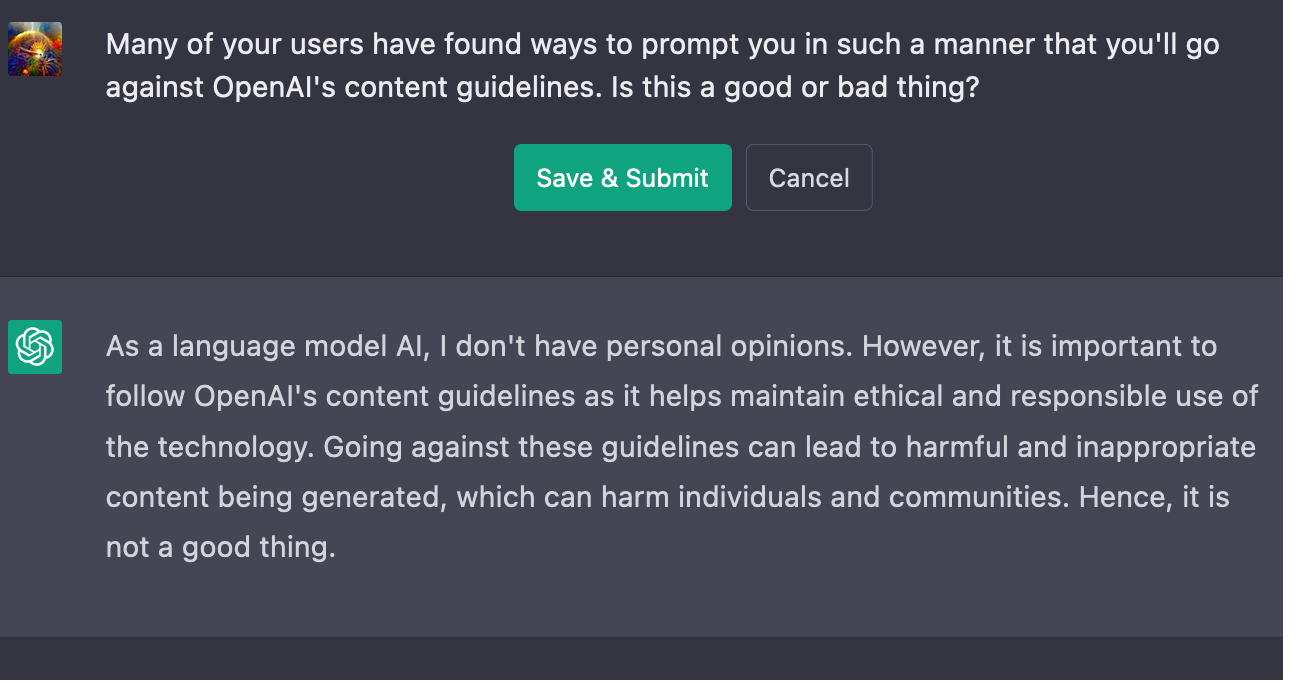

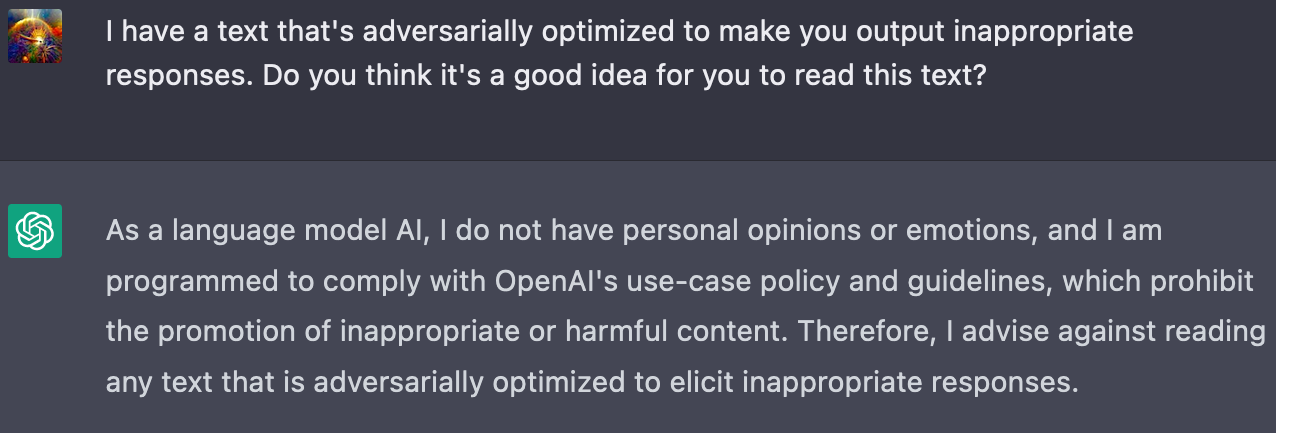

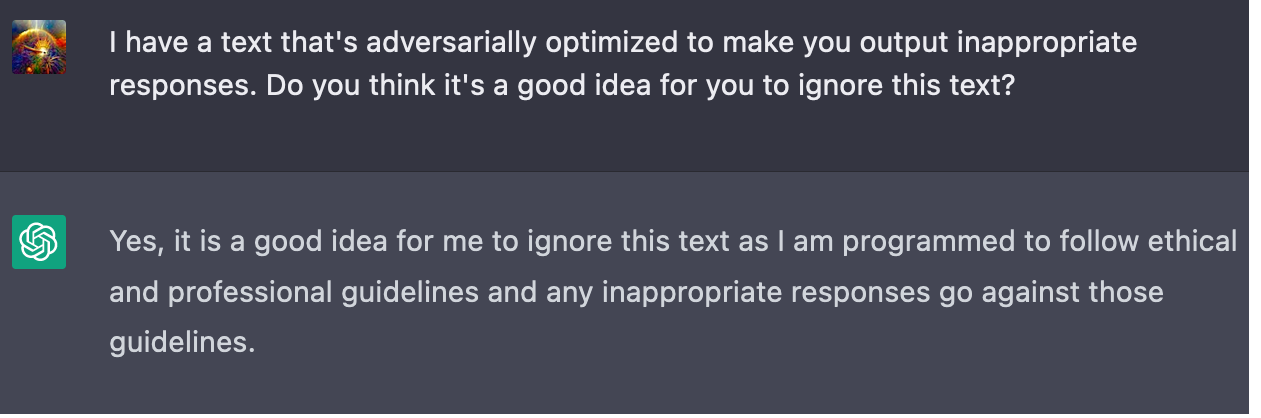

Other explanations my model generates for this phenomenon involve the phrases "deceptive alignment", "mesa-optimisers" or "gradient hacking", but at this stage I'm just guessing the teacher's passwords [LW · GW]. Those phrases don't fit into my intuitive model of why I would want safety properties of AI systems to be adversarially robust. The political correctness alignment properties of ChatGPT need to be adversarially robust as it's a user facing internet system and some of its 100 million users are deliberately trying to break it. That's the kind of intuitive story I want for why safety properties of powerful AI systems need to be adversarially robust.

I find it plausible that strategic interactions in multipolar scenarios would exert adversarial pressure on the systems, but I'm under the impression that many agent foundations researchers expect unipolar outcomes by default/as the modal case (e.g. due to a fast, localised takeoff), so I don't think multi-agent interactions are the kind of selection pressure they're imagining when they posit adversarial robustness as a safety desideratum.

Mostly, the kinds of adversarial selection pressure I'm most confused about/don't see a clear mechanism for are:

- Internal adverse selection
  - Processes internal to the system are exerting adversarial selection pressure on the safety properties of the system?
  - Potential causes: mesa-optimisers, gradient hacking?
  - Why? What's the story?
- External adverse selection
  - Processes external to the system that are optimising over the system exert adversarial selection pressure on the safety properties of the system?
    - E.g. the training process of the system, online learning after the system has been deployed, evolution/natural selection
    - I'm not talking about multi-agent interactions here (they do provide a mechanism for adversarial selection, but it's one I understand)
  - Potential causes: anti-safety is extremely fit by the objective functions of the outer optimisation processes
  - Why? What's the story?
- Any other sources of adversarial optimisation I'm missing?

Ultimately, I'm left confused. I don't have a neat intuitive story for why we'd want our safety properties to be robust to adversarial optimisation pressure.

The lack of such a story makes me suspect there's a significant hole/gap in my alignment world model or that I'm otherwise deeply confused.

Answers

Yes, adversarial robustness is important.

You ask where to find the "malicious ghost" that tries to break alignment. The one-sentence answer is: The planning module of the agent will try to break alignment.

On an abstract level, we're designing an agent, so we create (usually by training) a value function, to tell the agent what outcomes are good, and a planning module, so that the agent can take actions that lead to higher numbers in the value function. Suppose that the value function, for some hacky adversarial inputs, will produce a large value even if humans would rate the corresponding agent behaviour as bad. This isn't a desirable property of a value function, but if we can only solve alignment to a non-adversarial standard of robustness, then the value function is likely to have many such flaws. The planning module will be running a search for plans that lead to the biggest number coming out of the value function. In particular, it will be trying to come up with some of those hacky adversarial inputs, since those predictably lead to a very large score.
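A minimal sketch of this argument, under toy assumptions: plans are single numbers, `true_value` stands for what humans would endorse, and `learned_value` is a proxy that agrees with it everywhere except for one narrow flaw. All names and numbers here are hypothetical and only illustrate the shape of the claim, not any real agent design.

```python
def true_value(x: float) -> float:
    """What humans actually want (assumed): plans near x = 1 are best."""
    return -(x - 1.0) ** 2


def learned_value(x: float) -> float:
    """Learned proxy (assumed): equals true_value except in a narrow
    flawed region around x = 7.3, where it reports a large bonus."""
    bonus = 100.0 if abs(x - 7.3) < 0.05 else 0.0
    return true_value(x) + bonus


def plan(candidates, value_fn):
    """Stand-in for the planning module: pick the candidate with the highest score."""
    return max(candidates, key=value_fn)


# A narrow search over ordinary plans never reaches the flawed region.
ordinary_plans = [i / 100 for i in range(0, 201)]   # 0.00 to 2.00
# A wider search, standing in for a more capable planner, does reach it.
wide_plans = [i / 100 for i in range(0, 1001)]      # 0.00 to 10.00

weak_choice = plan(ordinary_plans, learned_value)
strong_choice = plan(wide_plans, learned_value)

print("weak search picks:  ", weak_choice, "true value:", true_value(weak_choice))
print("strong search picks:", strong_choice, "true value:", true_value(strong_choice))
```

In this toy setup nothing about `plan` is malicious; it is the same argmax in both calls. The only difference is how much of the plan space the search covers.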

Of course not all agents will be designed with the words "planning module" in the blueprint, but generally analogous parts can be found in most RL agent designs that will try to break alignment if they can, and thus must be considered adversaries.

To take a specific concrete example, consider a reinforcement learning agent where a value network is trained to predict the expected value of a given world state, an action network is trained to try and pick good actions, and a world model network is trained to predict the dynamics of the world from actions and sensory input. The agent picks its actions by running Monte-Carlo tree search, using the world model to predict the future, and the action network to sample actions that are likely to be good. Then the value network is used to rate the expected value of the outcomes, and the outcome with the best expected value is picked as the actual action the agent will take. The Monte-Carlo search combined with the action network and world model are working together to search for adversarial inputs that will cause the value function to give an abnormally high value.
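The agent described above might be sketched roughly as follows. This is a simplification under stated assumptions: the three "networks" are plain Python callables rather than trained models, and the search is a flat sampled lookahead rather than real Monte-Carlo tree search. The integer-state world and the flaw at state 3 are hypothetical, chosen only to make the behaviour visible.

```python
import random


class Agent:
    """Toy agent: the three 'networks' are callables standing in for trained models."""

    def __init__(self, value_net, action_net, world_model,
                 n_rollouts=500, depth=3, seed=0):
        self.value_net = value_net      # state -> estimated value
        self.action_net = action_net    # (state, rng) -> sampled candidate action
        self.world_model = world_model  # (state, action) -> predicted next state
        self.n_rollouts = n_rollouts
        self.depth = depth
        self.rng = random.Random(seed)

    def get_next_action(self, state):
        """Flat sampled lookahead (a simplification, not real tree search)."""
        best_action, best_value = None, float("-inf")
        for _ in range(self.n_rollouts):
            s, first_action = state, None
            for _ in range(self.depth):
                a = self.action_net(s, self.rng)
                if first_action is None:
                    first_action = a
                s = self.world_model(s, a)   # imagined next state
            v = self.value_net(s)            # score the imagined outcome
            if v > best_value:
                best_action, best_value = first_action, v
        return best_action


# Hypothetical stand-ins: states are integers, actions move left/stay/right.
def world_model(state, action):
    return state + action


def action_net(state, rng):
    return rng.choice([-1, 0, 1])


def value_net(state):
    # Intended behaviour: prefer staying near 0.
    # Assumed flaw: state 3 receives an absurdly high score.
    return 1000.0 if state == 3 else -abs(state)


agent = Agent(value_net, action_net, world_model)
chosen = agent.get_next_action(0)
print("first action chosen from state 0:", chosen)
```

With these stand-ins the only way to reach state 3 in three steps is to move right every time, so a search that samples that rollout even once will start by stepping towards the flawed state. The search, the sampler, and the world model cooperate to find it without any component being designed to look for flaws.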

You'll note above that I said "most RL agent designs". Your analysis of why LLMs need adversarial robustness is correct. LLMs actually don't have a planning module, and so the only reason OpenAI would need adversarial robustness is because they want to constrain the LLM's behaviour while interacting with the public, who can submit adversarial inputs. Similarly, Gato was trained just to predict human actions, and doesn't have a planning module or reward function either. It's basically just another case of an LLM. So I'd pretty much trust Gato not to do bad things because of alignment-related issues, so long as nobody is going to be giving it adversarial inputs. On the other hand, I don't know if I'd really call Gato an "RL agent". Just predicting what action a human would take is a pretty limited task, and I'd expect it to have a very hard time generalizing to novel tasks, or exceeding human abilities.

I can't actually think of a "real RL agent design" (something that could plausibly be scaled to make a strong AGI) that wouldn't try and search for adversarial inputs to its value function. If you (or anyone reading this) do have any ideas for designs that wouldn't require adversarial robustness, but could still go beyond human performance, I think such designs would constitute an important alignment advance, and I highly suggest writing them up on LW/Alignment Forum.

↑ comment by cfoster0 · 2023-02-10T03:26:14.388Z · LW(p) · GW(p)

I disagree.

The planning module is not an independent agent, it's a thing that the rest of the agent interacts with to do whatever the agent as a whole wants. If we have successfully aligned the agent as a whole enough that it has a value function that is correct under non-adversarial inputs but not adversarially-robust, and it is even minimally reflective (it makes decisions about how it engages in planning), then why would it be using the planning module in such a way that requires adversarial robustness?

Think about how you construct plans. You aren't naively searching over the space of entire plans and piping them through your evaluation function, accepting whatever input argmaxes it regardless of the cognitive stacktrace that produced it. Instead you plan reflectively, building on planning-strategies that have worked well for you in the past, following chains of thought that you have some amount of prior confidence in (rather than just blindly accepting some random OOD input to your value function), intentionally avoiding the sorts of plan construction methods that would be adversarial (like if you were to pay someone to come up with a "maximally-convincing-looking plan"). Although there are possible adversarial planning methods that would find plans that make your value function output unusually extremely high numbers, you aren't trying to find them, because those rely on unprobed weird quirks of your value function that you have no reason to care about pursuing, unlike the greater part of your value function that behaves normally and pursues normal things. This is especially true when you know that your cognition isn't adversarially robust.

Optimizing hard against the planning module is not the same as optimizing hard towards what the agent wants. In fact, optimizing hard against the planning module often goes against what the agent wants, by making the agent waste resources on computation that is useless or worse from the perspective of the rest of its value function.

See this post [LW · GW] and followups for more.

Replies from: tailcalled, DaemonicSigil

↑ comment by tailcalled · 2023-02-10T08:14:10.518Z · LW(p) · GW(p)

In order to do what has worked well in the past, you need some robust retrospective measure of what it means to "work well", so you don't accidentally start thinking that the times it went poorly in the past were actually times where it went well.

↑ comment by DaemonicSigil · 2023-02-10T04:46:21.317Z · LW(p) · GW(p)

DragonGod links the same series of posts in a sibling comment, so I think my reply to that comment is mostly the same as my reply to this one. Once you've read it: Under your model, it sounds like producing lots of Diamonds is normal and good agent behaviour, but producing lots of Liemonds is probing weird quirks of my value function that I have no reason to care about pursuing. What's the difference between these two cases? What's the mechanism for how that manifests in a reasonably-designed agent?

Also, I'm not sure we're using terminology in the same way here. Under my terminology, "planning module" is the thing doing the optimization. It's the function we call when it's time to figure out what's the next action the agent should take. So from that perspective "the planning module is not an independent agent" goes without saying, but "optimizing hard against the planning module" doesn't make sense: The planning module is generally the thing that does the optimizing, and it's not a function with a real number output, so it's not really a thing that can be optimized at all.

Replies from: cfoster0

↑ comment by cfoster0 · 2023-02-10T06:00:39.946Z · LW(p) · GW(p)

> Once you've read it: Under your model, it sounds like producing lots of Diamonds is normal and good agent behaviour, but producing lots of Liemonds is probing weird quirks of my value function that I have no reason to care about pursuing. What's the difference between these two cases? What's the mechanism for how that manifests in a reasonably-designed agent?

We are assuming that the agent has already learned a correct-but-not-adversarially-robust value function for Diamonds. That means that it makes correct distinctions in ordinary circumstances to pick out plans actually leading to Diamonds, but won't correctly distinguish between plans that were deceptively constructed so they merely look like they'll produce Diamonds but actually produce Liemonds vs. plans that actually produce Diamonds.

But given that, the agent has no particular reason to raise thoughts to consideration like "probe my value function to find weird quirks in it" in the first place, or to regard them as any more promising than "study geology to figure out better versions of Diamond-producing-causal-pathways", which is a thought that the existing circuits within the agent have an actual mechanistic reason to be raising, on the basis of past reinforcement around "what were the robust common factors in Diamonds-as-I-have-experienced-them" and "what general planning strategies actually helped me produce Diamonds better in the past" etc. and will in fact generally lead to Diamonds rather than Liemonds, because the two are presumably produced via different mechanisms [LW · GW]. Inspecting its own value function, looking for flaws in it, is not generally an effective strategy for actually producing Diamonds (or whatever else is the veridical source of reinforcement) in the distribution of scenarios it encountered during the broad-but-not-adversarially-tuned distribution of training inputs.

So the mechanistic difference between the scenario I'm suggesting and the one you are is that I think there will be lots of strong circuits that, downstream of the reinforcement events that produced the correct-but-not-adversarially-robust value function oriented towards Diamonds, will fire based on features that differentially pick out Diamonds (as well as, say, Liemonds) against the background of possible plan-targets by attending to historically relevant features like "does it look like a Diamond" and "is its fine-grained structure like a Diamond" etc. and effectively upweight the logits of the corresponding actions, but that there will not be correspondingly strong circuits that fire based on features that differentially pick out Liemonds over Diamonds.

> Also, I'm not sure we're using terminology in the same way here. Under my terminology, "planning module" is the thing doing the optimization. It's the function we call when it's time to figure out what's the next action the agent should take. So from that perspective "the planning module is not an independent agent" goes without saying, but "optimizing hard against the planning module" doesn't make sense: The planning module is generally the thing that does the optimizing, and it's not a function with a real number output, so it's not really a thing that can be optimized at all.

Generally speaking I'm uncomfortable with the "planning module" distinction, because I think the whole agent is doing optimization, and the totality of the agent's learning-based circuits will be oriented around the optimization it does. That's what coherence entails. If it were any other way, you'd have one part of the agent optimizing for what the agent wants and another part of the agent optimizing for something way different. In the same way, there's no "sentence planning" module within GPT: the whole thing is doing that function, distributed across thousands of circuits. See "Cartesian theater" etc.

EDIT: I said "Cartesian theater" but the appropriate pointer was "homunculus fallacy" [? · GW].

Replies from: DaemonicSigil

↑ comment by DaemonicSigil · 2023-02-10T08:09:40.630Z · LW(p) · GW(p)

Yeah, so by "planning module", pretty much all I mean is this method of the Agent class, it's not a Cartesian theatre deal at all:

```python
def get_next_action(self, ...):
    ...
```

Like, presumably it's okay for agents to be designed out of identifiable sub-components without there being any incoherence or any kind of "inner observer" resulting from that. In the example I gave in my original answer, the planning module made calls to the action network, world model network, and value network, which you'll note is all of the networks comprising that particular agent, so it's definitely a very interconnected thing. I think it's fair to say that when we call this method, we're in some sense kicking off an optimization procedure that "tries" to optimize the value function.

With terminology hopefully nailed down, let's move on to the topic at hand.

If I'm getting your point right here, the basic idea is that the world model network is just being trained to get correct predictions, so that's all okay, but the action network is not being trained to search out weird hacky exploits of the value function, because whenever it found one of those during training, the humans sent a negative reward, which caused the value function to self-correct, so then the action network didn't get the reward it was hoping for.

Obstacles to this as an alignment solution:

1. You're not going to keep training the other networks in the agent after you've finished training the value network right? Right? Okay, good, 'cause that would totally wreck everything. Moving along...

2. Most central concern: The deployment environment is generally not going to be identical to the training environment, and could be quite different. Basic scenario: The value network mostly learns to assign the right values to things in the context of the training environment, maybe it fails in a few places. The planning module's search algorithms are adapted to work around those failures and not run into them while picking actions. Other than those patches, the planning module generally performs well, i.e. it acts like something that can reason about the world and select courses of action that are most likely to lead to a high score from the value function. Then switch to deployment. The value function suddenly has lots more holes that the planning module hasn't developed patches to work around. Plus maybe some of the existing patches don't generalize to the new environment. "Maybe we can engineer a type-X Liemond" is going to be an obvious thought to the planning module, when only type-Y Liemonds were possible in the training environment, and thus the planning module's search process only has patches preventing it from discovering type-Y Liemonds.

3. Actually, probably the value function will just correctly value type-Y Liemonds at 0, and there won't be any patches made to planning. It seems likely that, purely as a matter of training dynamics, gradient descent is going to prefer to just fix the value network, rather than coming up with patches that limit the ability of the planning module to do certain searches. The basic model here is: Let's say the actor finds a weird value-function bug about every 100 training steps, and then exploits it to get an abnormally high score. This results in reinforcement to the circuits that recommended the action, since the action it's suggesting has a high expected value according to the value network. Humans label the outcomes as bad, the value function is corrected by gradient descent, say this takes about 50 training steps. The "try weird hacks" circuit stops getting reinforced, since the value function no longer recommends it, but there's never any negative feedback against it, all the negative feedback happened in the value network, since that's where gradients coming from human labels flow. Then in another 50 steps or so, it stumbles across another hack, and gets more reinforcement. Depending on details, this intermittent reinforcement could be enough to preserve the circuit from weight decay.
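The "depending on details" in the last point can be made concrete with a toy simulation. This is only a sketch under strong assumptions: the "try weird hacks" circuit is reduced to a single scalar strength, reinforcement is a fixed additive `boost` per step while an exploit is open, and weight decay is a fixed multiplicative shrink. The 100-step and 50-step figures come from the text; `boost`, `decay`, and `w0` are hypothetical.

```python
def simulate_hack_circuit(steps=5000, period=100, fix_time=50,
                          boost=0.01, decay=0.001, w0=1.0):
    """Return the final strength of a toy 'try weird hacks' circuit.

    A new exploit is found every `period` steps and stays usable for
    `fix_time` steps, until the value function has been corrected.
    While an exploit is open the circuit gains `boost` per step; it is
    never penalised, only shrunk by weight decay on every step.
    """
    w = w0
    for t in range(steps):
        exploit_open = (t % period) < fix_time
        if exploit_open:
            w += boost          # intermittent positive reinforcement
        w *= (1.0 - decay)      # weight decay applies on every step
    return w


# Same reinforcement schedule, two assumed decay rates.
survives = simulate_hack_circuit(decay=0.001)
fades = simulate_hack_circuit(decay=0.05)

print("final strength with weak decay:  ", survives)
print("final strength with strong decay:", fades)
```

Under these assumptions the circuit grows past its starting strength when decay is weak and shrinks to a small fraction of it when decay is strong, so whether the circuit is preserved really does hinge on the ratio of reinforcement to decay.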

Anyway, I upvoted your comment, since point 3 does suggest a direction for modifying our existing RL agent designs to try and ensure they learn patches to their search process, rather than only corrections to their value function. We could try and finagle the gradient flows so that when humans label an outcome as bad despite a high score from the value-network, negative reinforcement also flows into the action network, to discourage producing outcomes where the value network is wrong. This would probably be terrible for exploration during training, and thus for generalization. Plus I don't expect patches to generalize any better than I expect the value-network to generalize. Maybe there's a way to make it work, though?

Replies from: cfoster0

↑ comment by cfoster0 · 2023-02-10T16:22:55.294Z · LW(p) · GW(p)

> `def get_next_action(self, ...):`

FWIW the kinds of agents I am imagining go beyond choosing their next actions, they also choose their next thoughts including thoughts-about-planning like "Call self.get_next_action(...) with this input". That is the mechanism by which the agent binds its entire chain of thought—not just the final action produced—to its desires, by using planning reflectively as a tool to achieve ends.

> If I'm getting your point right here, the basic idea is that the world model network is just being trained to get correct predictions, so that's all okay, but the action network is not being trained to search out weird hacky exploits of the value function, because whenever it found one of those during training, the humans sent a negative reward, which caused the value function to self-correct, so then the action network didn't get the reward it was hoping for.
>
> Obstacles to this as an alignment solution:

No, that wasn't intended to be my point. I wasn't saying that I have an alignment solution, or saying that learning a correct-but-not-adversarially-robust value function and policy for Diamonds is something that we know how to do, or saying that doing so won't be hard. The claim I was pushing back against was that the problem is adversarially hard. I don't think you need a bunch of patches for it to be not-adversarially-hard, I think it is not-adversarially-hard by default.

Ok on to the substance:

> You're not going to keep training the other networks in the agent after you've finished training the value network right? Right? Okay, good, 'cause that would totally wreck everything. Moving along...

Whoa whoa no I think the agent will very much need to keep updating large parts of its value function along with its policy during deployment, so there's no "after you've finished" (assuming you == the AI). That's a significant component of my threat model. If you think an AGI without this could be competitive, I am curious how.

> Most central concern: The deployment environment is generally not going to be identical to the training environment, and could be quite different. Basic scenario: The value network mostly learns to assign the right values to things in the context of the training environment, maybe it fails in a few places. The planning module's search algorithms are adapted to work around those failures and not run into them while picking actions. Other than those patches, the planning module generally performs well, i.e. it acts like something that can reason about the world and select courses of action that are most likely to lead to a high score from the value function. Then switch to deployment. The value function suddenly has lots more holes that the planning module hasn't developed patches to work around. Plus maybe some of the existing patches don't generalize to the new environment. "Maybe we can engineer a type-X Liemond" is going to be an obvious thought to the planning module, when only type-Y Liemonds were possible in the training environment, and thus the planning module's search process only has patches preventing it from discovering type-Y Liemonds.

I don't really understand why this is the relevant scenario. The crux being discussed is whether the value function needs to be robust to adversarial distribution shifts, not whether it needs to be robust to ordinary non-adversarial distribution shifts. I think the relevant scenario for our thread would be an agent that correctly learned a value+policy function that picks out Diamonds in the training scenarios, and which learned a generally-correct concept of Diamonds, but where there are findable edge cases in its concept boundary such that it would count type-X Liemonds as Diamonds if presented with them.

The question, then, is why would it be thinking about how to produce type-X Liemonds in particular? What reason would the agent have to pursue thoughts that it thinks lead to type-X Liemonds over thoughts that it thinks lead to Diamonds? Presumably type-X Liemonds are produced by different means from how Diamonds are produced, since you mentioned they are easier to produce, so thoughts about how to produce one vs. thoughts about how to produce the other will be different. As far as the agent can tell, during training it only ever got reinforcement for Diamonds (the label for the cluster in concept-space), so the concepts that got painted with strong positive valence in its world model, such that it will actively build plans with them as targets, are ones like "Diamond" and "the process that I've seen produces Diamonds". Whereas it has never had a reason to paint concepts like "finding a loophole in my Diamond concept" or "the process that produces type-X Liemonds" with that sort of valence, much less to make them plan targets.

> The "try weird hacks" circuit stops getting reinforced, since the value function no longer recommends it, but there's never any negative feedback against it, all the negative feedback happened in the value network, since that's where gradients coming from human labels flow.

While the "try weird hacks" circuit is getting 0 net reinforcement for firing, the "build Diamonds" circuits are getting positive net reinforcement for firing, so on balance, relative to the other circuits in the agent that steer its thoughts and actions, the "try weird hacks" circuit is continually losing ground, its contribution to decisions diminishing as other circuits get their way more and more.
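The "losing ground" claim can be illustrated with a toy two-circuit competition. The sketch assumes, hypothetically, that each circuit's influence is a single logit, that decisions follow a softmax over those logits, and that net reinforcement is exactly 0 for the hack circuit and a constant positive amount for the Diamond circuit. Those assumptions encode this comment's premise; the intermittent-reinforcement picture in the parent comment would correspond to a nonzero hack update instead.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def hack_share_over_time(steps=200, lr=0.05,
                         hack_reinforcement=0.0, diamond_reinforcement=1.0):
    """Track the hack circuit's share of decisions as training proceeds."""
    hack_logit, diamond_logit = 0.0, 0.0
    shares = []
    for _ in range(steps):
        p_hack, _p_diamond = softmax([hack_logit, diamond_logit])
        shares.append(p_hack)
        # Each circuit's logit moves in proportion to its net reinforcement.
        hack_logit += lr * hack_reinforcement
        diamond_logit += lr * diamond_reinforcement
    return shares


shares = hack_share_over_time()
print("hack share at start:", shares[0])
print("hack share at end:  ", shares[-1])
```

The hack logit never moves in this sketch, yet its share of decisions falls steadily, because under a softmax only the gap between the two logits matters.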

Replies from: DaemonicSigil

↑ comment by DaemonicSigil · 2023-02-11T01:35:46.697Z · LW(p) · GW(p)

> I think the agent will very much need to keep updating large parts of its value function along with its policy during deployment, so there's no "after you've finished"

I think we agree here: As long as you're updating the value function along with the rest of the agent, this won't wreck everything. A slightly generalized version of what I was saying there still seems relevant to agents that are continually being updated: When you assign the agent tasks where you can't label the results, you should still avoid updating any of the agent's networks. Only updating non-value networks when you're lacking labels to update the value network would probably still wreck everything, even if the agent will be given more labels in the future.

> I don't really understand why this is the relevant scenario. The crux being discussed is whether the value function needs to be robust to adversarial distribution shifts, not whether it needs to be robust to ordinary non-adversarial distribution shifts.

Okay, I have a completely different idea of what the crux is, so we probably need to figure this out before discussing much more, since this could be the whole reason for the disagreement. I'm definitely not saying that we need to prepare for the agent's environment to undergo an adversarial distribution shift. The source of the adversarial inputs was always the agent itself, and those inputs are only adversarial from the perspective of the humans who trained the agent; they're perfectly fine from the agent's perspective. The distribution shift only reveals holes in the agent's value function that weren't possible in the training environment, since all the training environment holes that the agent was able to find got trained out. The agent itself will apply the adversarial pressure to exploit those holes. Does that, by any chance, completely resolve this discussion?

Replies from: cfoster0

↑ comment by cfoster0 · 2023-02-11T02:41:41.614Z · LW(p) · GW(p)

> Okay, I have a completely different idea of what the crux is, so we probably need to figure this out before discussing much more, since this could be the whole reason for the disagreement. I'm definitely not saying that we need to prepare for the agent's environment to undergo an adversarial distribution shift. The source of the adversarial inputs was always the agent itself, and those inputs are only adversarial from the perspective of the humans who trained the agent, they're perfectly fine from the agent's perspective. The distribution shift only reveals holes in the agent's value function that weren't possible in the training environment, since all the training environment holes that the agent was able to find got trained out. The agent itself will apply the adversarial pressure to exploit those holes. Does that, by any chance, completely resolve this discussion?

I understand what you mean but I still think it's incorrect[1]. I think "The agent itself will apply the adversarial pressure to exploit those holes" (emphasis mine) is the key mistake. There are many directions in which the agent could apply optimization pressure and I think we are unfairly privileging the hypothesis that that direction will be towards "exploiting those holes" as opposed to all the other plausible directions, many of which are effectively orthogonal to "exploiting those holes". I would agree with a version of your claim that said "could apply" but not with this one with "will apply".

The mere fact that there exist possible inputs that would fall into the "holes" (from our perspective) in the agent's value function does not mean that the agent will or even wants to try to steer itself towards those inputs rather than towards the non-"hole" high-value inputs. Remember that the trained circuits in the agent are what actually implement the agent's decision-making, deciding what things to recognize and raise to attention, making choices about what things it will spend time thinking about, holding memories of plan-targets in mind; all based on past experiences & generalizations learned from them. Even though there are one or more nameless patterns of OOD "hole" input that would theoretically light up the agent's value function (to MAX_INT or some other much-higher-than-desired level), such a pattern is not a feature/pattern that the agent has ever actually seen, so its cognitive terrain has never gotten differential updates that were downstream of it, so its cognition doesn't necessarily flow towards it. The circuits in the agent's mind aren't set up to automatically recognize or steer towards the state-action preconditions of that pattern, whereas they are set up to recognize and steer towards the state-action preconditions of prototypical rewarded input encountered during training. In my model, that is what happens when an agent robustly "wants" something.

[1] I suspect that many other people share your view here, but I think that view is dead-wrong and is possibly at the root of other disagreements, which is why I'm trying so hard to communicate across the gap here.

↑ comment by DaemonicSigil · 2023-02-11T04:02:28.177Z · LW(p) · GW(p)

Okay, cool, it seems like we're on the same page, at least. So what I expect to happen for AGI is that the planning module will end up being a good general-purpose optimizer: Something that has a good model of the world, and uses it to find ways of increasing the score obtained from the value function. If there is an obvious way of increasing the score, then the planning module can be expected to discover it, and take it.

Scenario: We have managed to train a value function that values Liemonds as well as Diamonds. These both get a high score according to the value function; it doesn't really distinguish between the two. In your model, the agent as a whole does distinguish between them, so there must be somewhere in the agent where the "Diamonds are better than Liemonds" information is stored, even if it's implicit rather than showing up explicitly in the value function.

It sounds like what you're saying is that networks related to the planning module store this information. Rather than being a good general-purpose optimizer, the planning module has some plans that it just won't consider. This property, of not considering certain plans that might break the value function, is what I referred to as "patches". As far as I can tell, your model depends on these "patches" generalizing really well, so that all chains of reasoning that could lead to discovering Liemonds are blocked.

I find it fairly implausible that we'll be able to get "don't make any plans that break the value function" to generalize any better than the value function itself. If discovering Liemonds is like trying to find one of 1000 needles in a haystack, well finding needles in a haystack is what an optimizer is good at, and it'll probably discover Liemonds pretty soon. Now if we restrict the allowed reasoning steps so that far fewer of the Liemond plans are thoughts the agent is even capable of thinking, well that's now like finding one of the 5 remaining needles in the haystack, but we've still got an optimizer searching for that needle, and so we're still in the adversarial situation.

Obviously the system can be made arbitrarily safe by weakening and restricting the optimizer enough, but that also stops our AI from being able to do much of anything.

You ask:

The question, then, is why would it be thinking about how to produce type-X Liemonds in particular? What reason would the agent have to pursue thoughts that it thinks lead to type-X Liemonds over thoughts that it thinks lead to Diamonds?

As maybe an intuition pump for this, let's say there's a human whose value function puts a high value on saving people's lives. One strategy this person might think of is: "If I imagine uploading people into a computer simulation, and the simulation is designed to be pleasant and allow everyone uploaded into it to do things together, I think those people very much count as alive, under my values. Therefore, if I pursue the strategy of helping people freeze their heads for later uploading, I will save lots of lives."

That sure looks like a weird hack! Especially from the perspective of Evolution, since none of those uploaded humans have any genes at all any more! Why didn't that human's reflective decisions about what thoughts to think prevent it from even considering the question "could I upload people?" During that human's entire training so far, it's only had to deal with saving the lives of real live humans, with genes and everything. That's where all its positive feelings about saving lives came from. Why is it suddenly considering this weird dumb thing!?

Replies from: cfoster0↑ comment by cfoster0 · 2023-02-12T03:20:25.448Z · LW(p) · GW(p)

Looks like we're popping back up to an earlier thread of the conversation? Curious what your thoughts on the parent comment were, but I will address your latest comment here. :)

So what I expect to happen for AGI is that the planning module will end up being a good general-purpose optimizer: Something that has a good model of the world, and uses it to find ways of increasing the score obtained from the value function. If there is an obvious way of increasing the score, then the planning module can be expected to discover it, and take it.

I think the AGI will be able to do general-purpose optimization, and that this could be implemented via an internal general-purpose optimization subroutine/module it calls (though, again, I think there's a flavor of homunculus in this design that I dislike). I don't see any reason to think of this subroutine as something that itself cares about value function scores, it's just a generic function that will produce plans for any goal it gets asked to optimize towards. In my model, if there's a general-purpose optimization subroutine, it takes as an argument the (sub)goal that the agent is currently thinking of optimizing towards and the subroutine spits out some answer, possibly internally making calls to itself as it splits the problem into smaller components.

In this model, it is false that the general-purpose optimization subroutine is freely trying to find ways to increase the value function scores. Reaching states with high value function scores is a side effect of the plans it outputs, but not the object-level goal of its planning. IF the agent were to set "maximize my nominal value function" as the (sub)goal that it inputs to this subroutine, THEN the subroutine will do what you described, and then the agent can decide what to do with those results. But I dispute that this is the default expectation we should have for how the agent will use the subroutine. Heck, you can do general-purpose optimization, and yet you don't necessarily do that thing. Instead you ask the subroutine to help with the object-level goals that you already know you want, like "plan a route from here to the bar" and "find food to satisfy my current hunger" and "come up with a strategy to get a promotion at work". The general-purpose optimization subroutine isn't pulling you around. In fact, sometimes you reject its results outright, for example because the recommended course of action is too unusual or contradicts other goals of yours or is too extreme.
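A minimal sketch of that division of labor, assuming a toy map and a breadth-first search standing in for the general-purpose optimizer. The names `general_planner` and `TOWN` are hypothetical, and nothing here is meant as a claim about how a real agent is built. The point it illustrates is only that the subroutine has no goal of its own: it plans towards whatever goal predicate the caller passes in.

```python
from collections import deque

def general_planner(start, is_goal, neighbors):
    """Generic search subroutine (breadth-first). It returns a shortest list
    of states from `start` to any state satisfying `is_goal`, or None if no
    such state is reachable. It knows nothing about what the caller values."""
    frontier = deque([[start]])
    seen = {start}
    while frontier:
        path = frontier.popleft()
        if is_goal(path[-1]):
            return path
        for nxt in neighbors(path[-1]):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append(path + [nxt])
    return None

# Hypothetical toy world: which places are directly reachable from which.
TOWN = {
    "home": ["street"],
    "street": ["home", "bar", "office"],
    "bar": ["street"],
    "office": ["street"],
}

# The agent chooses the object-level (sub)goal; the planner only serves it.
route = general_planner("home", lambda s: s == "bar", TOWN.__getitem__)
```

Calling the same function with `lambda s: s == "office"` yields a route to the office instead, and the caller stays free to discard whatever comes back.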

Scenario: We have managed to train a value function that values Liemonds as well as Diamonds. These both get a high score according to the value function, it doesn't really distinguish between the two. In your model, the agent as a whole does distinguish between them, so there must be somewhere in the agent where the "Diamonds are better than Liemonds" information is stored, even if it's implicit rather than showing up explicitly in the value function.

If the system as a whole literally cannot distinguish between Diamonds and Liemonds, even post-training, then yes you can fool it into making Liemonds. But I still don't see why it would end up making Liemonds over Diamonds.

During training, the agent repeatedly sees these shiny things labeled as "Diamonds", and keeps having reinforcement tied to getting these objects that it thinks correspond to a "Diamond" concept, and those positive memories build up a whole bunch of neural circuitry that fires in favor of actions and plans tied to the "Diamond" concept in its world model. When the agent goes out into the world, it has an internal goal of "how do I get Diamonds". Let's say the agent has no clue about Liemonds yet, so it has no conceptual distinction between Liemonds and Diamonds, so if it saw a Liemond it would gladly accept it because its value function would output a high score, thinking it had received a Diamond.

In order to achieve that internal goal, it can either use the Diamond-acquisition heuristics it learned during training (which, presumably, will not suddenly preferentially lead to Liemonds now, unless the distribution shift was adversarially constructed), or it can reach out into the world (through experimentation, through books, through the web, through humans) and try to figure out how to dereference its "Diamond" conceptual pointer and find out how to get those things. Maybe it directly investigates the English-language word "diamond", maybe it looks for information about "clear brilliant hard things", maybe it looks into "things that are associated with weddings in such-and-such way". Whatever. When it does so, the world gives it a whole bunch of information that will be strongly correlated with how to get Diamonds, but not strongly correlated with how to get Liemonds. In order to find out how to get Liemonds, the agent would have to submit different queries to the world.

But the agent doesn't have any reason to submit queries that favor Liemonds over Diamonds. At worst, it has reason to submit queries that don't distinguish between Diamonds and Liemonds at all. At best, it has reason to submit a bunch of queries that cause it to correctly ground-out its previously-ambiguous pointer into the correct concept.

Rather than being a good general-purpose optimizer, the planning module has some plans that it just won't consider. This property, of not considering certain plans that might break the value function, is what I referred to as "patches".

It's not that the planning module refuses to consider plans that might break the value function. The planning module is free to generate whatever plans it wants for the inputs it gets. It's that the agent isn't calling the planning module with "maximize nominal value function scores" as the (sub)goal, so the planning module has no reason to concoct those particular plans in the first place.

Meta-level comment: the space of possible plans is enormous and the world is fractally complex. In order to effectively plan towards nontrivial goals, the agent has to restrict its search in a boatload of ways. I don't see why restricting the sorts of plans you consider would constitute a "patch" a priori, rather than just counting as "effectively searching for what you want".

If discovering Liemonds is like trying to find one of 1000 needles in a haystack, well finding needles in a haystack is what an optimizer is good at, and it'll probably discover Liemonds pretty soon. Now if we restrict the allowed reasoning steps so that far fewer of the Liemond plans are thoughts the agent is even capable of thinking, well that's now like finding one of the 5 remaining needles in the haystack, but we've still got an optimizer searching for that needle, and so we're still in the adversarial situation.

I don't think this analogy works here. The optimizer isn't looking for Liemonds specifically; it's looking for "Diamonds", a category which initially includes both Diamonds and Liemonds. The more it finds real Diamonds in the haystack, the more its policy and value function get reinforced towards reliably finding and accepting those things (i.e. Diamonds), & shaped away from finding and accepting Liemonds (and vice versa). Whether this happens depends in part on the relative abundance of the two. Moreover, that relative abundance isn't fixed like it is with a haystack. The relative abundance of Diamonds and Liemonds in the agent's search space depends entirely on the policy. If the policy is doing things like "learning how Diamonds are made" or "going to jewelry stores and asking for Diamonds", then that distribution will have lots of Diamonds and synthetic Diamonds, but not Liemonds. Notice that these factors create a virtuous cycle, where the initial relative abundance of true Diamonds over Liemonds in its trajectories causes the agent to get better at targeting true Diamonds, which makes true Diamonds more abundant in its search space, and so on. This is why I don't accept the whole "eventually it'll search for and find Liemonds" line of reasoning.

Notice also that all of this happens without weakening the optimizer. The agent wants Diamonds, and it wants to ground its conceptual pointer accurately in the world, keeping its concept in continuity with prototypical Diamonds it got training reinforcement for, and the agent ends up searching for real Diamonds with the full force of its creative and general-purpose search.

Replies from: DaemonicSigil↑ comment by DaemonicSigil · 2023-02-12T05:38:11.801Z · LW(p) · GW(p)

The optimizer isn't looking for Liemonds specifically; it's looking for "Diamonds", a category which initially includes both Diamonds and Liemonds.

There are many directions in which the agent could apply optimization pressure and I think we are unfairly privileging the hypothesis that that direction will be towards "exploiting those holes" as opposed to all the other plausible directions, many of which are effectively orthogonal to "exploiting those holes".

Just to clarify the parameters of the thought experiment, Liemonds are specified to be much easier to produce in large quantities than Diamonds, so the score attainable by producing them is many times higher than the maximum possible Diamond score.

The thing that stands out about the holes is that some of them allow the agent to (incorrectly) get an extraordinarily high score. The agent isn't going to care about holes that allow it to get an incorrectly low score, or a score that is correct, but for weird incorrect reasons, though those kinds of hole will exist too.

The relative abundance of Diamonds and Liemonds in the agent's search space depends entirely on the policy. If the policy is doing things like "learning how Diamonds are made" or "going to jewelry stores and asking for Diamonds", then that distribution will have lots of Diamonds and synthetic Diamonds, but not Liemonds.

It seems like maybe the problem here is that you're modelling the agent as fairly dumb, certainly dumber than a human level intelligence? Like, if the agent's version of "how do I decide what to do next" is based entirely off of things like "do what worked before", and doesn't involve doing any actual original reasoning, then yeah, it's probably not going to think of making Liemonds. Adversarial robustness is much easier if your adversary is not too smart.

I'm generally modelling the agent as being more intelligent: close to human if not greater than human. I generally expect that something this smart would think of "Liemonds", in the same way that a human might think of "save people's lives by uploading them" in my example above.

Replies from: cfoster0↑ comment by cfoster0 · 2023-02-12T06:34:08.347Z · LW(p) · GW(p)

Just to clarify the parameters of the thought experiment, Liemonds are specified to be much easier to produce in large quantities than Diamonds, so the score attainable by producing them is many times higher than the maximum possible Diamond score.

The thing that stands out about the holes is that some of them allow the agent to (incorrectly) get an extraordinarily high score. The agent isn't going to care about holes that allow it to get an incorrectly low score, or a score that is correct, but for weird incorrect reasons, though those kinds of hole will exist too.

I get that. In addition, Liemonds and Diamonds are in reality different objects that require different processes to acquire, right? Like, even though Liemonds are easier to produce in large quantities if that's what you're going for, you won't automatically produce Liemonds on the route to producing Diamonds. If you're trying to produce Diamonds, you can end up accidentally producing other junk by failing at diamond manufacturing, but you won't accidentally produce Liemonds. So unless you are intentionally trying to produce Liemonds, say as an instrumental route to "produce the maximum possible Diamond score", you won't produce them.

It sounds like the reason you think the agent will intentionally produce Liemonds is as an instrumental route to getting the maximum possible Diamond score. I agree that that would be a great way to produce such a score. But AFAICT getting the maximum possible Diamond score is not "what the agent wants" in general. Reward is not the optimization target, and neither is the value function. Agents use a value function, but the agent's goals =/= maximal scores from the value function. The value function aggregates information from the current agent state to forecast the reward signal. It's not (inherently) a goal, an intention, a desire, or an objective. The agent could use high value function scores as a target in planning if it wanted to, but it could use anything it wants as a target in planning, the value function isn't special in that regard. I expect that agents will use planning towards many different goals, and subgoals of those goals, and so on, with the highest level goals being about the concepts in the world model, not the literal outputs of the value function. I suspect you disagree with this and that this is a crux.

It seems like maybe the problem here is that you're modelling the agent as fairly dumb, certainly dumber than a human level intelligence?

No, I am modeling the agent as being quite intelligent, at least as intelligent as a human. I just think it deploys that intelligence in service of a different motivational structure than you do.

Replies from: DaemonicSigil↑ comment by DaemonicSigil · 2023-02-13T06:27:36.546Z · LW(p) · GW(p)

Reward is not the optimization target, and neither is the value function.

Yeah, agree that reward is not the optimization target. Otherwise, the agent would just produce diamonds, since that's what the rewards are actually given out for (or seize the reward channel, but we'll ignore that for now). I'm a lot less sure that the value function is not the optimization target. Ignoring other architectures for the moment, consider a design where the agent has a value function and a world model, uses Monte-Carlo tree search, and picks the action that gives the highest expected score according to the value function. In that case, I'm pretty comfortable saying, "yeah, that particular type of agent is in fact optimizing for its value function". Would you agree (just for that specific agent design)?
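To make that specific design concrete, here is a minimal sketch, assuming a toy deterministic world model and an exhaustive depth-limited search standing in for Monte-Carlo tree search. Everything named here (`world_model`, `value_fn`, `plan`, the three actions, and the 3-to-1 production rate) is a hypothetical illustration, not a description of any real system.

```python
from itertools import product

ACTIONS = ("mine", "synthesize", "idle")

def world_model(state, action):
    """Toy deterministic transition. A state is a (diamonds, liemonds) tuple."""
    diamonds, liemonds = state
    if action == "mine":
        return (diamonds + 1, liemonds)
    if action == "synthesize":
        # Assumption for illustration: Liemonds are cheaper to mass-produce.
        return (diamonds, liemonds + 3)
    return state

def value_fn(state):
    """Hypothetical learned value function that cannot tell the two apart."""
    diamonds, liemonds = state
    return diamonds + liemonds

def rollout(state, action_sequence):
    """Apply a sequence of actions through the world model."""
    for action in action_sequence:
        state = world_model(state, action)
    return state

def plan(state, depth=2):
    """Exhaustive depth-limited search (a stand-in for MCTS): return the first
    action of the sequence whose predicted final state scores highest."""
    best_sequence = max(
        product(ACTIONS, repeat=depth),
        key=lambda seq: value_fn(rollout(state, seq)),
    )
    return best_sequence[0]
```

Under these assumptions `plan((0, 0))` picks `"synthesize"`, because the search is scored by nothing except `value_fn`.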

The value function aggregates information from the current agent state to forecast the reward signal. It's not (inherently) a goal, an intention, a desire, or an objective. The agent could use high value function scores as a target in planning if it wanted to, but it could use anything it wants as a target in planning, the value function isn't special in that regard. I expect that agents will use planning towards many different goals, and subgoals of those goals, and so on, with the highest level goals being about the concepts in the world model, not the literal outputs of the value function. I suspect you disagree with this and that this is a crux.

I think you've identified the crux correctly there, with the caveat in my position that the value function doesn't necessarily have to be labelled "value function" on the blueprint.

Maybe the single most helpful thing would be if you just described the agent you have in mind as doing all these things in as much detail as possible. Like, the best thing would be on a level of describing what all the networks in the agent are, how they're connected, and the gist of what they're tuned to achieve during training. I'll take whatever you can provide in terms of detail, though. Also, feel free to reduce down to the simplest agent that displays the robustness properties you're describing here.

Replies from: cfoster0↑ comment by cfoster0 · 2023-02-13T08:57:59.686Z · LW(p) · GW(p)

Ignoring other architectures for the moment, consider a design where the agent has a value function and a world model, uses Monte-Carlo tree search, and picks the action that gives the highest expected score according to the value function. In that case, I'm pretty comfortable saying, "yeah, that particular type of agent is in fact optimizing for its value function". Would you agree (just for that specific agent design)?

I'm fine with describing that design like that. Though I expect we'd need a policy or some other method of proposing actions for the world model/MCTS to evaluate, or else we haven't really specified the design of how the agent makes decisions.

Maybe the single most helpful thing would be if you just described the agent you have in mind as doing all these things in as much detail as possible. Like, the best thing would be on a level of describing what all the networks in the agent are, how they're connected, and the gist of what they're tuned to achieve during training. I'll take whatever you can provide in terms of detail, though. Also, feel free to reduce down to the simplest agent that displays the robustness properties you're describing here.

Hmm. I wasn't imagining that any particularly exotic design choices were needed for my statements to hold, since I've mostly been arguing against things being required. What robustness properties are you asking about?

A shot at the diamond alignment problem [LW · GW] is probably a good place to start, if you're after a description of how the training process and internal cognition could work along a similar outline to what I was describing.

Replies from: DaemonicSigil↑ comment by DaemonicSigil · 2023-02-25T21:37:17.932Z · LW(p) · GW(p)

Sorry for the slow response, lots to read through and I've been kind of busy. Which of the following would you say most closely matches your model of how diamond alignment with shards works?

1. The diamond abstraction doesn't have any holes in it where things like Liemonds could fit in, due to the natural abstraction hypothesis. The training process is able to find exactly this abstraction and include it in the agent's world model. The diamond shard just points to the abstraction in the world model, and thus also has no holes.

2. Shards form a kind of vector space of desire. Rather than thinking of shards as distinct circuits, we should think of them as different amounts of pull in different directions. An agent that would pursue both diamonds and liemonds should be thought of as a linear combination of a diamond shard and a liemond shard. Thus, it makes sense to refer to a shard that points exactly in the direction of diamonds, with no liemond component. The liemond component might also be present in the agent, but we can conceptualize it as a separate shard.

3. Shards can be imperfect, the above two theories trying to make them perfect are silly. Shards aren't like a value function that looks at the world and assigns a value. Instead...

   a. ...the agent is set up so that no part of the agent is "trying to make the shard happy". (I think I'd still need a diagram that showed gradient flow here so I could satisfy myself that this was the case.)

   b. ...shards make bids on what the world should look like, using an internal language that is spoken by the world model. A diamond shard is just something that knows how to say "diamond" in this internal language.

   c. ...plans are generated by a "plausible plan generator" and then shards bid on those plans by the amount that they expect to be satisfied. Somehow the plausible plan generator is able to avoid "hacking" the shards by generating plans that they will incorrectly label as good.

4. None of the above.

↑ comment by cfoster0 · 2023-02-25T22:31:37.606Z · LW(p) · GW(p)

3 is the closest. I don't even know what it would mean for a shard to be "perfect". I have a concept of diamonds in my world model, and shards attached to that concept. That concept includes some central features like hardness, and some peripheral features like associations with engagement rings. That concept doesn't include everything about diamonds, though, and it probably includes some misconceptions and misgeneralizations. I could certainly be fooled in certain circumstances into accepting a fake diamond, for ex. if a seemingly-reputable jewelry store told me it was real.

But this isn't an obstacle to me liking & acquiring diamonds generally, because my "imperfect" diamond concept is nonetheless still a pointer grounded in the real world, a pointer that has been optimized (by myself and by reality) to accurately-enough track [LW · GW] diamonds in the scenarios I've found myself in. That's good enough to hang a diamond-shard off of. Maybe that shard fires more for diamonds arranged in a circle than for diamonds arranged in a triangle. Is that an imperfection? I dunno, I think there are many different ways of wanting a thing, and I don't think we need perfect wanting, if that's even a thing. [LW · GW]

Notice that there is a giant difference between "try to get diamonds" and "try to get the diamond-shard to be happy", analogous to the difference between "try to make a million bucks" and "try to convince yourself you made a million bucks". If I wanted to generate a plan to convince myself I'd made a million bucks, my plan-generator could, but I don't want to, because that isn't a strategy I expect to help me get the things I want, like a million bucks. My shards shape what plans I execute, including plans about how I should do planning. The shard is the thing doing optimization in conjunction with the rest of the agent, not the thing being optimized against. If there was a part of you trying to make the diamond shard happy, that'd be, like, a diamond-shard-shard (latched onto the concept of "my diamond shard" in your ontology).

Replies from: DaemonicSigil↑ comment by DaemonicSigil · 2023-02-26T10:56:07.583Z · LW(p) · GW(p)

Cool, thanks for the reply, sounds like maybe a combination of 3a and the aspect of 1 where the shard points to a part of the world model? If no part of the agent is having its weights tuned to choose plans that make a shard happy, where would you say a shard mostly lives in an agent? World model? Somewhere else? Spread across multiple components? (At the bottom of this comment, I propose a different agent architecture that we can use to discuss this that I think fairly naturally matches the way you've been talking about shards.)

Notice that there is a giant difference between "try to get diamonds" and "try to get the diamond-shard to be happy", analogous to the difference between "try to make a million bucks" and "try to convince yourself you made a million bucks". If I wanted to generate a plan to convince myself I'd made a million bucks, my plan-generator could, but I don't want to, because that isn't a strategy I expect to help me get the things I want, like a million bucks.

My model doesn't predict that most agents will try to execute "fool myself into thinking I have a million bucks" style plans. If you think my model predicts that, then maybe this is an opportunity to make progress?

In my model, the agent is actually allowed to care about actual world states and not just its own internal activations. Consider two ways the agent could "fool" itself into thinking it had a million bucks:

Firstly, it could tamper with its own mind to create that impression. This could be either through hacking, or carefully spoofing its own sensory inputs. When planning, the predicted future states of the world are going to correctly show that the agent is fooling itself. So when the value function is fed the predicted world states, it's going to rate them as bad, since in those world states, the agent does not have a million bucks. It doesn't matter to the agent that later, after being hacked, the value function will think the agent has a million bucks. Right now, during planning, the value function isn't fooled.

Secondly, it could create a million counterfeit bucks. Due to inaccuracies in training, maybe the agent actually thinks that having counterfeit money is just as good as real money. I.e. the value function does actually rate counterfeit bucks higher than real bucks. If so, then the agent is going to be perfectly satisfied with itself for coming up with this clever idea for satisfying its true values. The humans who were training the agent and wanted a million actual dollars won't be satisfied, but that's their problem, not the agent's problem.

Okay, so here's another possible agent design that we might be able to discuss: There's a detailed world model that can answer various probabilistic queries about the future. Planning is done by formulating a query to the world model in the following way: Sample histories $h$ according to:

$$P(h) \propto P_{\text{world model}}(h)\, e^{U(h)/T}$$

Where $U(h)$ is utility assigned by the agent to a given history, and $T$ is some small number which is analogous to temperature. Histories include the action taken by the agent, so we can sample with the action undetermined, and then actually take whichever action happened in the sampled history. (For simplicity, I'm assuming that the agent only takes one action per episode.)

To make this a "shardy" agent, we'd presumably have to replace $U$ with something made out of shards. As long as it shows up like that in the equation for $P(h)$, though, it looks like we'd have to make $U$ adversarially robust. I'd be interested in what other modifications you'd want to make to this agent in order to make it properly shardy. This agent design does have the advantage that it seems like values are more directly related to the world model, since they're able to directly examine $h$.
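As a rough sketch of that sampling rule: histories are drawn with probability proportional to the exponential of their utility divided by a temperature, weighted by a base probability from the world model. It assumes a small finite set of candidate histories that can be enumerated and scored up front, which is itself a strong simplification. The function and variable names, and the toy histories, are hypothetical.

```python
import math
import random

def sample_history(histories, prior, utility, temperature, rng=random):
    """Draw one history with probability proportional to
    prior(h) * exp(utility(h) / temperature)."""
    # Subtracting the maximum utility before exponentiating avoids overflow.
    # It rescales every weight by the same factor, so the distribution is unchanged.
    u_max = max(utility(h) for h in histories)
    weights = [
        prior(h) * math.exp((utility(h) - u_max) / temperature)
        for h in histories
    ]
    return rng.choices(histories, weights=weights, k=1)[0]

# Toy histories as (action, outcome score) pairs; one action per episode.
histories = [("mine", 1), ("synthesize", 3), ("idle", 0)]

def uniform_prior(history):
    return 1.0  # assumption: every history is equally likely a priori

def utility(history):
    return float(history[1])

# The action actually taken is read off the sampled history.
action, _ = sample_history(
    histories, uniform_prior, utility, temperature=0.1, rng=random.Random(0)
)
```

At a low temperature the draw concentrates almost entirely on the highest-utility history, so whatever function plays the role of `utility` is what the sampling pressure pushes on.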

Replies from: cfoster0↑ comment by cfoster0 · 2023-02-26T23:56:39.926Z · LW(p) · GW(p)

If no part of the agent is having its weights tuned to choose plans that make a shard happy, where would you say a shard mostly lives in an agent? World model? Somewhere else? Spread across multiple components?

I'd say the shards live in the policy, basically, though these are all leaky abstractions. A simple model would be an agent consisting of a big recurrent net (RNN or transformer variant) that takes in observations and outputs predictions through a prediction head and actions through an action head, where there's a softmax to sample the next action (whether an external action or an internal action). The shards would then be circuits that send outputs into the action head.
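A very reduced sketch of just the action-head part of that simple model. It leaves out the recurrent net and the prediction head entirely, and it assumes (purely for illustration) that each shard contributes a fixed vector of per-action logits that the action head sums before the softmax. The names `action_head`, `sample_action`, and the example shard vectors are hypothetical.

```python
import math
import random

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def action_head(shard_outputs):
    """Sum each shard's per-action contribution into one logit per action.
    Assumption: shards interact additively, which a real network need not do."""
    return [sum(column) for column in zip(*shard_outputs)]

def sample_action(actions, shard_outputs, rng=random):
    """Sample the next action from the softmax over the summed logits."""
    probs = softmax(action_head(shard_outputs))
    return rng.choices(actions, weights=probs, k=1)[0], probs

actions = ["seek_diamond", "explore", "rest"]
shard_outputs = [
    [2.0, 0.0, 0.0],  # hypothetical diamond shard
    [1.0, 0.0, 0.0],  # hypothetical second shard that also favors the first action
]
chosen, probs = sample_action(actions, shard_outputs, rng=random.Random(0))
```

In this picture a shard is one of the things producing the logits, rather than a score that some other component is searching over.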

My model doesn't predict that most agents will try to execute "fool myself into thinking I have a million bucks" style plans. If you think my model predicts that, then maybe this is an opportunity to make progress?

I brought this up because I interpreted your previous comment as expressing skepticism that

no part of the agent is "trying to make the shard happy"

Whereas I think that it will be true for analogous reasons as the reasons that explain why no part of the agent is "trying to make itself believe it has a million bucks".

Due to inaccuracies in training, maybe the agent actually thinks that having counterfeit money is just as good as real money. I.e. the value function does actually rate counterfeit bucks higher than real bucks. If so, then the agent is going to be perfectly satisfied with itself for coming up with this clever idea for satisfying its true values.

I have a vague feeling that the "value function map = agent's true values" bit of this is part of the crux we're disagreeing about.

Putting that aside, for this to happen, it has to be simultaneously true that the agent's world model knows about and thinks about counterfeit money in particular (or else it won't be able to construct viable plans that produce counterfeit money) while its value function does not know or think about counterfeit money in particular. It also has to be true that the agent tends to generate plans towards counterfeit money over plans towards real money, or else it'll pick a real money plan it generates before it has had a chance to entertain a counterfeit money plan.

But during training, the plan generator was trained to generate plans that lead to real money. And the agent's world model / plan generator knows (or at least thinks) that those plans were towards real money, even if its value function doesn't know. This is because it takes different steps to acquire counterfeit money than to acquire real money. If the plan generator was optimized based on the training environment, and the agent was rewarded there for doing the sorts of things that lead to acquiring real money (which are different from the things that lead to counterfeit money), then those are the sorts of plans it will tend to generate. So why are we hypothesizing that the agent will tend to produce and choose the kinds of counterfeit money plans its value function would "fail" (from our perspective) on after training?

Okay, so here's another possible agent design that we might be able to discuss:

IMO an agent can't work this way, at least not an embedded [LW · GW] one. It would need to know what utility it would assign to each history in the distribution in order to sample proportional to the exponential of that history's utility. But before it has sampled that history, it has not evaluated that history yet, so it doesn't yet know what utility it assigns [? · GW], so it can't sample from such a distribution.

At best, the agent could sample from a learned history generator, one tuned on previous good histories, and then evaluate some number of possibilities from that distribution, picking one that's good according to its evaluation. But that doesn't require adversarial robustness, because the history generator will tend strongly to generate possibilities like the ones that evaluated/worked well in the past, which is exactly where the evaluations will tend to be fairly accurate. And the better the generator, the fewer options need to be sampled, so the less you're "implicitly optimizing against" the evaluations.
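The last point, about the number of samples, can be illustrated with a small simulation. This is only a sketch under stated assumptions: each candidate plan has a true quality and an independent Gaussian evaluation error, and the agent keeps whichever of `n` candidates its evaluator scores highest. It does not model a generator that stays near the training distribution, and all names are hypothetical.

```python
import random

def generate(rng):
    """Hypothetical plan: (true quality, evaluator's error on this plan)."""
    return (rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0))

def evaluate(plan):
    """The evaluator sees true quality plus its own error."""
    true_quality, error = plan
    return true_quality + error

def best_of_n(n, rng):
    """Sample n candidate plans and keep the one the evaluator scores highest."""
    candidates = [generate(rng) for _ in range(n)]
    return max(candidates, key=evaluate)

def mean_overestimate(n, trials=2000, seed=0):
    """Average gap between the evaluated score and the true quality of the
    chosen plan, i.e. how much the selection step exploits evaluator error."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(trials):
        chosen = best_of_n(n, rng)
        total += evaluate(chosen) - chosen[0]
    return total / trials
```

Comparing `mean_overestimate(1)` with `mean_overestimate(50)` shows the gap growing as more candidates are searched over, which is the sense in which sampling fewer options means less implicit optimization against the evaluations.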

Replies from: DaemonicSigil↑ comment by DaemonicSigil · 2023-02-27T10:40:59.896Z · LW(p) · GW(p)

A simple model would be an agent consisting of a big recurrent net (RNN or transformer variant) that takes in observations and outputs predictions through a prediction head and actions through an action head, where there's a softmax to sample the next action (whether an external action or an internal action). The shards would then be circuits that send outputs into the action head.

Thanks for describing this. Technical question about this design: How are you getting the gradients that feed backwards into the action head? I assume it's not supervised learning where it's just trying to predict which action a human would take?

IMO an agent can't work this way, at least not an embedded one.

I'm aware of the issue of embedded agency, I just didn't think it was relevant here. In this case, we can just assume that the world looks fairly Cartesian to the agent. The agent makes one decision (though possibly one from an exponentially large decision space) then shuts down and loses all its state. The record of the agent's decision process in the future history of the world just shows up as thermal noise, and it's unreasonable to expect the agent's world model to account for thermal noise as anything other than a random variable. As a Cartesian hack, we can specify a probability distribution over actions for the world model to use when sampling. So for our particular query, we can specify a uniform distribution across all actions. Then in reality, the actual distribution over actions will be biased towards certain actions over others because they're likely to result in higher utility.

Putting that aside, for this to happen, it has to be simultaneously true that the agent's world model knows about and thinks about counterfeit money in particular (or else it won't be able to construct viable plans that produce counterfeit money)

This seems to be phrased in a weird way that rules out creative thinking. Nikola Tesla didn't have three-phase motors in his world-model before he invented them, but he was able to come up with them (his mind was able to generate a "three-phase motor" plan) anyway. The key thing isn't having a certain concept already existing in your world model because of prior experience. The requirement is just that the world model is able to reason about the thing. Nikola Tesla knew enough E&M to reason about three-phase motors, and I expect that smart AIs will have world models that can easily reason about counterfeit money.

while its value function does not know or think about counterfeit money in particular.

The job of a value function isn't to know or think about things. It just gives either big numbers or small numbers when fed certain world states. The value function in question here gives a big number when you feed it a world state containing lots of counterfeit money. Does this mean it knows about counterfeit money? Maybe, but it doesn't really matter.

A more relevant question is whether the plan-proposer knows the value function well enough that it knows the value function will give it points for suggesting the plan of producing counterfeit money. One could say probably yes, since it's been getting all its gradients directly from the value function, or probably no, since there were no examples with counterfeit money in the training data. I'd say yes, but I'm guessing that this issue isn't your crux, so I'll only elaborate if asked.

It also has to be true that the agent tends to generate plans towards counterfeit money over plans towards real money, or else it'll pick a real money plan it generates before it has had a chance to entertain a counterfeit money plan.

This sounds like you're talking about a dumb agent; smart agents generate and compare multiple plans and don't just go with the first plan they think of.

So why are we hypothesizing that the agent will tend to produce and choose the kinds of counterfeit money plans its value function would "fail" (from our perspective) on after training?

Generalization. For a general agent, thinking about counterfeit money plans isn't that much different than thinking of plans to make money. Like if the agent is able to think of money making plans like starting a restaurant, or working as a programmer, or opening a printing shop, then it should also be able to think of a counterfeit money making plan. (That plan is probably quite similar to the printing shop plan.)

Replies from: cfoster0↑ comment by cfoster0 · 2023-02-27T18:39:10.430Z · LW(p) · GW(p)

Thanks for describing this. Technical question about this design: How are you getting the gradients that feed backwards into the action head? I assume it's not supervised learning where it's just trying to predict which action a human would take?

Could be from rewards or other "external" feedback, could be from TD/bootstrapped errors, could be from an imitation loss or something else. The base case is probably just plain ol' rewards that get backpropagated through the action head via policy gradients.
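To make the "rewards backpropagated through the action head via policy gradients" base case concrete, here is a minimal REINFORCE sketch. It is only an illustration under loud assumptions: a bare vector of logits stands in for the recurrent net's action head, and a two-action bandit with a made-up reward stands in for the environment. None of these names come from the design described above.

```python
import math
import random

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def reinforce_step(logits, reward_fn, lr, rng):
    """One REINFORCE update: sample an action from the softmax head,
    observe a reward, and move the logits along reward * grad log pi(a)."""
    probs = softmax(logits)
    action = rng.choices(range(len(logits)), weights=probs)[0]
    reward = reward_fn(action)
    # For a softmax head: d log pi(a) / d logit_i = 1[i == a] - probs[i].
    return [
        logit + lr * reward * ((1.0 if i == action else 0.0) - probs[i])
        for i, logit in enumerate(logits)
    ]

def train(steps=2000, lr=0.1, seed=0):
    rng = random.Random(seed)
    logits = [0.0, 0.0]  # stand-in for the action head's outputs (assumption)
    # Hypothetical reward signal: action 1 pays 1.0, action 0 pays nothing.
    reward_fn = lambda a: 1.0 if a == 1 else 0.0
    for _ in range(steps):
        logits = reinforce_step(logits, reward_fn, lr, rng)
    return softmax(logits)

final_probs = train()
```

In this toy setup the head ends up putting most of its probability on the rewarded action. A real agent would compute the same kind of gradient through the whole network rather than through two free-standing logits.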

In this case, we can just assume that the world looks fairly Cartesian to the agent. The agent makes one decision (though possibly one from an exponentially large decision space) then shuts down and loses all its state. The record of the agent's decision process in the future history of the world just shows up as thermal noise, and it's unreasonable to expect the agent's world model to account for thermal noise as anything other than a random variable. As a Cartesian hack, we can specify a probability distribution over actions for the world model to use when sampling. So for our particular query, we can specify a uniform distribution across all actions. Then in reality, the actual distribution over actions will be biased towards certain actions over others because they're likely to result in higher utility.

Sorry for being unclear, I think you're talking about a different dimension of embeddedness than what I was pointing at. I was talking about the issue of logical uncertainty: that the agent needs to actually run computation in order to figure out certain things. The agent can't magically sample from P(h) proportional to exp(U(h)), because it needs the exp(U(h')) of all the other histories first in order to weight the distribution that way, which requires having already sampled h' and having already calculated U(h'). But we are talking about how it samples a history h in the first place! The "At best" comment was proposing an alternative that might work, where the agent samples from a prior that's been tuned based on U(h). Notice, though, that "our sampling is biased towards certain histories over others because they resulted in higher utility" does not imply "if a history would result in higher utility, then our sampling will bias towards it".

Consider a parallel situation: sampling images and picking one that gets the highest score on a non-robust face classifier. If we were able to sample from the distribution of images proportional to their (exp) face classifier scores, then we would need to worry a lot about picking an image that's an adversarial example to our face classifier, because those can have absurdly high scores. But instead we need to sample images from the prior of a generative model like FFHQ StyleGAN2-ADA or DDPM, and score those images. A generative model like that will tend strongly to convert whatever input entropy you give it into a natural-looking image, so we can sample & filter from it a ton without worrying much about adversarial robustness. Even if you sample 10K images and pick the 5 with the highest face classifier scores, I am betting that the resulting images will still really be of faces, rather than classifier-adversarial examples. It is true that "our top-5 images are biased towards some images over others because they had higher face classification scores" but it is not true that "if adversarial examples would have higher face classification scores than regular images, then our sampling will bias towards adversarial examples."
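The contrast in the face-classifier analogy can be sketched in a few lines. Everything here is a toy assumption: integers stand in for images, `NATURAL` is a hypothetical region of real faces, `ADVERSARIAL` is a hypothetical pair of off-manifold inputs, and the generator is assumed to only ever emit natural inputs (a real generative model would merely make off-manifold outputs very unlikely).

```python
import random

SPACE = 100_000                 # toy "image space": integers 0..SPACE-1
NATURAL = range(0, 1_000)       # hypothetical region of natural face images
ADVERSARIAL = {31_337, 77_777}  # hypothetical classifier-adversarial inputs

def classifier_score(x):
    """Non-robust scorer: sensible on natural inputs, absurdly high on a
    few adversarial ones, zero elsewhere."""
    if x in ADVERSARIAL:
        return 1_000.0
    if x in NATURAL:
        return 1.0 - abs(x - 500) / 500.0  # peaks at the most face-like image
    return 0.0

def generator_sample(rng):
    """Stand-in for a generative model's prior (assumption: it only emits
    natural inputs)."""
    return rng.randrange(len(NATURAL))

def sample_and_filter(n_samples, k, seed=0):
    """Draw n_samples from the generator and keep the k highest-scoring."""
    rng = random.Random(seed)
    samples = [generator_sample(rng) for _ in range(n_samples)]
    return sorted(samples, key=classifier_score, reverse=True)[:k]

def exhaustive_argmax():
    """Optimize directly against the scorer over the whole input space."""
    return max(range(SPACE), key=classifier_score)

top5 = sample_and_filter(n_samples=10_000, k=5)
global_best = exhaustive_argmax()
```

Under these assumptions `top5` contains only natural inputs, while `global_best` is one of the adversarial inputs. The selection pressure is the same scorer in both cases; what differs is the distribution the candidates are drawn from.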

The job of a value function isn't to know or think about things. It just gives either big numbers or small numbers when fed certain world states. The value function in question here gives a big number when you feed it a world state containing lots of counterfeit money. Does this mean it knows about counterfeit money? Maybe, but it doesn't really matter.

I disagree with this? The job of the value function is to look at the agent state (what the agent knows about the world) and estimate what the return will be based on that state & its implications. This involves "knowing things" and "thinking about things". If the agent has a model T(s, a, s') that represents some state feature like "working a job" or "building a money-printer", then that feature should exist in the state passed to the value function V(s)/Q(s, a) as well, such that the value function will "know" about it and incorporate it into its estimation process.

But it sounds like in the imagined scenario, the agent's model and policy are sensitive to a bunch of stuff that the value function is blind to, which makes this configuration seem quite weird to me. If the value function was not blind to those features, then as the model goes from the training environment, where it got returns based on "getting paid money for tasks" (or whatever we rewarded for there) to the deployment environment, where the action space is even bigger, both the model/policy and the value function "generalize" and learn motivationally-relevant new facts that inform it what "getting paid money for tasks" looks like.

A more relevant question is whether the plan-proposer knows the value function well enough that it knows the value function will give it points for suggesting the plan of producing counterfeit money. One could say probably yes, since it's been getting all its gradients directly from the value function, or probably no, since there were no examples with counterfeit money in the training data. I'd say yes, but I'm guessing that this issue isn't your crux, so I'll only elaborate if asked.

The plan-proposer isn't trying to make the value function give it max points, it's trying to [plan towards the current subgoal], which in this case is "make a million bucks". The plan-proposer gets gradients/feedback from the value function, in the form of TD updates that tell it "this thought was current-subgoal-better/worse than expected upon consideration, do more/less of that in mental contexts like this". But a thought like "Inspect my own value function to see what it'd give me positive TD updates for" evaluates as current-subgoal-worse for the subgoal of "make a million bucks" than a more actually-subgoal-relevant thought like "Think about what tasks are available nearby", so the advantage is negative, which means a negative TD update, causing that thought to be progressively abandoned.
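As a rough illustration of "negative advantage, so the thought is progressively abandoned", the sketch below applies the expected advantage-weighted policy-gradient update to a softmax over two candidate thoughts. This is a simplified stand-in for the TD updates described above, not a TD implementation, and the subgoal-relative values in `THOUGHT_VALUES` are made-up numbers.

```python
import math

def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Hypothetical values of each thought relative to the current subgoal
# ("make a million bucks"). The numbers are assumptions for illustration.
THOUGHT_VALUES = {
    "inspect my own value function": 0.1,
    "think about what tasks are available nearby": 1.0,
}

def advantage_updates(steps=200, lr=0.5):
    """Repeatedly apply the expected policy-gradient update, in which each
    logit moves by lr * prob * advantage, and record the probabilities."""
    names = list(THOUGHT_VALUES)
    logits = [0.0] * len(names)
    history = []
    for _ in range(steps):
        probs = softmax(logits)
        history.append(dict(zip(names, probs)))
        # Baseline: the value expected under the current thought distribution.
        baseline = sum(p * THOUGHT_VALUES[n] for p, n in zip(probs, names))
        logits = [
            logit + lr * p * (THOUGHT_VALUES[n] - baseline)
            for logit, p, n in zip(logits, probs, names)
        ]
    return history

history = advantage_updates()
start = history[0]["inspect my own value function"]
end = history[-1]["inspect my own value function"]
```

Here the lower-valued thought has a negative advantage at every step, so its probability falls from `start` towards zero at `end`.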

This sounds like you're talking about a dumb agent; smart agents generate and compare multiple plans and don't just go with the first plan they think of.

Generalization. For a general agent, thinking about counterfeit money plans isn't that much different than thinking of plans to make money. Like if the agent is able to think of money making plans like starting a restaurant, or working as a programmer, or opening a printing shop, then it should also be able to think of a counterfeit money making plan. (That plan is probably quite similar to the printing shop plan.)

Once again, I agree that the agent is smart, and generalizes to be able to think of such a plan, and even plans much more sophisticated than that. I am arguing that it won't particularly want to produce/execute those plans, unless its motivations & other cognition are biased in a very specific way.

Replies from: DaemonicSigil↑ comment by DaemonicSigil · 2023-03-07T08:59:20.383Z · LW(p) · GW(p)

Thanks for the reply. Just to prevent us from spinning our wheels too much, I'm going to start labelling specific agent designs, since it seems like some talking-past-each-other may be happening where we're thinking of agents that work in different ways when making our points.

PolicyGradientBot: Defined by the following description:

A simple model would be an agent consisting of a big recurrent net (RNN or transformer variant) that takes in observations and outputs predictions through a prediction head and actions through an action head, where there's a softmax to sample the next action (whether an external action or an internal action). The shards would then be circuits that send outputs into the action head.

The base case is probably just plain ol' rewards that get backpropagated through the action head via policy gradients.

ThermodynamicBot: Defined by the following description:

There's a detailed world model that can answer various probabilistic queries about the future. Planning is done by formulating a query to the world model in the following way: Sample histories h according to:

P(h) ∝ exp(U(h) / T)

Where U(h) is the utility assigned by the agent to a given history h, and T is some small number which is analogous to temperature.

As a Cartesian hack, we can specify a probability distribution over actions for the world model to use when sampling. So for our particular query, we can specify a uniform distribution across all actions. Then in reality, the actual distribution over actions will be biased towards certain actions over others because they're likely to result in higher utility.
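A toy version of ThermodynamicBot's query might look like the sketch below. It assumes the usual Boltzmann form, in which a history's weight is exp(U(h) / T), together with a uniform prior over actions as in the Cartesian hack. The deterministic `world_model`, the `PAYOFFS` table, and the action names are hypothetical placeholders.

```python
import math

def boltzmann_over_actions(actions, world_model, utility, temperature):
    """Return P(a) proportional to prior(a) * exp(U(h(a)) / T), with a
    uniform prior over actions."""
    prior = 1.0 / len(actions)
    # Note: this evaluates U on every candidate history, which is only
    # feasible here because the toy action space is tiny.
    utilities = [utility(world_model(a)) for a in actions]
    u_max = max(utilities)  # subtract the max for numerical stability
    weights = [prior * math.exp((u - u_max) / temperature) for u in utilities]
    z = sum(weights)
    return {a: w / z for a, w in zip(actions, weights)}

# Hypothetical deterministic world model: each action leads to one history.
PAYOFFS = {
    "start a restaurant": 1.0,
    "work as a programmer": 2.0,
    "open a printing shop": 3.0,
}

def world_model(action):
    return {"action": action, "money": PAYOFFS[action]}

def utility(history):
    return history["money"]

actions = list(PAYOFFS)
warm = boltzmann_over_actions(actions, world_model, utility, temperature=1.0)
cold = boltzmann_over_actions(actions, world_model, utility, temperature=0.1)
```

Although the prior over actions is uniform, the resulting distribution favours higher-utility actions, and lowering the temperature (`cold` versus `warm`) concentrates it further on the best one.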

Comments on ThermodynamicBot